Measuring Emotion Perception Ability Using AI-Generated Stimuli: Development and Validation of the PAGE Test

Abstract

1. Introduction

2. Study 1: Test Construction and Image Validation

2.1. Emotion Selection

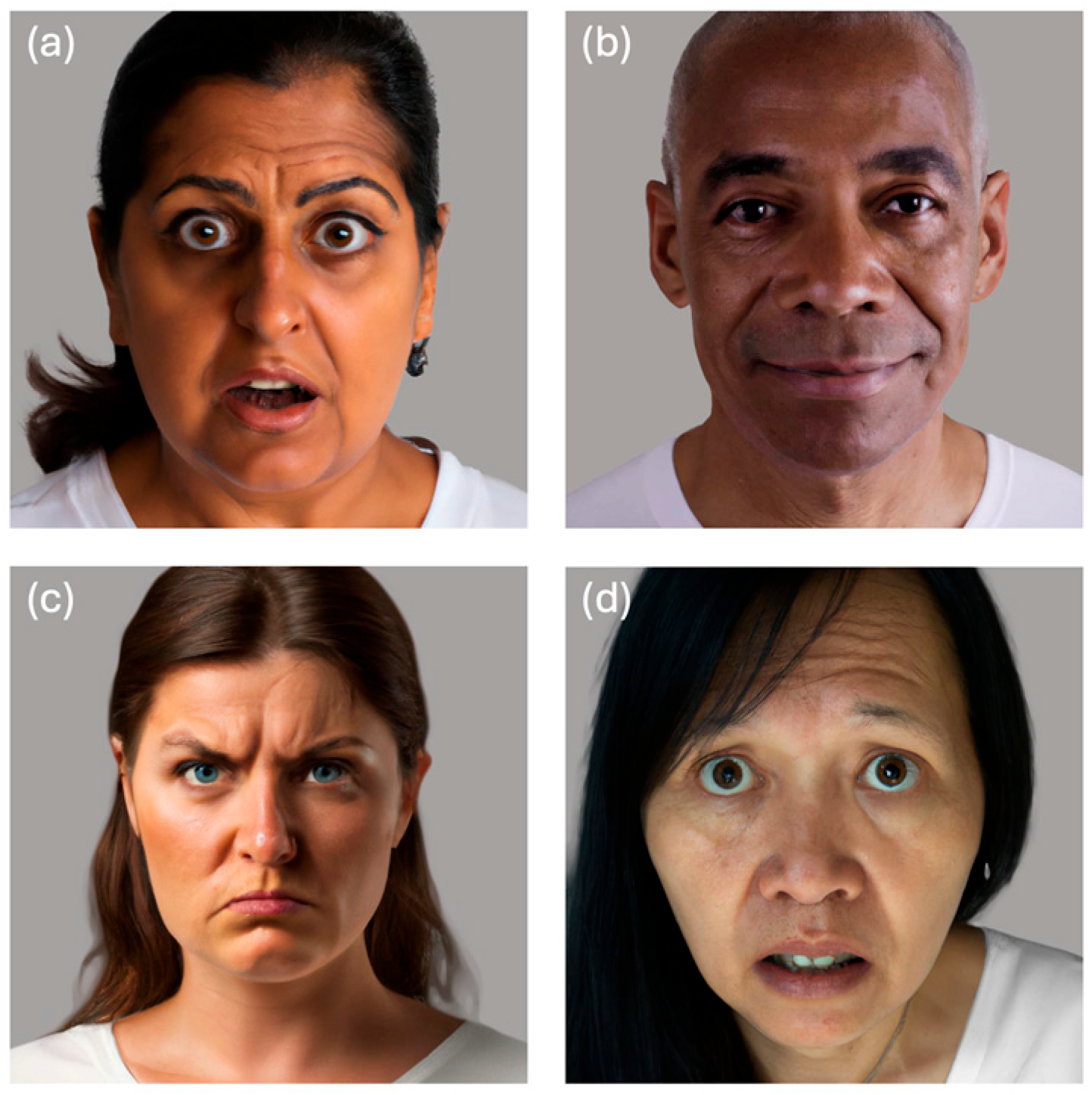

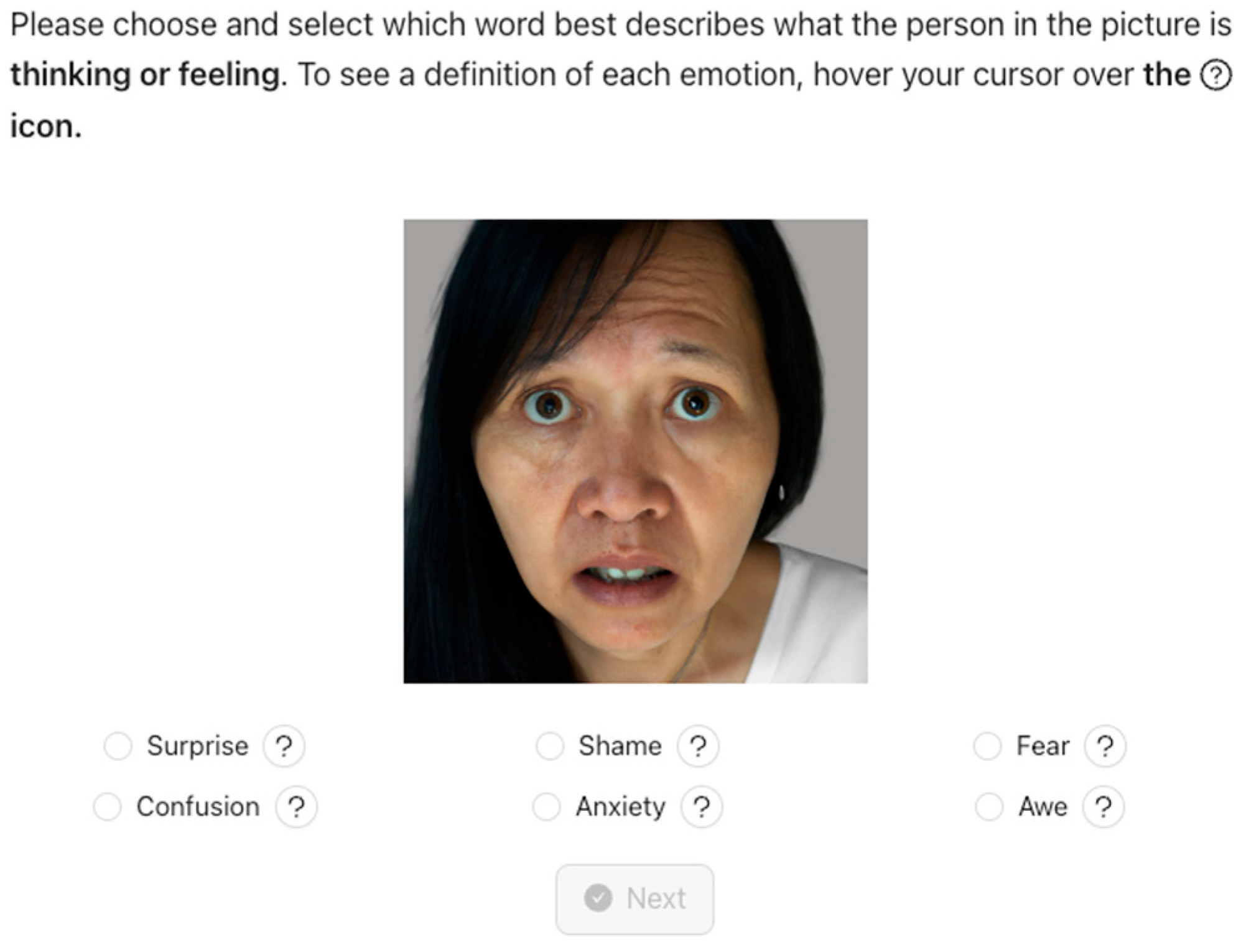

2.2. Generating Emotional Faces

2.3. Validation and Selection of Stimuli

2.4. Test Construction

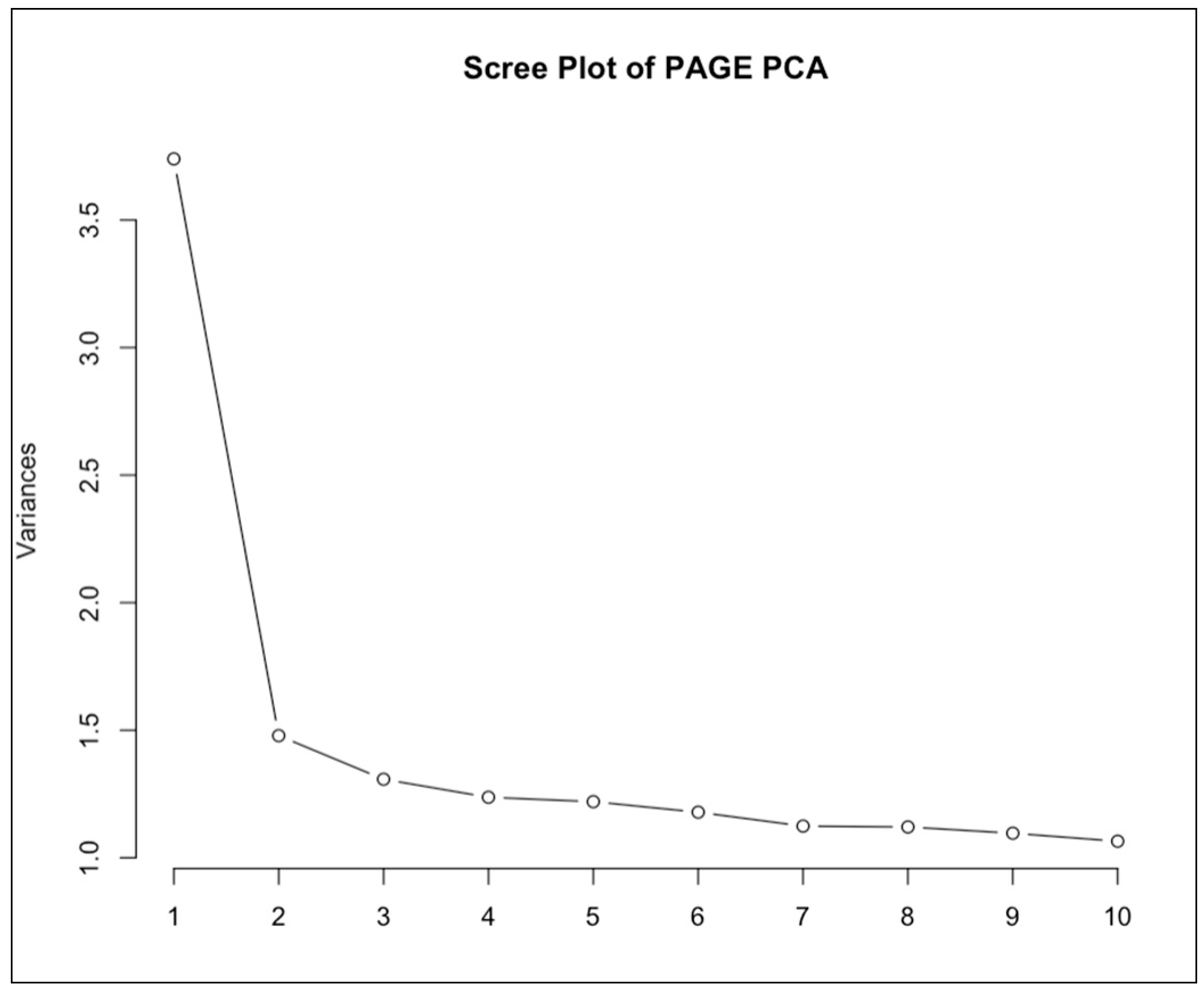

3. Study 2a: Measurement Properties of PAGE

3.1. Participants and Procedure

3.2. Results

4. Study 2b: Convergent Validity of PAGE

4.1. Reading the Mind in the Eyes Test (RMET)

4.2. Participants and Procedure

4.3. Results

5. Study 3: Predictive Validity of the PAGE Assessment

5.1. Participants

5.2. Experiment Procedures

5.3. Results

6. Discussion, Limitations and Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| | Study 1 | Study 2a | Study 2b ^ | Study 3 * |
|---|---|---|---|---|
| Number | 500 | 1010 | 741 | 116 |
| Ethnicity (%) | | | | |
| White | 57.8 | 44.5 | 50.5 | 17.0 |
| Black/African American | 5.8 | 22.3 | 21.5 | 16.1 |
| Latino/Hispanic 0 | 3.4 | 15.4 | 13.2 | - |
| Asian ° | 20.0 | 17.9 | 14.8 | 56.2 |
| Other/not reported | 0.0 | 0.0 | 0.0 | 10.7 |
| Age | | | | |
| Mean (SD) | 34.0 (9.4) | 36.7 (9.1) | 37.6 (9.1) | 25.4 (4.5) |
| 18–29 (%) | 41.8 | 26.4 | 23.6 | 83.6 |
| 30–39 (%) | 32.6 | 36.6 | 35.2 | 16.4 |
| 40–59 (%) | 25.2 | 36.9 | 41.2 | - |
| 60–74 (%) | 0.4 | - | - | - |
| Female (%) | 49 | 50 | 50 | 43 |
| Full-time workers (%) | 46 | 91 | 100 | - |
| Country | US | US | US | UK |

| Ethnicity | Count | Age | Count | Gender | Count |
|---|---|---|---|---|---|
| Caucasian | 11 | 20–29 | 5 | Female | 17 |
| Black | 8 | 30–39 | 16 | Male | 18 |
| Latino | 9 | 40–59 | 13 | Total | 35 |
| Asian | 4 | 60 | 1 | | |
| Indian | 2 | Total | 35 | | |
| Multi-racial | 1 | | | | |
| Total | 35 | | | | |

Appendix B

| 1 | Thanks to the advice of an anonymous reviewer, we acknowledge that it is currently unclear whether DALL-E can reliably generate culturally specific expressions. As such, the advantage of including ethnically diverse stimuli in the PAGE test is more likely to lie in promoting inclusivity for research participants rather than in capturing meaningful cultural display rules. |

| 2 | We conducted post hoc sensitivity analyses excluding participants with extremely low scores and those identified as likely inattentive responders based on response times. Across all thresholds, the reliability (Cronbach’s α) remained substantively unchanged. We thank an anonymous reviewer for this suggestion. |

| 3 | We thank an anonymous reviewer for suggesting this method. Parcels were constructed by averaging item difficulties, with mean difficulty levels carefully balanced across parcels (0.62–0.72). |

| 4 | We thank an anonymous reviewer for noting that conducting exploratory and confirmatory analyses on the same sample does not constitute strict cross-validation and may capitalize on chance variance (Fokkema and Greiff 2017). Future work should replicate the factor structure in an independent sample. |

| 5 | This analysis accounts for the dependency structure of the data—whereby managers are present in multiple groups—by using a multilevel model with random effects for managers. |

| 6 | Specifically, repeated random assignment allows us to identify the average total contribution of each manager by taking the average of their group scores (as noted in Weidmann et al. 2024). |

| 7 | We thank an anonymous reviewer for pointing this out. |

| 8 | As above, we thank an anonymous reviewer for noting this. |

| 9 | We thank a second anonymous reviewer for this suggestion. |
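Note 2 describes recomputing Cronbach's α after excluding low scorers. The sketch below illustrates that kind of sensitivity check on binary item responses. It is not the authors' code: the function names, the `min_total` cutoff, and the toy data are hypothetical, and the response-time screen mentioned in the note is not modelled.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def alpha_after_exclusion(scores, min_total):
    """Recompute alpha after dropping respondents whose total is below min_total.

    min_total is a hypothetical cutoff; the source does not report its thresholds.
    """
    kept = [row for row in scores if sum(row) >= min_total]
    return cronbach_alpha(kept)


# Toy data (illustrative only): 4 respondents, 3 perfectly consistent items.
consistent = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
# Toy data: 4 respondents, 2 unrelated items.
unrelated = [[1, 1], [1, 0], [0, 1], [0, 0]]
```

Running `alpha_after_exclusion` over a range of cutoffs and comparing the results with the full-sample value is one simple way to see whether reliability depends on a handful of respondents.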

References

- Acheampong, Alex, De-Graft Owusu-Manu, Ernest Kissi, and Portia Atswei Tetteh. 2023. Assessing the Influence of Emotional Intelligence (EI) on Project Performance in Developing Countries: The Case of Ghana. International Journal of Construction Management 23: 1163–73.
- Ambadar, Zara, Jonathan W. Schooler, and Jeffrey F. Cohn. 2005. Deciphering the Enigmatic Face: The Importance of Facial Dynamics in Interpreting Subtle Facial Expressions. Psychological Science 16: 403–10.
- Artsi, Yaara, Vera Sorin, Eli Konen, Benjamin S. Glicksberg, Girish Nadkarni, and Eyal Klang. 2024. Large Language Models for Generating Medical Examinations: Systematic Review. BMC Medical Education 24: 354.
- Aryadoust, Vahid, Azrifah Zakaria, and Yichen Jia. 2024. Investigating the Affordances of OpenAI’s Large Language Model in Developing Listening Assessments. Computers and Education: Artificial Intelligence 6: 100204.
- Baron-Cohen, Simon, Sally Wheelwright, Jacqueline Hill, Yogini Raste, and Ian Plumb. 2001. The ‘Reading the Mind in the Eyes’ Test Revised Version: A Study with Normal Adults, and Adults with Asperger Syndrome or High-functioning Autism. Journal of Child Psychology and Psychiatry 42: 241–51.
- Bänziger, Tanja, Didier Grandjean, and Klaus R. Scherer. 2009. Emotion Recognition from Expressions in Face, Voice, and Body: The Multimodal Emotion Recognition Test (MERT). Emotion 9: 691–704.
- Bänziger, Tanja, Klaus R. Scherer, Judith A. Hall, and Robert Rosenthal. 2011. Introducing the MiniPONS: A Short Multichannel Version of the Profile of Nonverbal Sensitivity (PONS). Journal of Nonverbal Behavior 35: 189–204.
- Bell, Morris, Gary Bryson, and Paul Lysaker. 1997. Positive and Negative Affect Recognition in Schizophrenia: A Comparison with Substance Abuse and Normal Control Subjects. Psychiatry Research 73: 73–82.
- Benitez-Quiroz, C. Fabian, Ronnie B. Wilbur, and Aleix M. Martinez. 2016. The Not Face: A Grammaticalization of Facial Expressions of Emotion. Cognition 150: 77–84.
- Bhandari, Shreya, Yunting Liu, Yerin Kwak, and Zachary A. Pardos. 2024. Evaluating the Psychometric Properties of ChatGPT-Generated Questions. Computers and Education: Artificial Intelligence 7: 100284.
- Boone, Thomas R., and Katja Schlegel. 2016. Is There a General Skill in Perceiving Others Accurately? In The Social Psychology of Perceiving Others Accurately. Edited by Judith A. Hall, Marianne Schmid Mast and Tessa V. West. Cambridge: Cambridge University Press.
- Caplin, Andrew, David J. Deming, Søren Leth-Petersen, and Ben Weidmann. 2023. Economic Decision-Making Skill Predicts Income in Two Countries. Working Paper No. 31674. Working Paper Series; Cambridge: National Bureau of Economic Research.
- Castro, Marcela, André Barcaui, Bouchaib Bahli, and Ronnie Figueiredo. 2022. Do the Project Manager’s Soft Skills Matter? Impacts of the Project Manager’s Emotional Intelligence, Trustworthiness, and Job Satisfaction on Project Success. Administrative Sciences 12: 141.
- Conley, May I., Danielle V. Dellarco, Estee Rubien-Thomas, Alexandra O. Cohen, Alessandra Cervera, Nim Tottenham, and Betty Jo Casey. 2018. The Racially Diverse Affective Expression (RADIATE) Face Stimulus Set. Psychiatry Research 270: 1059–67.
- Connolly, Hannah L., Carmen E. Lefevre, Andrew W. Young, and Gary J. Lewis. 2020. Emotion Recognition Ability: Evidence for a Supramodal Factor and Its Links to Social Cognition. Cognition 197: 104166.
- Cordaro, Daniel T., Marc Brackett, Lauren Glass, and Craig L. Anderson. 2016. Contentment: Perceived Completeness across Cultures and Traditions. Review of General Psychology 20: 221–35.
- Cordaro, Daniel T., Rui Sun, Dacher Keltner, Shanmukh Kamble, Niranjan Huddar, and Galen McNeil. 2018. Universals and Cultural Variations in 22 Emotional Expressions across Five Cultures. Emotion 18: 75–93.
- Cordaro, Daniel T., Rui Sun, Shanmukh Kamble, Niranjan Hodder, Maria Monroy, Alan Cowen, Yang Bai, and Dacher Keltner. 2020. The Recognition of 18 Facial-Bodily Expressions across Nine Cultures. Emotion 20: 1292–300.
- Cowen, Alan S., and Dacher Keltner. 2020. What the Face Displays: Mapping 28 Emotions Conveyed by Naturalistic Expression. American Psychologist 75: 349–64.
- Côté, Stéphane. 2014. Emotional Intelligence in Organizations. Annual Review of Organizational Psychology and Organizational Behavior 1: 459–88.
- Côté, Stéphane, Paulo N. Lopes, Peter Salovey, and Christopher T. H. Miners. 2010. Emotional Intelligence and Leadership Emergence in Small Groups. The Leadership Quarterly 21: 496–508.
- Dasborough, Marie T., Neal M. Ashkanasy, Ronald H. Humphrey, Peter D. Harms, Marcus Credé, and Dustin Wood. 2022. Does Leadership Still Not Need Emotional Intelligence? Continuing ‘The Great EI Debate’. The Leadership Quarterly 33: 101539.
- Ekman, Paul. 1992. Are There Basic Emotions? Psychological Review 99: 550–53.
- Ekman, Paul. 2007. The Directed Facial Action Task: Emotional Responses Without Appraisal. In Handbook of Emotion Elicitation and Assessment. Edited by James A. Coan and John J. B. Allen. New York: Oxford University Press.
- Ekman, Paul, E. Richard Sorenson, and Wallace V. Friesen. 1969. Pan-Cultural Elements in Facial Displays of Emotion. Science 164: 86–88.
- Elfenbein, Hillary Anger. 2023. Emotion in Organizations: Theory and Research. Annual Review of Psychology 74: 489–517.
- Elfenbein, Hillary Anger, and Nalini Ambady. 2002. On the Universality and Cultural Specificity of Emotion Recognition: A Meta-Analysis. Psychological Bulletin 128: 203–35.
- Elfenbein, Hillary Anger, and Nalini Ambady. 2003. Universals and Cultural Differences in Recognizing Emotions. Current Directions in Psychological Science 12: 159–64.
- Elfenbein, Hillary Anger, Maw Der Foo, Judith White, Hwee Hoon Tan, and Voon Chuan Aik. 2007. Reading Your Counterpart: The Benefit of Emotion Recognition Accuracy for Effectiveness in Negotiation. Journal of Nonverbal Behavior 31: 205–23.
- Farh, Crystal I. C. Chien, Myeong-Gu Seo, and Paul E. Tesluk. 2012. Emotional Intelligence, Teamwork Effectiveness, and Job Performance: The Moderating Role of Job Context. Journal of Applied Psychology 97: 890–900.
- Fokkema, Marjolein, and Samuel Greiff. 2017. How Performing PCA and CFA on the Same Data Equals Trouble: Overfitting in the Assessment of Internal Structure and Some Editorial Thoughts on It. European Journal of Psychological Assessment 33: 399–402.
- Goetz, Jennifer L., Dacher Keltner, and Emiliana Simon-Thomas. 2010. Compassion: An Evolutionary Analysis and Empirical Review. Psychological Bulletin 136: 351–74.
- Gosling, Samuel D., Peter J. Rentfrow, and William B. Swann. 2003. A Very Brief Measure of the Big-Five Personality Domains. Journal of Research in Personality 37: 504–28.
- Hall, Judith A., and Frank J. Bernieri, eds. 2001. Interpersonal Sensitivity: Theory and Measurement. Mahwah: Lawrence Erlbaum Associates Publishers.
- Higgins, Wendy C., Robert M. Ross, Robyn Langdon, and Vince Polito. 2023. The ‘Reading the Mind in the Eyes’ Test Shows Poor Psychometric Properties in a Large, Demographically Representative U.S. Sample. Assessment 30: 1777–89.
- Hirshleifer, Jack. 1983. From Weakest-Link to Best-Shot: The Voluntary Provision of Public Goods. Public Choice 41: 371–86.
- Horton, John J. 2023. Large Language Models as Simulated Economic Agents: What Can We Learn from Homo Silicus? arXiv:2301.07543.
- Joseph, Dana L., and Daniel A. Newman. 2010. Emotional Intelligence: An Integrative Meta-Analysis and Cascading Model. Journal of Applied Psychology 95: 54–78.
- Kaya, Murtaza, Ertan Sonmez, Ali Halici, Harun Yildirim, and Abdil Coskun. 2025. Comparison of AI-Generated and Clinician-Designed Multiple-Choice Questions in Emergency Medicine Exam: A Psychometric Analysis. BMC Medical Education 25: 949.
- Keltner, Dacher. 1995. Signs of Appeasement: Evidence for the Distinct Displays of Embarrassment, Amusement, and Shame. Journal of Personality and Social Psychology 68: 441–54.
- Keltner, Dacher, and Jonathan Haidt. 1999. Social Functions of Emotions at Four Levels of Analysis. Cognition and Emotion 13: 505–21.
- Kim, Heesu Ally, Jasmine Kaduthodil, Roger W. Strong, Laura T. Germine, Sarah Cohan, and Jeremy B. Wilmer. 2024. Multiracial Reading the Mind in the Eyes Test (MRMET): An Inclusive Version of an Influential Measure. Behavior Research Methods 56: 5900–17.
- Kohler, Christian G., Travis H. Turner, Warren B. Bilker, Colleen M. Brensinger, Steven J. Siegel, Stephen J. Kanes, Raquel E. Gur, and Ruben C. Gur. 2003. Facial Emotion Recognition in Schizophrenia: Intensity Effects and Error Pattern. American Journal of Psychiatry 160: 1768–74.
- LaPalme, Matthew L., Sigal G. Barsade, Marc A. Brackett, and James L. Floman. 2023. The Meso-Expression Test (MET): A Novel Assessment of Emotion Perception. Journal of Intelligence 11: 145.
- Little, Todd D., William A. Cunningham, Golan Shahar, and Keith F. Widaman. 2002. To Parcel or Not to Parcel: Exploring the Question, Weighing the Merits. Structural Equation Modeling: A Multidisciplinary Journal 9: 151–73.
- Lundqvist, Daniel, Anders Flykt, and Arne Öhman. 1998. Karolinska Directed Emotional Faces. Stockholm: Karolinska Institute, Department of Clinical Neuroscience, Psychology Section.
- Ma, Debbie S., Joshua Correll, and Bernd Wittenbrink. 2015. The Chicago Face Database: A Free Stimulus Set of Faces and Norming Data. Behavior Research Methods 47: 1122–35.
- Marcus, Gary, Ernest Davis, and Scott Aaronson. 2022. A Very Preliminary Analysis of DALL-E 2. arXiv:2204.13807.
- Martin, Rod A., Glen E. Berry, Tobi Dobranski, Marilyn Horne, and Philip G. Dodgson. 1996. Emotion Perception Threshold: Individual Differences in Emotional Sensitivity. Journal of Research in Personality 30: 290–305.
- Matsumoto, David, and Paul Ekman. 2004. The Relationship Among Expressions, Labels, and Descriptions of Contempt. Journal of Personality and Social Psychology 87: 529–40.
- Matsumoto, David, Jeff LeRoux, Carinda Wilson-Cohn, Jake Raroque, Kristie Kooken, Paul Ekman, Nathan Yrizarry, Sherry Loewinger, Hideko Uchida, Albert Yee, et al. 2000. A New Test to Measure Emotion Recognition Ability: Matsumoto and Ekman’s Japanese and Caucasian Brief Affect Recognition Test (JACBART). Journal of Nonverbal Behavior 24: 179–209.
- Mayer, John D., Peter Salovey, David R. Caruso, and Gill Sitarenios. 2003. Measuring Emotional Intelligence with the MSCEIT V2.0. Emotion 3: 97–105.
- Mill, Aire, Jüri Allik, Anu Realo, and Raivo Valk. 2009. Age-Related Differences in Emotion Recognition Ability: A Cross-Sectional Study. Emotion 9: 619–30.
- Miller, Elizabeth J., Ben A. Steward, Zak Witkower, Clare A. M. Sutherland, Eva G. Krumhuber, and Amy Dawel. 2023. AI Hyperrealism: Why AI Faces Are Perceived as More Real Than Human Ones. Psychological Science 34: 1390–403.
- Nowicki, Stephen, and Marshall P. Duke. 1994. Individual Differences in the Nonverbal Communication of Affect: The Diagnostic Analysis of Nonverbal Accuracy Scale. Journal of Nonverbal Behavior 18: 9–35.
- Perkins, Adam M., Sophie L. Inchley-Mort, Alan D. Pickering, Philip J. Corr, and Adrian P. Burgess. 2012. A Facial Expression for Anxiety. Journal of Personality and Social Psychology 102: 910–24.
- Phillips, Louise H., and Gillian Slessor. 2011. Moving Beyond Basic Emotions in Aging Research. Journal of Nonverbal Behavior 35: 279–86.
- Prkachin, Kenneth M. 1992. The Consistency of Facial Expressions of Pain: A Comparison across Modalities. Pain 51: 297–306.
- Ramesh, Aditya, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv:2204.06125.
- Reeve, Johnmarshall. 1993. The Face of Interest. Motivation and Emotion 17: 353–75.
- Riedl, Christoph, Young Ji Kim, Pranav Gupta, Thomas W. Malone, and Anita Williams Woolley. 2021. Quantifying Collective Intelligence in Human Groups. Proceedings of the National Academy of Sciences 118: e2005737118.
- Rodrigues, Nuno J. P., and Catarina I. V. Matos. 2024. The Relationship Between Managers’ Emotional Intelligence and Project Management Decisions. Administrative Sciences 14: 318.
- Rozin, Paul, and Adam B. Cohen. 2003. High Frequency of Facial Expressions Corresponding to Confusion, Concentration, and Worry in an Analysis of Naturally Occurring Facial Expressions of Americans. Emotion 3: 68–75.
- Ruffman, Ted, Julie D. Henry, Vicki Livingstone, and Louise H. Phillips. 2008. A Meta-Analytic Review of Emotion Recognition and Aging: Implications for Neuropsychological Models of Aging. Neuroscience & Biobehavioral Reviews 32: 863–81.
- Saharia, Chitwan, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L. Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. 2022. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv:2205.11487.
- Salovey, Peter, and John D. Mayer. 1990. Emotional Intelligence. Imagination, Cognition and Personality 9: 185–211.
- Scherer, Klaus R., and Heiner Ellgring. 2007a. Are Facial Expressions of Emotion Produced by Categorical Affect Programs or Dynamically Driven by Appraisal? Emotion 7: 113–30.
- Scherer, Klaus R., and Heiner Ellgring. 2007b. Multimodal Expression of Emotion: Affect Programs or Componential Appraisal Patterns? Emotion 7: 158–71.
- Scherer, Klaus R., and Ursula Scherer. 2011. Assessing the Ability to Recognize Facial and Vocal Expressions of Emotion: Construction and Validation of the Emotion Recognition Index. Journal of Nonverbal Behavior 35: 305–26.
- Schlegel, Katja, and Klaus R. Scherer. 2016. Introducing a Short Version of the Geneva Emotion Recognition Test (GERT-S): Psychometric Properties and Construct Validation. Behavior Research Methods 48: 1383–92.
- Schlegel, Katja, Didier Grandjean, and Klaus R. Scherer. 2014. Introducing the Geneva Emotion Recognition Test: An Example of Rasch-Based Test Development. Psychological Assessment 26: 666–72.
- Schlegel, Katja, Marc Mehu, Jacobien M. van Peer, and Klaus R. Scherer. 2018. Sense and Sensibility: The Role of Cognitive and Emotional Intelligence in Negotiation. Journal of Research in Personality 74: 6–15.
- Shiota, Michelle N., Belinda Campos, Christopher Oveis, Matthew J. Hertenstein, Emiliana Simon-Thomas, and Dacher Keltner. 2017. Beyond Happiness: Building a Science of Discrete Positive Emotions. American Psychologist 72: 617–43.
- Stypułkowski, Michał, Konstantinos Vougioukas, Sen He, Maciej Zięba, Stavros Petridis, and Maja Pantic. 2023. Diffused Heads: Diffusion Models Beat GANs on Talking-Face Generation. arXiv:2301.03396.
- Thompson, Ashley E., and Daniel Voyer. 2014. Sex Differences in the Ability to Recognise Non-Verbal Displays of Emotion: A Meta-Analysis. Cognition & Emotion 28: 1164–95.
- Thorndike, Robert M., and Tracy M. Thorndike-Christ. 2010. Measurement and Evaluation in Psychology and Education, 8th ed. London: Pearson.
- Tottenham, Nim, James W. Tanaka, Andrew C. Leon, Thomas McCarry, Marcella Nurse, Todd A. Hare, David J. Marcus, Alissa Westerlund, Betty Jo Casey, and Charles Nelson. 2009. The NimStim Set of Facial Expressions: Judgments from Untrained Research Participants. Psychiatry Research 168: 242–49.
- Tracy, Jessica L., and David Matsumoto. 2008. The Spontaneous Expression of Pride and Shame: Evidence for Biologically Innate Nonverbal Displays. Proceedings of the National Academy of Sciences 105: 11655–60.
- Tracy, Jessica L., and Richard W. Robins. 2004. Show Your Pride: Evidence for a Discrete Emotion Expression. Psychological Science 15: 194–97.
- van Kleef, Gerben A. 2016. The Interpersonal Dynamics of Emotion. Cambridge: Cambridge University Press.
- Weidmann, Ben, and David J. Deming. 2021. Team Players: How Social Skills Improve Team Performance. Econometrica 89: 2637–57.
- Weidmann, Ben, Joseph Vecci, Farah Said, David J. Deming, and Sonia R. Bhalotra. 2024. How Do You Find a Good Manager? Working Paper No. 32699. Working Paper Series; Cambridge: National Bureau of Economic Research.
- Young, Steven G., Kurt Hugenberg, Michael J. Bernstein, and Donald F. Sacco. 2012. Perception and Motivation in Face Recognition: A Critical Review of Theories of the Cross-Race Effect. Personality and Social Psychology Review 16: 116–42.

| Test | Emotional Range | Ethnic Diversity | Practical Challenges | Number of Items |
|---|---|---|---|---|
| DANVA-2 (Nowicki and Duke 1994) | 4 emotions | Caucasian, Black | Not freely available | 48 |
| BLERT (Bell et al. 1997) | 7 emotions | Caucasian | 15–20 min | 21 |
| JACBART (Matsumoto et al. 2000) | 7 emotions | Asian, Caucasian | Not freely available | 56 |
| RMET (Baron-Cohen et al. 2001) | 26 mental states | Caucasian | None | 36 |
| PERT-96 (Kohler et al. 2003) | 5 emotions | Diverse | None | 96 |
| MSCEIT Perception Tests (Mayer et al. 2003) | 5 emotions | Caucasian | Not freely available | 50 |
| MERT (Bänziger et al. 2009) | 10 emotions | Caucasian | 45–60 min | 120 |
| MiniPONS (Bänziger et al. 2011) | 2 affective situations | Caucasian | 15–20 min | 64 |
| ERI (Scherer and Scherer 2011) | 5 emotions | Caucasian | 15–20 min | 60 |
| GERT-S (Schlegel and Scherer 2016) | 14 emotions | Caucasian | 15–20 min; No customization | 42 |
| MET (LaPalme et al. 2023) | 17 emotions | Diverse | 15–20 min | 64 |
| MRMET (Kim et al. 2024) | 18 mental states | Diverse | None | 37 or 10 |

| Difficulty Range | Number of Items |
|---|---|
| 0.30 ≤ p < 0.50 | 3 (8.6%) |
| 0.50 ≤ p < 0.70 | 18 (51.4%) |
| 0.70 ≤ p < 0.90 | 14 (40.0%) |
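The difficulty ranges above are expressed as p, read here as the proportion of respondents answering an item correctly. Under that assumption, a table like this can be built from a respondents-by-items matrix of 0/1 scores as sketched below. The function names and the toy response matrix are hypothetical; only the bin edges and the 3/18/14 item counts come from the table.

```python
def item_difficulty(responses):
    """Proportion correct per item; responses is a list of rows of 0/1 scores."""
    n = len(responses)
    return [sum(col) / n for col in zip(*responses)]


def bin_difficulties(p_values, edges=(0.30, 0.50, 0.70, 0.90)):
    """Count items in each half-open difficulty range [lo, hi)."""
    counts = {}
    for lo, hi in zip(edges, edges[1:]):
        counts[(lo, hi)] = sum(lo <= p < hi for p in p_values)
    return counts


def share_of_items(count, total):
    """Percentage of the item pool in one range, rounded to one decimal."""
    return round(100 * count / total, 1)


# Toy response matrix (illustrative only): 4 respondents, 2 items.
toy = [[1, 0], [1, 1], [0, 1], [1, 0]]
toy_p = item_difficulty(toy)     # item 1: 3 of 4 correct, item 2: 2 of 4 correct
toy_bins = bin_difficulties(toy_p)
```

Applied to the counts reported in the table, `share_of_items(3, 35)`, `share_of_items(18, 35)`, and `share_of_items(14, 35)` reproduce the stated percentages for a 35-item test.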

| Average Causal Contributions of Managers | | | | | | | |
|---|---|---|---|---|---|---|---|
| | (1) | (2) | (3) | (4) | (5) | (6) | (7) |
| PAGE | 0.303 ** | 0.241 * | 0.230 * | | | | 0.273 * |
| | (0.095) | (0.096) | (0.105) | | | | (0.113) |
| RMET | | | | 0.146 | 0.067 | −0.025 | −0.125 |
| | | | | (0.092) | (0.093) | (0.111) | (0.116) |
| Big5 | | X | X | | X | X | X |
| Demographics | | | X | | | X | X |
| Observations | 109 | 109 | 109 | 109 | 109 | 109 | 109 |
| R² | 0.088 | 0.171 | 0.223 | 0.023 | 0.124 | 0.184 | 0.233 |
| Adjusted R² | 0.079 | 0.122 | 0.117 | 0.014 | 0.073 | 0.073 | 0.118 |
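The R² and adjusted R² rows can be cross-checked with the standard adjustment formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − k − 1). The sketch below assumes n = 109 and that columns (1) and (4) each contain a single predictor plus an intercept. Treating "Big5" as five trait scores, so that column (2) has six predictors, is a further assumption, since the table does not report predictor counts.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R-squared for n observations and k predictors (intercept excluded)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


N_OBS = 109  # "Observations" row of the table

# Columns (1) and (4): one predictor each (assumed).
col1 = round(adjusted_r2(0.088, N_OBS, 1), 3)
col4 = round(adjusted_r2(0.023, N_OBS, 1), 3)

# Column (2): one predictor plus five Big5 traits, k = 6 (assumed).
col2 = round(adjusted_r2(0.171, N_OBS, 6), 3)
```

Under these assumptions the computed values match the reported adjusted R² for those three columns, which is consistent with the column layout of the table.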

| | First Period | Second Period | Final Period |
|---|---|---|---|
| | (1) | (2) | (3) |
| PAGE | 0.120 | 0.085 | 0.235 * |
| | (0.116) | (0.102) | (0.101) |
| Constant | −0.003 | −0.013 | 0.043 |
| | (0.113) | (0.100) | (0.099) |
| Observations | 87 | 87 | 87 |
| R² | 0.013 | 0.008 | 0.060 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Weidmann, B.; Xu, Y. Measuring Emotion Perception Ability Using AI-Generated Stimuli: Development and Validation of the PAGE Test. J. Intell. 2025, 13, 116. https://doi.org/10.3390/jintelligence13090116
