The Adaptation of the Wechsler Intelligence Scale for Children—5th Edition (WISC-V) for Indonesia: A Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Instrument

2.3. Adaptation Process of the WISC-V-ID

2.3.1. Permission for Translation and Adaptation

2.3.2. Expert Review

2.3.3. Forward and Backward Translation

2.4. Procedure

2.5. Analyses

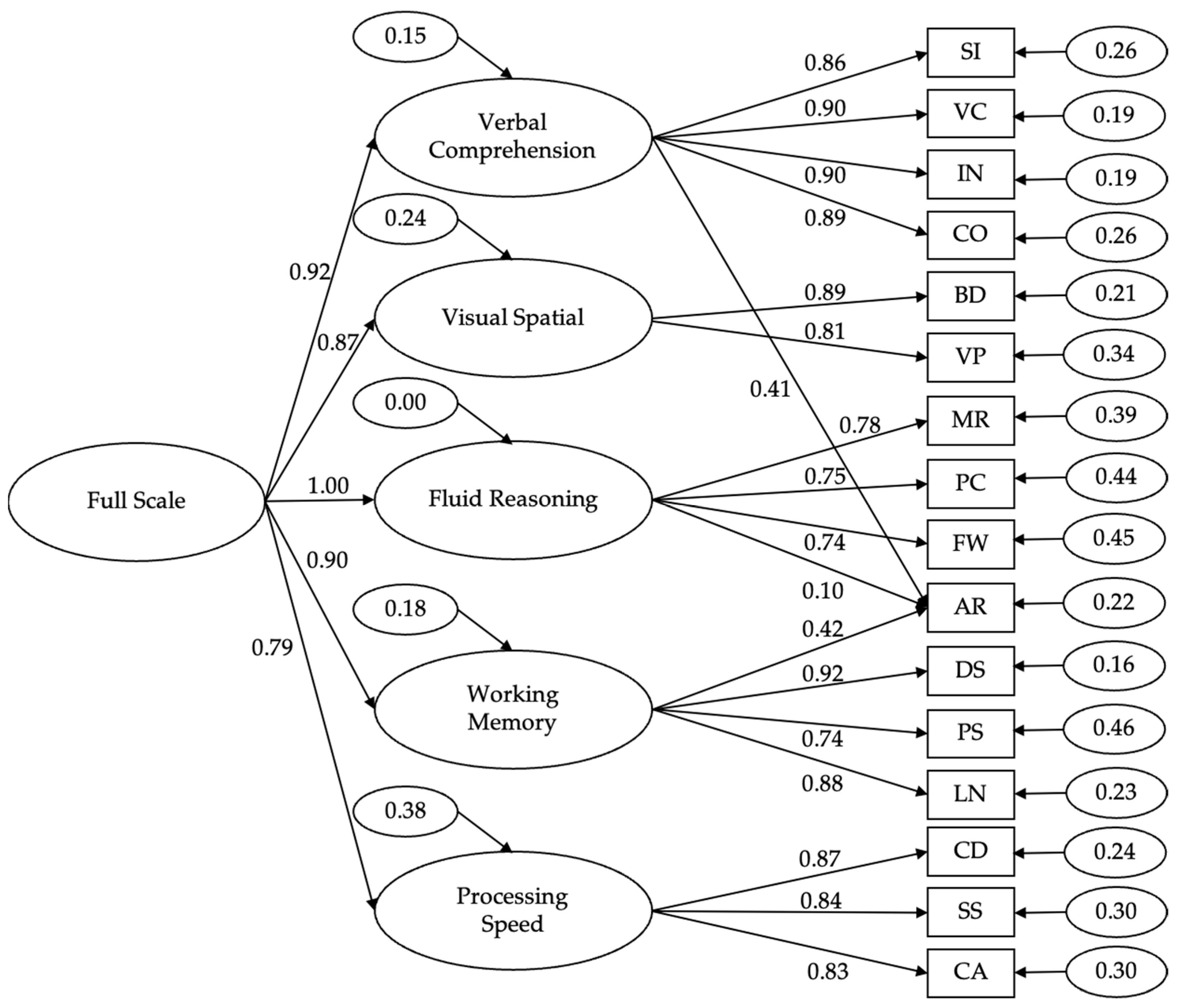

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Subtest | N of Items | Cronbach’s α, 6–9 (n = 67) | Cronbach’s α, 10–12 (n = 66) | Cronbach’s α, 13–16 (n = 88) | Cronbach’s α, Overall (n = 211) | McDonald’s ω, 6–9 (n = 67) | McDonald’s ω, 10–12 (n = 66) | McDonald’s ω, 13–16 (n = 88) | McDonald’s ω, Overall (n = 211) |
|---|---|---|---|---|---|---|---|---|---|
| BD | 13 | 0.77 (4.12) | 0.76 (4.89) | 0.72 (4.88) | 0.79 (4.87) | 0.80 (3.84) | 0.82 (4.23) | 0.82 (3.91) | 0.85 (4.12) |
| SI | 23 | 0.84 (2.70) | 0.72 (2.58) | 0.76 (2.67) | 0.84 (2.74) | 0.86 (2.52) | 0.73 (2.53) | 0.77 (2.61) | 0.86 (2.58) |
| MR | 32 | 0.78 (2.11) | 0.61 (2.03) | 0.63 (1.92) | 0.76 (2.04) | 0.78 (2.11) | 0.43 (2.46) | 0.63 (1.92) | 0.73 (2.18) |
| DS | 54 | 0.89 (2.01) | 0.81 (1.99) | 0.79 (2.18) | 0.89 (2.15) | 0.90 (1.92) | 0.81 (1.99) | 0.79 (2.18) | 0.90 (2.04) |
| CD | Not Calculated | | | | | | | | |
| VC | 29 | 0.79 (2.92) | 0.83 (3.40) | 0.82 (3.46) | 0.89 (3.43) | 0.81 (2.78) | 0.84 (3.30) | 0.83 (3.37) | 0.90 (3.32) |
| FW | 34 | 0.80 (2.03) | 0.79 (1.91) | 0.79 (1.79) | 0.83 (1.96) | 0.72 (2.40) | 0.42 (3.18) | 0.80 (1.74) | 0.84 (1.91) |
| VP | 29 | 0.77 (1.89) | 0.86 (1.83) | 0.80 (1.87) | 0.85 (1.87) | 0.79 (1.81) | 0.87 (1.76) | 0.82 (1.77) | 0.86 (1.78) |
| PS | 26 | 0.85 (2.99) | 0.81 (2.93) | 0.79 (2.97) | 0.85 (3.07) | 0.86 (2.89) | 0.83 (2.77) | 0.80 (2.90) | 0.87 (2.90) |
| SS | Not Calculated | | | | | | | | |
| IN | 31 | 0.81 (1.54) | 0.79 (1.52) | 0.76 (1.62) | 0.88 (1.64) | 0.83 (1.46) | 0.81 (1.45) | 0.79 (1.51) | 0.89 (1.57) |
| PC | 27 | 0.79 (1.83) | 0.74 (1.85) | 0.61 (1.81) | 0.78 (1.85) | 0.80 (1.78) | 0.75 (1.81) | 0.61 (1.81) | 0.79 (1.82) |
| LN | 30 | 0.87 (1.63) | 0.73 (1.43) | 0.63 (1.48) | 0.85 (1.58) | 0.89 (1.50) | 0.70 (1.51) | 0.62 (1.50) | 0.85 (1.60) |
| CA | Not Calculated | | | | | | | | |
| CO | 19 | 0.74 (2.12) | 0.69 (2.39) | 0.76 (2.58) | 0.83 (2.43) | 0.76 (2.04) | 0.69 (2.39) | 0.78 (2.47) | 0.84 (2.36) |
| AR | 34 | 0.88 (1.80) | 0.79 (1.95) | 0.75 (1.98) | 0.88 (1.99) | 0.89 (1.72) | 0.80 (1.90) | 0.77 (1.90) | 0.89 (1.90) |
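The internal-consistency coefficients in the table above can be sketched in Python as follows. This is not the authors' code, which the excerpt does not include. The sketch computes Cronbach's α from a child-by-item score matrix and, assuming the values in parentheses are standard errors of measurement in raw-score units (the extracted table does not label them), computes SEM = SD × √(1 − reliability). With the overall Block Design α of 0.79 and the raw-score SD of 10.63 from the descriptive statistics, this gives 4.87, the value shown in parentheses; other rows can differ in the second decimal because the tabled α values are rounded. The response matrix is hypothetical.

```python
import math
from statistics import variance
from typing import List


def cronbach_alpha(items: List[List[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per child and one column per item."""
    k = len(items[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across children.
    item_vars = [variance([row[j] for row in items]) for j in range(k)]
    # Sample variance of the total (summed) raw score.
    total_var = variance([sum(row) for row in items])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """SEM in raw-score units: SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1 - reliability)


# Hypothetical 0/1 responses of five children to four items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(responses), 2))  # 0.8

# Block Design, overall sample: alpha = 0.79 and raw-score SD = 10.63.
print(round(standard_error_of_measurement(10.63, 0.79), 2))  # 4.87
```

Both coefficients use sample variances (n − 1); because α is a ratio of variances, the choice of divisor cancels as long as it is applied consistently.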

| Subtest | N of Items | Original, Without Discontinue Rules | Original, With Discontinue Rules | Reordered, Without Discontinue Rules | Reordered, With Discontinue Rules |
|---|---|---|---|---|---|
| BD | 13 | 0.79 (4.87) | 0.81 (4.79) | 0.79 (4.87) | 0.81 (4.79) |
| SI | 23 | 0.84 (2.74) | 0.87 (2.65) | 0.84 (2.74) | 0.86 (2.63) |
| MR | 32 | 0.76 (2.04) | 0.88 (1.68) | 0.76 (2.04) | 0.88 (1.66) |
| DS | 54 | 0.89 (2.15) | 0.89 (2.15) | 0.89 (2.15) | 0.89 (2.11) |
| VC | 29 | 0.89 (3.43) | 0.93 (3.14) | 0.89 (3.43) | 0.93 (3.16) |
| FW | 34 | 0.83 (1.96) | 0.92 (1.66) | 0.82 (1.96) | 0.91 (1.67) |
| VP | 29 | 0.85 (1.87) | 0.89 (1.71) | 0.85 (1.87) | 0.89 (1.71) |
| PS | 26 | 0.85 (3.07) | 0.86 (3.02) | 0.85 (3.07) | 0.88 (2.98) |
| IN | 31 | 0.88 (1.64) | 0.90 (1.57) | 0.88 (1.66) | 0.90 (1.57) |
| PC | 27 | 0.78 (1.85) | 0.86 (1.64) | 0.78 (1.85) | 0.86 (1.62) |
| LN | 30 | 0.85 (1.58) | 0.88 (1.55) | 0.85 (1.58) | 0.87 (1.55) |
| CO | 19 | 0.83 (2.43) | 0.86 (2.31) | 0.83 (2.43) | 0.85 (2.35) |
| AR | 34 | 0.88 (1.99) | 0.90 (1.87) | 0.88 (1.98) | 0.90 (1.88) |

| Subtest | Original, Without Discontinue Rules: Mean (SD) | Original, With Discontinue Rules: Mean (SD) | Reordered, Without Discontinue Rules: Mean (SD) | Reordered, With Discontinue Rules: Mean (SD) |
|---|---|---|---|---|
| BD | 28.38 (10.63) | 27.83 (11.05) | 28.38 (10.63) | 27.83 (11.05) |
| SI | 21.82 (6.90) | 21.05 (7.33) | 21.82 (6.90) | 21.08 (7.24) |
| MR | 18.77 (4.20) | 16.87 (4.93) | 18.77 (4.20) | 16.78 (4.80) |
| DS | 24.48 (6.45) | 24.00 (6.53) | 24.48 (6.45) | 24.00 (6.53) |
| VC | 25.48 (10.48) | 22.04 (12.14) | 25.48 (10.48) | 24.36 (11.79) |
| FW | 21.76 (4.80) | 19.95 (5.83) | 21.76 (4.80) | 20.15 (5.68) |
| VP | 15.69 (4.76) | 14.76 (5.28) | 15.69 (4.76) | 14.77 (5.29) |
| PS | 28.43 (8.04) | 28.03 (8.17) | 28.43 (8.04) | 27.55 (8.62) |
| IN | 16.63 (4.74) | 15.87 (4.86) | 16.63 (4.74) | 16.07 (4.93) |
| PC | 13.22 (3.98) | 12.08 (4.32) | 13.22 (3.98) | 12.01 (4.31) |
| LN | 15.71 (4.12) | 15.35 (4.50) | 15.71 (4.12) | 15.35 (4.50) |
| CO | 15.63 (5.90) | 14.80 (6.09) | 15.63 (5.90) | 15.05 (6.06) |
| AR | 17.77 (5.71) | 16.96 (5.95) | 17.77 (5.71) | 17.00 (5.97) |
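The two tables above compare reliabilities and raw-score means with and without discontinue rules, for the original and a reordered item sequence. A minimal sketch of how such rescoring might be simulated from full-administration data follows. It assumes that once a child reaches a run of `stop_after` consecutive zero scores, every later item counts as 0, and that "reordered" means sorting items from easiest to hardest by observed mean score; the excerpt confirms neither. The `stop_after` values and all responses are hypothetical, and actual WISC-V discontinue criteria differ by subtest.

```python
from typing import List


def apply_discontinue(scores: List[int], stop_after: int) -> List[int]:
    """Rescore one child's item sequence as if testing stopped after
    `stop_after` consecutive scores of 0; later items are scored 0."""
    rescored: List[int] = []
    run_of_zeros = 0
    stopped = False
    for score in scores:
        if stopped:
            rescored.append(0)  # item would not have been administered
            continue
        rescored.append(score)
        run_of_zeros = run_of_zeros + 1 if score == 0 else 0
        if run_of_zeros == stop_after:
            stopped = True
    return rescored


def order_by_difficulty(matrix: List[List[int]]) -> List[int]:
    """Item indices from easiest (highest mean score) to hardest; the sort
    is stable, so tied items keep their original relative order."""
    n, k = len(matrix), len(matrix[0])
    means = [sum(row[j] for row in matrix) / n for j in range(k)]
    return sorted(range(k), key=lambda j: -means[j])


def reorder(row: List[int], order: List[int]) -> List[int]:
    """Return one child's scores in the given item order."""
    return [row[j] for j in order]


# Hypothetical child: total 4 without a rule, 2 with stop_after=3.
child = [1, 1, 0, 0, 0, 1, 1]
print(sum(child), sum(apply_discontinue(child, stop_after=3)))  # 4 2

# Hypothetical three-child sample in which everyone fails the second item.
sample = [
    [1, 0, 1, 1],
    [1, 0, 1, 0],
    [1, 0, 0, 1],
]
order = order_by_difficulty(sample)  # [0, 2, 3, 1]
first = sample[0]
print(sum(apply_discontinue(first, 1)),
      sum(apply_discontinue(reorder(first, order), 1)))  # 1 3
```

Because rescoring can only turn later item scores into zeros, totals never increase, which is consistent with every mean in the "With Discontinue Rules" columns lying below the corresponding "Without" mean. The toy sample also shows why item order matters under a discontinue rule: when a hard item sits early, a child can be stopped before reaching easier items.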


| Subtest | Maximum Score of Test | Correct Answer Range | Correct Answer Mean | Correct Answer SD |
|---|---|---|---|---|
| Block Design (BD) | 58 | 5–56 | 28.38 | 10.63 |
| Similarities (SI) | 46 | 2–41 | 21.82 | 6.90 |
| Matrix Reasoning (MR) | 32 | 3–28 | 18.77 | 4.20 |
| Digit Span (DS) | 54 | 4–38 | 24.48 | 6.45 |
| Coding (CD) | 117 | 13–97 | 47.22 | 18.29 |
| Vocabulary (VC) | 54 | 4–47 | 25.48 | 10.48 |
| Figure Weights (FW) | 34 | 3–31 | 21.76 | 4.80 |
| Visual Puzzles (VP) | 29 | 2–26 | 15.69 | 4.76 |
| Picture Span (PS) | 49 | 1–46 | 28.43 | 8.04 |
| Symbol Search (SS) | 60 | 3–60 | 28.28 | 9.32 |
| Information (IN) | 31 | 1–23 | 16.63 | 4.74 |
| Picture Concepts (PC) | 27 | 1–21 | 13.22 | 3.98 |
| Letter-Number Sequencing (LN) | 30 | 3–24 | 15.71 | 4.12 |
| Cancellation (CA) | 128 | 15–118 | 61.12 | 21.13 |
| Comprehension (CO) | 38 | 2–34 | 15.63 | 5.90 |
| Arithmetic (AR) | 34 | 4–29 | 17.77 | 5.71 |

| Subtest | Range of Score | Item Discrimination Range | Item Discrimination Mean | Item Discrimination SD | Item Difficulty Range | Item Difficulty Mean | Item Difficulty SD |
|---|---|---|---|---|---|---|---|
| Block Design (BD) | 0–7 | 0.11–0.75 | 0.45 | 0.22 | 0.13–3.69 | 2.17 | 1.12 |
| Similarities (SI) | 0–2 | 0.25–0.68 | 0.43 | 0.13 | 0.05–1.93 | 0.94 | 0.70 |
| Matrix Reasoning (MR) | 0–1 | −0.12–0.63 | 0.34 | 0.20 | 0.06–1.00 | 0.59 | 0.32 |
| Digit Span (DS) | 0–1 | 0.07–0.56 | 0.37 | 0.12 | 0.00–0.99 | 0.49 | 0.38 |
| Coding (CD) | Not calculated | | | | | | |
| Vocabulary (VC) | 0–2 | 0.08–0.73 | 0.47 | 0.17 | 0.22–1.91 | 0.87 | 0.40 |
| Figure Weights (FW) | 0–1 | −0.08–0.65 | 0.38 | 0.21 | 0.14–0.99 | 0.64 | 0.32 |
| Visual Puzzles (VP) | 0–1 | 0.02–0.62 | 0.39 | 0.18 | 0.06–0.99 | 0.54 | 0.33 |
| Picture Span (PS) | 0–2 | 0.11–0.60 | 0.44 | 0.12 | 0.01–1.94 | 1.09 | 0.63 |
| Symbol Search (SS) | Not calculated | | | | | | |
| Information (IN) | 0–1 | 0.03–0.67 | 0.44 | 0.17 | 0.00–0.99 | 0.50 | 0.35 |
| Picture Concepts (PC) | 0–1 | 0.07–0.60 | 0.34 | 0.14 | 0.01–0.97 | 0.49 | 0.33 |
| Letter-Number Sequencing (LN) | 0–1 | 0.06–0.71 | 0.40 | 0.17 | 0.01–0.99 | 0.54 | 0.39 |
| Cancellation (CA) | Not calculated | | | | | | |
| Comprehension (CO) | 0–2 | 0.14–0.63 | 0.45 | 0.14 | 0.01–1.95 | 0.82 | 0.59 |
| Arithmetic (AR) | 0–1 | −0.01–0.72 | 0.40 | 0.18 | 0.01–1.00 | 0.52 | 0.33 |
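The item statistics in the table above can be approximated as follows, under two assumptions the excerpt does not confirm: item difficulty is the mean item score (the proportion correct for items scored 0–1, which is consistent with Block Design difficulties above 1 for items scored 0–7), and item discrimination is the corrected item-total correlation, that is, the Pearson correlation between an item and the sum of the remaining items. Items that every child passes or fails have zero variance, so their discrimination is undefined and the sketch returns NaN. The responses are hypothetical.

```python
import math
from typing import List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; NaN when either variable has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def item_statistics(matrix: List[List[float]]) -> List[Tuple[float, float]]:
    """(difficulty, discrimination) per item: difficulty is the mean item
    score, discrimination the corrected item-total correlation."""
    totals = [sum(row) for row in matrix]
    stats = []
    for j in range(len(matrix[0])):
        item = [row[j] for row in matrix]
        rest = [t - s for t, s in zip(totals, item)]  # total without item j
        difficulty = sum(item) / len(item)
        stats.append((difficulty, pearson(item, rest)))
    return stats


# Hypothetical 0/1 responses of five children to four items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
for j, (p, r) in enumerate(item_statistics(responses), start=1):
    print(f"item {j}: difficulty = {p:.2f}, discrimination = {r:.2f}")
```

Removing the item from the total avoids the inflation that comes from correlating an item with a sum that contains it. A negative value, such as the minimum reported for Matrix Reasoning, flags an item that higher-scoring children tended to fail more often than lower-scoring children.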

| Subtest | N of Items | Cronbach’s α, 6–9 (n = 67) | Cronbach’s α, 10–12 (n = 66) | Cronbach’s α, 13–16 (n = 88) | Cronbach’s α, Overall (n = 211) | McDonald’s ω, 6–9 (n = 67) | McDonald’s ω, 10–12 (n = 66) | McDonald’s ω, 13–16 (n = 88) | McDonald’s ω, Overall (n = 211) |
|---|---|---|---|---|---|---|---|---|---|
| BD | 13 | 0.81 (4.01) | 0.78 (4.85) | 0.74 (4.74) | 0.81 (4.79) | 0.85 (3.56) | 0.84 (4.14) | 0.84 (3.72) | 0.87 (3.97) |
| SI | 23 | 0.88 (2.51) | 0.77 (2.44) | 0.80 (2.56) | 0.86 (2.63) | 0.90 (2.29) | 0.76 (2.49) | 0.81 (2.50) | 0.88 (2.51) |
| MR | 32 | 0.89 (1.60) | 0.85 (1.66) | 0.88 (1.22) | 0.88 (1.66) | 0.90 (1.52) | 0.86 (1.60) | 0.80 (1.57) | 0.89 (1.59) |
| DS | 54 | 0.90 (2.01) | 0.82 (1.94) | 0.80 (2.13) | 0.89 (2.11) | 0.91 (1.91) | 0.84 (1.83) | 0.80 (2.13) | 0.90 (2.06) |
| CD | Not Calculated | | | | | | | | |
| VC | 29 | 0.84 (2.35) | 0.89 (7.63) | 0.86 (3.36) | 0.93 (3.16) | 0.85 (2.28) | 0.90 (7.28) | 0.88 (3.11) | 0.94 (2.89) |
| FW | 34 | 0.90 (1.57) | 0.91 (4.38) | 0.88 (1.53) | 0.91 (1.67) | 0.92 (1.40) | 0.93 (3.86) | 0.89 (1.47) | 0.93 (1.50) |
| VP | 29 | 0.85 (1.62) | 0.91 (3.84) | 0.88 (1.68) | 0.89 (1.71) | 0.86 (1.56) | 0.91 (3.84) | 0.90 (1.53) | 0.91 (1.59) |
| PS | 26 | 0.88 (2.84) | 0.84 (2.85) | 0.83 (2.90) | 0.88 (2.98) | 0.89 (2.72) | 0.86 (2.67) | 0.84 (2.81) | 0.90 (2.71) |
| SS | Not Calculated | | | | | | | | |
| IN | 31 | 0.85 (1.40) | 0.85 (1.37) | 0.80 (1.54) | 0.90 (1.57) | 0.87 (1.30) | 0.86 (1.32) | 0.84 (1.37) | 0.91 (1.37) |
| PC | 27 | 0.85 (1.54) | 0.84 (1.62) | 0.79 (1.61) | 0.86 (1.62) | 0.86 (1.49) | 0.85 (1.57) | 0.80 (1.58) | 0.87 (1.55) |
| LN | 30 | 0.90 (1.55) | 0.83 (1.40) | 0.72 (1.46) | 0.87 (1.55) | 0.91 (1.47) | 0.82 (1.44) | 0.47 (2.02) | 0.88 (1.53) |
| CA | Not Calculated | | | | | | | | |
| CO | 19 | 0.77 (2.00) | 0.72 (2.35) | 0.80 (2.46) | 0.85 (2.35) | 0.79 (1.91) | 0.70 (2.43) | 0.82 (2.33) | 0.85 (2.35) |
| AR | 34 | 0.90 (1.68) | 0.83 (1.84) | 0.82 (1.89) | 0.90 (1.88) | 0.89 (1.77) | 0.85 (1.73) | 0.84 (1.78) | 0.91 (1.79) |
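McDonald's ω, reported alongside α above, is computed from a factor model rather than from item variances alone. For a single-factor model with standardized loadings λᵢ and uniquenesses θᵢ = 1 − λᵢ², ω_total = (Σλᵢ)² / ((Σλᵢ)² + Σθᵢ). The sketch below assumes the loadings have already been estimated (for example with an R package such as lavaan or psych); the loadings are hypothetical, and the excerpt does not state which ω variant or software the authors used.

```python
from typing import Sequence


def omega_total(loadings: Sequence[float]) -> float:
    """McDonald's omega (total) for a one-factor model with standardized
    loadings; each uniqueness is taken as 1 - loading**2."""
    common = sum(loadings) ** 2
    unique = sum(1.0 - lam ** 2 for lam in loadings)
    return common / (common + unique)


def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized alpha from the number of items and the mean inter-item r."""
    return k * mean_r / (1.0 + (k - 1) * mean_r)


# Hypothetical standardized loadings for a four-item subtest.
print(round(omega_total([0.7, 0.6, 0.5, 0.4]), 2))  # 0.64

# With equal loadings, omega coincides with standardized alpha computed
# from the implied inter-item correlation r = loading**2.
print(omega_total([0.6] * 5), standardized_alpha(5, 0.6 ** 2))
```

The second comparison illustrates why α and ω in the table are close for most subtests: α equals ω when loadings are equal, and the two diverge as loadings become more unequal across items.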

| Age Group | χ² | df | CFI | TLI | RMSEA | SRMR | AIC |
|---|---|---|---|---|---|---|---|
| Model 1 | | | | | | | |
| 6–9 | 141.80 | 99 | 0.94 | 0.92 | 0.08 | 0.06 | 6239 |
| 10–12 | 142.31 | 99 | 0.92 | 0.90 | 0.08 | 0.08 | 6168 |
| 13–16 | 179.23 | 99 | 0.87 | 0.84 | 0.10 | 0.09 | 8216 |
| All Ages | 240.10 | 99 | 0.95 | 0.94 | 0.08 | 0.04 | 20,944 |
| Model 2 | | | | | | | |
| 6–9 | 128.38 | 97 | 0.95 | 0.94 | 0.07 | 0.06 | 6230 |
| 10–12 | 130.72 | 97 | 0.93 | 0.92 | 0.07 | 0.08 | 6160 |
| 13–16 | 157.98 | 97 | 0.90 | 0.88 | 0.08 | 0.09 | 8198 |
| All Ages | 210.38 | 97 | 0.96 | 0.95 | 0.07 | 0.04 | 20,919 |
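The RMSEA column of the fit table above can be checked from χ², df, and sample size. The sketch uses the point estimate RMSEA = √(max(χ² − df, 0) / (df · N)); some texts use N − 1 instead of N, but with N and the group sizes given in the reliability tables (n = 67, 66, 88, and 211) every RMSEA value in the table is reproduced to two decimals. The sketch also runs a χ² difference test between Model 1 and Model 2, which is valid only if the two models are nested and estimated by ordinary maximum likelihood; the excerpt does not describe the models, so that comparison is illustrative. For exactly 2 degrees of freedom the χ² upper-tail probability is exp(−x/2), which keeps the sketch free of third-party libraries.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA with N (not N - 1) in the denominator."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * n))


def chi2_sf_df2(x: float) -> float:
    """Upper-tail p-value of a chi-square statistic with exactly 2 df."""
    return math.exp(-x / 2.0)


# (model, age group, chi2, df, N, reported RMSEA), copied from the fit table.
FIT_ROWS = [
    ("Model 1", "6-9", 141.80, 99, 67, 0.08),
    ("Model 1", "10-12", 142.31, 99, 66, 0.08),
    ("Model 1", "13-16", 179.23, 99, 88, 0.10),
    ("Model 1", "All ages", 240.10, 99, 211, 0.08),
    ("Model 2", "6-9", 128.38, 97, 67, 0.07),
    ("Model 2", "10-12", 130.72, 97, 66, 0.07),
    ("Model 2", "13-16", 157.98, 97, 88, 0.08),
    ("Model 2", "All ages", 210.38, 97, 211, 0.07),
]

for model, group, chi2, df, n, reported in FIT_ROWS:
    print(f"{model} {group}: RMSEA = {rmsea(chi2, df, n):.3f} (reported {reported:.2f})")

# Illustrative chi-square difference test for all ages (assumes nested ML models).
delta_chi2 = 240.10 - 210.38
delta_df = 99 - 97
print(f"delta chi2 = {delta_chi2:.2f}, delta df = {delta_df}, p = {chi2_sf_df2(delta_chi2):.1e}")
```

With N − 1 in the denominator, the Model 2 RMSEA for ages 13–16 would come out at about 0.085 and round to 0.09 rather than the reported 0.08, which is why the sketch uses N.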

| Composite | Age 6–9 | Age 10–12 | Age 13–16 | Overall |
|---|---|---|---|---|
| Verbal Comprehension (Gc) | 0.90 | 0.86 | 0.88 | 0.93 |
| Visual Spatial (Gv) | 0.79 | 0.83 | 0.74 | 0.84 |
| Fluid Reasoning (Gf) | 0.73 | 0.65 | 0.69 | 0.80 |
| Working Memory (Gwm) | 0.90 | 0.82 | 0.78 | 0.91 |
| Processing Speed (Gs) | 0.79 | 0.81 | 0.59 | 0.89 |
| Full Scale IQ | 0.93 | 0.92 | 0.92 | 0.96 |

| Subtest | BD | SI | MR | DS | CD | VC | FW | VP | PS | SS | IN | PC | LN | CA | CO | AR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Age * | 0.53 | 0.61 | 0.55 | 0.65 | 0.76 | 0.71 | 0.46 | 0.44 | 0.53 | 0.58 | 0.72 | 0.53 | 0.64 | 0.72 | 0.68 | 0.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yudiana, W.; Hendriks, M.P.H.; Suwartono, C.; Novita, S.; Abidin, F.A.; Kessels, R.P.C. The Adaptation of the Wechsler Intelligence Scale for Children—5th Edition (WISC-V) for Indonesia: A Pilot Study. J. Intell. 2025, 13, 76. https://doi.org/10.3390/jintelligence13070076


Yudiana, Whisnu, Marc P. H. Hendriks, Christiany Suwartono, Shally Novita, Fitri Ariyanti Abidin, and Roy P. C. Kessels. 2025. "The Adaptation of the Wechsler Intelligence Scale for Children—5th Edition (WISC-V) for Indonesia: A Pilot Study" Journal of Intelligence 13, no. 7: 76. https://doi.org/10.3390/jintelligence13070076
