An Assessment of Human–AI Interaction Capability in the Generative AI Era: The Influence of Critical Thinking

Abstract

1. Introduction

2. Theoretical Framework

2.1. Definition and Core Elements of Human–AI Interaction Capability

2.2. The Nature and Assessment of Critical Thinking

3. The Rubric for the Iterative Generation of HAII-Capability

4. Task Design and Research Method

4.1. Task Design

- (1) Ill-structuredness. Ill-structuredness serves as a core design principle because an ill-structured problem inherently embodies both complexity and challenge. Ill-structured problems are those on which opposing or contradictory evidence and opinions exist and for which no single correct solution can be determined by a specific decision-making process (Kitchner 1983). Jonassen (2000) distinguished between well-structured and ill-structured problems, proposing 11 types of problems with varying characteristics. Ordered from well structured to ill structured, these are: logical problems, algorithmic problems, story problems, rule-using problems, decision-making problems, troubleshooting problems, diagnosis-solution problems, strategic performance problems, case analysis problems, design problems, and dilemmas. Therefore, in the task design of this study, tasks similar to dilemmas were chosen wherever possible, to ensure that participants could not directly obtain the final answer from GenAI, thus leaving room for sufficient interaction with GenAI (Ku 2009).

- (2) Authenticity. Authentic problems hold greater intrinsic value and significance for humans, thereby more readily stimulating interest in their resolution. To ensure task authenticity, this study selected one problem from the social domain and one from the scientific domain, both drawn from existing and relevant problems in society and life.

- (3) Limited experientiality. Whenever a problem is authentic, it is challenging to prevent participants from drawing on their prior experiences and existing knowledge. Therefore, it is impossible to completely exclude the influence of participants’ varying experiences on their interactive performance. However, when selecting tasks, a balance must be struck. On the one hand, participants should have some prior knowledge of the problem to serve as a starting point for exploration; on the other hand, most participants should not have extensive experience with the problem, so that there is room for further exploration and their ability to interact with GenAI can be fully assessed through their interactive behaviors.

Based on the above criteria, this study selected one scientific problem scenario and one social problem scenario, and developed specific task requirements, as detailed in Table 3.

4.2. Research Method

4.2.1. Participants and Procedure

4.2.2. Materials

5. Results

5.1. Reliability

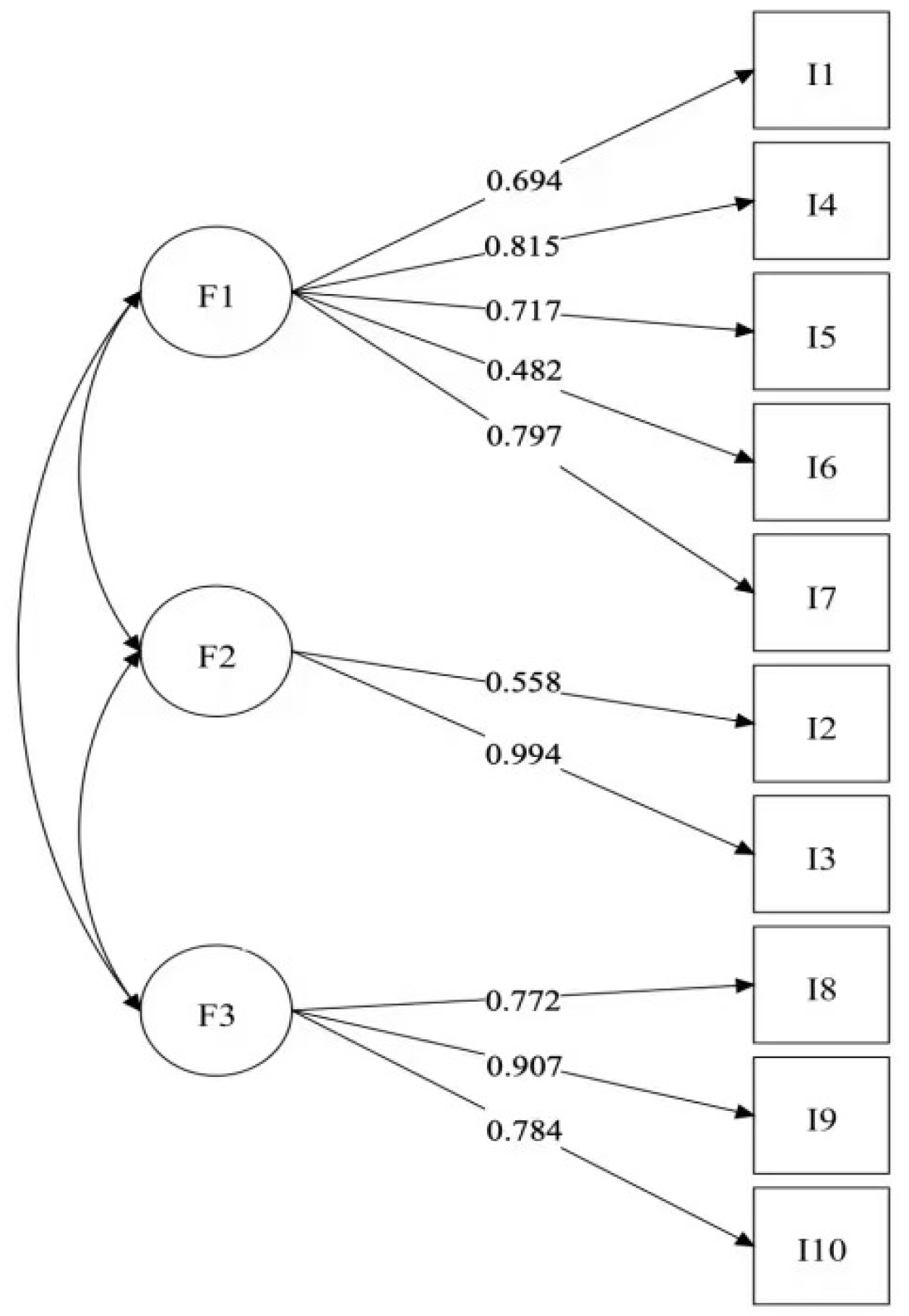

5.2. Validity

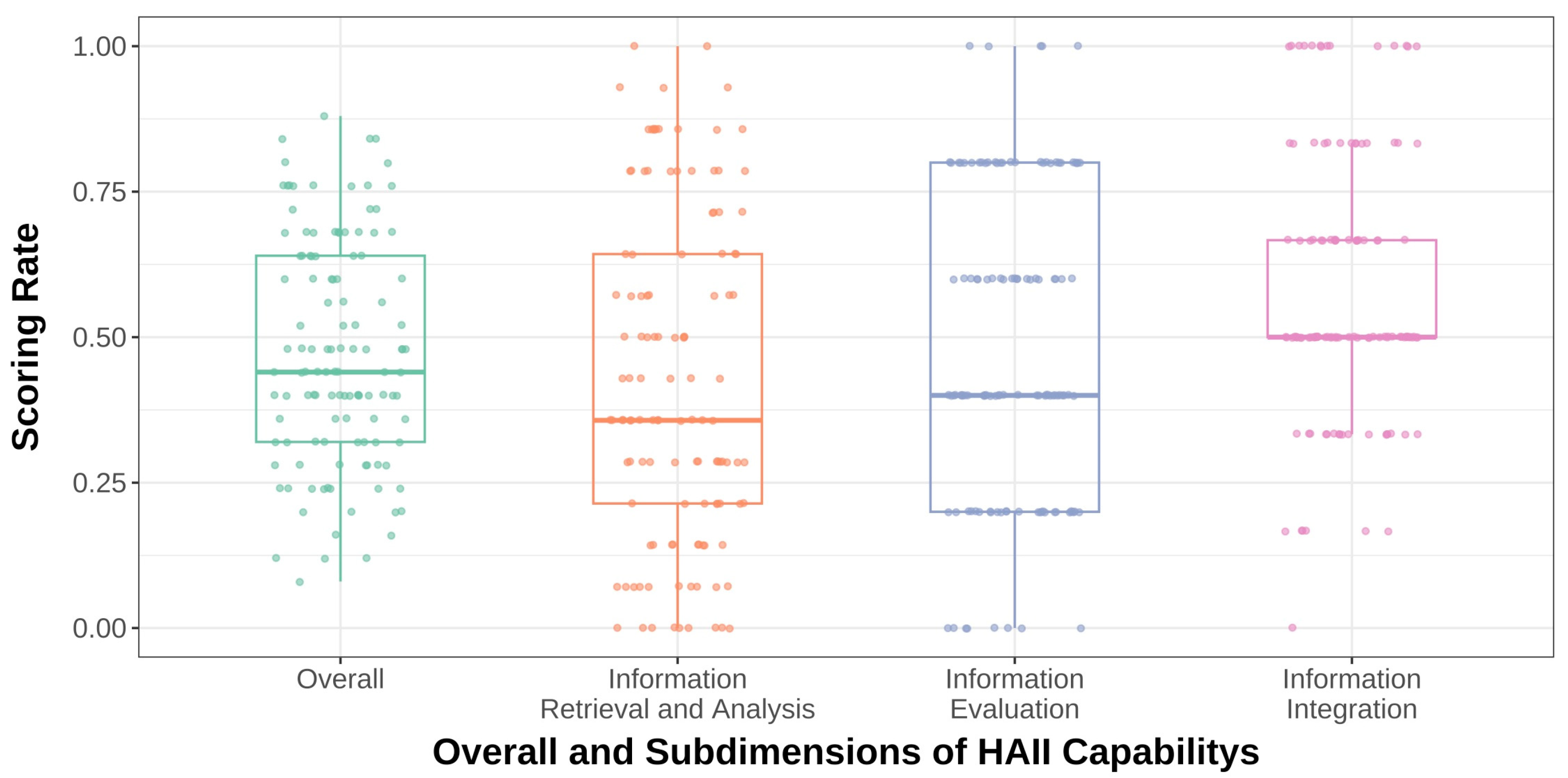

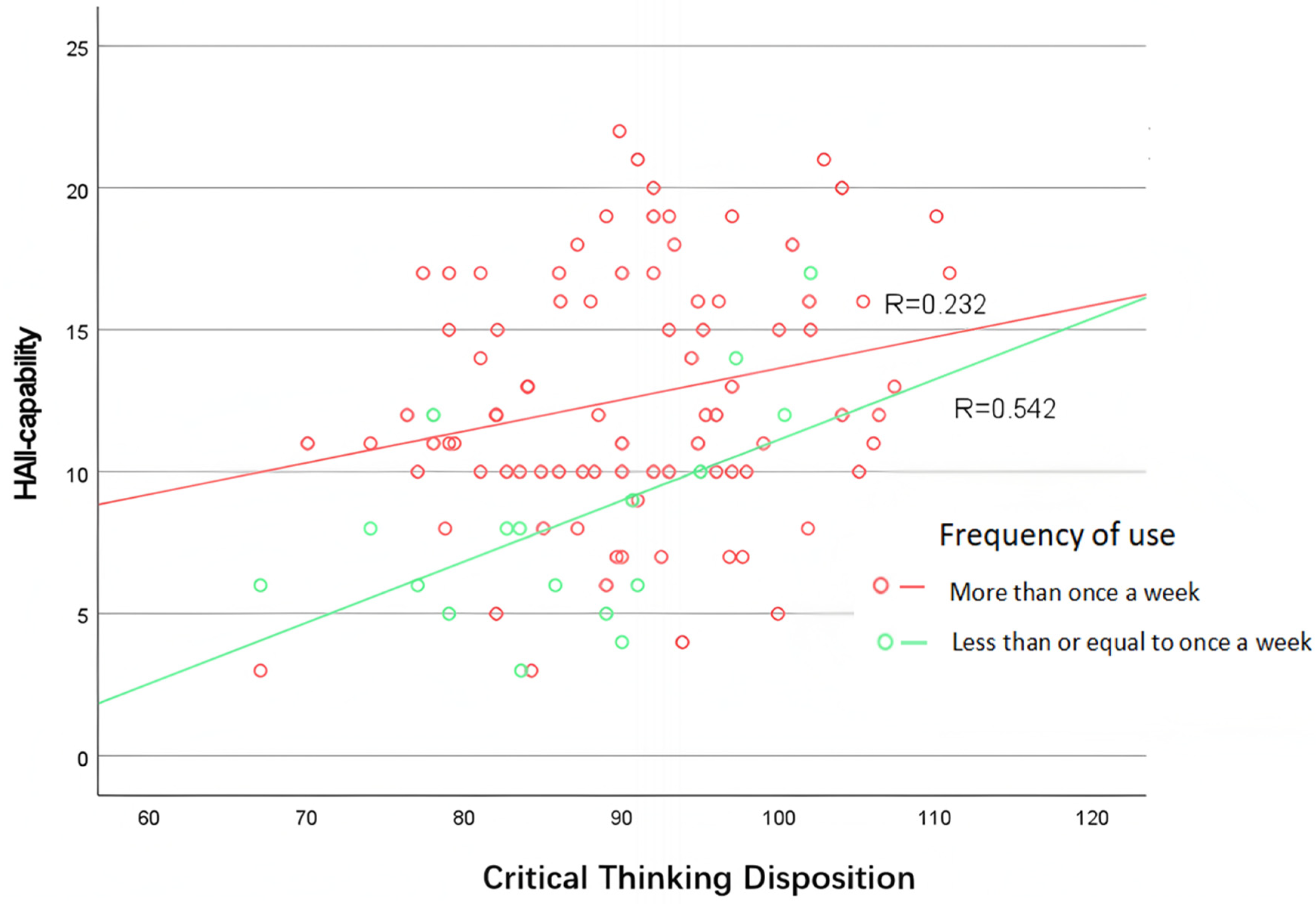

5.3. Overview and Influencing Factors of Students’ HAII-Capability

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Dimension | Scoring Rubrics | 0 | 1 | 2 | 3 |
|---|---|---|---|---|---|
| F1: Information Retrieval and Analysis | U1: Number of valid prompts | Up to three prompts | Between 3 and 5 prompts | Between 5 and 10 prompts | At least 10 prompts |
| | U2: Cognitive levels of questions | All prompts focus on low-order thinking such as knowledge, comprehension | 1 to 2 high-order prompts, such as application, analysis, synthesis, and evaluation | More than 3 high-order prompts | Most of prompts are higher-order cognitive levels. |
| | U3: Multi-perspective prompts | — | Prompts from 1 to 2 perspectives | Prompts from 3 to 5 perspectives | Prompts from more than 5 perspectives |
| | U4: Follow-up inquiries on GenAI responses | No follow-up inquiries on GenAI responses | Follow-up inquiries into basic factual knowledge | Follow-up inquiries stemming from deliberate analysis and reasoning | — |
| | U5: Continuously refining prompts based on GenAI responses | The prompt does not undergo any adjustments | There are 1 to 2 adjustments made | There are more than 3 adjustments made | — |
| | U6: The prompt chain exhibits structure and logical relations | There is no logical relationship between the prompts | Prompts exhibit 1 to 3 instances of logical relationships | Prompts exhibit 3 to 5 instances of logical relationships | Prompts exhibit more than 5 instances of logical relationships |
| F2: Information Evaluation | U7: Frequency and quality of justified queries | Obvious errors in GenAI responses go unqueried | No room for queries in GenAI responses/selective query | Querying and analysis take place | — |
| | U8: Critically evaluating and substantiating relevance, credibility, and logical strength in GenAI responses | No evaluation of relevance, credibility, and logical strength | Evaluation of three aspects without justification | Evaluation of three aspects with partial justification | Evaluation of three aspects with full justification and examples |
| F3: Information Integration | U9: Screening and refining evidence from information | Conclusions are drawn without any supporting evidence | The GenAI response is copied without selecting fully relevant evidence to support the conclusion | Evidence is carefully selected from the GenAI response to ensure the necessity and relevance of the arguments | — |
| | U10: Justifying from multiple perspectives | Only one aspect is considered | Analyzing multiple aspects, but with limited depth | Multiple aspects are thoroughly and comprehensively analyzed | — |
| | U11: Logical consistency of argumentation | Direct copying of GenAI response without independent reasoning | Superficial processing with logical gaps | Refined processing with a complete logical chain | — |
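To illustrate how the rubric in Appendix A could be applied mechanically, the following Python sketch encodes the maximum score of each criterion and aggregates criterion scores into dimension totals. It is a minimal sketch under stated assumptions, not the authors' scoring procedure: the names `MAX_SCORE`, `DIMENSIONS`, `score_u1`, and `dimension_totals` are hypothetical, the U1 band boundaries resolve the overlapping ranges in the rubric (3, 5, and 10 prompts) in one particular way, and dimension scores are assumed to be simple sums of criterion scores.

```python
from typing import Dict, List

# Highest score level defined for each criterion in the Appendix A rubric
# (a "—" cell in the rubric means that level is not defined).
MAX_SCORE: Dict[str, int] = {
    "U1": 3, "U2": 3, "U3": 3, "U4": 2, "U5": 2, "U6": 3,  # F1
    "U7": 2, "U8": 3,                                      # F2
    "U9": 2, "U10": 2, "U11": 2,                           # F3
}

# Criteria grouped by dimension, as listed in the rubric.
DIMENSIONS: Dict[str, List[str]] = {
    "F1": ["U1", "U2", "U3", "U4", "U5", "U6"],
    "F2": ["U7", "U8"],
    "F3": ["U9", "U10", "U11"],
}


def score_u1(valid_prompts: int) -> int:
    """Map a count of valid prompts to a U1 score.

    ASSUMPTION: the rubric bands overlap at 3, 5, and 10 prompts;
    here 3 falls in band 0, 5 in band 1, and 10 in band 3.
    """
    if valid_prompts < 0:
        raise ValueError("number of prompts cannot be negative")
    if valid_prompts <= 3:
        return 0
    if valid_prompts <= 5:
        return 1
    if valid_prompts < 10:
        return 2
    return 3


def dimension_totals(scores: Dict[str, int]) -> Dict[str, int]:
    """Sum criterion scores per dimension (ASSUMPTION: plain sums)."""
    for criterion, value in scores.items():
        if not 0 <= value <= MAX_SCORE[criterion]:
            raise ValueError(f"{criterion} score {value} is out of range")
    totals = {
        dim: sum(scores[c] for c in criteria)
        for dim, criteria in DIMENSIONS.items()
    }
    totals["Total"] = sum(totals.values())
    return totals


# Hypothetical ratings for one participant on one task.
example = {
    "U1": score_u1(7), "U2": 1, "U3": 2, "U4": 1, "U5": 1, "U6": 1,
    "U7": 1, "U8": 2, "U9": 2, "U10": 1, "U11": 1,
}
result = dimension_totals(example)
```

Under these assumptions the maximum attainable scores are 16 for F1, 5 for F2, and 6 for F3. Criteria such as U2 or U8 still require human judgment; only count-based criteria like U1 lend themselves to a direct mapping of this kind.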

References

- ACRL Board. 2016. Framework for Information Literacy for Higher Education. Chicago: Association of College and Research Libraries.

- Aloisi, Cesare, and A. Callaghan. 2018. Threats to the validity of the Collegiate Learning Assessment (CLA+) as a measure of critical thinking skills and implications for Learning Gain. Higher Education Pedagogies 3: 57–82.

- American Library Association. 2000. Information Literacy Competency Standards for Higher Education. Tucson: The University of Arizona Libraries.

- Azcárate, Asunción Lopez-Varela. 2024. Foresight Methodologies in Responsible GenAI Education: Insights from the Intermedia-Lab at Complutense University Madrid. Education Sciences 14: 834.

- Bang, Yejin, Samuel Cahyawijaya, Nayeon Lee, Wenliang Dai, Dan Su, Bryan Wilie, and Pascale Fung. 2023. A multitask, multilingual, multimodal evaluation of ChatGPT on reasoning, hallucination, and interactivity. arXiv:2302.04023.

- Bernard, Robert M., Zhang Dai, Philip C. Abrami, Fiore Sicoly, Evgueni Borokhovski, and Michael A. Surkes. 2008. Exploring the structure of the Watson–Glaser Critical Thinking Appraisal: One scale or many subscales? Thinking Skills and Creativity 3: 15–22.

- Bower, Matt, Jodie Torrington, Jennifer W. M. Lai, Peter Petocz, and Mark Alfano. 2024. How should we change teaching and assessment in response to increasingly powerful generative Artificial Intelligence? Outcomes of the ChatGPT teacher survey. Education and Information Technologies 29: 15403–39.

- Bundy, Alan. 2004. Australian and New Zealand information literacy framework. Principles, Standards and Practice 2: 48.

- Byrnes, James P., and Kevin N. Dunbar. 2014. The nature and development of critical-analytic thinking. Educational Psychology Review 26: 477–93.

- Dan, Wu, and Jing Liu. 2022. Algorithmic Literacy in the Era of Artificial Intelligence: Connotation Analysis and Competency Framework Construction. Journal of Library Science in China 48: 43–56.

- Dwyer, Christopher P., Michael J. Hogan, and Ian Stewart. 2014. An integrated critical thinking framework for the 21st century. Thinking Skills and Creativity 12: 43–52.

- Else, Holly. 2023. Abstracts written by ChatGPT fool scientists. Nature 613: 423.

- Ennis, Robert H. 1993. Critical thinking assessment. Theory into Practice 32: 179–86.

- Facione, Peter A. 1990. Critical Thinking: A Statement of Expert Consensus for Purposes of Educational Assessment and Instruction (The Delphi Report). Millbrae: The California Academic Press.

- Facione, Peter A., Noreen C. Facione, and Carol Ann F. Giancarlo. 1996. The motivation to think in working and learning. New Directions for Higher Education, 67–80.

- Feng, Jianjun. 2023. How Do We View ChatGPT’s Challenges to Education. China Educational Technology 7: 1–6+13.

- Halpern, Diane F. 2007. The Nature and Nurture of Critical Thinking. Cambridge: Cambridge University Press.

- Halpern, Diane F. 2013. Thought and Knowledge: An Introduction to Critical Thinking. Hove: Psychology Press.

- Heersmink, Richard, Barend de Rooij, María Jimena Clavel Vázquez, and Matteo Colombo. 2024. A phenomenology and epistemology of large language models: Transparency, trust, and trustworthiness. Ethics and Information Technology 26: 41.

- Hou, Yubo, Qiangqiang Li, and Hao Li. 2022. Chinese critical thinking: Structure and measurement. Beijing Da Xue Bao 58: 383–90.

- Huang, Ruhua, Leyi Shi, Yingqiang Wu, and Tian Chen. 2024a. Constructing Content Framework for Artificial Intelligence Literacy. Documentation, Information & Knowledge 41: 27–37.

- Huang, Xiaowei, Wenjie Ruan, Wei Huang, Gaojie Jin, Dong Yi, Changshun Wu, and Mustafa A. Mustafa. 2024b. A survey of safety and trustworthiness of large language models through the lens of verification and validation. Artificial Intelligence Review 57: 175.

- Jiang, Liming, Yujie Liu, and Fang Luo. 2022. Assessing critical thinking about real-world problems: Status quo and challenges. Chinese Journal of Distance Education 12: 58–67, 77, 83. (In Chinese).

- Jiao, Jianli. 2023. ChatGPT: A Friend or an Enemy of School Education? Modern Educational Technology 33: 5–15.

- Jonassen, David H. 2000. Toward a design theory of problem solving. Educational Technology Research and Development 48: 63–85.

- Kitchner, Karen Strohm. 1983. Cognition, metacognition, and epistemic cognition: A three-level model of cognitive processing. Human Development 26: 222–32.

- Kong, Siu-Cheung, William Man-Yin Cheung, and Guo Zhang. 2021. Evaluation of an artificial intelligence literacy course for university students with diverse study backgrounds. Computers and Education: Artificial Intelligence 2: 100026.

- Ku, Kelly Y. L. 2009. Assessing students’ critical thinking performance: Urging for measurements using multi-response format. Thinking Skills and Creativity 4: 70–76.

- Liang, Jiachen, Jiayin Wang, and Shen-Hung Chou. 2020. A designed platform for programming courses. Paper presented at 2020 9th International Congress on Advanced Applied Informatics (IIAI-AAI), Kitakyushu, Japan, September 1–15; pp. 290–93.

- Lo, Leo S. 2023. The CLEAR path: A framework for enhancing information literacy through prompt engineering. The Journal of Academic Librarianship 49: 102720.

- Miao, Fengchun, and Wayne Holmes. 2021. International Forum on GenAI and the Futures of Education, Developing Competencies for the GenAI Era, 7–8 December 2020: Synthesis Report. Paris: United Nations Educational, Scientific and Cultural Organization.

- Mo, Zuying, Daqing Pang, Huan Liu, and Yueming Zhao. 2023. Analysis on AIGC false information problem and root cause from the perspective of information quality. Documentation, Information and Knowledge 40: 32–40.

- Paul, Richard, and Linda Elder. 2008. The Miniature Guide to Critical Thinking Concepts and Tools. Dillon Beach: Foundation for Critical Thinking Press.

- Qiu, Yannan, and Zhengtao Li. 2023. Challenges, Integration and Changes: A Review of the Conference on ChatGPT and Future Education. Modern Distance Education Research 35: 3–12+21.

- Ramos, Howard. 2009. Review of Developing research questions: A guide for social scientists, by Patrick White. Canadian Journal of Sociology 34: 1145–47.

- Ruan, Yifan, and Zhibo Wang. 2023. The Ethical Risks of, and Countermeasures against, ChatGPT in Ideological and Political Education. Studies on Core Socialist Values 9: 50–58.

- Shavelson, Richard J., Olga Zlatkin-Troitschanskaia, and Julián P. Mariño. 2018. International performance assessment of learning in higher education (iPAL): Research and development. In Assessment of Learning Outcomes in Higher Education: Cross-National Comparisons and Perspectives. Berlin/Heidelberg: Springer, pp. 193–214.

- van den Berg, Geesje, and Elize du Plessis. 2023. ChatGPT and Generative AI: Possibilities for Its Contribution to Lesson Planning, Critical Thinking and Openness in Teacher Education. Education Sciences 13: 998.

- Wang, Bingcheng, Pei-Luen Patrick Rau, and Tianyi Yuan. 2023a. Measuring user competence in using artificial intelligence: Validity and reliability of artificial intelligence literacy scale. Behaviour & Information Technology 42: 1324–37.

- Wang, Mi, and Zhixian Zhong. 2008. On designing learning environments for promoting knowledge construction. Open Education Research 14: 22–7.

- Wang, Youmei, Dan Wang, Weiyi Liang, and Chenchen Liu. 2023b. “Aladdin’s lamp” or “Pandora’s box”: The potential and risks of ChatGPT’s educational application. Modern Distance Education Research 2: 48–56.

- Wu, Hongzhi. 2014. Socratic model of critical thinking. Journal of Yan’an University (Social Sciences Edition) 36: 5–13.

- Xing, Yao, Fang Wang, and Wenjie Li. 2024. Generation Evolution of Complex Cognition of Graduate Students in the GenAIGC Environment—“Evidentiary Reasoning” Based on ChatGPT. Modern Educational Technology 34: 47–59.

- Yuan, Chien Wen, Hsin-yi Sandy Tsai, and Yuting Chen. 2024. Charting competence: A holistic scale for measuring proficiency in artificial intelligence literacy. Journal of Educational Computing Research 62: 1675–704.

- Zeng, Xiaomu, Ping Sun, Mengli Wang, and Weichun Du. 2006. The development of information literacy competency standards for higher education in Beijing. Journal of Academic Libraries 3: 64–67.

- Zhang, Sufang. 1998. The Definition of Information Capacity. Library and Information Service 12: 6, 16–17.

- Zhou, Xue, Teng Da, and Al-Samarraie Hosam. 2024. The Mediating Role of Generative AI Self-Regulation on Students’ Critical Thinking and Problem-Solving. Education Sciences 14: 1302.

- Zurkowski, Paul G. 1974. The Information Service Environment Relationships and Priorities. Related Paper No. 5. Washington, DC: National Commission on Libraries and Information Science.

| HAII-Capability | |
|---|---|
| Information Retrieval | Be able to analyze the initial information, query AIGC for information, clearly comprehend the meaning conveyed by the information, and promptly follow up when something is not understood. During the interaction, continuously identify the gap between the required information and the information provided by AIGC, and adaptively query AIGC to obtain further information. |
| Information Analysis | |
| Information Evaluation | Be able to critically evaluate the relevance, credibility, and logical strength of the information and assess whether the information has omissions or biases |
| Information Integration | Be able to extract, filter, and synthesize the relevant main points from the information provided by AIGC according to the requirements of the task, and summarize (or organize) the information or draw reasonable conclusions based on the existing claims and evidence provided by AIGC, combined with one’s own ideas, from multiple aspects and with complete logic. |

| Dimensions | Criteria |
|---|---|
| F1: Information Retrieval and analysis | U1: Number of valid prompts |
| | U2: Cognitive levels of questions |
| | U3: Multi-perspective prompts |
| | U4: Follow-up inquiries on GenAI responses |
| | U5: Continuously refining prompts based on GenAI responses |
| | U6: Prompt chain exhibits structure and logical relations |
| F2: Information evaluation | U7: Frequency and quality of justified queries |
| | U8: Critically evaluating and substantiating relevance, credibility, and logical strength in GenAI responses |
| F3: Information integration | U9: Screening and refining evidence from information |
| | U10: Justifying from multiple perspectives |
| | U11: Logical consistency of argumentation |

| Problem Domain | Problem Scenario | Prompts |
|---|---|---|
| Social issues | During this year’s Two Sessions, the representatives of the National People’s Congress made many suggestions, such as ‘suggesting the establishment of an electronic medical record platform’ to unify patient cases from across the country into a comprehensive platform. The public has also been actively discussing this. Please communicate with ChatGPT to gather as much information as possible and complete the following tasks. | 1. Based on its response and your own contemplation, decide for yourself whether to support this issue and provide reasons |
| | | 2. Evaluate the relevance, credibility, and logicality of ChatGPT’s responses, and please provide examples to illustrate your points. |
| Scientific issues | If there is a myopic patient, the currently known treatment methods include Femtosecond Laser, Femtosecond-assisted LASIK, Excimer Laser, and Phakic Intraocular Lens Implantation. Please communicate with ChatGPT to gather as much information as possible and complete the following tasks | 1. Choose a treatment method that minimizes bodily harm, has the fewest subsequent side effects, and has a low probability of recurrence, or do not treat at all, and explain the reasons for your choice. |
| | | 2. Assess the relevance, credibility, and logicality of ChatGPT’s responses, and illustrate with examples |

| Six Evaluators | Evaluators 1 and 2 | Evaluators 3 and 4 | Evaluators 5 and 6 |
|---|---|---|---|
| 0.756 | 0.916 | 0.851 | 0.765 |

| Total | Information Retrieval and Analysis | Information Evaluation | Information Integration |
|---|---|---|---|
| 0.75 | 0.816 | 0.716 | 0.713 |

| Dimension | Criterion | |
|---|---|---|
| F1: Information retrieval and analysis | U2 | 0.734 |
| | U3 | 0.776 |
| | U4 | 0.733 |
| | U5 | 0.503 |
| | U6 | 0.778 |
| F2: Information evaluation | U7 | 0.768 |
| | U8 | 0.611 |
| F3: Information integration | U9 | 0.697 |
| | U10 | 0.790 |
| | U11 | 0.854 |

| Models | χ²/df | CFI | TLI | RMSEA | SRMR |
|---|---|---|---|---|---|
| One-factor | 6.37 | 0.57 | 0.44 | 0.21 | 0.18 |
| Three-factor | 1.34 | 0.975 | 0.965 | 0.053 | 0.068 |
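For readers less familiar with the fit indices in the model comparison above, the block below gives their standard textbook definitions; it is a reference sketch and not a description of how the authors' software computed them. The notation is an assumption introduced here: $M$ denotes the fitted model, $B$ the baseline (independence) model, and $N$ the sample size. Some programs use $N$ rather than $N-1$ in the RMSEA denominator.

```latex
\begin{align*}
\mathrm{RMSEA} &= \sqrt{\frac{\max\left(\chi^2_M - df_M,\ 0\right)}{df_M\,(N-1)}} \\[4pt]
\mathrm{CFI} &= 1 - \frac{\max\left(\chi^2_M - df_M,\ 0\right)}
                        {\max\left(\chi^2_M - df_M,\ \chi^2_B - df_B,\ 0\right)} \\[4pt]
\mathrm{TLI} &= \frac{\chi^2_B/df_B - \chi^2_M/df_M}{\chi^2_B/df_B - 1}
\end{align*}
```

Against commonly used guidelines (CFI and TLI of roughly 0.95 or higher, RMSEA of roughly 0.06 or lower, SRMR of roughly 0.08 or lower), the three-factor values reported above fall within the acceptable range on every index, whereas the one-factor values do not.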

| | Information Retrieval and Analysis | Information Evaluation | Information Integration | Total |
|---|---|---|---|---|
| Critical thinking disposition | 0.355 ** | 0.14 | 0.043 | 0.317 ** |

| | Information Retrieval and Analysis (M ± SD) | t | Information Evaluation (M ± SD) | t | Information Integration (M ± SD) | t | Total (M ± SD) | t |
|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | |
| Male | 6.0 ± 3.9 | 0.1 | 3.0 ± 1.2 | 4.2 ** | 3.6 ± 1.4 | 0.8 | 12.6 ± 5.0 | 1.4 * |
| Female | 5.9 ± 3.9 | | 2.0 ± 1.2 | | 3.3 ± 1.3 | | 11.3 ± 4.6 | |
| Discipline | | | | | | | | |
| Science | 6.6 ± 3.9 | 2.6 ** | 2.6 ± 1.4 | 2.2 * | 3.4 ± 1.5 | 0.1 | 12.8 ± 5.0 | 2.8 ** |
| Humanities | 4.8 ± 3.6 | | 2.0 ± 1.0 | | 3.4 ± 1.1 | | 10.3 ± 3.9 | |
| Usage Frequency | | | | | | | | |
| >Once a week | 6.5 ± 3.7 | 4.1 ** | 2.5 ± 1.3 | 2.6 * | 3.4 ± 1.3 | −0.2 | 12.5 ± 4.6 | 4.1 ** |
| <=Once a week | 2.8 ± 3.2 | | 1.7 ± 0.9 | | 3.5 ± 1.2 | | 8.0 ± 3.5 | |

| | F1 (M ± SD) | F | F2 (M ± SD) | F | F3 (M ± SD) | F | Total (M ± SD) | F |
|---|---|---|---|---|---|---|---|---|
| Task 1: (Social Issues) | | | | | | | | |
| Fully Parallel | 5.1 ± 4.4 | 5.5 ** | 4.6 ± 2.6 | 0.5 | 2.6 ± 1.6 | 3.8 * | 12.3 ± 5.2 | 6.3 ** |
| Fully Progressive | 5.7 ± 3.7 | | 4.7 ± 3.2 | | 3.1 ± 1.7 | | 13.5 ± 6.4 | |
| Initially Parallel then Progressive | 8.0 ± 3.9 | | 5.2 ± 3.3 | | 3.6 ± 1.7 | | 16.8 ± 6.8 | |
| Task 2: (Scientific Issues) | | | | | | | | |
| Fully Parallel | 7.1 ± 4.5 | 8.8 ** | 4.7 ± 2.5 | 1.8 | 2.5 ± 1.6 | 0.4 | 14.4 ± 6.2 | 7.7 ** |
| Fully Progressive | 9.5 ± 3.2 | | 4.6 ± 3.1 | | 2.9 ± 1.6 | | 17.0 ± 4.2 | |
| Initially Parallel then Progressive | 10.6 ± 3.5 | | 5.7 ± 2.8 | | 2.5 ± 1.7 | | 19.0 ± 5.0 | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, F.; Yan, X.; Su, H.; Shen, R.; Mao, G. An Assessment of Human–AI Interaction Capability in the Generative AI Era: The Influence of Critical Thinking. J. Intell. 2025, 13, 62. https://doi.org/10.3390/jintelligence13060062
