Bootstrap Exploratory Graph Analysis of the WISC–V with a Clinical Sample

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Instruments

2.3. Analysis

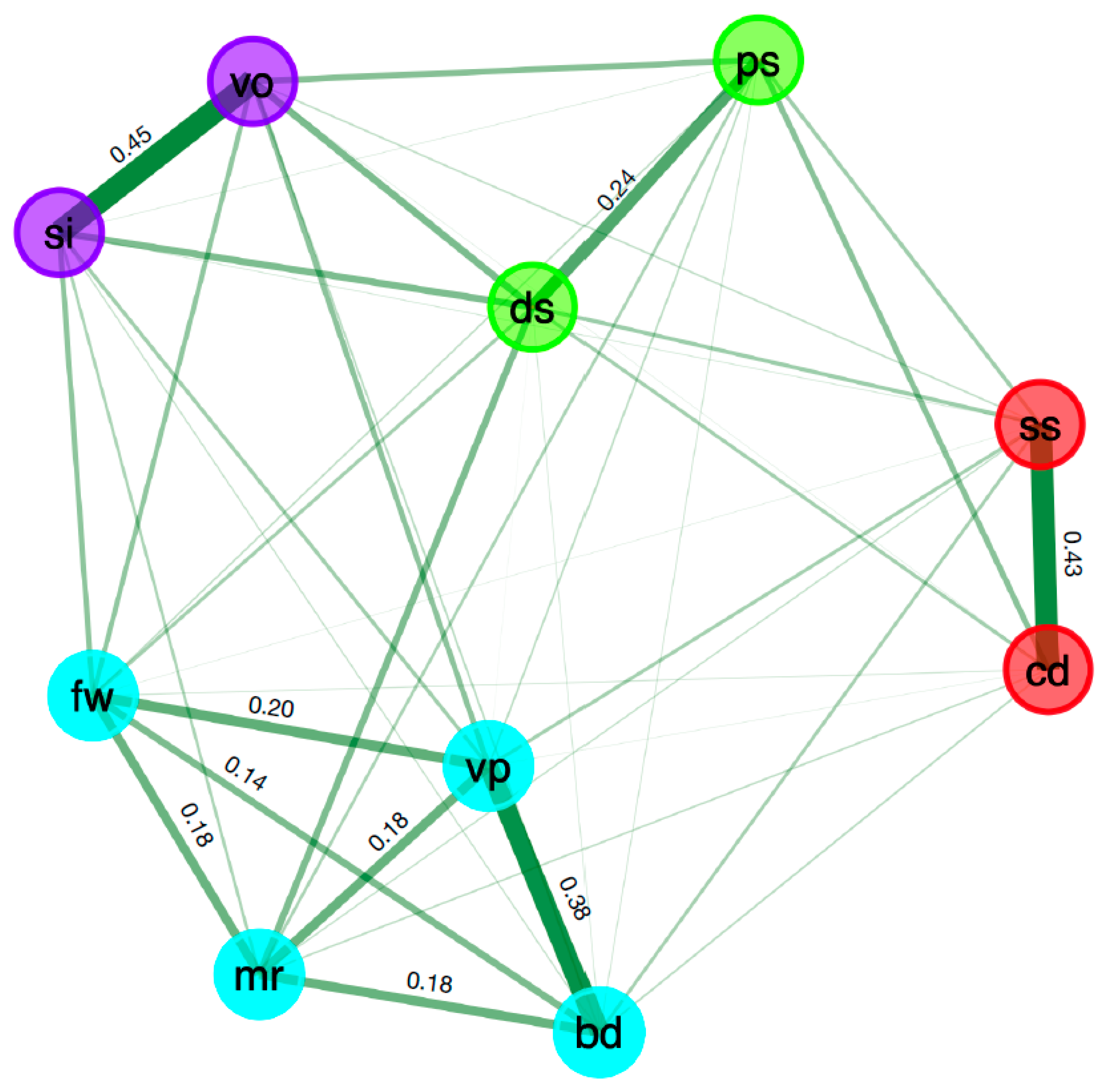

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Race/Ethnicity | N | Percent | Sex: Female | Sex: Male |
|---|---|---|---|---|
| White | 3685 | 51.5 | 1303 | 2382 |
| Black | 2057 | 28.8 | 706 | 1351 |
| Hispanic | 223 | 3.1 | 72 | 151 |
| Multi-racial | 587 | 8.2 | 201 | 386 |
| Unknown/other | 597 | 8.4 | 235 | 362 |
| Total | 7149 | | 2517 | 4632 |
| Percent | | | 35.2 | 64.8 |

| ICD Diagnosis | n | Percent |
|---|---|---|
| ADHD | 3502 | 48.99 |
| Other nervous system disorders | 938 | 13.12 |
| Anxiety disorders | 767 | 10.73 |
| Adjustment disorders | 384 | 5.37 |
| Mood disorders | 369 | 5.16 |
| Epilepsy | 201 | 2.81 |
| Oncologic conditions | 153 | 2.14 |
| Disruptive behavior disorders | 147 | 2.06 |
| Congenital abnormalities | 130 | 1.82 |
| Chromosomal abnormalities | 71 | 0.99 |
| Autism spectrum disorders | 64 | 0.90 |
| Traumatic brain injury | 58 | 0.81 |
| Other behavioral and emotional disorders | 51 | 0.71 |
| Unspecified | 38 | 0.53 |
| Hearing loss and ear disorders | 37 | 0.52 |
| Cerebral palsy | 36 | 0.50 |
| Learning disabilities | 29 | 0.41 |
| Speech/language disorders | 26 | 0.36 |
| Tics and movement disorders | 24 | 0.34 |
| Endocrine and metabolic disorders | 23 | 0.32 |
| Intellectual disabilities | 22 | 0.31 |
| Blood and immune disorders | 18 | 0.25 |
| Cerebrovascular and cardiac disorders | 17 | 0.24 |
| Prenatal and newborn disorders | 16 | 0.22 |
| Spina bifida | 14 | 0.20 |
| Muscular dystrophy | 8 | 0.11 |
| Kidney/urinary/digestive disorders | 6 | 0.09 |
| Total | 7149 | 100.00 |

| Score | n | M | SD | Skewness | Kurtosis |
|---|---|---|---|---|---|
| Block Design | 7149 | 8.7 | 3.4 | +0.14 | −0.18 |
| Similarities | 7149 | 9.2 | 3.3 | +0.01 | −0.04 |
| Matrix Reasoning | 7149 | 9.0 | 3.4 | +0.06 | −0.13 |
| Digit Span | 7149 | 7.9 | 3.1 | +0.12 | +0.13 |
| Coding | 7149 | 7.5 | 3.3 | +0.01 | −0.37 |
| Vocabulary | 7149 | 9.1 | 3.6 | +0.05 | −0.50 |
| Figure Weights | 7149 | 9.5 | 3.1 | −0.01 | −0.25 |
| Visual Puzzles | 7149 | 9.6 | 3.3 | −0.03 | −0.38 |
| Picture Span | 7149 | 8.5 | 3.2 | +0.13 | −0.16 |
| Symbol Search | 7149 | 8.2 | 3.2 | +0.01 | +0.01 |
| Verbal Comprehension | 7050 | 95.4 | 17.5 | −0.03 | −0.15 |
| Visual–Spatial | 7052 | 95.2 | 17.4 | +0.10 | −0.15 |
| Fluid Reasoning | 7050 | 95.6 | 16.8 | +0.02 | −0.36 |
| Working Memory | 7051 | 89.9 | 16.0 | +0.13 | −0.10 |
| Processing Speed | 7049 | 87.6 | 17.1 | −0.13 | −0.03 |
| Full-Scale IQ | 6647 | 91.0 | 17.4 | +0.20 | −0.25 |

| | BD | SI | MR | DS | CD | VO | FW | VP | PS | SS |
|---|---|---|---|---|---|---|---|---|---|---|
| BD | – | 0.02 | 0.19 | 0.01 | 0.04 | 0.04 | 0.13 | 0.42 | 0.02 | 0.07 |
| SI | 0.51 | – | 0.07 | 0.14 | −0.01 | 0.51 | 0.10 | 0.06 | 0.00 | 0.02 |
| MR | 0.62 | 0.51 | – | 0.15 | 0.04 | −0.03 | 0.19 | 0.19 | 0.06 | 0.03 |
| DS | 0.48 | 0.56 | 0.53 | – | 0.07 | 0.14 | 0.07 | −0.01 | 0.26 | 0.08 |
| CD | 0.40 | 0.36 | 0.39 | 0.43 | – | 0.01 | 0.02 | 0.01 | 0.12 | 0.48 |
| VO | 0.52 | 0.74 | 0.49 | 0.58 | 0.38 | – | 0.10 | 0.11 | 0.13 | 0.03 |
| FW | 0.60 | 0.55 | 0.60 | 0.50 | 0.38 | 0.56 | – | 0.21 | 0.03 | 0.01 |
| VP | 0.73 | 0.56 | 0.64 | 0.50 | 0.41 | 0.58 | 0.65 | – | 0.04 | 0.07 |
| PS | 0.43 | 0.45 | 0.45 | 0.56 | 0.43 | 0.51 | 0.44 | 0.46 | – | 0.08 |
| SS | 0.45 | 0.40 | 0.42 | 0.45 | 0.63 | 0.42 | 0.41 | 0.46 | 0.44 | – |
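The lower triangle of the matrix above appears to hold zero-order correlations among the 10 primary subtests, and the upper triangle appears to hold the much smaller partial correlations that serve as network edge weights. That reading is an assumption, since the table has no caption here. As a minimal sketch of how the two triangles relate, the Python below inverts the correlation matrix and rescales the result into unregularized partial correlations. The names `LOWER`, `full_matrix`, `invert`, and `partial_correlations` are illustrative only and are not taken from the article or from any package. If the tabled upper-triangle values were estimated with regularization, the output will not reproduce them exactly.

```python
# Lower-triangle values transcribed from the table above, in row order
# BD, SI, MR, DS, CD, VO, FW, VP, PS, SS. Treating them as zero-order
# Pearson correlations is an assumption; the table carries no caption.
LABELS = ["BD", "SI", "MR", "DS", "CD", "VO", "FW", "VP", "PS", "SS"]
LOWER = [
    [],
    [0.51],
    [0.62, 0.51],
    [0.48, 0.56, 0.53],
    [0.40, 0.36, 0.39, 0.43],
    [0.52, 0.74, 0.49, 0.58, 0.38],
    [0.60, 0.55, 0.60, 0.50, 0.38, 0.56],
    [0.73, 0.56, 0.64, 0.50, 0.41, 0.58, 0.65],
    [0.43, 0.45, 0.45, 0.56, 0.43, 0.51, 0.44, 0.46],
    [0.45, 0.40, 0.42, 0.45, 0.63, 0.42, 0.41, 0.46, 0.44],
]


def full_matrix(lower):
    """Build a symmetric correlation matrix with a unit diagonal."""
    n = len(lower)
    corr = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, row in enumerate(lower):
        for j, value in enumerate(row):
            corr[i][j] = corr[j][i] = value
    return corr


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity; the right half becomes the inverse.
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for row in range(n):
            if row != col:
                factor = aug[row][col]
                aug[row] = [x - factor * y for x, y in zip(aug[row], aug[col])]
    return [row[n:] for row in aug]


def partial_correlations(corr):
    """Partial correlation of i and j given all other variables.

    With P the inverse of the correlation matrix, the off-diagonal
    entries are -P[i][j] / sqrt(P[i][i] * P[j][j]).
    """
    p = invert(corr)
    n = len(p)
    return [[1.0 if i == j else -p[i][j] / (p[i][i] * p[j][j]) ** 0.5
             for j in range(n)] for i in range(n)]


R = full_matrix(LOWER)
PCOR = partial_correlations(R)
```

`PCOR[0][7]`, for instance, is the unregularized Block Design and Visual Puzzles value that can be set beside the tabled 0.42. Any gap between the two would reflect estimation choices that this table does not state.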

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Watkins, M.W.; Dombrowski, S.C.; McGill, R.J.; Canivez, G.L.; Pritchard, A.E.; Jacobson, L.A. Bootstrap Exploratory Graph Analysis of the WISC–V with a Clinical Sample. J. Intell. 2023, 11, 137. https://doi.org/10.3390/jintelligence11070137
