Assessing Metacognitive Regulation during Problem Solving: A Comparison of Three Measures

Abstract

1. Introduction

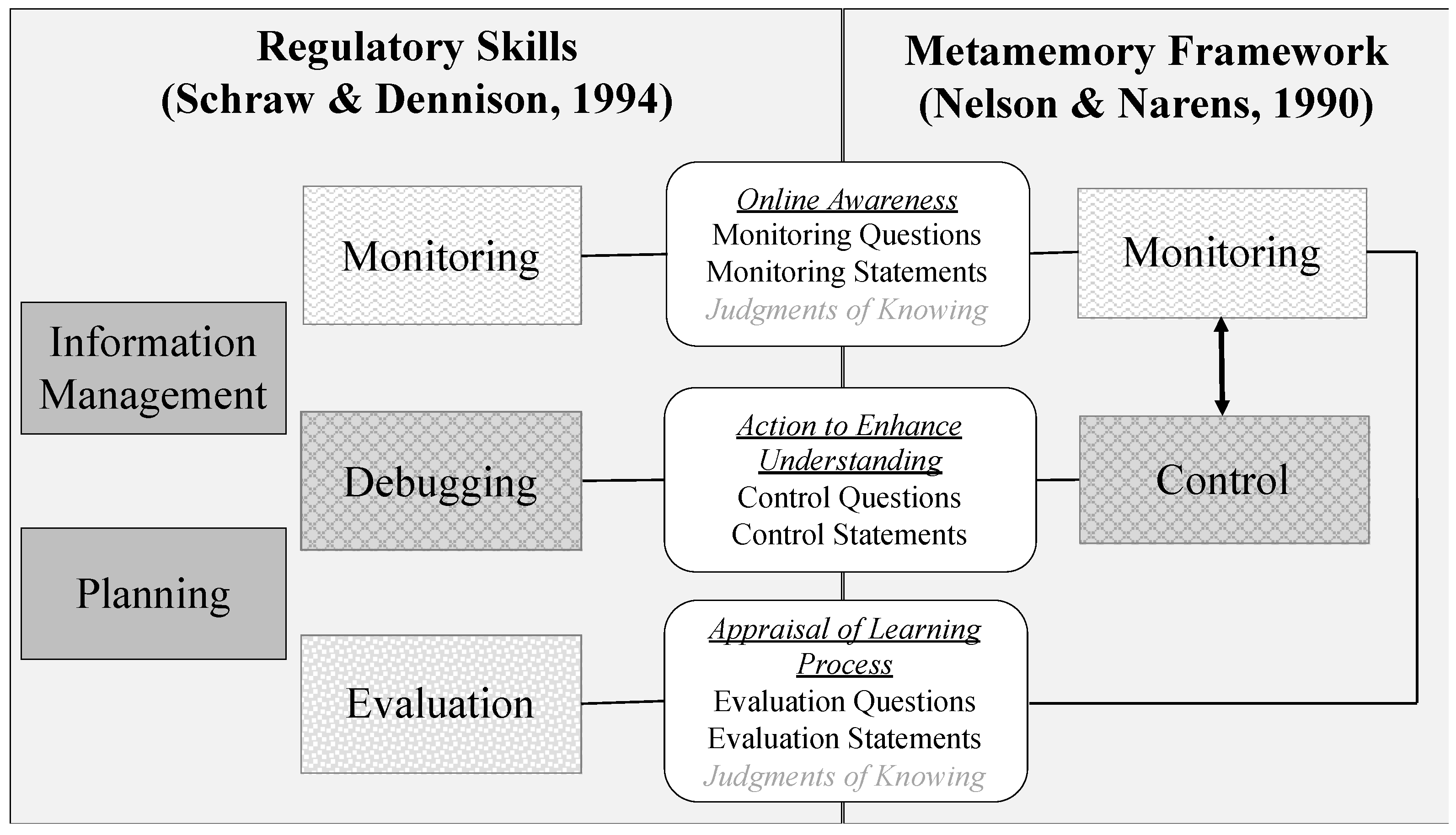

1.1. Theory and Measurement: An Issue of Grain Size

1.2. Relation among Measures

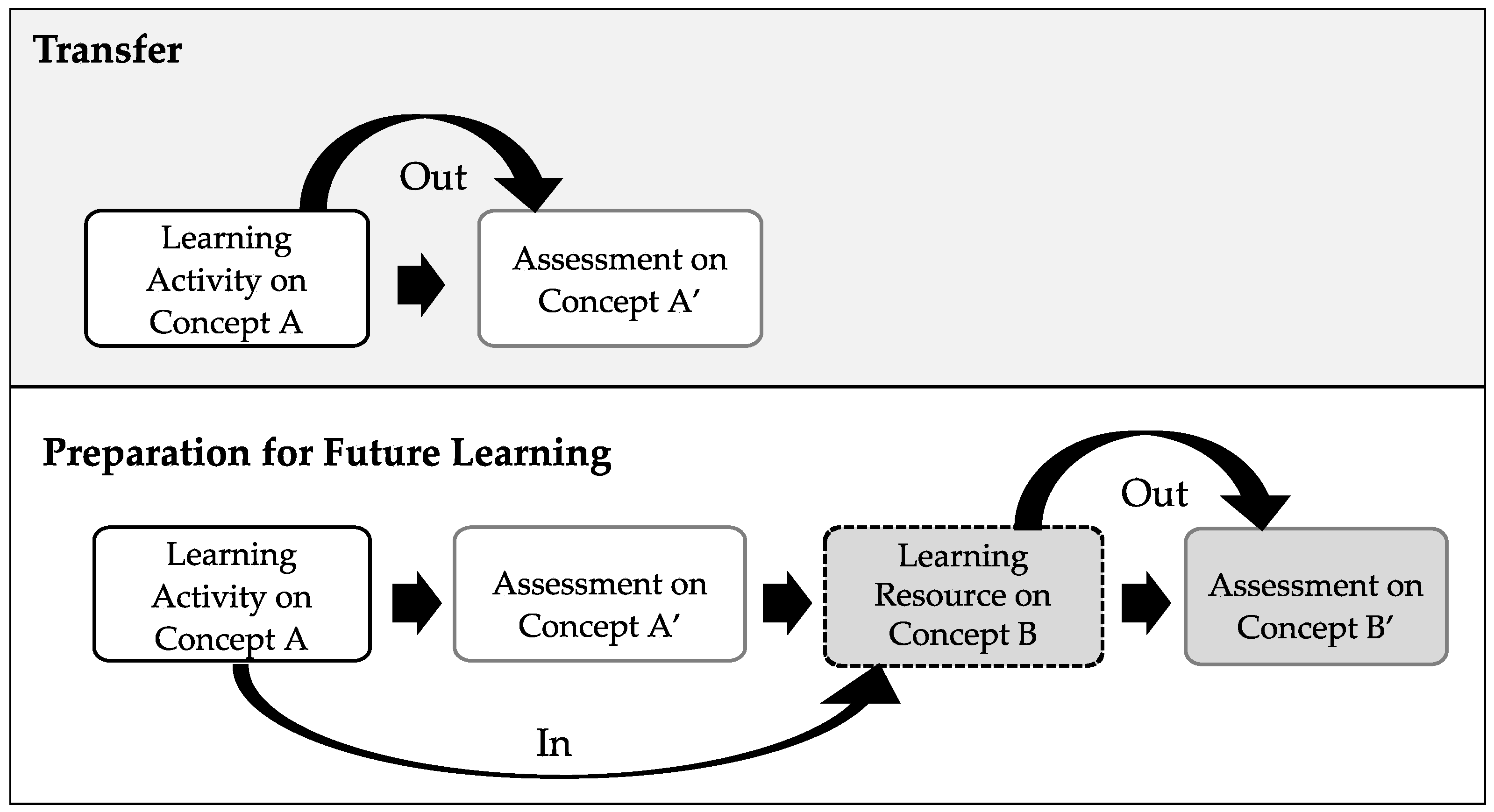

1.3. Relations to Robust Learning

1.3.1. Verbal Protocols and Learning

1.3.2. Questionnaires and Learning

1.3.3. Metacognitive Judgments—JOKs and Learning

1.3.4. Summary of the Relations to Robust Learning

1.4. Measurement Validity

1.4.1. Validity of Verbal Protocols

1.4.2. Validity of Questionnaires

1.4.3. Validity of Metacognitive Judgments—JOKs

1.4.4. Summary of Measurement Validity

1.5. Underlying Processes of the Measures

1.6. Current Work

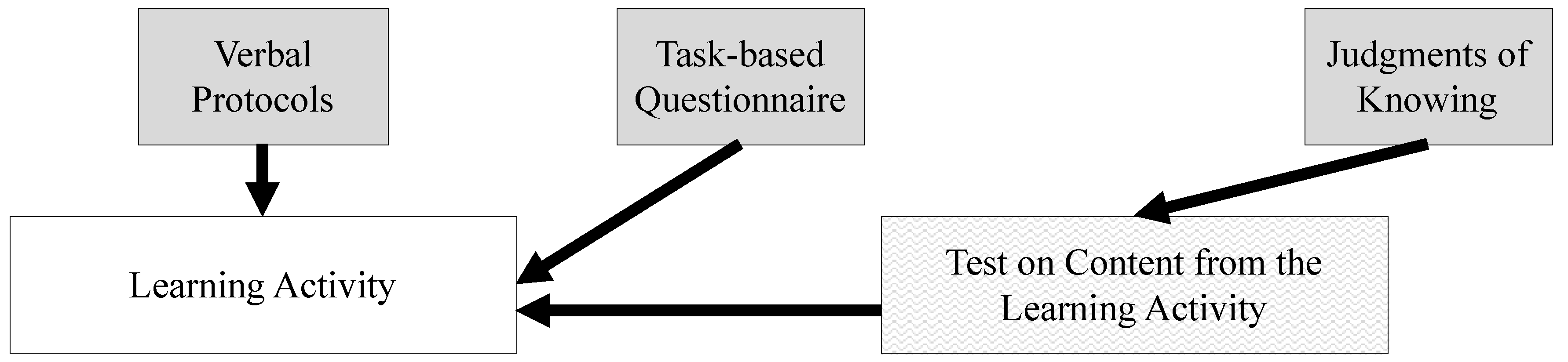

2. Materials and Methods

2.1. Participants

2.2. Design

2.3. Materials

2.3.1. Learning Pre-Test

2.3.2. Learning Task

2.3.3. Learning Post-Test

2.3.4. Verbal Protocols

2.3.5. Task-Based Metacognitive Questionnaire

2.3.6. Use of JOKs

2.4. Procedure

3. Results

3.1. Relation within and across Metacognitive Measures

3.2. Relation between Metacognitive Measures and Learning

3.2.1. Learning and Test Performance

3.2.2. Verbal Protocols and Learning Outcomes

3.2.3. Task-Based Questionnaire and Learning Outcomes

3.2.4. JOKs and Learning Outcomes

3.2.5. Competing Models

4. Discussion

4.1. Relation of Measures

4.2. Robust Learning

4.3. Theoretical and Educational Implications

4.4. Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alexander, Patricia A. 2013. Calibration: What is it and why it matters? An introduction to the special issue on calibrating calibration. Learning and Instruction 24: 1–3. [Google Scholar] [CrossRef]

- Alfieri, Louis, Patricia J. Brooks, Naomi J. Aldrich, and Harriet R. Tenenbaum. 2011. Does discovery-based instruction enhance learning? Journal of Educational Psychology 103: 1–18. [Google Scholar] [CrossRef]

- Azevedo, Roger, and Amy M. Witherspoon. 2009. Self-regulated use of hypermedia. In Handbook of Metacognition in Education. Edited by Douglas J. Hacker, John Dunlosky and Arthur C. Graesser. Mahwah: Erlbaum. [Google Scholar]

- Azevedo, Roger. 2020. Reflections on the field of metacognition: Issues, challenges, and opportunities. Metacognition and Learning 15: 91–98. [Google Scholar] [CrossRef]

- Belenky, Daniel M., and Timothy J. Nokes-Malach. 2012. Motivation and transfer: The role of mastery-approach goals in preparation for future learning. Journal of the Learning Sciences 21: 399–432. [Google Scholar] [CrossRef]

- Berardi-Coletta, Bernadette, Linda S. Buyer, Roger L. Dominowski, and Elizabeth R. Rellinger. 1995. Metacognition and problem solving: A process-oriented approach. Journal of Experimental Psychology: Learning, Memory, and Cognition 21: 205–23. [Google Scholar] [CrossRef]

- Binbasaran-Tuysuzoglu, Banu, and Jeffrey Alan Greene. 2015. An investigation of the role of contingent metacognitive behavior in self-regulated learning. Metacognition and Learning 10: 77–98. [Google Scholar] [CrossRef]

- Bransford, John D., and Daniel L. Schwartz. 1999. Rethinking transfer: A simple proposal with multiple implications. Review of Research in Education 24: 61–100. [Google Scholar] [CrossRef]

- Brown, Ann L. 1987. Metacognition, executive control, self-regulation, and other more mysterious mechanisms. In Metacognition, Motivation, and Understanding. Edited by Franz Emanuel Weinert and Rainer H. Kluwe. Hillsdale: Lawrence Erlbaum Associates, pp. 65–116. [Google Scholar]

- Brown, Ann L., John D. Bransford, Roberta A. Ferrara, and Joseph C. Campione. 1983. Learning, remembering, and understanding. In Handbook of Child Psychology: Vol. 3. Cognitive Development, 4th ed. Edited by John H. Flavell and Ellen M. Markman. New York: Wiley, pp. 77–166. [Google Scholar]

- Chi, Michelene T. H., Miriam Bassok, Matthew W. Lewis, Peter Reimann, and Robert Glaser. 1989. Self-explanations: How students study and use examples in learning to solve problems. Cognitive Science 13: 145–82. [Google Scholar] [CrossRef]

- Cromley, Jennifer G., and Roger Azevedo. 2006. Self-report of reading comprehension strategies: What are we measuring? Metacognition and Learning 1: 229–47. [Google Scholar] [CrossRef]

- Dentakos, Stella, Wafa Saoud, Rakefet Ackerman, and Maggie E. Toplak. 2019. Does domain matter? Monitoring accuracy across domains. Metacognition and Learning 14: 413–36. [Google Scholar] [CrossRef]

- Dunlosky, John, and Janet Metcalfe. 2009. Metacognition. Thousand Oaks: Sage Publications, Inc. [Google Scholar]

- Ericsson, K. Anders, and Herbert A. Simon. 1980. Verbal reports as data. Psychological Review 87: 215–51. [Google Scholar] [CrossRef]

- Flavell, John H. 1979. Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. American Psychologist 34: 906–11. [Google Scholar] [CrossRef]

- Fortunato, Irene, Deborah Hecht, Carol Kehr Tittle, and Laura Alvarez. 1991. Metacognition and problem solving. Arithmetic Teacher 38: 38–40. [Google Scholar] [CrossRef]

- Gadgil, Soniya, Timothy J. Nokes-Malach, and Michelene T. H. Chi. 2012. Effectiveness of holistic mental model confrontation in driving conceptual change. Learning and Instruction 22: 47–61. [Google Scholar] [CrossRef]

- Gadgil, Soniya. 2014. Understanding the Interaction between Students’ Theories of Intelligence and Learning Activities. Doctoral dissertation, University of Pittsburgh, Pittsburgh, PA, USA. [Google Scholar]

- Greene, Jeffrey Alan, and Roger Azevedo. 2009. A macro-level analysis of SRL processes and their relations to the acquisition of a sophisticated mental model of a complex system. Contemporary Educational Psychology 34: 18–29. [Google Scholar] [CrossRef]

- Hacker, Douglas J., John Dunlosky, and Arthur C. Graesser. 2009. Handbook of Metacognition in Education. New York: Routledge. [Google Scholar]

- Howard, Bruce C., Steven McGee, Regina Shia, and Namsoo S. Hong. 2000. Metacognitive self-regulation and problem-solving: Expanding the theory base through factor analysis. Paper presented at the Annual Meeting of the American Educational Research Association, New Orleans, LA, USA, April 24–28. [Google Scholar]

- Howard-Rose, Dawn, and Philip H. Winne. 1993. Measuring component and sets of cognitive processes in self-regulated learning. Journal of Educational Psychology 85: 591–604. [Google Scholar] [CrossRef]

- Howie, Pauline, and Claudia M. Roebers. 2007. Developmental progression in the confidence-accuracy relationship in event recall: Insights provided by a calibration perspective. Applied Cognitive Psychology 21: 871–93. [Google Scholar] [CrossRef]

- Hu, Li-tze, and Peter M. Bentler. 1999. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal 6: 1–55. [Google Scholar] [CrossRef]

- Hunter-Blanks, Patricia, Elizabeth S. Ghatala, Michael Pressley, and Joel R. Levin. 1988. Comparison of monitoring during study and during testing on a sentence-learning task. Journal of Educational Psychology 80: 279–83. [Google Scholar] [CrossRef]

- Jacobs, Janis E., and Scott G. Paris. 1987. Children’s metacognition about reading: Issues in definition, measurement, and instruction. Educational Psychologist 22: 255–78. [Google Scholar] [CrossRef]

- Kapur, Manu, and Katerine Bielaczyc. 2012. Designing for productive failure. Journal of the Learning Sciences 21: 45–83. [Google Scholar] [CrossRef]

- Kapur, Manu. 2008. Productive failure. Cognition and Instruction 26: 379–424. [Google Scholar] [CrossRef]

- Kapur, Manu. 2012. Productive failure in learning the concept of variance. Instructional Science 40: 651–72. [Google Scholar] [CrossRef]

- Kelemen, William L., Peter J. Frost, and Charles A. Weaver. 2000. Individual differences in metacognition: Evidence against a general metacognitive ability. Memory & Cognition 28: 92–107. [Google Scholar] [CrossRef]

- Kistner, Saskia, Katrin Rakoczy, Barbara Otto, Charlotte Dignath-van Ewijk, Gerhard Büttner, and Eckhard Klieme. 2010. Promotion of self-regulated learning in classrooms: Investigating frequency, quality, and consequences for student performance. Metacognition and Learning 5: 157–71. [Google Scholar] [CrossRef]

- Koedinger, Kenneth R., Albert T. Corbett, and Charles Perfetti. 2012. The knowledge-learning-instruction framework: Bridging the science-practice chasm to enhance robust student learning. Cognitive Science 36: 757–98. [Google Scholar] [CrossRef] [PubMed]

- Kraha, Amanda, Heather Turner, Kim Nimon, Linda Reichwein Zientek, and Robin K. Henson. 2012. Tools to support interpreting multiple regression in the face of multicollinearity. Frontiers in Psychology 3: 44. [Google Scholar] [CrossRef]

- Lin, Xiaodong, and James D. Lehman. 1999. Supporting learning of variable control in a computer-based biology environment: Effects of prompting college students to reflect on their own thinking. Journal of Research in Science Teaching 36: 837–58. [Google Scholar] [CrossRef]

- Mazancieux, Audrey, Stephen M. Fleming, Céline Souchay, and Chris J. A. Moulin. 2020. Is there a G factor for metacognition? Correlations in retrospective metacognitive sensitivity across tasks. Journal of Experimental Psychology: General 149: 1788–99. [Google Scholar] [CrossRef]

- McDonough, Ian M., Tasnuva Enam, Kyle R. Kraemer, Deborah K. Eakin, and Minjung Kim. 2021. Is there more to metamemory? An argument for two specialized monitoring abilities. Psychonomic Bulletin & Review 28: 1657–67. [Google Scholar] [CrossRef]

- Meijer, Joost, Marcel V. J. Veenman, and Bernadette H. A. M. van Hout-Wolters. 2006. Metacognitive activities in text studying and problem solving: Development of a taxonomy. Educational Research and Evaluation 12: 209–37. [Google Scholar] [CrossRef]

- Meijer, Joost, Marcel V. J. Veenman, and Bernadette H. A. M. van Hout-Wolters. 2012. Multi-domain, multi-method measures of metacognitive activity: What is all the fuss about metacognition … indeed? Research Papers in Education 27: 597–627. [Google Scholar] [CrossRef]

- Meijer, Joost, Peter Sleegers, Marianne Elshout-Mohr, Maartje van Daalen-Kapteijns, Wil Meeus, and Dirk Tempelaar. 2013. The development of a questionnaire on metacognition for students in higher education. Educational Research 55: 31–52. [Google Scholar] [CrossRef]

- Messick, Samuel. 1989. Validity. In Educational Measurement, 3rd ed. Edited by Robert L. Linn. New York: Macmillan, pp. 13–103. [Google Scholar]

- Muis, Krista R., Philip H. Winne, and Dianne Jamieson-Noel. 2007. Using a multitrait-multimethod analysis to examine conceptual similarities of three self-regulated learning inventories. The British Journal of Educational Psychology 77: 177–95. [Google Scholar] [CrossRef] [PubMed]

- Nelson, Thomas O. 1996. Gamma is a measure of the accuracy of predicting performance on one item relative to another item, not the absolute performance on an individual item: Comments on Schraw. Applied Cognitive Psychology 10: 257–60. [Google Scholar] [CrossRef]

- Nelson, Thomas O., and L. Narens. 1990. Metamemory: A theoretical framework and new findings. Psychology of Learning and Motivation 26: 125–73. [Google Scholar] [CrossRef]

- Nietfeld, John L., Li Cao, and Jason W. Osborne. 2005. Metacognitive monitoring accuracy and student performance in the postsecondary classroom. The Journal of Experimental Education 74: 7–28. [Google Scholar]

- Nietfeld, John L., Li Cao, and Jason W. Osborne. 2006. The effect of distributed monitoring exercises and feedback on performance, monitoring accuracy, and self-efficacy. Metacognition and Learning 1: 159–79. [Google Scholar] [CrossRef]

- Nokes-Malach, Timothy J., Kurt VanLehn, Daniel M. Belenky, Max Lichtenstein, and Gregory Cox. 2013. Coordinating principles and examples through analogy and self-explanation. European Journal of Psychology of Education 28: 1237–63. [Google Scholar] [CrossRef]

- O’Neil, Harold F., Jr., and Jamal Abedi. 1996. Reliability and validity of a state metacognitive inventory: Potential for alternative assessment. Journal of Educational Research 89: 234–45. [Google Scholar] [CrossRef]

- Pan, Steven C., and Timothy C. Rickard. 2018. Transfer of test-enhanced learning: Meta-analytic review and synthesis. Psychological Bulletin 144: 710–56. [Google Scholar] [CrossRef] [PubMed]

- Pintrich, Paul R., and Elisabeth V. De Groot. 1990. Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology 82: 33–40. [Google Scholar] [CrossRef]

- Pintrich, Paul R., Christopher A. Wolters, and Gail P. Baxter. 2000. Assessing metacognition and self-regulated learning. In Issues in the Measurement of Metacognition. Edited by Gregory Schraw and James C. Impara. Lincoln: Buros Institute of Mental Measurements. [Google Scholar]

- Pintrich, Paul R., David A. F. Smith, Teresa Garcia, and Wilbert J. McKeachie. 1991. A Manual for the Use of the Motivated Strategies for Learning Questionnaire (MSLQ). Ann Arbor: The University of Michigan. [Google Scholar]

- Pintrich, Paul R., David A. F. Smith, Teresa Garcia, and Wilbert J. McKeachie. 1993. Predictive validity and reliability of the Motivated Strategies for Learning Questionnaire (MSLQ). Educational and Psychological Measurement 53: 801–13. [Google Scholar] [CrossRef]

- Pressley, Michael, and Peter Afflerbach. 1995. Verbal Protocols of Reading: The Nature of Constructively Responsive Reading. New York: Routledge. [Google Scholar]

- Pulford, Briony D., and Andrew M. Colman. 1997. Overconfidence: Feedback and item difficulty effects. Personality and Individual Differences 23: 125–33. [Google Scholar] [CrossRef]

- Renkl, Alexander. 1997. Learning from worked-out examples: A study on individual differences. Cognitive Science 21: 1–29. [Google Scholar] [CrossRef]

- Richey, J. Elizabeth, and Timothy J. Nokes-Malach. 2015. Comparing four instructional techniques for promoting robust learning. Educational Psychology Review 27: 181–218. [Google Scholar] [CrossRef]

- Roll, Ido, Vincent Aleven, and Kenneth R. Koedinger. 2009. Helping students know “further”—Increasing the flexibility of students’ knowledge using symbolic invention tasks. In Proceedings of the 33rd Annual Conference of the Cognitive Science Society. Edited by Niels A. Taatgen and Hedderik Van Rijn. Austin: Cognitive Science Society, pp. 1169–74. [Google Scholar]

- Schellings, Gonny L. M., Bernadette H. A. M. van Hout-Wolters, Marcel V. J. Veenman, and Joost Meijer. 2013. Assessing metacognitive activities: The in-depth comparison of a task-specific questionnaire with think-aloud protocols. European Journal of Psychology of Education 28: 963–90. [Google Scholar] [CrossRef]

- Schellings, Gonny, and Bernadette Van Hout-Wolters. 2011. Measuring strategy use with self-report instruments: Theoretical and empirical considerations. Metacognition and Learning 6: 83–90. [Google Scholar] [CrossRef]

- Schoenfeld, Alan H. 1992. Learning to think mathematically: Problem solving, metacognition, and sense making in mathematics. In Handbook for Research on Mathematics Teaching and Learning. Edited by Douglas Grouws. New York: Macmillan, pp. 334–70. [Google Scholar]

- Schraw, Gregory, and David Moshman. 1995. Metacognitive theories. Educational Psychology Review 7: 351–71. [Google Scholar] [CrossRef]

- Schraw, Gregory, and Rayne Sperling Dennison. 1994. Assessing metacognitive awareness. Contemporary Educational Psychology 19: 460–75. [Google Scholar] [CrossRef]

- Schraw, Gregory, Fred Kuch, and Antonio P. Gutierrez. 2013. Measure for measure: Calibrating ten commonly used calibration scores. Learning and Instruction 24: 48–57. [Google Scholar] [CrossRef]

- Schraw, Gregory, Michael E. Dunkle, Lisa D. Bendixen, and Teresa DeBacker Roedel. 1995. Does a general monitoring skill exist? Journal of Educational Psychology 87: 433–44. [Google Scholar] [CrossRef]

- Schraw, Gregory. 1995. Measures of feeling-of-knowing accuracy: A new look at an old problem. Applied Cognitive Psychology 9: 321–32. [Google Scholar] [CrossRef]

- Schraw, Gregory. 1996. The effect of generalized metacognitive knowledge on test performance and confidence judgments. The Journal of Experimental Education 65: 135–46. [Google Scholar] [CrossRef]

- Schraw, Gregory. 2009. A conceptual analysis of five measures of metacognitive monitoring. Metacognition and Learning 4: 33–45. [Google Scholar] [CrossRef]

- Schwartz, Daniel L., and John D. Bransford. 1998. A time for telling. Cognition and Instruction 16: 475–522. [Google Scholar] [CrossRef]

- Schwartz, Daniel L., and Taylor Martin. 2004. Inventing to prepare for future learning: The hidden efficiency of encouraging original student production in statistics instruction. Cognition and Instruction 22: 129–84. [Google Scholar] [CrossRef]

- Schwartz, Daniel L., John D. Bransford, and David Sears. 2005. Efficiency and innovation in transfer. In Transfer of Learning from a Modern Multidisciplinary Perspective. Edited by Jose Mestre. Greenwich: Information Age Publishers, pp. 1–51. [Google Scholar]

- Sperling, Rayne A., Bruce C. Howard, Lee Ann Miller, and Cheryl Murphy. 2002. Measures of children’s knowledge and regulation of cognition. Contemporary Educational Psychology 27: 51–79. [Google Scholar] [CrossRef]

- Sperling, Rayne A., Bruce C. Howard, Richard Staley, and Nelson DuBois. 2004. Metacognition and self-regulated learning constructs. Educational Research and Evaluation 10: 117–39. [Google Scholar] [CrossRef]

- Van der Stel, Manita, and Marcel V. J. Veenman. 2010. Development of metacognitive skillfulness: A longitudinal study. Learning and Individual Differences 20: 220–24. [Google Scholar] [CrossRef]

- Van der Stel, Manita, and Marcel V. J. Veenman. 2014. Metacognitive skills and intellectual ability of young adolescents: A longitudinal study from a developmental perspective. European Journal of Psychology of Education 29: 117–37. [Google Scholar] [CrossRef]

- Van Hout-Wolters, B. H. A. M. 2009. Leerstrategieën meten. Soorten meetmethoden en hun bruikbaarheid in onderwijs en onderzoek. [Measuring learning strategies. Different kinds of assessment methods and their usefulness in education and research]. Pedagogische Studiën 86: 103–10. [Google Scholar]

- Veenman, Marcel V. J. 2005. The assessment of metacognitive skills: What can be learned from multi-method designs? In Lernstrategien und Metakognition: Implikationen für Forschung und Praxis. Edited by Cordula Artelt and Barbara Moschner. Berlin: Waxmann, pp. 75–97. [Google Scholar]

- Veenman, Marcel V. J., Bernadette H. A. M. Van Hout-Wolters, and Peter Afflerbach. 2006. Metacognition and learning: Conceptual and methodological considerations. Metacognition and Learning 1: 3–14. [Google Scholar] [CrossRef]

- Veenman, Marcel V. J., Frans J. Prins, and Joke Verheij. 2003. Learning styles: Self-reports versus thinking-aloud measures. British Journal of Educational Psychology 73: 357–72. [Google Scholar] [CrossRef]

- Veenman, Marcel V. J., Jan J. Elshout, and Joost Meijer. 1997. The generality vs. domain-specificity of metacognitive skills in novice learning across domains. Learning and Instruction 7: 187–209. [Google Scholar] [CrossRef]

- Veenman, Marcel V. J., Pascal Wilhelm, and Jos J. Beishuizen. 2004. The relation between intellectual and metacognitive skills from a developmental perspective. Learning and Instruction 14: 89–109. [Google Scholar] [CrossRef]

- Winne, Philip H. 2011. A cognitive and metacognitive analysis of self-regulated learning. In Handbook of Self-Regulation of Learning and Performance. Edited by Barry J. Zimmerman and Dale H. Schunk. New York: Routledge, pp. 15–32. [Google Scholar]

- Winne, Philip H., and Allyson F. Hadwin. 1998. Studying as self-regulated learning. In Metacognition in Educational Theory and Practice. Edited by Douglas J. Hacker, John Dunlosky and Arthur C. Graesser. Hillsdale: Erlbaum, pp. 277–304. [Google Scholar]

- Winne, Philip H., and Dianne Jamieson-Noel. 2002. Exploring students’ calibration of self reports about study tactics and achievement. Contemporary Educational Psychology 27: 551–72. [Google Scholar] [CrossRef]

- Winne, Philip H., Dianne Jamieson-Noel, and Krista Muis. 2002. Methodological issues and advances in researching tactics, strategies, and self-regulated learning. In Advances in Motivation and Achievement: New Directions in Measures and Methods. Edited by Paul R. Pintrich and Martin L. Maehr. Greenwich: JAI Press, vol. 12, pp. 121–55. [Google Scholar]

- Wolters, Christopher A. 2004. Advancing achievement goal theory: Using goal structures and goal orientations to predict students’ motivation, cognition, and achievement. Journal of Educational Psychology 96: 236–50. [Google Scholar] [CrossRef]

- Zepeda, Cristina D., J. Elizabeth Richey, Paul Ronevich, and Timothy J. Nokes-Malach. 2015. Direct Instruction of Metacognition Benefits Adolescent Science Learning, Transfer, and Motivation: An In Vivo Study. Journal of Educational Psychology 107: 954–70. [Google Scholar] [CrossRef]

- Zimmerman, Barry J. 2001. Theories of self-regulated learning and academic achievement: An overview and analysis. In Self-Regulated Learning and Academic Achievement: Theoretical Perspectives. Edited by Barry J. Zimmerman and Dale H. Schunk. Mahwah: Erlbaum, pp. 1–37. [Google Scholar]

| Measurement | Metacognitive Skill | Timing | Framing of the Assessment | Analytical Measures | Predicted Learning Outcome |
|---|---|---|---|---|---|
| Verbal Protocols | Monitoring, Control/Debugging, and Evaluating | Concurrent | Task based | Inter-rater reliability, Cronbach’s alpha | Learning, transfer, and PFL |
| Questionnaires | Monitoring, Control/Debugging, and Evaluating | Retrospective | Task based | Second-Order CFA, Cronbach’s alpha | Learning, transfer, and PFL |
| Metacognitive Judgments—JOKs | Monitoring and Monitoring Accuracy | Retrospective | Test items | Cronbach’s alpha, Average, Mean Absolute accuracy, Gamma, and Discrimination measures | Learning, transfer, and PFL |
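
The table above lists Gamma among the analytical measures for JOKs. As a rough illustration only, the sketch below computes the standard Goodman–Kruskal gamma, a relative-accuracy index based on concordant and discordant item pairs. The function name and the sample ratings are hypothetical, and this section does not state how the authors computed gamma, so treat it as the textbook definition rather than their procedure.

```python
from itertools import combinations


def goodman_kruskal_gamma(judgments, scores):
    """Return (C - D) / (C + D) over all item pairs, ignoring tied pairs.

    C counts pairs ordered the same way by judgment and score,
    D counts pairs ordered in opposite ways.
    """
    concordant = 0
    discordant = 0
    for (j1, s1), (j2, s2) in combinations(zip(judgments, scores), 2):
        product = (j1 - j2) * (s1 - s2)
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    if concordant + discordant == 0:
        return None  # undefined when every pair is tied on at least one variable
    return (concordant - discordant) / (concordant + discordant)


# Hypothetical data: confidence ratings (1-5) and item correctness (0/1).
joks = [5, 4, 2, 5, 3]
correct = [1, 1, 0, 1, 0]
gamma = goodman_kruskal_gamma(joks, correct)
print(gamma)
```

In this made-up example every correct item received a higher rating than every incorrect item, so all untied pairs are concordant. Returning `None` for the all-ties case is a design choice of this sketch; gamma is simply undefined there.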

| Code Type | Definition | Transcript Examples |
|---|---|---|
| Monitoring | Checking one’s understanding about what the task is asking them to do; making sure they understand what they are learning/doing. | “I’m gonna figure out a pretty much the range of them from vertically and horizontally? I’m not sure if these numbers work (inaudible)”. “That doesn’t make sense”. |
| Control/Debugging | An action to correct one’s understanding or to enhance one’s understanding/progress. Often involves using a different strategy or rereading. | “I’m re-reading the instructions a little bit”. “So try a different thing”. |
| Conceptual Error Correction | A statement that reflects an understanding that something is incorrect with their strategy or reflects noticing a misconception about the problem. | “I’m thinking of finding a better system because, most of these it works but not for Smythe’s finest because it’s accurate, it’s just drifting”. |
| Calculation Error Correction | Noticing of a small error that is not explicitly conceptual. Small calculator errors would fall into this category. | “4, whoops”. |
| Evaluation | Reflects on their work to make sure they solved the problem accurately. Reviews for understanding of concepts as well as reflects on accurate problem-solving procedures such as strategies. | “Gotta make sure I added all that stuff together correctly”. “Let’s see, that looks pretty good”. “Let’s check the match on these”. |

| Item | Original Construct | [Min, Max] | M (SD) | Standardized Factor Loading | Residual Estimate | Variance |
|---|---|---|---|---|---|---|
| Monitoring | | | | .90 | .94 | |
| During the activity, I found myself pausing regularly to check my comprehension. | MAI (Schraw and Dennison 1994) | [1, 7] | 4.20 (1.78) | .90 | .81 | 0.19 |
| During the activity, I kept track of how much I understood the material, not just if I was getting the right answers. | MSLQ Adaptation (Wolters 2004) | [1, 7] | 4.18 (1.60) | .83 | .69 | 0.31 |
| During the activity, I checked whether my understanding was sufficient to solve new problems. | Based on verbal protocols | [1, 7] | 4.47 (1.59) | .77 | .59 | 0.41 |
| During the activity, I tried to determine which concepts I didn’t understand well. | MSLQ (Pintrich et al. 1991) | [1, 7] | 4.44 (1.65) | .85 | .73 | 0.27 |
| During the activity, I felt that I was gradually gaining insight into the concepts and procedures of the problems. | AILI (Meijer et al. 2013) | [2, 7] | 5.31 (1.28) | .75 | .56 | 0.44 |
| During the activity, I made sure I understood how to correctly solve the problems. | Based on verbal protocols | [1, 7] | 4.71 (1.46) | .90 | .80 | 0.20 |
| During the activity, I tried to understand why the procedure I was using worked. | Strategies (Belenky and Nokes-Malach 2012) | [1, 7] | 4.40 (1.74) | .78 | .62 | 0.39 |
| During the activity, I was concerned with how well I understood the procedure I was using. | Strategies (Belenky and Nokes-Malach 2012) | [1, 7] | 4.38 (1.81) | .74 | .55 | 0.45 |
| Control/Debugging | | | | .81 | .66 | |
| During the activity, I reevaluated my assumptions when I got confused. | MAI (Schraw and Dennison 1994) | [2, 7] | 5.09 (1.58) | .94 | .89 | 0.11 |
| During the activity, I stopped and went back over new information that was not clear. | MAI (Schraw and Dennison 1994) | [1, 7] | 5.09 (1.54) | .65 | .42 | 0.58 |
| During the activity, I changed strategies when I failed to understand the problem. | MAI (Schraw and Dennison 1994) | [1, 7] | 4.11 (1.67) | .77 | .60 | 0.40 |
| During the activity, I kept track of my progress and, if necessary, I changed my techniques or strategies. | SMI (O’Neil and Abedi 1996) | [1, 7] | 4.51 (1.52) | .89 | .79 | 0.21 |
| During the activity, I corrected my errors when I realized I was solving problems incorrectly. | SMI (O’Neil and Abedi 1996) | [2, 7] | 5.36 (1.35) | .50 | .25 | 0.75 |
| During the activity, I went back and tried to figure something out when I became confused about something. | MSLQ (Pintrich et al. 1991) | [2, 7] | 5.20 (1.58) | .87 | .75 | 0.25 |
| During the activity, I changed the way I was studying in order to make sure I understood the material. | MSLQ (Pintrich et al. 1991) | [1, 7] | 3.82 (1.48) | .70 | .49 | 0.52 |
| During the activity, I asked myself questions to make sure I understood the material. | MSLQ (Pintrich et al. 1991) | [1, 7] | 3.60 (1.59) | .49 | .25 | 0.76 |
| REVERSE During the activity, I did not think about how well I was understanding the material, instead I was trying to solve the problems as quickly as possible. | Based on verbal protocols | [1, 7] | 3.82 (1.72) | .54 | .30 | 0.71 |
| Evaluation | | | | .84 | .71 | |
| During the activity, I found myself analyzing the usefulness of strategies I was using. | MAI (Schraw and Dennison 1994) | [1, 7] | 5.02 (1.55) | .48 | .23 | 0.77 |
| During the activity, I reviewed what I had learned. | Based on verbal protocols | [2, 7] | 5.04 (1.40) | .57 | .33 | 0.67 |
| During the activity, I checked my work all the way through each problem. | IMSR (Howard et al. 2000) | [1, 7] | 4.62 (1.72) | .94 | .88 | 0.12 |
| During the activity, I checked to see if my calculations were correct. | IMSR (Howard et al. 2000) | [1, 7] | 4.73 (1.97) | .95 | .91 | 0.09 |
| During the activity, I double-checked my work to make sure I did it right. | IMSR (Howard et al. 2000) | [1, 7] | 4.38 (1.87) | .89 | .79 | 0.21 |
| During the activity, I reviewed the material to make sure I understood the information. | MAI (Schraw and Dennison 1994) | [1, 7] | 4.49 (1.71) | .69 | .48 | 0.52 |
| During the activity, I checked to make sure I understood how to correctly solve each problem. | Based on verbal protocols | [1, 7] | 4.64 (1.57) | .86 | .75 | 0.26 |
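
In the item rows above, the last two numeric columns appear to follow from the standardized loading: the first matches the squared loading and the second matches one minus it. This is an inference from the printed values, not something the section states, and the column headers may not line up with this reading. The helper name below is hypothetical, and the sketch only reproduces the arithmetic for three Monitoring items.

```python
def explained_and_residual(loading):
    """Return (loading**2, 1 - loading**2) for a standardized loading."""
    explained = loading ** 2
    return explained, 1.0 - explained


# Loadings copied from the first three Monitoring items in the table.
for loading in (0.90, 0.83, 0.77):
    explained, residual = explained_and_residual(loading)
    print(f"loading={loading:.2f}  explained={explained:.2f}  residual={residual:.2f}")
```

A few rows in the table differ from this arithmetic by 0.01, which would be expected if the published loadings were rounded before printing.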

| Measure | Variable | N | Min | Max | M | SE | SD |
|---|---|---|---|---|---|---|---|
| Verbal Protocols | Monitoring | 44 | 0.00 | 0.29 | 0.05 | 0.01 | 0.06 |
| | Control/Debugging | 44 | 0.00 | 0.06 | 0.01 | 0.002 | 0.02 |
| | Evaluation | 44 | 0.00 | 0.16 | 0.04 | 0.01 | 0.04 |
| Questionnaire | Monitoring | 45 | 1.13 | 6.75 | 4.51 | 0.19 | 1.29 |
| | Control/Debugging | 45 | 2.33 | 6.44 | 4.51 | 0.16 | 1.08 |
| | Evaluation | 45 | 2.14 | 7.00 | 4.70 | 0.19 | 1.28 |
| JOKs | Mean | 45 | 2.00 | 5.00 | 4.31 | 0.09 | 0.60 |
| | Mean Absolute Accuracy | 45 | 0.06 | 0.57 | 0.22 | 0.02 | 0.13 |
| | Discrimination | 45 | −3.75 | 4.5 | 1.43 | 0.33 | 2.21 |
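
The SE column in the descriptive table above is consistent with the usual standard error of the mean, SD divided by the square root of N. The sketch below checks that relation for three rows; the function name is hypothetical, and the use of this formula is an assumption based on the printed values rather than a statement from the source.

```python
import math


def standard_error(sd, n):
    """Standard error of the mean: SD / sqrt(N)."""
    return sd / math.sqrt(n)


# (SD, N) pairs copied from the table above.
rows = {
    "Questionnaire: Monitoring": (1.29, 45),
    "JOKs: Mean": (0.60, 45),
    "JOKs: Discrimination": (2.21, 45),
}
for label, (sd, n) in rows.items():
    print(f"{label}: SE = {standard_error(sd, n):.2f}")
```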

| Measure | Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| VPs | 1. Monitoring | - | .09 | .01 | −.36 * | −.10 | −.16 | −.41 * | −.07 | −.14 |
| | 2. Control/Debugging | | - | .16 | .12 | −.08 | .14 | −.16 | .03 | −.08 |
| | 3. Evaluation | | | - | .29 † | .31 * | .37 * | −.10 | .02 | .01 |
| Qs | 4. Monitoring | | | | - | .73 ** | .73 ** | .26 † | .06 | .02 |
| | 5. Control/Debugging | | | | | - | .65 ** | .02 | −.02 | −.03 |
| | 6. Evaluation | | | | | | - | .15 | .11 | −.09 |
| JOKs | 7. Average | | | | | | | - | .14 | .39 ** |
| | 8. Mean Absolute Accuracy | | | | | | | | - | −.76 ** |
| | 9. Discrimination | | | | | | | | | - |

| Measure | N | Min | Max | M | SE | SD |
|---|---|---|---|---|---|---|
| First Learning Activity | 45 | 0.00 | 0.75 | 0.40 | 0.03 | 0.18 |
| Transfer | 45 | 0.17 | 0.94 | 0.64 | 0.03 | 0.21 |
| PFL | 45 | 0.00 | 1.00 | 0.49 | 0.08 | 0.51 |

| Variable | β | t | p | VIF |
|---|---|---|---|---|
| Monitoring statements | −0.37 | −2.51 | .02 * | 1.01 |
| Control/Debugging statements | −0.05 | −0.32 | .75 | 1.03 |
| Evaluation statements | −0.03 | −0.17 | .87 | 1.02 |
| Constant | | 10.06 | <.001 *** | |

| Variable | β | t | p | VIF |
|---|---|---|---|---|
| Self-reported Evaluation | 0.24 | 1.71 | .095 | 1.03 |
| Monitoring Statements | −0.24 | −1.60 | .12 | 1.22 |
| JOK Average | 0.23 | 1.53 | .13 | 1.21 |
| Constant | | −0.08 | .93 | |
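
The VIF column in the regression tables above reports variance inflation factors. By the standard definition, the VIF for predictor j is 1 / (1 − R²_j), where R²_j comes from regressing that predictor on the remaining predictors. The sketch below, with hypothetical function names, converts between the two quantities; it illustrates the general formula and is not a reconstruction of the authors' analysis.

```python
def vif_from_r_squared(r_squared):
    """Variance inflation factor for a predictor, given its R^2 on the other predictors."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("r_squared must be in [0, 1)")
    return 1.0 / (1.0 - r_squared)


def r_squared_from_vif(vif):
    """Invert the VIF definition to recover the implied R^2."""
    if vif < 1.0:
        raise ValueError("vif must be at least 1")
    return 1.0 - 1.0 / vif


# Largest VIF printed in the tables above.
implied = r_squared_from_vif(1.22)
print(f"VIF 1.22 implies R^2 of about {implied:.2f}")
```

Under this definition, the largest printed VIF of 1.22 corresponds to a predictor sharing roughly 18% of its variance with the other predictors in that model.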

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zepeda, C.D.; Nokes-Malach, T.J. Assessing Metacognitive Regulation during Problem Solving: A Comparison of Three Measures. J. Intell. 2023, 11, 16. https://doi.org/10.3390/jintelligence11010016
