Do Attentional Lapses Account for the Worst Performance Rule?

Abstract

1. Introduction

1.1. The Attentional Lapses Account of the WPR and Its Examination

1.2. Multiverse Manifestation and Measurement of Attentional Lapses

1.3. Identifying Occurrences of the WPR

2. Study 1

2.1. Materials and Methods

2.1.1. Participants

2.1.2. Materials

Berlin Intelligence Structure Test (BIS)

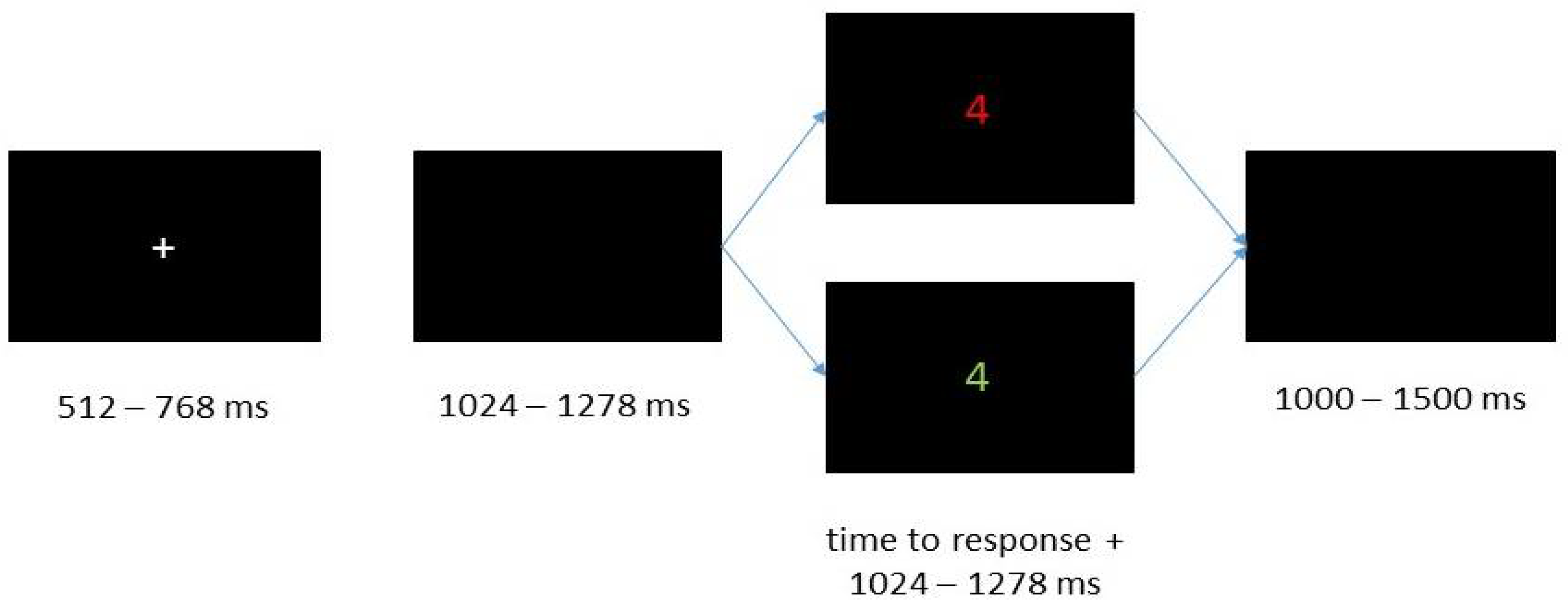

Choice RT Task: Switching Task

Online Thought-Probing Procedure

Questionnaire of Spontaneous Mind Wandering (Q-SMW)

Metronome Response Task (MRT)

Electrophysiological Correlates of Attentional Lapses

2.1.3. Procedure

2.1.4. EEG Recording

2.1.5. Data Analyses

Analysis of Behavioral and Self-Report Data

Preprocessing of Electrophysiological Data for Event-Related Potentials (ERPs)

Preprocessing and Time-Frequency Decomposition of Electrophysiological Data

Analyses of the Worst Performance Rule

2.2. Results

2.2.1. Descriptive Results

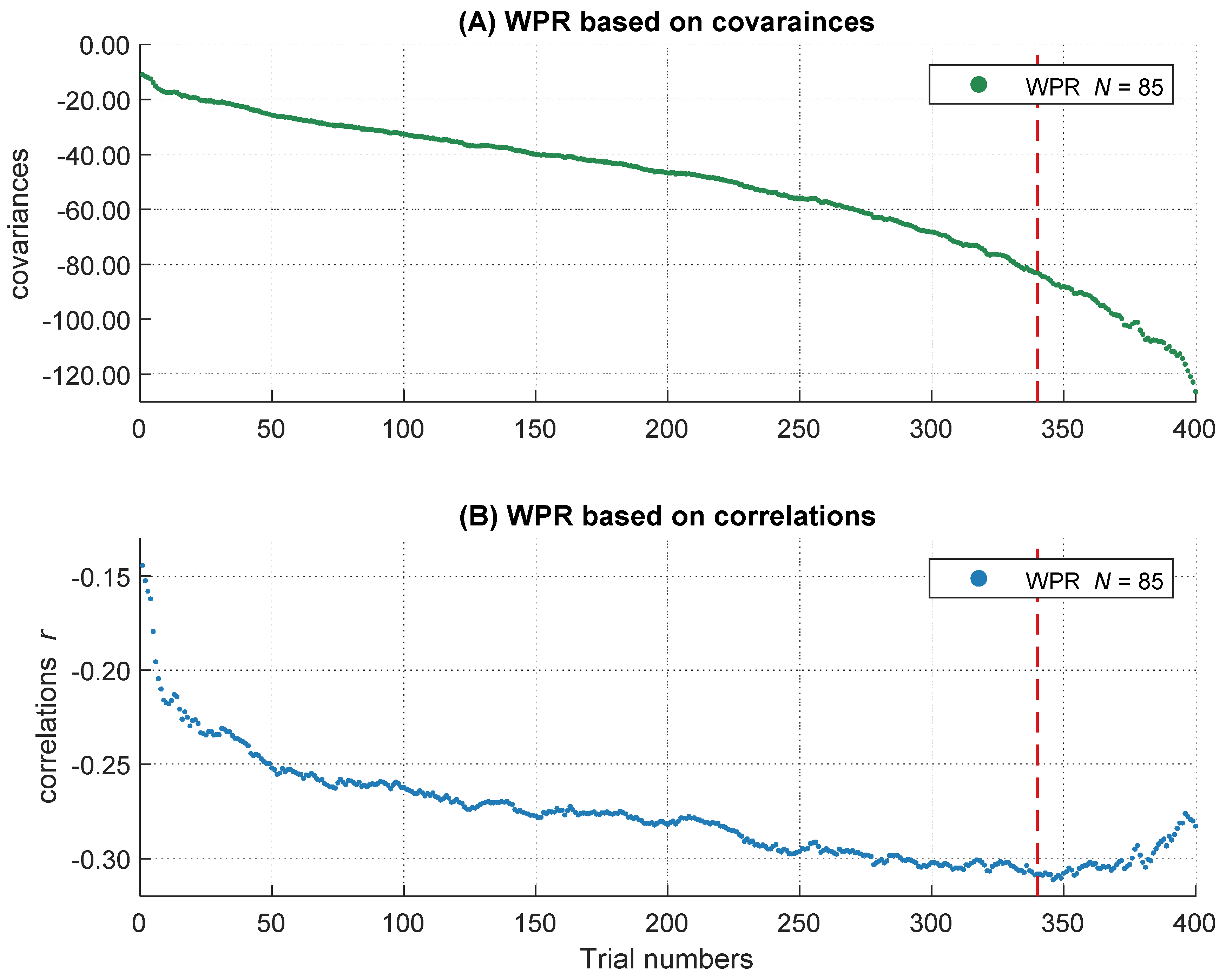

2.2.2. Descriptive Analyses of Covariance and Correlation Patterns over the RT Distribution

2.2.3. The Worst Performance Rule with Unstandardized Coefficients (Covariances)

2.2.4. Do Individual Differences in Behavioral and Self-Reported Measures of Attentional Lapses Account for the WPR with Unstandardized Coefficients (Covariances)?

Questionnaire of Spontaneous Mind Wandering (Q-SMW)

Metronome Response Task (MRT)

2.2.5. Do Individual Differences in Electrophysiological Measures of Attentional Lapses Account for the WPR with Unstandardized Coefficients (Covariances)?

ERP Analyses

Time-Frequency Analyses

Alpha-Power

Theta-Power

The Combined Effect on the Unstandardized Worst Performance Pattern of All Predictors with a Substantial Contribution (TUTs, MRT, Theta-Power)

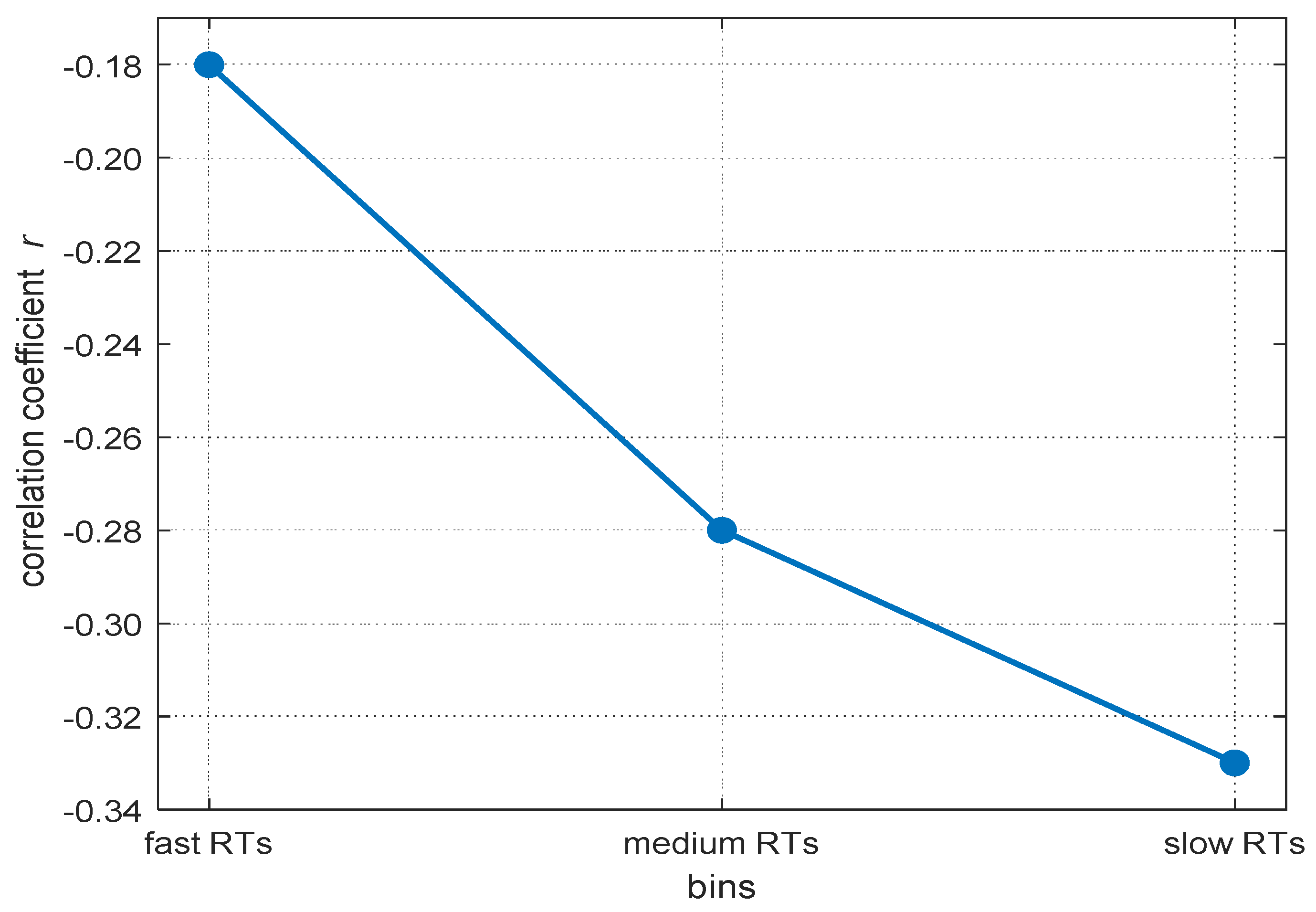

2.2.6. The Worst Performance Rule with Standardized Coefficients (Correlations)

2.2.7. Do Individual Differences in Behavioral and Self-Reported Measures of Attentional Lapses Account for the WPR with Standardized Coefficients (Correlations)?

Questionnaire of Spontaneous Mind Wandering (Q-SMW)

Metronome Response Task (MRT)

2.2.8. Do Individual Differences in Electrophysiological Measures of Attentional Lapses Account for the WPR with Standardized Coefficients (Correlations)?

ERP Analyses

Time-Frequency Analyses

2.3. Discussion

2.3.1. Influence of Covariates on the WPR in Covariances

2.3.2. Influence of Covariates on the WPR in Correlations

2.3.3. Low Correlation and Unpredicted Correlations with Attentional Lapses Measures

2.3.4. Interim Conclusion

3. Study 2

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Materials

Sustained Attention Task (SART)

Letter-Flanker

Arrow-Flanker

Number-Stroop

Working Memory Capacity

Online Thought-Probing Procedure

3.1.3. Data Preparation and Analyses

3.2. Results

3.2.1. Descriptive Analyses

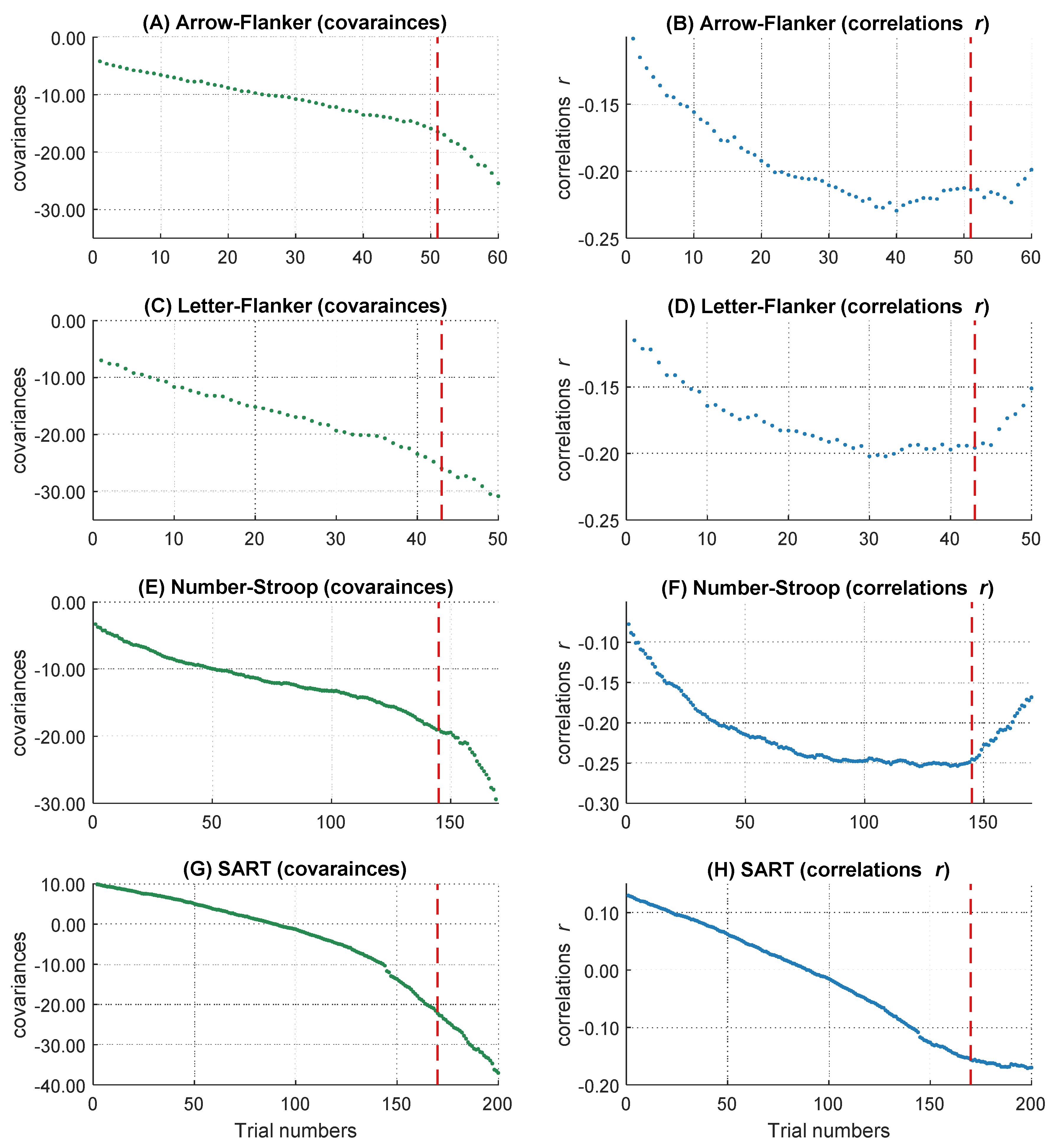

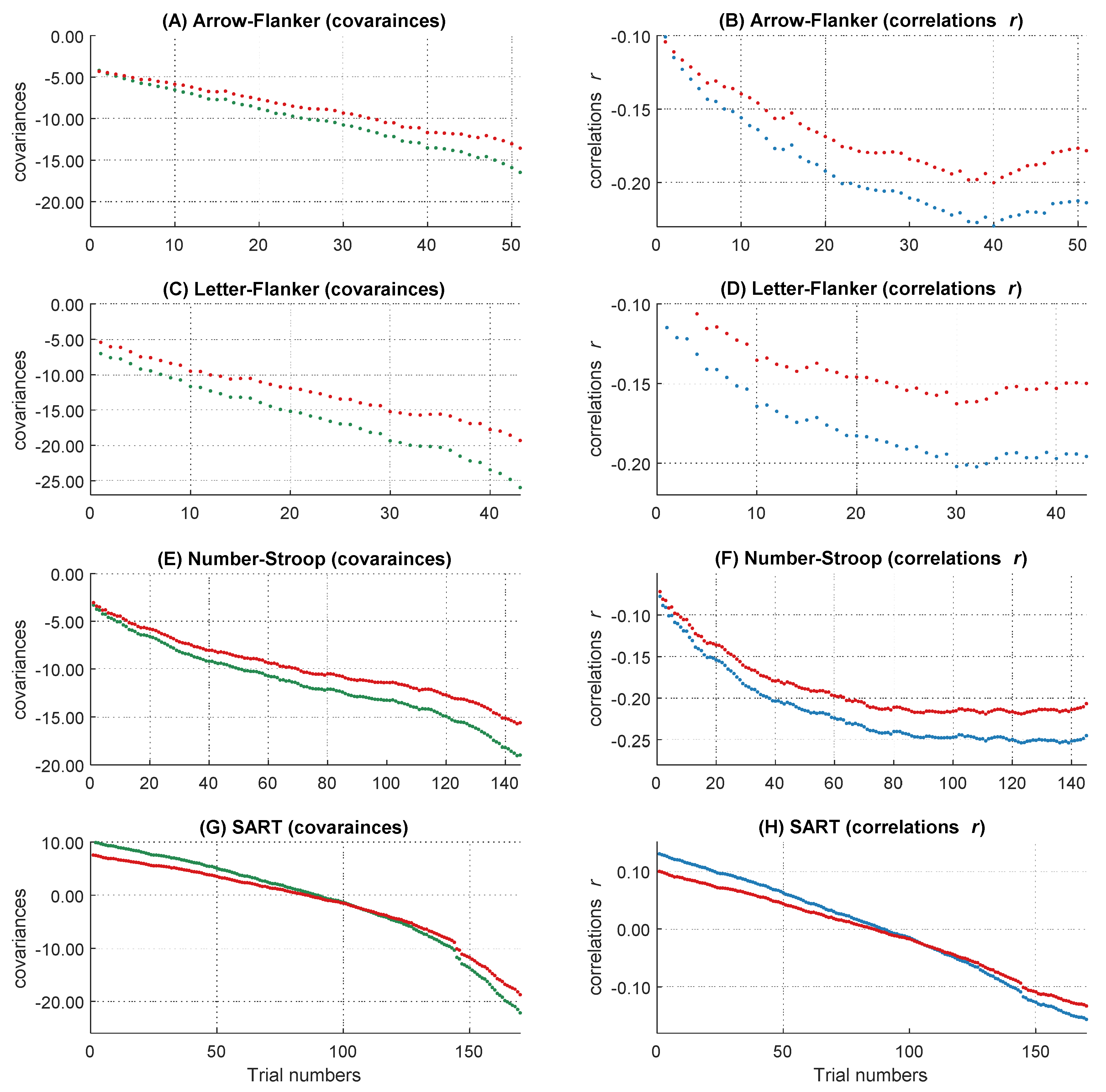

3.2.2. The Worst Performance Rule with Unstandardized Coefficients (Covariances)

3.2.3. The Worst Performance Rule with Standardized Coefficients (Correlations)

3.3. Discussion

4. General Discussion

4.1. Alternative Accounts of the Worst Performance Rule

4.2. The Curious Course in Very Slow RTs

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

1. Many thanks to an anonymous reviewer for this suggestion.
2. In Study 2, we used the same data as Welhaf et al. (2020).

References

- Akaike, Hirotugu. 1998. Information Theory and an Extension of the Maximum Likelihood Principle. In Selected Papers of Hirotugu Akaike. Edited by Emanuel Parzen, Kunio Tanabe and Genshiro Kitagawa. New York: Springer, pp. 199–213.

- Allison, Brendan Z., and John Polich. 2008. Workload Assessment of Computer Gaming Using a Single-Stimulus Event-Related Potential Paradigm. Biological Psychology 77: 277–83.

- Anderson, Thomas, Rotem Petranker, Hause Lin, and Norman A. S. Farb. 2021. The Metronome Response Task for Measuring Mind Wandering: Replication Attempt and Extension of Three Studies by Seli et al. Attention, Perception, & Psychophysics 83: 315–30.

- Arnau, Stefan, Christoph Löffler, Jan Rummel, Dirk Hagemann, Edmund Wascher, and Anna-Lena Schubert. 2020. Inter-Trial Alpha Power Indicates Mind Wandering. Psychophysiology 57: e13581.

- Arnicane, Andra, Klaus Oberauer, and Alessandra S. Souza. 2021. Validity of Attention Self-Reports in Younger and Older Adults. Cognition 206: 104482.

- Atchley, Rachel, Daniel Klee, and Barry Oken. 2017. EEG Frequency Changes Prior to Making Errors in an Easy Stroop Task. Frontiers in Human Neuroscience 11: 521.

- Baird, Benjamin, Jonathan Smallwood, Antoine Lutz, and Jonathan W. Schooler. 2014. The Decoupled Mind: Mind-Wandering Disrupts Cortical Phase-Locking to Perceptual Events. Journal of Cognitive Neuroscience 26: 2596–607.

- Baldwin, Carryl L., Daniel M. Roberts, Daniela Barragan, John D. Lee, Neil Lerner, and James S. Higgins. 2017. Detecting and Quantifying Mind Wandering during Simulated Driving. Frontiers in Human Neuroscience 11: 406.

- Barron, Evelyn, Leigh M. Riby, Joanna Greer, and Jonathan Smallwood. 2011. Absorbed in Thought: The Effect of Mind Wandering on the Processing of Relevant and Irrelevant Events. Psychological Science 22: 596–601.

- Bates, Douglas, Martin Mächler, Ben Bolker, and Steve Walker. 2015. Fitting Linear Mixed-Effects Models Using Lme4. Journal of Statistical Software 67: 1–48.

- Baumeister, Alfred, and George Kellas. 1968. Reaction Time and Mental Retardation. In International Review of Research in Mental Retardation, 3rd ed. New York and London: Academic Press, pp. 163–93.

- Ben-Shachar, Mattan, Daniel Lüdecke, and Dominique Makowski. 2020. Effectsize: Estimation of Effect Size Indices and Standardized Parameters. Journal of Open Source Software 5: 2815.

- Berger, Hans. 1929. Über das Elektrenkephalogramm des Menschen. Archiv für Psychiatrie und Nervenkrankheiten 87: 527–70.

- Boehm, Udo, Maarten Marsman, Han L. J. van der Maas, and Gunter Maris. 2021. An Attention-Based Diffusion Model for Psychometric Analyses. Psychometrika 86: 938–72.

- Braboszcz, Claire, and Arnaud Delorme. 2011. Lost in Thoughts: Neural Markers of Low Alertness during Mind Wandering. NeuroImage 54: 3040–47.

- Burnham, Kenneth P., and David R. Anderson. 2002. Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach, 2nd ed. New York: Springer.

- Carriere, Jonathan S. A., Paul Seli, and Daniel Smilek. 2013. Wandering in Both Mind and Body: Individual Differences in Mind Wandering and Inattention Predict Fidgeting. Canadian Journal of Experimental Psychology/Revue Canadienne de Psychologie Expérimentale 67: 19–31.

- Cavanagh, James F., and Michael J. Frank. 2014. Frontal Theta as a Mechanism for Cognitive Control. Trends in Cognitive Sciences 18: 414–21.

- Cavanagh, James F., Laura Zambrano-Vazquez, and John J. B. Allen. 2012. Theta Lingua Franca: A Common Mid-frontal Substrate for Action Monitoring Processes. Psychophysiology 49: 220–38.

- Compton, Rebecca J., Dylan Gearinger, and Hannah Wild. 2019. The Wandering Mind Oscillates: EEG Alpha Power Is Enhanced during Moments of Mind-Wandering. Cognitive, Affective, & Behavioral Neuroscience 19: 1184–91.

- Conway, Andrew R. A., Nelson Cowan, Michael F. Bunting, David J. Therriault, and Scott R. B. Minkoff. 2002. A Latent Variable Analysis of Working Memory Capacity, Short-Term Memory Capacity, Processing Speed, and General Fluid Intelligence. Intelligence 30: 163–83.

- Cooper, Nicholas R., Rodney J. Croft, Samuel J. J. Dominey, Adrian P. Burgess, and John H. Gruzelier. 2003. Paradox Lost? Exploring the Role of Alpha Oscillations during Externally vs. Internally Directed Attention and the Implications for Idling and Inhibition Hypotheses. International Journal of Psychophysiology 47: 65–74.

- Coyle, Thomas R. 2001. IQ Is Related to the Worst Performance Rule in a Memory Task Involving Children. Intelligence 29: 117–29.

- Coyle, Thomas R. 2003. A Review of the Worst Performance Rule: Evidence, Theory, and Alternative Hypotheses. Intelligence 31: 567–87.

- Delorme, Arnaud, and Scott Makeig. 2004. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. Journal of Neuroscience Methods 134: 9–21.

- Diascro, Matthew N., and Nathan Brody. 1993. Serial versus Parallel Processing in Visual Search Tasks and IQ. Personality and Individual Differences 14: 243–45.

- Doebler, Philipp, and Barbara Scheffler. 2016. The Relationship of Choice Reaction Time Variability and Intelligence: A Meta-Analysis. Learning and Individual Differences 52: 157–66.

- Dutilh, Gilles, Joachim Vandekerckhove, Alexander Ly, Dora Matzke, Andreas Pedroni, Renato Frey, Jörg Rieskamp, and Eric-Jan Wagenmakers. 2017. A Test of the Diffusion Model Explanation for the Worst Performance Rule Using Preregistration and Blinding. Attention, Perception, & Psychophysics 79: 713–25.

- Edwards, Allen L. 1976. An Introduction to Linear Regression and Correlation. San Francisco: W. H. Freeman.

- Fernandez, Sébastien, Delphine Fagot, Judith Dirk, and Anik de Ribaupierre. 2014. Generalization of the Worst Performance Rule across the Lifespan. Intelligence 42: 31–43.

- Frank, David J., Brent Nara, Michela Zavagnin, Dayna R. Touron, and Michael J. Kane. 2015. Validating Older Adults’ Reports of Less Mind-Wandering: An Examination of Eye Movements and Dispositional Influences. Psychology and Aging 30: 266–78.

- Frischkorn, Gidon, Anna-Lena Schubert, Andreas Neubauer, and Dirk Hagemann. 2016. The Worst Performance Rule as Moderation: New Methods for Worst Performance Analysis. Journal of Intelligence 4: 9.

- Frischkorn, Gidon, Anna-Lena Schubert, and Dirk Hagemann. 2019. Processing Speed, Working Memory, and Executive Functions: Independent or Inter-Related Predictors of General Intelligence. Intelligence 75: 95–110.

- Gelman, Andrew, and Hal Stern. 2006. The Difference Between ‘Significant’ and ‘Not Significant’ Is Not Itself Statistically Significant. The American Statistician 60: 328–31.

- Hanel, Paul H. P., and Katia C. Vione. 2016. Do Student Samples Provide an Accurate Estimate of the General Public? Edited by Martin Voracek. PLoS ONE 11: e0168354.

- Hanslmayr, Simon, Joachim Gross, Wolfgang Klimesch, and Kimron L. Shapiro. 2011. The Role of Alpha Oscillations in Temporal Attention. Brain Research Reviews 67: 331–43.

- Jäger, Adolf O. 1984. Intelligenzstrukturforschung: Konkurrierende Modelle, Neue Entwicklungen, Perspektiven. [Structural Research on Intelligence: Competing Models, New Developments, Perspectives]. Psychologische Rundschau 35: 21–35.

- Jäger, A. O., H. M. Süß, and A. Beauducel. 1997. Berliner Intelligenzstruktur-Test. Form 4. Göttingen: Hogrefe.

- Jensen, Arthur R. 1992. The Importance of Intraindividual Variation in Reaction Time. Personality and Individual Differences 13: 869–81.

- Kam, Julia W. Y., and Todd C. Handy. 2013. The Neurocognitive Consequences of the Wandering Mind: A Mechanistic Account of Sensory-Motor Decoupling. Frontiers in Psychology 4: 725.

- Kam, Julia W. Y., Elizabeth Dao, James Farley, Kevin Fitzpatrick, Jonathan Smallwood, Jonathan W. Schooler, and Todd C. Handy. 2011. Slow Fluctuations in Attentional Control of Sensory Cortex. Journal of Cognitive Neuroscience 23: 460–70.

- Kane, Michael J., David Z. Hambrick, Stephen W. Tuholski, Oliver Wilhelm, Tabitha W. Payne, and Randall W. Engle. 2004. The Generality of Working Memory Capacity: A Latent-Variable Approach to Verbal and Visuospatial Memory Span and Reasoning. Journal of Experimental Psychology: General 133: 189–217.

- Kane, Michael J., David Z. Hambrick, and Andrew R. A. Conway. 2005. Working Memory Capacity and Fluid Intelligence Are Strongly Related Constructs: Comment on Ackerman, Beier, and Boyle (2005). Psychological Bulletin 131: 66–71.

- Kane, Michael J., Andrew R. A. Conway, David Z. Hambrick, and Randall W. Engle. 2008. Variation in Working Memory Capacity as Variation in Executive Attention and Control. In Variation in Working Memory. Edited by Andrew Conway, Chris Jarrold, Michael Kane, Akira Miyake and John Towse. Oxford: Oxford University Press, pp. 21–48.

- Kane, Michael J., Matt E. Meier, Bridget A. Smeekens, Georgina M. Gross, Charlotte A. Chun, Paul J. Silvia, and Thomas R. Kwapil. 2016. Individual Differences in the Executive Control of Attention, Memory, and Thought, and Their Associations with Schizotypy. Journal of Experimental Psychology: General 145: 1017–48.

- Kleiner, Mario, David Brainard, Denis Pelli, A. Ingling, R. Murray, and C. Broussard. 2007. What’s New in Psychtoolbox-3. Perception 36: 1–16.

- Kok, Albert. 2001. On the Utility of P3 Amplitude as a Measure of Processing Capacity. Psychophysiology 38: 557–77.

- Kranzler, John H. 1992. A Test of Larson and Alderton’s (1990) Worst Performance Rule of Reaction Time Variability. Personality and Individual Differences 13: 255–61.

- Krawietz, Sabine A., Andrea K. Tamplin, and Gabriel A. Radvansky. 2012. Aging and Mind Wandering during Text Comprehension. Psychology and Aging 27: 951–58.

- Kuznetsova, Alexandra, Per B. Brockhoff, and Rune H. B. Christensen. 2017. LmerTest Package: Tests in Linear Mixed Effects Models. Journal of Statistical Software 82: 1–26.

- Kyllonen, Patrick C., and Raymond E. Christal. 1990. Reasoning Ability Is (Little More than) Working-Memory Capacity?! Intelligence 14: 389–433.

- Larson, Gerald E., and David L. Alderton. 1990. Reaction Time Variability and Intelligence: A ‘Worst Performance’ Analysis of Individual Differences. Intelligence 14: 309–25.

- Leite, Fábio P. 2009. Should IQ, Perceptual Speed, or Both Be Used to Explain Response Time? The American Journal of Psychology 122: 517–26.

- Leszczynski, Marcin, Leila Chaieb, Thomas P. Reber, Marlene Derner, Nikolai Axmacher, and Juergen Fell. 2017. Mind Wandering Simultaneously Prolongs Reactions and Promotes Creative Incubation. Scientific Reports 7: 10197.

- Lopez-Calderon, Javier, and Steven J. Luck. 2014. ERPLAB: An Open-Source Toolbox for the Analysis of Event-Related Potentials. Frontiers in Human Neuroscience 8: 213.

- Maillet, David, and Daniel L. Schacter. 2016. From Mind Wandering to Involuntary Retrieval: Age-Related Differences in Spontaneous Cognitive Processes. Neuropsychologia 80: 142–56.

- Maillet, David, Roger E. Beaty, Megan L. Jordano, Dayna R. Touron, Areeba Adnan, Paul J. Silvia, Thomas R. Kwapil, Gary R. Turner, R. Nathan Spreng, and Michael J. Kane. 2018. Age-Related Differences in Mind-Wandering in Daily Life. Psychology and Aging 33: 643–53.

- Maillet, David, Lujia Yu, Lynn Hasher, and Cheryl L. Grady. 2020. Age-Related Differences in the Impact of Mind-Wandering and Visual Distraction on Performance in a Go/No-Go Task. Psychology and Aging 35: 627–38.

- McVay, Jennifer C., and Michael J. Kane. 2009. Conducting the Train of Thought: Working Memory Capacity, Goal Neglect, and Mind Wandering in an Executive-Control Task. Journal of Experimental Psychology: Learning, Memory, and Cognition 35: 196–204.

- McVay, Jennifer C., and Michael J. Kane. 2010. Does Mind Wandering Reflect Executive Function or Executive Failure? Comment on Smallwood and Schooler (2006) and Watkins (2008). Psychological Bulletin 136: 188–97.

- McVay, Jennifer C., and Michael J. Kane. 2012. Drifting from Slow to ‘d’oh!’: Working Memory Capacity and Mind Wandering Predict Extreme Reaction Times and Executive Control Errors. Journal of Experimental Psychology: Learning, Memory, and Cognition 38: 525–49.

- Miller, Edward M. 1994. Intelligence and Brain Myelination: A Hypothesis. Personality and Individual Differences 17: 803–32.

- Mognon, Andrea, Jorge Jovicich, Lorenzo Bruzzone, and Marco Buiatti. 2011. ADJUST: An Automatic EEG Artifact Detector Based on the Joint Use of Spatial and Temporal Features. Psychophysiology 48: 229–40.

- Mrazek, Michael D., Dawa T. Phillips, Michael S. Franklin, James M. Broadway, and Jonathan W. Schooler. 2013. Young and Restless: Validation of the Mind-Wandering Questionnaire (MWQ) Reveals Disruptive Impact of Mind-Wandering for Youth. Frontiers in Psychology 4: 560.

- Nash, J. C., and R. Varadhan. 2011. Unifying Optimization Algorithms to Aid Software System Users: Optimx for R. Journal of Statistical Software 43: 1–14. Available online: http://www.jstatsoft.org/v43/i09/ (accessed on 23 December 2021).

- O’Connell, Redmond G., Paul M. Dockree, Ian H. Robertson, Mark A. Bellgrove, John J. Foxe, and Simon P. Kelly. 2009. Uncovering the Neural Signature of Lapsing Attention: Electrophysiological Signals Predict Errors up to 20 s before They Occur. Journal of Neuroscience 29: 8604–11.

- Oberauer, Klaus, Ralf Schulze, Oliver Wilhelm, and Heinz-Martin Süß. 2005. Working Memory and Intelligence--Their Correlation and Their Relation: Comment on Ackerman, Beier, and Boyle (2005). Psychological Bulletin 131: 61–65.

- Polich, John. 2007. Updating P300: An Integrative Theory of P3a and P3b. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology 118: 2128–48.

- R Core Team. 2021. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

- Rammsayer, Thomas, and Stefan Troche. 2016. Validity of the Worst Performance Rule as a Function of Task Complexity and Psychometric g: On the Crucial Role of g Saturation. Journal of Intelligence 4: 5.

- Randall, Jason G., Frederick L. Oswald, and Margaret E. Beier. 2014. Mind-Wandering, Cognition, and Performance: A Theory-Driven Meta-Analysis of Attention Regulation. Psychological Bulletin 140: 1411–31.

- Ratcliff, Roger. 1978. A Theory of Memory Retrieval. Psychological Review 85: 59–108.

- Ratcliff, Roger, Florian Schmiedek, and Gail McKoon. 2008. A Diffusion Model Explanation of the Worst Performance Rule for Reaction Time and IQ. Intelligence 36: 10–17.

- Ratcliff, Roger, Anjali Thapar, and Gail McKoon. 2010. Individual Differences, Aging, and IQ in Two-Choice Tasks. Cognitive Psychology 60: 127–57.

- Ratcliff, Roger, Anjali Thapar, and Gail McKoon. 2011. The Effects of Aging and IQ on Item and Associative Memory. Journal of Experimental Psychology: General 140: 464–87.

- Revelle, W. 2020. Psych: Procedures for Psychological, Psychometric, and Personality Research. R Package Version 2.0.12. Evanston: Northwestern University. Available online: https://CRAN.R-project.org/package=psych (accessed on 16 March 2021).

- Robison, Matthew K., Ashley L. Miller, and Nash Unsworth. 2020. A Multi-Faceted Approach to Understanding Individual Differences in Mind-Wandering. Cognition 198: 104078.

- Salthouse, Timothy A. 1993. Attentional Blocks Are Not Responsible for Age-Related Slowing. Journal of Gerontology 48: P263–P270.

- Salthouse, Timothy A. 1998. Relation of Successive Percentiles of Reaction Time Distributions to Cognitive Variables and Adult Age. Intelligence 26: 153–66.

- Saville, Christopher W. N., Kevin D. O. Beckles, Catherine A. MacLeod, Bernd Feige, Monica Biscaldi, André Beauducel, and Christoph Klein. 2016. A Neural Analogue of the Worst Performance Rule: Insights from Single-Trial Event-Related Potentials. Intelligence 55: 95–103.

- Scharfen, Jana, Judith Marie Peters, and Heinz Holling. 2018. Retest Effects in Cognitive Ability Tests: A Meta-Analysis. Intelligence 67: 44–66.

- Schmiedek, Florian, Klaus Oberauer, Oliver Wilhelm, Heinz-Martin Süß, and Werner W. Wittmann. 2007. Individual Differences in Components of Reaction Time Distributions and Their Relations to Working Memory and Intelligence. Journal of Experimental Psychology: General 136: 414–29.

- Schmitz, Florian, and Oliver Wilhelm. 2016. Modeling Mental Speed: Decomposing Response Time Distributions in Elementary Cognitive Tasks and Correlations with Working Memory Capacity and Fluid Intelligence. Journal of Intelligence 4: 13.

- Schmitz, Florian, Dominik Rotter, and Oliver Wilhelm. 2018. Scoring Alternatives for Mental Speed Tests: Measurement Issues and Validity for Working Memory Capacity and the Attentional Blink Effect. Journal of Intelligence 6: 47.

- Schönbrodt, Felix D., and Marco Perugini. 2013. At What Sample Size Do Correlations Stabilize? Journal of Research in Personality 47: 609–12.

- Schubert, Anna-Lena. 2019. A Meta-Analysis of the Worst Performance Rule. Intelligence 73: 88–100.

- Schubert, Anna-Lena, Dirk Hagemann, Andreas Voss, Andrea Schankin, and Katharina Bergmann. 2015. Decomposing the Relationship between Mental Speed and Mental Abilities. Intelligence 51: 28–46.

- Schubert, Anna-Lena, Gidon T. Frischkorn, Dirk Hagemann, and Andreas Voss. 2016. Trait Characteristics of Diffusion Model Parameters. Journal of Intelligence 4: 7.

- Schubert, Anna-Lena, Gidon T. Frischkorn, and Jan Rummel. 2020. The Validity of the Online Thought-Probing Procedure of Mind Wandering Is Not Threatened by Variations of Probe Rate and Probe Framing. Psychological Research 84: 1846–56.

- Seli, Paul, James Allan Cheyne, and Daniel Smilek. 2013. Wandering Minds and Wavering Rhythms: Linking Mind Wandering and Behavioral Variability. Journal of Experimental Psychology: Human Perception and Performance 39: 1–5.

- Seli, Paul, Jonathan S. A. Carriere, David R. Thomson, James Allan Cheyne, Kaylena A. Ehgoetz Martens, and Daniel Smilek. 2014. Restless Mind, Restless Body. Journal of Experimental Psychology: Learning, Memory, and Cognition 40: 660–68.

- Sheppard, Leah D., and Philip A. Vernon. 2008. Intelligence and Speed of Information-Processing: A Review of 50 Years of Research. Personality and Individual Differences 44: 535–51.

- Shipstead, Zach, Tyler L. Harrison, and Randall W. Engle. 2016. Working Memory Capacity and Fluid Intelligence: Maintenance and Disengagement. Perspectives on Psychological Science 11: 771–99.

- Skrondal, Anders, and Petter Laake. 2001. Regression among Factor Scores. Psychometrika 66: 563–75.

- Smallwood, Jonathan, and Jonathan W. Schooler. 2006. The Restless Mind. Psychological Bulletin 132: 946–58.

- Smallwood, Jonathan, and Jonathan W. Schooler. 2015. The Science of Mind Wandering: Empirically Navigating the Stream of Consciousness. Annual Review of Psychology 66: 487–518.

- Smallwood, Jonathan, Merrill McSpadden, and Jonathan W. Schooler. 2007. The Lights Are on but No One’s Home: Meta-Awareness and the Decoupling of Attention When the Mind Wanders. Psychonomic Bulletin & Review 14: 527–33.

- Smallwood, Jonathan, Emily Beach, Jonathan W. Schooler, and Todd C. Handy. 2008. Going AWOL in the Brain: Mind Wandering Reduces Cortical Analysis of External Events. Journal of Cognitive Neuroscience 20: 458–69.

- Sorjonen, Kimmo, Guy Madison, Bo Melin, and Fredrik Ullén. 2020. The Correlation of Sorted Scores Rule. Intelligence 80: 101454.

- Sorjonen, Kimmo, Guy Madison, Tomas Hemmingsson, Bo Melin, and Fredrik Ullén. 2021. Further Evidence That the Worst Performance Rule Is a Special Case of the Correlation of Sorted Scores Rule. Intelligence 84: 101516.

- Steindorf, Lena, and Jan Rummel. 2020. Do Your Eyes Give You Away? A Validation Study of Eye-Movement Measures Used as Indicators for Mindless Reading. Behavior Research Methods 52: 162–76.

- Sudevan, Padmanabhan, and David A. Taylor. 1987. The Cuing and Priming of Cognitive Operations. Journal of Experimental Psychology: Human Perception and Performance 13: 89–103.

- Thomson, David R., Paul Seli, Derek Besner, and Daniel Smilek. 2014. On the Link between Mind Wandering and Task Performance over Time. Consciousness and Cognition 27: 14–26.

- Thut, Gregor, Annika Nietzel, Stephan A. Brandt, and Alvaro Pascual-Leone. 2006. α-Band Electroencephalographic Activity over Occipital Cortex Indexes Visuospatial Attention Bias and Predicts Visual Target Detection. Journal of Neuroscience 26: 9494–502.

- Unsworth, Nash, Thomas S. Redick, Chad E. Lakey, and Diana L. Young. 2010. Lapses in Sustained Attention and Their Relation to Executive Control and Fluid Abilities: An Individual Differences Investigation. Intelligence 38: 111–22.

- Verhaeghen, Paul, and Timothy A. Salthouse. 1997. Meta-Analyses of Age–Cognition Relations in Adulthood: Estimates of Linear and Nonlinear Age Effects and Structural Models. Psychological Bulletin 122: 231–49.

- Verleger, Rolf. 2020. Effects of Relevance and Response Frequency on P3b Amplitudes: Review of Findings and Comparison of Hypotheses about the Process Reflected by P3b. Psychophysiology 57: e13542.

- Watkins, Edward R. 2008. Constructive and Unconstructive Repetitive Thought. Psychological Bulletin 134: 163–206.

- Weinstein, Yana. 2018. Mind-Wandering, How Do I Measure Thee with Probes? Let Me Count the Ways. Behavior Research Methods 50: 642–61.

- Welhaf, Matthew S., Bridget A. Smeekens, Matt E. Meier, Paul J. Silvia, Thomas R. Kwapil, and Michael J. Kane. 2020. The Worst Performance Rule, or the Not-Best Performance Rule? Latent-Variable Analyses of Working Memory Capacity, Mind-Wandering Propensity, and Reaction Time. Journal of Intelligence 8: 25.

- Wickham, Hadley, Mara Averick, Jennifer Bryan, Winston Chang, Lucy D’Agostino McGowan, Romain François, and Garrett Grolemund. 2019. Welcome to the Tidyverse. Journal of Open Source Software 4: 1686.

| | Mean | SD | Reliability | N |
|---|---|---|---|---|
| ACC | 96 | 2 | --- | 85 |
| RT | 836.69 | 154.06 | .99 | 85 |
| Intelligence | 1498.29 | 80.02 | .79 | 85 |
| IQ | 94.58 | 16.12 | .79 | 85 |
| TUT | 26.07 | 19.24 | .96 | 85 |
| Q-SMW over all | 37.64 | 8.88 | .81 | 85 |
| Q-SMW/item | 5.38 | 1.29 | --- | 85 |
| MRT | 73.49 | 29.45 | .99 | 85 |
| P1 amplitude | 0.94 | 1.34 | .96 | 84 |
| P3 amplitude | 3.91 | 2.97 | .99 | 84 |
| Alpha power | 1.20 | 0.94 | .92 | 84 |
| Theta power | 0.00 | 0.84 | .72 | 84 |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Mean RT | | | | | | | | | |
| 2. SD RT | .86 *** | | | | | | | | |
| 3. Intelligence | −.29 ** | −.30 ** | | | | | | | |
| 4. TUT | −.12 | −.27 * | .15 | | | | | | |
| 5. Q-SMW | −.11 | −.04 | .09 | .30 ** | | | | | |
| 6. MRT | .31 ** | .32 ** | −.27 * | −.03 | −.11 | | | | |
| 7. P1 amplitude | −.11 | −.06 | .03 | −.02 | .06 | −.22 * | | | |
| 8. P3 amplitude | .03 | .03 | −.05 | .01 | −.07 | −.02 | .27 * | | |
| 9. Alpha power | −.18 | −.16 | .03 | −.11 | −.13 | .06 | .06 | .02 | |
| 10. Theta power | −.18 | −.19 | .18 | .09 | .09 | .03 | −.09 | −.16 | −.05 |

| RT on | b-Weight (Standard Error) | df | t-Value | Random Effect SD | p |
|---|---|---|---|---|---|
| intercept | 835.82 (15.86) | 85 | 52.62 | 146.45 | <.001 |
| intelligence | −44.18 (15.98) | 85 | −2.77 | | .007 |
| trial number | 146.99 (5.20) | 85 | 28.26 | 47.95 | <.001 |
| trial number × intelligence = WPR | −14.93 (5.23) | 85 | −2.85 | | .005 |

| RT on | b-Weight (Standard Error) | df | t-Value | Random Effect SD | p |
|---|---|---|---|---|---|
| intercept | 835.82 (15.40) | 85 | 54.29 | 96.56 | <.001 |
| intelligence | −44.18 (15.49) | 85 | −2.85 | | .005 |
| trial number | 146.99 (4.91) | 85 | 29.91 | 47.38 | <.001 |
| control | −835.82 (0.27) | 57630 | −3091.39 | | <.001 |
| trial number × intelligence = WPR | −14.93 (4.94) | 85 | −3.02 | | .003 |
| intelligence × control | 15.10 (0.27) | 57630 | 55.53 | | <.001 |
| trial number × control | −146.99 (0.23) | 57630 | −627.78 | | <.001 |
| trial number × intelligence × control | 6.05 (0.24) | 57630 | 25.70 | | <.001 |

| | Mean | SD | Reliability | N |
|---|---|---|---|---|
| RT AF | 461.03 | 49.65 | .99 | 463 |
| RT LF | 532.35 | 85.93 | .99 | 416 |
| RT Stroop | 508.34 | 49.86 | .99 | 460 |
| RT SART | 510.62 | 81.94 | .99 | 441 |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Mean RT AF | | | | | | | | | |
| 2. SD RT AF | .65 *** | | | | | | | | |
| 3. Mean RT LF | .53 *** | .42 *** | | | | | | | |
| 4. SD RT LF | .34 *** | .40 *** | .73 *** | | | | | | |
| 5. Mean RT Stroop | .63 *** | .40 *** | .49 *** | .33 *** | | | | | |
| 6. SD RT Stroop | .31 *** | .48 *** | .30 *** | .32 *** | .52 *** | | | | |
| 7. Mean RT SART | .11 * | −.04 | .12 * | .05 | .24 *** | .02 | | | |
| 8. SD RT SART | .13 ** | .18 *** | .14 ** | .16 ** | .23 *** | .28 *** | .21 *** | | |
| 9. WMC | −.20 *** | −.22 *** | −.19 *** | −.20 *** | −.23 *** | −.25 *** | −.01 | −.23 *** | |
| 10. TUT | .12 * | .20 *** | .19 *** | .26 *** | .16 ** | .22 *** | −.02 | .21 *** | −.23 *** |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Löffler, C.; Frischkorn, G.T.; Rummel, J.; Hagemann, D.; Schubert, A.-L. Do Attentional Lapses Account for the Worst Performance Rule? J. Intell. 2022, 10, 2. https://doi.org/10.3390/jintelligence10010002
