Abstract

Identifying human face shape and eye attributes is the first and most vital step before choosing the right hairstyle and eyelash extension. The aim of this research work is to develop a decision support program that automatically analyses eye and face features from an image taken of a user. The system recommends a suitable eyelash type and hairstyle based on the automatically reported eye and face features of the user. To achieve this aim, we develop a multi-model system comprising three separate models, each targeting a different task: face shape classification, eye attribute identification, and gender detection. The face shape classification system is based on a hybrid framework of handcrafted and learned features. Eye attributes are identified by exploiting geometrical eye measurements computed from the detected eye landmarks. The gender identification system is implemented using a deep learning-based approach. The outputs of the three developed models are merged to design a decision support system for haircut and eyelash extension recommendation. The obtained detection results demonstrate that the proposed method effectively identifies the face shape and eye attributes. Such computer-aided systems are suitable and beneficial for users and for the beauty industry.

1. Introduction

The human face plays an important role in daily life. Pursuing beauty, especially facial beauty, is part of human nature. As the demand for aesthetic surgery has increased widely over the past few years, an understanding of beauty is becoming increasingly important in medical settings. The physical beauty of faces affects many social outcomes, such as mate choices and hiring decisions [1,2]. Thus, the cosmetic industry has produced various products that aim to enhance different parts of the human body, including hair, skin, eyes, eyebrows, and lips. Not surprisingly, research topics based on facial features have a long track record in psychology and many other scientific fields [3]. During recent decades, computer vision systems have played a major role in obtaining an image from a camera and then processing and analysing it in a manner similar to the natural human vision system [4]. Computer vision algorithms have recently attracted increasing attention and are considered one of the hottest topics owing to their significant role in healthcare, industrial and commercial applications [4,5,6,7,8,9]. Facial image processing and analysis are essential techniques that help extract information from images of human faces. The extracted information, such as the locations of facial features (eyes, nose, eyebrows, mouth, and lips), can play a major role in several fields, such as medicine, security, the cosmetic industry, social media applications, and recognition [5]. Several techniques have been developed to localise these parts and extract them for analysis [10]. The landmarks used in computational face analysis often resemble the anatomical soft tissue landmarks that are used by physicians [11].

Recently, advanced technologies such as artificial intelligence and machine/deep learning algorithms have helped the beauty industry in several ways, from providing statistical bases for attractiveness and helping people alter their looks to developing products that tackle the specific needs of customers [12,13]. Furthermore, cloud computing facilities and data centre services have gained a lot of attention owing to their significant role in giving customers access to such products through web-based and mobile applications [14].

In the literature, many facial attribute analysis methods have been presented to recognise whether a specific facial attribute is present in a given image. The main aim of developing facial attribute analysis methods was to build a bridge between the feature representations required by real-world computer vision tasks and human-understandable visual descriptions [15,16]. Deep learning-based facial attribute analysis methods can generally be grouped into two categories: holistic methods, which exploit the relationships among attributes to obtain more discriminative cues [17,18,19], and part-based methods, which emphasise facial details for detecting localised features [20,21]. Unlike the existing facial attribute analysis methods, which focus on recognising whether a specific facial attribute is present in a given face image or not, our proposed method assumes that every attribute of interest is present and assigns it one of several possible labels.

Furthermore, many automated face shape classification systems have been presented in the literature. Many of these published methods extract the face features manually and then pass them to a classifier, such as linear discriminant analysis (LDA), artificial neural networks (ANN), and support vector machines (SVM) [22,23], k-nearest neighbours [24], and probabilistic neural networks [25]. Furthermore, Bansode et al. [26] proposed a face shape identification method based on three criteria: region similarity, correlation coefficient and fractal dimensions. Recently, Pasupa et al. [27] presented a hybrid approach combining a VGG convolutional neural network (CNN) with an SVM for face shape classification. Moreover, Emmanuel [28] adopted a pretrained Inception CNN for classifying face shape using features extracted automatically by the CNN. The work presented by these researchers showed progress in face shape interpretation; however, existing face classification systems require more effort to achieve better performance. The aforementioned methods perform well only on images of subjects who look straight towards the camera, hold their body in a controlled position, and are photographed under clear lighting.

Recently, many approaches have been developed for building fashion recommender systems [29,30]. A thorough search of the literature for existing recommendation systems reveals two hairstyle recommendation systems [27,31]. The system developed by Liang and Wang [31] considers many attributes, such as age, gender, skin colour, occupation and customer rating, for recommending a hairstyle. However, the recommended haircut style might not match the recommendations that beauty experts make based on face shape attributes. Furthermore, the hairstyle recommender system presented in [27] is not general and focuses only on women. To the best of our knowledge, our proposed eyelash and hairstyle recommendation system is the first study to automatically recommend a suitable eyelash type based on computer vision techniques.

Facial attributes, such as eye features (shape, size, position, and setting), face shape, and contour, determine which makeup technique, eyelash extension and haircut style should be applied. Therefore, it is important for a beautician to know a client's face and eye features before applying makeup, a haircut style or eyelash extensions. The face and eye features are essential for a beauty expert because the type of face shape and the eye features are critical information for deciding what kind of eye shadow, eyeliner, eyelash extension, haircut style and colour of cosmetics is best suited to a particular individual. Thus, automating facial attribute analysis through computer-based models for cosmetic purposes would help to ease people's lives and reduce the time and effort spent by beauty experts. Furthermore, virtual consultation and recommendation systems based on facial and eye attributes [27,31,32,33,34,35,36] have secured a foothold in the market, and individuals are opening up to the possibility of substituting a visit to a physical facility with an online option or task-specific software. Virtual recommendation systems have numerous advantages, including accessibility, enhanced privacy and communication, cost saving, and comfort. Hence, the motivation of our work is to automate the identification of eye attributes and face shape and subsequently recommend appropriate eyelashes and a suitable hairstyle to the user.

This research work includes the development of a system with a user-friendly graphical user interface that can analyse eye and face attributes from an input image. Based on the detected eye and face features, the system recommends suitable eyelashes and hairstyles for both men and women. The proposed framework integrates three main models to make a decision on the input image: a face shape classification model, a gender prediction model, and an eye attribute identification model. Machine and deep learning approaches, together with various facial image analysis strategies including facial landmark detection, facial alignment, and geometry measurement schemes, are used to realise the developed system. The main contributions and advantages of this work are summarised as follows:

- We introduce a new framework merging two types of virtual recommendation, including hairstyle and eyelashes. The overall framework is novel and promising.

- The proposed method is able to extract many complex facial features, including eye attributes and face shape, accomplishing the extraction of features and detection simultaneously.

- The developed system could help workers in beauty centres and reduce their workload by automating manual work that can otherwise produce subjective results, particularly with a large dataset.

- Our user-friendly interface system has been evaluated on a dataset provided with a diversity of lighting, age, and ethnicity.

- The labelling of a publicly available dataset with three eye attributes, five face shape classes, and gender has been conducted by a beauty expert to run our experiments.

- In the face shape classification method, we developed a method based on merging the handcrafted features with deep learning features.

- We presented a new geometrical measurement method to determine the eye features, including eye shape, position, and setting, based on the coordinates of twelve detected eye landmarks.

2. Development of Hairstyle and Eyelash Recommendation System

2.1. Data Preparation: Facial and Eye Attributes

A publicly available dataset, MUCT [37], is used to conduct our experiments and to evaluate the developed face shape and eye attribute identification system. The MUCT database consists of 3755 faces captured from 276 subjects with 76 manual landmarks. The database was created to provide more diversity of lighting, age and gender, together with occlusion (some subjects wear glasses), than other available landmarked 2D face databases. The resolution of the images is 480 × 640 pixels. Five webcams were used to photograph each subject. The five webcams are located in five different positions, none of which is to the left of the subject. Each subject was photographed with two or three lighting settings, producing ten different lighting setups across the five webcams. To achieve diversity without too many images, not every subject was photographed with every lighting setup.

The MUCT dataset does not provide face shape and eye attribute labels. Therefore, we recruited a beauty expert to provide the ground truth by labelling the images in the dataset. To formulate our research problem, we first set up the attributes to be identified. The face shape and eye attributes to be detected in this research work are defined as follows:

- Eye Shape: Two attributes (Almond or Round),

- Eye Setting: Three attributes (Wide, Close, or Proportional),

- Eye Position/Pitch: Three attributes (Upturned, Downturned, or Straight),

- Face Shape: Five attributes (Round, Oval, Square, Rectangle (Oblong), Heart Shape).

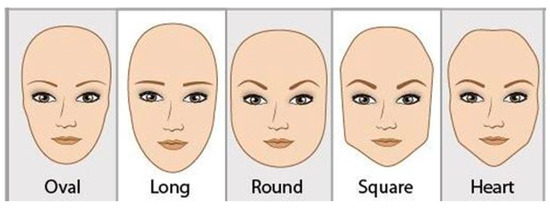

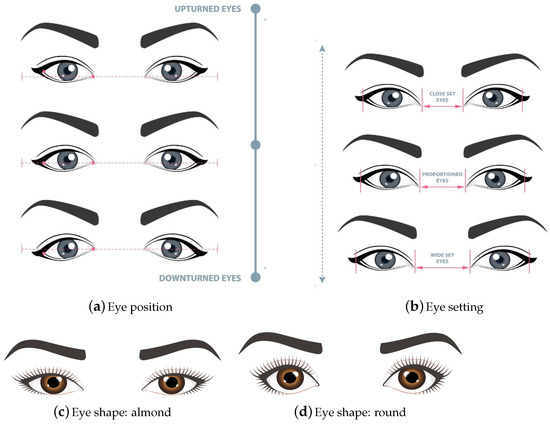

Identifying these attributes would help automatically report eyelashes and haircut style recommendations that meet the eye and face attributes. Illustrations of these attributes are shown in Figure 1 and Figure 2.

Figure 1. Categories of face shape [38].

Figure 2. Eye attributes. Adapted from [39,40].

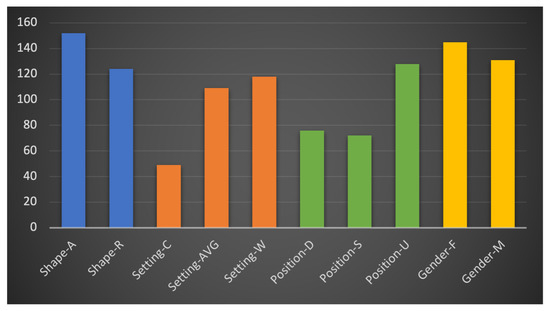

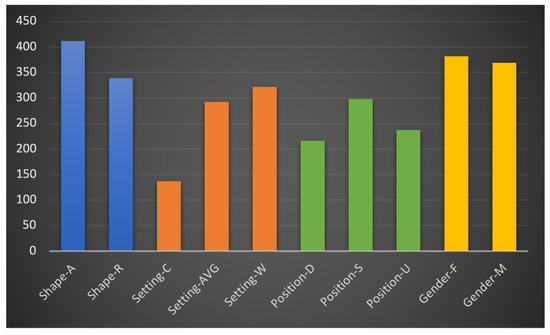

The process of determining the facial shape and eye attributes manually by beauty experts can be carried out in several stages, such as outlining the face and measuring the width and length of the face, jaw, forehead, and cheekbones, the gap between the eyes, and the outer and inner corners of the eye. According to beauty experts, the face shape can be assigned to one of five categories: oval, square, round, oblong, and heart [41]. The guidelines provided by the beauty experts Derrick and Brooke [41,42] have been followed to label the face shape of the 276 subjects into five classes. Furthermore, to provide the ground truth of the eye attributes, the detailed guidelines and criteria presented in [43] have been followed by a beauty expert. The distribution of the manually labelled data is depicted in Figure 3 and Figure 4, which show the distribution of attributes over the 276 subjects and per camera, respectively.

Figure 3. Distribution of attributes over 276 subjects.

Figure 4. Distribution of attributes over 751 images (per camera).

2.2. Developed Multi-Model System

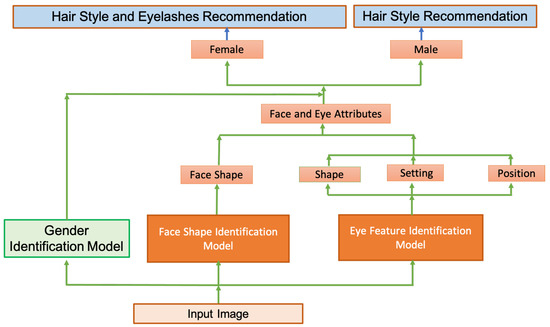

To develop a personalised and automatic approach to finding the appropriate haircut and/or eyelash style for men and women, we develop a multi-model system comprising three separate models, as shown in Figure 5. Each model is dedicated to a specific task: face shape identification, eye attribute identification, or gender identification. The diagram shows that the eye features extracted from an input image by the eye feature identification model are merged with the identified face shape class. The gender of the individual in the input image is detected by the gender identification model. Thus, the face and eye attributes are combined with the identified gender information. If the gender in the input image is male, then only a hairstyle recommendation for men is given; otherwise, both hairstyle and eyelash recommendations are reported. The three models are described as follows:

Figure 5. Block diagram of the proposed method including three models: face shape identification model, eye feature identification model, and gender identification model.

2.2.1. Face Shape Identification Model

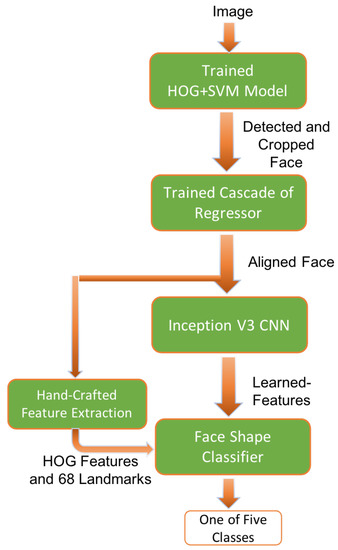

To identify the face shape, our previously developed model [44], which merges hand-crafted features with automatically learned features, was trained and tested on data from MUCT. The MUCT data were randomly split into 3255 images for training, and 500 images were retained for testing. The developed model (shown in Figure 6) performs the classification as follows: (1) the face region is detected and cropped using a model trained on histogram of oriented gradients (HOG) features with a support vector machine (SVM) classifier [45]; (2) the detected face is aligned using the face landmarks (68 landmarks) detected by the ensemble of regression trees (ERT) method [46]; and (3) the aligned images are used for training and evaluating an Inception V3 convolutional neural network [47], along with hand-engineered HOG features and landmarks, to classify the face into one of five classes. For further details about the face shape identification model, please refer to [44].

Figure 6. The developed face shape classification system [44].

2.2.2. Eye Attributes Identification

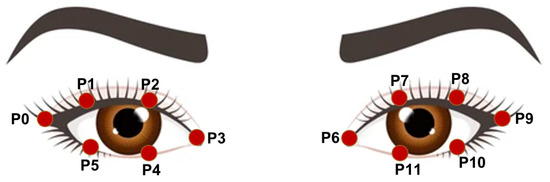

This model is developed using geometrical face/eye measurements based on the coordinates of the detected eye landmarks, from which the eye specifications (shape, setting, position) are obtained. First, the face region is detected, and the landmarks of the eye region are localised using the methods presented in our face shape classification system [44,45,46]. We further customised the landmark predictor to return just the locations of the eyes using the dlib library. Only six landmarks per eye (12 for the two eyes) are taken into consideration for predicting the eye attributes, as shown in Figure 7. Given the 12 eye landmark points $P_i = (x_i, y_i)$, $i = 1, \dots, 12$, the eye specifications can be calculated using geometrical eye measurements as follows:

Figure 7. The six landmarks taken into consideration to predict the eye attributes using geometrical measurements.

- Eye Setting: The eye setting is determined by the ratio $S$ of the distance between the two eyes, $D$, to the eye width, $W$:

  $$S = \frac{D}{W}$$

  The eye width is the distance between the start and the end of an individual eye. The distance $d$ between two facial landmark points $(x_1, y_1)$ and $(x_2, y_2)$ is the Euclidean distance:

  $$d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$$

  The threshold values for category classification are chosen empirically as follows:

  $$\text{Eye setting} = \begin{cases} \text{Close}, & S < \operatorname{med}(S) \\ \text{Proportional}, & \operatorname{med}(S) \le S < \dfrac{\operatorname{med}(S) + \max(S)}{2} \\ \text{Wide}, & \text{otherwise} \end{cases} \tag{6}$$

  In Equation (6), the threshold used to assign the (Close) label is the median of the subjects' eye setting measurements. Thus, an eye setting value that is less than the median is labelled as (Close). Likewise, the midpoint between the median and the maximum is used to differentiate between the (Proportional) and (Wide) labels. For example, an eye setting value that is greater than the median and less than the midpoint is labelled as (Proportional); otherwise, it is labelled as (Wide).

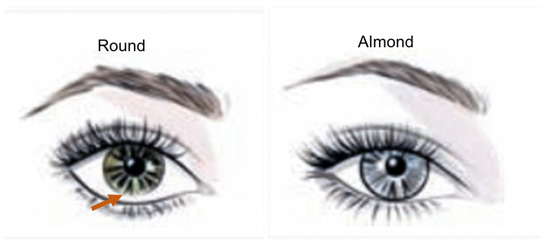

- Eye Shape: The criterion for discriminating round eyes from almond eyes is that, for round eyes, the white sclera area below the iris is visible [43], as illustrated in Figure 8. Our proposed criterion uses the eye aspect ratio, computed from the detected eye landmarks, to obtain an eye shape value $E$. The threshold value for category classification is chosen empirically as follows:

  $$\text{Eye shape} = \begin{cases} \text{Almond}, & E < \operatorname{med}(E) \\ \text{Round}, & \text{otherwise} \end{cases} \tag{8}$$

  In Equation (8), the threshold used to differentiate between the (Almond) and (Round) labels is the median of the subjects' eye shape measurements. Thus, an eye shape value that is less than the median is labelled as (Almond); otherwise, it is labelled as (Round).

Figure 8. Round vs. almond shape.

- Eye Position: The eye position, categorised as up-turned, down-turned or straight, is determined geometrically by measuring the slope angle (in degrees). The slope angle $\theta$ is defined as the angle between a horizontal line and a slanted line drawn between two landmark points of an eye, where each point is represented by its coordinates $(x, y)$. The angle is calculated from the slopes $m_1$ and $m_2$ of the two lines:

  $$\theta = \tan^{-1}\left(\frac{m_1 - m_2}{1 + m_1 m_2}\right), \qquad m = \frac{y_2 - y_1}{x_2 - x_1}$$

  The threshold values for category classification are chosen empirically as follows:

  $$\text{Eye position} = \begin{cases} \text{Up-turned}, & \theta > \operatorname{med}(\theta) \\ \text{Straight}, & 0 \le \theta \le \operatorname{med}(\theta) \\ \text{Down-turned}, & \theta < 0 \end{cases} \tag{12}$$

  In Equation (12), the threshold used to assign the (Up-turned) label is the median of the subjects' eye position measurements. Thus, an eye position value that is greater than the median is labelled as (Up-turned). Likewise, a value between zero and the median is labelled as (Straight). Any value less than zero (negative) is identified as (Down-turned).
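The three geometric rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the landmark ordering inside `eye_aspect_ratio` follows the commonly used eye-aspect-ratio convention (two corner points and two upper/lower lid pairs), which may differ from the exact formula used in the paper, and the slope angle assumes a y-axis that grows upwards (image coordinates would need the sign flipped). Only the median-based thresholds are taken directly from the text.

```python
import math
from statistics import median


def distance(p, q):
    """Euclidean distance between two (x, y) landmark points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def eye_aspect_ratio(eye):
    """Common eye-aspect-ratio definition for six landmarks p1..p6.

    Assumed ordering (hypothetical): p1 and p4 are the eye corners,
    p2, p3 lie on the upper lid and p6, p5 on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (distance(p2, p6) + distance(p3, p5)) / (2.0 * distance(p1, p4))


def slope_angle_deg(p, q):
    """Angle in degrees between the horizontal and the line from p to q.

    Assumes y grows upwards; negate the result for image coordinates.
    """
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))


def label_setting(value, med, maximum):
    """Close below the median, Wide above the median/maximum midpoint."""
    midpoint = (med + maximum) / 2.0
    if value < med:
        return "Close"
    if value < midpoint:
        return "Proportional"
    return "Wide"


def label_shape(value, med):
    """Almond below the median eye-shape value, Round otherwise."""
    return "Almond" if value < med else "Round"


def label_position(angle, med):
    """Down-turned for negative angles, Up-turned above the median."""
    if angle < 0:
        return "Downturned"
    if angle <= med:
        return "Straight"
    return "Upturned"


# Illustrative (made-up) eye-setting ratios for five subjects.
ratios = [0.9, 1.0, 1.1, 1.3, 1.6]
setting_labels = [label_setting(r, median(ratios), max(ratios)) for r in ratios]
```

Because the thresholds are statistics of the measured population (median and maximum), they have to be recomputed, or stored, whenever the reference set of subjects changes.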

2.2.3. Gender Identification

Gender identification is a prerequisite step in our developed framework. If the gender in the input image is male, the recommendation engine reports only the suitable hairstyle for men; otherwise, the system recommends both a hairstyle and an eyelash extension for women. The deep learning approach developed in [48] has been adopted to achieve this task. A convolutional neural network composed of three convolutional layers, each followed by a rectified linear operation and a pooling layer, is implemented. The first two layers are also followed by local response normalisation. The first convolutional layer contains 96 filters of 7 × 7 pixels, the second convolutional layer contains 256 filters of 5 × 5 pixels, and the third and final convolutional layer contains 384 filters of 3 × 3 pixels. Finally, two fully connected layers are added, each containing 512 neurons.

The Adience Benchmark dataset [48] is used to train the gender identification model. The Adience Benchmark is a collection of unfiltered face images collected from Flickr. It contains 26,580 images of 2284 unique subjects that are unconstrained, with extreme blur (low resolution), occlusions, out-of-plane pose variations, and different expressions. Images are first rescaled to 256 × 256, and a crop of 227 × 227 is fed to the network. All MUCT images, of which 52.5% show female subjects, were used for testing.

2.2.4. Rule-Based Hairstyle and Eyelash Recommendation System

The right shape of artificial eyelash extensions can be used to correct and balance facial symmetry and to open the eyes wider. Eyelash extensions can produce an illusion of various eye effects, giving the appearance of makeup on the eye without applying it. They can also create the appearance of a lifted eye, or even a more feline look [49]. For the best hairstyle, instead of choosing the latest fashion, one should pick a style that fits the face shape. The correct haircut for the face shape will frame and balance it while showing the best features for a flattering and complementary appearance [50,51].

The guidelines from specialised websites [49,50,51] are followed to implement the rules of the recommendation engine for hairstyle and eyelashes in our proposed system. The rule-based inference engine focuses on knowledge representation and applies rules to derive new information from existing knowledge. When the data (eye attributes, face shape, gender) match the rule conditions, the inference engine interprets the facts in the knowledge base by applying logical rules and deduces the suitable recommendation using if-then operations. For instance,

IF (Gender is Male) AND (Face Shape is Round) THEN Haircut Recommendation is Side part, French crop, pompadour.
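The if-then matching described above can be sketched as a small rule table in Python. This is a minimal sketch under stated assumptions: the `RULES` layout and the `recommend` function are hypothetical names introduced here for illustration, only the first rule is quoted from the text, and the second entry is a labelled placeholder rather than a rule from the paper.

```python
# Each rule: (conditions that must all hold, recommendation type, items).
RULES = [
    # Rule quoted in the text.
    ({"gender": "Male", "face_shape": "Round"}, "haircut",
     ["Side part", "French crop", "pompadour"]),
    # Hypothetical placeholder rule, NOT taken from the paper.
    ({"gender": "Female", "eye_shape": "Almond"}, "eyelash",
     ["<placeholder eyelash style>"]),
]


def recommend(facts):
    """Fire every rule whose conditions all match the detected facts.

    `facts` maps attribute names (gender, face_shape, eye_shape, ...)
    to the labels produced by the three models.
    """
    results = {}
    for conditions, target, items in RULES:
        # Eyelash recommendations are only reported for non-male users,
        # mirroring the gender gate described in the framework.
        if target == "eyelash" and facts.get("gender") == "Male":
            continue
        if all(facts.get(key) == value for key, value in conditions.items()):
            results.setdefault(target, []).extend(items)
    return results


detected = {"gender": "Male", "face_shape": "Round", "eye_shape": "Almond"}
male_round = recommend(detected)
```

Keeping the rules as data rather than nested `if` statements makes it straightforward to extend the knowledge base when new expert guidelines are added.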

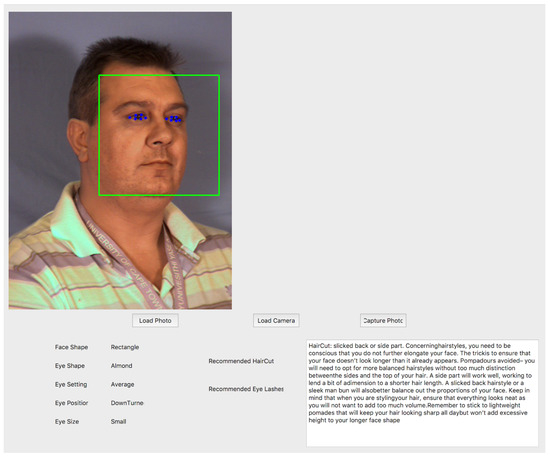

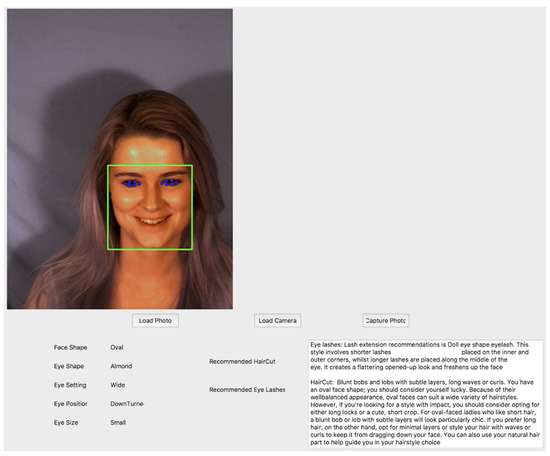

Figure 9 and Figure 10 present the graphical user interface (GUI) of the developed system showing an input image (man and woman) with the automatically detected landmarks, automatically extracted facial/eye attributes and the recommended haircut and eyelash extension.

Figure 9. GUI of facial and eye attribute prediction showing an example of a man.

Figure 10. GUI of facial and eye attribute prediction showing an example of a woman.

3. Results and Discussions

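A new approach based on merging three models in one framework has been proposed for the simultaneous detection of the face shape, eye attributes, and gender from webcam images. The designed framework achieves the detection by extracting hand-crafted features, learning features automatically, and exploiting face geometry measurements. To evaluate the performance of the proposed system, different measurements, including accuracy (Acc.), sensitivity (Sn.), specificity (Sp.), Matthews Correlation Coefficient (MCC), precision (Pr.), and $F_1$ score, have been calculated. These metrics are defined as follows:

$$\text{Acc} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \text{Sn} = \frac{TP}{TP + FN}, \qquad \text{Sp} = \frac{TN}{TN + FP}$$

$$\text{Pr} = \frac{TP}{TP + FP}, \qquad F_1 = \frac{2 \cdot \text{Pr} \cdot \text{Sn}}{\text{Pr} + \text{Sn}}$$

$$\text{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

where $TP$: True Positive, $TN$: True Negative, $FP$: False Positive, $FN$: False Negative.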
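For reference, the standard definitions of these six metrics can be computed from the four confusion counts as in the sketch below. The function name `binary_metrics` and the zero-denominator convention (returning 0.0) are assumptions made for this illustration, and the counts in the usage example are made up rather than taken from the paper's results.

```python
import math


def _safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, specificity, precision, F1 and MCC."""
    sensitivity = _safe_div(tp, tp + fn)
    precision = _safe_div(tp, tp + fp)
    mcc_denominator = math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "acc": _safe_div(tp + tn, tp + tn + fp + fn),
        "sn": sensitivity,
        "sp": _safe_div(tn, tn + fp),
        "pr": precision,
        "f1": _safe_div(2 * precision * sensitivity, precision + sensitivity),
        "mcc": _safe_div(tp * tn - fp * fn, mcc_denominator),
    }


# Illustrative counts only (not results from the paper).
example = binary_metrics(tp=40, tn=45, fp=5, fn=10)
```

For multi-class attributes such as face shape, the same function can be applied per class by treating that class as positive and all others as negative.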

Table 1 depicts the confusion matrix of the face shape classes, reporting an identification accuracy of 85.6% on 500 test images (100 images per class). Table 2 compares our face shape classification model with the existing face shape classification methods in terms of classification accuracy. It can be seen that our developed face shape classification system [44] outperforms the other methods in the literature.

Table 1. Confusion matrix representing the five classes of face shape.

Table 2. The performance of the proposed face shape classification methodology compared to the existing methods in the literature.

Initially, the images belonging to one subject were excluded from the data because the eye attribute identification system failed to detect this subject's eye landmarks owing to extreme eye closure and occlusion. Thus, the number of subjects considered for evaluation is 275. The gender detection model was evaluated in terms of accuracy, sensitivity, specificity, precision, and $F_1$ score, both on the 275 subjects and on the 748 images per camera. Table 3 presents the confusion matrix obtained from the gender identification system. The first part of Table 3 shows the gender prediction in terms of the number of subjects, whereas the second part shows the prediction in terms of images per camera. For comparison with existing methods, gender identification accuracy on the Adience dataset [48] has been reported by the authors of [48] and by Duan et al. [52], who used a convolutional neural network (CNN) and extreme learning machine (ELM) method. On the Pascal VOC dataset [53], De et al. [54] reported accuracy based on a deep learning approach.

Table 3. Confusion matrix representing the gender identification. The first part of the matrix represents the identification in terms of the number of subjects, whereas the second part shows the prediction in terms of images per camera. F: Female, M: Male.

Five webcams were used to photograph each subject. In the following experiments, we name the webcams with five letters (A–E): Cam A, Cam B, Cam C, Cam D, and Cam E. The symbols X and Y (used in Cam X vs. Cam Y) refer to any individual webcam of the five. Our developed system has provided favourable results on eye attribute identification. Table 4 reports the eye setting detection performance using the various evaluation metrics. Table A1 in Appendix A compares the eye setting prediction in each camera with the ground truth using confusion matrices, and Table A2 compares the prediction in each camera with that in every other camera, also using confusion matrices. Likewise, Table 5, Table A3 and Table A4 report the corresponding evaluation metrics, per-camera confusion matrices against the ground truth, and camera-versus-camera confusion matrices for the eye position classes. In the same way, Table 6, Table A5 and Table A6 present the prediction performance for the eye shape labels.

Table 4. Performance evaluation of the proposed system for eye setting attribute detection. Acc.: Accuracy, Sn.: Sensitivity, Sp.: Specificity, Pr.: Precision, F1: F1 score, MCC: Matthews Correlation Coefficient, GT: Ground Truth, Cam: Camera.

Table 5. Performance evaluation of the proposed system for eye position attribute detection. Acc.: Accuracy, Sn.: Sensitivity, Sp.: Specificity, Pr.: Precision, F1: F1 score, MCC: Matthews Correlation Coefficient, GT: Ground Truth.

Table 6. Performance evaluation of the proposed system for eye shape attribute detection. Acc.: Accuracy, Sn.: Sensitivity, Sp.: Specificity, Pr.: Precision, F1: F1 score, MCC: Matthews Correlation Coefficient, GT: Ground Truth.

From the reported results for the eye attributes, it can be seen that the model achieves its highest accuracy on eye shape detection, reporting an accuracy of 0.7535, sensitivity of 0.7168, specificity of 0.7861, precision of 0.7464, F1 score of 0.7311 and MCC of 0.5043, while the lowest accuracy was obtained for eye position identification, giving 0.6869, 0.7075, 0.8378, 0.6917, 0.6958, and 0.5370 for the same evaluation metrics, respectively. Likewise, the model yields 0.7021, 0.6845, 0.8479, 0.7207, 0.6926, and 0.5501 on eye setting detection in terms of the same metrics. Although the system produces many misclassified predictions of eye attributes, these can be regarded as tolerated prediction cases (the skew is small). By "tolerated prediction", we mean that most misclassified cases result from predicting the class as the average (middle) label, which is evident in all the reported confusion matrices. For example, in Table A3, most of the misclassified images in Cam E were predicted as straight rather than upturned, giving downturned: 149, straight: 64, upturned: 0. This indicates that the predicted measurements of the attributes are close to the ground-truth values. This can also be clearly seen in the reported confusion matrix of the eye setting shown in Table A1.
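The "tolerated prediction" argument can be checked numerically on the Cam A block of Table A1 (rows are predicted labels, columns are desired labels). The sketch below is an illustration only: the helper names are hypothetical, and how the per-attribute scores in Tables 4 to 6 were aggregated over cameras and classes is not stated in the text, so the figures computed here describe this single camera.

```python
LABELS = ["Close", "Average", "Wide"]

# Cam A block of Table A1: rows = predicted, columns = desired.
CAM_A = [
    [111, 72, 1],    # predicted Close
    [23, 162, 61],   # predicted Average
    [3, 58, 257],    # predicted Wide
]


def overall_accuracy(matrix):
    """Share of images on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def one_vs_rest_counts(matrix, k):
    """TP, TN, FP, FN when class k is positive and the rest negative."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fp = sum(matrix[k]) - tp                  # predicted k, desired other
    fn = sum(row[k] for row in matrix) - tp   # desired k, predicted other
    tn = total - tp - fp - fn
    return tp, tn, fp, fn


def adjacent_error_share(matrix):
    """Share of errors that land in a neighbouring (ordered) class."""
    n = len(matrix)
    errors = sum(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    adjacent = sum(
        matrix[i][j] for i in range(n) for j in range(n) if abs(i - j) == 1
    )
    return adjacent / errors


cam_a_accuracy = overall_accuracy(CAM_A)        # 530 correct out of 748
cam_a_adjacent = adjacent_error_share(CAM_A)    # 214 of 218 errors
```

For Cam A, only 4 of the 218 misclassified images confuse Close with Wide; all other errors fall into the neighbouring Average class, which is consistent with the small skew described above.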

To study the correlation of predictions among different webcams, all the evaluation metrics mentioned earlier, as well as confusion matrices, have been used to depict the variance and closeness of the predictions. For instance, Table 4 presents the correlation between cameras (such as Cam A vs. Cam B and so on) in terms of the evaluation metrics, whereas Table A2 reports the confusion matrices describing the correlation of predictions among different webcams, which shows good prediction agreement among images captured from different camera positions. The Matthews Correlation Coefficient (MCC) is a correlation measure that returns a value between −1 and +1, where −1 refers to total disagreement between two assessments and +1 represents perfect detection (agreement). The obtained MCC values between pairs of webcams ranged from 0.3250 to 0.9145, which indicates moderate to strong correlation of predictions among the various webcam positions.

Regarding existing methods for eye attribute detection, Borza et al. [55] and Aldibaja [56] presented eye shape estimation methods. However, they did not report the accuracy of the shape detection and instead reported eye region segmentation performance. To the best of our knowledge, our proposed method for eye attribute detection aimed at recommending suitable eyelashes is the first work carried out automatically based on computer vision approaches. Thus, we could not find further related works in the literature against which to compare the results obtained from our framework. To further investigate our model's performance, we validated the developed framework on external data consisting of sample images of celebrities, as shown in Table 7. This demonstrates that the system generalises to other data and performs well under varying conditions such as camera position, occlusion (wearing glasses), lighting, gender and age. On the other hand, the proposed framework has some limitations: (1) the developed system has not been evaluated on diverse ethnic and racial groups, and (2) the presented research work has not considered the analysis of eye characteristics such as mono-lid, hooded, crease, deep-set, and prominent. These limitations are due to a lack of labelled data captured from varied races and ethnicities, and can be overcome when larger annotated datasets become available. Moreover, the threshold values used for converting a scalar value into a nominal eye attribute label are selected empirically. Future studies could investigate automatic parameter tuning strategies (heuristic mechanisms) for determining optimum threshold values.

Table 7. Performance evaluation of the proposed system on external image data. Red cells refer to incorrect detection, while the last column shows the true label.

4. Conclusions

In this paper, a decision support system for appropriate eyelash and hairstyle recommendation was developed. The developed framework was evaluated on a dataset with diversity of lighting, age, and ethnicity. The developed system, based on integrating three models, has shown efficient performance in the identification of face shape and eye attributes. Face and eye attribute measurements are usually carried out manually by an expert before individual eyelash extension and hairstyle treatments are applied. Measuring face and eye characteristics costs time and effort. The proposed system could reduce the additional time and effort spent by the expert before each treatment. Furthermore, automatic face shape identification is a challenging task owing to the complexity of the face and the possible variations in rotation, size, illumination, hairstyle, age, and expression. Moreover, face occlusion from hats and glasses adds difficulty to the classification process. Our model, trained on diverse images for automatic face shape classification, would be viable in many other decision support systems, such as eyeglasses style recommendation systems. In future work, we aim to extend our developed GUI desktop software and build a cloud-based decision support system using a mobile application. The extended system targets the analysis of users' facial and eye attributes via cloud server programs based on images captured and uploaded from mobile phones to the server. Furthermore, the detected facial and eye attributes could be harnessed to develop a makeup recommendation system. Another important aspect of potential future work is studying the algorithm's computational complexity to achieve the best and fastest convergence in the developed framework.

Author Contributions

T.A. designed and performed the experiments. W.A.-N. conceived the research idea and ran the project. B.A.-B. contributed to the data analysis and the writing of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

Theiab Alzahrani was funded by the government of the Kingdom of Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study through a data collection process conducted by the authors who created this data and made it publicly available for scientific research purposes.

Data Availability Statement

The data presented in this study are openly available at http://www.milbo.org/muct, accessed on 26 April 2021.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Meaning |
|---|---|
| Shape-A | Eye shape-Almond |
| Shape-R | Eye shape-Round |
| Setting-AVG | Eye setting-Average |
| Setting-C | Eye setting-Close |
| Setting-W | Eye setting-Wide |
| Position-D | Eye position-Downturned |
| Position-S | Eye position-Straight |
| Position-U | Eye position-Upturned |
| Gender-F | Gender-Female |
| Gender-M | Gender-Male |
| F-Square | Face shape-Square |
| F-Rectangle | Face shape-Rectangle |
| F-Round | Face shape-Round |
| F-Heart | Face shape-Heart |
| F-Oval | Face shape-Oval |

Appendix A

Table A1.

Confusion matrices of eye setting attribute predictions for each webcam versus the ground truth. Rows are predicted classes; columns are desired (ground-truth) classes.

| Camera | Predicted | Desired: Close | Desired: Average | Desired: Wide |
|---|---|---|---|---|
| Cam A | Close | 111 | 72 | 1 |
| | Average | 23 | 162 | 61 |
| | Wide | 3 | 58 | 257 |
| Cam B | Close | 112 | 94 | 3 |
| | Average | 19 | 152 | 65 |
| | Wide | 6 | 46 | 251 |
| Cam C | Close | 122 | 95 | 7 |
| | Average | 13 | 168 | 58 |
| | Wide | 2 | 29 | 254 |
| Cam D | Close | 110 | 82 | 2 |
| | Average | 21 | 152 | 67 |
| | Wide | 6 | 58 | 250 |
| Cam E | Close | 108 | 75 | 3 |
| | Average | 25 | 171 | 70 |
| | Wide | 4 | 46 | 246 |
| All Cameras | Close | 563 | 418 | 16 |
| | Average | 101 | 805 | 321 |
| | Wide | 21 | 237 | 1258 |
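
As a reading aid for Table A1, the following minimal sketch derives overall accuracy and per-class precision and recall from the pooled "All Cameras" counts. It assumes, as the table header indicates, that rows are predicted classes and columns are desired classes. The function name `summarize` and the variable names are illustrative and are not part of the developed system.

```python
# Pooled "All Cameras" eye-setting counts copied from Table A1.
# Assumption: rows are predicted classes, columns are desired (ground-truth) classes.
LABELS = ["Close", "Average", "Wide"]
ALL_CAMERAS = [
    [563, 418, 16],
    [101, 805, 321],
    [21, 237, 1258],
]


def summarize(matrix, labels):
    """Return overall accuracy plus per-class precision and recall."""
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    per_class = {}
    for i, label in enumerate(labels):
        predicted_total = sum(matrix[i])                     # row sum
        desired_total = sum(matrix[r][i] for r in range(n))  # column sum
        per_class[label] = {
            "precision": matrix[i][i] / predicted_total,
            "recall": matrix[i][i] / desired_total,
        }
    return correct / total, per_class


accuracy, per_class = summarize(ALL_CAMERAS, LABELS)
print(f"accuracy: {accuracy:.3f}")
for label, scores in per_class.items():
    print(f"{label}: precision={scores['precision']:.3f}, recall={scores['recall']:.3f}")
```

The same function can be applied to any single-camera block of the table by substituting its three rows for `ALL_CAMERAS`.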

Table A2.

Confusion matrices of eye setting attribute predictions for each individual camera versus another camera. Rows are the predictions from Cam X; columns are the predictions from Cam Y.

| Cam X | Cam Y | Cam X prediction | Cam Y: Close | Cam Y: Average | Cam Y: Wide |
|---|---|---|---|---|---|
| Cam A | Cam B | Close | 154 | 29 | 1 |
| | | Average | 53 | 128 | 65 |
| | | Wide | 2 | 79 | 237 |
| Cam A | Cam C | Close | 165 | 19 | 0 |
| | | Average | 50 | 134 | 62 |
| | | Wide | 9 | 86 | 223 |
| Cam A | Cam D | Close | 147 | 37 | 0 |
| | | Average | 44 | 133 | 69 |
| | | Wide | 3 | 70 | 245 |
| Cam A | Cam E | Close | 145 | 38 | 1 |
| | | Average | 38 | 152 | 56 |
| | | Wide | 3 | 76 | 239 |
| Cam B | Cam C | Close | 169 | 40 | 0 |
| | | Average | 47 | 125 | 64 |
| | | Wide | 8 | 74 | 221 |
| Cam B | Cam D | Close | 156 | 49 | 4 |
| | | Average | 38 | 132 | 66 |
| | | Wide | 0 | 59 | 244 |
| Cam B | Cam E | Close | 153 | 54 | 2 |
| | | Average | 31 | 133 | 72 |
| | | Wide | 2 | 79 | 222 |
| Cam C | Cam D | Close | 160 | 57 | 7 |
| | | Average | 33 | 122 | 84 |
| | | Wide | 1 | 61 | 223 |
| Cam C | Cam E | Close | 161 | 54 | 9 |
| | | Average | 25 | 140 | 74 |
| | | Wide | 0 | 72 | 213 |
| Cam D | Cam E | Close | 148 | 44 | 2 |
| | | Average | 35 | 137 | 68 |
| | | Wide | 3 | 85 | 226 |
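
One way to condense a camera-versus-camera matrix from Table A2 into a single number is an agreement statistic. The sketch below computes the raw agreement rate and Cohen's kappa for the Cam A versus Cam B block. Cohen's kappa is not reported in the paper; it is used here only as a common illustrative choice, and the function name `cohen_kappa` is an assumption of this example.

```python
# Cam A (rows) versus Cam B (columns) eye-setting counts copied from Table A2.
# Class order in both directions: Close, Average, Wide.
CAM_A_VS_CAM_B = [
    [154, 29, 1],
    [53, 128, 65],
    [2, 79, 237],
]


def cohen_kappa(matrix):
    """Return (observed agreement, Cohen's kappa) for a square count matrix."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    # Fraction of images on which both cameras gave the same class.
    observed = sum(matrix[i][i] for i in range(n)) / total
    # Agreement expected by chance from the two marginal distributions.
    expected = sum(row_totals[i] * col_totals[i] for i in range(n)) / total**2
    return observed, (observed - expected) / (1 - expected)


observed, kappa = cohen_kappa(CAM_A_VS_CAM_B)
print(f"observed agreement: {observed:.3f}, Cohen's kappa: {kappa:.3f}")
```

Because kappa discounts the agreement expected by chance, it is lower than the raw agreement rate whenever the class marginals make chance agreement non-negligible.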

Table A3.

Confusion matrices of eye position attribute predictions for each webcam versus the ground truth. Rows are predicted classes; columns are desired (ground-truth) classes.

| Camera | Predicted | Desired: Downturned | Desired: Straight | Desired: Upturned |
|---|---|---|---|---|
| Cam A | Downturned | 161 | 78 | 1 |
| | Straight | 52 | 192 | 65 |
| | Upturned | 0 | 28 | 171 |
| Cam B | Downturned | 156 | 70 | 0 |
| | Straight | 56 | 198 | 84 |
| | Upturned | 1 | 30 | 153 |
| Cam C | Downturned | 155 | 85 | 2 |
| | Straight | 56 | 180 | 74 |
| | Upturned | 2 | 33 | 161 |
| Cam D | Downturned | 165 | 68 | 0 |
| | Straight | 48 | 195 | 81 |
| | Upturned | 0 | 35 | 156 |
| Cam E | Downturned | 149 | 75 | 0 |
| | Straight | 64 | 205 | 65 |
| | Upturned | 0 | 18 | 172 |
| All Cameras | Downturned | 786 | 376 | 3 |
| | Straight | 276 | 970 | 369 |
| | Upturned | 3 | 144 | 813 |
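
The "All Cameras" block of Table A3 is the element-wise sum of the five per-camera matrices, and every camera has the same column totals, which suggests that each camera photographed the same set of subjects. The short sketch below checks both properties against the counts in the table. The names `PER_CAMERA`, `add_matrices`, and `column_totals` are illustrative only.

```python
# Per-camera eye-position counts copied from Table A3.
# Rows: predicted; columns: desired. Class order: Downturned, Straight, Upturned.
PER_CAMERA = {
    "Cam A": [[161, 78, 1], [52, 192, 65], [0, 28, 171]],
    "Cam B": [[156, 70, 0], [56, 198, 84], [1, 30, 153]],
    "Cam C": [[155, 85, 2], [56, 180, 74], [2, 33, 161]],
    "Cam D": [[165, 68, 0], [48, 195, 81], [0, 35, 156]],
    "Cam E": [[149, 75, 0], [64, 205, 65], [0, 18, 172]],
}
ALL_CAMERAS = [[786, 376, 3], [276, 970, 369], [3, 144, 813]]


def add_matrices(matrices):
    """Element-wise sum of equally sized square count matrices."""
    matrices = list(matrices)
    n = len(matrices[0])
    return [[sum(m[r][c] for m in matrices) for c in range(n)] for r in range(n)]


pooled = add_matrices(PER_CAMERA.values())
# Column totals count how many images carry each desired label for a given camera.
column_totals = {
    name: [sum(column) for column in zip(*matrix)]
    for name, matrix in PER_CAMERA.items()
}

print("pooled matches All Cameras:", pooled == ALL_CAMERAS)
print("column totals per camera:", column_totals)
```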

Table A4.

Confusion matrices of eye position attribute predictions for each individual camera versus another camera. Rows are the predictions from Cam X; columns are the predictions from Cam Y.

| Cam X | Cam Y | Cam X prediction | Cam Y: Downturned | Cam Y: Straight | Cam Y: Upturned |
|---|---|---|---|---|---|
| Cam A | Cam B | Downturned | 152 | 87 | 1 |
| | | Straight | 74 | 179 | 56 |
| | | Upturned | 0 | 72 | 127 |
| Cam A | Cam C | Downturned | 158 | 80 | 2 |
| | | Straight | 83 | 169 | 57 |
| | | Upturned | 1 | 61 | 137 |
| Cam A | Cam D | Downturned | 152 | 87 | 1 |
| | | Straight | 81 | 160 | 68 |
| | | Upturned | 0 | 77 | 122 |
| Cam A | Cam E | Downturned | 152 | 88 | 0 |
| | | Straight | 72 | 183 | 54 |
| | | Upturned | 0 | 63 | 136 |
| Cam B | Cam C | Downturned | 148 | 76 | 2 |
| | | Straight | 92 | 164 | 82 |
| | | Upturned | 2 | 70 | 112 |
| Cam B | Cam D | Downturned | 145 | 81 | 0 |
| | | Straight | 87 | 169 | 82 |
| | | Upturned | 1 | 74 | 109 |
| Cam B | Cam E | Downturned | 146 | 80 | 0 |
| | | Straight | 77 | 187 | 74 |
| | | Upturned | 1 | 67 | 116 |
| Cam C | Cam D | Downturned | 145 | 96 | 1 |
| | | Straight | 86 | 152 | 72 |
| | | Upturned | 2 | 76 | 118 |
| Cam C | Cam E | Downturned | 146 | 94 | 2 |
| | | Straight | 77 | 171 | 62 |
| | | Upturned | 1 | 69 | 126 |
| Cam D | Cam E | Downturned | 135 | 98 | 0 |
| | | Straight | 89 | 161 | 74 |
| | | Upturned | 0 | 75 | 116 |

Table A5.

Confusion matrices of eye shape attribute predictions for each webcam versus the ground truth. Rows are predicted classes; columns are desired (ground-truth) classes.

| Camera | Predicted | Desired: Round | Desired: Almond |
|---|---|---|---|
| Cam A | Round | 256 | 104 |
| | Almond | 80 | 308 |
| Cam B | Round | 256 | 101 |
| | Almond | 80 | 311 |
| Cam C | Round | 248 | 103 |
| | Almond | 88 | 309 |
| Cam D | Round | 252 | 100 |
| | Almond | 84 | 312 |
| Cam E | Round | 242 | 88 |
| | Almond | 94 | 324 |
| All Cameras | Round | 1254 | 496 |
| | Almond | 426 | 1564 |

Table A6.

Confusion matrices of eye shape attribute predictions for each individual camera versus another camera. Rows are the predictions from Cam X; columns are the predictions from Cam Y.

| Cam X | Cam Y | Cam X prediction | Cam Y: Round | Cam Y: Almond |
|---|---|---|---|---|
| Cam A | Cam B | Round | 338 | 22 |
| | | Almond | 19 | 369 |
| Cam A | Cam C | Round | 335 | 25 |
| | | Almond | 16 | 372 |
| Cam A | Cam D | Round | 340 | 20 |
| | | Almond | 12 | 376 |
| Cam A | Cam E | Round | 320 | 40 |
| | | Almond | 10 | 378 |
| Cam B | Cam C | Round | 327 | 30 |
| | | Almond | 24 | 367 |
| Cam B | Cam D | Round | 334 | 23 |
| | | Almond | 18 | 373 |
| Cam B | Cam E | Round | 317 | 40 |
| | | Almond | 13 | 378 |
| Cam C | Cam D | Round | 328 | 23 |
| | | Almond | 24 | 373 |
| Cam C | Cam E | Round | 313 | 38 |
| | | Almond | 17 | 380 |
| Cam D | Cam E | Round | 320 | 32 |
| | | Almond | 10 | 386 |

References

1. Shahani-Denning, C. Physical attractiveness bias in hiring: What is beautiful is good. Hofstra Horiz 2013, 14–17. Available online: https://www.hofstra.edu/pdf/orsp_shahani-denning_spring03.pdf (accessed on 26 April 2021).
2. Kertechian, S. The impact of beauty during job applications. J. Hum. Resour. Manag. Res. 2016, 2016, 598520.
3. Jain, A.K.; Li, S.Z. Handbook of Face Recognition; Springer: New York, NY, USA, 2011; Volume 1.
4. Nixon, M.S. Feature Extraction & Image Processing for Computer Vision; Academic Press: Cambridge, MA, USA, 2012.
5. Garcia, C.; Ostermann, J.; Cootes, T. Facial image processing. EURASIP J. Image Video Process. 2008, 207, 1–33.
6. Zivkovic, M.; Bacanin, N.; Venkatachalam, K.; Nayyar, A.; Djordjevic, A.; Strumberger, I.; Al-Turjman, F. COVID-19 cases prediction by using hybrid machine learning and beetle antennae search approach. Sustain. Cities Soc. 2021, 66, 102669.
7. Vandenhende, S.; Georgoulis, S.; Proesmans, M.; Dai, D.; Van Gool, L. Revisiting Multi-Task Learning in the Deep Learning Era. arXiv 2020, arXiv:2004.13379.
8. De Rosa, G.H.; Papa, J.P.; Yang, X.S. Handling dropout probability estimation in convolution neural networks using meta-heuristics. Soft Comput. 2018, 22, 6147–6156.
9. Al-Bander, B.; Alzahrani, T.; Alzahrani, S.; Williams, B.M.; Zheng, Y. Improving fetal head contour detection by object localisation with deep learning. In Annual Conference on Medical Image Understanding and Analysis; Springer: Cham, Switzerland, 2019; pp. 142–150.
10. Wu, Y.; Ji, Q. Facial landmark detection: A literature survey. Int. J. Comput. Vis. 2019, 127, 115–142.
11. Koestinger, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Annotated Facial Landmarks in the Wild: A Large-scale, Real-world Database for Facial Landmark Localization. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2144–2151.
12. Priya, M. Artificial Intelligence and the New Age Beauty and Cosmetics Industry. Available online: https://www.prescouter.com/2019/06/artificial-intelligence-and-the-new-age-beauty-and-cosmetics-industry/ (accessed on 1 April 2020).
13. Edwards, C. AI in the Beauty Industry: The Tech Making the Future Beautiful. Available online: https://www.verdict.co.uk/ai-in-the-beauty-industry/ (accessed on 1 April 2020).
14. Panda, S.K.; Jana, P.K. Load balanced task scheduling for cloud computing: A probabilistic approach. Knowl. Inf. Syst. 2019, 61, 1607–1631.
15. Zheng, X.; Guo, Y.; Huang, H.; Li, Y.; He, R. A survey of deep facial attribute analysis. Int. J. Comput. Vis. 2020, 1–33.
16. Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738.
17. Rudd, E.M.; Günther, M.; Boult, T.E. Moon: A mixed objective optimization network for the recognition of facial attributes. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 19–35.
18. Hand, E.M.; Chellappa, R. Attributes for improved attributes: A multi-task network utilizing implicit and explicit relationships for facial attribute classification. Proc. Thirty-First AAAI Conf. Artif. Intell. 2017, 31, 4068–4074.
19. Cao, J.; Li, Y.; Zhang, Z. Partially shared multi-task convolutional neural network with local constraint for face attribute learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4290–4299.
20. Kalayeh, M.M.; Gong, B.; Shah, M. Improving facial attribute prediction using semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6942–6950.
21. Mahbub, U.; Sarkar, S.; Chellappa, R. Segment-based methods for facial attribute detection from partial faces. IEEE Trans. Affect. Comput. 2018, 11, 601–613.
22. Sunhem, W.; Pasupa, K. An approach to face shape classification for hairstyle recommendation. In Proceedings of the 2016 Eighth International Conference on Advanced Computational Intelligence (ICACI), Chiang Mai, Thailand, 14–16 February 2016; pp. 390–394.
23. Sarakon, P.; Charoenpong, T.; Charoensiriwath, S. Face shape classification from 3D human data by using SVM. In Proceedings of the 7th 2014 Biomedical Engineering International Conference, Fukuoka, Japan, 26–28 November 2014; pp. 1–5.
24. Zafar, A.; Popa, T. Face and Eye-ware Classification using Geometric Features for a Data-driven Eye-ware Recommendation System. In Graphics Interface Conference; ACM Library: New York, NY, USA, 2016; pp. 183–188.
25. Rahmat, R.F.; Syahputra, M.D.; Andayani, U.; Lini, T.Z. Probabilistic neural network and invariant moments for men face shape classification. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2018; Volume 420, p. 012095.
26. Bansode, N.; Sinha, P. Face Shape Classification Based on Region Similarity, Correlation and Fractal Dimensions. Int. J. Comput. Sci. Issues (IJCSI) 2016, 13, 24.
27. Pasupa, K.; Sunhem, W.; Loo, C.K. A hybrid approach to building face shape classifier for hairstyle recommender system. Expert Syst. Appl. 2019, 120, 14–32.
28. Tio, E.D. Face Shape Classification Using Inception V3. 2017. Available online: https://github.com/adonistio/inception-face-shape-classifier (accessed on 1 June 2019).
29. Kang, W.C.; Fang, C.; Wang, Z.; McAuley, J. Visually-aware fashion recommendation and design with generative image models. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; pp. 207–216.
30. Lodkaew, T.; Supsohmboon, W.; Pasupa, K.; Loo, C.K. Fashion Finder: A System for Locating Online Stores on Instagram from Product Images. In Proceedings of the 2018 10th International Conference on Information Technology and Electrical Engineering (ICITEE), Bali, Indonesia, 24–26 July 2018; pp. 500–505.
31. Liang, Z.; Wang, F. Research on the Personalized Recommendation Algorithm for Hairdressers. Open J. Model. Simul. 2016, 4, 102–108.
32. Wang, Y.F.; Chuang, Y.L.; Hsu, M.H.; Keh, H.C. A personalized recommender system for the cosmetic business. Expert Syst. Appl. 2004, 26, 427–434.
33. Scherbaum, K.; Ritschel, T.; Hullin, M.; Thormählen, T.; Blanz, V.; Seidel, H.P. Computer-suggested facial makeup. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2011; Volume 30, pp. 485–492.
34. Chung, K.Y. Effect of facial makeup style recommendation on visual sensibility. Multimed. Tools Appl. 2014, 71, 843–853.
35. Alashkar, T.; Jiang, S.; Fu, Y. Rule-based facial makeup recommendation system. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 325–330.
36. Zhao, Z.; Zhou, L.; Zhang, T. Intelligent recommendation system for eyeglass design. In International Conference on Applied Human Factors and Ergonomics; Springer: Cham, Switzerland, 2019; pp. 402–411.
37. Milborrow, S.; Morkel, J.; Nicolls, F. The MUCT landmarked face database. Pattern Recognit. Assoc. S. Afr. 2010, 201, 1–6. Available online: http://www.milbo.org/muct (accessed on 26 April 2021).
38. Presleehairstyle. Wedding Veils: Denver Wedding Hairstylist. Available online: https://www.presleehairstyle.com/blog/2017/5/31/wedding-veils-denver-wedding-hairstylist-braiding-specialist-and-updo-artist (accessed on 1 February 2020).
39. Aumedo. Make Up Eyes: Make-Up Tips for Every Eye Shape. Available online: https://www.aumedo.de/make-up-tipps-fuer-jede-augenform/ (accessed on 1 February 2020).
40. Moreno, A. How to Pick the Best Lashes for Your Eye Shape. Available online: https://www.iconalashes.com/blogs/news/how-to-pick-the-best-lashes-for-your-eye-shape (accessed on 1 May 2020).
41. Derrick, J. Is Your Face Round, Square, Long, Heart or Oval Shaped? Available online: https://www.byrdie.com/is-your-face-round-square-long-heart-or-oval-shaped-345761 (accessed on 1 June 2019).
42. Shunatona, B. A Guide to Determining Your Face Shape (If That’s Something You Care about). Available online: https://www.cosmopolitan.com/style-beauty/beauty/a22064408/face-shape-guide/ (accessed on 1 February 2020).
43. WikiHow. How to Determine Eye Shape. Available online: https://www.wikihow.com/Determine-Eye-Shape#aiinfo (accessed on 1 February 2020).
44. Alzahrani, T.; Al-Nuaimy, W.; Al-Bander, B. Hybrid feature learning and engineering based approach for face shape classification. In Proceedings of the 2019 International Conference on Intelligent Systems and Advanced Computing Sciences (ISACS), Taza, Morocco, 26–27 December 2019; pp. 1–4.
45. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the International Conference on Computer Vision & Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.
46. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.
47. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.
48. Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 34–42.
49. Creasey, C. Eye Shapes and Eyelash Extensions—What Works Best for You? Available online: https://www.sydneyeyelashextensions.com/eye-shapes-and-eyelash-extensions-what-works-best-for-you/ (accessed on 1 March 2020).
50. Brewer, T. The Most Flattering Haircuts for Your Face Shape. Available online: https://www.thetrendspotter.net/haircuts-for-face-shape/ (accessed on 1 March 2020).
51. Mannah, J. The Best Men’s Hairstyles for Your Face Shape. Available online: https://www.thetrendspotter.net/find-the-perfect-hairstyle-haircut-for-your-face-shape/ (accessed on 1 March 2020).
52. Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN–ELM for age and gender classification. Neurocomputing 2018, 275, 448–461.
53. Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.
54. de Araujo Zeni, L.F.; Jung, C.R. Real-time gender detection in the wild using deep neural networks. In Proceedings of the 2018 31st Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 118–125.
55. Borza, D.; Darabant, A.S.; Danescu, R. Real-time detection and measurement of eye features from color images. Sensors 2016, 16, 1105.
56. Aldibaja, M.A.J. Eye Shape Detection Methods Based on Eye Structure Modeling and Texture Analysis for Interface Systems. Ph.D. Thesis, Toyohashi University of Technology, Toyohashi, Japan, 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).