Abstract

With the rapid growth of simulation software packages, developing practical tools for simulation-based optimization has attracted a lot of interest over the last decades. In this paper, a modified Estimation of Distribution Algorithm (EDA) is combined with variable-sample techniques to deal with simulation-based optimization problems. Moreover, a new variable-sample technique is introduced to support the search process when the sample sizes are small, especially at the beginning of the search. Several numerical experiments demonstrate the efficiency of the proposed method.

1. Introduction

Realistic systems often lack a sufficient amount of real data for evaluating their output responses. This is a major obstacle to the design of most optimization techniques, yet many processes require optimization at some stage, e.g., engineering design, medical treatment, supply chain management, finance, and manufacturing [1,2,3,4,5,6,7,8,9]. Therefore, alternatives to real data are investigated. For instance, data augmentation expands small data sets by applying transformations to the given real data [10,11]. Nonetheless, the nature and limited size of some real data sets do not guarantee sufficient diversity in the augmented data. Simulated data are therefore a good choice in these cases, and in others as well. Recently, the combination of simulation and optimization has drawn much attention. The term “simulation-based optimization” is commonly used instead of “stochastic optimization”, since almost all recent simulation software packages contain an optimization procedure for creating applicable simulation methods [12,13].

Simulation-based optimization has been used to optimize real-world problems that involve uncertainty in the form of randomness. These problems may involve systems and models that are highly nonlinear and/or large-scale. Such problems can be classified as stochastic programming problems, in which a probability distribution is used to model the underlying uncertainty. A problem of this kind is defined mathematically as follows [14]:

$$\min_{x \in X} \; \mathbb{E}\left[f(x,\omega)\right] = \int f(x,\omega)\, p(\omega)\, d\omega, \qquad (1)$$

where $f$ is a real-valued function defined on the search space $X \subseteq \mathbb{R}^n$ with objective variables $x \in X$, and $\omega$ is a random variable whose probability density function is $p$. The choice of the optimal simulation parameters is critical to performance, but selecting these parameters optimally is still a challenging task. Because the simulation process is complicated, the objective function is subject to different noise levels and is computationally expensive to evaluate. The complexity of the simulation is characterized by:

- the calculations and time cost of the objective function,

- the difficulty of computing the exact gradient of the objective function, as well as the high cost and time needed to compute numerical approximations of it, and

- the noise included in the objective function.

To deal with these issues, global search methods should be used instead of classical nonlinear programming methods, which can fail on problems with multiple local optima.

Recently, great interest has been given to using artificial intelligence tools in optimization. Metaheuristics play a major role both in real-life simulation and in invoking intelligent learned procedures [15,16,17,18,19,20,21,22,23,24]. Metaheuristics exhibit good robustness over a wide range of problems. However, they suffer from slow convergence on complex problems, which leads to high computational cost. This slow convergence may stem from the random way in which these methods explore the global search space. Moreover, metaheuristics cannot exploit local information to infer promising search directions. In contrast, local search methods are characterized by fast convergence: they exploit local information to estimate local mathematical or logical moves that determine promising search directions. However, a local search method can easily get stuck at local minima, i.e., it is highly likely to miss global solutions. Therefore, hybrid methods that combine metaheuristics with local search are highly needed to obtain practical, efficient solvers [25,26,27]. Estimation of Distribution Algorithms (EDAs) are promising metaheuristics in which the exploration of potential solutions in the search space depends on building and sampling explicit probabilistic models of promising candidate solutions [28,29,30,31].

Several optimization and search techniques have been developed to deal with the considered problem, and the variable-sample method is one of them [32]. Its main idea is to convert a stochastic optimization problem into a deterministic one. This is achieved by estimating the objective function, which contains random variables, at a given solution by sampling it over several trials. This type of Monte Carlo simulation yields approximate values of the objective function. The accuracy of such a simulation depends on the sample size; therefore, a sufficiently large sample size is recommended. The variable-sample method controls how the sample size varies in order to maintain the convergence of search methods to optimal solutions under certain conditions. A random search algorithm called Sampling Pure Random Search (SPRS), which invokes the variable-sample method, was reported in [32]. In SPRS, the objective function with random variables is estimated at a given input by the average of variable-sized samples at that input. Under certain conditions, the SPRS algorithm can converge to local optimal solutions.
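To make the sample-size argument concrete, the Python sketch below estimates the expected objective value at a fixed point by Monte Carlo sampling. The function `noisy_objective` (a sphere function plus unit Gaussian noise) is a hypothetical example, not one of the paper's test problems. The spread of the sample average shrinks roughly like $1/\sqrt{N}$, the standard Monte Carlo rate, which is why larger samples give more accurate estimates at a higher cost.

```python
import random
import statistics


def noisy_objective(x, rng):
    """Hypothetical f(x, omega): sphere function plus omega ~ N(0, 1).

    Its expected value at x is sum(x_i ** 2)."""
    return sum(xi * xi for xi in x) + rng.gauss(0.0, 1.0)


def sample_average(x, n, rng):
    """Monte Carlo estimate of the expected objective from n trials."""
    return sum(noisy_objective(x, rng) for _ in range(n)) / n


def estimate_spread(x, n, repeats=200, seed=0):
    """Standard deviation of the n-sample average across many repetitions."""
    rng = random.Random(seed)
    estimates = [sample_average(x, n, rng) for _ in range(repeats)]
    return statistics.stdev(estimates)


if __name__ == "__main__":
    x = [1.0, 2.0]  # the true expected value here is 5.0
    for n in (1, 10, 100):
        print(n, round(estimate_spread(x, n), 3))  # shrinks roughly like 1/sqrt(n)
```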

More accurate solutions can be obtained for stochastic programming problems in many cases, e.g., [15,18,33]. In this paper, we present a novel metaheuristic method based on the Estimation of Distribution Algorithm (EDA). The proposed method is combined with the SPRS method [32] and with a modified sampling search method called Min-Max Sampling Search (MMSS) in order to deal with the stochastic programming problem. The main modification in MMSS is to use a function transformation instead of the average of the function values, especially when the sample size is not sufficiently large. The proposed EDA-based search method starts searching the space with relatively small sample sizes in order to achieve faster exploration, and then increases the sample sizes as the search progresses. The MMSS method restricts the noisy values of the function estimated from small samples at the early stages of the search. Several experiments, together with their technical discussion, are presented to test the performance of the proposed methods.

The rest of the paper is organized as follows. The main structures of the estimation of distribution algorithms and variable-sample methods are highlighted in Section 2 and Section 3, respectively. In Section 4, we highlight the main components of the proposed EDA-based methods. In Section 5, we investigate parameter tuning of the proposed methods and explain the experimentation work conducted for evaluation purposes. Detailed results and discussions are given in Section 6. Finally, the paper is concluded in Section 7.

2. Estimation of Distribution Algorithms

EDAs have been widely studied in the context of global optimization [28,29,30,34]. The use of probabilistic models in optimization offers some significant advantages compared with other types of metaheuristics [35,36,37,38]. In an EDA, the initial population is first generated randomly to cover the search space. Then, the best individuals are selected and used to build the probabilistic model; selecting the best individuals pushes the search toward promising regions. New candidate solutions sampled from the model replace the old solutions partly or as a whole, with the highest-quality individual as the target, where the quality of a solution is measured by its fitness value. Generally, EDAs repeat a process of evaluation, selection, model building, model sampling, and replacement.

The main objective of an EDA is to improve the estimate of the probability distribution over the solution space by exploiting samples generated from the current estimated distribution. All generated samples are subjected to a selection method that picks certain points to be used for estimating the probability distribution. Algorithm 1 explains the main steps of standard EDAs.

Algorithm 1. Pseudo code for the standard EDAs.
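The generic cycle described above (evaluation, selection, model building, model sampling, and replacement) can be sketched in a few lines of Python. This is a minimal illustration, not the exact procedure of Algorithm 1: it assumes a product of independent normal marginals as the probabilistic model, elitist replacement in which the selected individuals survive, clipping to a box-shaped search space, and illustrative parameter values.

```python
import random


def sphere(x):
    """Deterministic test objective to be minimized."""
    return sum(v * v for v in x)


def gaussian_eda(objective, dim, bounds, pop_size=50, n_select=15,
                 generations=60, seed=0):
    """Minimal continuous EDA with a univariate Gaussian model (illustrative)."""
    rng = random.Random(seed)
    low, high = bounds
    # Initial population drawn uniformly in the box [low, high]^dim.
    pop = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        # Evaluation and truncation selection of the n_select best individuals.
        selected = sorted(pop, key=objective)[:n_select]
        # Model building: per-variable sample mean and standard deviation.
        means = [sum(ind[i] for ind in selected) / n_select for i in range(dim)]
        stds = [(sum((ind[i] - means[i]) ** 2 for ind in selected) / n_select) ** 0.5
                for i in range(dim)]
        # Model sampling: draw offspring and clip them to the search box.
        offspring = [[min(high, max(low, rng.gauss(means[i], stds[i])))
                      for i in range(dim)]
                     for _ in range(pop_size - n_select)]
        # Replacement: selected individuals survive (elitism), the rest are new.
        pop = selected + offspring
    best = min(pop, key=objective)
    return best, objective(best)


if __name__ == "__main__":
    print(gaussian_eda(sphere, dim=3, bounds=(-5.0, 5.0)))
```

Keeping the selected individuals in the next population guarantees that the best solution found so far is never lost, at the price of slightly faster loss of diversity.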

Many studies have been conducted on continuous EDAs, which generally fall into two major branches: the widely used branch based on the Gaussian distribution model [28,39], and another based on the histogram model [40]. The Univariate Marginal Distribution Algorithm for continuous domains (UMDAc), introduced by Larrañaga et al. [41,42], is a simple real-valued EDA that uses a univariate factorization of the normal density in the structure of its probabilistic model. In UMDAc, statistical tests are carried out in each generation and for each variable to determine the density function that best fits that variable. The joint density function is then factorized as:

$$f_l(x;\theta^l) = \prod_{i=1}^{n} f_l(x_i;\theta_i^l), \qquad (2)$$

where $\theta^l$ is a set of local parameters. The learning process for this joint density function is shown in Algorithm 2.

Algorithm 2. Learning the joint density function.
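As a minimal sketch of the factorized model, assuming that every marginal is normal (UMDAc itself may select other densities through its statistical tests), the local parameters of each variable can be estimated by maximum likelihood from the selected individuals, and the joint density evaluated as the product of the univariate marginals:

```python
import math


def fit_univariate_normals(selected):
    """Maximum-likelihood (mean, std) for each variable of the selected set."""
    n, dim = len(selected), len(selected[0])
    params = []
    for i in range(dim):
        mu = sum(ind[i] for ind in selected) / n
        var = sum((ind[i] - mu) ** 2 for ind in selected) / n
        params.append((mu, math.sqrt(var)))
    return params


def normal_pdf(x, mu, sigma):
    """Univariate normal density."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def joint_density(x, params):
    """Factorized joint density: the product of the univariate marginals."""
    return math.prod(normal_pdf(xi, mu, s) for xi, (mu, s) in zip(x, params))


if __name__ == "__main__":
    params = fit_univariate_normals([[0.0, 1.0], [2.0, 3.0]])
    print(params, joint_density([1.0, 2.0], params))
```

Because the variables are treated as independent, learning costs only one mean and one standard deviation per variable, which is what makes UMDAc cheap compared with models that capture dependencies.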

3. Variable Sampling Path

The variable-sample (VS) method [32] is a class of Monte Carlo methods used to deal with unconstrained stochastic optimization problems. Sampling Pure Random Search (SPRS) is the random search algorithm introduced by Homem-De-Mello [32] that invokes the VS method. In each main iteration of the SPRS algorithm, the objective function is replaced by a sample average approximation with a variable sample-size scheme. Specifically, an estimate of the objective function can be computed as:

$$\hat{f}(x) = \frac{1}{N} \sum_{i=1}^{N} f(x, \omega_i), \qquad (3)$$

where $\omega_1, \dots, \omega_N$ are sample values of the random variable $\omega$ of the objective function. Under certain conditions, SPRS can converge to a local optimal solution [32]. Algorithm 3 gives the formal steps of the SPRS method.

Algorithm 3. Sampling Pure Random Search (SPRS) Algorithm.
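A possible reading of the SPRS loop is sketched below: a uniformly sampled candidate is compared with the incumbent using sample averages whose size grows with the iteration counter. The linear schedule `min(n_max, n0 + k)`, the uniform candidate generator, the box-shaped search space, and all parameter values are assumptions made for illustration, not the settings of [32].

```python
import random


def sample_average(f, x, n, rng):
    """Sample average estimate of E[f(x, omega)] from n noisy evaluations."""
    return sum(f(x, rng) for _ in range(n)) / n


def sprs(f, dim, bounds, n_iters=300, n0=2, n_max=100, seed=0):
    """Sketch of Sampling Pure Random Search with a growing sample size.

    f(x, rng) returns one noisy simulation of the objective at x.
    At iteration k, a uniform random candidate y is compared with the
    incumbent x, both estimated with N_k = min(n_max, n0 + k) samples
    (a hypothetical schedule); y replaces x if its estimate is lower.
    """
    rng = random.Random(seed)
    low, high = bounds
    x = [rng.uniform(low, high) for _ in range(dim)]
    for k in range(n_iters):
        n_k = min(n_max, n0 + k)
        y = [rng.uniform(low, high) for _ in range(dim)]
        if sample_average(f, y, n_k, rng) < sample_average(f, x, n_k, rng):
            x = y
    return x


if __name__ == "__main__":
    def noisy_sphere(x, rng):
        # Hypothetical test problem: sphere plus N(0, 0.5^2) noise.
        return sum(v * v for v in x) + rng.gauss(0.0, 0.5)

    print(sprs(noisy_sphere, dim=2, bounds=(-5.0, 5.0)))
```

Early comparisons are cheap but noisy, so a worse candidate may occasionally be accepted; as $N_k$ grows, such mistakes become rare.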

4. Estimation of Distribution Algorithms for Simulation-Based Optimization

We propose an EDA-based method to deal with simulation-based global optimization problems. The proposed method combines the EDA with a modified version of the variable-sample method of Algorithm 3. The modification avoids the low-quality estimates caused by small sample sizes and relies on a function transformation, as shown in Algorithm 4.

4.1. Function Transformation

The search process is affected by the sample sizes: large samples are time consuming, while small ones yield low-quality approximations of the function values. A good search strategy is to start with relatively small samples and then increase the sample size as the search proceeds. To avoid degrading the search when the sample sizes are small in its early stages, we propose a new function transformation that re-estimates the fitness function whenever the sample size $N$ falls below a predefined threshold. Specifically, the $N$ samples at a solution $x$ are evaluated and sorted. Then, the highest and the lowest objective function values among these samples are averaged, giving $F_{\max}$ and $F_{\min}$, respectively. A new transformed estimate of the objective function at $x$ is then computed from these two values:

$$\tilde{f}(x) = T\left(F_{\max}, F_{\min}\right), \qquad (4)$$

with a suitable transformation function $T$. The transformation was chosen empirically by comparing the performance of several candidate functions, and the following function gave the best performance:

where $\alpha$ is a weight parameter that depends on the sample size $N$ at the current iteration. Algorithm 4 uses this function transformation to modify the sampling search method of Algorithm 3.

Algorithm 4. Min-Max Sampling Search (MMSS) Algorithm.
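The MMSS evaluation rule can be sketched as follows. This is a hypothetical illustration only: the paper's transformation function (Equation (5)) is not reproduced here. As a placeholder, the sketch takes $T$ to be the convex combination $\alpha F_{\min} + (1-\alpha) F_{\max}$ with a constant $\alpha$ (in the paper, $\alpha$ depends on $N$). It also interprets $F_{\max}$ and $F_{\min}$ as the averages of the `k` largest and `k` smallest sampled values. The names `n_threshold`, `k`, and `alpha`, and their default values, are assumptions.

```python
import statistics


def mmss_estimate(values, n_threshold=10, k=2, alpha=0.5):
    """Sketch of a Min-Max Sampling Search (MMSS) style estimate.

    values: the N >= 1 sampled objective values at one solution x.
    For N >= n_threshold the plain sample average (as in SPRS) is returned.
    Otherwise F_max and F_min (averages of the k largest and k smallest
    values) are combined by a HYPOTHETICAL transformation
    alpha * F_min + (1 - alpha) * F_max, standing in for Equation (5).
    """
    n = len(values)
    if n >= n_threshold:
        # Large sample: plain sample average, as in SPRS.
        return statistics.fmean(values)
    ordered = sorted(values)
    k = max(1, min(k, n // 2))  # never use more than half of the sample per side
    f_min = statistics.fmean(ordered[:k])   # average of the k lowest values
    f_max = statistics.fmean(ordered[-k:])  # average of the k highest values
    return alpha * f_min + (1.0 - alpha) * f_max


if __name__ == "__main__":
    print(mmss_estimate([1.0, 2.0, 3.0, 10.0]))  # small sample: transformed estimate
    print(mmss_estimate([float(v) for v in range(1, 11)]))  # large sample: mean
```

Only the way a single solution is scored changes, so the same search loop can switch between the transformed estimate and the sample average as $N$ crosses the threshold.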

4.2. The Proposed EDA-Based Method

The proposed EDA-based method combines estimation of distribution algorithms with the UMDAc technique [42], in which the stochastic function values are estimated using sampling techniques. In the simulation-based optimization problem, the objective (fitness) function contains a random variable. Therefore, function values are approximated using variable-sample techniques, in two different ways: the SPRS and MMSS techniques.

Algorithm 5 illustrates the main steps of the proposed EDA-based method. The initialization process creates M diverse individuals within the search area using a diversification technique similar to those used in [24,43,44]. In addition to this exploration process, a local search is applied to each individual to improve the characteristics of the initial population. The EDA uses the standard selection scheme, in which the L individuals with the best fitness function values are chosen from generation $g$ to survive to the next generation $g+1$. To improve the performance of the search process, an intensification step is applied to the best solution in each generation.

Algorithm 5. The proposed EDA-based Algorithm.
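Three building blocks of Algorithm 5 are sketched below under explicit assumptions. A Latin-hypercube-style initializer (`diverse_init`) stands in for the diversification technique of [24,43,44], `select_best` performs the standard truncation selection of the L best individuals, and a compass (coordinate) search (`intensify`) stands in for the local search and intensification steps. None of these stand-ins is claimed to be the authors' exact choice, and `intensify` does not enforce the search bounds.

```python
import random


def diverse_init(m, dim, bounds, rng):
    """Latin-hypercube-style initialization (a stand-in for the paper's
    diversification technique): each variable's range is split into m
    equal strata, and every stratum is used exactly once per variable."""
    low, high = bounds
    width = (high - low) / m
    columns = []
    for _ in range(dim):
        strata = list(range(m))
        rng.shuffle(strata)
        columns.append([low + (s + rng.random()) * width for s in strata])
    return [[columns[d][j] for d in range(dim)] for j in range(m)]


def select_best(pop, fitness, n_best):
    """Standard EDA truncation selection: keep the n_best fittest individuals."""
    return sorted(pop, key=fitness)[:n_best]


def intensify(x, fitness, step=0.5, shrink=0.5, min_step=1e-6):
    """Compass search around x (a hypothetical intensification step):
    try +/- step along each coordinate and shrink the step on failure."""
    x, fx = list(x), fitness(x)
    while step > min_step:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                y = list(x)
                y[i] += delta
                fy = fitness(y)
                if fy < fx:
                    x, fx, improved = y, fy, True
        if not improved:
            step *= shrink
    return x, fx


if __name__ == "__main__":
    rng = random.Random(0)
    sphere = lambda v: sum(t * t for t in v)
    pop = diverse_init(10, 2, (-5.0, 5.0), rng)
    elite = select_best(pop, sphere, 3)
    print(intensify(elite[0], sphere))
```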

The proposed EDA-based method can also be used for deterministic objective functions, depending on how the fitness function is evaluated in Steps 1:b and 2:c of Algorithm 5. This yields three versions of the proposed EDA-based method:

- EDA-D: If the objective function has no noise, and its values are directly calculated from the function form.

- EDA-SPRS: If the objective function contains random variables and its values are estimated using the SPRS technique.

- EDA-MMSS: If the objective function contains random variables and its values are estimated using the MMSS technique.
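Since the three versions differ only in how fitness is evaluated, one way to organize them is a factory that returns the evaluator for each mode, as sketched below. The simulator (the deterministic value plus Gaussian noise), the fixed sample size `n`, and the MMSS placeholder (the midpoint of the smallest and largest sampled values, which is not the paper's Equation (5)) are all assumptions made for illustration.

```python
import random
import statistics


def make_evaluator(mode, f_det, noise_sd=1.0, n=5, seed=0):
    """Return a fitness evaluator for one of the three EDA versions.

    'D'    : EDA-D, the deterministic value f_det(x).
    'SPRS' : EDA-SPRS, the average of n noisy simulations.
    'MMSS' : EDA-MMSS, a min-max style estimate from n noisy simulations
             (placeholder transformation, not the paper's Equation (5)).
    """
    rng = random.Random(seed)

    def simulate(x):
        # Hypothetical simulator: deterministic value plus Gaussian noise.
        return f_det(x) + rng.gauss(0.0, noise_sd)

    if mode == "D":
        return f_det
    if mode == "SPRS":
        return lambda x: statistics.fmean(simulate(x) for _ in range(n))
    if mode == "MMSS":
        def mmss(x):
            values = [simulate(x) for _ in range(n)]
            # Placeholder transformation: midpoint of the extreme values.
            return 0.5 * (min(values) + max(values))
        return mmss
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    sphere = lambda v: sum(t * t for t in v)
    for mode in ("D", "SPRS", "MMSS"):
        print(mode, make_evaluator(mode, sphere)([1.0, 2.0]))
```

The rest of the EDA loop is untouched by the choice of mode, which mirrors how the versions share all steps except function evaluation.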

The main difference between EDA-SPRS and EDA-MMSS lies in the function evaluation step. The sample path method SPRS relies on a sample average approximation to evaluate the function. However, this estimate is unreliable when the sample size is small, which degrades the search process and the quality of the final solution. EDA-MMSS applies the same main steps as EDA-SPRS, explained in the previous subsection, except for the function evaluation method: for small sample sizes, the function values are evaluated with the modified sample path method of Equation (5), while the SPRS estimate is used once the sample size is sufficiently large. In EDA-MMSS, Algorithm 5 uses Algorithm 4 for function evaluation in order to improve on the performance of SPRS with small sample sizes.

5. Numerical Experiments

Experiments are conducted to demonstrate the efficiency of the proposed method and its versions, using various benchmark data sets. In this section, we explain the details of the experimental procedures.

5.1. Test Functions

The proposed algorithm is tested on several well-known benchmark functions [45,46,47,48] in order to assess its efficiency without the effect of noise. A set of classical test functions, listed in Table 1, is used for the initial tests of parameter setting and for the performance analysis of the proposed global optimization method. These test functions are diverse enough to cover many kinds of difficulties that usually arise in global optimization problems. For more rigorous comparisons, another set of benchmark functions ($h_1$–$h_{25}$) with higher degrees of difficulty is used. Specifically, the CEC 2005 test set [47,48] is also invoked in the comparisons; see Appendix A.

Table 1.

Classical Global Optimization (CGO) test functions.

For simulation-based optimization, two different benchmark test sets are used. The first one (SBO Test Set A) consists of four well-known classical test functions, namely the Goldstein and Price, Rosenbrock, Griewank, and Pinter functions, which are used to compose seven test problems [49,50,51]. The details of these seven test problems are shown in Table 2, and the mathematical definitions of the test functions are given in Appendix B. The other test set (SBO Test Set B) contains 13 test functions with Gaussian noise; see Appendix C for the definitions of the functions in this set.

Table 2.

Classical Simulation-Based Optimization (CSBO) test functions.

5.2. Parameter Settings

Table 3 shows the parameters used in the proposed EDA-based methods. Their values were found through an empirical parameter-tuning process, in which all parameters were tested to obtain standard settings for the proposed algorithms. The maximum number of function evaluations, which is used as the termination criterion, takes two different values depending on whether the problem is stochastic or deterministic. The EDA population size R and the number S of individuals selected in each iteration are key factors in building an efficient algorithm. To balance exploration against computational complexity, we use a large R to explore the search space and then continue with a limited number S of selected individuals to control the complexity of building and sampling the model. The theoretical aspects of choosing EDA parameters are discussed in [29]. The sample size N, which is used in Algorithms 3 and 4, starts from a small initial value and is gradually increased up to a maximum value. The threshold on small sample sizes and the parameters of the function transformation in Algorithm 4 are chosen experimentally.
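The gradual growth of the sample size can be expressed as a schedule driven by the fraction of the evaluation budget already spent. The sketch below assumes a linear schedule with hypothetical bounds `n_min=2` and `n_max=100` and a hypothetical small-sample threshold of 10; these are not the values of Table 3.

```python
import math


def sample_size(evals_used, max_evals, n_min=2, n_max=100):
    """Hypothetical schedule: N grows linearly from n_min to n_max as the
    fraction of the evaluation budget already used goes from 0 to 1."""
    frac = min(1.0, max(0.0, evals_used / max_evals))
    return n_min + math.floor(frac * (n_max - n_min))


def use_transformation(n, n_threshold=10):
    """True when N is below the (hypothetical) small-sample threshold,
    i.e. when the MMSS transformation replaces the sample average."""
    return n < n_threshold


if __name__ == "__main__":
    for used in (0, 50_000, 250_000, 500_000):
        n = sample_size(used, 500_000)
        print(used, n, use_transformation(n))
```

Tying N to the budget rather than to the generation count keeps the schedule meaningful when the population size or the number of evaluations per generation changes.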

Table 3.

Parameter settings.

5.3. Performance Analysis

In this section, we compare the performance of the EDA-SPRS and EDA-MMSS methods. The results are reported in Table 4; they are the average errors in reaching the global optima over 25 independent runs, with a maximum of 500,000 objective function evaluations per run. The error of an obtained solution is denoted by $g_{abs}$ and is defined as:

$$g_{abs} = \left| f(x) - f(x^*) \right|, \qquad (6)$$

where $x$ is the best solution obtained by the method and $x^*$ is the optimal solution. The results in Table 4 show the effect of the function transformation step on the final result: EDA-MMSS obtains better average solutions than EDA-SPRS on five out of seven test functions. This supports the efficiency of the proposed sampling technique.

Table 4.

The averages and the best solutions obtained by the EDA-SPRS and EDA-MMSS using the SBO Test Set A.

The Wilcoxon rank-sum test [52,53,54] is used to compare the performance of the methods. It is a non-parametric test [55,56] and therefore does not assume that the results follow a normal distribution. The statistical measures used in this test are the rank sums obtained in each comparison and the associated p-value. First, the difference $d_i$ between the performance values of the two methods on the $i$-th of $n$ results is computed. These differences are then ranked according to their absolute values, and the rank sums $R^+$ and $R^-$ are computed as follows:

$$R^+ = \sum_{d_i > 0} \operatorname{rank}(d_i) + \frac{1}{2} \sum_{d_i = 0} \operatorname{rank}(d_i), \qquad (7)$$

$$R^- = \sum_{d_i < 0} \operatorname{rank}(d_i) + \frac{1}{2} \sum_{d_i = 0} \operatorname{rank}(d_i). \qquad (8)$$
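The rank sums $R^+$ and $R^-$ of the paired procedure described above can be computed as sketched below, assuming tied absolute differences receive the average of their ranks and the ranks of zero differences are split evenly between the two sums. The function name `wilcoxon_rank_sums` is hypothetical.

```python
def wilcoxon_rank_sums(a, b):
    """Return (R_plus, R_minus) for paired results a[i] and b[i].

    d_i = a_i - b_i; the |d_i| are ranked from 1 to n, tied values get the
    average of their ranks, and ranks of zero differences are split evenly
    between R_plus and R_minus.
    """
    d = [x - y for x, y in zip(a, b)]
    order = sorted(range(len(d)), key=lambda i: abs(d[i]))
    ranks = [0.0] * len(d)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next absolute difference is tied.
        while end + 1 < len(order) and abs(d[order[end + 1]]) == abs(d[order[start]]):
            end += 1
        avg_rank = (start + end) / 2 + 1  # mean of the 1-based ranks start+1..end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        start = end + 1
    r_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    r_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    r_zero = sum(r for r, di in zip(ranks, d) if di == 0)
    return r_plus + r_zero / 2, r_minus + r_zero / 2


if __name__ == "__main__":
    print(wilcoxon_rank_sums([1, 2, 3, 4], [2, 2, 1, 1]))
```

The two sums always add up to $n(n+1)/2$. For the p-value, SciPy's `scipy.stats.wilcoxon` with `zero_method="zsplit"` follows the same zero-splitting convention.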

The rank-sum test statistics for an initial comparison between the proposed EDA-SPRS and EDA-MMSS methods are shown in Table 5. Although EDA-MMSS obtained better solutions than EDA-SPRS on four out of seven test functions, there is no significant difference between the two methods at the 0.05 significance level.

Table 5.

Wilcoxon rank-sum test for the results of Table 4.

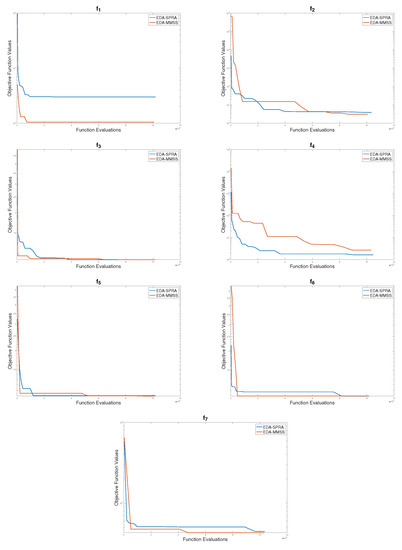

Figure 1 shows the performance of EDA-SPRS and EDA-MMSS in one random run. It illustrates the clear difference in search behavior between the two methods, and how restricting the noisy part of the estimate in EDA-MMSS at the beginning of the search affects the quality of the individuals later on.

Figure 1.

The performance of EDA-SPRS and EDA-MMSS.

EDA-MMSS reaches better solutions than EDA-SPRS in five out of seven cases shown in Figure 1. Therefore, the proposed MMSS technique can help the search method explore better and reach near-global optimal solutions.

6. Results and Discussion

The results of the proposed methods on global optimization and simulation-based optimization problems are discussed in this section.

6.1. Numerical Results on Global Optimization

Before applying EDA-D to stochastic optimization problems, we verified its efficiency on global optimization by applying it to the ten test problems listed in Table 1 and comparing it with another metaheuristic method. A heuristic solution $x$ is considered optimal if the gap defined in Equation (6) is less than or equal to a prescribed tolerance. Table 6 reports the averages over 25 independent runs of EDA-D for each function, compared with the Directed Scatter Search (DSS) method introduced in [51], with a maximum of 5000 function evaluations for each method. The results in Table 6 show that EDA-D performs promisingly, obtaining the global minima of 6 of the 10 test problems. The comparison statistics in Table 7 indicate no significant difference between the two methods at the 0.05 significance level.

Table 6.

The averages of $g_{abs}$ obtained by the DSS and EDA-D using the CGO test set.

Table 7.

Wilcoxon rank-sum test for the results of Table 6.

For a more rigorous comparison, more sophisticated functions were used to compare the proposed method with some benchmark methods. The hard test functions $h_1$–$h_{25}$, listed in Appendix A, are used to test the performance of the proposed EDA-D method against seven benchmark differential evolution methods [57]. The results of the proposed method and the seven compared methods on these hard test functions are reported in Table 8 as average errors over 25 independent trials for each method; the results of the compared methods are taken from [57]. These comparisons indicate that the proposed method is promising, with results similar to those of the compared methods. Comparative statistics for the results in Table 8, obtained with the rank-sum test, are presented in Table 9. They show no significant difference between the proposed method and the compared global optimization methods at the 0.05 significance level. This indicates that the proposed method is promising for deterministic global optimization, and it motivates combining EDA-D with sampling methods to solve stochastic global optimization problems.

Table 8.

Compared average errors ($g_{abs}$) of global optimization methods using the hard test set.

Table 9.

Wilcoxon rank-sum test for the results of Table 8.

6.2. Simulation Based Optimization Results

The proposed method has also been compared with other metaheuristic methods to demonstrate its performance in simulation-based optimization. Specifically, EDA-MMSS is compared with the Evolution Strategies and Scatter Search methods for simulation-based global optimization introduced in [51]: Directed Evolution Strategies for Simulation-Based Optimization (DESSP) and Scatter Search for Simulation-Based Optimization (DSSSP). Table 10 reports the average and best function values over 25 independent runs of EDA-MMSS, DESSP, and DSSSP on the seven test problems in Table 2, with a maximum of 500,000 function evaluations per run, and the corresponding processing times are shown in Table 11. Table 10 shows that EDA-MMSS performs better on these functions, especially on the high-dimensional test function.

Table 10.

Best and average function values using the SBO Test Set A.

Table 11.

Averages of processing time (in seconds) for obtaining results in Table 10.

Table 12 provides a statistical comparison of the results in Table 10 and Table 11. Although there are no significant differences in solution quality between the proposed method and the compared methods, the proposed method obtained better average solutions, which indicates its robustness. Moreover, the proposed method requires less processing time, as shown in Table 11 and Table 12.

The last experiment compares the proposed methods with recent benchmark methods in simulation-based optimization. Eight methods [58] are used in this final comparison, and their results on the SBO Test Set B are reported in Table 13. Comparative statistics for these results, obtained with the rank-sum test, are reported in Table 14. These statistics indicate no significant difference between the proposed EDA-SPRS and EDA-MMSS methods, while both proposed methods outperform seven of the eight compared methods, as indicated by the p-values.

Table 13.

Compared average errors ($g_{abs}$) of simulation-based optimization methods using the SBO Test Set B.

Table 14.

Wilcoxon rank-sum test for the results of Table 13.

7. Conclusions

In this paper, a modified Estimation of Distribution Algorithm for simulation-based optimization is proposed to find the global optimum or a near-optimum of noisy objective functions. The proposed method combines a modified Estimation of Distribution Algorithm with a new sampling technique. This technique is a variable-sample method in which a function transformation is applied to small samples in order to reduce the large dispersion caused by the random variables. The proposed sampling technique helps the search method explore better and hence reach near-global optimal solutions. The obtained results indicate the promising performance of the proposed method compared with some existing state-of-the-art methods, especially in terms of robustness and processing time.

Author Contributions

Conceptualization, A.-R.H. and A.A.A.; methodology, A.-R.H., A.A.A., and A.E.A.-H.; software, A.A.A.; validation, A.-R.H. and A.A.A.; formal analysis, A.-R.H., A.A.A., and A.E.A.-H.; investigation, A.-R.H. and A.A.A.; resources, A.-R.H., A.A.A., and A.E.A.-H.; data creation, A.-R.H. and A.A.A.; writing—original draft preparation, A.-R.H. and A.A.A.; writing—review and editing, A.-R.H. and A.E.A.-H.; visualization, A.-R.H., A.A.A., and A.E.A.-H.; project administration, A.-R.H.; funding acquisition, A.-R.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Plan for Science, Technology, and Innovation (MAARIFAH)—King Abdulaziz City for Science and Technology—the Kingdom of Saudi Arabia, award number (13-INF544-10).

Acknowledgments

The authors would like to thank King Abdulaziz City for Science and Technology, the Kingdom of Saudi Arabia, for supporting project number (13-INF544-10).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Hard Test Functions

Twenty-five hard test functions, $h_1$–$h_{25}$, are listed in Table A1 with their global minima and bounds. The reader is directed to [47,48] for more details on these functions.

Table A1.

Hard test functions.

| h | Function Name | Bounds | Global Min |
|---|---|---|---|
| $h_1$ | Shifted sphere function | | |
| $h_2$ | Shifted Schwefel’s function 1.2 | | |
| $h_3$ | Shifted rotated high conditioned elliptic function | | |
| $h_4$ | Shifted Schwefel’s function 1.2 with noise in fitness | | |
| $h_5$ | Schwefel’s function 2.6 with global optimum on bounds | | |
| $h_6$ | Shifted Rosenbrock’s function | | 390 |
| $h_7$ | Shifted rotated Griewank’s function without bounds | | |
| $h_8$ | Shifted rotated Ackley’s function with global optimum on bounds | | |
| $h_9$ | Shifted Rastrigin’s function | | |
| $h_{10}$ | Shifted rotated Rastrigin’s function | | |
| $h_{11}$ | Shifted rotated Weierstrass function | | 90 |
| $h_{12}$ | Schwefel’s function 2.13 | | |
| $h_{13}$ | Expanded extended Griewank’s + Rosenbrock’s function | | |
| $h_{14}$ | Expanded rotated extended Scaffer’s function | | |
| $h_{15}$ | Hybrid composition function | | 120 |
| $h_{16}$ | Rotated hybrid composition function | | 120 |
| $h_{17}$ | Rotated hybrid composition function with noise in fitness | | 120 |
| $h_{18}$ | Rotated hybrid composition function | | 10 |
| $h_{19}$ | Rotated hybrid composition function with narrow basin global optimum | | 10 |
| $h_{20}$ | Rotated hybrid composition function with global optimum on the bounds | | 10 |
| $h_{21}$ | Rotated hybrid composition function | | 360 |
| $h_{22}$ | Rotated hybrid composition function with high condition number matrix | | 360 |
| $h_{23}$ | Non-Continuous rotated hybrid composition function | | 360 |
| $h_{24}$ | Rotated hybrid composition function | | 260 |
| $h_{25}$ | Rotated hybrid composition function without bounds | | 260 |

Appendix B. Classical Test Functions—Set A

Appendix B.1. Goldstein and Price Function

Definition:

Search space:

Global minimum:

Appendix B.2. Rosenbrock Function

Definition:

Search space:

Global minimum:

Appendix B.3. Griewank Function

Definition:

Search space:

Global minimum:

Appendix B.4. Pinter Function

Definition: , where $x_0 = x_n$ and $x_{n+1} = x_1$.

Search space:

Global minimum:

Appendix B.5. Modified Griewank Function

Definition:

Search space:

Global minimum:

Appendix B.6. Griewank function with non-Gaussian noise

Definition:

The simulation noise is changed to the uniform distribution $U(-17.32, 17.32)$.

Search space:

Global minimum:

Appendix B.7. Griewank function with (50D)

Definition:

Search space:

Global minimum:

Appendix C. Classical Test Functions—Set B

Appendix C.1. Ackley Function

Definition:

Search space:

Global minimum:

Appendix C.2. Alpine Function

Definition:

Search space:

Global minimum:

Appendix C.3. Axis Parallel Function

Definition:

Search space:

Global minimum:

Appendix C.4. DeJong Function

Definition:

Search space:

Global minimum:

Appendix C.5. Drop Wave Function

Definition:

Search space:

Global minimum:

Appendix C.6. Griewank Function

Definition:

Search space:

Global minimum:

Appendix C.7. Michalewicz Function

Definition:

Search space:

Global minima:

Appendix C.8. Moved Axis Function

Definition:

Search space:

Global minimum:

Appendix C.9. Pathological Function

Definition:

Search space:

Appendix C.10. Rastrigin Function

Definition:

Search space:

Global minimum:

Appendix C.11. Rosenbrock Function

Definition:

Search space:

Global minimum:

Appendix C.12. Schwefel Function

Definition:

Search space:

Global minimum:

Appendix C.13. Tirronen Function

Definition:

Search space:

References

1. Kizhakke Kodakkattu, S.; Nair, P. Design optimization of helicopter rotor using kriging. Aircr. Eng. Aerosp. Technol. 2018, 90, 937–945.

2. Kim, P.; Ding, Y. Optimal design of fixture layout in multistation assembly processes. IEEE Trans. Autom. Sci. Eng. 2004, 1, 133–145.

3. Kleijnen, J.P. Simulation-optimization via Kriging and bootstrapping: A survey. J. Simul. 2014, 8, 241–250.

4. Fu, M.C.; Hu, J.Q. Sensitivity analysis for Monte Carlo simulation of option pricing. Probab. Eng. Inf. Sci. 1995, 9, 417–446.

5. Plambeck, E.L.; Fu, B.R.; Robinson, S.M.; Suri, R. Throughput optimization in tandem production lines via nonsmooth programming. In Proceedings of the 1993 Summer Computer Simulation Conference, Boston, MA, USA, 19–21 July 1993; pp. 70–75.

6. Pourhassan, M.R.; Raissi, S. An integrated simulation-based optimization technique for multi-objective dynamic facility layout problem. J. Ind. Inf. Integr. 2017, 8, 49–58.

7. Semini, M.; Fauske, H.; Strandhagen, J.O. Applications of discrete-event simulation to support manufacturing logistics decision-making: A survey. In Proceedings of the 38th Conference on Winter Simulation, Monterey, CA, USA, 3–6 December 2006; pp. 1946–1953.

8. Chong, L.; Osorio, C. A simulation-based optimization algorithm for dynamic large-scale urban transportation problems. Transp. Sci. 2017, 52, 637–656.

9. Gürkan, G.; Özge, A.Y.; Robinson, S.M. Sample-path solution of stochastic variational inequalities. Math. Program. 1999, 84, 313–333.

10. Frühwirth-Schnatter, S. Data augmentation and dynamic linear models. J. Time Ser. Anal. 1994, 15, 183–202.

11. Van Dyk, D.A.; Meng, X.L. The art of data augmentation. J. Comput. Graph. Stat. 2001, 10, 1–50.

12. Andradóttir, S. Handbook of Simulation: Principles, Methodology, Advances, Applications, and Practice. Available online: https://www.wiley.com/en-us/Handbook+of+Simulation%3A+Principles%2C+Methodology%2C+Advances%2C+Applications%2C+and+Practice-p-9780471134039 (accessed on 16 March 2020).

13. Gosavi, A. Simulation-Based Optimization: Parametric Optimization Techniques and Reinforcement Learning. Available online: https://www.researchgate.net/publication/238319435_Simulation-Based_Optimization_Parametric_Optimization_Techniques_and_Reinforcement_Learning (accessed on 16 March 2020).

14. Fu, M.C. Optimization for simulation: Theory vs. practice. INFORMS J. Comput. 2002, 14, 192–215.

15. Boussaïd, I.; Lepagnot, J.; Siarry, P. A survey on optimization metaheuristics. Inf. Sci. 2013, 237, 82–117.

16. Glover, F.W.; Kochenberger, G.A. Handbook of Metaheuristics; Springer: Boston, MA, USA, 2006.

17. Ribeiro, C.C.; Hansen, P. Essays and Surveys in Metaheuristics; Springer Science & Business Media: New York, NY, USA, 2012.

18. Siarry, P. Metaheuristics. Available online: https://link.springer.com/book/10.1007/978-3-319-45403-0#about (accessed on 18 March 2020).

19. Pellerin, R.; Perrier, N.; Berthaut, F. A survey of hybrid metaheuristics for the resource-constrained project scheduling problem. Eur. J. Oper. Res. 2019, 27, 437.

20. Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2019, 1–29.

21. Doğan, B.; Ölmez, T. A new metaheuristic for numerical function optimization: Vortex Search algorithm. Inf. Sci. 2015, 293, 125–145.

22. Huang, C.; Li, Y.; Yao, X. A Survey of Automatic Parameter Tuning Methods for Metaheuristics. Available online: https://ieeexplore.ieee.org/document/8733017 (accessed on 16 March 2020).

23. Wang, J.; Zhang, Q.; Abdel-Rahman, H.; Abdel-Monem, M.I. A rough set approach to feature selection based on scatter search metaheuristic. J. Syst. Sci. Complex. 2014, 27, 157–168.

24. Hedar, A.; Ali, A.F.; Hassan, T. Genetic algorithm and tabu search based methods for molecular 3D-structure prediction. Numer. Algebra Control. Optim. 2011, 1, 191–209.

25. Hedar, A.; Fukushima, M. Heuristic pattern search and its hybridization with simulated annealing for nonlinear global optimization. Optim. Methods Softw. 2004, 19, 291–308.

26. Hedar, A.; Fukushima, M. Directed evolutionary programming: Towards an improved performance of evolutionary programming. In Proceedings of the IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 1521–1528.

27. Hedar, A.; Fukushima, M. Derivative-free filter simulated annealing method for constrained continuous global optimization. J. Glob. Optim. 2006, 35, 521–549.

28. Larrañaga, P.; Lozano, J.A. Estimation of Distribution Algorithms: A New Tool for Evolutionary Computation; Springer Science & Business Media: Boston, MA, USA, 2001.

29. Hauschild, M.; Pelikan, M. An introduction and survey of estimation of distribution algorithms. Swarm Evol. Comput. 2011, 1, 111–128.

30. Yang, Q.; Chen, W.N.; Li, Y.; Chen, C.P.; Xu, X.M.; Zhang, J. Multimodal estimation of distribution algorithms. IEEE Trans. Cybern. 2016, 47, 636–650.

31. Krejca, M.S.; Witt, C. Theory of Estimation-of-Distribution Algorithms. Available online: https://www.researchgate.net/publication/337425690_Theory_of_Estimation-of-Distribution_Algorithms (accessed on 16 March 2020).

32. Homem-de-Mello, T. Variable-sample methods for stochastic optimization. ACM Trans. Model. Comput. Simul. (TOMACS) 2003, 13, 108–133.

33. Faris, H.; Aljarah, I.; Mirjalili, S. Improved monarch butterfly optimization for unconstrained global search and neural network training. Appl. Intell. 2018, 48, 445–464.

34. Lozano, J.A.; Larrañaga, P.; Inza, I.; Bengoetxea, E. Towards a New Evolutionary Computation: Advances on Estimation of Distribution Algorithms. Available online: https://link.springer.com/book/10.1007/3-540-32494-1#about (accessed on 18 March 2020).

35. Baluja, S. Population-Based Incremental Learning: A Method for Integrating Genetic Search Based Function Optimization and Competitive Learning. Available online: https://www.ri.cmu.edu/pub_files/pub1/baluja_shumeet_1994_2/baluja_shumeet_1994_2.pdf (accessed on 16 March 2020).

36. Mühlenbein, H.; Paass, G. From Recombination of Genes to the Estimation of Distributions I. Binary Parameters. Available online: http://www.muehlenbein.org/estbin96.pdf (accessed on 16 March 2020).

37. Pelikan, M.; Goldberg, D.E.; Lobo, F.G. A survey of optimization by building and using probabilistic models. Comput. Optim. Appl. 2002, 21, 5–20.

38. Dong, W.; Wang, Y.; Zhou, M. A latent space-based estimation of distribution algorithm for large-scale global optimization. Soft Comput. 2018, 8, 1–23.

39. Sebag, M.; Ducoulombier, A. Extending Population-Based Incremental Learning to Continuous Search Spaces. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.42.1884 (accessed on 16 March 2020).

40. Tsutsui, S.; Pelikan, M.; Goldberg, D.E. Evolutionary Algorithm Using Marginal Histogram Models in Continuous Domain. Available online: http://medal-lab.org/files/2001019.pdf (accessed on 16 March 2020).

41. Larrañaga, P.; Etxeberria, R.; Lozano, J.A.; Peña, J.M. Optimization by Learning and Simulation of Bayesian and Gaussian Networks. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.41.1895 (accessed on 16 March 2020).

42. Larrañaga, P.; Etxeberria, R.; Lozano, J.A.; Peña, J.M. Optimization in Continuous Domains by Learning and Simulation of Gaussian Networks. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.31.3105 (accessed on 16 March 2020).

43. Wang, J.; Hedar, A.; Zheng, G.; Wang, S. Scatter search for rough set attribute reduction. In Proceedings of the International Joint Conference on Computational Sciences and Optimization, Sanya, China, 24–26 April 2009; pp. 531–535.

44. Hedar, A.; Ali, A.F. Genetic algorithm with population partitioning and space reduction for high dimensional problem. In Proceedings of the International Conference on Computer Engineering & Systems, Cairo, Egypt, 14–16 December 2009; pp. 151–156.

45. Hedar, A.; Fukushima, M. Minimizing multimodal functions by simplex coding genetic algorithm. Optim. Methods Softw. 2003, 18, 265–282.

46. Floudas, C.A.; Pardalos, P.M.; Adjiman, C.; Esposito, W.R.; Gümüs, Z.H.; Harding, S.T.; Klepeis, J.L.; Meyer, C.A.; Schweiger, C.A. Handbook of Test Problems in Local and Global Optimization; Springer Science & Business Media: Boston, MA, USA, 2013.

47. Liang, J.J.; Suganthan, P.N.; Deb, K. Novel composition test functions for numerical global optimization. In Proceedings of the 2005 IEEE Swarm Intelligence Symposium, Pasadena, CA, USA, 8–10 June 2005.

48. Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.-P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization. Available online: http://www.cmap.polytechnique.fr/~nikolaus.hansen/Tech-Report-May-30-05.pdf (accessed on 16 March 2020).

49. He, D.; Lee, L.H.; Chen, C.H.; Fu, M.C.; Wasserkrug, S. Simulation optimization using the cross-entropy method with optimal computing budget allocation. ACM Trans. Model. Comput. Simul. 2010, 20, 4.

50. Hedar, A.; Allam, A.A. Scatter Search for Simulation-Based Optimization. In Proceedings of the 2017 International Conference on Computer and Applications, Dubai, UAE, 6–7 September 2017; pp. 244–251.

51. Hedar, A.; Allam, A.A.; Deabes, W. Memory-Based Evolutionary Algorithms for Nonlinear and Stochastic Programming Problems. Mathematics 2019, 7, 1126.

52. García, S.; Fernández, A.; Luengo, J.; Herrera, F. A study of statistical techniques and performance measures for genetics-based machine learning: Accuracy and interpretability. Soft Comput. 2009, 13, 959.

53. Sheskin, D.J. Handbook of Parametric and Nonparametric Statistical Procedures; CRC Press: Boca Raton, FL, USA, 2003.

54. Zar, J.H. Biostatistical Analysis; Pearson: London, UK, 2013.

55. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

56. García-Martínez, S.; Molina, D.; Lozano, M.; Herrera, F. A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behavior: A case study on the CEC’2005 special session on real parameter optimization. J. Heuristics 2009, 15, 617–644.

57. Fan, Q.; Yan, X.; Xue, Y. Prior knowledge guided differential evolution. Soft Comput. 2017, 21, 6841–6858.

58. Ghosh, A.; Das, S.; Mallipeddi, R.; Das, A.K.; Dash, S.S. A modified differential evolution with distance-based selection for continuous optimization in the presence of noise. IEEE Access 2017, 5, 26944–26964.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).