Decision-Level Multi-Sensor Fusion to Improve Limitations of Single-Camera-Based CNN Classification in Precision Farming: Application in Weed Detection

Abstract

1. Introduction

2. Materials and Methods

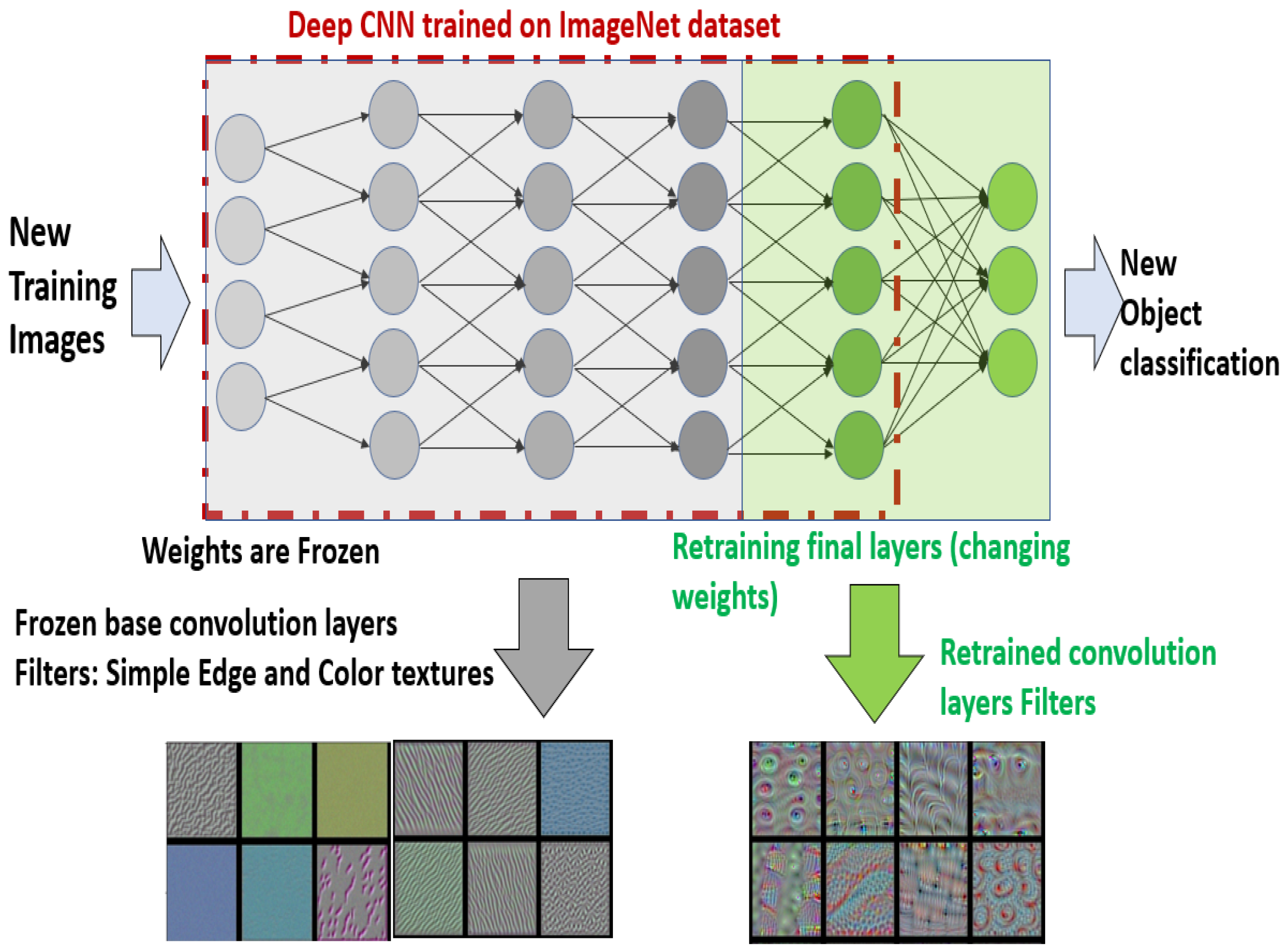

2.1. CNN and Transfer-Learning

- Convolutional Neural Networks (CNNs) learn translation-invariant features. Once a CNN has learned to detect a feature in the upper-right corner of one image, it can recognize the same feature in the lower-left corner of a different image.

- CNNs learn spatial hierarchies of patterns. The first convolutional layer learns basic visual features such as edges and colors. The second layer learns patterns composed of these first-layer features, such as colored edges, and higher layers learn increasingly complex features. For example, when a network is trained on images of cats, its upper layers learn distinctive parts such as eyes and ears. This property is what makes transfer learning effective, as discussed below.
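The translation property in the first point can be illustrated with a toy example. The NumPy sketch below is not part of the study. It uses a hypothetical 3 × 3 "corner" feature as an already-learned kernel, places the feature in the upper-right and then the lower-left of a blank 12 × 12 image, and computes the convolutional response. The peak of the feature map moves with the feature (equivariance), while a global max-pooled response is identical in both images (invariance). The function names `conv2d_valid` and `place` are illustrative, and in a real CNN, local pooling and stacked layers give only approximate invariance.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """2D cross-correlation with 'valid' padding, the operation a conv layer computes."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def place(pattern, top, left, size=12):
    """Paste a small pattern into an otherwise empty size x size image."""
    img = np.zeros((size, size))
    h, w = pattern.shape
    img[top:top + h, left:left + w] = pattern
    return img


# Hypothetical 3x3 corner-shaped feature, also used as the (already "learned") kernel.
feature = np.array([[1, 1, 1],
                    [1, 0, 0],
                    [1, 0, 0]], dtype=float)

upper_right = place(feature, top=1, left=8)
lower_left = place(feature, top=8, left=1)

map_a = conv2d_valid(upper_right, feature)
map_b = conv2d_valid(lower_left, feature)

# Equivariance: the peak of the feature map moves with the feature ...
peak_a = np.unravel_index(np.argmax(map_a), map_a.shape)
peak_b = np.unravel_index(np.argmax(map_b), map_b.shape)
# ... while the global max-pooled response is the same for both images.
print(peak_a, peak_b, map_a.max(), map_b.max())
```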

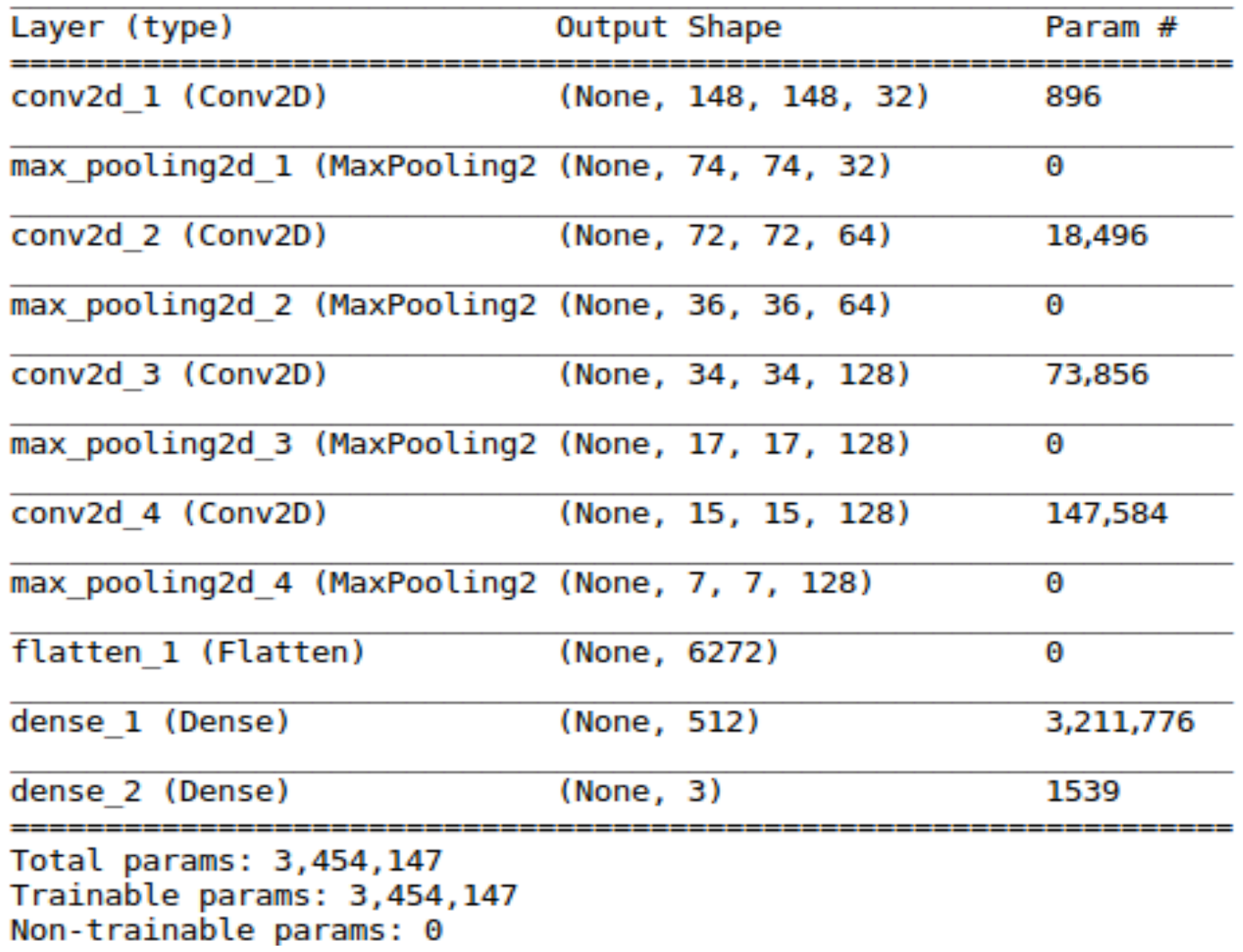

2.1.1. CNN Model

2.1.2. Transfer Learning with VGG16

2.2. Decision Level Sensor Fusion

2.2.1. Dempster–Shafer Rule of Combination
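For reference, the standard form of Dempster's rule combines two mass functions $m_1$ and $m_2$ as $m(A) = \frac{1}{1-K}\sum_{B \cap C = A} m_1(B)\,m_2(C)$ for $A \neq \emptyset$, with conflict factor $K = \sum_{B \cap C = \emptyset} m_1(B)\,m_2(C)$. We assume this is the rule that Equations (1) and (2) refer to below. The Python sketch below implements this standard rule and reproduces two of the failure modes discussed in Section 2.2.2. Mass functions are represented as dicts keyed by `frozenset` focal elements. The function name `dempster_combine` and the Zadeh-style masses are hypothetical illustrations, not the paper's code or data.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Mass functions map frozenset focal elements to masses.
    Returns (combined mass function, conflict factor K).
    """
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass that would fall on the empty set
    if conflict >= 1.0 - 1e-12:
        # Normalizing by 1 - K is impossible when K = 1.
        raise ValueError("K = 1: totally conflicting evidence, Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}, conflict


A, B, C = frozenset("A"), frozenset("B"), frozenset("C")

# "Total trust" behaviour with hypothetical masses: each sensor strongly supports
# a different proposition and gives only 0.01 to the shared proposition B.
m1 = {A: 0.99, B: 0.01}
m2 = {C: 0.99, B: 0.01}
fused, K = dempster_combine(m1, m2)
print(fused, K)  # all fused belief lands on B, with K close to 1

# Completely conflicting evidence: no focal elements intersect, so K = 1.
try:
    dempster_combine({A: 1.0}, {B: 1.0})
except ValueError as err:
    print(err)
```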

2.2.2. Sensor Fusion Algorithm to Eliminate Paradoxes

- Completely Conflicting Paradox: One of two sensors completely contradicts the output of the other. For example, suppose proposition A is true, but Sensor 1 and Sensor 2 assign all of their mass to propositions that have no element in common; the two sensors then provide completely conflicting information. The conflict factor calculated using Equation (2) is K = 1, indicating total disagreement between the evidence from Sensor 1 and Sensor 2. Because the DS combination rule normalizes by 1 − K, it becomes inapplicable under such conditions.

- “One Ballot Veto” Paradox: In a multi-sensor system with more than two sensors, one sensor may provide an output that completely contradicts the readings of all the other sensors. For example, consider four sensors observing a frame in which proposition A is true, where the output of Sensor 2 contradicts those of Sensors 1, 3, and 4; Sensor 2 is evidently faulty. Applying the DS combination rule yields a fused result that contradicts the true proposition A, together with a high value of K, which indicates significant conflict among the sensors. Because the rule is multiplicative, a single sensor that assigns no mass to any set containing A forces the fused mass of A to zero, however strongly the other sensors support it. This counterintuitive outcome is caused primarily by the erroneous readings from Sensor 2.

- “Total Trust” Paradox: One sensor strongly contradicts the other, although the two share a common focal element with low supporting evidence. For example, if each sensor assigns most of its mass to a different proposition and only a small mass to a shared proposition B, applying Equations (1) and (2) yields a conflict factor K close to 1 and concentrates the fused mass on B. Common sense indicates that one of the two strongly supported propositions should be correct, yet the weakly supported proposition B is identified as true.

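- Step 1: Construct a multi-sensor information matrix for space domain fusion by considering a system with N sources of evidence (sensors) defined over a frame of discernment $\Theta$ whose elements represent the objects to be classified.

- Step 2: Measure the relative distance between the pieces of evidence. For two mass functions $m_1$ and $m_2$ defined over the frame of discernment $\Theta$, the Jousselme distance between $m_1$ and $m_2$ is defined as
  $$d_J(m_1, m_2) = \sqrt{\tfrac{1}{2}\,(\mathbf{m}_1 - \mathbf{m}_2)^{T}\,\mathbf{D}\,(\mathbf{m}_1 - \mathbf{m}_2)},$$
  where $\mathbf{D}$ is a $2^{|\Theta|} \times 2^{|\Theta|}$ matrix with elements $D(A, B) = \frac{|A \cap B|}{|A \cup B|}$, and $|\cdot|$ denotes cardinality.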

- Step 3: Compute the total evidence distance for each sensor.

- Step 4: Compute the global average of evidence distance.

- Step 5: Compute the belief entropy of each sensor using Equation (9) and normalize it.

- Step 6: Divide the set of evidence into two categories, credible evidence and incredible evidence, as defined by Equations (13) and (14).

- Step 7: Update the original evidence by weighting each sensor's evidence with its sensor evidence weight.

- Step 8: Aggregate the modified evidence n − 1 times (n is the number of sensors) with the DS combination rule, using Equations (1) and (2).

- Overcomes the limitations of the original DS combination rule.

- Combines results from both homogeneous and heterogeneous sensors at the decision level.

- Identifies malfunctioning sensors and reduces their influence, so that their inaccurate readings do not adversely affect the fused result.
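Steps 2 to 4 and 6 to 8 can be sketched in Python under explicit assumptions. Equation (9) (the belief entropy) and Equations (13) and (14) (the credible/incredible split) are not reproduced in this text, so the sketch makes three substitutions. It omits the entropy of Step 5. It treats a sensor as credible when its total distance does not exceed the global average. It halves the support of incredible sensors using a hypothetical `penalty` factor. The weighting is therefore closer to the distance-based method of Deng et al. [58] than to the proposed algorithm. All function names and sensor readings are illustrative.

```python
from itertools import product
from math import sqrt


def dempster_combine(m1, m2):
    """Dempster's rule for two mass functions (dict: frozenset -> mass)."""
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict (K = 1): Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


def jousselme_distance(m1, m2):
    """d_J = sqrt(0.5 * (m1 - m2)^T D (m1 - m2)) with D(A, B) = |A & B| / |A | B|."""
    focal = set(m1) | set(m2)
    diff = {s: m1.get(s, 0.0) - m2.get(s, 0.0) for s in focal}
    total = sum(diff[a] * diff[b] * len(a & b) / len(a | b)
                for a, b in product(focal, focal))
    return sqrt(max(0.5 * total, 0.0))  # clamp tiny negative round-off


def distance_weighted_fusion(evidence, penalty=0.5):
    """Hypothetical sketch of Steps 2-4 and 6-8 (entropy Step 5 omitted)."""
    n = len(evidence)
    # Steps 2-3: pairwise Jousselme distances and each sensor's total distance.
    dist = [[jousselme_distance(mi, mj) for mj in evidence] for mi in evidence]
    total = [sum(row) for row in dist]
    # Step 4: global average of the total evidence distances.
    average = sum(total) / n
    # Step 6 (assumed criterion): credible if total distance <= global average.
    credible = [t <= average for t in total]
    # Support from similarity (1 - distance), in the spirit of [58];
    # incredible evidence is down-weighted by the hypothetical penalty factor.
    support = [sum(1.0 - dist[i][j] for j in range(n) if j != i) for i in range(n)]
    raw = [s * (1.0 if ok else penalty) for s, ok in zip(support, credible)]
    norm = sum(raw)
    weights = [r / norm for r in raw] if norm > 0 else [1.0 / n] * n
    # Step 7: weighted-average evidence.
    focal = set().union(*evidence)
    averaged = {s: sum(w * m.get(s, 0.0) for w, m in zip(weights, evidence))
                for s in focal}
    # Step 8: combine the averaged evidence with itself n - 1 times.
    fused = averaged
    for _ in range(n - 1):
        fused = dempster_combine(fused, averaged)
    return fused, credible, weights


A, B, C = frozenset("A"), frozenset("B"), frozenset("C")
# Hypothetical readings: A is true and Sensor 2 is faulty.
sensors = [
    {A: 0.70, B: 0.20, C: 0.10},
    {A: 0.00, B: 0.90, C: 0.10},
    {A: 0.75, B: 0.15, C: 0.10},
    {A: 0.65, B: 0.20, C: 0.15},
]

plain = sensors[0]
for m in sensors[1:]:
    plain = dempster_combine(plain, m)  # Sensor 2 vetoes A here

fused, credible, weights = distance_weighted_fusion(sensors)
print("plain DS:", {"".join(s): round(v, 4) for s, v in plain.items()})
print("weighted:", {"".join(s): round(v, 4) for s, v in fused.items()})
print("credible:", credible)
```

Averaging before combining is what prevents the single faulty sensor from vetoing A: its zero mass is diluted by the other sensors instead of being multiplied into the result.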

Algorithm 1. Time Domain Fusion using Multiple Sensors

Algorithm 2. Space Domain Fusion using Multiple Sensors
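Fusing decisions over time from a single camera can be illustrated with a short sketch. It is not Algorithm 1 itself. Instead, it shows one common way to fuse successive per-frame CNN outputs: each softmax vector is turned into a mass function with Shafer's discounting, which keeps a fraction `alpha` of each class probability and assigns the remaining 1 − `alpha` to the whole frame Θ. The frames are then combined with Dempster's rule. The class order, the value of `alpha`, the frame probabilities, and all function names are hypothetical. Without discounting (`alpha = 1.0`), one corrupted frame that gives the true class zero probability vetoes it permanently, which is the one-ballot-veto paradox. With discounting, the true class survives.

```python
from itertools import product

CLASSES = ("Cocklebur", "Pigweed", "Ragweed")  # assumed class order of the CNN output
THETA = frozenset(CLASSES)                     # frame of discernment


def softmax_to_mass(probs, alpha):
    """Shafer discounting: keep alpha of each class probability, give 1 - alpha to THETA."""
    m = {frozenset([c]): alpha * p for c, p in zip(CLASSES, probs)}
    m[THETA] = 1.0 - alpha
    return m


def dempster_combine(m1, m2):
    """Dempster's rule for two mass functions (dict: frozenset -> mass)."""
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict (K = 1)")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


def fuse_frames(frame_probs, alpha=0.9):
    """Fuse per-frame CNN outputs from one camera by sequential Dempster combination."""
    fused = softmax_to_mass(frame_probs[0], alpha)
    for probs in frame_probs[1:]:
        fused = dempster_combine(fused, softmax_to_mass(probs, alpha))
    return fused


def decide(m):
    """Return the class whose singleton has the largest fused mass."""
    return max(CLASSES, key=lambda c: m.get(frozenset([c]), 0.0))


# Hypothetical softmax outputs for four consecutive frames of one Cocklebur plant;
# frame 2 is corrupted (e.g., by blur or occlusion) and gives Cocklebur zero probability.
frames = [
    [0.70, 0.20, 0.10],
    [0.00, 0.90, 0.10],
    [0.80, 0.10, 0.10],
    [0.75, 0.15, 0.10],
]

print(decide(fuse_frames(frames, alpha=0.9)))  # Cocklebur
print(decide(fuse_frames(frames, alpha=1.0)))  # Pigweed (the corrupted frame vetoes Cocklebur)
```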

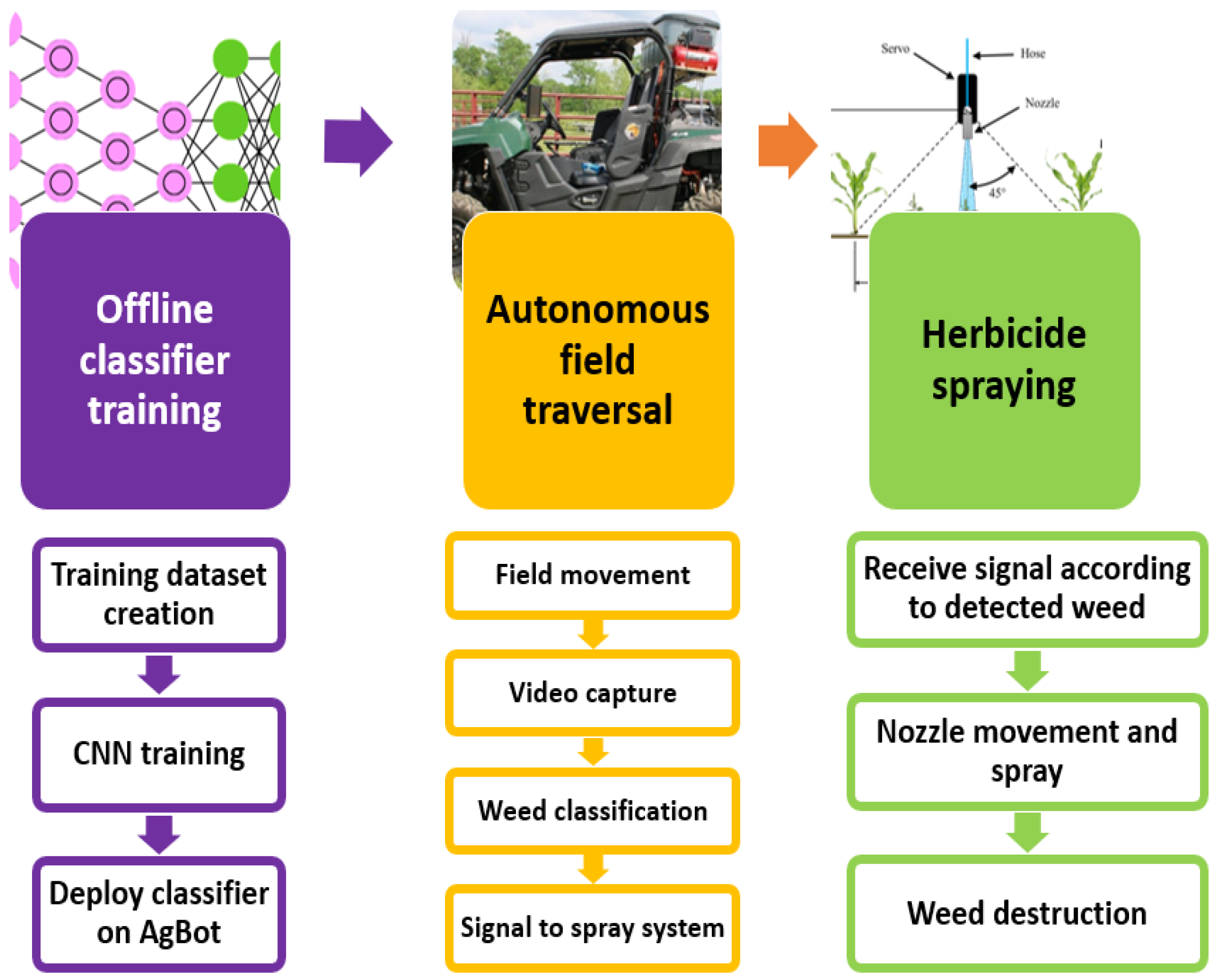

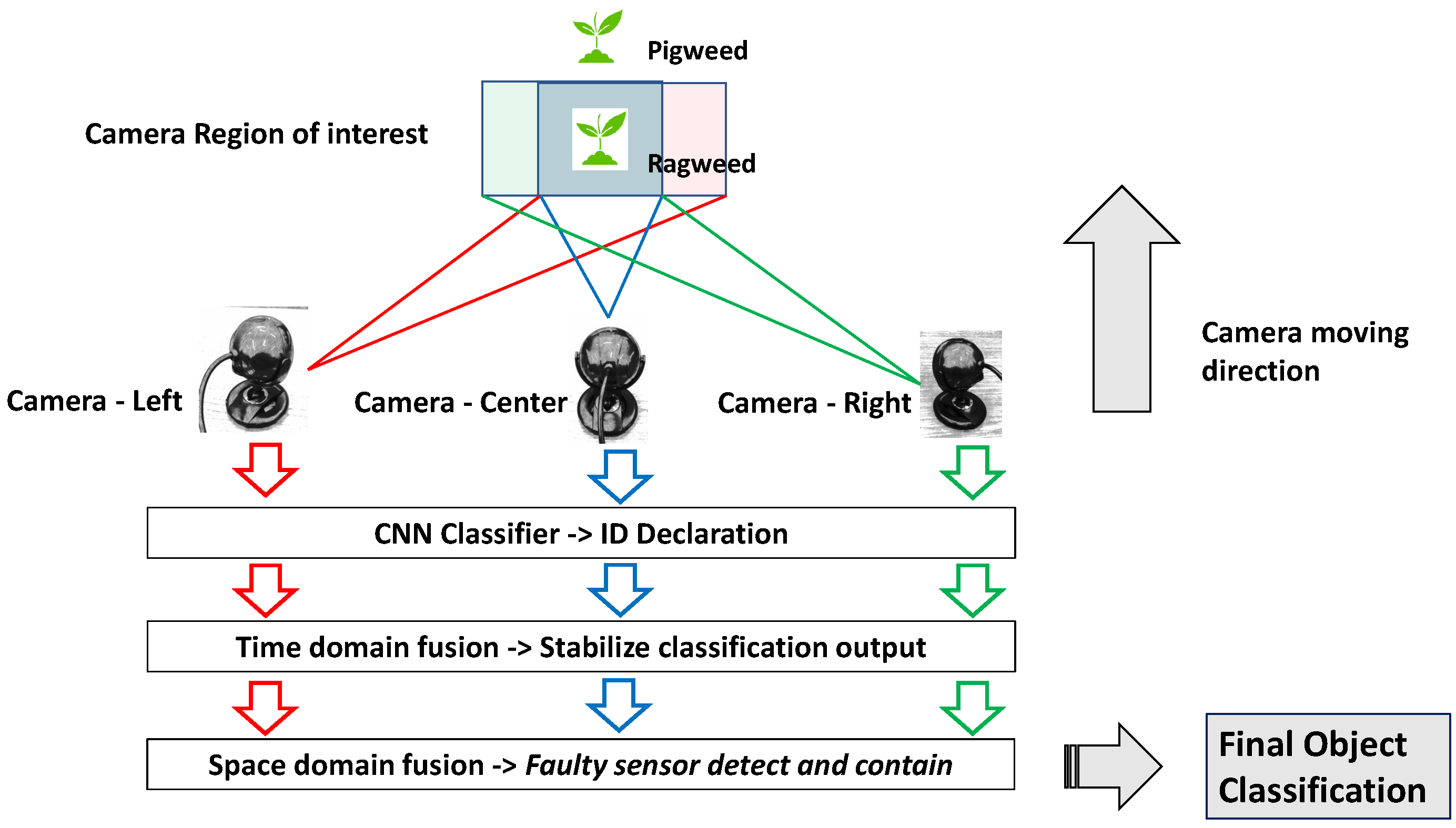

2.3. Methodologies

2.3.1. Weed Image Dataset and Image Processing

2.3.2. Conducting Transfer Learning on VGG16

2.3.3. Real-Time Classification from Single-Camera Video Input

2.3.4. Implementation of Sensor Fusion Algorithm

3. Results

3.1. Classification Report

3.2. Real-Time Classification from a Single Camera Video Input

3.2.1. One Pigweed in the Video Frame (Figure 9)

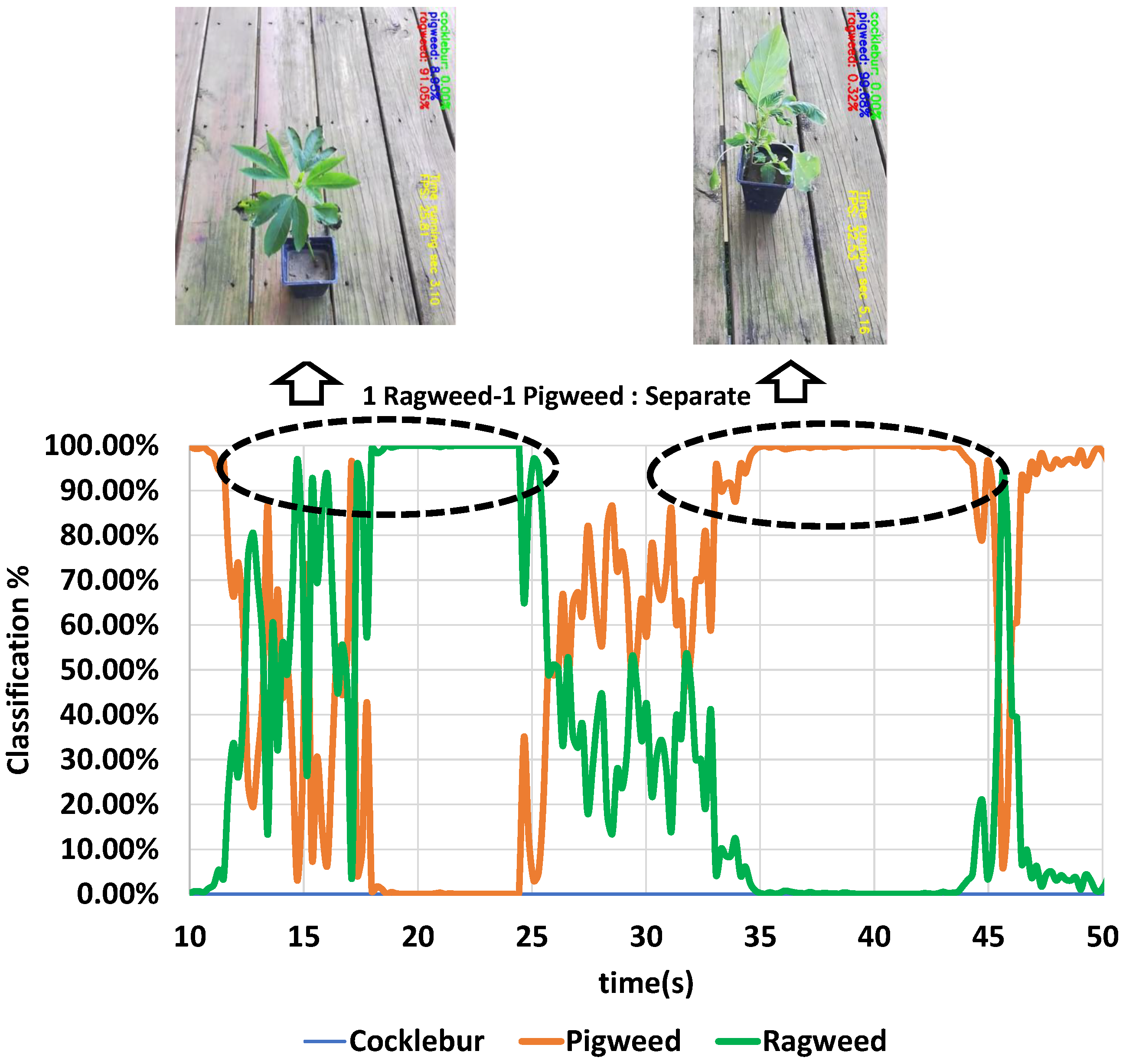

3.2.2. One Ragweed and One Pigweed in the Video, Placed Separately (Figure 10)

3.2.3. One Ragweed in the Frame, Noise Artificially Introduced (Figure 11)

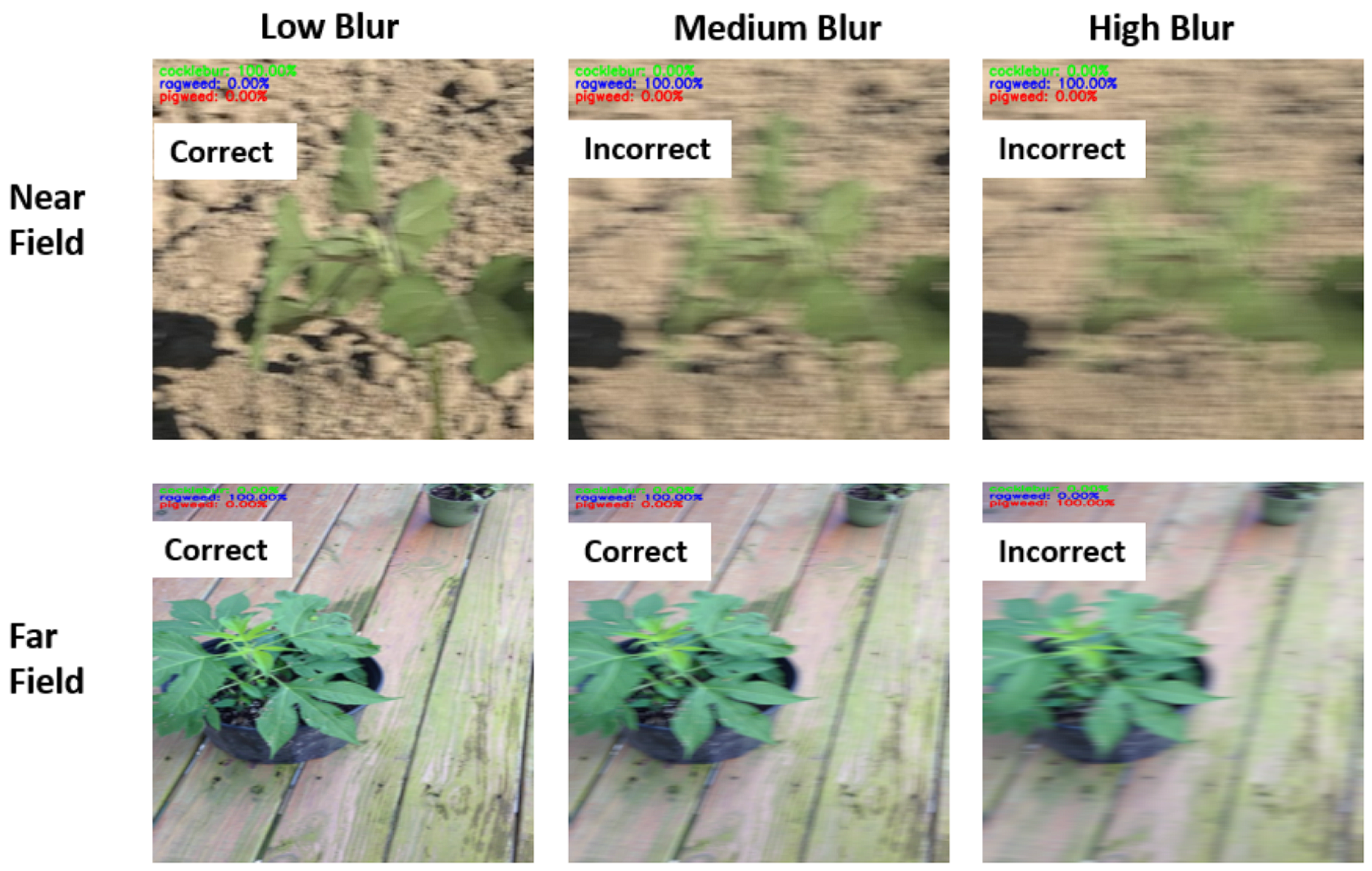

3.2.4. One Ragweed in the Frame, Blurriness Artificially Introduced (Figure 12)

3.3. Sensor Fusion-Based Weed Detection Under Different Operating Conditions

3.3.1. Performance Analysis of the Proposed Method

- Sensor 1:

- Sensor 2:

- Sensor 3:

- Sensor 4:

3.3.2. When a Faulty Sensor Is Present

3.3.3. When Weed Is Partially Occluded

4. Discussion

- Retraining and Fine-Tuning of VGG16 CNN Architecture: A VGG16 model, pre-trained on ImageNet, was fine-tuned using a domain-specific weed dataset to improve classification performance for target weed species. Transfer learning allowed the model to learn robust, discriminative features from a relatively small dataset, improving generalizability in diverse field conditions.

- Real-Time Deployment on Autonomous Agricultural Platform (AgBOT): The retrained model was deployed on AgBOT with a monocular RGB camera, and its performance was evaluated under real operating conditions, including varying illumination, occlusion, and motion-induced noise. The system achieves F1 scores of 0.96, 0.94, and 0.95 for Cocklebur, Pigweed, and Ragweed, respectively, and an overall classification accuracy of 94%. The model's average inference time is 0.266 s on a CPU and 0.032 s on a GPU (roughly 3.8 and 31 frames per second, respectively), indicating that the approach is suitable for real-time applications.

- Design and Evaluation of Multi-Sensor Fusion Algorithm: While the single-sensor setup shows promising results, its performance degrades under specific scenarios such as partial occlusion or sensor malfunction, leading to misclassification and unreliable control signals for downstream actuators (e.g., herbicide sprayers). To mitigate this, the study introduces a decision-level fusion strategy incorporating time domain and space domain reasoning.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gilbert, N. Case studies: A hard look at GM crops. Nat. News 2013, 497, 24–26.

- Khan, N.; Medlock, G.; Graves, S.; Anwar, S. GPS Guided Autonomous Navigation of a Small Agricultural Robot with Automated Fertilizing System; Technical Report, SAE Technical Paper; SAE International: Warrendale, PA, USA, 2018.

- Fung, M.L.; Chen, M.Z.; Chen, Y.H. Sensor fusion: A review of methods and applications. In Proceedings of the 2017 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 3853–3860.

- Heinrich, S. Flash memory in the emerging age of autonomy. In Proceedings of the Flash Memory Summit, Santa Clara, CA, USA, 8–10 August 2017; pp. 1–10.

- Singh, V.; Singh, D. Development of an Approach for Early Weed Detection with UAV Imagery. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 4879–4882.

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer Neural Network for Weed and Crop Classification of High Resolution UAV Images. Remote Sens. 2022, 14, 592.

- Moazzam, S.I.; Khan, U.S.; Nawaz, T.; Qureshi, W.S. Crop and Weeds Classification in Aerial Imagery of Sesame Crop Fields Using a Patch-Based Deep Learning Model-Ensembling Method. In Proceedings of the 2022 2nd International Conference on Digital Futures and Transformative Technologies (ICoDT2), Rawalpindi, Pakistan, 24–26 May 2022; pp. 1–7.

- Ahmad, M.; Adnan, A.; Chehri, A. A Real-Time IoT and Image Processing based Weeds Classification System for Selective Herbicide. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference: (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022; pp. 1–5.

- Ota, K.; Louhi Kasahara, J.Y.; Yamashita, A.; Asama, H. Weed and Crop Detection by Combining Crop Row Detection and K-means Clustering in Weed Infested Agricultural Fields. In Proceedings of the 2022 IEEE/SICE International Symposium on System Integration (SII), Narvik, Norway, 9–12 January 2022; pp. 985–990.

- Chen, Y.; Wu, Z.; Zhao, B.; Fan, C.; Shi, S. Weed and corn seedling detection in field based on multi feature fusion and support vector machine. Sensors 2020, 21, 212.

- Rodríguez-Garlito, E.C.; Paz-Gallardo, A.; Plaza, A. Automatic Detection of Aquatic Weeds: A Case Study in the Guadiana River, Spain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8567–8585.

- Garibaldi-Márquez, F.; Flores, G.; Mercado-Ravell, D.A.; Ramírez-Pedraza, A.; Valentín-Coronado, L.M. Weed Classification from Natural Corn Field-Multi-Plant Images Based on Shallow and Deep Learning. Sensors 2022, 22, 3021.

- Potena, C.; Nardi, D.; Pretto, A. Fast and accurate crop and weed identification with summarized train sets for precision agriculture. In Proceedings of the International Conference on Intelligent Autonomous Systems, Shanghai, China, 3–7 July 2016; pp. 105–121.

- Sharpe, S.M.; Schumann, A.W.; Yu, J.; Boyd, N.S. Vegetation detection and discrimination within vegetable plasticulture row-middles using a convolutional neural network. Precis. Agric. 2020, 21, 264–277.

- Reddy, L.U.K.; Rohitharun, S.; Sujana, S. Weed Detection Using AlexNet Architecture In The Farming Fields. In Proceedings of the 2022 3rd International Conference for Emerging Technology (INCET), Belgaum, India, 27–29 May 2022; pp. 1–6.

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306.

- Sunil, G.; Zhang, Y.; Koparan, C.; Ahmed, M.R.; Howatt, K.; Sun, X. Weed and crop species classification using computer vision and deep learning technologies in greenhouse conditions. J. Agric. Food Res. 2022, 9, 100325.

- Yu, J.; Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Deep learning for image-based weed detection in turfgrass. Eur. J. Agron. 2019, 104, 78–84.

- Al-Badri, A.H.; Ismail, N.A.; Al-Dulaimi, K.; Rehman, A.; Abunadi, I.; Bahaj, S.A. Hybrid CNN Model for Classification of Rumex Obtusifolius in Grassland. IEEE Access 2022, 10, 90940–90957.

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A multiclass weed species image dataset for deep learning. Sci. Rep. 2019, 9, 2058.

- Du, Y.; Zhang, G.; Tsang, D.; Jawed, M.K. Deep-CNN based Robotic Multi-Class Under-Canopy Weed Control in Precision Farming. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2273–2279.

- Dodge, S.; Karam, L. Understanding how image quality affects deep neural networks. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; pp. 1–6.

- Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

- Rodrigo, M.; Oturan, N.; Oturan, M.A. Electrochemically assisted remediation of pesticides in soils and water: A review. Chem. Rev. 2014, 114, 8720–8745.

- Marshall, E. Field-scale estimates of grass weed populations in arable land. Weed Res. 1988, 28, 191–198.

- Tian, L.; Reid, J.F.; Hummel, J.W. Development of a precision sprayer for site-specific weed management. Trans. ASAE 1999, 42, 893–900.

- Medlin, C.R.; Shaw, D.R. Economic comparison of broadcast and site-specific herbicide applications in nontransgenic and glyphosate-tolerant Glycine max. Weed Sci. 2000, 48, 653–661.

- Åstrand, B.; Baerveldt, A.J. An agricultural mobile robot with vision-based perception for mechanical weed control. Auton. Robot. 2002, 13, 21–35.

- Choi, Y.K.; Lee, S.J. Development of advanced sonar sensor model using data reliability and map evaluation method for grid map building. J. Mech. Sci. Technol. 2015, 29, 485–491.

- Shalal, N.; Low, T.; McCarthy, C.; Hancock, N. A preliminary evaluation of vision and laser sensing for tree trunk detection and orchard mapping. In Proceedings of the Australasian Conference on Robotics and Automation (ACRA 2013), Sydney, Australia, 2–4 December 2013; pp. 1–10.

- Garcia-Alegre, M.C.; Martin, D.; Guinea, D.M.; Guinea, D. Real-time fusion of visual images and laser data images for safe navigation in outdoor environments. In Sensor Fusion-Foundation and Applications; IntechOpen: London, UK, 2011.

- Farooq, A.; Jia, X.; Hu, J.; Zhou, J. Transferable Convolutional Neural Network for Weed Mapping With Multisensor Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4404816.

- Gai, J.; Tang, L.; Steward, B. Plant localization and discrimination using 2D+ 3D computer vision for robotic intra-row weed control. In Proceedings of the 2016 ASABE Annual International Meeting, Orlando, FL, USA, 17–20 July 2016; p. 1.

- Rosebrock, A. Deep Learning for Computer Vision with Python: Starter Bundle; PyImageSearch: Philadelphia, PA, USA, 2017.

- Chollet, F. Deep Learning mit Python und Keras: Das Praxis-Handbuch vom Entwickler der Keras-Bibliothek; MITP-Verlags GmbH & Co. KG: Frechen, Germany, 2018.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Ramcharan, A.; Baranowski, K.; McCloskey, P.; Ahmed, B.; Legg, J.; Hughes, D. Using Transfer Learning for Image-Based Cassava Disease Detection. arXiv 2017, arXiv:1707.03717.

- Khan, M.N.; Anwar, S. Paradox Elimination in Dempster–Shafer Combination Rule with Novel Entropy Function: Application in Decision-Level Multi-Sensor Fusion. Sensors 2019, 19, 4810.

- Jiang, W.; Luo, Y.; Qin, X.Y.; Zhan, J. An improved method to rank generalized fuzzy numbers with different left heights and right heights. J. Intell. Fuzzy Syst. 2015, 28, 2343–2355.

- Dempster, A.P. Upper and lower probabilities induced by a multivalued mapping. In Classic Works of the Dempster-Shafer Theory of Belief Functions; Springer: Berlin/Heidelberg, Germany, 2008; pp. 57–72.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976; Volume 42.

- Bossé, E.; Roy, J. Fusion of identity declarations from dissimilar sources using the Dempster-Shafer theory. Opt. Eng. 1997, 36, 648–657.

- Dasarathy, B.V. Sensor Fusion: Architectures, Algorithms, and Applications VI. In Proceedings of the Sensor Fusion: Architectures, Algorithms, and Applications VI, Orlando, FL, USA, 3–5 April 2002; Volume 4731.

- Jiang, W.; Wei, B.; Liu, X.; Li, X.; Zheng, H. Intuitionistic fuzzy power aggregation operator based on entropy and its application in decision making. Int. J. Intell. Syst. 2018, 33, 49–67.

- Ma, J.; Liu, W.; Miller, P.; Zhou, H. An evidential fusion approach for gender profiling. Inf. Sci. 2016, 333, 10–20.

- Zhang, L.; Ding, L.; Wu, X.; Skibniewski, M.J. An improved Dempster–Shafer approach to construction safety risk perception. Knowl.-Based Syst. 2017, 132, 30–46.

- Yuan, K.; Xiao, F.; Fei, L.; Kang, B.; Deng, Y. Modeling sensor reliability in fault diagnosis based on evidence theory. Sensors 2016, 16, 113.

- Sabahi, F. A novel generalized belief structure comprising unprecisiated uncertainty applied to aphasia diagnosis. J. Biomed. Inform. 2016, 62, 66–77.

- Deng, Y. Deng Entropy: A Generalized Shannon Entropy to Measure Uncertainty. 2015. Available online: http://vixra.org/pdf/1502.0222v1.pdf (accessed on 16 July 2025).

- Ye, F.; Chen, J.; Tian, Y. A robust DS combination method based on evidence correction and conflict redistribution. J. Sens. 2018, 2018, 6526018.

- Khan, M.N.; Anwar, S. Time-Domain Data Fusion Using Weighted Evidence and Dempster–Shafer Combination Rule: Application in Object Classification. Sensors 2019, 19, 5187.

- Tammina, S. Transfer learning using vgg-16 with deep convolutional neural network for classifying images. Int. J. Sci. Res. Publ. 2019, 9, 143–150.

- Bali, S.; Tyagi, S. Evaluation of transfer learning techniques for classifying small surgical dataset. In Proceedings of the 2020 10th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 29–31 January 2020; pp. 744–750.

- Kaur, T.; Gandhi, T.K. Automated brain image classification based on VGG-16 and transfer learning. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2019; pp. 94–98.

- Murphy, R.R. Dempster-Shafer theory for sensor fusion in autonomous mobile robots. IEEE Trans. Robot. Autom. 1998, 14, 197–206.

- Deng, Y.; Shi, W.; Zhu, Z.; Liu, Q. Combining belief functions based on distance of evidence. Decis. Support Syst. 2004, 38, 489–493.

- Han, D.Q.; Deng, Y.; Han, C.Z.; Hou, Z.Q. Weighted evidence combination based on distance of evidence and uncertainty measure. J. Infrared Millim. Waves 2011, 30, 396–400.

- Wang, J.; Xiao, F.; Deng, X.; Fei, L.; Deng, Y. Weighted evidence combination based on distance of evidence and entropy function. Int. J. Distrib. Sens. Netw. 2016, 12, 3218784.

- Jiang, W.; Wei, B.; Xie, C.; Zhou, D. An evidential sensor fusion method in fault diagnosis. Adv. Mech. Eng. 2016, 8, 1687814016641820.

| Study | Sensor/Method | Approach/Architecture | Crop/Weed Type | Performance Metrics |
|---|---|---|---|---|
| Ahmad et al. (2022) [8] | Image-based | Circular Mean Intensity (CMI) | Broad-Leaf, Narrow-Leaf, No Weed | Classification accuracy: 96% |
| Ota et al. (2022) [9] | RGB camera | K-means Clustering | Cabbage + Weeds | F1 score for crop detection: 0.919; F1 score for weed detection: 0.999 |
| Chen et al. (2020) [10] | RGB camera | SVM + Multi-feature fusion | Corn + Weeds | Detection accuracy: 97.5% |
| Rodríguez-Garlito et al. (2022) [11] | Satellite (Sentinel-2A) | CNN vs. RF vs. K-means | Aquatic Plants | CNN best performance |
| Garibaldi-Márquez et al. (2022) [12] | RGB | CNN vs. SVM | Zea mays, NLW, BLW | CNN accuracy: 97% |
| Potena et al. (2016) [13] | RGB + NIR images | Dual CNNs (segmentation + classification) | Crops and Weeds | mAP: 98.7% |
| Sharpe et al. (2020) [14] | RGB camera | CNN | 3 Vegetation Classes | F1 score: 95% |
| Reddy et al. (2022) [15] | RGB camera | AlexNet, ResNet, DenseNet | Weedcrop, Deepweed, Plantseedlings | AlexNet performed best |
| Espejo-Garcia et al. (2020) [16] | RGB camera | Fine-tuned CNN + SVM/XGBoost | Tomato, Cotton, Nightshade, Velvetleaf | F1 score: 99.29% |
| Sunil et al. (2022) [17] | RGB camera | VGG16 vs. SVM | Corn + 10 Total Classes | F1 score: 93–97.5%, Corn: 100% |
| Yu et al. (2019) [18] | RGB camera | VGGNet vs. GoogLeNet | Weeds in Bermuda Grass | F1 score > 95% |
| Al-Badri et al. (2022) [19] | RGB camera | VGG-16, ResNet50, Inception-v3 | Rumex Obtusifolius in Grassland | F1 score: 95.9% |
| Olsen et al. (2019) [20] | RGB camera | InceptionV3, ResNet50 | 8 Weed Classes | Accuracy > 95% |
| Du et al. (2021) [21] | RGB camera | MobileNetV2 on Jetson Nano | General Weeds | High accuracy, low latency |
| Dodge and Karam (2016) [22] | RGB camera with simulated noise | CNN noise robustness study | General | Accuracy decline under blur/noise |
| Farooq et al. (2021) [32] | Dual-camera | Partial transfer learning (CNN) | Weed classes | F1 score: 77.4% |
| Gai et al. (2016) [33] | Kinect v2 (depth + RGB) | AdaBoost vs. Neural Net | Lettuce, Broccoli | Test error ∼6% (AdaBoost) |

| Dataset | Cocklebur | Pigweed | Ragweed | Total |
|---|---|---|---|---|
| Train image set (used for training the classifiers) | 544 | 505 | 552 | 1601 |
| Validation image set (used for tuning hyperparameters) | 65 | 62 | 69 | 196 |
| Test image set (used for classification report) | 65 | 62 | 69 | 196 |
| Total images | 674 | 629 | 690 | 1993 |

| True label \ Predicted label | Cocklebur | Ragweed | Pigweed |
|---|---|---|---|
| Cocklebur | 65 | 0 | 0 |
| Ragweed | 4 | 55 | 3 |
| Pigweed | 0 | 4 | 65 |
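Summary metrics can be recomputed directly from the confusion matrix. The NumPy sketch below uses illustrative variable names. It takes rows as true labels and columns as predicted labels, in the order listed in the table, and computes overall accuracy together with per-class precision, recall, and F1. The resulting accuracy, 185/196 ≈ 0.944, agrees with the 94% test accuracy reported for Model-3.

```python
import numpy as np

# Confusion matrix from the table: rows = true label, columns = predicted label,
# both in the order listed there.
cm = np.array([[65, 0, 0],
               [4, 55, 3],
               [0, 4, 65]])

accuracy = np.trace(cm) / cm.sum()
recall = np.diag(cm) / cm.sum(axis=1)     # per true class (row)
precision = np.diag(cm) / cm.sum(axis=0)  # per predicted class (column)
f1 = 2 * precision * recall / (precision + recall)

print(round(float(accuracy), 3))  # 0.944
print(np.round(precision, 3), np.round(recall, 3), np.round(f1, 3))
```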

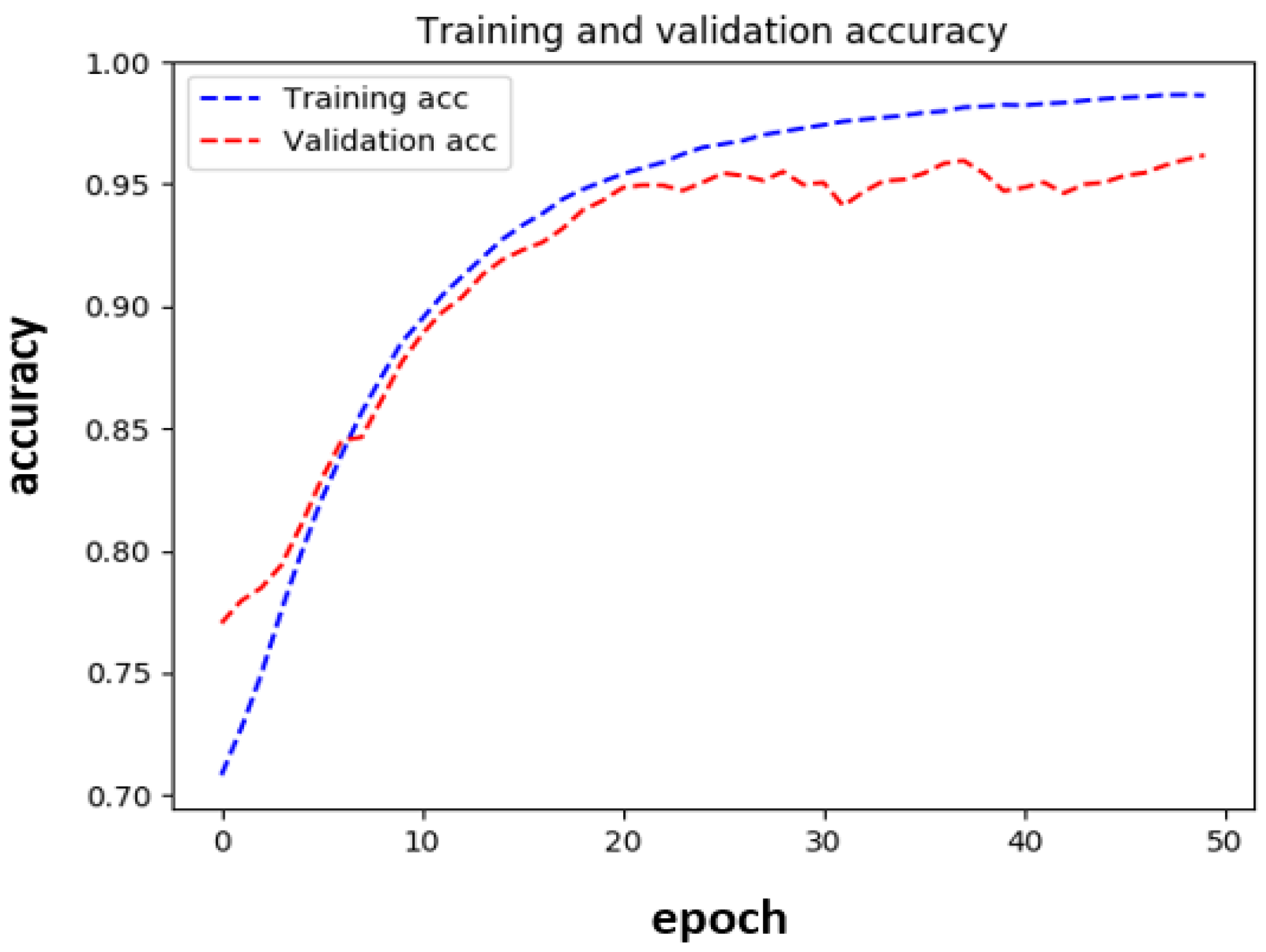

| Metric | Model-1 (C) | Model-1 (P) | Model-1 (R) | Model-2 (C) | Model-2 (P) | Model-2 (R) | Model-3 (C) | Model-3 (P) | Model-3 (R) |
|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.79 | 0.74 | 0.89 | 0.96 | 0.92 | 0.79 | 0.94 | 0.94 | 0.96 |
| Recall | 1 | 0.68 | 0.74 | 1 | 0.7 | 0.94 | 1 | 0.89 | 0.94 |
| F1 score | 0.88 | 0.71 | 0.81 | 0.98 | 0.79 | 0.86 | 0.96 | 0.94 | 0.95 |

C = Cocklebur, P = Pigweed, R = Ragweed.

| | Model-1 | Model-2 | Model-3 |
|---|---|---|---|
| Training accuracy | 0.85 | 0.97 | 0.99 |
| Validation accuracy | 0.8 | 0.93 | 0.97 |
| Testing accuracy | 0.81 | 0.88 | 0.94 |
| Image size | 150 × 150 × 3 | 299 × 299 × 3 | 150 × 150 × 3 |
| Inference time | 0.064 s (CPU) | 3.68 s (CPU) | 0.266 s (CPU), 0.032 s (GPU) |

| Methods | | | |
|---|---|---|---|
| Dempster [42] | m(A) = 0, m(B) = 0.8969, m(C) = 0.1031 | m(A) = 0, m(B) = 0.8969, m(C) = 0.1031 | m(A) = 0, m(B) = 0.8969, m(C) = 0.1031 |
| Murphy [57] | m(A) = 0.0964, m(B) = 0.8119, m(C) = 0.0917, m(AC) = 0 | m(A) = 0.4619, m(B) = 0.4497, m(C) = 0.0794, m(AC) = 0.0090 | m(A) = 0.8362, m(B) = 0.1147, m(C) = 0.0410, m(AC) = 0.0081 |
| Deng [58] | m(A) = 0.0964, m(B) = 0.8119, m(C) = 0.0917, m(AC) = 0 | m(A) = 0.4974, m(B) = 0.4054, m(C) = 0.0888, m(AC) = 0.0084 | m(A) = 0.9089, m(B) = 0.0444, m(C) = 0.0379, m(AC) = 0.0089 |
| Han [59] | m(A) = 0.0964, m(B) = 0.8119, m(C) = 0.0917, m(AC) = 0 | m(A) = 0.5188, m(B) = 0.3802, m(C) = 0.0926, m(AC) = 0.0084 | m(A) = 0.9246, m(B) = 0.0300, m(C) = 0.0362, m(AC) = 0.0092 |
| Wang [60] recalculated | m(A) = 0.0964, m(B) = 0.8119, m(C) = 0.0917, m(AC) = 0 | m(A) = 0.6495, m(B) = 0.2367, m(C) = 0.1065, m(AC) = 0.0079 | m(A) = 0.9577, m(B) = 0.0129, m(C) = 0.0200, m(AC) = 0.0094 |
| Jiang [61] | m(A) = 0.0964, m(B) = 0.8119, m(C) = 0.0917, m(AC) = 0 | m(A) = 0.7614, m(B) = 0.1295, m(C) = 0.0961, m(AC) = 0.0130 | m(A) = 0.9379, m(B) = 0.0173, m(C) = 0.0361, m(AC) = 0.0087 |
| Proposed | m(A) = 0.0057, m(B) = 0.9691, m(C) = 0.0252, m(AC) = 0 | m(A) = 0.7207, m(B) = 0.1541, m(C) = 0.1178, m(AC) = 0.0070 | m(A) = 0.9638, m(B) = 0.0019, m(C) = 0.0224, m(AC) = 0.0117 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khan, M.N.; Rahi, A.; Hasan, M.A.; Anwar, S. Decision-Level Multi-Sensor Fusion to Improve Limitations of Single-Camera-Based CNN Classification in Precision Farming: Application in Weed Detection. Computation 2025, 13, 174. https://doi.org/10.3390/computation13070174
