Short-Term Load Forecasting in Distribution Substation Using Autoencoder and Radial Basis Function Neural Networks: A Case Study in India

Abstract

1. Introduction

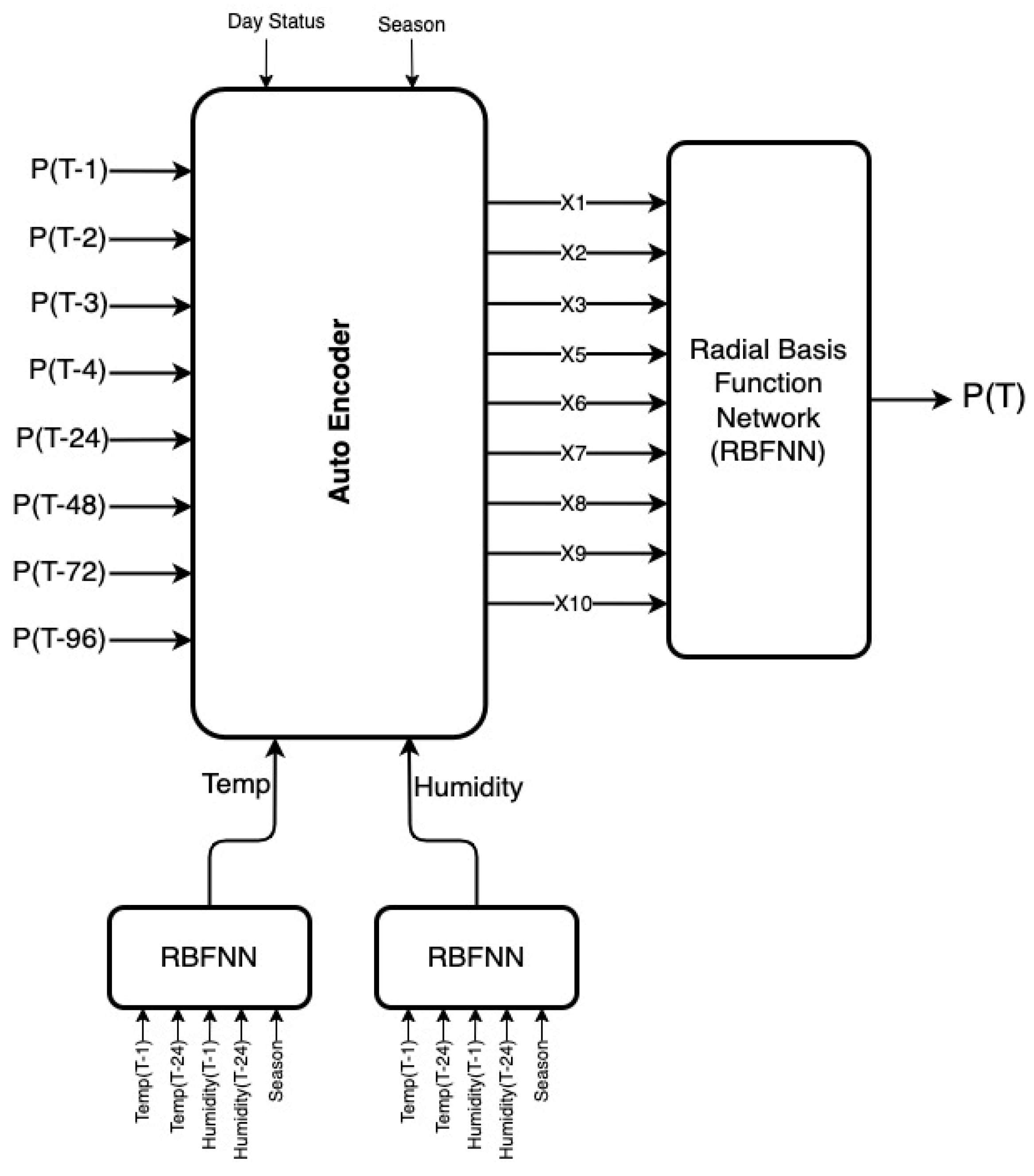

- Electric power is forecasted one hour ahead by considering the status of the day, i.e., weekend or weekday. Because the 33/11 kV substation is located in Godishala, Warangal, where offices and colleges are closed on Sunday, only Sunday is treated as a weekend day.

- Electric power is forecasted one hour ahead by considering the season. Three seasons are distinguished, following a common Indian classification: Winter (November–February), Summer (March–June), and Rainy (July–October).

- Electric power is forecasted one hour ahead by considering the weather condition in terms of temperature and humidity.

- Electric power is forecasted one hour ahead by considering the load in the previous four hours, i.e., P(T-1), P(T-2), P(T-3), and P(T-4), and the load at the same hour on each of the previous four days, i.e., P(T-24), P(T-48), P(T-72), and P(T-96).
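The input construction described in the list above can be sketched as follows. This is a minimal illustration, not the authors' code: the integer season codes (0 = Winter, 1 = Summer, 2 = Rainy), the use of temperature and humidity at the target hour, the start timestamp, and the helper names `build_features`, `season_code`, and `is_weekend` are all assumptions. With one year of hourly data (365 × 24 = 8760 hours), discarding the first 96 hours needed for the longest lag leaves 8760 − 96 = 8664 rows of 12 features, which matches the 8664 × 12 dataset size reported here; that the dataset is exactly one year of gap-free hourly samples is only an inference from this arithmetic.

```python
from datetime import datetime, timedelta

import numpy as np

# Lags from the text: previous four hours and the same hour on the previous four days.
LAGS = (1, 2, 3, 4, 24, 48, 72, 96)


def season_code(month: int) -> int:
    """Hypothetical coding: 0 = Winter (Nov-Feb), 1 = Summer (Mar-Jun), 2 = Rainy (Jul-Oct)."""
    if month in (11, 12, 1, 2):
        return 0
    if month in (3, 4, 5, 6):
        return 1
    return 2


def is_weekend(ts: datetime) -> int:
    """Only Sunday counts as weekend; datetime.weekday() returns 6 for Sunday."""
    return int(ts.weekday() == 6)


def build_features(load, temp, humidity, start: datetime):
    """Return (X, y): X has 12 columns
    [P(T-1..T-4), P(T-24..T-96), DAY, SEASON, TEMP, HUMIDITY] and y is P(T).
    Assumes gap-free hourly samples beginning at `start`."""
    load = np.asarray(load, dtype=float)
    max_lag = max(LAGS)
    rows, targets = [], []
    for t in range(max_lag, len(load)):
        ts = start + timedelta(hours=t)
        lagged = [load[t - k] for k in LAGS]
        # Assumption: weather at the target hour T (measured or separately forecast).
        rows.append(lagged + [is_weekend(ts), season_code(ts.month), temp[t], humidity[t]])
        targets.append(load[t])
    return np.array(rows), np.array(targets)


if __name__ == "__main__":
    hours = 365 * 24
    rng = np.random.default_rng(0)
    X, y = build_features(rng.uniform(400, 6300, hours), rng.uniform(50, 108, hours),
                          rng.uniform(14, 100, hours), datetime(2021, 1, 1))
    print(X.shape)  # (8664, 12)
```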

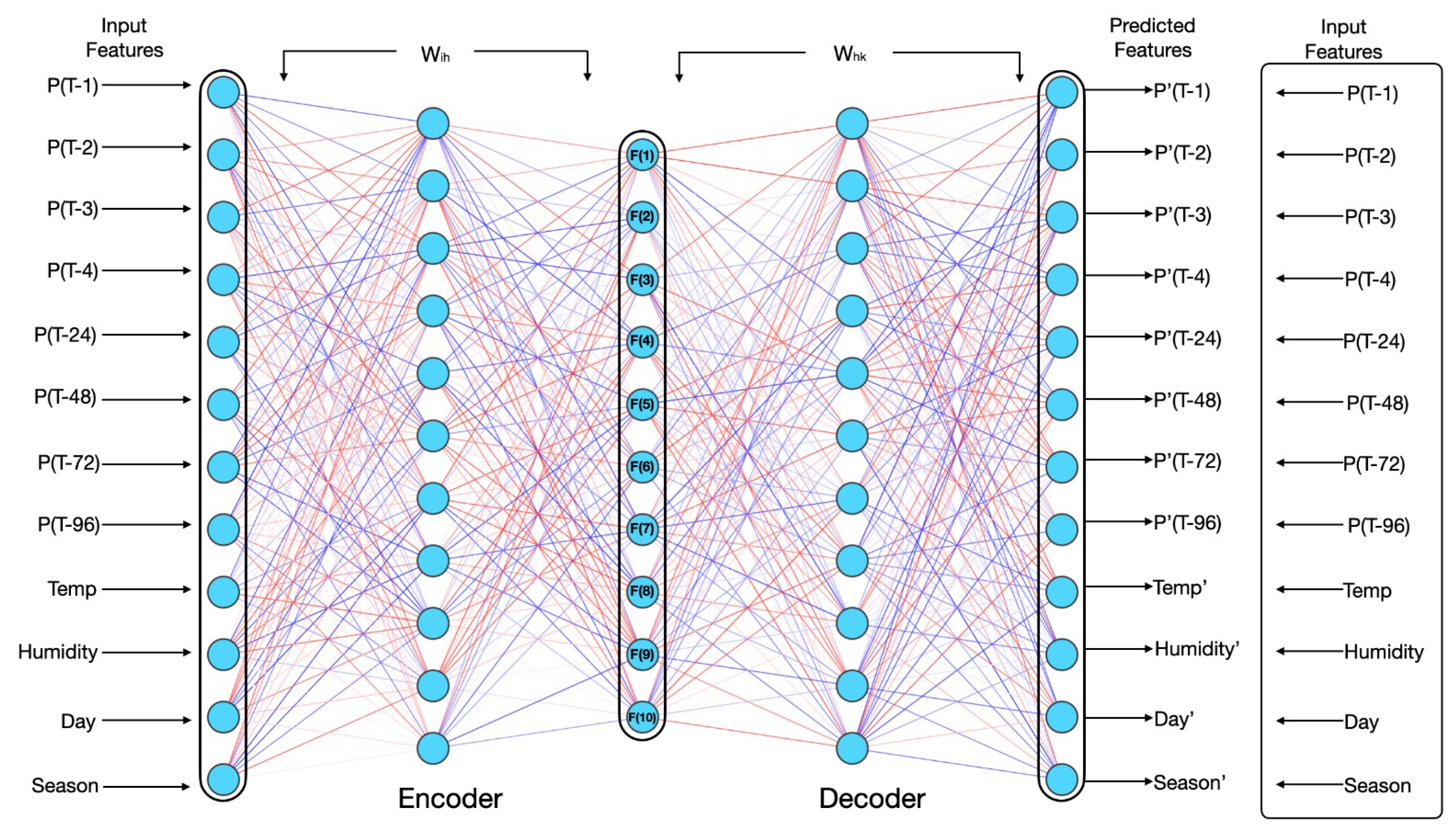

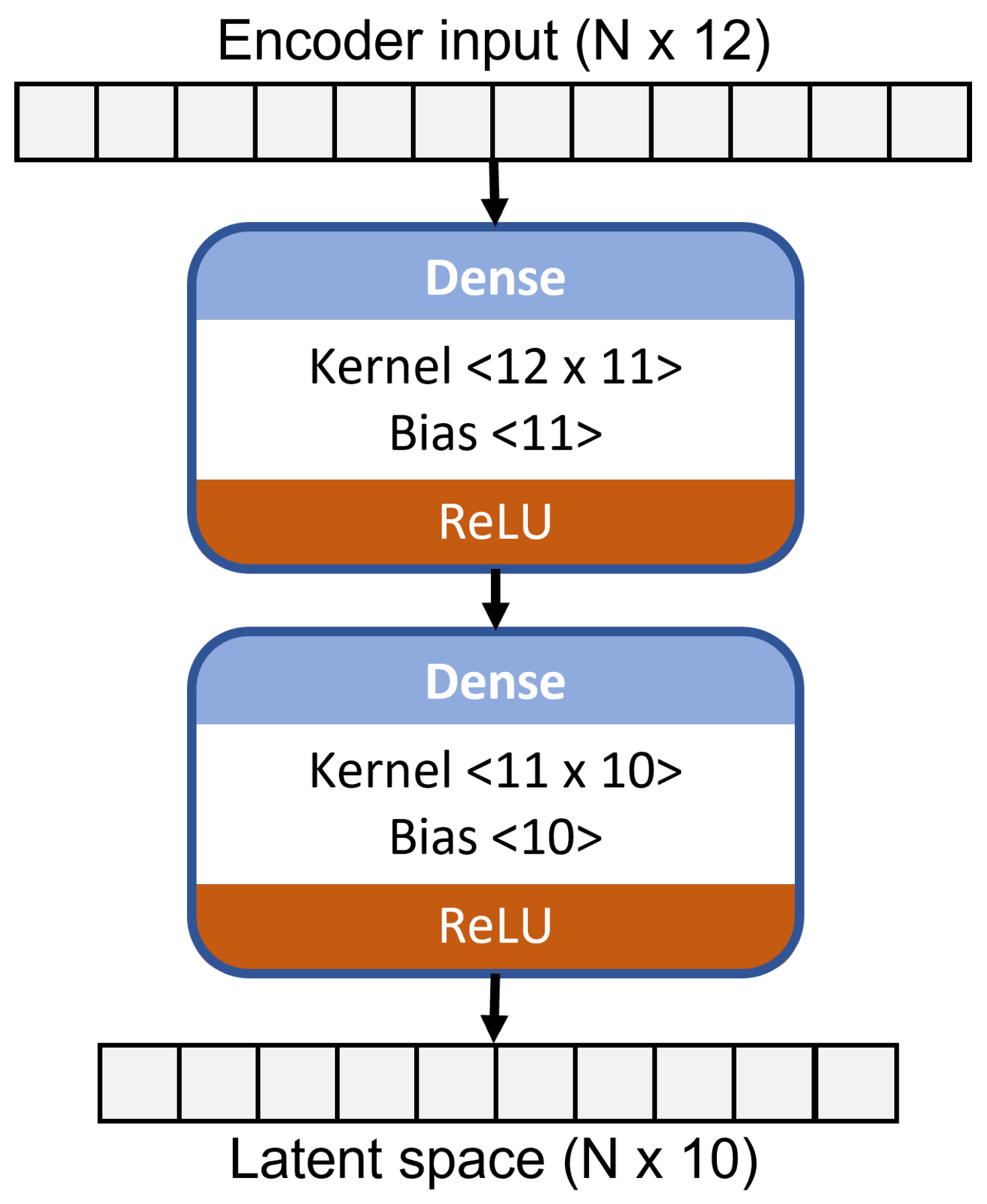

- A new optimal autoencoder architecture is developed to reduce the dimensions of the dataset from 8664 × 12 to 8664 × 9.

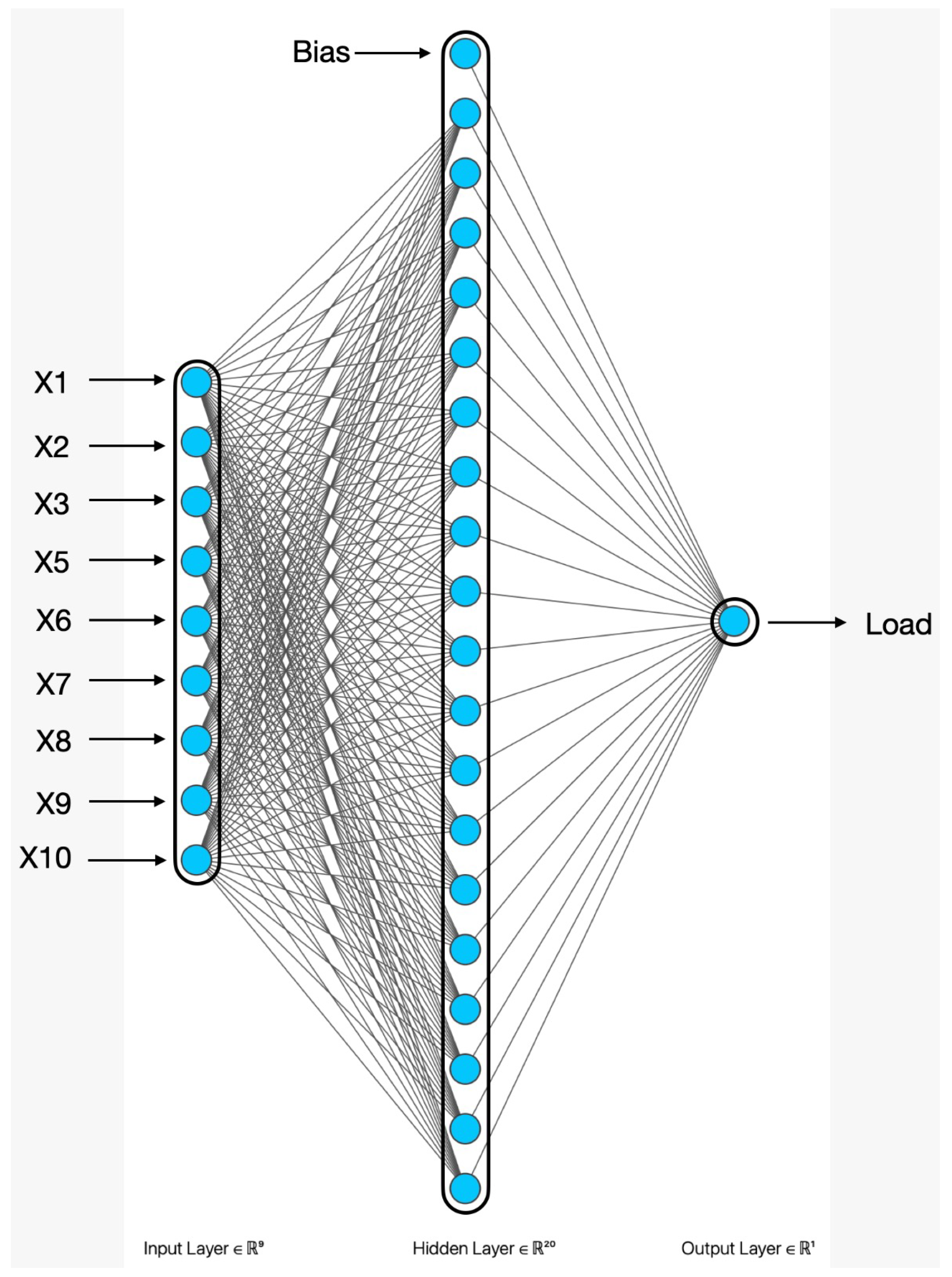

- A new optimal RBFNN architecture is developed to forecast the load using the compressed data.

2. Materials and Methods

2.1. Active Power Load Dataset

2.2. Dimensionality Reduction Using Autoencoder (AE)
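A minimal autoencoder for this compression step is sketched below in NumPy. It is an illustrative sketch under stated assumptions, not the architecture reported in the paper: a single latent layer with ReLU activation, a linear output layer, min-max scaled inputs, and plain mini-batch gradient descent on the mean squared reconstruction error are all choices made here. The ReLU choice is only loosely motivated by the compressed-feature statistics in this document (every feature has a minimum of 0.000, and X4 is identically zero), which would be consistent with a rectified latent unit that never activates; dropping such a constant column would turn a 10-unit latent space into the 9 features used downstream. The names `TinyAutoencoder`, `minmax_scale`, and `drop_constant_columns` are hypothetical.

```python
import numpy as np


def minmax_scale(X):
    """Scale every column to [0, 1] (assumed preprocessing)."""
    lo, hi = X.min(axis=0), X.max(axis=0)
    return (X - lo) / np.where(hi > lo, hi - lo, 1.0)


def drop_constant_columns(Z, tol=1e-12):
    """Remove latent features that never vary (e.g. a ReLU unit that is always 0)."""
    return Z[:, Z.std(axis=0) > tol]


class TinyAutoencoder:
    """Encoder z = relu(x W1 + b1); linear decoder x_hat = z W2 + b2."""

    def __init__(self, n_in, n_latent, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_latent))
        self.b1 = np.zeros(n_latent)
        self.W2 = rng.normal(0.0, np.sqrt(1.0 / n_latent), (n_latent, n_in))
        self.b2 = np.zeros(n_in)

    def encode(self, X):
        return np.maximum(0.0, X @ self.W1 + self.b1)

    def reconstruct(self, X):
        return self.encode(X) @ self.W2 + self.b2

    def mse(self, X):
        return float(np.mean((self.reconstruct(X) - X) ** 2))

    def fit(self, X, epochs=50, batch_size=32, lr=0.1, seed=0):
        """Mini-batch gradient descent on the mean squared reconstruction error."""
        rng = np.random.default_rng(seed)
        for _ in range(epochs):
            order = rng.permutation(len(X))
            for start in range(0, len(X), batch_size):
                xb = X[order[start:start + batch_size]]
                pre = xb @ self.W1 + self.b1
                z = np.maximum(0.0, pre)
                x_hat = z @ self.W2 + self.b2
                g_out = 2.0 * (x_hat - xb) / xb.size      # dLoss/dx_hat
                g_pre = (g_out @ self.W2.T) * (pre > 0)   # back through ReLU, before W2 changes
                self.W2 -= lr * (z.T @ g_out)
                self.b2 -= lr * g_out.sum(axis=0)
                self.W1 -= lr * (xb.T @ g_pre)
                self.b1 -= lr * g_pre.sum(axis=0)
        return self


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = minmax_scale(rng.random((1000, 3)) @ rng.random((3, 12)))  # synthetic stand-in data
    ae = TinyAutoencoder(n_in=12, n_latent=10)
    before = ae.mse(X)
    ae.fit(X, epochs=20, batch_size=32)
    Z = drop_constant_columns(ae.encode(X))
    print(f"MSE {before:.4f} -> {ae.mse(X):.4f}; compressed shape {Z.shape}")
```

The `batch_size` argument corresponds to the BS values (8, 16, 32, 64) compared in the reconstruction-loss tables. In practice a framework such as Keras or PyTorch with an adaptive optimizer would be the more usual choice; the NumPy version only makes the encode/decode/backpropagate loop explicit.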

2.3. Radial Basis Function Neural Network (RBFNN)

- Electricity load patterns are highly nonlinear and depend on multiple factors (e.g., time, temperature, holidays). RBFNN uses Gaussian radial basis functions that allow it to approximate complex functions more effectively than simple linear models.

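- Unlike deep learning models (e.g., LSTMs, CNNs), RBFNNs typically need fewer training epochs: their activation functions are localized, so each hidden unit responds only to inputs near its center, and once the centers are fixed (e.g., by clustering) only the linear output weights remain to be learned.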

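- Traditional RBFNNs operate directly on raw input features, which may contain noise or irrelevant information; compressing the inputs with an autoencoder before the RBFNN is intended to address this limitation.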
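The sketch below shows one standard way to build and train an RBFNN of this kind, in NumPy. The ingredients are assumptions rather than the paper's exact procedure: centroids from plain k-means (Lloyd's algorithm), Gaussian widths from a P-nearest-neighbour heuristic (each width is the root-mean-square distance to the P closest other centroids, which is one plausible reading of the "width factor (P)" in the result tables), and output weights solved in closed form by linear least squares instead of gradient-based training. The names `kmeans`, `p_nearest_widths`, and `RBFNN` are hypothetical.

```python
import numpy as np


def kmeans(X, k, iters=100, seed=0):
    """Lloyd's k-means; returns a (k, d) array of centroids."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(iters):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Keep a centroid in place if its cluster becomes empty.
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return centers


def p_nearest_widths(centers, p):
    """Width of each Gaussian: RMS distance to its p nearest other centroids (assumed heuristic)."""
    d = np.sqrt(((centers[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2))
    nearest = np.sort(d, axis=1)[:, 1:p + 1]  # column 0 is each centroid's distance to itself
    return np.maximum(np.sqrt((nearest ** 2).mean(axis=1)), 1e-12)


class RBFNN:
    """Gaussian hidden layer plus a linear output layer with bias."""

    def __init__(self, n_centers, p, seed=0):
        self.n_centers, self.p, self.seed = n_centers, p, seed

    def _hidden(self, X):
        d2 = ((X[:, None, :] - self.centers[None, :, :]) ** 2).sum(axis=2)
        phi = np.exp(-d2 / (2.0 * self.widths ** 2))
        return np.hstack([phi, np.ones((len(X), 1))])  # append a bias column

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        self.centers = kmeans(X, self.n_centers, seed=self.seed)
        self.widths = p_nearest_widths(self.centers, self.p)
        # Output weights by linear least squares (gradient descent is an alternative).
        self.w, *_ = np.linalg.lstsq(self._hidden(X), y, rcond=None)
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((500, 9))  # stand-in for the 9 compressed features
    y = X @ rng.random(9) + 0.1 * np.sin(6 * X[:, 0])
    model = RBFNN(n_centers=12, p=3).fit(X, y)
    print("training MSE:", float(np.mean((model.predict(X) - y) ** 2)))
```

Because only the output layer is learned once the centroids and widths are fixed, training reduces to a single least-squares solve, which illustrates why RBFNNs can be cheaper to train than deep recurrent or convolutional models.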

3. Results

- Dimensionality reduction using AE;

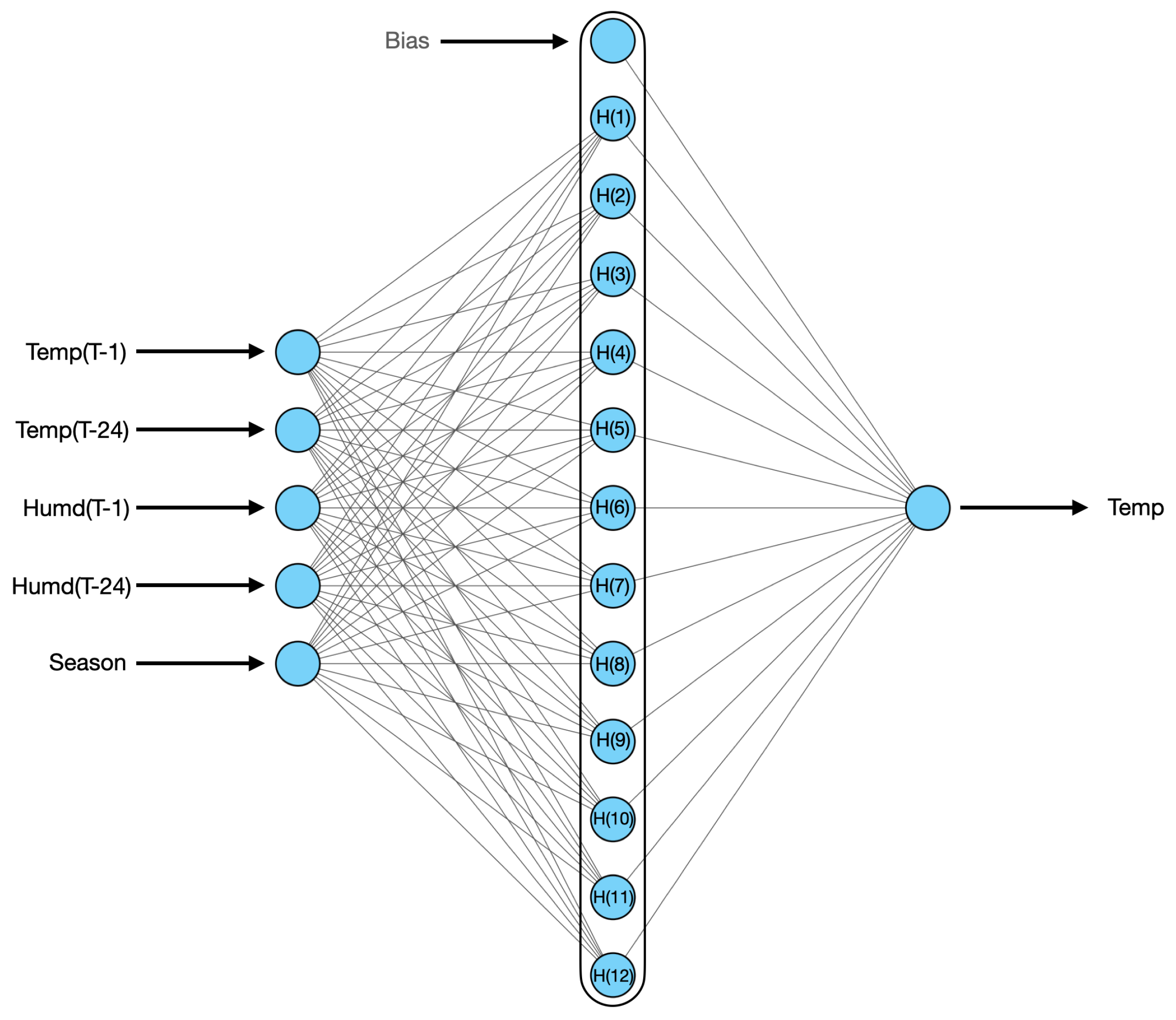

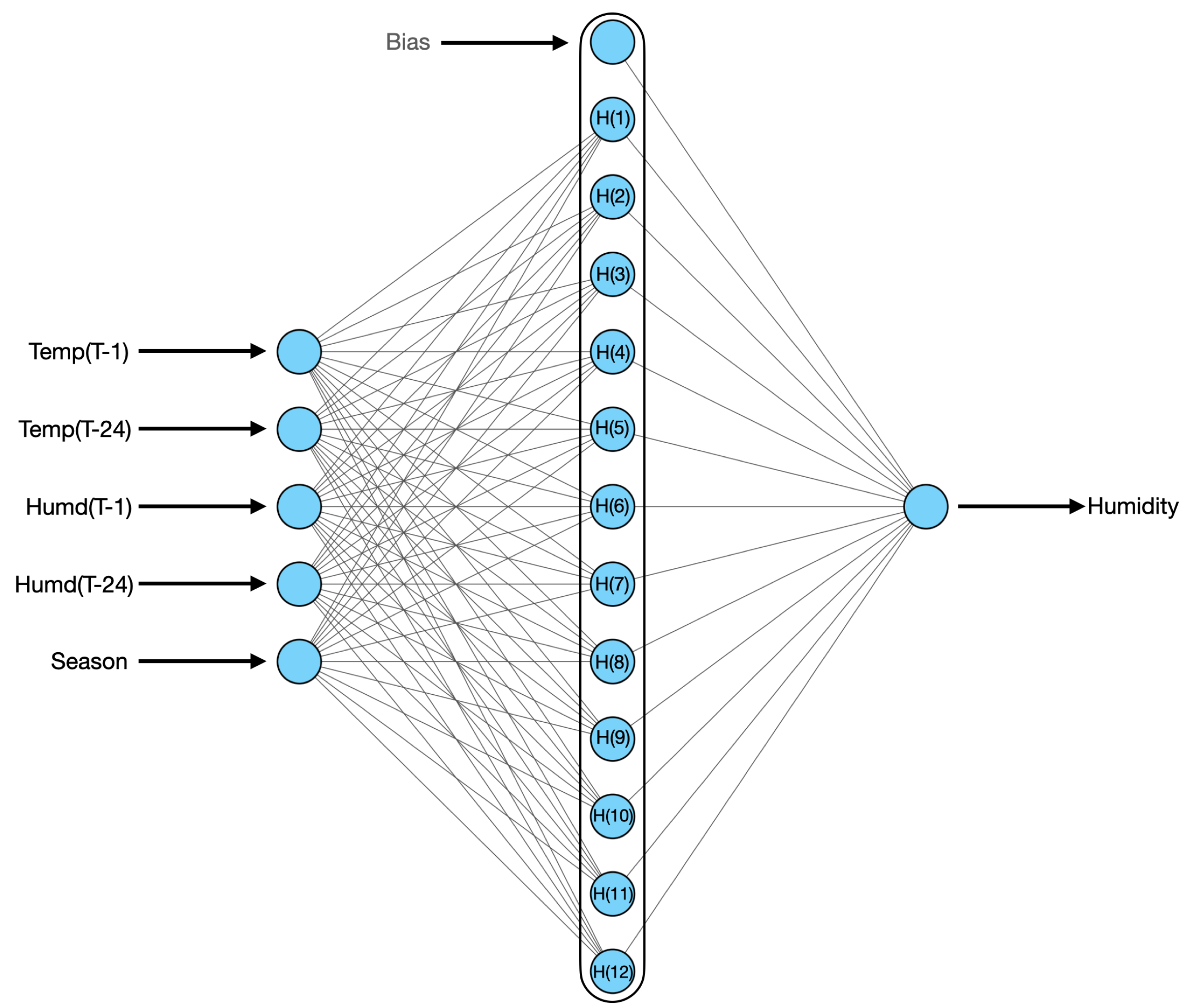

- Temperature forecasting using RBFNN;

- Humidity forecasting using RBFNN;

- Load forecasting using RBFNN;

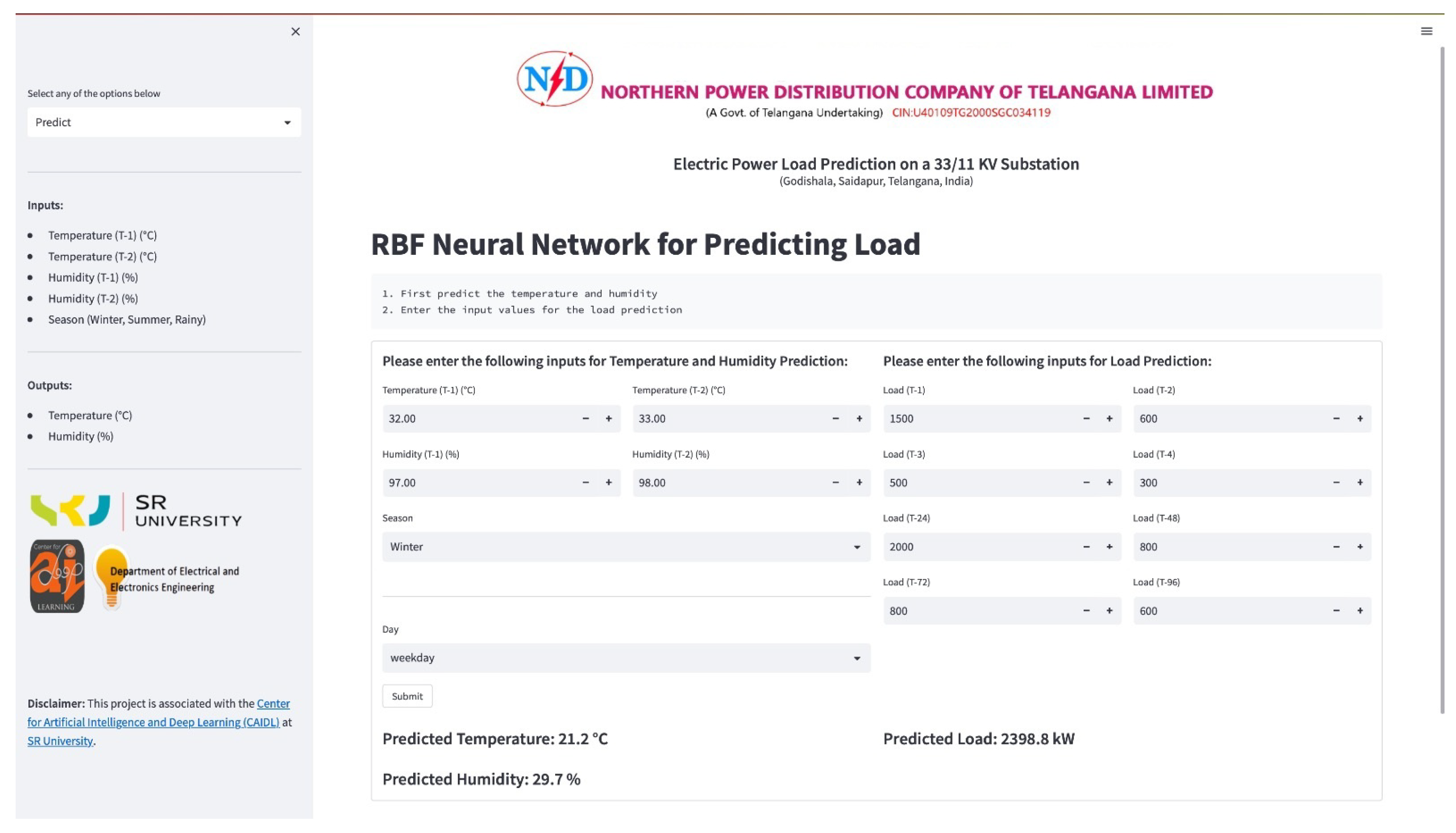

- Web application development.

3.1. Data Insights

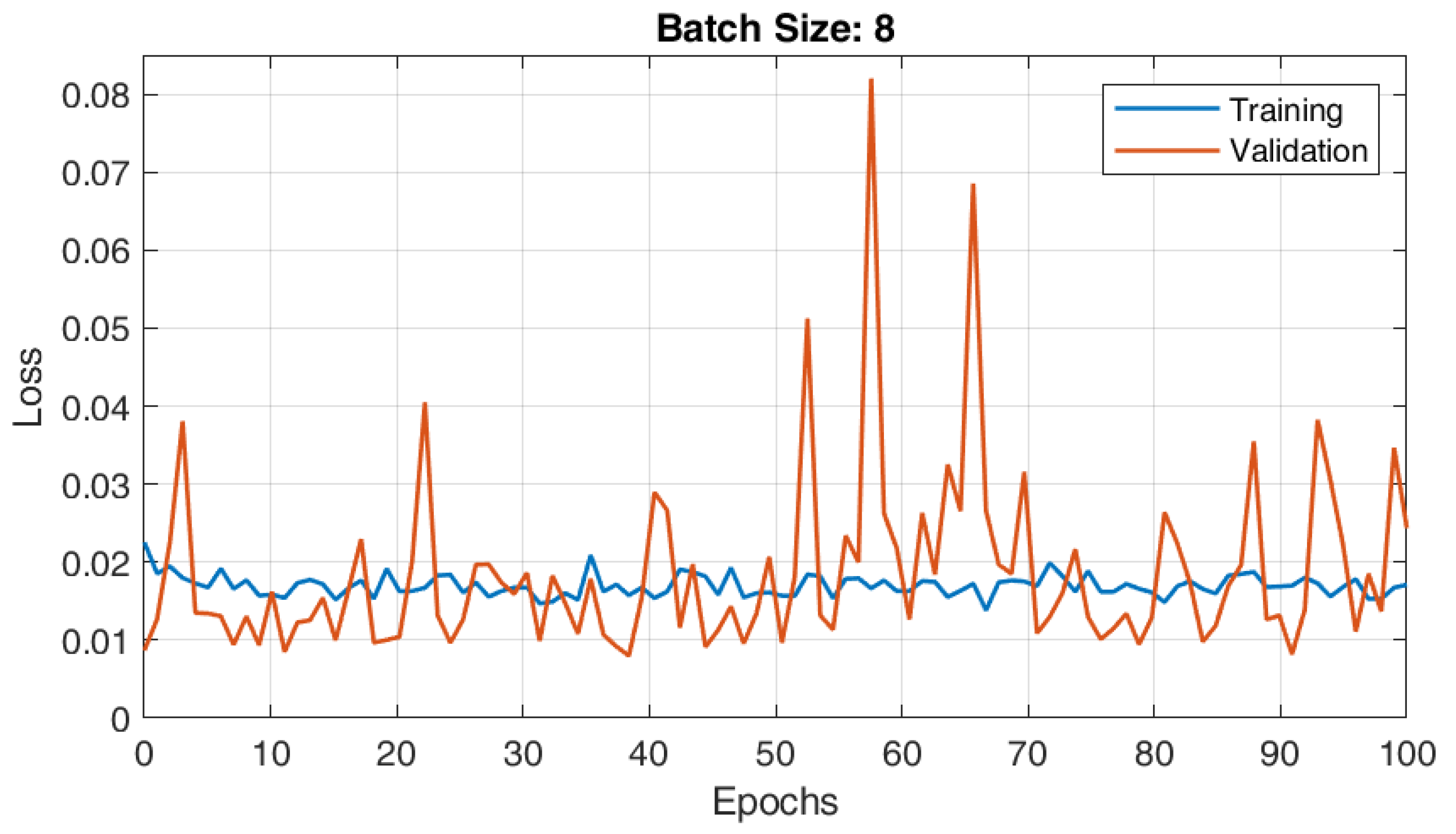

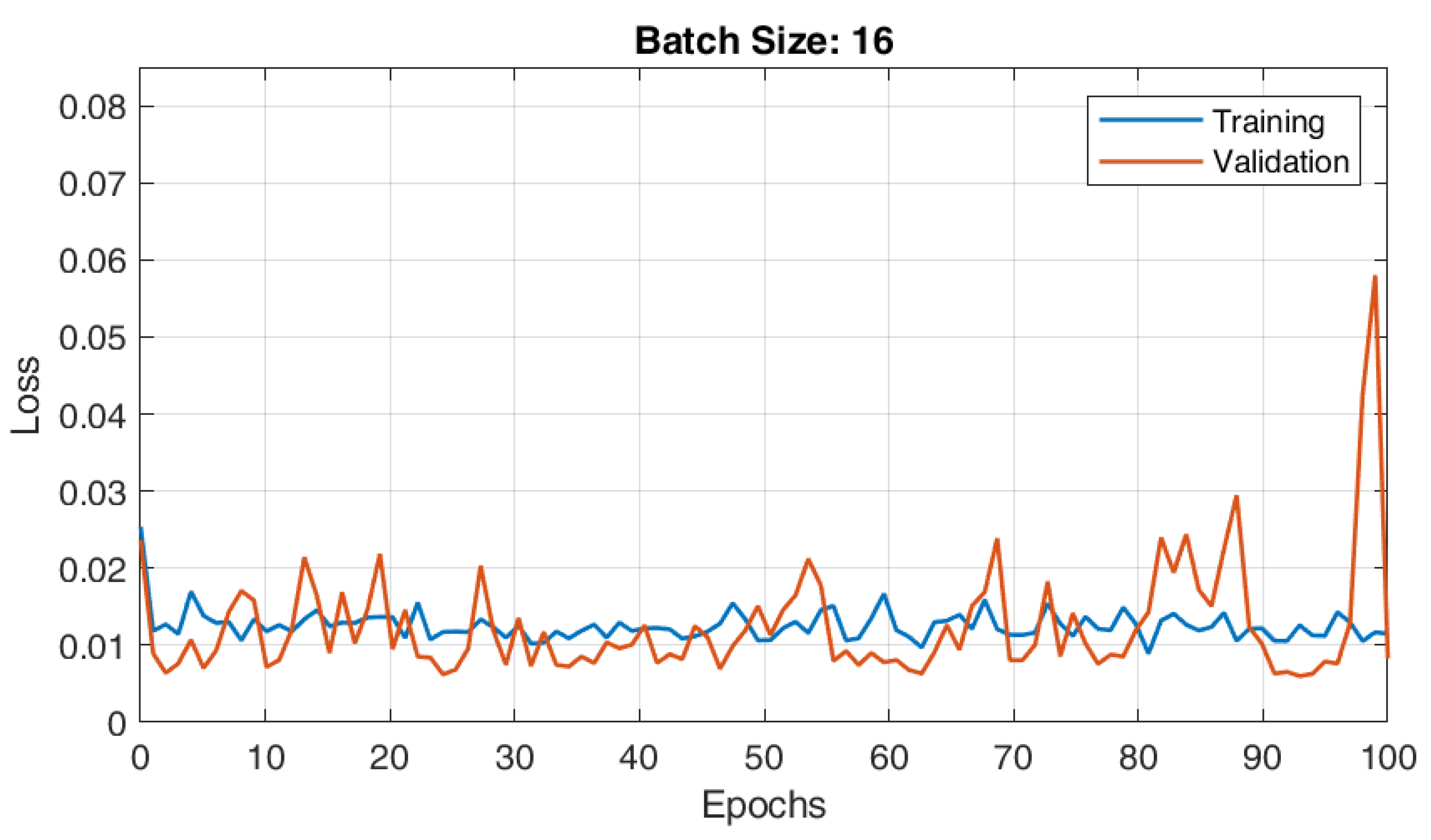

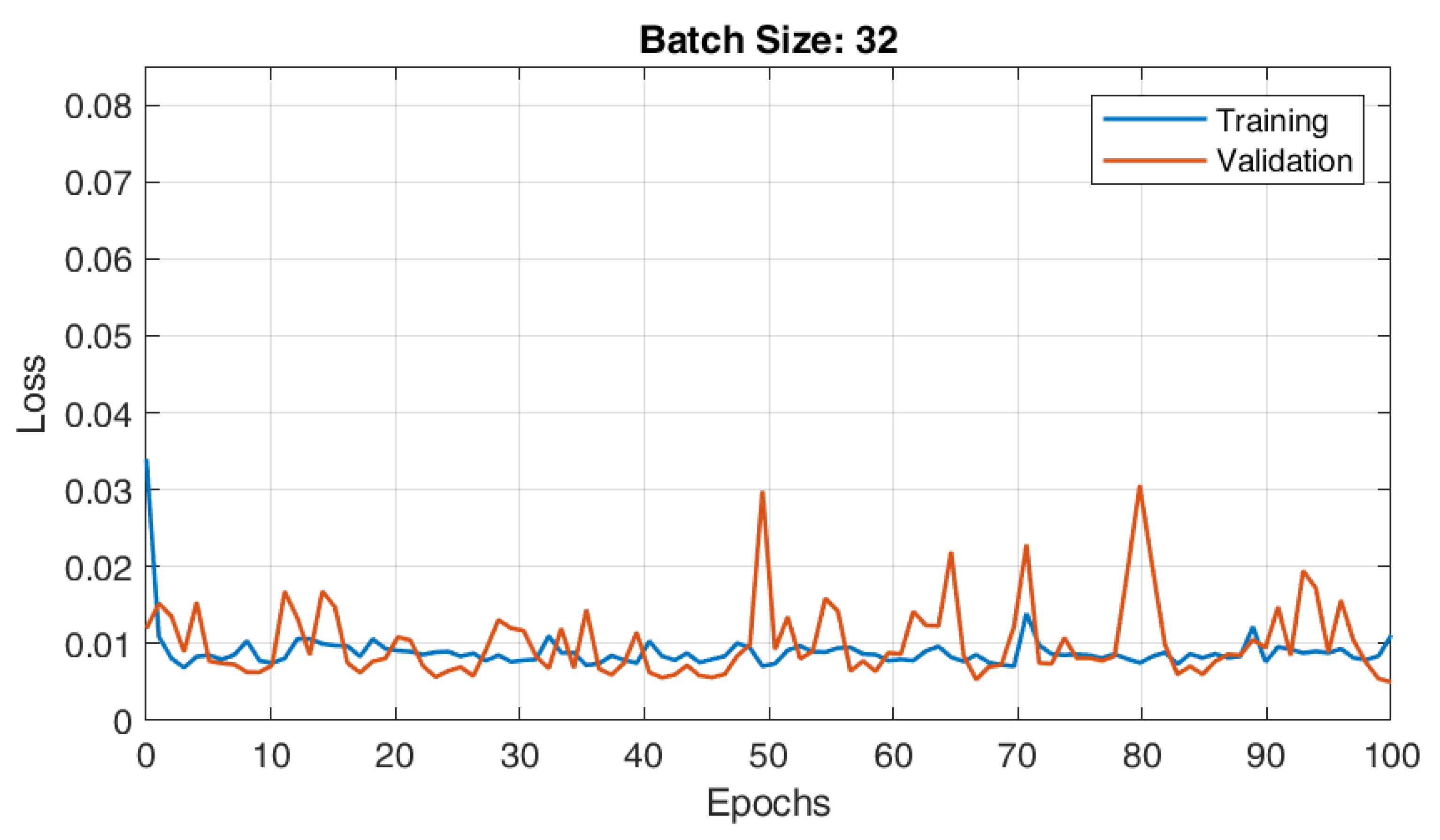

3.2. Optimal AE for Dimensionality Reduction

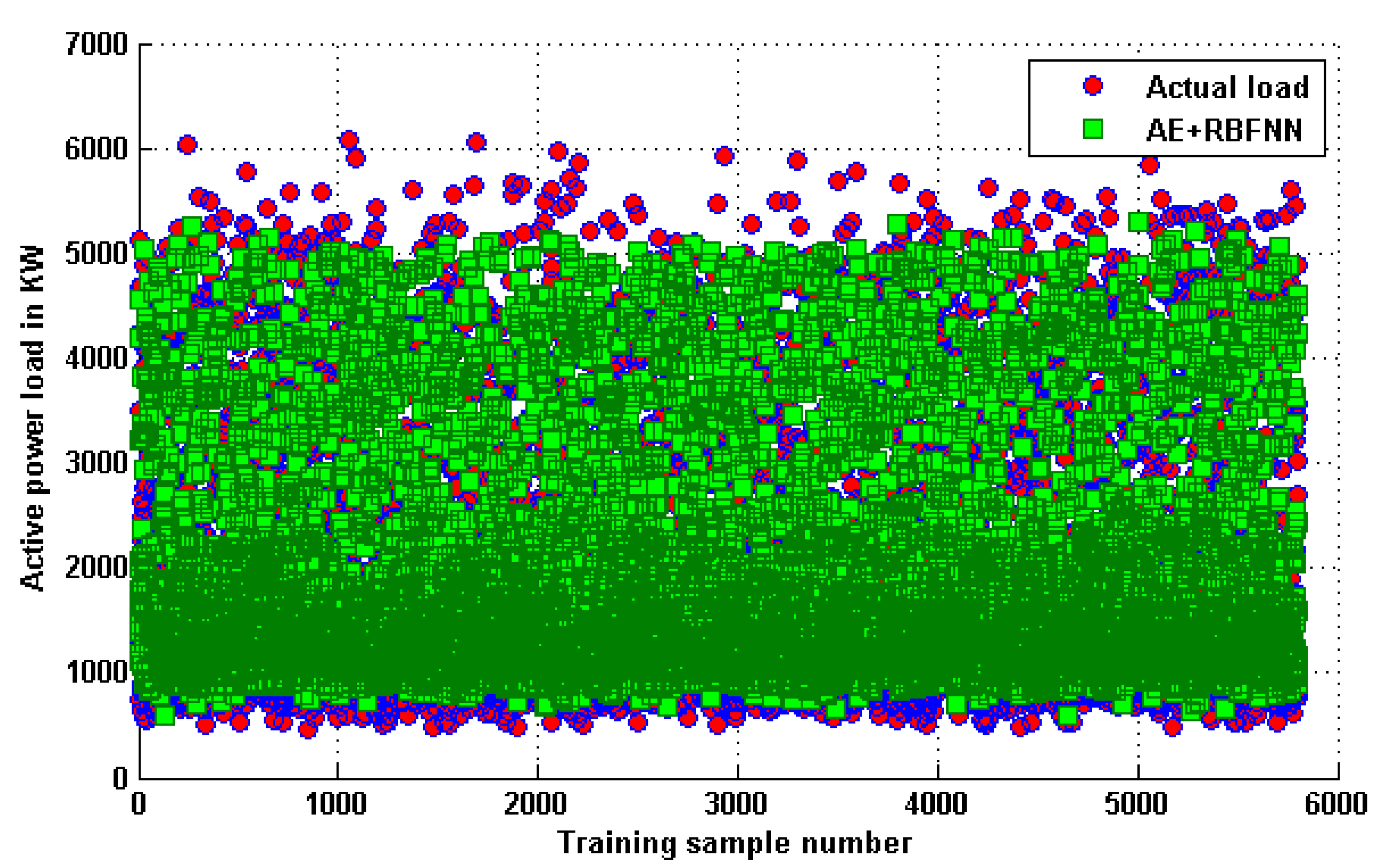

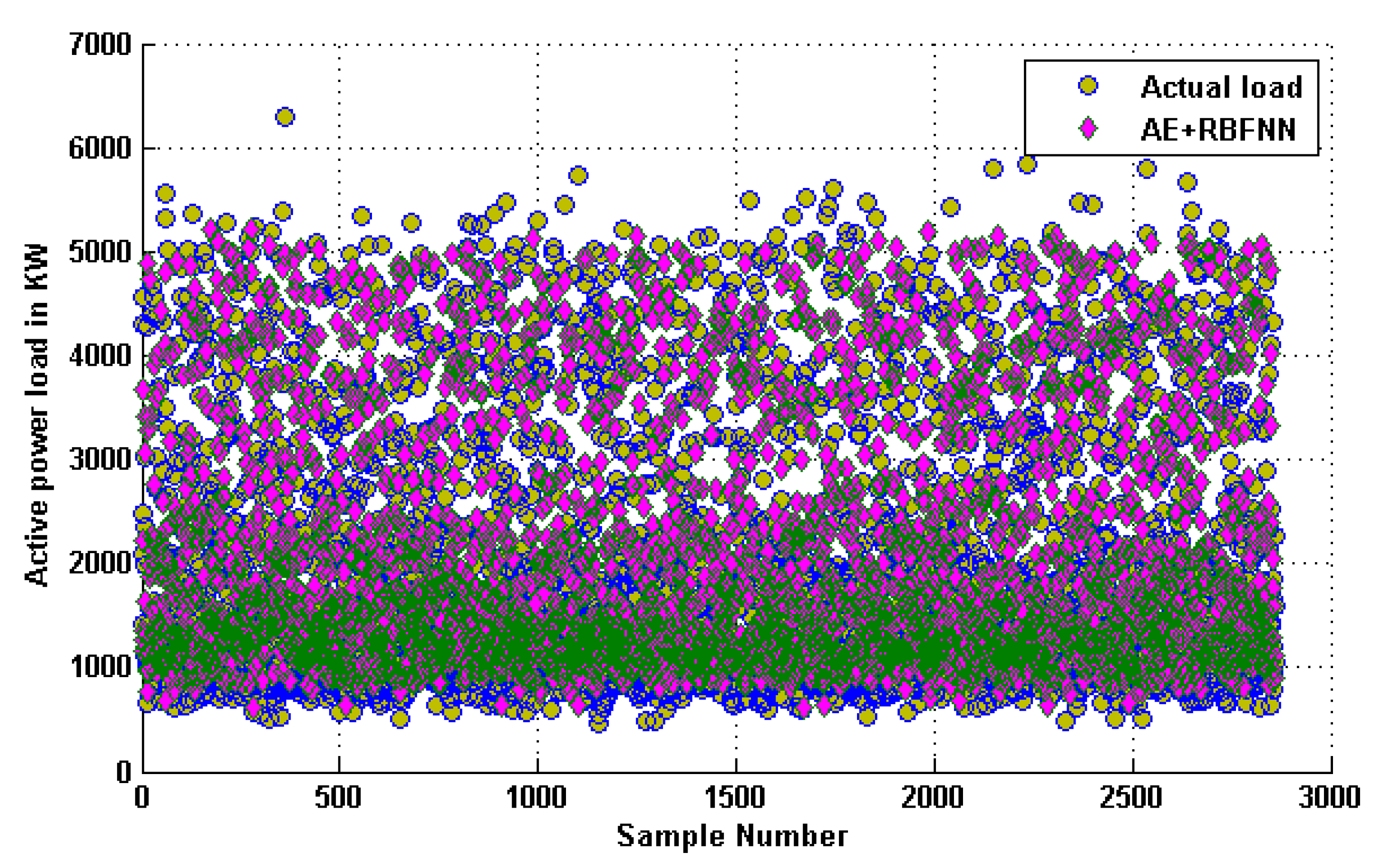

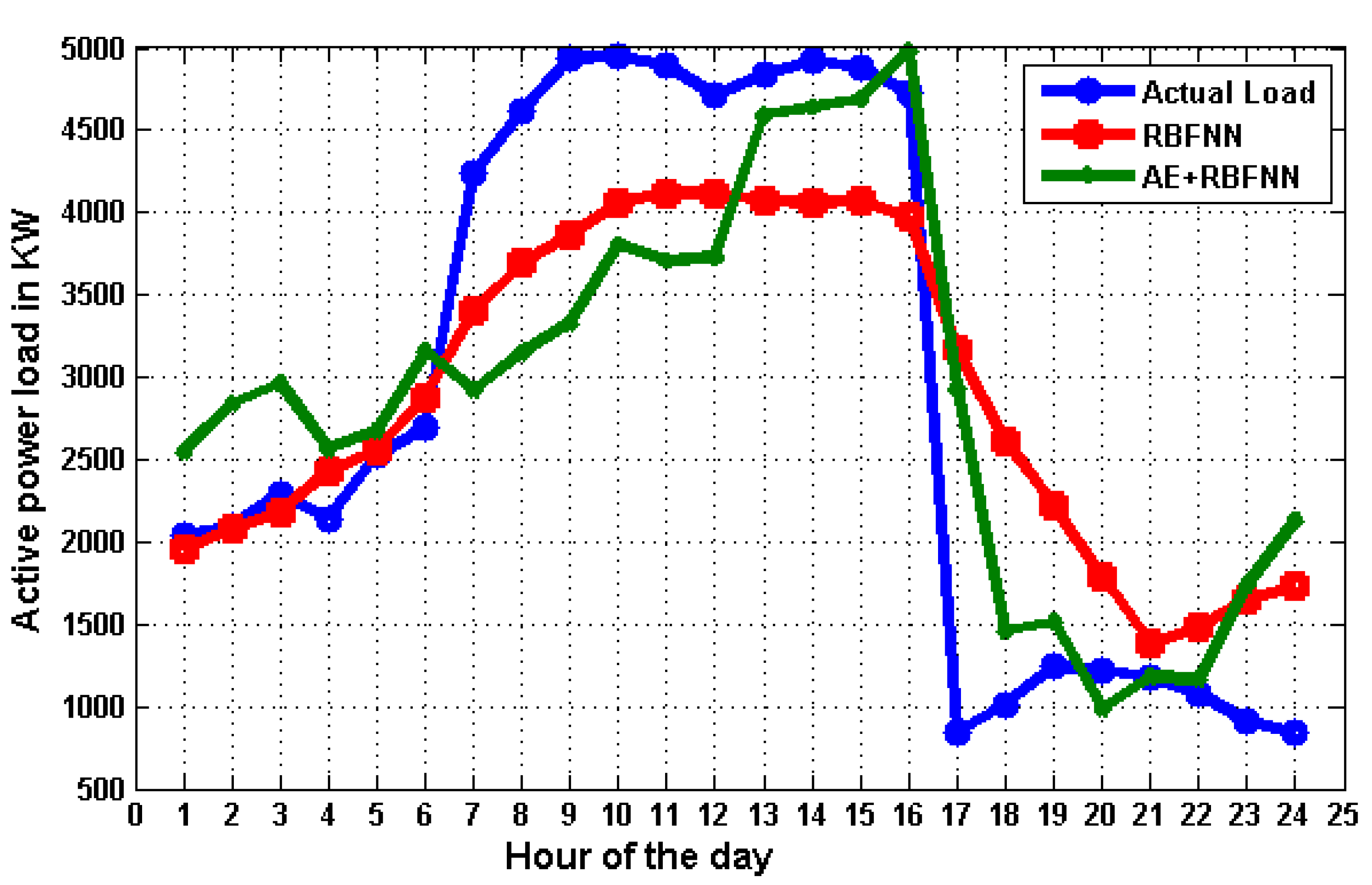

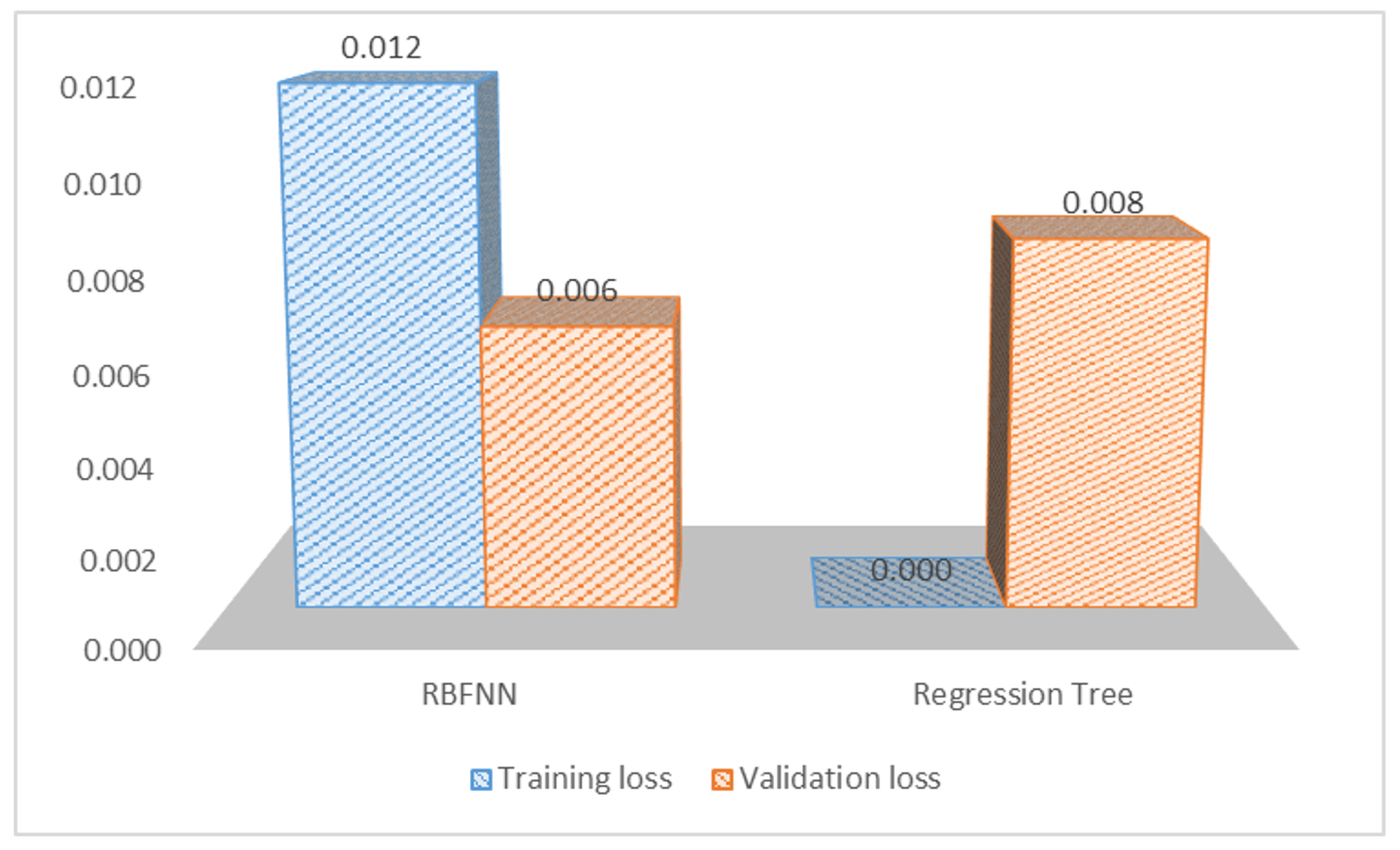

3.3. Optimal RBFNN Model for Forecasting Active Power Load

3.4. Comparative Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Almeshaiei, E.; Soltan, H. A methodology for electric power load forecasting. Alex. Eng. J. 2011, 50, 137–144.

2. Vanting, N.B.; Ma, Z.; Jørgensen, B.N. A scoping review of deep neural networks for electric load forecasting. Energy Inform. 2021, 4, 49.

3. Giglio, E.; Luzzani, G.; Terranova, V.; Trivigno, G.; Niccolai, A.; Grimaccia, F. An efficient artificial intelligence energy management system for urban building integrating photovoltaic and storage. IEEE Access 2023, 11, 18673–18688.

4. Mansoor, M.; Grimaccia, F.; Leva, S.; Mussetta, M. Comparison of echo state network and feed-forward neural networks in electrical load forecasting for demand response programs. Math. Comput. Simul. 2021, 184, 282–293.

5. Dagdougui, H.; Bagheri, F.; Le, H.; Dessaint, L. Neural network model for short-term and very-short-term load forecasting in district buildings. Energy Build. 2019, 203, 109408.

6. Su, P.; Tian, X.; Wang, Y.; Deng, S.; Zhao, J.; An, Q.; Wang, Y. Recent trends in load forecasting technology for the operation optimization of distributed energy system. Energies 2017, 10, 1303.

7. Neusser, L.; Canha, L.N. Real-time load forecasting for demand side management with only a few days of history available. In Proceedings of the IEEE 4th International Conference on Power Engineering, Energy and Electrical Drives, Istanbul, Turkey, 13–17 May 2013; pp. 911–914.

8. Jahromi, A.J.; Mohammadi, M.; Afrasiabi, S.; Afrasiabi, M.; Aghaei, J. Probability density function forecasting of residential electric vehicles charging profile. Appl. Energy 2022, 323, 119616.

9. Massaoudi, M.; Refaat, S.S.; Abu-Rub, H.; Chihi, I.; Oueslati, F.S. PLS-CNN-BiLSTM: An end-to-end algorithm-based Savitzky–Golay smoothing and evolution strategy for load forecasting. Energies 2020, 13, 5464.

10. Yin, L.; Xie, J. Multi-temporal-spatial-scale temporal convolution network for short-term load forecasting of power systems. Appl. Energy 2021, 283, 116328.

11. Syed, D.; Abu-Rub, H.; Ghrayeb, A.; Refaat, S.S.; Houchati, M.; Bouhali, O.; Bañales, S. Deep learning-based short-term load forecasting approach in smart grid with clustering and consumption pattern recognition. IEEE Access 2021, 9, 54992–55008.

12. Munkhammar, J.; van der Meer, D.; Widén, J. Very short term load forecasting of residential electricity consumption using the Markov-chain mixture distribution (MCM) model. Appl. Energy 2021, 282, 116180.

13. Guo, W.; Che, L.; Shahidehpour, M.; Wan, X. Machine-Learning based methods in short-term load forecasting. Electr. J. 2021, 34, 106884.

14. Timur, O.; Üstünel, H.Y. Short-Term Electric Load Forecasting for an Industrial Plant Using Machine Learning-Based Algorithms. Energies 2025, 18, 1144.

15. Eskandari, H.; Imani, M.; Moghaddam, M.P. Convolutional and recurrent neural network based model for short-term load forecasting. Electr. Power Syst. Res. 2021, 195, 107173.

16. Guo, W.; Liu, S.; Weng, L.; Liang, X. Power Grid Load Forecasting Using a CNN-LSTM Network Based on a Multi-Modal Attention Mechanism. Appl. Sci. 2025, 15, 2435.

17. Perçuku, A.; Minkovska, D.; Hinov, N. Enhancing Electricity Load Forecasting with Machine Learning and Deep Learning. Technologies 2025, 13, 59.

18. Cao, W.; Liu, H.; Zhang, X.; Zeng, Y.; Ling, X. Short-Term Residential Load Forecasting Based on the Fusion of Customer Load Uncertainty Feature Extraction and Meteorological Factors. Sustainability 2025, 17, 1033.

19. Sheng, Z.; Wang, H.; Chen, G.; Zhou, B.; Sun, J. Convolutional residual network to short-term load forecasting. Appl. Intell. 2021, 51, 2485–2499.

20. Hong, T.; Pinson, P.; Fan, S.; Zareipour, H.; Troccoli, A.; Hyndman, R.J. Probabilistic energy forecasting: Global energy forecasting competition 2014 and beyond. Int. J. Forecast. 2016, 32, 896–913.

21. Veeramsetty, V.; Mohnot, A.; Singal, G.; Salkuti, S.R. Short term active power load prediction on a 33/11 kV substation using regression models. Energies 2021, 14, 2981.

22. Li, B.; Liao, Y.; Liu, S.; Liu, C.; Wu, Z. Research on Short-Term Load Forecasting of LSTM Regional Power Grid Based on Multi-Source Parameter Coupling. Energies 2025, 18, 516.

23. Zhao, Y.; Li, J.; Chen, C.; Guan, Q. A Diffusion–Attention-Enhanced Temporal (DATE-TM) Model: A Multi-Feature-Driven Model for Very-Short-Term Household Load Forecasting. Energies 2025, 18, 486.

24. Veeramsetty, V.; Sai Pavan Kumar, M.; Salkuti, S.R. Platform-Independent Web Application for Short-Term Electric Power Load Forecasting on 33/11 kV Substation Using Regression Tree. Computers 2022, 11, 119.

25. Abumohsen, M.; Owda, A.Y.; Owda, M. Electrical load forecasting using LSTM, GRU, and RNN algorithms. Energies 2023, 16, 2283.

26. Subray, S.; Tschimben, S.; Gifford, K. Towards Enhancing Spectrum Sensing: Signal Classification Using Autoencoders. IEEE Access 2021, 9, 82288–82299.

27. Atienza, R. Advanced Deep Learning with Keras: Apply Deep Learning Techniques, Autoencoders, GANs, Variational Autoencoders, Deep Reinforcement Learning, Policy Gradients, and More; Packt Publishing Ltd.: Birmingham, UK, 2018.

28. Veeramsetty, V.; Kiran, P.; Sushma, M.; Babu, A.M.; Rakesh, R.; Raju, K.; Salkuti, S.R. Active power load data dimensionality reduction using autoencoder. In Power Quality in Microgrids: Issues, Challenges and Mitigation Techniques; Springer: Berlin/Heidelberg, Germany, 2023; pp. 471–494.

29. Wurzberger, F.; Schwenker, F. Learning in deep radial basis function networks. Entropy 2024, 26, 368.

30. Yu, Q.; Hou, Z.; Bu, X.; Yu, Q. RBFNN-based data-driven predictive iterative learning control for nonaffine nonlinear systems. IEEE Trans. Neural Networks Learn. Syst. 2019, 31, 1170–1182.

31. Liu, J. Radial Basis Function (RBF) Neural Network Control for Mechanical Systems: Design, Analysis and Matlab Simulation; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

32. Lu, Y.; Cheung, Y.M.; Tang, Y.Y. Self-adaptive multiprototype-based competitive learning approach: A k-means-type algorithm for imbalanced data clustering. IEEE Trans. Cybern. 2019, 51, 1598–1612.

33. Lillicrap, T.P.; Santoro, A.; Marris, L.; Akerman, C.J.; Hinton, G. Backpropagation and the brain. Nat. Rev. Neurosci. 2020, 21, 335–346.

34. Kandilogiannakis, G.; Mastorocostas, P.; Voulodimos, A.; Hilas, C. Short-Term Load Forecasting of the Greek Power System Using a Dynamic Block-Diagonal Fuzzy Neural Network. Energies 2023, 16, 4227.

35. Zaini, F.A.; Sulaima, M.F.; Razak, I.A.W.A.; Othman, M.L.; Mokhlis, H. Improved Bacterial Foraging Optimization Algorithm with Machine Learning-Driven Short-Term Electricity Load Forecasting: A Case Study in Peninsular Malaysia. Algorithms 2024, 17, 510.

36. Ng, W.W.; Xu, S.; Wang, T.; Zhang, S.; Nugent, C. Radial basis function neural network with localized stochastic-sensitive autoencoder for home-based activity recognition. Sensors 2020, 20, 1479.

37. Yang, Y.; Wang, P.; Gao, X. A novel radial basis function neural network with high generalization performance for nonlinear process modelling. Processes 2022, 10, 140.

38. Veeramsetty, V.; Kiran, P.; Sushma, M.; Salkuti, S.R. Weather Forecasting Using Radial Basis Function Neural Network in Warangal, India. Urban Sci. 2023, 7, 68.

39. Lee, C.M.; Ko, C.N. Short-term load forecasting using adaptive annealing learning algorithm based reinforcement neural network. Energies 2016, 9, 987.

40. Liu, P.; Zheng, P.; Chen, Z. Deep learning with stacked denoising auto-encoder for short-term electric load forecasting. Energies 2019, 12, 2445.

41. Vaccaro Benet, P.; Ugalde-Ramírez, A.; Gómez-Carmona, C.D.; Pino-Ortega, J.; Becerra-Patiño, B.A. Identification of Game Periods and Playing Position Activity Profiles in Elite-Level Beach Soccer Players Through Principal Component Analysis. Sensors 2024, 24, 7708.

42. Tsionas, M.; Assaf, A.G. Regression trees for hospitality data analysis. Int. J. Contemp. Hosp. Manag. 2023, 35, 2374–2387.

43. Song, C.Y. A Study on Learning Parameters in Application of Radial Basis Function Neural Network Model to Rotor Blade Design Approximation. Appl. Sci. 2021, 11, 6133.

| Reference | A | B | C | D | E | F | G |

|---|---|---|---|---|---|---|---|

| [9] | √ | √ | - | - | - | - | LGBM, XGB, MLP |

| [10] | √ | - | - | - | - | - | CNN |

| [11] | √ | √ | √ | - | - | - | DNN |

| [12] | √ | - | - | - | - | - | MCM |

| [13] | √ | - | - | - | - | - | SVM, RF, LSTM |

| [14] | √ | - | - | √ | - | - | MLPNN, GDBT |

| [15] | √ | - | - | - | - | CNN | GRU, LSTM |

| [16] | √ | - | - | - | - | CNN | LSTM |

| [17] | √ | - | - | - | - | - | Linear Regression, LSTM |

| [18] | √ | √ | √ | - | - | CNN | LSTM |

| [19] | √ | √ | - | √ | √ | - | CNN, DRN |

| [20] | √ | √ | - | - | - | - | Probabilistic Model |

| [21] | √ | - | - | - | - | - | Linear Regression |

| [22] | √ | - | - | - | - | - | IPSO, LSTM |

| [23] | √ | - | - | - | - | - | GRU, Diffusion Model |

| [24] | √ | √ | √ | √ | √ | - | Regression Tree |

Features: A: Historical load data; B: Historical temperature data; C: Historical humidity data; D: Day type; E: Season; F: Dimensionality reduction. G: AI model.

| Feature | RBFNN | AE | RBFNN + AE |

|---|---|---|---|

| Handles Non-Linearity | √ | √ | √ |

| Feature Extraction | × | √ | √ |

| Robust to Noise | × | √ | √ |

| Fast Training | √ | √ | √ |

| Interpretable Model | √ | × | √ |

| Handles Missing Data | × | √ | √ |

| Task | Dataset | Model | Training Data Shape | Testing Data Shape |

|---|---|---|---|---|

| Data compression | Load Dataset | AE | 5804 × 12 | 2860 × 12 |

| Load forecasting | Compressed Data | RBFNN | 5804 × 9 | 2860 × 9 |

| Weather forecasting | Weather Data | RBFNN | 5846 × 5 | 2890 × 5 |
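The training and testing sizes for the load and compressed data can be reproduced arithmetically. Taking a test fraction of 0.33 and rounding the test count up (the rule scikit-learn's `train_test_split` applies to a float `test_size`) gives ceil(0.33 × 8664) = 2860 test rows and 8664 − 2860 = 5804 training rows. Whether the authors used this exact fraction, and whether the split was shuffled or chronological, is not stated here; the hypothetical helper `chronological_split` below assumes a chronological split, which avoids training on hours that come after the test hours. The weather split (5846 / 2890 of 8736 rows) is close to, but not exactly, the same proportion.

```python
import math


def split_sizes(n_rows: int, test_fraction: float) -> tuple[int, int]:
    """(n_train, n_test) with the test count rounded up."""
    n_test = math.ceil(test_fraction * n_rows)
    return n_rows - n_test, n_test


def chronological_split(X, y, test_fraction=0.33):
    """Earliest rows for training, latest rows for testing (an assumption, not confirmed)."""
    n_train, _ = split_sizes(len(X), test_fraction)
    return X[:n_train], X[n_train:], y[:n_train], y[n_train:]


if __name__ == "__main__":
    print(split_sizes(8664, 0.33))  # (5804, 2860)
```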

| Parameter | P(T-1) | P(T-2) | P(T-3) | P(T-4) | P(T-24) | P(T-48) |

|---|---|---|---|---|---|---|

| count | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 |

| mean | 2121 | 2121 | 2121 | 2121 | 2121 | 2121 |

| std | 1294 | 1294 | 1294 | 1294 | 1295 | 1295 |

| min | 458 | 456 | 456 | 456 | 456 | 432 |

| 25% | 1063 | 1063 | 1063 | 1063 | 1063 | 1062 |

| 50% | 1673 | 1673 | 1673 | 1673 | 1673 | 1673 |

| 75% | 3001 | 3001 | 3001 | 3001 | 3005 | 3006 |

| max | 6306 | 6306 | 6306 | 6306 | 6306 | 6306 |

| Parameter | P(T-72) | P(T-96) | DAY | SEASON | TEMP | HUMIDITY |

|---|---|---|---|---|---|---|

| count | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 |

| mean | 2120 | 2120 | 0 | 1 | 81 | 68 |

| std | 1295 | 1295 | 0 | 1 | 9 | 21 |

| min | 432 | 412 | 0 | 0 | 50 | 14 |

| 25% | 1061 | 1061 | 0 | 0 | 77 | 52 |

| 50% | 1673 | 1673 | 0 | 1 | 81 | 72 |

| 75% | 3006 | 3007 | 0 | 2 | 86 | 87 |

| max | 6306 | 6306 | 1 | 2 | 108 | 102 |

| Latent Space Size | Batch Size 8: Training MSE | Batch Size 8: Validation MSE | Batch Size 16: Training MSE | Batch Size 16: Validation MSE |

|---|---|---|---|---|

| 11 | 0.0180 | 0.0198 | 0.0091 | 0.0090 |

| 10 | 0.0117 | 0.0109 | 0.0082 | 0.0077 |

| 9 | 0.0116 | 0.0125 | 0.0125 | 0.0120 |

| 8 | 0.0140 | 0.0144 | 0.0123 | 0.0125 |

| 7 | 0.0228 | 0.0222 | 0.0173 | 0.0164 |

| 6 | 0.0285 | 0.0266 | 0.0336 | 0.0328 |

| 5 | 0.0345 | 0.0373 | 0.0369 | 0.0368 |

| 4 | 0.0349 | 0.0371 | 0.0392 | 0.0394 |

| Latent Space Size | Batch Size 32: Training Loss | Batch Size 32: Validation Loss | Batch Size 64: Training Loss | Batch Size 64: Validation Loss |

|---|---|---|---|---|

| 11 | 0.0077 | 0.0078 | 0.0076 | 0.0075 |

| 10 | 0.0061 | 0.0064 | 0.0061 | 0.0062 |

| 9 | 0.0097 | 0.0096 | 0.0062 | 0.0062 |

| 8 | 0.0093 | 0.0090 | 0.0086 | 0.0083 |

| 7 | 0.0152 | 0.0146 | 0.0131 | 0.0130 |

| 6 | 0.0185 | 0.0182 | 0.0216 | 0.0212 |

| 5 | 0.0350 | 0.0368 | 0.0330 | 0.0316 |

| 4 | 0.0338 | 0.0351 | 0.0323 | 0.0311 |

| Hidden Neurons | Reconstructed Data Variance | Variance of Original Data | Variance Gap | Objective |

|---|---|---|---|---|

| 11 | 0.3092 | 0.0630 | −0.2463 | 0.200547 |

| 10 | 0.2974 | 0.0630 | −0.2345 | 0.16609 |

| 9 | 0.4507 | 0.0630 | −0.3877 | 0.276458 |

| 8 | 0.7597 | 0.0630 | −0.6967 | 0.542518 |

| 7 | 0.4626 | 0.0630 | −0.3997 | 0.419536 |

| 6 | 0.4945 | 0.0630 | −0.4315 | 0.543784 |

| 5 | 0.1415 | 0.0630 | −0.0786 | 0.552839 |

| 4 | 0.0685 | 0.0630 | −0.0055 | 0.490237 |
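The Variance Gap column is the original-data variance minus the reconstructed-data variance, and selecting the latent size amounts to taking the row with the smallest objective. The sketch below recomputes the gaps from the rounded table values (these can differ from the printed gaps in the fourth decimal, presumably because the table was computed from unrounded variances) and picks the minimum-objective row. How the objective itself is computed is not given in this section, so it is copied from the table rather than derived; treating a lower objective as better is an assumption, although it is consistent with the 10-dimensional latent space whose features X1 to X10 are summarized in the compressed-data statistics. The names `variance_gap` and `best_latent_size` are hypothetical.

```python
# Values transcribed from the table above.
ORIGINAL_VAR = 0.0630
ROWS = {  # hidden neurons: (reconstructed-data variance, objective)
    11: (0.3092, 0.200547),
    10: (0.2974, 0.16609),
    9: (0.4507, 0.276458),
    8: (0.7597, 0.542518),
    7: (0.4626, 0.419536),
    6: (0.4945, 0.543784),
    5: (0.1415, 0.552839),
    4: (0.0685, 0.490237),
}


def variance_gap(original_var: float, reconstructed_var: float) -> float:
    """Variance gap as tabulated: original minus reconstructed variance."""
    return original_var - reconstructed_var


def best_latent_size(rows: dict) -> int:
    """Latent size with the smallest objective (assumes lower is better)."""
    return min(rows, key=lambda h: rows[h][1])


if __name__ == "__main__":
    for h, (var, obj) in ROWS.items():
        print(h, round(variance_gap(ORIGINAL_VAR, var), 4), obj)
    print("selected latent size:", best_latent_size(ROWS))
```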

| Parameter | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 | X10 |

|---|---|---|---|---|---|---|---|---|---|---|

| count | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 | 8664 |

| mean | 0.875 | 0.288 | 1.525 | 0.000 | 2.777 | 0.755 | 0.921 | 1.358 | 1.046 | 2.144 |

| std | 0.587 | 0.228 | 0.540 | 0.000 | 0.891 | 0.547 | 0.286 | 0.552 | 0.530 | 0.734 |

| min | 0.000 | 0.000 | 0.000 | 0.000 | 0.780 | 0.000 | 0.000 | 0.000 | 0.029 | 0.000 |

| 25% | 0.342 | 0.000 | 1.322 | 0.000 | 2.129 | 0.366 | 0.834 | 1.005 | 0.662 | 1.737 |

| 50% | 0.817 | 0.312 | 1.673 | 0.000 | 2.575 | 0.718 | 1.002 | 1.404 | 0.887 | 2.202 |

| 75% | 1.428 | 0.477 | 1.925 | 0.000 | 3.295 | 1.160 | 1.114 | 1.743 | 1.335 | 2.633 |

| max | 2.606 | 0.822 | 2.371 | 0.000 | 5.518 | 2.532 | 1.549 | 2.638 | 3.036 | 4.194 |

| Original Data Variance | Autoencoder Data Variance | PCA Data Variance | Variance Gap (Original − Autoencoder) | Variance Gap (Original − PCA) |

|---|---|---|---|---|

| 0.0630 | 0.2974 | 0.7540 | −0.2344 | −0.691 |
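One way to produce a comparison like the one above is to measure a variance for the original, autoencoder-compressed, and PCA-compressed data and subtract. This section does not define which variance is meant; the sketch below assumes the mean of the per-feature (population) variances and projects onto the top k principal components with NumPy's SVD. Under that definition, the leading PCA components concentrate the variance of the data, so their mean variance is never smaller than that of the original features, which is consistent with (though not proof of) the negative gap reported for PCA. The function names are hypothetical.

```python
import numpy as np


def mean_feature_variance(X) -> float:
    """Assumed definition: average of the per-column (population) variances."""
    return float(np.var(X, axis=0).mean())


def pca_project(X, k):
    """Scores on the top-k principal components, via SVD of the centred data."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T


def variance_gaps(X_scaled, Z_autoencoder, k):
    """(original - autoencoder, original - PCA) under the assumed variance definition."""
    v_orig = mean_feature_variance(X_scaled)
    v_pca = mean_feature_variance(pca_project(X_scaled, k))
    return v_orig - mean_feature_variance(Z_autoencoder), v_orig - v_pca


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((1000, 12))          # stand-in for the scaled 12-feature data
    Z_ae = rng.random((1000, 10)) * 2   # stand-in for autoencoder output
    print(variance_gaps(X, Z_ae, k=10))
```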

| Centroids \ Width Factor (P) | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 4 | 0.0082 | 0.0105 | 0.0057 | ||||||||

| 6 | 0.0066 | 0.0053 | 0.0044 | 0.0034 | 0.0032 | ||||||

| 8 | 0.0081 | 0.0077 | 0.0065 | 0.0033 | 0.0034 | 0.0043 | 0.0109 | ||||

| 10 | 0.0081 | 0.0049 | 0.0079 | 0.0079 | 0.0046 | 0.0079 | 0.0064 | 0.0032 | 0.0026 | ||

| 12 | 0.0075 | 0.0043 | 0.0034 | 0.0037 | 0.0039 | 0.0037 | 0.0024 | 0.0031 | 0.0024 | 0.0022 | 0.0028 |
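Choosing the RBFNN configuration from a grid like the one above is a minimum search over (number of centroids, width factor) pairs. The sketch below transcribes the table and returns the best cell, assuming the tabulated values are error measures where lower is better (the metric is not named in this table). Under that assumption the smallest value is 0.0022, at 12 centroids with P = 11. The name `best_configuration` is hypothetical.

```python
# Values transcribed from the table above; each row's first entry is width factor P = 2.
GRID = {
    4: [0.0082, 0.0105, 0.0057],
    6: [0.0066, 0.0053, 0.0044, 0.0034, 0.0032],
    8: [0.0081, 0.0077, 0.0065, 0.0033, 0.0034, 0.0043, 0.0109],
    10: [0.0081, 0.0049, 0.0079, 0.0079, 0.0046, 0.0079, 0.0064, 0.0032, 0.0026],
    12: [0.0075, 0.0043, 0.0034, 0.0037, 0.0039, 0.0037, 0.0024, 0.0031, 0.0024, 0.0022, 0.0028],
}


def best_configuration(grid, first_p=2):
    """Return (value, n_centroids, P) for the smallest tabulated value."""
    return min(
        (value, n_centroids, first_p + offset)
        for n_centroids, values in grid.items()
        for offset, value in enumerate(values)
    )


if __name__ == "__main__":
    print(best_configuration(GRID))  # (0.0022, 12, 11)
```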

| Centroids \ Width Factor (P) | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|

| 4 | 0.0165 | 0.0122 | 0.0105 | ||||||

| 6 | 0.0122 | 0.0139 | 0.0100 | 0.0112 | 0.0083 | ||||

| 8 | 0.0134 | 0.0100 | 0.0098 | 0.0088 | 0.0112 | 0.0095 | 0.0082 | ||

| 10 | 0.0188 | 0.0099 | 0.0098 | 0.0086 | 0.0086 | 0.0164 | 0.0087 | 0.0083 | 0.0083 |

| 12 | 0.0136 | 0.0135 | 0.0111 | 0.0090 | 0.0123 | 0.0095 | 0.0096 | 0.0084 | 0.0078 |

| Centroids \ Width Factor (P) | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 7 | 0.0123 | 0.0099 | 0.0086 | 0.0162 | 0.0137 | 0.0075 | ||||||||||||||||

| 9 | 0.0106 | 0.0182 | 0.0077 | 0.0076 | 0.0086 | 0.0074 | 0.0071 | 0.0074 | ||||||||||||||

| 11 | 0.0117 | 0.0133 | 0.0079 | 0.0074 | 0.0075 | 0.0076 | 0.0083 | 0.0074 | 0.0170 | 0.0139 | ||||||||||||

| 13 | 0.0113 | 0.0101 | 0.0159 | 0.0087 | 0.0085 | 0.0085 | 0.0456 | 0.0072 | 0.0079 | 0.0153 | 0.0086 | 0.0101 | ||||||||||

| 15 | 0.0108 | 0.0108 | 0.0106 | 0.0081 | 0.0113 | 0.0075 | 0.0088 | 0.0082 | 0.0084 | 0.0135 | 0.0071 | 0.0109 | 0.0149 | 0.0077 | ||||||||

| 17 | 0.0107 | 0.0217 | 0.0109 | 0.0117 | 0.0109 | 0.0163 | 0.0082 | 0.0077 | 0.0074 | 0.0207 | 0.0245 | 0.0088 | 0.0070 | 0.0119 | 0.0085 | 0.0068 | ||||||

| 19 | 0.0132 | 0.0098 | 0.0090 | 0.0090 | 0.0102 | 0.0093 | 0.0173 | 0.0085 | 0.0083 | 0.0070 | 0.0065 | 0.0123 | 0.0073 | 0.0086 | 0.0084 | 0.0102 | 0.0125 | 0.0098 | ||||

| 21 | 0.0113 | 0.0116 | 0.0092 | 0.0087 | 0.0086 | 0.0106 | 0.0078 | 0.0193 | 0.0096 | 0.0097 | 0.0088 | 0.0307 | 0.0148 | 0.0074 | 0.0097 | 0.0172 | 0.0141 | 0.0112 | 0.0071 | 0.0069 | ||

| 23 | 0.0127 | 0.0184 | 0.0101 | 0.0083 | 0.0141 | 0.0080 | 0.0155 | 0.0067 | 0.0081 | 0.0078 | 0.0077 | 0.0071 | 0.0071 | 0.0066 | 0.0102 | 0.0200 | 0.0130 | 0.0082 | 0.0067 | 0.0180 | 0.0108 | 0.0104 |

| Centroids \ Width Factor (P) | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 7 | 0.0128 | 0.0097 | 0.0091 | 0.0102 | 0.0092 | 0.0093 | ||||||||||||||||

| 9 | 0.0110 | 0.0091 | 0.0090 | 0.0089 | 0.0085 | 0.0089 | 0.0091 | 0.0089 | ||||||||||||||

| 11 | 0.0122 | 0.0104 | 0.0088 | 0.0088 | 0.0082 | 0.0095 | 0.0087 | 0.0088 | 0.0102 | 0.0087 | ||||||||||||

| 13 | 0.0118 | 0.0112 | 0.0091 | 0.0098 | 0.0085 | 0.0088 | 0.0086 | 0.0086 | 0.0094 | 0.0102 | 0.0098 | 0.0087 | ||||||||||

| 15 | 0.0116 | 0.0108 | 0.0102 | 0.0113 | 0.0083 | 0.0085 | 0.0092 | 0.0095 | 0.0079 | 0.0102 | 0.0086 | 0.0095 | 0.0103 | 0.0100 | ||||||||

| 17 | 0.0113 | 0.0106 | 0.0112 | 0.0097 | 0.0086 | 0.0093 | 0.0090 | 0.0092 | 0.0104 | 0.0096 | 0.0132 | 0.0098 | 0.0087 | 0.0101 | 0.0091 | 0.0106 | ||||||

| 19 | 0.0128 | 0.0102 | 0.0113 | 0.0108 | 0.0101 | 0.0090 | 0.0099 | 0.0111 | 0.0114 | 0.0084 | 0.0119 | 0.0085 | 0.0094 | 0.0104 | 0.0099 | 0.0097 | 0.0092 | 0.0131 | ||||

| 21 | 0.0120 | 0.0102 | 0.0106 | 0.0100 | 0.0101 | 0.0096 | 0.0090 | 0.0090 | 0.0098 | 0.0114 | 0.0090 | 0.0101 | 0.0125 | 0.0090 | 0.0088 | 0.0140 | 0.0111 | 0.0098 | 0.0094 | 0.0102 | ||

| 23 | 0.0127 | 0.0111 | 0.0101 | 0.0102 | 0.0107 | 0.0127 | 0.0110 | 0.0130 | 0.0104 | 0.0089 | 0.0098 | 0.0083 | 0.0104 | 0.0118 | 0.0112 | 0.0106 | 0.0104 | 0.0127 | 0.0095 | 0.0108 | 0.0101 | 0.0130 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Veeramsetty, V.; Konda, P.K.; Dongari, R.C.; Salkuti, S.R. Short-Term Load Forecasting in Distribution Substation Using Autoencoder and Radial Basis Function Neural Networks: A Case Study in India. Computation 2025, 13, 75. https://doi.org/10.3390/computation13030075
