1. Introduction

Nonlinear systems of equations, $F(x) = 0$, where $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$, frequently arise in many fields of engineering and science [1,2]. Finding solutions to these systems is a challenging task, since solving them analytically is either extremely difficult or infeasible. To address this, numerous researchers have proposed iterative techniques for approximating solutions of nonlinear systems.

One of the oldest and simplest iterative methods is Newton's method [3,4], which is defined as follows:

$$x^{(k+1)} = x^{(k)} - [F'(x^{(k)})]^{-1} F(x^{(k)}), \quad k = 0, 1, 2, \dots,$$

where $F'(x^{(k)})$ represents the Jacobian matrix of F evaluated at $x^{(k)}$. Newton's method exhibits quadratic convergence, provided that the root sought is simple and the initial estimate is sufficiently close to the solution.
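The Newton iteration above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' Mathematica implementation. The 2×2 test system ($x^2 + y^2 = 4$, $xy = 1$) and the names `newton_system`, `F`, and `J` are hypothetical choices made for the example. The sketch solves a linear system with the Jacobian at each step instead of forming $[F'(x^{(k)})]^{-1}$ explicitly.

```python
import numpy as np


def newton_system(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method x_{k+1} = x_k - J(x_k)^{-1} F(x_k) for F(x) = 0."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        # Solve J(x) s = F(x) rather than forming the inverse Jacobian explicitly.
        s = np.linalg.solve(J(x), F(x))
        x = x - s
        if np.linalg.norm(s) < tol:  # stop when the correction is negligible
            return x, k
    return x, max_iter


# Hypothetical test system: x^2 + y^2 = 4 and x*y = 1.
def F(v):
    x, y = v
    return np.array([x**2 + y**2 - 4.0, x * y - 1.0])


def J(v):
    x, y = v
    return np.array([[2.0 * x, 2.0 * y], [y, x]])


root, iters = newton_system(F, J, [2.0, 0.5])
print(root, iters)
```

Using `np.linalg.solve` costs one LU factorization per step. This is the "matrix inversion" counted in the cost comparisons below.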

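Numerous higher-order techniques have been developed in the literature [5,6,7], many of which use Newton's method as the first step. However, in various practical scenarios, the first-order Fréchet derivative $F'(x)$ either does not exist or is computationally expensive to evaluate. To address such situations, Traub [4] proposed a Jacobian-free approach, defined as follows:

$$x^{(k+1)} = x^{(k)} - [x^{(k)}, w^{(k)}; F]^{-1} F(x^{(k)}), \quad k = 0, 1, 2, \dots,$$

where $[\cdot, \cdot; F]$ represents the first-order divided difference operator of F, and $w^{(k)} = x^{(k)} + \beta F(x^{(k)})$, with $\beta$ being an arbitrary nonzero constant. For $\beta = 1$, this method reduces to the multidimensional Steffensen's method, as formulated by Samanskii in [8]. These methods also have a quadratic order of convergence. We recall that the mapping $[\cdot, \cdot; F]: D \times D \subset \mathbb{R}^n \times \mathbb{R}^n \to \mathcal{L}(\mathbb{R}^n)$ satisfies

$$[x, y; F](x - y) = F(x) - F(y), \quad \text{for all } x, y \in D,$$

and the components of the matrix associated with $[x, y; F]$ can be obtained from a divided difference formula. We use the following first-order divided difference operator [3]:

$$[x, y; F]_{ij} = \frac{F_i(x_1, \dots, x_j, y_{j+1}, \dots, y_n) - F_i(x_1, \dots, x_{j-1}, y_j, \dots, y_n)}{x_j - y_j}, \quad 1 \le i, j \le n.$$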
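The componentwise divided difference and Traub's derivative-free iteration can be sketched as follows. This is an illustrative NumPy version and not the authors' code. The helper names `divided_difference` and `traub`, the 2×2 test system, and the starting point are hypothetical.

Column $j$ is built from the points $z^{(j)} = (x_1, \dots, x_j, y_{j+1}, \dots, y_n)$. As a result, $[x, y; F](x - y) = F(x) - F(y)$ holds by telescoping, and the test checks this identity. The sketch also stops once $\|F(x)\|$ is tiny, because $w = x + \beta F(x)$ then nearly coincides with $x$ and the quotient becomes ill-defined.

```python
import numpy as np


def divided_difference(F, x, y):
    """[x, y; F] from the componentwise first-order divided difference formula.

    Column j uses z^(j) = (x_1..x_j, y_{j+1}..y_n); requires x_j != y_j.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    M = np.empty((n, n))
    z = y.copy()              # z^(0) = y
    F_prev = F(z)
    for j in range(n):
        z[j] = x[j]           # switch component j from y to x
        F_curr = F(z)
        M[:, j] = (F_curr - F_prev) / (x[j] - y[j])
        F_prev = F_curr
    return M


def traub(F, x0, beta=1.0, tol=1e-12, max_iter=50):
    """Traub's Jacobian-free scheme; beta = 1 gives Steffensen's method."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        Fx = F(x)
        if np.linalg.norm(Fx) < tol:   # converged; also avoids w == x below
            return x, k - 1
        w = x + beta * Fx              # auxiliary point w^(k)
        s = np.linalg.solve(divided_difference(F, x, w), Fx)
        x = x - s
        if np.linalg.norm(s) < tol:
            return x, k
    return x, max_iter


# Hypothetical test system: x^2 + y^2 = 4 and x*y = 1.
def F(v):
    x, y = v
    return np.array([x**2 + y**2 - 4.0, x * y - 1.0])


root, its = traub(F, [1.9, 0.5])
print(root, its)
```

Each step costs one evaluation of F at $x^{(k)}$ plus $n$ further evaluations inside the divided difference, with no Jacobian.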

To enhance the order of convergence, several researchers have developed third-order methods [9,10,11,12], each requiring one evaluation of F, two evaluations of its derivative $F'$, and two matrix inversions per iteration. Cordero and Torregrosa [13] introduced two additional third-order methods. One requires one evaluation of F and three evaluations of $F'$. The other requires one evaluation of F and four evaluations of its Fréchet derivative $F'$, along with two matrix inversions. Another third-order method, proposed by Darvishi and Barati [14], employs two evaluations of F, two evaluations of $F'$, and two matrix inversions per iteration. Further third-order methods were proposed by Darvishi and Barati [15] and by Potra and Ptak [16]. Both require two function evaluations, one evaluation of the derivative, and one matrix inversion per iteration.

Moreover, several higher-order iterative schemes have been developed. Babajee et al. [17] presented a fourth-order approach involving one evaluation of F, two evaluations of $F'$, and two matrix inversions per iteration. Another fourth-order method, proposed by Cordero et al. [18], is based on two function evaluations and two Jacobian evaluations, along with one matrix inversion. Additionally, the authors of [6] introduced another fourth-order method that uses three evaluations of F, one evaluation of $F'$, and one matrix inversion per iteration.

Increasing the order of convergence of an iterative method usually increases its computational cost per iteration, which is a significant drawback of higher-order methods. Consequently, keeping the computational cost low is essential when developing new iterative methods. Motivated by this, we propose new iterative schemes that achieve fourth-order convergence while minimizing the number of function evaluations per iteration. The proposed method requires one evaluation of F, one divided difference, and one matrix inversion per iteration.

This manuscript is organized as follows. In Section 2, a new parametric fourth-order method is presented along with its convergence analysis. Section 3 discusses the efficiency index of the proposed method. In Section 4, various numerical experiments are conducted to validate the theoretical results and to compare the proposed algorithms with some existing methods. Finally, the paper concludes with a summary of the findings.

4. Numerical Results

In this section, we examine several numerical problems to evaluate the effectiveness of the proposed methods. The newly introduced schemes, denoted as , , and , correspond to the parameter values , , and , respectively. They are compared against the following existing methods for nonlinear systems:

- 1.

Cordero et al. [19], denoted as , , respectively.

for

and

.

where

.

We note that the authors in (20) and (21) used and with .

- 2.

Grau et al. [11], denoted as .

- 3.

Cordero et al. [20], denoted as .

where

and

- 4.

Cordero et al. [21], denoted as .

where

represents Newton’s method for systems and

, and

,

- 5.

Sharma and Arora [22], denoted as .

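To numerically verify the convergence order established in Theorem 1, we report the iteration number k, the residual error $\|F(x^{(k)})\|$, the error between two consecutive iterates $\|x^{(k+1)} - x^{(k)}\|$, and the approximate computational order of convergence (ACOC). The ACOC, denoted by $\rho$, is calculated as defined in [13]:

$$\rho \approx \frac{\ln\left(\|x^{(k+1)} - x^{(k)}\| \,/\, \|x^{(k)} - x^{(k-1)}\|\right)}{\ln\left(\|x^{(k)} - x^{(k-1)}\| \,/\, \|x^{(k-1)} - x^{(k-2)}\|\right)},$$

where $x^{(k+1)}$, $x^{(k)}$, $x^{(k-1)}$, and $x^{(k-2)}$ are four consecutive approximations in the iterative process.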
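The ACOC formula translates directly into code. The sketch below is a hypothetical NumPy helper, `acoc`, and not the authors' Mathematica routine. For a sanity check it is applied to Newton iterates for the scalar equation $x^2 - 2 = 0$ starting from $x_0 = 1$, where the estimate should come out close to 2.

```python
import numpy as np


def acoc(iterates):
    """Approximate computational order of convergence from the last four iterates.

    Uses x^(k-2), x^(k-1), x^(k), x^(k+1) = iterates[-4], ..., iterates[-1].
    """
    x_km2, x_km1, x_k, x_kp1 = (np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:])
    d_new = np.linalg.norm(x_kp1 - x_k)     # ||x^(k+1) - x^(k)||
    d_mid = np.linalg.norm(x_k - x_km1)     # ||x^(k) - x^(k-1)||
    d_old = np.linalg.norm(x_km1 - x_km2)   # ||x^(k-1) - x^(k-2)||
    return np.log(d_new / d_mid) / np.log(d_mid / d_old)


# Newton iterates for x^2 - 2 = 0 from x0 = 1 (quadratic convergence expected).
xs = [1.0]
for _ in range(4):
    x = xs[-1]
    xs.append(x - (x * x - 2.0) / (2.0 * x))
print(acoc(xs))  # close to 2
```

Only differences of iterates enter the formula, so the ACOC can be computed without knowing the exact root. Once consecutive differences reach machine precision, however, the estimate becomes meaningless.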

Mathematica 11.1.1 [23] was used for all numerical computations, and the stopping criteria and were used to reduce round-off errors. The computer configuration is as follows:

Processor: Intel(R) Core(TM) i3-1005G1 (Intel, Santa Clara, CA, USA).

CPU @ 1.20 GHz (64-bit machine).

Operating system: Microsoft Windows 10 Pro (Microsoft Corporation, Albuquerque, NM, USA).

In all tables, denotes . The results in Tables 1–6 show that the proposed methods give better results than the existing methods. Table 7 presents the number of iterations required by each method for each example. A detailed discussion of the outcomes is provided separately for each example.

Example 1. Consider the Bratu problem [24]. It has a wide range of applications, including the fuel ignition model in thermal combustion, thermal reactions, radiative heat transfer, chemical reactor theory, the Chandrasekhar model of the expansion of the universe, and nanotechnology [25,26,27,28]. The problem is formulated as follows:

$$u''(x) + \lambda e^{u(x)} = 0, \quad x \in [0, 1], \qquad u(0) = u(1) = 0. \tag{1}$$

Using finite difference discretization, the boundary value problem (1) is transformed into a nonlinear system of size n with step size h. The second derivative is approximated by the central difference

$$u''(x_k) \approx \frac{u_{k-1} - 2u_k + u_{k+1}}{h^2}, \quad k = 1, \dots, n,$$

which yields the following system of nonlinear equations:

$$u_{k-1} - 2u_k + u_{k+1} + \lambda h^2 e^{u_k} = 0, \quad k = 1, \dots, n, \qquad u_0 = u_{n+1} = 0.$$

Table 1 compares the convergence of the proposed and existing schemes for this problem. From the first iteration onward, the error estimates of the proposed methods are smaller than those of the existing methods. Furthermore, for all proposed schemes, the computational order of convergence agrees with the theoretical one. The approximate solution obtained with the method is shown in Figure 1.

Example 2. Consider the well-known Hammerstein integral equation described in [3]:

$$x(s) = 1 + \frac{1}{5} \int_0^1 G(s, t)\, x(t)^3 \, dt,$$

where $x \in C[0, 1]$, $s, t \in [0, 1]$, and

$$G(s, t) = \begin{cases} (1 - s)\, t, & t \le s, \\ s\, (1 - t), & s \le t. \end{cases}$$

This integral equation is converted into a system of nonlinear equations using the Gauss-Legendre quadrature formula

$$\int_0^1 f(t)\, dt \approx \sum_{j=1}^{8} w_j f(t_j),$$

where $t_j$ and $w_j$ are the nodes (x-coordinates) and the weights, respectively, at eight nodes. Approximating $x(t_i)$ by $x_i$ for $i = 1, \dots, 8$, we obtain the following system of nonlinear equations:

$$5 x_i - 5 - \sum_{j=1}^{8} a_{ij}\, x_j^3 = 0, \quad i = 1, \dots, 8,$$

with

$$a_{ij} = \begin{cases} w_j\, t_j\, (1 - t_i), & j \le i, \\ w_j\, t_i\, (1 - t_j), & i < j. \end{cases}$$

The nodes and weights used in the Gauss-Legendre quadrature are as follows:

| $j$ | $t_j$ | $w_j$ |
|---|---|---|
| 1 | 0.019855071751232 | 0.050614268145188 |
| 2 | 0.101666761293187 | 0.111190517226687 |
| 3 | 0.237233795041836 | 0.156853322938944 |
| 4 | 0.408282678752175 | 0.181341891689181 |
| 5 | 0.591717321247825 | 0.181341891689181 |
| 6 | 0.762766204958164 | 0.156853322938944 |
| 7 | 0.898333238706813 | 0.111190517226687 |
| 8 | 0.980144928248768 | 0.050614268145188 |
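The two discretized systems of Examples 1 and 2 can be assembled in a few lines. The sketch below is a hypothetical NumPy illustration with several assumptions:

- The Bratu parameter `lam = 1.0` and size `n = 10` (with $h = 1/(n+1)$) are illustrative values, not the ones used for Table 1.
- The Hammerstein kernel is written as G, and `np.polynomial.legendre.leggauss(8)` is mapped from $[-1, 1]$ to $[0, 1]$ to reproduce the node/weight table.
- Plain Newton (`newton`, a hypothetical helper) is used only to obtain reference solutions. It is not one of the proposed methods.

```python
import numpy as np


def newton(F, J, x0, tol=1e-12, max_iter=50):
    """Plain Newton iteration, used here only to produce reference solutions."""
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        s = np.linalg.solve(J(x), F(x))
        x -= s
        if np.linalg.norm(s) < tol:
            break
    return x


# Example 1 (Bratu): u_{k-1} - 2u_k + u_{k+1} + lam*h^2*exp(u_k) = 0, u_0 = u_{n+1} = 0.
def bratu_system(u, lam, h):
    padded = np.concatenate(([0.0], u, [0.0]))
    return padded[:-2] - 2.0 * u + padded[2:] + lam * h**2 * np.exp(u)


def bratu_jacobian(u, lam, h):
    n = u.size
    J = np.diag(-2.0 + lam * h**2 * np.exp(u))
    return J + np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)


# Example 2 (Hammerstein): 8-point Gauss-Legendre rule mapped from [-1, 1] to [0, 1].
nodes, weights = np.polynomial.legendre.leggauss(8)
t, w = (nodes + 1.0) / 2.0, weights / 2.0
i, j = np.indices((8, 8))
A = np.where(j <= i, w[j] * t[j] * (1.0 - t[i]), w[j] * t[i] * (1.0 - t[j]))


def hammerstein_system(x):
    return 5.0 * x - 5.0 - A @ x**3


def hammerstein_jacobian(x):
    # Derivative of -sum_j a_ij x_j^3 w.r.t. x_j is -3 a_ij x_j^2 (scales column j).
    return 5.0 * np.eye(8) - 3.0 * A * x**2


n, lam = 10, 1.0  # hypothetical size and parameter, not the paper's values
h = 1.0 / (n + 1)
u = newton(lambda v: bratu_system(v, lam, h), lambda v: bratu_jacobian(v, lam, h), np.zeros(n))
x = newton(hammerstein_system, hammerstein_jacobian, np.ones(8))
print(t.round(15), w.round(15))
print(u.max(), x)
```

Both Jacobians are cheap to write here. In the Jacobian-free setting of the paper they would be replaced by the divided difference operator.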

Table 2 reports the convergence of the proposed and existing schemes toward the solution. From the first iteration onward, the error estimates of the proposed methods are smaller than those of the existing methods. The methods and gave poorer results than the remaining methods.

Example 3. Broyden Tridiagonal Function: The Broyden Tridiagonal Function [29] is widely used in numerical mathematics and optimization as a benchmark for testing nonlinear solvers and optimization algorithms. Its importance stems from its tridiagonal structure, sparsity, and nonlinearity, which make it a manageable yet sufficiently complex system for evaluating computational methods. The nonlinear system formed by the Broyden Tridiagonal Function is defined as follows:

$$F_i(x) = (3 - 2x_i)\, x_i - x_{i-1} - 2x_{i+1} + 1, \quad i = 1, \dots, n, \qquad x_0 = x_{n+1} = 0.$$

Table 3 reports the convergence of the proposed and existing schemes toward the solution. For all proposed schemes, the computational order of convergence agrees closely with the theoretical one. For all existing methods, the computational order differs from the theoretical order.

Example 4. Consider the Frank-Kamenetskii problem [30], which is governed by the differential equation:

To transform the boundary value problem (4) into a system of nonlinear equations with 50 unknowns, we apply a finite difference discretization with a uniform step size h. The second derivative is approximated by the central difference scheme

$$y''(x_k) \approx \frac{y_{k+1} - 2y_k + y_{k-1}}{h^2}.$$

Table 4 reports the results for this problem. In this case, the best results were achieved by the proposed methods. Furthermore, for all the proposed schemes, the computational order of convergence agrees with the theoretical one.
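The Broyden Tridiagonal system of Example 3 is easy to evaluate in vectorized form. The sketch below is a hypothetical NumPy illustration with the following assumptions:

- The system size `n = 20` and the classical starting point $x^{(0)} = (-1, \dots, -1)$ are choices for the example; the paper's dimension is not restated here.
- Plain Newton with the analytic tridiagonal Jacobian is used as a reference solver, not one of the compared methods.

The test also checks the Jacobian against central finite differences.

```python
import numpy as np


def broyden_tridiagonal(x):
    """F_i(x) = (3 - 2x_i) x_i - x_{i-1} - 2 x_{i+1} + 1 with x_0 = x_{n+1} = 0."""
    padded = np.concatenate(([0.0], x, [0.0]))
    return (3.0 - 2.0 * x) * x - padded[:-2] - 2.0 * padded[2:] + 1.0


def broyden_tridiagonal_jacobian(x):
    n = x.size
    return (np.diag(3.0 - 4.0 * x)
            + np.diag(-1.0 * np.ones(n - 1), -1)   # dF_i/dx_{i-1}
            + np.diag(-2.0 * np.ones(n - 1), 1))   # dF_i/dx_{i+1}


def newton(F, J, x0, tol=1e-12, max_iter=50):
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        s = np.linalg.solve(J(x), F(x))
        x -= s
        if np.linalg.norm(s) < tol:
            break
    return x


n = 20  # hypothetical size
x = newton(broyden_tridiagonal, broyden_tridiagonal_jacobian, -np.ones(n))
print(np.linalg.norm(broyden_tridiagonal(x)))
```

The Jacobian has only three nonzero diagonals. A dense solve is used here for brevity, while a banded solver would exploit this sparsity for large n.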

Example 5. Broyden Banded Function: The Broyden Banded Function [29] is another benchmark problem often used in the study of numerical methods for nonlinear systems of equations. In the Broyden Tridiagonal Function, each equation is coupled only to its immediate neighbors. This function instead has a more general banded structure: each equation depends on all variables within a certain band around its index. The Broyden Banded Function for a system of n equations in n variables is given by the following, where p is the bandwidth:

Table 5 reports the convergence of the proposed and existing schemes toward the solution. In this example, we again observe that the proposed methods achieve the best error estimates from the very first iterations.
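A sketch of a Broyden Banded residual and Jacobian is given below. It rests on several assumptions:

- The paper states its version in terms of a bandwidth p. This sketch instead uses the classical test-set form of Moré, Garbow, and Hillstrom, $F_i(x) = x_i(2 + 5x_i^2) + 1 - \sum_{j \in J_i} x_j(1 + x_j)$ with $J_i = \{ j \ne i : \max(1, i - m_l) \le j \le \min(n, i + m_u) \}$, lower bandwidth $m_l = 5$, and upper bandwidth $m_u = 1$. This may not match the paper's exact definition.
- The size `n = 20`, the start $x^{(0)} = (-1, \dots, -1)$, and the Newton reference solver are illustrative choices.

```python
import numpy as np


def band_indices(i, n, ml=5, mu=1):
    """0-based J_i = {j != i : max(0, i - ml) <= j <= min(n - 1, i + mu)}."""
    return [j for j in range(max(0, i - ml), min(n, i + mu + 1)) if j != i]


def broyden_banded(x, ml=5, mu=1):
    n = x.size
    F = x * (2.0 + 5.0 * x**2) + 1.0
    for i in range(n):
        for j in band_indices(i, n, ml, mu):
            F[i] -= x[j] * (1.0 + x[j])
    return F


def broyden_banded_jacobian(x, ml=5, mu=1):
    n = x.size
    J = np.diag(2.0 + 15.0 * x**2)            # dF_i/dx_i
    for i in range(n):
        for j in band_indices(i, n, ml, mu):
            J[i, j] = -(1.0 + 2.0 * x[j])     # dF_i/dx_j inside the band
    return J


def newton(F, J, x0, tol=1e-12, max_iter=50):
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        s = np.linalg.solve(J(x), F(x))
        x -= s
        if np.linalg.norm(s) < tol:
            break
    return x


n = 20  # hypothetical size
x = newton(broyden_banded, broyden_banded_jacobian, -np.ones(n))
print(np.linalg.norm(broyden_banded(x)))
```

Each row of the Jacobian has at most $m_l + m_u + 1$ nonzeros. This is the banded structure that distinguishes this example from the tridiagonal one.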

Example 6. Application to a Model of Nutrient Diffusion in a Biological Substrate [31]. Nutrient diffusion plays a crucial role in biological systems, affecting cellular metabolism, tissue engineering, and microbial growth. Mathematical models of nutrient diffusion help predict nutrient availability, optimize bioreactors, and improve medical treatments such as drug delivery. Here, we explore the application of a diffusion model to a biological substrate, analyzing the factors that influence diffusion rates and their implications.

Consider a two-dimensional biological substrate that acts as a growth medium or cultivation area for microorganisms. Our goal is to study the diffusion and distribution of nutrients within this medium, as they play a crucial role in the development and viability of the organisms present. The corresponding mathematical formulation is given below: Here, denotes the nutrient concentration at any location in the substrate. The equation models nutrient diffusion, with the term representing interactions between nutrient concentration and biochemical processes occurring within the medium.

The boundary conditions describe how nutrients behave at the substrate’s edges. At the lateral boundaries ( and ), they may indicate initial concentrations or continuous nutrient supply. At the upper and lower boundaries ( and ), they can correspond to nutrient absorption by plant roots or interactions with microorganisms present on the surface.

Solving this problem provides insights into nutrient distribution within the substrate and its influence on organism growth and health. This knowledge can contribute to optimizing agricultural methods and improving biological productivity.

As an example, the authors solve this equation for a small system using a block-wise finite difference approach. The discretization involves creating a mesh: with mesh points defined as , , and , . The discrete form of the equation is given by the following: This discretized representation allows us to solve numerically for using computational methods. Therefore, by approximating the partial derivatives using central divided differences, we have the following: for and . Now, we denote . Simplifying the notation, we obtain the following: with , , and here .

Table 6 reports the convergence of the proposed and existing schemes toward the solution. In this case, the best results were obtained with the proposed methods, while and showed poor results compared with the remaining methods.

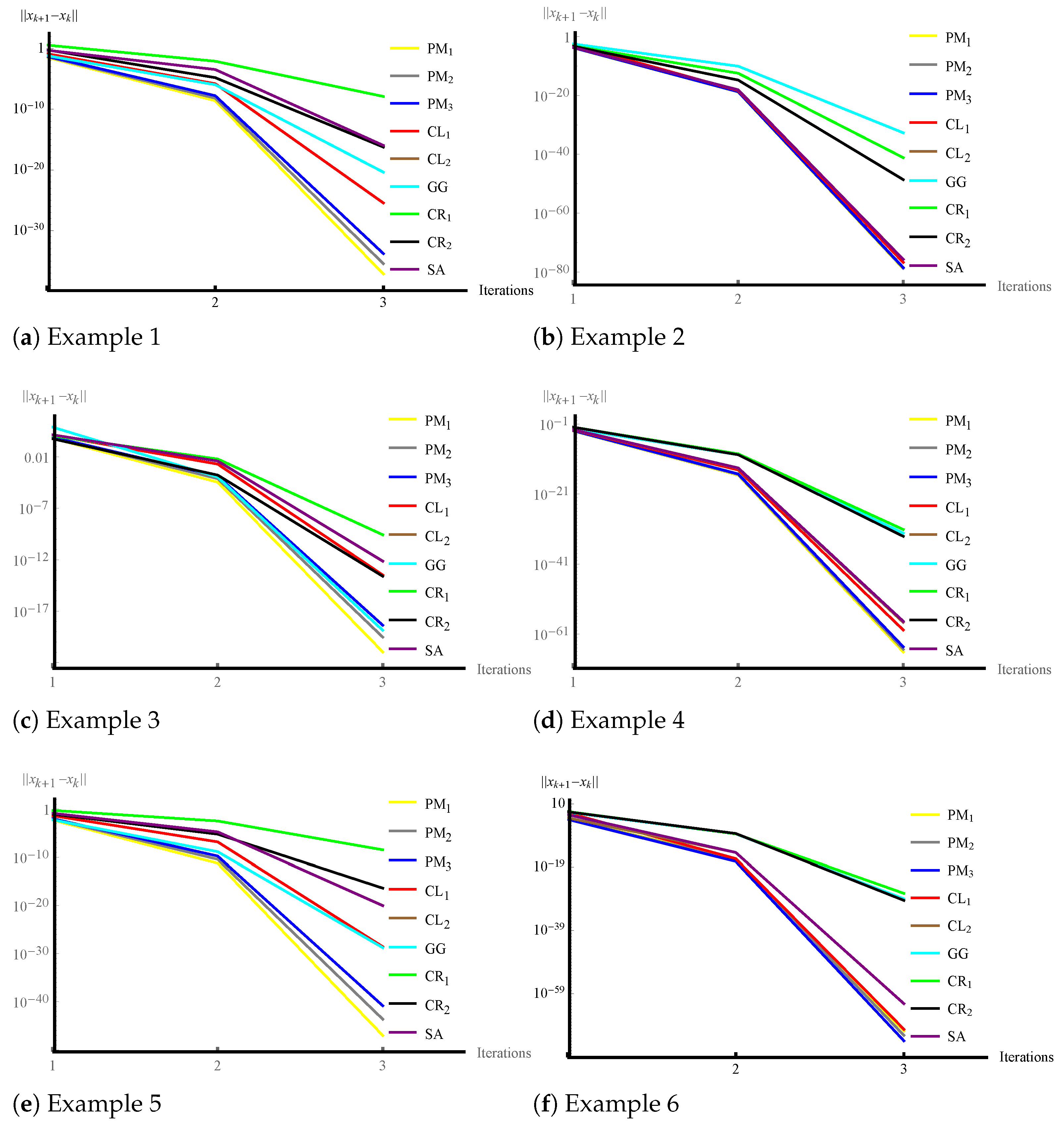

Remark 2. The graphical error analysis for Examples 1–6 is illustrated in Figure 2. All subfigures of Figure 2 show that the proposed methods reduce the error faster than the existing techniques. Finally, Table 8 reports the CPU time used by each method for each example, and the proposed methods require the least CPU time. A schematic of the algorithm for the family of proposed methods is shown in Appendix A.