Abstract

The significance of age estimation arises from its applications in various fields, such as forensics, criminal investigation, and illegal immigration. Given this growing importance, the area requires further investigation and development. Several methods exist for age estimation using biometric traits, such as the face, teeth, bones, and voice. Among them, teeth are particularly convenient since they are resistant and durable and undergo several changes from childhood to death that can be used to derive age. In this paper, we summarize the common biometric traits for age estimation and how they have been used in previous research studies. We pay special attention to traditional machine learning methods and deep learning approaches used for dental age estimation. In particular, we summarize the advances in convolutional neural network (CNN) models that estimate dental age from radiological images, such as 3D cone-beam computed tomography (CBCT), X-ray, and orthopantomography (OPG). Finally, we point out the main innovations that could potentially increase the performance of age estimation systems.

1. Introduction

Recently, many applications based on biometrics have been used in chronological age estimation [1]. Soft biometrics is the science of automatically recognizing people based on their physical or behavioral characteristics, such as the face, teeth, and voice [2,3]. Through biometrics, it is possible to estimate a person’s age, gender, and race. The biometric features most often used for chronological age estimation are the teeth, face, voice, bones, hand (palm), iris, and fingerprints [2]. Apart from forensic medicine, soft biometrics have a number of applications, including healthcare, age-related security control, and establishing the age of illegal immigrants, whether adults or unaccompanied minors, who lack valid proof of birth.

Dental age is deemed vital as tooth development shows less variability than other developmental features as well as low variability with chronological age [4]. The eruption of teeth in an individual is important in human maturity assessment. Dental development involves several changes from childhood to death. These changes in teeth can be used to derive age, gender, and race attributes at every developmental stage of a person [5]. Applications of dental age assessment include forensic age estimation for cadavers in legal cases, for instance, associated with fires, crashes, accidents, and homicides, as well as use in medical specialties, such as in clinical practice in orthodontics, dentistry, pediatrics, pediatric endocrinology, orthopedics, and the investigation of dental malocclusions [6].

Various methods are used in dental age estimation. Morphological methods are based on the evaluation of teeth ex vivo [7]. Biochemical methods are based on the racemization of amino acids [8]. Radiological techniques also play an indispensable role in human age determination. These methods offer a simple, non-invasive, and reproducible means of providing proof of age for both living and dead human beings. Radiographic images that can be used in age identification include panoramic radiographs, X-rays, magnetic resonance images (MRI), and cone-beam computed tomography (CBCT) images [9,10]. Panoramic radiographs are the most commonly used by dentists. Age can also be estimated from X-rays of the cervical vertebrae, an approach referred to as cephalometry. Various studies have shown a relationship between the shape of the cervical vertebrae and age; skeletal age is determined using cervical vertebral maturity (CVM), evaluated on a head radiograph routinely used in orthodontic practice. The dentist can thus estimate the patient’s age based on the cervical vertebrae in a cephalometric X-ray [11].

Deep learning is one of the most important applications of artificial intelligence. It has proven its value in many different fields and is becoming the preferred method for analyzing medical images due to its accuracy and speed compared to traditional methods. Some studies have used deep learning to estimate dental age in forensic medicine and to provide dental proof for certification of birth. We surveyed traditional methods used for dental age estimation, in addition to conducting a survey of deep learning (CNN) methods used to estimate dental age. CNN methods have been employed for age estimation analysis, resulting in improved accuracy [12,13]. Deep learning and CNNs, as learning-based feature-representation methods, are used to learn discriminative semantic features in image recognition tasks. Recently, researchers have made widespread use of deep CNNs to automate and significantly increase the accuracy of age estimation [14,15,16]. If applied, CNNs in automated age estimation could increase accuracy and reduce the human effort entailed in forensic investigations [17]. This paper addresses chronological age estimation based on common biometrics; more specifically, we focus on the teeth (dental age). In addition to reviewing traditional methods used in dental age estimation, we survey dental age estimation using machine and deep learning techniques with different radiographs (OPG, MRI, CBCT) [17,18]. The study compares traditional approaches with deep learning approaches for dental age estimation and considers why deep learning approaches have outperformed other methods in recent years [17]. Based on a survey of the common CNN models used in age estimation, we consider the challenges and obstacles in dental age estimation and suggest ways to increase the accuracy of the estimates obtained.
In addition, we collected several datasets used in dental age estimation. To our knowledge, and based on a thorough review of related literature, no survey of research focusing on dental age estimation using deep learning models has previously been conducted.

2. A Review of Biometrics-Based Age-Estimation Methods

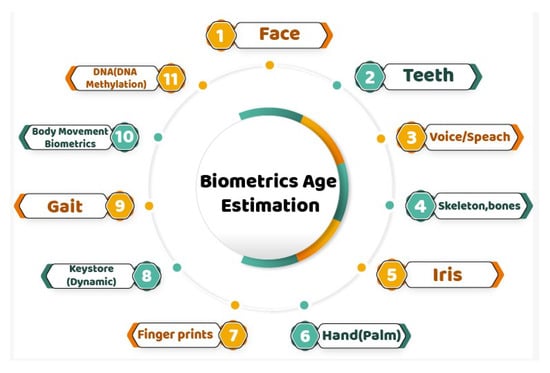

Automatic human identification systems recognize human age using various biometrics, which constitutes a challenging task. Biometric measures are information carriers that reflect many features of the human body from birth to old age. Recent studies have used biometrics for age estimation and have provided very valuable results. Figure 1 shows biometrics that are used in age estimation, which are summarized in some detail as follows.

Figure 1. Biometrics-based age-estimation methods.

Face: The human face is an attribute that distinguishes each person from others. The human face includes many features, such as facial hair, eyebrows, wrinkles, freckles, age spots, hair color, skin texture, gender characteristics, changes in bone structure, genetic makeup, and ethnicity that can be used for age estimation [19,20]. The face remains the most dominant and informative structure for biometric recognition systems. The human face provides a pool of useful information about the person, such as identity, age, ethnic group, gender, posture, and expression [21].

Teeth: Teeth constitute a unique part of the human body as they are the most resistant and durable features even after death. The eruption of teeth in an individual is an important means of human maturity assessment. Dental development involves several changes from childhood to death. These physiological changes in teeth can be used to determine age, gender, race, and similar attributes at every developmental stage of a person [14]. They are currently the most important means of identifying people in incidents of loss and death, medical crime, and for forensic and dental age estimation purposes.

Voice/Speech: Voice or speech is a unique physiological signal which not only contains information about linguistic content, such as words, accents, and language, but also conveys information about para-linguistic content, such as height, age, gender, and emotions [22,23]. Physiological changes are reflected in the voice from childhood to adulthood through changes in the larynx and vocal folds. Certain acoustic and prosodic features can be related to the physical age of the speaker, including mel spectra with mean and vocal tract length normalization (VTLN), specific combinations of plosives and vowels, long-term features of pitch and formants, jitter, shimmer, intensity, and short-term frame-based features, such as Mel frequency cepstral coefficients (MFCCs) [24]. Several studies have been conducted based on voice/speech feature extraction to identify a reliable method for pattern classification for age estimation [25].

Skeleton/Bone: Radiologists use bone growth indicators as maturity parameters based on bone ossification measures, including for the hand-wrist, femur-tibia, and clavicle [26]. Information concerning growth can be obtained from cephalography and hand and wrist radiographs. Hand and wrist radiography is useful for age estimation since it is straightforward, inexpensive, and non-invasive. The femur is the longest and strongest bone in the human skeleton and is normally preserved in forensic contexts. Owen Lovejoy, in 1985, examined radiographic changes in the clavicle, humerus, and femur bones to determine skeletal maturity (age) at death. The clavicle is a non-weight-bearing bone and is usually found as an entire bone. Computed tomography (CT) is used to determine the epiphyseal maturation of the clavicle bone, with X-ray examination of the hand and pelvis also used to assess the age of an individual in forensic contexts [18].

Iris: The iris is a part of the human body that is regarded as invariant to within-class variation, thus presenting an ideal biometric feature [27]. It is a biological feature that provides perceptible information. The iris is formed within a few months of an infant’s birth and remains relatively unchanged throughout a person’s lifetime. For this reason, the iris is regarded as an aging-invariant biometric feature [28]. A cataract is associated with clouding of the lens requiring surgical treatment, and glaucoma is associated with increased pressure within the eye that causes spots on the iris. It has been shown that cataract-removal surgery and alteration of iris appearance due to glaucoma affect the performance of iris-recognition systems. Usually, such diseases occur in subjects belonging to older age groups. Therefore, although the appearance of the iris is not directly affected by aging, the probability of observing inconsistencies between an iris-based biometric template and the current appearance of a subject’s iris increases with age [29].

Hand (palm): Hand biometric templates can be based either on the texture of the palm (palm print) or on hand geometry [30]. In the case of palm prints, aging effects are attributed to similar causes as those encountered with fingerprints, mainly loss of elasticity, wear, and injuries. Age-related modifications of hand geometry are usually recorded during the growth of the skeleton that takes place during childhood and puberty [30,31]. Therefore, hand-geometry-based features display increased aging variation during the growth process; however, after the growth process is completed, the geometry of hands remains reasonably stable, limiting the adverse effects of aging on hand-geometry-based biometric templates [31].

Fingerprint: A fingerprint is a biological marker for human identification [32]. Gender and age estimation represent new research domains for fingerprints [33]. The basic fingerprint pattern remains the same, while the size and shape of the fingerprint vary from infancy to old age. Morphological features, such as ridges, valleys, thickness, size, number of pores, and curvelet domain are the main areas considered in fingerprint-based estimation.

Keystroke (dynamics): Keystroke dynamics refers to the process of measuring and assessing a person’s typing rhythm on digital devices [34], such as computer keyboards, mobile phones, or touch-screen panels. A form of digital footprint is created from human interaction with these devices. Age detection via keystroke analysis can provide circumstantial evidence to support the identification of a suspect in the case of account or identity theft [35]. Moreover, this method can be used as part of a warning system for age discrepancies of users participating in online chats. There are additional applications, such as the validation of information provided during a registration process [36].

Gait: Human gait refers to a person’s manner of walking. Gait pattern changes significantly with advancing age, as gait speed decreases with increasing age. Stride-based properties, reduced velocity, shorter step length, increased variability in step timing, shoulder-hip ratio, and waist-hip ratio are used for age identification [37].

Body movement biometrics: Body movement biometric templates fall under the general category of behavioral biometrics. Body movement biometrics involves the storage of information about the way that certain actions are accomplished by different individuals so that it is possible to recognize subjects based on their actions [38]. Behavioral biometrics authentication systems are affected significantly by aging since both the dynamics and style of human body movement are heavily dependent on age. Both direct aging effects, mainly caused by modification in muscle strength, and indirect aging caused by certain diseases, such as reduced sight and reduced hearing, can affect behavioral biometric templates [39].

DNA (DNA methylation): DNA methylation is a useful candidate for estimating the age at the time of death in forensics [40]. CpG methylation and DNA methylation markers have been utilized to indicate aging in the blood. Recent studies have shown that the aging process is highly related to changes in CpG and DNA methylation patterns. The ELOVL2 gene is a commonly used DNA methylation marker in age estimation. DNA methylation profiling is used to estimate age in forensic cases [41,42]. Genes are mainly used to estimate biological age and are rarely used to estimate chronological age.

Age Estimation Application Fields

The importance of age estimation arises from its applications in various areas, including the following:

- Medicine

Age estimation is an essential task in forensic science for age determination of cadavers carried out in criminal cases and for the assessment of mutilated victims of serious incidents, such as fires, crashes, accidents, homicides, feticides, and infanticides [43,44]. In clinical practice, age estimation is used in treatment planning for various abnormalities, such as pediatric endocrinopathy and orthodontic malocclusion, when the birth certificate is not available. In addition, age estimation is considered an important tool in anthropological research [43].

- Security and Surveillance

In the domain of security and surveillance, age estimation can be used in the surveillance and monitoring of alcohol and cigarette vending machines, and in bars, to prevent underage access to alcoholic drinks and cigarettes. Additionally, age estimation can be used to restrict children’s access to adult websites and movies [45]. Age estimation can also be significant in controlling ATM money transfer fraud by monitoring a particular age group that is apt to commit such crimes [46]. It can also be used in electronic customer relationship management (ECRM), that is, the use of Internet-based technologies, such as websites, emails, forums, and chat rooms, for the effective management of interactions with clients and individuals [47]. Since customers of different ages usually have different preferences, companies may use automatic age estimation to monitor market trends and customize their products and services to meet the needs and preferences of customers in different age groups.

- Illegal Immigration and Civil Issues

Age estimation can be used in the context of illegal immigration, where the age and identity of unknown people need to be determined [48]. Age estimation can also be utilized for civil processes and issues, including marriage contracts or insurance, child abuse, legal consent, asylum proceedings, and social benefits. It can also be used in cases of premature births and adoption. In a criminal investigation, accused persons can be identified based on age information gathered from different sources. In juvenile law, age estimation is important as no delinquent juvenile can be sentenced to death or imprisonment and a court may choose to send an offending juvenile to a juvenile home [49]. Age estimation also has a role in age simulation to assist in the identification of missing persons. Age simulation can also be used to identify older people from earlier images of them [50].

3. Traditional Methods in Dental Age Estimation

In the early 19th century, because of the economic depression due to the industrial revolution, juvenile work and criminality were serious social problems. Edwin Saunders, a dentist, was the first to publish information regarding dental implications for age assessment, presenting a pamphlet entitled “Teeth A Test of Age” to the English parliament in 1837. Since then, various methods have been used for dental age estimation, such as morphological methods [7] and biochemical methods [8], as well as radiological techniques [9]. These techniques are summarized as follows.

Morphological methods are based on the evaluation of teeth outside of the body (ex vivo), which requires the extraction of teeth for microscopic preparation. These methods may therefore not be acceptable for ethical, religious, cultural, or scientific reasons. Morphological methods have been described by Gustafson (1950), Dalitz (1962), Bang and Ramm (1970), Johanson (1971), Maples (1978), and Solheim (1993) [8].

Biochemical methods are based on the racemization of amino acids. Aspartic acid has been reported to have the highest racemization rate of all amino acids and to be stored during aging, so levels of D-aspartic acid increase with age. Some biochemical methods were described in 1975, 1976 and 1995 [8].

Radiographic assessment of age is a simple, non-invasive, and reproducible method that can be employed for both living and dead human beings. Radiographic images used in age identification include intraoral periapical radiographs, lateral oblique radiographs, cephalometric radiographs, and panoramic radiographs, and the imaging modalities used include X-ray imaging, magnetic resonance imaging (MRI), cone-beam computed tomography (CBCT), digital imaging, and other advanced imaging technologies [9,10]. Radiological techniques have recently become an essential tool for identification in forensic science [51].

3.1. Dental Age Estimation in Children and Adolescents

Dental age estimation in children and adolescents is based on the time of emergence of the tooth in the oral cavity and on tooth calcification. In 1941, Schour and Massler studied the development of deciduous and permanent teeth, describing 21 chronological steps occurring from 4 months to 21 years of age, and published numerical development charts for them [52].

- Nolla’s method

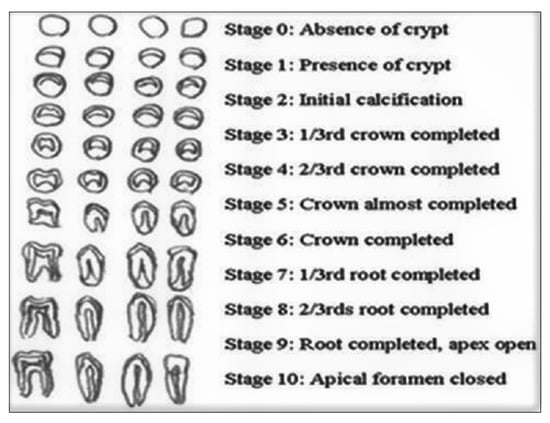

In 1960, Nolla developed a method in which the development of each tooth is divided into ten recognizable stages, numbered from 1 to 10, with stage 0 denoting the absence of a crypt [7,52]. Nolla’s developmental stages are listed below.

- Stage 10: Apical end of root completed.

- Stage 9: Root almost complete; open apex.

- Stage 8: Two-thirds of root completed.

- Stage 7: One-third of root completed.

- Stage 6: Crown completed.

- Stage 5: Crown almost completed.

- Stage 4: Two-thirds of crown completed.

- Stage 3: One-third of crown completed.

- Stage 2: Initial calcification.

- Stage 1: Presence of crypt.

- Stage 0: Absence of crypt.

Figure 2 shows a Nolla dental development chart (10 stages) [7,52]. The stages of development are based on age.

Figure 2. Dental development chart by Nolla [52].

- Moorrees’ method

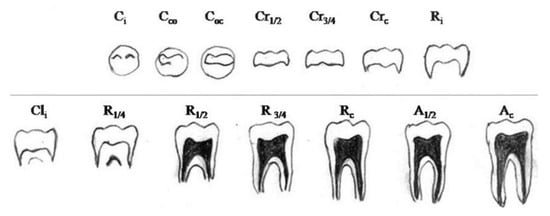

In this method, dental development is described in terms of 14 stages of mineralization for single- and multi-rooted teeth, from initial cusp formation to apical closure, for both genders. Figure 3 shows the 14 stages of tooth formation for a multi-rooted tooth: initial cusp formation (Ci), coalescence of cusps (Cco), cusp outline complete (Coc), crown half complete (Cr 1/2), crown three-quarters complete (Cr 3/4), crown complete (Crc), initial root formation (Ri), initial cleft formation (Cli), root length quarter (R 1/4), root length half (R 1/2), root length three-quarters (R 3/4), root length complete (Rc), apex half closed (A 1/2), and apical closure complete (Ac). The method identifies the stage of each tooth and assigns a corresponding age to it. The per-tooth ages are then averaged to obtain the dental age [53,54].

Figure 3. The 14 stages of tooth formation of a multi-rooted tooth [54].

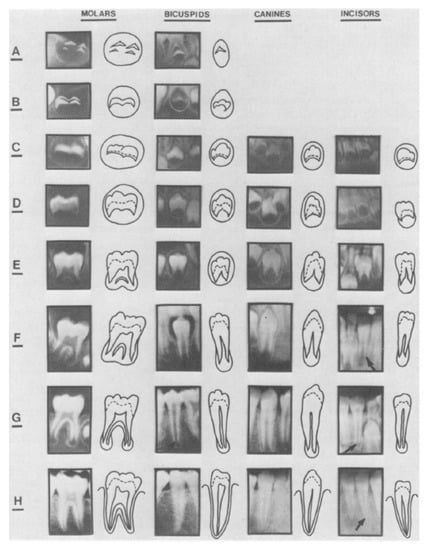

- Demirjian’s method

Demirjian’s method is one of the most frequently used methods to estimate chronological age due to its simplicity and ease of standardization [53,55]. Demirjian and his colleagues introduced a system of age estimation in which tooth development is divided into eight stages [6] for each sex, marked A to H in Figure 4. The stages range from crown and root formation to apex closure and are assessed on the seven left permanent mandibular teeth. The stage descriptions are as follows:

Figure 4. Developmental stages of the permanent dentition [55].

- A: The beginning of calcification is seen at the superior level of the crypt in the form of an inverted cone or cones. There is no fusion of these calcified points.

- B: The fusion of the calcified points forms one or several cusps which unite to give a regularly outlined occlusal surface.

- C: Enamel formation is complete at the occlusal surface. Its extension and convergence towards the cervical region are seen. The beginning of a dentinal deposit is seen. The outline of the pulp chamber has a curved shape at the occlusal border.

- D: The crown formation is completed down to the cementoenamel junction. The superior border of the pulp chamber in the uniradicular teeth has a definite curved form, being concave towards the cervical region. The projection of the pulp horns, if present, gives an outline shaped like an umbrella top. In molars, the pulp chamber has a trapezoidal form. The beginning of root formation is seen.

- E: In uniradicular teeth, the walls of the pulp chamber now form straight lines, the continuity of which is broken by the presence of the pulp horn, which is larger than in the previous stage. The root length is less than the crown height. In molars, the initial formation of the radicular bifurcation is seen in the form of either a calcified point or a semi-lunar shape. The root length is still less than the crown height.

- F: In uniradicular teeth, the walls of the pulp chamber now form a more or less isosceles triangle. The apex ends in a funnel shape. The root length is equal to or greater than the crown height. In molars, the calcified region of the bifurcation has developed further down from its semi-lunar stage to give the roots a more definite and distinct outline with funnel-shaped endings. The root length is equal to or greater than the crown height.

- G: The walls of the root canal are now parallel and the canal’s apical end is still partially open.

- H: The apical end of the root canal is completely closed. The periodontal membrane has a uniform width around the root and the apex.

Each stage is assigned a score. The sum of the scores provides an assessment of the maturity of the individual’s teeth and an estimate of the age of the teeth. Hence each tooth will have a rating, which is assessed by the procedure described in [6,55].
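The scoring step can be sketched as follows. This is only an illustration of the summation, not the published procedure: the numeric values in `PLACEHOLDER_SCORES` are invented for demonstration and would have to be replaced by the sex-specific score tables in [6,55], and the tooth labels and function name are assumptions of this sketch.

```python
# Illustrative sketch of stage-based maturity scoring.
# NOTE: the numeric scores are placeholders, NOT published values.

STAGES = "ABCDEFGH"
# Assumed labels for the seven left permanent mandibular teeth.
TEETH = ["I1", "I2", "C", "PM1", "PM2", "M1", "M2"]

# Hypothetical score table: score_table[tooth][stage].
# Here stage A scores 0.0, B scores 1.0, ..., H scores 7.0 for every tooth.
PLACEHOLDER_SCORES = {
    tooth: {stage: float(i) for i, stage in enumerate(STAGES)}
    for tooth in TEETH
}


def maturity_score(stages_by_tooth, score_table):
    """Sum the per-tooth scores for the observed developmental stages."""
    missing = set(TEETH) - set(stages_by_tooth)
    if missing:
        raise ValueError(f"missing stage rating for: {sorted(missing)}")
    return sum(score_table[tooth][stages_by_tooth[tooth]] for tooth in TEETH)


# Hypothetical stage ratings read from one radiograph.
observed = {"I1": "H", "I2": "G", "C": "F", "PM1": "F",
            "PM2": "E", "M1": "H", "M2": "D"}
total = maturity_score(observed, PLACEHOLDER_SCORES)
print(total)
```

Converting the summed score into a dental age requires the published conversion tables, which are not reproduced here.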

- Cameriere’s method

In 2006, Cameriere et al. published a method of age estimation based on measuring the ratio between the length of the projection of the open apices and the length of the major axis of the tooth (known as the open apices method). Briefly, the method uses the seven left mandibular teeth. The first step is to identify and count the teeth with closed apices; this count is abbreviated N0. For the remaining teeth with open apices, the distance between the inner sides of the open apex (for single-root teeth), or the sum of distances between the inner sides of the open apices (for multi-root teeth), is measured. These measurements are abbreviated to Ai (i = 1–7) and are then divided by the tooth length Li (i = 1–7) to obtain the normalized measurements for the seven teeth (xi = Ai/Li). Then, a variable denoted s is computed, which is equal to N0 + sum(xi) [8,56].
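The quantities N0, xi, and s described above can be computed as in the following minimal sketch. It follows the definition of s given in this section; the input format, the function name, the convention that a tooth with a closed apex contributes xi = 0, and the example measurements are all assumptions made for illustration.

```python
def open_apices_summary(teeth):
    """Compute (N0, [x_i], s) for the seven left mandibular teeth.

    `teeth` is a list of (apex_width, tooth_length) pairs in the same unit.
    apex_width is None when the apex is closed; for multi-rooted teeth it is
    the sum of the open-apex widths.
    """
    if len(teeth) != 7:
        raise ValueError("expected measurements for seven teeth")
    # N0: number of teeth whose apices are closed.
    n0 = sum(1 for width, _ in teeth if width is None)
    # x_i = A_i / L_i; closed apices contribute 0 (assumption of this sketch).
    ratios = [0.0 if width is None else width / length
              for width, length in teeth]
    # s as defined in the text above: N0 + sum(x_i).
    s = n0 + sum(ratios)
    return n0, ratios, s


# Hypothetical measurements in millimetres: three closed, four open apices.
example = [(None, 20.0), (None, 20.0), (None, 20.0),
           (2.0, 20.0), (2.0, 20.0), (2.0, 20.0), (2.0, 20.0)]
n0, ratios, s = open_apices_summary(example)
print(n0, round(s, 3))
```

Normalizing by tooth length makes the measurements comparable across radiographs taken at different magnifications.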

3.2. Dental Age Estimation in Adults

Clinically, the development of permanent dentition completes with the eruption of the third molar at the age of 17–21 years. The methods commonly followed are the assessment of the volume of teeth (pulp-to-tooth ratio method, coronal pulp cavity index) and the development of the third molar (Harris and Nortje method, Van Heerden system) [8,56].

- Pulp-to-tooth ratio method

Age estimation in adults can be achieved by radiological determination of the reduction in the size of the pulp cavity resulting from secondary dentine deposition, which is proportional to the age of the individual. In the pulp-to-tooth ratio method by Kvaal, the ratio is calculated for six mandibular and maxillary teeth: the maxillary central and lateral incisors, the maxillary second premolar, the mandibular lateral incisor, the mandibular canine, and the mandibular first premolar. The age is derived from this pulp-to-tooth ratio [52,53,56].

- The Coronal Pulp Cavity Index

This method calculates the correlation between the reduction in the coronal pulp cavity and the chronological age [52,57]. Only mandibular premolars and molars are considered as mandibular teeth are more visible than maxillary teeth.

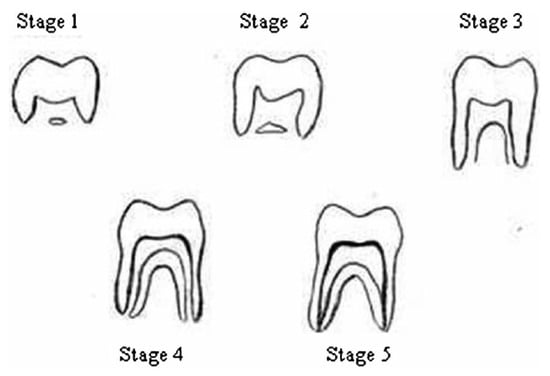

- Harris and Nortje method

Radiographic age estimation becomes problematic after 17 years of age, as the eruption of permanent dentition is completed by that age with the eruption of the third molar. Later, the development of the third molar may be taken as a guide to determining the age of the individual [51]. In the Harris and Nortje method (Figure 5), five stages of third molar root development are described with corresponding mean ages and mean lengths [55]. The stage descriptions are as follows: Stage 1 (cleft rapidly enlarging, one-third root formed, 15.8 ± 1.4 years, 5.3 ± 2.1 mm); Stage 2 (half root formed, 17.2 ± 1.2 years, 8.6 ± 1.5 mm); Stage 3 (two-thirds root formed, 17.8 ± 1.2 years, 12.9 ± 1.2 mm); Stage 4 (diverging root canal walls, 18.5 ± 1.1 years, 15.4 ± 1.9 mm); Stage 5 (converging root canal walls, 19.2 ± 1.2 years, 16.1 ± 2.1 mm) [56].

Figure 5. Dental development chart by Harris and Nortje [56].
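The five Harris and Nortje stages listed above can be held in a small lookup table, as in the sketch below. The numbers are those quoted in this section; the table layout, the function name, and the reading of each "±" value as a symmetric spread around the mean are assumptions made for illustration.

```python
# stage: (description, (mean age in years, spread), (mean length in mm, spread))
HARRIS_NORTJE = {
    1: ("cleft rapidly enlarging, one-third root formed", (15.8, 1.4), (5.3, 2.1)),
    2: ("half root formed", (17.2, 1.2), (8.6, 1.5)),
    3: ("two-thirds root formed", (17.8, 1.2), (12.9, 1.2)),
    4: ("diverging root canal walls", (18.5, 1.1), (15.4, 1.9)),
    5: ("converging root canal walls", (19.2, 1.2), (16.1, 2.1)),
}


def age_interval(stage):
    """Return (mean - spread, mean + spread) in years, rounded to 0.1."""
    _, (mean_age, spread), _ = HARRIS_NORTJE[stage]
    return round(mean_age - spread, 1), round(mean_age + spread, 1)


for stage, (description, _, _) in HARRIS_NORTJE.items():
    low, high = age_interval(stage)
    print(f"Stage {stage}: {description}: {low} to {high} years")
```

The intervals of neighbouring stages overlap considerably, which illustrates why third molar staging gives only a coarse age estimate.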

- Van Heerden system

In the Van Heerden system, the development of the mesial root of the third molar is assessed on a panoramic radiograph to determine age; the system distinguishes five stages [49]. The methods for dental age estimation in adults are minimally invasive and do not require the extraction of teeth. The pulp/tooth ratio calculation has been applied to individuals from different populations, in several variants. One variant is based on linear measurements of the pulp, tooth, and root length, as well as root and pulp width measurements at three different root levels, initially applied to periapical radiographs and later to panoramic radiographs and tomographs. Another is based on pulp and tooth area measurements in periapical and panoramic radiographs. Finally, other methods are based on the pulp/tooth volume ratio from CBCT radiographs [8,57]. Table 1 provides a summary of the traditional methods used in dental age estimation.

Table 1. Summary of traditional methods used in dental age estimation.

4. Deep Learning Techniques

Artificial intelligence (AI) based on deep learning (DL) has sparked tremendous global interest in recent years. DL was developed as an effective machine learning method that learns multiple layers of features or representations of the data and provides state-of-the-art results. The application of DL has produced impressive results in various application areas, particularly in image classification and recognition [68,69], segmentation [70,71], object detection, and natural-language processing [72,73]. Owing to its success and rapid development, DL has been applied in several fields, including robotics, medicine, biology, and commerce, with significant and accurate results. The fundamental concept of DL comes from artificial neural network (ANN) research.

The evolution of artificial neural networks (ANN) began in the 1940s when McCulloch and Pitts published research articles discussing the idea of neural networks in general [74]. The concept of ANN was inspired by the biological model of the human brain. This concept was then transformed into a mathematical formulation and eventually gave rise to machine learning methods that are used to solve many problems. Mathematical formulations, design concepts, algorithms, and computer programs can be constructed from ANN. Artificial neural networks have undergone many changes in their algorithms and their execution. The areas of application are numerous, involving different techniques and approaches to the use of algorithms [75]. The ANN algorithm uses optimization techniques to find the best outcome for the problem to be solved [76]. The basic unit of an ANN is the perceptron, which computes a weighted sum of its inputs and applies a threshold activation function. An ANN contains multiple connected perceptrons arranged in an input layer, hidden layers, and an output layer. Every neuron in one layer is connected to every neuron in the next, so an ANN is also known as a fully connected network [77]. Modern neural networks contain many intermediate hidden layers and are therefore called deep neural networks (DNNs). The number of weights between two consecutive layers is the number of neurons in the current layer multiplied by the number of neurons in the previous layer, so the number of weights grows with the number of neurons in the hidden layers. The number of hidden layers and the number of neurons in each hidden layer are called hyperparameters, which have to be chosen thoughtfully by the network designer according to the application [75].
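As a minimal sketch of the two ideas in this paragraph, the following plain-Python snippet implements a single threshold perceptron and counts the connection weights of a fully connected network. The function names, the bias term, and the example layer sizes are illustrative assumptions.

```python
def perceptron(inputs, weights, bias=0.0, threshold=0.0):
    """Weighted sum of the inputs followed by a step (threshold) activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total >= threshold else 0


def count_weights(layer_sizes):
    """Connection weights in a fully connected network (biases excluded).

    Between two consecutive layers the count is
    (neurons in previous layer) * (neurons in current layer).
    """
    return sum(prev * cur for prev, cur in zip(layer_sizes, layer_sizes[1:]))


# A perceptron acting as a logical AND gate.
and_outputs = [perceptron([a, b], [1.0, 1.0], bias=-1.5)
               for a in (0, 1) for b in (0, 1)]
print(and_outputs)

# Hypothetical network: 4 inputs, two hidden layers of 8 neurons, 1 output.
# 4*8 + 8*8 + 8*1 = 104 weights.
print(count_weights([4, 8, 8, 1]))
```

Doubling the width of both hidden layers in this example raises the count from 104 to 336, which shows how quickly the number of weights grows with hidden-layer size.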

4.1. Convolutional Neural Networks (CNN)

Convolutional neural networks (CNN), first introduced by Fukushima [76] in 1980, have wide applications in activity recognition, sentence classification, text recognition, face recognition, image characterization, object detection, and localization [77,78,79]. CNN is a type of artificial neural network (ANN) with a deep feed-forward architecture and excellent generalization ability compared to networks built only from fully connected (FC) layers. It can learn highly abstract features of objects, especially from spatial data, and identify them efficiently. A CNN is made up of neurons, each with learnable weights and a bias. It contains an input layer, an output layer, and multiple hidden layers, which consist of convolutional layers, pooling layers, fully connected layers, and various normalization layers. In image tasks, its primary purpose is to classify input images into several classes based on the training dataset. Deep CNN has various classical and modern architectures. Major CNN models include LeNet, AlexNet, ZFNet, GoogLeNet, VGGNet, ResNet, Inception model, ResNeXt, SENet, MobileNetV1/V2, DenseNet, Xception model, NASNet/PNASNet/ENASNet, and EfficientNet [77,80]. We briefly describe the major CNN models below.

- LeNet

LeNet-5 [81] (often simply called LeNet) is a simple CNN structure proposed by Yann LeCun in 1998. It has seven layers: three convolution layers, two pooling layers, one fully connected layer, and an output layer. It was one of the earliest architectures developed for convolutional neural networks. The input to LeNet-5 is a 32∗32 grayscale image that passes through the first convolutional layer, which has six filters of size 5∗5 and a stride of one, so the image dimensions change from 32∗32∗1 to 28∗28∗6. The next layer is an average pooling layer.
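The change from 32∗32 to 28∗28 follows from the standard output-size formula for a convolution. The sketch below assumes a stride of 1 and no padding for the first layer, which is what the stated dimensions imply; the 2∗2 pooling window with stride 2 is an assumption taken from the usual description of LeNet-5, and the function name is hypothetical.

```python
def conv_output_size(input_size: int, kernel_size: int,
                     stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


# First convolution of LeNet-5: 32x32 input, 5x5 filters, stride 1, no padding.
after_conv = conv_output_size(32, kernel_size=5, stride=1)

# Assumed 2x2 average pooling with stride 2 applied to the convolution output.
after_pool = conv_output_size(after_conv, kernel_size=2, stride=2)

print(after_conv, after_pool)
```

The number of output channels (six) is set by the number of filters and is independent of this spatial calculation.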

- AlexNet

AlexNet was introduced in 2012 by Alex Krizhevsky [82]. It has a simple layout of eight layers: five convolutional layers and three fully connected layers. The architecture is similar to that of LeNet, but deeper, with stacked convolutional layers and more filters. It has been employed to solve very challenging facial analysis problems, including age estimation and gender recognition. AlexNet is outperformed by deeper models, such as ResNet and GoogLeNet, although these are computationally more expensive.

- ZFNet

This model corresponds to a fine-tuning of the previous AlexNet model. It uses filters of size 7∗7 and a reduced stride value [83]. The idea behind this change was that a smaller filter size in the first convolution layer retains a considerable amount of the original pixel information in the input volume. The model was trained on a GTX 580 GPU for 12 days and was developed with the help of a visualization technique named the deconvolutional network. ZFNet retained the same number of layers as AlexNet but reduced the error rate to 11.7% and, therefore, won the ImageNet Large-Scale Visual Recognition Challenge 2013 (ILSVRC 2013).

- GoogLeNet

GoogLeNet is made of nine inception modules joined together to form a deeper architecture [84]. It uses global average pooling instead of the fully connected layers found in earlier architectures, which dramatically reduces the number of weights. It represents a new CNN topology, different from all previous CNN models. Moreover, it increased the network depth to 22 layers, deeper than the other architectures introduced since 2010. It outperforms both AlexNet and VGGNet in terms of the top-five error, which is reduced to 6.67%.

- VGGNet

The visual geometry group (VGG) network [85] is a CNN model introduced by the Visual Geometry Group at the University of Oxford. It generalizes the AlexNet model by increasing the depth from 8 layers (AlexNet) to 16–19 layers (VGG16 and VGG19) while using small filters. Instead of a single 7 × 7 filter, a stack of three 3 × 3 filters is used in the convolution layers, and a SoftMax layer occurs at the output. A ReLU layer is provided after every hidden layer. The basic architecture of VGG-16 is presented. One of the most appealing points about this architecture is its simplicity and uniformity. VGG-16 uses a total of about 138 M parameters. The top-five error of VGGNet is 7.32%, which is much lower than that of AlexNet.
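The trade-off behind replacing one 7 × 7 filter with a stack of three 3 × 3 filters can be checked with a short calculation. This is a sketch under stated assumptions: stride-1 convolutions, the same number of input and output channels in every layer, and no bias terms. The channel count of 64 and the function names are hypothetical.

```python
def stacked_receptive_field(kernel_size: int, num_layers: int) -> int:
    """Receptive field of num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Number of weights in one convolution layer (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


channels = 64  # hypothetical channel count, kept constant across layers

# Three stacked 3x3 layers see the same 7x7 input window as one 7x7 layer.
print(stacked_receptive_field(3, 3), stacked_receptive_field(7, 1))

# Weight cost of the two alternatives for the same receptive field.
stack_cost = 3 * conv_weights(3, channels, channels)
single_cost = conv_weights(7, channels, channels)
print(stack_cost, single_cost)
```

Under these assumptions the stack needs 27C² weights against 49C² for the single large filter, and it also applies three nonlinearities instead of one.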

- ResNet

The ResNet architecture was developed by He et al. [86], primarily to improve the performance of existing CNN architectures, such as VGGNet, GoogLeNet, and AlexNet. The ResNet models investigated so far include ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152. This architecture secured first place in the ImageNet challenge (ILSVRC 2015) with a 3.57% error and, for the first time, a CNN achieved an error rate better than human-level performance.

- Inception

The Inception network was an important milestone in the development of CNN classifiers. Its main contribution is a drastic reduction in the number of network parameters compared to the traditional CNN used in AlexNet. The model introduced a new technique called an inception layer. This approach is not only computationally efficient compared to the traditional approach, but also provided the best recognition accuracy in ILSVRC 2014. In terms of network parameters and memory, GoogLeNet needs only 4 M parameters, whereas AlexNet needs around 60 M [84].

- ResNeXt

The ResNeXt model was inspired by the VGG and ResNet models and has been valued for its improved performance in image classification tasks [87]. It uses a split–transform–merge strategy. The model consists of a stack of residual blocks that share the same topology and follow rules inspired by ResNet and VGG. It is built by repeating a building block that aggregates a set of transformations with the same topology. This model secured second place in the ILSVRC 2016 classification challenge.

- SENet

This model, proposed by Hu [88], enhances the representational power of a network by enabling it to perform dynamic channel-wise feature recalibration. It concentrates on the relationships between channels and introduces an architectural unit termed the squeeze and excitation (SE) block, which models channel interdependencies at only a slight additional computational cost. The block uses global average pooling to obtain channel-wise statistics: features are first passed through the squeeze operation, and sample-specific channel activations are then produced by the excitation operation. SENets formed the basis of the first-place ILSVRC 2017 classification submission, reducing the top-five error to 2.251%.
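The squeeze and excitation steps can be sketched in plain Python. This is an illustrative sketch, not the implementation from [88]: it assumes the commonly described form of the block (global average pooling, two small fully connected layers with a ReLU between them, a sigmoid gate, and channel-wise rescaling). All function names and the example weights are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def squeeze(feature_maps: List[Matrix]) -> List[float]:
    """Global average pooling: one statistic per channel."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def dense(vector: List[float], weights: Matrix, biases: List[float]) -> List[float]:
    """Fully connected layer; each row of weights produces one output."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, biases)]


def excitation(stats: List[float], w1: Matrix, b1: List[float],
               w2: Matrix, b2: List[float]) -> List[float]:
    """Bottleneck FC -> ReLU -> FC -> sigmoid, giving one gate per channel."""
    hidden = [max(0.0, h) for h in dense(stats, w1, b1)]
    return [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w2, b2)]


def se_block(feature_maps: List[Matrix], w1: Matrix, b1: List[float],
             w2: Matrix, b2: List[float]) -> List[Matrix]:
    """Rescale every channel by its sample-specific gate."""
    gates = excitation(squeeze(feature_maps), w1, b1, w2, b2)
    return [[[g * v for v in row] for row in fmap]
            for fmap, g in zip(feature_maps, gates)]


# Two 2x2 channels and a bottleneck of one hidden unit (hypothetical weights).
maps = [[[1.0, 3.0], [5.0, 7.0]], [[2.0, 2.0], [2.0, 2.0]]]
out = se_block(maps, w1=[[0.5, -0.5]], b1=[0.0], w2=[[1.0], [-1.0]], b2=[0.0, 0.0])
print(out)
```

Because the gates depend on the pooled statistics of the current input, the recalibration differs from sample to sample, which is what the excitation step is meant to provide.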

- MobileNetV1/V2

MobileNet is a type of convolutional neural network designed for mobile and embedded vision applications. It is based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks with low latency on mobile and embedded devices [89]. In MobileNetV1, the standard convolution is replaced by a depthwise convolution followed by a pointwise convolution; together these are called a depthwise separable convolution. The depthwise step applies a single filter to each input channel separately, rather than combining all channels at once, and the pointwise step then mixes the channels. This architecture was proposed by Google. MobileNetV2 also uses the depthwise separable convolution as an efficient building block. It introduces two new features in the design: linear bottlenecks between the layers and shortcut connections between the bottlenecks. Each block contains three convolutional layers. MobileNetV2 is faster than MobileNetV1 at similar accuracy across the whole latency spectrum. Specifically, it achieves higher accuracy and is around 30–40% faster on a Google Pixel phone than MobileNetV1. It outperforms GoogLeNet and VGGNet.
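The saving obtained from a depthwise separable convolution can be made concrete by counting weights. The sketch below ignores bias terms; the function names and the example layer (3 × 3 kernel, 32 input channels, 64 output channels) are hypothetical.

```python
def standard_conv_weights(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights of a standard convolution: every filter spans all input channels."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_weights(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights of a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel_size * kernel_size * in_channels  # one filter per input channel
    pointwise = in_channels * out_channels               # 1x1 convolution mixing channels
    return depthwise + pointwise


standard = standard_conv_weights(3, 32, 64)
separable = depthwise_separable_weights(3, 32, 64)
print(standard, separable, separable / standard)
```

The ratio equals 1/out_channels + 1/kernel_size², so for 3 × 3 kernels the separable form needs roughly eight to nine times fewer weights.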

- DenseNet

DenseNet [90] connects each layer to every other layer in a feed-forward fashion. Whereas traditional convolutional networks with L layers have L connections—one between each layer and its subsequent layer—this network has L (L + 1)/2 direct connections. For each layer, the feature maps of all preceding layers are used as inputs and its own feature maps are used as inputs into all subsequent layers. DenseNet has several advantages, such as alleviating the vanishing-gradient problem, strengthening feature propagation, encouraging feature reuse, and substantially reducing the number of parameters. It is quite similar to ResNet, though it has some fundamental differences.
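The L(L + 1)/2 connection count can be verified by enumerating the connections directly. In this sketch, index 0 stands for the input to the dense block and indices 1 to L for its layers. The channel-growth rule (each layer adds a fixed number of feature maps, the growth rate) is an assumption based on the usual description of DenseNet, and the numbers used are hypothetical.

```python
from typing import List, Tuple


def dense_block_connections(num_layers: int) -> List[Tuple[int, int]]:
    """All (source, destination) pairs in a dense block with num_layers layers."""
    # Layer dst receives the block input (0) and the outputs of layers 1..dst-1.
    return [(src, dst) for dst in range(1, num_layers + 1) for src in range(dst)]


def input_channels(layer_index: int, initial_channels: int, growth_rate: int) -> int:
    """Feature maps fed into a layer when every earlier layer adds growth_rate maps."""
    return initial_channels + growth_rate * (layer_index - 1)


layers = 5
connections = dense_block_connections(layers)
print(len(connections), layers * (layers + 1) // 2)

# Hypothetical block: 64 input channels, growth rate 32.
print([input_channels(i, 64, 32) for i in range(1, layers + 1)])
```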

- Xception

Xception [91] is a deep convolutional neural network architecture based on depthwise separable convolutions. It was developed by Google researchers, who interpreted the Inception modules in convolutional neural networks as an intermediate step between a regular convolution and the depthwise separable convolution operation (a depthwise convolution followed by a pointwise convolution). In this context, a depthwise separable convolution can be understood as an Inception module with a maximally large number of towers. This observation led them to propose a novel deep convolutional neural network architecture inspired by Inception, in which the Inception modules are replaced with depthwise separable convolutions. The Xception architecture has outperformed VGG-16, ResNet, and Inception V3 in most classical classification challenges. Xception is an efficient architecture that relies on two main features: depthwise separable convolutions and shortcuts between convolution blocks, as in ResNet.

- EfficientNet

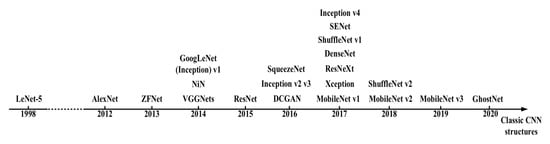

EfficientNet [92] is a convolutional neural network architecture and scaling method that uniformly scales the depth, width, and resolution dimensions using a compound coefficient. Unlike conventional practice, which scales these factors arbitrarily, the EfficientNet scaling method scales network width, depth, and resolution evenly with a set of fixed scaling coefficients. This yields a large reduction in parameters and computations, and the resulting models are 5 to 10 times more efficient than previous CNNs.

Figure 6 shows the major CNN models from 1998 to 2020. All these CNN architectures can be employed for any computer vision application by making minor modifications to the architecture and applying transfer learning. Recently, convolutional neural network research has included experiments on automated age estimation, and the results obtained are far more accurate than those of any other classification technique for automated age estimation. Many researchers are investigating the use of CNN architectures for various applications in dentistry, but, until recently, little work has been directed toward the use of deep CNN for age estimation from dental images [17].

Figure 6.

The evolution of CNN models [78].
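The compound scaling used by EfficientNet, described above, can be sketched as follows. The rule multiplies depth, width, and resolution by α^φ, β^φ, and γ^φ, where φ is the compound coefficient. The default values α = 1.2, β = 1.1, and γ = 1.15 are the ones commonly quoted for the EfficientNet-B0 baseline in [92] and should be treated as assumptions here; the function name is hypothetical.

```python
from typing import Dict


def compound_scaling(phi: float, alpha: float = 1.2, beta: float = 1.1,
                     gamma: float = 1.15) -> Dict[str, float]:
    """Multipliers for depth, width, and resolution at compound coefficient phi."""
    return {
        "depth": alpha ** phi,        # more layers
        "width": beta ** phi,         # more channels per layer
        "resolution": gamma ** phi,   # larger input images
        # Cost grows roughly with depth * width^2 * resolution^2.
        "relative_cost": (alpha * beta ** 2 * gamma ** 2) ** phi,
    }


for phi in range(4):
    print(phi, compound_scaling(phi))
```

With these coefficients the product α·β²·γ² is close to 2, so each unit increase of φ roughly doubles the computational cost while keeping the three dimensions in balance.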

4.2. Capsule Networks (CapsNet)

CapsNet is a more recent neural network architecture that represents an advanced approach compared to previous neural network designs, particularly for computer vision tasks. A capsule is a group of neurons that stores different information about the object it attempts to identify in a given image, mostly about the object's position, rotation, scale, and so on, in a high-dimensional vector space (8 or 16 dimensions), with each dimension representing a property of the object that can be understood intuitively [93]. The architecture of a capsule network is divided into three main parts, each with sub-operations: primary capsules (convolution, reshape, squash), higher-layer capsules (routing by agreement), and loss calculation (margin loss, reconstruction loss) [94].
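Two of the sub-operations named above, squash and margin loss, can be sketched in a few lines. This is a minimal sketch following the commonly cited capsule network formulation; the constants 0.9, 0.1, and 0.5 are the usual defaults and are assumptions here, as are the function names.

```python
import math
from typing import List


def squash(s: List[float], eps: float = 1e-9) -> List[float]:
    """Shrink a capsule vector so its length lies in [0, 1) while keeping its direction."""
    squared_norm = sum(x * x for x in s)
    scale = squared_norm / (1.0 + squared_norm) / math.sqrt(squared_norm + eps)
    return [scale * x for x in s]


def margin_loss(lengths: List[float], target: int, m_plus: float = 0.9,
                m_minus: float = 0.1, lam: float = 0.5) -> float:
    """Margin loss over the output capsule lengths for one sample."""
    loss = 0.0
    for k, length in enumerate(lengths):
        if k == target:
            # The capsule of the true class should be long.
            loss += max(0.0, m_plus - length) ** 2
        else:
            # Capsules of absent classes should be short.
            loss += lam * max(0.0, length - m_minus) ** 2
    return loss


v = squash([3.0, 4.0])
print(math.sqrt(sum(x * x for x in v)))
print(margin_loss([0.5, 0.5], target=0))
```

The length of a squashed capsule can be read as the probability that the entity it represents is present, which is why the loss is defined on capsule lengths.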

A conventional convolutional neural network does not explicitly capture the spatial relationships between the features of an object. The capsule neural network was introduced to overcome this limitation. Capsules can correctly identify an object even when the image is rotated. All capsules in the first layer predict the output of the capsules in the next layer. Once the capsules in the first layer identify the capsules in the second layer with which they agree, their outputs are routed only to those capsules. For instance, a CNN trained to detect a face will still report a face irrespective of where the eyes are positioned. Equivariance, by contrast, ensures that the spatial location of the features on the face is taken into account: it considers not just the presence of an eye in the image, but also its location. CapsNet may therefore be useful in future studies that require higher accuracy, and we anticipate accurate results when using CapsNet for age estimation. Finally, DL and CNN have dramatically improved performance compared to traditional machine learning (ML) techniques, such as support vector machine (SVM) and naive Bayes, and several advancements in DL have made these models more reliable and adaptive.

4.3. Dental Age Estimation Studies Based on Deep Learning

Chronological age estimation using dental images is widely used in criminal investigation, forensics, dentistry, orthodontics, and anthropology. However, it was not until recently that some work was directed at deep CNN for age estimation using dental images. In this section, we present studies that used DL techniques and the CNN architectures reviewed in the previous section to estimate dental age, compare the accuracy obtained with the different architectures, and discuss the challenges faced in age estimation.

- X-ray images

Essam et al. (2019) [95] used 1429 panoramic dental X-ray images with CNN models based on AlexNet and ResNet-101 to extract features from the images. Several classifiers were used to perform the classification task, including a decision tree, k-nearest neighbor, linear discriminant, and support vector machine. The subjects were grouped into eight age groups in the range of 0 to 70 years. The results showed that AlexNet-based features were better than ResNet-based features. In addition, k-nearest neighbor (K-NN) was found to be the best classifier. In another recent study, Seunghyeon et al. (2021) [96] used 2025 dental X-ray images of the first molars, and a deep neural network with 152 layers (ResNet-152) was trained to predict the age group. The CNN successfully exploited the features of the images to predict the correct age groups. The accuracy of estimation was 89.05 to 90.27%. The age groups were 0 to 10 y, 10 to 19 y, 20 to 29 y, 30 to 39 y, 40 to 49 y, 50 to 59 y, and greater than 60 y.

Jaeyoung et al. (2019) [97] evaluated a CNN model based on DenseNet-121 on 9435 panoramic dental X-ray images, training it to predict chronological age from the input image. Subjects ranging from 2 to 98 years were grouped into three age groups: 2 to 11, 12 to 18, and 19 years or above. The CNN model showed a low mean absolute error for the three age groups. Denis et al. (2022) [98] studied age estimation from X-ray images of individual teeth using a dataset of 4035 images. The age range was from 19 to 90 years. The models for age estimation were trained on the entire panoramic dental X-ray images and on specific regions of interest, as well as on individual teeth. The following pre-trained convolutional networks were tested in their transfer learning experiments: DenseNet201, Inception, ResNetV2, ResNet50, VGG16, VGG19, and Xception. The best-performing model was VGG16.
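The mean absolute error (MAE) per age group, the metric reported in [97], can be computed as in the following sketch. The group boundaries follow the three groups named above; the function names and the ages in the example are made up purely for illustration and are not results from any study.

```python
from collections import defaultdict
from typing import Dict, List


def age_group(age: float) -> str:
    """Assign a chronological age to one of the three groups used above."""
    if age < 12:
        return "2-11"
    if age < 19:
        return "12-18"
    return "19+"


def mae_by_group(true_ages: List[float], predicted_ages: List[float]) -> Dict[str, float]:
    """Mean absolute error in years, grouped by the true chronological age."""
    errors = defaultdict(list)
    for true_age, predicted in zip(true_ages, predicted_ages):
        errors[age_group(true_age)].append(abs(true_age - predicted))
    return {group: sum(values) / len(values) for group, values in errors.items()}


# Made-up chronological and predicted ages, for illustration only.
true_ages = [5.0, 10.0, 15.0, 30.0]
predicted_ages = [6.0, 8.0, 15.0, 34.0]
print(mae_by_group(true_ages, predicted_ages))
```

Reporting the error per group rather than as a single figure matters because estimation is typically much harder in adults, whose dentition is fully developed, than in children.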

Nicolás et al. (2020) [99] proposed two fully automatic CNN-based methods to estimate chronological age from orthopantomogram (OPG) images (upper and lower jaw) of subjects aged between 4.5 and 89.2 years. The first method, DANet, consists of a single sequential CNN path in which convolution and pooling layers are interleaved to learn image features at different scales and, ultimately, to estimate age. The second method, DASNet, adds a second path, nearly identical to the first, to predict sex. The sex path shares information with the age path at intermediate points to force it to use sex-specific features. In this way, sex information is taken into account to improve the age-estimation process. The results showed that the DASNet method can be used to predict a person's chronological age automatically and accurately, especially in young subjects with developing dentition. A summary of the dental age estimation studies using DL reviewed in this section is provided below in Table 2.

In another study, Syed et al. (2020) [100] used dental X-ray images (orthopantomography) of the first-to-third molar teeth of Malaysian children (1 to 17 y). A pre-trained DCNN was used to extract features from the images and predict age. The results indicated strong performance, enabling age estimation with high accuracy. Yu-cheng et al. (2021) [101] compared the traditional manual method based on the Demirjian method with CNN models for legal age threshold classification, based on a large sample of dental orthopantomograms (OPGs) of subjects aged between 5 and 24 years. They selected the EfficientNet network, which uses a compound model scaling algorithm that achieves a significant improvement in accuracy by jointly optimizing the network width, network depth, and resolution [92]. At the same time, to ensure the reliability of the final result, they also adopted SE-ResNet 101, which embeds the squeeze and excitation block (SE-block) into ResNet, adding more nonlinear operations so that the model can better fit the complex correlation between channels. The end-to-end classification was based on EfficientNet and SE-ResNet. The results demonstrated that CNN models can surpass humans in age classification and that the features extracted by machines may be different from those defined by humans. Galibourg et al. (2020) [102] compared the Demirjian and Willems methods with machine learning regression models for age estimation in subjects between 2 and 24 years, and found that all machine learning models based on the developmental stages defined by Demirjian were more accurate in dental age estimation.

- Cone-beam computed tomography (CBCT) images

Qiang et al. (2021) [103] developed a method to estimate the age of 180 patients aged between 10 and 60 y using the pulp chamber of the first molars from 3D cone-beam computed tomography (CBCT) images and a deep learning model (ResBlocks) [104,105]. The DL model was trained for coarse segmentation. It provides automatic, rapid, and accurate segmentation of the pulp chamber, followed by calculation of the pulp chamber volume, from which the age is estimated.

- Magnetic resonance imaging (MRI)

Stern et al. (2019) [106] first reported two approaches, a random forest that performs nonlinear regression and a deep CNN (DCNN), applied to magnetic resonance imaging (MRI) data of the hand, clavicle, and teeth (third molar) of subjects aged between 13 and 25 years, achieving an accuracy of 85.09%.

Table 2.

Summary of dental age estimation methods based on deep learning techniques.

| Publication | DL Model | Method and Tooth | Database | Age (years) | Performance |
| --- | --- | --- | --- | --- | --- |
| Jaeyoung et al., 2019 [97] | DenseNet-121. | Panoramic dental X-ray images, permanent teeth. | National Research Foundation of Korea (NRF) dataset. | 2–98 | The CNN model demonstrated a low mean absolute error for the three age groups: 2–11 y: 0.828, 12–18 y: 1.229, 19+ y: 4.398. |
| Seyed et al., 2019 [100] | DCNN model. | Dental X-ray (orthopantomography), the first-to-third molar. | Malaysian children's dental development. | 1–17 | The method classifies the images efficiently, enabling automated age estimation with high accuracy and precision; the age of male patients was estimated more precisely than that of female patients in similar age groups. |
| Nicolás et al., 2020 [99] | DentalAgeNet (DANet), Dental Age and SexNet (DASNet) approach. | OPG images, upper and lower jaw. | Spanish Caucasian subjects (SCS) dataset. | 4.5–89 | The DASNet provided better results than the DANet; the mean absolute error was about 2 years and 10 months, with a median error of +0.12 years. |
| Qiang et al., 2020 [103] | CNN model (ResBlock). | 3D cone-beam computed tomography (CBCT) images, the pulp chamber of first molars. | 3D (CBCT) images dataset. | 10–60 | The model segmented the pulp chamber of first molars from 3D CBCT images to derive pulp chamber volumes. The estimated ages were not significantly different from the true ages. The correlation coefficient r = 0.74. |
| Yu-cheng et al., 2021 [101] | CNN model (EfficientNet and SE-ResNet). | OPG images, left mandibular eight permanent teeth or the third molar separately. | Chinese OPG images dataset. | 5–24 | CNN models are better than the manual method, with high accuracy. The accuracy for the age thresholds of 14 and 16 years exceeds 95%, and that for 18 years reaches 93.3%. |
| Seunghyeon et al., 2021 [96] | DNN with 152 layers (ResNet152). | Panoramic radiographs, four first molar images from the right and left sides of the maxilla and mandible. | The Kyung Hee University (IRB) dataset. | 0–60+ | The overall accuracy when using a CNN model is 90.37 ± 0.93%. |
| Essam et al., 2021 [95] | CNN model: AlexNet and ResNet-101. | Dental X-ray images (upper and lower jaw). | Dataset obtained from Ebtisama Clinic in Kuwait. | 0–70 | AlexNet and ResNet both gave a high performance, but the AlexNet-based features were better than the ResNet features. |
| Denis et al., 2022 [98] | (VGG, ResNet50, DenseNet201, InceptionNet V2) models. | Panoramic dental X-ray images. | Dataset collected from the Dental Anthropology School of Dental Medicine, University of Zagreb, in Croatia. | 19–90 | The best-performing CNN model was VGG16, with a median estimation error of 2.95 years for panoramic dental X-ray images. |

5. Dental Age Databases

The availability of appropriate dental age estimation databases plays an important role in the evolution of the research field of age estimation, as it allows researchers to engage in research activities quickly. In the research area of soft biometrics, good-quality age-related dental images are also of the utmost importance for the success of the research. However, there is a lack of databases for dental age estimation. In Table 3, we summarize the dental age databases that are used in research and provide a brief description in terms of the type of images used, such as X-rays, panoramic radiographs, magnetic resonance imaging, and cone-beam computed tomography, as well as the number of images, the methods used in dental age estimation, and the accuracy of the results.

Table 3.

Summary of dental datasets used for age estimation.

6. Challenges and Problems

Several challenges and factors hinder age determination. A normal lifetime is divided into different stages: childhood, young adulthood, adulthood, and old age. Each stage is characterized by certain biological and physiological changes, such as in tooth shape and size and in bone structure, and is also affected by ethnicity and disease, including diseases that are common in different countries, such as cleft lip and/or palate (CLP), one of the most common malformations [110]. Patients with cleft lip or palate have dental issues related to the number of teeth, their shape, the time of eruption, and the presence of supernumerary teeth, reflecting real-world environmental problems [111].

In addition, there is a large variation in dental condition after puberty due to dietary habits and dental care. Choosing the appropriate method for a particular case is challenging because there is a lack of uniformity in the procedures and methods used worldwide. Methods are population specific; in most cases, age is either overestimated or underestimated when a method is applied to a different population because of differences in ethnicity and race. Real-world environmental problems may include weather, stress, lifestyle, ultraviolet (UV) rays, solar radiation, lighting, color mode, disasters, and diseases related to the environment. Moreover, it is very challenging to develop a system that estimates age across all age groups. Therefore, we can conclude that creating a dataset is an important, relevant, and very challenging task.

7. Conclusions and Future Work

This paper reviews the different dental age estimation methods, showing the efficiency of machine learning and deep learning methods compared to traditional dental age methods. Automated methods are more feasible than manual and semi-automated methods as they help to reduce human observation errors, computation time, and the use of clinical equipment. Little research has been conducted in the area of dental age estimation utilizing deep learning. Our survey indicates that, for dental age estimation, deep learning models such as VGGNet16, the Inception model, EfficientNet, the Xception model, and MobileNetV1/V2 show high accuracy. Therefore, there are great opportunities for future work to estimate dental age using deep learning techniques and convolutional neural network (CNN) architectures that have not been widely used to date. We considered the emergence of deep CNN and discussed several recent approaches, highlighting the advantages and limitations of each. With the availability of bigger and more comprehensive datasets and the introduction of more advanced models, it is anticipated that the use of deep learning in age estimation based on teeth will lead to improved accuracy.

Author Contributions

Conceptualization, E.G.M., R.P.D.R., A.K., M.S.E.-M. and M.K.; methodology, E.G.M., R.P.D.R., M.K.; validation, E.G.M., R.P.D.R.; formal analysis, E.G.M.; investigation, E.G.M., M.K.; resources, E.G.M., M.S.E.-M.; data curation, E.G.M.; writing—original draft preparation, E.G.M., R.P.D.R.; writing—review and editing, E.G.M., M.K., A.K.; visualization, M.K., R.P.D.R., A.K.; supervision, M.K., R.P.D.R., A.K.; funding acquisition, R.P.D.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Spanish Government under the research project “Enhancing Communication Protocols with Machine Learning while Protecting Sensitive Data (COMPROMISE)” (PID2020-113795RB-C33/AEI/10.13039/501100011033). Additionally, this work has received financial support from the European Regional Development Fund (ERDF) and the Galician Regional Government under the agreement for funding the Atlantic Research Center for Information and Communication Technologies (atlanTTic).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Reid, D.A.; Samangooei, S.; Chen, C.; Nixon, M.S.; Ross, A. Soft biometrics for surveillance: An overview. Handb. Stat. 2013, 31, 327–352. [Google Scholar]

- Lanitis, A. A survey of the effects of aging on biometric identity verification. Int. J. Biom. 2010, 2, 34. [Google Scholar] [CrossRef]

- Patil, K.A.; Bhavsar, R.P.; Pawar, B.V. Features and methods of human age estimation: Opportunities and challenges in medical image processing. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 294–318. [Google Scholar]

- Verma, M.; Verma, N.; Sharma, R.; Sharma, A. Dental age estimation methods in adult dentitions: An overview. J. Forensic Dent. Sci. 2019, 11, 57. [Google Scholar] [CrossRef] [PubMed]

- Anemone, R.L.; Mooney, M.P.; Siegel, M.I. Longitudinal study of dental development in chimpanzees of known chronological age: Implications for understanding the age at death of Plio-Pleistocene hominids. Am. J. Phys. Anthropol. 1996, 99, 119–133. [Google Scholar] [CrossRef]

- Willems, G. A review of the most commonly used dental age estimation techniques. J. Forensic-Odonto-Stomatol. 2001, 19, 9–17. [Google Scholar]

- Nishanth, G.; Anitha, N.; Babu, N.A.; Malathi, L. Morphological Dental Age Estimation Technique-A Review. Eur. J. Mol. Clin. Med. 2020, 7, 2020. [Google Scholar]

- Priyadarshini, C.; Puranik, M.P.; Uma, S. Dental Age Estimation Methods—A Review; LAP Lambert Academic Publication: London, UK, 2015. [Google Scholar]

- De Tobel, J.; Hillewig, E.; Verstraete, K. Forensic age estimation based on magnetic resonance imaging of third molars: Converting 2D staging into 3D staging. Ann. Hum. Biol. 2017, 44, 121–129. [Google Scholar] [CrossRef]

- Bjørk, M.B.; Kvaal, S.I. CT and MR imaging used in age estimation: A systematic review. J. Forensic-Odonto-Stomatol. 2018, 36, 14. [Google Scholar]

- Szemraj, A.; Wojtaszek-Słomińska, A.; Racka-Pilszak, B. Is the cervical vertebral maturation (CVM) method effective enough to replace the hand-wrist maturation (HWM) method in determining skeletal maturation?—A systematic review. Eur. J. Radiol. 2018, 102, 125–128. [Google Scholar] [CrossRef] [PubMed]

- Angulu, R.; Tapamo, J.R.; Adewumi, A.O. Age estimation via face images: A survey. Eurasip J. Image Video Process. 2018, 2018, 1–35. [Google Scholar] [CrossRef]

- Punyani, P.; Gupta, R.; Kumar, A. Neural networks for facial age estimation: A survey on recent advances. Artif. Intell. Rev. 2020, 53, 3299–3347. [Google Scholar] [CrossRef]

- Townsend, N.; Hammel, E. Age estimation from the number of teeth erupted in young children: An aid to demographic surveys. Demography 1990, 27, 165–174. [Google Scholar] [CrossRef]

- Yan, C.; Lang, C.; Wang, T.; Du, X.; Zhang, C. Age estimation based on convolutional neural network. In Proceedings of the Pacific Rim Conference on Multimedia, Kuching, Malaysia, 1–4 December 2014; pp. 211–220. [Google Scholar]

- Zheng, T.; Deng, W.; Hu, J. Age estimation guided convolutional neural network for age-invariant face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1–9. [Google Scholar]

- Alkaabi, S.; Yussof, S.; Al-Khateeb, H.; Ahmadi-Assalemi, G.; Epiphaniou, G. Deep Convolutional Neural Networks for Forensic Age Estimation: A Review. In Cyber Defence in the Age of AI, Smart Societies and Augmented Humanity; Springer: Cham, Switzerland, 2020; pp. 375–395. [Google Scholar]

- Sharma, A.; Rai, A. An Improved DCNN-based classification and automatic age estimation from multi-factorial MRI data. In Advances in Computer, Communication and Computational Sciences; Springer: Berlin/Heidelberg, Germany, 2021; pp. 483–495. [Google Scholar]

- Dong, Y.; Liu, Y.; Lian, S. Automatic age estimation based on deep learning algorithm. Neurocomputing 2016, 187, 4–10. [Google Scholar] [CrossRef]

- Xia, M.; Zhang, X.; Weng, L.; Xu, Y. Multi-stage feature constraints learning for age estimation. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2417–2428. [Google Scholar] [CrossRef]

- Liu, K.H.; Liu, T.J. A structure-based human facial age estimation framework under a constrained condition. IEEE Trans. Image Process. 2019, 28, 5187–5200. [Google Scholar] [CrossRef]

- Kaushik, M.; Pham, V.T.; Chng, E.S. End-to-end speaker height and age estimation using attention mechanism with LSTM-RNN. arXiv 2021, arXiv:2101.05056. [Google Scholar]

- Moyse, E. Age estimation from faces and voices: A review. Psychol. Belg. 2014, 54, 255–265. [Google Scholar]

- Mahmoodi, D.; Marvi, H.; Taghizadeh, M.; Soleimani, A.; Razzazi, F.; Mahmoodi, M. Age estimation based on speech features and support vector machine. In Proceedings of the 2011 3rd Computer Science and Electronic Engineering Conference (CEEC), Colchester, UK, 13–14 July 2011; pp. 60–64. [Google Scholar]

- Harnsberger, J.D.; Shrivastav, R.; Brown, W., Jr.; Rothman, H.; Hollien, H. Speaking rate and fundamental frequency as speech cues to perceived age. J. Voice 2008, 22, 58–69. [Google Scholar] [CrossRef]

- Maggio, A. The skeletal age estimation potential of the knee: Current scholarship and future directions for research. J. Forensic Radiol. Imaging 2017, 9, 13–15. [Google Scholar] [CrossRef]

- Erbilek, M.; Fairhurst, M.; Abreu, M.C.D.C. Age prediction from iris biometrics. In Proceedings of the 5th International Conference on Imaging for Crime Detection and Prevention (ICDP 2013), London, UK, 16–17 December 2013; pp. 1–5. [Google Scholar]

- Rajput, M.; Sable, G. Deep Learning Based Gender and Age Estimation from Human Iris. In Proceedings of the Proceedings of the International Conference on Advances in Electronics, Electrical & Computational Intelligence (ICAEEC), Allahabad, India, 31 May–1 June 2019. [Google Scholar]

- Machado, C.E.P.; Flores, M.R.P.; Lima, L.N.C.; Tinoco, R.L.R.; Franco, A.; Bezerra, A.C.B.; Evison, M.P.; Guimarães, M.A. A new approach for the analysis of facial growth and age estimation: Iris ratio. PLoS ONE 2017, 12, e0180330.

- Damak, W.; Trabelsi, R.B.; Masmoudi, A.D.; Sellami, D. Palm vein age and gender estimation using center symmetric-local binary pattern. In Proceedings of the Computational Intelligence in Security for Information Systems Conference, International Conference on EUropean Transnational Education, Burgos, Spain, 16–18 September 2020; pp. 114–123.

- Štern, D.; Payer, C.; Urschler, M. Automated age estimation from MRI volumes of the hand. Med. Image Anal. 2019, 58, 101538.

- Cadd, S.; Islam, M.; Manson, P.; Bleay, S. Fingerprint composition and aging: A literature review. Sci. Justice 2015, 55, 219–238.

- Ceyhan, E.B.; Sağıroğlu, Ş.; Tatoğlu, S.; Atagün, E. Age estimation from fingerprints: Examination of the population in Turkey. In Proceedings of the 2014 13th International Conference on Machine Learning and Applications, Washington, DC, USA, 3–6 December 2014; pp. 478–481.

- Tsimperidis, I.; Rostami, S.; Katos, V. Age detection through keystroke dynamics from user authentication failures. Int. J. Digit. Crime Forensics (IJDCF) 2017, 9, 1–16.

- Pisani, P.H.; Lorena, A.C. A systematic review on keystroke dynamics. J. Braz. Comput. Soc. 2013, 19, 573–587.

- Pentel, A. Predicting age and gender by keystroke dynamics and mouse patterns. In Proceedings of the Adjunct Publication of the 25th Conference on User Modeling, Adaptation and Personalization, Bratislava, Slovakia, 9–12 July 2017; pp. 381–385.

- Xu, C.; Makihara, Y.; Liao, R.; Niitsuma, H.; Li, X.; Yagi, Y.; Lu, J. Real-time gait-based age estimation and gender classification from a single image. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2021; pp. 3460–3470.

- Nixon, M.S.; Correia, P.L.; Nasrollahi, K.; Moeslund, T.B.; Hadid, A.; Tistarelli, M. On soft biometrics. Pattern Recognit. Lett. 2015, 68, 218–230.

- Riaz, Q.; Hashmi, M.Z.U.H.; Hashmi, M.A.; Shahzad, M.; Errami, H.; Weber, A. Move your body: Age estimation based on chest movement during normal walk. IEEE Access 2019, 7, 28510–28524.

- Maulani, C.; Auerkari, E.I. Age estimation using DNA methylation technique in forensics: A systematic review. Egypt. J. Forensic Sci. 2020, 10, 1–15.

- Shi, L.; Jiang, F.; Ouyang, F.; Zhang, J.; Wang, Z.; Shen, X. DNA methylation markers in combination with skeletal and dental ages to improve age estimation in children. Forensic Sci. Int. Genet. 2018, 33, 1–9.

- Yang, Q.; Gao, S.; Lin, J.; Lyu, K.; Wu, Z.; Chen, Y.; Qiu, Y.; Zhao, Y.; Wang, W.; Lin, T.; et al. A machine learning-based data mining in medical examination data: A biological features-based biological age prediction model. BMC Bioinform. 2022, 23, 411.

- Alkass, K.; Buchholz, B.A.; Ohtani, S.; Yamamoto, T.; Druid, H.; Spalding, K.L. Age estimation in forensic sciences: Application of combined aspartic acid racemization and radiocarbon analysis. Mol. Cell. Proteom. 2010, 9, 1022–1030.

- Lewis, J.M.; Senn, D.R. Forensic Dental Age Estimation: An Overview. J. Calif. Dent. Assoc. 2015, 43, 315–319.

- Farazdaghi, E.; Eslahi, M.; El Meouche, R. An Overview of the Use of Biometric Techniques in Smart Cities. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences. 2021. Available online: https://ui.adsabs.harvard.edu/abs/2021ISPAr4421...41F/abstract (accessed on 2 January 2023).

- Grabosky, P.N.; Smith, R.G.; Wright, P. Crime in the Digital Age: Controlling Telecommunications and Cyberspace Illegalities; Routledge: Oxfordshire, UK, 2018.

- Mukherjee, S.B.; Ghatak, S.G.N.; Ray, N. Digitization of Economy and Society: Emerging Paradigms; CRC Press: Boca Raton, FL, USA, 2021.

- Focardi, M.; Pinchi, V.; De Luca, F.; Norelli, G.A. Age estimation for forensic purposes in Italy: Ethical issues. Int. J. Leg. Med. 2014, 128, 515–522.

- Black, S.; Aggrawal, A.; Payne-James, J. Age Estimation in the Living: The Practitioner’s Guide; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Yang, H.; Huang, D.; Wang, Y.; Wang, H.; Tang, Y. Face aging effect simulation using hidden factor analysis joint sparse representation. IEEE Trans. Image Process. 2016, 25, 2493–2507.

- Panchbhai, A. Dental radiographic indicators, a key to age estimation. Dentomaxillofacial Radiol. 2011, 40, 199–212.

- Nayyar, A.S.; Babu, B.A.; Krishnaveni, B.; Devi, M.V.; Gayitri, H. Age estimation: Current state and research challenges. J. Med. Sci. 2016, 36, 209.

- Arumugam, V.; Doggalli, N. Different Dental Aging Charts or Atlas Methods Used for Age Estimation–A Review. Asian J. Basic Sci. Res. 2020, 2, 64–74.

- Chandrasekhar, B.; Firdous, P.S. Unfolding The Link: Age Estimation through Comparison of Demirijian and Moore’s Method. 2019. Available online: https://saudijournals.com/media/articles/SJBR-44-168-173-ct.pdf (accessed on 2 January 2023).

- Demirjian, A.; Goldstein, H.; Tanner, J.M. A new system of dental age assessment. Hum. Biol. 1973, 45, 211–227.

- Apaydin, B.; Yasar, F. Accuracy of the Demirjian, Willems and Cameriere methods of estimating dental age on Turkish children. Niger. J. Clin. Pract. 2018, 21, 257.

- Marroquin, T.; Karkhanis, S.; Kvaal, S.; Vasudavan, S.; Kruger, E.; Tennant, M. Age estimation in adults by dental imaging assessment systematic review. Forensic Sci. Int. 2017, 275, 203–211.

- Gleiser, I.; Hunt, E. The estimation of age and sex of preadolescent children from bones and teeth. Am. J. Phys. Anthr. 1955, 13, 479–488.

- Demirjian, A.; Goldstein, H. New systems for dental maturity based on seven and four teeth. Ann. Hum. Biol. 1976, 3, 411–421.

- Harris, E.F.; McKee, J.H. Tooth mineralization standards for blacks and whites from the middle southern United States. J. Forensic Sci. 1990, 35, 859–872.

- Kvaal, S.I.; Kolltveit, K.M.; Thomsen, I.O.; Solheim, T. Age estimation of adults from dental radiographs. Forensic Sci. Int. 1995, 74, 175–185.

- Cameriere, R.; Ferrante, L.; Cingolani, M. Variations in pulp/tooth area ratio as an indicator of age: A preliminary study. J. Forensic Sci. 2004, 49, 1–3.

- Cameriere, R.; Ferrante, L.; Belcastro, M.G.; Bonfiglioli, B.; Rastelli, E.; Cingolani, M. Age estimation by pulp/tooth ratio in canines by peri-apical X-rays. J. Forensic Sci. 2007, 52, 166–170.

- Blenkin, M.; Taylor, J. Age estimation charts for a modern Australian population. Forensic Sci. Int. 2012, 221, 106–112.

- Ahmed, H.; Ewiss, A.; Khattab, N.; Amer, M. Age estimation through dental measurements using cone-beam computerized tomography images in a sample of upper Egyptian population. Ain Shams J. Forensic Med. Clin. Toxicol. 2013, 21, 75–88.

- Cameriere, R.; De Luca, S.; Egidi, N.; Bacaloni, M.; Maponi, P.; Ferrante, L.; Cingolani, M. Automatic age estimation in adults by analysis of canine pulp/tooth ratio: Preliminary results. J. Forensic Radiol. Imaging 2015, 3, 61–66.

- Aka, P.; Yagan, M.; Canturk, N.; Dagalp, R. Direct and indirect forensic age estimation methods for deciduous teeth. J. Forensic Res. 2015, 6, 273.

- Nagi, R.; Jain, S.; Agrawal, P.; Prasad, S.; Tiwari, S.; Naidu, G.S. Tooth coronal index: Key for age estimation on digital panoramic radiographs. J. Indian Acad. Oral Med. Radiol. 2018, 30, 64.

- Chen, C.; Li, O.; Tao, D.; Barnett, A.; Rudin, C.; Su, J.K. This looks like that: Deep learning for interpretable image recognition. Adv. Neural Inf. Process. Syst. 2019, 32.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44.

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-art in artificial neural network applications: A survey. Heliyon 2018, 4, e00938.

- Yao, X. Evolving artificial neural networks. Proc. IEEE 1999, 87, 1423–1447.

- Graupe, D. Principles of Artificial Neural Networks; World Scientific: Singapore, 2013; Volume 7.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.; Asari, V.K. A state-of-the-art survey on deep learning theory and architectures. Electronics 2019, 8, 292.

- Guo, T.; Dong, J.; Li, H.; Gao, Y. Simple convolutional neural network on image classification. In Proceedings of the 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), Beijing, China, 10–12 March 2017; pp. 721–724.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Volume 8689, pp. 818–833.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Las Vegas, NV, USA, 2016; pp. 770–778.

- Hitawala, S. Evaluating ResNeXt model architecture for image classification. arXiv 2018, arXiv:1805.08700.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Patrick, M.K.; Adekoya, A.F.; Mighty, A.A.; Edward, B.Y. Capsule networks–a survey. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1295–1310.

- Xi, E.; Bing, S.; Jin, Y. Capsule network performance on complex data. arXiv 2017, arXiv:1712.03480.

- Houssein, E.H.; Mualla, N.; Hassan, M. Dental age estimation based on X-ray images. Comput. Mater. Contin. 2020, 62, 591–605.

- Kim, S.; Lee, Y.H.; Noh, Y.K.; Park, F.C.; Auh, Q. Age-group determination of living individuals using first molar images based on artificial intelligence. Sci. Rep. 2021, 11, 1–11.

- Kim, J.; Bae, W.; Jung, K.H.; Song, I.S. Development and Validation of Deep Learning-Based Algorithms for the Estimation of Chronological Age Using Panoramic Dental X-ray Images. 2019. Available online: https://openreview.net/forum?id=BJg4tI2VqV (accessed on 2 January 2023).