Understanding Clinical Reasoning through Visual Scanpath and Brain Activity Analysis

Abstract

1. Introduction

2. Previous Work

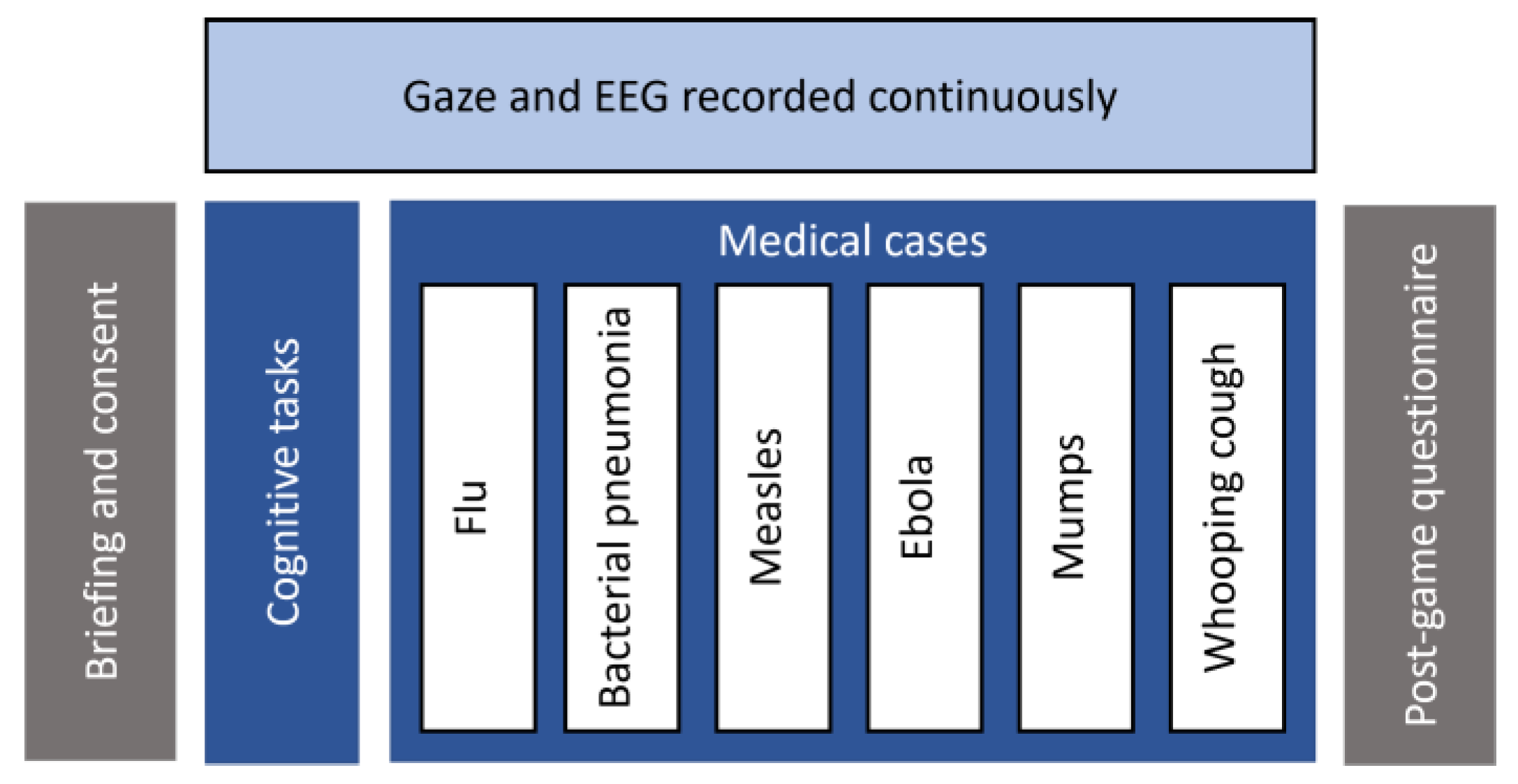

3. Experimental Design and Methodology

3.1. Amnesia

3.2. Gaze Recording

3.3. EEG Recording

4. Experimental Results

4.1. Gaze Behaviour

4.2. Brain Activity

4.3. Relationship between Gaze and EEG Data

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Measure | Positive (Mentally Engaged) M | Positive (Mentally Engaged) SD | Negative (Mentally Disengaged) M | Negative (Mentally Disengaged) SD |
|---|---|---|---|---|
| Similarity score | 6.49 ** | 2.68 | 3.95 ** | 4.30 |
| Number of matches | 4.17 ** | 1.12 | 3.54 ** | 0.59 |
| Number of mismatches | 0.00 * | 0.00 | 0.12 * | 0.33 |
| Number of gaps | 1.86 | 1.97 | 3.00 | 3.91 |
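The four measures in the table (similarity score, matches, mismatches, gaps) are the quantities produced by a local sequence alignment between two scanpaths. As a minimal sketch, assuming each scanpath is encoded as a string with one character per fixated area of interest (AOI), a Smith–Waterman alignment can return all four at once. The AOI encoding, the function name `smith_waterman`, and the scoring scheme (match +2, mismatch -1, gap -1) are illustrative assumptions, not the authors' published parameters.

```python
from typing import NamedTuple


class Alignment(NamedTuple):
    score: int       # best local alignment score (similarity score)
    matches: int     # aligned positions with the same AOI
    mismatches: int  # aligned positions with different AOIs
    gaps: int        # positions skipped in one of the two scanpaths


def smith_waterman(a: str, b: str, match: int = 2,
                   mismatch: int = -1, gap: int = -1) -> Alignment:
    """Local alignment of two AOI-coded scanpaths.

    The default scores are hypothetical and chosen only for illustration.
    """
    rows, cols = len(a) + 1, len(b) + 1
    h = [[0] * cols for _ in range(rows)]
    best, best_pos = 0, (0, 0)

    # Fill the scoring matrix; negative values are clamped to 0 (local alignment).
    for i in range(1, rows):
        for j in range(1, cols):
            diag = h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            h[i][j] = max(0, diag, h[i - 1][j] + gap, h[i][j - 1] + gap)
            if h[i][j] > best:
                best, best_pos = h[i][j], (i, j)

    # Trace back from the highest-scoring cell until a zero cell is reached,
    # counting how each step of the alignment was produced.
    i, j = best_pos
    matches = mismatches = gaps = 0
    while i > 0 and j > 0 and h[i][j] > 0:
        same = a[i - 1] == b[j - 1]
        if h[i][j] == h[i - 1][j - 1] + (match if same else mismatch):
            matches += same
            mismatches += not same
            i, j = i - 1, j - 1
        elif h[i][j] == h[i - 1][j] + gap:
            gaps += 1  # AOI present only in scanpath a
            i -= 1
        else:
            gaps += 1  # AOI present only in scanpath b
            j -= 1

    return Alignment(best, matches, mismatches, gaps)
```

For example, `smith_waterman("ABCD", "ABD")` aligns the shared AOIs A, B, and D and bridges the extra C with one gap, giving a score of 5 with 3 matches, 0 mismatches, and 1 gap. Because the alignment is local, only the best-matching stretch of the two sequences is scored, so unrelated fixations before or after it do not lower the similarity score.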

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jraidi, I.; Chaouachi, M.; Ben Khedher, A.; Lajoie, S.P.; Frasson, C. Understanding Clinical Reasoning through Visual Scanpath and Brain Activity Analysis. Computation 2022, 10, 130. https://doi.org/10.3390/computation10080130

Jraidi I, Chaouachi M, Ben Khedher A, Lajoie SP, Frasson C. Understanding Clinical Reasoning through Visual Scanpath and Brain Activity Analysis. Computation. 2022; 10(8):130. https://doi.org/10.3390/computation10080130

Chicago/Turabian Style: Jraidi, Imène, Maher Chaouachi, Asma Ben Khedher, Susanne P. Lajoie, and Claude Frasson. 2022. "Understanding Clinical Reasoning through Visual Scanpath and Brain Activity Analysis" Computation 10, no. 8: 130. https://doi.org/10.3390/computation10080130

APA Style: Jraidi, I., Chaouachi, M., Ben Khedher, A., Lajoie, S. P., & Frasson, C. (2022). Understanding Clinical Reasoning through Visual Scanpath and Brain Activity Analysis. Computation, 10(8), 130. https://doi.org/10.3390/computation10080130