1. Introduction

Clustering is the problem of finding distinct groups in a dataset so that each group consists of similar observations. Clustering time series is an increasingly popular area of cluster analysis, and an extensive literature covers several types of approaches and methodologies. Reference [1] provides a comprehensive review of standard procedures for clustering time series, and a benchmark study of several time series clustering methods is reported in [2].

For clustering time series, the approach adopted in the present study consists of fitting the available data with a parametric model. This model uses an underlying mixture of statistical distributions, where each distribution represents a specific group of time series. Data clustering is then performed through posterior probabilities [3]: each time series is assigned to the mixture component (distribution) with the highest posterior probability. Herein, we consider finite mixtures of regression models, given their flexibility in modeling heterogeneous time series.

The research gap addressed here is as follows. The inclusion of exogenous variables in mixture models, such as spline and polynomial functions of time indexes, is usually tied to the assumption of independent observations. Although this assumption holds in several applications, it is a limitation when considering time series, which are typically characterized by auto-correlation or other forms of statistical dependency over time.

The first study of mixture modeling for clustering auto-correlated time series was presented in [4]. It was based on maximum likelihood estimation of mixture models through the Expectation–Maximization (EM) algorithm [5]. In a case study on optimal portfolio design for the stock market, that study showed that the main difficulty of the EM algorithm with auto-correlated observations lies in the estimation and inversion of the component covariance matrices. A numerical optimization procedure for auto-regressive time series of order $p$ was therefore proposed and named Alternative Partial Expectation Conditional Maximization (APECM). In the field of finite mixture modeling, the APECM algorithm is considered one of the most efficient variants of the original EM algorithm [4,6,7].

In the present study, we fill this research gap by developing a finite mixture model that combines autoregressive mixtures with spline and polynomial regression for auto-correlated time series. Accordingly, we present a novel estimation algorithm for mixtures of spline and polynomial regressions in the case of auto-correlated data. Given the assumption of auto-correlated data and the use of exogenous variables in the mixture model, the traditional maximum likelihood approach of estimating the parameters with the EM algorithm is computationally demanding. We therefore implement the APECM algorithm combined with spline and polynomial regression. To the best of our knowledge, no previously published research combines auto-correlated noise with mixture models based on exogenous variables, such as spline and polynomial regression. The result is a novel model-based clustering algorithm for auto-correlated time series.

Time series clustering is a task encountered in many real-world applications, in fields ranging from biology, genetics, and engineering to business, finance, economics, and healthcare [1]. Although our approach may find application in any of these contexts, the motivating example in this paper concerns sales data influenced by different promotional campaigns. The motivating example that follows provides more insight into the problem addressed in this research, and the remainder of the paper is organized as follows.

Section 2 presents some necessary preliminaries about the methodology. Section 3 details our proposed regression mixture models for time series clustering in the case of auto-correlated observations; this approach represents the original contribution of the present study. In Section 4, a real-world case study is provided, and the clustering results are presented and discussed. Finally, conclusions are drawn in Section 5.

Motivating Example

In supply chain management, modeling sales series provides an essential source of information for several managerial decisions, for example, demand planning [8], inventory control [9], and production planning [10]. Several models for sales forecasting have been discussed in the literature [11,12,13,14].

Sales modeling can be a challenging task [13,15]. In particular, the uncertainty of sales, which arises from consumer behavior, is a risk for supply chain management. One possible way to prevent the unfavorable impact of sales uncertainty on the supply chain is to increase the inventory level [16] or the capacity. However, these approaches impose relevant costs on companies. Sales uncertainty, along with complexity and ambiguity, is considered an important factor affecting supply chain performance [17,18].

Several variables, such as promotions, weather, market trends, and special events, in addition to the lack of historical information, impact consumer behavior and add complexity to the modeling of sales series [19,20]. Promotions, a common retail practice to increase sales, impact demand dynamics, as investigated in the recent literature [20,21,22]. Different combinations of factors, such as promotional tools, frequency of promotions, price cuts, and in-store display types, can result in sales enhancements, which can arise from an increased purchasing rate or increased consumption [23]. Promotions also influence demand uncertainty and, if ignored, cause errors and issues in the upstream supply chain, such as the bullwhip effect and supply shortages [19,24].

As an example of actual data, Figure 1 shows 15 of the 131 series of percentage sales enhancements over a horizon of 90 days (horizontal axis). Each series results from a specific promotion (labeled from “Promo 20” to “Promo 34”). The horizontal axis represents the sequence of days over three months, where the first day corresponds to the initial day of the promotion. It is worth noting that the observed sales series do not depend on specific calendar dates; in this sense, the sales series are time-invariant, and they are aligned using the initial day of the promotion. The percentage enhancements of sales (vertical axis) are computed relative to the non-promotional (baseline) demand. In this case study, we are interested in investigating the effect of a promotion on the whole series of sales, from the initial day of the promotional campaign over a time window of 90 days. This task differs from the common task of forecasting at a specific time index: it consists of clustering whole time series into homogeneous groups. From Figure 1, we observe that “Promo 20”, “Promo 22”, and “Promo 23” induce relatively small variability, with sales enhancements not greater than . “Promo 28”, “Promo 30”, and “Promo 34” show higher variability, with sales enhancements equal to or greater than and a different shape, which reflects distinct impacts of the promotional campaign.

Recent literature has paid increasing attention to analyzing sales under different promotional impacts and to finding the most appropriate model under various conditions. A few empirical studies that investigate the volatility of sales caused by promotions as a criterion for developing a forecasting model are reported in [25,26]. In the present study, our interest is in a method to partition demand time series into homogeneous groups. The goal is to devise a statistical model that extracts knowledge from data for exploratory analysis.

3. Proposed Regression Mixtures for Clustering Time Series with Auto-Correlated Data

This section details our proposed regression mixture models for time series clustering in the case of auto-correlated observations. Modeling with regression mixtures is one of the major topics in the research field of finite mixture models. In the cluster of index $k$, the time series is modeled as a regression model with noise denoted as $\varepsilon_{it}$. It is common to consider $\varepsilon_{it}$ as independent and identically distributed Gaussian noise with zero mean and unit variance. In the present study, $\varepsilon_{it}$ is instead considered auto-correlated Gaussian noise.

The model can be written as follows:

$$y_{it} = \boldsymbol{\beta}_k^{\top}\mathbf{x}_t + \sigma_k\,\varepsilon_{it}, \qquad t = 1, \dots, m,$$

where $\boldsymbol{\beta}_k$ is the vector of regression coefficients, which describes the population mean of the cluster of index $k$, $\mathbf{x}_t$ is the vector of regressors as a function of the time index $t$ (for example, the polynomial regressors $\mathbf{x}_t = (1, t, t^2, \dots)^{\top}$), and $\sigma_k$ corresponds to the standard deviation of the noise.
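To illustrate the model $y_{it} = \boldsymbol{\beta}_k^{\top}\mathbf{x}_t + \sigma_k\varepsilon_{it}$, the following Python sketch draws one series from a single cluster. It rests on stated assumptions. The regressors are polynomial in a rescaled time index $t/m$. The auto-correlated noise is a unit-variance AR(1) process, which is the simplest case with $p = 1$. The function names and all numeric values are hypothetical and are not the estimates reported in this study.

```python
import numpy as np

def polynomial_regressors(m, degree):
    """Design matrix whose t-th row is x_t = (1, s, s^2, ..., s^degree), with the
    time index rescaled to s = t/m in (0, 1] to keep the columns well conditioned."""
    s = np.arange(1, m + 1) / m
    return np.vander(s, degree + 1, increasing=True)

def simulate_series(X, beta, sigma, phi, rng):
    """One series y_t = beta' x_t + sigma * eps_t, where eps_t is a stationary
    AR(1) Gaussian process with unit variance and lag-1 correlation phi."""
    m = X.shape[0]
    eps = np.empty(m)
    eps[0] = rng.standard_normal()            # start from the stationary N(0, 1)
    innov_sd = np.sqrt(1.0 - phi ** 2)        # keeps Var(eps_t) = 1 for every t
    for t in range(1, m):
        eps[t] = phi * eps[t - 1] + innov_sd * rng.standard_normal()
    return X @ beta + sigma * eps

rng = np.random.default_rng(0)
X = polynomial_regressors(m=90, degree=3)
beta = np.array([0.1, 1.5, -2.0, 0.6])        # hypothetical coefficients of one cluster
y = simulate_series(X, beta, sigma=0.05, phi=0.7, rng=rng)
```

Rescaling time keeps high powers of $t$ from dominating the design matrix. This is a numerical convenience for the sketch rather than a choice documented in the text.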

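A common choice to model the observations $\mathbf{y}_i = (y_{i1}, \dots, y_{im})^{\top}$ given the regression predictors $\mathbf{X}$ is the normal regression model below:

$$f(\mathbf{y}_i \mid \mathbf{X}; \boldsymbol{\theta}_k) = \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_k,\, \sigma_k^2\mathbf{R}_k\right),$$

where $\mathbf{R}_k$ denotes the $m \times m$ correlation matrix of index $k$. The element of $\mathbf{R}_k$ in row $r$ and column $c$ is equal to 1 if $r = c$. Otherwise, it is equal to the auto-correlation of the noise at lag $|r - c|$, a function defined recursively from the correlation factors. The vector $\boldsymbol{\rho}_k = (\rho_{k1}, \dots, \rho_{kp})^{\top}$ represents the correlation factors, which range between $-1$ and $1$, for lags $1, \dots, p$ and for each component of index $k$.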
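The recursion that generates the elements of $\mathbf{R}_k$ is not spelled out above. The following Python sketch shows one common construction under an explicit assumption: the correlation factors are treated as the coefficients of a stationary AR($p$) process, whose auto-correlations follow from the Yule–Walker equations. With $p = 1$ this reduces to $[\mathbf{R}_k]_{rc} = \rho_{k1}^{|r-c|}$. The function names are hypothetical.

```python
import numpy as np

def ar_autocorrelations(phi, max_lag):
    """Auto-correlations gamma(0..max_lag) of a stationary AR(p) process with
    coefficients phi = (phi_1, ..., phi_p), via the Yule-Walker equations."""
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    # Solve gamma(l) = sum_j phi_j * gamma(|l - j|) for l = 1..p, with gamma(0) = 1.
    A = np.eye(p)
    b = np.zeros(p)
    for l in range(1, p + 1):
        for j in range(1, p + 1):
            lag = abs(l - j)
            if lag == 0:
                b[l - 1] += phi[j - 1]           # phi_l * gamma(0) is a known term
            else:
                A[l - 1, lag - 1] -= phi[j - 1]  # move phi_j * gamma(lag) to the left side
    n_lags = max(max_lag, p)
    gamma = np.empty(n_lags + 1)
    gamma[0] = 1.0
    gamma[1:p + 1] = np.linalg.solve(A, b)
    # Beyond lag p, the same equation becomes a forward recursion.
    for l in range(p + 1, n_lags + 1):
        gamma[l] = np.dot(phi, gamma[l - 1::-1][:p])   # sum_j phi_j * gamma(l - j)
    return gamma[:max_lag + 1]

def correlation_matrix(phi, m):
    """m x m matrix whose (r, c) entry is gamma(|r - c|), with ones on the diagonal."""
    gamma = ar_autocorrelations(phi, m - 1)
    lags = np.abs(np.subtract.outer(np.arange(m), np.arange(m)))
    return gamma[lags]

R1 = correlation_matrix([0.5], m=4)          # AR(1): first row is 0.5 ** lag
R2 = correlation_matrix([0.5, 0.2], m=3)     # AR(2) example with hypothetical factors
```

Whether the correlation factors of this study are AR coefficients, partial auto-correlations, or something else cannot be confirmed from the text. The sketch only shows how a recursively defined lag function fills a Toeplitz correlation matrix.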

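The parameter vector of this density is $\boldsymbol{\theta}_k = (\boldsymbol{\beta}_k^{\top}, \sigma_k^2, \boldsymbol{\rho}_k^{\top})^{\top}$. It is composed of the regression coefficient vector, the noise variance, and the correlation factors of lags $1, \dots, p$. $\mathbf{X} = (\mathbf{x}_1, \dots, \mathbf{x}_m)^{\top}$ is the matrix of regressors, with one row per time index. The regression mixture model includes polynomial, spline, and B-spline regression mixtures [33]. The regression mixture is defined by the conditional mixture density function as follows:

$$f(\mathbf{y}_i \mid \mathbf{X}; \boldsymbol{\Psi}) = \sum_{k=1}^{K} \alpha_k\, \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_k,\, \sigma_k^2\mathbf{R}_k\right),$$

where the mixing proportions $\alpha_k$ are non-negative and sum to one.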

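The vector of parameters is given by $\boldsymbol{\Psi} = (\alpha_1, \dots, \alpha_{K-1}, \boldsymbol{\theta}_1^{\top}, \dots, \boldsymbol{\theta}_K^{\top})^{\top}$. It is estimated by iteratively maximizing the following log-likelihood function with the EM algorithm [33]:

$$\log L(\boldsymbol{\Psi}) = \sum_{i=1}^{n} \log \sum_{k=1}^{K} \alpha_k\, \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_k,\, \sigma_k^2\mathbf{R}_k\right),$$

where $n$ is the number of time series.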
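Evaluating this log-likelihood requires the log-density of each series under each component, $\log[\alpha_k\,\mathcal{N}(\mathbf{y}_i; \mathbf{X}\boldsymbol{\beta}_k, \sigma_k^2\mathbf{R}_k)]$. The following Python sketch assumes that the $n$ series are stacked as the rows of an $n \times m$ array `Y`. It computes these terms through a Cholesky factor of the covariance $\sigma_k^2\mathbf{R}_k$ and combines them with the log-sum-exp trick to avoid numerical underflow. The function names are hypothetical.

```python
import numpy as np

def gaussian_logpdf_rows(Y, mean, cov):
    """log N(y_i; mean, cov) for every row y_i of Y, using a Cholesky factor of cov."""
    m = mean.shape[0]
    L = np.linalg.cholesky(cov)                      # cov = L L'
    Z = np.linalg.solve(L, (Y - mean).T)             # whitened residuals, shape (m, n)
    quad = np.sum(Z ** 2, axis=0)                    # (y_i - mean)' cov^{-1} (y_i - mean)
    logdet = 2.0 * np.sum(np.log(np.diag(L)))        # log |cov|
    return -0.5 * (m * np.log(2.0 * np.pi) + logdet + quad)

def log_weighted_densities(Y, X, alphas, betas, sigma2s, Rs):
    """n x K matrix with entry (i, k) = log alpha_k + log N(y_i; X beta_k, sigma_k^2 R_k)."""
    n, K = Y.shape[0], len(alphas)
    W = np.empty((n, K))
    for k in range(K):
        W[:, k] = np.log(alphas[k]) + gaussian_logpdf_rows(Y, X @ betas[k], sigma2s[k] * Rs[k])
    return W

def mixture_loglik(W):
    """sum_i log sum_k exp(W[i, k]), computed with the log-sum-exp trick."""
    wmax = W.max(axis=1, keepdims=True)
    return float(np.sum(wmax[:, 0] + np.log(np.sum(np.exp(W - wmax), axis=1))))
```

The Cholesky route avoids forming $\mathbf{R}_k^{-1}$ explicitly, which is the costly and error-prone step that [4] identifies for auto-correlated components.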

E-step

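Starting with an initial solution $\boldsymbol{\Psi}^{(0)}$, this step computes the Q-function in (5), namely the expectation of the complete-data log-likelihood (4) under this model, given the observed time series data and the current parameter vector $\boldsymbol{\Psi}^{(q)}$. In formula, the Q-function is given by

$$Q(\boldsymbol{\Psi}, \boldsymbol{\Psi}^{(q)}) = \sum_{i=1}^{n}\sum_{k=1}^{K} \tau_{ik}^{(q)} \log\left[\alpha_k\, \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_k,\, \sigma_k^2\mathbf{R}_k\right)\right],$$

which only requires computing the posterior component memberships $\tau_{ik}^{(q)}$ for each of the $K$ clusters (components). That is, $\tau_{ik}^{(q)}$ is the posterior probability that the time series $\mathbf{y}_i$ is generated by the $k$th component, as defined in (6):

$$\tau_{ik}^{(q)} = \frac{\alpha_k^{(q)}\, \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_k^{(q)},\, \sigma_k^{2(q)}\mathbf{R}_k^{(q)}\right)}{\sum_{h=1}^{K} \alpha_h^{(q)}\, \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_h^{(q)},\, \sigma_h^{2(q)}\mathbf{R}_h^{(q)}\right)}.$$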
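Suppose a matrix holding the log-weighted densities $\log[\alpha_k\,\mathcal{N}(\mathbf{y}_i; \mathbf{X}\boldsymbol{\beta}_k, \sigma_k^2\mathbf{R}_k)]$ is available, with one row per series and one column per component. Then the E-step reduces to a row-wise normalization. The Python sketch below illustrates this. The function names are hypothetical. In the Q-function, the posteriors are held fixed at iteration $q$, while the log-densities are evaluated at the candidate parameters.

```python
import numpy as np

def posterior_memberships(W):
    """tau[i, k] = exp(W[i, k]) / sum_h exp(W[i, h]), computed stably row by row."""
    W = np.asarray(W, dtype=float)
    E = np.exp(W - W.max(axis=1, keepdims=True))   # subtract row maxima before exponentiating
    return E / E.sum(axis=1, keepdims=True)

def q_function(W, tau):
    """Q = sum_i sum_k tau[i, k] * W[i, k], where W holds log[alpha_k N(y_i; ...)]."""
    return float(np.sum(tau * W))

# Toy example: two series, two components, weighted densities given directly.
W = np.log(np.array([[0.2, 0.6],
                     [0.5, 0.5]]))
tau = posterior_memberships(W)
Q = q_function(W, tau)
```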

(C)M-step

In this step, the parameter vector $\boldsymbol{\Psi}$ is updated by maximizing the Q-function in the previous Equation (13) with respect to $\boldsymbol{\Psi}$.
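For a fixed correlation matrix $\mathbf{R}_k$, maximizing the Q-function over $\alpha_k$, $\boldsymbol{\beta}_k$, and $\sigma_k^2$ has a closed form:

- $\alpha_k$ is the average posterior membership.
- $\boldsymbol{\beta}_k = (\sum_i \tau_{ik}\mathbf{X}^{\top}\mathbf{R}_k^{-1}\mathbf{X})^{-1}\sum_i \tau_{ik}\mathbf{X}^{\top}\mathbf{R}_k^{-1}\mathbf{y}_i$ is a posterior-weighted generalized least-squares estimate.
- $\sigma_k^2$ is the posterior-weighted residual quadratic form divided by $m\sum_i \tau_{ik}$.

The Python sketch below illustrates this conditional maximization only. Updating the correlation factors $\boldsymbol{\rho}_k$ generally has no closed form and is not shown. Here $d$ denotes the number of regressors, and the function names are hypothetical.

```python
import numpy as np

def update_mixing_proportions(tau):
    """alpha_k = (1/n) * sum_i tau[i, k]."""
    return tau.mean(axis=0)

def cm_update_component(Y, X, tau_k, R_k):
    """CM-step for (beta_k, sigma_k^2) with R_k held fixed.
    Y: (n, m) series as rows; X: (m, d) regressors; tau_k: (n,) posteriors of component k."""
    m = X.shape[0]
    w = tau_k.sum()
    Rinv_X = np.linalg.solve(R_k, X)                  # R_k^{-1} X
    Rinv_ysum = np.linalg.solve(R_k, Y.T @ tau_k)     # R_k^{-1} sum_i tau_ik y_i
    beta_k = np.linalg.solve(w * (X.T @ Rinv_X), X.T @ Rinv_ysum)   # weighted GLS
    resid = Y - X @ beta_k                            # (n, m) residual series
    quad = np.einsum("ij,ji->i", resid, np.linalg.solve(R_k, resid.T))  # r_i' R_k^{-1} r_i
    sigma2_k = float(tau_k @ quad) / (m * w)
    return beta_k, sigma2_k
```

With $\mathbf{R}_k$ equal to the identity, the update reduces to weighted ordinary least squares. This is the independent-noise case that the auto-correlated model generalizes.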

In the EM algorithm, the M-step involves a full iteration over a single parameter set. The APECM algorithm proposed in [4] instead uses a disjoint partition of $\boldsymbol{\Psi}$ into one parameter subset for each component of index $k = 1, \dots, K$, for a total of $K$ cycles. Each M-step of the EM algorithm is thus replaced by a sequence of Conditional Maximization (CM) steps. In each CM-step, one subset of parameters is maximized conditionally on the remaining parameters being held fixed. The E-steps that follow the CM-steps within an iteration are implemented using the following construction.

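Let $\mathbf{W}$ be the $n \times K$ matrix that stores the components of Equation (14), with elements $w_{ik} = \log[\alpha_k\, \mathcal{N}(\mathbf{y}_i; \mathbf{X}\boldsymbol{\beta}_k, \sigma_k^2\mathbf{R}_k)]$. Storing $\mathbf{W}$ avoids most of the cost of recomputing the E-step $K$ times during an iteration. After the CM-step for component $h$, the $(q+1)$th solution of the $h$th column of $\mathbf{W}$ is obtained from the complete-data log-likelihood as follows:

$$w_{ih}^{(q+1)} = \log \alpha_h^{(q+1)} + \log \mathcal{N}\!\left(\mathbf{y}_i;\, \mathbf{X}\boldsymbol{\beta}_h^{(q+1)},\, \sigma_h^{2(q+1)}\mathbf{R}_h^{(q+1)}\right).$$

Finally, the posterior probabilities are recalculated for all time series of index $i$ as follows, without further likelihood calculations:

$$\tau_{ik}^{(q+1)} = \frac{\exp\left(w_{ik}^{(q+1)}\right)}{\sum_{l=1}^{K}\exp\left(w_{il}^{(q+1)}\right)}, \qquad k = 1, \dots, K.$$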

This extra augmentation is the core of the APECM algorithm, as it substantially reduces computation, as discussed in [4,6]. The “P” in APECM indicates that only a partial update of $\mathbf{W}$ (its $h$th column) is required in each of the $K$ cycles, which makes the re-computation of Q relatively inexpensive. The APECM algorithm thus benefits both computing time and convergence rate.
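The saving can be seen in a small Python sketch of the bookkeeping for one APECM cycle. The sketch rests on two assumptions. First, the stored matrix, here called $\mathbf{W}$, holds the log-weighted densities $w_{ik}$. Second, the CM-step of cycle $h$ may also revise the mixing proportions, and their effect on the other columns is a cheap additive shift. Only the $h$th column requires a new log-density evaluation, which is the expensive part because it involves $\mathbf{R}_h$. The posteriors are then renormalized from $\mathbf{W}$ alone. The names are hypothetical, and this is not the reference implementation of [4].

```python
import numpy as np

def apecm_refresh(W, h, log_alpha_old, log_alpha_new, logpdf_h):
    """Update the stored n x K matrix W after the CM-step of cycle h.
    Columns k != h change only through log alpha_k (no density evaluation);
    column h receives the freshly computed log-densities logpdf_h."""
    W = W + (log_alpha_new - log_alpha_old)   # new array: cheap shift of every column
    W[:, h] = log_alpha_new[h] + logpdf_h     # partial update: only column h is recomputed
    return W

def posteriors_from_W(W):
    """Recompute tau from W without any further likelihood calculation."""
    E = np.exp(W - W.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)
```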

After model estimation, the selection criteria AIC, BIC, or ICL presented in Section 2.3 can be used to select the number of mixture components, namely to choose one model from a set of pre-estimated candidate models.
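Section 2.3 is not reproduced here, so the following Python sketch rests on several assumptions:

- Criteria are maximized, which is consistent with selecting the maximum BIC value in Section 4.
- $n$ is the number of series.
- The number of free parameters is $\nu = (K - 1) + K(d + 1 + p)$, where $d$ is the number of regressors.

The function names are hypothetical.

```python
import numpy as np

def n_free_parameters(K, d, p):
    """(K - 1) mixing proportions plus, per component, d regression coefficients,
    one noise variance and p correlation factors."""
    return (K - 1) + K * (d + 1 + p)

def bic(loglik, K, d, p, n):
    """BIC in the 'larger is better' form: log L - (nu / 2) * log(n)."""
    return loglik - 0.5 * n_free_parameters(K, d, p) * np.log(n)

def aic(loglik, K, d, p):
    """AIC in the same 'larger is better' form: log L - nu."""
    return loglik - n_free_parameters(K, d, p)

def icl(loglik, K, d, p, tau):
    """ICL-BIC: BIC plus the log posteriors of the hard (MAP) assignments."""
    n = tau.shape[0]
    map_tau = tau[np.arange(n), tau.argmax(axis=1)]
    return bic(loglik, K, d, p, n) + float(np.sum(np.log(map_tau)))
```

In practice, each candidate pair (regressor order, $K$) is fitted separately, and the pair with the largest criterion value is retained.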

4. Case Study

In our study, we collected real-world data from a food manufacturing company. Sales series data were available from the Point of Sale (POS) systems, which are used to collect sales data for forecasting future demand. Modern POS systems provide a connected data-gathering system for the retailer [34]. Sales data were aggregated across retailers and spanned an observation period of 90 days.

The data set consists of 131 different time series of percentage sales enhancements, ranging between 0 (no sales enhancement) and 1 (highest sales enhancement). We computed sales enhancements (vertical axis of Figure 1) by adopting the non-promotional demand as the baseline. Each series refers to a specific promotion/product combination (labeled from “Promo 1” to “Promo 131”). The data set is included in the Supplementary Materials of the present paper. These series have different features, influenced by the specific promotion/product combination. Figure 1 above shows a subsample of 15 of the 131 time series in the data set. Demand levels differ from each other, and these differences are mainly due to the promotion impact. The aim is to group the time series into clusters, where the cluster labels are missing and the number of clusters is unknown (i.e., unsupervised clustering).

In this section, we present the results of the algorithms previously described, for both B-spline and polynomial regression for auto-correlated time series clustering. The proposed approaches were coded in MATLAB (version R2021b) and run on a 2.6 GHz Intel Core i7 with 16 GB of memory. In terms of computing time, the algorithm was fast enough for both regression models. Large sample sizes and a large number of series may lead to a significant computational load. In the case study of the present paper, however, the algorithm converged after a few iterations and required less than 240 s for the 131 series, which makes it suitable for practical use.

4.1. B-Spline Regression Mixtures for Time Series Clustering

Table 1 reports the BIC values for $K$ ranging between 1 and 4, combined with different spline orders. The most widely used spline orders are 1, 2, and 4. An order equal to 1 suits piecewise constant data, and order 2 gives piecewise linear fits. Cubic B-splines, which correspond to order 4, are sufficient to approximate smooth functions. Spline knots were uniformly placed over the time series domain $t$.
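The B-spline regressors can be generated with the Cox–de Boor recursion. The Python sketch below builds the design matrix for a given spline order (degree = order − 1), with uniformly placed interior knots and repeated boundary knots. The number of interior knots is a free choice here because it is not reported above, and the function name is hypothetical. Order 4 yields cubic B-splines, and order 1 yields piecewise constant indicators.

```python
import numpy as np

def bspline_design(t, order, n_interior):
    """B-spline design matrix of shape (len(t), order + n_interior) for a spline of
    the given order, with n_interior uniformly spaced interior knots on
    [min t, max t] and clamped (repeated) boundary knots."""
    t = np.asarray(t, dtype=float)
    a, b = t.min(), t.max()
    interior = np.linspace(a, b, n_interior + 2)[1:-1]
    knots = np.concatenate([np.full(order, a), interior, np.full(order, b)])
    # Order 1: indicator of the knot span [knots[j], knots[j+1]).
    B = np.zeros((t.size, knots.size - 1))
    for j in range(knots.size - 1):
        B[:, j] = (knots[j] <= t) & (t < knots[j + 1])
    # Assign the right endpoint to the last non-empty span.
    last = np.nonzero(knots[:-1] < knots[1:])[0].max()
    B[t == b, :] = 0.0
    B[t == b, last] = 1.0
    # Cox-de Boor recursion: raise the order from 2 up to the requested order.
    for q in range(2, order + 1):
        B_next = np.zeros((t.size, knots.size - q))
        for j in range(knots.size - q):
            left = knots[j + q - 1] - knots[j]
            right = knots[j + q] - knots[j + 1]
            if left > 0:
                B_next[:, j] += (t - knots[j]) / left * B[:, j]
            if right > 0:
                B_next[:, j] += (knots[j + q] - t) / right * B[:, j + 1]
        B = B_next
    return B

t = np.arange(1, 91)                              # days 1..90 of a promotion window
X = bspline_design(t, order=4, n_interior=5)      # cubic B-splines; 5 knots is an arbitrary choice
```

Each row of the resulting matrix sums to one (partition of unity). This property is a quick sanity check when the matrix is used as $\mathbf{X}$ in the regression mixture.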

The results in Table 1 show that the maximum BIC value, equal to 2.2029, was obtained with a cubic B-spline of order 4 and $K = 4$ clusters. The log-likelihood in (12) was maximized with the APECM algorithm described in Section 3. A graphical representation of the resulting cubic B-spline models of order 4 is reported in Figure 2.

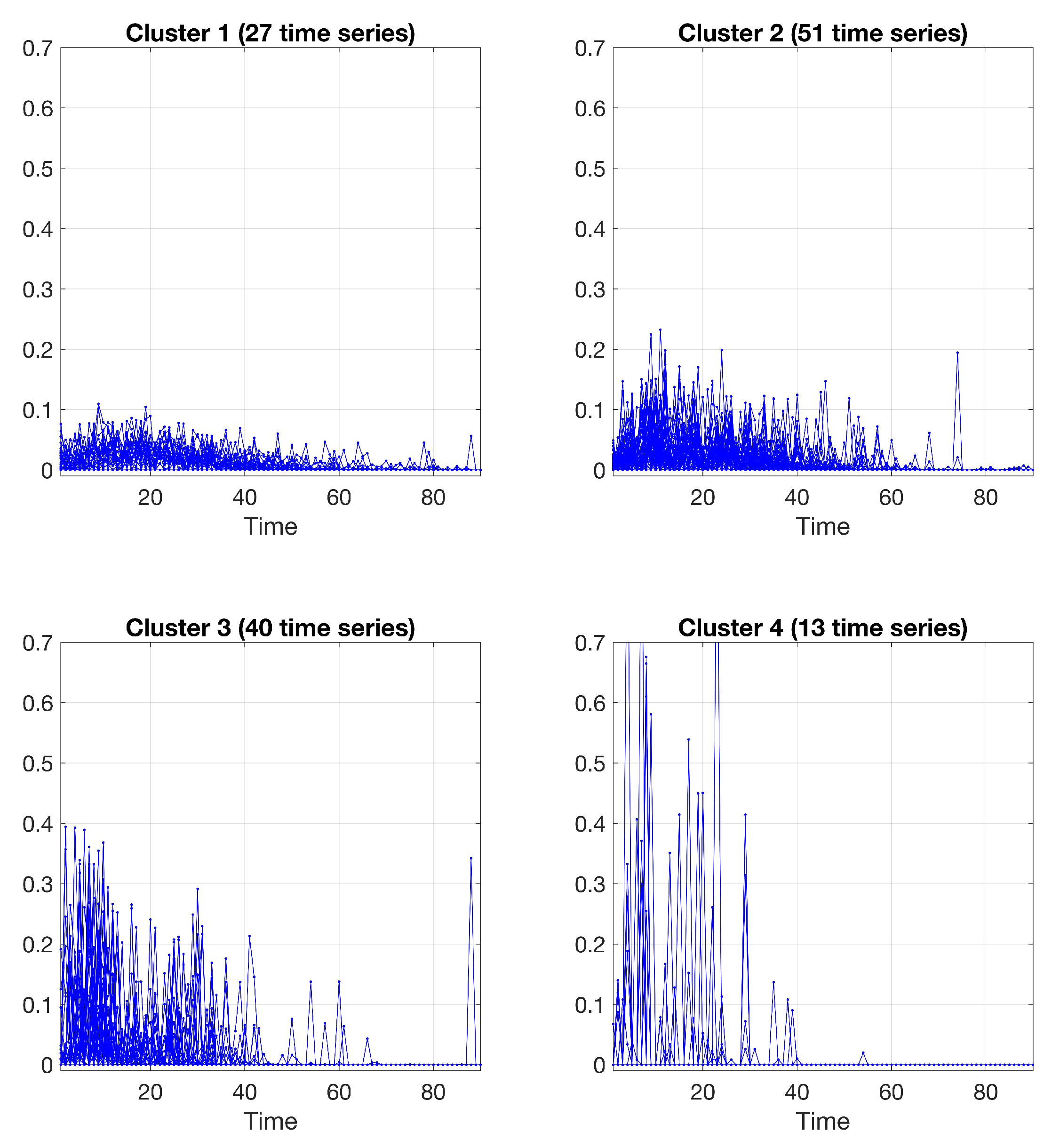

From Figure 2, one can note that clusters 1 and 2 show variability spanning more than 40 days. Clusters 3 and 4 show variability over a shorter period, with a higher peak in the first ten days. Figure 2 also shows that cluster 4 presents two local maxima of sales over the 90 days. Modeling the effect of promotions helps in understanding how demand uncertainty changes over time. This knowledge is an essential source of information for practitioners seeking to optimize the upstream supply chain and avoid drawbacks such as the bullwhip effect and supply shortages [19,24].

Table 2 reports the final estimated values of $\boldsymbol{\beta}_k$, $\sigma_k^2$, and $\boldsymbol{\rho}_k$ in (16) for each of the four clusters (components of the mixture model). In the case study, a maximum lag $p$ equal to was considered adequate to model the auto-correlation of the data.

A “soft” partition of the time series into $K = 4$ clusters, represented by the estimated posterior probabilities $\hat{\tau}_{ik}$, is obtained as in (6). The values of $\hat{\tau}_{ik}$ are depicted in Figure 3. There, a color scale (on the right) ranging between 0 and 1 codes the value of $\hat{\tau}_{ik}$ for each of the 131 time series in the case study and for each cluster of the model depicted in Figure 2.

From the results in Figure 3, one can observe that the major uncertainties in clustering are limited to a few of the 131 time series, specifically “Promo 2”, “Promo 28”, “Promo 68”, “Promo 85”, “Promo 111”, and “Promo 113”. A “hard” partition is obtained by allocating each time series to the component (cluster) with the highest posterior probability, $\hat{z}_i = \arg\max_{k} \hat{\tau}_{ik}$.

Figure 4 shows the final clustering of the time series data via the B-spline regression mixtures.
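The hard partition, and a simple way to flag uncertain series, can be sketched in Python as follows. The threshold used to flag a series as uncertain is a hypothetical choice for illustration; the uncertain cases above were identified from Figure 3. The posterior values in the example are invented, and cluster labels are 0-based in the code.

```python
import numpy as np

def hard_partition(tau):
    """MAP rule: z_i = argmax_k tau[i, k] (0-based cluster labels)."""
    return np.asarray(tau).argmax(axis=1)

def uncertain_series(tau, threshold=0.9):
    """Indices of series whose largest posterior probability is below the threshold."""
    return np.nonzero(np.asarray(tau).max(axis=1) < threshold)[0]

tau = np.array([[0.97, 0.02, 0.01, 0.00],
                [0.40, 0.55, 0.05, 0.00],
                [0.00, 0.01, 0.01, 0.98]])
labels = hard_partition(tau)       # array([0, 1, 3])
flagged = uncertain_series(tau)    # array([1])
```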

4.2. Polynomial Regression Mixtures for Time Series Clustering

In this section, polynomial regression is used for time series clustering. Table 3 reports the BIC values for $K$ ranging between 1 and 4 and for various polynomial orders.

From the results in Table 3, we observe that the maximum BIC value, 2.2027, was obtained with a polynomial of order 4 and $K = 4$ clusters. As in the case of B-spline regression, the log-likelihood in (12) was maximized with the APECM algorithm, the EM variant described in Section 3. A graphical representation of the resulting polynomial models of order 4 is reported in Figure 5.

Figure 5 shows that clusters 1 and 2 have variability spanning more than 40 days, while clusters 3 and 4 have variability over a shorter period, with higher values in the first 10 days.

Table 4 reports the final estimated values of $\boldsymbol{\beta}_k$, $\sigma_k^2$, and $\boldsymbol{\rho}_k$ in (16) for each of the four clusters (components of the mixture model).

The estimated posterior probabilities $\hat{\tau}_{ik}$ of the “soft” partition are represented in Figure 6. From the results in Figure 6, the clustering uncertainties are limited to fewer cases than in the B-spline results of Figure 3, namely “Promo 2”, “Promo 28”, and “Promo 33”. Finally, a “hard” partition is obtained by allocating each time series to the component (cluster) with the highest posterior probability, $\hat{z}_i = \arg\max_{k} \hat{\tau}_{ik}$.

Figure 7 shows the clustering of the time series data via the polynomial regression mixtures.

5. Conclusions

Modeling sales series data is of great importance for several managerial decisions at different levels of the supply chain. Promotions are one of the factors that can have different effects on sales dynamics over the entire time series. There is therefore a need for a simple approach to modeling sales time series influenced by promotions, since overly sophisticated models are of limited use in practice.

We analyzed finite mixture models for time series clustering. The reason for using such models is their sound statistical basis and the interpretability of their results. The fitted posterior membership probabilities quantify the uncertainty of assigning each series to each cluster. Moreover, because clusters correspond to model components, the number of clusters can be chosen with likelihood-based criteria computed for mixture models with different numbers of components.

We developed an approach for clustering auto-correlated time series. In particular, we implemented the APECM algorithm combined with spline and polynomial regression through autoregressive mixtures, yielding a novel model-based clustering algorithm for auto-correlated time series.

We demonstrated the capability of the developed approach to deal with time series data in several complex situations, including heterogeneity and dynamic behavior. We explored two regression mixture approaches by implementing both B-spline and polynomial models. Numerical results on 131 real-world time series demonstrate the advantage of the mixture model-based approach presented in this study for clustering auto-correlated time series. For the data set used in this study, the spline and polynomial orders with the best BIC value were selected. For the spline regression mixtures, we used cubic B-splines because cubic splines, which correspond to a spline of order 4, are sufficient to approximate smooth functions. For the polynomial regression mixtures, we observed that order 4 was satisfactory for the data set of the case study. Although this paper focuses on a specific case study, our approach can be used in different real-world application fields.

The most important benefit of the research presented in this paper is the parametric model-based approach. A model-based approach provides a convenient, understandable description, allowing the analyst to access and interpret each component of real-world systems. The main drawback of this approach is that the user must select the most appropriate model structure: the type of regressors (spline or polynomial), the order of the regressors, the number of clusters, and the order of the autoregressive component. In our approach, we used a BIC-based rule for model selection. A further limitation is the computational requirement when dealing with large datasets, as in the Big Data framework. In these cases, a data-driven method for time series clustering/classification, such as a deep learning approach, should be considered instead [35,36].