A Framework for More Effective Dark Web Marketplace Investigations

Abstract

1. Introduction

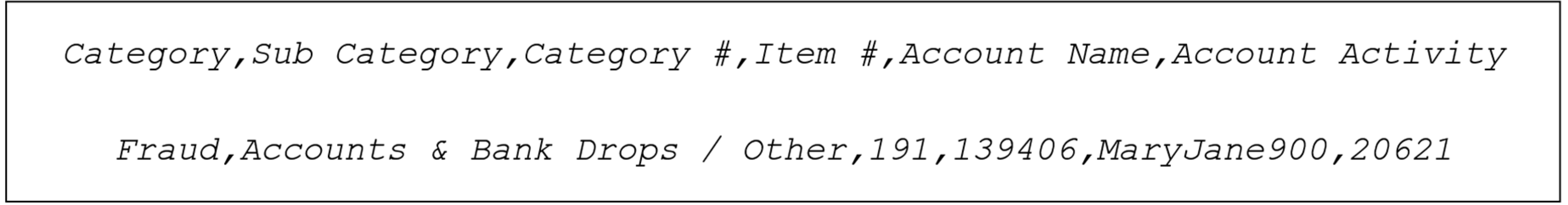

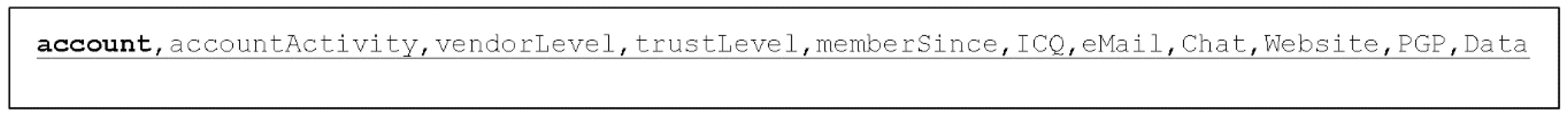

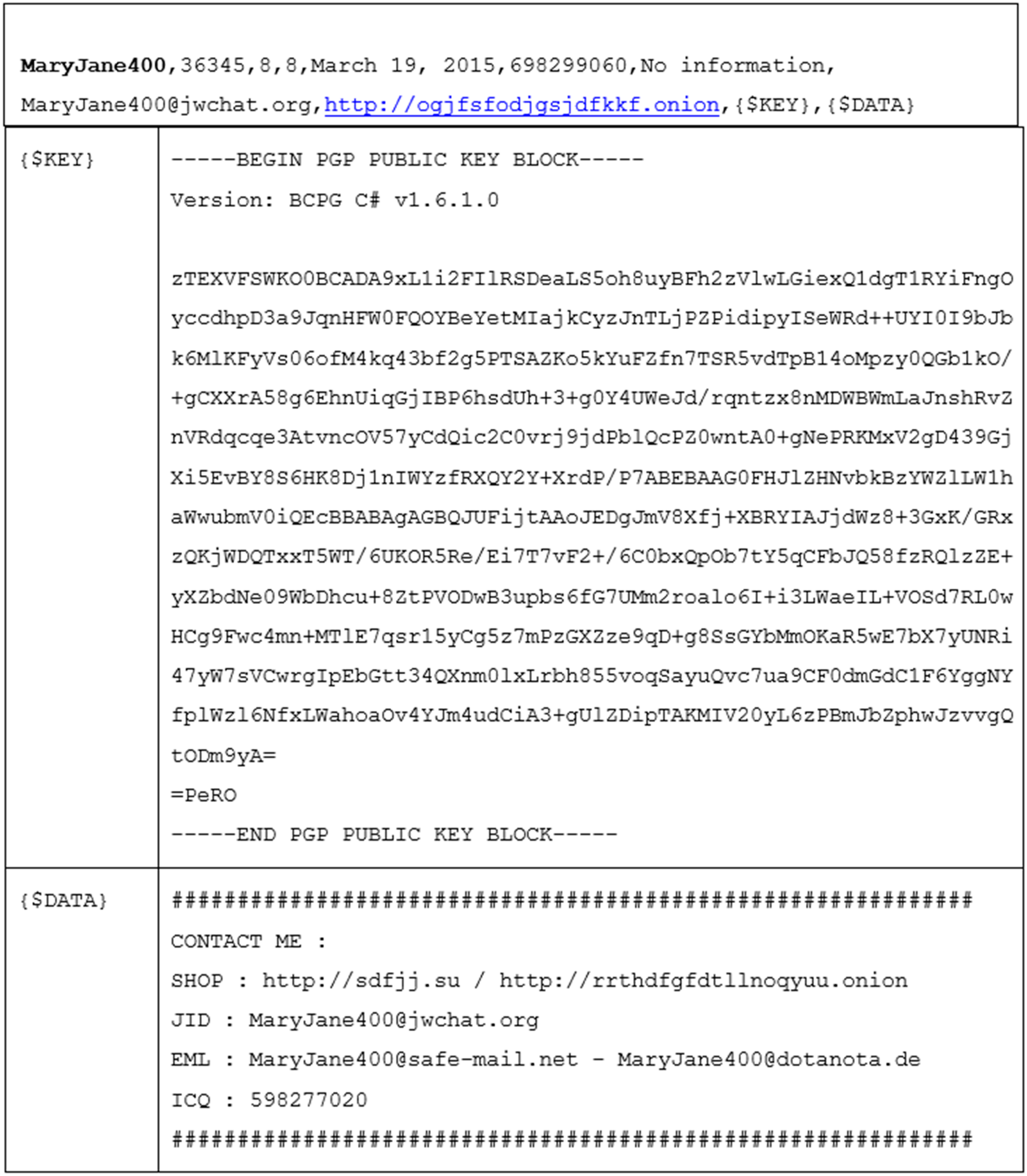

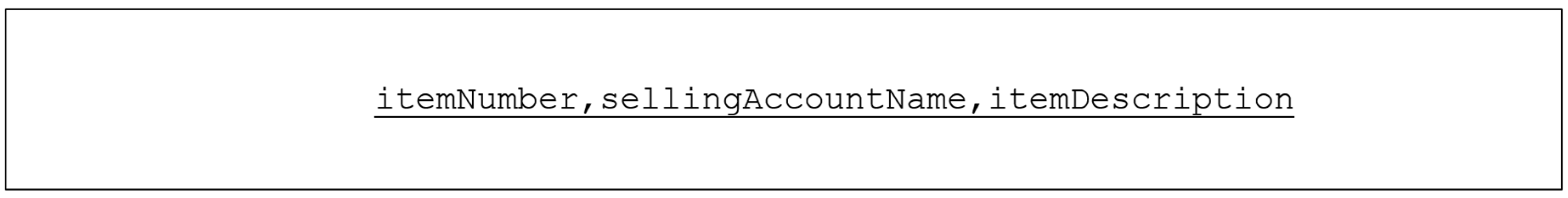

2. Background and Literature Review

3. Methodology

3.1. System Setup for the Dark Web

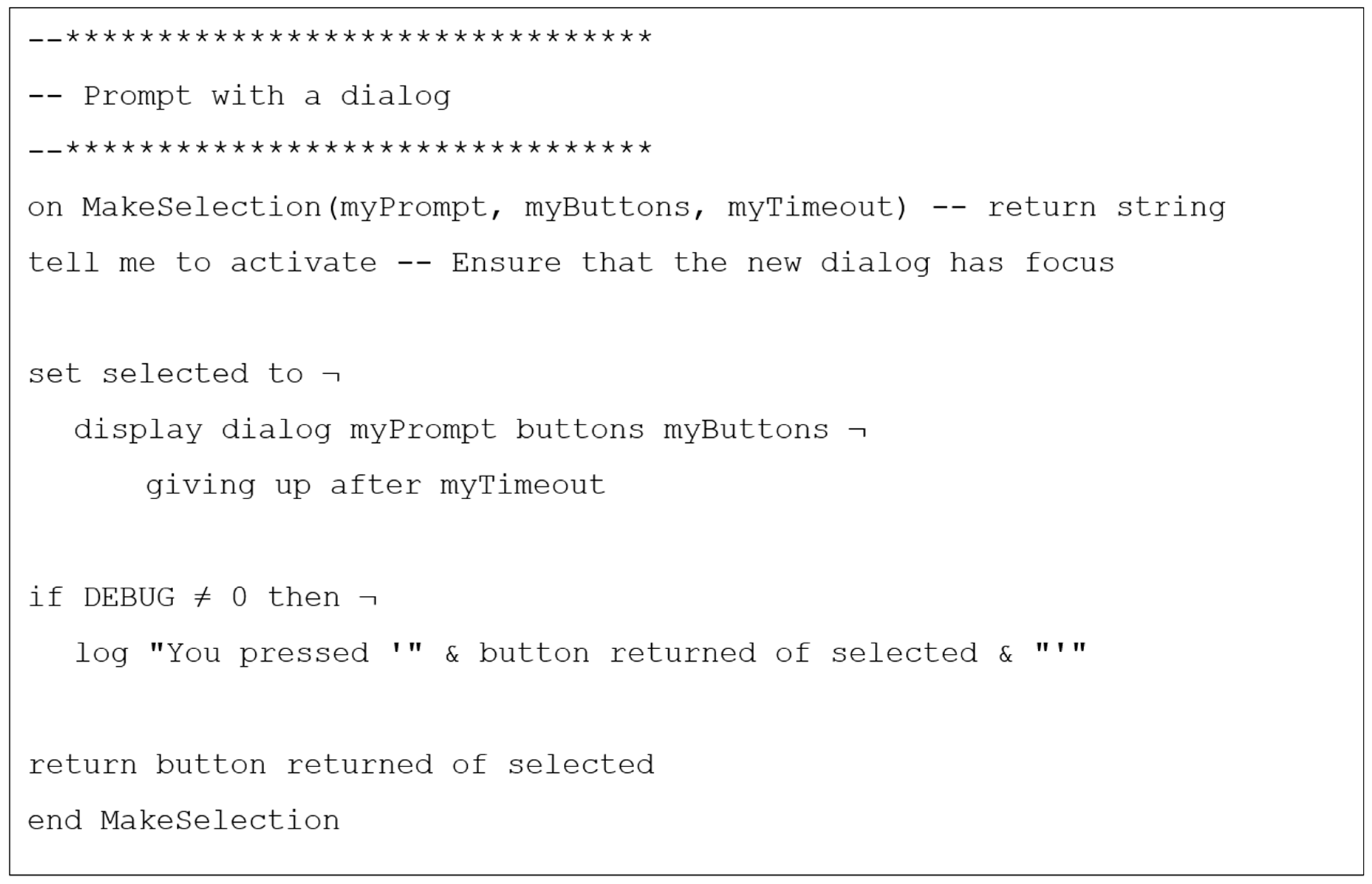

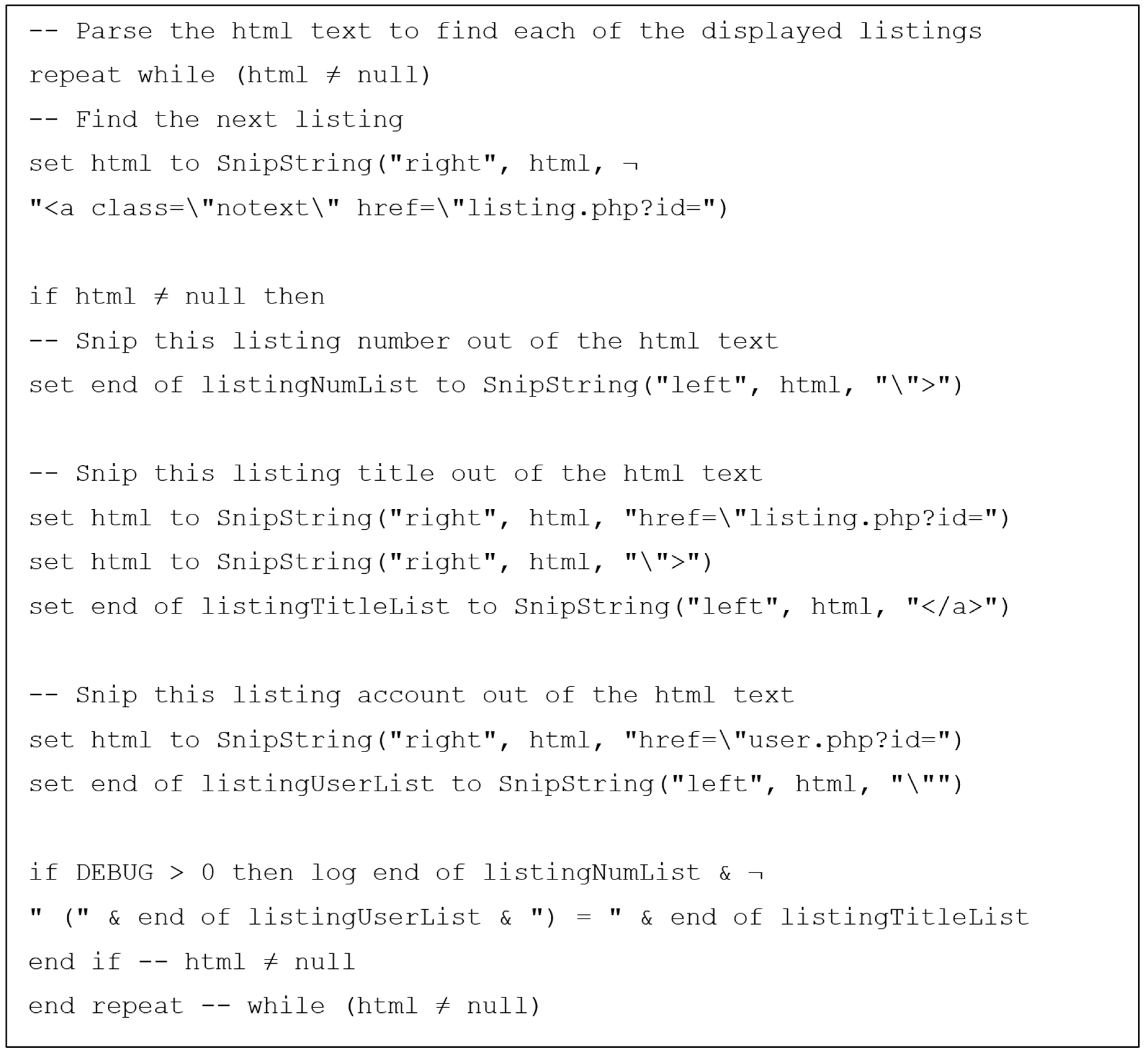

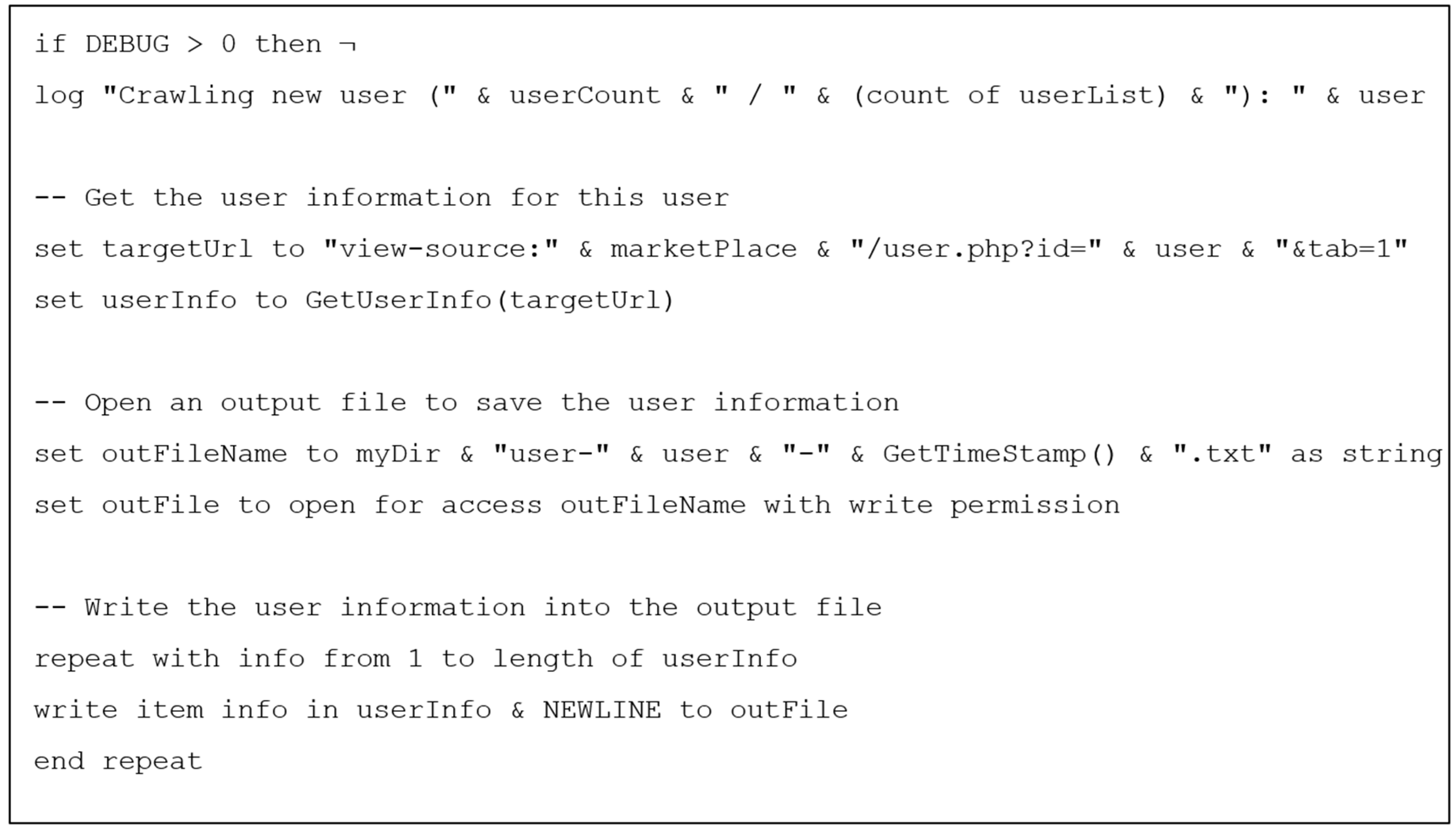

3.2. Creating a Web Crawler

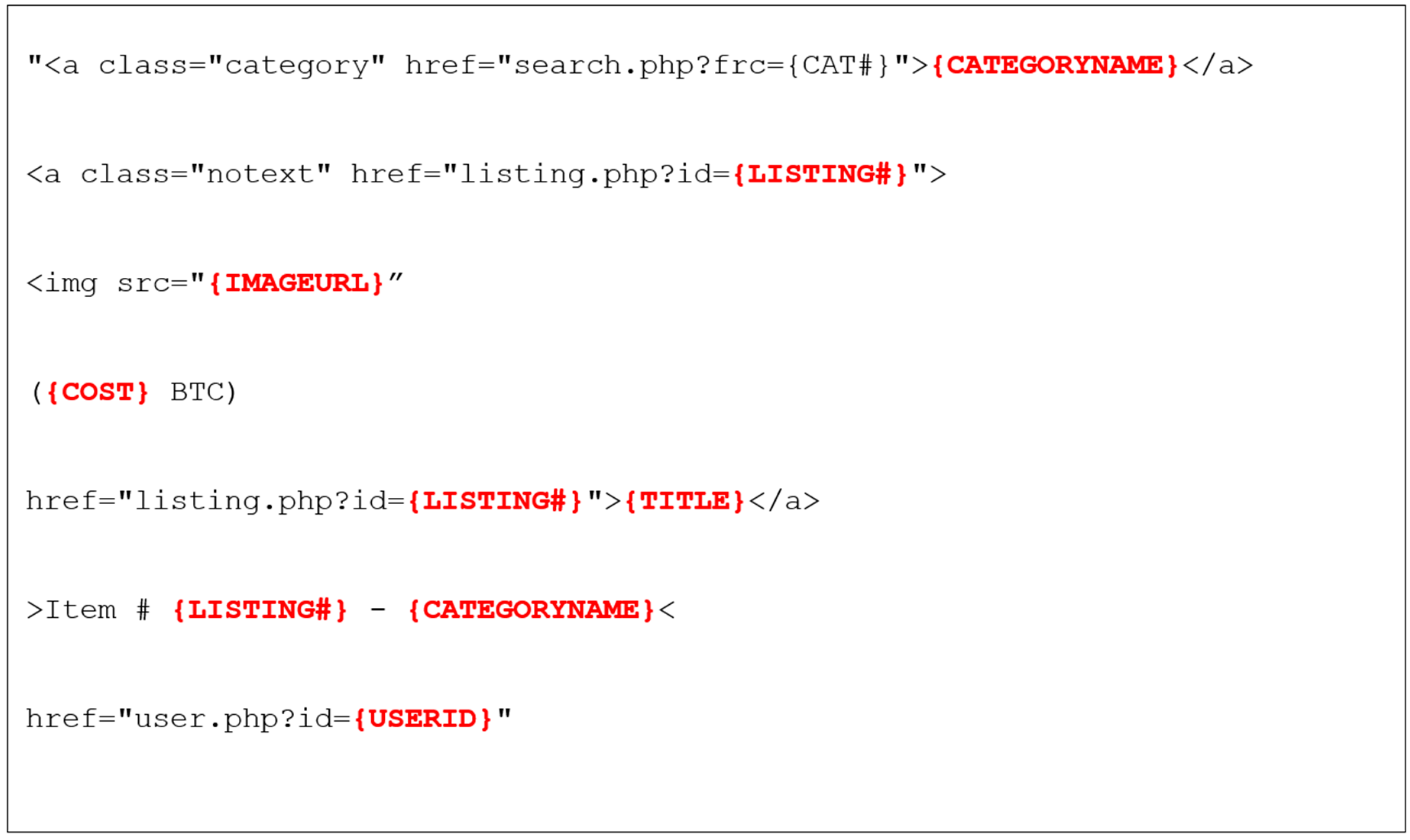

3.3. Determining the Structure of the Dark Web Marketplace

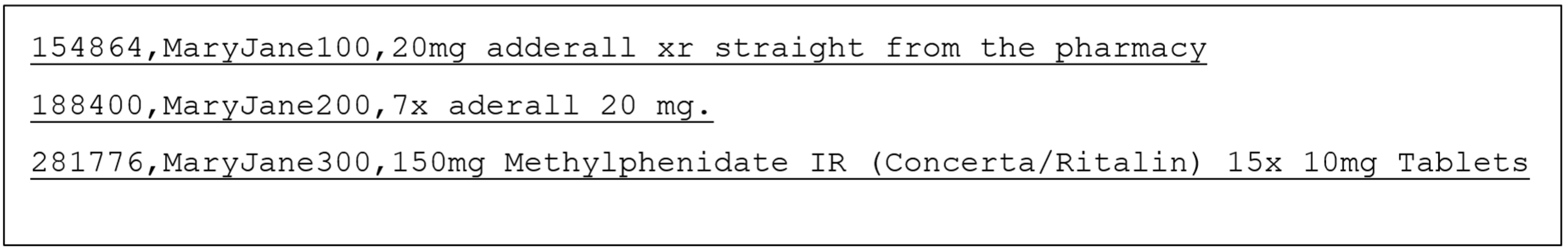

3.4. Investigating Marketplace Vendors

- Downloaded, installed and executed Maltego CE 4.0.11.9358 [63];

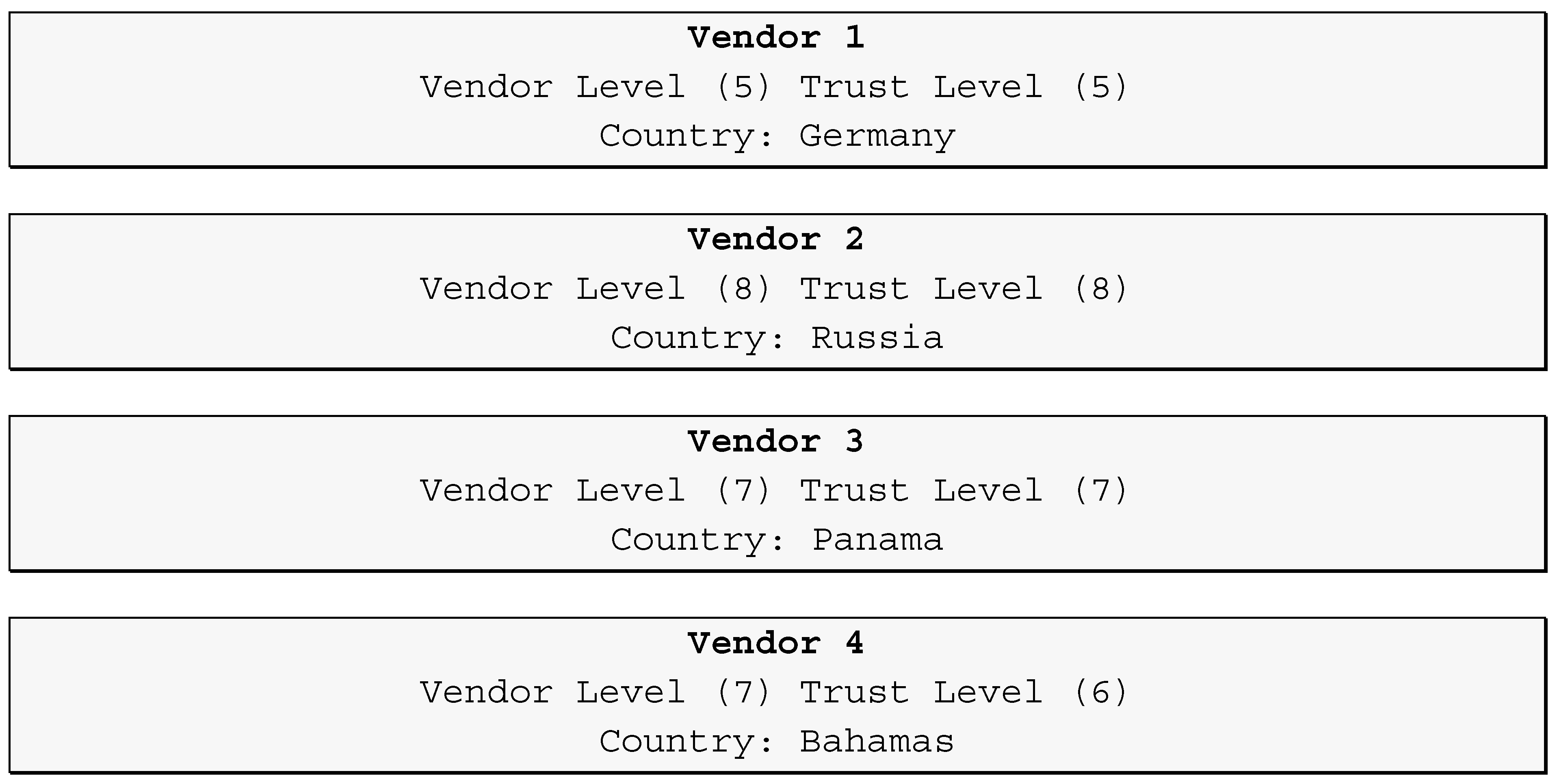

- Selected 50 vendor account names from the marketplace that warranted further examination. Note that the free Community Edition (CE) of Maltego only supports 50 entries at a time, whereas the paid version allows more concurrent searches. The criteria used to select these vendor account names included:

- Interesting or unique names that a vendor may re-use on the World Wide Web;

- Accounts with a high trust and vendor level, which generally indicates a more active vendor;

- Accounts in categories of particular interest, i.e., drugs or credit card fraud;

- Used the Maltego “Create a Graph” option to start a new investigation and entered the selected account names;

- Once the account names were imported into Maltego, ran the following transforms against them:

- “To Email Addresses [using Search Engine]”;

- “AliasToTwitterAccount”;

- “To Website [using Search Engine]”;

- “To Phone Numbers [using Search Engine]”;

- After the transforms finished running, used the “Export Graph to Table” option to export the results to a flat text file for further investigation;

- Manually parsed the resulting table in Microsoft Excel or a similar application and eliminated any obvious false positives. For example, “online@cvs.com” is almost certainly a generic email address legitimately used by CVS Drug Store and would not warrant further investigation;

- Combined the Maltego results with the address links already pulled directly from the marketplace, then used the Tor Browser, which protects the investigator’s privacy, to run Web searches on the results that appeared most interesting. Note that this process can be very tedious, as it requires manual examination and judgment calls about which leads are most likely to result in a successful identification. For example:

- Drilled down into Reddit, 4chan, Twitter, YouTube, or similar public sites to find alternate accounts used by the same person;

- Used www.whois.com to find domain name registration information for any Clear Web websites associated with these users; and

- Used Google Reverse Image Lookup to identify alternate versions/locations of any pictures identified as related to the users.
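Part of the vendor selection step can be scripted before the names are pasted into Maltego. The following Python sketch assumes the marketplace data has already been collected into hypothetical `Vendor` records with trust level, vendor level and category fields; the field names, the lowercase category labels and the `select_vendors` helper are illustrative assumptions, not the authors' tooling. It keeps only the categories of interest, ranks by trust and vendor level as a proxy for activity, and caps the shortlist at the 50-entry Maltego CE limit mentioned above.

```python
from dataclasses import dataclass

# Per the text, Maltego CE accepts 50 entries at a time.
MALTEGO_CE_LIMIT = 50

# Categories named in the text; the exact lowercase labels are an assumption
# about how a given marketplace spells them.
CATEGORIES_OF_INTEREST = {"drugs", "credit card fraud"}


@dataclass(frozen=True)
class Vendor:
    """Hypothetical record for one vendor taken from a marketplace listing."""
    name: str
    trust_level: int
    vendor_level: int
    category: str


def select_vendors(vendors, limit=MALTEGO_CE_LIMIT,
                   categories=CATEGORIES_OF_INTEREST):
    """Return up to `limit` unique vendor names, most active first.

    Only vendors in `categories` are considered; trust level, then vendor
    level, serve as a proxy for activity. Judging whether a name is
    distinctive enough to be reused elsewhere is left to the investigator.
    """
    candidates = [v for v in vendors if v.category.lower() in categories]
    candidates.sort(key=lambda v: (v.trust_level, v.vendor_level), reverse=True)

    shortlist, seen = [], set()
    for vendor in candidates:
        if len(shortlist) >= limit:
            break
        key = vendor.name.lower()
        if key not in seen:  # skip duplicate listings of the same alias
            seen.add(key)
            shortlist.append(vendor.name)
    return shortlist


if __name__ == "__main__":
    sample = [
        Vendor("AlphaVendor", 5, 4, "Drugs"),
        Vendor("CardKing", 3, 2, "Credit Card Fraud"),
        Vendor("ebooks4u", 5, 5, "Digital Goods"),
    ]
    print(select_vendors(sample))  # ['AlphaVendor', 'CardKing']
```

Ranking on a simple tuple keeps the heuristic transparent; the "interesting or unique name" criterion is deliberately not automated, since it depends on the investigator's judgement.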

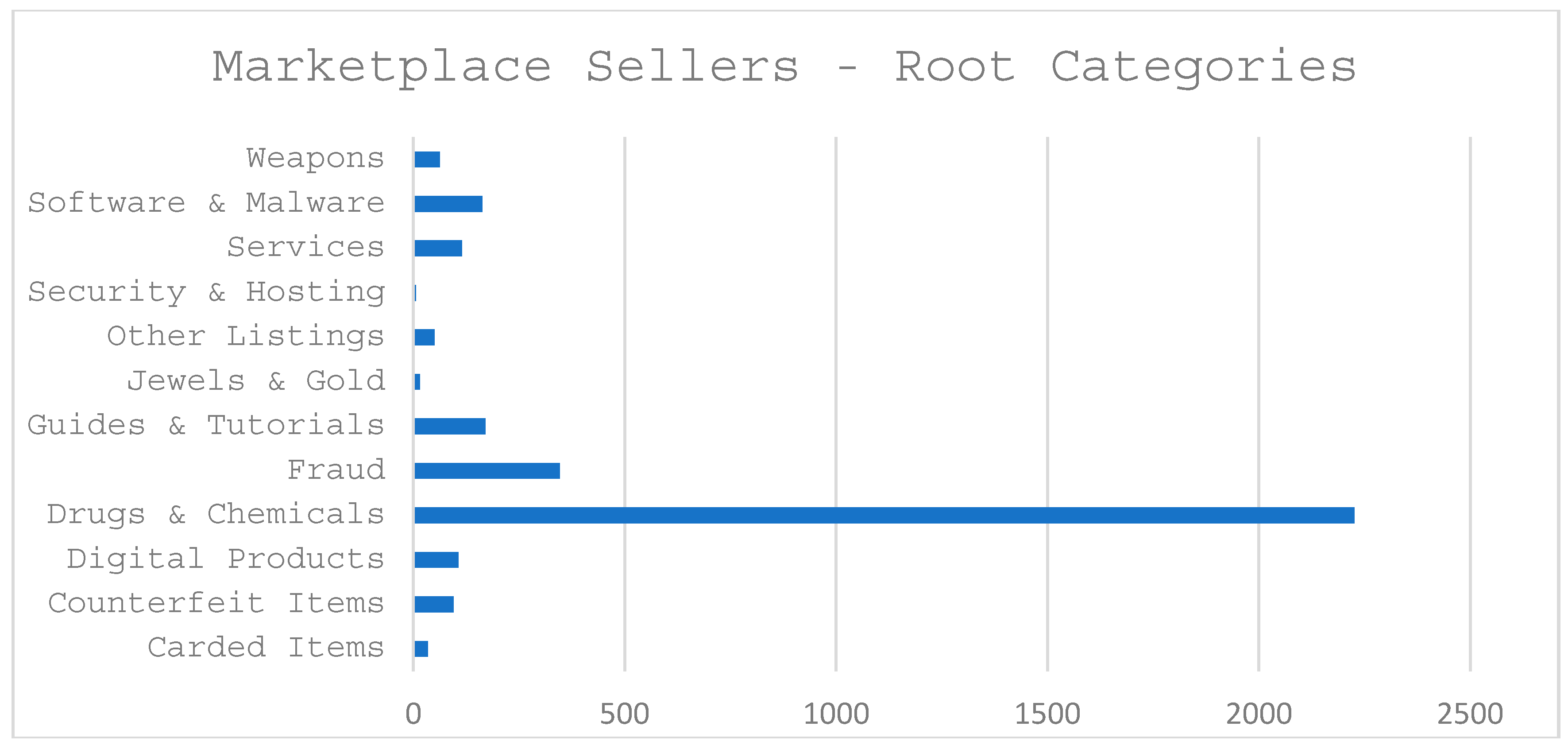
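The manual false-positive pass on the exported Maltego table, described in Section 3.4, can be pre-screened in code before the review in Excel. This minimal sketch assumes the export has been saved as a two-column CSV (alias, linked value); that layout, the `GENERIC_LOCAL_PARTS` list and the helper names are assumptions rather than Maltego's documented export format. It only sets aside role-based addresses such as online@cvs.com; every remaining row still needs an investigator's judgement.

```python
import csv
import io

# Hypothetical list of role-based mailbox names that usually belong to an
# organisation rather than an individual (e.g. online@cvs.com).
GENERIC_LOCAL_PARTS = {
    "admin", "contact", "help", "info", "no-reply", "noreply",
    "online", "sales", "support", "webmaster",
}


def is_generic_email(value):
    """Return True if `value` looks like a role-based email address."""
    local, sep, domain = value.strip().lower().partition("@")
    return bool(sep and local and domain) and local in GENERIC_LOCAL_PARTS


def filter_export(csv_text, has_header=False):
    """Split exported (alias, value) rows into kept and discarded lists.

    The two-column CSV layout is an assumption; adjust the column indices
    to match the actual export.
    """
    kept, discarded = [], []
    rows = csv.reader(io.StringIO(csv_text))
    if has_header:
        next(rows, None)  # drop the header row, if present
    for row in rows:
        if len(row) < 2:
            continue  # blank or malformed line
        alias, value = row[0].strip(), row[1].strip()
        if is_generic_email(value):
            discarded.append((alias, value))
        else:
            kept.append((alias, value))
    return kept, discarded


if __name__ == "__main__":
    export = (
        "AlphaVendor,online@cvs.com\n"
        "AlphaVendor,alpha.vendor77@example.org\n"
        "CardKing,@cardking\n"
    )
    kept, discarded = filter_export(export)
    print(discarded)  # [('AlphaVendor', 'online@cvs.com')]
```

Discarded rows are returned rather than silently dropped, so the investigator can still check the heuristic's decisions, in keeping with the manual review the method relies on.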

4. Results

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

References

- Hurlburt, G. Shining Light on the Dark Web. Computer 2017, 50, 100–105.

- Chertoff, M.; Simon, T. The Impact of the Dark Web on Internet Governance and Cyber Security; Centre for International Governance Innovation and Chatham House: Waterloo, ON, Canada, 2015.

- Weimann, G. Going dark: Terrorism on the dark web. Stud. Confl. Terror. 2016, 39, 195–206.

- Mansfield-Devine, S. Darknets. Comput. Fraud Secur. 2009, 2009, 4–6.

- Song, J.; Lee, Y.; Choi, J.W.; Gil, J.M.; Han, J.; Choi, S.S. Practical in-depth analysis of IDS alerts for tracing and identifying potential attackers on darknet. Sustainability 2017, 9, 262.

- Robertson, J.; Diab, A.; Marin, E.; Nunes, E.; Paliath, V.; Shakarian, J.; Shakarian, P. Darknet Mining and Game Theory for Enhanced Cyber Threat Intelligence. Cyber Def. Rev. 2016, 1, 95–122.

- Robertson, J.; Diab, A.; Marin, E.; Nunes, E.; Paliath, V.; Shakarian, J.; Shakarian, P. Darkweb Cyber Threat Intelligence Mining; Cambridge University Press: Cambridge, UK, 2017.

- Bradbury, D. Unveiling the dark web. Netw. Secur. 2014, 2014, 14–17.

- Broséus, J.; Rhumorbarbe, D.; Mireault, C.; Ouellette, V.; Crispino, F.; Décary-Hétu, D. Studying illicit drug trafficking on Darknet markets: Structure and organisation from a Canadian perspective. Forensic Sci. Int. 2016, 264, 7–14.

- Jardine, E. Privacy, censorship, data breaches and Internet freedom: The drivers of support and opposition to Dark Web technologies. New Media Soc. 2017.

- George, A. Shopping on the dark net. New Sci. 2015, 228, 41.

- Aceto, G.; Pescapé, A. Internet Censorship detection: A survey. Comput. Netw. 2015, 83, 381–421.

- Pescapé, A.; Montieri, A.; Aceto, G.; Ciuonzo, D. Anonymity Services Tor, I2P, JonDonym: Classifying in the Dark (Web). In Proceedings of the 2017 29th International Teletraffic Congress (ITC 29), Genoa, Italy, 4–8 September 2017.

- Tor Project. Available online: https://www.torproject.org/ (accessed on 9 September 2017).

- Scholz, R.W. Sustainable digital environments: What major challenges is humankind facing? Sustainability 2016, 8, 726.

- Rhumorbarbe, D.; Staehli, L.; Broséus, J.; Rossy, Q.; Esseiva, P. Buying drugs on a Darknet market: A better deal? Studying the online illicit drug market through the analysis of digital, physical and chemical data. Forensic Sci. Int. 2016, 267, 173–182.

- European Monitoring Centre for Drugs and Drug Addiction. European Drug Report: Trends and Developments; European Monitoring Centre for Drugs and Drug Addiction: Lisbon, Portugal, 2017.

- Lacson, W.; Jaishankar, K.; Jones, B. The 21st Century DarkNet Market: Lessons from the Fall of Silk Road. Int. J. Cyber Criminol. 2016, 10, 40–61.

- Broséus, J.; Morelato, M.; Tahtouh, M.; Roux, C. Forensic drug intelligence and the rise of cryptomarkets. Part I: Studying the Australian virtual market. Forensic Sci. Int. 2017, 279, 288–301.

- Van Hout, M.C.; Bingham, T. “Silk Road”, the virtual drug marketplace: A single case study of user experiences. Int. J. Drug Policy 2013, 24, 385–391.

- Ahmed, M.; Litchfield, A.T. Taxonomy for Identification of Security Issues in Cloud Computing Environments. J. Comput. Inf. Syst. 2016, 1–10.

- Revell, T. US guns sold in Europe via dark web. New Sci. 2017, 235, 12.

- Pergolizzi, J.V.; LeQuang, J.A.; Taylor, R.; Raffa, R.B. The “Darknet”: The new street for street drugs. J. Clin. Pharm. Ther. 2017, 42, 790–792.

- Kirkpatrick, K. Financing the dark web. Commun. ACM 2017, 60, 21–22.

- Masoni, M.; Guelfi, M.R.; Gensini, G.F. Darknet and bitcoin, the obscure and anonymous side of the internet in healthcare. Technol. Health Care 2016, 24, 969–972.

- Soska, K.; Christin, N. Measuring the Longitudinal Evolution of the Online Anonymous Marketplace Ecosystem. In Proceedings of the 24th USENIX Security Symposium, Washington, DC, USA, 12–14 August 2015; pp. 33–48.

- Tor Metrics. Available online: https://metrics.torproject.org/hidserv-dir-onions-seen.html (accessed on 2 March 2018).

- Dainotti, A.; Pescapé, A.; Claffy, K. Issues and future directions in traffic classification. IEEE Netw. 2012, 26, 35–40.

- Dainotti, A.; Pescapé, A.; Sansone, C. Early classification of network traffic through multi-classification. In Traffic Monitoring and Analysis TMA 2011. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6613, pp. 122–135.

- Park, J.H.; Chao, H.C. Advanced IT-based Future sustainable computing. Sustainability 2017, 9, 757.

- Chen, H.; Chung, W.; Qin, J.; Reid, E.; Sageman, M.; Weimann, G. Uncovering the dark Web: A case study of Jihad on the Web. J. Am. Soc. Inf. Sci. Technol. 2008, 59, 1347–1359.

- Zulkarnine, A.T.; Frank, R.; Monk, B.; Mitchell, J.; Davies, G. Surfacing collaborated networks in dark web to find illicit and criminal content. In Proceedings of the 2016 IEEE Conference on Intelligence and Security Informatics (ISI), Tucson, AZ, USA, 27–30 September 2016; pp. 109–114.

- Spitters, M.; Klaver, F.; Koot, G.; van Staalduinen, M. Authorship Analysis on Dark Marketplace Forums. In Proceedings of the 2015 European Intelligence and Security Informatics Conference, Manchester, UK, 7–9 September 2015; pp. 1–8.

- DarkOwl. Available online: https://www.darkowl.com/ (accessed on 28 June 2018).

- Intelliagg. Available online: https://www.intelliagg.com/ (accessed on 28 June 2018).

- Fiss, P.C.; Hirsch, P.M. The discourse of globalization: Framing and sensemaking of an emerging concept. Am. Sociol. Rev. 2005, 70, 29–52.

- Lee, J.K. Research Framework for AIS Grand Vision of the Bright ICT Initiative. MIS Q. 2015, 39, 3–13.

- Moloney, P. Dark Net Drug Marketplaces Begin to Emulate Organised Street Crime; National Drug and Alcohol Research Centre: Sydney, Australia, 2016.

- Dolliver, D.S. Evaluating drug trafficking on the Tor Network: Silk Road 2, the sequel. Int. J. Drug Policy 2015, 26, 1113–1123.

- Kim, W.; Jeong, O.R.; Kim, C.; So, J. The dark side of the Internet: Attacks, costs and responses. Inf. Syst. 2011, 36, 675–705.

- Koch, R. The Darkweb – A Growing Risk for Military Operations? Information 2018, in press.

- Harrison, J.R.; Roberts, D.L.; Hernandez-Castro, J. Assessing the extent and nature of wildlife trade on the dark web. Conserv. Biol. 2016, 30, 900–904.

- Dalins, J.; Wilson, C.; Carman, M. Criminal motivation on the dark web: A categorisation model for law enforcement. Digit. Investig. 2018, 24, 62–71.

- Van Hout, M.C.; Bingham, T. Responsible vendors, intelligent consumers: Silk Road, the online revolution in drug trading. Int. J. Drug Policy 2014, 25, 183–189.

- Christin, N. Traveling the Silk Road. In Proceedings of the 22nd International Conference on World Wide Web (WWW ’13), Rio de Janeiro, Brazil, 13–17 May 2013; ACM Press: New York, NY, USA, 2013; pp. 213–224.

- Edwards, M.J.; Rashid, A.; Rayson, P. A Service-Independent Model for Linking Online User Profile Information. In Proceedings of the 2014 IEEE Joint Intelligence and Security Informatics Conference, The Hague, The Netherlands, 24–26 September 2014; pp. 280–283.

- Phelps, A.; Watt, A. I shop online – Recreationally! Internet anonymity and Silk Road enabling drug use in Australia. Digit. Investig. 2014, 11, 261–272.

- Qin, J.; Zhou, Y.; Chen, H. A multi-region empirical study on the internet presence of global extremist organizations. Inf. Syst. Front. 2011, 13, 75–88.

- Lacey, D.; Salmon, P.M. It’s Dark in There: Using Systems Analysis to Investigate Trust and Engagement in Dark Web Forums. In Proceedings of the International Conference on Engineering Psychology and Cognitive Ergonomics, Los Angeles, CA, USA, 2–7 August 2015; Springer: Cham, Switzerland, 2015; pp. 117–128.

- UNODC. World Drug Report; UNODC: Vienna, Austria, 2016.

- Reddit. Available online: https://www.reddit.com/r/darknetmarkets (accessed on 9 September 2017).

- Zetter, K. New “Google” for the Dark Web Makes Buying Dope and Guns Easy. Available online: https://www.wired.com/2014/04/grams-search-engine-dark-web/ (accessed on 26 October 2017).

- Zillman, M.P. Deep Web Research and Discovery Resources 2017; Deep Web: Naples, FL, USA, 2017.

- Defense Advanced Research Projects Agency. Memex. Available online: https://www.darpa.mil/program/memex (accessed on 5 October 2017).

- Zetter, K. Darpa Is Developing a Search Engine for the Dark Web. WIRED. Available online: https://www.wired.com/2015/02/darpa-memex-dark-web/ (accessed on 26 October 2017).

- Mahto, D.K.; Singh, L. A Dive into Web Scraper World. In Proceedings of the 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 16–18 March 2016.

- Baravalle, A.; Lopez, M.S.; Lee, S.W. Mining the Dark Web: Drugs and Fake Ids. In Proceedings of the IEEE International Conference on Data Mining Workshops, ICDMW, New Orleans, LA, USA, 11–20 November 2017; pp. 350–356.

- Wanjala, G.W.; Kahonge, A.M. Social Media Forensics for Hate Speech Opinion Mining. Int. J. Comput. Appl. 2016, 155, 975–8887.

- Plachouras, V.; Carpentier, F.; Faheem, M.; Masanès, J.; Risse, T.; Senellart, P.; Siehndel, P.; Stavrakas, Y. ARCOMEM Crawling Architecture. Future Internet 2014, 6, 518–541.

- Unix & Linux Stack Exchange. Anonymous URL Navigation in Command Line? Available online: https://unix.stackexchange.com/questions/87156/anonymous-url-navigation-in-command-line (accessed on 30 May 2018).

- Landhäußer, M.; Weigelt, S.; Tichy, W.F. NLCI: A natural language command interpreter. Autom. Softw. Eng. 2016, 24, 839–861.

- Hayes, D.; Cappa, F. Open Source Intelligence for Risk Assessment. Bus. Horiz. 2018, in press.

- Paterva. Maltego. Available online: https://www.paterva.com/web7/ (accessed on 11 September 2017).

- Tarafdar, M.; Gupta, A.; Turel, O. Special issue on ‘Dark side of information technology use’: An introduction and a framework for research. Inf. Syst. J. 2015, 25, 161–170.

- George, J.F.; Derrick, D.; Marett, K.; Harrison, A.; Thatcher, J.B. The dark internet: Without darkness there is no light. In Proceedings of the AMCIS 2016: Surfing the IT Innovation Wave – 22nd Americas Conference on Information Systems, San Diego, CA, USA, 11–14 August 2016.

- Roberts, N.C. Tracking and disrupting dark networks: Challenges of data collection and analysis. Inf. Syst. Front. 2011, 13, 5–19.

- Hartong, M.; Goel, R.; Wijesekera, D. Security and the US rail infrastructure. Int. J. Crit. Infrastruct. Prot. 2008, 1, 15–28.

- Rice, M.; Miller, R.; Shenoi, S. May the US government monitor private critical infrastructure assets to combat foreign cyberspace threats? Int. J. Crit. Infrastruct. Prot. 2011, 4, 3–13.

- Shackelford, S.J. Business and cyber peace: We need you! Bus. Horiz. 2016, 59, 539–548.

- Parent, M.; Cusack, B. Cybersecurity in 2016: People, technology, and processes. Bus. Horiz. 2016, 59, 567–569.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hayes, D.R.; Cappa, F.; Cardon, J. A Framework for More Effective Dark Web Marketplace Investigations. Information 2018, 9, 186. https://doi.org/10.3390/info9080186
