1. Introduction

Decision making can be regarded as the process of choosing the best alternative from a set of feasible alternatives. As research has developed, decision making has been extended from a single attribute to multiple attributes. Various theories, such as fuzzy sets, rough sets and utility theory, have been used to solve multiple attribute decision making problems, and many significant results [1,2,3,4,5,6,7,8] have been achieved in this field. Researchers in rough set theory [9] are usually concerned with the attribute reduction (or feature selection) problem of multiple attribute decision making. The binary discernibility matrix, proposed by Felix and Ushio [10], is a useful tool for attribute reduction and knowledge acquisition, and many attribute reduction algorithms based on binary discernibility matrices have been developed recently [11,12,13]. In 2014, Zhang et al. [14] proposed a binary discernibility matrix for incomplete information systems and designed a novel attribute reduction algorithm based on it. Li et al. [15] developed an attribute reduction algorithm in terms of an improved binary discernibility matrix and applied it to customer relationship management. Tiwari et al. [16] developed hardware for a binary discernibility matrix that can be used for attribute reduction and rule acquisition in an information system. Considering the mathematical properties of a binary discernibility matrix, Zhi and Miao [17] introduced the so-called binary discernibility matrix reduction (BDMR), which is in fact an algorithm for binary discernibility matrix simplification; on the basis of BDMR, two algorithms, for attribute reduction and for reduct judgement, were presented. A binary discernibility matrix with a vertical partition [18] was proposed to handle big data in attribute reduction. Ren et al. [19] constructed an improved binary discernibility matrix that can be used in inconsistent information systems. Ding et al. [20] discussed several problems concerning binary discernibility matrices in incomplete systems and, combining the binary discernibility matrix in an incomplete system, proposed an algorithm of incremental attribute reduction. In [20], a novel method for computing incremental core attributes was first introduced, and an attribute reduction algorithm was then proposed on this basis. As is well known, core attributes play a crucial role in heuristic attribute reduction algorithms, but computing them is expensive. Hu et al. [21] gave a quick algorithm for computing core attributes using a binary discernibility matrix and analyzed its computational complexity in terms of $|C|$, the number of condition attributes, and $|U|$, the number of objects in the universe.

An original binary discernibility matrix usually contains redundant objects and attributes, which may deteriorate the performance of feature selection (attribute reduction) and knowledge acquisition based on binary discernibility matrices. In other words, storing or processing all objects and attributes of an original binary discernibility matrix can be computationally expensive, especially for large-scale, high-dimensional data sets. So far, however, few works have investigated binary discernibility matrix simplification, and the existing simplification algorithms are time-consuming. To tackle this problem, this paper focuses on improving the time efficiency of binary discernibility matrix simplification. For this purpose, we construct deterministic finite automata in a binary discernibility matrix to compare the relationships between different rows (or columns) quickly, and use them to develop a quick algorithm of binary discernibility matrix simplification. Experimental results show that the proposed algorithm is effective and efficient. The contributions of this paper are summarized as follows. First, we define row and column relations that can be used to construct deterministic finite automata in a binary discernibility matrix. Second, we propose deterministic finite automata in a binary discernibility matrix to compare the relationships between different rows (or columns) quickly. Third, based on this method, we propose a quick algorithm for binary discernibility matrix simplification using deterministic finite automata (BDMSDFA). The proposed method is also meaningful in practical applications. First, BDMSDFA obtains simplified binary discernibility matrices quickly, and these simplified matrices can significantly improve the efficiency of attribute reduction (feature selection) in decision systems. Second, a binary discernibility matrix without redundant objects and attributes can improve the performance of learning algorithms and requires less storage space.

The rest of this paper is structured as follows. Section 2 reviews basic notions of rough set theory. In Section 3, we propose a general binary discernibility matrix and define row relations and column relations in a binary discernibility matrix. In Section 4, we develop a quick algorithm for binary discernibility matrix simplification, called BDMSDFA. Experimental results in Section 5 show that BDMSDFA is effective and efficient, and that it is applicable to the simplification of large-scale binary discernibility matrices. Finally, Section 6 summarizes the paper.

2. Preliminaries

Basic notions of rough set theory are briefly reviewed in this section; further details can be found in [9]. A Pawlak decision system can be regarded as an information system with decision attributes, which assign decision classes to objects.

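A Pawlak decision system [9] can be denoted by a 4-tuple $S = (U, A, V, f)$, where the universe $U$ is a finite non-empty set of objects; the attribute set $A = C \cup D$, $C \cap D = \emptyset$, where $C$ is called the condition attribute set and $D$ is called the decision attribute set of the decision system; $V = \bigcup_{a \in A} V_a$, where $V_a$ is the domain of an attribute $a \in A$; and $f: U \times A \to V$ is a function such that $f(x, a) \in V_a$ for every $x \in U$, where $a \in A$.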

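Given a Pawlak decision system $S$, for $B \subseteq A$, the indiscernibility relation regarding the attribute set $B$ is defined as $IND(B) = \{(x, y) \in U \times U \mid \forall a \in B, f(x, a) = f(y, a)\}$. Correspondingly, the discernibility relation regarding $B$ is given by $DIS(B) = \{(x, y) \in U \times U \mid \exists a \in B, f(x, a) \neq f(y, a)\} = (U \times U) - IND(B)$. The indiscernibility relation $IND(B)$ is reflexive, symmetric and transitive, whereas the discernibility relation $DIS(B)$ is irreflexive and symmetric, but not transitive. The partition of $U$ induced by $IND(B)$ is denoted by $U/IND(B)$ (or $U/B$), and the equivalence class in $U/IND(B)$ containing object $x$ is $[x]_B = \{y \in U \mid (x, y) \in IND(B)\}$.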
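To make these relations concrete, the following Python sketch computes $IND(B)$, $DIS(B)$ and $U/IND(B)$ on a small table. The table `TABLE` and the function names `ind`, `dis` and `partition` are hypothetical illustrations, not part of the original text; object pairs are ordered, as in $U \times U$.

```python
from itertools import product

# Hypothetical toy decision table: object -> {attribute: value}.
# a, b, c are condition attributes; d is the decision attribute.
TABLE = {
    "x1": {"a": 0, "b": 1, "c": 0, "d": "yes"},
    "x2": {"a": 0, "b": 1, "c": 1, "d": "no"},
    "x3": {"a": 1, "b": 0, "c": 1, "d": "no"},
    "x4": {"a": 0, "b": 1, "c": 0, "d": "yes"},
}


def ind(table, attrs):
    """IND(B): ordered pairs (x, y) that agree on every attribute in attrs."""
    return {(x, y) for x, y in product(table, repeat=2)
            if all(table[x][a] == table[y][a] for a in attrs)}


def dis(table, attrs):
    """DIS(B) = (U x U) - IND(B): pairs that differ on some attribute in attrs."""
    return set(product(table, repeat=2)) - ind(table, attrs)


def partition(table, attrs):
    """U/IND(B): group objects by their value vector on attrs."""
    classes = {}
    for x in table:
        key = tuple(table[x][a] for a in attrs)
        classes.setdefault(key, []).append(x)
    return list(classes.values())


print(partition(TABLE, ["a", "b", "c"]))  # [['x1', 'x4'], ['x2'], ['x3']]
```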

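For $B \subseteq C$, the relative indiscernibility relation and the relative discernibility relation with respect to the decision attribute set $D$ [9] are defined by:

$$IND(B|D) = \{(x, y) \in U \times U \mid (x, y) \in IND(B) \vee (x, y) \in IND(D)\},$$

$$DIS(B|D) = \{(x, y) \in U \times U \mid (x, y) \in DIS(B) \wedge (x, y) \in DIS(D)\}.$$

The relative indiscernibility relation with respect to $D$ is reflexive and symmetric, but not transitive. The relative discernibility relation with respect to $D$ is irreflexive and symmetric, but not transitive.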
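The failure of transitivity for the relative indiscernibility relation can be seen on a toy example. This is a minimal sketch under assumed data: `TABLE`, `ind_rel` and `dis_rel` are hypothetical names, and $B = \{a\}$, $D = \{d\}$ are chosen only for illustration.

```python
from itertools import product

# Hypothetical toy decision table: a, b, c condition attributes, d decision.
TABLE = {
    "x1": {"a": 0, "b": 1, "c": 0, "d": "yes"},
    "x2": {"a": 0, "b": 1, "c": 1, "d": "no"},
    "x3": {"a": 1, "b": 0, "c": 1, "d": "no"},
    "x4": {"a": 0, "b": 1, "c": 0, "d": "yes"},
}


def ind(table, attrs):
    """IND(B): ordered pairs that agree on every attribute in attrs."""
    return {(x, y) for x, y in product(table, repeat=2)
            if all(table[x][a] == table[y][a] for a in attrs)}


def ind_rel(table, B, D):
    """IND(B|D): pairs indiscernible on B or indiscernible on D."""
    return ind(table, B) | ind(table, D)


def dis_rel(table, B, D):
    """DIS(B|D): pairs discernible on B and discernible on D."""
    everything = set(product(table, repeat=2))
    return (everything - ind(table, B)) & (everything - ind(table, D))


rel = ind_rel(TABLE, ["a"], ["d"])
# (x1, x2) agree on a and (x2, x3) agree on d, but (x1, x3) agree on neither.
print(("x1", "x2") in rel, ("x2", "x3") in rel, ("x1", "x3") in rel)  # True True False
```

The last line exhibits a counterexample to transitivity, and `dis_rel` is exactly the complement of `ind_rel` in $U \times U$.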

A discernibility matrix, proposed by Skowron and Rauszer [22], provides a matrix representation for storing the condition attribute sets that can discern objects in the universe. The discernibility matrix is an effective method for reduct construction, data representation and rough logic reasoning, and it is also a useful mathematical tool in data mining, machine learning, etc. Many extended models of discernibility matrices have been studied in recent years [23,24,25,26,27,28,29,30]. Considering a classification property $\Delta$, Miao et al. [31] constructed a general discernibility matrix $M_\Delta = (m_\Delta(x, y))$, where $m_\Delta(x, y)$ is defined by:

$$m_\Delta(x, y) = \begin{cases} \{a \in C \mid f(x, a) \neq f(y, a)\}, & (x, y) \in DIS(\Delta), \\ \emptyset, & \text{otherwise}, \end{cases}$$

where $(x, y) \in DIS(\Delta)$ denotes that objects $x$ and $y$ are discernible with respect to the classification property $\Delta$ in a decision system $S$. It should be noted that $\Delta$ is a general notion of classification property. A general discernibility matrix provides a common framework for attribute reduction algorithms based on discernibility matrices: by constructing different discernibility matrices, relative attribute reducts with different reduction targets can be obtained.
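A general discernibility matrix can be coded with the classification property passed in as a predicate, which mirrors how different choices of $\Delta$ give different matrices. The sketch below assumes $\Delta$ is supplied as a Python function deciding membership in $DIS(\Delta)$; the table, the helper names and the choice $\Delta = DIS(C|D)$ in the example are illustrative assumptions.

```python
from itertools import combinations

# Hypothetical toy decision table: a, b, c condition attributes, d decision.
TABLE = {
    "x1": {"a": 0, "b": 1, "c": 0, "d": "yes"},
    "x2": {"a": 0, "b": 1, "c": 1, "d": "no"},
    "x3": {"a": 1, "b": 0, "c": 1, "d": "no"},
    "x4": {"a": 0, "b": 1, "c": 0, "d": "yes"},
}
CONDITIONS = ["a", "b", "c"]


def general_dm(table, conds, in_dis_delta):
    """General discernibility matrix m_Delta(x, y).

    in_dis_delta(table, x, y) must return True iff (x, y) is in DIS(Delta).
    Only pairs x < y (in table order) are stored, since the matrix is symmetric.
    """
    m = {}
    for x, y in combinations(table, 2):
        if in_dis_delta(table, x, y):
            m[(x, y)] = {a for a in conds if table[x][a] != table[y][a]}
        else:
            m[(x, y)] = set()
    return m


def dis_c_given_d(table, x, y):
    """One possible choice of Delta: the relative discernibility relation DIS(C|D)."""
    differ_on_c = any(table[x][a] != table[y][a] for a in CONDITIONS)
    return differ_on_c and table[x]["d"] != table[y]["d"]


M = general_dm(TABLE, CONDITIONS, dis_c_given_d)
print(sorted(M[("x1", "x3")]))  # ['a', 'b', 'c']
```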

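Based on the relative discernibility relation $DIS(C|D)$, Miao et al. [31] introduced a relationship preservation discernibility matrix, defined as follows:

Definition 1. [31] Let $S = (U, A = C \cup D, V, f)$ be a decision system. For $x, y \in U$, $M_{DIS(C|D)} = (m_{DIS(C|D)}(x, y))$ is a relationship preservation discernibility matrix, where $m_{DIS(C|D)}(x, y)$ is defined by:

$$m_{DIS(C|D)}(x, y) = \begin{cases} \{a \in C \mid f(x, a) \neq f(y, a)\}, & (x, y) \in DIS(C|D), \\ \emptyset, & \text{otherwise}. \end{cases}$$

3. Binary Discernibility Matrices and Their Simplifications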

The binary discernibility matrix, initiated by Felix and Ushio [10], is a binary representation of the original discernibility matrix. In this section, we suggest a general binary discernibility matrix and discuss relations of row pairs and column pairs, respectively. Formally, a binary discernibility matrix [10] is introduced as follows:

Definition 2. [10] Given a decision system $S = (U, A = C \cup D, V, f)$, for $x_i, x_j \in U$ and $a_k \in C$, $BM = (m((x_i, x_j), a_k))$ is a binary discernibility matrix, where the element $m((x_i, x_j), a_k)$ is defined by:

$$m((x_i, x_j), a_k) = \begin{cases} 1, & f(x_i, a_k) \neq f(x_j, a_k) \wedge f(x_i, D) \neq f(x_j, D), \\ 0, & \text{otherwise}. \end{cases}$$

Based on a binary discernibility matrix, the attributes that discern $x_i$ and $x_j$ can be easily obtained. A binary discernibility matrix offers an understandable representation of discernible attributes and can be used for designing reduction algorithms. To satisfy more application requirements, we extend the original binary discernibility matrix to a general binary discernibility matrix as follows:

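Definition 3. Given a decision system $S = (U, A = C \cup D, V, f)$, for $x_i, x_j \in U$ and $a_k \in C$, $BM_\Delta = (m_\Delta((x_i, x_j), a_k))$ regarding $\Delta$ is a general binary discernibility matrix, in which $m_\Delta((x_i, x_j), a_k)$ is defined by:

$$m_\Delta((x_i, x_j), a_k) = \begin{cases} 1, & (x_i, x_j) \in DIS(\Delta) \wedge f(x_i, a_k) \neq f(x_j, a_k), \\ 0, & \text{otherwise}, \end{cases}$$

where $DIS(\Delta)$ is the discernibility relation regarding the classification property $\Delta$. The set of rows in $BM_\Delta$ is denoted by $R = \{r_1, r_2, \ldots, r_{|R|}\}$, where each row corresponds to an object pair $(x_i, x_j)$. The set of columns in $BM_\Delta$ is denoted by $Col = \{c_1, c_2, \ldots, c_{|C|}\}$, where $|C|$ is the cardinality of the condition attribute set of the decision system. For convenience, a general binary discernibility matrix can also be denoted by $BM_\Delta = (m_{ik})_{|R| \times |C|}$. For $r_i, r_j \in R$ and $c_k \in Col$, $m_{ik}$ is the matrix element at row $r_i$ and column $c_k$ in $BM_\Delta$, and $m_{jk}$ is the matrix element at row $r_j$ and column $c_k$ in $BM_\Delta$, where $1 \le i, j \le |R|$ and $1 \le k \le |C|$.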

Since a general binary discernibility matrix provides a common structure for binary discernibility matrices in rough set theory, one can construct a binary discernibility matrix according to a given classification property, and any binary discernibility matrix can be regarded as a special case of the general binary discernibility matrix. Therefore, a general definition of the binary discernibility matrix is necessary and important. Based on the relative discernibility relation with respect to $D$, Definition 2 can also be rewritten as follows:

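Definition 4. Given a decision system $S = (U, A = C \cup D, V, f)$, for $x_i, x_j \in U$ and $a_k \in C$, let $DIS(C|D)$ be the relative discernibility relation of the condition attribute set $C$ with respect to $D$. $BM_{DIS(C|D)} = (m((x_i, x_j), a_k))$ is a binary discernibility matrix, in which the element is defined by:

$$m((x_i, x_j), a_k) = \begin{cases} 1, & (x_i, x_j) \in DIS(C|D) \wedge f(x_i, a_k) \neq f(x_j, a_k), \\ 0, & \text{otherwise}. \end{cases}$$

This definition is equivalent to Definition 2 [10]. Note that in this paper we calculate the binary discernibility matrix from the relationship preservation discernibility matrix.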
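Definition 4 translates directly into code: one row per object pair, one column per condition attribute. This hypothetical sketch (the names `binary_dm`, `TABLE`, `CONDITIONS` and `DECISION` are ours) builds rows for all unordered pairs in table order, so pairs outside $DIS(C|D)$ appear as all-zero rows, which step 1 of the simplification algorithms in this section removes.

```python
from itertools import combinations

# Hypothetical toy decision table: a, b, c condition attributes, d decision.
TABLE = {
    "x1": {"a": 0, "b": 1, "c": 0, "d": "yes"},
    "x2": {"a": 0, "b": 1, "c": 1, "d": "no"},
    "x3": {"a": 1, "b": 0, "c": 1, "d": "no"},
    "x4": {"a": 0, "b": 1, "c": 0, "d": "yes"},
}
CONDITIONS = ["a", "b", "c"]
DECISION = "d"


def binary_dm(table, conds, dec):
    """Return (row labels, 0/1 rows): one row per object pair, one column per attribute."""
    labels, rows = [], []
    for x, y in combinations(table, 2):
        differs = [table[x][a] != table[y][a] for a in conds]
        # (x, y) in DIS(C|D): discernible on C and on the decision attribute.
        in_dis = any(differs) and table[x][dec] != table[y][dec]
        labels.append((x, y))
        rows.append([1 if in_dis and diff else 0 for diff in differs])
    return labels, rows


labels, rows = binary_dm(TABLE, CONDITIONS, DECISION)
for label, row in zip(labels, rows):
    print(label, row)
```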

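Definition 5. For $r_i, r_j \in R$ and $c_k \in Col$, let $m_{ik}$ and $m_{jk}$ be elements of a binary discernibility matrix $BM$. A row pair with respect to attribute $c_k$ is denoted by $\langle m_{ik}, m_{jk} \rangle \in \{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$, and the binary relation between rows $r_i$ and $r_j$ is defined as the string $\rho(r_i, r_j) = \langle m_{i1}, m_{j1} \rangle \langle m_{i2}, m_{j2} \rangle \cdots \langle m_{i|C|}, m_{j|C|} \rangle$.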

Similar to Definition 5, we define a column pair and a binary relation with respect to columns as follows.

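Definition 6. For $c_i, c_j \in Col$ and $r_k \in R$, let $m_{ki}$ and $m_{kj}$ be elements of a binary discernibility matrix $BM$. A column pair with respect to row $r_k$ is denoted by $\langle m_{ki}, m_{kj} \rangle \in \{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$, and the binary relation between columns $c_i$ and $c_j$ is defined as the string $\sigma(c_i, c_j) = \langle m_{1i}, m_{1j} \rangle \langle m_{2i}, m_{2j} \rangle \cdots \langle m_{|R|i}, m_{|R|j} \rangle$.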

For the matrix elements $m_{ik}$ and $m_{jk}$ in the same column $c_k$, we define three row relations in a binary discernibility matrix as follows.

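Definition 7. Given a binary discernibility matrix $BM$ and $r_i, r_j \in R$,

- (1)

$r_i \subset r_j$ if and only if $m_{ik} \le m_{jk}$ for all $c_k \in Col$ and $m_{ik} < m_{jk}$ for some $c_k \in Col$; $r_i \supset r_j$ if and only if $m_{ik} \ge m_{jk}$ for all $c_k \in Col$ and $m_{ik} > m_{jk}$ for some $c_k \in Col$;

- (2)

$r_i = r_j$ if and only if $m_{ik} = m_{jk}$ for all $c_k \in Col$;

- (3)

$r_i \parallel r_j$ (the rows are incomparable) if and only if $m_{ik} < m_{jk}$ for some $c_k \in Col$ and $m_{il} > m_{jl}$ for some $c_l \in Col$.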
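Read literally, Definition 7 needs two element-wise comparisons per pair of rows. The hypothetical helper `row_relation` below (not from the paper) classifies two 0/1 rows this way; given two columns instead of two rows, the same function implements Definition 8.

```python
def row_relation(ri, rj):
    """Classify two equal-length 0/1 rows as 'subset', 'superset', 'equal' or 'incomparable'."""
    if len(ri) != len(rj):
        raise ValueError("rows must have the same length")
    le = all(a <= b for a, b in zip(ri, rj))   # m_ik <= m_jk for all k
    ge = all(a >= b for a, b in zip(ri, rj))   # m_ik >= m_jk for all k
    if le and ge:
        return "equal"
    if le:
        return "subset"        # r_i is a proper subset of r_j
    if ge:
        return "superset"      # r_i is a proper superset of r_j
    return "incomparable"      # some k with m_ik < m_jk and some l with m_il > m_jl


print(row_relation([0, 1, 0], [1, 1, 0]))  # subset
```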

Analogous to Definition 7, for matrix elements $m_{ki}$ and $m_{kj}$ in the same row $r_k$, we define column relations in a binary discernibility matrix as follows.

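Definition 8. Given a binary discernibility matrix $BM$ and $c_i, c_j \in Col$,

- (1)

$c_i \subset c_j$ if and only if $m_{ki} \le m_{kj}$ for all $r_k \in R$ and $m_{ki} < m_{kj}$ for some $r_k \in R$; $c_i \supset c_j$ if and only if $m_{ki} \ge m_{kj}$ for all $r_k \in R$ and $m_{ki} > m_{kj}$ for some $r_k \in R$;

- (2)

$c_i = c_j$ if and only if $m_{ki} = m_{kj}$ for all $r_k \in R$;

- (3)

$c_i \parallel c_j$ (the columns are incomparable) if and only if $m_{ki} < m_{kj}$ for some $r_k \in R$ and $m_{li} > m_{lj}$ for some $r_l \in R$.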

Let $P_i$ be the element set of a prime implicant in the disjunctive normal form associated with row $r_i$, and $P_j$ the element set of a prime implicant in the disjunctive normal form associated with row $r_j$; then $r_i \supset r_j$ means that $P_i$ is a superset of $P_j$. For $c_i \supset c_j$, attribute $c_i$ can distinguish more object pairs in the universe than attribute $c_j$. In a binary discernibility matrix, a row in which all elements are 0s indicates that no attribute can discern the related objects, and a column in which all elements are 0s indicates that the corresponding attribute cannot discern any objects in the universe.

In [17], Zhi and Miao first proposed an algorithm of binary discernibility matrix simplification, shown in Algorithm 1. To improve the efficiency of BDMR, Wang et al. [32] introduced an improved algorithm of binary discernibility matrix reduction, shown in Algorithm 2.

| Algorithm 1: An algorithm of binary discernibility matrix reduction, BDMR. |

| Input: Original binary discernibility matrix $BM$; |

| Output: Simplified binary discernibility matrix $BM'$ |

- 1: delete the rows in which all elements are 0s;
- 2: for $i = 1$ to $|R|$ do
- 3: for $j = 1$ to $|R|$, $j \neq i$ do
- 4: if $r_j \subseteq r_i$ then
- 5: delete row $r_i$;
- 6: break;
- 7: end if
- 8: end for
- 9: end for
- 10: delete the columns in which all elements are 0s;
- 11: for $i = 1$ to $|Col|$ do
- 12: for $j = 1$ to $|Col|$, $j \neq i$ do
- 13: if $c_i \subseteq c_j$ then
- 14: delete column $c_i$;
- 15: break;
- 16: end if
- 17: end for
- 18: end for
- 19: output a simplified binary discernibility matrix $BM'$;
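Algorithm 1 can be prototyped in a few lines. This is a sketch under an explicit assumption: a row is deleted when another remaining row is contained in it (absorption), and a column is deleted when its 1s are contained in another remaining column. The function names `is_contained`, `drop_redundant` and `bdmr` are hypothetical, and the matrix is a plain list of 0/1 rows.

```python
def is_contained(u, v):
    """True if every 1 of u is also a 1 of v (element-wise u <= v)."""
    return all(a <= b for a, b in zip(u, v))


def drop_redundant(vectors, redundant):
    """Naive pairwise pass: drop vectors[i] if some still-alive vectors[j] makes it redundant."""
    alive = [True] * len(vectors)
    for i in range(len(vectors)):
        for j in range(len(vectors)):
            if j != i and alive[j] and redundant(vectors[i], vectors[j]):
                alive[i] = False
                break
    return [v for v, keep in zip(vectors, alive) if keep]


def bdmr(matrix):
    """Sketch of BDMR on a 0/1 matrix given as a list of rows."""
    rows = [list(r) for r in matrix if any(r)]                        # delete all-zero rows
    rows = drop_redundant(rows, lambda ri, rj: is_contained(rj, ri))  # r_j inside r_i: drop r_i
    cols = [list(c) for c in zip(*rows) if any(c)]                    # delete all-zero columns
    cols = drop_redundant(cols, lambda ci, cj: is_contained(ci, cj))  # c_i inside c_j: drop c_i
    return [list(r) for r in zip(*cols)]                              # transpose back to rows


print(bdmr([[1, 0, 1], [0, 1, 1], [1, 0, 0]]))  # [[0, 1], [1, 0]]
```

Both passes compare every vector with every other vector, and each comparison scans a whole row or column.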

| Algorithm 2: An improved algorithm of binary discernibility matrix reduction, IBDMR. |

| Input: Original binary discernibility matrix $BM$; |

| Output: Simplified binary discernibility matrix $BM'$ |

- 1: delete the rows in which all elements are 0s;
- 2: sort rows in ascending order by the number of 1s in each row;
- 3: for $i = 1$ to $|R|$ do
- 4: for $j = 1$ to $i - 1$ do
- 5: if $r_j \subseteq r_i$ then
- 6: delete row $r_i$;
- 7: break;
- 8: end if
- 9: end for
- 10: end for
- 11: delete the columns in which all elements are 0s;
- 12: for $i = 1$ to $|Col|$ do
- 13: for $j = 1$ to $|Col|$, $j \neq i$ do
- 14: if $c_i \subseteq c_j$ then
- 15: delete column $c_i$;
- 16: break;
- 17: end if
- 18: end for
- 19: end for
- 20: output a simplified binary discernibility matrix $BM'$;
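The point of the sorting step in Algorithm 2 is that, after rows are ordered by their number of 1s, a row can only be absorbed by rows that come before it. The sketch below shows only the row phase, under the same absorption assumption as for BDMR; `ibdmr_rows` and `is_contained` are hypothetical names, and the column phase would be unchanged.

```python
def is_contained(u, v):
    """True if every 1 of u is also a 1 of v (element-wise u <= v)."""
    return all(a <= b for a, b in zip(u, v))


def ibdmr_rows(matrix):
    """Row phase of IBDMR (sketch): drop all-zero rows, sort by number of 1s, absorb."""
    rows = sorted((list(r) for r in matrix if any(r)), key=sum)  # ascending number of 1s
    kept = []
    for r in rows:
        # A row contained in r has no more 1s than r, so after sorting it was seen earlier;
        # checking kept rows suffices because every dropped row contains some kept row.
        if not any(is_contained(k, r) for k in kept):
            kept.append(r)
    return kept


print(ibdmr_rows([[1, 1, 1], [0, 0, 1], [0, 0, 0], [1, 0, 1], [0, 1, 0]]))
# [[0, 0, 1], [0, 1, 0]]
```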

4. A Quick Algorithm for Binary Discernibility Matrix Simplification

In this section, we investigate two theorems related to row relations and column relations, respectively. Based on these theorems, we introduce deterministic finite automata for row and column relations, which can be used to obtain the row relations and column relations quickly. Using these automata, we propose an algorithm of binary discernibility matrix simplification using deterministic finite automata (BDMSDFA).

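Theorem 1. Let $BM$ be a binary discernibility matrix. For $r_i, r_j \in R$ and $c_k \in Col$, we have:

- (1)

if $r_i \subset r_j$, then there exists $\langle m_{ik}, m_{jk} \rangle = \langle 0,1 \rangle$ in $\rho(r_i, r_j)$;

- (2)

if $r_i \supset r_j$, then there exists $\langle m_{ik}, m_{jk} \rangle = \langle 1,0 \rangle$ in $\rho(r_i, r_j)$;

- (3)

if $r_i = r_j$, then each pair $\langle m_{ik}, m_{jk} \rangle$ in $\rho(r_i, r_j)$ is $\langle 0,0 \rangle$ or $\langle 1,1 \rangle$;

- (4)

if $r_i \parallel r_j$, then there exist both $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ in $\rho(r_i, r_j)$.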

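Proof. - (1)

If there does not exist $\langle 0,1 \rangle$ in $\rho(r_i, r_j)$, then the set of symbols occurring in $\rho(r_i, r_j)$ is one of the seven non-empty subsets of $\{\langle 0,0 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$. In each case $m_{ik} \ge m_{jk}$ for all $c_k \in Col$, so one cannot get $r_i \subset r_j$. Thus, there exists $\langle 0,1 \rangle$ in $\rho(r_i, r_j)$.

- (2)

If there does not exist $\langle 1,0 \rangle$ in $\rho(r_i, r_j)$, then the set of symbols occurring in $\rho(r_i, r_j)$ is one of the seven non-empty subsets of $\{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,1 \rangle\}$. In each case $m_{ik} \le m_{jk}$ for all $c_k \in Col$, so one cannot get $r_i \supset r_j$. Thus, there exists $\langle 1,0 \rangle$ in $\rho(r_i, r_j)$.

- (3)

If some pair in $\rho(r_i, r_j)$ is $\langle 0,1 \rangle$ or $\langle 1,0 \rangle$, then $m_{ik} \neq m_{jk}$ for the corresponding column $c_k$, and we cannot have $r_i = r_j$. Thus, each pair in $\rho(r_i, r_j)$ is $\langle 0,0 \rangle$ or $\langle 1,1 \rangle$.

- (4)

If $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ do not both occur in $\rho(r_i, r_j)$, then the set of symbols occurring in $\rho(r_i, r_j)$ is one of the eleven non-empty subsets of $\{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$ that do not contain both of them. In each case, either $m_{ik} \le m_{jk}$ for all $c_k \in Col$ or $m_{ik} \ge m_{jk}$ for all $c_k \in Col$, so we cannot have $r_i \parallel r_j$. Thus, both $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ exist in $\rho(r_i, r_j)$.

This completes the proof. ☐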
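Theorem 1 can also be sanity-checked exhaustively for short rows. The script below is a check, not a proof: `row_relation` and `check_theorem1` are hypothetical helpers that enumerate every pair of 0/1 rows of length 1 to 4, classify them with the row relations of Definition 7 as read here, and assert the corresponding condition on the pairs $\langle m_{ik}, m_{jk} \rangle$.

```python
from itertools import product


def row_relation(ri, rj):
    """Row relation per Definition 7: 'subset', 'superset', 'equal' or 'incomparable'."""
    le = all(a <= b for a, b in zip(ri, rj))
    ge = all(a >= b for a, b in zip(ri, rj))
    if le and ge:
        return "equal"
    return "subset" if le else ("superset" if ge else "incomparable")


def check_theorem1(max_len):
    """Check the pair conditions of Theorem 1 for all rows of length 1..max_len."""
    checked = 0
    for n in range(1, max_len + 1):
        for ri in product((0, 1), repeat=n):
            for rj in product((0, 1), repeat=n):
                symbols = set(zip(ri, rj))  # pairs <m_ik, m_jk> occurring in rho(r_i, r_j)
                rel = row_relation(ri, rj)
                if rel == "subset":
                    assert (0, 1) in symbols
                elif rel == "superset":
                    assert (1, 0) in symbols
                elif rel == "equal":
                    assert symbols <= {(0, 0), (1, 1)}
                else:
                    assert (0, 1) in symbols and (1, 0) in symbols
                checked += 1
    return checked


print(check_theorem1(4))  # 340 row pairs checked (4 + 16 + 64 + 256)
```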

Analogous to Theorem 1, we can easily obtain the following theorem:

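Theorem 2. Let $BM$ be a binary discernibility matrix. For $c_i, c_j \in Col$ and $r_k \in R$, we have:

- (1)

if $c_i \subset c_j$, then there exists $\langle m_{ki}, m_{kj} \rangle = \langle 0,1 \rangle$ in $\sigma(c_i, c_j)$;

- (2)

if $c_i \supset c_j$, then there exists $\langle m_{ki}, m_{kj} \rangle = \langle 1,0 \rangle$ in $\sigma(c_i, c_j)$;

- (3)

if $c_i = c_j$, then each pair $\langle m_{ki}, m_{kj} \rangle$ in $\sigma(c_i, c_j)$ is $\langle 0,0 \rangle$ or $\langle 1,1 \rangle$;

- (4)

if $c_i \parallel c_j$, then there exist both $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ in $\sigma(c_i, c_j)$.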

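Proof. - (1)

If there does not exist $\langle 0,1 \rangle$ in $\sigma(c_i, c_j)$, then the set of symbols occurring in $\sigma(c_i, c_j)$ is one of the seven non-empty subsets of $\{\langle 0,0 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$. In each case $m_{ki} \ge m_{kj}$ for all $r_k \in R$, so one cannot get $c_i \subset c_j$. Thus, there exists $\langle 0,1 \rangle$ in $\sigma(c_i, c_j)$.

- (2)

If there does not exist $\langle 1,0 \rangle$ in $\sigma(c_i, c_j)$, then the set of symbols occurring in $\sigma(c_i, c_j)$ is one of the seven non-empty subsets of $\{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,1 \rangle\}$. In each case $m_{ki} \le m_{kj}$ for all $r_k \in R$, so one cannot get $c_i \supset c_j$. Thus, there exists $\langle 1,0 \rangle$ in $\sigma(c_i, c_j)$.

- (3)

If some pair in $\sigma(c_i, c_j)$ is $\langle 0,1 \rangle$ or $\langle 1,0 \rangle$, then $m_{ki} \neq m_{kj}$ for the corresponding row $r_k$, and we cannot have $c_i = c_j$. Thus, each pair in $\sigma(c_i, c_j)$ must be $\langle 0,0 \rangle$ or $\langle 1,1 \rangle$.

- (4)

If $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ do not both occur in $\sigma(c_i, c_j)$, then the set of symbols occurring in $\sigma(c_i, c_j)$ is one of the eleven non-empty subsets of $\{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$ that do not contain both of them. In each case, either $m_{ki} \le m_{kj}$ for all $r_k \in R$ or $m_{ki} \ge m_{kj}$ for all $r_k \in R$, so we cannot have $c_i \parallel c_j$. Thus, both $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$ exist in $\sigma(c_i, c_j)$.

This completes the proof. ☐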

A deterministic finite automaton, also called a deterministic finite acceptor, is an important concept in the theory of computation. A deterministic finite automaton is a finite-state machine that accepts or rejects strings of symbols and produces a unique computation (run) of the automaton for each input string. In what follows, we adopt deterministic finite automata to obtain row relations and column relations in a binary discernibility matrix. We first review the definition of a deterministic finite automaton.

Definition 9. A deterministic finite automaton is a 5-tuple $(Q, \Sigma, \delta, q_0, F)$, where $Q$ is a finite non-empty set of states, $\Sigma$ is a finite set of input symbols, $\delta: Q \times \Sigma \to Q$ is a transition function, $q_0 \in Q$ is a start state, and $F \subseteq Q$ is a set of accept states.
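For reference, Definition 9 maps onto a small data structure. The following generic Python sketch (the class name `DFA` and the example automaton are ours, not the paper's) stores the 5-tuple and runs the automaton over an input string; the transition dictionary is assumed to be total on $Q \times \Sigma$.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Hashable, Iterable, Tuple


@dataclass
class DFA:
    """A deterministic finite automaton (Q, Sigma, delta, q0, F)."""
    states: FrozenSet[Hashable]
    alphabet: FrozenSet[Hashable]
    delta: Dict[Tuple[Hashable, Hashable], Hashable]  # total function Q x Sigma -> Q
    start: Hashable
    accept: FrozenSet[Hashable]

    def run(self, word: Iterable[Hashable]) -> Hashable:
        """Return the state reached after reading the whole word."""
        state = self.start
        for symbol in word:
            if symbol not in self.alphabet:
                raise ValueError(f"symbol {symbol!r} not in alphabet")
            state = self.delta[(state, symbol)]
        return state

    def accepts(self, word: Iterable[Hashable]) -> bool:
        """True iff the run on word ends in an accept state."""
        return self.run(word) in self.accept


# Example: binary strings with an even number of 1s.
even_ones = DFA(
    states=frozenset({"even", "odd"}),
    alphabet=frozenset({"0", "1"}),
    delta={("even", "0"): "even", ("even", "1"): "odd",
           ("odd", "0"): "odd", ("odd", "1"): "even"},
    start="even",
    accept=frozenset({"even"}),
)
print(even_ones.accepts("1010"), even_ones.accepts("111"))  # True False
```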

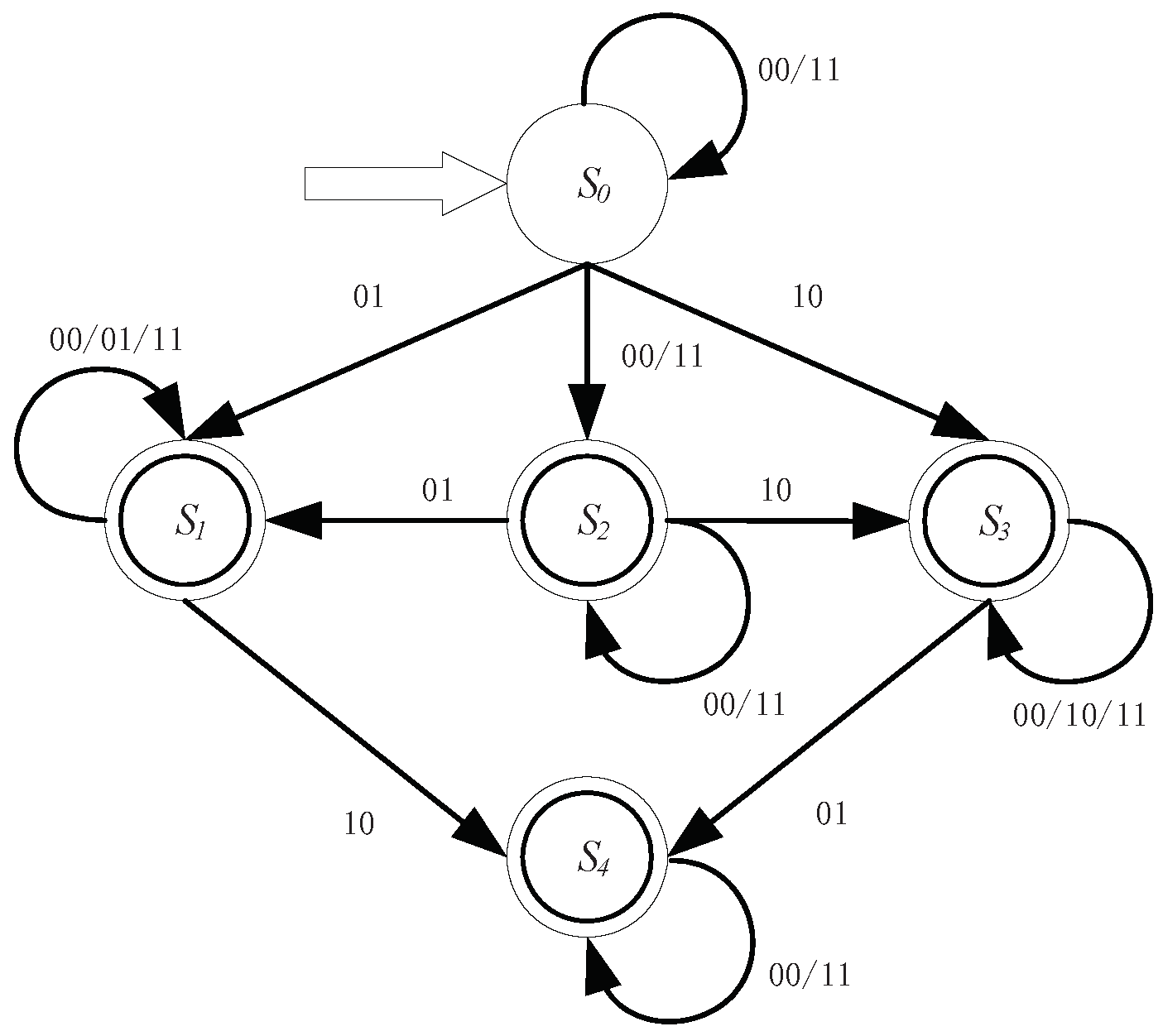

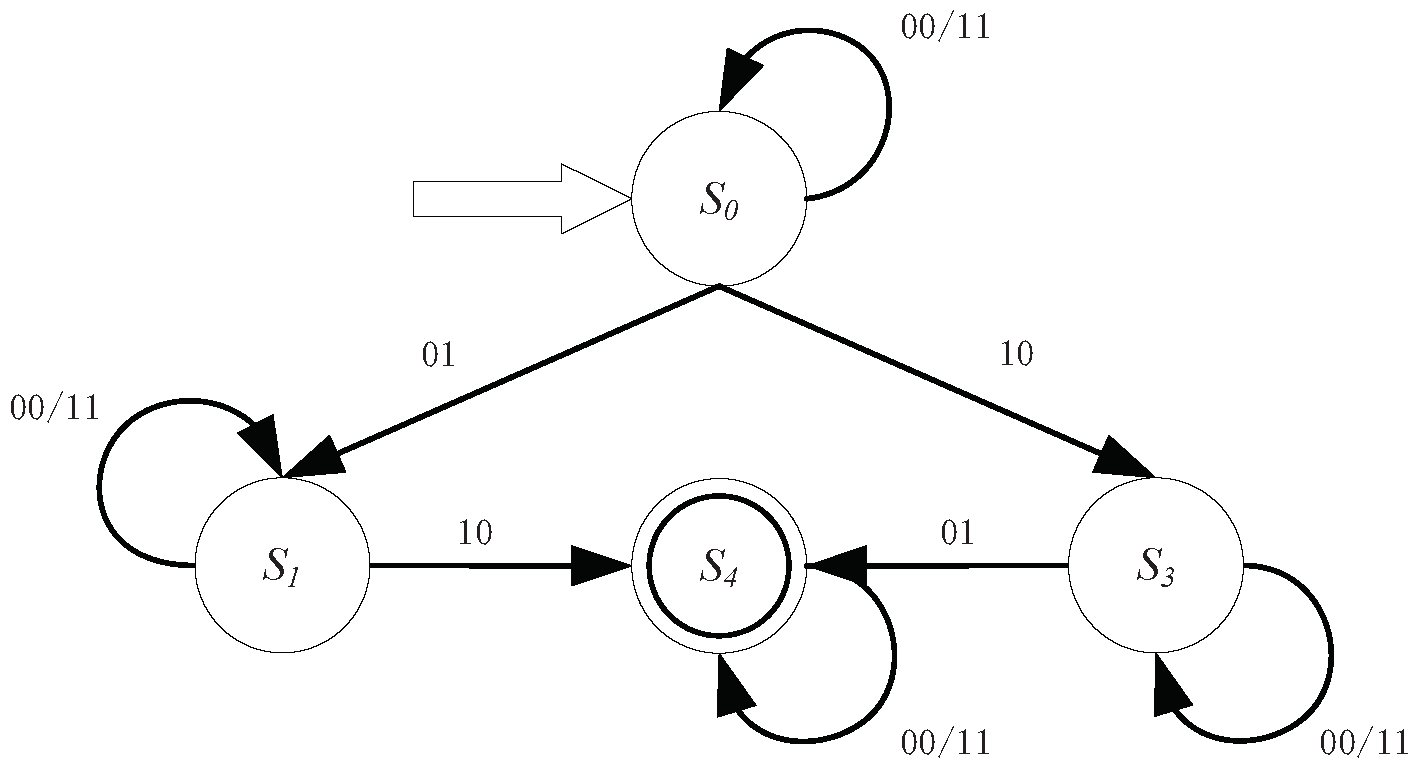

Regarding the element pair $\langle m_{ik}, m_{jk} \rangle$ as the basic granule of input symbols, a deterministic finite automaton for row relations in a binary discernibility matrix is given by the following theorem:

Theorem 3. A deterministic finite automaton for row relations, denoted by $DFA_R$, is a 5-tuple $DFA_R = (Q_R, \Sigma, \delta, q_0, F_R)$, where $Q_R$ is a finite set of states, the input is a binary character string $\rho(r_i, r_j)$ over $\Sigma = \{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$, $\delta$ is a transition function, $q_0$ is a start state, and $F_R$ is a set of accept states. The deterministic finite automaton for row relations is illustrated in Figure 1.

Proof. In a binary discernibility matrix, the relation between $r_i$ and $r_j$ is one of $r_i \subset r_j$, $r_i = r_j$, $r_i \supset r_j$ and $r_i \parallel r_j$. We construct the deterministic finite automaton for row relations from four parts, as follows.

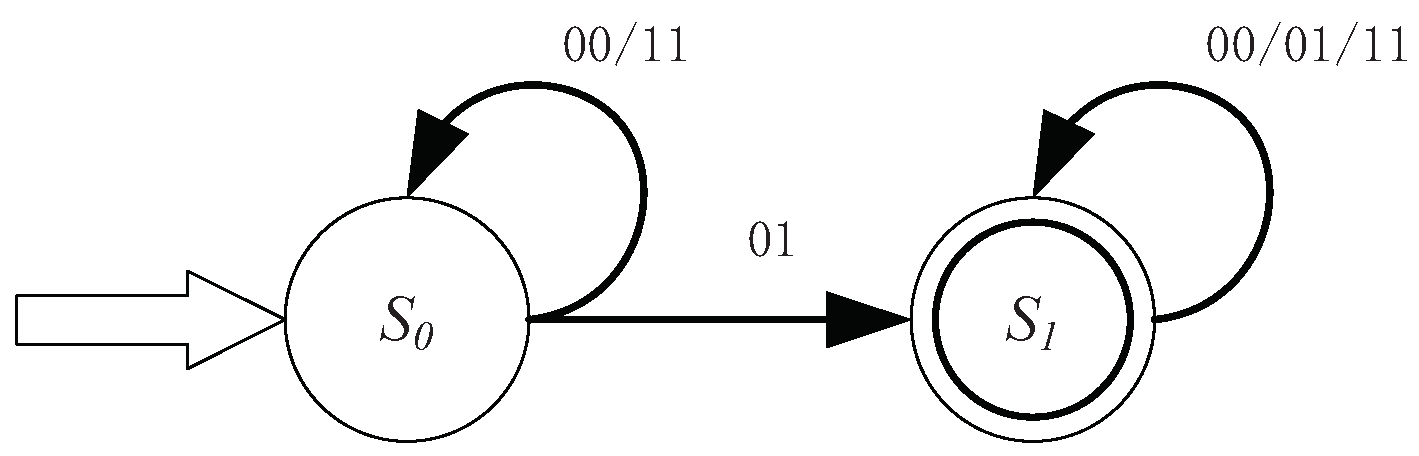

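- (1)

According to Definition 5 and Theorem 1, for $r_i \subset r_j$, there must be at least one pair $\langle 0,1 \rangle$ and no pair $\langle 1,0 \rangle$ in $\rho(r_i, r_j)$. Thus, the regular expression for $r_i \subset r_j$ can be defined as $(\langle 0,0 \rangle + \langle 0,1 \rangle + \langle 1,1 \rangle)^* \, \langle 0,1 \rangle \, (\langle 0,0 \rangle + \langle 0,1 \rangle + \langle 1,1 \rangle)^*$. The corresponding deterministic finite automaton is shown in Figure 2: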

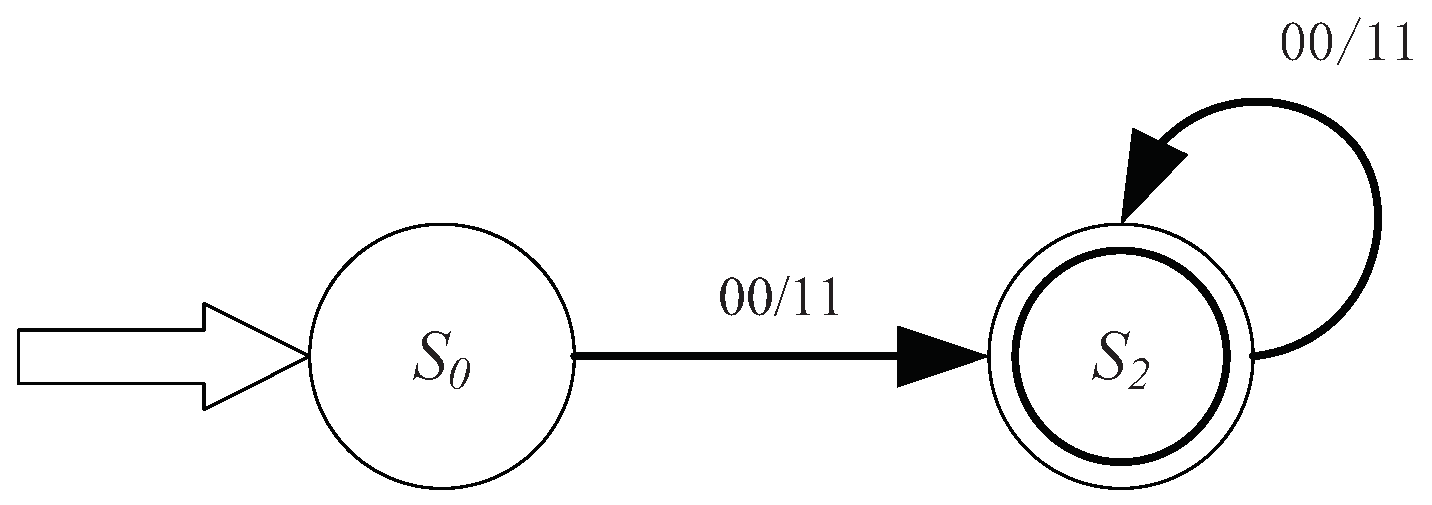

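- (2)

For $r_i = r_j$, each pair in $\rho(r_i, r_j)$ must be $\langle 0,0 \rangle$ or $\langle 1,1 \rangle$. The regular expression for $r_i = r_j$ is $(\langle 0,0 \rangle + \langle 1,1 \rangle)^*$, so the corresponding deterministic finite automaton can be illustrated as in Figure 3: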

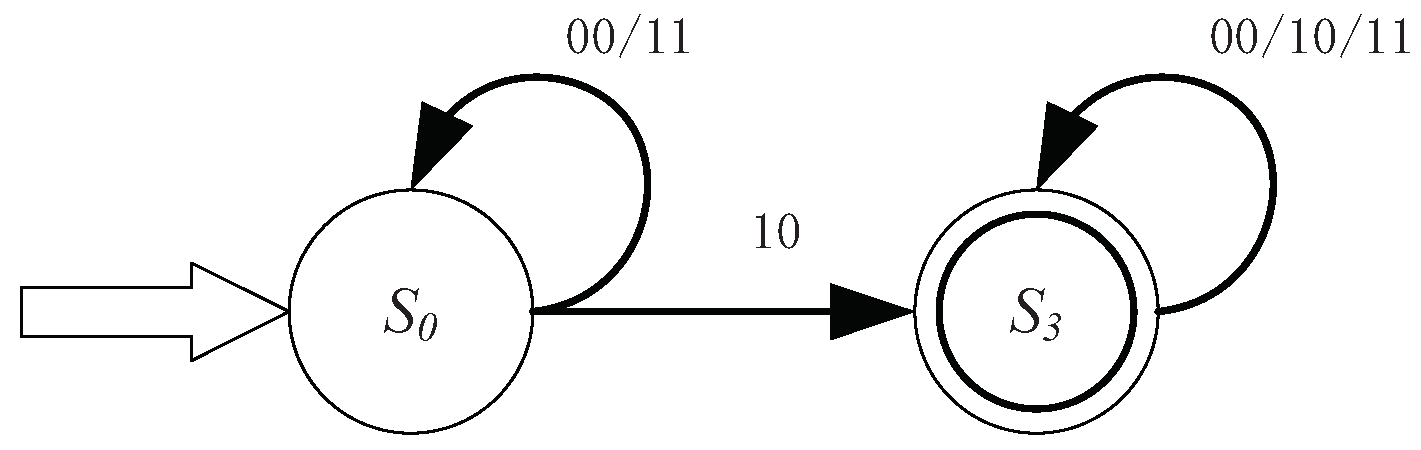

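- (3)

Analogous to $r_i \subset r_j$, for $r_i \supset r_j$ there must be at least one pair $\langle 1,0 \rangle$ and no pair $\langle 0,1 \rangle$. Therefore, the regular expression for $r_i \supset r_j$ is $(\langle 0,0 \rangle + \langle 1,0 \rangle + \langle 1,1 \rangle)^* \, \langle 1,0 \rangle \, (\langle 0,0 \rangle + \langle 1,0 \rangle + \langle 1,1 \rangle)^*$, and the corresponding deterministic finite automaton is shown in Figure 4.

- (4)

For $r_i \parallel r_j$, there must be both $\langle 0,1 \rangle$ and $\langle 1,0 \rangle$. Writing $\Sigma^*$ for an arbitrary string over $\Sigma$, the regular expression for $r_i \parallel r_j$ is $\Sigma^* \langle 0,1 \rangle \Sigma^* \langle 1,0 \rangle \Sigma^* + \Sigma^* \langle 1,0 \rangle \Sigma^* \langle 0,1 \rangle \Sigma^*$, and the corresponding deterministic finite automaton is illustrated in Figure 5.

By combining the four deterministic finite automata above, one obtains the deterministic finite automaton for row relations shown in Figure 1.

This completes the proof. ☐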
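One way to realize the combined automaton for row relations uses four states, each naming the relation certified by the prefix read so far. This is a sketch under stated assumptions: the state names `equal`, `subset`, `superset` and `incomparable` and the dictionary encoding of $\delta$ are ours, and Figure 1 itself is not reproduced here. The final state of the run identifies which of the four relations holds.

```python
# Hypothetical state names: each records the relation certified by the prefix read so far.
EQ, SUB, SUP, INC = "equal", "subset", "superset", "incomparable"
SIGMA = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Transition table delta: (state, <m_ik, m_jk>) -> state.
DELTA = {}
for q in (EQ, SUB, SUP, INC):
    for s in ((0, 0), (1, 1)):
        DELTA[(q, s)] = q                      # equal pairs never change the relation
DELTA[(EQ, (0, 1))], DELTA[(EQ, (1, 0))] = SUB, SUP
DELTA[(SUB, (0, 1))], DELTA[(SUB, (1, 0))] = SUB, INC
DELTA[(SUP, (1, 0))], DELTA[(SUP, (0, 1))] = SUP, INC
DELTA[(INC, (0, 1))], DELTA[(INC, (1, 0))] = INC, INC  # absorbing state
assert all((q, s) in DELTA for q in (EQ, SUB, SUP, INC) for s in SIGMA)  # delta is total


def row_relation_dfa(ri, rj):
    """Run the automaton on rho(r_i, r_j) = <m_i1, m_j1> ... <m_im, m_jm>."""
    state = EQ                                 # start state q0
    for pair in zip(ri, rj):
        state = DELTA[(state, pair)]
        if state == INC:                       # no later symbol can leave INC
            break
    return state


print(row_relation_dfa([0, 1, 0], [1, 1, 0]))  # subset
print(row_relation_dfa([1, 0, 1], [0, 1, 1]))  # incomparable
```

Because the `incomparable` state is absorbing, the scan can stop as soon as it is reached; in the worst case, one pass over the $|C|$ pairs suffices, instead of the two element-wise scans of a direct subset test.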

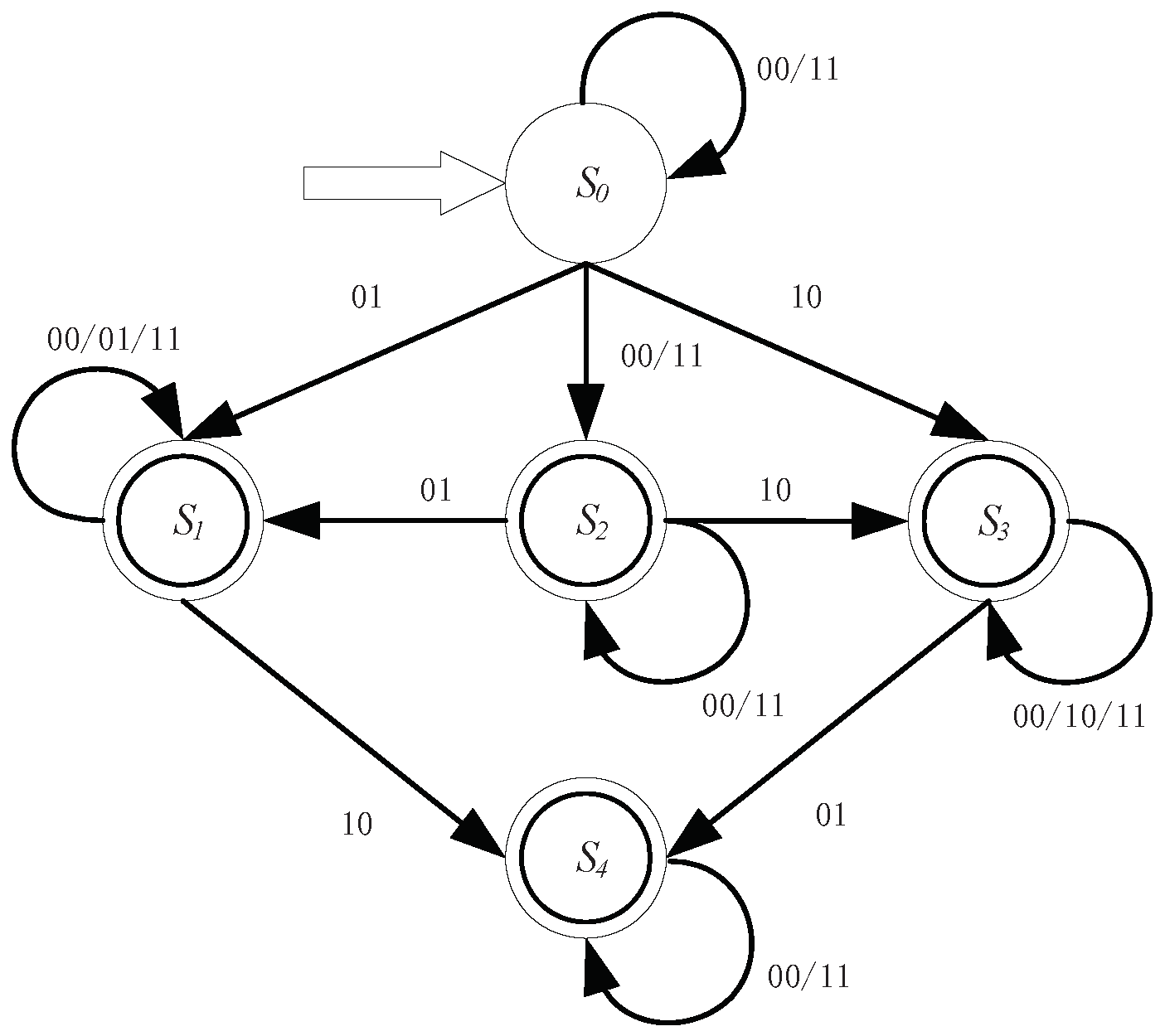

Similar to the deterministic finite automaton for row relations, we present the deterministic finite automaton for column relations in a binary discernibility matrix as follows.

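Theorem 4. A deterministic finite automaton for column relations, denoted by $DFA_C$, is a 5-tuple $DFA_C = (Q_C, \Sigma, \delta, q_0, F_C)$, where $Q_C$ is a finite set of states, the input is a binary character string $\sigma(c_i, c_j)$ over $\Sigma = \{\langle 0,0 \rangle, \langle 0,1 \rangle, \langle 1,0 \rangle, \langle 1,1 \rangle\}$, $\delta$ is a transition function, $q_0$ is a start state, and $F_C$ is a set of accept states. The deterministic finite automaton for column relations is illustrated in Figure 6.

Proof. The proof is similar to that of Theorem 3. ☐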
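Since $\sigma(c_i, c_j)$ is read down a pair of columns, the column automaton can reuse the same transition rules as the row automaton; only the way the input string is formed changes. In this hypothetical sketch, the transition function is written as a Python function `step` rather than a table, and `column_relation` and the matrix `BM` are illustrative names.

```python
EQ, SUB, SUP, INC = "equal", "subset", "superset", "incomparable"


def step(state, pair):
    """Transition function delta written as code instead of a table."""
    a, b = pair
    if a == b or state == INC:         # <0,0>, <1,1> keep the state; INC is absorbing
        return state
    new = SUB if (a, b) == (0, 1) else SUP
    return new if state in (EQ, new) else INC


def column_relation(matrix, i, j):
    """Run the automaton on sigma(c_i, c_j) = <m_1i, m_1j> ... <m_ni, m_nj>."""
    state = EQ
    for row in matrix:
        state = step(state, (row[i], row[j]))
        if state == INC:
            break
    return state


BM = [[1, 0, 1],
      [0, 0, 1],
      [1, 0, 1]]
print(column_relation(BM, 0, 2))  # subset: the 1s of column 0 lie inside column 2
```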

Using the proposed deterministic finite automata for row and column relations, we propose a quick algorithm for binary discernibility matrix simplification using deterministic finite automata (BDMSDFA), given in Algorithm 3.

The following example explains Algorithm 3.

| Algorithm 3: A quick algorithm for binary discernibility matrix simplification using deterministic finite automata, BDMSDFA. |

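| Input: Original binary discernibility matrix $BM$; |

| Output: Simplified binary discernibility matrix $BM'$ |

- 1: delete the rows in which all elements are 0s;
- 2: compare the row relation between $r_i$ and $r_j$ by the deterministic finite automaton for row relations;
- 3: for $i = 1$ to $|R|$ do
- 4: for $j = 1$ to $|R|$, $j \neq i$ do
- 5: if $r_i \supset r_j$ or $r_i = r_j$ then
- 6: delete row $r_i$ from $BM$;
- 7: break;
- 8: end if
- 9: end for
- 10: end for
- 11: delete the columns in which all elements are 0s;
- 12: compare the column relation between $c_i$ and $c_j$ by the deterministic finite automaton for column relations;
- 13: for $i = 1$ to $|Col|$ do
- 14: for $j = 1$ to $|Col|$, $j \neq i$ do
- 15: if $c_i \subset c_j$ or $c_i = c_j$ then
- 16: delete column $c_i$ from $BM$;
- 17: break;
- 18: end if
- 19: end for
- 20: end for
- 21: output a simplified binary discernibility matrix $BM'$;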
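Putting the pieces together, Algorithm 3 can be sketched as below. Assumptions: the deletion rules follow the absorption reading (delete $r_i$ when $r_i \supset r_j$ or $r_i = r_j$ for another remaining row $r_j$; delete $c_i$ when $c_i \subset c_j$ or $c_i = c_j$), the names `step`, `relation`, `drop` and `bdmsdfa` are hypothetical, and this is not the authors' C++ implementation.

```python
EQ, SUB, SUP, INC = "equal", "subset", "superset", "incomparable"


def step(state, pair):
    """delta of the relation automaton: <0,0>, <1,1> keep the state; INC is absorbing."""
    a, b = pair
    if a == b or state == INC:
        return state
    new = SUB if (a, b) == (0, 1) else SUP
    return new if state in (EQ, new) else INC


def relation(u, v):
    """Single left-to-right scan of the pair string; stops early in INC."""
    state = EQ
    for pair in zip(u, v):
        state = step(state, pair)
        if state == INC:
            break
    return state


def drop(vectors, removable):
    """Drop vectors[i] if its relation to some still-alive vectors[j] is in removable."""
    alive = [True] * len(vectors)
    for i in range(len(vectors)):
        for j in range(len(vectors)):
            if j != i and alive[j] and relation(vectors[i], vectors[j]) in removable:
                alive[i] = False
                break
    return [v for v, keep in zip(vectors, alive) if keep]


def bdmsdfa(matrix):
    rows = [list(r) for r in matrix if any(r)]      # step 1: all-zero rows
    rows = drop(rows, {SUP, EQ})                    # r_i superset of or equal to r_j: delete r_i
    cols = [list(c) for c in zip(*rows) if any(c)]  # step 11: all-zero columns
    cols = drop(cols, {SUB, EQ})                    # c_i subset of or equal to c_j: delete c_i
    return [list(r) for r in zip(*cols)]            # transpose back to rows


print(bdmsdfa([[0, 0, 1], [1, 1, 1], [0, 0, 0], [0, 0, 0], [0, 0, 1], [1, 1, 1]]))  # [[1]]
```

The sketch keeps the pairwise loops of Algorithm 3; the automaton replaces each element-wise subset test with a single left-to-right scan that stops early once the rows or columns are known to be incomparable.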

Example 1. Let $S = (U, A = C \cup D, V, f)$ be the decision system shown in Table 1, with universe $U$, condition attribute set $C$ and decision attribute set $D$. For this decision system, we first construct the corresponding binary discernibility matrix and delete the rows in which all elements are 0s. According to the definition of the deterministic finite automaton for row relations, every pair of remaining rows $r_i$ and $r_j$ yields an input string $\rho(r_i, r_j)$, and running the deterministic finite automaton for row relations shown in Figure 1 on these strings gives the row relations. Based on these relations, three redundant rows are deleted; similarly, further row relations are obtained and two more rows are deleted. Next, we delete the columns in which all elements are 0s. According to the definition of the deterministic finite automaton for column relations, every pair of remaining columns $c_i$ and $c_j$ yields an input string $\sigma(c_i, c_j)$, and running the deterministic finite automaton for column relations shown in Figure 6 gives the column relations. None of these relations allows a column to be deleted, so the column set is unchanged, and the original matrix is compressed to a matrix with fewer rows. A simplified binary discernibility matrix with fewer rows or columns helps to improve the efficiency of attribute reduction.

Regarding time complexity, we compare the upper and lower bounds of the time complexity of BDMR and of IBDMR with the time complexity of BDMSDFA, which employs deterministic finite automata. The time complexity of BDMSDFA is lower than that of BDMR and IBDMR. Therefore, the proposed algorithm BDMSDFA generally reduces the computational time of binary discernibility matrix simplification.

The advantages of the proposed method are as follows. (1) Deterministic finite automata are constructed in a binary discernibility matrix, which provides an understandable approach to comparing the relationships between different rows (columns) quickly. (2) Based on these deterministic finite automata, a highly efficient algorithm of binary discernibility matrix simplification is developed; theoretical analyses and experimental results indicate that it is effective and efficient. Note that the proposed method is designed for Pawlak decision systems and is not suitable for generalized decision systems, such as incomplete, interval-valued and fuzzy decision systems. Deterministic finite automata in generalized decision systems will be investigated in future work.

5. Experimental Results and Analyses

The objective of the experiments in this section is to demonstrate the efficiency of BDMSDFA. The experiments consist of two parts. In the first part, we use the 10 data sets in Table 2 to compare the running times of BDMR, IBDMR and BDMSDFA. In the second part, we measure the computational times of BDMR, IBDMR and BDMSDFA as the number of attributes (or objects) increases. The three algorithms were run on a personal computer with Windows 8.1 (64 bit), an Intel(R) Core(TM) i5-4200U 1.6 GHz CPU and 4 GB of memory, and were implemented in C++ with Microsoft Visual Studio 2017 (version 15.9). All data sets used in the experiments were downloaded from the UCI repository of machine learning data sets (http://archive.ics.uci.edu/ml/datasets.html).

Table 2 lists the computational times of BDMR, IBDMR and BDMSDFA on the 10 data sets. BDMSDFA is much faster than BDMR and IBDMR: the computational times of the three algorithms follow the order BDMR ≥ IBDMR > BDMSDFA, so BDMSDFA has the smallest computational time among the three algorithms. For the data set Auto in Table 2, the computational times of BDMR and IBDMR are 75 ms and 68 ms, while that of BDMSDFA is 36 ms. For the data set Credit_a, the computational times of BDMR and IBDMR are 113 ms and 105 ms, while that of BDMSDFA is 55 ms. For some data sets in Table 2, BDMSDFA needs less than half the computational time of BDMR or IBDMR. For example, for the data set Breast_w, the computational times of BDMR and IBDMR are 75 ms and 73 ms, while that of BDMSDFA is 29 ms; for the data set Promoters, the computational times of BDMR and IBDMR are 1517 ms and 936 ms, while that of BDMSDFA is only 398 ms. For data sets such as Lung-cancer, Credit_a, Breast_w and Anneal, the computational time of BDMR is close to that of IBDMR, and for the data set Labor_neg, the two are equal. For each data set in Table 2, the difference between BDMR and IBDMR is smaller than the difference between BDMR (or IBDMR) and BDMSDFA.

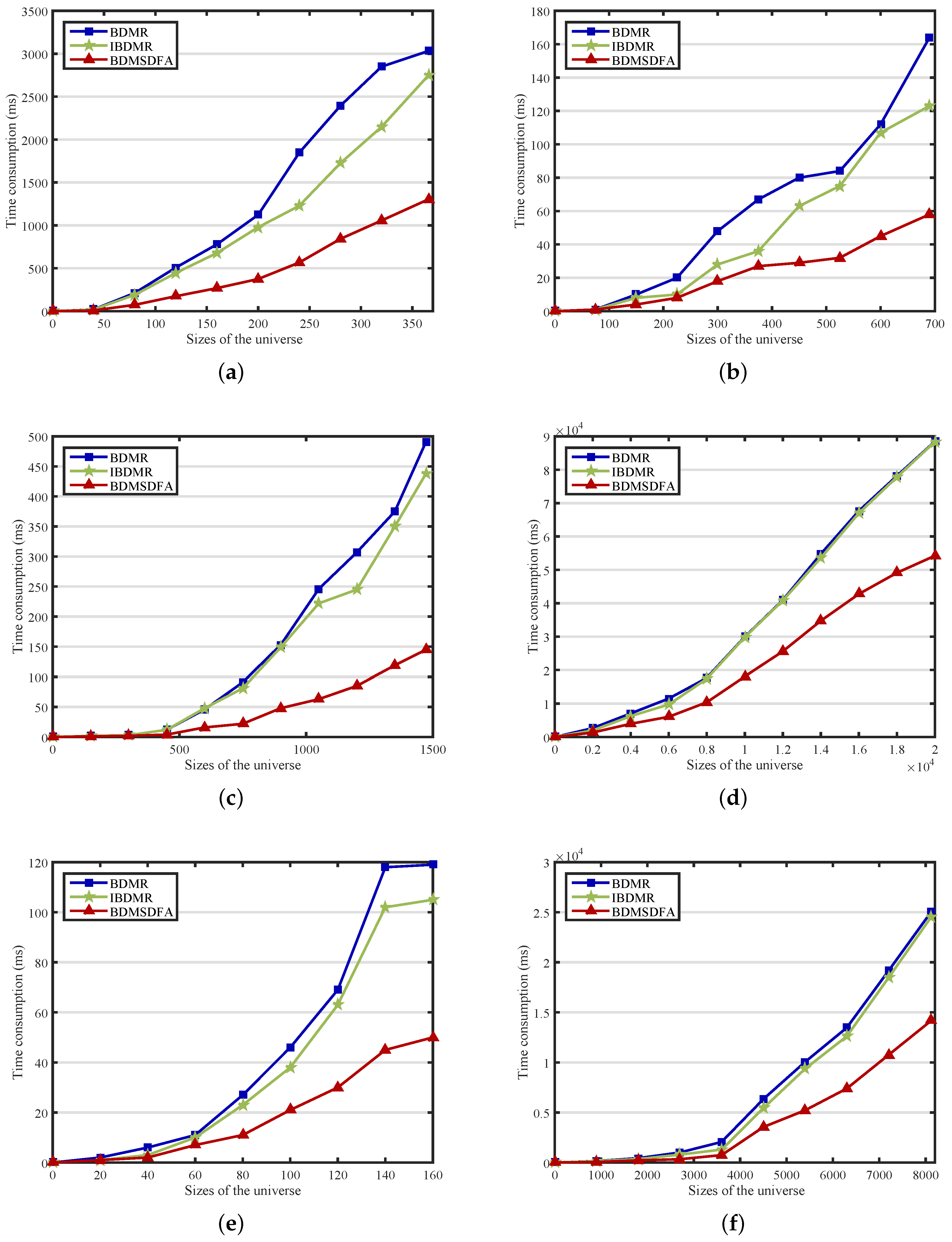

We compare the computational times of BDMR, IBDMR and BDMSDFA as the number of objects increases. In Figure 7a–f, the x-axis is the number of objects in the universe and the y-axis is the running time of the algorithms. We use six data sets (Dermatology, Credit_a, Contraceptive_Method_Choice, Letter, Flag and Mushroom) to compare the running times of BDMR, IBDMR and BDMSDFA. On the same UCI data sets, the computational time of BDMSDFA is less than that of BDMR and IBDMR; in other words, BDMSDFA is more efficient than BDMR and IBDMR.

Figure 7 shows the trend of each algorithm in more detail as the number of objects increases. The computational times of all three algorithms increase with the number of objects. The slope of the BDMSDFA curve is smaller than that of the BDMR or IBDMR curve, that is, the computational time of BDMSDFA grows more slowly, and the differences between BDMR (IBDMR) and BDMSDFA become distinctly larger as the number of objects increases. In Figure 7c, the difference between BDMR (IBDMR) and BDMSDFA is small at the beginning, but the computational time of BDMR (IBDMR) increases distinctly once the number of objects exceeds 450: the computational time of BDMR increases by 479 ms when the number of objects rises from 450 to 1473, whereas that of BDMSDFA increases by only 141 ms. In Figure 7e, the computational time of IBDMR increases by 104 ms when the number of objects rises from 20 to 160, whereas that of BDMSDFA increases by only 49 ms.

In Figure 8a–f, the x-axis is the number of attributes and the y-axis is the running time of the algorithms. We again use the six data sets (Dermatology, Credit_a, Contraceptive_Method_Choice, Letter, Flag and Mushroom) to compare the computational times of BDMR, IBDMR and BDMSDFA. The curve of BDMR is similar to that of IBDMR, and the curve of BDMSDFA lies below both, so the computational time of BDMSDFA is less than that of BDMR or IBDMR. In Figure 8b, the computational times of BDMR and IBDMR increase by 164 ms and 123 ms, respectively, while that of BDMSDFA increases by 58 ms. In Figure 8c, the curves of BDMR and IBDMR rise sharply as the number of attributes increases. In Figure 8e, the computational time of IBDMR increases from 4 ms to 105 ms when the number of attributes rises from 3 to 24, while that of BDMSDFA increases from 2 ms to 50 ms. From Figure 8a–f, we conclude that BDMSDFA is more efficient than BDMR and IBDMR as the number of attributes increases. The difference between BDMR and IBDMR is smaller than the difference between BDMR (IBDMR) and BDMSDFA. The computational times of all three algorithms increase monotonically with the number of attributes, and in every setting the computational time of BDMSDFA is the smallest among the three algorithms.

The experimental analyses and results demonstrate the high efficiency of BDMSDFA. The proposed simplification algorithm using deterministic finite automata can be applied as a preprocessing technique for data compression and attribute reduction in large-scale data sets.