Abstract

Big Data classification has recently received a great deal of attention due to the main properties of Big Data: volume, variety, and velocity. The furthest-pair-based binary search tree (FPBST) shows great potential for Big Data classification. This work attempts to improve the performance of the FPBST in terms of computation time, space consumed, and accuracy. The major enhancement is converting the resultant BST to a decision tree, which removes the need for the slow K-nearest neighbors (KNN) classifier and yields a smaller tree; this reduces memory usage, speeds up both the training and testing phases, and can increase the classification accuracy. The proposed decision trees are based on calculating the probabilities of each class at each node using various methods; these probabilities are then used in the testing phase to classify an unseen example. The experimental results on several (small, intermediate, and big) machine learning datasets show the efficiency of the proposed methods in terms of space, speed, and accuracy compared to the FPBST, which suggests great potential for further enhancement of the proposed methods for use in practice.

1. Introduction

Big Data analytics has received a great deal of attention recently, particularly in terms of classification; this is due to the main properties of Big Data: volume, variety, and velocity [1,2]. Having a large number of examples and various types of data, Big Data classification attempts to exploit these properties to obtain better learning models with fast learning/classification [3,4,5].

The problem of Big Data classification is similar to the traditional classification problem, taking into consideration the main properties of such data, and can be defined as follows: given a training dataset of n examples in d dimensions (features), the learning algorithm needs to learn a model that can efficiently classify an unseen example E. In the case of Big Data, where n and/or d are very large, traditional classifiers become inefficient; for example, the K-nearest neighbors (KNN) classifier [6,7] took weeks to classify some Big Data sets [8].

Recently, we proposed three methods for Big Data classification [8,9,10]. All of these methods employ an approach based on creating a binary search tree (BST), in order to speed up the Big Data classification using a KNN classifier with a smaller number of examples, those which are found by the search process. The real distinction between these methods is in the way of creating the BST. The first uses the furthest-pair of points to classify the examples along the BST, the second uses two extreme points based on the minimum and maximum points found in a dataset, and the third uses the Euclidean norms of the examples. Each has its own weakness and strength. However, the common weakness is the use of the slow KNN classifier.

The main goal and contribution of this paper is to improve the performance of the first method, the furthest-pair-based BST (FPBST), by removing the need for the slow KNN classifier and converting the BST to a decision tree (DT). Any enhancement made to this method can be easily generalized to the other two methods.

The new enhancement might make the FPBST (and its sister methods) a practical alternative to the KNN classifier in cases where the KNN might otherwise be the only available choice, for example, in content-based image retrieval (CBIR) [11,12].

The FPBST sorts the numeric training examples into a binary search tree, to be used later by the KNN classifier, in an attempt to speed up Big Data classification, since searching a BST for an example is much faster than searching the whole training data. This method depends mainly on finding two local examples (points) to create the BST. These points are the furthest pair of points (diameter) of a set of points in d-dimensional Euclidean feature space [9], and they are found using a greedy algorithm proposed by [13]. The two points are then used to sort the other examples based on their similarity, measured by the Euclidean distance. The training phase of the FPBST ends by creating the BST, which is later searched for a test example E down to a leaf-node, where similar examples are found; the KNN classifier is then used to classify E.

Knowing that the KNN is slow, we opt to dispense with it in this paper by converting the resultant BST to a decision tree. To do so, we calculate the probabilities of each class at each node using four methods: (1) calculating them at the leaf node only; (2) calculating the accumulated probabilities along the depth of the tree; (3) calculating the weighted-accumulated probabilities using the tree’s level as a weight; and (4) calculating the weighted-accumulated probabilities using the tree’s level as an exponential weight. We therefore propose four methods based on these four calculations; all four stop clustering when a node holds examples of only one class. We also propose a fifth method, which uses the accumulated probabilities of the classes but continues clustering until there is only one example (or similar examples) in a leaf-node.

We further enhance these five methods by swapping the furthest pair of points based on the minimum class, so as to obtain a coherent decision tree in which examples of similar classes are stored closer to each other, unlike the FPBST, which uses the minimum/maximum norms for this purpose. Thus, we propose ten methods in this paper. These methods/enhancements of the FPBST also solve (by default) another problem associated with the FPBST's use of the KNN, namely finding the best k for the KNN [14,15]. In this work, there is no need to determine such a parameter, since the KNN is not used.

The importance of this research stems from the decreased size of the resultant tree, which is attained by trimming the tree wherever all the examples found in a node are of the same class. This speeds up the training process, reduces the space needed for the resultant tree, and increases the speed of testing, in addition to increasing the classification accuracy where possible.

The rest of this paper is organized as follows. Section 2 presents some related methods used for Big Data classification. Section 3 describes the proposed enhancements and the data set used for experiments. Section 4 evaluates and compares the proposed enhancements to FPBST. Section 5 draws some conclusions, shows the limitations of the proposed enhancements, and gives directions for future research.

2. Related Work

Recently, remarkable efforts have been made to find new methods for Big Data classification. In addition to the FPBST, reference [10] used two extreme points, the minimum and maximum points found in a dataset, to create a BST that sorts the examples of a training set. This BST is then searched for a test example down to a leaf-node, where similar examples can be found; the KNN classifier is then used to classify the test example. Similarly, reference [8] used the same methodology, except for the manner of creating the BST, which was based on the Euclidean norms of the training examples. Both methods were very fast; however, their accuracy results were in general slightly lower than those of the FPBST [9].

Two recent and interesting approaches proposed by Wang et al. [16] deal with the problem differently, using random and principal component analysis (PCA) techniques to divide the data in order to obtain multivariate decision tree classifiers. Both methods were evaluated on several Big Datasets; the reported accuracy results, considering all the datasets used, show that data partitioning using PCA performs better than random partitioning.

Maillo et al. [17] proposed a parallel implementation based on mapping the training set examples, followed by reducing the number of examples that are related to a test sample. The reported results were similar to those of an exact KNN but faster, i.e., up to about 149 times faster than the KNN when tested on 1 million examples; the speed of this parametric method depends mainly on the K neighbors as well as the number of maps used. This work was further improved by almost the same team [18], who proposed a new KNN based on Spark, which is similar to the mapping/reducing technique but uses multiple reducers to speed up the process; the size of the dataset used was up to 11 million examples.

Based on clustering the training set using the K-means clustering algorithm, Deng et al. [19] proposed two methods to increase the speed of the KNN; the first used random clustering and the second used landmark spectral clustering. After finding the related cluster, both apply the KNN to test the input example against a smaller set of examples. Both algorithms were evaluated on nine Big Datasets, showing reasonable approximations to the sequential KNN; the reported accuracy results depended on the number of clusters used.

Another clustering approach was recently used by Gallego et al. [20], who proposed two clustering methods to accelerate the KNN. The two are similar; however, the second is an enhancement of the first, in which a cluster augmentation process is employed. The reported average accuracy over all the Big Datasets used was in the range of 83 to 90%, depending on the K neighbors and the number of clusters used. The performance of both methods improved significantly when Deep Neural Networks were employed to learn a suitable representation for the classification task.

Most of the proposed work in this domain is based on a divide-and-conquer approach, which is a logical approach to use with Big Datasets. Therefore, most of these approaches are based on clustering, splitting, or partitioning the data to reduce its very large size to a manageable size that can be used for efficient classification. One major problem associated with such approaches is determining the best number of clusters/parts, since more clusters mean fewer examples per cluster and, therefore, faster testing. However, fewer examples also mean less accuracy, as the examples found in a specific cluster might not be related to the tested example. Conversely, fewer clusters mean a larger number of examples in each, which increases the accuracy but slows down the classification process if the KNN is used.

Similar to [8,9,10], there exists extensive literature on tree structures such as k-d trees [21], metric trees [22], cover trees [23], and other related work such as [24,25]. Despite the plethora of proposed methods in this domain, there is still room for improvement in terms of accuracy and time consumed in both the training and testing stages. This work is an attempt to improve the performance of one of these methods.

3. Furthest-Pair-Based Decision Trees (FPDT)

This section describes and illustrates the proposed methods, in addition to describing the data used for evaluation and experiments.

3.1. Methods

The main improvement of the FPBST [9] is the removal of the standard KNN algorithm as described by [6,7], which is time-consuming, particularly when classifying Big Data. In this paper, we propose using the probabilities of the classes found in the leaf-nodes to decide the class of a test example, without having to use the slow KNN, even if only a small number of examples are found in a leaf-node. We keep the same functionality of the binary search tree (BST), which is employed to sort the examples (points) of machine learning datasets in a way that facilitates the search process. This BST sorts all the examples taken from a training dataset based on their distances from two local points (P1 and P2), which are two examples from the training dataset itself and which vary with the host node and the level/location of that node in the BST.

The FPBST builds its BST by finding the furthest points P1 and P2 [13], assuming that the furthest points are the most dissimilar points and are therefore more likely to belong to different classes. Thus, sorting the other examples based on their distances to these points might be a good choice, as similar examples are sorted near each other, while dissimilar examples are sorted far away from each other in the created BST.
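
The search for P1 and P2 can be illustrated with a common hill-climbing heuristic: start from an arbitrary point, jump to the point furthest from it, and repeat until the distance stops growing. This is only a sketch of that general idea, not the exact greedy algorithm of [13]; the function names, the `max_iters` cap, and the random starting point are assumptions made for illustration.

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance (ED) between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def approx_furthest_pair(points, max_iters=5, seed=0):
    """Return (i, j, distance) for an approximate furthest pair.

    Each iteration scans all n points once (O(nd)), so a small constant
    number of iterations keeps the total cost linear in n.
    """
    rng = random.Random(seed)
    p = rng.randrange(len(points))  # hypothetical choice: random start
    q = max(range(len(points)), key=lambda i: euclidean(points[p], points[i]))
    best = euclidean(points[p], points[q])
    for _ in range(max_iters):
        # Jump to the point furthest from the current endpoint q.
        r = max(range(len(points)), key=lambda i: euclidean(points[q], points[i]))
        d = euclidean(points[q], points[r])
        if d <= best:  # no improvement: stop climbing
            break
        p, q, best = q, r, d
    return p, q, best


if __name__ == "__main__":
    pts = [(0, 0), (1, 0), (10, 0), (5, 5)]
    print(approx_furthest_pair(pts))
```

Because the heuristic is approximate and depends on the starting point, different runs may return different pairs with the same (or nearly the same) distance.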

Similar to the FPBST, the training phase of the proposed method (FPDT) creates a binary decision tree (DT), which speeds up the search for a test example compared to the unacceptable time of an exhaustive search, particularly when classifying Big Datasets. We use the same Euclidean distance metric (ED) for measuring distance, so that the results of the proposed method can be compared to those of the FPBST.

While creating the DT, we calculate the probability of each class occurring in each node; here we consider several options:

- Accumulate the classes’ probabilities by adding the parent’s probabilities to its children’s; we call this method decision tree 0 (DT0).

- Accumulate the probabilities by adding the parent’s probabilities to its children’s, and weight these probabilities by the level of the node, assuming that the deeper we go in the tree, the more likely we are to reach similar example(s). This is done by multiplying the classes’ probabilities at a particular node by the tree level; we call this method decision tree 1 (DT1), and it is shown in Algorithm 1 as (Dtype = 1).

- Accumulate the classes’ probabilities by adding the parent’s probabilities to its children’s, and weight these probabilities exponentially, for the same reason as in DT1 but with a higher weight. This is done by multiplying the classes’ probabilities by 2 to the power of the tree’s level at a particular node; we call this method decision tree 2 (DT2), and it is shown in Algorithm 1 as (Dtype = 2).

- No accumulation of the probabilities; we use just the probabilities found in a leaf node. We call this method decision tree 3 (DT3); in Algorithm 1 it corresponds to Dtype = 3, for which the accumulation step guarded by (Dtype ≠ 3) is skipped.

- Similar to DT0 (normal accumulation), but the algorithm continues to cluster until there is only one example, or a number of similar examples, in a leaf-node; this is done even if all the examples of the current node belong to the same class. By contrast, DT0–DT3 stop the recursive clustering when all the examples of the current node belong to the same class and treat the current node as a leaf-node. We call this method decision tree 4 (DT4), and it is shown in Algorithm 1 as (Dtype = 4).
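
The options above differ only in how the per-node class values are computed, which the following sketch makes explicit. It mirrors steps 32–42 of Algorithm 1; the function name and argument names are hypothetical, and the parent's values are passed in explicitly. Note that after weighting and accumulation the stored values are scores rather than normalized probabilities.

```python
def node_class_scores(class_counts, level, parent_scores, dtype):
    """Class scores stored at a node for DT0-DT4.

    class_counts  : number of examples of each class hosted by the node
    level         : depth of the node in the tree
    parent_scores : scores already stored at the parent node
    dtype         : 0..4, as in Algorithm 1 (Dtype)
    """
    total = sum(class_counts)
    scores = [c / total for c in class_counts]  # plain probabilities
    if dtype == 1:  # DT1: weight by the tree level
        scores = [p * level for p in scores]
    elif dtype == 2:  # DT2: weight by 2 to the power of the tree level
        scores = [p * 2 ** level for p in scores]
    if dtype != 3:  # DT3 keeps the node's own probabilities only
        scores = [p + q for p, q in zip(scores, parent_scores)]
    return scores


if __name__ == "__main__":
    # A node at level 2 hosting 1 example of class 0 and 3 of class 1,
    # whose parent stores the scores [0.5, 0.5].
    for dtype in range(5):
        print(dtype, node_class_scores([1, 3], 2, [0.5, 0.5], dtype))
```

DT4 uses the same accumulation as DT0; it differs only in when the recursion stops, so it yields the same values for the same node.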

The idea behind accumulating the probabilities is to reduce the effect of unbalanced datasets. Some datasets contain more examples of a specific class than of the other classes, which increases the probability of the dominant class, since it is calculated in the root node and accumulated along the depth of the tree; moving deeper, fewer of the dominant examples remain.

Algorithm 1 shows the pseudo code for the training phase of the FPDT method, which works well for DT0, DT1, DT2, DT3, and DT4 depending on the input (Dtype), and Algorithm 2 shows the pseudo code for the testing phase of the FPDT method, which is the same for DT0, DT1, DT2, DT3, and DT4, as these methods differ in the way of creating the decision tree only, i.e., the training phase.

| Algorithm 1. Training Phase (DT building) of FPDT. |

| Input: Numerical training dataset DATA with n FVs and d features, and DT type (Dtype) |
| Output: A root pointer (RootN) to the resultant DT. |
| 1- Create a DT Node → RootN |
| 2- RootN.Examples ← FVs //all indexes of FVs from the training set |
| 3- (P1, P2) ← Procedure Furthest(DATA ← RootN.Examples, n) //hill climbing algorithm [1] |
| 4- if EN(P1) > EN(P2) swap(P1, P2) |
| 5- RootN.P1 ← P1 |
| 6- RootN.P2 ← P2 |
| 7- RootN.Left = Null |
| 8- RootN.Right = Null |
| 9- Procedure BuildDT(Node ← RootN) |
| 10-     for each FVi in Node, do |
| 11-         D1 ← ED(FVi, Node.P1) |
| 12-         D2 ← ED(FVi, Node.P2) |
| 13-         if (D1 < D2) |
| 14-             Add index of FVi to Node.Left.Examples |
| 15-         else |
| 16-             Add index of FVi to Node.Right.Examples |
| 17-     end for |
| 18-     if (Node.Left.Size == 0 or Node.Right.Size == 0) |
| 19-         return //this means a leaf node |
| 20-     (P1, P2) ← Furthest(Node.Left.Examples, size(Node.Left.Examples)) //work on the left child |
| 21-     if (EN(P1) > EN(P2)) then swap(P1, P2) |
| 22-     Node.Left.P1 ← P1 |
| 23-     Node.Left.P2 ← P2 |
| 24-     Node.Left.ClassP [numclasses] = {0} //initialize the classes’ probabilities to 0 |
| 25-     for each i in Node.Left.Examples do |
| 26-         Node.Left.ClassP [DATA.Class[i]]++ //histogram of classes at Left-Node |
| 27-     bool LeftMulticlasses = false //check for single class to prune the tree |
| 28-     if there is more than one class at Node.Left.ClassP |
| 29-         LeftMulticlasses = true |
| 30-     if (Dtype == 4) //no pruning if chosen |
| 31-         LeftMulticlasses = true //even if there is only one class in a node => cluster it further |
| 32-     for each i in numclasses do //calculate probabilities of classes at the left node |
| 33-         Node.Left.ClassP [i] = Node.Left.ClassP [i] / size(Node.Left.Examples) |
| 34-     if (Dtype == 1) //increase the probabilities by the increased level |
| 35-         for each i in numclasses do |
| 36-             Node.Left.ClassP [i] = Node.Left.ClassP [i] * Node.Left.level |
| 37-     if (Dtype == 2) //increase the probabilities exponentially by the increased level |
| 38-         for each i in numclasses do |
| 39-             Node.Left.ClassP [i] = Node.Left.ClassP [i] * 2^(Node.Left.level) |
| 40-     if (Dtype != 3) //do accumulation for probabilities; if Dtype == 3, use just the probabilities in a leaf node |
| 41-         for each i in numclasses do |
| 42-             Node.Left.ClassP [i] = Node.Left.ClassP [i] + Node.ClassP [i] |
| 43-     Node.Left.Left = NULL |
| 44-     Node.Left.Right = NULL |
| 45-     Repeat the previous steps (20–44) on Node.Right |
| 46-     if (LeftMulticlasses) |
| 47-         BuildDT(Node.Left) |
| 48-     if (RightMulticlasses) |
| 49-         BuildDT(Node.Right) |
| 50- end Procedure |
| 51- return RootN |
| 52- end Algorithm 1 |

| Algorithm 2. Testing Phase of FPDT. |

| Input: test dataset TESTDATA with n FVs and d features |
| Output: Testing Accuracy (Acc). |
| 1- Acc ← 0 |
| 2- for each FVi in TESTDATA do |
| 3-     Procedure GetTreeNode(Node ← RootN, FVi) |
| 4-         D1 ← ED(FVi, Node.P1) |
| 5-         D2 ← ED(FVi, Node.P2) |
| 6-         if (D1 < D2 and Node.Left) |
| 7-             return GetTreeNode(Node.Left, FVi) |
| 8-         else if (D2 ≤ D1 and Node.Right) |
| 9-             return GetTreeNode(Node.Right, FVi) |
| 10-         else |
| 11-             return Node |
| 12-         end if |
| 13-     end Procedure GetTreeNode |
| 14-     class ← argmax(Node.ClassP) // returns the class with the maximum probability |
| 15-     if class == Class(FVi) |
| 16-         Acc ← Acc + 1 |
| 17- end for each |
| 18- Acc ← Acc / n |
| 19- return Acc |
| 20- end Algorithm 2 |
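
To make the interplay of Algorithms 1 and 2 concrete, here is a compact runnable sketch on a toy dataset. It is not the authors' implementation: the furthest pair is found by an exact O(n²) search as a stand-in for the approximate greedy search of [13], the root is assumed to store the plain class probabilities, and all names (`Node`, `build_fpdt`, `classify`) are hypothetical.

```python
import math
from itertools import combinations


class Node:
    def __init__(self, idx, level, scores):
        self.idx, self.level, self.scores = idx, level, scores
        self.p1 = self.p2 = self.left = self.right = None


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def furthest(X, idx):
    # Exact O(n^2) stand-in for the approximate furthest-pair search.
    if len(idx) < 2:
        return idx[0], idx[0]
    return max(combinations(idx, 2), key=lambda pr: math.dist(X[pr[0]], X[pr[1]]))


def class_scores(idx, y, k, level, parent, dtype):
    counts = [0] * k
    for i in idx:
        counts[y[i]] += 1
    s = [c / len(idx) for c in counts]
    if dtype == 1:
        s = [p * level for p in s]
    elif dtype == 2:
        s = [p * 2 ** level for p in s]
    if dtype != 3:  # accumulate the parent's scores (DT0, DT1, DT2, DT4)
        s = [p + q for p, q in zip(s, parent)]
    return s


def _split(node, X, y, k, dtype):
    p1, p2 = furthest(X, node.idx)
    if norm(X[p1]) > norm(X[p2]):  # norm-based swap (step 21)
        p1, p2 = p2, p1
    node.p1, node.p2 = X[p1], X[p2]
    left = [i for i in node.idx if math.dist(X[i], node.p1) < math.dist(X[i], node.p2)]
    left_set = set(left)
    right = [i for i in node.idx if i not in left_set]
    if not left or not right:
        return  # leaf node
    for side, child_idx in (("left", left), ("right", right)):
        scores = class_scores(child_idx, y, k, node.level + 1, node.scores, dtype)
        child = Node(child_idx, node.level + 1, scores)
        setattr(node, side, child)
        multiclass = len({y[i] for i in child_idx}) > 1
        if multiclass or dtype == 4:  # DT4 keeps splitting pure nodes
            _split(child, X, y, k, dtype)


def build_fpdt(X, y, k, dtype=0):
    idx = list(range(len(X)))
    # Assumption: the root stores plain class probabilities.
    root = Node(idx, 0, class_scores(idx, y, k, 0, [0.0] * k, 3))
    _split(root, X, y, k, dtype)
    return root


def classify(root, x):
    node = root
    while node.left is not None:  # internal nodes always have both children
        go_left = math.dist(x, node.p1) < math.dist(x, node.p2)
        node = node.left if go_left else node.right
    return max(range(len(node.scores)), key=lambda c: node.scores[c])


if __name__ == "__main__":
    X = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    y = [0, 0, 0, 1, 1, 1]
    tree = build_fpdt(X, y, k=2, dtype=0)
    print(classify(tree, (0.5, 0.5)), classify(tree, (9, 9)))
```

The sketch requires Python 3.8 or later for `math.dist`. On real Big Data the exact pair search would be replaced by the approximate one, and an explicit stack would be safer than recursion for deep trees.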

The training phase of the FPBST and of the new DT0–DT4 uses the Euclidean norm to regularize the resultant tree by swapping P1 and P2 if the norm of P2 is less than that of P1 (step 21 in Algorithm 1). This lets the examples that are similar to the point with the smaller norm be sorted to the left side of the tree and the others to the right side, so that similar examples are as close to each other as possible in the resultant BST. However, the Euclidean norm is insensitive to sign (numbers of the same magnitude but opposite signs yield the same norm), and it is also problematic for examples with many zeros or similar repeated numbers [2]. We therefore opt for an alternative to the norm to decide which point goes to the left and which to the right: the class of the example. We check the classes of P1 and P2; if P2 has the minimum class, we swap P1 with P2; otherwise they remain as they are. Such a swap regularizes the resultant decision tree at minimal cost, since computing the norm costs an extra O(d) each time, while obtaining the class of an example costs O(1). At the same time, we get more coherent trees in terms of class distribution, since the examples of the minimum class are forced to the left and those of the maximum class to the right, which might have a good effect on the class probabilities. This improvement is applied to all the proposed DT0–DT4, yielding the new decision trees DT0+, DT1+, DT2+, DT3+, and DT4+.
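
The two ordering rules can be placed side by side in a short sketch. The function names are hypothetical; points are referred to by their index into the training data, and classes are assumed to be integer labels.

```python
import math


def order_pair_by_norm(p1, p2, X):
    """FPBST / DT0-DT4 rule: the point with the smaller Euclidean norm
    becomes P1. Computing each norm costs O(d)."""
    n1 = math.sqrt(sum(v * v for v in X[p1]))
    n2 = math.sqrt(sum(v * v for v in X[p2]))
    return (p2, p1) if n1 > n2 else (p1, p2)


def order_pair_by_class(p1, p2, y):
    """DT0+ to DT4+ rule: the point with the smaller class label becomes P1.
    Looking up a label costs O(1)."""
    return (p2, p1) if y[p1] > y[p2] else (p1, p2)


if __name__ == "__main__":
    # Two points with opposite signs have the same norm, so the norm rule
    # cannot tell them apart; the class rule still orders them.
    X = [(-3, -4), (3, 4)]
    y = [1, 0]
    print(order_pair_by_norm(0, 1, X))   # norms are equal: order unchanged
    print(order_pair_by_class(0, 1, y))  # class 0 goes first
```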

Similar to the FPBST, the time complexity of the training phase to build the decision tree (DT) by the proposed methods (DT0–DT4 and DT0+ to DT4+) is:

$$T(n, d) = O(cnd \log n)$$

where (cnd) is the time consumed to find the approximate furthest points; the constant c is the number of iterations needed to find them, which was found experimentally to be in the range of 2 to 5 [1]. The (log n) time is consumed along the depth of the DT.

An extra (2nd) time is consumed by comparing each example or feature vector (FV) to the local furthest points (P1 and P2). This time can be absorbed into c, putting it in the range of 4 to 7; c is still a constant, however, and the overall time complexity can be asymptotically approximated to:

$$T(n, d) = O(nd \log n)$$

and if n >> d, the time complexity can be further approximated to:

$$T(n) = O(n \log n)$$

The space complexity can be defined by:

where the space consumed (S) is a function of n and d, which is similar to the size of a normal BST.

The test phase of the proposed method (Algorithm 2) is the same for all the DTs, as it searches the created DT for a test example from the root node down to a leaf node, where similar example(s) are expected to be found. However, it differs from the test phase of the FPBST, where the KNN algorithm is employed to classify the test example using the examples found in a leaf-node. The proposed DTs have no need for the KNN, because the leaf-node can now decide the class of the tested example based on its pre-calculated probabilities, as the name (decision tree) suggests. Dropping the KNN from the proposed DTs allows for more speed. Therefore, the time complexity of the test phase of the proposed DTs for each tested example is:

$$T(n, d) = O(2d \log n)$$

where the (2d) time is consumed by the calculation of the ED, which costs d time for each comparison with either P1 or P2, and the (log n) time is consumed along the depth of the BST, which is about (log n) on average.

If n >> d, the d time can be ignored, making the testing time:

$$T(n) = O(\log n)$$

3.2. Implementation Example

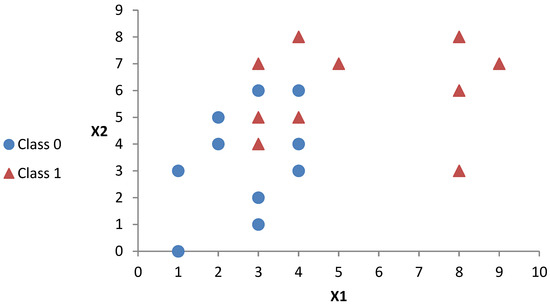

To further explain the proposed DTs, we implement some of them to create decision trees that can be compared with the BST of the FPBST. To this end, we use a small synthesized dataset for illustration purposes. The dataset consists of two hypothetical features (X1 and X2) and two classes (0 and 1), with 20 examples, as shown in Table 1 and illustrated in Figure 1.

Table 1.

A hypothetical training data sample to exemplify the resultant BST of the FPBST, as well as the decision trees of the proposed methods.

Figure 1.

A visual illustration of the synthesized dataset obtained from Table 1.

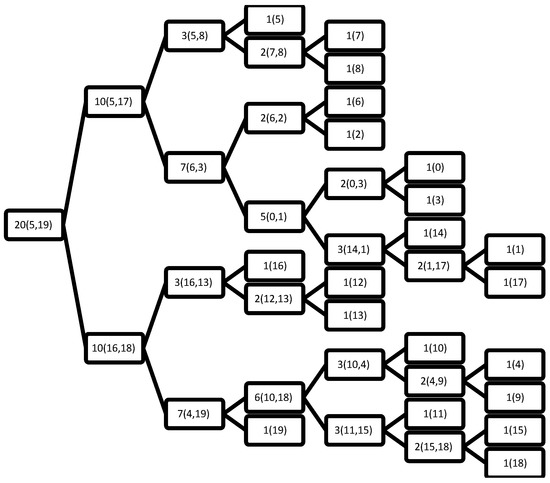

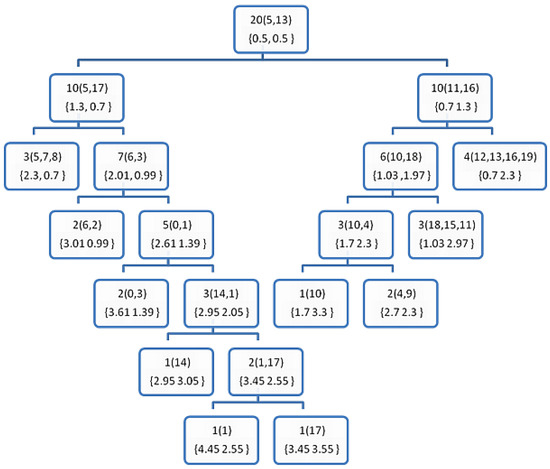

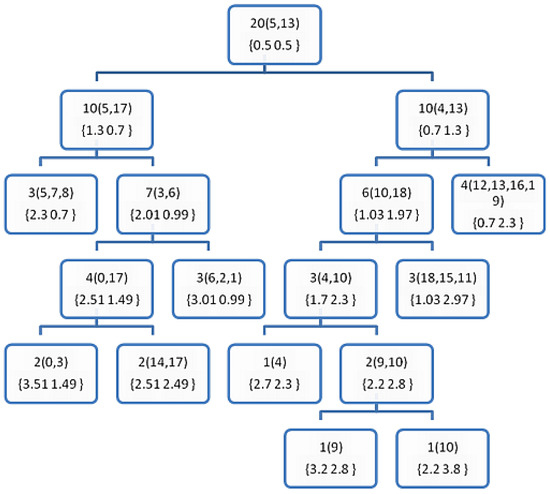

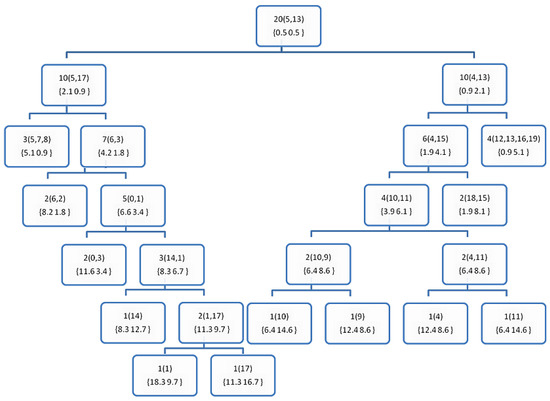

If we apply the FPBST on the synthesized dataset we get the BST illustrated in Figure 2, and when applying the DT0, DT0+, DT1, and DT1+ on the same dataset we get the decision trees illustrated in Figure 3, Figure 4, Figure 5 and Figure 6, respectively.

Figure 2.

The resultant BST after applying the training phase of the FPBST on the sample data from Table 1. The number outside the brackets is the counter of the examples hosted by each node, and those inside the brackets are the index of the examples in a leaf node, or the furthest points (P1 and P2) otherwise.

Figure 3.

The resultant decision tree after applying the training phase of the DT0 on the sample data from Table 1. The number outside the rounded brackets () is the counter of the examples hosted by each node, and those inside the rounded brackets are the indexes of the examples in a leaf node, or the furthest points (P1 and P2) otherwise. The numbers in the curly brackets {} show the probabilities of the classes at each node.

Figure 4.

The resultant decision tree after applying the training phase of the DT0+ on the sample data from Table 1. The number outside the rounded brackets is the counter of the examples hosted by each node, and those inside the rounded brackets are the indexes of the examples in a leaf node, or the furthest points (P1 and P2) otherwise. The numbers in the curly brackets {} show the probabilities of the classes at each node.

Figure 5.

The resultant decision tree after applying the training phase of the DT1 on the sample data from Table 1. The number outside the rounded brackets is the counter of the examples hosted by each node, and those inside the rounded brackets are the indexes of the examples in a leaf node, or the furthest points (P1 and P2) otherwise. The numbers in the curly brackets {} show the probabilities of the classes at each node.

Figure 6.

The resultant decision tree after applying the training phase of the DT1+ on the sample data from Table 1. The number outside the rounded brackets is the counter of the examples hosted by each node, and those inside the rounded brackets are the indexes of the examples in a leaf node, or the furthest points (P1 and P2) otherwise. The numbers in the curly brackets {} show the probabilities of the classes at each node.

The purpose of these figures is not to prove anything, as we cannot draw significant conclusions from such weak evidence (the very small dataset in Table 1). Rather, they are meant to show how the proposed DTs are constructed compared to the BST of the FPBST. It is interesting to note that the algorithm for calculating the furthest pair of points is approximate and might not always return the same pair, as seen in Figure 2 and Figure 6, where the furthest pair was (5, 19), while it was (5, 13) in Figure 3, Figure 4 and Figure 5. Both pairs have the maximum distance in the dataset, which is 10.63. In addition, we can note the smaller size of the DTs (Figure 3, Figure 4, Figure 5 and Figure 6) and their shallower nodes compared to the BST in Figure 2; this is because the DTs stop the recursive process of creating child nodes when a node is pure, i.e., all the examples hosted belong to the same class. The exceptions are DT4 and DT4+, which carry on sorting the examples until there is only one example (or similar examples) in a leaf-node; by similar examples we mean those that share the same Euclidean distance to a reference point. Additionally, we can note the difference between the DTs and the DT+s. For example, point 14 is classified as class 0 in Figure 3, although it belongs to class 1. This is because its norm is 5, while the other point (1) sharing the same parent node has a norm of 5.4, so according to DT0, point 14 goes to the left and point 1 goes to the right. If DT0 did not use accumulated probabilities, this would not make a big difference; but since DT0 and DT0+ use accumulated probabilities without giving a higher weight to the deeper levels, we get such a classification error. This situation does not occur with DT1 and DT1+, because the tree level is used to weight the probabilities.

3.3. Data

In order to evaluate the proposed methods and compare the results to the FPBST on Big Data classification, we use some of the well-known machine learning datasets that are used by state-of-the-art work in this domain. These datasets are freely available for download from either the support vector machines library (LIBSVM) Data [26] or the UCI Machine Learning Repository [27]. The datasets are of different dimensions, sizes, and data types; such diversity is important for evaluating the efficiency of the proposed method in terms of accuracy and time consumed.

All datasets used contain numeric data, i.e., real numbers and/or integers. The sizes of these datasets are in the range of 625 to 11,000,000 examples; the dimensions are in the range of 4 to 5000 features. Table 2 shows the descriptions of the datasets used.

Table 2.

Description of datasets used for evaluation and comparison of the proposed methods.

4. Results and Discussion

To evaluate the proposed methods (DT0–DT4 and DT0+ to DT4+), we programmed both Algorithms 1 and 2 using the MS VC++ .NET framework, version 2017, and conducted several classification experiments on all the datasets described in the data section. We used a personal computer with the following specifications:

- Processor: Intel® Core™ i7-6700 CPU @ 3.40 GHz

- Installed memory (RAM): 16.0 GB

- System type: 64-bit operating system, x64-based processor, MS Windows 10.

Table 3 shows the characteristics of the trees built by the proposed DTs compared to that of the FPBST. Here we report one dataset only (poker), as it is one of the largest datasets, in order to save space.

Table 3.

Some specifications of the resultant BST of the FPBST compared to the resultant DTs after applying the proposed FPDTs on the poker dataset (training phase).

As can be noted in Table 3, the maximum depth of the resultant BST and DTs is not much larger than log2(1025010) = 19.97, which of course increases the speed of the test phase for all the proposed methods as well as the FPBST. Although the number of nodes in a full BST is typically (n log n), and therefore should be around 20,421,879, we found it to be much less than that for all methods; this is because the resultant BST and DTs are not full binary trees. It is interesting to note that the number of nodes in the proposed DTs is significantly smaller than that of the BST. This is related to the number of examples hosted in the leaf-nodes, which is higher in the DTs than in the BST, i.e., the lower the number of nodes, the higher the number of examples per leaf-node. This is because the DTs stop the recursive process earlier, namely when all the existing examples belong to one specific class. The exceptions are DT4 and DT4+, because neither stops the recursive process; both carry on creating nodes until there is only one example (or similar examples) per leaf-node.

The relatively small size of the DT created by the proposed DT0–DT3 and DT0+ to DT3+ serves two purposes: (1) decreasing the space needed for the tree; and (2) speeding up the classification process, since searching a smaller tree is faster than searching a larger one. This is also consistent with the number of leaf-nodes, which is significantly smaller than that of the FPBST, DT4, and DT4+.

In this paper, we compare the performance of the proposed methods to that of the FPBST, as the goal of this paper is to improve the performance of the FPBST in terms of speed, space used, and classification accuracy. To this end, we evaluated the proposed methods DT0–DT4 by employing them to classify the machine learning datasets listed in Table 2, using 10-fold cross-validation, so as to be able to compare their performance to that of the FPBST.

Ideally, the 10-fold cross-validation approach selects the training dataset randomly; however, Nalepa and Kawulok [28] discussed other interesting methods for selecting the training data, such as data geometry analysis (clustering and non-clustering), neighborhood analysis, evolutionary, active learning, and random sampling methods. Our choice belongs to the random sampling methods, as these are the most widely used.
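
As an illustration of random-sampling 10-fold cross-validation, the following sketch shuffles the example indexes once and yields ten train/test splits in which every example is tested exactly once. The function name and the fixed seed are assumptions for reproducibility, not details taken from the experiments.

```python
import random


def k_fold_indices(n, k=10, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)           # random sampling of folds
    folds = [idx[i::k] for i in range(k)]      # k nearly equal-sized folds
    for f in range(k):
        test = folds[f]
        train = [i for g in range(k) if g != f for i in folds[g]]
        yield train, test


if __name__ == "__main__":
    for train, test in k_fold_indices(25, k=10):
        print(len(train), len(test))
```

The reported accuracy and times would then be averaged over the ten splits.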

Since we used different hardware with different computational power, which might significantly affect the comparison in terms of time consumed, we opt for reporting the speed-up factor of each method, similarly to [13,17]. We calculate the speed-up factor as the ratio of the time consumed by the FPBST classifier to that of the proposed method on the same dataset and the same examples tested, as follows:

$$\text{speed-up}(X, D) = \frac{T(\text{FPBST}, D)}{T(X, D)}$$

where D is the dataset tested, X is the method whose speed-up factor we wish to calculate, and T is the time function, which returns the time consumed by the method X on the dataset D.

The accuracy comparison results are shown in Table 4. Table 5 and Table 6 show the time consumed in the training and testing phases, respectively, while Table 7 shows the speed-up comparison results.

Table 4.

Accuracy results of the proposed methods DT0–DT4 compared to that of the FPBST, using 10-fold cross-validation.

Table 5.

Time (ms) consumed by the proposed methods DT0–DT4 to build their DTs compared to that of the FPBST to build its BST; this is the average training time over the 10 folds.

Table 6.

Time (ms) consumed by the FPBST to test all the test examples compared to that of the proposed methods DT0–DT4; this is the average test time over the 10 folds.

Table 7.

Speed-up results (training and testing phases) of the proposed methods DT0–DT4 compared to the FPBST.

As can be seen from Table 4, one or more of the proposed methods DT0–DT4 slightly outperform the FPBST in terms of accuracy on all datasets except Satimage, which works better with the FPBST. However, that difference is small (less than 1%) and might be due to the randomness of the train/test examples. On average, DT1, DT3, and DT4 perform slightly better than the FPBST. The maximum average classification accuracy is achieved by DT4; this is due to the nature of the DT created by DT4, which continues the recursive process until there is only one class, or similar classes, per leaf-node. We do not favor DT4, as its size is similar to that of the BST of the FPBST, while its accuracy is not significantly higher than that of the other DTs and the FPBST; for example, DT3 outperforms all methods in terms of the number of datasets on which it achieves the best result. We can say with some confidence that the proposed approach (using the decision tree instead of the KNN, regardless of the method used to create the decision tree) performs well on all the evaluated datasets, and that this performance is similar to that of the FPBST in some cases and slightly better in others.

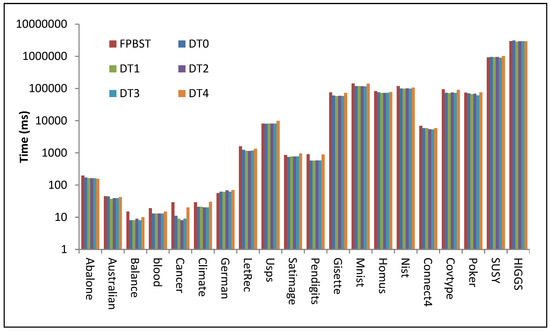

It is interesting to note from Table 5 and Figure 7 that the proposed DT0–DT3 generally consumed less time than the FPBST and DT4; this is due to the smaller decision trees created by these methods. However, the time saved by DT0–DT3 while building the decision tree is not significant on some datasets, owing to the extra calculations of the probabilities of each class for each dataset. It is also interesting to note that the time consumed by DT4 is similar to that of the FPBST, and sometimes longer; this is because its tree size is similar to that of the FPBST, with extra time needed for calculating the probabilities.

Figure 7.

Illustration of data from Table 5 (time (ms) consumed by the proposed methods to build their DTs compared to that of the FPBST); the time axis is logarithmic (base 10).

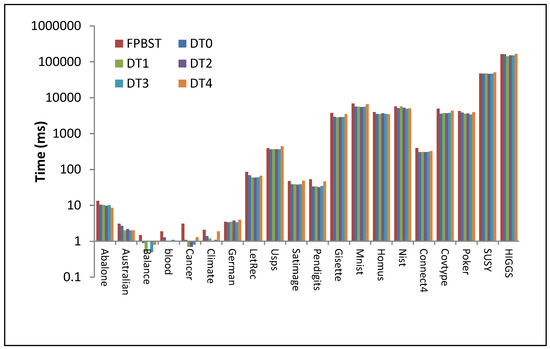

As can be seen from Table 6 and Figure 8, the time consumed in the testing phase by DT0–DT3 is less than that of the FPBST; this is due to the smaller size of these trees and the fact that the KNN classifier is no longer used. It is interesting to note that the speed of DT4 in the testing phase is similar to that of the FPBST; this is due to the large and equal size of their trees. It is also interesting to note that there is no significant difference in the time consumed by the proposed DT0–DT3 in the testing phase, since they are almost the same except for the method of calculating the probability of each class.

Figure 8.

Illustration of data from Table 6 (time (ms) consumed by the FPBST to test all the test examples compared to that of the proposed methods); the time axis is logarithmic (base 10).

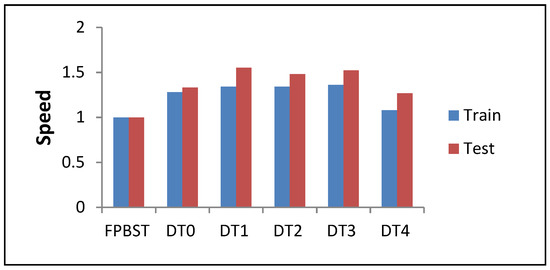

The speed-up results shown in Table 7 and Figure 9 are calculated from both Table 5 (the speed of the training phase) and Table 6 (the speed of the testing phase) using Equation (7). Here, we can see that the speed of DT4 in the training phase is similar to that of the FPBST; this is due to the similar trees created by both methods. However, DT4 is on average 1.27 times faster than the FPBST in the testing phase; this is because DT4 does not use the KNN. It is interesting to note the high speed of the proposed DT0–DT3 methods, which are about one and a half times faster than the FPBST; this might be due to the smaller size of the resultant trees and the absence of the KNN. It is also interesting to note that the training speed-ups of the proposed DT0–DT3 are not as significant as their testing speed-ups; this is due to the extra time needed for computing the probabilities of the classes in each node.

Figure 9.

Illustration of the average data from Table 7 (speed-up results, for the training and testing phases, of the proposed methods DT0–DT4 compared to the FPBST).

As mentioned above, the proposed DT0–DT4 have been further improved in an attempt to provide more regular trees in terms of class distribution. This improvement forces the examples that are similar to the furthest point with the lower class to be sorted to the left side of the tree, and those that are similar to the other furthest point, with the higher class, to be sorted to the right side of the tree. We conducted several experiments to measure the effect of this improvement on both accuracy and speed. Here, we choose the DT with the best performance on each dataset and compare its performance to that of the FPBST; the comparison results are shown in Table 8.
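The class-based ordering of the furthest pair can be sketched as below. This is a simplified illustration under several assumptions: the furthest pair is taken as already found, class labels are integers that can be compared, similarity is measured with the Euclidean distance, and ties go to the left. The function names `order_pair_by_class` and `split_examples` are hypothetical.

```python
import math


def order_pair_by_class(p1, y1, p2, y2):
    """Order the furthest pair so the point with the lower class comes first."""
    if y1 > y2:
        return (p2, y2), (p1, y1)
    return (p1, y1), (p2, y2)


def split_examples(examples, labels, p1, y1, p2, y2):
    """Send each example to the side of the furthest point it is closer to.

    The lower-class furthest point defines the left side and the
    higher-class furthest point defines the right side.
    """
    (left_pt, _), (right_pt, _) = order_pair_by_class(p1, y1, p2, y2)
    left, right = [], []
    for x, y in zip(examples, labels):
        # Euclidean distance; ties are sent left (an assumption).
        if math.dist(x, left_pt) <= math.dist(x, right_pt):
            left.append((x, y))
        else:
            right.append((x, y))
    return left, right


# Toy data: the furthest pair is (0, 0) with class 2 and (10, 0) with class 1.
examples = [(0.0, 0.0), (1.0, 0.0), (9.0, 0.0), (10.0, 0.0)]
labels = [2, 2, 1, 1]
left, right = split_examples(examples, labels, (0.0, 0.0), 2, (10.0, 0.0), 1)
print(left)
print(right)
```

In this toy example the class-1 examples end up on the left and the class-2 examples on the right, even though the class-2 furthest point was listed first.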

Table 8.

Accuracy (Acc.) and speed-up results (training and testing phases) of FPBST compared to the proposed DT0+ to DT4+.

As can be seen from Table 8, the accuracy of the proposed DTs after the improvement has increased by about 2.38%; this is due to the sorting of the examples based on their classes, which is the only change that has been made to the decision trees. However, there is no improvement in the speed of either the training or the testing phase, since swapping the furthest points based on their classes needs the same computation as swapping them based on their minimum/maximum norms. The improvement therefore neither adds nor removes calculations, which is why the time consumed by both phases is not improved.

In order to statistically analyze the accuracy results of the DT+ methods compared to those of the FPBST (Table 8), we used the statistical test for algorithm comparison (STAC) platform (http://tec.citius.usc.es/stac/) [29]. Here, we opt for the paired t-test, as it is commonly used to determine whether there is a significant difference between the means of two groups; the first group is the FPBST results and the second group is the DT+ results. However, to apply the t-test, our data should satisfy independence, normality, and homoscedasticity [29].

Our data is independent, since it comes from different methods (FPBST and DT+). To test the normality of our data, we used the Shapiro-Wilk test because it performs best, especially for samples of fewer than 30 elements [30]. The null hypothesis for normality is: the samples follow a normal distribution. With a significance level of 0.05, Table 9 shows the normality results.

Table 9.

Normality test of FPBST and DT+ accuracies obtained from Table 8.

As can be seen from Table 9, according to the p-value of the Shapiro-Wilk test, the null hypothesis is not rejected, i.e., both the FPBST and DT+ accuracies can be assumed to follow normal distributions. To test the homoscedasticity of both the FPBST and DT+ accuracies, we opt for the Levene test [31]. The null hypothesis for homoscedasticity is: all the input populations have equal variances. With a significance level of 0.05, Table 10 shows the homoscedasticity results.

Table 10.

Homoscedasticity test of FPBST and DT+ accuracies obtained from Table 8.

As can be seen from Table 10, since the p-value is greater than the level of significance (0.05), the null hypothesis is accepted, and therefore the tested data is homoscedastic. Since we verified the normality, homoscedasticity, and independence of our data, we can apply the t-test. The null hypothesis (Ho): the accuracies of the FPBST and DT+ have identical mean values. The alternative hypothesis (Ha): the accuracies of the FPBST and DT+ do not have identical mean values. With a significance level of 0.05, Table 11 shows the t-test results.

Table 11.

T-test results of group 1 (FPBST) and group 2 (DT+) accuracies obtained from Table 8.

As can be seen from Table 11, since the p-value is less than the level of significance (0.05), the null hypothesis is rejected in favor of Ha. Thus, there is a statistically significant difference between the FPBST and the DT+ accuracies. In addition, the t statistic is negative because the mean of the FPBST accuracies is less than that of the DT+ accuracies; therefore, we can say with some confidence that the DT+ methods outperform the FPBST in terms of classification accuracy.
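To make the sign of the t statistic concrete, the paired t statistic can be computed by hand as the mean of the per-dataset differences divided by its standard error. The sketch below uses only the Python standard library; the function name `paired_t_statistic` and the accuracy values are hypothetical placeholders, not the values of Table 8. A p-value is not computed here; a statistics library routine such as `scipy.stats.ttest_rel` could be used for that.

```python
import math
import statistics


def paired_t_statistic(group1, group2):
    """Return (t, degrees_of_freedom) for a paired t-test of group1 vs group2."""
    if len(group1) != len(group2) or len(group1) < 2:
        raise ValueError("groups must have equal length of at least 2")
    diffs = [a - b for a, b in zip(group1, group2)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all paired differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Placeholder accuracies in percent (hypothetical, one value per dataset).
group1 = [80, 85, 90, 75]  # plays the role of the FPBST accuracies
group2 = [83, 86, 94, 77]  # plays the role of the DT+ accuracies
t, df = paired_t_statistic(group1, group2)
print(t, df)
```

Because the differences are taken as group 1 minus group 2, t is negative whenever the mean of group 1 is lower than that of group 2, which matches the interpretation given above.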

It is worth mentioning that the STAC platform accepted Ho despite the very small p-value; however, we rejected it here because the p-value is less than the level of significance, which is 0.05 in our case. We further verified this decision using other platforms, such as http://www.statskingdom.com/160MeanT2pair.html, in addition to our own calculations using Microsoft Excel.

According to the previous results, we can approximately compare the performance of the proposed methods to that of the FPBST in terms of accuracy, training/testing time, and space consumed, as shown in Table 12.

Table 12.

Approximate comparison of the performance of the proposed methods to that of the FPBST.

As can be seen from Table 12, DT0+, DT1+, and DT3+ are the best performers in general, since they obtain the highest accuracies with the shortest training/testing times and the smallest model size. Therefore, we compared them with some well-known decision trees, namely J48 [32], the reduced-error pruning tree (REPTree) [33], and Random Forest (RF) [34]. We applied these trees using the Weka data mining tool [35]. The comparison results are shown in Table 13.

Table 13.

Accuracy results of some of the proposed DTs compared to that of some of the well-known decision trees.

As can be seen from Table 13, the compared DTs show competitive accuracy results compared to the well-known decision trees, with the extra advantage of being able to work on Big Data; therefore, they can be used in practice, particularly for Big Data and image classification such as [36,37,38].

5. Conclusions

In this paper, we propose a new approach to improve the performance of the FPBST when classifying small, intermediate, and big datasets. The major improvement is the abandonment of the slow KNN, which is used with the FPBST to classify a small number of examples found in a leaf-node. Instead, we convert the BST into a decision tree in its own right, exploiting the labeled examples in the training phase by calculating the probability of each class in each node; we used various methods to calculate these probabilities.

The experimental results show that the proposed decision trees improve the performance of the FPBST in terms of classification accuracy, training speed, testing speed, and the size of the model (the tree in our case). We also made another simple enhancement to the FPBST algorithm in the training phase, which is the swapping of the furthest pair of points based on their classes rather than on their minimum/maximum Euclidean norms. This makes the resultant decision tree more coherent in terms of the distribution of the classes, keeping the examples of each class as close to each other as possible. As the results suggest, this enhancement further improved the accuracy of the proposed decision trees compared to that of the FPBST.

The improvements made in this study make the proposed DTs more suitable for a variety of real-world applications, such as image classification, face recognition, hand biometrics, fingerprint systems, and other types of biometrics, particularly when the training data is very large and traditional classification methods become impractical.

However, this approach is still based on finding the furthest pair of points (diameter), which has two major disadvantages: first, it takes time to find the diameter of the data at each node; and second, these two points might belong to the same class, which might affect the classification accuracy. Therefore, we need to find a more accurate, and perhaps faster, algorithm to cluster the data in each node. Moreover, the Euclidean distance might not be the best choice for the proposed methods; therefore, we need to investigate other distance metrics such as [39,40]. These limitations will be addressed in our future work.

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

References

- Zerbino, P.; Aloini, D.; Dulmin, R.; Mininno, V. Big Data-enabled Customer Relationship Management: A holistic approach. Inf. Process. Manag. 2018, 54, 818–846.

- LaValle, S.; Lesser, E.; Shockley, R.; Hopkins, M.S.; Kruschwitz, N. Big data, analytics and the path from insights to value. MIT Sloan Manag. Rev. 2011, 52, 21.

- Zhang, Q.; Yang, L.T.; Chen, Z.; Li, P. A survey on deep learning for big data. Inf. Fusion 2018, 42, 146–157.

- Bolón-Canedo, V.; Remeseiro, B.; Sechidis, K.; Martinez-Rego, D.; Alonso-Betanzos, A. Algorithmic challenges in Big Data analytics. In Proceedings of the European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 26–28 April 2017.

- Lv, X. The big data impact and application study on the like ecosystem construction of open internet of things. Clust. Comput. 2018, 1–10.

- Fix, E.; Hodges, J.L., Jr. Discriminatory Analysis-Nonparametric Discrimination: Consistency Properties; USAF School of Aviation Medicine: Randolph Field, TX, USA, 1951.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Hassanat, A. Norm-Based Binary Search Trees for Speeding Up KNN Big Data Classification. Computers 2018, 7, 54.

- Hassanat, A.B. Furthest-Pair-Based Binary Search Tree for Speeding Big Data Classification Using K-Nearest Neighbors. Big Data 2018, 6, 225–235.

- Hassanat, B. Two-point-based binary search trees for accelerating big data classification using KNN. PLoS ONE 2018, 13, 14, in press.

- Hassanat, B.; Tarawneh, A.S. Fusion of color and statistic features for enhancing content-based image retrieval systems. J. Theor. Appl. Inf. Technol. 2016, 88, 644–655.

- Tarawneh, A.S.; Chetverikov, D.; Verma, C.; Hassanat, A.B. Stability and reduction of statistical features for image classification and retrieval: Preliminary results. In Proceedings of the 9th International Conference on Information and Communication Systems, Irbid, Jordan, 3–5 April 2018; pp. 117–121.

- Hassanat, A.B. Greedy algorithms for approximating the diameter of machine learning datasets in multidimensional euclidean space. arXiv, 2018; arXiv:1808.03566.

- Zhang, S.; Li, X.; Zong, M.; Zhu, X.; Wang, R. Efficient knn classification with different numbers of nearest neighbors. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 1774–1785.

- Hassanat, A.B.; Abbadi, M.A.; Altarawneh, G.A.; Alhasanat, A.A. Solving the problem of the K parameter in the KNN classifier using an ensemble learning approach. arXiv, 2014; arXiv:1409.0919.

- Wang, F.; Wang, Q.; Nie, F.; Yu, W.; Wang, R. Efficient tree classifiers for large scale datasets. Neurocomputing 2018, 284, 70–79.

- Maillo, J.; Triguero, I.; Herrera, F. A mapreduce-based k-nearest neighbor approach for big data classification. In Proceedings of the 13th IEEE International Symposium on Parallel and Distributed Processing with Application, Helsinki, Finland, 20–22 August 2015; pp. 167–172.

- Maillo, J.; Ramírez, S.; Triguero, I.; Herrera, F. kNN-IS: An Iterative Spark-based design of the k-Nearest Neighbors classifier for big data. Knowl.-Based Syst. 2017, 117, 3–15.

- Deng, Z.; Zhu, X.; Cheng, D.; Zong, M.; Zhang, S. Efficient kNN classification algorithm for big data. Neurocomputing 2016, 195, 143–148.

- Gallego, A.J.; Calvo-Zaragoza, J.; Valero-Mas, J.J.; Rico-Juan, J.R. Clustering-based k-nearest neighbor classification for large-scale data with neural codes representation. Pattern Recognit. 2018, 74, 531–543.

- Bentley, J.L. Multidimensional binary search trees used for associative searching. Commun. ACM 1975, 18, 509–517.

- Uhlmann, J.K. Satisfying general proximity/similarity queries with metric trees. Inf. Process. Lett. 1991, 40, 175–179.

- Beygelzimer, A.; Kakade, S.; Langford, J. Cover trees for nearest neighbor. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 97–104.

- Kibriya, A.M.; Frank, E. An empirical comparison of exact nearest neighbour algorithms. In Proceedings of the 11th European Conference on Principles and Practice of Knowledge Discovery in Databases, Warsaw, Poland, 17–21 September 2007; pp. 140–151.

- Cislak, A.; Grabowski, S. Experimental evaluation of selected tree structures for exact and approximate k-nearest neighbor classification. In Proceedings of the Federated Conference on Computer Science and Information Systems, Warsaw, Poland, 7–10 September 2014; pp. 93–100.

- Fan, R.-E. LIBSVM Data: Classification, Regression, and Multi-label. 2011. Available online: https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/ (accessed on 1 March 2018).

- Lichman, M. University of California, Irvine, School of Information and Computer Sciences, 2013. Available online: http://archive.ics.uci.edu/ml (accessed on 1 March 2018).

- Nalepa, J.; Kawulok, M. Selecting training sets for support vector machines: A review. Artif. Intell. Rev. 2018, 1–44.

- Rodríguez-Fdez, I.; Canosa, A.; Mucientes, M.; Bugarín, A. STAC: A web platform for the comparison of algorithms using statistical tests. In Proceedings of the 2015 IEEE International Conference on Fuzzy Systems, Istanbul, Turkey, 2–5 August 2015; pp. 1–8.

- Shapiro, S.S.; Wilk, M.B. An analysis of variance test for normality (complete samples). Biometrika 1965, 52, 591–611.

- Levene, H. Robust tests for equality of variances. Contrib. Probab. Stat. 1961, 69, 279–292.

- Quinlan, J.R. C4.5: Programs for Machine Learning; Morgan Kaufmann Publishers: San Mateo, CA, USA, 1993.

- Quinlan, J.R. Simplifying decision trees. Int. J. Man-Mach. Stud. 1987, 27, 221–234.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Witten, I.H.; Frank, E.; Hall, M.A.; Pal, C.J. Data Mining: Practical Machine Learning Tools and Techniques; Morgan Kaufmann Publishers: San Mateo, CA, USA, 2016.

- Hassanat, A.B. On identifying terrorists using their victory signs. Data Sci. J. 2018, 17, 27.

- Hassanat, A.B.; Prasath, V.S.; Al-kasassbeh, M.; Tarawneh, A.S.; Al-shamailh, A.J. Magnetic energy-based feature extraction for low-quality fingerprint images. Signal Image Video Process. 2018, 1–8.

- Hassanat, A.B.; Prasath, V.S.; Al-Mahadeen, B.M.; Alhasanat, S.M.M. Classification and gender recognition from veiled-faces. Int. J. Biom. 2017, 9, 347–364.

- Hassanat, A.B. Dimensionality invariant similarity measure. arXiv, 2014; arXiv:1409.0923.

- Alkasassbeh, M.; Altarawneh, G.A.; Hassanat, A. On enhancing the performance of nearest neighbour classifiers using hassanat distance metric. arXiv 2015, arXiv:1501.00687.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).