Correlation Tracking via Self-Adaptive Fusion of Multiple Features

Abstract

1. Introduction

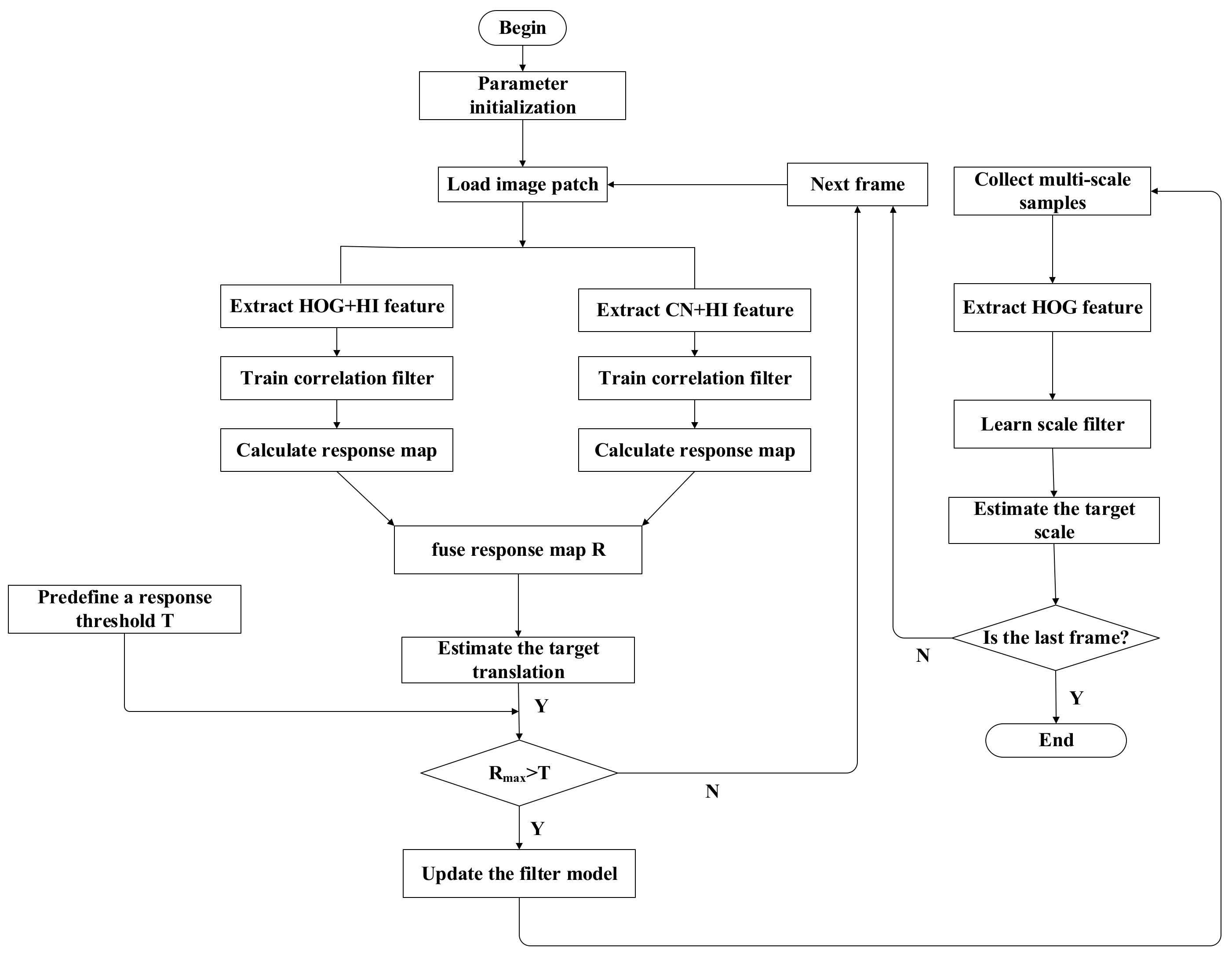

- We integrate multiple multi-channel hand-crafted features with strong discriminative power, namely HOG, color names (CN), and a histogram of local intensities, into the correlation filter framework at the response layer. This combines the complementary strengths of the different features effectively, and we propose a self-adaptive fusion of multiple features to obtain a better feature representation.

- We establish a model update strategy that, to some extent, prevents the tracking model from being corrupted by inaccurate updates. The strategy uses an optimal pre-defined response threshold as the criterion for deciding whether to update the tracking model.

- We integrate an accurate scale estimation method with the proposed model update strategy to further improve adaptability to scale variation. We evaluate the proposed algorithm on the OTB tracking benchmark datasets [31,32], and the experimental results demonstrate that it performs favorably against several state-of-the-art correlation filter (CF) based methods.

2. Related Work

3. Tracking Components

3.1. The Context-Aware Correlation Filter Tracking Framework
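The context-aware framework of [20] treats patches sampled around the target as negative samples, so the filter is pushed towards a near-zero response on the background. A minimal sketch of its closed-form single-channel linear solution, $\hat{w} = \hat{a}_0^{*} \odot \hat{y} \,/\, (\hat{a}_0^{*} \odot \hat{a}_0 + \lambda_1 + \lambda_2 \sum_i \hat{a}_i^{*} \odot \hat{a}_i)$, with detection $r = \mathcal{F}^{-1}(\hat{z} \odot \hat{w})$, is shown below. It is a simplification under stated assumptions: 1-D random signals stand in for image patches, a naive DFT replaces the FFT, the helper names `train_cacf` and `respond` are hypothetical, and `lam1`/`lam2` are illustrative values, not the settings used in this paper.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def train_cacf(a0, contexts, y, lam1=1e-4, lam2=25.0):
    """Closed-form context-aware filter in the Fourier domain (hypothetical helper).
    a0: target patch, contexts: surrounding patches, y: desired response."""
    A0, Y = dft(a0), dft(y)
    ctx = [dft(a) for a in contexts]
    return [A0[k].conjugate() * Y[k]
            / (abs(A0[k]) ** 2 + lam1 + lam2 * sum(abs(C[k]) ** 2 for C in ctx))
            for k in range(len(a0))]


def respond(W, z):
    """Correlation response r = F^-1(z_hat * w_hat) for a test patch z."""
    Z = dft(z)
    return [v.real for v in idft([Z[k] * W[k] for k in range(len(z))])]


rng = random.Random(1)
N, c = 31, 15
a0 = [rng.uniform(-1.0, 1.0) for _ in range(N)]                # target patch (1-D stand-in)
contexts = [[rng.uniform(-1.0, 1.0) for _ in range(N)] for _ in range(4)]  # context patches
y = [math.exp(-0.5 * ((n - c) / 2.0) ** 2) for n in range(N)]  # Gaussian label peaked at c
W = train_cacf(a0, contexts, y)
r = respond(W, a0)
peak = max(range(N), key=r.__getitem__)  # 15: the response on the target peaks at the label centre
```

Because every frequency term is scaled by a non-negative real factor, the response on the training target always peaks where the Gaussian label does; the context term only lowers the filter's response on the surrounding patches.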

3.2. The Scale Discriminative Correlation Filter
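The scale filter of [16] is a 1-D multi-channel correlation filter learned over a pyramid of scale samples: with numerator $A^l = \bar{G} F^l$ and denominator $B = \sum_k \bar{F}^k F^k$, the response to new samples $Z$ is $y = \mathcal{F}^{-1}\{\sum_l \bar{A}^l Z^l / (B + \lambda)\}$, and the scale with the highest response is selected. The sketch below is a simplified illustration, assuming 33 scales with step 1.02 as in [16] and an arbitrary label width `sigma=1.5`; it omits feature extraction from resized patches, the Hann window, and the frame-to-frame interpolation of $A$ and $B$. `ScaleFilter` and the random features are hypothetical stand-ins.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


class ScaleFilter:
    """Simplified DSST-style 1-D scale filter (hypothetical sketch)."""

    def __init__(self, num_scales=33, step=1.02, sigma=1.5, lam=0.01):
        self.num_scales = num_scales
        self.lam = lam
        c = (num_scales - 1) // 2
        # Scale factors step^n for n = -c..c; the middle factor is 1.0 (no change).
        self.factors = [step ** (n - c) for n in range(num_scales)]
        # Desired output: a Gaussian over the scale index, peaked at the middle.
        g = [math.exp(-0.5 * ((n - c) / sigma) ** 2) for n in range(num_scales)]
        self.G = dft(g)
        self.A = None  # per-channel numerators conj(G) * F^l
        self.B = None  # shared denominator sum_l |F^l|^2

    def train(self, feats):
        """feats[l][n]: feature channel l of the sample taken at scale index n."""
        Fs = [dft(ch) for ch in feats]
        self.A = [[Gk.conjugate() * Fk for Gk, Fk in zip(self.G, F)] for F in Fs]
        self.B = [sum(abs(F[k]) ** 2 for F in Fs) for k in range(self.num_scales)]

    def detect(self, feats):
        """Return the scale factor with the highest filter response."""
        Zs = [dft(ch) for ch in feats]
        Y = [sum(A[k].conjugate() * Z[k] for A, Z in zip(self.A, Zs))
             / (self.B[k] + self.lam) for k in range(self.num_scales)]
        y = [v.real for v in idft(Y)]
        best = max(range(self.num_scales), key=y.__getitem__)
        return self.factors[best]


rng = random.Random(0)
S, D = 33, 4
train_feats = [[rng.uniform(-1.0, 1.0) for _ in range(S)] for _ in range(D)]
sf = ScaleFilter(num_scales=S)
sf.train(train_feats)
same = sf.detect(train_feats)                                 # size unchanged: 1.0
grown = sf.detect([ch[-2:] + ch[:-2] for ch in train_feats])  # two steps larger: 1.02 ** 2
```

Shifting the scale samples by two indices mimics a target that grew by two scale steps, and the filter recovers that factor; in a real tracker the samples come from patches resized around the current position estimate.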

4. The Proposed Algorithm

4.1. The Visual Features Performance Analysis

4.2. The Self-Adaptive Fusion of Multiple Features
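The contributions describe fusing the HOG, CN, and intensity-histogram responses at the response layer with self-adaptive weights. The following minimal sketch assumes a common reliability proxy for those weights: each feature's weight is proportional to the peak-to-sidelobe ratio (PSR) of its response map. The PSR rule, the helper names `peak`, `psr` and `fuse`, and the toy 5×5 maps are assumptions for illustration, not necessarily the weighting used in the paper.

```python
from statistics import mean, pstdev


def peak(resp):
    """Return (value, row, col) of the maximum of a 2-D response map (list of rows)."""
    best = (float("-inf"), 0, 0)
    for i, row in enumerate(resp):
        for j, v in enumerate(row):
            if v > best[0]:
                best = (v, i, j)
    return best


def psr(resp, exclude=1):
    """Peak-to-sidelobe ratio; the sidelobe is every value outside a
    (2*exclude+1) x (2*exclude+1) window centred on the peak."""
    p, pi, pj = peak(resp)
    side = [v for i, row in enumerate(resp) for j, v in enumerate(row)
            if abs(i - pi) > exclude or abs(j - pj) > exclude]
    sd = pstdev(side)
    return (p - mean(side)) / sd if sd > 0 else 0.0


def fuse(responses):
    """Weight each feature's response map by its normalised PSR and sum the maps."""
    scores = {name: max(psr(r), 0.0) for name, r in responses.items()}
    total = sum(scores.values())
    n = len(responses)
    # Fall back to equal weights if no map has a usable peak.
    weights = {name: (s / total if total > 0 else 1.0 / n) for name, s in scores.items()}
    first = next(iter(responses.values()))
    fused = [[sum(weights[name] * r[i][j] for name, r in responses.items())
              for j in range(len(first[0]))] for i in range(len(first))]
    return fused, weights


# Toy 5x5 maps sharing a mild background; HOG has a sharp peak, CN only a weak one.
base = [[0.1 * ((3 * i + 7 * j) % 5) / 4 for j in range(5)] for i in range(5)]
hog = [row[:] for row in base]
hog[2][2] = 1.0
cn = [row[:] for row in base]
cn[2][2] = 0.15
fused, weights = fuse({"hog": hog, "cn": cn})
# weights["hog"] > weights["cn"], and the fused peak stays at (2, 2)
```

Weighting by a per-frame confidence score lets a feature that is temporarily unreliable (for example CN under illumination change) contribute less without being discarded.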

4.3. The Proposed Model Updating Strategy
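The threshold-gated update from the contributions can be sketched as the usual correlation-filter linear-interpolation update that is skipped whenever the peak response of the current frame falls below a threshold. `GatedModel` and its defaults `threshold=0.3` and `lr=0.015` are hypothetical placeholders: the paper's optimal threshold and learning rate are not stated in this text, and the demo uses `lr=0.5` only to keep the arithmetic easy to follow.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GatedModel:
    """Tracking model with a response-gated linear-interpolation update (sketch)."""
    coeffs: List[float]       # flattened model, e.g. filter numerator/denominator
    threshold: float = 0.3    # hypothetical response threshold
    lr: float = 0.015         # hypothetical interpolation (learning) rate
    skipped: int = 0          # number of rejected updates

    def update(self, new_coeffs: List[float], peak_response: float) -> bool:
        """Blend in the model learned on the current frame only if the peak
        response is at least the threshold; return True if the model changed."""
        if len(new_coeffs) != len(self.coeffs):
            raise ValueError("model size mismatch")
        if peak_response < self.threshold:
            self.skipped += 1  # likely occlusion or drift: keep the previous model
            return False
        self.coeffs = [(1.0 - self.lr) * old + self.lr * new
                       for old, new in zip(self.coeffs, new_coeffs)]
        return True


model = GatedModel(coeffs=[0.0, 0.0], threshold=0.3, lr=0.5)
accepted = model.update([1.0, 2.0], peak_response=0.8)  # confident frame: updated
rejected = model.update([9.0, 9.0], peak_response=0.1)  # unreliable frame: skipped
# model.coeffs is now [0.5, 1.0]; the second call left it unchanged
```

Gating on the peak response keeps samples from frames with a weak, ambiguous response (occlusion, fast motion) out of the model, at the cost of adapting more slowly when the appearance genuinely changes.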

5. Experiments

5.1. Implementation Details

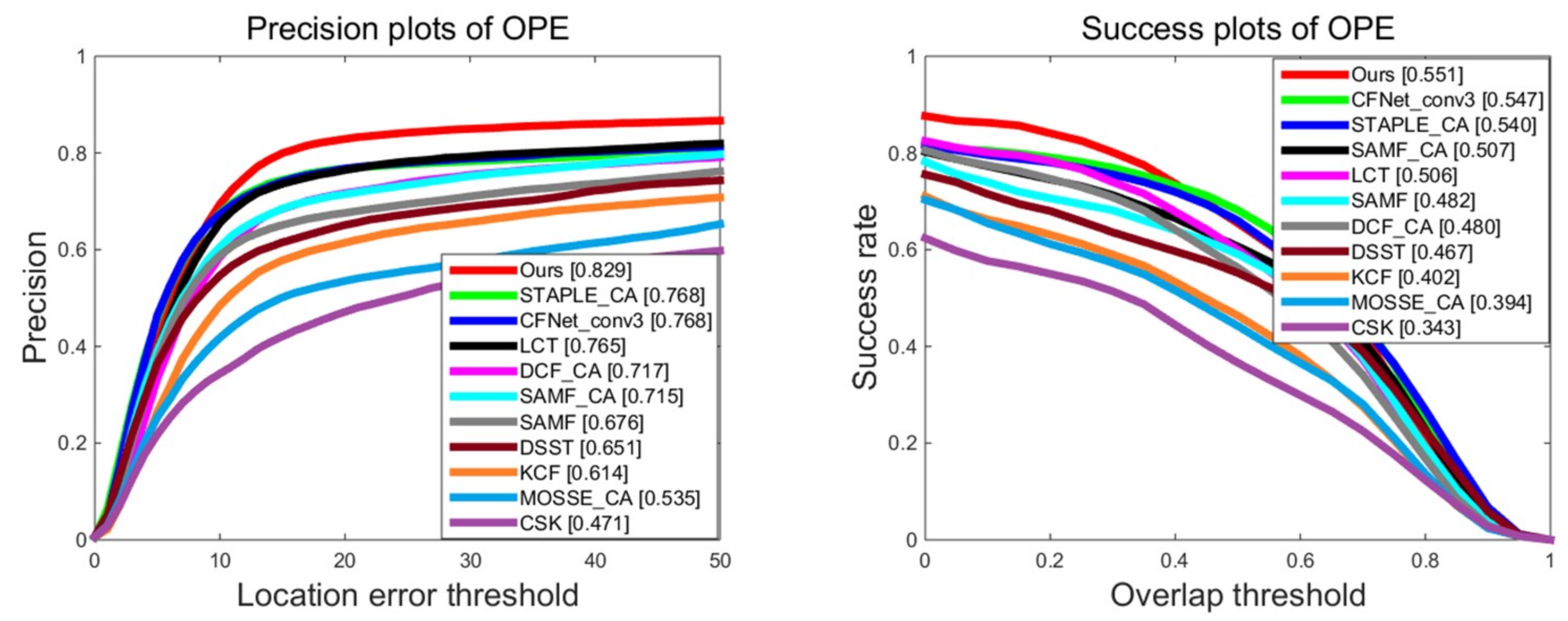

5.2. Overall Tracking Performance on the OTB Benchmark Dataset

5.3. Attribute Based Evaluation

5.4. Qualitative Evaluation

5.5. Overall Tracking Performance on Temple Color Dataset

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Smeulders, A.W.; Chu, D.M.; Cucchiara, R.; Calderara, S.; Dehghan, A.; Shah, M. Visual tracking: An experimental survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1442–1468.

2. Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. 2006, 38, 13.

3. Tsagkatakis, G.; Savakis, A. Online Distance Metric Learning for Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1810–1821.

4. Babenko, B.; Yang, M.H.; Belongie, S. Robust Object Tracking with Online Multiple Instance Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1619–1632.

5. Hare, S.; Saffari, A.; Torr, P.H.S. Struck: Structured output tracking with kernels. In Proceedings of the IEEE International Conference on Computer Vision 2011, Barcelona, Spain, 6–13 November 2011; pp. 263–270.

6. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

7. Zhang, K.; Zhang, L.; Yang, M.H. Real-Time Compressive Tracking. In Proceedings of the European Conference on Computer Vision 2012, Florence, Italy, 7–13 October 2012; pp. 864–877.

8. Grabner, H.; Leistner, C.; Bischof, H. Semi-supervised On-Line Boosting for Robust Tracking. In Proceedings of the European Conference on Computer Vision 2008, Marseille, France, 12–18 October 2008; pp. 234–247.

9. Cauwenberghs, G.; Poggio, T. Incremental and decremental support vector machine learning. In Proceedings of the International Conference on Neural Information Processing Systems 2000; MIT Press: Cambridge, MA, USA, 2000; pp. 388–394.

10. Mei, X.; Ling, H. Robust visual tracking using ℓ1 minimization. In Proceedings of the IEEE International Conference on Computer Vision 2009, Kyoto, Japan, 29 September–2 October 2009; pp. 1436–1443.

11. Zhang, T.; Ghanem, B.; Liu, S.; Ahuja, N. Robust visual tracking via multi-task sparse learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2012, Providence, RI, USA, 16–21 June 2012; pp. 2042–2049.

12. Fan, H.; Xiang, J. Robust Visual Tracking With Multitask Joint Dictionary Learning. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 1018–1030.

13. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

14. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2010, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

15. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the European Conference on Computer Vision 2012, Florence, Italy, 7–13 October 2012; pp. 702–715.

16. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference 2014; BMVA Press: Surrey, UK, 2014; pp. 65.1–65.11.

17. Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Proceedings of the European Conference on Computer Vision Workshops 2014; Springer: Cham, Switzerland, 2014; pp. 254–265.

18. Ma, C.; Yang, X.; Zhang, C.; Yang, M.H. Long-term correlation tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, Boston, MA, USA, 7–12 June 2015; pp. 5388–5396.

19. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision 2015, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

20. Mueller, M.; Smith, N.; Ghanem, B. Context-Aware Correlation Filter Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 138–1395.

21. Lukezic, A.; Vojir, T.; Zajc, L.C.; Matas, J.; Kristan, M. Discriminative correlation filter with channel and spatial reliability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; Volume 2.

22. Tu, Z. Probabilistic Boosting-Tree: Learning Discriminative Models for Classification, Recognition, and Clustering. In Proceedings of the IEEE International Conference on Computer Vision 2005, San Diego, CA, USA, 20–25 June 2005; pp. 1589–1596.

23. Avidan, S. Ensemble Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 261–271.

24. Wang, S.; Lu, H.; Yang, F.; Yang, M.H. Superpixel tracking. In Proceedings of the IEEE International Conference on Computer Vision 2011, Barcelona, Spain, 6–13 November 2011; pp. 1323–1330.

25. Grabner, H.; Grabner, M.; Bischof, H. Real-time tracking via online boosting. In Proceedings of the British Machine Vision Conference 2006; Volume 1, pp. 47–56.

26. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2005, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

27. Danelljan, M.; Khan, F.S.; Felsberg, M.; van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2014, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

28. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

29. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv, 2014; arXiv:1409.1556.

30. Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Proceedings of the European Conference on Computer Vision 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 472–488.

31. Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2013, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

32. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

33. Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

34. Wang, M.; Liu, Y.; Huang, Z. Large Margin Object Tracking with Circulant Feature Maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 4800–4808.

35. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Coloring Channel Representations for Visual Tracking. In Proceedings of the Scandinavian Conference on Image Analysis; Springer International Publishing: New York, NY, USA, 2015; pp. 117–129.

36. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Convolutional Features for Correlation Filter Based Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision Workshops 2015, Santiago, Chile, 7–13 December 2015; pp. 621–629.

37. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.A.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

38. Berlin, B.; Kay, P. Basic Color Terms: Their Universality and Evolution; University of California Press: Berkeley, CA, USA, 1969; Volume 6, p. 151.

39. Khan, F.S.; Anwer, R.M.; van de Weijer, J.; Bagdanov, A.; Lopez, A.; Felsberg, M. Coloring action recognition in still images. Int. J. Comput. Vis. 2013, 105, 205–221.

40. Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust Tracking via Multiple Experts Using Entropy Minimization. In Proceedings of the European Conference on Computer Vision 2014; Springer: Cham, Switzerland, 2014; pp. 188–203.

41. Oron, S.; Bar-Hillel, A.; Levi, D.; Avidan, S. Locally Orderless Tracking. Int. J. Comput. Vis. 2015, 111, 213–228.

42. Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescapé, A. Multi-classification approaches for classifying mobile app traffic. J. Netw. Comput. Appl. 2018, 103, 131–145.

43. Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescapé, A. Traffic Classification of Mobile Apps through Multi-classification. In Proceedings of the 2017 IEEE Global Communications Conference (GLOBECOM 2017); IEEE: Piscataway, NJ, USA, 2017.

44. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017; IEEE: Piscataway, NJ, USA, 2017.

45. Liang, P.; Blasch, E.; Ling, H. Encoding Color Information for Visual Tracking: Algorithms and Benchmark. IEEE Trans. Image Process. 2015, 24, 5630–5644.

| Tracker | LCT | SAMF | KCF | DSST | CSK | SAMF_CA | DCF_CA | STAPLE_CA | MOSSE_CA | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| Avg. FPS | 21.2 | 18.6 | 212.6 | 28.6 | 266.8 | 40.2 | 90.2 | 29.3 | 123.8 | 31.5 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Liu, P.; Du, Y.; Luo, Y.; Zhang, W. Correlation Tracking via Self-Adaptive Fusion of Multiple Features. Information 2018, 9, 241. https://doi.org/10.3390/info9100241
