Enhancing Privacy-Preserving Network Trace Synthesis Through Latent Diffusion Models

Abstract

1. Introduction

- Data Augmentation: Users generate synthetic traces purely for internal use, to diversify and expand existing network traces and improve their utility.

- Data Release: The synthesized network traces are intended for external dissemination. In this scenario, directly releasing synthetic network traces risks revealing sensitive information from the original dataset. DP dataset synthesis becomes indispensable to ensure that no sensitive patterns, identities, or infrastructure details are inadvertently revealed.

- This paper is the first to explore how diffusion models can enhance the synthesis of network traces. We investigate their potential for addressing the challenges associated with accurately replicating complex network patterns while ensuring scalability and flexibility;

- We enable both internal data augmentation and secure external data sharing within a DP framework, addressing key challenges in balancing data utility and privacy in real-world applications;

- Extensive experiments on five widely used network trace datasets demonstrate that NetSynDM achieves state-of-the-art fidelity and that DPNetSynDM effectively mitigates privacy risks while maintaining higher data quality than existing DP-based synthesis methods.

2. Background

2.1. Network Dataset

- Packet Header: This contains information for each observed packet across both the network (Layer 3) and transport (Layer 4) layers. Included fields typically comprise source/destination IPs (srcip, dstip), source/destination ports (srcport, dstport), the transport protocol (proto, such as TCP, UDP, and ICMP), the capture timestamp (ts), and the packet size (pkt_len), along with additional fields like checksum (chksum) and a dataset label (label).

- Flow Header: A network flow is identified by its five-tuple 〈srcip, dstip, srcport, dstport, proto〉 [21]. Its associated header includes the timestamp of the first packet (ts), the total flow duration (td), the packet count (pkt), the cumulative byte count (byt), and the assigned label (label).
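The relationship between the two header types can be illustrated with a small sketch that aggregates packet-header records into flow-header records keyed by the five-tuple. It is a hypothetical helper, not the preprocessing used by NetSynDM: it assumes each packet is a Python dict with the field names listed above, copies the flow label from the first packet, and applies no inactivity timeout, whereas real flow exporters usually split long-lived five-tuples into several flows.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    """Flow-header fields from Section 2.1 (hypothetical container)."""
    ts: float   # timestamp of the first packet
    td: float   # duration: last packet ts minus first packet ts
    pkt: int    # packet count
    byt: int    # cumulative byte count
    label: str  # label copied from the first packet (assumption)


def packets_to_flows(packets):
    """Aggregate packet-header dicts into Flow records keyed by five-tuple.

    No inactivity timeout is applied: every packet that shares a
    five-tuple is merged into the same flow.
    """
    flows = {}
    # Process packets in time order so ts is the first packet's timestamp.
    for p in sorted(packets, key=lambda p: p["ts"]):
        key = (p["srcip"], p["dstip"], p["srcport"], p["dstport"], p["proto"])
        if key not in flows:
            flows[key] = Flow(ts=p["ts"], td=0.0, pkt=0, byt=0, label=p["label"])
        flow = flows[key]
        flow.pkt += 1
        flow.byt += p["pkt_len"]
        flow.td = p["ts"] - flow.ts
    return flows
```

For example, two TCP packets that share a five-tuple (60 and 1,500 bytes, 0.5 s apart) collapse into one flow with pkt = 2, byt = 1560, and td = 0.5.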

2.2. Privacy Leakage from Network Dataset

2.3. Diffusion Models

2.3.1. Standard Diffusion Models

2.3.2. Latent Diffusion Models

2.4. Differential Privacy

3. Real-World Scenarios

- Data Expansion: Data expansion addresses data scarcity and the rarity of certain events in the training data. When the training dataset is insufficient, the model's generalization ability is severely impacted [43], leading to overfitting or prediction bias. Data expansion mitigates these issues by increasing the size, coverage, or diversity of the dataset, which is especially important when data collection is difficult or expensive. In the network traffic domain, generative approaches can be used for data expansion, producing synthetic traces that improve generalization, support rare-event modeling, and enhance the robustness of downstream predictive models [1].

- Data Release: As privacy regulations become more stringent, privacy-preserving data release has become particularly important for ensuring that data sharing does not violate privacy protection laws. Releasing raw data can lead to personal privacy leaks or legal violations [44]. Applying DP techniques or releasing synthetic data instead enables data sharing and analysis for research, collaboration, or commercial purposes without exposing sensitive information [45]. This is crucial for complying with privacy regulations, protecting user privacy, and promoting data sharing; in this way, data release helps resolve the conflict between data sharing and privacy protection.

4. Methodology

4.1. Motivation and Workflow

4.2. Preprocessing

- IP (srcip, dstip): IPs with low frequency are aggregated using the /30 prefix.

- Port (srcport, dstport): Well-known ports (below 1024) are kept as individual values, while all other ports are grouped into bins of width 10.

- Categorical attributes (e.g., proto, label): These are left unchanged due to their limited domain sizes.

- Numerical attributes (pkt, byt, td): These values are discretized on a logarithmic scale, which reduces the number of bins compared with linear segmentation.

- Timestamp (ts): A separate strategy is adopted for timestamps, as discussed later in Section 4.3.
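The binning rules above can be sketched as follows. The frequency threshold `min_count`, the use of base 2 for the logarithm, and representing a port bin by its lower edge are illustrative assumptions that the text does not specify; timestamps are deliberately left out because they are handled separately.

```python
import ipaddress
import math
from collections import Counter


def aggregate_ips(ips, min_count=2):
    """Replace IPs seen fewer than min_count times by their /30 prefix.

    min_count is a hypothetical threshold chosen for illustration.
    """
    counts = Counter(ips)
    out = []
    for ip in ips:
        if counts[ip] >= min_count:
            out.append(ip)  # frequent IP: kept verbatim
        else:
            # strict=False masks the host bits, e.g. 10.0.0.5 -> 10.0.0.4/30
            out.append(str(ipaddress.ip_network(f"{ip}/30", strict=False)))
    return out


def bin_port(port):
    """Keep well-known ports (< 1024); bucket the rest in steps of 10."""
    return port if port < 1024 else (port // 10) * 10


def log_bin(value):
    """Map a non-negative pkt, byt or td value to a base-2 log bin index."""
    return int(math.floor(math.log2(value + 1)))
```

With this choice, `log_bin` maps every byte count from 1,023 to 2,046 to bin 10, whereas unit-width linear bins would need 1,024 separate bins for the same range.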

4.3. NetSynDM: Highly Efficient Network Trace Synthesis

Algorithm 1: The Workflow of NetSynDM

4.4. DPNetSynDM: Privacy-Preserving for NetSynDM

- DP during Training: Gradients are clipped to a maximum ℓ2 norm C (the clipping norm) and then perturbed with calibrated Gaussian noise.

- DP during Data Synthesis: Additional noise is injected into the embeddings during the reverse diffusion process.
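Gradient clipping and noise injection during training follow the DP-SGD recipe of Abadi et al. The minimal NumPy sketch below shows one aggregation step under stated assumptions: per-example gradients are already available as rows of a matrix, the noise standard deviation is `noise_multiplier` × C (`noise_multiplier` is a hypothetical parameter name), and the noisy sum is averaged over the batch. It covers only the training-time mechanism, not the embedding noise added during synthesis.

```python
import numpy as np


def dp_sgd_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """One DP-SGD aggregation step (sketch in the style of Abadi et al.).

    per_example_grads: array of shape (batch_size, num_params).
    Each row is rescaled so that its L2 norm is at most clip_norm (C),
    the rows are summed, Gaussian noise with std noise_multiplier * C
    is added, and the result is averaged over the batch.
    """
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # min(1, C / ||g||): rows already inside the C-ball are left unchanged
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=clipped.shape[1])
    return (clipped.sum(axis=0) + noise) / per_example_grads.shape[0]
```

In practice, libraries such as Opacus compute per-sample gradients and perform this clipping and noising inside the optimizer step.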

Algorithm 2: The Workflow of DPNetSynDM

4.5. Model Tuning and Training

4.6. Privacy Budget Allocation

- Preprocessing stage: This included tokenization, binning, and embedding initialization. Although frequency-based binning was applied, it only used global frequency counts derived from the raw dataset and did not access individual user-level records. All operations were deterministic and non-interactive, meaning that they did not adapt based on input data or model feedback. Therefore, no privacy budget was consumed at this stage. Furthermore, the generative model did not access raw data directly, and all DP guarantees were enforced during training. This design was aligned with standard DP-compliant pipelines, where static data preparation steps are not counted toward privacy cost.

- Model training stage: The entire privacy budget was consumed during training. We employed the DP-SGD optimizer with fixed gradient clipping and calibrated Gaussian noise injection. The cumulative privacy loss was computed using the Rényi Differential Privacy (RDP) accountant [42].

- Data synthesis stage: No additional privacy cost was incurred during data generation, as this step relied solely on the trained model. According to the post-processing invariance property of DP, this stage did not contribute to further privacy leakage.
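To illustrate how an RDP accountant turns a noise multiplier and a number of training steps into an (ε, δ) guarantee, the sketch below composes the Rényi DP of the Gaussian mechanism, α/(2σ²) per step, over T steps and converts the result with ε = min over α of [T·α/(2σ²) + log(1/δ)/(α − 1)] (Mironov, 2017). It is a simplified assumption: it ignores the privacy amplification from mini-batch subsampling that practical DP-SGD accountants (e.g., the one in Opacus) exploit, so the ε it reports is a valid but loose upper bound rather than the value obtained in the experiments, and the grid of orders is arbitrary.

```python
import math


def gaussian_rdp(alpha, noise_multiplier, steps):
    """RDP at order alpha of `steps` composed Gaussian mechanisms.

    Each step has L2 sensitivity C and noise std noise_multiplier * C,
    giving alpha / (2 * noise_multiplier**2); RDP composes additively.
    Subsampling amplification is ignored.
    """
    return steps * alpha / (2.0 * noise_multiplier ** 2)


def rdp_to_dp(noise_multiplier, steps, delta, orders=None):
    """Return (epsilon, best_order) for (epsilon, delta)-DP.

    Uses epsilon = rdp(alpha) + log(1/delta) / (alpha - 1), minimized
    over a fixed grid of candidate orders alpha > 1.
    """
    if orders is None:
        orders = [1.25, 1.5, 2, 3, 4, 5, 6, 8, 10, 16, 32, 64, 128, 256]
    candidates = (
        (gaussian_rdp(a, noise_multiplier, steps) + math.log(1.0 / delta) / (a - 1.0), a)
        for a in orders
    )
    return min(candidates)
```

With σ = 10, a single step, and δ = 10⁻⁵, this sketch returns ε ≈ 0.503 at order α = 64; more steps increase ε, reflecting cumulative privacy loss.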

5. Experimental Settings

5.1. Datasets

5.2. Baselines

- CTGAN is a no-DP synthesis algorithm that can be applied to network traffic data. It leverages GANs to learn the distributions of network traffic data. Throughout the training process, methods like conditional generation and the use of Wasserstein loss [50] are adopted to improve the realism and variety of generated traffic data.

- TVAE addresses the challenges of non-Gaussian continuous distributions in network traffic data by applying mode-specific normalization, thereby generating more accurate and realistic synthetic traffic data.

- GReaT is an LLM-based data synthesis method that can be adapted to network traffic data by converting each record's fields into LLM-friendly textual representations before generating synthetic data.

- NetShare uses GANs to learn generative models that automatically generate synthetic packets under DP.

- NetDPSyn is a non-parametric DP synthesizer that iteratively updates the synthetic dataset to align with the target noisy marginals.
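As an illustration of the textual encoding that GReaT relies on, the sketch below serializes a network record into comma-separated "name is value" clauses, optionally in a random clause order (GReaT permutes feature order during fine-tuning), and parses generated text back into fields. The function names are hypothetical, the LLM fine-tuning and sampling steps are omitted, and the naive parser assumes that values contain neither ", " nor " is ".

```python
import random


def row_to_text(row, rng=None):
    """Serialize a record as 'name is value' clauses, GReaT style.

    If rng (a random.Random) is given, the clause order is shuffled,
    mirroring GReaT's random feature-order permutation.
    """
    items = list(row.items())
    if rng is not None:
        rng.shuffle(items)
    return ", ".join(f"{name} is {value}" for name, value in items)


def text_to_row(text):
    """Parse 'name is value' clauses back into a dict of strings."""
    row = {}
    for clause in text.split(", "):
        name, sep, value = clause.partition(" is ")
        if sep:  # skip malformed clauses that lack ' is '
            row[name.strip()] = value.strip()
    return row
```

For example, the flow record {srcip: 10.0.0.1, dstport: 443, proto: TCP} becomes "srcip is 10.0.0.1, dstport is 443, proto is TCP"; parsed values come back as strings and need type conversion.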

5.3. Implementations

5.4. Experimental Environment

5.5. Computational Cost Considerations

6. Results Analysis

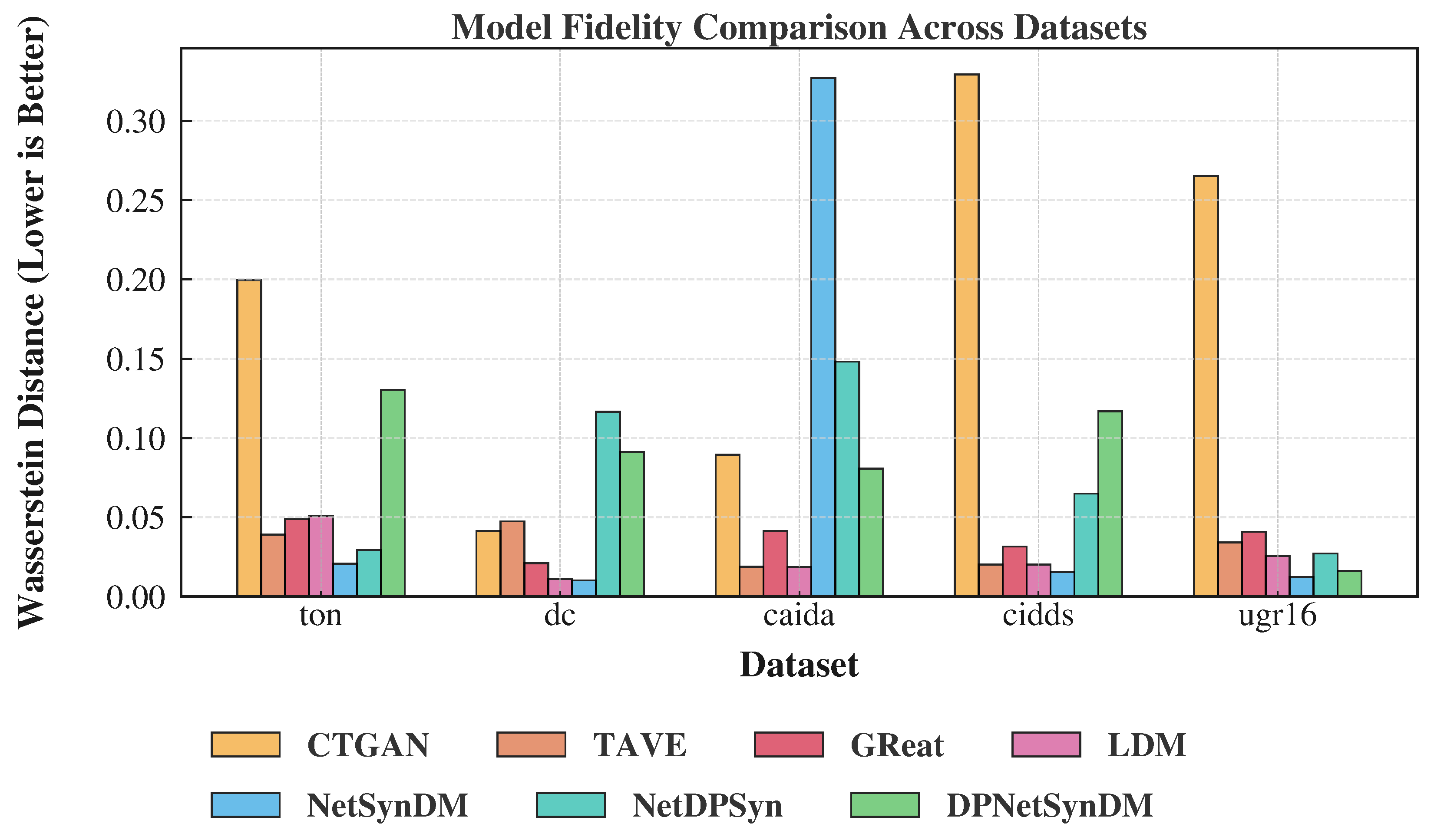

6.1. Fidelity Evaluation

6.2. Privacy Evaluation

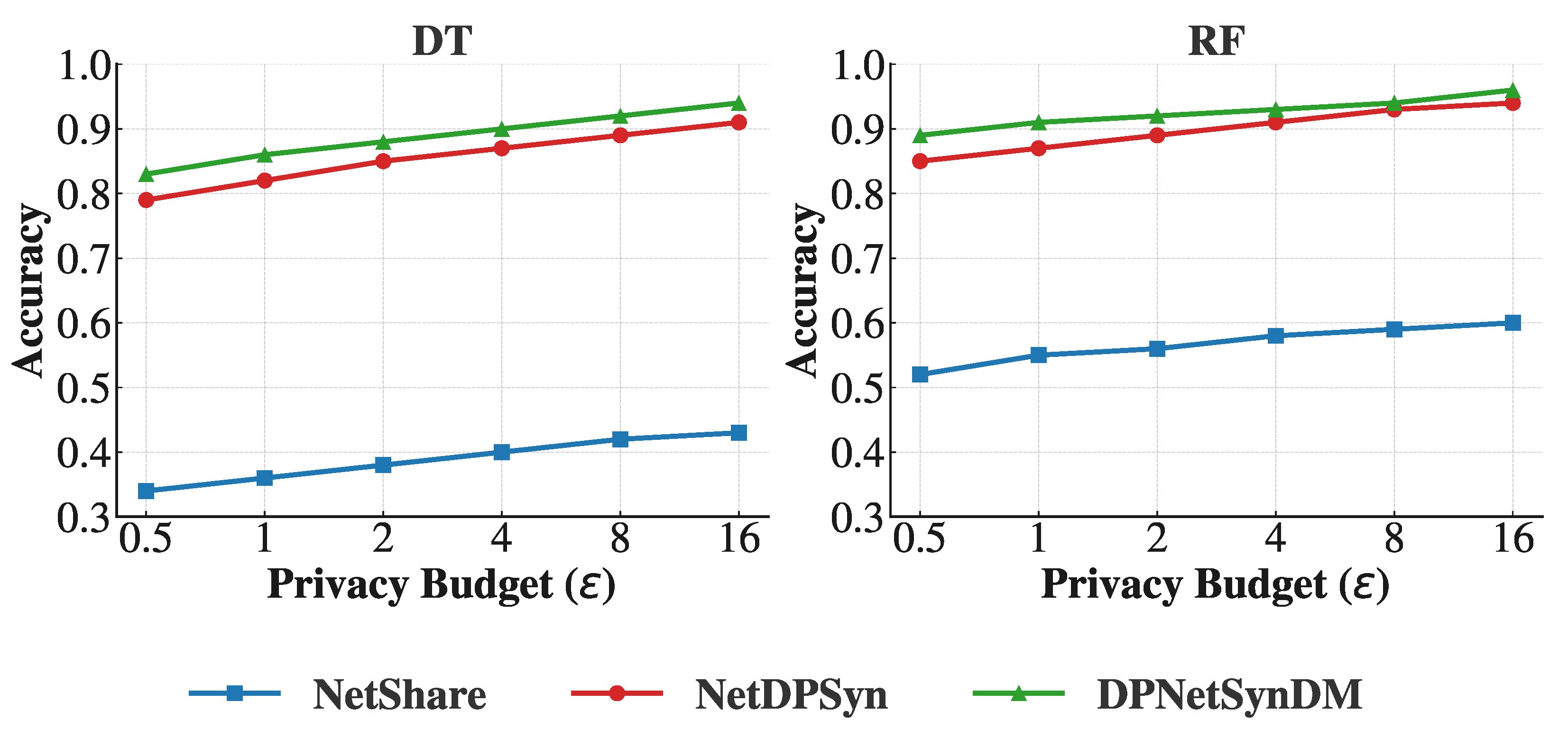

6.3. Utility Evaluation

7. Discussion

8. Related Works

8.1. Network Data Synthesis

8.2. Privacy Leakage for Network Dataset

8.3. DP Dataset Synthesis

8.4. DP for Network Communication

9. Conclusions

- Hyperparameter tuning is critical for maximizing the performance of both no-DP and DP synthesizers. Optimizing model configurations can substantially improve fidelity and reduce query errors, particularly in deep generative models.

- Statistical methods exhibit stable privacy performance, making them preferable for applications where privacy is the highest priority. LDM, for instance, achieves consistent utility with low membership disclosure risks, positioning it as a reliable choice for privacy-focused settings.

- Diffusion models strike a balance between fidelity and privacy, with DPNetSynDM demonstrating the potential of integrating DP mechanisms with diffusion-based synthesis. While its fidelity lags behind that of no-DP models, its strong privacy guarantees make it a promising candidate for secure data synthesis.

- Deep generative models offer flexibility and adaptability for task-specific applications. Methods such as TVAE and GReaT show superior generalization capabilities, making them well suited for machine learning-driven network analysis and other data-intensive tasks.

- Adaptive privacy budget allocation: Future research may explore dynamically assigning differential privacy budgets based on feature sensitivity or task-specific utility requirements. This approach aims to preserve data fidelity while maintaining strong privacy guarantees.

- Domain-specific synthesis strategies: Tailoring network trace generation methods for specific domains, such as healthcare, industrial control systems, or the Internet of Things (IoT), can leverage domain knowledge and specialized data structures to enhance synthesis quality and downstream performance.

- Cross-institution collaboration mechanisms: Facilitating collaborative analysis and federated learning across institutions using DP-synthesized data—without sharing raw data—remains a key challenge worthy of further investigation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jiang, X.; Liu, S.; Gember-Jacobson, A.; Schmitt, P.; Bronzino, F.; Feamster, N. Generative, high-fidelity network traces. In Proceedings of the 22nd ACM Workshop on Hot Topics in Networks, Cambridge, MA, USA, 28–29 November 2023; pp. 131–138.

- Lyu, M.; Gharakheili, H.H.; Sivaraman, V. A survey on enterprise network security: Asset behavioral monitoring and distributed attack detection. IEEE Access 2024, 12, 89363–89383.

- Fuentes-García, M.; Camacho, J.; Maciá-Fernández, G. Present and future of network security monitoring. IEEE Access 2021, 9, 112744–112760.

- Devan, M.; Shanmugam, L.; Tomar, M. AI-powered data migration strategies for cloud environments: Techniques, frameworks, and real-world applications. Aust. J. Mach. Learn. Res. Appl. 2021, 1, 79–111.

- Kenyon, A.; Deka, L.; Elizondo, D. Are public intrusion datasets fit for purpose characterising the state of the art in intrusion event datasets. Comput. Secur. 2020, 99, 102022.

- Sun, D.; Chen, J.Q.; Gong, C.; Wang, T.; Li, Z. NetDPSyn: Synthesizing Network Traces under Differential Privacy. In Proceedings of the 2024 ACM on Internet Measurement Conference, Madrid, Spain, 4–6 November 2024; pp. 545–554.

- Ghalebikesabi, S.; Wilde, H.; Jewson, J.; Doucet, A.; Vollmer, S.; Holmes, C. Mitigating statistical bias within differentially private synthetic data. In Proceedings of the Thirty-Eighth Conference on Uncertainty in Artificial Intelligence, Eindhoven, The Netherlands, 1–5 August 2022; PMLR: New York, NY, USA, 2022; pp. 696–705.

- Wei, C.; Li, W.; Chen, G.; Chen, W. DC-SGD: Differentially Private SGD with Dynamic Clipping through Gradient Norm Distribution Estimation. arXiv 2025, arXiv:2503.22988.

- Novado, D.; Cohen, E.; Foster, J. Multi-tier privacy protection for large language models using differential privacy. Authorea 2024.

- Keshun, Y.; Guangqi, Q.; Yingkui, G. Optimizing prior distribution parameters for probabilistic prediction of remaining useful life using deep learning. Reliab. Eng. Syst. Saf. 2024, 242, 109793.

- Saxena, D.; Cao, J. Generative adversarial networks (GANs) challenges, solutions, and future directions. ACM Comput. Surv. (CSUR) 2021, 54, 63.

- Navidan, H.; Moshiri, P.F.; Nabati, M.; Shahbazian, R.; Ghorashi, S.A.; Shah-Mansouri, V.; Windridge, D. Generative Adversarial Networks (GANs) in networking: A comprehensive survey & evaluation. Comput. Netw. 2021, 194, 108149.

- Akkem, Y.; Biswas, S.K.; Varanasi, A. A comprehensive review of synthetic data generation in smart farming by using variational autoencoder and generative adversarial network. Eng. Appl. Artif. Intell. 2024, 131, 107881.

- Liang, S.; Pan, Z.; Liu, W.; Yin, J.; de Rijke, M. A Survey on Variational Autoencoders in Recommender Systems. ACM Comput. Surv. 2024, 56, 268.

- Yin, Y.; Lin, Z.; Jin, M.; Fanti, G.; Sekar, V. Practical GAN-based synthetic IP header trace generation using NetShare. In Proceedings of the ACM SIGCOMM 2022 Conference, Amsterdam, The Netherlands, 22–26 August 2022; pp. 458–472.

- Yang, Z.; Zhan, F.; Liu, K.; Xu, M.; Lu, S. AI-generated images as data source: The dawn of synthetic era. arXiv 2023, arXiv:2310.01830.

- Liu, M.F.; Lyu, S.; Vinaroz, M.; Park, M. Differentially private latent diffusion models. arXiv 2023, arXiv:2305.15759.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Xu, L.; Skoularidou, M.; Cuesta-Infante, A.; Veeramachaneni, K. Modeling tabular data using conditional GAN. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

- Borisov, V.; Seßler, K.; Leemann, T.; Pawelczyk, M.; Kasneci, G. Language models are realistic tabular data generators. arXiv 2022, arXiv:2210.06280.

- Bagnulo, M.; Matthews, P.; van Beijnum, I. Stateful NAT64: Network Address and Protocol Translation from IPv6 Clients to IPv4 Servers; Technical Report; RFC Editor: Marina del Rey, CA, USA, 2011.

- Gruteser, M.; Grunwald, D. Enhancing location privacy in wireless LAN through disposable interface identifiers: A quantitative analysis. In Proceedings of the 1st ACM International Workshop on Wireless Mobile Applications and Services on WLAN Hotspots, San Diego, CA, USA, 19 September 2003; pp. 46–55.

- Jain, S.; Javed, M.; Paxson, V. Towards mining latent client identifiers from network traffic. Proc. Priv. Enhancing Technol. 2016, 2016, 100–114.

- Xu, J.; Fan, J.; Ammar, M.H.; Moon, S.B. Prefix-preserving IP address anonymization: Measurement-based security evaluation and a new cryptography-based scheme. In Proceedings of the 10th IEEE International Conference on Network Protocols, Paris, France, 12–15 November 2002; IEEE: Piscataway, NJ, USA, 2002; pp. 280–289.

- Imana, B.; Korolova, A.; Heidemann, J. Institutional privacy risks in sharing DNS data. In Proceedings of the Applied Networking Research Workshop, Online, 24–30 July 2021; pp. 69–75.

- Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; PMLR: New York, NY, USA, 2015; pp. 2256–2265.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Luo, C. Understanding diffusion models: A unified perspective. arXiv 2022, arXiv:2208.11970.

- Croitoru, F.A.; Hondru, V.; Ionescu, R.T.; Shah, M. Diffusion models in vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10850–10869.

- Yang, L.; Zhang, Z.; Song, Y.; Hong, S.; Xu, R.; Zhao, Y.; Zhang, W.; Cui, B.; Yang, M.H. Diffusion models: A comprehensive survey of methods and applications. ACM Comput. Surv. 2023, 56, 105.

- Li, K.; Gong, C.; Li, Z.; Zhao, Y.; Hou, X.; Wang, T. PrivImage: Differentially Private Synthetic Image Generation using Diffusion Models with Semantic-Aware Pretraining. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 4837–4854.

- Jiang, X.; Liu, S.; Gember-Jacobson, A.; Bhagoji, A.N.; Schmitt, P.; Bronzino, F.; Feamster, N. NetDiffusion: Network data augmentation through protocol-constrained traffic generation. Proc. ACM Meas. Anal. Comput. Syst. 2024, 8, 11.

- Zhang, S.; Li, T.; Jin, D.; Li, Y. NetDiff: A service-guided hierarchical diffusion model for network flow trace generation. Proc. ACM Netw. 2024, 2, 16.

- Chai, H.; Jiang, T.; Yu, L. Diffusion model-based mobile traffic generation with open data for network planning and optimization. In Proceedings of the KDD’24, Barcelona, Spain, 25–29 August 2024; pp. 4828–4838.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends® Theor. Comput. Sci. 2014, 9, 211–407.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography, Proceedings of the Third Theory of Cryptography Conference, New York, NY, USA, 4–7 March 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284.

- Song, S.; Chaudhuri, K.; Sarwate, A.D. Stochastic gradient descent with differentially private updates. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 245–248.

- Du, J.; Li, S.; Chen, X.; Chen, S.; Hong, M. Dynamic differential-privacy preserving SGD. arXiv 2021, arXiv:2111.00173.

- Yousefpour, A.; Shilov, I.; Sablayrolles, A.; Testuggine, D.; Prasad, K.; Malek, M.; Nguyen, J.; Ghosh, S.; Bharadwaj, A.; Zhao, J.; et al. Opacus: User-friendly differential privacy library in PyTorch. arXiv 2021, arXiv:2109.12298.

- Torfi, A.; Fox, E.A.; Reddy, C.K. Differentially private synthetic medical data generation using convolutional GANs. Inf. Sci. 2022, 586, 485–500.

- Mironov, I. Rényi differential privacy. In Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium (CSF), Santa Barbara, CA, USA, 21–25 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 263–275.

- Zhang, Y.; Wen, J.; Yang, G.; He, Z.; Wang, J. Path loss prediction based on machine learning: Principle, method, and data expansion. Appl. Sci. 2019, 9, 1908.

- Wang, J.; Liu, S.; Li, Y. A review of differential privacy in individual data release. Int. J. Distrib. Sens. Netw. 2015, 11, 259682.

- Abay, N.C.; Zhou, Y.; Kantarcioglu, M.; Thuraisingham, B.; Sweeney, L. Privacy preserving synthetic data release using deep learning. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2018, Dublin, Ireland, 10–14 September 2018; Springer: Cham, Switzerland, 2019; pp. 510–526.

- Du, Y.; Li, N. Towards principled assessment of tabular data synthesis algorithms. arXiv 2024, arXiv:2402.06806.

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2623–2631.

- McKenna, R.; Miklau, G.; Sheldon, D. Winning the NIST contest: A scalable and general approach to differentially private synthetic data. arXiv 2021, arXiv:2108.04978.

- Cai, K.; Lei, X.; Wei, J.; Xiao, X. Data synthesis via differentially private Markov random fields. Proc. VLDB Endow. 2021, 14, 2190–2202.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of Wasserstein GANs. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Flamary, R.; Courty, N.; Gramfort, A.; Alaya, M.Z.; Boisbunon, A.; Chambon, S.; Chapel, L.; Corenflos, A.; Fatras, K.; Fournier, N.; et al. POT: Python Optimal Transport. J. Mach. Learn. Res. 2021, 22, 1–8.

- El Emam, K.; Mosquera, L.; Fang, X. Validating a membership disclosure metric for synthetic health data. JAMIA Open 2022, 5, ooac083.

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership Inference Attacks Against Machine Learning Models. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 3–18.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the Advances in Neural Information Processing Systems 31 (NeurIPS 2018), Montreal, QC, Canada, 3–8 December 2018.

- Gorishniy, Y.; Rubachev, I.; Khrulkov, V.; Babenko, A. Revisiting deep learning models for tabular data. Adv. Neural Inf. Process. Syst. 2021, 34, 18932–18943.

- Gong, C.; Yang, Z.; Bai, Y.; He, J.; Shi, J.; Li, K.; Sinha, A.; Xu, B.; Hou, X.; Lo, D.; et al. BAFFLE: Hiding backdoors in offline reinforcement learning datasets. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 2086–2104.

- Gong, C.; Yang, Z.; Bai, Y.; Shi, J.; Sinha, A.; Xu, B.; Lo, D.; Hou, X.; Fan, G. Curiosity-driven and victim-aware adversarial policies. In Proceedings of the 38th Annual Computer Security Applications Conference, Austin, TX, USA, 5–9 December 2022; pp. 186–200.

- Gong, C.; Li, K.; Yao, J.; Wang, T. TrajDeleter: Enabling trajectory forgetting in offline reinforcement learning agents. arXiv 2024, arXiv:2404.12530.

- Assefa, S.A.; Dervovic, D.; Mahfouz, M.; Tillman, R.E.; Reddy, P.; Veloso, M. Generating synthetic data in finance: Opportunities, challenges and pitfalls. In Proceedings of the First ACM International Conference on AI in Finance, New York, NY, USA, 15–16 October 2020; pp. 1–8.

- Zheng, S.; Charoenphakdee, N. Diffusion models for missing value imputation in tabular data. arXiv 2022, arXiv:2210.17128.

- Hernandez, M.; Epelde, G.; Alberdi, A.; Cilla, R.; Rankin, D. Synthetic data generation for tabular health records: A systematic review. Neurocomputing 2022, 493, 28–45.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014.

- Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.

- Liu, T.; Qian, Z.; Berrevoets, J.; van der Schaar, M. GOGGLE: Generative modelling for tabular data by learning relational structure. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

- Hu, Y.; Wu, F.; Li, Q.; Long, Y.; Garrido, G.M.; Ge, C.; Ding, B.; Forsyth, D.; Li, B.; Song, D. SoK: Privacy-preserving data synthesis. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 4696–4713.

- Dockhorn, T.; Cao, T.; Vahdat, A.; Kreis, K. Differentially private diffusion models. arXiv 2022, arXiv:2210.09929.

- Liew, S.P.; Takahashi, T.; Ueno, M. PEARL: Data synthesis via private embeddings and adversarial reconstruction learning. arXiv 2021, arXiv:2106.04590.

- Gong, C.; Li, K.; Lin, Z.; Wang, T. DPImageBench: A Unified Benchmark for Differentially Private Image Synthesis. arXiv 2025, arXiv:2503.14681.

- Li, K.; Gong, C.; Li, X.; Zhao, Y.; Hou, X.; Wang, T. From easy to hard: Building a shortcut for differentially private image synthesis. In Proceedings of the 2025 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 12–15 May 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 3988–4006.

- Cai, K.; Xiao, X.; Cormode, G. PrivLava: Synthesizing relational data with foreign keys under differential privacy. Proc. ACM Manag. Data 2023, 1, 142.

- McKenna, R.; Mullins, B.; Sheldon, D.; Miklau, G. AIM: An adaptive and iterative mechanism for differentially private synthetic data. arXiv 2022, arXiv:2201.12677.

- Jordon, J.; Yoon, J.; Van Der Schaar, M. PATE-GAN: Generating synthetic data with differential privacy guarantees. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Zhang, J.; Cormode, G.; Procopiuc, C.M.; Srivastava, D.; Xiao, X. PrivBayes: Private data release via Bayesian networks. ACM Trans. Database Syst. (TODS) 2017, 42, 25.

- Zhang, Z.; Wang, T.; Li, N.; Honorio, J.; Backes, M.; He, S.; Chen, J.; Zhang, Y. PrivSyn: Differentially private data synthesis. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Vancouver, BC, Canada, 11–13 August 2021; pp. 929–946.

- Yuan, Q.; Zhang, Z.; Du, L.; Chen, M.; Cheng, P.; Sun, M. PrivGraph: Differentially Private Graph Data Publication by Exploiting Community Information. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 3241–3258.

- Frigerio, L.; de Oliveira, A.S.; Gomez, L.; Duverger, P. Differentially private generative adversarial networks for time series, continuous, and discrete open data. In Proceedings of the ICT Systems Security and Privacy Protection: 34th IFIP TC 11 International Conference, SEC 2019, Lisbon, Portugal, 25–27 June 2019; Springer: Cham, Switzerland, 2019; pp. 151–164.

- Du, Y.; Hu, Y.; Zhang, Z.; Fang, Z.; Chen, L.; Zheng, B.; Gao, Y. LDPTrace: Locally differentially private trajectory synthesis. Proc. VLDB Endow. 2023, 16, 1897–1909.

- Wang, H.; Zhang, Z.; Wang, T.; He, S.; Backes, M.; Chen, J.; Zhang, Y. PrivTrace: Differentially Private Trajectory Synthesis by Adaptive Markov Models. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 1649–1666.

- Tang, X.; Shin, R.; Inan, H.A.; Manoel, A.; Mireshghallah, F.; Lin, Z.; Gopi, S.; Kulkarni, J.; Sim, R. Privacy-preserving in-context learning with differentially private few-shot generation. arXiv 2023, arXiv:2309.11765.

- Yue, X.; Inan, H.A.; Li, X.; Kumar, G.; McAnallen, J.; Shajari, H.; Sun, H.; Levitan, D.; Sim, R. Synthetic text generation with differential privacy: A simple and practical recipe. arXiv 2022, arXiv:2210.14348.

- Cheng, A. PAC-GAN: Packet generation of network traffic using generative adversarial networks. In Proceedings of the 2019 IEEE 10th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 17–19 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 728–734.

- Fan, L.; Pokkunuru, A. DPNeT: Differentially private network traffic synthesis with generative adversarial networks. In Proceedings of the IFIP Annual Conference on Data and Applications Security and Privacy, Calgary, AB, Canada, 19–20 July 2021; Springer: Cham, Switzerland, 2021; pp. 3–21.

- Han, L.; Sheng, Y.; Zeng, X. A packet-length-adjustable attention model based on bytes embedding using Flow-WGAN for smart cybersecurity. IEEE Access 2019, 7, 82913–82926.

- Lin, Z.; Jain, A.; Wang, C.; Fanti, G.; Sekar, V. Using GANs for sharing networked time series data: Challenges, initial promise, and open questions. In Proceedings of the ACM Internet Measurement Conference, Online, 27–29 October 2020; pp. 464–483.

- Ring, M.; Schlör, D.; Landes, D.; Hotho, A. Flow-based network traffic generation using generative adversarial networks. Comput. Secur. 2019, 82, 156–172.

- Sivaroopan, N.; Madarasingha, C.; Muramudalige, S.; Jourjon, G.; Jayasumana, A.; Thilakarathna, K. SyNIG: Synthetic network traffic generation through time series imaging. In Proceedings of the 2023 IEEE 48th Conference on Local Computer Networks (LCN), Daytona Beach, FL, USA, 2–5 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–9.

- Wang, P.; Li, S.; Ye, F.; Wang, Z.; Zhang, M. PacketCGAN: Exploratory study of class imbalance for encrypted traffic classification using CGAN. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–7.

- Stadler, T.; Oprisanu, B.; Troncoso, C. Synthetic data–anonymisation groundhog day. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1451–1468.

| Dataset | Records | Attributes | Domain | Label | Type |
|---|---|---|---|---|---|
| TON | 295,497 | 11 | 2 × 10⁶ | type | flow |
| UGR16 | 1,000,000 | 10 | 4 × 10⁶ | type | flow |
| CIDDS | 1,000,000 | 11 | 6 × 10⁶ | type | flow |
| CAIDA | 1,000,000 | 15 | 1 × 10⁷ | flag | packet |
| DC | 1,000,000 | 15 | 1 × 10⁷ | flag | packet |

| Method | Time (hh:mm) | Privacy |
|---|---|---|
| CTGAN | 03:06 | No |
| TVAE | 05:00 | No |
| GReaT | 09:36 | No |
| LDM | 01:30 | No |
| NetSynDM | 01:31 | No |
| NetDPSyn | 00:30 | Yes () |
| DPNetSynDM | 03:30 | Yes () |

| Methods | TON | DC | CAIDA | CIDDS | UGR16 |
|---|---|---|---|---|---|
| CTGAN | | | | | |
| TVAE | | | | | |
| GReaT | | | | | |
| LDM | | | | | |
| NetSynDM | | | | | |
| NetDPSyn | | | | | |
| DPNetSynDM | | | | | |

| Methods | TON | DC | CAIDA | CIDDS | UGR16 |
|---|---|---|---|---|---|
| CTGAN | 0.0264 | 0.0128 | 0.0136 | 0.0159 | 0.0164 |
| TVAE | 0.1211 | 0.0133 | 0.0145 | 0.0143 | 0.0155 |
| GReaT | 0.0346 | 0.0168 | 0.0132 | 0.0212 | 0.0148 |
| LDM | 0.0208 | 0.0132 | 0.0122 | 0.0141 | 0.0146 |
| NetSynDM | 0.0196 | 0.0104 | 0.0118 | 0.0138 | 0.0142 |
| NetDPSyn | 0.0768 | 0.0208 | 0.0226 | 0.0327 | 0.0228 |
| DPNetSynDM | 0.0652 | 0.0184 | 0.0224 | 0.0339 | 0.0186 |

| Methods | TON | DC | CAIDA | CIDDS | UGR16 |
|---|---|---|---|---|---|
| CTGAN | 0.000265 ± 0.000122 | 0.580068 ± 0.025935 | 0.530987 ± 0.014147 | 0.000100 ± 0.000035 | 0.297094 ± 0.005937 |
| TVAE | 0.000797 ± 0.000188 | 0.702786 ± 0.023150 | 0.475315 ± 0.012104 | 0.001135 ± 0.001017 | 0.304603 ± 0.095859 |
| GReaT | 0.085016 ± 0.002554 | 0.082346 ± 0.002658 | 0.084020 ± 0.002714 | 0.087224 ± 0.002212 | 0.08344 ± 0.0024 |
| LDM | 0.000018 ± 0.000012 | 0.108598 ± 0.004408 | 0.076747 ± 0.003395 | 0.000009 ± 0.000004 | 0.1230 ± 0.1063 |
| NetSynDM | 0.000004 ± 0.000004 | 0.222335 ± 0.009213 | 2.207452 ± 0.365110 | 0.000003 ± 0.000002 | 0.2676 ± 0.1254 |
| NetDPSyn | 0.000378 ± 0.000777 | 1.512826 ± 0.148622 | 1.234690 ± 0.143961 | 0.005015 ± 0.000815 | 0.005416 ± 0.000518 |
| DPNetSynDM | 0.000331 ± 0.009779 | 1.422954 ± 0.112818 | 1.357546 ± 0.094102 | 0.004411 ± 0.006152 | 0.003554 ± 0.000216 |

| Methods | TON | DC | CAIDA | CIDDS | UGR16 |
|---|---|---|---|---|---|
| CTGAN | 0.026149 ± 0.002224 | 0.012809 ± 0.001444 | 0.021641 ± 0.002198 | 0.044259 ± 0.004962 | 0.031250 ± 0.004651 |
| TVAE | 0.008266 ± 0.001252 | 0.017962 ± 0.001891 | 0.009775 ± 0.001189 | 0.004328 ± 0.000575 | 0.011067 ± 0.006042 |
| GReaT | 0.009468 ± 0.000664 | 0.005451 ± 0.000432 | 0.010898 ± 0.000810 | 0.034348 ± 0.000426 | 0.006684 ± 0.002112 |
| LDM | 0.007130 ± 0.000951 | 0.003884 ± 0.000326 | 0.004022 ± 0.000430 | 0.002174 ± 0.000317 | 0.0039 ± 0.0023 |
| NetSynDM | 0.003689 ± 0.000390 | 0.003487 ± 0.000342 | 0.082973 ± 0.009303 | 0.002096 ± 0.000162 | 0.0036 ± 0.0019 |
| NetDPSyn | 0.009992 ± 0.001142 | 0.003223 ± 0.004327 | 0.053591 ± 0.010868 | 0.028328 ± 0.004384 | 0.0032 ± 0.0016 |
| DPNetSynDM | 0.007382 ± 0.004230 | 0.008117 ± 0.004310 | 0.041462 ± 0.005987 | 0.022666 ± 0.004780 | 0.0034 ± 0.0021 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, J.-X.; Xu, Y.-H.; Hua, M.; Yu, G.; Zhou, W. Enhancing Privacy-Preserving Network Trace Synthesis Through Latent Diffusion Models. Information 2025, 16, 686. https://doi.org/10.3390/info16080686

Yu J-X, Xu Y-H, Hua M, Yu G, Zhou W. Enhancing Privacy-Preserving Network Trace Synthesis Through Latent Diffusion Models. Information. 2025; 16(8):686. https://doi.org/10.3390/info16080686

Chicago/Turabian Style: Yu, Jin-Xi, Yi-Han Xu, Min Hua, Gang Yu, and Wen Zhou. 2025. "Enhancing Privacy-Preserving Network Trace Synthesis Through Latent Diffusion Models" Information 16, no. 8: 686. https://doi.org/10.3390/info16080686

APA Style: Yu, J.-X., Xu, Y.-H., Hua, M., Yu, G., & Zhou, W. (2025). Enhancing Privacy-Preserving Network Trace Synthesis Through Latent Diffusion Models. Information, 16(8), 686. https://doi.org/10.3390/info16080686