Automated Grading Method of Python Code Submissions Using Large Language Models and Machine Learning

Abstract

1. Introduction

- RQ1: Can the proposed system detect and correct syntactic and logical errors in student code submissions, including non-compilable ones?

- RQ2: Which supervised learning model, automatically selected and evaluated using PyCaret, best replicates human grading in terms of accuracy, reliability, and consistency across both continuous and discrete scoring tasks?

- RQ3: How does the performance of the proposed system compare to recent automated grading approaches, particularly in terms of accuracy, robustness, and generalisation across diverse evaluation metrics?

2. Related Works

2.1. Approaches Based on Static and/or Dynamic Analysis

2.2. Approaches Based on Supervised Machine Learning

2.3. Approaches Based on Large Language Models

2.4. Evaluation Techniques Comparison

3. Materials and Methods

3.1. GPT-4-Turbo

3.2. Automated Model Selection Using PyCaret

3.3. Research Paradigm and Approach

3.4. General Process of Programming Code Correction

- Understanding the intent of the code: Determine what the code to be fixed is meant to do before changing it.

- Error identification: This step involves identifying the different types of errors in the student’s answer, such as syntax errors, execution errors, and logical errors.

- Correcting and modifying the code: This step involves changing the original code (student answer) by rewriting parts, inserting new lines, or deleting parts.

- Validating the new solution: After making the necessary changes, verify that the new code works correctly and that the changes do not introduce new problems.

- Rating the code: This crucial step awards a grade based on the correctness of the answer. The resulting scores distinguish students who have mastered the material from those who need additional support or intervention.

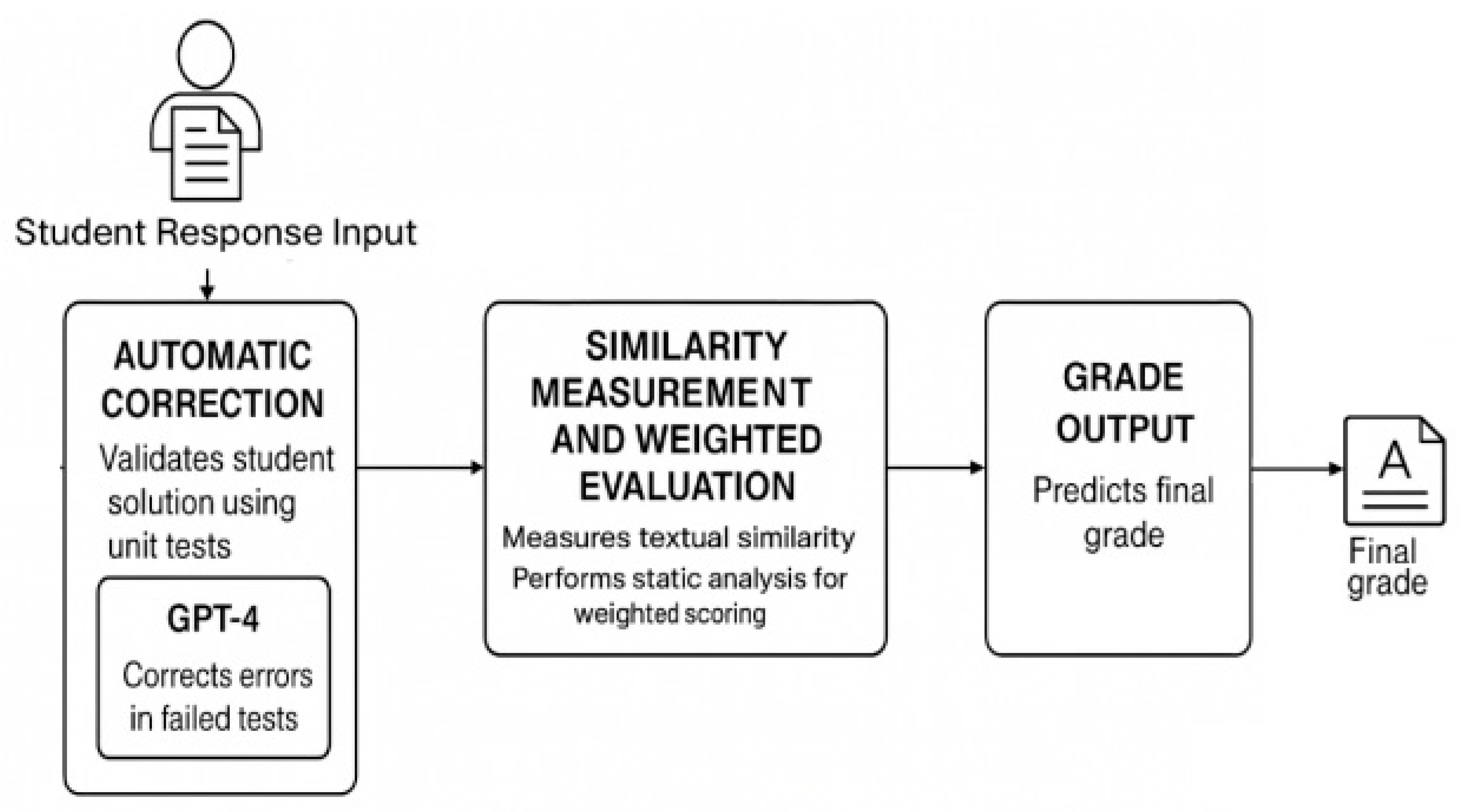
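The error-identification and validation steps above can be approximated with the standard library alone: `ast.parse` catches syntax errors, and running the code against test cases separates execution (runtime) errors from logical ones. The following is a minimal sketch under those assumptions, not the authors' implementation; the function name `identify_error` and the `(args, expected)` test format are hypothetical. Because it calls `exec` on submitted code, a real grader should run it in a sandbox.

```python
import ast


def identify_error(source, tests, func_name):
    """Classify a submission as 'syntax', 'execution', 'logic', or 'none'.

    `tests` is a list of (args_tuple, expected_result) pairs (hypothetical format).
    """
    # Syntax errors: the source cannot even be parsed into an AST.
    try:
        ast.parse(source)
    except SyntaxError:
        return "syntax"

    namespace = {}
    try:
        exec(source, namespace)  # define the submitted function (sandbox this in practice)
        func = namespace[func_name]  # a missing function also counts as an execution error
        for args, expected in tests:
            # Logical errors: the code runs but returns the wrong value.
            if func(*args) != expected:
                return "logic"
    except Exception:
        # Execution errors: anything raised while loading or running the code.
        return "execution"
    return "none"


student = """
def factorial(n):
    result = 0
    for i in range(n):
        result = result + i
    print(result)
"""
# The submission itself prints 10, then this line prints: logic
print(identify_error(student, [((5,), 120)], "factorial"))
```

Ordering the checks this way mirrors the step list: a submission only reaches the logic check once it parses and runs.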

3.5. Grading Workflow Review of the Proposed Model

3.5.1. The Automatic Correction Module

3.5.2. The Similarity Measurement and Weighted Evaluation Module

- Correct lines: unchanged lines that are syntactically and semantically correct.

- Updated lines: modified lines that reflect corrections made to existing code.

- Added lines: new lines introduced in the corrected version to complete or fix the solution.

- Removed lines: lines present only in the original submission that were eliminated during correction.

- Weighted ratio of correct lines: Proportion of unchanged, correct lines, adjusted by their functional significance.

- Weighted ratio of deleted lines: Reflects the impact of removed lines on the overall solution.

- Weighted ratio of inserted lines: Evaluates the contribution of added lines in completing the correct solution.

- Weighted similarity ratio of modified lines: Combines edit distance similarity and line importance, giving more credit to accurate, minimally invasive changes in key code segments.
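One way to derive the four line categories is to diff the original submission against the corrected version: `difflib.SequenceMatcher` opcodes `equal`, `replace`, `insert`, and `delete` map naturally onto correct, updated, added, and removed lines. The sketch below assumes that mapping and normalises every ratio by the total weight of all labelled lines; the `weight` function (uniform by default) stands in for the unspecified "functional significance" of a line and is hypothetical, as are the function names. The exact formulas used in the paper may differ.

```python
import difflib


def classify_lines(original, corrected):
    """Split a submission/correction pair into correct, updated, added and removed lines."""
    labels = {"correct": [], "updated": [], "added": [], "removed": []}
    matcher = difflib.SequenceMatcher(a=original, b=corrected, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            labels["correct"].extend(original[i1:i2])
        elif tag == "delete":
            labels["removed"].extend(original[i1:i2])
        elif tag == "insert":
            labels["added"].extend(corrected[j1:j2])
        else:  # "replace": pair old/new lines; leftovers count as removed or added
            old, new = original[i1:i2], corrected[j1:j2]
            labels["updated"].extend(zip(old, new))
            labels["removed"].extend(old[len(new):])
            labels["added"].extend(new[len(old):])
    return labels


def weighted_features(original, corrected, weight=lambda line: 1.0):
    """Four weighted ratios; `weight` is a hypothetical line-importance function."""
    labels = classify_lines(original, corrected)
    w_correct = sum(weight(line) for line in labels["correct"])
    w_removed = sum(weight(line) for line in labels["removed"])
    w_added = sum(weight(line) for line in labels["added"])
    w_updated = sum(weight(new) for _, new in labels["updated"])
    total = w_correct + w_removed + w_added + w_updated
    if total == 0:
        return {"correct": 0.0, "deleted": 0.0, "inserted": 0.0, "modified_similarity": 0.0}
    # Character-level edit similarity of each modified line, scaled by its weight.
    modified = sum(
        weight(new) * difflib.SequenceMatcher(None, old, new).ratio()
        for old, new in labels["updated"]
    )
    return {
        "correct": w_correct / total,
        "deleted": w_removed / total,
        "inserted": w_added / total,
        "modified_similarity": modified / total,
    }


student = ["def factorial(n):", "    result = 0", "    for i in range(n):",
           "        result = result + i", "    print(result)"]
corrected = ["def factorial(n):", "    result = 1", "    for i in range(1, n + 1):",
             "        result *= i", "    return result"]
print(weighted_features(student, corrected))
```

On the factorial example, only the signature survives unchanged, so one fifth of the (uniform) weight is "correct" and the remaining four lines are scored through their edit similarity.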

3.5.3. The Grade Output Module

3.5.4. Model Training and Dataset Preparation

3.5.5. Human Grading

Reference solution:

```python
def factorial(n):
    result = 1
    for i in range(1, n + 1):
        result *= i
    return result
```

Student submission, with the role and error type of each line annotated:

```python
def factorial(n):  # correct
    result = 0  # logic (incorrect initialization)
    for i in range(n):  # logic (off-by-one error)
        result = result + i  # logic (incorrect operation)
    print(result)  # output (misused: should return)
```
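A purely test-based grader would give this submission no credit at all, because the function returns `None` for every input, even though its structure is close to the reference. The sketch below illustrates that gap by comparing both versions on a few inputs; `pass_rate` and the chosen inputs are hypothetical and only meant to show why line-level judgement adds information that pass/fail testing lacks.

```python
import contextlib
import io


def factorial_reference(n):
    result = 1
    for i in range(1, n + 1):
        result *= i
    return result


def factorial_student(n):
    result = 0
    for i in range(n):
        result = result + i
    print(result)  # no return statement, so the function returns None


def pass_rate(candidate, reference, inputs):
    """Fraction of inputs on which `candidate` returns the same value as `reference`."""
    passed = 0
    for n in inputs:
        with contextlib.redirect_stdout(io.StringIO()):  # hide the student's print() output
            got = candidate(n)
        if got == reference(n):
            passed += 1
    return passed / len(inputs)


print(pass_rate(factorial_reference, factorial_reference, [0, 1, 5]))  # 1.0
print(pass_rate(factorial_student, factorial_reference, [0, 1, 5]))    # 0.0
```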

4. Results

4.1. Correction of the Student Code

4.2. Predicting Scores of the Student Code Using PyCaret

4.2.1. Regression Results

4.2.2. Classification Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Tseng, C.Y.; Cheng, T.H.; Chang, C.H. A Novel Approach to Boosting Programming Self-Efficacy: Issue-Based Teaching for Non-CS Undergraduates in Interdisciplinary Education. Information 2024, 15, 820.

- [2] Messer, M.; Brown, N.C.; Kölling, M.; Shi, M. Automated grading and feedback tools for programming education: A systematic review. ACM Trans. Comput. Educ. 2024, 24, 1–43.

- [3] Guskey, T.R. Addressing inconsistencies in grading practices. Phi Delta Kappan 2024, 105, 52–57.

- [4] Gamage, D.; Staubitz, T.; Whiting, M. Peer assessment in MOOCs: Systematic literature review. Distance Educ. 2021, 42, 268–289.

- [5] Borade, J.G.; Netak, L.D. Automated grading of essays: A review. In Intelligent Human Computer Interaction, Proceedings of the 12th International Conference, IHCI 2020, Daegu, Republic of Korea, 24–26 November 2020; Part I; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; Volume 12, pp. 238–249.

- [6] Tetteh, D.J.K.; Okai, B.P.K.; Beatrice, A.N. VisioMark: An AI-Powered Multiple-Choice Sheet Grading System; Technical Report, no. 456; Kwame Nkrumah University of Science and Technology, Department of Computer Engineering: Kumasi, Ghana, 2023.

- [7] Zhu, X.; Wu, H.; Zhang, L. Automatic short-answer grading via BERT-based deep neural networks. IEEE Trans. Learn. Technol. 2022, 15, 364–375.

- [8] Bonthu, S.; Sree, S.R.; Prasad, M.K. Improving the performance of automatic short answer grading using transfer learning and augmentation. Eng. Appl. Artif. Intell. 2023, 123, 106292.

- [9] Mahdaoui, M.; Nouh, S.; Alaoui, M.E.; Rachdi, M. Semi code writing intelligent tutoring system for learning python. J. Eng. Sci. Technol. 2023, 18, 2548–2560.

- [10] Liu, X.; Wang, S.; Wang, P.; Wu, D. Automatic grading of programming assignments: An approach based on formal semantics. In Software Engineering Education and Training, ICSE-SEET 2019, Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering, Montréal, QC, Canada, 25–31 May 2019; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019; pp. 126–137.

- [11] Verma, A.; Udhayanan, P.; Shankar, R.M.; Kn, N.; Chakrabarti, S.K. Source-code similarity measurement: Syntax tree fingerprinting for automated evaluation. In Proceedings of the AIMLSystems 2021: The First International Conference on AI-ML-Systems, Bangalore, India, 21–23 October 2021; pp. 1–7.

- [12] Cepelis, K. The Automation of Grading Programming Exams in Computer Science Education. Bachelor’s Thesis, University of Twente, Enschede, The Netherlands, 2024.

- [13] de Souza, F.R.; de Assis Zampirolli, F.; Kobayashi, G. Convolutional Neural Network Applied to Code Assignment Grading. In Proceedings of the 11th International Conference on Computer Supported Education (CSEDU 2019), Heraklion, Greece, 2–4 May 2019; SCITEPRESS: Setúbal, Portugal, 2019; pp. 62–69.

- [14] Yousef, M.; Mohamed, K.; Medhat, W.; Mohamed, E.H.; Khoriba, G.; Arafa, T. BeGrading: Large language models for enhanced feedback in programming education. Neural Comput. Appl. 2025, 37, 1027–1040.

- [15] Akyash, M.; Azar, K.Z.; Kamali, H.M. StepGrade: Grading Programming Assignments with Context-Aware LLMs. arXiv 2025, arXiv:2503.20851.

- [16] Tseng, E.Q.; Huang, P.C.; Hsu, C.; Wu, P.Y.; Ku, C.T.; Kang, Y. CodEv: An Automated Grading Framework Leveraging Large Language Models for Consistent and Constructive Feedback. arXiv 2024, arXiv:2501.10421.

- [17] Mendonça, P.C.; Quintal, F.; Mendonça, F. Evaluating LLMs for Automated Scoring in Formative Assessments. Appl. Sci. 2025, 15, 2787.

- [18] Jukiewicz, M. The future of grading programming assignments in education: The role of ChatGPT in automating the assessment and feedback process. Think. Skills Creat. 2024, 52, 101522.

- [19] OpenAI. New Models and Developer Products Announced at DevDay. 6 November 2023. Available online: https://openai.com/index/new-models-and-developer-products-announced-at-devday/ (accessed on 1 July 2025).

- [20] Westergaard, G.; Erden, U.; Mateo, O.A.; Lampo, S.M.; Akinci, T.C.; Topsakal, O. Time series forecasting utilizing automated machine learning (AutoML): A comparative analysis study on diverse datasets. Information 2024, 15, 39.

- [21] Salman, H.A.; Kalakech, A.; Steiti, A. Random forest algorithm overview. Babylon. J. Mach. Learn. 2024, 2024, 69–79.

- [22] Nalluri, M.; Pentela, M.; Eluri, N.R. A scalable tree boosting system: XGBoost. Int. J. Res. Stud. Sci. Eng. Technol. 2020, 7, 36–51.

- [23] Mangalingam, A.S. An Enhancement of AdaBoost Algorithm Applied in Online Transaction Fraud Detection System. Int. J. Multidiscip. Res. (IJFMR) 2024, 6, 69–79.

- [24] Keuning, H.; Jeuring, J.; Heeren, B. A systematic literature review of automated feedback generation for programming exercises. ACM Trans. Comput. Educ. (TOCE) 2018, 19, 1–43.

- [25] Ihantola, P.; Ahoniemi, T.; Karavirta, V.; Seppälä, O. Review of recent systems for automatic assessment of programming assignments. In Proceedings of the 10th Koli Calling International Conference on Computing Education Research, Koli, Finland, 28–31 October 2010; pp. 86–93.

- [26] Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

| Reference | Scoring Type | Static Analysis | Dynamic Analysis | Machine Learning | LLM |
|---|---|---|---|---|---|
| Verma et al. [11] | Continuous | AST | No | SVM | No |
| Čepelis [12] | Continuous | AST | Unit-test | No | No |
| Souza et al. [13] | Discrete | No | No | CNN | No |
| Yousef et al. [14] | Continuous | No | No | Fine-tuned model | Yes |
| Akyash et al. [15] | Continuous | No | No | No | GPT-4 |
| Tseng et al. [16] | Continuous | No | No | No | GPT-4o, LLaMA, Gemma |
| Mendonça et al. [17] | Continuous | No | No | No | GPT-4 and open-source models |
| Jukiewicz [18] | Discrete | No | No | No | ChatGPT |
| Our System | Discrete and continuous | AST | Unit-test | Random Forest (discrete); Extra Trees Regressor (continuous) | GPT-4-Turbo |

| Line | Role | Error Type | Penalty |
|---|---|---|---|
| 2 | Logic | Incorrect initialization | −10% |
| 3 | Logic | Off-by-one error in range | −10% |
| 4 | Logic | Incorrect operation | −10% |
| 5 | Output | Uses print() instead of return | −5% |
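The penalty table above can be turned into a grade with simple arithmetic. The sketch assumes that each penalty is a percentage of the full mark, that penalties are additive, and that the grade never falls below zero; none of these rules is stated in the table itself, and `grade_from_penalties` is a hypothetical helper. Under these assumptions the annotated factorial submission keeps 100 − (10 + 10 + 10 + 5) = 65% of the marks.

```python
# Penalties from the table: (line, role, error type, penalty in percent of the full mark).
PENALTIES = [
    (2, "Logic", "Incorrect initialization", 10),
    (3, "Logic", "Off-by-one error in range", 10),
    (4, "Logic", "Incorrect operation", 10),
    (5, "Output", "Uses print() instead of return", 5),
]


def grade_from_penalties(penalties, full_score=100):
    """Deduct additive percentage penalties from the full mark, floored at zero (assumed rule)."""
    deducted = sum(penalty for _, _, _, penalty in penalties)
    remaining_pct = max(0, 100 - deducted)
    return full_score * remaining_pct / 100


print(grade_from_penalties(PENALTIES))                 # 65.0
print(grade_from_penalties(PENALTIES, full_score=20))  # 13.0
```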

| Number of Iterations | Successful Repair |
|---|---|
| 1 | 77% |
| 2 | 87% |
| 3 | 87.5% |
| 4 | 87.5% |
| 5 | 88% |
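The repair rate rises from 77% to 87% between the first and second iterations and then levels off (87% to 88% over iterations 2 to 5), which is the usual argument for capping the number of repair attempts. A bounded repair loop of that kind can be sketched as follows. The names `repair_with_budget`, `propose_fix`, and `run_tests` are hypothetical; `propose_fix` stands in for the GPT-4-Turbo request, and passing the latest test feedback into each new request is an assumption, not a documented detail of the system.

```python
from typing import Callable, Optional, Tuple


def repair_with_budget(
    code: str,
    propose_fix: Callable[[str, str], str],
    run_tests: Callable[[str], Tuple[bool, str]],
    max_iterations: int = 5,
) -> Tuple[Optional[str], int]:
    """Request fixes until the tests pass or the iteration budget is exhausted.

    Returns (repaired_code, iterations_used); repaired_code is None on failure.
    """
    ok, feedback = run_tests(code)
    if ok:
        return code, 0  # nothing to repair
    current = code
    for iteration in range(1, max_iterations + 1):
        # Hypothetical stand-in for an LLM request that receives the code and test feedback.
        current = propose_fix(current, feedback)
        ok, feedback = run_tests(current)
        if ok:
            return current, iteration
    return None, max_iterations


# Toy stand-ins: each "fix" appends a character; the tests pass once two fixes are applied.
def fake_fix(code, feedback):
    return code + "+"


def fake_tests(code):
    return code.count("+") >= 2, "still failing"


print(repair_with_budget("x", fake_fix, fake_tests))  # ('x++', 2)
```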

| # | Model | MAE | RMSE | R² |
|---|---|---|---|---|
| 1 | Extra Trees Regressor | 4.43 | 8.36 | 0.83 |
| 2 | Random Forest Regressor | 5.32 | 9.14 | 0.79 |
| 3 | Extreme Gradient Boosting | 4.90 | 9.03 | 0.78 |
| 4 | Gradient Boosting Regressor | 5.56 | 9.15 | 0.76 |
| 5 | AdaBoost Regressor | 6.27 | 10.26 | 0.74 |
| 6 | K Neighbours Regressor | 6.62 | 10.96 | 0.73 |
| 7 | Decision Tree Regressor | 5.55 | 10.29 | 0.73 |
| 8 | Elastic Net | 6.89 | 10.84 | 0.71 |
| 9 | Lasso Least Angle Regression | 7.23 | 11.33 | 0.70 |
| 10 | Lasso Regression | 7.24 | 11.33 | 0.69 |
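The MAE, RMSE, and R² columns follow their standard definitions, which the sketch below computes from scratch on a few invented grades so the table can be read concretely. This is not PyCaret code: PyCaret's `compare_models` reports these metrics averaged over cross-validation folds, and the sample scores here are made up for illustration.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R²) for paired true and predicted grades."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)  # residual sum of squares
    rmse = math.sqrt(ss_res / n)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1 - ss_res / ss_tot
    return mae, rmse, r2


human = [10, 20, 30, 40]      # invented human grades
predicted = [12, 18, 33, 37]  # invented model predictions
mae, rmse, r2 = regression_metrics(human, predicted)
print(f"MAE={mae:.2f} RMSE={rmse:.2f} R2={r2:.3f}")  # MAE=2.50 RMSE=2.55 R2=0.948
```

Because RMSE squares the errors before averaging, it is always at least as large as MAE. A wide gap between the two, such as 8.36 versus 4.43 for the Extra Trees Regressor, suggests that a minority of submissions receive predictions far from the human grade.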

| # | Model | Accuracy | Recall | F1 | Kappa |
|---|---|---|---|---|---|
| 1 | Random Forest Classifier | 0.91 | 0.91 | 0.91 | 0.84 |
| 2 | Gradient Boosting Classifier | 0.90 | 0.90 | 0.90 | 0.82 |
| 3 | Extra Trees Classifier | 0.90 | 0.90 | 0.89 | 0.81 |
| 4 | Light Gradient Boosting Machine | 0.87 | 0.87 | 0.87 | 0.76 |
| 5 | K Neighbours Classifier | 0.86 | 0.86 | 0.85 | 0.73 |
| 6 | Decision Tree Classifier | 0.86 | 0.86 | 0.86 | 0.74 |
| 7 | AdaBoost Classifier | 0.81 | 0.81 | 0.81 | 0.67 |
| 8 | Logistic Regression | 0.80 | 0.80 | 0.78 | 0.61 |
| 9 | Linear Discriminant Analysis | 0.79 | 0.79 | 0.77 | 0.61 |
| 10 | Ridge Classifier | 0.74 | 0.74 | 0.68 | 0.58 |
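The Kappa column measures agreement with the human grades beyond what chance alone would produce; it is presumably Cohen's kappa, which is what PyCaret reports for classification. The sketch below computes Cohen's kappa from scratch and maps it onto the agreement bands of Landis and Koch listed in the references. The function names and the pass/fail labels are invented for illustration.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa between two equal-length label sequences."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' marginal frequencies for each label.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (observed - expected) / (1 - expected)


# Landis and Koch bands: upper bound of each band and its label.
LANDIS_KOCH = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
               (0.80, "substantial"), (1.00, "almost perfect")]


def agreement_band(kappa):
    if kappa < 0:
        return "poor"
    for upper, label in LANDIS_KOCH:
        if kappa <= upper:
            return label
    return "almost perfect"


human = ["pass", "pass", "fail", "fail"]  # invented human grades
model = ["pass", "fail", "fail", "fail"]  # invented model predictions
kappa = cohen_kappa(human, model)
print(kappa, agreement_band(kappa))  # 0.5 moderate
```

On these bands, the Random Forest Classifier's kappa of 0.84 falls in the "almost perfect" range, while the Ridge Classifier's 0.58 is "moderate".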

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mahdaoui, M.; Nouh, S.; El Kasmi Alaoui, M.S.; Kandali, K. Automated Grading Method of Python Code Submissions Using Large Language Models and Machine Learning. Information 2025, 16, 674. https://doi.org/10.3390/info16080674
