From Tools to Creators: A Review on the Development and Application of Artificial Intelligence Music Generation

Abstract

1. Introduction

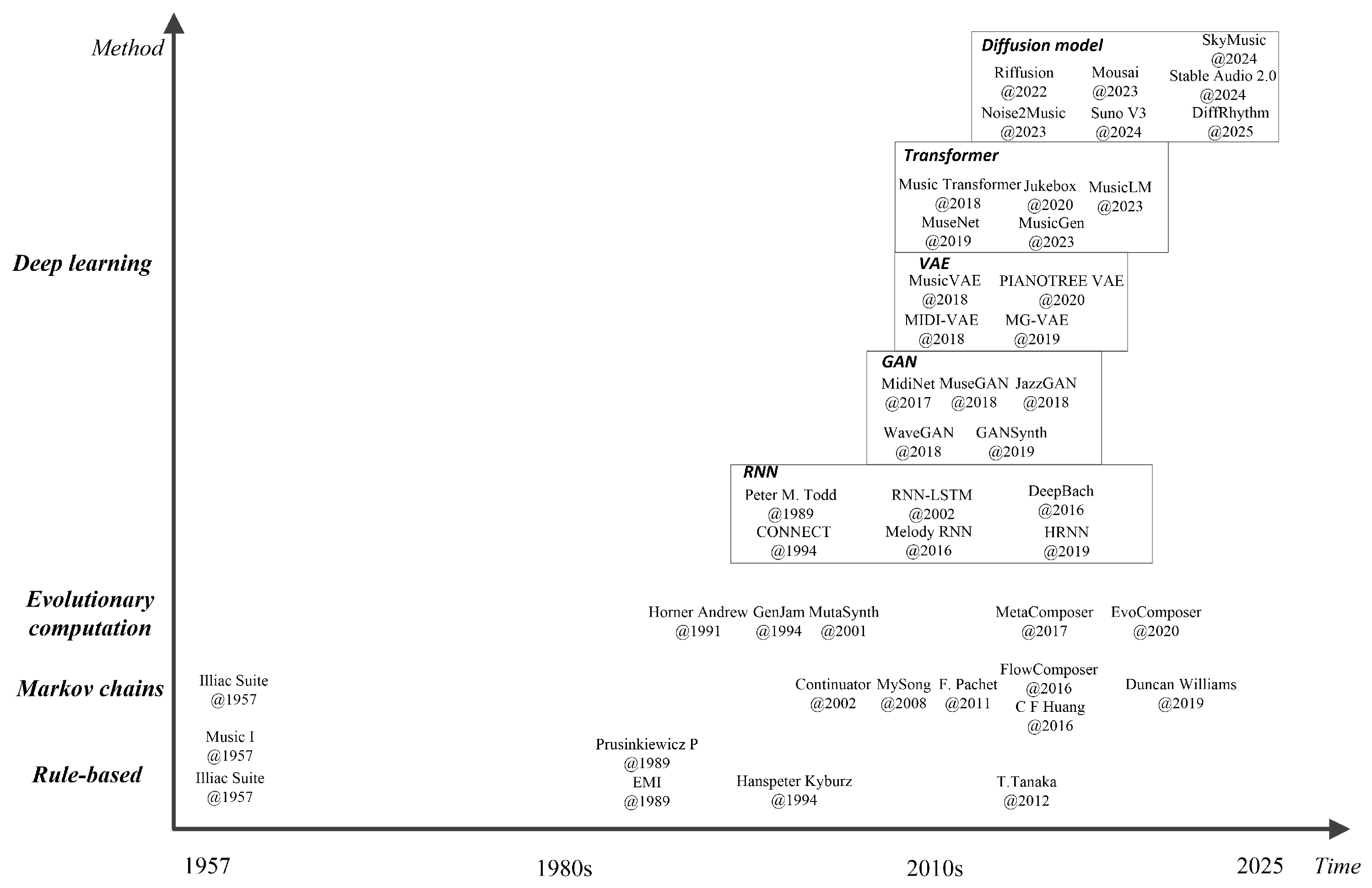

2. Music Generation

2.1. Rule-Based Method

2.2. Markov Chain

2.3. Evolutionary Computation

2.4. Deep Learning

2.4.1. Recurrent Neural Network

2.4.2. Generative Adversarial Network

2.4.3. Variational Autoencoder

2.4.4. Transformer Architecture

2.4.5. Diffusion Model

2.5. Summary of Music Generation Models

3. Applications of Music AI

3.1. Music Generation and Composition

3.2. Music Rehabilitation and Therapy

3.3. Music Education and Learning

3.4. Summary

4. Discussion

4.1. Impact on the Music Industry

4.1.1. Impact on Intellectual Property

4.1.2. Impact on Economies

4.2. Creativity of AI-Generated Music

4.2.1. Creativity Evaluation

4.2.2. Impact on Artistic Creativity and Expression

4.3. Emotional Expressiveness of AI-Generated Music

4.3.1. Impact on Artistic Creativity and Expression

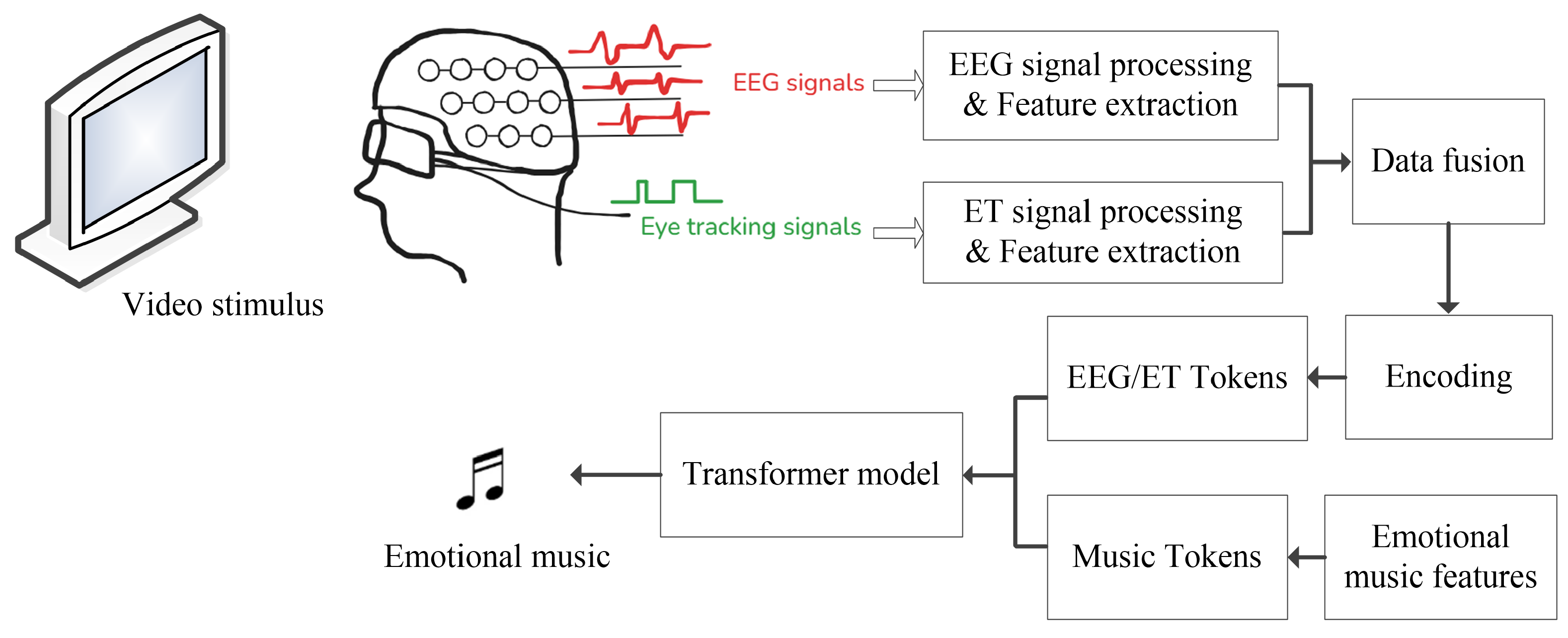

4.3.2. A Multimodal Signal-Driven Emotional Music Generation System

4.4. Other Challenges of AI-Generated Music

4.4.1. Potential Training Bias

4.4.2. Difficulty in Creation

4.5. Limitations of This Survey

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Model | Advantages | Challenges | Scenarios |
|---|---|---|---|
| Rule-based | Comprehensible to humans, applicable to a large amount of music, no training required. | Lacks creativity and flexibility, poor versatility and scalability. | Fixed-style music, music education. |
| Markov chain | Easy to control, allows constraints to be added. | Difficulty capturing long-term structure, tends to repeat melodies from the corpus. | Game background music, repetitive electronic music clips. |
| Evolutionary computation | Adapts well to feedback and evaluation. | Time-consuming, quality depends on the fitness function. | Interactive music, dynamic game soundtracks, improvised music. |
| RNN | Strong at capturing temporal structure in music. | Poor quality on long sequences, over-fitting, lack of diversity. | Short or simple melodies in real time, classical music. |
| GAN | High-quality music, diverse and unique styles in real time. | Training is unstable and demands substantial computing resources and time. | Complex multi-track music, electronic music. |
| VAE | Diverse and complex musical pieces, flexible and controllable, fast training. | Lower quality that lacks sharpness and detail, relatively complicated training. | Diverse and complex musical pieces, interpolation between styles. |
| Transformer | Excellent at capturing long-range dependencies and complex musical structures. | Requires significant computational resources and large amounts of data. | Music with complex arrangements and long sequences, multi-track composition. |
| Diffusion models | High-quality audio, captures complex audio characteristics, stable training. | Long training and generation times, high computational resource requirements. | High-fidelity music, professional audio. |
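
As an illustration of the Markov chain row in the table, the following is a minimal sketch of a first-order Markov melody generator. It is not taken from any of the surveyed systems: the function names `build_transitions` and `generate`, the toy note sequence `corpus`, and the fixed random seed are all assumptions made for this example. Because each note is chosen from the current note alone, the sketch shows why such models are easy to steer yet struggle with long-term structure and tend to echo fragments of the corpus.

```python
import random
from collections import defaultdict


def build_transitions(melody):
    """Map each note to the list of notes observed directly after it."""
    transitions = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return dict(transitions)


def generate(transitions, start, length, seed=None):
    """Sample a melody where each note depends only on the previous one."""
    rng = random.Random(seed)
    note = start
    output = [note]
    for _ in range(length - 1):
        choices = transitions.get(note)
        if not choices:  # no observed successor: stop early
            break
        note = rng.choice(choices)
        output.append(note)
    return output


# Hypothetical toy corpus (note names only, no rhythm).
corpus = ["C4", "D4", "E4", "C4", "E4", "G4", "E4", "D4", "C4"]
table = build_transitions(corpus)
melody = generate(table, start="C4", length=8, seed=0)
print(melody)
```

Every transition in the generated melody already occurs in the corpus, which is one way to see the repetition issue listed under challenges. A constraint, such as forbidding a particular note, could be added by filtering `choices` before sampling.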

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, L.; Yu, Y.; Qin, Y.; Zhang, S. From Tools to Creators: A Review on the Development and Application of Artificial Intelligence Music Generation. Information 2025, 16, 656. https://doi.org/10.3390/info16080656
