Abstract

School dropout in higher education remains a significant global challenge with profound socioeconomic consequences. To address this complex issue, educational institutions increasingly rely on business intelligence (BI) and related predictive analytics, such as machine learning and data mining techniques. This systematic review critically examines the application of BI and predictive analytics for analyzing and preventing student dropout, synthesizing evidence from 230 studies published globally between 1996 and 2025. We collected literature from the Google Scholar and Scopus databases using a comprehensive search strategy, incorporating keywords such as “business intelligence”, “machine learning”, and “big data”. The results highlight a wide range of predictive tools and methodologies, notably data visualization platforms (e.g., Power BI) and algorithms like decision trees, Random Forest, and logistic regression, demonstrating effectiveness in identifying dropout patterns and at-risk students. Common predictive variables included personal, socioeconomic, academic, institutional, and engagement-related factors, reflecting dropout’s multifaceted nature. Critical challenges identified include data privacy regulations (e.g., GDPR and FERPA), limited data integration capabilities, interpretability of advanced models, ethical considerations, and educators’ capacity to leverage BI effectively. Despite these challenges, BI applications significantly enhance institutions’ ability to predict dropout accurately and implement timely, targeted interventions. This review emphasizes the need for ongoing research on integrating ethical AI-driven analytics and scaling BI solutions across diverse educational contexts to reduce dropout rates effectively and sustainably.

1. Introduction

In today’s information-driven society, educational institutions face the challenge of efficiently transforming large volumes of data into actionable insights to address critical problems, such as school dropout. School dropout, particularly in higher education, is a complex phenomenon with severe implications at the individual, social, and economic levels. Factors contributing to dropout range from socioeconomic circumstances and academic performance to institutional policies and emotional support [1,2]. Despite considerable efforts to mitigate dropout, many institutions still struggle due to inadequate predictive tools, delayed interventions, and fragmented data management practices.

In this context, business intelligence (BI) has emerged as a powerful approach to support data-driven decision-making, offering institutions strategic tools for analyzing data to identify patterns, trends, and risks proactively [3]. Originally defined as “concepts and methods to improve business decisions through fact-based support systems” [3], BI now encompasses a wide range of technologies and methodologies, including data warehousing, online analytical processing (OLAP), predictive analytics, and data visualization. These capabilities enable organizations not only to understand historical data but also to anticipate future trends and issues, thus facilitating informed and timely decisions [4,5].

Educational institutions adopting BI tools can significantly improve their ability to predict and prevent dropout by leveraging predictive models and comprehensive data analyses. Specifically, BI allows for the integration of diverse data sources—from academic records and socio-demographic indicators to real-time student engagement data—into cohesive analytical frameworks that help identify at-risk students early and precisely. This integration supports the development of targeted interventions and strategic resource allocation to effectively reduce dropout rates.

Nevertheless, despite growing interest and promising results reported in various studies, the application of BI tools for dropout prediction in education remains fragmented. Many institutions struggle to identify best practices, appropriate methodologies, and robust predictive models suitable for their contexts. Furthermore, the existing literature is often dispersed, lacking comprehensive syntheses or clear guidance on effectively implementing BI approaches for dropout prevention.

Addressing these gaps, this systematic review aims to consolidate and critically analyze the existing literature on the application of BI and related data-driven approaches (specifically machine learning and big data analytics) in predicting and preventing school dropout in higher education. The study seeks to clarify how these tools have been implemented, identify the most effective strategies, and highlight ongoing challenges and opportunities for future research. By tracing the path from general BI concepts to their specific applications in dropout analysis, this review contributes to enhancing institutional capacity to reduce dropout rates and improve educational outcomes globally.

Business Intelligence in the Educational Sector

Business intelligence (BI) provides educational institutions with critical tools and methodologies for the continuous analysis of institutional data, allowing for the proactive identification of trends, opportunities, and risks [6]. By effectively transforming large volumes of diverse data into actionable insights, BI supports better decision-making in various educational operations.

The key benefits of BI for educational institutions include creating a continuous cycle of improvement in which data are analyzed to inform decision-making, subsequently generating new data for ongoing analysis [3]. BI also enables comprehensive, historical views of institutional performance through robust metrics such as Key Performance Indicators (KPIs) and Key Goal Indicators (KGIs). Additionally, BI tools provide detailed yet intuitive access to updated data, facilitating swift and effective decision-making.
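As a minimal illustration of a KPI of the kind mentioned above, the following sketch computes a dropout rate per entry cohort. The record layout, the status labels, and the sample rows are assumptions made for illustration; they are not taken from any reviewed study.

```python
from collections import defaultdict

# Hypothetical enrollment records: (student_id, cohort_year, status).
# The field layout and the status labels are illustrative assumptions.
records = [
    ("s01", 2021, "enrolled"),
    ("s02", 2021, "dropped"),
    ("s03", 2021, "graduated"),
    ("s04", 2021, "dropped"),
    ("s05", 2022, "enrolled"),
    ("s06", 2022, "dropped"),
    ("s07", 2022, "enrolled"),
    ("s08", 2022, "enrolled"),
]


def dropout_rate_by_cohort(rows):
    """Return {cohort: share of students whose status is 'dropped'}."""
    totals = defaultdict(int)
    dropped = defaultdict(int)
    for _student_id, cohort, status in rows:
        totals[cohort] += 1
        if status == "dropped":
            dropped[cohort] += 1
    return {cohort: dropped[cohort] / totals[cohort] for cohort in sorted(totals)}


kpi = dropout_rate_by_cohort(records)
for cohort, rate in kpi.items():
    print(f"cohort {cohort}: dropout rate {rate:.0%}")
```

In a BI setting, an indicator like this would typically be recomputed each term and shown on a dashboard next to other indicators such as graduation rates.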

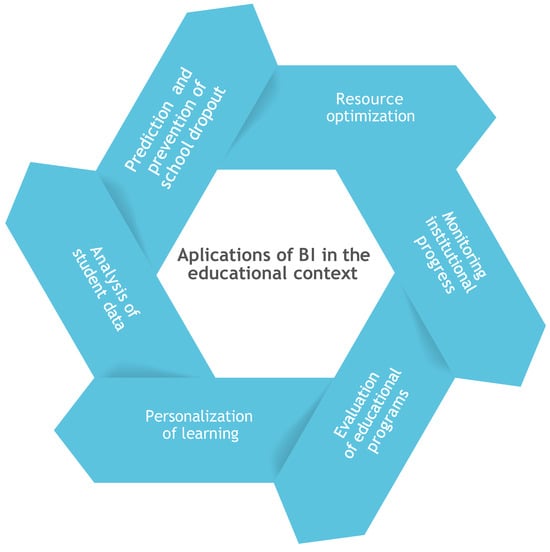

Within educational contexts, implementing BI technologies—such as data warehousing, online analytical processing (OLAP), visual analytics, predictive analytics, dashboards, and data integration through Extract–Transform–Load (ETL) processes—significantly improves institutional responsiveness and efficiency [3,7]. Specifically, as illustrated in Figure 1, these technologies empower institutions to:

Figure 1.

Business intelligence applications in the educational environment.

- Analyze Student Data: Evaluate academic performance, attendance, and progression metrics to proactively identify students at risk, enabling targeted instructional and support interventions.

- Monitor Institutional Performance: Track strategic indicators, including graduation rates, student satisfaction, and employment outcomes, to pinpoint strengths and weaknesses and develop evidence-based improvement strategies.

- Personalize Learning Experiences: Tailor educational content and approaches based on students’ learning patterns, preferences, and challenges, thereby enhancing educational effectiveness and student engagement.

- Optimize Resource Utilization: Analyze the allocation and effectiveness of educational resources such as staff, materials, and technology to maximize educational impact and institutional efficiency.

- Evaluate Educational Programs: Assess program performance comprehensively, identifying successful initiatives and areas needing improvement, thus guiding informed decisions about curricular and operational adjustments.

This systematic review specifically explores how educational institutions leverage BI and related predictive analytics tools, such as machine learning and big data, to address school dropout. By examining global case studies, the review identifies successful strategies, highlights implementation challenges, and outlines opportunities for future research and practice aimed at reducing dropout rates and improving educational outcomes.

2. Materials and Methods

This study was conducted using a systematic literature review approach, following the guidelines proposed by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework [8]. The PRISMA methodology was selected as it ensures transparency, replicability, and rigor in the identification, selection, and analysis of the relevant literature. This systematic approach allows us to comprehensively address our research questions regarding the use of business intelligence and machine learning methods for predicting and preventing school dropout in higher education.

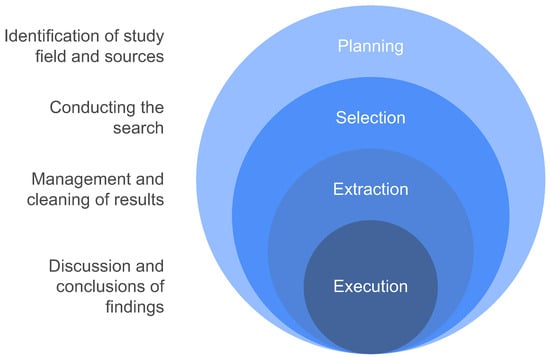

To develop this study, we followed the four stages proposed by Grijalva et al. [9] shown in Figure 2.

Figure 2. Stages followed to conduct the systematic review based on [9].

The following are detailed descriptions of each stage.

2.1. Planning

This stage defines the research questions that guide the study:

- Has business intelligence allowed for the prediction and prevention of school dropouts?

- What are the methodologies and tools for business intelligence that educational institutions have used in their management processes?

- What have been the main contributions of business intelligence to the issue of school dropout?

Information Retrieval

To perform the analysis, we selected two academic databases: Google Scholar and Scopus. Google Scholar was chosen due to its extensive indexing of a wide range of academic outputs, including journal articles, conference proceedings, preprints, theses, dissertations, and patents. This broad scope ensured comprehensive coverage of diverse and emerging literature related to business intelligence, machine learning, and predictive analytics in education. Scopus was selected as a complementary source because of its specialized focus on peer-reviewed literature, providing high-quality academic articles and ensuring methodological rigor in our systematic review process.

The search criteria included scientific articles, conference proceedings, book chapters, and theses containing the keywords presented in Table 1. These databases together offered the most balanced and thorough representation of relevant scholarly work, aligning well with the objectives and scope of this review.

Table 1. Search strings used for retrieving articles and theses from Google Scholar and Scopus databases.

2.2. Data Selection

Articles were selected for this systematic review based on strict inclusion and exclusion criteria shown in Table 2. If a study met the following criteria, it was included: (1) Business intelligence-related tools were used for dropout prediction; and (2) the data were from real-world case studies. The excluded articles were those that did not have a clear BI implementation framework, were solely theoretical in focus, or were not published in English or Spanish. This approach helped prevent the review from including studies with little or no practical relevance to dropout prevention.
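The screening logic described above can be sketched as a simple filter. This is an illustrative sketch only: the `Study` fields, the language codes, and the example records are hypothetical, and the actual screening in this review was carried out against the criteria in Table 2.

```python
from dataclasses import dataclass


@dataclass
class Study:
    title: str
    language: str           # ISO-style code, e.g. "en" or "es" (assumed encoding)
    uses_bi_tools: bool     # BI-related tools applied to dropout prediction
    real_world_data: bool   # data come from a real-world case study
    theoretical_only: bool  # purely theoretical, no implementation


ACCEPTED_LANGUAGES = {"en", "es"}


def screen(study):
    """Return (included, reason) according to the criteria stated in the text."""
    if study.language not in ACCEPTED_LANGUAGES:
        return False, "not in English or Spanish"
    if study.theoretical_only:
        return False, "solely theoretical"
    if not study.uses_bi_tools:
        return False, "no BI tools for dropout prediction"
    if not study.real_world_data:
        return False, "no real-world data"
    return True, "included"


# Hypothetical candidate records, for illustration only.
candidates = [
    Study("Dashboard case study", "en", True, True, False),
    Study("Conceptual framework", "en", True, False, True),
    Study("Estudio de caso", "es", True, True, False),
    Study("Case study in another language", "pt", True, True, False),
]

decisions = {study.title: screen(study) for study in candidates}
included = [title for title, (ok, _reason) in decisions.items() if ok]
print(included)
```

Recording the exclusion reason alongside each decision makes it straightforward to report per-reason counts in a PRISMA flow diagram.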

Table 2. Inclusion and exclusion criteria for article selection.

2.3. Information Extraction

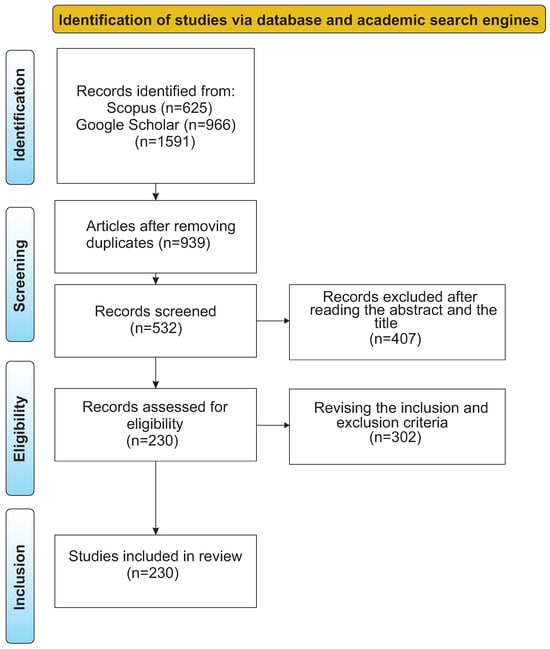

The methodology used for this systematic review is based on the PRISMA Statement [8] (shown in Figure 3), which aims to identify and analyze business intelligence methodologies that have been used as strategies to analyze school dropout.

Figure 3. Process of selecting scientific articles for review.

3. Results

This section integrates and summarizes the main findings from the literature on business intelligence and data-driven approaches to predict and prevent student dropout in higher education. The discussion is organized according to the distribution of research by region (Section 3.1), publication trends over time (Section 3.2), data sources used in the analyzed studies (Section 3.3), the machine learning methods employed (Section 3.4), software tools and platforms utilized (Section 3.5), the general types of research approaches (Section 3.6), the main application objectives of these studies (Section 3.7), and the reported challenges (Section 3.8). Each subsection integrates insights from all the references we identified, highlighting how each study contributes to understanding the school dropout phenomenon and how business intelligence can address it.

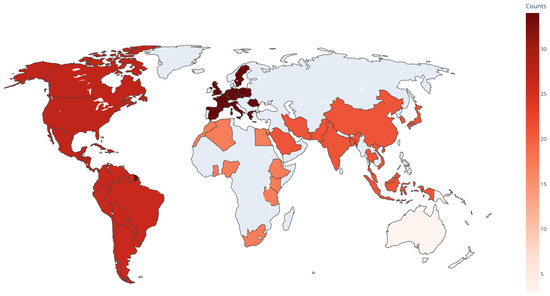

3.1. Global Distribution of Research

A large portion of research on student dropout prediction and prevention using business intelligence originates from countries such as the United States, the United Kingdom, Spain, Brazil, and other parts of Latin America (e.g., Mexico, Colombia, Chile) [10], reflecting a growing global interest in these techniques. For example, multiple studies conducted in the United States explore how analytics can identify at-risk students [11,12,13,14,15,16,17,18,19,20,21,22,23,24]. In Latin America, Brazil has a large body of work on machine learning models for dropout prediction [25,26,27,28,29,30,31,32,33,34,35,36,37,38], and further studies [39,40,41,42,43,44,45,46,47,48,49,50] reflect widespread investigation across the region. The focus on Latin America is further emphasized by customized solutions such as the ADHE Dashboard [51], developed in Colombia to help academic program administrators better visualize the dropout phenomenon, and the multi-department study in Peru [52], which employed machine learning and survival analysis to predict dropout times in various engineering faculties. Additional examples include recent Ecuador-based investigations [53,54], where machine learning aids in the identification of at-risk students in STEM institutions. Other Latin American countries with documented efforts include Chile, where logistic regression and other machine learning models have been tested using public datasets [10]. Increasingly, higher education institutions in Mexico are using predictive analytics to identify at-risk students in large-scale data from multiple groups [50,55,56,57,58,59,60,61].

There is also a notable concentration of publications from Asia, such as those from Saudi Arabia [62,63,64,65,66,67], India [68,69,70,71,72,73,74], China [75,76,77], Taiwan [78], Malaysia [79], Thailand [80], and Japan [81], in conjunction with a wider collection of works that demonstrate a growing interest in Asian contexts [82,83,84,85,86,87,88,89]. For example, Patel et al. [73] focus on a newly created Gujarat dataset (EduDropX) to analyze dropout based on demographic and cultural variables, achieving highly accurate regression-based predictions. In contrast, the work presented in [74] analyzes Kaggle-based data in India to compare random forest and naive Bayes for a balanced dropout classification. Tsai et al. [78] present a case study in Taiwan exploring statistical and deep learning methods for precision education, while Kondo et al. [81] leverage LMS log data in Japan for early detection of at-risk students. Meanwhile, Villegas et al. [54] offer insight into improving student retention in higher education settings through a sustainable approach, reflecting how institutions around the world seek more holistic frameworks for retention, resource optimization, and ethical AI use.

Research in African contexts, though less frequent, is present with Ghana and Tanzania as prime examples [90,91,92], along with Nigeria [93]. Likewise, references from European contexts abound, such as Germany [94,95,96,97,98,99], Finland [100], Spain [101,102,103,104], Portugal [105,106,107], and Italy [108,109,110], each discussing methods to track and forecast student dropout. The Netherlands is represented by [111], which documents a field experiment on early warning systems and the effect of improving individual student counseling. Other publications reflect cross-continental collaborations and meta-analyses [8,112,113,114,115,116]. Africa-based or Africa-related studies also include references from Uganda [117] and data-mining applications in Ghana [118].

An early examination of academic support and monitoring in Latin American contexts also emerges in [119], highlighting how new technologies (artificial intelligence, smart monitors) can significantly improve student retention but require careful integration within local cultures. Furthermore, some universities have begun focusing on designing actionable methods that target specific course pathways, as outlined in [120]. Overall, the scope of publications underscores that the worldwide spread of learning analytics and data-driven insights is not limited to a single region, but rather is a universal pursuit to reduce student dropout rates.

The global distribution of research highlighted above is visually represented in Figure 4, which provides an overview of the geographic concentration of publications focusing on student dropout prediction and prevention through business intelligence and data-driven methods. The map illustrates the number of studies per country, with color intensity corresponding to publication frequency. Countries such as the United States, Brazil, Spain, and the United Kingdom show particularly high research activity, reflecting robust academic and institutional interest. Additionally, notable concentrations in regions like Latin America, Asia, and Europe underscore the worldwide adoption and adaptation of these predictive analytics techniques in diverse educational contexts.

Figure 4. Global distribution of research papers focused on using business intelligence and data-driven techniques for predicting student dropout in higher education. Color intensity indicates the number of publications per country.

3.2. Temporal Distribution of Research

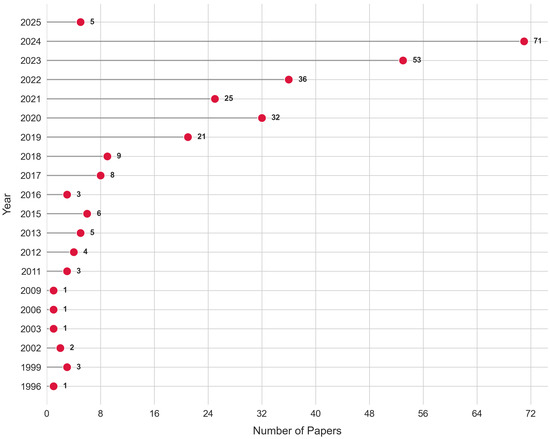

Figure 5 highlights how interest in dropout prediction has expanded considerably since the earlier works of the late 1990s, such as those by Witten [121], Shin [122], and Dietterich [123]. Although earlier approaches were more exploratory, the proliferation of computing power, big data, and machine learning frameworks has led to more sophisticated predictive models. Classic works in the early 2000s demonstrated the feasibility of machine learning for dropout or at-risk detection [124,125,126,127], while mid-2010s studies investigated large-scale analytics in more depth [128,129,130]. From 2019 onward, the growth in publications has accelerated markedly, reflecting a significant increase in the adoption of advanced data-driven solutions and the urgency to reduce dropout rates [105,108,115,131,132,133,134,135].

Figure 5. Temporal distribution of research papers published on predicting student dropout in higher education using business intelligence and machine learning techniques (1996–2025). The number of publications demonstrates rapid growth in interest, particularly from 2019 onward.

Recent studies (2020–2025) exhibit a wide methodological range, including multi-phase predictive modeling [107] and novel data pipelines [136], while also addressing interpretability challenges [120,137]. Works such as [138] explore recommender systems to guide students toward suitable courses and prevent attrition; similarly, Alturki & Alturki [65] emphasize how the increasing adoption of educational data mining from around 2015 onward promoted new lines of inquiry focused on predicting academic achievement and identifying at-risk students. The need for very early detection has propelled investigations into multi-semester data usage [72,139], while deep learning and other neural approaches have gained attention in 2020 publications [84,95]. For example, Tsai et al. [78] show how the combination of big data with deep neural networks can yield 77% precision for identifying dropouts in Taiwan. Similarly, Paul [140] describes how student participation factors are essential for reliable predictions using supervised and unsupervised methods.

Furthermore, Villegas et al. [54] detail recent explorations of integrating AI-based retention strategies into broader institutional sustainability and quality frameworks. The works in [44,141,142] represent intermediate phases in which educational institutions increasingly sought predictive analytics to curb dropout, although with fewer data integration capabilities than are available today. In the very recent period (2020–2025), many works [12,48,57,82,86,143,144] explore or refine deep learning approaches, advanced clustering, or creative feature engineering. Studies such as [145] propose probabilistic machine learning pipelines that quantify uncertainty, helping instructors with structured interventions throughout the semester. Meanwhile, [66,146] demonstrate the value of early-stage predictions in online or hybrid contexts, allowing for timely intervention to keep students engaged.

Several 2023–2025 publications reflect the growing interest in specialized approaches. For example, Mosia [145] uses probabilistic logistic regression in multiple stages to identify at-risk students at different times, while [89] focuses on MOOC dropout modeling with XGBoost. Suaprae et al. [80] discuss an intelligent consulting system equipped with cognitive technology for student counseling, and [93] refines predictive modeling in Nigeria by applying improved data pre-processing to multi-year records. Furthermore, Demartini et al. [147] show how AI-driven dashboards can catalyze acceptance and usage of learning analytics in primary and secondary education, although the insights apply equally to higher education. Awedh and Mueen [67] present a new hybrid approach (LR-KNN) and point to the future need to bridge interpretability with advanced analytics. These contributions highlight how the literature continues to evolve, pushing toward more adaptive, context-driven, and multi-source methodologies.

3.3. Data Sources in Dropout Prediction

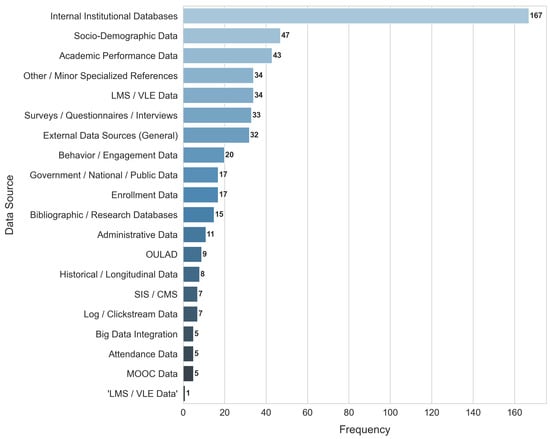

The literature confirms that an accurate prediction of dropout is often based on a combination of institutional and external data. Studies repeatedly highlight internal academic records (grades, assessments, GPA) and demographic information as fundamental characteristics [9,46,148,149,150,151]. Some authors, such as [64,90,152,153,154], incorporate data extracted from virtual learning environments (VLEs), learning management systems (LMSs) [81,146], or MOOC platforms [89]. Others emphasize less common input features; for example, the clustering of digital traces from e-learning platforms [46], the combination of continuous and categorical data through specialized clustering-then-classification methodologies [133], or the exploration of textual variables with advanced NLP [155,156].

Longitudinal data from multi-semester course performance have proven critical in many contexts, including recent work such as [139], where the course completion data for each semester improve predictive accuracy. Similarly, Martins et al. [107] use academic, socio-demographic, and macroeconomic information collected at different phases of a student’s first year to detect dropout risk as early as possible. Additional works incorporate structured questionnaires to measure intangible factors associated with dropout, such as CPQ-based approaches in [103], while [157] emphasizes the importance of thorough data cleaning, labeling, and transformation steps in building robust predictive pipelines. Jaiswal et al. [158] highlight how mining large educational datasets can unmask hidden relationships that predict dropout, particularly in contexts where institutional data accumulate every year.
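To make the idea of multi-semester features concrete, the following sketch derives a few longitudinal indicators from per-semester course completion counts. The data layout, the feature names, and the sample values are assumptions for illustration and do not reproduce the feature sets of [107] or [139].

```python
# Hypothetical history: for each student, one (courses_attempted,
# courses_completed) pair per semester, in chronological order.
history = {
    "s01": [(5, 5), (5, 4), (5, 2)],
    "s02": [(4, 3), (5, 5), (5, 5)],
}


def semester_features(semesters):
    """Summarize completion ratios across semesters for one student."""
    ratios = [done / attempted for attempted, done in semesters if attempted > 0]
    if not ratios:
        return None  # no usable semester for this student
    return {
        "first_ratio": ratios[0],
        "last_ratio": ratios[-1],
        "mean_ratio": sum(ratios) / len(ratios),
        # Negative trend: completion worsened between first and last semester.
        "trend": ratios[-1] - ratios[0],
    }


features = {student: semester_features(sems) for student, sems in history.items()}
for student, feats in features.items():
    print(student, {name: round(value, 2) for name, value in feats.items()})
```

A declining trend, as for the first hypothetical student, is the kind of signal that a single cross-sectional snapshot would miss.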

In many Latin American institutions, official government or institutional datasets are central for analyses [10,51,52], combining socio-demographic, socio-economic, and academic indicators to identify when a student becomes at risk of leaving. Elsewhere, Vidal et al. [53] show how such data can reveal motivational or attributional patterns, especially relevant in STEM contexts. In the United States, Jain [22] merges high school and freshman college data for early warning systems, while [23] leverages Canvas engagement logs. Works such as [79] in Malaysia and [93] in Nigeria illustrate how data from multiple enrollment groups combined with pre-processing in Weka yield accurate predictive models of student attrition. Demartini et al. [147] adapt analytics to a broader educational domain, analyzing data streams from secondary schools and exploring how AI-based dashboards benefit teachers. Meanwhile, Guarda [61] discusses how identifying the personal and institutional reasons for dropout requires cross-sectional and time series data for robust classification.

Socio-economic variables remain consistently identified as crucial predictors of dropout. Multiple references [42,96,127,159,160,161,162] and more recent works [62,67,117] stress that parental support, financial conditions, and scholarship data can carry strong predictive power. De Jesus [163] uses fuzzy logic to prescribe specific interventions based on a set of risk factors that include family environment, personal motivation, and socioeconomic background. Sani et al. [93] and Adnan et al. [146] confirm that thorough pre-processing and feature selection further augment the value of such data, thus enhancing predictive performance. Finally, specialized data warehousing frameworks that unify multiple data streams appear in [49,164,165], ensuring that advanced machine learning models receive high-fidelity inputs. While data availability expands, the works from [157,166] remind us of the critical need for systematic data cleaning and transformation to combat noisy or incomplete institutional records.
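The cleaning and integration steps discussed above can be sketched with pandas. The table names, column names, and values below are hypothetical, and median imputation is only one of several reasonable choices; none of this is taken from a specific reviewed study.

```python
import pandas as pd

# Hypothetical extracts from two institutional systems.
academic = pd.DataFrame({
    "student_id": ["s01", "s02", "s02", "s03", "s04"],  # s02 is duplicated
    "gpa": [3.4, 2.1, 2.1, None, 2.8],                  # s03 has a missing GPA
})
socio = pd.DataFrame({
    "student_id": ["s01", "s02", "s03"],                # s04 has no record
    "has_scholarship": [True, False, True],
})

# 1. Remove exact duplicate rows.
academic_clean = academic.drop_duplicates()

# 2. Impute missing GPA with the median, keeping a flag so that a model
#    can still see that the value was originally missing.
academic_clean = academic_clean.assign(
    gpa_missing=academic_clean["gpa"].isna(),
    gpa=academic_clean["gpa"].fillna(academic_clean["gpa"].median()),
)

# 3. Left join so that students without socio-economic data are kept;
#    indicator=True adds a "_merge" column that records the match status.
merged = academic_clean.merge(socio, on="student_id", how="left", indicator=True)
print(merged)
```

Keeping unmatched students in the merged table, rather than silently dropping them with an inner join, avoids biasing the training data toward students with complete records.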

Figure 6 summarizes the range of data sources used in dropout prediction studies. It shows that internal databases, academic records, and socio-demographic data are the most frequently utilized sources in the literature employing business intelligence and machine learning. This emphasizes the critical role of comprehensive data integration in developing accurate predictive models.

Figure 6. Data sources utilized in studies predicting student dropout in higher education using business intelligence and machine learning techniques. Internal institutional databases, socio-demographic data, and academic performance data are the most frequently employed data sources across the reviewed literature.

3.4. Machine Learning Methods Used

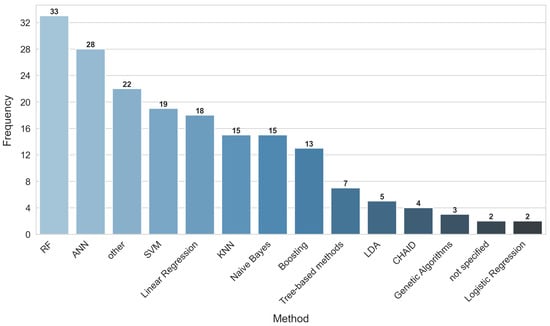

Figure 7 illustrates the algorithms most commonly employed in the prediction of student dropout. Decision trees remain quite popular [24,35,68,71,112,159,167,168,169,170,171,172,173], mainly due to their interpretability and ease of implementation. Random Forest and Gradient Boosting Machines (e.g., XGBoost, LightGBM, CatBoost) have gained traction for their robust performance [46,47,82,89,107,109,118,131,133,143,174,175,176,177,178,179,180,181,182,183], including in MOOC dropout research. Logistic regression remains a consistent baseline for interpretability [68,69,72,79,87,96,145,152,162,184,185,186], and some authors integrate stepwise or probabilistic refinements [145].

Figure 7. Frequency of machine learning methods employed in predicting student dropout in higher education.
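As a hedged illustration of how the three most frequently cited model families might be compared, the sketch below cross-validates a decision tree, a Random Forest, and a logistic regression with scikit-learn. The data are synthetic (generated with `make_classification`, with roughly 20% of samples in the positive "dropout" class), and the hyperparameters are arbitrary assumptions; the scores say nothing about real institutional data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for institutional data; class 1 plays the role of "dropout".
X, y = make_classification(
    n_samples=600, n_features=10, n_informative=5,
    weights=[0.8, 0.2], random_state=0,
)

models = {
    "decision_tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    # Scaling helps the logistic regression solver converge.
    "logistic_regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
}

# Stratified folds keep the class imbalance similar in every split.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = {
    name: cross_val_score(model, X, y, cv=cv, scoring="f1").mean()
    for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name}: mean F1 = {score:.3f}")
```

F1 is used here instead of accuracy because dropout is usually the minority class, so a model that never predicts dropout can still reach high accuracy.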

Ensemble methods and hybrid approaches often emerge as top performers, exemplified in [43,66,114,132,150,175,181,187,188,189]. Deep learning frameworks (feedforward networks, CNNs, LSTMs) have gained traction [71,78,84,87,95,152,154,160,190], and approaches such as the hybrid LR-KNN classifier in [67] or fuzzy logic-based systems [44,153,163] also show potential. Adnan et al. [146] propose a staged approach to identify at-risk students at 0%, 20%, 40%, 60%, 80%, and 100% of the course timeline, utilizing random forest models to capture changing levels of engagement. Meanwhile, Sani et al. [93] apply Random Forest and decision trees with improved data pre-processing, surpassing 79% accuracy in student attrition forecasting.
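The staged idea attributed to [146] can be sketched as follows: one random forest is trained per checkpoint, each seeing only the activity recorded up to that point in the course. This is not the authors' implementation. The data are simulated, and the ten-week course length, the prior-GPA feature, the decay rate of dropout activity, and the AUC metric are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n_students, n_weeks = 400, 10

# Simulated labels and features (assumptions, not real data):
# about 30% of students drop out, and their weekly activity decays over time.
dropped = (rng.random(n_students) < 0.3).astype(int)
prior_gpa = rng.normal(3.0, 0.5, n_students) - 0.3 * dropped
weeks = np.arange(n_weeks)
expected_activity = 10.0 * (1.0 - 0.08 * dropped[:, None] * weeks[None, :])
activity = rng.poisson(expected_activity)

# One fixed split so that every checkpoint is evaluated on the same students.
train_idx, test_idx = train_test_split(
    np.arange(n_students), test_size=0.3, stratify=dropped, random_state=0
)

checkpoints = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
results = {}
for fraction in checkpoints:
    weeks_seen = round(fraction * n_weeks)
    # At 0% only the pre-course feature is available; later checkpoints
    # add the weekly activity counts observed so far.
    features = np.hstack([prior_gpa[:, None], activity[:, :weeks_seen]])
    model = RandomForestClassifier(n_estimators=100, random_state=0)
    model.fit(features[train_idx], dropped[train_idx])
    risk = model.predict_proba(features[test_idx])[:, 1]
    results[fraction] = roc_auc_score(dropped[test_idx], risk)

for fraction, auc in results.items():
    print(f"{fraction:.0%} of course: AUC = {auc:.3f}")
```

In practice the trade-off is between acting early on weaker evidence and acting late on stronger evidence, which is why evaluating every checkpoint on the same held-out students is useful.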

Less common techniques such as Fuzzy-ARTMAP networks [44], Bayesian classifiers [23,56,191,192], and cluster-then-classify strategies [46,133] complement the more traditional approaches, especially when institutions seek to handle unstructured or noisy data. Kondo et al. [81] demonstrate how straightforward logistic regression and decision trees benefit from LMS log data for early warnings, while Suaprae et al. [80] deploy an intelligent consulting system integrated with cognitive technology. An increasing emphasis on explainability also appears, with XAI methods (e.g., LIME, SHAP) helping to clarify model decisions [131,137]. As these tools evolve, a shared trend is to balance predictive performance with transparency for institutional acceptance [120,170].
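To illustrate what a model-agnostic explanation looks like, the sketch below uses permutation importance from scikit-learn. Note that this is a simpler, global technique swapped in for illustration; it is not LIME or SHAP, which produce per-student explanations. The feature names and the simulated relationship between features and dropout are assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 500
feature_names = ["gpa", "lms_logins", "age", "commute_km"]  # hypothetical names
X = rng.normal(size=(n, len(feature_names)))

# Assumed ground truth: low GPA and few LMS logins raise dropout risk;
# the other two features are pure noise.
signal = -1.5 * X[:, 0] - 1.0 * X[:, 1]
y = (signal + rng.normal(scale=0.5, size=n) > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)
model = RandomForestClassifier(n_estimators=200, random_state=0)
model.fit(X_train, y_train)

# Shuffle one feature at a time on held-out data and measure the score drop.
result = permutation_importance(
    model, X_test, y_test, n_repeats=20, random_state=0
)
ranking = sorted(
    zip(feature_names, result.importances_mean),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, importance in ranking:
    print(f"{name}: {importance:.3f}")
```

A ranking like this can be shown to advisors next to a risk score, which is one way to support the transparency that the reviewed studies associate with institutional acceptance.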

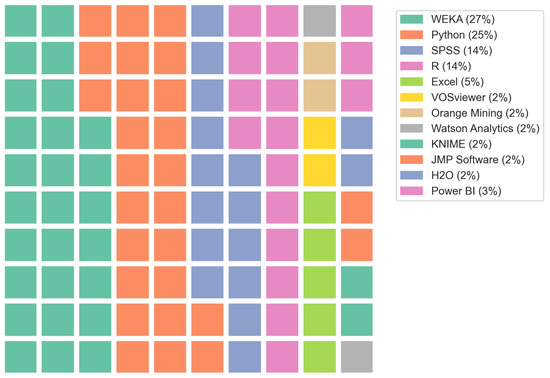

3.5. Software Tools and Platforms

Regarding specific software tools, the works analyzed indicate frequent usage of open source data mining platforms such as Weka [79,93,193,194,195], KNIME [196], Orange, R, and Python-based libraries (scikit-learn, TensorFlow, PyTorch) [36,143,154,160,197,198]. Commercial platforms like IBM SPSS Modeler and SAS Enterprise Miner appear in large institutional or multi-campus projects [199,200,201], while Power BI or Tableau are commonly cited for data visualization and reporting to decision-makers [202,203,204]. In some cases, specialized data warehousing or star schema designs facilitate data extraction and transformation [164,205,206,207].

LMS and MOOC platforms also serve as crucial data sources. The works of [81,89] use online log data to train predictive models and implement early warning systems. Novel or hybrid frameworks appear in references such as [157], which offers an end-to-end pipeline with data augmentation, labeling, and feature engineering. In [147], a cloud-based AI dashboard is implemented to enhance teacher acceptance of learning analytics, especially in primary and secondary schools, but with clear parallels to higher education usage. Suaprae et al. [80] emphasize integrating machine learning into intelligent consulting systems, demonstrating how advanced analytics can be wrapped into user-friendly interfaces for counselors. In general, researchers stress solutions that are accessible and flexible enough to accommodate diverse institutional data, ensuring robust and scalable dropout prevention initiatives.

The diverse ecosystem of software tools and platforms employed in the research on dropout prediction discussed above is visually summarized in Figure 8. This waffle plot illustrates the proportional usage of various tools across reviewed studies. Open-source data mining platforms such as Weka, KNIME, R, and Python-based libraries are prominently featured, along with commercial solutions like IBM SPSS Modeler, SAS Enterprise Miner, Power BI, and Tableau. The visualization highlights the widespread adoption of versatile software tools, emphasizing both the flexibility and accessibility required by institutions implementing effective dropout prediction and prevention systems.

Figure 8. Waffle plot illustrating the proportion of software tools used in studies predicting student dropout in higher education.

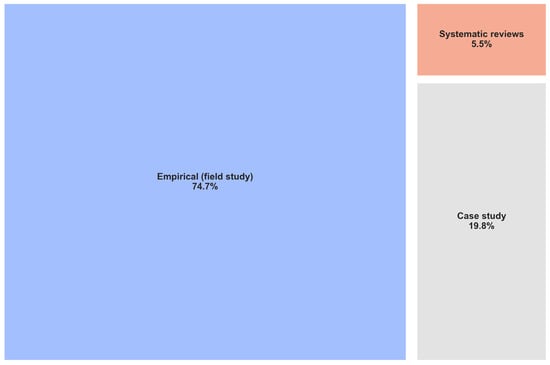

3.6. Types of Research Approaches

Figure 9 shows how most studies employ quantitative empirical methods that feature some variant of supervised machine learning on institutional data. Many of these are cross-sectional analyses, although a growing number of researchers adopt longitudinal approaches to capture changes in student performance over time [42,79,102,128,203,208,209]. Intervention-based pilot implementations of early warning systems are increasingly common [34,66,131,210,211,212,213]. Here, data dashboards are tested in real courses or programs, with interventions triggered once high-risk profiles emerge [81,146]. The work of De Jesus et al. [163] proposes a fuzzy logic-based prescriptive analytics system that both predicts dropout risk and suggests targeted interventions. Similarly, [80] integrates predictive analytics into an intelligent counseling system, guided by cognitive technology to decrease mid-exits.

Figure 9.

Treemap representing the distribution of research types among studies on predicting student dropout in higher education using business intelligence and machine learning techniques.

Studies such as [113] test specific interventions through randomized or quasi-experimental designs, while meta-analyses such as [112,114,116] evaluate multiple predictive modeling approaches. Other authors pursue design-based research [58,135,136,214], emphasizing iterative improvements in early warning dashboards. Works such as [52,93] employ survival analysis or multi-year classification to unravel dropout trajectories. Some efforts combine numeric data with interviews or surveys to validate the root causes of dropout behavior [21,61,191,215].
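To make the survival-analysis idea concrete, the following is a minimal sketch of a Kaplan-Meier estimator, a standard survival-analysis technique; it is not taken from any of the reviewed studies. The function name `kaplan_meier` and the toy cohort are illustrative assumptions. Students who are still enrolled or have graduated when observation ends are treated as censored rather than as dropouts.

```python
from collections import Counter

def kaplan_meier(durations, dropped_out):
    """Return [(term, survival_probability)] for each term with at least one dropout.

    durations: number of terms each student was observed.
    dropped_out: True if the student dropped out in that term, False if censored
    (still enrolled or graduated when observation ended).
    """
    events = Counter(t for t, d in zip(durations, dropped_out) if d)
    exits = Counter(durations)  # every student leaves the risk set after the last observed term
    at_risk = len(durations)
    survival = 1.0
    curve = []
    for term in sorted(exits):
        if events[term]:
            # Multiply by the share of at-risk students who did NOT drop out this term.
            survival *= 1.0 - events[term] / at_risk
            curve.append((term, survival))
        at_risk -= exits[term]
    return curve

# Hypothetical toy cohort of eight students (illustrative values only).
durations = [1, 2, 2, 3, 4, 4, 5, 6]
dropped_out = [True, True, False, True, False, True, False, False]
curve = kaplan_meier(durations, dropped_out)
for term, prob in curve:
    print(f"term {term}: estimated retention probability {prob:.3f}")
```

Unlike a single binary classifier, this kind of estimate indicates when attrition is concentrated, which is the type of trajectory information the multi-year approaches aim to recover.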

New lines of research incorporate novel analytics and AI. For example, Demartini et al. [147] address K–12 education but highlight a multidimensional approach to bridging analytics and stakeholder acceptance that is relevant to higher education. Vidal et al. [53] employ neural networks and a robust set of motivational variables in Ecuador, demonstrating how psychosocial factors can affect the success of STEM courses. In [67], the authors propose a layered approach that integrates pre-processing, clustering (canopy + Gaussian flow optimizer), and a hybrid LR-KNN model to identify at-risk students at King Abdulaziz University. References like [157] detail data pre-processing pipelines (CRISP-DM, star schemas) that serve as the backbone for predictive tasks. Finally, large-scale or multi-institutional explorations, such as [104,190], underscore the importance of shared data standards to refine predictions across varying contexts.

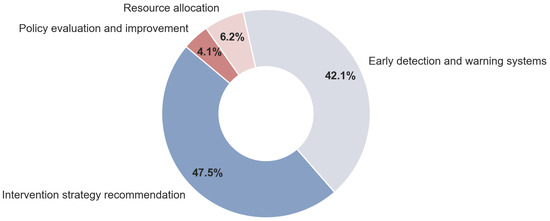

3.7. Application Objectives of the Studies

Whereas early work on dropout analysis often aimed simply to identify at-risk students, recent publications indicate broader objectives of data usage. Figure 10 illustrates how early detection and intervention design remain the most common goals [46,83,148,181,185,216]. Many studies propose frameworks for timely or even real-time interventions, focusing on academic counseling, customized feedback, or improved course design [24,63,80,132,142,153,163,217,218,219]. Another line of research focuses on building recommendation systems or advising platforms (e.g., personalized learning pathways [133,216] or data-driven academic counseling tools [93,108,220]).

Figure 10.

Distribution of application objectives among studies predicting student dropout in higher education using business intelligence and machine learning techniques.

In addition, a portion of the literature contemplates how predictive insights can guide broader institutional policy. For example, works in [159,221,222] detail using analytics to reorient curriculum design and resource allocation. Several authors highlight the use of AI-based predictive models to reduce inequality and strengthen equity in education, especially for disadvantaged groups [53,62,82,117,223]. Another dimension lies in designing advanced dashboards or holistic monitoring solutions that unify multiple data streams into a coherent framework [132,147,206,224,225,226], supporting administrators in real-time decision-making. Integrating the concept of “digital portraits” from social media and university records has also been explored [227] to provide a 360-degree overview of students’ academic and extracurricular lives.

Several works address how performance analytics can be leveraged by institutional leaders to formulate macro-level policies. Martins et al. [107] study whether students are likely to drop out, delay, or complete on time, while [66] focuses on improving retention rates for students at risk by combining LSTM with other machine learning classifiers. Sharma and Yadav [72] support first-year engineering students through a streamlined predictor set for timely interventions, while Adnan et al. [146] explicitly tailor random forest models to identify at-risk students at multiple junctures across a course. MOOC-based contexts also arise: Patel and Amin [89] show how advanced gradient enhancement promotes retention through early detection, facilitating more personalized online learning. The integration of institutional sustainability goals in works such as [54] reveals a growing acknowledgment that dropout reduction aligns with resource optimization, mental health considerations, and institutional longevity.

In general, these specialized objectives converge on the broad aim of reducing dropout, improving academic performance, and optimizing academic pathways in a data-driven, equitable manner. Local contexts, from Taiwan [78] to Nigeria [93] to Ecuador [53], demonstrate that region-specific features (cultural, socioeconomic, infrastructural) must be integrated into models to maximize impact. Consequently, the typical workflow is shifting from predictive to prescriptive, as exemplified by [163], which merges fuzzy logic with interventions to address the multifaceted challenges of student attrition.

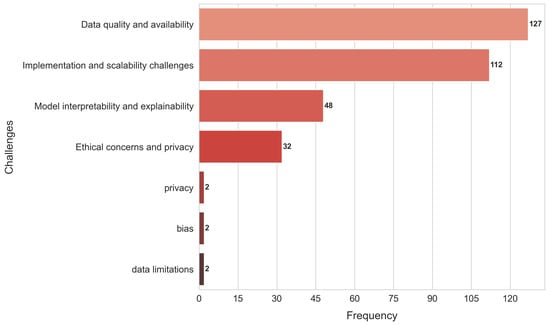

3.8. Challenges Reported

Several recurring challenges in the use of BI and machine learning for predicting dropout appear in the literature, such as data privacy, data quality, interpretability, resource limitations, and ethical concerns, as depicted in Figure 11.

Figure 11.

Challenges reported in studies focused on predicting student dropout in higher education using business intelligence and machine learning techniques.

3.8.1. Data Privacy and Security

Data privacy remains a critical concern, especially regarding sensitive student information. Studies such as [12,228] emphasize risks associated with bias and privacy violations arising from inappropriate use or sharing of student data. Transparent data governance frameworks are recommended by [20,185] to mitigate these risks. Cross-platform and cross-institutional data sharing raise additional privacy complexities, especially in contexts involving emerging AI-driven analytics [83,156,190]. Maintaining adherence to robust ethical standards and institutional policies, as highlighted by [54], is essential for responsible and secure data use.

3.8.2. Data Quality and Integration

Integrating data from diverse sources often poses significant challenges due to inconsistencies, missing values, or variations in data formats [155,166]. The literature advocates for adopting robust data management practices, including structured pipelines, the CRISP-DM methodology, star schema designs, and advanced data warehousing approaches [49,51,164,205,229,230]. Additionally, longitudinal data present difficulties in maintaining consistency over extended periods, requiring special attention to shifting definitions and evolving institutional standards [72,79,139]. Work such as [73] emphasizes the need to generate region-specific datasets, while [74,157] propose systematic strategies for handling class imbalance, data cleaning, and labeling to improve model robustness.
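Class imbalance arises because dropouts are usually a minority of enrolled students. As an illustration only, the sketch below shows random oversampling, one simple balancing strategy; it is not necessarily the method used in [74,157]. The function name `random_oversample`, the feature tuples, and the toy values are assumptions made for the example.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority-class rows until all classes are equally frequent."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, n in counts.items():
        pool = [r for r, y in zip(rows, labels) if y == cls]
        for _ in range(target - n):
            out_rows.append(rng.choice(pool))
            out_labels.append(cls)
    return out_rows, out_labels

# Hypothetical training split: (GPA, credits earned); label 1 = dropped out.
rows = [(3.4, 30), (3.1, 28), (2.9, 27), (3.8, 32), (3.0, 30),
        (2.7, 24), (3.5, 31), (3.2, 29), (1.6, 12), (1.9, 15)]
labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]

balanced_rows, balanced_labels = random_oversample(rows, labels)
print(Counter(balanced_labels))
```

Resampling of this kind should be applied to the training split only, so that evaluation data keep the real class proportions.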

3.8.3. Model Interpretability

The increasing sophistication of machine learning models, such as deep neural networks and ensemble methods, often results in reduced transparency, commonly referred to as the black box problem [95,160,170,185]. This lack of interpretability can undermine stakeholder trust and limit practical adoption. To address these issues, researchers emphasize the development of explainable AI (XAI) frameworks such as LIME or SHAP, which provide insights into model decisions [131,133,231,232]. Additionally, probabilistic approaches and Bayesian models are recommended to integrate uncertainty explicitly, thus enhancing interpretability without sacrificing predictive performance [23,145]. Studies such as [120,137] further highlight the importance of obtaining causal insights and actionable interventions from predictive analytics.
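The intuition behind model-agnostic explanation can be illustrated with permutation importance, a simpler technique than LIME or SHAP and not a substitute for them: shuffle one feature at a time and measure how much predictive accuracy falls. The sketch below is a hedged, standard-library illustration; the stand-in `model`, the feature layout (GPA, age), and the data are hypothetical.

```python
import random

def accuracy(model, X, y):
    """Share of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + (v,) + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

def model(row):
    # Hypothetical stand-in for a trained classifier: flags dropout when GPA < 2.0
    # and ignores the second feature entirely.
    return 1 if row[0] < 2.0 else 0

# Hypothetical rows: (GPA, age); label 1 = dropped out.
X = [(1.2, 19), (1.5, 22), (1.8, 20), (1.9, 25),
     (2.6, 21), (3.0, 19), (3.4, 23), (3.8, 20)]
y = [1, 1, 1, 1, 0, 0, 0, 0]

importances = permutation_importance(model, X, y)
print(dict(zip(["gpa", "age"], importances)))
```

A feature the model never uses receives an importance of zero, which gives advisors a first, coarse indication of what drives a risk flag before more detailed per-student explanations are consulted.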

3.8.4. Resource Limitations and Expertise

A recurring barrier to effective implementation of BI in higher education is the lack of technical expertise and the necessary infrastructure [114,167,191]. Institutions often face constraints related to limited financial resources, insufficient technological infrastructure, and staff training gaps [166,233]. These limitations are particularly pronounced in developing countries, where unstable internet connectivity, inadequate hardware, and minimal technical support restrict the deployment of sophisticated predictive systems [91,92,93]. Addressing these challenges requires comprehensive capacity-building initiatives, targeted training programs, and the creation of user-friendly analytical tools to ensure successful adoption and sustained implementation [80,119].

3.8.5. Ethical Concerns and Bias

The risk of perpetuating existing biases through predictive analytics is widely noted, raising significant ethical concerns [20,83,108,143,185]. There is a critical need for fairness-aware modeling practices to ensure that dropout prediction tools do not disproportionately disadvantage marginalized or vulnerable groups [12,14,234]. Researchers advocate for interpretive frameworks that explicitly account for demographic, socioeconomic, and cultural contexts to prevent unintended discrimination [98,117]. Moreover, careful validation practices and inclusive model design are essential to mitigate inadvertent biases, especially in diverse educational settings such as MOOCs or digitally mediated learning environments [67,89].
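One simple validation practice consistent with these recommendations is to compare error rates across student groups. The sketch below computes the false-negative rate per group, that is, the share of students who actually dropped out but were not flagged. It is an illustrative audit under stated assumptions, not a procedure prescribed by the cited studies; the group labels and predictions are hypothetical.

```python
from collections import defaultdict

def false_negative_rate_by_group(y_true, y_pred, groups):
    """Per group: share of actual dropouts (label 1) that the model failed to flag."""
    missed = defaultdict(int)
    positives = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            if p == 0:
                missed[g] += 1
    return {g: missed[g] / positives[g] for g in positives}

# Hypothetical audit data: two demographic groups, "A" and "B".
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1]
groups = ["A"] * 6 + ["B"] * 6

rates = false_negative_rate_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap suggests that at-risk students in one group are missed more often and would therefore receive fewer interventions, which is the kind of disparity that fairness-aware modeling seeks to detect and reduce.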

4. Discussion

The results of this systematic analysis highlight the rapidly expanding global interest in using business intelligence (BI) and data-driven approaches to predict and prevent student dropout in higher education. Taken together, these findings emphasize how diverse regions, institutional contexts, and research methods converge on a common goal: to reduce attrition rates by leveraging advanced analytics. Below, we discuss key insights and their implications for educational practice, policy, and research.

4.1. Global and Temporal Trends

The geographical scope of the reviewed research demonstrates that dropout prediction efforts are widespread in North America, Europe, Latin America, and Asia, with a growing presence in Africa. This global engagement highlights the universal challenge of student attrition, while also revealing significant context-specific nuances. Nations such as the United States, Brazil, Spain, and the United Kingdom stand out for their high volume of studies, although work from other regions continues to grow. At the same time, the marked increase in publications from 2019 onward suggests that the urgent need to improve student success, combined with greater data availability and computational capacity, has fueled more robust and sophisticated modeling techniques.

Despite varying resource constraints across regions, the common denominator is a shared objective: transitioning from traditional, often manual tracking systems to proactive, data-driven strategies that inform timely interventions. This widespread interest points to the potential for cross-regional knowledge exchange, especially as more mature educational systems refine their approaches and emerging systems adopt best practices. Moreover, the methodological evolution observed over the last decade—from relatively simple classification models to deep learning and explainable AI—reflects heightened demand for better predictive power and interpretability.

4.2. Data Sources and Methods

A variety of data sources have been employed to predict dropout, with the most prominent being institutional academic records (e.g., grades, GPA) and socio-demographic variables (e.g., socioeconomic status, parental support). Additionally, learning management system (LMS) logs, MOOC platform data, and longitudinal performance records are increasingly integrated to capture student engagement and changes in risk over time. This combination of data highlights the multifactorial nature of dropout, in which academic, social, psychological, and economic factors intersect to influence students’ decisions to abandon their studies.

From a methodological point of view, machine learning approaches show significant heterogeneity. Decision trees and logistic regression are consistently used because of their interpretability, while ensemble methods (e.g., Random Forest, XGBoost) and deep learning models have gained popularity for their ability to handle complex, high-dimensional data. However, the shift toward more advanced models often comes at the cost of reduced transparency, leading to a growing emphasis on explainable artificial intelligence (XAI) methods. Such approaches aim to balance predictive accuracy with stakeholder interpretability, particularly as institutional acceptance and ethical use hinge on clear, justifiable decision-making processes.

4.3. Research Approaches and Application Objectives

Quantitative empirical analyses predominate, often featuring supervised learning to classify or predict at-risk students. Yet, a notable subset of studies incorporates intervention-based designs, in which predictive models guide real-time or near-real-time actions. These interventions—ranging from personalized counseling to curricular adjustments—are often delivered through user-friendly dashboards or integrated advising platforms. By embedding predictive results into day-to-day academic operations, these studies demonstrate the feasibility of moving beyond retrospective analytics and toward proactive student support.

The reviewed research also reveals an expansion of objectives. While early detection of at-risk students remains central, many institutions now aim to use dropout risk data to inform broader policy decisions. For example, predictive insights help drive course redesign, equitable resource distribution, and even institutional strategies to improve mental health and social engagement. Some universities align these efforts with long-term sustainability and inclusion goals, recognizing that dropout prevention intersects with ethics, student well-being, and institutional reputation.

4.4. Challenges and Limitations

Educational institutions often face technical challenges due to educators’ limited familiarity with BI tools and predictive analytics. To effectively overcome these barriers, institutions should invest in targeted professional development initiatives, including hands-on workshops, certifications in data analytics, and training programs tailored specifically to educational contexts. Collaborative efforts with industry experts or partnerships with institutions experienced in data-driven practices can also facilitate knowledge transfer and skill enhancement. Additionally, implementing user-friendly, intuitive BI dashboards designed explicitly for educators can significantly reduce barriers to effective use, ultimately improving the practical impact of BI tools on dropout prevention strategies.

Data privacy remains a significant challenge in implementing BI and predictive analytics in education, particularly given stringent legal frameworks such as the General Data Protection Regulation (GDPR) in the European Union and the Family Educational Rights and Privacy Act (FERPA) in the United States. GDPR sets strict standards for collecting, processing, and managing personal data, emphasizing transparency, consent, and accountability, while FERPA specifically protects the privacy of student educational records, limiting how institutions can use and disclose data. These regulations require educational institutions to establish robust data governance practices and infrastructure to ensure compliance, which poses considerable resource challenges, especially for smaller or under-resourced institutions.

Data quality and integration also pose substantial difficulties. Heterogeneous data systems, missing values, and inconsistent recording practices can hinder model accuracy, underscoring the need for standardized data warehousing solutions and sophisticated pre-processing workflows.

Another limitation involves the interpretability of advanced predictive methods. Although sophisticated algorithms, including deep learning and ensemble models, offer high predictive performance, their inherent complexity often limits transparency. This so-called “black-box” issue can undermine institutional stakeholders’ trust, necessitating greater emphasis on explainable AI methodologies that provide clarity and confidence in predictive outputs.

Additionally, successful BI implementation hinges upon adequate training and skill development among educators and administrators. Even the most effective predictive analytics systems require skilled users who can interpret and translate data insights into actionable interventions. Without sufficient training and ongoing professional development, educational institutions may struggle to realize the full potential of BI technologies.

Finally, ethical concerns remain significant. Predictive models trained on historical data risk reinforcing existing biases, inadvertently disadvantaging vulnerable student populations. Ensuring fairness and equity requires rigorous validation, ongoing monitoring, and transparent modeling practices, highlighting the ethical responsibilities of institutions employing predictive analytics.

4.5. Future Directions

Several avenues for further work emerge from these findings. First, efforts to integrate multi-modal data—encompassing academic records, LMS logs, and psychosocial indicators—should continue, as richer datasets promise more robust risk assessments. Second, bridging the gap between prediction and intervention calls for interdisciplinary research, uniting data scientists, educators, and policy experts to design actionable strategies. Third, scaling up cross-institutional collaborations can accelerate learning from diverse contexts, particularly if researchers embrace open science principles that encourage data and methodology sharing. Lastly, developing and validating fairness-aware machine learning models is critical to ensuring that dropout prevention strategies support, rather than stigmatize, underrepresented or marginalized student groups.

5. Conclusions

The collective evidence from these studies points to the growing sophistication and relevance of business intelligence and machine learning methods in addressing student dropout in higher education. Institutions worldwide are leveraging increasingly varied data sources and advanced analytical tools, supported by user-centered dashboards and interventions that target students most in need. However, persistent challenges, such as data quality, privacy, interpretability, and ethical risk, underscore that successful implementation requires robust governance and community involvement. In the future, collaborative and context-specific research will play a key role in unlocking the full potential of data-driven dropout prevention, ultimately contributing to more inclusive and effective educational systems.

Author Contributions

Conceptualization, D.-M.C.-E.; methodology, D.-M.C.-E., J.T., J.-A.R.-G. and K.-E.C.-E.; formal analysis, D.-M.C.-E., J.T., J.-A.R.-G. and K.-E.C.-E.; investigation, D.-M.C.-E., J.T. and K.-E.C.-E.; resources, D.-M.C.-E., J.T., J.-A.R.-G., K.-E.C.-E., R.-E.L.-M. and R.C.-S.; writing—original draft preparation, D.-M.C.-E., J.T., J.-A.R.-G. and K.-E.C.-E.; writing—review and editing, D.-M.C.-E., J.-A.R.-G., J.T., K.-E.C.-E., R.-E.L.-M., T.G.-R. and R.C.-S.; supervision, D.-M.C.-E., K.-E.C.-E., T.G.-R. and J.T.; project administration, D.-M.C.-E., J.T. and K.-E.C.-E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

We thank the Secretaría de Ciencia, Humanidades, Tecnología e Innovación (SECIHTI) for its support through the National System of Researchers (SNII).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Urbina-Nájera, A.B.; Camino-Hampshire, J.C.; Barbosa, R.C. Deserción escolar universitaria: Patrones para prevenirla aplicando minería de datos educativa. RELIEVE—Rev. Electrón. Investig. Eval. Educativa 2020, 26.

- Rochin Berumen, F.L. Deserción escolar en la educación superior en México: Revisión de literatura. RIDE—Rev. Iberoam. Para Investig. Desarro. Educativo 2021, 11, 1–11.

- Curto Díaz, J. Introduccion al Business Intelligence; Editorial UOC: Barcelona, Spain, 2017.

- Ayala, J.; Ortiz, J.; Guevara, C.; Maya, E. Herramientas de Business Intelligence (BI) modernas, basadas en memoria y con lógica asociativa. Revistapuce 2018, 106.

- Ouriniche, N.; Benabbou, Z.; Abbar, H. Global Performance Management Using the Sustainability Balanced Scorecard and Business Intelligence—A Case Study. Proc. Eng. 2022, 4, 313–326.

- Cano, J.L. Business Intelligence: Competir con Información; Fundación Banesto: Madrid, Spain, 2007.

- Pascal, G.; Servetto, D.; Mirasson, U.L.; Luna, Y. Aplicación de Business Intelligence para la toma de decisiones en Instituciones Universitarias. Implementación de Boletines Estadísticos en la Universidad Nacional de Lomas de Zamora (UNLZ). Rev. Electrón. Sobre Tecnol. Educ. Sociedad 2017, 4, 7.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; Group, P. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Int. J. Surg. 2010, 8, 336–341.

- Grijalva, P.K.; Cornejo, G.E.; Gómez, R.R.; Real, K.P.; Fernández, A. Herramientas colaborativas para revisiones sistemáticas. Rev. Espac. 2019, 40, 9.

- Uldall, J.S.; Rojas, C.G. An Application of Machine Learning in Public Policy: Early Warning Prediction of School Dropout in the Chilean Public Education System. Multidiscip. Bus. Rev. 2022, 15, 20–35.

- Christie, S.T.; Jarratt, D.C.; Olson, L.A.; Taijala, T.T. Machine-Learned School Dropout Early Warning at Scale. In Proceedings of the Educational Data Mining, Montreal, QC, Canada, 2–5 July 2019.

- Bird, K. Predictive Analytics in Higher Education: The Promises and Challenges of Using Machine Learning to Improve Student Success. AIR Prof. File 2023, 2023, 11–18.

- Ryan, L.; Snow, N. Supporting Student Success with Intuitive, Approachable Data Visualization. In Supporting the Success of Adult and Online Students Proven Practices in Higher Education; CreateSpace Independent Publishing: San Bernardino, CA, USA, 2016.

- Pinkus, L. Using Early-Warning Data to Improve Graduation Rates: Closing Cracks in the Education System; Alliance for Excellent Education: Washington, DC, USA, 2009.

- Aulck, L.; Velagapudi, N.; Blumenstock, J.E.; West, J.D. Predicting Student Dropout in Higher Education. arXiv 2016, arXiv:1606.06364.

- Kang, K.; Wang, S. Analyze and Predict Student Dropout from Online Programs. In Proceedings of the Conference on Educational Data Mining, DeKalb, IL, USA, 23–25 March 2018; pp. 6–12.

- Essa, A.; Ayad, H. Improving student success using predictive models and data visualisations. Res. Learn. Technol. 2012, 20, 58–70.

- Matz, S.; Peters, H.; Stachl, C. Using machine learning to predict student retention from socio-demographic characteristics and app-based engagement metrics. Sci. Rep. 2023, 13, 5705.

- Yu, R.; Li, Q.; Fischer, C.; Doroudi, S.; Xu, D. Towards Accurate and Fair Prediction of College Success: Evaluating Different Sources of Student Data. In Proceedings of the Educational Data Mining Conference, Virtual Event, 10–13 July 2020; pp. 292–301.

- Yu, R.; Lee, H.; Kizilcec, R.F. Should College Dropout Prediction Models Include Protected Attributes. In Proceedings of the Learning at Scale Conference, Virtual, 22–25 June 2021; pp. 91–100.

- Zhao, Y.; Otteson, A. AI-Driven Strategies for Reducing Student Withdrawal—A Study of EMU Student Stopout. arXiv 2024, arXiv:2408.02598.

- Jain, H. Predicting college dropout likelihood based on high school and college data: A machine learning approach. In Proceedings of the International Conference on Science & Technology, Bali, Indonesia, 18–19 July 2024.

- Mínguez-Martínez, A.L.; Sood, K.; Mahto, R. Early Detection of At-Risk Students Using Machine Learning. arXiv 2024, arXiv:2412.09483.

- Bukralia, R.; Deokar, A.V.; Sarnikar, S. Using Academic Analytics to Predict Dropout Risk in E-Learning Courses. In Reshaping Society Through Analytics, Collaboration, and Decision Support; Iyer, L.S., Power, D.J., Eds.; Annals of Information Systems; Springer: Cham, Switzerland, 2015; Volume 18, pp. 67–93.

- Cambruzzi, W.; Rigo, S.; Barbosa, J.L.V. Dropout Prediction and Reduction in Distance Education Courses with the Learning Analytics Multitrail Approach. J. Univers. Comput. Sci. 2015, 21, 23–47.

- da S. Freitas, F.A.; Vasconcelos, F.F.X.; Peixoto, S.A.; Hassan, M.M.; Dewan, M.A.A.; de Albuquerque, V.H.C.; Filho, P.P.R. IoT System for School Dropout Prediction Using Machine Learning Techniques Based on Socioeconomic Data. Electronics 2020, 9, 1613.

- Barthès, J.P.A. An explainable machine learning approach for student dropout prediction. Expert Syst. Appl. 2023, 233, 120933. [Google Scholar] [CrossRef]

- Flores, V.; Heras, S.; Julián, V. Comparison of Predictive Models with Balanced Classes for the Forecast of Student Dropout in Higher Education. In Practical Applications of Agents and Multi-Agent Systems; Springer: Cham, Switzerland, 2021; pp. 139–152. [Google Scholar] [CrossRef]

- Gonzalez-Nucamendi, A.; Noguez, J.; Neri, L.; Robledo-Rella, V.; García-Castelán, R.M.G.; Escobar-Castillejos, D. Learning Analytics to Determine Profile Dimensions of Students Associated with their Academic Performance. Appl. Sci. 2022, 12, 10560. [Google Scholar] [CrossRef]

- Melo, E.C.; de Souza, F.S.H. Improving the prediction of school dropout with the support of the semi-supervised learning approach. iSys 2023, 16, 10:1–10:26. [Google Scholar] [CrossRef]

- Dávila, G.; Haro, J.; González, A.; Ruiz-Vivanco, O.; Guamán, D. Student Dropout Prediction in High Education, Using Machine Learning and Deep Learning Models: Case of Ecuadorian University. In Proceedings of the 2023 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 13–15 December 2023. [Google Scholar] [CrossRef]

- Fernandes, T.D.S.; Ramos, G.N. Generating and Understanding Predictive Models for Student Attrition in Public Higher Education. In Proceedings of the IEEE Frontiers in Education Conference, College Station, TX, USA, 18–21 October 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Kalegele, K. Enabling Proactive Management of School Dropouts Using Neural Network. J. Softw. Eng. Appl. 2020, 13, 245–257. [Google Scholar] [CrossRef]

- Baneres, D.; Rodríguez-González, M.E.; Guerrero-Roldán, A.E. A Real Time Predictive Model for Identifying Course Dropout in Online Higher Education. IEEE Trans. Learn. Technol. 2023, 16, 484–499. [Google Scholar] [CrossRef]

- Sayed, M. Student Progression and Dropout Rates Using Convolutional Neural Network: A Case Study of the Arab Open University. J. Adv. Comput. Intell. Intell. Inform. 2024, 28, 668–678. [Google Scholar] [CrossRef]

- do Carmo Nicoletti, M.; de Oliveira, O.L. A Machine Learning-Based Computational System Proposal Aiming at Higher Education Dropout Prediction. High. Educ. Stud. 2020, 10, 12–24. [Google Scholar] [CrossRef]

- Kim, S.; Yoo, E.; Kim, S. Why Do Students Drop Out? University Dropout Prediction and Associated Factor Analysis Using Machine Learning Techniques. arXiv 2023, arXiv:2310.10987. [Google Scholar] [CrossRef]

- Silva, J.; Matos, L.F.A.; Mosquera, C.M.; Mercado, C.V.; González, R.B.; Llinas, N.O.; Lezama, O.B.P. Prediction of academic dropout in university students using data mining: Engineering case. In Advances in Intelligent Systems and Computing; Springer: Singapore, 2020; pp. 495–500. [Google Scholar] [CrossRef]

- Manrique, R.; Nunes, B.P.; Marino, O.; Casanova, M.A.; Nurmikko-Fuller, T. An Analysis of Student Representation, Representative Features and Classification Algorithms to Predict Degree Dropout. In Proceedings of the Learning Analytics and Knowledge Conference, Tempe, AZ, USA, 4–8 March 2019; pp. 401–410. [Google Scholar] [CrossRef]

- Beltrame, W.A.R.; Gonçalves, O.L. Socioeconomic Data Mining and Student Dropout: Analyzing a Higher Education Course in Brazil. Int. J. Innov. Educ. Res. 2020, 8, 505–518. [Google Scholar] [CrossRef]

- Böttcher, A.; Thurner, V.; Hafner, T. Applying Data Analysis to Identify Early Indicators for Potential Risk of Dropout in CS Students. In Proceedings of the Global Engineering Education Conference, Porto, Portugal, 27–30 April 2020; pp. 827–836. [Google Scholar] [CrossRef]

- Hegde, V.; Prageeth, P.P. Higher education student dropout prediction and analysis through educational data mining. In Proceedings of the International Conference on Information and Communication Systems (ICISC), Coimbatore, India, 19–20 January 2018. [Google Scholar] [CrossRef]

- Aguirre, C.E.; Pérez, J.C. Predictive data analysis techniques applied to dropping out of university studies. In Proceedings of the Conference on Latin American Computing, Loja, Ecuador, 19–23 October 2020; pp. 512–521. [Google Scholar] [CrossRef]

- Martinho, V.R.C.; Nunes, C.; Minussi, C.R. Prediction of School Dropout Risk Group Using Neural Network Fuzzy ARTMAP. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems, Krakow, Poland, 8–11 September 2013; pp. 111–114. [Google Scholar]

- Palacios, C.A.; Reyes-Suarez, J.A.; Bearzotti, L.; Leiva, V.; Marchant, C. Knowledge Discovery for Higher Education Student Retention Based on Data Mining: Machine Learning Algorithms and Case Study in Chile. Entropy 2021, 23, 485. [Google Scholar] [CrossRef]

- Pecuchova, J.; Drlík, M. Enhancing the Early Student Dropout Prediction Model Through Clustering Analysis of Students’ Digital Traces. IEEE Access 2024, 12, 159336–159367. [Google Scholar] [CrossRef]

- Poellhuber, L.V.; Poellhuber, B.; Desmarais, M.C.; Léger, C.; Roy, N.; Vu, M.M.C. Cluster-Based Performance of Student Dropout Prediction as a Solution for Large Scale Models in a Moodle LMS. In Proceedings of the International Conference on Learning Analytics and Knowledge, Arlington, TX, USA, 13–17 March 2023. [Google Scholar] [CrossRef]

- Kok, C.L.; Ho, C.K.; Chen, L.; Koh, Y.Y.; Tian, B. A Novel Predictive Modeling for Student Attrition Utilizing Machine Learning and Sustainable Big Data Analytics. Appl. Sci. 2024, 14, 9633. [Google Scholar] [CrossRef]

- Orong, M.Y.; Caroro, R.A.; Durias, G.D.; Cabrera, J.A.; Lonzon, H.A.; Ricalde, G.T. A Predictive Analytics Approach in Determining the Predictors of Student Attrition in the Higher Education Institutions in the Philippines. In Proceedings of the International Conference on Software Engineering and Information Management, Sydney, NSW, Australia, 12–15 January 2020; pp. 222–225. [Google Scholar] [CrossRef]

- Pérez, P.N.M.; C, J.R.A.; Zamora, A.R.R. Predictive Model Design applying Data Mining to identify causes of Dropout in University Students. Int. J. Sci. Technol. Soc. 2019, 7, 11–39. [Google Scholar]

- Patino-Rodriguez, C.E. ADHE: A Tool to Characterize Higher Education Dropout Phenomenon. Rev. Fac. Ing.-Univ. Antioq. 2023, 111, 64–75. [Google Scholar] [CrossRef]

- Gutierrez-Pachas, D.A.; Zanabria, G.G.; Cuadros-Vargas, E.; Chavez, G.C.; Gomez-Nieto, E. Supporting Decision-Making Process on Higher Education Dropout by Analyzing Academic, Socioeconomic, and Equity Factors through Machine Learning and Survival Analysis Methods in the Latin American Context. Educ. Sci. 2023, 13, 154.

- Vidal, J.; Gilar-Corbi, R.; Pozo-Rico, T.; Castejón, J.L.; Sánchez-Almeida, T. Predictors of University Attrition: Looking for an Equitable and Sustainable Higher Education. Sustainability 2022, 14, 10994.

- Villegas-Ch, W.; Govea, J.; Revelo-Tapia, S. Improving Student Retention in Institutions of Higher Education through Machine Learning: A Sustainable Approach. Sustainability 2023, 15, 14512.

- Gonzalez-Nucamendi, A.; Noguez, J.; Neri, L.; Robledo-Rella, V.; García-Castelán, R.M.G. Predictive analytics study to determine undergraduate students at risk of dropout. Front. Educ. 2023, 8, 1244686.

- Núñez, A.; del Carmen Santiago Díaz, M.; Vázquez, A.C.Z.; Marcial, J.P.; Linarès, G. Early Detection of Students at High Risk of Academic Failure using Artificial Intelligence. Int. J. Comb. Optim. Probl. Inform. 2024, 15, 155–160.

- Su, Z.; Liu, Y.; Zhang, X. Preventing Dropouts and Promoting Student Success: The Role of Predictive Analytics. In Proceedings of the 2nd International Conference on Computer Application Technology (CCAT 2023), Guiyang, China, 15–17 September 2023; pp. 282–286.

- Heredia-Jiménez, V.; Jimenez, A.; Ortiz-Rojas, M.; Marín, J.I.; Moreno-Marcos, P.M.; Muñoz-Merino, P.J.; Kloos, C.D. An Early Warning Dropout Model in Higher Education Degree Programs: A Case Study in Ecuador. In Proceedings of the Workshop on Adoption, Adaptation and Pilots of Learning Analytics in Under-represented Regions (LAUR 2020), online, 14–15 September 2020; CEUR Workshop Proceedings, Volume 2704; pp. 58–67.

- Núñez-Naranjo, A.F.; Ayala-Chauvin, M.; Riba-Sanmartí, G. Prediction of University Dropout Using Machine Learning. In Proceedings of the International Conference on Information Technology and Systems (ICITS 2021), Universidad Estatal Península de Santa Elena, Libertad, Ecuador, 4–6 February 2021; Advances in Intelligent Systems and Computing. Springer: Cham, Switzerland, 2021; Volume 1330, pp. 396–406.

- Santacoloma, G.D. Predictive Model to Identify College Students with High Dropout Rates. Rev. Electron. Investig. Educ. 2023, 25, 1–10.

- Guarda, T. Higher Education Students Dropout Prediction. In Proceedings of the 8th International Conference on Data Mining and Big Data (DMBD 2023), Sanya, China, 9–12 December 2023; Communications in Computer and Information Science. Springer: Singapore, 2023; Volume 328, pp. 121–128.

- Khan, M.A.; Khojah, M.; Vivek. Artificial Intelligence and Big Data: The Advent of New Pedagogy in the Adaptive E-Learning System in the Higher Educational Institutions of Saudi Arabia. Educ. Res. Int. 2022, 2022, 1263555.

- Khan, I.M.; Khan, I.M.; Ahmad, A.R.; Jabeur, N.; Mahdi, M.N. An Artificial Intelligence Approach to Monitor Student Performance and Devise Preventive Measures. Smart Learn. Environ. 2021, 8, 17.

- Alfahid, A. Algorithmic Prediction of Students On-Time Graduation from the University. TEM J. 2024, 13, 692–698.

- Alturki, S.; Alturki, N.M. Using Educational Data Mining to Predict Students’ Academic Performance for Applying Early Interventions. J. Inf. Technol. Educ. Innov. Pract. 2021, 20, 121–137.

- Brdesee, H.; Alsaggaf, W.; Aljohani, N.R.; Hassan, S.U. Predictive Model Using a Machine Learning Approach for Enhancing the Retention Rate of Students At-Risk. Int. J. Semant. Web Inf. Syst. 2022, 18, 1–21.

- Awedh, M.; Mueen, A. Early Identification of Vulnerable Students with Machine Learning Algorithms. WSEAS Trans. Inf. Sci. Appl. 2025, 22, 166–188.

- Priya V, G.; Eliyas, S.; Kumar M, S. Detecting and Predicting Learner’s Dropout Using KNN Algorithm. In Proceedings of the 2024 OPJU International Technology Conference (OTCON) on Smart Computing for Innovation and Advancement in Industry 4.0, Raigarh, India, 5–7 June 2024; pp. 1–6.

- Deb, S.; Sammy, M.S.R.; Tusher, A.N.; Sakib, M.R.S.; Hasan, M.H.; Aunik, A.I. Predicting Student Dropout: A Machine Learning Approach. In Proceedings of the 15th International Conference on Computing, Communication and Networking Technologies (ICCCNT 2024), IIT Mandi (Kamand), Himachal Pradesh, India, 24–28 June 2024; pp. 1–7.

- Revathy, M.; Kamalakkannan, S. Collaborative learning for improving intellectual skills of dropout students using datamining techniques. In Proceedings of the International Conference on Artificial Intelligence, Coimbatore, India, 25–27 March 2021.

- Gupta, K.; Gupta, K.; Dwivedi, P.; Chaudhry, M. Binary Classification of Students’ Dropout Behaviour in Universities using Machine Learning Algorithms. In Proceedings of the Conference, New Delhi, India, 28 February–1 March 2024; pp. 709–714.

- Sharma, M.; Yadav, M.L. Predicting Students’ Drop-Out Rate Using Machine Learning Models: A Comparative Study. In Proceedings of the International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 11–12 August 2022; pp. 1166–1171.

- Patel, D.D.; Savaj, K.; Malani, P.; Patel, J.; Trivedi, H. Unlocking Enigmatic Pathways: Empowering Student Dropout Analysis with Machine Learning and Energizing Holistic Investigation. In Proceedings of the 2024 IEEE 9th International Conference for Convergence in Technology (I2CT), Pune, India, 5–7 April 2024.

- Dongre, P.G.G. Predicting Student Dropout Rates in Higher Education: A Comparative Study of Machine Learning Algorithms. Indian Sci. J. Res. Eng. Manag. 2024.

- Cheng, J.; Yang, Z.Q.; Cao, J.; Yang, Y.; Poon, K.C.F.; Lai, D. Modeling Behavior Change for Multi-model At-Risk Students Early Prediction. In Proceedings of the 2024 International Symposium on Educational Technology (ISET), Macau, Macao, 29 July–1 August 2024; pp. 54–58.

- Cheng, Y.H. Improving Students’ Academic Performance with AI and Semantic Technologies. arXiv 2022, arXiv:2206.03213.

- Bagunaid, W.; Chilamkurti, N.; Shahraki, A.S.; Bamashmos, S. Visual Data and Pattern Analysis for Smart Education: A Robust DRL-Based Early Warning System for Student Performance Prediction. Future Internet 2024, 16, 206.

- Tsai, S.C.; Chen, C.H.; Shiao, Y.T.; Ciou, J.S.; Wu, T.N. Precision education with statistical learning and deep learning: A case study in Taiwan. Int. J. Educ. Technol. High. Educ. 2020, 17, 12.

- Yaacob, W.F.W.; Sobri, N.M.; Nasir, S.A.M.; Norshahidi, N.D.; Husin, W.Z.W. Predicting Student Drop-Out in Higher Institution Using Data Mining Techniques. J. Phys. Conf. Ser. 2020, 1496, 012005.

- Suaprae, P.; Nilsook, P.; Wannapiroon, P. System Framework of Intelligent Consulting Systems with Intellectual Technology. In Proceedings of the 9th International Conference on Computer and Communications Management (ICCCM ’21), Singapore, 16–18 July 2021; pp. 31–36.

- Kondo, N.; Okubo, M.; Hatanaka, T. Early Detection of At-Risk Students Using Machine Learning Based on LMS Log Data. In Proceedings of the International Conference on Advanced Applied Informatics, Hamamatsu, Japan, 9–13 July 2017; pp. 198–201.

- Sulak, S.; Koklu, N. Predicting Student Dropout Using Machine Learning Algorithms. Intell. Methods Eng. Sci. 2025, 3, 91–98.

- Shin, S. Datafication of Education and Machine Learning Techniques in Education Research: A Critical Review. Gyoyug Yeon’Gu 2024, 2, 215–240.

- Rosdiana, R.; Sunandar, E.; Purnama, A.; Arribathi, A.H.; Yusuf, D.A.; Daeli, O.P.M. Strategies and Consequences of AI-Enhanced Predictive Models for Early Identification of Students at Risk. In Proceedings of the 2024 3rd International Conference on Creative Communication and Innovative Technology (ICCIT), Tangerang, Indonesia, 7–8 August 2024; pp. 1–6.

- Sridevi, K.; Ranjani, A.P.; Ahmad, S.S. Recognizing Students At-Danger with Early Intervention Using Machine Learning Techniques. J. Adv. Zool. 2024, 45, 481.

- Prajwal, P.; Sahana, L.R.; Kanchana, V. Forecasting Student Attrition Using Machine Learning. In Proceedings of the 4th Asian Conference on Innovation in Technology (ASIANCON 2024), Pimpri Chinchwad College of Engineering and Research (PCCOER), Pune, India, 23–25 August 2024; pp. 1–7.

- Prasanth, A.; Alqahtani, H. Predictive Modeling of Student Behavior for Early Dropout Detection in Universities using Machine Learning Techniques. In Proceedings of the International Conference on Emerging Technologies and Applications, Bahrain, Bahrain, 25–27 October 2023; pp. 1–5.

- Al-Tameemi, G.; Xue, J.; Ajit, S.; Kanakis, T.; Hadi, I. Predictive Learning Analytics in Higher Education: Factors, Methods and Challenges. In Proceedings of the International Conference on Advances in Computing and Communication Engineering, Las Vegas, NV, USA, 22–24 June 2020; pp. 1–9.

- Patel, K.; Amin, K. Predictive modeling of dropout in MOOCs using machine learning techniques. Sci. Temper 2024, 15, 2199–2206.

- Tahiru, F. Predicting At-Risk Students in a Higher Educational Institution in Ghana for Early Intervention Using Machine Learning. Ph.D. Thesis, Durban University of Technology, Durban, South Africa, 2023.

- Mduma, N.; Kalegele, K.; Machuve, D. Machine learning approach for reducing students dropout rates. Int. J. Adv. Comput. Res. 2019, 9, 156–169.

- Mnyawami, Y.N.; Maziku, H.; Mushi, J.C. Enhanced Model for Predicting Student Dropouts in Developing Countries Using Automated Machine Learning Approach: A Case of Tanzanian’s Secondary Schools. Appl. Artif. Intell. 2022, 36, 2071406.

- Sani, G.; Oladipo, F.; Ogbuju, E.; Agbo, F.J. Development of a Predictive Model of Student Attrition Rate. J. Appl. Artif. Intell. 2022, 3, 1–12.

- Fauszt, T.; Erdélyi, K.; Dobák, D.; Bognár, L.; Kovács, E. Design of a Machine Learning Model to Predict Student Attrition. Int. J. Emerg. Technol. Learn. 2023, 18, 184.

- Nagy, M. Interpretable Dropout Prediction: Towards XAI-Based Personalized Intervention. Int. J. Artif. Intell. Educ. 2023, 34, 274–300.

- Berens, J.; Schneider, K.; Görtz, S.; Oster, S.; Burghoff, J. Early Detection of Students at Risk—Predicting Student Dropouts Using Administrative Student Data from German Universities and Machine Learning Methods. In Proceedings of the Educational Data Mining Conference, Montréal, QC, Canada, 2–5 July 2019; Volume 11, pp. 1–41.

- Glandorf, D.; Lee, H.R.; Orona, G.A.; Pumptow, M.; Yu, R.; Fischer, C. Temporal and Between-Group Variability in College Dropout Prediction. In Proceedings of the 14th Learning Analytics and Knowledge Conference, Kyoto, Japan, 18–22 March 2024.

- Hammoodi, M.S.; Al-Azawei, A.H.S. Using Socio-Demographic Information in Predicting Students’ Degree Completion based on a Dynamic Model. Int. J. Intell. Eng. Syst. 2022, 15, 107–115.

- Perchinunno, P.; Bilancia, M.; Vitale, D. A Statistical Analysis of Factors Affecting Higher Education Dropouts. Soc. Indic. Res. 2021, 156, 341–362.

- Vaarma, M.; Li, H. Predicting student dropouts with machine learning: An empirical study in Finnish higher education. Technol. Soc. 2024, 76, 102474.

- Ortiz-Lozano, J.M.; Aparicio-Chueca, P.; Triadó-Ivern, X.; Arroyo-Barrigüete, J.L. Early dropout predictors in social sciences and management degree students. Stud. High. Educ. 2023, 49, 1303–1316.

- Delogu, M.; Lagravinese, R.; Paolini, D.; Resce, G. Predicting dropout from higher education: Evidence from Italy. Econ. Model. 2024, 130, 106583.

- Simón, E.J.L.; Puerta, J.G. Prediction of early dropout in higher education using the SCPQ. Cogent Psychol. 2022, 9, 2123588.

- Fernández-García, A.J.; Preciado, J.C.; Melchor, F.; Rodríguez-Echeverría, R.; Conejero, J.M.; Sánchez-Figueroa, F. A Real-Life Machine Learning Experience for Predicting University Dropout at Different Stages Using Academic Data. IEEE Access 2021, 9, 133076–133090.

- Blanquet, L.; Grilo, J.; Strecht, P.; Camanho, A. Curbing Dropout: Predictive Analytics at the University of Porto. In Proceedings of the 23rd Conference of the Portuguese Association for Information Systems (CAPSI 2023), Porto, Portugal, 19–21 October 2023; p. 14. Available online: https://aisel.aisnet.org/capsi2023/14 (accessed on 15 April 2025).

- Realinho, V.; Machado, J.A.D.; Baptista, L.M.T.; Martins, M. Predicting Student Dropout and Academic Success. Data 2022, 7, 146.

- Martins, M.V.; Baptista, L.; Machado, J.; Realinho, V. Multi-class phased prediction of academic performance and dropout in higher education. Appl. Sci. 2023, 13, 4702.

- Bassetti, E.; Conti, A.; Panizzi, E.; Tolomei, G. ISIDE: Proactively Assist University Students at Risk of Dropout. In Proceedings of the 2022 IEEE International Conference on Big Data (BigData 2022), Osaka, Japan, 17–20 December 2022; pp. 1776–1783.

- Segura, M.; Mello, J.; Hernández, A. Machine Learning Prediction of University Student Dropout: Does Preference Play a Key Role? Mathematics 2022, 10, 3359.

- Agrusti, F.; Bonavolonta, G.; Mezzini, M. Use of Artificial Intelligence to Predict University Dropout: A Quantitative Research. In Proceedings of the EDEN Conference Proceedings, Virtual, 21–24 June 2020; pp. 245–254.