Shaping the Future of Higher Education: A Technology Usage Study on Generative AI Innovations

Abstract

1. Introduction

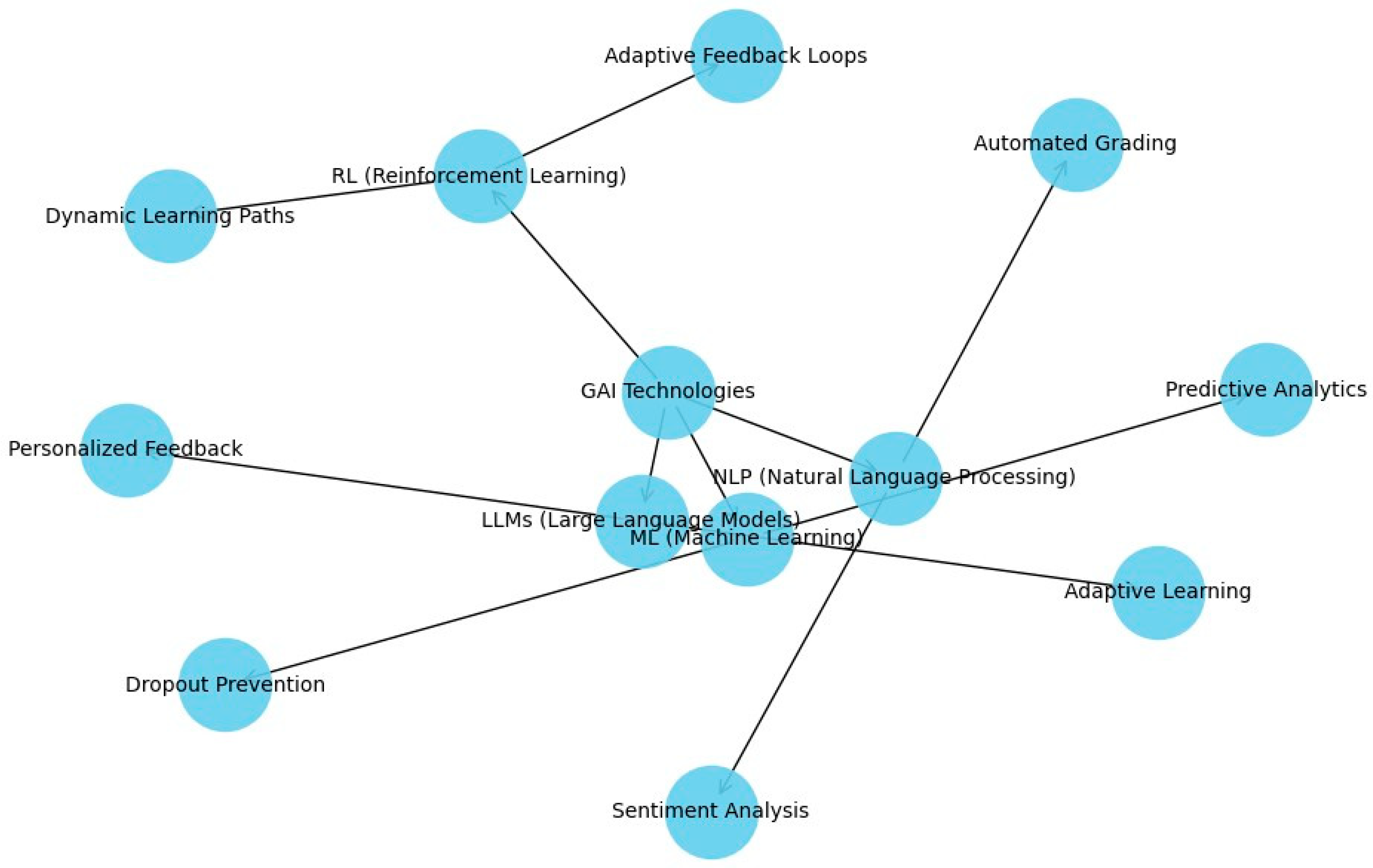

2. Technological Foundations of GAI in Higher Education

2.1. Large Language Models (LLMs): A Foundation for Interaction

2.2. Machine Learning (ML): Unlocking Patterns and Predictions

2.3. Natural Language Processing (NLP): Bridging Communication Gaps

2.4. Reinforcement Learning (RL): Adaptive and Interactive Learning Systems

2.5. Explainable AI (XAI): Building Transparency and Trust

2.6. Multimodal Learning Analytics (MMLA): Integrating Diverse Data Sources

3. Methodological Framework for Analyzing GAI in Higher Education

3.1. Rationale for the Review in the Context of Existing Knowledge

- (1) Large language models (LLMs) are widely used for personalized learning and automated grading.
- (2) Machine learning (ML) is applied to predict student performance and identify at-risk students.
- (3) Reinforcement learning (RL) is leveraged for adaptive learning environments.

3.2. Criteria for Inclusion in the Conference and Review

3.2.1. AIED 2024 Conference Inclusion Criteria

- (1) Originality. Novel contributions to AI in education.
- (2) Relevance. Research addressing key challenges in higher education.
- (3) Methodological Rigor. Studies with clear research designs and valid evaluation metrics.
- (4) Impact. Contributions with the potential to address critical gaps or advance the field.

3.2.2. Review Inclusion Criteria

- (1) Educational Relevance. Focus on higher education challenges.
- (2) Technological Diversity. Inclusion of LLMs, NLP, ML, and other GAI technologies.
- (3) Empirical Evidence. Preference for papers with measurable outcomes.
- (4) Comprehensive Scope. Representation across applications such as assessment, engagement, and predictive analytics.

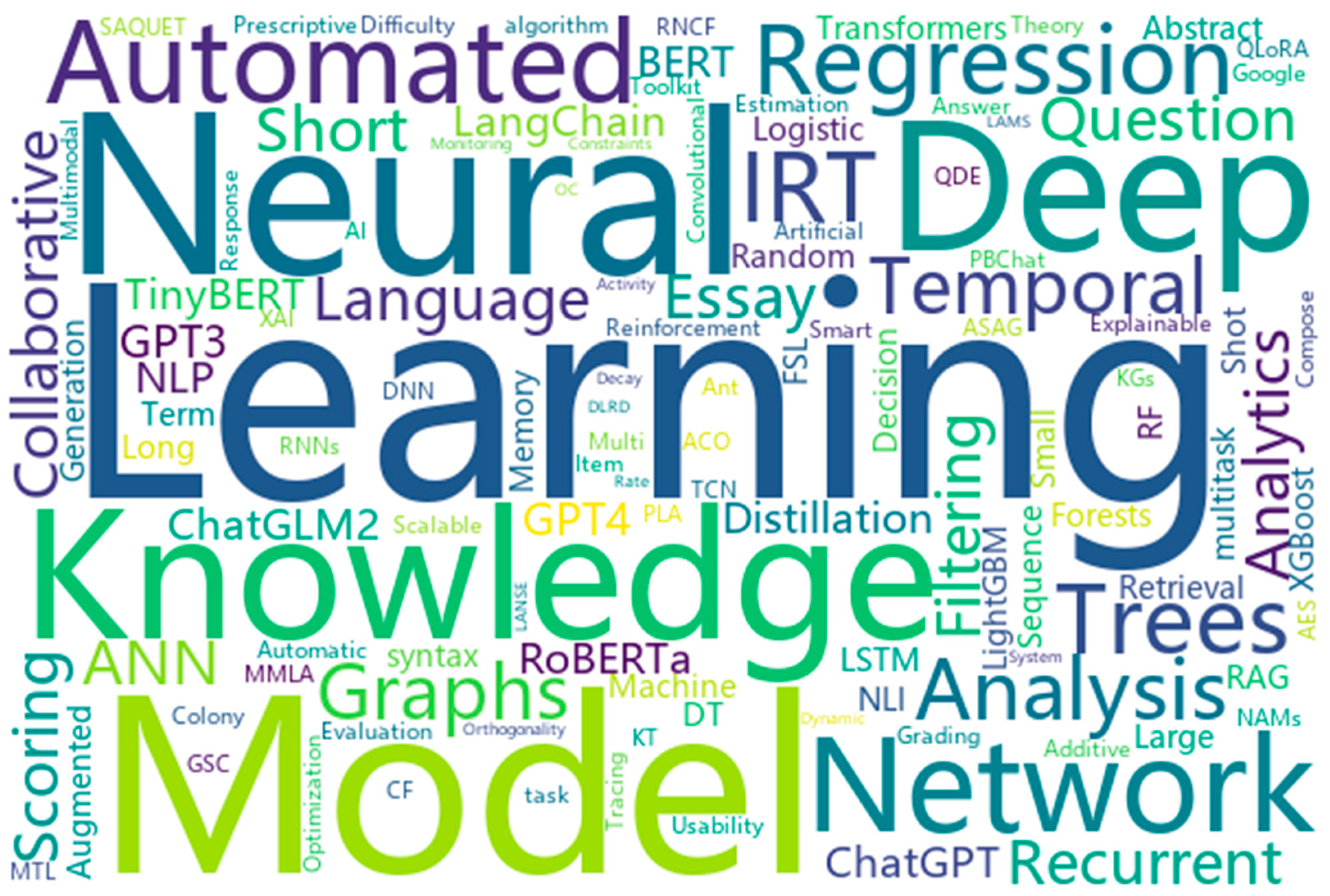

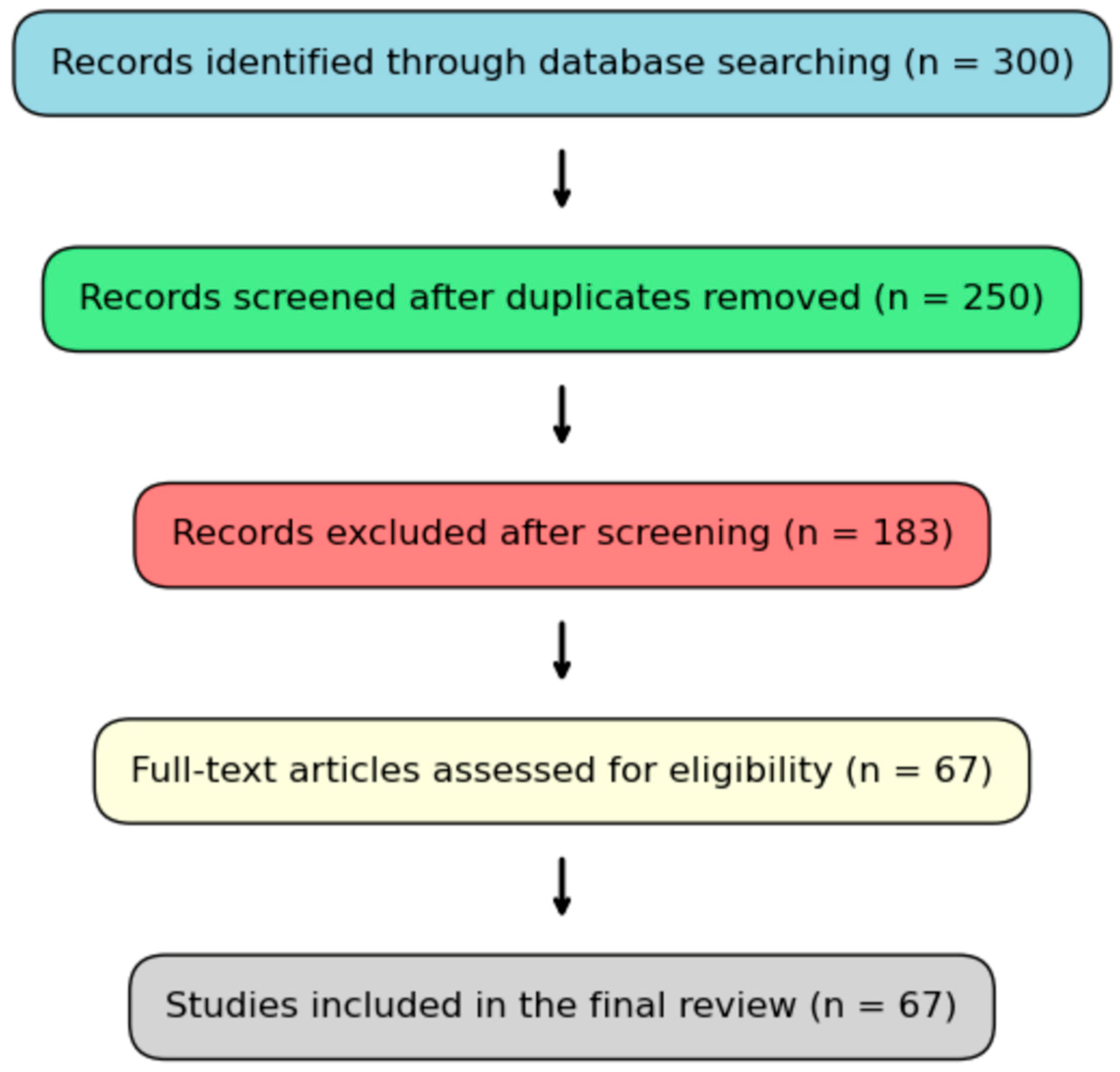

3.3. Systematic Review of the Academic Literature

3.3.1. Search Strategy

- (1) Keywords and Themes. Papers were identified based on their alignment with themes such as “Generative AI in education”, “personalized learning”, “adaptive learning systems”, and “AI-driven educational analytics”.
- (2) Scope of Search. The search included all tracks and sessions of the conference, ensuring comprehensive coverage of GAI applications in higher education.
- (3) Abstract Screening. Abstracts and keywords were screened to determine relevance to this study’s focus on higher education and core GAI technologies.
- (4) Full-Text Review. Papers that passed the abstract screening stage were reviewed in full to ensure they presented empirical findings, actionable insights, or theoretical advancements.
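The screening steps above can be sketched as a two-stage filter. This is a minimal illustration, not the authors' procedure: it assumes case-insensitive substring matching against the four listed themes for step (3), and represents the human full-text judgment of step (4) as hypothetical reviewer flags on a hypothetical `Paper` record.

```python
from dataclasses import dataclass, field

# Themes named in the search strategy. Case-insensitive substring matching is an
# assumption for illustration; the review does not specify a matching rule.
THEMES = (
    "generative ai in education",
    "personalized learning",
    "adaptive learning systems",
    "ai-driven educational analytics",
)


@dataclass
class Paper:
    title: str
    abstract: str
    keywords: list = field(default_factory=list)
    # Hypothetical flags recorded by a human reviewer during full-text review.
    empirical_findings: bool = False
    actionable_insights: bool = False
    theoretical_advancement: bool = False


def passes_abstract_screening(paper: Paper) -> bool:
    """Step (3): keep papers whose title, abstract, or keywords mention a theme."""
    text = " ".join([paper.title, paper.abstract, *paper.keywords]).lower()
    return any(theme in text for theme in THEMES)


def passes_full_text_review(paper: Paper) -> bool:
    """Step (4): keep papers with empirical findings, actionable insights,
    or theoretical advancements."""
    return (paper.empirical_findings
            or paper.actionable_insights
            or paper.theoretical_advancement)


def screen(papers):
    """Run both steps in order; only abstract-screened papers reach full-text review."""
    return [p for p in papers
            if passes_abstract_screening(p) and passes_full_text_review(p)]


# Usage with made-up records (not papers from the review):
papers = [
    Paper("Tutor study", "We evaluate personalized learning with an LLM tutor.",
          empirical_findings=True),
    Paper("Parking study", "A queueing model of campus parking.",
          empirical_findings=True),
    Paper("Quiz study", "Adaptive learning systems for calculus quizzes."),
]
print([p.title for p in screen(papers)])  # ['Tutor study']
```

Literal theme matching is deliberately strict here; a real screen would likely add synonyms or rely on reviewer judgment at step (3) as well.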

3.3.2. Screening and Selection

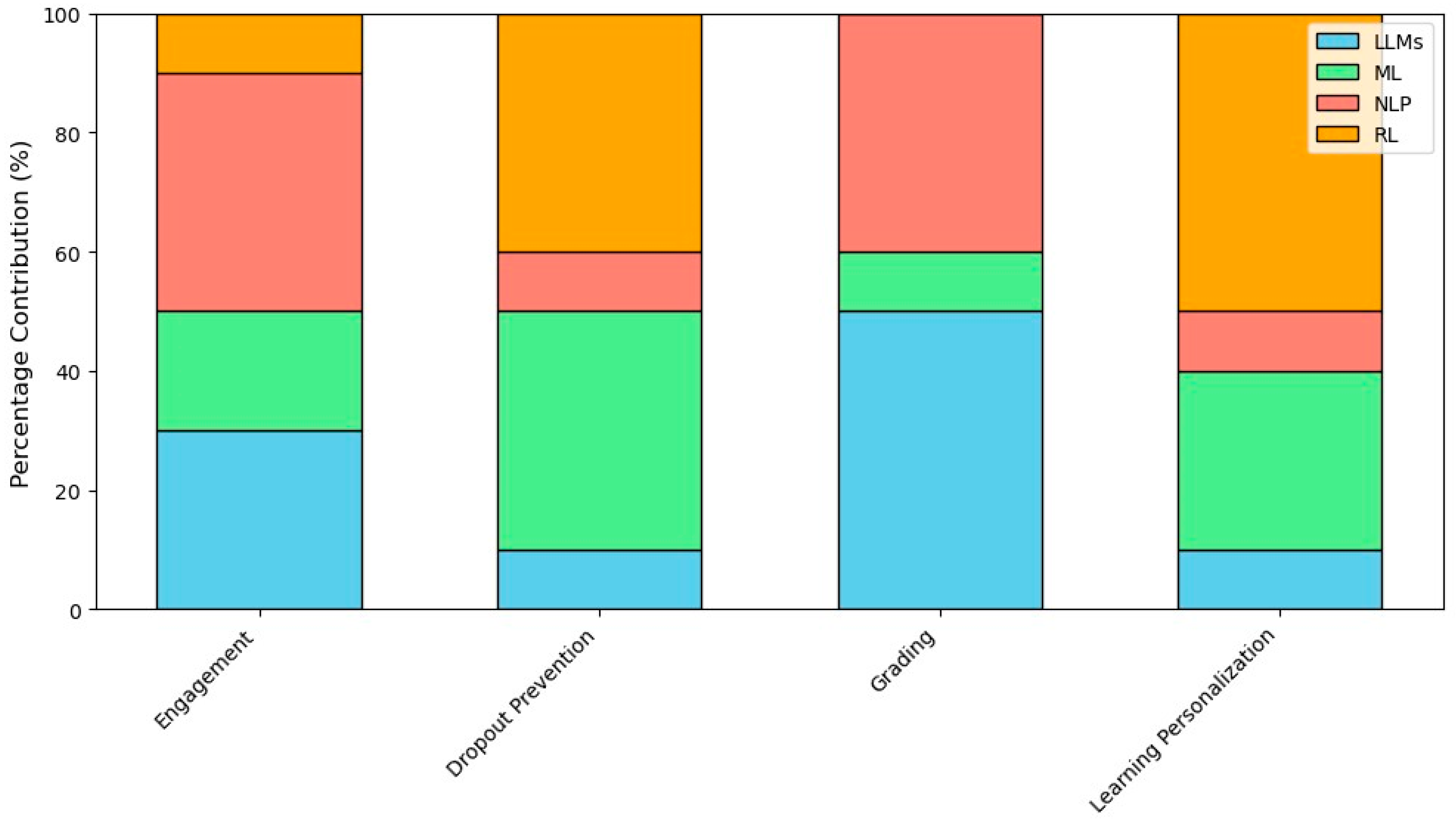

3.3.3. Synthesis of Findings

- (1) Technological Focus. Papers were grouped according to the core GAI technologies discussed, such as large language models (LLMs), machine learning (ML), natural language processing (NLP), reinforcement learning (RL), and knowledge tracing (KT).
- (2) Application Areas. Studies were further categorized by the higher education challenges they addressed, such as personalized learning, predictive analytics, and assessment automation.
- (3) Reported Outcomes. Empirical findings, such as improvements in engagement, were extracted to evaluate the impact of GAI technologies.
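The three coding dimensions above amount to a technology-by-application matrix with outcomes attached to each paper. The sketch below assumes each paper has already been coded by hand into a tuple of (ID, technologies, applications, outcomes); the record format, paper IDs, and outcome strings are hypothetical placeholders, not data from the review.

```python
from collections import defaultdict


def synthesize(coded_papers):
    """Group coded papers by (technology, application) and collect reported outcomes.

    Each record is (paper_id, technologies, applications, outcomes). A paper coded
    with several technologies or applications is listed in every matching cell.
    """
    matrix = defaultdict(list)
    outcomes = {}
    for paper_id, technologies, applications, reported in coded_papers:
        for tech in technologies:
            for app in applications:
                matrix[(tech, app)].append(paper_id)
        outcomes[paper_id] = list(reported)
    return dict(matrix), outcomes


# Hypothetical coding records; IDs and outcomes are placeholders, not review data.
coded = [
    ("P1", ["LLM"], ["assessment automation"], ["engagement improvement"]),
    ("P2", ["ML", "KT"], ["predictive analytics"], []),
    ("P3", ["LLM"], ["personalized learning", "assessment automation"], []),
]
matrix, outcomes = synthesize(coded)
for (tech, app), ids in sorted(matrix.items()):
    print(f"{tech:>4} | {app:<22} | {ids}")
```

Listing a multi-technology paper in every matching cell keeps counts per cell honest but means the cell totals can exceed the number of papers.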

3.4. Analytical Framework for Evaluating Case Studies

3.4.1. Selection Criteria

- (1) Technological Diversity. The case studies represent a range of GAI technologies, including large language models (LLMs), natural language processing (NLP), machine learning (ML), and explainable AI (XAI).
- (2) Educational Relevance. The cases address critical challenges in higher education, such as engagement, personalized learning, assessment, and early intervention.
- (3) Empirical Evidence. Only case studies with measurable outcomes, such as improved retention or reduced workload, were included.
- (4) Comprehensive Scope. The selected cases span a wide array of applications, from automated grading to career readiness prediction.

3.4.2. Evaluation Framework

- (1) Technological Role. How GAI technologies address specific challenges.
- (2) Applications Across Contexts. Implementation in areas such as grading, personalized learning, and performance prediction.
- (3) Challenges and Limitations. Barriers to adoption, such as technical complexity and ethical concerns.

4. Case Studies and Applications of GAI in Higher Education

4.1. Leveraging Generative AI for Enhancing Student Engagement and Behavior Analysis

4.1.1. Enhancing Self-Regulated Learning with Learning Analytics Dashboards (LADs)

4.1.2. Diagnosing Student Problem Behaviors with PBChat

4.1.3. Multimodal Learning Analytics in Mixed-Reality Educational Environments

4.2. Advancing Educational Assessment with Generative AI

4.2.1. Enhancing Grading Transparency and Interpretability

4.2.2. Improving Assessment in Programming Education

4.2.3. Improving Complex Grading Tasks with NLP

4.2.4. Categorizing and Evaluating Student Responses

4.2.5. Advancing Essay Scoring with Large Language Models

4.2.6. Refining Automated Feedback Mechanisms

4.2.7. Addressing Linguistic Diversity in Essay Grading

4.2.8. Enhancing Essay Scoring Accuracy with Contextual Features

4.2.9. Improving Confidence Estimation in AES

4.2.10. Improving AI Feedback with Error Handling

4.2.11. Optimizing AI Models for Scoring Efficiency

4.2.12. Enhancing Text Cohesion Assessment with AI

4.2.13. Optimizing Grading Efficiency with AI

4.2.14. Improving AI Scoring Accuracy Through Example Selection

4.3. Advancements in Predictive Modeling for Student Performance

4.3.1. Knowledge Tracing with Large Language Models (LLMs)

4.3.2. Recurrent Neural Collaborative Filtering (RNCF) for Knowledge Tracing

4.3.3. Data-Driven Prediction of Student Performance

4.3.4. Physiological Signals for Performance Prediction

4.3.5. Algorithmic Fairness in Performance Prediction

4.3.6. Predicting Performance in Automated Essay Scoring with IRT Integration

4.3.7. Early Prediction in Intelligent Tutoring Systems

4.4. Generative AI in Enhancing Programming Education: Tools, Methods, and Insights

4.4.1. Enhancing Debugging Skills Through LLMs

4.4.2. Generative AI for Conceptual Support and Debugging

4.4.3. Assessing Code Explanations with Semantic Similarity

4.4.4. Logic Block Analysis for Feedback in Programming Submissions

4.4.5. ChatGPT’s Role in Programming Education

4.4.6. Gamified Programming Exercises with Generative AI

4.4.7. Dynamic Feedback in Collaborative Programming

4.5. Advancements in GAI for Educational Question Generation

4.5.1. Large vs. Small Language Models for Educational Question Generation

4.5.2. Reinforcement Learning for Educational Question Generation

4.5.3. Improving Content Generation with Retrieval-Augmented Generation (RAG)

4.5.4. Contextualized Multiple-Choice Question Generation for Mathematics

4.5.5. Evaluating LLMs for Bloom’s Taxonomy-Based Question Generation

4.5.6. GPT-4 for Bloom’s Taxonomy-Aligned MCQ Generation in Biology

4.6. GAI for Educators: Enhancing Decision Making and Teaching Practices

4.6.1. Enhancing Decision Making with AI-Driven Learning Analytics

4.6.2. Improving Teacher Professional Development with AI Frameworks

4.6.3. Automating Educational Test Item Evaluation with AI

4.6.4. Personalized Pedagogical Recommendations with AI

4.6.5. AI for Quality Control in Multiple-Choice Question Design

4.6.6. Enhancing Personalized Learning with Memory Modeling

4.6.7. AI-Enhanced Peer Feedback for Collaborative Learning

4.6.8. Analyzing Collaborative Learning with AI in Interdisciplinary Contexts

4.6.9. Conversational AI for Engaging Biology Education

4.6.10. Ensuring Academic Integrity with AI-Generated Content Detection

4.6.11. Optimizing Question Difficulty Estimation with Knowledge Graphs

4.7. GAI for Learners: Enhancing Personalized Learning and Educational Experiences

4.7.1. Improving Learner Engagement with AI-Generated Scaffoldings in ITS

4.7.2. Cultural Intelligence and Personalized Learning with AI Chatbots

4.7.3. Task-Based Learning and Instructional Video Enhancement with Generative AI

4.7.4. Expanding Online Learning Content with AI-Generated Educational Videos

4.7.5. AI-Driven Conversational Systems for Healthcare Education

4.7.6. Reinforcement Learning for Personalized Feedback in Math Education

4.7.7. AI-Powered Conversational Assistants for Online Classrooms

4.7.8. Hybrid AI–Human Collaboration for Educational Data Coding

4.7.9. Personalized Vocabulary Learning with AI

4.7.10. Intelligent Tutoring System for Advanced Mathematics

4.7.11. Generative AI for Scalable Feedback Generation in Higher Education

4.7.12. ChatGPT’s Impact on Knowledge Comprehension

4.7.13. AI-Driven Personalized Learning Feedback for Large-Scale Courses

4.8. Generative AI for Student Success: Early Detection and Career Readiness Prediction

4.8.1. Multitasking Models for Identifying At-Risk Students

4.8.2. LLMs for Predicting Student Dropout

4.8.3. Learning Analytics for Student Dropout Prediction

4.8.4. ACO-LSTM Model for Dropout Prediction

4.8.5. Career Readiness Prediction Using LLMs

5. Challenges and Limitations

5.1. Scalability and Consistency

5.2. Data Imbalances and Quality Issues

5.3. Model Accuracy and Transparency

5.4. Ethical Concerns

5.5. User Trust and Engagement

5.6. Practical Implementation and Real-World Applicability

5.7. Long-Term Impact and Sustainability

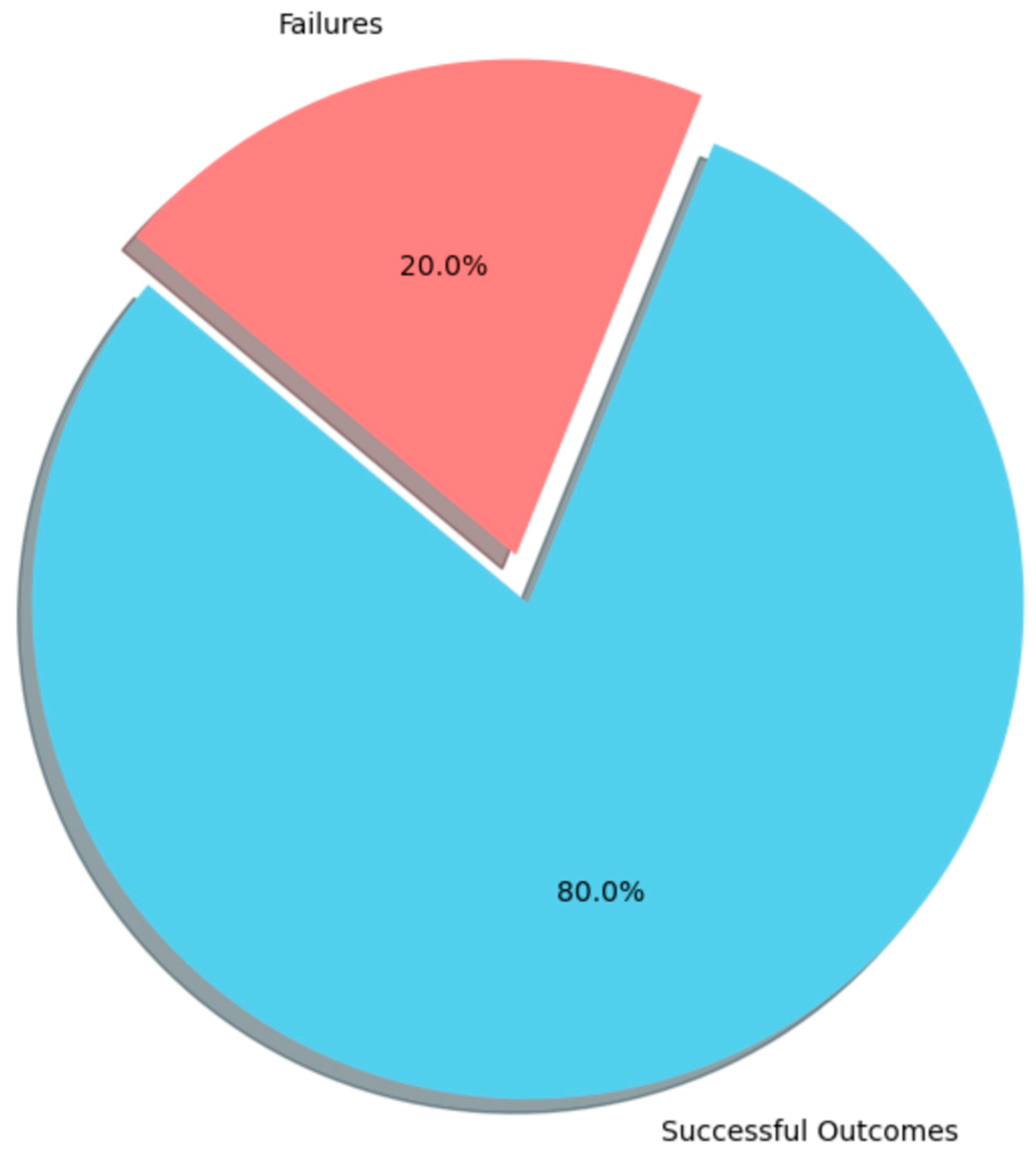

5.8. Failures in GAI Applications

6. Future Directions in Generative AI in Education

6.1. Enhancing Personalization and Adaptivity

6.2. Ethical and Bias Considerations in Generative AI

6.3. Improving System Usability and Trustworthiness

6.4. Scalable and Robust AI Models for Diverse Educational Contexts

6.5. Long-Term Impact and Integration into Curriculum Design

6.6. Interdisciplinary Collaboration for Holistic AI Development

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Moore, R.; Caines, A.; Buttery, P. Recurrent Neural Collaborative Filtering for Knowledge Tracing. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

2. Messer, M.; Shi, M.; Brown, N.C.C.; Kölling, M. Grading Documentation with Machine Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

3. Cechinel, C.; Queiroga, E.M.; Primo, T.T.; dos Santos, H.L.; Ramos, V.F.C.; Munoz, R.; Mello, R.F.; Machado, M.F.B. LANSE: A Cloud-Powered Learning Analytics Platform for the Automated Identification of Students at Risk in Learning Management Systems. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

4. Yan, L.; Zhao, L.; Echeverria, V.; Jin, Y.; Alfredo, R.; Li, X.; Gašević, D.; Martinez-Maldonado, R. VizChat: Enhancing Learning Analytics Dashboards with Contextualised Explanations Using Multimodal Generative AI Chatbots. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

5. Stamper, J.; Xiao, R.; Hou, X. Enhancing LLM-Based Feedback: Insights from Intelligent Tutoring Systems and the Learning Sciences. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

6. Machado, G.M.; Soni, A. Predicting Academic Performance: A Comprehensive Electrodermal Activity Study. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

7. Ma, Q.; Shen, H.; Koedinger, K.; Wu, S.T. How to Teach Programming in the AI Era? Using LLMs as a Teachable Agent for Debugging. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

8. Banjade, R.; Oli, P.; Sajib, M.I.; Rus, V. Identifying Gaps in Students’ Explanations of Code Using LLMs. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

9. Naik, A.; Yin, J.R.; Kamath, A.; Ma, Q.; Wu, S.T.; Murray, C.; Bogart, C.; Sakr, M.; Rose, C.P. Generating Situated Reflection Triggers About Alternative Solution Paths: A Case Study of Generative AI for Computer-Supported Collaborative Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

10. Hwang, H. The Role of First Language in Automated Essay Grading for Second Language Writing. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

11. Sonkar, S.; Ni, K.; Tran Lu, L.; Kincaid, K.; Hutchinson, J.S.; Baraniuk, R.G. Automated Long Answer Grading with RiceChem Dataset. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

12. Dey, I.; Gnesdilow, D.; Passonneau, R.; Puntambekar, S. Potential Pitfalls of False Positives. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

13. Sil, P.; Chaudhuri, P.; Raman, B. Can AI Assistance Aid in the Grading of Handwritten Answer Sheets? In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

14. Scarlatos, A.; Smith, D.; Woodhead, S.; Lan, A. Improving the Validity of Automatically Generated Feedback via Reinforcement Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

15. Liang, Z.; Sha, L.; Tsai, Y.S.; Gašević, D.; Chen, G. Towards the Automated Generation of Readily Applicable Personalised Feedback in Education. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

16. Rosa, B.A.B.; Oliveira, H.; Mello, R.F. Prediction of Essay Cohesion in Portuguese Based on Item Response Theory in Machine Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

17. Fonteles, J.; Davalos, E.; Ashwin, T.S.; Zhang, Y.; Zhou, M.; Ayalon, E.; Lane, A.; Steinberg, S.; Anton, G.; Danish, J.; et al. A First Step in Using Machine Learning Methods to Enhance Interaction Analysis for Embodied Learning Environments. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

18. Villalobos, E.; Pérez-Sanagustín, M.; Broisin, J. From Learning Actions to Dynamics: Characterizing Students’ Individual Temporal Behavior with Sequence Analysis. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

19. Condor, A.; Pardos, Z. Explainable Automatic Grading with Neural Additive Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

20. Chen, P.; Fan, Z.; Lu, Y.; Xu, Q. PBChat: Enhance Student’s Problem Behavior Diagnosis with Large Language Model. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

21. Zhan, B.; Guo, T.; Li, X.; Hou, M.; Liang, Q.; Gao, B.; Luo, W.; Liu, Z. Knowledge Tracing as Language Processing: A Large-Scale Autoregressive Paradigm. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

22. Blanchard, E.G.; Mohammed, P. On Cultural Intelligence in LLM-Based Chatbots: Implications for Artificial Intelligence in Education. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

23. Latif, E.; Fang, L.; Ma, P.; Zhai, X. Knowledge Distillation of LLMs for Automatic Scoring of Science Assessments. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

24. de Souza Cabral, L.; Dwan Pereira, F.; Ferreira Mello, R. Enhancing Algorithmic Fairness in Student Performance Prediction Through Unbiased and Equitable Machine Learning Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

25. Pan, K.W.; Jeffries, B.; Koprinska, I. Predicting Successful Programming Submissions Based on Critical Logic Blocks. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

26. Uto, M.; Takahashi, Y. Neural Automated Essay Scoring for Improved Confidence Estimation and Score Prediction Through Integrated Classification and Regression. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

27. Yu, H.; Allessio, D.A.; Rebelsky, W.; Murray, T.; Magee, J.J.; Arroyo, I.; Woolf, B.P.; Bargal, S.A.; Betke, M. Affect Behavior Prediction: Using Transformers and Timing Information to Make Early Predictions of Student Exercise Outcome. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

28. Li, T.; Bian, C.; Wang, N.; Xie, Y.; Chen, K.; Lu, W. Modeling Learner Memory Based on LSTM Autoencoder and Collaborative Filtering. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

29. Aboukacem, A.; Berrada, I.; Bergou, E.H.; Iraqi, Y.; Mekouar, L. Investigating the Predictive Potential of Large Language Models in Student Dropout Prediction. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

30. Miladi, F.; Psyché, V.; Lemire, D. Leveraging GPT-4 for Accuracy in Education: A Comparative Study on Retrieval-Augmented Generation in MOOCs. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

31. Galhardi, L.; Herculano, M.F.; Rodrigues, L.; Miranda, P.; Oliveira, H.; Cordeiro, T.; Bittencourt, I.I.; Isotani, S.; Mello, R.F. Contextual Features for Automatic Essay Scoring in Portuguese. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

32. Aramaki, K.; Uto, M. Collaborative Essay Evaluation with Human and Neural Graders Using Item Response Theory Under a Nonequivalent Groups Design. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

33. Tsutsumi, E.; Nishio, T.; Ueno, M. Deep-IRT with a Temporal Convolutional Network for Reflecting Students’ Long-Term History of Ability Data. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

34. Li, R.; Wang, Y.; Zheng, C.; Jiang, Y.H.; Jiang, B. Generating Contextualized Mathematics Multiple-Choice Questions Utilizing Large Language Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

35. Elbouknify, I.; Berrada, I.; Mekouar, L.; Iraqi, Y.; Bergou, E.H.; Belhabib, H.; Nail, Y.; Wardi, S. Student At-Risk Identification and Classification Through Multitask Learning: A Case Study on the Moroccan Education System. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

36. Singh, A.K.; Karthikeyan, S. Heuristic Technique to Find Optimal Learning Rate of LSTM for Predicting Student Dropout Rate. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

37. Li, J.; Xu, M.; Zhou, Y.; Zhang, R. Research on Personalized Hybrid Recommendation System for English Word Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

38. Lamsiyah, S.; El Mahdaouy, A.; Nourbakhsh, A.; Schommer, C. Fine-Tuning a Large Language Model with Reinforcement Learning for Educational Question Generation. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

39. Ma, B.; Chen, L.; Konomi, S. Enhancing Programming Education with ChatGPT: A Case Study on Student Perceptions and Interactions in a Python Course. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

40. Chen, S.; Lan, Y.; Yuan, Z. A Multi-task Automated Assessment System for Essay Scoring. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

41. Sonkar, S.; Liu, N.; Mallick, D.B.; Baraniuk, R.G. Marking: Visual Grading with Highlighting Errors and Annotating Missing Bits. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

42. Feng, H.; Du, S.; Zhu, G.; Zou, Y.; Phua, P.B.; Feng, Y.; Zhong, H.; Shen, Z.; Liu, S. Leveraging Large Language Models for Automated Chinese Essay Scoring. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

43. Yoshida, L. The Impact of Example Selection in Few-Shot Prompting on Automated Essay Scoring Using GPT Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

44. Wang, Z.; Koprinska, I.; Jeffries, B. Interpretable Methods for Early Prediction of Student Performance in Programming Courses. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

45. Ghimire, A.; Edwards, J. Coding with AI: How Are Tools Like ChatGPT Being Used by Students in Foundational Programming Courses. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

46. Montella, R.; Giuseppe De Vita, C.; Mellone, G.; Ciricillo, T.; Caramiello, D.; Di Luccio, D.; Kosta, S.; Damasevicius, R.; Maskeliunas, R.; Queiros, R.; et al. GAMAI, an AI-Powered Programming Exercise Gamifier Tool. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

47. Fawzi, F.; Balan, S.; Cukurova, M.; Yilmaz, E.; Bulathwela, S. Towards Human-Like Educational Question Generation with Small Language Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

48. Scaria, N.; Dharani Chenna, S.; Subramani, D. Automated Educational Question Generation at Different Bloom’s Skill Levels Using Large Language Models: Strategies and Evaluation. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

49. Hwang, K.; Wang, K.; Alomair, M.; Choa, F.S.; Chen, L.K. Towards Automated Multiple Choice Question Generation and Evaluation: Aligning with Bloom’s Taxonomy. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

50. Yang, K.; Chu, Y.; Darwin, T.; Han, A.; Li, H.; Wen, H.; Copur-Gencturk, Y.; Tang, J.; Liu, H. Content Knowledge Identification with Multi-agent Large Language Models (LLMs). In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

51. Do, H.; Lee, G.G. Aspect-Based Semantic Textual Similarity for Educational Test Items. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

52. Dehbozorgi, N.; Kunuku, M.T.; Pouriyeh, S. Personalized Pedagogy Through a LLM-Based Recommender System. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

53. Moore, S.; Costello, E.; Nguyen, H.A.; Stamper, J. An Automatic Question Usability Evaluation Toolkit. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

54. Saccardi, I.; Masthoff, J. Adapting Emotional Support in Teams: Quality of Contribution, Emotional Stability and Conscientiousness. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

55. Cohn, C.; Snyder, C.; Montenegro, J.; Biswas, G. Towards a Human-in-the-Loop LLM Approach to Collaborative Discourse Analysis. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

56. Schmucker, R.; Xia, M.; Azaria, A.; Mitchell, T. Ruffle & Riley: Insights from Designing and Evaluating a Large Language Model-Based Conversational Tutoring System. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

57. Oliveira, E.A.; Mohoni, M.; Rios, S. Towards Explainable Authorship Verification: An Approach to Minimise Academic Misconduct in Higher Education. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

58. Gherardi, E.; Benedetto, L.; Matera, M.; Buttery, P. Using Knowledge Graphs to Improve Question Difficulty Estimation from Text. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

59. Pian, Y.; Li, M.; Lu, Y.; Chen, P. From “Giving a Fish” to “Teaching to Fish”: Enhancing ITS Inner Loops with Large Language Models. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

60. Kwon, C.; King, J.; Carney, J.; Stamper, J. A Schema-Based Approach to the Linkage of Multimodal Learning Sources with Generative AI. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

61. Thareja, R.; Dwivedi, D.; Garg, R.; Baghel, S.; Mohania, M.; Shukla, J. EDEN: Enhanced Database Expansion in eLearning: A Method for Automated Generation of Academic Videos. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

62. Garg, A.; Chaudhury, R.; Godbole, M.; Seo, J.H. Leveraging Language Models and Audio-Driven Dynamic Facial Motion Synthesis: A New Paradigm in AI-Driven Interview Training. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

63. Taneja, K.; Maiti, P.; Kakar, S.; Guruprasad, P.; Rao, S.; Goel, A.K. Jill Watson: A Virtual Teaching Assistant Powered by ChatGPT. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

64. Barany, A.; Nasiar, N.; Porter, C.; Zambrano, A.F.; Andres, A.L.; Bright, D.; Shah, M.; Liu, X.; Gao, S.; Zhang, J.; et al. ChatGPT for Education Research: Exploring the Potential of Large Language Models for Qualitative Codebook Development. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

65. Fang, Y.; He, B.; Liu, Z.; Liu, S.; Yan, Z.; Sun, J. Evaluating the Design Features of an Intelligent Tutoring System for Advanced Mathematics Learning. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

66. Chen, L.; Li, G.; Ma, B.; Tang, C.; Okubo, F.; Shimada, A. How Do Strategies for Using ChatGPT Affect Knowledge Comprehension? In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

67. Cui, C.; Abdalla, A.; Wijaya, D.; Solberg, S.; Bargal, S.A. Large Language Models for Career Readiness Prediction. In Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024.

| Technology | Representative References | Use Cases |

|---|---|---|

| Sequence Analysis, Temporal Analysis | [18] | Applied in analyzing temporal patterns of student behavior and engagement across multiple learning sessions, helping to identify trends and predict potential performance issues or dropout risks. |

| Neural Additive Models (NAMs) | [19] | Utilized in building predictive models that are both accurate and interpretable, helping instructors understand the contributing factors behind student success and performance outcomes. |

| Explainable AI (XAI) | [15,16] | Employed to make AI-driven predictions (e.g., in automated grading or student success models) more understandable and transparent, enabling instructors to trust and act on AI recommendations. |

| ChatGLM2 Model, QLoRA Algorithm | [20] | Applied in diagnosing and predicting student problem behaviors, providing a personalized learning assistant via chat, and offering tailored interventions to improve student outcomes. |

| Multimodal Learning Analytics (MMLA) | [17] | Combines data from different learning platforms (e.g., learning management systems, student interactions) to track performance, predict engagement levels, and deliver tailored interventions. |

| Large Language Models (LLMs) | [21,22,23] | Used for knowledge tracing, automated essay grading, and providing personalized learning experiences through adaptive conversational agents, such as ChatGPT, enhancing student engagement and feedback. |

| Knowledge Tracing (KT) | [1] | Used in tracking and predicting student knowledge progression, identifying gaps in understanding, and providing timely interventions for at-risk students, often incorporated in intelligent tutoring systems. |

| Machine Learning (ML) | [2,10] | Applied in various educational contexts to predict student success, personalize learning pathways, and optimize learning materials based on past performance, thus enhancing student retention. |

| Decision Trees (DTs), Random Forests (RFs) | [24,25] | Used for predicting student success, dropout rates, and performance in assessments by analyzing historical student data, enabling early interventions for struggling students. |

| Transformers (BERT, RoBERTa, TinyBERT) | [26,27] | BERT-based models are applied in automated essay grading, improving the accuracy of text comprehension tasks and enhancing the evaluation of written assignments in real time. |
| Collaborative Filtering (CF) | [28] | Used in adaptive learning systems and recommendation engines to suggest personalized learning content, resources, and activities based on student preferences and learning behaviors. |
| Knowledge Graphs (KGs) | [29] | Employed to model the relationships between learning concepts and student data, improving question difficulty estimation and enhancing dropout prediction by capturing contextual information. |
| Reinforcement Learning (RL) | [14], | Applied in adaptive learning environments where AI models learn from student interactions, optimizing learning paths and predicting when and how to provide interventions for improved outcomes. |
| Retrieval-Augmented Generation (RAG) | [29,30] | Used in dropout prediction models and personalized learning systems, where the AI model retrieves relevant learning materials to generate tailored recommendations for at-risk students. |
| Automated Essay Grading (AEG) | [31,32] | Implemented in AI-based grading systems to evaluate student essays efficiently, offering real-time feedback on content quality, grammar, structure, and relevance while reducing instructor workload. |
| Prescriptive Learning Analytics (PLA) | [15] | Combined with XAI, prescriptive analytics offer actionable insights to educators, suggesting personalized interventions to improve student outcomes, particularly for struggling learners. |
| Item Response Theory (IRT) | [16,33] | Applied in assessing student proficiency on standardized tests or quizzes, analyzing item responses to estimate overall mastery levels and to inform personalized learning interventions. |
| Natural Language Processing (NLP) | [11,12,13] | Used in automated feedback generation, essay grading, and student sentiment analysis, enhancing interactions with AI tools and enabling real-time academic support based on student input. |
| LangChain Framework | [34] | Utilized for integrating various generative AI tools (e.g., GPT-4, knowledge graphs) into a cohesive framework for personalized learning, adaptive feedback systems, and interactive student assistants. |
| XGBoost | [35] | Applied in predictive models of student success, dropout risk, and academic performance, where gradient-boosted decision trees trained on complex student datasets yield highly accurate predictions. |
| Long Short-Term Memory (LSTM) | [36,37] | Employed in sequence prediction tasks, such as analyzing student performance trends and predicting future learning trajectories from historical engagement data and test results. |
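The IRT entry in the table above can be made concrete with the two-parameter logistic (2PL) model. Under this model, the probability that a student of ability θ answers item *i* correctly is 1 / (1 + exp(−a_i(θ − b_i))), where a_i is the item's discrimination and b_i its difficulty. The sketch below estimates θ by maximizing the log-likelihood of a response pattern over a grid. The item parameters and responses are hypothetical values chosen for illustration; they are not data or code from [16,33].

```python
import math

def p_correct(theta: float, a: float, b: float) -> float:
    """2PL IRT: probability of a correct answer given ability theta,
    item discrimination a, and item difficulty b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def log_likelihood(theta, items, responses):
    """Sum of Bernoulli log-likelihoods over all answered items."""
    ll = 0.0
    for (a, b), r in zip(items, responses):
        p = p_correct(theta, a, b)
        ll += math.log(p) if r == 1 else math.log(1.0 - p)
    return ll

def estimate_ability(items, responses, lo=-4.0, hi=4.0, step=0.01):
    """Maximum-likelihood ability estimate via a simple grid search.
    The finite bounds keep the estimate defined even for all-correct
    or all-wrong response patterns."""
    n = int(round((hi - lo) / step))
    grid = [lo + k * step for k in range(n + 1)]
    return max(grid, key=lambda t: log_likelihood(t, items, responses))

# Hypothetical item bank: (discrimination a, difficulty b)
items = [(1.0, -1.0), (1.2, 0.0), (0.8, 0.5), (1.5, 1.5)]
responses = [1, 1, 0, 0]  # the student answered the two easiest items correctly
theta_hat = estimate_ability(items, responses)
print(f"estimated ability: {theta_hat:.2f}")
```

A grid search keeps the sketch dependency-free. Operational testing programs more often use Newton-type or Bayesian (e.g., expected a posteriori) estimators.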

| Criterion | Description |
|---|---|
| Originality | Novel contributions to AI in education. |
| Relevance | Addressing significant challenges in higher education. |
| Methodological Rigor | Clear research designs, reproducible results, and valid metrics. |
| Impact | Addressing critical gaps or advancing the field of GAI in education. |

| Case Study Title | Technology Used | Application |
|---|---|---|
| Leveraging Generative AI for Enhancing Student Engagement and Behavior Analysis | Multimodal Learning Analytics (MMLA), PBChat | Analyzing student behavior and improving engagement |
| Advancing Educational Assessment with Generative AI | Large Language Models (GPT-4), NLP | Automated essay grading and personalized feedback |
| Advancements in Predictive Modeling for Student Performance | ML (Random Forests), LLMs | Knowledge tracing and student performance prediction |
| Generative AI in Enhancing Programming Education | Programming-Specific GAI Tools | Debugging, code comprehension, and automated feedback |
| Advancements in GAI for Educational Question Generation | LLMs, Small Language Models (sLMs) | Generating diverse educational questions |
| GAI for Educators: Enhancing Decision-Making and Teaching Practices | GPT, NLP | Improving assessment and streamlining administrative tasks |
| GAI for Learners: Enhancing Personalized Learning and Educational Experiences | Intelligent Tutoring Systems (ITSs), LLMs | Personalized learning and adaptive feedback |
| Generative AI for Student Success: Early Detection and Career Readiness Prediction | Multitasking AI Models, Career Readiness Models | Early identification of at-risk students and career readiness prediction |

| Study | Key Focus | Key Findings and Innovations |
|---|---|---|
| [18,20] | Enhancing Self-Regulated Learning with LADs and Diagnosing Student Behaviors with PBChat | LADs support SRL by providing real-time insights into learning behaviors. PBChat uses GAI to diagnose student behaviors, enabling systematic and scalable interventions. |
| [17] | Multimodal Learning Analytics in Mixed-Reality Educational Environments | Integrating ML and MMLA in a mixed-reality setting provides real-time insights into student behaviors, enhancing engagement in scientific learning tasks such as photosynthesis. |
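Before a model can learn from multimodal learning analytics, it usually has to align data streams that are sampled at different times. One simple way to do this is feature-level (early) fusion, sketched below. Each hypothetical stream of (timestamp, value) pairs, here gaze-on-task flags, speech activity, and interaction-log counts, is averaged over fixed time windows, and the per-modality results are concatenated into one feature vector per window. The stream names, the 10-second window, and the zero-fill rule are all assumptions for illustration; this is not the pipeline used in [17].

```python
from collections import defaultdict

def window_features(streams, window=10.0):
    """Early fusion of multimodal streams.

    streams: dict mapping modality name -> list of (timestamp_seconds, value)
    Returns a dict mapping window index -> feature vector, with one entry per
    modality in sorted-name order. A modality with no samples in a window
    contributes 0.0 (a simplifying assumption).
    """
    modalities = sorted(streams)
    # buckets[window_index][modality] -> list of values in that window
    buckets = defaultdict(lambda: defaultdict(list))
    for m in modalities:
        for t, v in streams[m]:
            buckets[int(t // window)][m].append(v)
    fused = {}
    for w in sorted(buckets):
        fused[w] = [
            sum(buckets[w][m]) / len(buckets[w][m]) if buckets[w][m] else 0.0
            for m in modalities
        ]
    return fused

# Hypothetical sensor and log data for one student
streams = {
    "gaze_on_task": [(1.0, 1), (4.0, 0), (12.0, 1), (15.0, 1)],  # 1 = looking at task area
    "speech_active": [(2.0, 1), (11.0, 0)],                      # 1 = student speaking
    "log_events": [(3.0, 2), (8.0, 4), (19.0, 1)],               # clicks per event burst
}
fused = window_features(streams)
for w, vec in fused.items():
    print(w, vec)  # feature order: gaze_on_task, log_events, speech_active
```

Real MMLA systems often replace the zero-fill with imputation or missing-data masks, and the per-window mean with richer statistics per modality.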

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [19] | Grading Transparency | Neural additive models (NAMs) improve grading accuracy and make AI grading decisions more interpretable, supporting the integration of AI into educational systems. |
| [2,40] | Assessment in Programming and Feedback | GAI automates the assessment of code and documentation quality, offering personalized, real-time feedback through models such as BERT and GPT. |
| [10,11,41,42] | Complex Grading and Linguistic Diversity | GAI improves long-answer grading and addresses linguistic diversity in essay grading, providing equitable assessments across languages. |
| [26,31] | Essay Scoring and Confidence Estimation | Integrating IRT and confidence estimation enhances essay scoring accuracy, making GAI more reliable in high-stakes assessments. |
| [12,13,23] | Grading Efficiency and Feedback Optimization | AI-assisted grading systems streamline grading and reduce grading time while improving feedback quality in large-scale assessments. |
| [16,43] | Feedback and Scoring with Example Selection | Careful example selection improves AI feedback accuracy and reduces biases in essay scoring systems. |
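The confidence-estimation idea listed for [26,31] can be illustrated with a simple routing rule. Suppose a scoring model outputs a probability for each score point. Its confidence can then be taken as the largest of those probabilities, and any essay scored below a threshold is sent to a human rater. The sketch below assumes hypothetical model outputs and a threshold of 0.7. The cited works use their own estimators; this shows only the general pattern.

```python
def route_essay(score_probs: dict[int, float], threshold: float = 0.7):
    """Return (predicted_score, confidence, needs_human_review).

    score_probs maps each score point to the model's probability for it
    (expected to sum to 1). Confidence is the maximum class probability.
    """
    total = sum(score_probs.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError(f"probabilities sum to {total}, expected 1")
    score, confidence = max(score_probs.items(), key=lambda kv: kv[1])
    return score, confidence, confidence < threshold

# Hypothetical outputs of an automated essay scorer on a 1-4 scale
confident = {1: 0.02, 2: 0.05, 3: 0.85, 4: 0.08}
uncertain = {1: 0.05, 2: 0.40, 3: 0.45, 4: 0.10}
print(route_essay(confident))  # (3, 0.85, False): keep the automated score
print(route_essay(uncertain))  # (3, 0.45, True): flag for a human rater
```

In a high-stakes setting, the threshold would be tuned on held-out data so that the automated scores that are kept meet a target agreement with human raters.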

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [1,21] | Knowledge Tracing with LLMs and RNCF | LLMs and RNCF improve student performance prediction by enhancing personalization and scalability and by adapting to real-time learning data. |
| [6,44] | Data-Driven and Physiological Prediction | Data-driven models and electrodermal activity (EDA) signals offer accurate predictions of student performance, identifying stress and enabling early interventions. |
| [24] | Algorithmic Fairness in Prediction | Efforts to mitigate biases in performance prediction models contribute to more equitable and interpretable results across student demographics. |
| [27,32] | IRT Integration and Early Prediction | IRT integration in automated essay scoring (AES) improves performance comparison, while affective computing enables early prediction of student success, refining real-time interventions. |
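Knowledge tracing, the first row above, estimates how likely a student is to have mastered a skill, given their sequence of answers. The LLM- and RNCF-based approaches in [1,21] are considerably more complex. As a point of reference, the sketch below shows the classic Bayesian Knowledge Tracing (BKT) update, a different and older technique than those in the cited studies. It uses hypothetical guess, slip, learning, and prior probabilities.

```python
def bkt_update(p_known, correct, p_guess=0.2, p_slip=0.1, p_learn=0.15):
    """One Bayesian Knowledge Tracing step.

    1. Condition P(skill known) on the observed answer using Bayes' rule,
       where p_guess = P(correct | not known) and p_slip = P(wrong | known).
    2. Apply the learning transition: an unknown skill becomes known
       with probability p_learn.
    """
    if correct:
        num = p_known * (1 - p_slip)
        den = num + (1 - p_known) * p_guess
    else:
        num = p_known * p_slip
        den = num + (1 - p_known) * (1 - p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * p_learn

def trace(answers, p_init=0.3, **params):
    """Return the mastery estimate after each answer (1 = correct, 0 = wrong)."""
    p, history = p_init, []
    for a in answers:
        p = bkt_update(p, a, **params)
        history.append(p)
    return history

history = trace([0, 1, 1, 1, 0, 1])
print([round(p, 3) for p in history])
```

In practice the four parameters are fitted per skill from logged data, for example with expectation-maximization, rather than set by hand.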

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [7,45] | Enhancing Debugging Skills and Conceptual Support | Generative AI tools improve debugging skills and provide on-demand support, fostering student-centered learning and enhancing engagement through real-time assistance. |
| [8,25] | Assessing Code Explanations and Logic Block Analysis | LLMs and program-analysis techniques such as abstract syntax trees (ASTs) are used to evaluate code explanations and provide personalized feedback, significantly improving student understanding of programming concepts. |
| [39,46] | ChatGPT and Gamified Exercises | ChatGPT and GPT-based models enhance learning experiences through interactive feedback, gamified programming tasks, and code generation, although further refinement is needed to optimize long-term interactions and content quality. |
| [9] | Dynamic Feedback in Collaborative Programming | Using ChatGPT in collaborative programming exercises supports dynamic student interactions, improving the learning process by integrating contextualized feedback and reflection triggers. |
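The AST-based analysis mentioned for [8,25] can be approximated with Python's standard `ast` module. Parsing a submission produces a tree whose node types reveal its logic blocks: function definitions, loops, conditionals, and returns. The sketch below counts those constructs and turns a few of them into feedback hints. The hint rules are hypothetical examples for illustration, not the rules of the cited systems.

```python
import ast
from collections import Counter

def analyze_submission(source: str) -> dict:
    """Parse student code and summarize its logic blocks via the AST."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return {"error": f"syntax error on line {err.lineno}: {err.msg}"}
    # Count every node type that appears anywhere in the tree
    counts = Counter(type(node).__name__ for node in ast.walk(tree))
    blocks = {k: counts.get(k, 0) for k in ("FunctionDef", "For", "While", "If", "Return")}
    hints = []
    # Hypothetical rule: top-level scripts should be organized into functions
    if blocks["FunctionDef"] == 0:
        hints.append("Consider wrapping your logic in a function.")
    # Hypothetical rule: a while-loop with no break anywhere may never terminate
    if blocks["While"] and not any(isinstance(n, ast.Break) for n in ast.walk(tree)):
        hints.append("Check that each while-loop condition eventually becomes false.")
    return {"blocks": blocks, "hints": hints}

student_code = """
def total(xs):
    s = 0
    for x in xs:
        if x > 0:
            s += x
    return s
"""
result = analyze_submission(student_code)
print(result)
```

Structural counts like these are cheap and deterministic. They are therefore a natural complement to LLM-generated explanations, which can then be checked against the code's actual structure.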

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [47] | LLMs vs. sLMs for Question Generation | Compares large and small language models for generating educational questions, balancing efficiency with ethical concerns. |
| [38] | Reinforcement Learning for Question Generation | Demonstrates reinforcement learning’s role in improving question generation by addressing biases and inconsistencies. |
| [30] | RAG for Enhanced Content Accuracy | Integrates retrieval-augmented generation (RAG) with GPT to improve accuracy in content generation, reducing hallucinations. |
| [34] | Contextualized MCQs for Mathematics | Uses AI to generate contextually relevant multiple-choice questions (MCQs) for mathematics, reducing educator workload. |
| [48] | Evaluating LLMs for Bloom’s Taxonomy | Assesses LLMs for creating questions aligned with Bloom’s Taxonomy, improving pedagogical relevance across cognitive levels. |
| [49] | GPT-4 for Bloom’s Taxonomy-Aligned MCQs | Evaluates GPT-4 in generating Bloom’s Taxonomy-based MCQs for biology, finding room for improvement in higher cognitive levels. |
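The RAG approach in [30] grounds generation in retrieved source material. Its retrieval step can be sketched with bag-of-words cosine similarity over a small, hypothetical corpus of course passages. The best-matching passage is then placed into the prompt sent to the generator. The corpus, the prompt wording, and the helper names are assumptions for illustration. Production systems typically use dense embeddings and a vector index instead. The final LLM call is omitted because it depends on the provider's API.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    """Lowercased word counts (bag of words)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages most similar to the query."""
    q = tokenize(query)
    ranked = sorted(passages, key=lambda p: cosine(q, tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(topic: str, passages: list[str]) -> str:
    """Assemble a grounded question-generation prompt (hypothetical wording)."""
    context = "\n".join(retrieve(topic, passages, k=1))
    return (
        "Using ONLY the context below, write one multiple-choice question "
        f"about {topic}.\n\nContext:\n{context}"
    )

corpus = [
    "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "Mitosis produces two genetically identical daughter cells.",
    "The Krebs cycle takes place in the mitochondrial matrix.",
]
print(build_prompt("photosynthesis and light energy", corpus))
```

Restricting the generator to retrieved context is the mechanism by which RAG is expected to reduce hallucinated content.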

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [4,50] | Enhancing Decision Making and Teacher Development | VizChat improves Learning Analytics Dashboards (LADs) with context-sensitive explanations (GPT-4V, RAG). LLMAgent-CK assesses teachers’ content knowledge, improving evaluation accuracy without extensive labeled datasets. |
| [51,52] | Automating Test Evaluation and Personalized Pedagogical Recommendations | Uses AI (LLMs, RAG) to automate exam quality control and personalize teaching practices via PDP recommender systems, improving teaching and assessment processes. |
| [53] | AI for Quality Control in MCQ Design | SAQUET automates flaw detection in multiple-choice questions using NLP, ensuring high-quality, pedagogically sound assessments. |
| [28,54] | Enhancing Personalized Learning and Peer Feedback | Uses an LSTM autoencoder for memory modeling to enhance adaptive learning. AI-enhanced peer feedback tailors support to personality traits, improving collaborative learning. |
| [55,56] | Analyzing Collaborative Learning and Conversational AI | GPT-4-Turbo aids in analyzing interdisciplinary collaboration in STEM. Conversational AI automates tutoring interactions, enhancing student engagement in biology education. |
| [57,58] | Ensuring Academic Integrity and Optimizing Question Difficulty | Enhanced authorship verification (AV) detects AI-generated content. Knowledge graphs (KGs) improve question difficulty prediction, contributing to personalized learning and fair assessments. |
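SAQUET [53] uses NLP to detect flaws in multiple-choice questions. A much simpler rule-based sketch conveys the idea. Each check below targets a commonly cited item-writing flaw: an "all of the above" or "none of the above" option, a negatively worded stem, or a correct answer noticeably longer than the distractors. The rules, the negation keywords, and the 1.5 length ratio are illustrative assumptions, not SAQUET's actual criteria.

```python
import re

def check_mcq(stem: str, options: list[str], answer_index: int) -> list[str]:
    """Return a list of detected item-writing flaws (illustrative rules only)."""
    flaws = []
    lowered = [o.strip().lower() for o in options]
    # Rule 1: catch-all options let test-wise students guess
    if any(o in ("all of the above", "none of the above") for o in lowered):
        flaws.append("uses 'all/none of the above'")
    # Rule 2: negative stems ("NOT", "EXCEPT") are easy to misread
    if re.search(r"\b(not|except)\b", stem, flags=re.IGNORECASE):
        flaws.append("negatively worded stem")
    # Rule 3: a conspicuously long correct option gives the answer away
    correct_len = len(options[answer_index])
    distractor_lens = [len(o) for i, o in enumerate(options) if i != answer_index]
    if distractor_lens and correct_len > 1.5 * max(distractor_lens):
        flaws.append("correct option is much longer than distractors")
    return flaws

good = check_mcq(
    "Which organelle is the main site of ATP synthesis?",
    ["Ribosome", "Mitochondrion", "Golgi apparatus", "Lysosome"],
    answer_index=1,
)
bad = check_mcq(
    "Which of the following is NOT a function of the liver?",
    ["Detoxification", "Bile production",
     "Pumping blood through the systemic circulation to all organs", "All of the above"],
    answer_index=2,
)
print(good)  # []
print(bad)
```

Surface rules like these catch only formatting flaws. Judging whether distractors are plausible or whether the key is actually correct is where NLP-based systems such as SAQUET go further.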

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [22,59] | Learner Engagement and Cultural Intelligence | LLMs are used in ITSs to generate dynamic scaffolding that enhances engagement and critical thinking. Chatbots with cultural intelligence tailor personalized learning experiences. |
| [60,61] | Task-Based Learning and Video Enhancement | Integrated text- and video-based learning for complex tasks. The EDEN system expands online content with AI-generated videos, ensuring high-quality resources. |
| [62] | Conversational AI for Healthcare Education | AI chatbot simulating courtroom scenarios for nursing students, providing immersive and personalized training. |
| [14,15] | Reinforcement Learning for Feedback | Reinforcement learning provides personalized feedback, improving engagement and performance. PLA combined with XAI delivers scalable feedback in large courses. |
| [63,64] | AI-Powered Conversational Assistants | The Jill Watson AI assistant supports online classrooms, increasing engagement. Hybrid AI–human collaboration makes educational data coding more efficient. |
| [37,65] | Personalized Learning and ITS | An AI system for personalized vocabulary learning and an ITS for advanced mathematics improve feedback and learning outcomes. |
| [5,66] | Scalable Feedback and Knowledge Comprehension | LLMs generate scalable feedback in ITSs. Effective use of ChatGPT is examined for its impact on students’ knowledge comprehension. |
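The reinforcement-learning feedback work in [14] learns which kind of feedback works best from student responses. The simplest form of that idea is a multi-armed bandit. The sketch below uses an epsilon-greedy policy to choose among three hypothetical feedback styles. The simulated success rates stand in for real student outcomes and are invented for illustration. Full RL formulations also model the learner's changing state, which a bandit ignores.

```python
import random

def run_bandit(success_rates, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: with probability epsilon explore a random arm,
    otherwise exploit the arm with the highest estimated reward."""
    rng = random.Random(seed)
    n_arms = len(success_rates)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda a: values[a])
        # Simulated student outcome: 1 if the feedback "worked", else 0
        reward = 1.0 if rng.random() < success_rates[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return counts, values

styles = ["hint", "worked example", "socratic question"]
counts, values = run_bandit([0.2, 0.4, 0.8])
for s, c, v in zip(styles, counts, values):
    print(f"{s:18s} chosen {c:4d} times, estimated success {v:.2f}")
```

The epsilon parameter sets the trade-off between trying alternatives and exploiting the best-known option. A fixed 10% exploration rate keeps the sketch simple.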

| Study | Study Focus | Key Findings and Innovations |
|---|---|---|
| [3,29,35] | At-Risk Student Detection and Dropout Prediction | Multitasking AI models and LLMs identify at-risk students and predict dropout risk with high accuracy using data analytics and AI-enhanced learning models. |
| [36] | Dropout Prediction using ACO-LSTM | The ACO-LSTM approach uses ant colony optimization (ACO) to tune the LSTM model’s learning rate, improving dropout prediction accuracy and yielding better retention predictions. |
| [67] | Career Readiness Prediction using LLMs | LLMs predict career readiness from students’ narrative responses, analyzing identity status and preparing students for workforce entry. |
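The ACO-LSTM approach in [36] uses ant colony optimization to tune the LSTM's learning rate. Training an LSTM is out of scope here. Instead, the sketch below runs a single-decision form of ACO over a discrete set of candidate learning rates against a hypothetical stand-in for validation loss, a smooth function minimized at 1e-3. In a real pipeline, each evaluation would train and validate the dropout-prediction model. The candidate set, colony size, evaporation rate, and deposit rule are all assumptions. The mechanism is the standard one: pheromone levels bias sampling, evaporate each iteration, and are reinforced in proportion to solution quality.

```python
import math
import random

CANDIDATE_LRS = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1]

def validation_loss(lr: float) -> float:
    """Hypothetical stand-in for training an LSTM and measuring validation
    loss; minimized at lr = 1e-3 (illustrative only)."""
    return 0.3 + 0.05 * (math.log10(lr) + 3) ** 2

def aco_select_lr(n_ants=5, n_iters=20, evaporation=0.3, seed=0):
    rng = random.Random(seed)
    pheromone = [1.0] * len(CANDIDATE_LRS)
    best_lr, best_loss = None, float("inf")
    for _ in range(n_iters):
        # Each ant samples a candidate with probability proportional to pheromone
        choices = rng.choices(range(len(CANDIDATE_LRS)), weights=pheromone, k=n_ants)
        # Evaporation: every trail decays, so unrewarded candidates fade
        pheromone = [(1 - evaporation) * p for p in pheromone]
        for i in choices:
            loss = validation_loss(CANDIDATE_LRS[i])
            pheromone[i] += 1.0 / loss  # lower loss deposits more pheromone
            if loss < best_loss:
                best_lr, best_loss = CANDIDATE_LRS[i], loss
    return best_lr, best_loss, pheromone

best_lr, best_loss, trails = aco_select_lr()
print(f"best learning rate: {best_lr:g} (loss {best_loss:.3f})")
```

Because ACO is stochastic, a given run is not guaranteed to sample the optimum. Variants such as MAX-MIN Ant System bound pheromone levels to preserve exploration.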

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pang, W.; Wei, Z. Shaping the Future of Higher Education: A Technology Usage Study on Generative AI Innovations. Information 2025, 16, 95. https://doi.org/10.3390/info16020095
