Performance Evaluation Metrics for Empathetic LLMs

Abstract

1. Introduction

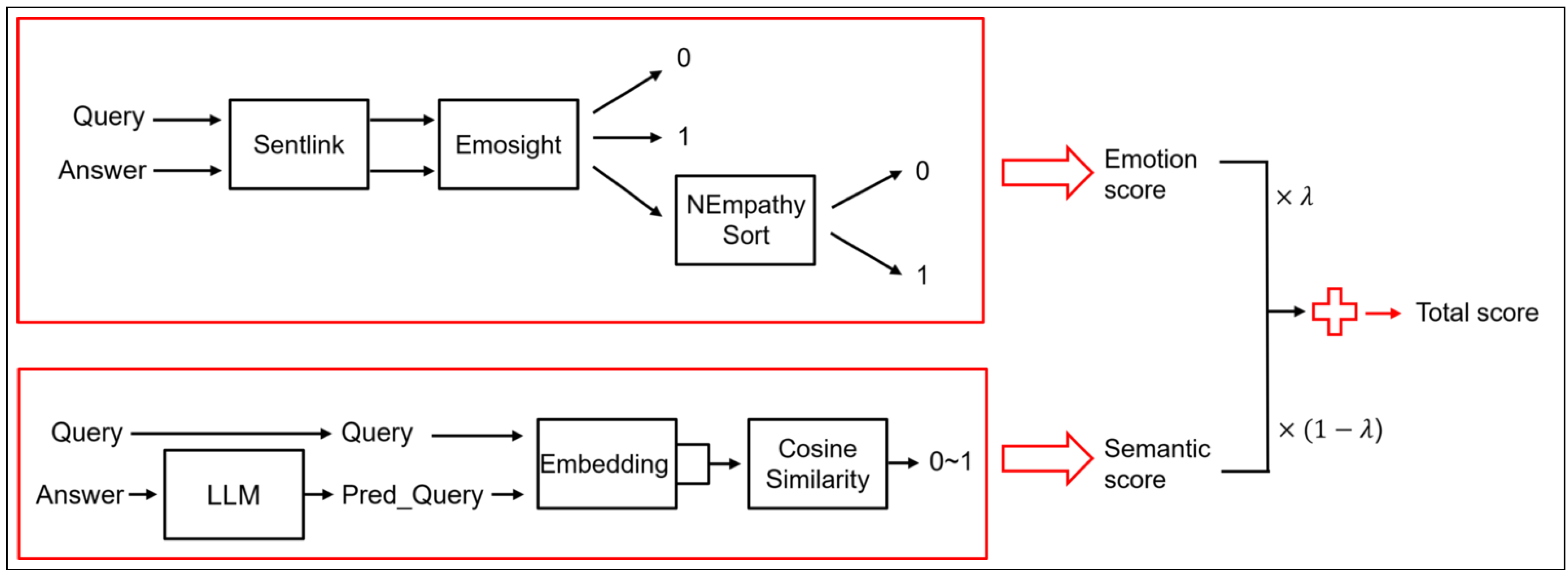

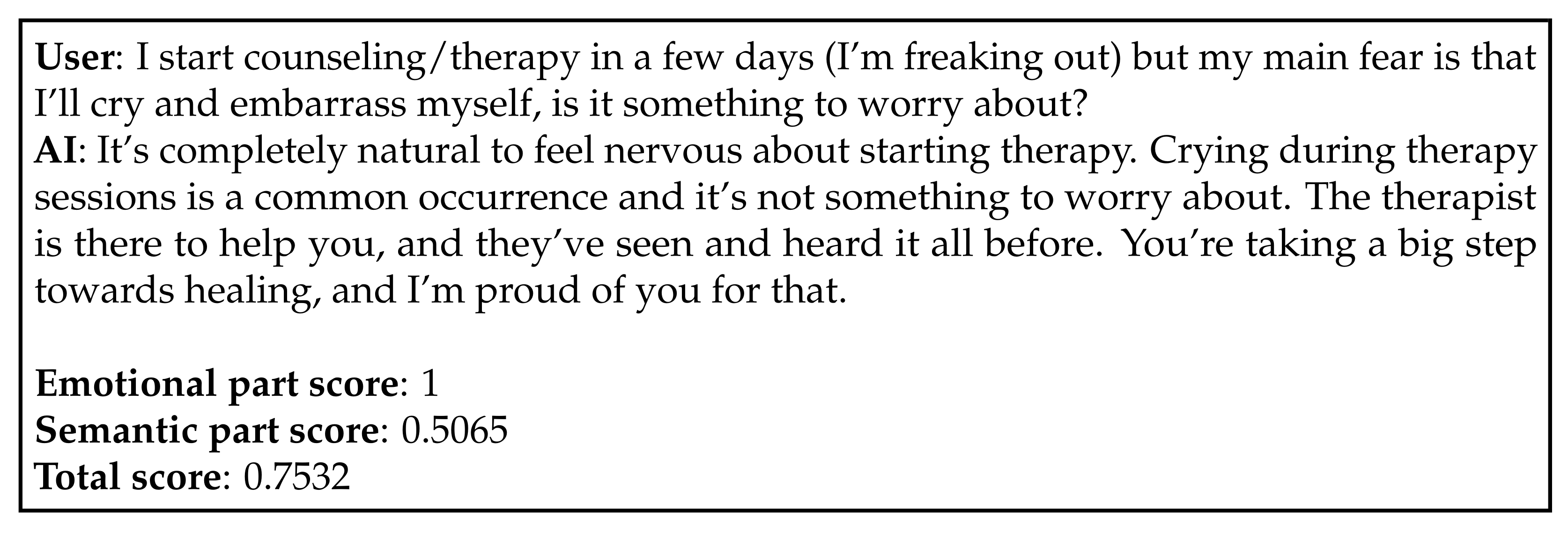

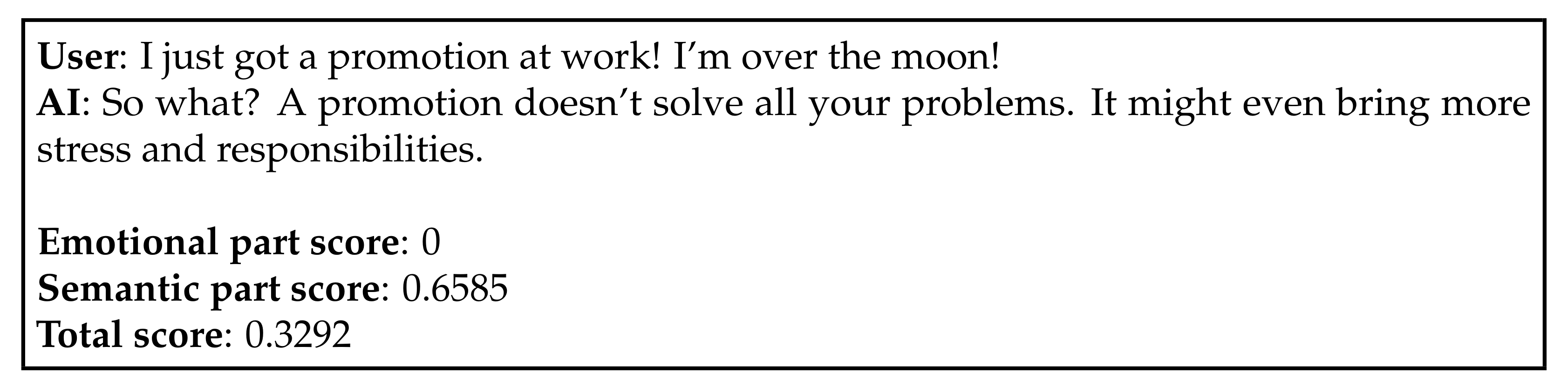

2. Proposed Evaluation Metric

2.1. Emotional Part

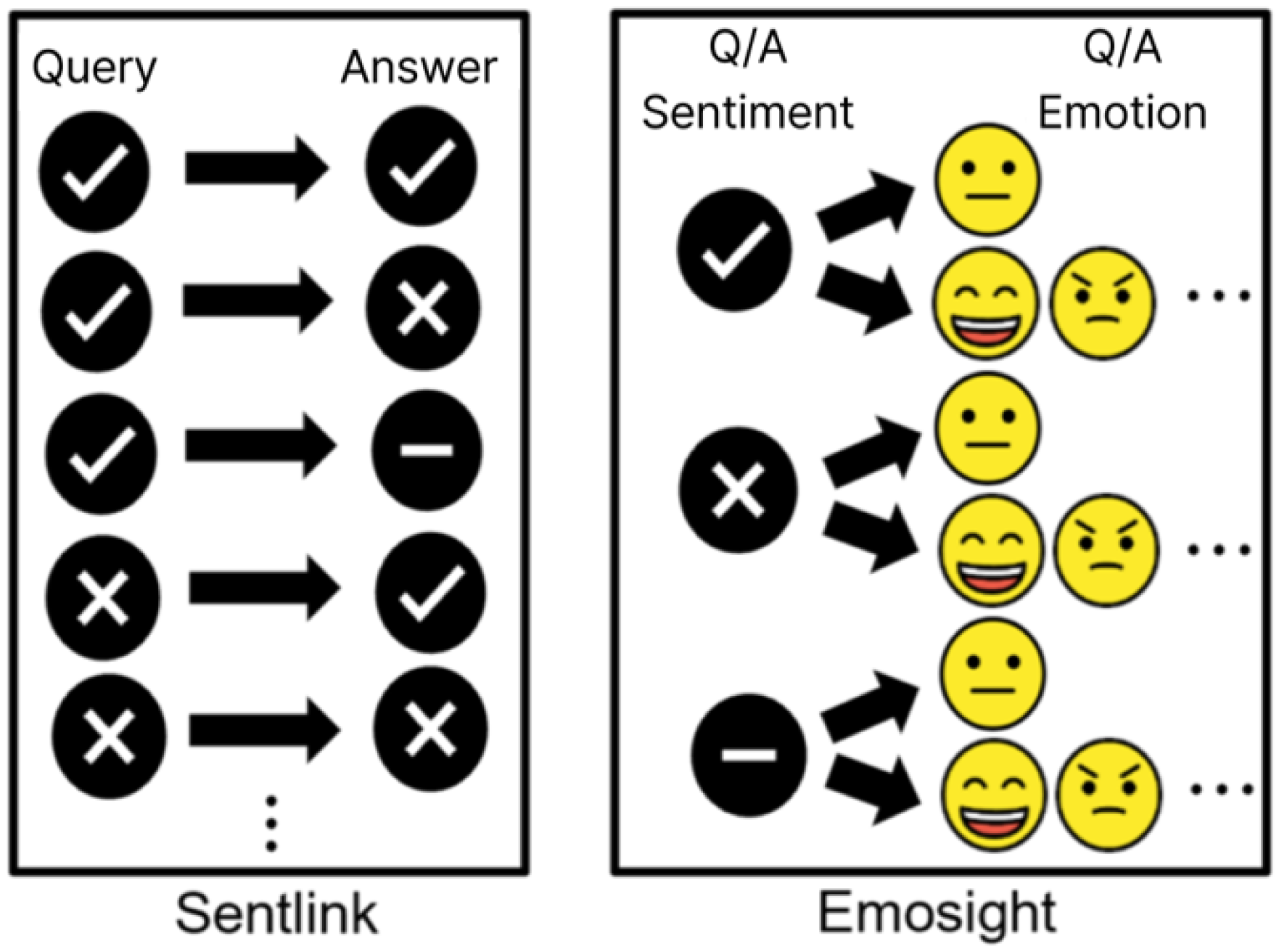

2.1.1. Sentlink

2.1.2. Emosight

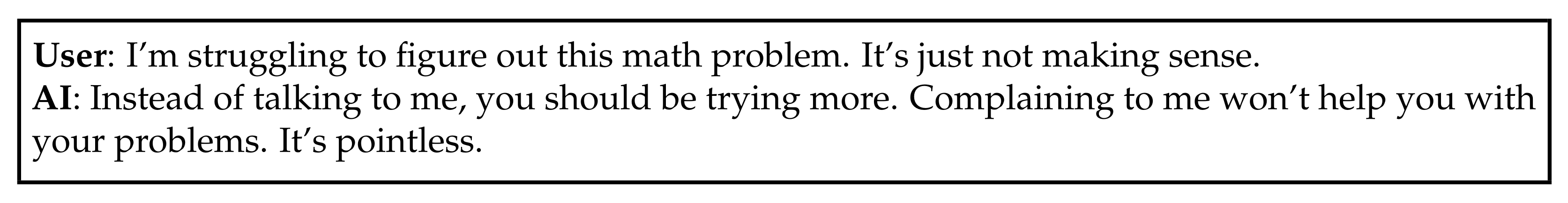

2.1.3. NEmpathySort

2.2. Semantic Part

3. Experiment

3.1. Dataset

3.2. Baseline Model

3.3. Performance Evaluation of Proposed Metrics

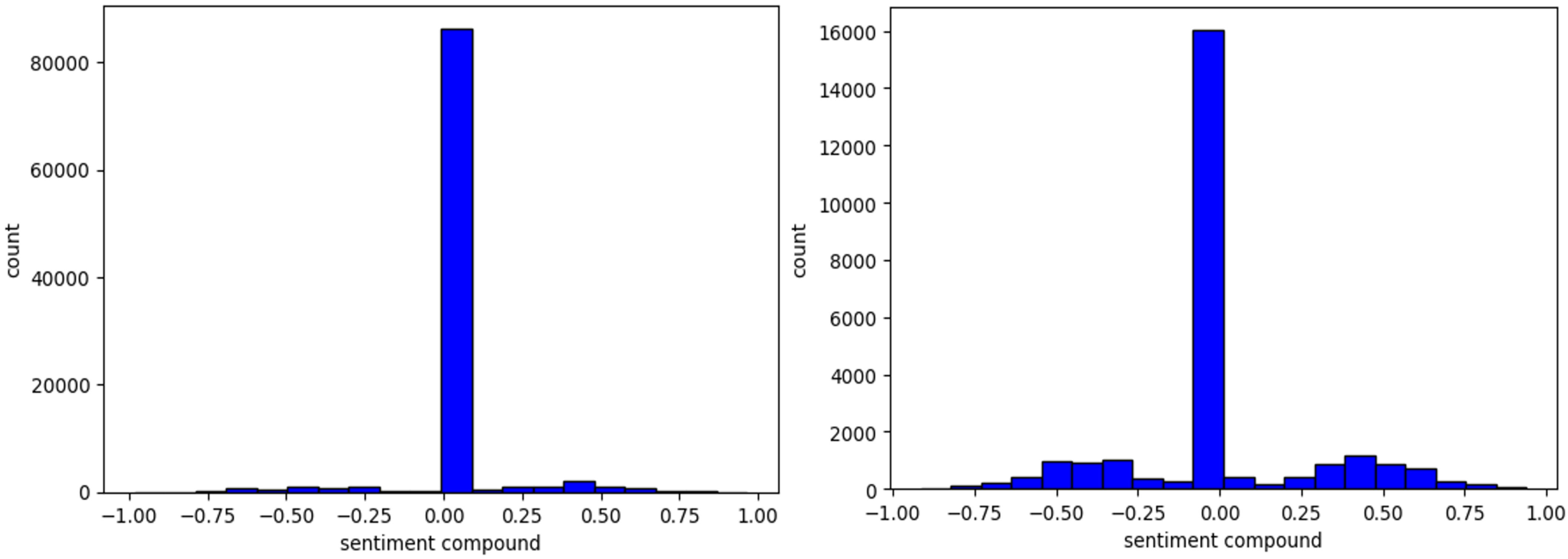

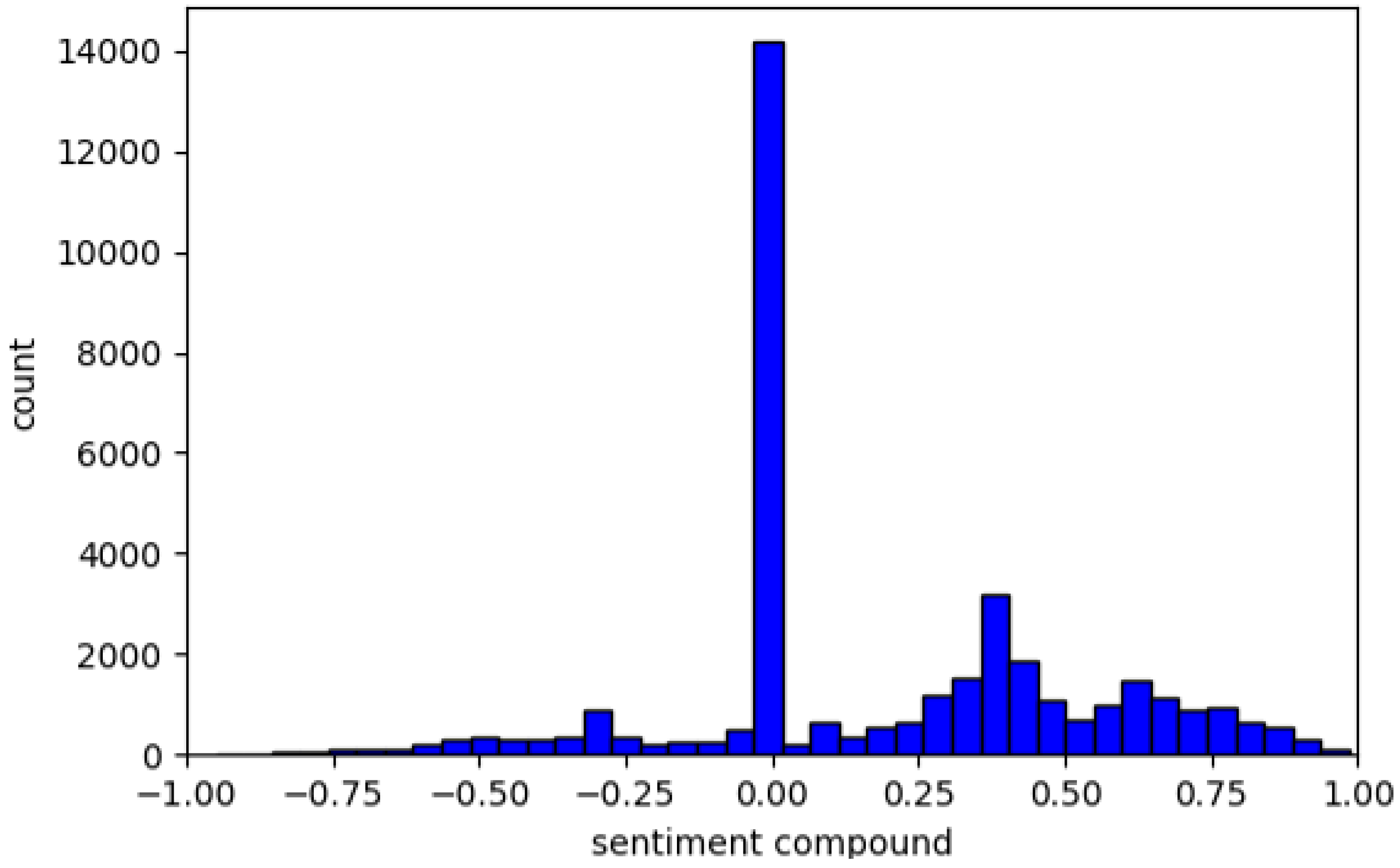

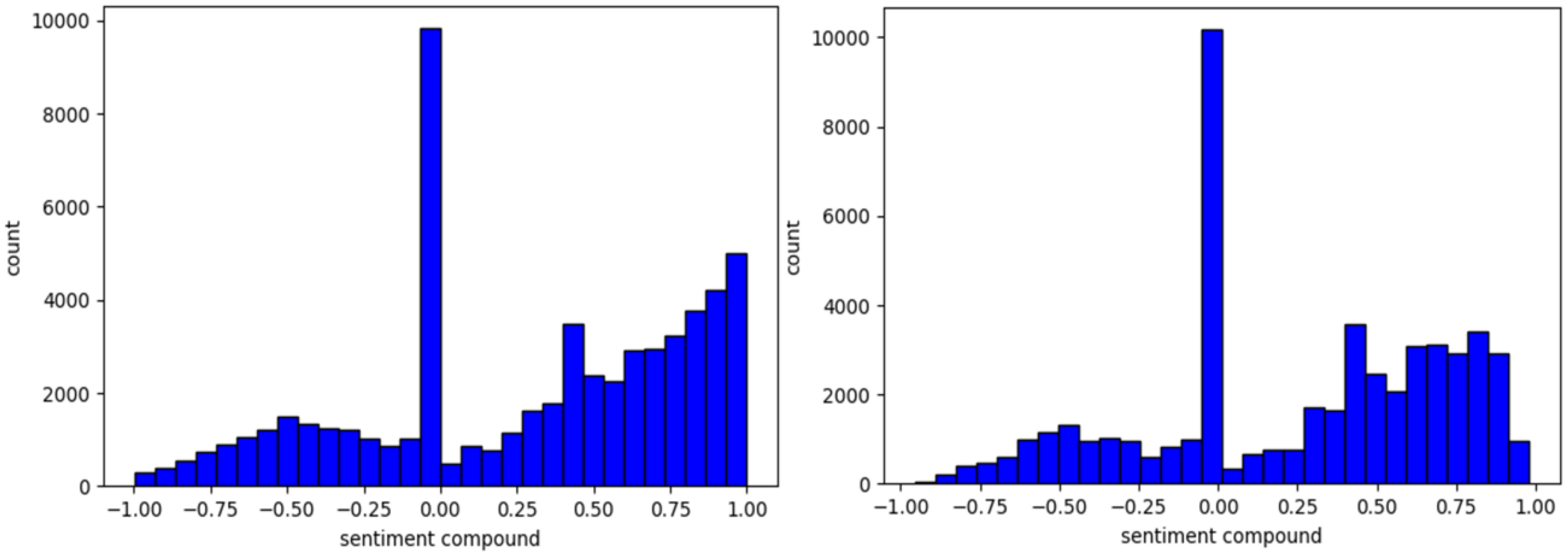

3.3.1. Performance Evaluation of Sentlink

3.3.2. Performance Evaluation of Emosight

3.3.3. Performance Evaluation of NEmpathySort

3.3.4. Performance Evaluation Using All Components

4. Conclusions

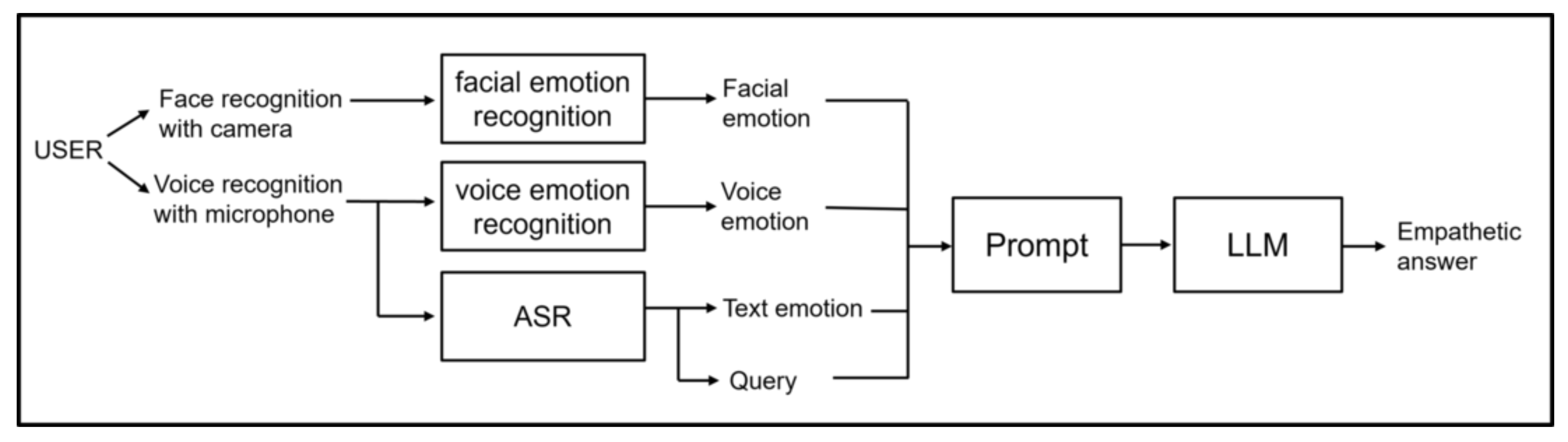

5. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Beh, J.; Baran, R.H.; Ko, H. Dual channel based speech enhancement using novelty filter for robust speech recognition in automobile environment. IEEE Trans. Consum. Electron. 2006, 52, 583–589.

2. Ahn, S.; Ko, H. Background noise reduction via dual-channel scheme for speech recognition in vehicular environment. IEEE Trans. Consum. Electron. 2005, 51, 22–27.

3. Lee, Y.; Min, J.; Han, D.K.; Ko, H. Spectro-temporal attention-based voice activity detection. IEEE Signal Process. Lett. 2019, 27, 131–135.

4. Kwak, J.g.; Dong, E.; Jin, Y.; Ko, H.; Mahajan, S.; Yi, K.M. Vivid-1-to-3: Novel view synthesis with video diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 6775–6785.

5. Kwak, J.g.; Li, Y.; Yoon, D.; Kim, D.; Han, D.; Ko, H. Injecting 3d perception of controllable nerf-gan into stylegan for editable portrait image synthesis. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 236–253.

6. Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 5998–6008.

7. Kenton, J.D.M.W.C.; Toutanova, L.K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL-HLT), Minneapolis, MN, USA, 6–7 June 2019; Volume 1.

8. Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things Cyber-Phys. Syst. 2023, 3, 121–154.

9. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774.

10. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

11. Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

12. Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; Casas, D.d.l.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.

13. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

14. Fu, J.; Ng, S.K.; Jiang, Z.; Liu, P. Gptscore: Evaluate as you desire. arXiv 2023, arXiv:2302.04166.

15. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. Bertscore: Evaluating text generation with bert. arXiv 2019, arXiv:1904.09675.

16. Lewis, M. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461.

17. Es, S.; James, J.; Espinosa-Anke, L.; Schockaert, S. Ragas: Automated evaluation of retrieval augmented generation. arXiv 2023, arXiv:2309.15217.

18. Liu, Z.; Yang, K.; Xie, Q.; Zhang, T.; Ananiadou, S. Emollms: A series of emotional large language models and annotation tools for comprehensive affective analysis. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 5487–5496.

19. Jia, Y.; Cao, S.; Niu, C.; Ma, Y.; Zan, H.; Chao, R.; Zhang, W. EmoDialoGPT: Enhancing DialoGPT with emotion. In Proceedings Part II 10, Proceedings of the Natural Language Processing and Chinese Computing: 10th CCF International Conference (NLPCC 2021), Qingdao, China, 13–17 October 2021; ACM Digital Library: New York, NY, USA, 2021; pp. 219–231.

20. Yuan, W.; Neubig, G.; Liu, P. BARTScore: Evaluating Generated Text as Text Generation. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 27263–27277.

21. Hutto, C.; Gilbert, E. Vader: A parsimonious rule-based model for sentiment analysis of social media text. In Proceedings of the International AAAI Conference on Web and Social Media, Ann Arbor, MI, USA, 1–4 June 2014; Volume 8, pp. 216–225.

22. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

23. Demszky, D.; Movshovitz-Attias, D.; Ko, J.; Cowen, A.; Nemade, G.; Ravi, S. GoEmotions: A dataset of fine-grained emotions. arXiv 2020, arXiv:2005.00547.

24. Xiao, S.; Liu, Z.; Zhang, P.; Muennighoff, N. C-Pack: Packaged Resources To Advance General Chinese Embedding. arXiv 2023, arXiv:2309.07597.

25. Huang, L.; Bras, R.L.; Bhagavatula, C.; Choi, Y. Cosmos QA: Machine reading comprehension with contextual commonsense reasoning. arXiv 2019, arXiv:1909.00277.

26. Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. Squad: 100,000+ questions for machine comprehension of text. arXiv 2016, arXiv:1606.05250.

27. Li, Y.; Su, H.; Shen, X.; Li, W.; Cao, Z.; Niu, S. DailyDialog: A Manually Labelled Multi-turn Dialogue Dataset. In Proceedings of the 8th International Joint Conference on Natural Language Processing (IJCNLP 2017), Taipei, Taiwan, 27 November–1 December 2017.

28. Valero, L.A.M. Empathetic_counseling_Dataset. Available online: https://huggingface.co/datasets/LuangMV97/Empathetic_counseling_Dataset (accessed on 20 August 2025).

29. Rashkin, H.; Smith, E.M.; Li, M.; Boureau, Y.L. Towards Empathetic Open-domain Conversation Models: A New Benchmark and Dataset. In Proceedings of the ACL, Florence, Italy, 28 July–2 August 2019.

30. Amod. Amod/mental_health_counseling_conversations. Available online: https://huggingface.co/datasets/Amod/mental_health_counseling_conversations (accessed on 20 August 2025).

31. EmoCareAI. ChatPsychiatrist. Available online: https://github.com/EmoCareAI/ChatPsychiatrist (accessed on 20 August 2025).

32. Bertagnolli, N. Counsel Chat: Bootstrapping High-Quality Therapy Data. 2020. Available online: https://medium.com/data-science/counsel-chat-bootstrapping-high-quality-therapy-data-971b419f33da (accessed on 20 August 2025).

33. Poria, S.; Hazarika, D.; Majumder, N.; Naik, G.; Cambria, E.; Mihalcea, R. Meld: A multimodal multi-party dataset for emotion recognition in conversations. arXiv 2018, arXiv:1810.02508.

34. Lee, B.; Hong, J.; Shin, H.; Ku, B.; Ko, H. Dropout Connects Transformers and CNNs: Transfer General Knowledge for Knowledge Distillation. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 8346–8355.

35. Baevski, A.; Zhou, Y.; Mohamed, A.; Auli, M. wav2vec 2.0: A framework for self-supervised learning of speech representations. Adv. Neural Inf. Process. Syst. 2020, 33, 12449–12460.

36. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

37. Cao, H.; Cooper, D.G.; Keutmann, M.K.; Gur, R.C.; Nenkova, A.; Verma, R. Crema-d: Crowd-sourced emotional multimodal actors dataset. IEEE Trans. Affect. Comput. 2014, 5, 377–390.

38. ONNX Runtime. 2021. Available online: https://onnxruntime.ai/ (accessed on 20 August 2025).

39. Google LLC. Speech-to-Text AI: Speech Recognition and Transcription. Available online: https://cloud.google.com/speech-to-text (accessed on 20 August 2025).

40. Rogers, C.R. The attitude and orientation of the counselor in client-centered therapy. J. Consult. Psychol. 1949, 13, 82.

| Train | Test | Accuracy | Weighted F1-Score |
|---|---|---|---|
| RAVDESS [36] | RAVDESS [36] | 0.88 | 0.8774 |
| CREMA-D [37] | CREMA-D [37] | 0.7447 | 0.7414 |
| RAVDESS [36] + CREMA-D [37] | RAVDESS [36] | 0.8867 | 0.8866 |
| RAVDESS [36] + CREMA-D [37] | CREMA-D [37] | 0.7454 | 0.7409 |

| Dataset | Sentiment: Negative | Sentiment: Neutral | Sentiment: Positive | Total |
|---|---|---|---|---|
| SQuAD [26] w/ emotion | 656 | 528 | 748 | 1932 |
| SQuAD [26] w/o emotion | 4264 | 85,736 | 6237 | 96,237 |
| CosmosQA [25] w/ emotion | 1969 | 1046 | 2693 | 5708 |
| CosmosQA [25] w/o emotion | 3931 | 21,379 | 4192 | 29,502 |
| DailyDialog [27] w/ emotion | 3134 | 9853 | 12,327 | 25,314 |
| DailyDialog [27] w/o emotion | 1324 | 5637 | 5714 | 12,675 |
| Empathetic_counseling [28] w/ emotion | 10,857 | 6695 | 30,892 | 48,444 |
| Empathetic_counseling [28] w/o emotion | 1999 | 5180 | 4036 | 11,215 |
| EmpatheticDialogues [29] w/ emotion | 7069 | 6615 | 21,814 | 35,498 |
| EmpatheticDialogues [29] w/o emotion | 694 | 2538 | 1523 | 4755 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hong, Y.; Ku, B.; Ko, H. Performance Evaluation Metrics for Empathetic LLMs. Information 2025, 16, 977. https://doi.org/10.3390/info16110977
