1. Introduction

Due to the rapid expansion of power infrastructure and the growing frequency of on-site operations, safety concerns in electric power operations have been significantly amplified, and protecting operators’ safety has become a critical challenge in the power industry. Traditional methods for electric power operation violation recognition (EPOVR) rely predominantly on manual inspection through on-site patrols or back-end video monitoring. These methods are not only inefficient and resource-intensive but also prone to oversights caused by human fatigue or negligence [1]. In recent years, advances in computer vision and artificial intelligence have catalyzed the emergence of image recognition-based EPOVR technologies. Such technologies can automatically determine whether operators’ attire and behavior comply with safety regulations and issue warnings when they do not, which greatly improves the efficiency of safety supervision and significantly reduces labor costs [2].

The core of EPOVR lies in the accurate detection of operators, tools, and protective equipment at the operational site, such as safety helmets, safety belts, and work clothes, which forms the foundation for subsequent violation analysis [3]. To date, deep learning-based object detection has advanced significantly [4,5,6,7,8], and the You Only Look Once (YOLO) family of object detection models [9,10,11,12,13,14,15,16,17,18,19] has gained widespread adoption across various industrial and commercial applications owing to its excellent detection efficiency. YOLO models have evolved rapidly since the introduction of YOLOv1 [9], with the latest iteration being YOLOv13 [20]. Because YOLOv12 [26] offers a favorable balance between computational efficiency and detection accuracy, we select it as the baseline for our object detection model.

Several improved variants of the official YOLO models have been proposed to enhance their capabilities. The YOLO-LSM model [21] leveraged shallow and multi-scale feature learning to improve small object detection accuracy while reducing the number of parameters. The YOLO-SSFA model [22] employed adaptive multi-scale fusion and attention-based noise suppression to improve the detection of small targets in complex infrared scenes. The YOLO-ACR model [23] introduced an adaptive context refinement module that exploited contextual information to improve detection accuracy. Ji et al. [24] proposed an enhanced YOLOv12 model for detecting small defects on transmission lines, which integrated a bidirectional weighted feature fusion network to strengthen the interaction between low-level and high-level semantic features and additionally used spatial and channel attention to boost the feature representation of defects.

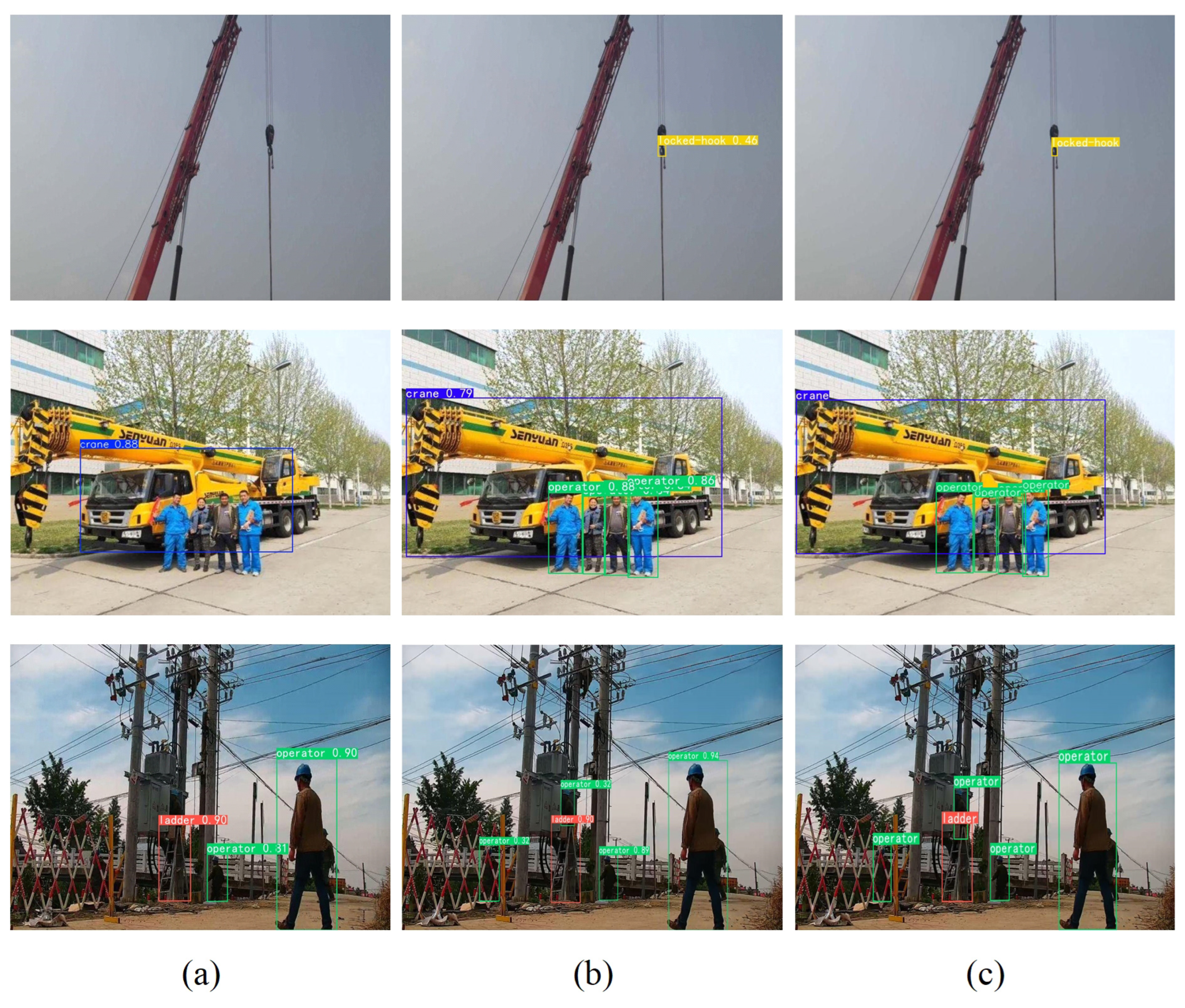

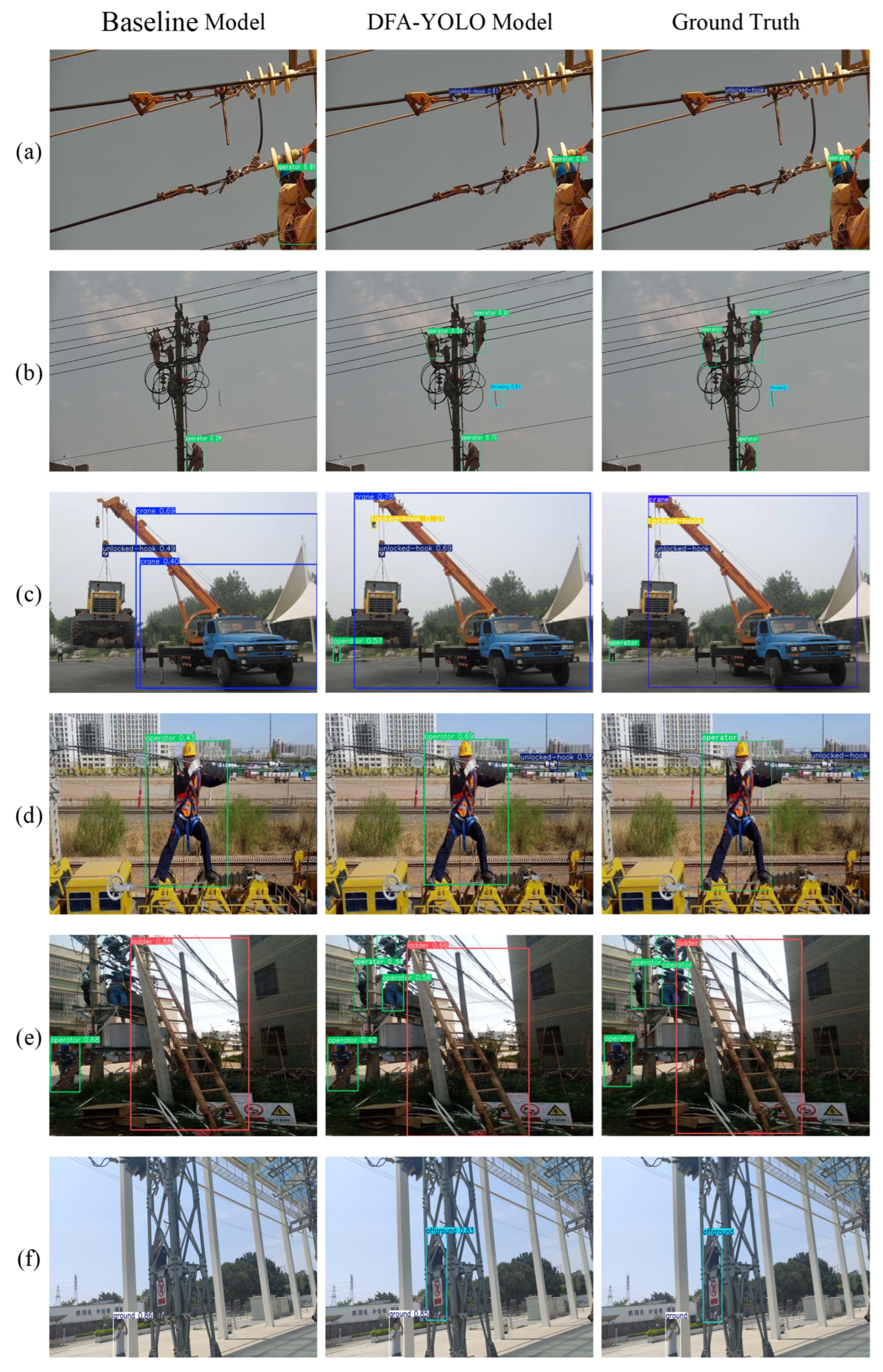

Although the YOLOv12 model achieves a commendable balance between detection precision and real-time inference, it still exhibits three significant limitations in feature extraction. Firstly, the absence of a dedicated feature extraction strategy for multi-scale objects restricts its capability to detect key targets of different scales in EPOVR. As shown in the first row of Figure 1a, YOLOv12 fails to detect the small-sized locked-hook, and, as shown in the second row of Figure 1a, it inaccurately localizes the large-sized crane. Secondly, current methods often add an attention module at the final stage of the backbone network to enhance feature representation, and these modules usually combine spatial and channel attention in a straightforward manner rather than integrating them into an organic whole, which limits the discriminative capability of the features. This limitation is evident in the third row of Figure 1a, where YOLOv12 fails to detect two operators that are heavily affected by background interference. Thirdly, early convolutional neural networks suffered from the vanishing gradient problem during backpropagation. Although residual connections have substantially mitigated this issue, the supervision signal from the labels still weakens with increasing distance from the detection heads of YOLO models. As a result, low-level features in the backbone network are less well trained, leading to suboptimal detection of small-sized objects. This is another critical factor behind the missed locked-hook in the first row of Figure 1a.
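The third limitation can be illustrated with a toy scalar model. The sketch below is not the authors' architecture; it is a minimal illustration under stated assumptions: a hypothetical "backbone" of `depth` stacked `tanh` layers sharing one weight `w`, a main loss attached at the last layer, and a hypothetical linear auxiliary head (weight `v`) attached directly to the shallow feature `h_0`. Applying the chain rule shows that the gradient reaching `h_0` from the main loss shrinks roughly geometrically with depth, whereas an auxiliary loss delivers a depth-independent signal, which is the intuition behind training-only auxiliary heads. The loss weight `aux_weight` is likewise an assumption.

```python
import math
from typing import List


def forward_chain(x: float, w: float, depth: int) -> List[float]:
    """Toy 'backbone': h_0 = x and h_k = tanh(w * h_{k-1}); returns [h_0, ..., h_depth]."""
    hs = [x]
    for _ in range(depth):
        hs.append(math.tanh(w * hs[-1]))
    return hs


def main_head_grad(x: float, w: float, depth: int, target: float) -> float:
    """dL_main/dh_0 for L_main = 0.5 * (h_depth - target)**2, by the chain rule."""
    hs = forward_chain(x, w, depth)
    grad = hs[-1] - target
    for k in range(depth, 0, -1):
        # d h_k / d h_{k-1} = w * (1 - tanh(w * h_{k-1})**2) = w * (1 - h_k**2)
        grad *= w * (1.0 - hs[k] ** 2)
    return grad


def aux_head_grad(x: float, v: float, target: float) -> float:
    """dL_aux/dh_0 for a hypothetical linear aux head p = v * h_0, L_aux = 0.5 * (p - target)**2."""
    return (v * x - target) * v


def shallow_feature_grad(x: float, w: float, depth: int, v: float,
                         target: float, aux_weight: float) -> float:
    """Gradient at h_0 of L_main + aux_weight * L_aux; the aux term exists only during training."""
    return main_head_grad(x, w, depth, target) + aux_weight * aux_head_grad(x, v, target)


if __name__ == "__main__":
    for depth in (1, 5, 10):
        print(depth, main_head_grad(1.0, 0.5, depth, 1.0),
              shallow_feature_grad(1.0, 0.5, depth, 0.8, 1.0, 0.5))
```

With x = 1.0, w = 0.5 and target = 1.0, the main-loss gradient at `h_0` falls from about 0.21 in magnitude at depth 1 to below 0.001 at depth 10, while the auxiliary term stays the same. Because the auxiliary head is evaluated only during training, it adds no cost at inference time.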

To tackle the aforementioned challenges, we introduce a real-time object detection model called DFA-YOLO. The primary contributions are summarized as follows.

- (1) To tackle the first issue, a lightweight dynamic weighted multi-scale convolution (DWMConv) module is proposed to replace a convolution layer in the backbone network. The DWMConv module uses depthwise separable multi-scale convolutions to extract multi-scale features and employs learnable adaptive weights to dynamically fuse them, yielding strong representations of multi-scale objects. As demonstrated in the first two rows of Figure 1b, the DFA-YOLO model successfully detects the small-sized locked-hook and accurately localizes the large-sized crane.

- (2) To address the second issue, a full-dimensional attention (FDA) module is proposed and inserted at the end of the backbone network. The FDA module computes and integrates attention across the height, width, and channel dimensions, producing a unified feature representation that captures important information from all three dimensions. It overcomes the limitation of conventional designs in which spatial attention and channel attention are computed independently, thereby significantly improving feature discriminability. As shown in the third row of Figure 1b, the DFA-YOLO model detects both operators despite severe background interference.

- (3) To resolve the third issue, a set of auxiliary detection heads (Aux-Heads) is incorporated into the baseline model. The Aux-Heads are attached at multiple feature extraction nodes distant from the primary detection heads, providing additional supervision signals that enhance the training of low-level features. Note that the Aux-Heads are used only in the training stage, so they improve the representational capability of low-level features without compromising inference speed. Consequently, adding Aux-Heads boosts the model’s capability for detecting small-sized objects, as evidenced by the accurate detection of the locked-hook in Figure 1b.
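The DWMConv idea in contribution (1) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each branch is assumed to be a stride-1 k×k depthwise convolution with zero "same" padding (kernel sets such as (1, 3, 5, 7, 9), as evaluated in Table 1), the branch outputs are assumed to be fused with one learnable scalar per branch normalized by softmax, and a single 1×1 pointwise convolution is assumed to follow the fusion to complete the depthwise separable design. The names `depthwise_conv2d`, `dwm_conv`, and `delta_kernel` are hypothetical; a real implementation would operate on batched tensors and might make the fusion weights input-dependent.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]   # one feature-map channel, H x W
Tensor3 = List[Matrix]       # C x H x W


def depthwise_conv2d(x: Matrix, kernel: Matrix) -> Matrix:
    """Stride-1 cross-correlation of one channel with an odd k x k kernel, zero 'same' padding."""
    k = len(kernel)
    pad = k // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r, c = i + u - pad, j + v - pad
                    if 0 <= r < h and 0 <= c < w:  # zero padding outside the map
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax, used to normalise the per-branch fusion weights."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dwm_conv(x: Tensor3, branch_kernels: Sequence[Sequence[Matrix]],
             fusion_logits: Sequence[float], pointwise: Matrix) -> Tensor3:
    """Hypothetical DWMConv-style block.

    branch_kernels[b][c] is the k_b x k_b depthwise kernel of branch b for channel c,
    fusion_logits holds one learnable scalar per branch, and pointwise[o][c] is the
    1 x 1 weight mixing input channel c into output channel o.
    """
    weights = softmax(fusion_logits)
    C, H, W = len(x), len(x[0]), len(x[0][0])
    fused = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    for wb, kernels in zip(weights, branch_kernels):
        for c in range(C):
            y = depthwise_conv2d(x[c], kernels[c])
            for i in range(H):
                for j in range(W):
                    fused[c][i][j] += wb * y[i][j]
    # Pointwise 1 x 1 convolution: mix channels at every spatial position.
    return [[[sum(pointwise[o][c] * fused[c][i][j] for c in range(C))
              for j in range(W)] for i in range(H)] for o in range(len(pointwise))]


def delta_kernel(k: int) -> Matrix:
    """k x k kernel with a single 1 at the centre (an identity filter), useful for testing."""
    kern = [[0.0] * k for _ in range(k)]
    kern[k // 2][k // 2] = 1.0
    return kern
```

As a quick check, if every branch uses an identity kernel from `delta_kernel` and the pointwise matrix is the identity, the output equals the input for any logits, because the softmax weights sum to one. The softmax normalization is one common way to keep the fused features on the same scale as each branch.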
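The FDA idea in contribution (2) can also be sketched under explicit assumptions. Because Figure 5 illustrates soft pooling, the sketch summarizes each channel, each row (height), and each column (width) with the SoftPool form, an exponentially weighted average sum(e^v · v) / sum(e^v). It then adds the three descriptors at every position (c, h, w) and passes the sum through a sigmoid, so that a single attention weight depends jointly on all three dimensions instead of applying channel and spatial attention one after the other. The function `full_dimensional_attention`, the descriptor summation, and the absence of learnable layers are simplifying assumptions; a practical module would normally place learnable transforms between pooling and gating.

```python
import math
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # C x H x W


def soft_pool(values: Sequence[float]) -> float:
    """SoftPool: average of values weighted by softmax(values), computed stably."""
    m = max(values)
    weights = [math.exp(v - m) for v in values]
    return sum(wt * v for wt, v in zip(weights, values)) / sum(weights)


def sigmoid(z: float) -> float:
    """Logistic function written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def full_dimensional_attention(x: Tensor3) -> Tensor3:
    """Hypothetical FDA-style gating of a C x H x W feature map."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # One descriptor per channel, per row (height) and per column (width).
    d_c = [soft_pool([x[c][h][w] for h in range(H) for w in range(W)]) for c in range(C)]
    d_h = [soft_pool([x[c][h][w] for c in range(C) for w in range(W)]) for h in range(H)]
    d_w = [soft_pool([x[c][h][w] for c in range(C) for h in range(H)]) for w in range(W)]
    # Joint attention weight for each (c, h, w), applied multiplicatively.
    return [[[x[c][h][w] * sigmoid(d_c[c] + d_h[h] + d_w[w])
              for w in range(W)] for h in range(H)] for c in range(C)]
```

SoftPool lies between average pooling and max pooling: it returns the mean for constant inputs but is pulled toward the largest activations, which is why it is often preferred for attention descriptors. The output keeps the C × H × W shape of the input, so a module of this kind can be dropped in at the end of a backbone.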

Author Contributions

Conceptualization, X.Q.; formal analysis, X.Q.; funding acquisition, W.W.; methodology, X.Q. and X.D.; project administration, P.X.; resources, W.W.; software, X.D., P.W. and J.G.; supervision, W.W.; validation, W.W. and H.C.; writing—original draft, X.D.; writing—review and editing, X.Q. and P.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Henan (Grant No. 252300421063), the Key Research Project of Henan Province Universities (Grant No. 24ZX005), and the National Natural Science Foundation of China (Grant No. 62076223).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Zhengzhou University of Light Industry (41580459-6) on 18 September 2025.

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

Author Jungang Guo was employed by Zhengzhou Fengjia Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| DWMConv | Dynamic weighted multi-scale convolution |
| FDA | Full-dimensional attention |
| Aux-Heads | Auxiliary detection heads |
| EPOVR | Electric power operation violation recognition |
| A2C2f | Area attention-enhanced cross-stage fusion |
| SPPF | Spatial pyramid pooling-fast |
| DSConv | Depthwise separable convolution |
| SGD | Stochastic gradient descent |
| NMS | Non-maximum suppression |
| Params | Parameter count |
| FLOPs | Floating-point operations |
| FPS | Frames per second |
| mAP | Mean average precision |

References

1. Meng, L.; He, D.; Ban, G.; Xi, G.; Li, A.; Zhu, X. Active Hard Sample Learning for Violation Action Recognition in Power Grid Operation. Information 2025, 16, 67.

2. Vukicevic, A.M.; Petrovic, M.; Milosevic, P.; Peulic, A.; Jovanovic, K.; Novakovic, A. A systematic review of computer vision-based personal protective equipment compliance in industry practice: Advancements, challenges and future directions. Artif. Intell. Rev. 2024, 57, 319.

3. Ji, X.; Gong, F.; Yuan, X.; Wang, N. A high-performance framework for personal protective equipment detection on the offshore drilling platform. Complex Intell. Syst. 2023, 9, 5637–5652.

4. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

5. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

6. Qian, X.; Wu, B.; Cheng, G.; Yao, X.; Wang, W.; Han, J. Building a Bridge of Bounding Box Regression Between Oriented and Horizontal Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605209.

7. Qian, X.; Jian, Q.; Wang, W.; Yao, X.; Cheng, G. Incorporating Multiscale Context and Task-consistent Focal Loss into Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5628411.

8. Qian, X.; Zhang, B.; He, Z.; Wang, W.; Yao, X.; Cheng, G. IPS-YOLO: Iterative Pseudo-fully Supervised Training of YOLO for Weakly Supervised Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5630414.

9. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

10. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

11. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

12. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

13. Ultralytics. YOLOv5 (v7.0). 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 22 November 2022).

14. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

15. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

16. Jocher, G. YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 10 January 2023).

17. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.

18. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

19. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

20. Lei, M.; Li, S.; Wu, Y.; Hu, H.; Zhou, Y.; Zheng, X.; Gao, Y. YOLOv13: Real-Time Object Detection with Hypergraph-Enhanced Adaptive Visual Perception. arXiv 2025, arXiv:2506.17733.

21. Wu, C.; Cai, C.; Xiao, F.; Wang, J.; Guo, Y.; Ma, L. YOLO-LSM: A Lightweight UAV Target Detection Algorithm Based on Shallow and Multiscale Information Learning. Information 2025, 16, 393.

22. Wang, Y.; Cao, M.; Yang, Q.; Zhang, Y.; Wang, Z. YOLO-SSFA: A Lightweight Real-Time Infrared Detection Method for Small Targets. Information 2025, 16, 618.

23. Scapinello Aquino, L.; Rodrigues Agottani, L.F.; Seman, L.O.; Cocco Mariani, V.; Coelho, L.D.S.; González, G.V. Fault Detection in Power Distribution Systems Using Sensor Data and Hybrid YOLO with Adaptive Context Refinement. Appl. Sci. 2025, 15, 9186.

24. Ji, Y.; Ma, T.; Shen, H.; Feng, H.; Zhang, Z.; Li, D.; He, Y. Transmission Line Defect Detection Algorithm Based on Improved YOLOv12. Electronics 2025, 14, 2432.

25. Qian, X.; Li, Y.; Ding, X.; Luo, L.; Guo, J.; Wang, W.; Xing, P. A Real-Time DAO-YOLO Model for Electric Power Operation Violation Recognition. Appl. Sci. 2025, 15, 4492.

26. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

27. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

28. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

29. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

30. Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for activation functions. arXiv 2017, arXiv:1710.05941.

31. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

32. Tianchi Platform, Guangdong Power Information Technology Co., Ltd. Guangdong Power Grid Smart Field Operation Challenge, Track 3: High-Altitude Operation and Safety Belt Wearing Dataset. Dataset. 2021. Available online: https://tianchi.aliyun.com/specials/promotion/gzgrid (accessed on 23 July 2024).

33. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

34. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

35. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

36. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

37. Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In Proceedings of the 2022 IEEE International Conference on Image Processing, Bordeaux, France, 16–19 October 2022; pp. 966–970.

Figure 1.

Detection results of (a) the YOLOv12 model, (b) our DFA-YOLO model, and (c) the ground truth on the EPOVR-v1.0 dataset [25].

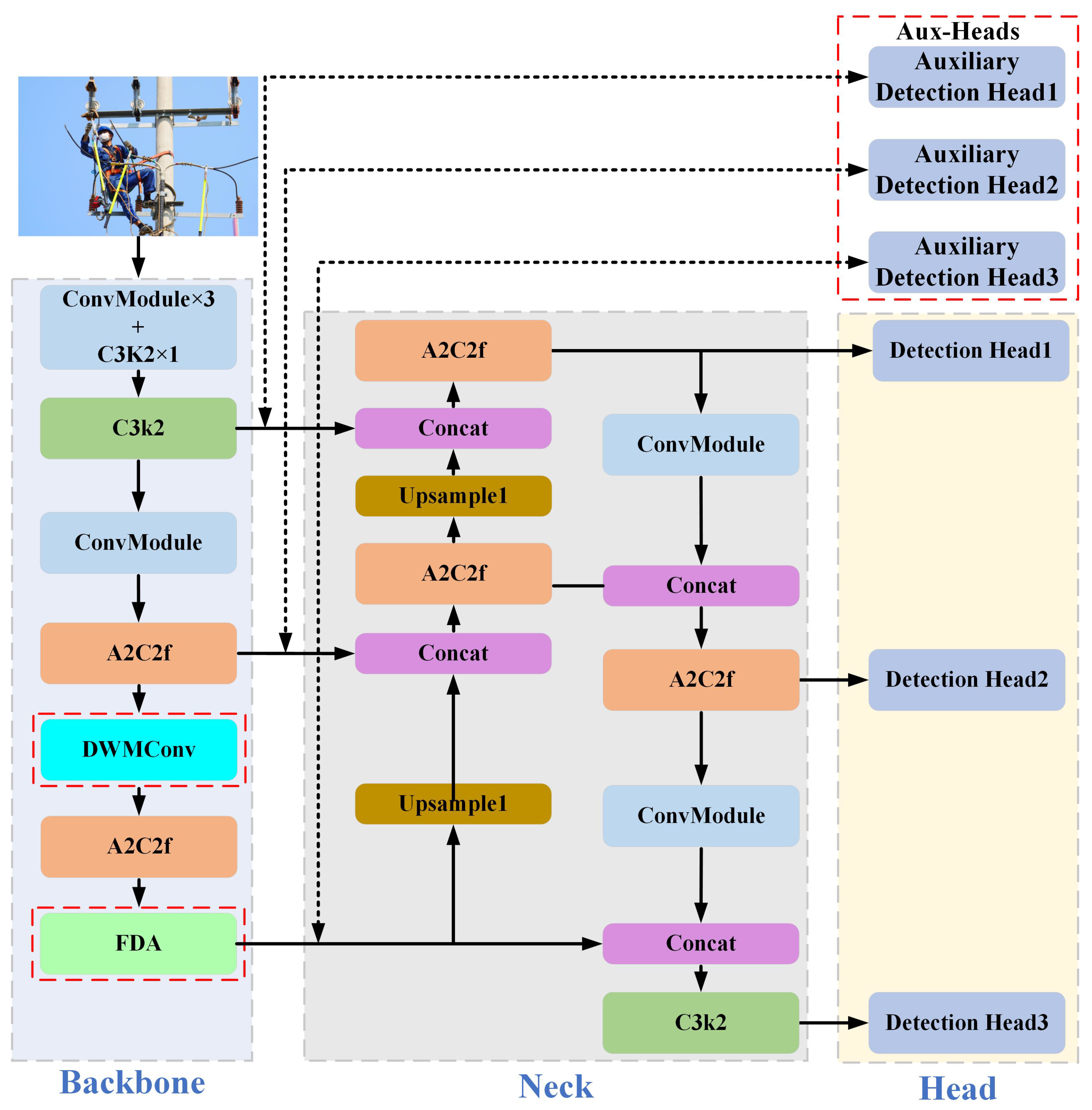

Figure 2.

Architecture of our DFA-YOLO model. The proposed DWMConv module, FDA module, and auxiliary detection heads are highlighted by red dashed boxes.

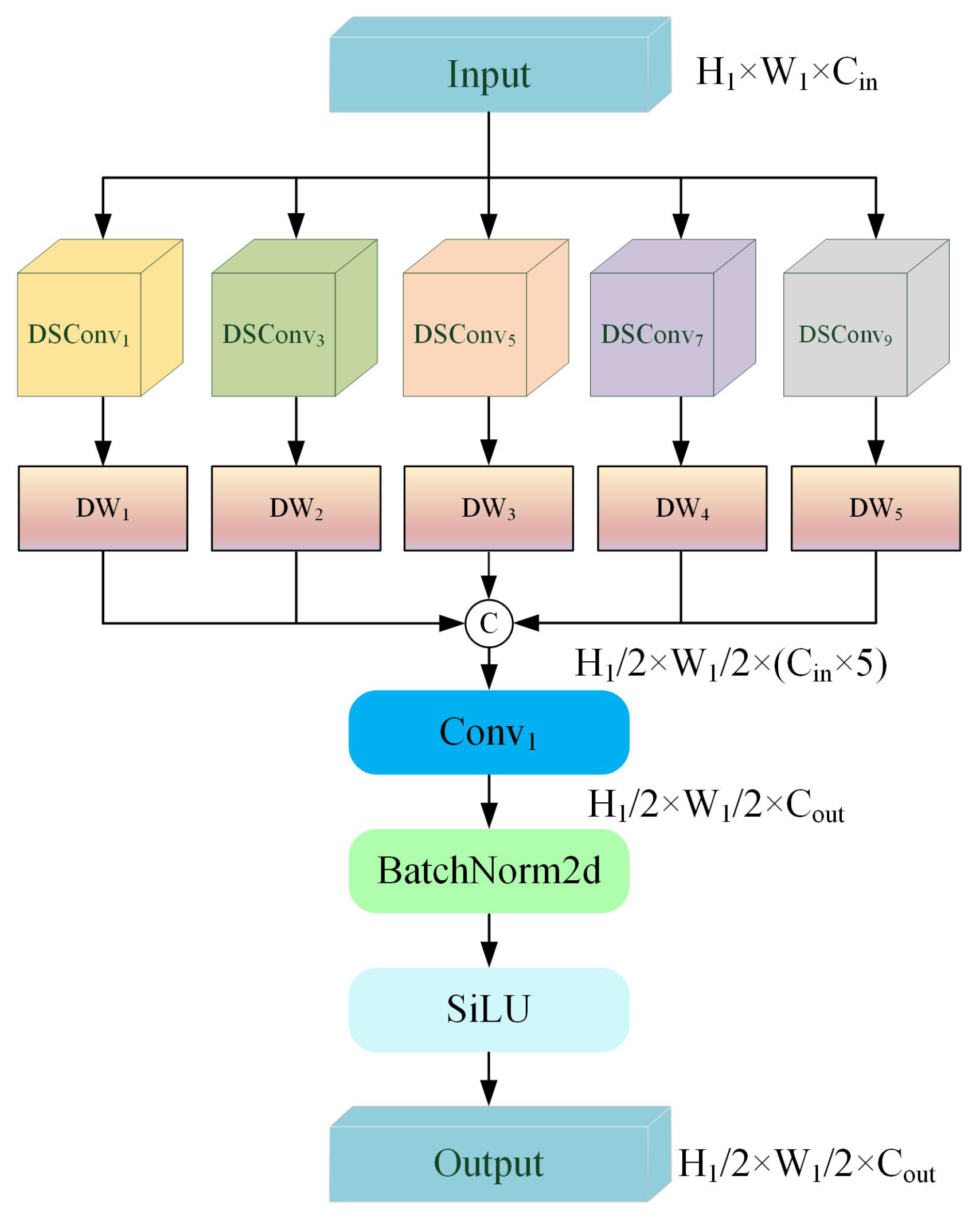

Figure 3.

Architecture of DWMConv module.

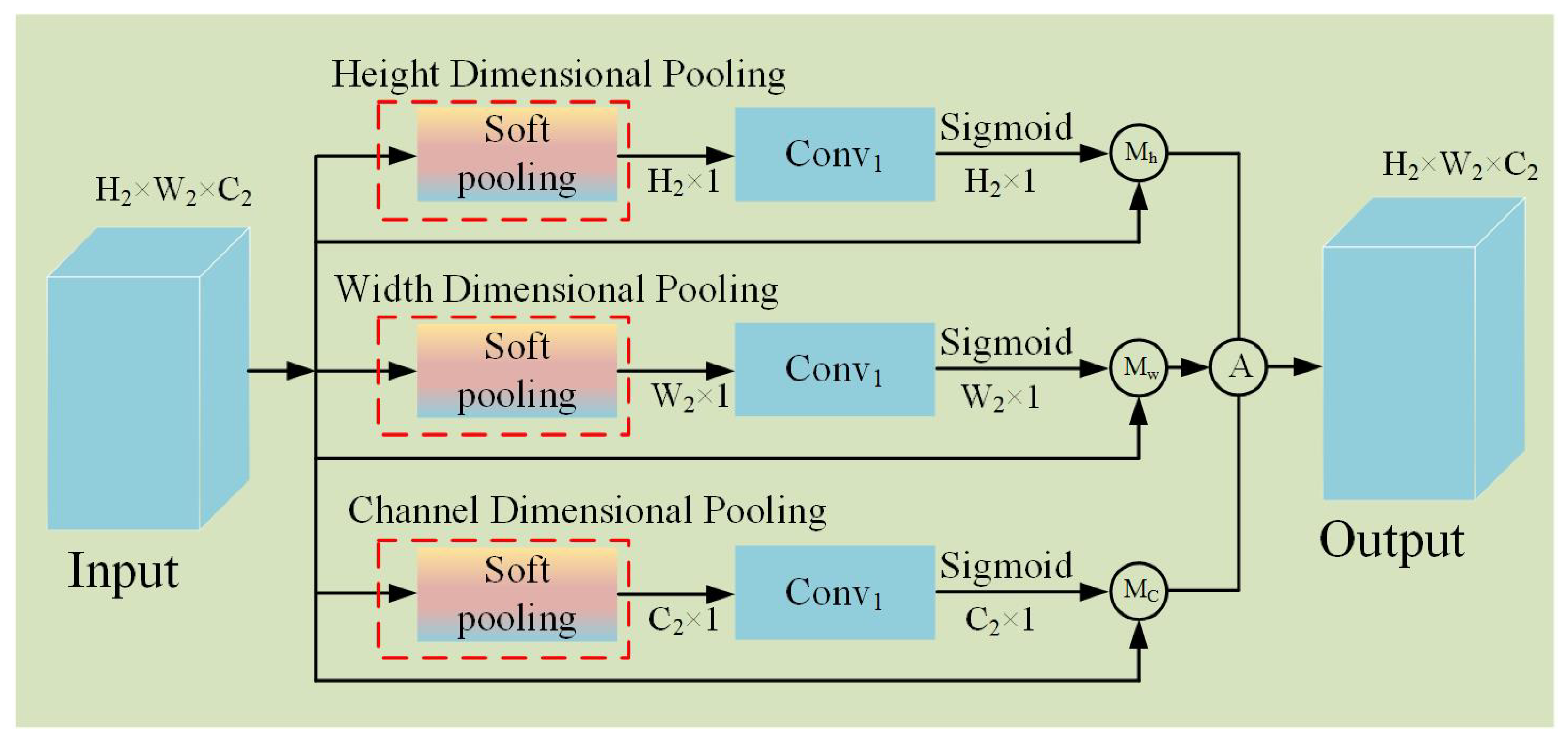

Figure 4.

Architecture of FDA module.

Figure 5.

Illustration of soft pooling.

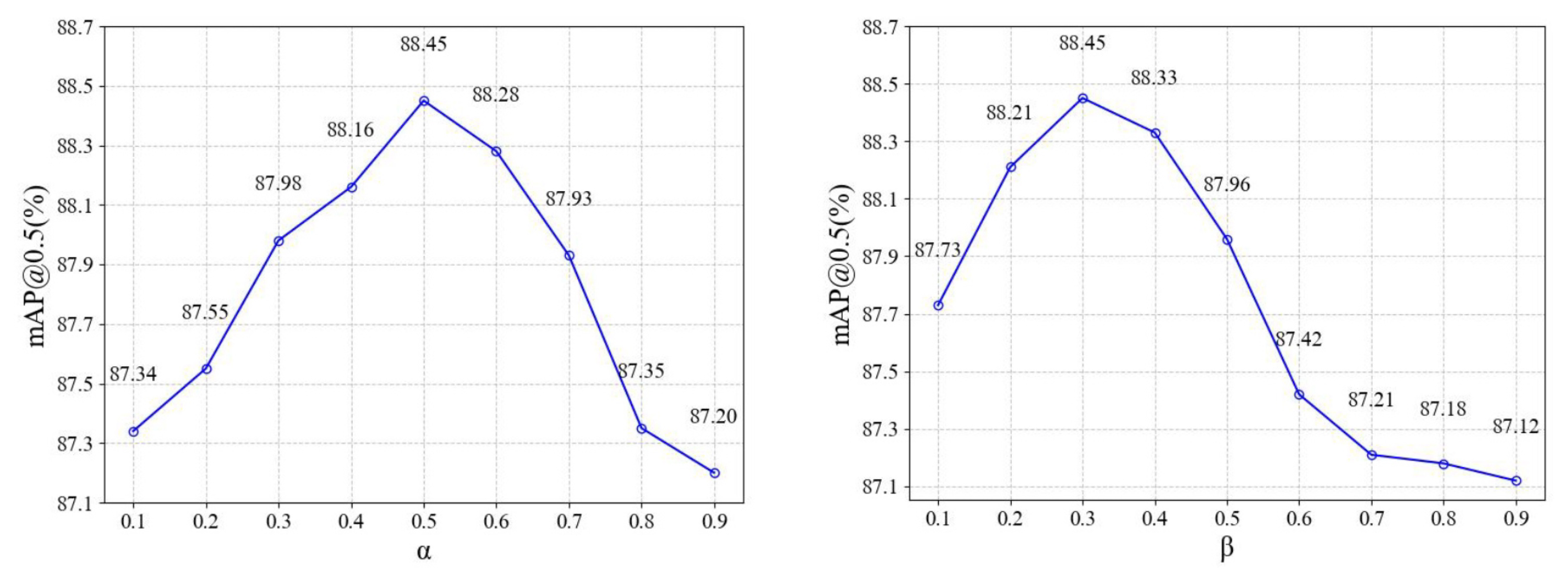

Figure 6.

Quantitative parameter analysis of and on the EPOVR-v1.0 dataset.

Figure 7.

Learning curve of our DFA-YOLO model on the EPOVR-v1.0 dataset.

Figure 8.

Qualitative comparison between the DFA-YOLO model and the baseline model. (a–f) are selected from the EPOVR-v1.0 dataset and the Alibaba Tianchi dataset, respectively.

Figure 9.

Quantitative illustration of failure cases. (a,b) are selected from the Alibaba Tianchi dataset.

Table 1.

Quantitative parameter analysis of kernel sizes in the DWMConv module.

| Model | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| Baseline | 85.30 | 60.10 | 2.51 | 5.83 | 94.34 |
| Baseline + DWMConv (1, 3, 5, 7, 9) | 86.75 | 61.94 | 2.40 | 5.74 | 98.53 |
| Baseline + DWMConv (3, 5, 7, 9, 11) | 86.14 | 61.37 | 2.42 | 5.75 | 98.11 |
| Baseline + DWMConv (1, 5, 9, 13, 17) | 85.67 | 60.66 | 2.46 | 5.92 | 92.13 |
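The parameter and GFLOPs trends in Table 1 can be sanity-checked with simple arithmetic. Assuming each DWMConv branch is a k×k depthwise convolution over C channels (C·k² weights, ignoring biases and normalization layers) and that the branches share one 1×1 pointwise convolution, the depthwise weight cost grows with the sum of squared kernel sizes. The channel count C = 64 below is hypothetical, so these figures only indicate relative cost and do not reproduce the whole-model totals reported in the table.

```python
from typing import Sequence


def depthwise_params(channels: int, kernel_sizes: Sequence[int]) -> int:
    """Weights of one k x k depthwise filter per channel, summed over all branches."""
    return sum(channels * k * k for k in kernel_sizes)


def pointwise_params(c_in: int, c_out: int) -> int:
    """Weights of a 1 x 1 convolution mixing c_in channels into c_out channels."""
    return c_in * c_out


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of an ordinary dense k x k convolution, for comparison."""
    return c_in * c_out * k * k


if __name__ == "__main__":
    C = 64  # hypothetical channel count
    for sizes in [(1, 3, 5, 7, 9), (3, 5, 7, 9, 11), (1, 5, 9, 13, 17)]:
        total = depthwise_params(C, sizes) + pointwise_params(C, C)
        print(sizes, depthwise_params(C, sizes), total)
    print("standard 3x3:", standard_conv_params(C, C, 3))
```

Under these assumptions the three kernel sets cost 10,560, 18,240, and 36,160 depthwise weights (14,656, 22,336, and 40,256 including the pointwise layer), compared with 36,864 for a dense 3×3 convolution with 64 input and 64 output channels. The ordering matches the trend in Table 1, where the largest kernel set is the most expensive, and the low cost of the (1, 3, 5, 7, 9) set is consistent with, though not a derivation of, the parameter reduction from 2.51 M to 2.40 M.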

Table 2.

Ablation experimental results of DFA-YOLO model.

| DWMConv | FDA | Aux-Heads | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| × | × | × | 85.30 | 60.10 | 2.51 | 5.83 | 94.34 |
| ✔ | × | × | 86.75 | 61.94 | 2.40 | 5.74 | 98.53 |
| × | ✔ | × | 86.53 | 61.89 | 2.55 | 5.84 | 93.86 |
| × | × | ✔ | 86.44 | 61.83 | 2.51 | 5.83 | 94.34 |
| ✔ | ✔ | × | 87.75 | 62.72 | 2.45 | 5.77 | 97.86 |
| ✔ | × | ✔ | 87.34 | 62.47 | 2.40 | 5.74 | 98.53 |
| × | ✔ | ✔ | 87.04 | 62.36 | 2.55 | 5.84 | 93.86 |
| ✔ | ✔ | ✔ | 88.45 | 64.23 | 2.45 | 5.77 | 97.86 |

Table 3.

Quantitative comparison between the FDA module and other attention modules. Bold fonts denote the best results.

| Model | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| Baseline | 85.30 | 60.10 | 2.51 | 5.83 | 94.34 |
| Baseline + ECA [34] | 85.75 | 60.73 | 2.53 | 5.83 | 94.34 |
| Baseline + CBAM [35] | 86.05 | 61.18 | 2.58 | 5.84 | 93.86 |
| Baseline + SE [36] | 86.11 | 61.34 | 2.52 | 5.83 | 94.34 |
| Baseline + FDA | 86.53 | 61.86 | 2.55 | 5.84 | 93.86 |

Table 4.

Quantitative comparison between the DWMConv module and the SAHI solution [37].

| Model | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| Baseline + SAHI | 85.30 | 60.10 | 2.51 | 5.83 | 94.34 |
| Baseline + DWMConv | 86.75 | 61.94 | 2.40 | 5.74 | 98.53 |
| Baseline + FDA + Aux-Heads + SAHI | 86.53 | 61.89 | 2.55 | 5.84 | 93.86 |
| Baseline + FDA + Aux-Heads + DWMConv | 88.45 | 64.23 | 2.45 | 5.77 | 97.68 |

Table 5.

Quantitative analysis in terms of training time and peak memory.

| Model | Training Time (h) | Peak Memory (GB) |
|---|---|---|
| Baseline (YOLOv12n) | 4.87 | 7.44 |
| Baseline + Aux-Heads | 6.05 | 8.19 |
| DFA-YOLO | 5.89 | 7.93 |

Table 6.

Comprehensive quantitative comparison results of DFA-YOLO model and other official YOLO models on the EPOVR-v1.0 dataset. The values of mAP@0.5, mAP@0.5–0.95, and FPS are shown in the form of ‘Mean ± STD’. * indicates that the Aux-Heads are used for ensemble prediction in the inference stage, and † denotes that the resolution of input images is changed to 1280 × 1280.

| Model | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| YOLOv5n [13] | 83.19 ± 0.13 | 57.32 ± 0.14 | 2.52 | 7.08 | 88.73 ± 2.1 |
| YOLOv6n [14] | 82.48 ± 0.12 | 56.94 ± 0.13 | 4.18 | 12.12 | 83.37 ± 1.8 |
| YOLOv7t [15] | 84.34 ± 0.07 | 58.48 ± 0.16 | 6.03 | 13.13 | 79.68 ± 1.7 |
| YOLOv8n [16] | 85.00 ± 0.06 | 59.47 ± 0.15 | 3.04 | 8.07 | 86.25 ± 1.5 |
| YOLOv9t [17] | 82.33 ± 0.12 | 57.66 ± 0.13 | 2.1 | 7.68 | 87.87 ± 1.3 |
| YOLOv10n [18] | 81.87 ± 0.11 | 56.83 ± 0.13 | 2.28 | 6.53 | 89.74 ± 2.1 |
| YOLOv11n [19] | 84.32 ± 0.13 | 59.03 ± 0.15 | 2.58 | 6.31 | 90.33 ± 1.4 |
| YOLOv12n [26] | 85.30 ± 0.09 | 60.10 ± 0.12 | 2.51 | 5.83 | 94.34 ± 1.3 |
| YOLOv13n [20] | 84.08 ± 0.08 | 59.88 ± 0.11 | 2.45 | 6.22 | 91.34 ± 1.6 |
| DFA-YOLO * | 87.59 ± 0.04 | 62.53 ± 0.05 | 2.58 | 6.38 | 90.15 ± 1.2 |
| DFA-YOLO † | 89.87 ± 0.05 | 66.38 ± 0.06 | 2.45 | 23.10 | 24.21 ± 1.3 |
| DFA-YOLO (Ours) | 88.45 ± 0.03 | 64.23 ± 0.04 | 2.45 | 5.77 | 97.86 ± 1.1 |

Table 7.

Comprehensive quantitative comparison results of the DFA-YOLO model and other official YOLO models on the Alibaba Tianchi dataset [32]. The values of mAP@0.5, mAP@0.5–0.95, and FPS are shown in the form of ‘Mean ± STD’. * indicates that the Aux-Heads are used for ensemble prediction in the inference stage, and † denotes that the resolution of input images is changed to 1280 × 1280.

| Model | mAP@0.5 (%) | mAP@0.5–0.95 (%) | Parameters (M) | GFLOPs | FPS |
|---|---|---|---|---|---|
| YOLOv5n | 90.92 ± 0.12 | 66.44 ± 0.13 | 2.52 | 7.08 | 89.23 ± 2.2 |
| YOLOv6n | 90.31 ± 0.11 | 65.83 ± 0.12 | 4.18 | 12.12 | 84.16 ± 1.9 |
| YOLOv7t | 92.13 ± 0.06 | 69.51 ± 0.15 | 6.03 | 13.13 | 80.24 ± 1.6 |
| YOLOv8n | 91.38 ± 0.05 | 69.18 ± 0.14 | 3.04 | 8.07 | 87.12 ± 1.4 |
| YOLOv9t | 90.48 ± 0.11 | 65.73 ± 0.12 | 2.1 | 7.68 | 88.47 ± 1.2 |
| YOLOv10n | 89.22 ± 0.10 | 64.18 ± 0.12 | 2.28 | 6.53 | 90.24 ± 2.0 |
| YOLOv11n | 90.38 ± 0.12 | 65.16 ± 0.14 | 2.58 | 6.31 | 91.28 ± 1.5 |
| YOLOv12n | 94.01 ± 0.08 | 74.96 ± 0.11 | 2.51 | 5.83 | 95.21 ± 1.5 |
| YOLOv13n | 93.89 ± 0.07 | 74.78 ± 0.10 | 2.45 | 6.22 | 92.68 ± 1.8 |
| DFA-YOLO * | 94.78 ± 0.04 | 73.86 ± 0.05 | 2.58 | 6.38 | 91.14 ± 1.2 |
| DFA-YOLO † | 95.89 ± 0.05 | 78.37 ± 0.06 | 2.45 | 23.10 | 24.21 ± 1.3 |
| DFA-YOLO (Ours) | 95.37 ± 0.03 | 77.25 ± 0.04 | 2.45 | 5.77 | 98.47 ± 1.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).