Authorship Attribution Methods, Challenges, and Future Research Directions: A Comprehensive Survey

Abstract

1. Introduction

1.1. Key Contributions

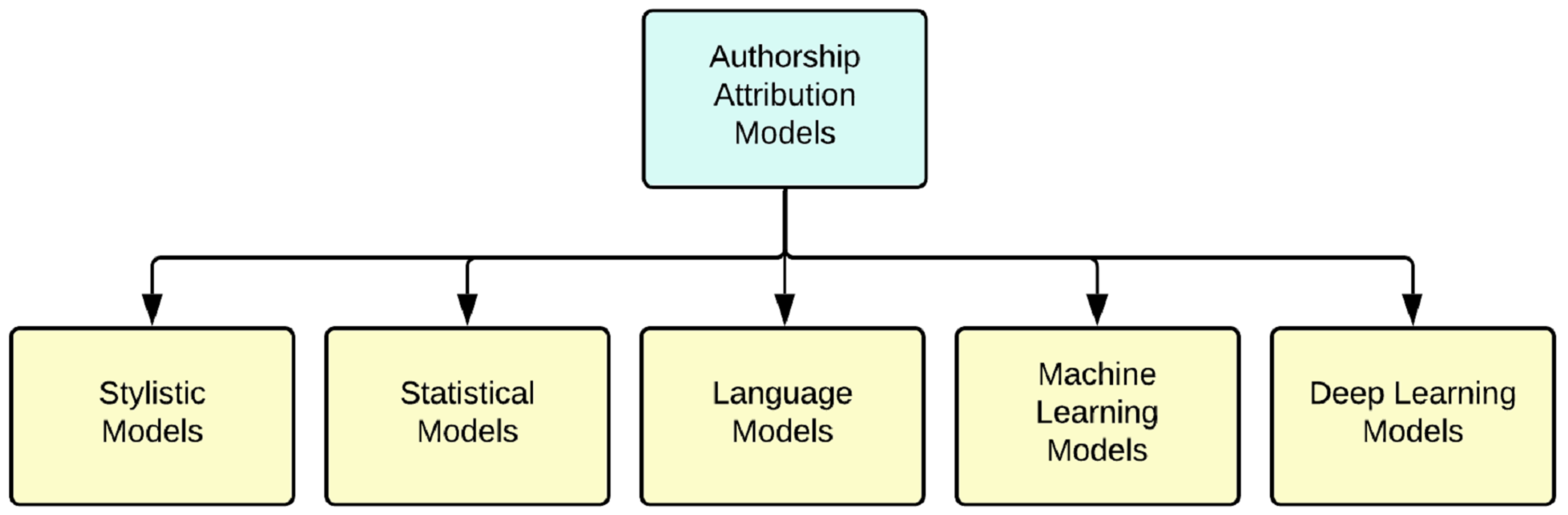

- We extensively surveyed the state-of-the-art authorship attribution methods and classified them into five categories based on their model characteristics: stylistic, statistical, language, machine learning, and deep learning. We synthesized the characteristics of the model types and also listed their shortcomings.

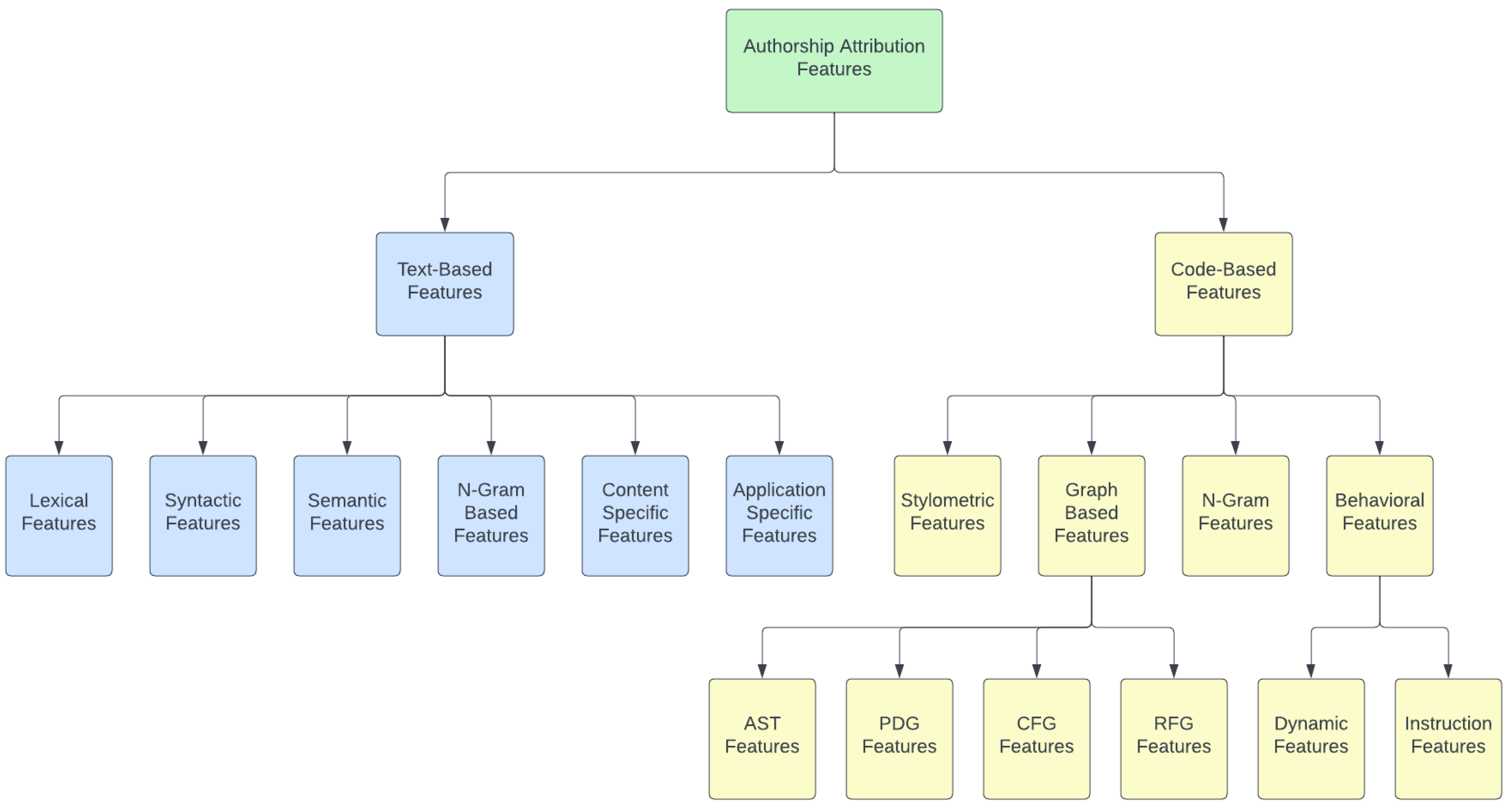

- We investigated which feature sets are used in state-of-the-art authorship attribution models. In particular, we surveyed state-of-the-art feature extraction methods and the feature types they use.

- We developed a taxonomy of authorship attribution features covering text-based and code-based feature sets. In addition, we synthesized the critical properties of each feature type and discussed their shortcomings.

- We surveyed the available datasets and summarized their applicability, size, and shortcomings.

- We surveyed the evaluation methods and metrics used to validate and assess the performance of authorship attribution methods.

- We discussed the key tasks that distinguish source code or text authorship attribution models from authorship verification models, and we briefly reviewed state-of-the-art authorship verification methods and their shortcomings.

- Lastly, we discussed the challenges and limitations identified in this survey and suggested directions for future work.

1.2. Paper Structure

- Section 2 discusses the key model types and their classification, synthesizing their characteristics and shortcomings.

- Section 3 presents the existing feature types and their taxonomy.

- Section 4 discusses the datasets primarily used in source code and/or text authorship attribution research, along with their shortcomings.

- Section 5 surveys the metrics used to evaluate the effectiveness of authorship attribution approaches, including accuracy, mean reciprocal rank, mean average precision, precision, recall, F-measure, algorithm complexity, and attribution accuracy under obfuscation.

- Section 6 provides a short review of existing authorship verification methods, helping readers understand how the authorship attribution task differs from the authorship verification task. It presents state-of-the-art authorship verification methods and their shortcomings.

- Section 7 discusses the challenges and limitations of the existing authorship attribution methods covered in this work.

- Section 8 discusses potential future work and suggests research directions in text, document, and/or source code authorship attribution.

- Section 9 concludes this survey work.

2. Review on Models and Their Classification

2.1. Stylistic Models

2.2. Language Models

2.3. Statistical Models

2.4. Machine Learning Models

2.5. Deep Learning Models

2.6. Synthesis

- Stylistic models, which take into account the style unique to each author.

- Statistical models, which apply computational, statistical, and mathematical methods to data to infer authorship and the confidence level of the results.

- Language models, which work with probability distributions over words and phrases.

- Machine learning models, which cast authorship attribution as a multi-class classification problem and aim to select the most effective classifier from a set of candidate classifiers.

- Deep learning models, which can capture more distinctive features and outperform certain machine learning methods on more complex attribution problems.

- Statistical methods have the disadvantage that they take into account neither the vocabulary used nor the theme of the suspect document.

- When selecting n for n-gram-based language models, a large value captures more lexical and contextual information but significantly increases the representation size, whereas a small value represents syllable-level units but is not enough to describe contextual information.

- Some feature extraction techniques, such as n-grams, cannot capture dependencies that span long distances in the text.

- Most research in the field assumes that each document or program is written by a single author, which is often not the case in practice. For example, many files have a primary author who contributed most of the code, with other authors making minor additions and modifications. Such scenarios can degrade accuracy.

- Finding a sufficiently large and balanced dataset has always been a challenge in this field, and short texts are much harder to attribute accurately.

- For extracting the style of source code authors, some approaches are programming-language-dependent and therefore cannot be transferred to other languages.

- For some statistical and machine learning models, selecting metrics is not straightforward; for example, thresholds must be set to eliminate metrics that contribute little to the classifier.

- Model accuracy decreases as the number of authors increases, and this degradation is more pronounced for some machine learning models: the accuracy of random forest and SVM models degrades more than that of neural networks such as LSTM and BiLSTM.

- Neural networks do not generalize well when there are too few source code samples, because there is not enough information to learn from.

- Most of the existing models cannot provide any explanations for the attribution results.
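The n-gram trade-off listed above can be made concrete with a short sketch. The Python below is our own illustration, not code from any surveyed work. It counts the distinct character n-grams of a toy sentence for n from 1 to 5. That count is the dimension of the feature vector a model would have to handle. The sample sentence and the names `char_ngrams` and `feature_space_sizes` are hypothetical.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count overlapping character n-grams, including spaces and punctuation."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def feature_space_sizes(text: str, max_n: int) -> dict[int, int]:
    """Number of distinct n-grams (the feature-vector dimension) for n = 1..max_n."""
    return {n: len(char_ngrams(text, n)) for n in range(1, max_n + 1)}


# Hypothetical toy input; real corpora produce far larger vocabularies.
sample = "the cat sat on the mat and the dog sat on the log"
sizes = feature_space_sizes(sample, 5)
for n, size in sizes.items():
    print(f"n={n}: {size} distinct character n-grams")

# A window of n characters cannot relate two tokens that lie farther apart
# than n characters, which is why n-grams miss long-distance dependencies.
```

On this sentence the feature space grows from 13 distinct unigrams to 25 bigrams and 30 trigrams. On a realistic corpus the growth is much steeper. The short windows of a small n, by contrast, carry little context.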

3. Review on Feature Types and Their Classification

3.1. Text-Based Features

3.1.1. Lexical Features

| Paper | Features |
|---|---|
| Tamboli and Prasad [39] | Character n-grams (including space, special characters, punctuation), word-to-character ratio, average word length (AWL), average word length per sentence (AWLS), average character length per sentence (ACLS), function word distributions, total lines, words, punctuation, alphabet cases, short words, big words, cognitive error [125] |
| Segarra et al. [22] | Function words, directed proximity between two words, window length, sentence delimiters |
| Koppel et al. [130] | Function words, word length distributions, word contents |
| Argamon and Levitan [126], Zhao and Zobel [127], Yu [128], Kestemont [129] | Function words |
| Ahmed et al. [132] | Characters, sentence length [131], word length [47], rhyme, first word in a sentence |
| Holmes [133], Yavanoglu [117] | Number of nouns, verbs, adjectives and adverbs |
| Ali et al. [19] | 2–3 Letter Words, 2–4 Letter Words, 3–4 Letter Words, Character Bigrams, Unicode Character frequencies, Character Tetragrams, Character Trigrams, Dis Legomena, Hapax Legomena, Hapax-Dis Legomena, MW function Words, Words frequencies, Vowel 2–3 letters words, Vowel 2–4 letters words, Vowel 3–4 letters words, Vowel initial words, Word Bigrams, Word Length, Syllables per word, Word Tetragrams, Word Trigrams |
| Zhao and Zobel [62] | Frequent words |
| Can and Patton [134] | Number of tokens, token types, average token length, average type length |
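The following Python sketch illustrates how several lexical features from the table can be computed. It derives average word length, word-to-character ratio, hapax and dis legomena counts, and function-word frequencies. It assumes naive regular-expression tokenization and a deliberately tiny, hypothetical function-word list. None of the surveyed papers is claimed to use exactly this procedure.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny function-word list; real studies use
# curated lists with dozens to hundreds of entries.
FUNCTION_WORDS = ("the", "of", "and", "to", "a", "in", "upon", "by")


def lexical_features(text: str) -> dict:
    """Compute a handful of lexical features of the kind listed in the table."""
    words = re.findall(r"[a-z']+", text.lower())  # naive tokenization (assumption)
    if not words:
        raise ValueError("text contains no words")
    counts = Counter(words)
    n_words = len(words)
    features = {
        "num_words": n_words,
        "avg_word_length": sum(len(w) for w in words) / n_words,
        "word_to_char_ratio": n_words / len(text),
        # Hapax legomena occur exactly once; dis legomena exactly twice.
        "hapax_legomena": sum(1 for c in counts.values() if c == 1),
        "dis_legomena": sum(1 for c in counts.values() if c == 2),
    }
    # Relative frequency of each function word, normalized by word count.
    for fw in FUNCTION_WORDS:
        features[f"fw_{fw}"] = counts[fw] / n_words
    return features


print(lexical_features("The cat and the dog ran to the park."))
```

Each returned dictionary is one stylometric profile. A classifier would then compare the profiles of known and disputed texts.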

3.1.2. Syntactic Features

3.1.3. Semantic Features

3.1.4. N-Gram-Based Features

3.1.5. Content-Specific Features

3.1.6. Application-Specific Features

3.2. Code-Based Features

3.2.1. Stylometric Features

3.2.2. N-Gram Features

3.2.3. Graph-Based Features

AST Features

Program Dependence Graph (PDG) Features

CFG Features

RFG Features

3.2.4. Behavioral Features

Dynamic Features

Instruction Features

3.2.5. Neural-Network-Generated Representations of Source Code or Features

3.2.6. Select Influential Features

3.2.7. Explanation of Attribution through Features

3.3. Synthesis

- Text-based features

  - Lexical information tends to overlook sentence structure and textual complexity.

  - For n-gram features, a large n significantly increases the representation size.

  - N-gram features fall short when representing contextual information.

  - Content-specific features focus on the substantive content of a work; their weakness lies in their inability to consider the structure and style of the text.

- Code-based features

  - Stylometric features are usually language-dependent; some traits that are useful for one programming language cannot be used for another.

  - The keywords, statements, and structures available in source code are limited and constrained by coding guidelines, so stylometric features are often not very distinctive of an individual's coding style.

  - Some style-related and layout-related characteristics are distinctive but easily changed by code formatting tools, so they lack robustness to obfuscators.

  - Stylometric features must be extracted either manually, which is time-consuming, or with tools, which is usually quick.

  - Unlike features obtained through static analysis, dynamic and instruction features require an extra procedure: the code must be compiled and executed in a specific runtime environment.

  - These features can be obtained only from executable code, so they are hard to extract from code fragments.
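Layout features are distinctive yet easily changed by formatting tools. A minimal Python sketch can illustrate this point. It measures two hypothetical layout features on a small C function. The first version uses an Allman-style, tab-indented layout. The second uses a K&R-style, space-indented layout, written by hand to mimic a formatter's output. The feature definitions and the function `layout_features` are our assumptions, not features taken from a specific surveyed paper.

```python
def layout_features(source: str) -> dict[str, float]:
    """Two illustrative layout features: the share of indented lines that use
    tabs, and the share of lines containing '{' where the brace stands alone."""
    lines = [ln for ln in source.splitlines() if ln.strip()]
    indented = [ln for ln in lines if ln[0] in " \t"]
    brace_lines = [ln for ln in lines if "{" in ln]
    tab_ratio = (sum(ln.startswith("\t") for ln in indented) / len(indented)
                 if indented else 0.0)
    own_line_ratio = (sum(ln.strip() == "{" for ln in brace_lines) / len(brace_lines)
                      if brace_lines else 0.0)
    return {"tab_indent_ratio": tab_ratio, "brace_own_line_ratio": own_line_ratio}


# The same C function in two layouts. The second is hand-written to mimic what
# an automatic formatter could produce; the program's behavior is unchanged.
ORIGINAL = "int f(int x)\n{\n\tif (x > 0)\n\t{\n\t\treturn x;\n\t}\n\treturn -x;\n}\n"
REFORMATTED = "int f(int x) {\n    if (x > 0) {\n        return x;\n    }\n    return -x;\n}\n"

print(layout_features(ORIGINAL))     # both ratios are 1.0
print(layout_features(REFORMATTED))  # both ratios drop to 0.0
```

Both versions behave identically, yet both features flip from 1.0 to 0.0. For this reason, such features offer little robustness against formatters and obfuscators.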

4. Review on Available Datasets

4.1. Sizes of Datasets

4.2. Sample Size Per Author

4.3. Datasets from the “Wild”

4.4. Synthesis

- There are no large benchmark datasets that are widely accepted by researchers in the domain of source code authorship attribution.

- There is no consensus on the sample size per author.

- Some researchers claimed high accuracies, but their experiments were conducted on small datasets, so the soundness of these results is questionable.

- Google Code Jam (GCJ) data are more reliable than GitHub data because all files are solutions to a limited set of coding questions and their author labels are more reliable. However, most GCJ authors have more advanced skills and experience, so GCJ data are also more imbalanced.

- GitHub provides a large number of source files containing the most realistic programs, but these come with issues related to collaboration among multiple authors and code reuse. Although some researchers have proposed solutions to these issues, such as the use of Git commits and Git-author, most authorship attribution tasks assume a single author, which makes authorship attribution on GitHub datasets problematic.
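There is no consensus on the number of samples per author, and some datasets are imbalanced. It therefore helps to report per-author statistics before training. The Python sketch below uses hypothetical author labels. It computes the number of authors, the minimum and maximum samples per author, and their ratio. It also shows one simple way a study could enforce its own minimum of `k` samples per author. The threshold `k=3` is an arbitrary assumption for illustration.

```python
from collections import Counter


def author_balance(labels: list[str]) -> dict:
    """Summarize how many samples each author contributes."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("dataset has no labeled samples")
    smallest, largest = min(counts.values()), max(counts.values())
    return {
        "num_authors": len(counts),
        "min_per_author": smallest,
        "max_per_author": largest,
        # Imbalance ratio: size of the largest author class over the smallest.
        "imbalance_ratio": largest / smallest,
    }


def keep_authors_with_at_least(samples: list, labels: list[str], k: int) -> list[tuple]:
    """Drop every author with fewer than k samples (hypothetical policy)."""
    counts = Counter(labels)
    return [(s, a) for s, a in zip(samples, labels) if counts[a] >= k]


labels = ["alice"] * 9 + ["bob"] * 3 + ["carol"]
print(author_balance(labels))
kept = keep_authors_with_at_least(list(range(len(labels))), labels, k=3)
print(len(kept))
```

For these labels the imbalance ratio is 9.0. Keeping authors with at least three samples drops the single sample by `carol`, which leaves 12 samples.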

5. Review on Model Evaluation Metrics

5.1. Accuracy

5.2. Mean Reciprocal Rank and Mean Average Precision
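Ranking metrics apply when a model returns a ranked list of candidate authors rather than a single label. The following Python sketch is our illustration and uses hypothetical author and document IDs. It computes two metrics:

- Mean reciprocal rank (MRR): each query contributes 1/rank of the true author.
- Mean average precision (MAP): average precision is the mean of precision@k taken at each rank k that holds a relevant item, normalized by the number of relevant items. This suits retrieval-style setups where several documents by the queried author are relevant.

```python
def reciprocal_rank(ranking: list[str], true_author: str) -> float:
    """1 / (1-based rank of the true author), or 0.0 if it is not in the ranking."""
    for rank, candidate in enumerate(ranking, start=1):
        if candidate == true_author:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(rankings: list[list[str]], truths: list[str]) -> float:
    return sum(reciprocal_rank(r, t) for r, t in zip(rankings, truths)) / len(truths)


def average_precision(ranking: list[str], relevant: set[str]) -> float:
    """Mean of precision@k over the ranks k that hold a relevant item."""
    if not relevant:
        return 0.0
    hits, total = 0, 0.0
    for k, item in enumerate(ranking, start=1):
        if item in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant)


def mean_average_precision(rankings: list[list[str]], relevant_sets: list[set[str]]) -> float:
    scores = [average_precision(r, rel) for r, rel in zip(rankings, relevant_sets)]
    return sum(scores) / len(scores)


# Three queries, each with a ranked list of candidate authors.
rankings = [["A", "B", "C"], ["B", "C", "A"], ["C", "A", "B"]]
truths = ["A", "A", "A"]
print(mean_reciprocal_rank(rankings, truths))  # 11/18, about 0.611

# Retrieval-style query where two documents by the queried author are relevant.
print(average_precision(["d1", "d2", "d3", "d4"], {"d1", "d3"}))  # 5/6, about 0.833
```

Here the true author appears at ranks 1, 3, and 2, so MRR = (1 + 1/3 + 1/2)/3 = 11/18.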

5.3. Precision, Recall, and F-Measure
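Precision, recall, and F-measure are computed per author and then averaged. The Python sketch below computes macro-averaged scores. It treats each author as the positive class in turn and weights all authors equally, so rarely seen authors count as much as prolific ones. Returning 0.0 when a denominator is zero is an assumed convention, and the labels are hypothetical.

```python
def macro_prf(y_true: list[str], y_pred: list[str]) -> tuple[float, float, float]:
    """Macro-averaged precision, recall, and F1 over all authors seen."""
    authors = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for a in authors:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == a and p == a for t, p in pairs)
        fp = sum(t != a and p == a for t, p in pairs)
        fn = sum(t == a and p != a for t, p in pairs)
        # Assumed convention: a zero denominator yields a score of 0.0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    k = len(authors)
    return sum(precisions) / k, sum(recalls) / k, sum(f1s) / k


y_true = ["A", "A", "B", "B", "C"]
y_pred = ["A", "B", "B", "B", "A"]
print(macro_prf(y_true, y_pred))
```

On these five predictions, plain accuracy is 3/5 = 0.6. Macro precision, recall, and F1 are 7/18, 0.5, and 13/30 respectively, because author C's only sample is misclassified.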

5.4. Algorithm Complexity

5.5. Attribution Accuracy under Obfuscation

6. Review on Authorship Verification Methods

- Deep neural network-based AV approaches integrate feature extraction into a deep learning framework for authorship verification [115,188,189]. Similarity learning and attention-based neural network models have been developed to verify the authorship of social media messages [190,191]. An attention-based Siamese network topology learns linguistic features using a bidirectional recurrent neural network. Recently, Hu et al. [189] proposed a topic-based stylometric representation learning approach for authorship verification.

- There is a lack of suitable publicly available corpora for training and evaluating models, and the metadata of existing public corpora lack attributes such as the precise time, location, and topic category of the texts.

- There is little research on how message length affects the results of AV models. An author's writing characteristics can shift dramatically with the situation, for example, when posting short messages on social media platforms.

- There is limited investigation of topical influence. In AV problems, the topic of the documents is not always known beforehand, which makes recognizing writing style challenging. In addition, existing AV methods are not robust against topical influence [178].
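The following Python sketch contrasts verification with attribution. It implements a simple, classical AV baseline rather than the neural approaches described above. It compares the character trigram frequency profiles of a known text and a questioned text by cosine similarity. It answers "same author" when the similarity reaches a threshold. The threshold of 0.5 and all function names are hypothetical; in practice the threshold would be tuned on validation pairs.

```python
import math
from collections import Counter


def profile(text: str, n: int = 3) -> Counter:
    """Character n-gram frequency profile of a text."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def cosine(p: Counter, q: Counter) -> float:
    """Cosine similarity between two sparse frequency profiles."""
    dot = sum(p[g] * q[g] for g in p.keys() & q.keys())
    norm = (math.sqrt(sum(v * v for v in p.values()))
            * math.sqrt(sum(v * v for v in q.values())))
    return dot / norm if norm else 0.0


def same_author(known: str, questioned: str, threshold: float = 0.5, n: int = 3) -> bool:
    """Binary verification decision for a single pair of texts."""
    return cosine(profile(known, n), profile(questioned, n)) >= threshold


known = "I reckon the weather will turn fair by evening, I reckon."
questioned = "I reckon the roads will be clear by evening."
print(round(cosine(profile(known), profile(questioned)), 3))
print(same_author(known, questioned))
```

Attribution chooses among candidate authors. Here, by contrast, the output is a yes/no decision about a single pair of texts.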

7. Challenges and Limitations

- Limitations with models: Various techniques have been employed in this field, categorized as stylistic, statistical, language, machine learning, and deep learning models. Based on our review, these methods face the following challenges and limitations:

  - Statistical Methods: They consider neither vocabulary nor document themes, which limits their effectiveness.

  - Language Models: Selecting the optimal value of ‘n’ in n-grams is challenging, as larger values increase representation size, while smaller values lack contextual information.

  - Feature Extraction: Some techniques struggle to capture long-distance dependencies in text.

  - Multiple Authors: Many documents and programs involve multiple authors, which reduces the accuracy of methods that assume a single author.

  - Data Availability: Finding large and balanced datasets is challenging, and short texts are harder to attribute accurately.

  - Language Dependency: Some approaches are specific to one programming language.

  - Metric Selection: Determining which metrics are useful for a model can be complex.

  - Model Scalability: Some models suffer accuracy degradation as the number of authors increases.

  - Generalization: Neural networks may struggle to generalize from limited samples.

  - Explainability: Most models provide no explanation for their results.

- Limitations with datasets: There are several key issues and challenges related to datasets in the authorship attribution research domain, as follows:

  - Lack of benchmark and standardization: State-of-the-art research lacks widely accepted large benchmark datasets, hindering standardization and robust evaluation of models.

  - Variability in data sample size per author: There is no consensus on the ideal sample size per author, leading to variability in research approaches.

  - Data validity and imbalance issues: Results obtained on small datasets raise concerns about their validity. Even reliable datasets can suffer from imbalance, affecting the representativeness of the data and potentially biasing attribution models.

  These limitations underscore the need for more comprehensive and standardized datasets.

- Limitations with feature sets: In this survey, we found the following limitations and challenges for feature sets:

  - Limitations with text-based feature sets: Lexical features can overlook structural nuances such as sentence structure and textual complexity. The choice of ‘n’ in n-gram features affects both representation size and context: larger values increase the representation size, while smaller values lack contextual information. Moreover, content-specific features focus on content and may overlook the text’s structural and stylistic elements.

  - Limitations with code-based feature sets: Stylometric features are language-dependent and may not generalize effectively across programming languages. The limited set of keywords and language elements restricts the discriminative power of these features. Some style- and layout-related features are also vulnerable to alterations by code formatting tools, reducing their robustness. Furthermore, extracting stylometric features can be a complex and time-consuming process. Other code-based features, such as graph-based and dynamic features, face challenges in extraction, language dependence, and the need for additional procedures such as compilation and execution. Finally, the exploration of feature categories has been limited, and many existing models lack feature-wise explanations for their results.

8. Future Research Directions

- Code reuse detection and dynamic author profiling: Authors of both malware and legitimate software reuse existing code and libraries to save time and effort [9]. Dynamic author profiling methods can adapt authorship attribution to track an author's changing or evolving coding style over time. Identifying code reuse through clone detection techniques, together with dynamic author profiling, is a potential direction for future work.

- Multi-author attribution: Multi-author attribution focuses on determining which of several authors wrote each part of a program or document. It is challenging to delimit the code written by different authors within a sample and to identify the segments belonging to each author. Developing multi-author attribution models that effectively and accurately identify the code segments belonging to each author is a potential direction for future work.

- Explainable and interpretable models for authorship attribution: An explainable and interpretable model helps users understand why it made a particular prediction for the given input variables. Identifying the most influential features or predictor variables and their role in a prediction is challenging with linguistic or stylometric features. Designing and developing explainable and interpretable authorship attribution methods is a potential future research topic.

- Advancement in deep learning models: Numerous works leverage deep learning models for authorship attribution. Their performance could be further improved by combining advanced deep neural architectures with suitable loss functions. Investigating recent advances in deep learning models (e.g., LSTM, autoencoders, CNNs, BERT, and GPT) and their possible combinations with different loss functions is an interesting potential research topic.

- Against obfuscation or adversarial attack: Authorship obfuscation is a protective countermeasure that aims to evade authorship attribution by obfuscating the writing style of a text [192]. Most existing authorship obfuscation approaches are rule-based or learning-based; they do not consider an adversarial model and cannot defend against adversarial attacks on authorship attribution systems. Developing obfuscation approaches that account for the adversarial setting could be a potential future research direction in authorship attribution.

- Open-world problem: Open-world classification deals with scenarios in which the set of classes is not known in advance. In authorship attribution, if the set of potential authors is not known in advance, the model must generalize from the known authors and recognize authors it has never seen before. Dynamic authorship modeling, incremental learning, and ensemble models can adapt to changing authorship styles and characteristics over time. In addition, research on authorship attribution methods in blockchain and quantum computing technologies could be an interesting research direction.

- Dynamic and incremental authorship attribution: Dynamic and incremental authorship attribution methods identify authors in dynamic settings where they change their writing or coding styles over time. This task is more crucial and challenging because authorship patterns evolve and work environments change over time. Adaptive and incremental machine learning models can capture changing authorship patterns by continuously training and updating on new data. Similarly, time series analysis and change-point detection methods can detect shifts in authorship patterns, allowing new coding or writing styles to be recognized. Continuous evaluation and validation of these models is crucial to ensure their performance over time.
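The following Python sketch is a minimal illustration of change-point detection for evolving styles. It finds the single split that best divides a sequence of per-sample style measurements into two segments with constant means, by minimizing the total squared error. The series, for example, an author's average identifier length per commit, is invented for illustration. This least-squares search is a generic technique, not a method proposed by the surveyed works.

```python
from typing import Optional


def single_change_point(series: list[float], min_size: int = 2) -> Optional[int]:
    """Index i that minimizes the squared error of series[:i] and series[i:]
    around their own means; None if the series is too short to split."""
    def sse(segment: list[float]) -> float:
        mean = sum(segment) / len(segment)
        return sum((x - mean) ** 2 for x in segment)

    best_index, best_cost = None, float("inf")
    for i in range(min_size, len(series) - min_size + 1):
        cost = sse(series[:i]) + sse(series[i:])
        if cost < best_cost:
            best_index, best_cost = i, cost
    return best_index


# Hypothetical per-commit measurements of one author's style feature.
series = [3.1, 3.0, 3.2, 3.1, 3.0, 5.9, 6.1, 6.0, 5.8, 6.2]
print(single_change_point(series))  # 5
```

Here the split falls at index 5, where the hypothetical measurements jump from about 3 to about 6. A practical system would monitor many features and use multiple or online change-point methods.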

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Al-Sarem, M.; Saeed, F.; Alsaeedi, A.; Boulila, W.; Al-Hadhrami, T. Ensemble Methods for Instance-Based Arabic Language Authorship Attribution. IEEE Access 2020, 8, 17331–17345.

2. Mechti, S.; Almansour, F. An Orderly Survey on Author Attribution Methods: From Stylistic Features to Machine Learning Models. Int. J. Adv. Res. Eng. Technol. 2021, 12, 528–538.

3. Swain, S.; Mishra, G.; Sindhu, C. Recent approaches on authorship attribution techniques—An overview. In Proceedings of the 2017 International conference of Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 20–22 April 2017; IEEE: Piscataway, NJ, USA, 2017; Volume 1, pp. 557–566.

4. Rocha, A.; Scheirer, W.J.; Forstall, C.W.; Cavalcante, T.; Theophilo, A.; Shen, B.; Carvalho, A.R.B.; Stamatatos, E. Authorship Attribution for Social Media Forensics. IEEE Trans. Inf. Forensics Secur. 2017, 12, 5–33.

5. Theophilo, A.; Giot, R.; Rocha, A. Authorship Attribution of Social Media Messages. IEEE Trans. Comput. Soc. Syst. 2023, 10, 10–23.

6. Spafford, E.H.; Weeber, S.A. Software forensics: Can we track code to its authors? Comput. Secur. 1993, 12, 585–595.

7. Bull, J.; Collins, C.; Coughlin, E.; Sharp, D. Technical Review of Plagiarism Detection Software Report; Computer Assisted Assessment Centre: Luton, UK, 2001.

8. Culwin, F.; MacLeod, A.; Lancaster, T. Source Code Plagiarism in UK HE Computing Schools, Issues, Attitudes and Tools; Technical Report SBU-CISM-01-02; South Bank University: London, UK, 2001.

9. Kalgutkar, V.; Kaur, R.; Gonzalez, H.; Stakhanova, N.; Matyukhina, A. Code authorship attribution: Methods and challenges. ACM Comput. Surv. CSUR 2019, 52, 1–36.

10. Li, Z.; Chen, G.Q.; Chen, C.; Zou, Y.; Xu, S. RoPGen: Towards Robust Code Authorship Attribution via Automatic Coding Style Transformation. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 21–29 May 2022; pp. 1906–1918.

11. Zheng, W.; Jin, M. A review on authorship attribution in text mining. Wiley Interdiscip. Rev. Comput. Stat. 2023, 15, e1584.

12. Stamatatos, E. A survey of modern authorship attribution methods. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 538–556.

13. Juola, P. Authorship attribution. Found. Trends Inf. Retr. 2008, 1, 233–334.

14. Mosteller, F.; Wallace, D.L. Applied Bayesian and Classical Inference: The Case of The Federalist Papers; Springer: Berlin/Heidelberg, Germany, 2012.

15. Zheng, R.; Li, J.; Chen, H.; Huang, Z. A framework for authorship identification of online messages: Writing-style features and classification techniques. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 378–393.

16. Jin, M.; Jiang, M. Text clustering on authorship attribution based on the features of punctuations usage. In Proceedings of the 2012 IEEE 11th International Conference on Signal Processing, Beijing, China, 21–25 October 2012; IEEE: Piscataway, NJ, USA, 2012; Volume 3, pp. 2175–2178.

17. Stuart, L.M.; Tazhibayeva, S.; Wagoner, A.R.; Taylor, J.M. Style features for authors in two languages. In Proceedings of the 2013 IEEE/WIC/ACM International Joint Conferences on Web Intelligence (WI) and Intelligent Agent Technologies (IAT), Atlanta, GA, USA, 17–20 November 2013; IEEE: Piscataway, NJ, USA, 2013; Volume 1, pp. 459–464.

18. Hinh, R.; Shin, S.; Taylor, J. Using frame semantics in authorship attribution. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 004093–004098.

19. Ali, N.; Hindi, M.; Yampolskiy, R.V. Evaluation of authorship attribution software on a Chat bot corpus. In Proceedings of the 2011 XXIII International Symposium on Information, Communication and Automation Technologies, Sarajevo, Bosnia and Herzegovina, 27–29 October 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–6.

20. Evaluating Variation in Language (EVL) Lab. Java Graphical Authorship Attribution Program Classifiers. 2010. Available online: https://github.com/evllabs/JGAAP/tree/master/src/com/jgaap/classifiers (accessed on 20 December 2023).

21. Goodman, R.; Hahn, M.; Marella, M.; Ojar, C.; Westcott, S. The use of stylometry for email author identification: A feasibility study. In Proceedings of the Student/Faculty Research Day (CSIS) Pace University, White Plains, NY, USA, 4 May 2007; pp. 1–7.

22. Segarra, S.; Eisen, M.; Ribeiro, A. Authorship attribution through function word adjacency networks. IEEE Trans. Signal Process. 2015, 63, 5464–5478.

23. Zhao, Y.; Zobel, J.; Vines, P. Using relative entropy for authorship attribution. In Proceedings of the Asia Information Retrieval Symposium, Singapore, 16–18 October 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 92–105.

24. Kesidis, G.; Walrand, J. Relative entropy between Markov transition rate matrices. IEEE Trans. Inf. Theory 1993, 39, 1056–1057.

25. Khmelev, D.V.; Tweedie, F.J. Using Markov chains for identification of writer. Lit. Linguist. Comput. 2001, 16, 299–307.

26. Sanderson, C.; Guenter, S. Short text authorship attribution via sequence kernels, Markov chains and author unmasking: An investigation. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing, Sydney, Australia, 22–23 July 2006; pp. 482–491.

27. Cox, M.A.; Cox, T.F. Multidimensional scaling. In Handbook of Data Visualization; Springer: Berlin/Heidelberg, Germany, 2008; pp. 315–347.

28. Abbasi, A.; Chen, H. Writeprints: A stylometric approach to identity-level identification and similarity detection in cyberspace. ACM Trans. Inf. Syst. 2008, 26, 1–29.

29. Argamon, S.; Burns, K.; Dubnov, S. The Structure of Style: Algorithmic Approaches to Understanding Manner and Meaning; Springer: Berlin/Heidelberg, Germany, 2010.

30. Oman, P.W.; Cook, C.R. A paradigm for programming style research. ACM Sigplan Not. 1988, 23, 69–78.

31. Burrows, S. Source Code Authorship Attribution. Ph.D. Thesis, RMIT University, Melbourne, Australia, 2010.

32. Krsul, I.; Spafford, E.H. Authorship analysis: Identifying the author of a program. Comput. Secur. 1997, 16, 233–257.

33. Macdonell, S.; Gray, A.; MacLennan, G.; Sallis, P. Software forensics for discriminating between program authors using case-based reasoning, feedforward neural networks and multiple discriminant analysis. In Proceedings of the ICONIP’99 & ANZIIS’99 & ANNES’99 & ACNN’99 6th International Conference on Neural Information Processing, Perth, WA, Australia, 16–20 November 1999; Volume 1, pp. 66–71.

34. Ding, H.; Samadzadeh, M.H. Extraction of Java program fingerprints for software authorship identification. J. Syst. Softw. 2004, 72, 49–57.

35. Lange, R.C.; Mancoridis, S. Using code metric histograms and genetic algorithms to perform author identification for software forensics. In Proceedings of the 9th Annual Conference on Genetic and Evolutionary Computation, London, UK, 7–11 July 2007; pp. 2082–2089.

36. Elenbogen, B.S.; Seliya, N. Detecting outsourced student programming assignments. J. Comput. Sci. Coll. 2008, 23, 50–57.

37. Agun, H.V.; Yilmazel, O. Document embedding approach for efficient authorship attribution. In Proceedings of the 2017 2nd International Conference on Knowledge Engineering and Applications (ICKEA), London, UK, 21–23 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 194–198.

38. Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196.

39. Tamboli, M.S.; Prasad, R.S. Feature selection in time aware authorship attribution. In Proceedings of the 2018 International Conference on Advances in Communication and Computing Technology (ICACCT), Sangamner, India, 8–9 February 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 534–537.

- Ge, Z.; Sun, Y.; Smith, M. Authorship attribution using a neural network language model. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar]

- Pratanwanich, N.; Lio, P. Who wrote this? Textual modeling with authorship attribution in big data. In Proceedings of the 2014 IEEE International Conference on Data Mining Workshop, Shenzhen, China, 14 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 645–652. [Google Scholar]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Seroussi, Y.; Zukerman, I.; Bohnert, F. Authorship attribution with latent Dirichlet allocation. In Proceedings of the Fifteenth Conference on Computational Natural Language Learning, Portland, OR, USA, 23–24 June 2011; pp. 181–189. [Google Scholar]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- McCallum, A.K. Multi-label text classification with a mixture model trained by EM. In Proceedings of the AAAI 99 Workshop on Text Learning, Orlando, FL, USA, 18–19 July 1999. [Google Scholar]

- Seroussi, Y.; Zukerman, I.; Bohnert, F. Authorship attribution with topic models. Comput. Linguist. 2014, 40, 269–310. [Google Scholar] [CrossRef]

- Mendenhall, T.C. The characteristic curves of composition. Science 1887, 237–246. [Google Scholar] [CrossRef] [PubMed]

- Labbé, C.; Labbé, D. Inter-textual distance and authorship attribution Corneille and Moliére. J. Quant. Linguist. 2001, 8, 213–231. [Google Scholar] [CrossRef]

- Marusenko, M.; Rodionova, E. Mathematical methods for attributing literary works when solving the “Corneille–Molière” problem. J. Quant. Linguist. 2010, 17, 30–54. [Google Scholar] [CrossRef]

- Mosteller, F.; Wallace, D.L. Inference in an authorship problem: A comparative study of discrimination methods applied to the authorship of the disputed Federalist Papers. J. Am. Stat. Assoc. 1963, 58, 275–309. [Google Scholar]

- Mosteller, F.; Wallace, D.L. Inference and Disputed Authorship: The Federalist; CSLI: Stanford, CA, USA, 1964. [Google Scholar]

- Khomytska, I.; Teslyuk, V. Authorship attribution by differentiation of phonostatistical structures of styles. In Proceedings of the 2018 IEEE 13th International Scientific and Technical Conference on Computer Sciences and Information Technologies (CSIT), Lviv, Ukraine, 11–14 September 2018; IEEE: Piscataway, NJ, USA, 2018; Volume 2, pp. 5–8. [Google Scholar]

- Khomytska, I.; Teslyuk, V. Modelling of phonostatistical structures of English backlingual phoneme group in style system. In Proceedings of the 2017 14th International Conference The Experience of Designing and Application of CAD Systems in Microelectronics (CADSM), Lviv, Ukraine, 21–25 February 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 324–327. [Google Scholar]

- Khomytska, I.; Teslyuk, V. Specifics of phonostatistical structure of the scientific style in English style system. In Proceedings of the 2016 XIth International Scientific and Technical Conference Computer Sciences and Information Technologies (CSIT), Lviv, Ukraine, 6–10 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 129–131. [Google Scholar]

- Khomytska, I.; Teslyuk, V. The method of statistical analysis of the scientific, colloquial, belles-lettres and newspaper styles on the phonological level. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2017; pp. 149–163. [Google Scholar]

- Khomytska, I.; Teslyuk, V. Authorship and style attribution by statistical methods of style differentiation on the phonological level. In Proceedings of the 2018 Conference on Computer Science and Information Technologies, Lviv, Ukraine, 11–14 February 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 105–118. [Google Scholar]

- Khomytska, I.; Teslyuk, V.; Holovatyy, A.; Morushko, O. Development of Methods, Models, and Means for the Author Attribution of a Text. East. Eur. J. Enterp. Technol. 2018, 3, 41–46. [Google Scholar] [CrossRef][Green Version]

- Inches, G.; Harvey, M.; Crestani, F. Finding participants in a chat: Authorship attribution for conversational documents. In Proceedings of the 2013 International Conference on Social Computing, Alexandria, VA, USA, 8–14 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 272–279. [Google Scholar]

- Burrows, J. ‘Delta’: A measure of stylistic difference and a guide to likely authorship. Lit. Linguist. Comput. 2002, 17, 267–287. [Google Scholar] [CrossRef]

- Savoy, J. Authorship attribution based on a probabilistic topic model. Inf. Process. Manag. 2013, 49, 341–354. [Google Scholar] [CrossRef]

- Gal, Y.; Ghahramani, Z. Pitfalls in the use of parallel inference for the Dirichlet process. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 208–216. [Google Scholar]

- Zhao, Y.; Zobel, J. Searching with style: Authorship attribution in classic literature. In Proceedings of the ACM International Conference Proceeding Series, Ballarat, VIC, Australia, 30 January–2 February 2007; Volume 244, pp. 59–68. [Google Scholar]

- Grieve, J. Quantitative authorship attribution: An evaluation of techniques. Lit. Linguist. Comput. 2007, 22, 251–270. [Google Scholar] [CrossRef]

- Gray, A.; Sallis, P.; MacDonell, S. IDENTIFIED: A dictionary-based system for extracting source code metrics for software forensics. In Proceedings of the 1998 International Conference on Software Engineering: Education and Practice, Dunedin, New Zealand, 26–29 January 1998; IEEE: Piscataway, NJ, USA, 1998; p. 252. [Google Scholar]

- Kešelj, V.; Peng, F.; Cercone, N.; Thomas, C. N-gram-based author profiles for authorship attribution. In Proceedings of the Conference Pacific Association for Computational Linguistics (PACLING 2003), Halifax, Canada, 22–25 August 2003; Volume 3, pp. 255–264. [Google Scholar]

- Frantzeskou, G.; Stamatatos, E.; Gritzalis, S.; Katsikas, S. Effective identification of source code authors using byte-level information. In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; pp. 893–896. [Google Scholar]

- Burrows, S.; Uitdenbogerd, A.L.; Turpin, A. Application of Information Retrieval Techniques for Source Code Authorship Attribution. In Proceedings of the Database Systems for Advanced Applications, Brisbane, Australia, 21–23 April 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 699–713. [Google Scholar]

- Burrows, S.; Uitdenbogerd, A.L.; Turpin, A. Temporally Robust Software Features for Authorship Attribution. In Proceedings of the 2009 33rd Annual IEEE International Computer Software and Applications Conference, Seattle, WA, USA, 20–24 July 2009; Volume 1, pp. 599–606. [Google Scholar] [CrossRef]

- Burrows, S.; Tahaghoghi, S.M. Source code authorship attribution using n-grams. In Proceedings of the Twelfth Australasian Document Computing Symposium, Melbourne, Australia, 10 December 2007; pp. 32–39. [Google Scholar]

- Holmes, G.; Donkin, A.; Witten, I.H. Weka: A machine learning workbench. In Proceedings of the ANZIIS’94-Australian New Zealand Intelligent Information Systems Conference, Brisbane, QLD, Australia, 29 November–2 December 1994; IEEE: Piscataway, NJ, USA, 1994; pp. 357–361. [Google Scholar]

- Witten, I.H.; Frank, E.; Hall, M.A.; Pal, C.J. Practical machine learning tools and techniques. In Data Mining; Elsevier: Amsterdam, The Netherlands, 2005; Volume 2. [Google Scholar]

- Kothari, J.; Shevertalov, M.; Stehle, E.; Mancoridis, S. A Probabilistic Approach to Source Code Authorship Identification. In Proceedings of the 4th International Conference on Information Technology (ITNG’07), Las Vegas, NV, USA, 2–4 April 2007; pp. 243–248. [Google Scholar] [CrossRef]

- Rosenblum, N.; Zhu, X.; Miller, B.; Hunt, K. Machine learning-assisted binary code analysis. In Proceedings of the NIPS Workshop on Machine Learning in Adversarial Environments for Computer Security, Whistler, BC, Canada, 3–4 December 2007. [Google Scholar]

- Kindermann, R.; Snell, J. Contemporary Mathematics: Markov Random Fields and their Applications; American Mathematical Society: Providence, RI, USA, 1980. [Google Scholar]

- Shevertalov, M.; Kothari, J.; Stehle, E.; Mancoridis, S. On the Use of Discretized Source Code Metrics for Author Identification. In Proceedings of the 2009 1st International Symposium on Search Based Software Engineering, Windsor, UK, 13–15 May 2009; pp. 69–78. [Google Scholar] [CrossRef]

- Rosenblum, N.; Zhu, X.; Miller, B.P. Who wrote this code? Identifying the authors of program binaries. In Proceedings of the Computer Security—ESORICS 2011, Leuven, Belgium, 12–14 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 172–189. [Google Scholar]

- Layton, R.; Azab, A. Authorship analysis of the Zeus botnet source code. In Proceedings of the 2014 5th Cybercrime and Trustworthy Computing Conference, Auckland, New Zealand, 24–25 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 38–43. [Google Scholar]

- Fred, A.; Jain, A.K. Evidence accumulation clustering based on the k-means algorithm. In Proceedings of the Joint IAPR International Workshops on Statistical Techniques in Pattern Recognition (SPR) and Structural and Syntactic Pattern Recognition (SSPR), Windsor, ON, Canada, 6–9 August 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 442–451. [Google Scholar]

- Layton, R.; Watters, P.; Dazeley, R. Automated unsupervised authorship analysis using evidence accumulation clustering. Nat. Lang. Eng. 2013, 19, 95–120. [Google Scholar] [CrossRef]

- Alazab, M.; Layton, R.; Broadhurst, R.; Bouhours, B. Malicious spam emails developments and authorship attribution. In Proceedings of the 2013 4th Cybercrime and Trustworthy Computing Workshop, Sydney, NSW, Australia, 21–22 November 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 58–68. [Google Scholar]

- Layton, R.; Watters, P.; Dazeley, R. Recentred local profiles for authorship attribution. Nat. Lang. Eng. 2012, 18, 293–312. [Google Scholar] [CrossRef]

- Layton, R.; Perez, C.; Birregah, B.; Watters, P.; Lemercier, M. Indirect information linkage for OSINT through authorship analysis of aliases. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Gold Coast, QLD, Australia, 14–17 April 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 36–46. [Google Scholar]

- Caliskan-Islam, A.; Harang, R.; Liu, A.; Narayanan, A.; Voss, C.; Yamaguchi, F.; Greenstadt, R. De-anonymizing programmers via code stylometry. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 255–270. [Google Scholar]

- Caliskan, A.; Yamaguchi, F.; Dauber, E.; Harang, R.; Rieck, K.; Greenstadt, R.; Narayanan, A. When Coding Style Survives Compilation: De-anonymizing Programmers from Executable Binaries. In Proceedings of the 2018 Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018. [Google Scholar] [CrossRef]

- Meng, X. Fine-grained binary code authorship identification. In Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Seattle, WA, USA, 13–18 November 2016; pp. 1097–1099. [Google Scholar]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Meng, X.; Miller, B.P.; Williams, W.R.; Bernat, A.R. Mining software repositories for accurate authorship. In Proceedings of the 2013 IEEE International Conference on Software Maintenance, Eindhoven, The Netherlands, 22–28 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 250–259. [Google Scholar]

- Meng, X.; Miller, B.P.; Jun, K.S. Identifying multiple authors in a binary program. In Proceedings of the European Symposium on Research in Computer Security, Oslo, Norway, 11–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 286–304. [Google Scholar]

- Zhang, C.; Wang, S.; Wu, J.; Niu, Z. Authorship Identification of Source Codes. In Proceedings of the Asia-Pacific Web (APWeb) and Web-Age Information Management (WAIM) Joint Conference on Web and Big Data, Beijing, China, 7–9 July 2017; Springer International: Cham, Switzerland, 2017; pp. 282–296. [Google Scholar]

- Dauber, E.; Caliskan, A.; Harang, R.; Greenstadt, R. Poster: Git blame who?: Stylistic authorship attribution of small, incomplete source code fragments. In Proceedings of the 2018 IEEE/ACM 40th International Conference on Software Engineering: Companion (ICSE-Companion), Gothenburg, Sweden, 27 May–3 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 356–357. [Google Scholar]

- Zhang, S.; Li, X.; Zong, M.; Zhu, X.; Cheng, D. Learning k for knn classification. ACM Trans. Intell. Syst. Technol. 2017, 8, 1–19. [Google Scholar] [CrossRef]

- Ewais, A.; Samara, D.A. Adaptive MOOCs based on intended learning outcomes using naive bayesian technique. Int. J. Emerg. Technol. Learn. 2020, 15, 4–21. [Google Scholar] [CrossRef]

- Dai, T.; Dong, Y. Introduction of SVM related theory and its application research. In Proceedings of the 2020 3rd International Conference on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Shenzhen, China, 24–26 April 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 230–233. [Google Scholar]

- Sapkota, U.; Solorio, T.; Montes-y-Gómez, M.; Ramírez-de-la-Rosa, G. Author Profiling for English and Spanish Text. In Proceedings of the Working Notes for CLEF 2013 Conference, Valencia, Spain, 23–26 September 2013. [Google Scholar]

- Das, M.; Ghosh, S.K. Standard Bayesian network models for spatial time series prediction. In Enhanced Bayesian Network Models for Spatial Time Series Prediction; Springer: Berlin/Heidelberg, Germany, 2020; pp. 11–22. [Google Scholar]

- Zheng, Q.; Li, L.; Chen, H.; Loeb, S. What aspects of principal leadership are most highly correlated with school outcomes in China? Educ. Adm. Q. 2017, 53, 409–447. [Google Scholar] [CrossRef]

- Argamon, S.; Whitelaw, C.; Chase, P.; Hota, S.R.; Garg, N.; Levitan, S. Stylistic text classification using functional lexical features. J. Am. Soc. Inf. Sci. Technol. 2007, 58, 802–822. [Google Scholar] [CrossRef]

- Alkaabi, M.; Olatunji, S.O. Modeling Cyber-Attribution Using Machine Learning Techniques. In Proceedings of the 2020 30th International Conference on Computer Theory and Applications (ICCTA), Alexandria, Egypt, 12–14 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 10–15. [Google Scholar]

- Li, J.; Zheng, R.; Chen, H. From fingerprint to writeprint. Commun. ACM 2006, 49, 76–82. [Google Scholar] [CrossRef]

- Pillay, S.R.; Solorio, T. Authorship attribution of web forum posts. In Proceedings of the 2010 eCrime Researchers Summit, Dallas, TX, USA, 18–20 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1–7. [Google Scholar]

- Donais, J.A.; Frost, R.A.; Peelar, S.M.; Roddy, R.A. A system for the automated author attribution of text and instant messages. In Proceedings of the 2013 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Niagara, ON, Canada, 25–28 August 2013; pp. 1484–1485. [Google Scholar]

- Khonji, M.; Iraqi, Y.; Jones, A. An evaluation of authorship attribution using random forests. In Proceedings of the 2015 International Conference on Information and Communication Technology Research (ICTRC), Abu Dhabi, United Arab Emirates, 17–19 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 68–71. [Google Scholar]

- Pacheco, M.L.; Fernandes, K.; Porco, A. Random Forest with Increased Generalization: A Universal Background Approach for Authorship Verification. In Proceedings of the CLEF Working Notes 2015, Toulouse, France, 8–11 September 2015. [Google Scholar]

- Pinho, A.J.; Pratas, D.; Ferreira, P.J. Authorship attribution using relative compression. In Proceedings of the 2016 Data Compression Conference (DCC), Snowbird, UT, USA, 30 March 2016–1 April 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 329–338. [Google Scholar]

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828. [Google Scholar] [CrossRef]

- Abuhamad, M.; AbuHmed, T.; Mohaisen, A.; Nyang, D. Large-scale and language-oblivious code authorship identification. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 101–114. [Google Scholar]

- Shin, E.C.R.; Song, D.; Moazzezi, R. Recognizing functions in binaries with neural networks. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 611–626. [Google Scholar]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Volume 14, pp. 1137–1145. [Google Scholar]

- Abuhamad, M.; Abuhmed, T.; Mohaisen, D.; Nyang, D. Large-Scale and Robust Code Authorship Identification with Deep Feature Learning. ACM Trans. Priv. Secur. 2021, 24, 23. [Google Scholar] [CrossRef]

- Zafar, S.; Sarwar, M.U.; Salem, S.; Malik, M.Z. Language and Obfuscation Oblivious Source Code Authorship Attribution. IEEE Access 2020, 8, 197581–197596. [Google Scholar] [CrossRef]

- White, R.; Sprague, N. Deep Metric Learning for Code Authorship Attribution and Verification. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 13–16 December 2021; pp. 1089–1093. [Google Scholar] [CrossRef]

- Bogdanova, A. Source Code Authorship Attribution Using File Embeddings. In Proceedings of the 2021 ACM SIGPLAN International Conference on Systems, Programming, Languages, and Applications: Software for Humanity, Chicago, IL, USA, 17–22 October 2021; pp. 31–33. [Google Scholar] [CrossRef]

- Bogdanova, A.; Romanov, V. Explainable source code authorship attribution algorithm. J. Phys. Conf. Ser. 2021, 2134, 012011. [Google Scholar] [CrossRef]

- Bagnall, D. Author identification using multi-headed recurrent neural networks. arXiv 2015, arXiv:1506.04891. [Google Scholar]

- Ruder, S.; Ghaffari, P.; Breslin, J.G. Character-level and multi-channel convolutional neural networks for large-scale authorship attribution. arXiv 2016, arXiv:1609.06686. [Google Scholar]

- Yavanoglu, O. Intelligent authorship identification with using Turkish newspapers metadata. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1895–1900. [Google Scholar]

- Shrestha, P.; Sierra, S.; González, F.A.; Montes-y Gómez, M.; Rosso, P.; Solorio, T. Convolutional Neural Networks for Authorship Attribution of Short Texts. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017; pp. 669–674. [Google Scholar]

- Zhao, C.; Song, W.; Liu, X.; Liu, L.; Zhao, X. Research on Authorship Attribution of Article Fragments via RNNs. In Proceedings of the 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 23–25 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 156–159. [Google Scholar]

- Yang, X.; Xu, G.; Li, Q.; Guo, Y.; Zhang, M. Authorship attribution of source code by using back propagation neural network based on particle swarm optimization. PLoS ONE 2017, 12, e0187204. [Google Scholar] [CrossRef]

- Abuhamad, M.; su Rhim, J.; AbuHmed, T.; Ullah, S.; Kang, S.; Nyang, D. Code authorship identification using convolutional neural networks. Future Gener. Comput. Syst. 2019, 95, 104–115. [Google Scholar] [CrossRef]

- Ullah, F.; Wang, J.; Jabbar, S.; Al-Turjman, F.; Alazab, M. Source Code Authorship Attribution Using Hybrid Approach of Program Dependence Graph and Deep Learning Model. IEEE Access 2019, 7, 141987–141999. [Google Scholar] [CrossRef]

- Kurtukova, A.; Romanov, A.; Shelupanov, A. Source Code Authorship Identification Using Deep Neural Networks. Symmetry 2020, 12, 2044. [Google Scholar] [CrossRef]

- Bogomolov, E.; Kovalenko, V.; Rebryk, Y.; Bacchelli, A.; Bryksin, T. Authorship Attribution of Source Code: A Language-Agnostic Approach and Applicability in Software Engineering. In Proceedings of the 29th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Athens, Greece, 23–28 August 2021; pp. 932–944. [Google Scholar] [CrossRef]

- Burns, K. Bayesian inference in disputed authorship: A case study of cognitive errors and a new system for decision support. Inf. Sci. 2006, 176, 1570–1589. [Google Scholar] [CrossRef]

- Argamon, S.; Levitan, S. Measuring the usefulness of function words for authorship attribution. In Proceedings of the Joint Conference of the Association for Computers and the Humanities and the Association for Literary and Linguistic Computing, Victoria, BC, Canada, 15–18 June 2005; pp. 1–3. [Google Scholar]

- Zhao, Y.; Zobel, J. Effective and scalable authorship attribution using function words. In Proceedings of the Asia Information Retrieval Symposium, Jeju Island, Republic of Korea, 13–15 October 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 174–189. [Google Scholar]

- Yu, B. Function words for Chinese authorship attribution. In Proceedings of the NAACL-HLT 2012 Workshop on Computational Linguistics for Literature, Montreal, Canada, 8 June 2012; pp. 45–53. [Google Scholar]

- Kestemont, M. Function words in authorship attribution. From black magic to theory? In Proceedings of the 3rd Workshop on Computational Linguistics for Literature (CLFL), Gothenburg, Sweden, 27 April 2014; pp. 59–66. [Google Scholar]

- Koppel, M.; Schler, J.; Argamon, S. Computational methods in authorship attribution. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 9–26. [Google Scholar] [CrossRef]

- Yule, G.U. On sentence-length as a statistical characteristic of style in prose: With application to two cases of disputed authorship. Biometrika 1939, 30, 363–390. [Google Scholar]

- Ahmed, A.F.; Mohamed, R.; Mostafa, B.; Mohammed, A.S. Authorship attribution in Arabic poetry. In Proceedings of the 2015 10th International Conference On Intelligent Systems: Theories and Applications (SITA), Rabat, Morocco, 20–21 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6. [Google Scholar]

- Holmes, D.I. The evolution of stylometry in humanities scholarship. Lit. Linguist. Comput. 1998, 13, 111–117. [Google Scholar] [CrossRef]

- Can, F.; Patton, J.M. Change of writing style with time. Comput. Humanit. 2004, 38, 61–82. [Google Scholar] [CrossRef]

- Ramezani, R.; Sheydaei, N.; Kahani, M. Evaluating the effects of textual features on authorship attribution accuracy. In Proceedings of the ICCKE 2013, Mashhad, Iran, 31 October 2013–1 November 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 108–113. [Google Scholar]

- Wanner, L. Authorship Attribution Using Syntactic Dependencies. In Artificial Intelligence Research and Development; IOS Press: Amsterdam, The Netherlands, 2016; pp. 303–308. [Google Scholar]

- Varela, P.; Justino, E.; Britto, A.; Bortolozzi, F. A computational approach for authorship attribution of literary texts using syntactic features. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 4835–4842. [Google Scholar]

- Varela, P.J.; Justino, E.J.R.; Bortolozzi, F.; Oliveira, L.E.S. A computational approach based on syntactic levels of language in authorship attribution. IEEE Lat. Am. Trans. 2016, 14, 259–266. [Google Scholar] [CrossRef]

- Wu, H.; Zhang, Z.; Wu, Q. Exploring syntactic and semantic features for authorship attribution. Appl. Soft Comput. 2021, 111, 107815. [Google Scholar] [CrossRef]

- Sidorov, G.; Velasquez, F.; Stamatatos, E.; Gelbukh, A.; Chanona-Hernández, L. Syntactic n-grams as machine learning features for natural language processing. Expert Syst. Appl. 2014, 41, 853–860. [Google Scholar] [CrossRef]

- Cutting, D.; Kupiec, J.; Pedersen, J.; Sibun, P. A practical part-of-speech tagger. In Proceedings of the 3rd Conference on Applied Natural Language Processing, Trento, Italy, 31 March–3 April 1992; pp. 133–140. [Google Scholar]

- Solorio, T.; Pillay, S.; Montes-y Gómez, M. Authorship identification with modality specific meta features. In Proceedings of the CLEF 2011, Amsterdam, The Netherlands, 17–20 September 2011. [Google Scholar]

- Baayen, R. Analyzing Linguistic Data: A Practical Introduction to Statistics Using R; Cambridge University Press: Cambridge, UK, 2008; Volume 4, pp. 32779–32790. [Google Scholar]

- Kanade, V. What Is Semantic Analysis? Definition, Examples, and Applications in 2022. Available online: https://www.spiceworks.com/tech/artificial-intelligence/articles/what-is-semantic-analysis/ (accessed on 10 December 2023).

- McCarthy, P.M.; Lewis, G.A.; Dufty, D.F.; McNamara, D.S. Analyzing Writing Styles with Coh-Metrix. In Proceedings of the Flairs Conference, Melbourne Beach, FL, USA, 11–13 May 2006; pp. 764–769. [Google Scholar]

- Miller, G.A. WordNet: A lexical database for English. Commun. ACM 1995, 38, 39–41. [Google Scholar] [CrossRef]

- Yule, G.U. The Statistical Study of Literary Vocabulary; Cambridge University Press: Cambridge, UK, 1944; Volume 42, pp. b1–b2. [Google Scholar]

- Holmes, D.I. Vocabulary richness and the prophetic voice. Lit. Linguist. Comput. 1991, 6, 259–268. [Google Scholar] [CrossRef]

- Tweedie, F.J.; Baayen, R.H. How variable may a constant be? Measures of lexical richness in perspective. Comput. Humanit. 1998, 32, 323–352. [Google Scholar] [CrossRef]

- Koppel, M.; Akiva, N.; Dagan, I. Feature instability as a criterion for selecting potential style markers. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 1519–1525. [Google Scholar] [CrossRef]

- Cheng, N.; Chandramouli, R.; Subbalakshmi, K. Author gender identification from text. Digit. Investig. 2011, 8, 78–88. [Google Scholar] [CrossRef]

- Ragel, R.; Herath, P.; Senanayake, U. Authorship detection of SMS messages using unigrams. In Proceedings of the 2013 IEEE 8th International Conference on Industrial and Information Systems, Peradeniya, Sri Lanka, 17–20 December 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 387–392. [Google Scholar]

- Laroum, S.; Béchet, N.; Hamza, H.; Roche, M. Classification automatique de documents bruités à faible contenu textuel [Automatic classification of noisy documents with little textual content]. Rev. Des Nouv. Technol. Inf. 2010, 18, 25. [Google Scholar]

- Ouamour, S.; Sayoud, H. Authorship attribution of ancient texts written by ten Arabic travelers using a SMO-SVM classifier. In Proceedings of the 2012 International Conference on Communications and Information Technology (ICCIT), Hammamet, Tunisia, 26–28 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 44–47. [Google Scholar]

- Spitters, M.; Klaver, F.; Koot, G.; Van Staalduinen, M. Authorship analysis on dark marketplace forums. In Proceedings of the 2015 European Intelligence and Security Informatics Conference, Manchester, UK, 7–9 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–8. [Google Scholar]

- Vazirian, S.; Zahedi, M. A modified language modeling method for authorship attribution. In Proceedings of the 2016 Eighth International Conference On Information and Knowledge Technology (IKT), Hamedan, Iran, 7–8 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 32–37. [Google Scholar]

- Escalante, H.J.; Solorio, T.; Montes, M. Local histograms of character n-grams for authorship attribution. In Proceedings of the 49th Annual Meeting of The Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 288–298. [Google Scholar]

- Martindale, C.; McKenzie, D. On the utility of content analysis in author attribution: The Federalist. Comput. Humanit. 1995, 29, 259–270. [Google Scholar] [CrossRef]

- Marinho, V.Q.; Hirst, G.; Amancio, D.R. Authorship attribution via network motifs identification. In Proceedings of the 2016 5th Brazilian conference on intelligent systems (BRACIS), Recife, Brazil, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 355–360. [Google Scholar]

- Bayrami, P.; Rice, J.E. Code authorship attribution using content-based and non-content-based features. In Proceedings of the 2021 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Virtual, 12–17 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6. [Google Scholar]

- Oman, P.W.; Cook, C.R. Programming style authorship analysis. In Proceedings of the 17th Conference on ACM Annual Computer Science Conference, Louisville, KY, USA, 21–23 February 1989; pp. 320–326. [Google Scholar]

- Oman, P.W.; Cook, C.R. A taxonomy for programming style. In Proceedings of the 1990 ACM Annual Conference on Cooperation, Washington, DC, USA, 20–22 February 1990; pp. 244–250. [Google Scholar]

- Sallis, P.; Aakjaer, A.; MacDonell, S. Software forensics: Old methods for a new science. In Proceedings of the 1996 International Conference Software Engineering: Education and Practice, Dunedin, New Zealand, 24–27 January 1996; IEEE: Piscataway, NJ, USA, 1996; pp. 481–485. [Google Scholar]

- Tennyson, M.F.; Mitropoulos, F.J. Choosing a profile length in the SCAP method of source code authorship attribution. In Proceedings of the IEEE SOUTHEASTCON 2014, Lexington, KY, USA, 13–16 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6. [Google Scholar]

- Pellin, B.N. Using Classification Techniques to Determine Source Code Authorship; White Paper; Department of Computer Science, University of Wisconsin: Madison, WI, USA, 2000. [Google Scholar]

- Alsulami, B.; Dauber, E.; Harang, R.; Mancoridis, S.; Greenstadt, R. Source code authorship attribution using long short-term memory based networks. In Proceedings of the Computer Security ESORICS 2017—22nd European Symposium on Research in Computer Security, Oslo, Norway, 11–15 September 2017; pp. 65–82. [Google Scholar]

- Alrabaee, S.; Saleem, N.; Preda, S.; Wang, L.; Debbabi, M. OBA2: An onion approach to binary code authorship attribution. Digit. Investig. 2014, 11, S94–S103. [Google Scholar] [CrossRef]

- Ferrante, A.; Medvet, E.; Mercaldo, F.; Milosevic, J.; Visaggio, C.A. Spotting the Malicious Moment: Characterizing Malware Behavior Using Dynamic Features. In Proceedings of the 2016 11th International Conference on Availability, Reliability and Security (ARES), Salzburg, Austria, 31 August–2 September 2016; pp. 372–381. [Google Scholar] [CrossRef]

- Wang, N.; Ji, S.; Wang, T. Integration of Static and Dynamic Code Stylometry Analysis for Programmer De-Anonymization. In Proceedings of the 11th ACM Workshop on Artificial Intelligence and Security, Toronto, ON, Canada, 15–19 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 74–84. [Google Scholar]

- Frantzeskou, G.; MacDonell, S.; Stamatatos, E.; Gritzalis, S. Examining the significance of high-level programming features in source code author classification. J. Syst. Softw. 2008, 81, 447–460. [Google Scholar] [CrossRef]

- Wisse, W.; Veenman, C. Scripting DNA: Identifying the JavaScript programmer. Digit. Investig. 2015, 15, 61–71. [Google Scholar] [CrossRef]

- Arp, D.; Spreitzenbarth, M.; Hübner, M.; Gascon, H.; Rieck, K. DREBIN: Effective and Explainable Detection of Android Malware in Your Pocket. In Proceedings of the Network and Distributed System Security Symposium 2014, San Diego, CA, USA, 23–26 February 2014. [Google Scholar]

- Melis, M.; Maiorca, D.; Biggio, B.; Giacinto, G.; Roli, F. Explaining Black-box Android Malware Detection. arXiv 2018, arXiv:1803.03544. [Google Scholar]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. arXiv 2016, arXiv:1602.04938. [Google Scholar]

- Murenin, I.; Novikova, E.; Ushakov, R.; Kholod, I. Explaining Android Application Authorship Attribution Based on Source Code Analysis. In Proceedings of the Internet of Things, Smart Spaces, and Next Generation Networks and Systems: 20th International Conference, NEW2AN 2020, and 13th Conference, RuSMART 2020, St. Petersburg, Russia, 26–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 43–56. [Google Scholar]

- Abuhamad, M.; Abuhmed, T.; Nyang, D.; Mohaisen, D. Multi-χ: Identifying Multiple Authors from Source Code Files. Proc. Priv. Enhanc. Technol. 2020, 2020, 25–41. [Google Scholar] [CrossRef]

- Alrabaee, S.; Shirani, P.; Debbabi, M.; Wang, L. On the Feasibility of Malware Authorship Attribution. In Proceedings of the 9th International Symposium FPS 2016, Quebec City, QC, Canada, 24–25 October 2016; pp. 256–272. [Google Scholar] [CrossRef]

- Halvani, O.; Winter, C.; Graner, L. Assessing the applicability of authorship verification methods. In Proceedings of the 14th International Conference on Availability, Reliability and Security, Canterbury, UK, 26–29 August 2019; pp. 1–10. [Google Scholar]

- Tyo, J.; Dhingra, B.; Lipton, Z.C. On the state of the art in authorship attribution and authorship verification. arXiv 2022, arXiv:2209.06869. [Google Scholar]

- Potha, N.; Stamatatos, E. Intrinsic author verification using topic modeling. In Proceedings of the 10th Hellenic Conference on Artificial Intelligence, Patras, Greece, 9–12 July 2018; pp. 1–7. [Google Scholar]

- Koppel, M.; Schler, J. Authorship verification as a one-class classification problem. In Proceedings of the 21st International Conference on Machine Learning, Banff, AB, Canada, 4–8 July 2004; p. 62. [Google Scholar]

- Koppel, M.; Winter, Y. Determining if two documents are written by the same author. J. Assoc. Inf. Sci. Technol. 2014, 65, 178–187. [Google Scholar] [CrossRef]

- Ding, S.H.; Fung, B.C.; Iqbal, F.; Cheung, W.K. Learning stylometric representations for authorship analysis. IEEE Trans. Cybern. 2017, 49, 107–121. [Google Scholar] [CrossRef] [PubMed]

- Halvani, O.; Winter, C.; Graner, L. Unary and binary classification approaches and their implications for authorship verification. arXiv 2018, arXiv:1901.00399. [Google Scholar]

- Luyckx, K.; Daelemans, W. Authorship attribution and verification with many authors and limited data. In Proceedings of the 22nd International Conference on Computational Linguistics (COLING 2008), Manchester, UK, 18–22 August 2008; pp. 513–520. [Google Scholar]

- Veenman, C.J.; Li, Z. Authorship Verification with Compression Features. In Proceedings of the Working Notes for CLEF 2013 Conference, Valencia, Spain, 23–26 September 2013. [Google Scholar]

- Hernández-Castañeda, Á.; Calvo, H. Author verification using a semantic space model. Comput. Sist. 2017, 21, 167–179. [Google Scholar] [CrossRef]

- Litvak, M. Deep dive into authorship verification of email messages with convolutional neural network. In Proceedings of the Information Management and Big Data: 5th International Conference, SIMBig 2018, Lima, Peru, 3–5 September 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 129–136. [Google Scholar]

- Hu, X.; Ou, W.; Acharya, S.; Ding, S.H.; Ryan, D.G. TDRLM: Stylometric learning for authorship verification by Topic-Debiasing. Expert Syst. Appl. 2023, 233, 120745. [Google Scholar] [CrossRef]

- Boenninghoff, B.; Nickel, R.M.; Zeiler, S.; Kolossa, D. Similarity Learning for Authorship Verification in Social Media. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2457–2461. [Google Scholar] [CrossRef]

- Boenninghoff, B.; Hessler, S.; Kolossa, D.; Nickel, R.M. Explainable Authorship Verification in Social Media via Attention-based Similarity Learning. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 September 2019; pp. 36–45. [Google Scholar] [CrossRef]

- Zhai, W.; Rusert, J.; Shafiq, Z.; Srinivasan, P. Adversarial Authorship Attribution for Deobfuscation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics 2022, Dublin, Ireland, 22–27 May 2022; pp. 7372–7384. [Google Scholar]

| Criteria | Our Work | Zheng and Jin [11] | Kalgutkar et al. [9] | Rocha et al. [4] | Stamatatos [12] |
|---|---|---|---|---|---|
| Model taxonomy | ✓ | ✓ (discussion only) | ✓ | ✓ (discussion only) | ✓ |
| Features taxonomy | ✓ | ✓ (discussion only) | ✓ | ✓ (social media only) | ✓ |
| Datasets | ✓ | ✗ | ✓ (partially) | ✗ | ✗ |
| Evaluation metrics | ✓ | ✗ | ✗ | ✓ (partially) | ✓ (partially) |
| Synthesizing with shortcomings | ✓ | ✗ | ✗ | ✗ | ✗ |
| Authorship verification | ✓ | ✗ | ✗ | ✓ (partially) | ✗ |
| Challenges | ✓ | ✓ | ✓ | ✗ | ✗ |
| Future research directions | ✓ | ✗ | ✗ | ✓ (social media only) | ✗ |

| Paper | Features |
|---|---|
| Varela et al. [137] | Morphological (nouns, determiners, pronouns, adjectives, adverbs, verbs, prepositions, conjunctions, etc.); inflection (number (singular, plural), gender (masculine, feminine), person (first, second, third), tense (past, present, future), mood (imperative, indicative, subjunctive), etc.); syntactic (subject, predicate, direct object, indirect object, main verb, auxiliary verb, adjunct, complementary object, passive agent (of the subject, of the predicate), etc.); syntactic auxiliary (articles (definite, indefinite), pronouns (demonstrative, quantitative, possessive, personal, reflexive), etc.); and distance between key syntactic elements (absolute distances between the main verb, subject, predicate, direct object, indirect object, supplement, pronouns, adverbs, adjectives, and conjunctions) and the essential and accessory terms of a sentence, such as subject and predicate |
| Wu et al. [139] | N-grams of characters, words, parts of speech (POS), phrase structures, dependency relationships, and topics, covering multiple dimensions (style, content, syntactic, and semantic features) |
| Sidorov et al. [140] | Syntactic n-grams |
| Cutting et al. [141], Solorio et al. [142] | Part-of-speech (POS) tags and POS taggers |
| Wanner et al. [136] | Syntactic dependency trees |
| Baayen [143] | Frequencies of syntactic rewrite rules, frequency of coordination or subordination relationships |
| Jin and Jiang [16], Yavanoglu [117] | Punctuation |
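Punctuation (Jin and Jiang [16], Yavanoglu [117]) is among the simplest syntactic markers to extract. The following is a minimal sketch, assuming plain-text input and an inventory limited to ASCII punctuation (the cited works may use a different inventory or normalization); the function name `punctuation_profile` is hypothetical. Counts are normalized per 1,000 characters so that documents of different lengths can be compared.

```python
import string
from collections import Counter


def punctuation_profile(text: str) -> dict:
    """Occurrences of each ASCII punctuation mark per 1,000 characters of text.

    Normalizing by text length makes documents of different sizes comparable.
    """
    if not text:
        return {}
    counts = Counter(ch for ch in text if ch in string.punctuation)
    scale = 1000.0 / len(text)
    return {mark: n * scale for mark, n in sorted(counts.items())}


# "Well, yes; no!" has 14 characters and three punctuation marks.
print(punctuation_profile("Well, yes; no!"))
```

Each resulting dictionary can be treated as a sparse feature vector and concatenated with other stylometric features before classification.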

| Paper | Features |
|---|---|
| McCarthy et al. [145] | Implicit meaning carried by individual words (synonyms, hypernyms, and hyponyms) and the identification of causal verbs, used to analyze text on over 200 indices of cohesion and difficulty |
| Yule [147], Holmes [148], Tweedie and Baayen [149] | Vocabulary richness, Yule's measures, entropy measures, positive and negative emotion words, cognitive words, stable words (words that can be replaced by an equivalent) [150], and frequencies [151] |
| Hinh et al. [18] | Semantic frames |
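Among the vocabulary richness measures listed above, Yule's characteristic K is commonly defined as K = 10^4 (M2 - M1) / M1^2, where M1 = N is the number of tokens and M2 = sum over i of i^2 V_i, with V_i the number of word types that occur exactly i times. A minimal sketch of this formula is given below, assuming the text has already been tokenized (tokenization and case folding are left to the caller); the function name `yules_k` is hypothetical. Lower values of K indicate a less repetitive, richer vocabulary.

```python
from collections import Counter


def yules_k(tokens: list) -> float:
    """Yule's characteristic K; lower values indicate a less repetitive vocabulary."""
    n = len(tokens)  # M1: total number of tokens
    if n == 0:
        raise ValueError("at least one token is required")
    # freq_of_freqs[i] = V_i, the number of word types occurring exactly i times
    freq_of_freqs = Counter(Counter(tokens).values())
    m2 = sum(i * i * v_i for i, v_i in freq_of_freqs.items())
    return 10_000 * (m2 - n) / (n * n)


print(yules_k("the cat saw the dog".split()))  # 800.0
```

In the example, "the" occurs twice and three other words occur once, so M2 = 1·3 + 4·1 = 7, N = 5, and K = 10^4 · 2 / 25 = 800.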

| Paper | Features |
|---|---|
| Holmes [133], Grieve [63], Stamatatos [12] | N-grams |
| Ragel et al. [152] | Unigrams |
| Laroum et al. [153] | Frequency of character n-grams |
| Ouamour and Sayoud [154] | Character n-grams [155], word n-grams |
| Vazirian and Zahedi [156] | Unigrams, bigrams, trigrams |
| Escalante et al. [157] | Character-level local histograms |
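Character n-gram frequencies (e.g., Laroum et al. [153]) are usually computed over overlapping windows of n characters. The sketch below is one minimal way to do so, assuming raw text with no lowercasing or whitespace normalization (both are common preprocessing choices but are not prescribed here); the function name `char_ngram_profile` is hypothetical. Setting `n=1` yields character unigrams, and applying the same windowing to a token list instead of a string yields word n-grams.

```python
from collections import Counter


def char_ngram_profile(text: str, n: int = 3) -> dict:
    """Relative frequencies of overlapping character n-grams in `text`."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    grams = [text[i:i + n] for i in range(len(text) - n + 1)]
    if not grams:
        return {}  # text shorter than n
    total = len(grams)
    return {gram: count / total for gram, count in Counter(grams).items()}


print(char_ngram_profile("abab", n=2))  # {'ab': 0.6666666666666666, 'ba': 0.3333333333333333}
```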

| Paper | Features |
|---|---|
| Agun and Yilmazel [37] | Document embedding representations, bag-of-words representations |
| Pratanwanich and Lio [41] | Word distributions per topic, latent topics, author contributions per document, vector of word counts (feature vector) |

| Paper | Features |
|---|---|
| Abbasi and Chen [28] | Word length, frequency of words of different lengths, count of letters and letter n-grams, count of digits and digit n-grams, bag of words, word n-grams, vocabulary richness, message features, paragraph features, frequency of function words, occurrence of punctuation and special characters, frequency of POS tags, POS tag n-grams, technical structure features, misspellings |
| Marinho et al. [159] | Absolute frequencies of thirteen directed motifs from co-occurrence networks |
| Khomytska and Teslyuk [52,56] | Frequencies of occurrence of eight consonant phoneme groups: labial (ll), forelingual (fl), mesiolingual (ml), backlingual (bl), sonorant (st), nasal (nl), constrictive (cv), and occlusive (ov) |
| Alkaabi and Olatunji [98] | Packet arrival date, packet arrival time, honeypot server (host), packet source, packet protocol type, source port, destination port, source country, time |
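One of the lexical features listed for Abbasi and Chen [28] is the frequency of words of different lengths. A minimal sketch of such a distribution follows, assuming that a "word" is a maximal run of ASCII letters and that all words longer than `max_len` share the last bin (both are illustrative assumptions, not the cited authors' exact definitions); the function name `word_length_distribution` is hypothetical.

```python
import re


def word_length_distribution(text: str, max_len: int = 15) -> list:
    """Share of words with length 1..max_len; longer words fall into the last bin."""
    if max_len < 1:
        raise ValueError("max_len must be at least 1")
    words = re.findall(r"[A-Za-z]+", text)
    if not words:
        return [0.0] * max_len
    bins = [0] * max_len
    for word in words:
        bins[min(len(word), max_len) - 1] += 1
    return [count / len(words) for count in bins]


print(word_length_distribution("I am here", max_len=5))
# [0.3333333333333333, 0.3333333333333333, 0.0, 0.3333333333333333, 0.0]
```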

| Paper | Features |
|---|---|
| Oman and Cook [161] | Typographic Boolean metrics: inline comments on the same line as source code, blocked comments, bordered comments, keywords followed by comments, one- or two-space indentation occurring more frequently, three- or four-space indentation occurring more frequently, indentation of five or more spaces occurring more frequently, lowercase characters only, uppercase characters only, case used to distinguish between keywords and identifiers, underscores used in identifiers, BEGIN followed by a statement on the same line, THEN followed by a statement on the same line, multiple statements per line, blank lines |
| Sallis et al. [163] | Cyclomatic complexity of the control flow, use of layout conventions |
| Krsul and Spafford [32] | Curly bracket position, indentation style, comment style and its percentage, agreement between comments and code, separator style, percentage of blank lines, line length, function length, function name length, parameter name style, parameter name length, parameter declaration type, number of parameters, variable name style, percentage and number of variable names of each style, length of variables of different types, ratio of variables of different types, number of live variables per statement, lines of code between variable references, use of “ifdef”, most common statement type, ratio of different statement types, number of statement outputs, types of errors, cyclomatic complexity, program volume, use of “go to”, use of internal representations of data objects, use of debugging symbols, use of macros, use of editor, use of compiler, use of revision control systems, use of development tools, use of software development standards |
| Macdonell et al. [33] | Percentage of blank lines, non-whitespace lines, percentage of different comment styles, percentage of operators with different whitespace positions, ratio of different compilation keywords, ratio of different decision statements, ratio of gotos, line length, percentage of uppercase letters, use of debug variables, cyclomatic complexity |
| Ding and Samadzadeh [34] | Percentage of different curly bracket positions, number of spaces in different positions, percentage of blank lines, number of indentations in different positions, comment styles and their percentages, percentage of comment lines, percentage of different statement formats, percentage of different statement types, length of variables of different types, ratio of variables of different types, function name length, percentage of different identifier styles, lines per class or interface, percentage of interfaces, number of variables per class or interface, number of functions per class or interface, ratio of different keywords, number of characters |
| Lange and Mancoridis [35] | Histogram distributions of: relative frequencies of curly brace positions, relative frequencies of class member access protection scopes, relative frequency of use of the three types of commenting, relative frequency of use of various control-flow techniques, indentation whitespace at the beginning of each line, whitespace in the interior of each non-whitespace line, trailing whitespace at the end of a line, use of the namespace member and class member operators, use of the underscore character in identifiers, complexity of switch statements, how densely the developer packs code constructs on a single line of text, frequency of the first characters used in identifiers, and identifier length |
| Elenbogen and Seliya [36] | Number of comments, lines of code, variable count, variable name length, use of for-loops, program compression size |
| Caliskan-Islam et al. [83] | Term frequency of word unigrams, line length, ratio of whitespace, whether a newline precedes the majority of opening braces, whether the majority of indented lines begin with spaces or tabs, depth of nesting, branching factor, number of parameters, standard deviation of the number of parameters, log of the count of each element type divided by file length (elements: keywords, operators, word tokens, comments, literals, functions, preprocessor directives, tabs, spaces, empty lines), AST features |
| Zhang et al. [89] | Frequency of comments, number of comment lines, comment length, frequency of different statements and statement formats, percentage of different variables, variable length, variable name style, percentages of different keywords, percentages of different operators, operator format, percentage of different method types, method length, use of “return 0”, use of “go to”, frequency of classes and interfaces, percentage of blank lines, line length, number of leading whitespaces per line, use of two-dimensional arrays |
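Many of the layout metrics above can be computed directly from the raw source text. The sketch below covers a few of them: percentage of blank lines, mean line length, a whitespace ratio, and majority indicators similar to Caliskan-Islam et al.'s [83] tab-versus-space and brace-placement features. The definitions are simplifications chosen for illustration (for example, braces are counted textually, so braces inside strings or comments also count), and the function name `layout_features` is hypothetical.

```python
def layout_features(source: str) -> dict:
    """Simple layout features of a source file (all definitions are simplifications)."""
    lines = source.splitlines()
    if not lines:
        raise ValueError("source is empty")
    blank = sum(1 for line in lines if not line.strip())
    # Non-blank lines whose first character is indentation whitespace
    indented = [line for line in lines if line.strip() and line[0] in " \t"]
    tab_indented = sum(1 for line in indented if line[0] == "\t")
    # Braces are counted textually, so braces in strings or comments also count
    open_braces = source.count("{")
    own_line_braces = sum(1 for line in lines if line.strip().startswith("{"))
    whitespace = sum(1 for ch in source if ch.isspace())
    return {
        "blank_line_pct": 100.0 * blank / len(lines),
        "mean_line_length": sum(len(line) for line in lines) / len(lines),
        "whitespace_ratio": whitespace / len(source),
        "tabs_majority": 2 * tab_indented > len(indented),
        "newline_before_brace_majority": 2 * own_line_braces > open_braces,
    }


c_source = "int main()\n{\n\treturn 0;\n}\n\nvoid f() {\n}\n"
print(layout_features(c_source))
```

Here one of the two opening braces sits on its own line, so the brace indicator is `False` (a tie is not a majority), while the only indented line starts with a tab.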

| Paper | Features |
|---|---|
| Pellin [165] | AST function tree |
| Caliskan-Islam et al. [83] | Maximum depth of an AST node, term frequency of AST node bigrams, term frequency of the 58 possible AST node types excluding leaves, term frequency-inverse document frequency (TF-IDF) of the 58 possible AST node types excluding leaves, average depth of the 58 possible AST node types excluding leaves, term frequency of code unigrams in AST leaves, TF-IDF of code unigrams in AST leaves, average depth of code unigrams in AST leaves |
| Alsulami et al. [166] | AST features generated by a neural network |
| Dauber et al. [90] | AST features from small code fragments |
| Bogomolov et al. [124] | AST path-based representations |
| Ullah et al. [122] | Control flow and data variation features from the PDG |
| Rosenblum et al. [76] | CFG features |
| Meng et al. [88] | Control flow features from the CFG, context features from the CFG (for example, the width and depth of a function’s CFG) |
| Alrabaee et al. [167] | RFG features (register manipulation patterns) |
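To make the AST features above concrete, the sketch below extracts node-type unigram counts, parent-child node-type bigram counts, and the maximum node depth, loosely mirroring the first rows for Caliskan-Islam et al. [83]. The cited works parse C/C++ and other languages with their own parsers; this sketch swaps in Python's standard `ast` module and parses Python source instead, and, unlike [83], it does not exclude leaves (context nodes such as `Load` and `Store` are counted). The function name `ast_features` is hypothetical.

```python
import ast
from collections import Counter


def ast_features(source: str) -> dict:
    """AST node-type unigrams, parent-child node-type bigrams, and maximum depth."""
    tree = ast.parse(source)
    unigrams = Counter()
    bigrams = Counter()
    max_depth = 0
    stack = [(tree, 0)]  # iterative depth-first traversal; the root has depth 0
    while stack:
        node, depth = stack.pop()
        node_type = type(node).__name__
        unigrams[node_type] += 1
        max_depth = max(max_depth, depth)
        for child in ast.iter_child_nodes(node):
            bigrams[(node_type, type(child).__name__)] += 1
            stack.append((child, depth + 1))
    return {"unigrams": unigrams, "bigrams": bigrams, "max_depth": max_depth}


features = ast_features("def f(x):\n    if x:\n        return 1\n    return 0\n")
print(features["max_depth"], features["unigrams"]["Return"])
```

Term frequencies can be obtained by dividing the counts by their totals, and TF-IDF by weighting them against counts across a corpus of files.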

| Paper | Features |
|---|---|
| Wang et al. [169] | Function calls, module running time, total running time, function memory usage, total memory usage, memory access patterns (range of memory addresses and operation frequencies), length of disassembled code |

| Paper | Features |
|---|---|
| Meng et al. [88] | Instruction features (instruction prefixes, instruction operands, constant values in instructions), control flow features, data flow features, and context features |
| Rosenblum et al. [76] | Idiom features capturing low-level details of instruction sequences |

| Paper | Features | Classification Model | Language | Number of Authors | Files per Author | Data Source | Accuracy |
|---|---|---|---|---|---|---|---|
| Abuhamad et al. [121] | NN representation | 3S-CNN | C++ | 1600 | 9 | GCJ | 96.20% |
| Abuhamad et al. [121] | NN representation | 3S-CNN | Java | 1000 | 9 | GCJ | 95.80% |
| Abuhamad et al. [121] | NN representation | 3S-CNN | Python | 1500 | 9 | GCJ | 94.60% |
| Abuhamad et al. [121] | NN representation | 3S-CNN | C | 745 | 10 | GitHub | 95.00% |
| Abuhamad et al. [121] | NN representation | 3S-CNN | C++ | 142 | 10 | GitHub | 97.00% |
| Ullah et al. [122] | PDG features | Deep learning | C# | 1000 | | GCJ | 98% |
| Ullah et al. [122] | PDG features | Deep learning | Java | 1000 | | GCJ | 98% |
| Ullah et al. [122] | PDG features | Deep learning | C++ | 1000 | | GCJ | 100% |
| Zafar et al. [111] | NN representation | KNN | multiple | 20458 | 9 | GCJ | 84.94% |
| Zafar et al. [111] | NN representation | KNN | C++ | 10280 | 9 | GCJ | 90.98% |
| Zafar et al. [111] | NN representation | KNN | Python | 6910 | 9 | GCJ | 84.79% |
| Zafar et al. [111] | NN representation | KNN | Java | 3911 | 9 | GCJ | 79.36% |
| Abuhamad et al. [110] | NN representation | RFC | Binary | 1500 | 9+ | GCJ | 95.74% |
| Abuhamad et al. [110] | NN representation | RFC | C | 566 | 7 | GCJ | 94.80% |
| Caliskan-Islam et al. [83] | Stylometric features | RFC | C, C++ | 1600 | 9 | GCJ | 92.83% |
| Caliskan-Islam et al. [83] | Stylometric features | RFC | Python | 229 | 9 | GCJ | 53.91% |
| Abuhamad et al. [176] | NN representation | RFC | C, C++ | 562 | | GitHub | 93.18% |
| Abuhamad et al. [176] | NN representation | RFC | C, C++ | 608 | 6 | GitHub | 91.24% |
| Abuhamad et al. [176] | NN representation | RFC | C, C++ | 479 | 10 | GitHub | 92.82% |
| Abuhamad et al. [110] | NN representation | RFC | Java | 1952 | 7 | GCJ | 97.24% |
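Several rows above pair learned (NN) representations with a k-nearest-neighbor (KNN) classifier (Zafar et al. [111]) and report accuracy, i.e., the fraction of test samples attributed to the correct author. The sketch below illustrates that pipeline under explicit assumptions: feature vectors are sparse dictionaries (such as the n-gram profiles discussed earlier), similarity is cosine similarity, and the prediction is a majority vote among the k most similar training samples. The cited work's representation and distance measure may differ, and the names `cosine`, `knn_attribute`, and `accuracy` are hypothetical.

```python
import math
from collections import Counter


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse feature vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_attribute(query: dict, training: list, k: int = 1) -> str:
    """Majority author among the k training samples most similar to `query`."""
    ranked = sorted(training, key=lambda sample: cosine(query, sample[1]), reverse=True)
    votes = Counter(author for author, _ in ranked[:k])
    return votes.most_common(1)[0][0]


def accuracy(predicted: list, actual: list) -> float:
    """Fraction of samples whose predicted author matches the true author."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical training samples: (author, feature vector) pairs
training = [("alice", {"the": 0.9, "of": 0.1}), ("bob", {"of": 0.8, "and": 0.2})]
print(knn_attribute({"the": 0.7, "of": 0.3}, training))  # alice
```

Cosine similarity is used here because it ignores vector magnitude, which makes documents of different lengths easier to compare; Euclidean distance on normalized vectors is an equally common choice.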