Measurement of Music Aesthetics Using Deep Neural Networks and Dissonances

Abstract

1. Introduction

1.1. Aesthetics and Its Relation to Nature

1.2. Music Generation Using Neural Networks

1.3. Methods for Aesthetic Measurement

- Conjunct melodic motion (CMM), in which the melody moves by small intervals from one note to the next.

- Acoustic consonance, in which consonant harmonies are regarded as points of stability and are favored over dissonant harmonies.

- Harmonic consistency, in which the harmonies of a musical passage tend to be structurally similar to one another.

- Limited macroharmony (LM), in which tonal music favors small macroharmonies (the total collection of notes heard over a short span of time), usually containing five to eight notes.

- Centricity (CENT), in which a single note is perceived as more prominent than the others; over time, it appears more frequently and acts as a goal of melodic motion.
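Three of the features above (CMM, LM, and CENT) can be illustrated with simple proxies computed from a list of MIDI pitch numbers. The sketch below is an assumption for illustration only: it measures conjunct melodic motion as the mean absolute interval in semitones, limited macroharmony as the average number of distinct pitch classes in a sliding window (the window length is a hypothetical parameter), and centricity as the share of notes in the most frequent pitch class. These proxies are not necessarily the definitions behind the CMM, LM, and CENT scores reported in this paper, so their values are not on the same scale.

```python
from collections import Counter
from statistics import mean


def conjunct_melodic_motion(pitches: list[int]) -> float:
    """Mean absolute interval, in semitones, between consecutive MIDI pitches.

    Lower values mean a more conjunct (stepwise) melody.
    """
    intervals = [abs(b - a) for a, b in zip(pitches, pitches[1:])]
    return float(mean(intervals))


def limited_macroharmony(pitches: list[int], window: int = 8) -> float:
    """Average count of distinct pitch classes per sliding window of notes.

    The default window of 8 notes is a hypothetical choice.
    """
    window = min(window, len(pitches))
    sizes = [
        len({p % 12 for p in pitches[i:i + window]})
        for i in range(len(pitches) - window + 1)
    ]
    return float(mean(sizes))


def centricity(pitches: list[int]) -> float:
    """Fraction of notes that belong to the most frequent pitch class."""
    counts = Counter(p % 12 for p in pitches)
    return counts.most_common(1)[0][1] / len(pitches)


# Usage: a C major scale fragment going up and back down.
melody = [60, 62, 64, 65, 67, 65, 64, 62, 60]
print(conjunct_melodic_motion(melody))         # mean step size
print(limited_macroharmony(melody, window=4))  # pitch classes per 4-note window
print(centricity(melody))                      # share of the most common pitch class
```

Working on pitch classes (`p % 12`) makes LM and CENT octave-independent, which matches the idea that a macroharmony and a central note are heard regardless of register.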

1.4. Current Research Objective

- Proposing a new method that computes the aesthetics of a melody fragment from the frequency of good and bad dissonances (this classification is made by a neural network trained on the largest corpus of MIDI melodies currently available). To facilitate understanding of our method, we publicly release the source code at https://github.com/razvan05/evaluated_ai_melodies (accessed on 29 May 2023).

- Conducting two studies, with 108 and 30 participants, in which melody fragments were rated according to how aesthetically pleasing the participants found them, and presenting their results. The melodies used to evaluate our method have been uploaded to YouTube at https://youtu.be/uHKaeTF1PCw (accessed on 29 May 2023).

- Proposing a new mathematical formula for computing the aesthetics of a melody fragment with the method from the first point, and evaluating the formula using one of the studies from the second point.

- Conducting a comparative analysis of the performance of our new method against two other state-of-the-art methods developed by psychologists and musicians. Comparing the results of these methodologies reveals the strengths and limitations of each method and highlights the contributions of our approach.

2. Methodology

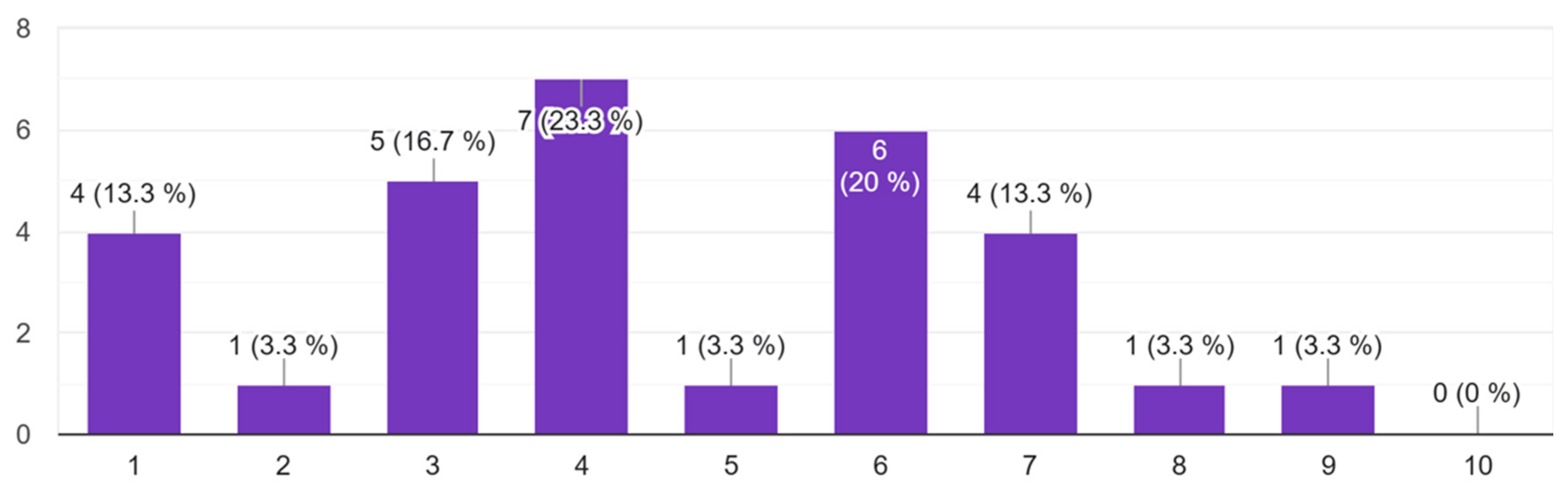

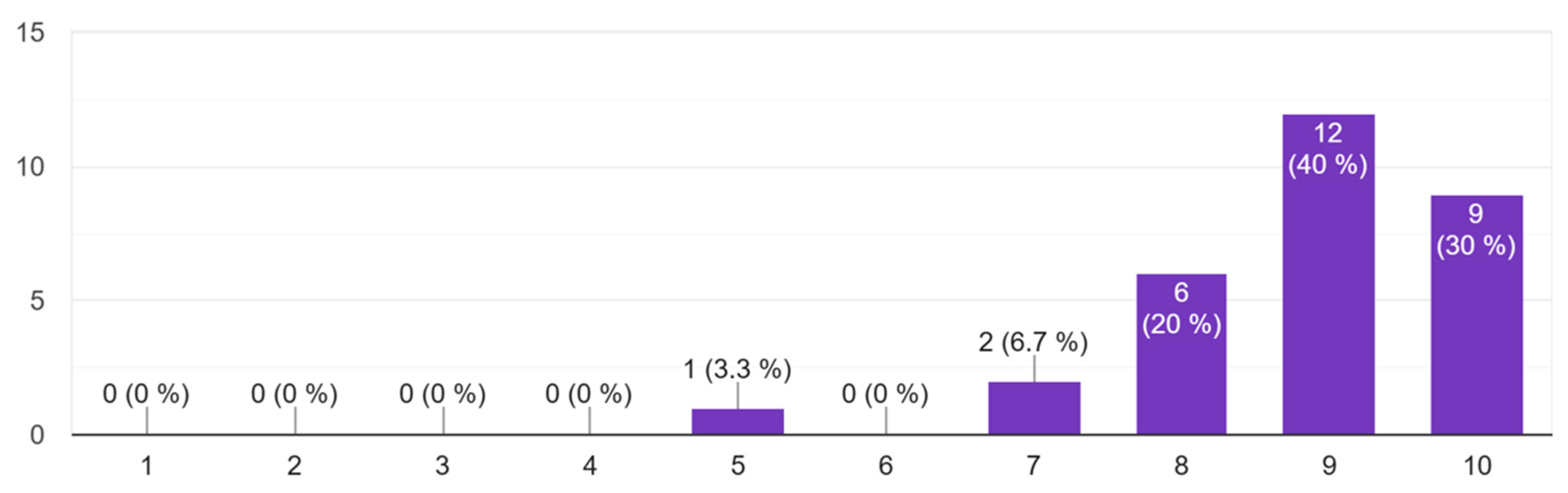

2.1. General Description of the Experiments

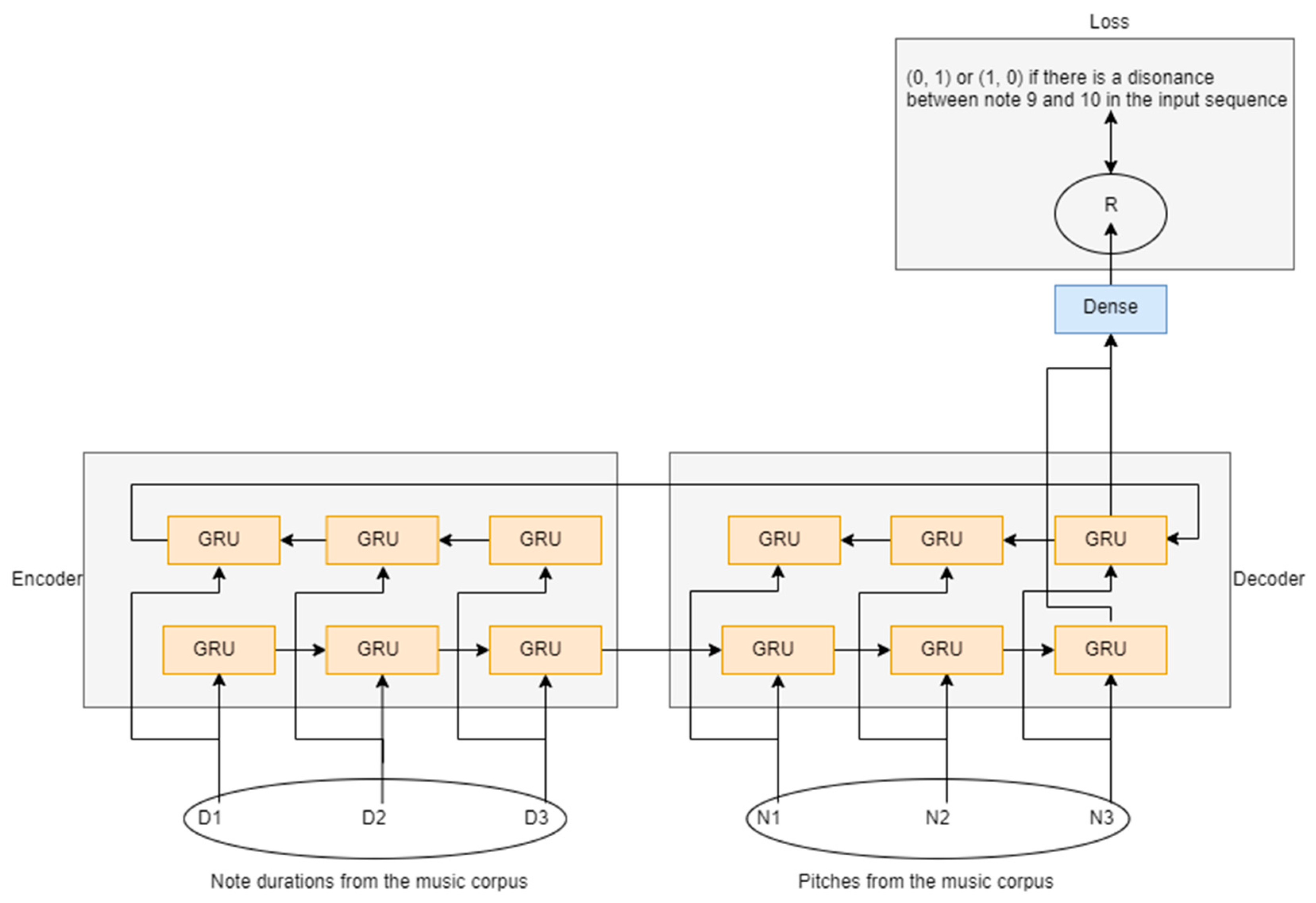

2.2. Finding the Good and Bad Dissonances

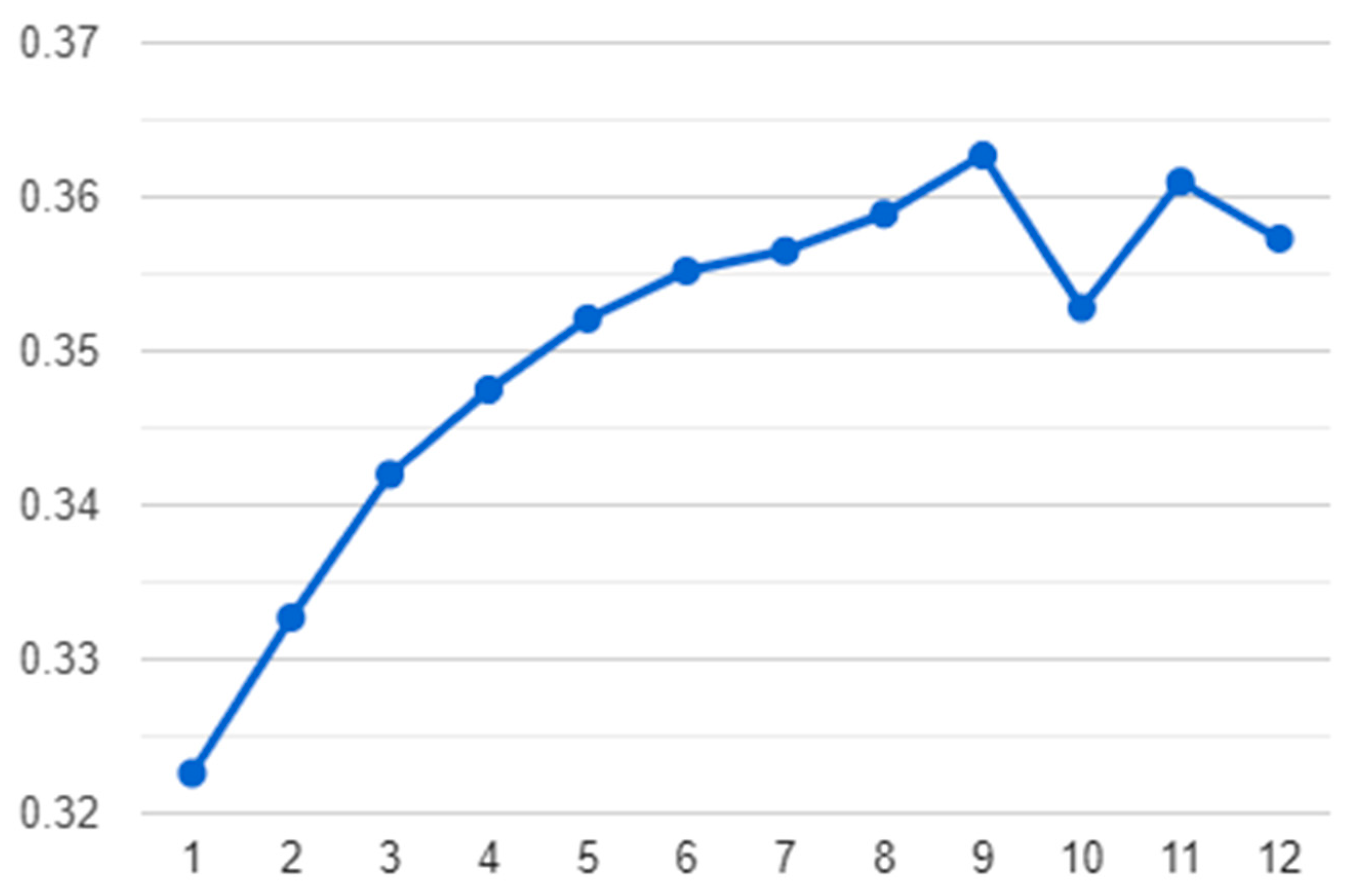

2.3. Choosing the Best Formula

2.4. Evaluation

- Melodies 1, 3, 5, and 8 were randomly selected from the testing corpus and are all human compositions. They also share the characteristic of not being popular, since no references to them were found in their MIDI files;

- Melodies 2 and 7 were created in prior research by combining chat sonification with neural network pitch generation [45], using a method similar to the one used to generate the last three melodies of the first study. This strategy was based on the polyphonic model [15] described in the Introduction;

- Melodies 4 and 6 were created using a transformer neural network trained on the same corpus (the Lakh MIDI dataset) as the sequence-to-sequence bidirectional GRU used in this study. In this case, the neural network generated both the durations and the pitches of the notes. The transformer was trained for a total of 10 epochs with the following hyperparameters: sine and cosine positional encoding [27], an embedding size of 256, eight-head multi-head attention, sparse categorical cross-entropy loss, and the Adam optimizer with a learning rate of 0.0001.
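The sine and cosine positional encoding listed among the transformer hyperparameters follows the definition of Vaswani et al. [27]. A minimal pure-Python sketch for the 256-dimensional embeddings used here could look as follows; the function name is ours, and the sequence length of 64 in the usage lines is a hypothetical value, since the maximum sequence length used for training is not stated.

```python
import math


def sinusoidal_positional_encoding(num_positions: int, d_model: int = 256) -> list[list[float]]:
    """Return a num_positions x d_model table of sine/cosine position codes.

    PE[pos][2k]     = sin(pos / 10000 ** (2k / d_model))
    PE[pos][2k + 1] = cos(pos / 10000 ** (2k / d_model))
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    table = []
    for pos in range(num_positions):
        row = []
        for two_k in range(0, d_model, 2):
            angle = pos / (10000 ** (two_k / d_model))
            row.append(math.sin(angle))  # even dimension
            row.append(math.cos(angle))  # odd dimension
        table.append(row)
    return table


# Usage: codes for a hypothetical 64-token sequence with 256-dimensional embeddings.
pe = sinusoidal_positional_encoding(64, d_model=256)
print(len(pe), len(pe[0]))  # 64 256
print(pe[0][:4])            # position 0: [0.0, 1.0, 0.0, 1.0]
```

Each row would be added element-wise to the token embedding at the same position before the first attention layer; because the encoding is fixed rather than learned, it adds no trainable parameters to the model.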

3. Results and Discussion

- The first observation is that the neural network recognizes almost all the dissonances in the testing corpus: the aesthetic measure presented in this paper gives the human-composed melodies from the testing corpus final scores close to 10 in most cases.

- The second observation is that the Birkhoff measure based on human music expectation (the Schellenberg method) gave higher scores to the melodies generated by artificial intelligence (especially melodies 2, 4, and 7) than to the human-composed melodies (melodies 1, 3, 5, and 8). This contrasts with the scores given by the human listeners, who distinguished the human-composed melodies by rating them higher.
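The second observation can be checked directly against the second-study table by averaging each method's scores over the two groups of melodies defined in the evaluation section (1, 3, 5, and 8 human-composed; 2, 4, 6, and 7 generated). The sketch below only copies the published scores and averages them; the variable and function names are hypothetical.

```python
from statistics import mean

# Scores for melodies 1-8, copied from the second-study table.
human_score = [5.56, 3.9, 7.3, 5.2, 6.56, 4.5, 4.83, 8.83]
schellenberg_score = [2, 6.08, 4.22, 6.04, 1.76, 1.81, 6.58, 3.33]
current_score = [7.85, 7.56, 10, 4.82, 10, 0.33, 10, 9.23]

HUMAN_COMPOSED = [1, 3, 5, 8]  # taken from the testing corpus
AI_GENERATED = [2, 4, 6, 7]    # chat sonification + network, or transformer


def group_mean(scores: list[float], melodies: list[int]) -> float:
    """Average score over the given 1-based melody numbers."""
    return float(mean(scores[m - 1] for m in melodies))


for label, scores in [
    ("Human listeners", human_score),
    ("Schellenberg method", schellenberg_score),
    ("Current method", current_score),
]:
    h = group_mean(scores, HUMAN_COMPOSED)
    a = group_mean(scores, AI_GENERATED)
    print(f"{label:20s} human-composed {h:5.2f}   AI-generated {a:5.2f}")
```

The Schellenberg method averages about 2.83 on the human-composed group and about 5.13 on the generated group, whereas the human listeners (about 7.06 versus 4.61) and the current method (9.27 versus about 5.68) both score the human-composed group higher.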

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bo, Y.; Yu, J.; Zhang, K. Computational aesthetics and applications. Vis. Comput. Ind. Biomed. Art 2018, 1, 6.

2. Hanfling, O. Philosophical Aesthetics: An Introduction; Wiley-Blackwell: Hoboken, NJ, USA, 1992.

3. Britannica. Available online: https://www.britannica.com/dictionary/aesthetics (accessed on 9 October 2022).

4. Rigau, J.; Feixas, M.; Sbert, M. Conceptualizing Birkhoff’s Aesthetic Measure Using Shannon Entropy and Kolmogorov Complexity. In Computational Aesthetics in Graphics, Visualization, and Imaging; The Eurographics Association: Eindhoven, The Netherlands, 2007.

5. Ghyka, M.C. Numarul de Aur; Editura Nemira: Bucharest, Romania, 2016.

6. Birkhoff, G.D. Aesthetic Measure; Harvard University Press: Cambridge, MA, USA, 1933.

7. Servien, P. Principes D’esthétique: Problèmes D’art et Langage des Sciences; Boivin: Paris, France, 1935.

8. Marcus, S. Mathematische Poetik; Linguistische Forschungen: Frankfurt, Germany, 1973; Volume 13.

9. Aeon. Uniting the Mysterious Worlds of Quantum Physics and Music. Available online: https://aeon.co/essays/uniting-the-mysterious-worlds-of-quantum-physics-and-music (accessed on 9 October 2022).

10. Hossenfelder, S. Lost in Math: How Beauty Leads Physics Astray; Basic Books: New York, NY, USA, 2018.

11. Rosenkranz, K. O Estetică a Urâtului; Meridiane: Bucharest, Romania, 1984.

12. Trausan-Matu, S. Detecting Micro-Creativity in CSCL Chats. In International Collaboration toward Educational Innovation for All: Overarching Research, Development, and Practices—Proceedings of the 15th International Conference on Computer-Supported Collaborative Learning (CSCL), Hiroshima, Japan, 30 May–5 June 2022; Weinberger, A., Chen, W., Hernández-Leo, D., Chen, B., Eds.; International Society of the Learning Sciences: Bloomington, IN, USA, 2022; pp. 601–602.

13. Trăușan-Matu, Ș. Muzica, de la Ethos la Carnaval. In Destinul—Pluralitate, Complexitate și Transdisciplinaritate; Alma: Craiova, Romania, 2015; pp. 210–221.

14. Carrascosa Martinez, E. Technology for Learning and Creativity. Inf. Commun. Technol. Musical Field 2017, 8, 7–13.

15. Trausan-Matu, S. The polyphonic model of collaborative learning. In The Routledge International Handbook of Research on Dialogic Education; Mercer, N., Wegerif, R., Major, L., Eds.; Routledge: London, UK, 2019; pp. 454–468.

16. Trausan-Matu, S. Chat Sonification Starting from the Polyphonic Model of Natural Language Discourse. Inf. Commun. Technol. Musical Field 2018, 9, 79–85.

17. Bakhtin, M.M. Problems of Dostoevsky’s Poetics; Emerson, C., Ed.; Emerson, C., Translator; University of Minnesota Press: Minneapolis, MN, USA, 1984.

18. Winograd, T.; Flores, F. Understanding Computers and Cognition; Addison-Wesley Professional: Boston, MA, USA, 1987.

19. Heidegger, M. Being and Time; Harper Perennial Modern Thought: New York, NY, USA, 2008.

20. Goller, C.; Kuchler, A. Learning task-dependent distributed representations by backpropagation through structure. In Proceedings of the International Conference on Neural Networks (ICNN), Washington, DC, USA, 3–6 June 1996; Volume 1, pp. 347–352.

21. Liu, I.-T.; Ramakrishnan, B. Bach in 2014: Music Composition with Recurrent Neural Network. arXiv 2014, arXiv:1412.3191.

22. Mozer, M.C. Neural network music composition by prediction: Exploring the benefits of psychoacoustic constraints and multi-scale processing. Connect. Sci. 1994, 6, 247–280.

23. Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

24. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

25. Eck, D.; Schmidhuber, J. A First Look at Music Composition Using Lstm Recurrent Neural Networks; Technical Report No. IDSIA-07-02; Istituto Dalle Molle Di Studi Sull Intelligenza Artificiale: Lugano, Switzerland, 2002.

26. Huang, A.; Wu, R. Deep Learning for Music. arXiv 2016, arXiv:1606.04930.

27. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

28. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2015, arXiv:1409.0473.

29. Huang, C.-Z.A.; Vaswani, A.; Uszkoreit, J.; Shazeer, N.; Simon, I.; Hawthorne, C.; Dai, A.M.; Hoffman, M.D.; Dinculescu, M.; Eck, D. Music Transformer: Generating Music with Long-Term Structure. arXiv 2018, arXiv:1809.04281.

30. Magenta. Available online: https://magenta.tensorflow.org/2016/12/16/nips-demo (accessed on 5 October 2022).

31. Dong, H.-W.; Chen, K.; Dubnov, S.; McAuley, J.; Berg-Kirkpatrick, T. Multitrack Music Transformer: Learning Long-Term Dependencies in Music with Diverse Instruments. arXiv 2022, arXiv:2207.06983.

32. Sahyun, M.R.V. Aesthetics and Entropy III: Aesthetic measures. Preprints.org 2018, 2018010098.

33. Schellenberg, E.G.; Adachi, M.; Purdy, K.; McKinnon, M. Expectancy in melody: Tests of children and adults. J. Exp. Psychol. 2003, 131, 511.

34. Streich, S. Music Complexity: A Multi-Faceted Description of Audio Content. Ph.D. Thesis, Universitat Pompeu Fabra, Barcelona, Spain, 2006. Available online: https://www.tdx.cat/handle/10803/7545 (accessed on 21 December 2022).

35. Tymoczko, D. A Geometry of Music: Harmony and Counterpoint in the Extended Common Practice: Oxford Studies in Music Theory; Oxford University Press: Oxford, UK, 2010.

36. Valencia, S.G. GitHub. Music Geometry Eval. 2019. Available online: https://github.com/sebasgverde/music-geometry-eval (accessed on 12 February 2023).

37. Gonsalves, R.A. Towardsdatascience. AI-Tunes: Creating New Songs with Artificial Intelligence. 2021. Available online: https://towardsdatascience.com/ai-tunes-creating-new-songs-with-artificial-intelligence-4fb383218146 (accessed on 12 February 2023).

38. Liu, H.; Xue, T.; Schultz, T. Merged Pitch Histograms and Pitch-duration Histograms. In Proceedings of the 19th International Conference on Signal Processing and Multimedia Applications—SIGMAP, Lisbon, Portugal, 14–16 July 2022.

39. Liu, H.; Jiang, K.; Gamboa, H.; Xue, T.; Schultz, T. Bell Shape Embodying Zhongyong: The Pitch Histogram of Traditional Chinese Anhemitonic Pentatonic Folk Songs. Appl. Sci. 2022, 12, 8343.

40. Raffel, C. Learning-Based Methods for Comparing Sequences, with Applications to Audio-to-MIDI Alignment and Matching. 2016. Available online: https://academiccommons.columbia.edu/doi/10.7916/D8N58MHV (accessed on 21 December 2022).

41. Qiu, L.; Li, S.; Sung, Y. 3D-DCDAE: Unsupervised Music Latent Representations Learning Method Based on a Deep 3D Convolutional Denoising Autoencoder for Music Genre Classification. Mathematics 2021, 9, 2274.

42. ISMIR. Available online: https://ismir.net/resources/datasets/ (accessed on 21 December 2022).

43. Music21. Available online: http://web.mit.edu/music21/ (accessed on 20 December 2022).

44. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

45. Paroiu, R.; Trausan-Matu, S. A new approach for chat sonification. In Proceedings of the 23rd Conference on Control Systems and Computer Science (CSCS23), Bucharest, Romania, 25–28 May 2021.

46. Trausan-Matu, S.; Diaconescu, A. Music Composition through Chat Sonification According to the Polyphonic Model. In Annals of the Academy of Romanian Scientists Series on Science and Technology of Information; Academy of Romanian Scientists: Bucharest, Romania, 2013.

| | Melody 1 | Melody 2 | Melody 3 | Melody 4 | Melody 5 |
|---|---|---|---|---|---|
| Human aesthetics score | 6.88 | 6.62 | 6.29 | 4.12 | 6.18 |
| Good dissonances (G) | 167 | 636 | 52 | 20 | 20 |
| Bad dissonances (B) | 20 | 6 | 1 | 2 | 0 |
| Formula 1 | 8.93 | 9.9 | 9.81 | 9.09 | 10 |
| Formula 2 | 2.94 | 9.46 | 9.81 | 8.33 | 10 |
| Formula 3 | 1.72 | 8.98 | 9.62 | 7.14 | 10 |
| Formula 4 | 4.55 | 9.72 | 9.9 | 9.09 | 10 |
| Formula 5 | 7.97 | 9.81 | 9.62 | 8.26 | 10 |
| Formula 6 | 6.5 | 9.63 | 9.27 | 6.94 | 10 |
| Formula 7 | 5.41 | 9.45 | 8.93 | 5.91 | 10 |

| | Melody 1 | Melody 2 | Melody 3 | Melody 4 | Melody 5 |
|---|---|---|---|---|---|
| Variance | 1.33 | 1.35 | 1.49 | 0.84 | 1.65 |
| Standard deviation | 1.15 | 1.16 | 1.22 | 0.91 | 1.28 |

| Formula | Pearson correlation score | Formula | Pearson correlation score |
|---|---|---|---|
| Formula 1 | 0.31 | Formula 5 | 0.32 |
| Formula 2 | −0.25 | Formula 6 | 0.33 |
| Formula 3 | −0.16 | Formula 7 | 0.34 |
| Formula 4 | −0.31 | | |
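The seven score rows listed after the good and bad dissonance counts in the first-study table can be reproduced from G and B alone. The sketch below is a reconstruction under stated assumptions: the expressions in the hypothetical `CANDIDATES` dictionary were inferred by matching the published numbers and are not quoted from the text, and the tables are assumed to cut values to two decimals rather than round them. It also computes Pearson's r between each candidate and the human aesthetics scores; assuming the correlation table lists the candidates in the same order (left column first), the truncated coefficients agree with it.

```python
import math
from statistics import mean

# First-study data (melodies 1-5), copied from the first table.
G = [167, 636, 52, 20, 20]  # good dissonances
B = [20, 6, 1, 2, 0]        # bad dissonances
HUMAN = [6.88, 6.62, 6.29, 4.12, 6.18]

# Hypothetical reconstruction: expressions inferred by matching the seven
# score rows of the table, in table order. Not quoted from the paper.
CANDIDATES = {
    "10*G/(G+B)": lambda g, b: 10 * g / (g + b),
    "10*G/(G+B^2)": lambda g, b: 10 * g / (g + b**2),
    "10*G/(G+2B^2)": lambda g, b: 10 * g / (g + 2 * b**2),
    "10*G/(G+B^2/2)": lambda g, b: 10 * g / (g + b**2 / 2),
    "10*(G/(G+B))^2": lambda g, b: 10 * (g / (g + b)) ** 2,
    "10*(G/(G+2B))^2": lambda g, b: 10 * (g / (g + 2 * b)) ** 2,
    "10*(G/(G+3B))^2": lambda g, b: 10 * (g / (g + 3 * b)) ** 2,
}


def truncate2(x: float) -> float:
    """Cut (not round) toward zero at two decimals, as the tables appear to do."""
    return math.trunc(x * 100) / 100


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


results = {}
for name, formula in CANDIDATES.items():
    scores = [formula(g, b) for g, b in zip(G, B)]
    results[name] = ([truncate2(s) for s in scores], pearson(HUMAN, scores))
    shown, r = results[name]
    print(f"{name:16s} {shown}  r = {truncate2(r):+.2f}")
```

In this reconstruction, the plain ratio and the three squared-ratio forms correlate positively with the human scores, while the three forms that grow with B² correlate negatively; 10·(G/(G+3B))² has the highest coefficient of the seven.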

| | Melody 1 | Melody 2 | Melody 3 | Melody 4 | Melody 5 | Melody 6 | Melody 7 | Melody 8 |
|---|---|---|---|---|---|---|---|---|
| Human aesthetics score | 5.56 | 3.9 | 7.3 | 5.2 | 6.56 | 4.5 | 4.83 | 8.83 |
| Variance | 3.35 | 2.85 | 2.97 | 4.63 | 3.97 | 4.81 | 4.41 | 1.31 |
| Standard deviation | 1.83 | 1.68 | 1.72 | 2.15 | 1.99 | 2.19 | 2.1 | 1.14 |
| Schellenberg method score | 2 | 6.08 | 4.22 | 6.04 | 1.76 | 1.81 | 6.58 | 3.33 |
| Current method score | 7.85 | 7.56 | 10 | 4.82 | 10 | 0.33 | 10 | 9.23 |
| Conjunct melodic motion (CMM) | 9.65 | 12.73 | 10.74 | 7.07 | 15.1 | 11.12 | 10.41 | 7.75 |
| Limited macroharmony (LM) | 1.71 | 1.64 | 7.03 | 10.02 | 1.04 | 12 | 1.37 | 5.95 |
| Centricity (CENT) | 0.03 | 0.13 | 0.02 | 0.03 | 0.07 | 0.07 | 0.06 | 0.05 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paroiu, R.; Trausan-Matu, S. Measurement of Music Aesthetics Using Deep Neural Networks and Dissonances. Information 2023, 14, 358. https://doi.org/10.3390/info14070358
