Multi-Target Rough Sets and Their Approximation Computation with Dynamic Target Sets

Abstract

1. Introduction

- A rough set model that takes label correlation into account is proposed for multi-label learning. It provides a new approach to handling multi-label information systems.

- The properties of the proposed models are investigated in this paper.

- An algorithm for computing the approximations in the proposed rough set model is designed, which supports the practical application of the multi-target rough set model.

- Two algorithms are proposed for updating the approximations in the proposed rough set model when a target concept is added to, or removed from, the target group. They improve the efficiency of computing the approximations of the proposed model.

- Experiments are conducted to validate the efficiency and effectiveness of all proposed algorithms.

2. Global Multi-Target Rough Sets

2.1. Definitions

| U | a1 | a2 | a3 | X1 | X2 |
|---|---|---|---|---|---|
| x1 | 1 | M | 1 | 0 | 1 |
| x2 | 2 | F | 2 | 1 | 1 |
| x3 | 2 | M | 2 | 1 | 0 |
| x4 | 1 | M | 2 | 0 | 0 |
| x5 | 2 | F | 2 | 1 | 1 |
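The table above can be explored with a minimal Python sketch. The equivalence classes and the classical (Pawlak) lower and upper approximations of a single target are standard. The function `global_approximations` is an assumption: it takes the global lower approximation to be the set of objects whose equivalence class lies inside every target, and the global upper approximation to be the set of objects whose class meets at least one target. All function and variable names are hypothetical.

```python
from collections import defaultdict

# Rows of the example table: object -> (a1, a2, a3, X1, X2).
TABLE = {
    "x1": (1, "M", 1, 0, 1),
    "x2": (2, "F", 2, 1, 1),
    "x3": (2, "M", 2, 1, 0),
    "x4": (1, "M", 2, 0, 0),
    "x5": (2, "F", 2, 1, 1),
}


def equivalence_classes(table, n_attrs=3):
    """Group objects that agree on the first n_attrs condition attributes."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[row[:n_attrs]].add(obj)
    return list(groups.values())


def pawlak(classes, target):
    """Classical lower and upper approximation of one target set."""
    lower = set().union(*(c for c in classes if c <= target))
    upper = set().union(*(c for c in classes if c & target))
    return lower, upper


def global_approximations(classes, targets):
    """ASSUMPTION: lower = intersection of the per-target lower approximations,
    upper = union of the per-target upper approximations.
    Requires at least one target."""
    lowers, uppers = zip(*(pawlak(classes, t) for t in targets))
    return set.intersection(*lowers), set.union(*uppers)


classes = equivalence_classes(TABLE)
X1 = {obj for obj, row in TABLE.items() if row[3] == 1}
X2 = {obj for obj, row in TABLE.items() if row[4] == 1}
lower_g, upper_g = global_approximations(classes, [X1, X2])

print(sorted(lower_g))  # ['x2', 'x5']
print(sorted(upper_g))  # ['x1', 'x2', 'x3', 'x5']
```

Under these assumptions, x2 and x5 are the only objects whose class {x2, x5} is contained in both X1 and X2, while x4 is the only object whose class meets neither target.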

2.2. Properties

- For all if and only if;

- There exists if and only if ;

- There exists if and only if ;

- For all if and only if .

- For all implies, for all , for all , which implies if and only if , so ;

- There exists , which implies, for all , that there exists , which implies if and only if , so ;

- There exists , which implies, for all , that there exists which implies , so ;

- For all implies, for all , for all , which implies , so .□

- For all;

- For all.

- For all , it is implied that if and only if , which implies for all , so .

- For all , it is implied that there exists , which implies , so . □

- ;

- ;

- ;

- .

- For all , it is implied that, for all and, for all , which implies, for all if and only if , so ;

- For all , if and only if there exists , which implies there exists and there exists , which implies , so

- For all , if and only if, for all , which implies, for all or, for all , which implies which implies so ;

- For all , if and only if there exists , if and only if there exists or there exists , which implies if and only if .□

- ;

- .

- For all , if and only if, for all which implies for all which implies so .

- For all , if and only if there exists , which implies there exists which implies so . □

3. Approximation Computation of GMTRSs

- ;

- .

- ;

- .

- For all if and only if, for all if and only if ;

- There exists if and only if there exists if and only if . □

- if and only if for all , if and only if, for all if and only if

- if and only if there exists , if and only if there exists if and only if □

| Algorithm 1. Computing Approximations of GMTRSs (CAG). | |
|---|---|
| Input: | |
| Output: | |
| 1: | |
| 2: | for |
| 3: | for |
| 4: | if then |
| 5: | |
| 6: | else |
| 7: | |
| 8: | end if |
| 9: | if then |
| 10: | |
| 11: | else |
| 12: | |
| 13: | end if |
| 14: | end for |
| 15: | end for |
| 16: | |
| 17: | |
| 18: | Return |
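The step bodies of Algorithm 1 did not survive in this copy, so the following Python sketch is not a transcription of CAG. It only mirrors the visible control flow (two nested loops, each with two tests) under the assumption that the tests check whether an object's equivalence class is contained in a target and whether it intersects a target. The names `compute_approximations`, `classes_of`, and `CLASSES` are hypothetical; the classes are those induced by a1, a2, a3 in the example table of Section 2.1.

```python
# Equivalence classes of the example table under attributes a1, a2, a3.
CLASSES = [{"x1"}, {"x2", "x5"}, {"x3"}, {"x4"}]
# Map each object to its own equivalence class.
classes_of = {x: c for c in CLASSES for x in c}

X1 = {"x2", "x3", "x5"}
X2 = {"x1", "x2", "x5"}


def compute_approximations(classes_of, targets):
    """ASSUMPTION: x is in the lower approximation if its class is inside
    every target, and in the upper approximation if its class meets at
    least one target."""
    lower, upper = set(), set()
    for x, cls in classes_of.items():      # outer loop over objects
        inside_all, meets_any = True, False
        for target in targets:             # inner loop over target concepts
            if not cls <= target:          # containment test fails
                inside_all = False
            if cls & target:               # intersection test succeeds
                meets_any = True
        if inside_all:
            lower.add(x)
        if meets_any:
            upper.add(x)
    return lower, upper


lower, upper = compute_approximations(classes_of, [X1, X2])
print(sorted(lower))  # ['x2', 'x5']
print(sorted(upper))  # ['x1', 'x2', 'x3', 'x5']
```

With n objects and m targets the sketch performs n·m set comparisons, which matches the nested-loop shape of the algorithm table but says nothing about the cost of the original matrix-based steps.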

4. Dynamical Approximation Computation

4.1. Dynamical Approximation Computation while Adding a Target

- ;

- For all if and only if for all if and only if for all if and only if ;

- For all . if and only if there exists such that or there exists such that if and only if there exists such that if and only if □

| Algorithm 2. Dynamic Computing Approximations of a GMTRS while Adding a Target Concept (DCAGA). | |
|---|---|
| Input: and satisfied for all . | |
| Output: | |
| 1: | |
| 2: | for |
| 3: | if then |
| 4: | |
| 5: | else |
| 6: | |
| 7: | end if |
| 8: | if then |
| 9: | |
| 10: | else |
| 11: | |
| 12: | end if |
| 13: | end for |
| 14: | |
| 15: | |
| 16: | Return |
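The idea of updating rather than recomputing when a target is added can be illustrated with a short Python sketch. It is not the DCAGA procedure itself, whose steps are missing from this copy. It assumes that the lower approximation of the enlarged target group equals the old lower approximation intersected with the lower approximation of the new target, and that the upper approximation equals the old upper approximation united with that of the new target. The names `add_target` and `classes_of` are hypothetical.

```python
# Equivalence classes of the example table under attributes a1, a2, a3.
CLASSES = [{"x1"}, {"x2", "x5"}, {"x3"}, {"x4"}]
classes_of = {x: c for c in CLASSES for x in c}


def add_target(old_lower, old_upper, classes_of, new_target):
    """Update both approximations after one target joins the group.

    ASSUMPTION: lower approximations combine by intersection and upper
    approximations by union across the targets of the group.
    """
    # Only objects already in the lower approximation can remain in it.
    new_lower = {x for x in old_lower if classes_of[x] <= new_target}
    # Only objects outside the upper approximation need to be re-examined.
    gained = {
        x for x, cls in classes_of.items()
        if x not in old_upper and cls & new_target
    }
    return new_lower, old_upper | gained


# Start from the single target X1 = {x2, x3, x5}; its classical lower and
# upper approximations coincide because X1 is a union of classes.
lower, upper = {"x2", "x3", "x5"}, {"x2", "x3", "x5"}

# Add X2 = {x1, x2, x5} to the target group.
lower, upper = add_target(lower, upper, classes_of, {"x1", "x2", "x5"})
print(sorted(lower))  # ['x2', 'x5']
print(sorted(upper))  # ['x1', 'x2', 'x3', 'x5']
```

The update touches each object once and never revisits the targets already in the group, which is where the saving over a full recomputation would come from.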

4.2. Dynamical Approximation Computation while Removing a Target

- For all if and only if (for all , for all or (for all and for all ) if and only if (for all , for all and ) if and only if

- For all if and only if if and only if □

| Algorithm 3. Dynamic Computing Approximations of a GMTRS while Removing a Target Concept (DCAGR). | |
|---|---|
| Input: and satisfied for all | |
| Output: | |
| 1: | |
| 2: | for |
| 3: | if then |
| 4: | |
| 5: | else |
| 6: | |
| 7: | end if |
| 8: | if then |
| 9: | |
| 10: | else |
| 11: | |
| 12: | end if |
| 13: | end for |
| 14: | |
| 15: | |
| 16: | Return |
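Removing a target is harder than adding one, because the old approximations alone do not record which target excluded or admitted an object. The Python sketch below uses a swapped-in technique, per-object counters, and is not the DCAGR procedure, whose steps and stored inputs are missing from this copy. It assumes the same intersection/union semantics as above. The class `TargetGroupApprox` and all of its members are hypothetical.

```python
class TargetGroupApprox:
    """Counter-based bookkeeping for a changing target group (illustrative)."""

    def __init__(self, classes_of):
        self.classes_of = classes_of                   # object -> its class
        self.n_targets = 0
        self.contained = {x: 0 for x in classes_of}    # targets containing [x]
        self.met = {x: 0 for x in classes_of}          # targets meeting [x]

    def _update(self, target, step):
        for x, cls in self.classes_of.items():
            if cls <= target:
                self.contained[x] += step
            if cls & target:
                self.met[x] += step
        self.n_targets += step

    def add(self, target):
        self._update(target, +1)

    def remove(self, target):
        # The caller must pass a target that was previously added.
        self._update(target, -1)

    def lower(self):
        # ASSUMPTION: x is in the lower approximation iff every target contains [x].
        return {x for x, c in self.contained.items() if c == self.n_targets}

    def upper(self):
        # ASSUMPTION: x is in the upper approximation iff some target meets [x].
        return {x for x, c in self.met.items() if c > 0}


CLASSES = [{"x1"}, {"x2", "x5"}, {"x3"}, {"x4"}]
group = TargetGroupApprox({x: c for c in CLASSES for x in c})
X1, X2 = {"x2", "x3", "x5"}, {"x1", "x2", "x5"}

group.add(X1)
group.add(X2)
print(sorted(group.lower()))  # ['x2', 'x5']

group.remove(X2)
print(sorted(group.lower()))  # ['x2', 'x3', 'x5']
print(sorted(group.upper()))  # ['x2', 'x3', 'x5']
```

The counters cost two integers per object, and each addition or removal is a single pass over the universe. With an empty target group, `lower()` returns the whole universe, which is a convention of this sketch rather than something stated in the source.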

5. Experimental Evaluations

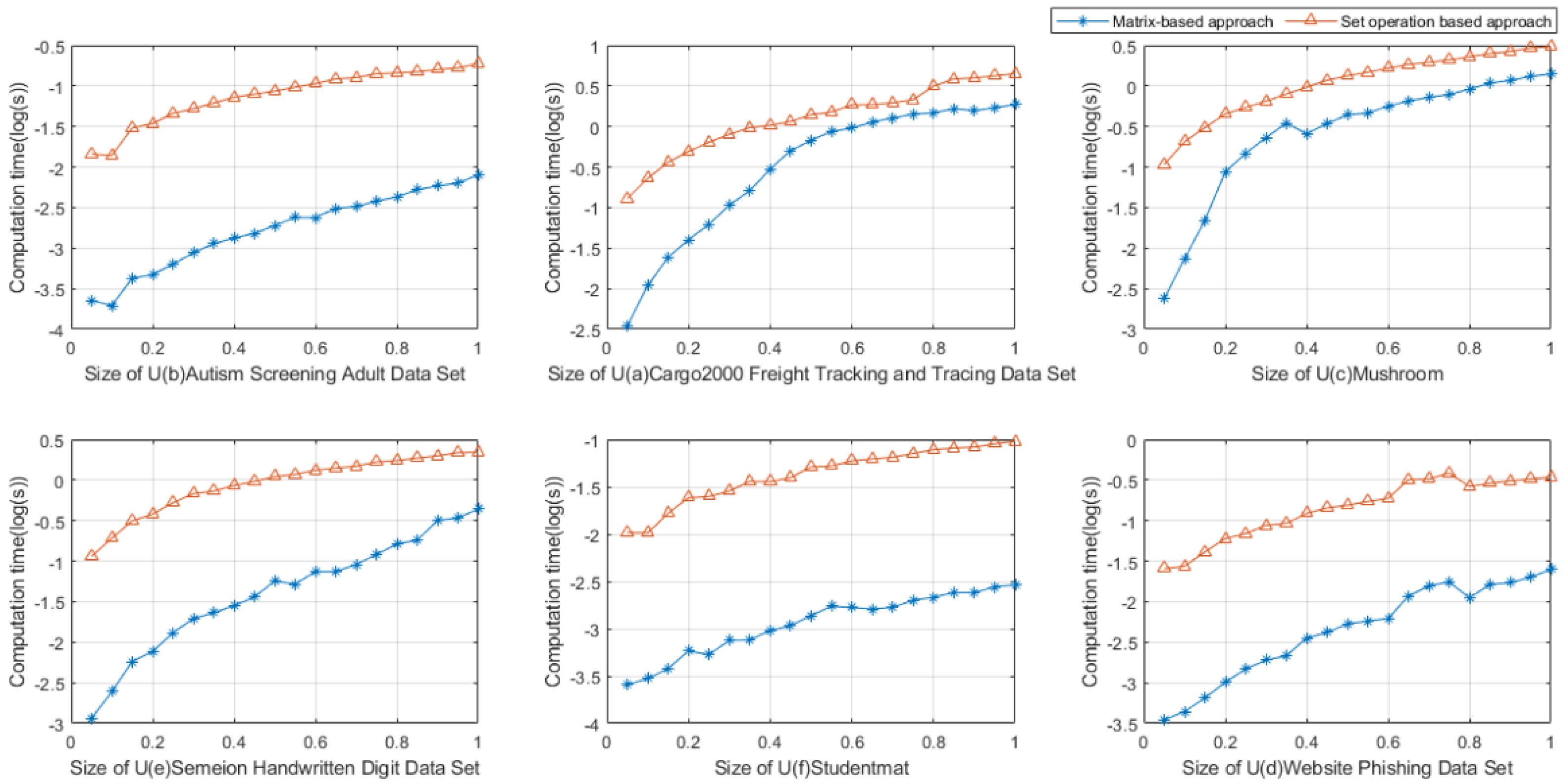

5.1. Comparison of Computational Time Using Matrix-Based Approach and Set-Operation-Based Approach

5.1.1. Experimental Settings

5.1.2. Discussions of the Experimental Results

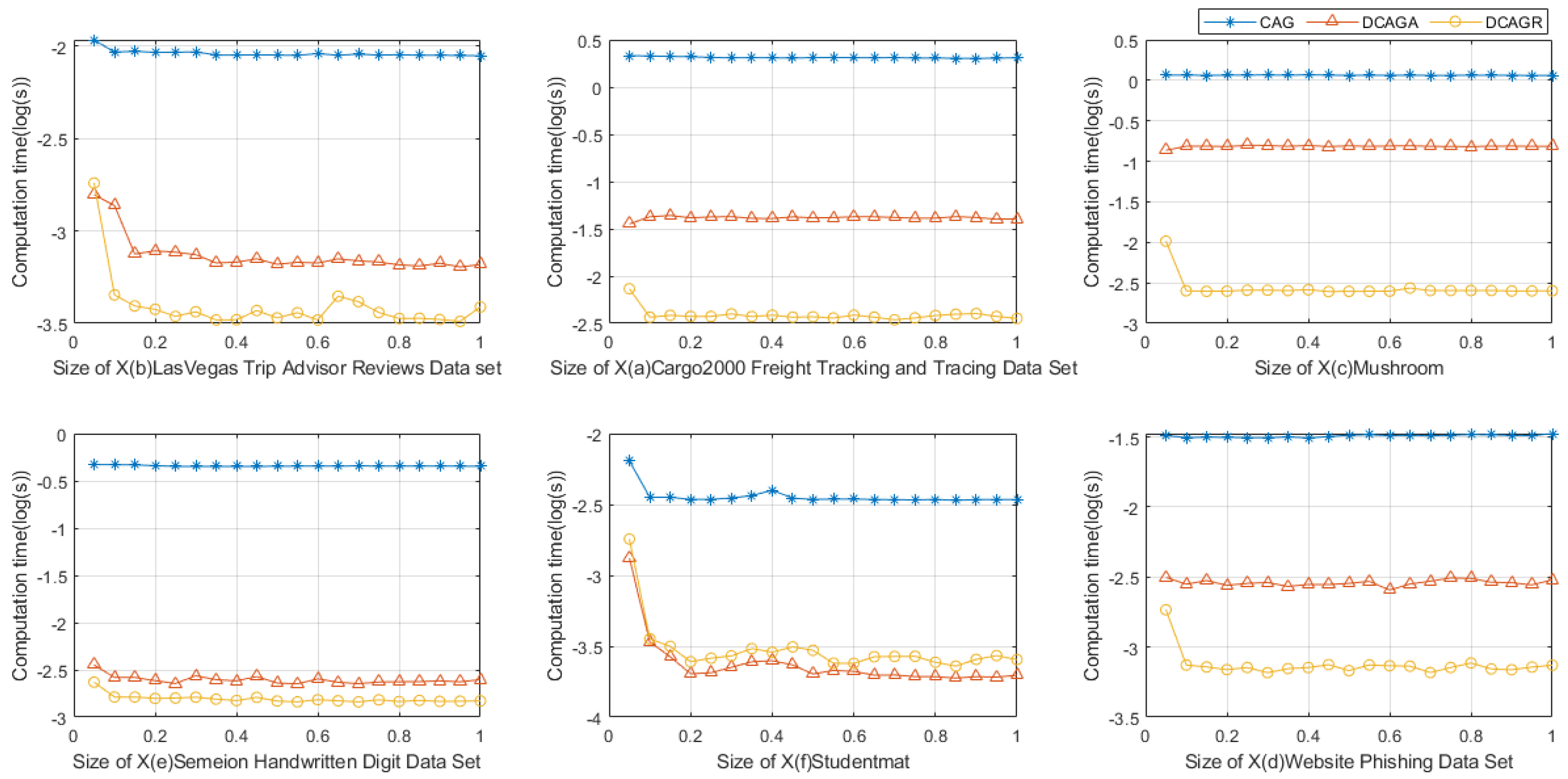

5.2. Comparison of Computational Time Using Elements in Target Concept with Different Sizes

5.2.1. Experimental Settings

5.2.2. Discussions of the Experimental Results

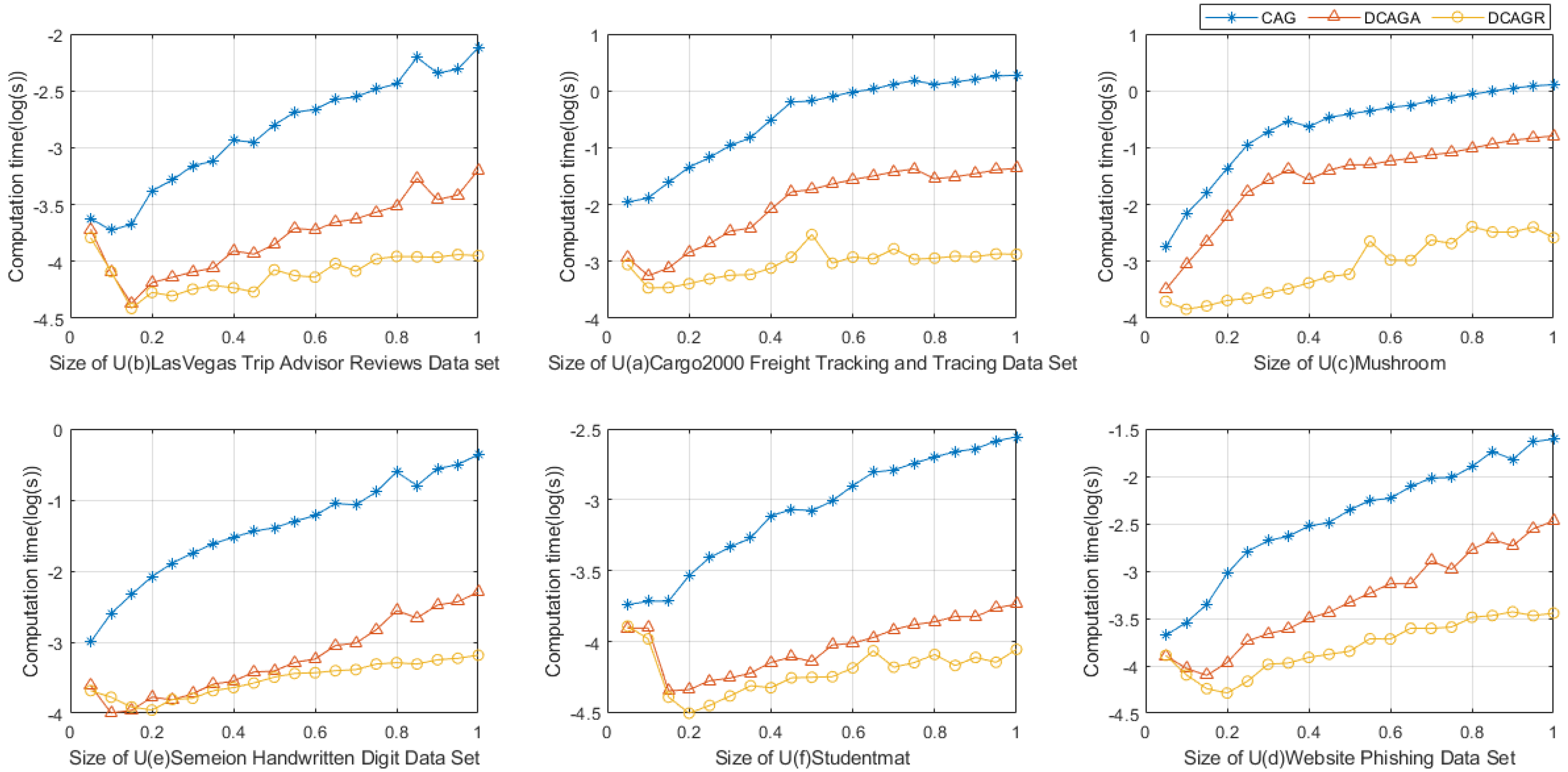

5.3. Comparison of Computational Time Using Data Sets with Different Sizes

5.3.1. Experimental Settings

5.3.2. Discussions of the Experimental Results

5.4. Parameter Analysis Experiments of α

5.4.1. Experimental Settings

5.4.2. Discussions of the Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| U | a1 | a2 | a3 | X1 | X2 | P |
|---|---|---|---|---|---|---|
| x1 | 1 | M | 1 | 0 | 1 | 0 |
| x2 | 2 | F | 2 | 1 | 1 | 0 |
| x3 | 2 | M | 2 | 1 | 0 | 0 |
| x4 | 1 | M | 2 | 0 | 0 | 1 |
| x5 | 2 | F | 2 | 1 | 1 | 1 |

| No. | Data Sets | Samples | Attributes |
|---|---|---|---|
| 1 | Autism Screening Adult Data Set | 366 | 11 |
| 2 | Cargo2000 Freight Tracking and Tracing Data Set | 3943 | 98 |
| 3 | Mushroom | 8124 | 23 |
| 4 | Semeion Handwritten Digit Data Set | 1593 | 267 |
| 5 | Studentmat | 395 | 33 |
| 6 | Website Phishing Data Set | 1353 | 10 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zheng, W.; Li, J.; Liao, S. Multi-Target Rough Sets and Their Approximation Computation with Dynamic Target Sets. Information 2022, 13, 385. https://doi.org/10.3390/info13080385
