Construction of a Low-Cost Layered Interactive Dashboard with Capacitive Sensing

Abstract

1. Introduction

- Is it feasible to construct an interactive dashboard using everyday materials?

- Is it possible for this interactive construction to be made multifunctional so different layers of information are projected over the dashboard?

- Is it possible for this development to operate reliably and be robust for use in a public space?

- Is it possible for this construction to be content-agnostic, so that several use cases can be accommodated on the same dashboard?

2. Related Work

3. Materials and Methods

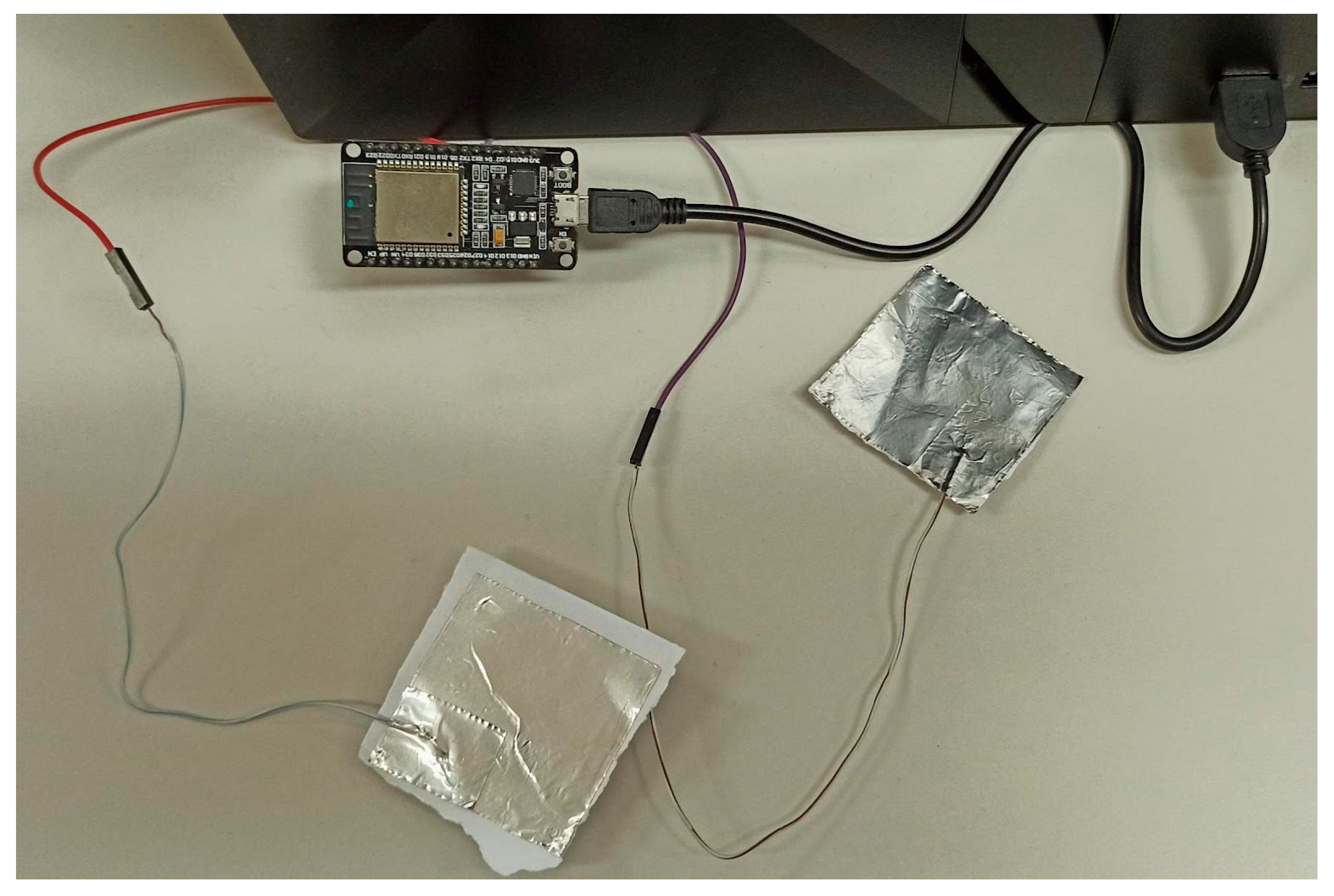

3.1. Low-Cost DIY Capacitive Sensors

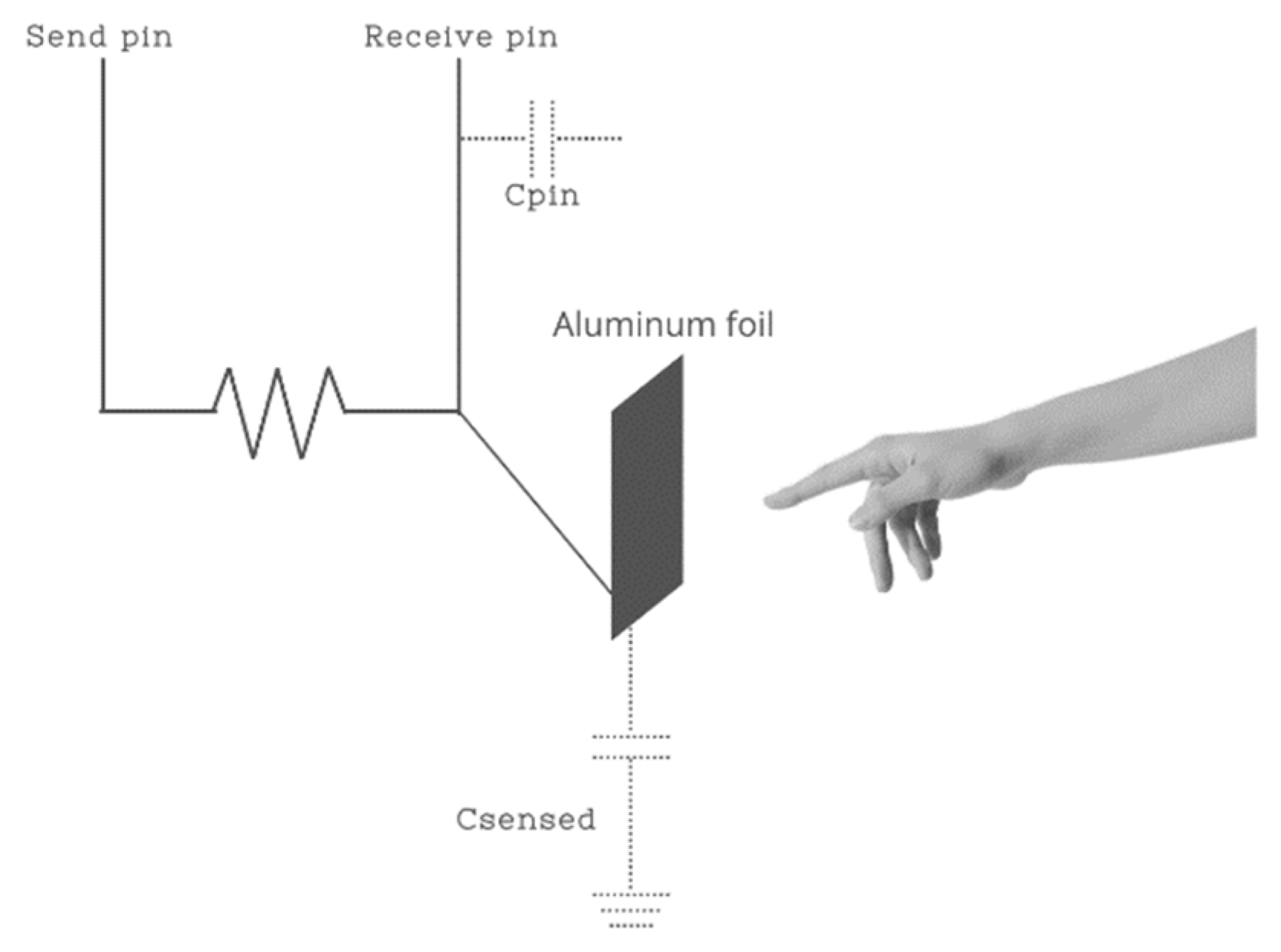

3.1.1. Introduction to Capacitive Sensing

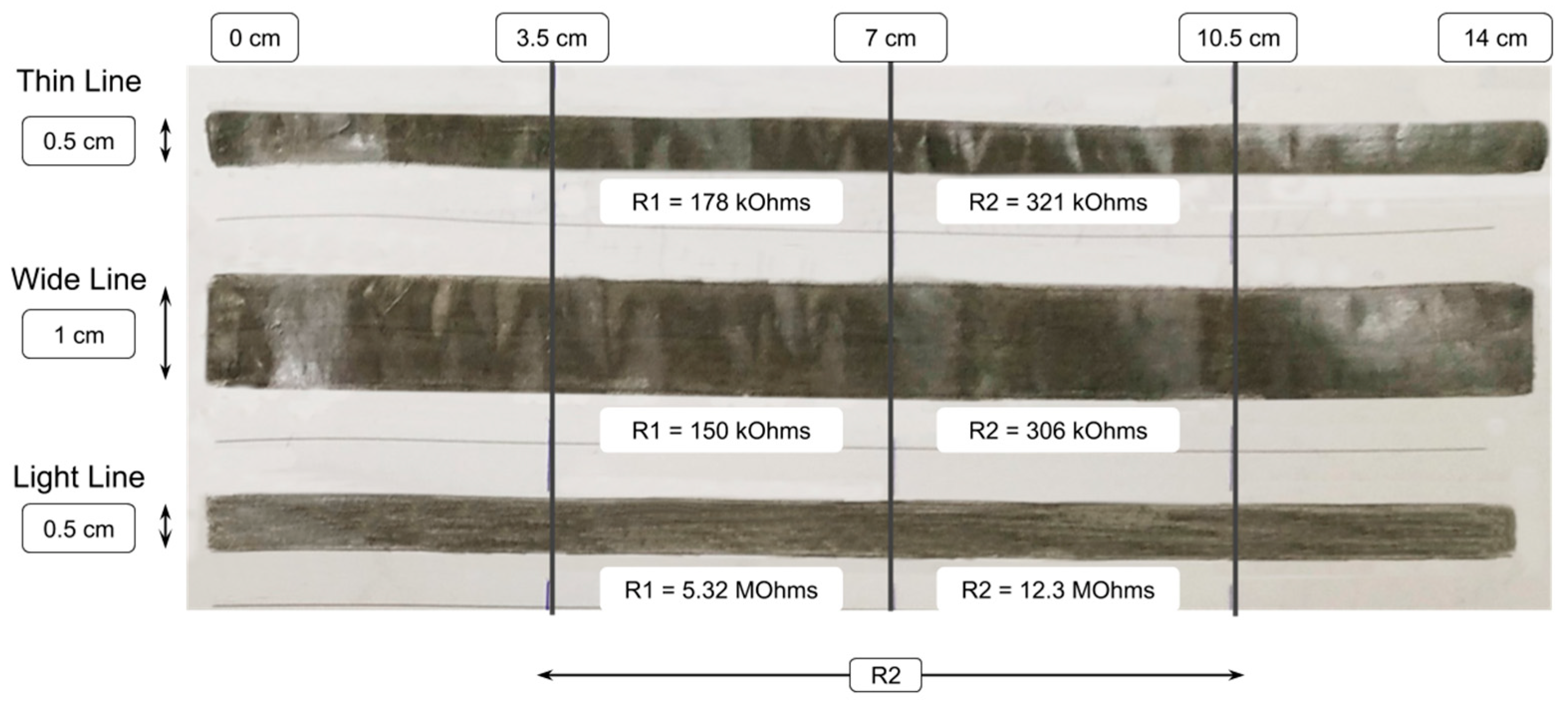

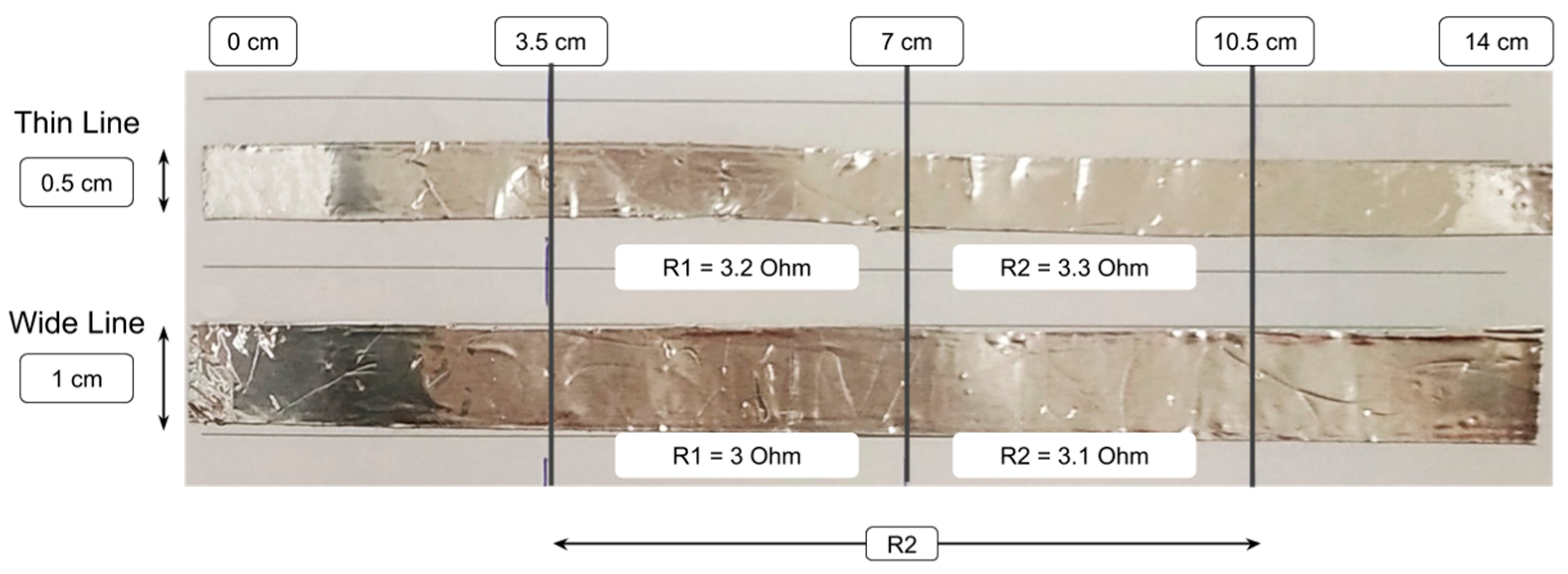

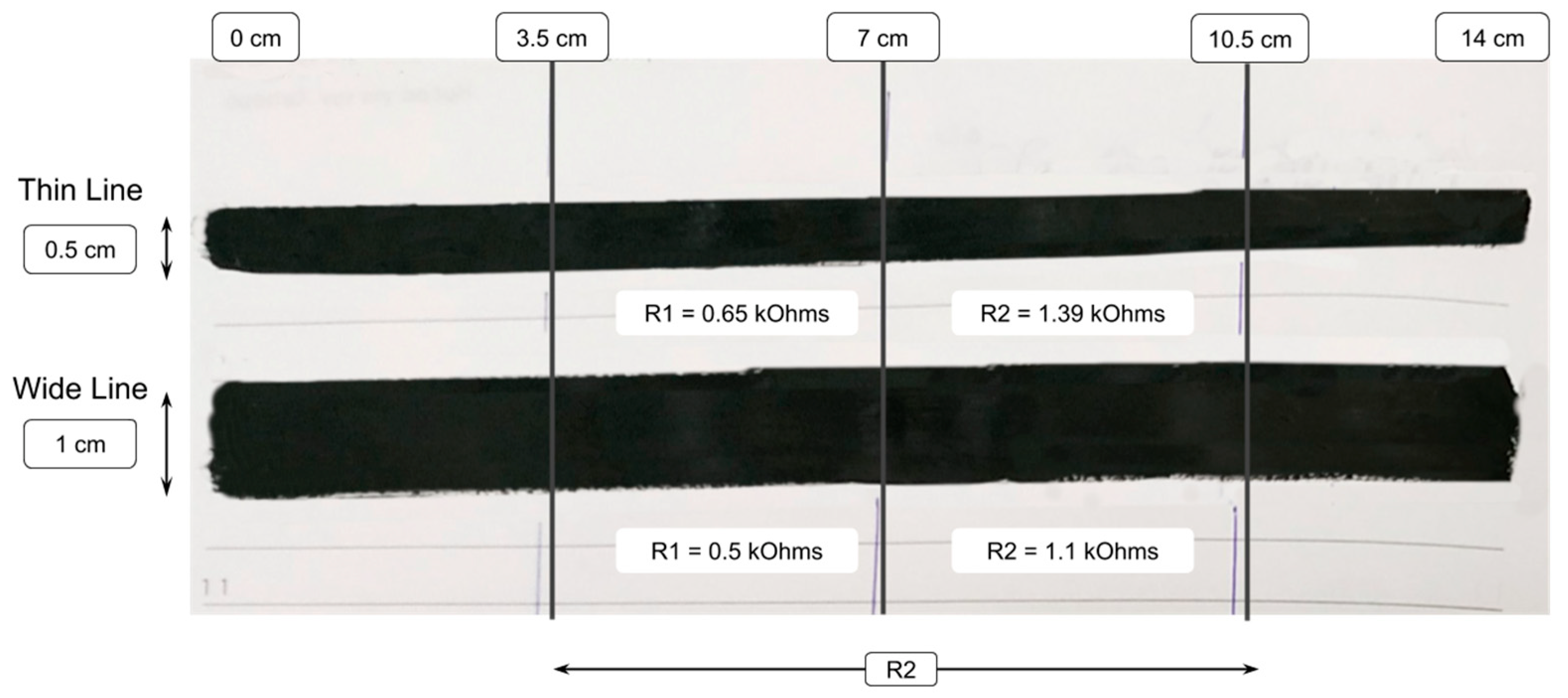

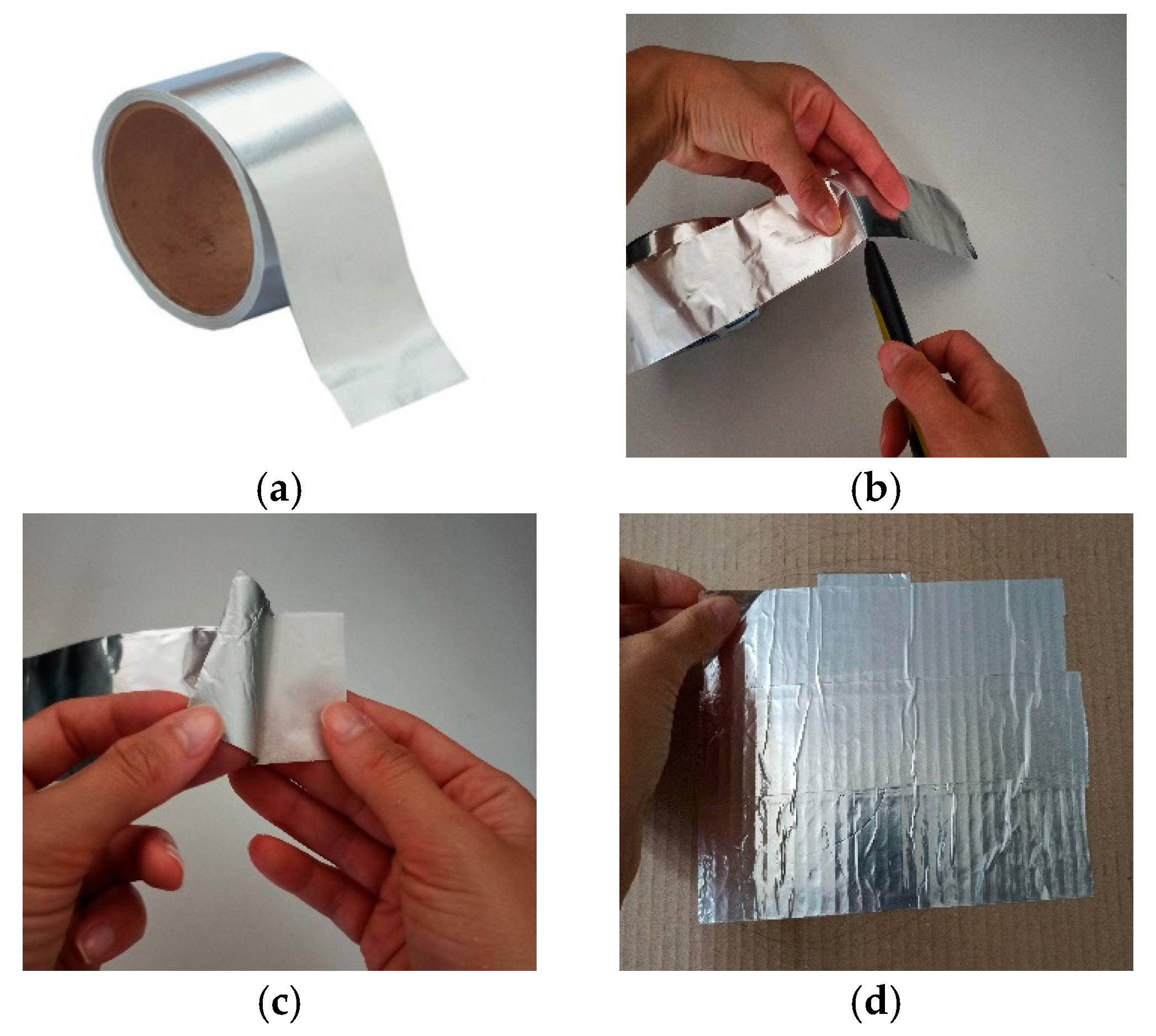

3.1.2. Selection of Conductive Materials

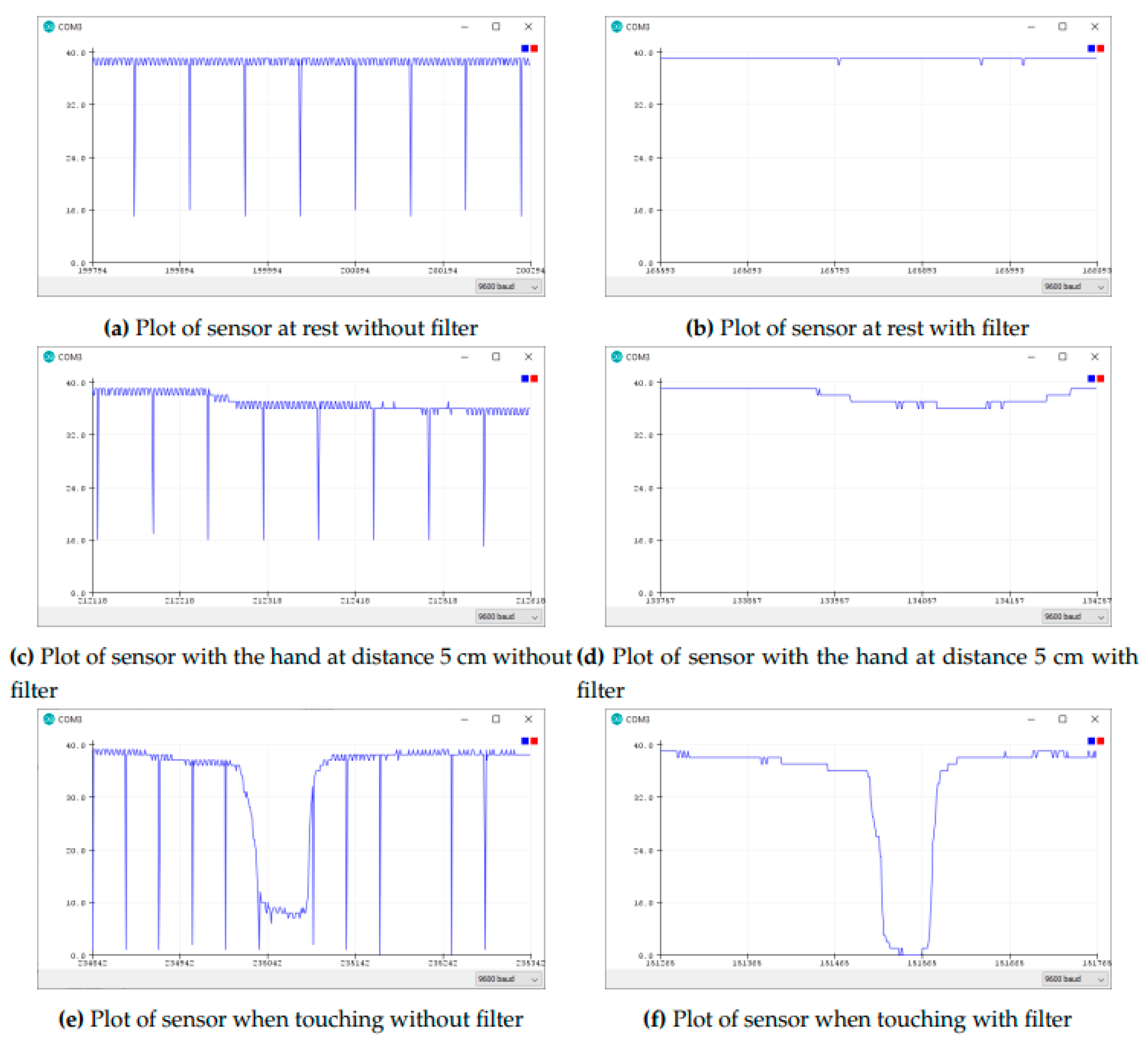

3.1.3. Calibration of the Sensors

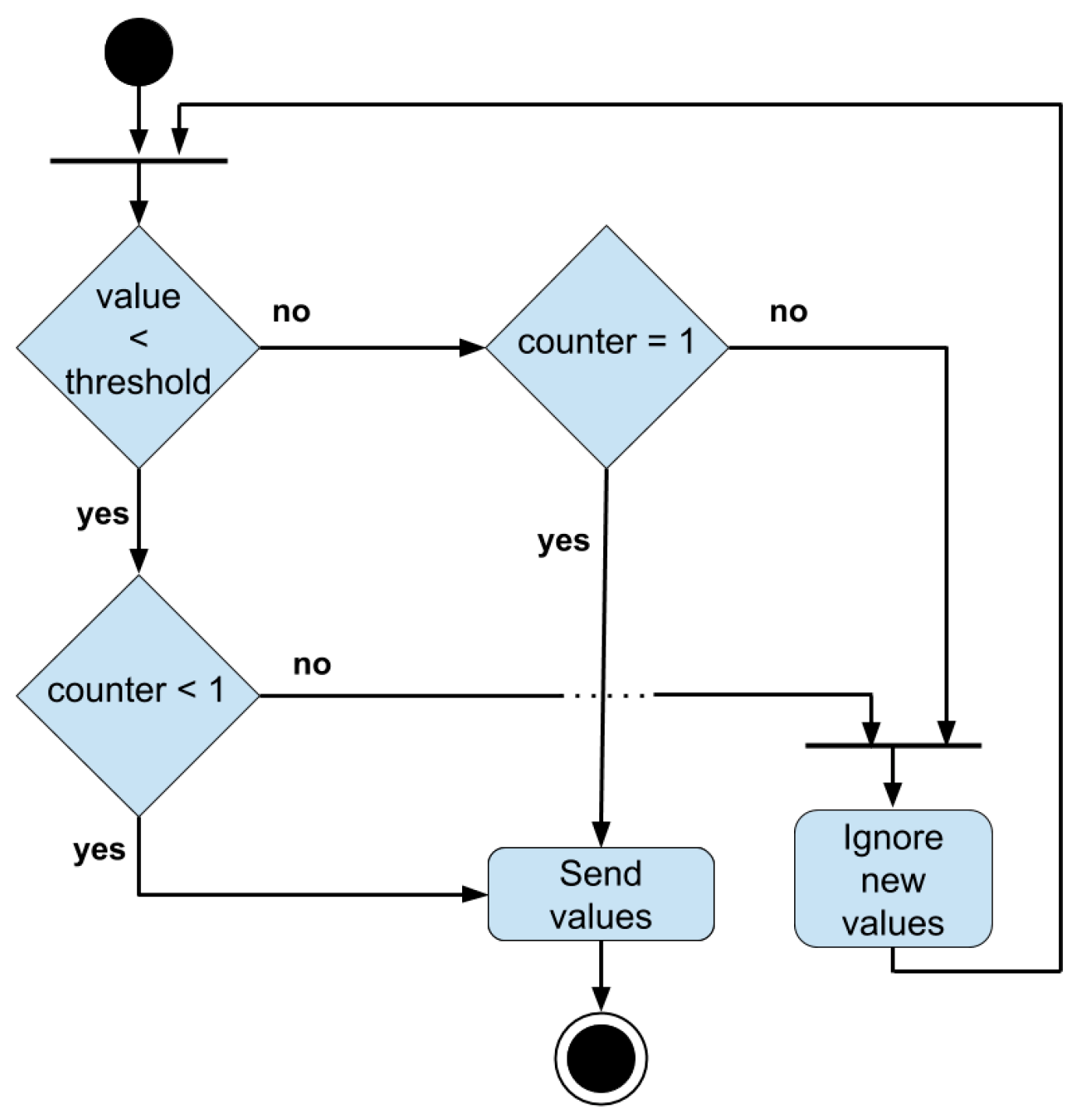

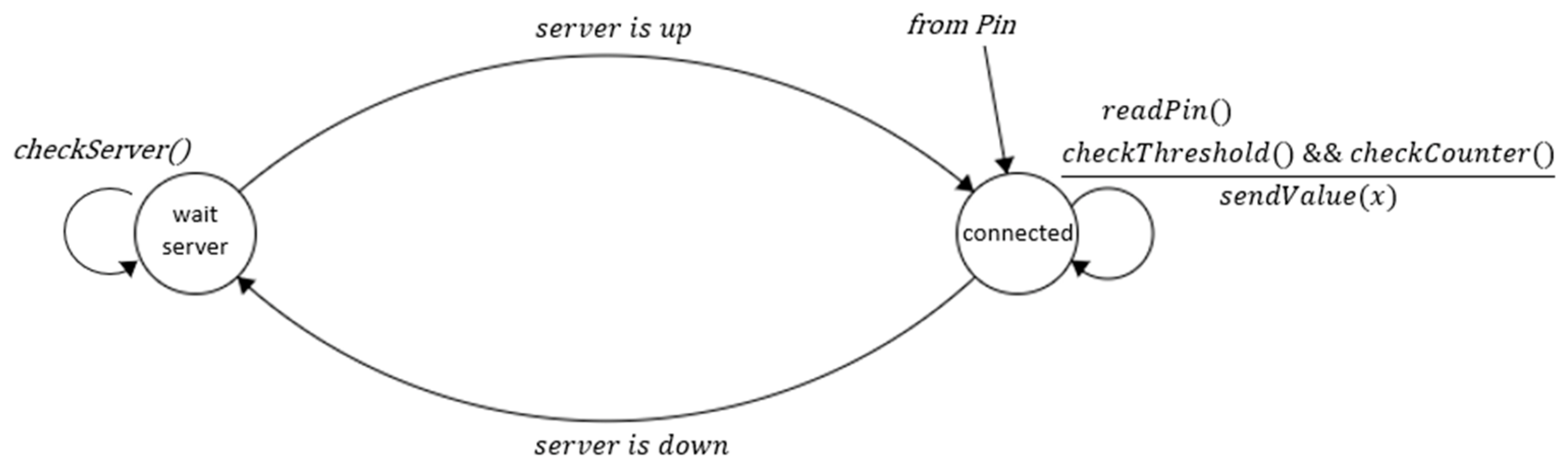

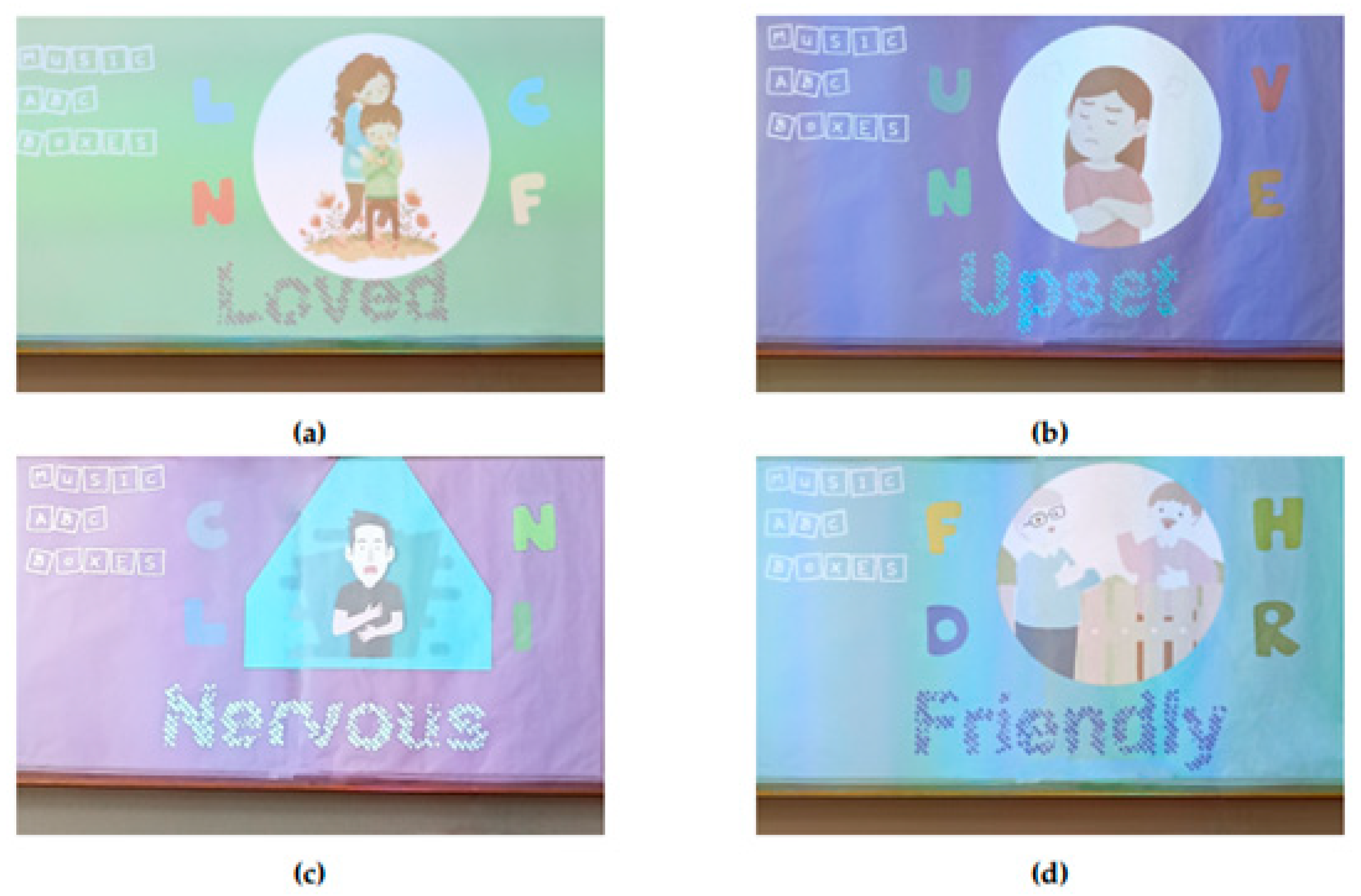

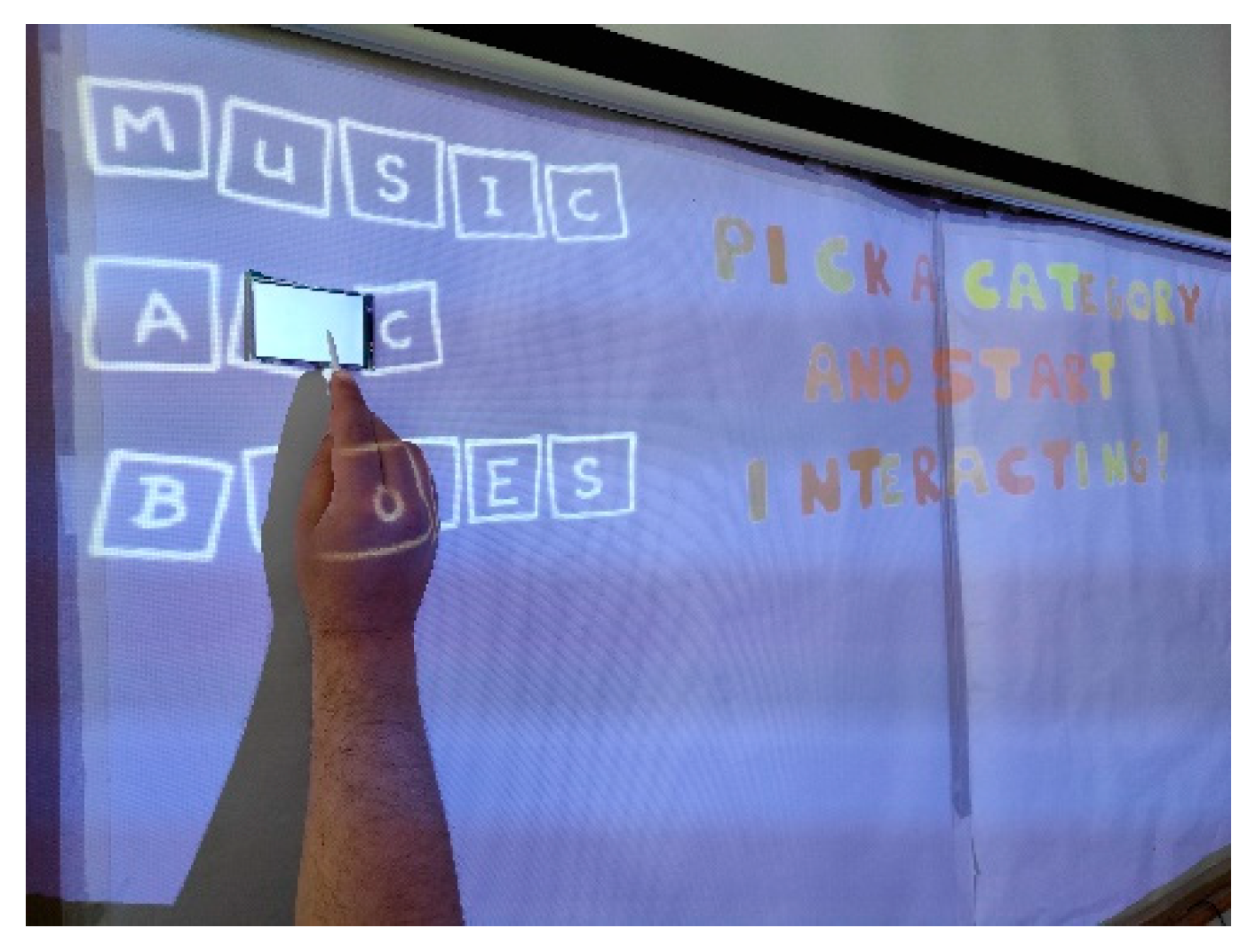

3.2. Development of the Interactive Dashboard with Capabilities for Projection Mapping

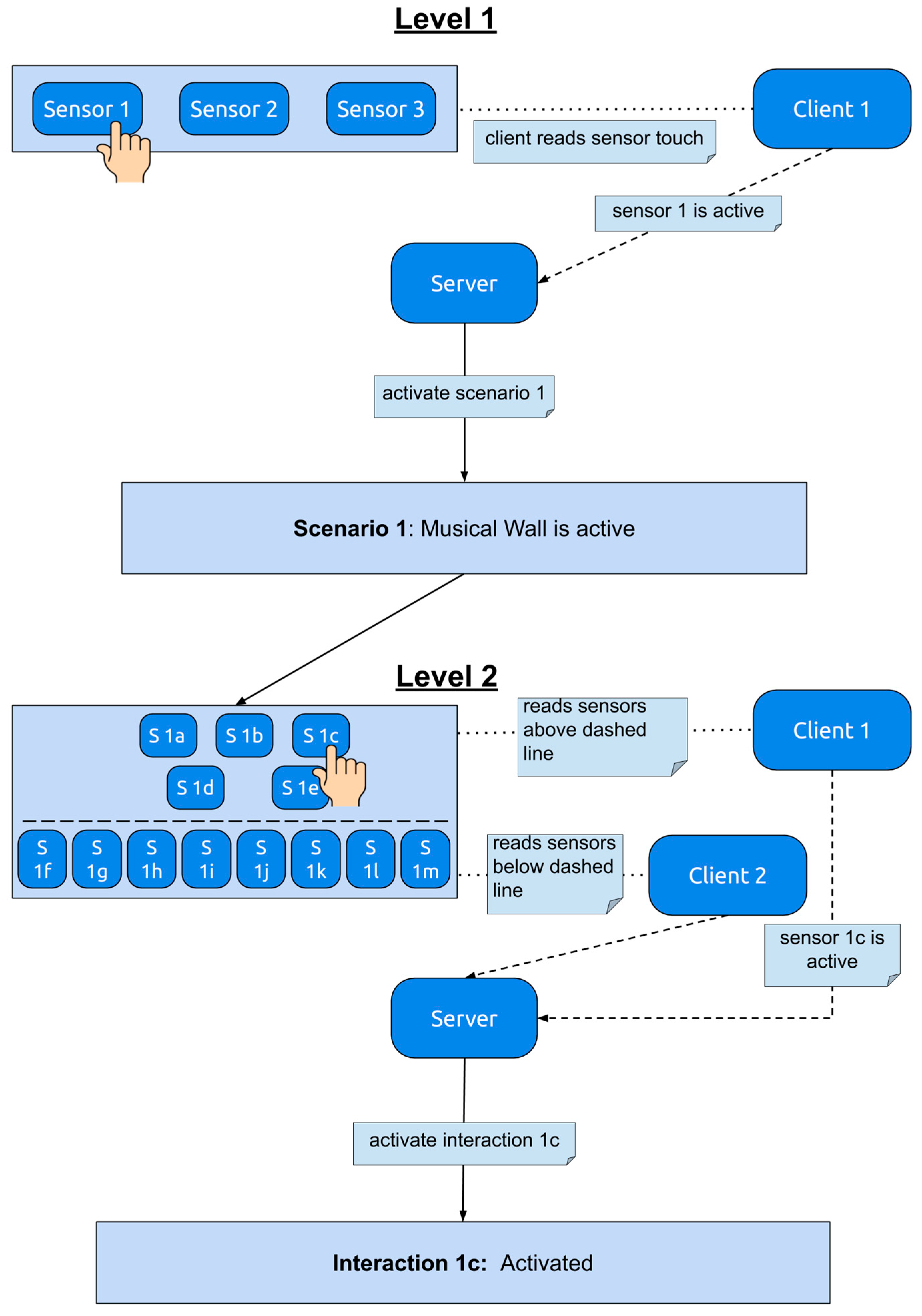

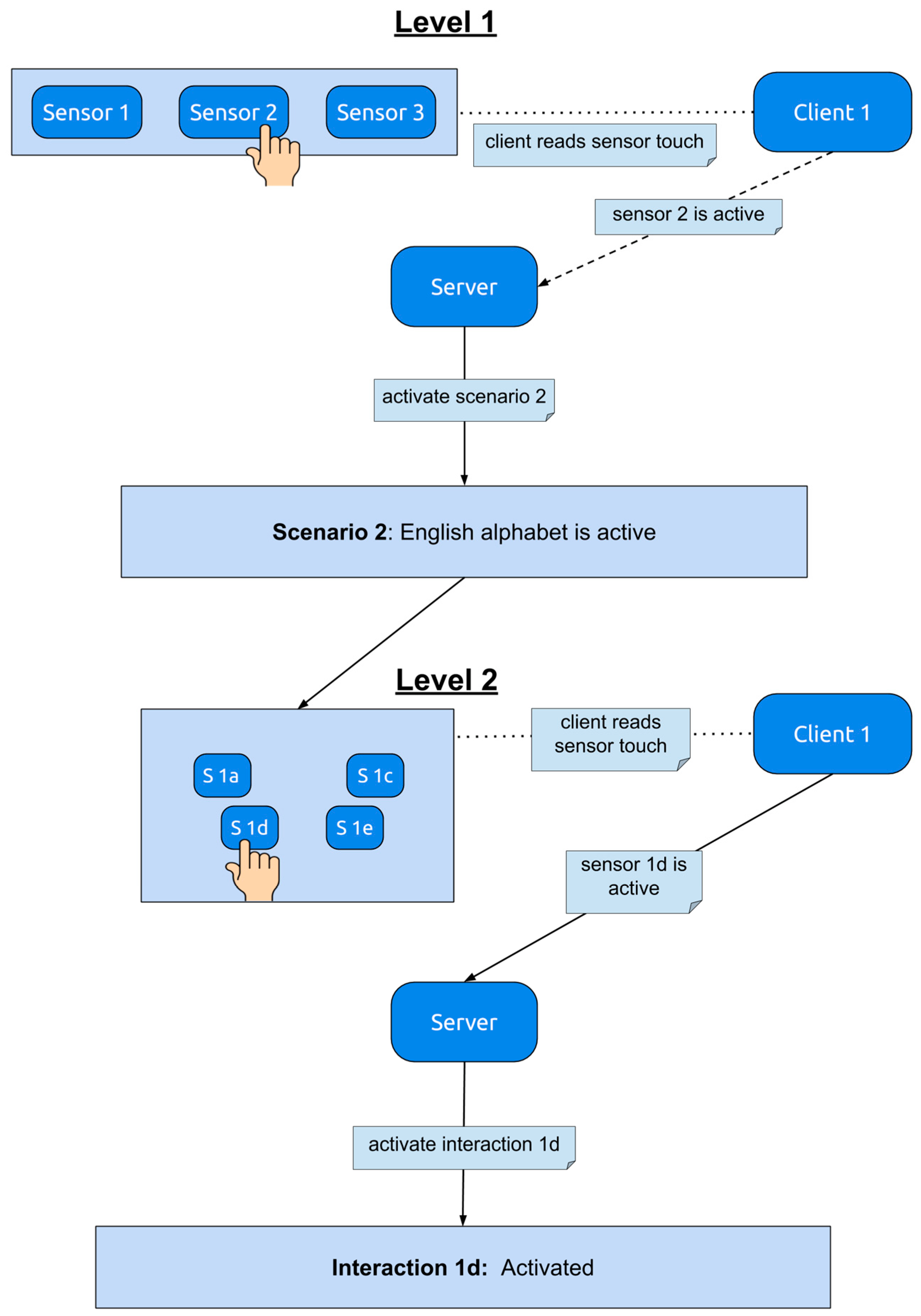

3.2.1. High-Level Architecture and Requirement Analysis

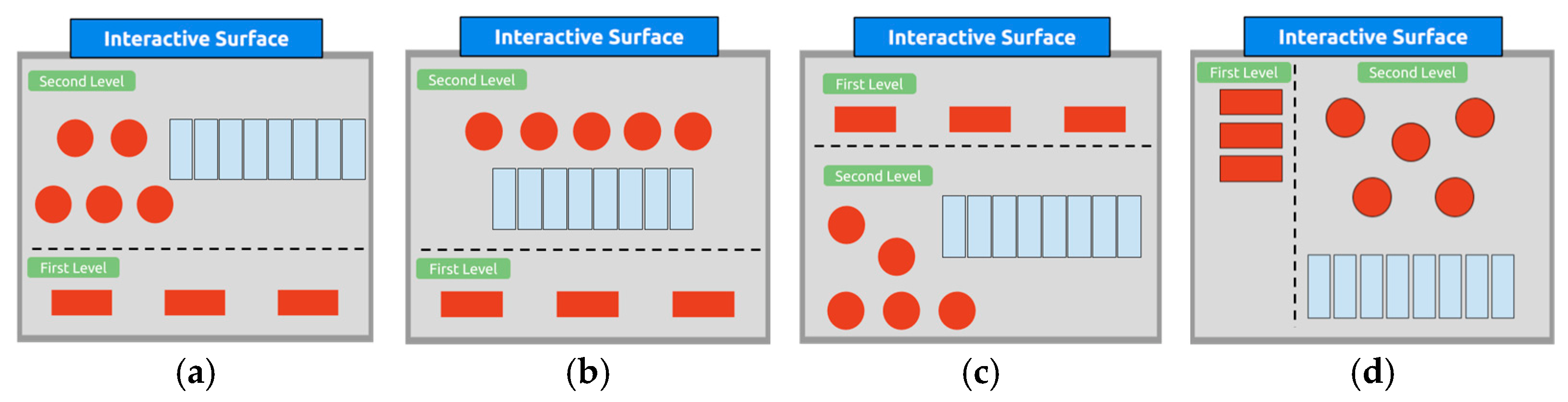

3.2.2. Layout and Layered Design of the Tangible Surface

3.2.3. Crafting of the Tangible Surface

3.3. Programming of the System

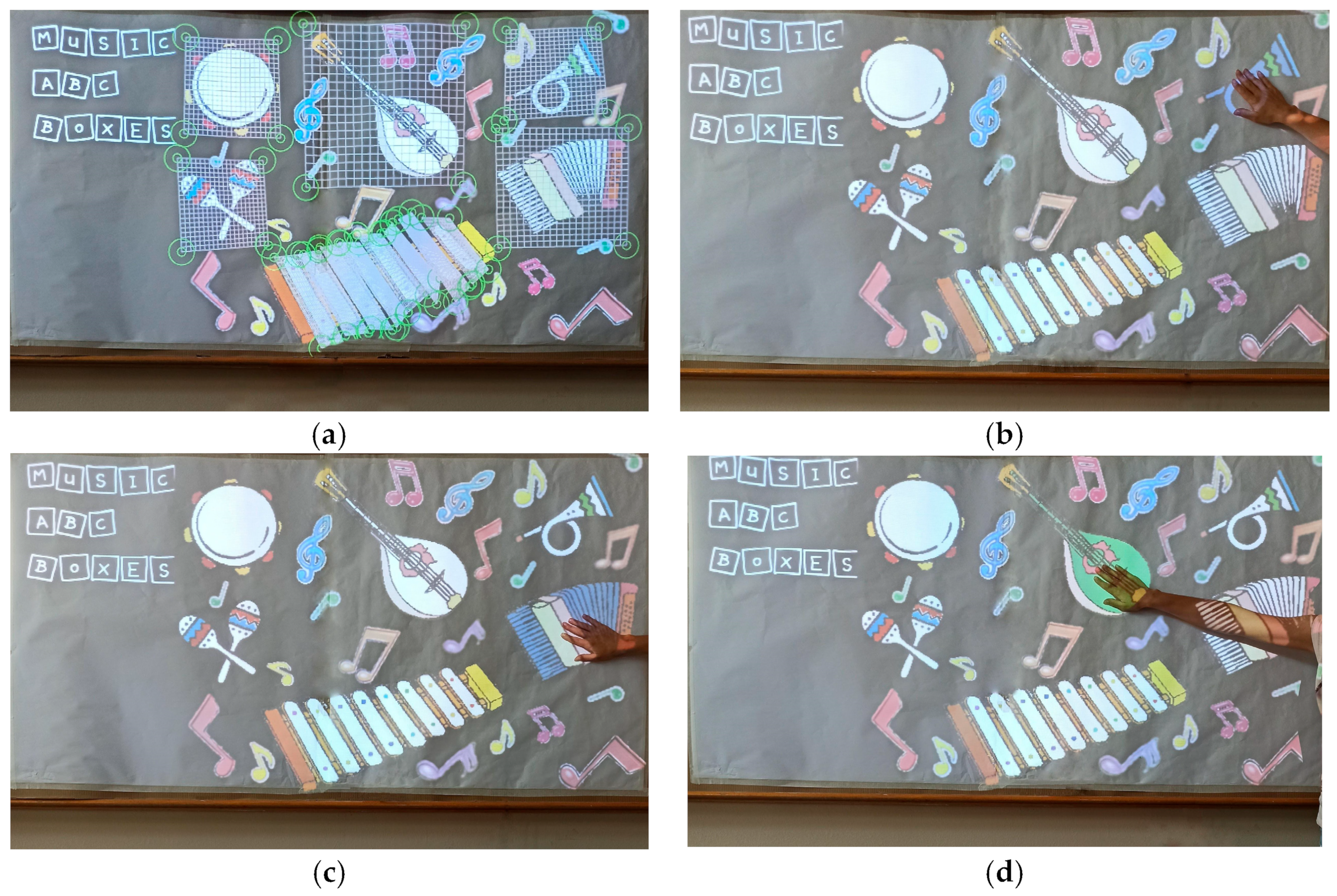

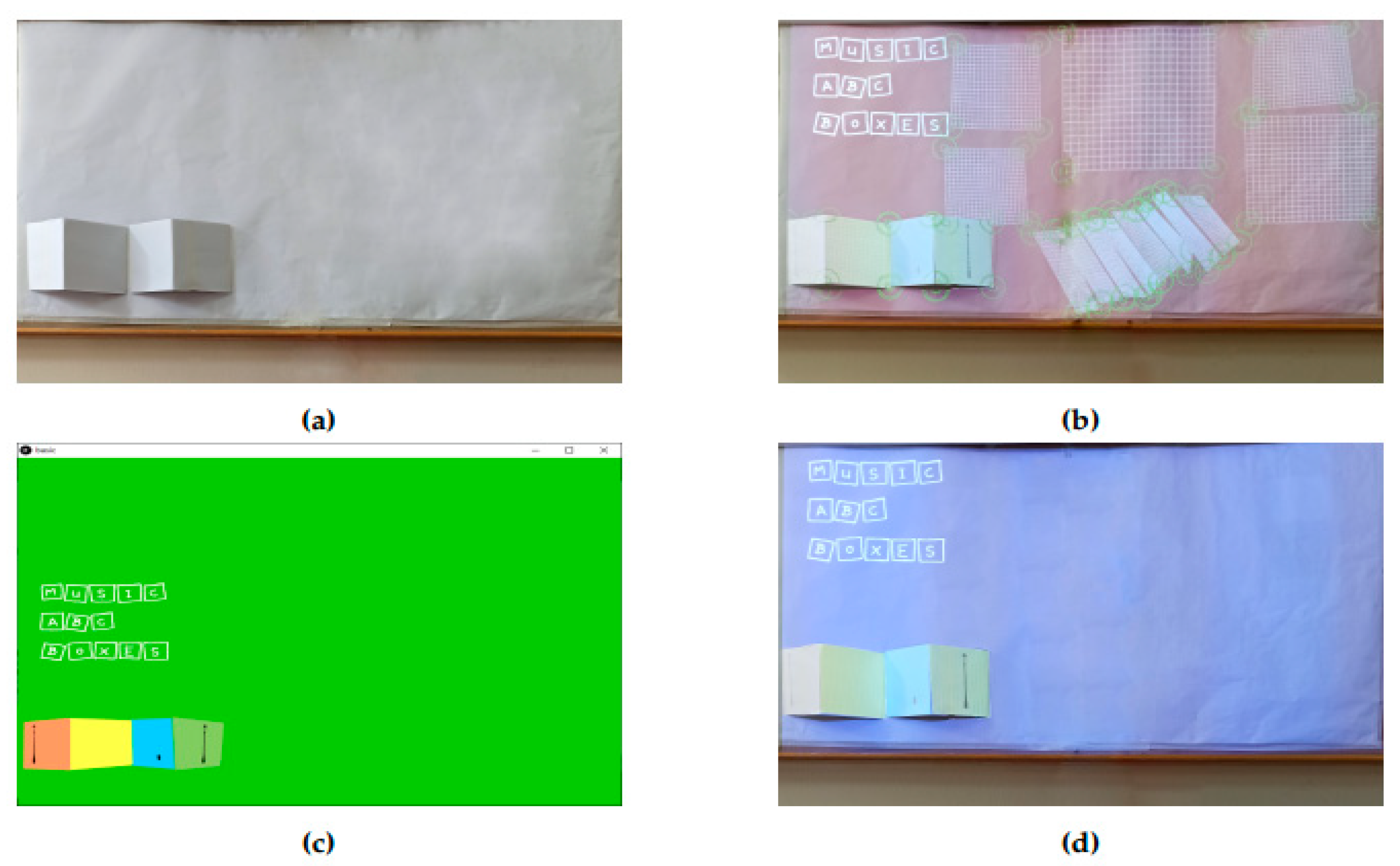

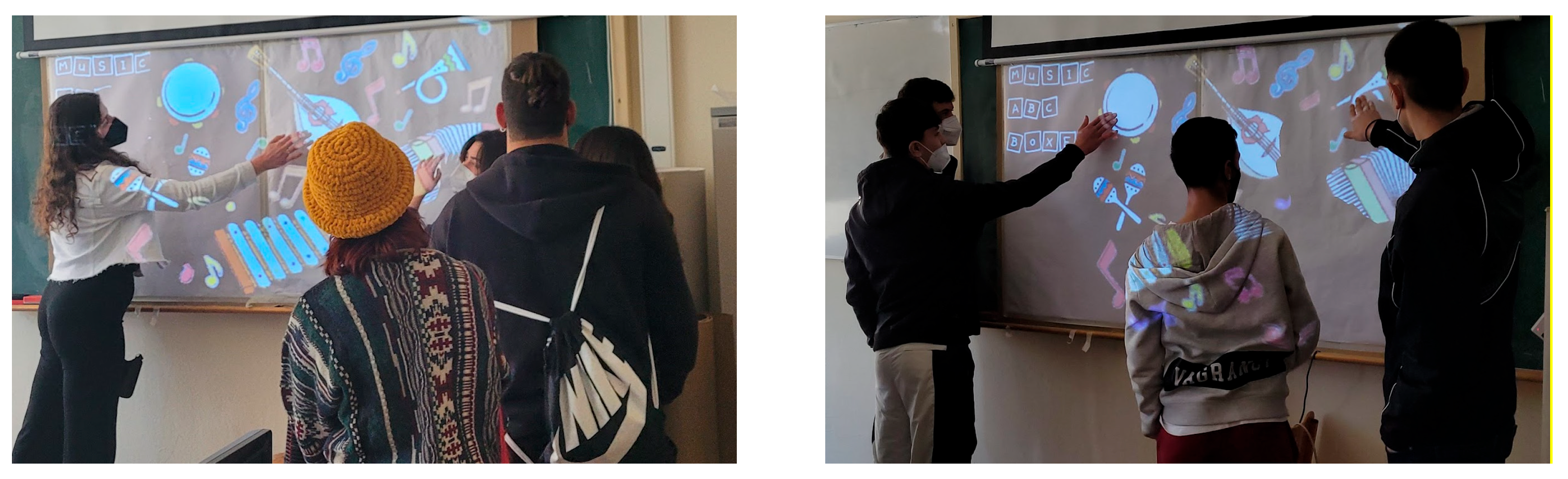

- Scenario 1: Music Wall with six different music instruments

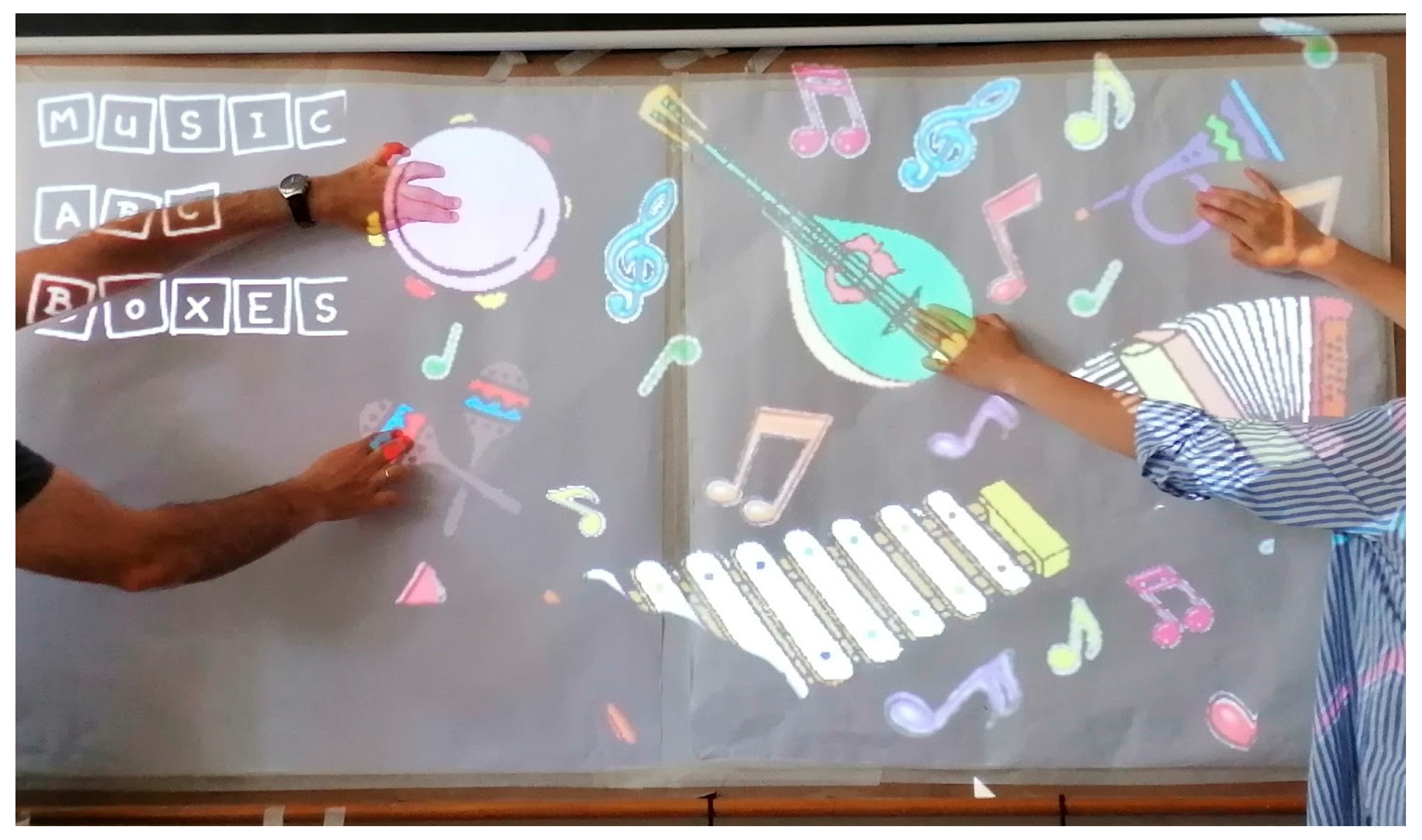

- Scenario 2: English Alphabet Wall

- Scenario 3: Non-Interactive animation based on projection mapping

4. Results

4.1. Use Cases

4.2. Evaluation

4.2.1. Touching the Sensors with One or More Fingers

4.2.2. Touching the Sensors at Different Frequencies

4.2.3. Touching the Sensors with Lighter Pressure

4.2.4. Simultaneous Use of the Touch Sensors

4.2.5. Comparison of Our Touch Sensors with a Commercial Touch Sensor

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Line Type | Measurement | Graphite | Aluminum Tape | Conductive Paint |
|---|---|---|---|---|
| Thin Line | R1 | 178 kOhms | 3.2 Ohms | 0.65 kOhms |
| Thin Line | R2 | 321 kOhms | 3.3 Ohms | 1.39 kOhms |
| Wide Line | R1 | 150 kOhms | 3 Ohms | 0.5 kOhms |
| Wide Line | R2 | 306 kOhms | 3.1 Ohms | 1.1 kOhms |
| Light Line | R1 | 5.32 kOhms | - | - |
| Light Line | R2 | 12.3 kOhms | - | - |

| BPM | 50 | 80 | 100 | 120 | 150 |
|---|---|---|---|---|---|
| Success Rate | 19/20 | 20/20 | 18/20 | 19/20 | 19/20 |
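The fractions in the table above can be converted to percentages for easier comparison with the other evaluation results. The following is a minimal sketch, not part of the original study: it assumes that each entry is the number of detected touches out of 20 attempts at the given tapping rate (BPM), and the names `results`, `success_percentages`, `pooled_rate` and `touch_interval_ms` are hypothetical.

```python
# Values copied from the BPM table. Reading each entry as
# (detected touches, attempted touches) is an assumption.
results = {
    50: (19, 20),
    80: (20, 20),
    100: (18, 20),
    120: (19, 20),
    150: (19, 20),
}


def success_percentages(data):
    """Return the success rate in percent for each tapping rate."""
    return {bpm: 100 * hits / total for bpm, (hits, total) in data.items()}


def pooled_rate(data):
    """Return the success rate in percent over all attempts combined."""
    hits = sum(h for h, _ in data.values())
    total = sum(t for _, t in data.values())
    return 100 * hits / total


def touch_interval_ms(bpm):
    """Return the time between consecutive touches, in milliseconds."""
    return 60_000 / bpm


if __name__ == "__main__":
    for bpm, pct in success_percentages(results).items():
        print(f"{bpm} BPM: {pct:.0f}% (one touch every {touch_interval_ms(bpm):.0f} ms)")
    print(f"Pooled: {pooled_rate(results):.0f}%")
```

Under this reading, the per-rate figures range from 90% to 100%, and the fastest rate of 150 BPM corresponds to one touch every 400 ms.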

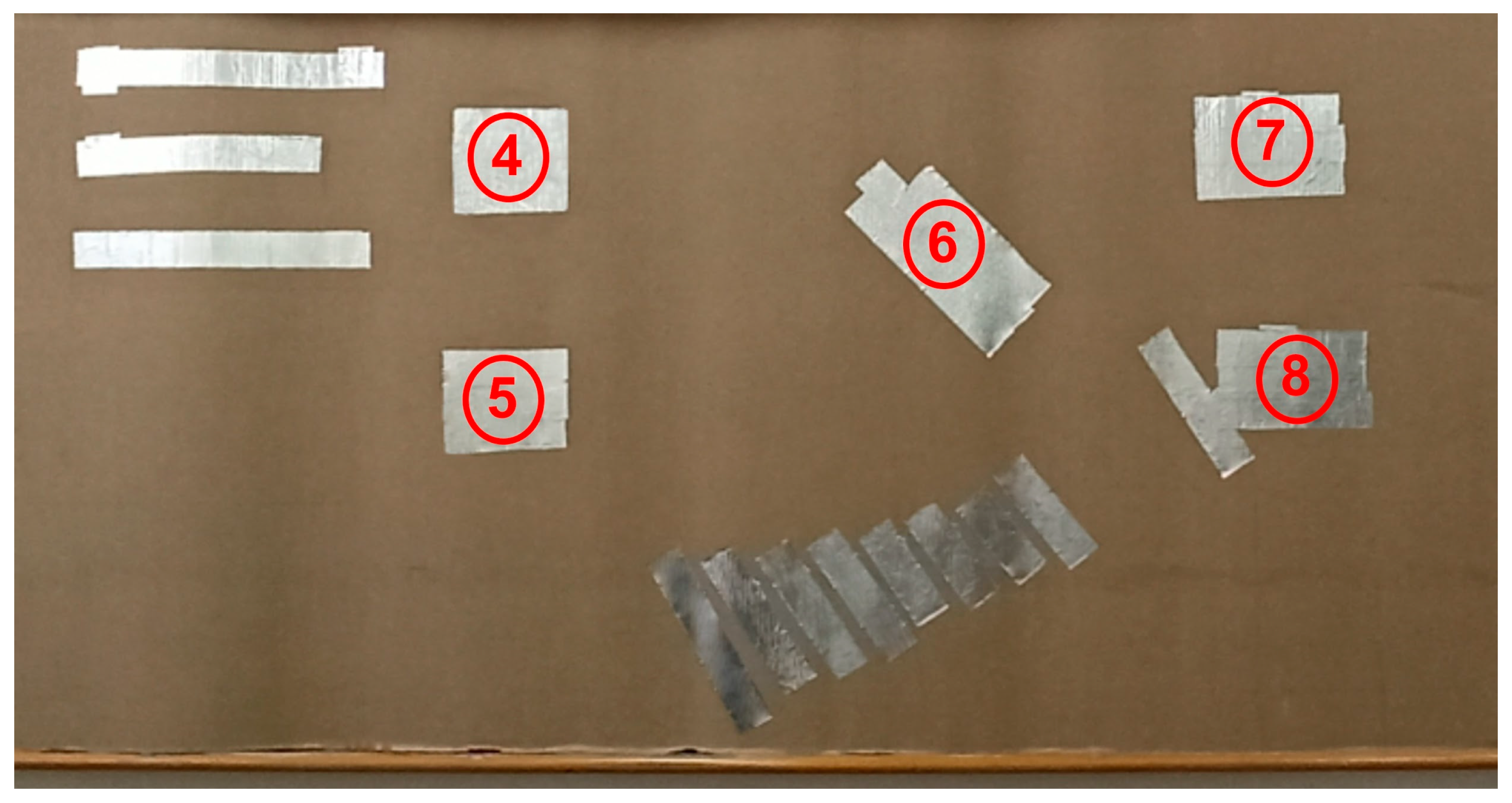

| Touch Sensor | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|
| Success Rate | 86% | 83% | 86% | 84% | 80% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tsironi Lamari, A.; Panagiotakis, S.; Kamarianakis, Z.; Loukas, G.; Malamos, A.; Markakis, E. Construction of a Low-Cost Layered Interactive Dashboard with Capacitive Sensing. Information 2022, 13, 304. https://doi.org/10.3390/info13060304
