Multilingual Offline Signature Verification Based on Improved Inverse Discriminator Network

Abstract

1. Introduction

- We build a multilingual offline handwritten signature database, segment the signatures using PS, and apply preprocessing operations.

- For the IDN model, a channel attention mechanism and an improved spatial attention mechanism are used to further improve handwritten signature verification.

- The proposed method effectively improves the handwritten signature verification rate for single and mixed languages, and paves the way for verifying signatures in other languages such as Kazakh and Kirgiz.

2. Related Work

3. Multilingual Signature Verification

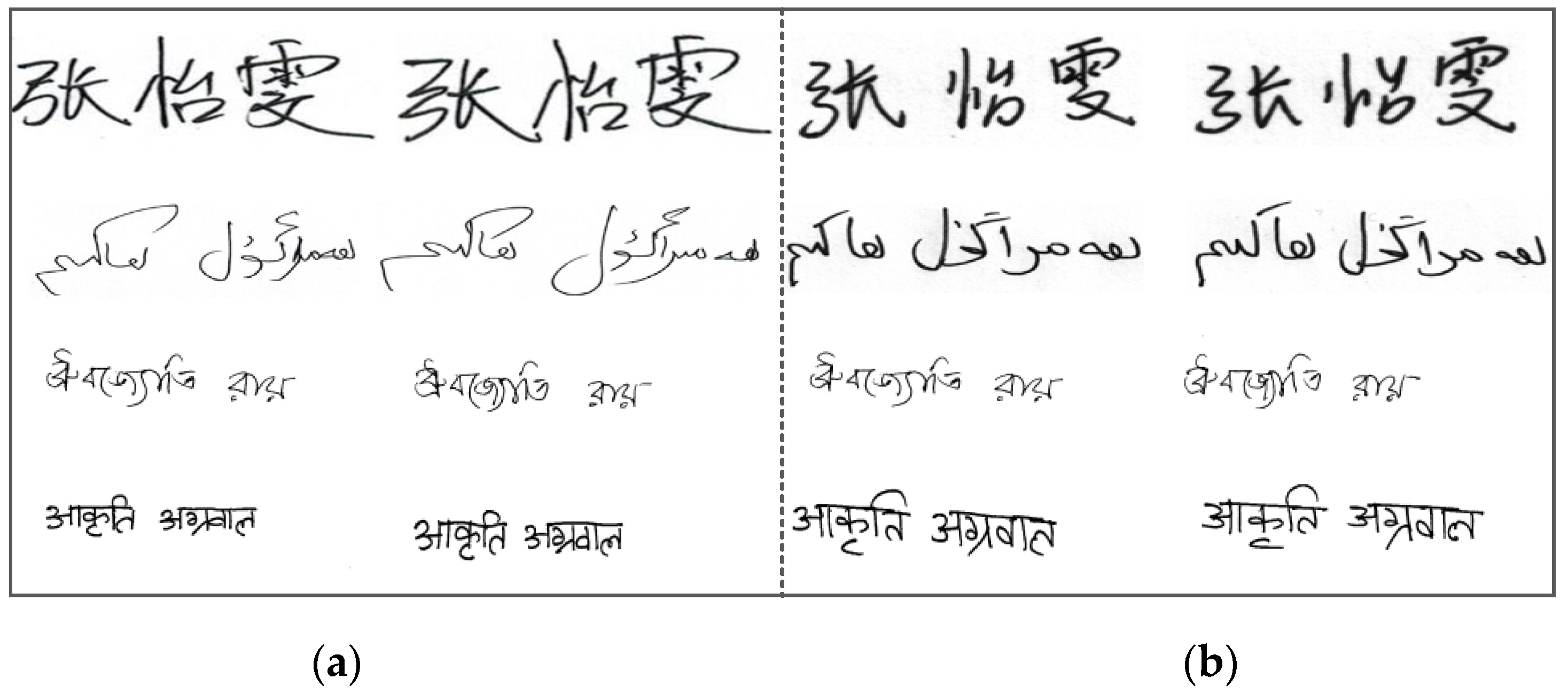

3.1. Signature Datasets

3.2. Preprocessing

4. Proposed Method

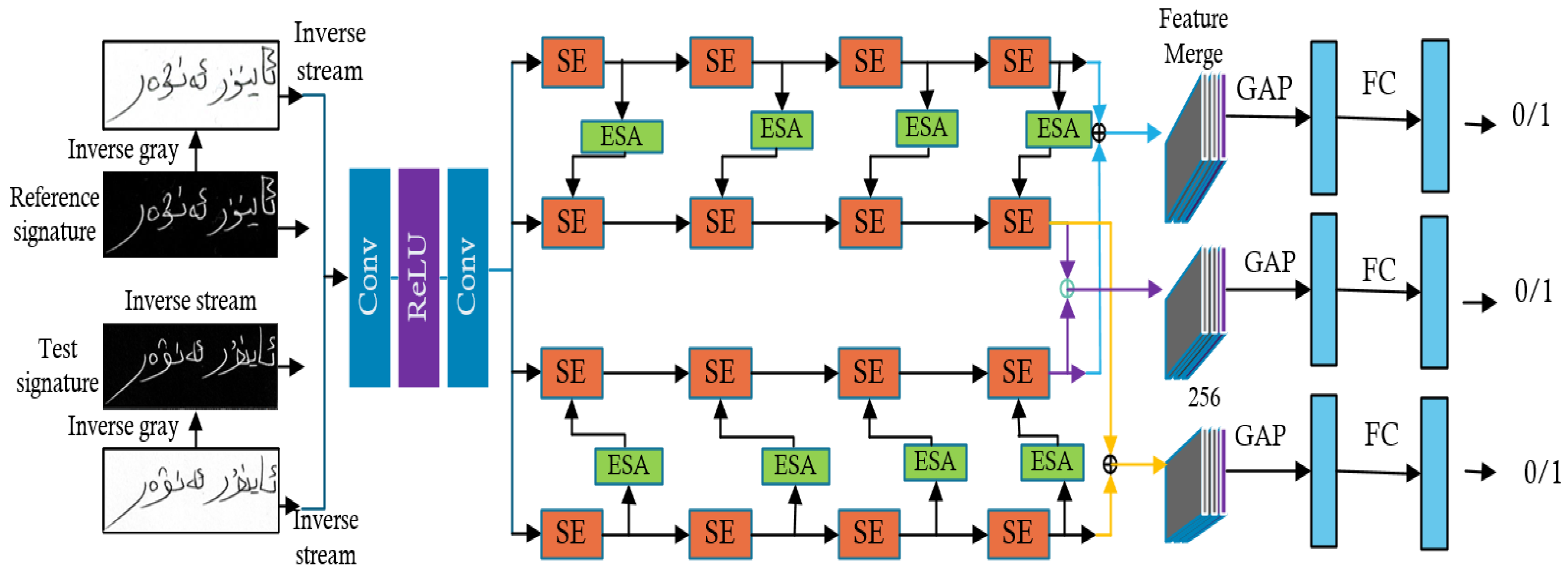

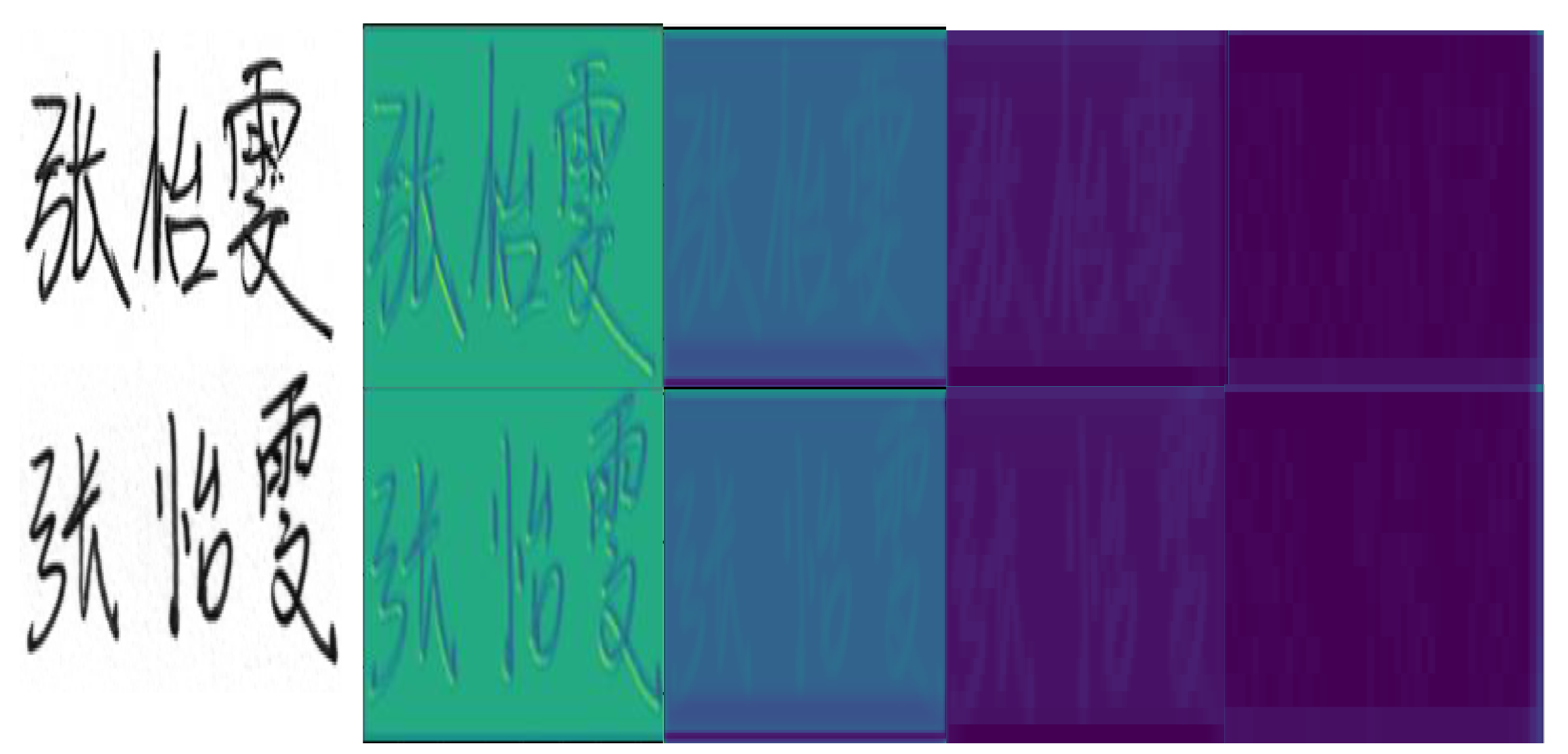

4.1. Inverse Discriminative Networks

4.2. Method Structure

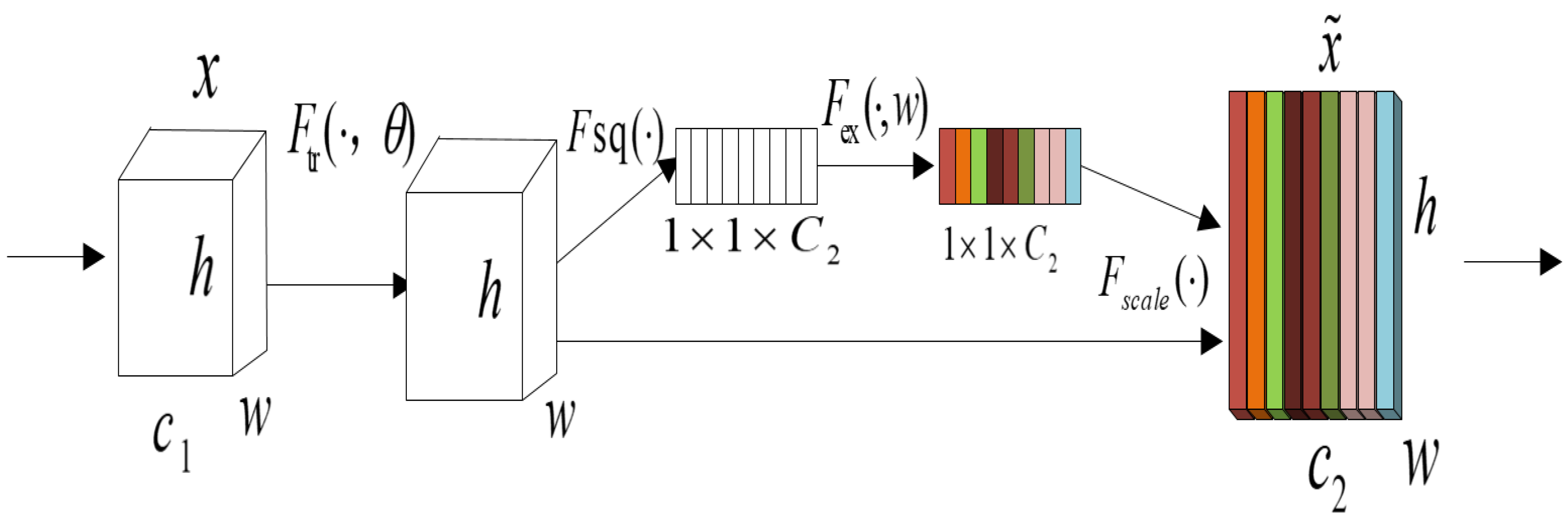

4.3. Channel Attention Mechanism

- Squeeze: compress the H × W × C feature map to 1 × 1 × C.

- Excitation: fully connected (FC) layers learn the importance of each feature channel and assign each channel a weight according to its importance.

- Reweight: recalibrate the feature channels of the original input with the learned weights.
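The three operations can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes the squeeze step is global average pooling and the excitation step is a two-layer FC bottleneck (ReLU, then sigmoid), as in the standard squeeze-and-excitation design. The function name `se_block`, the reduction ratio `r`, and the random weights are hypothetical and serve only to make the example runnable.

```python
import numpy as np

def se_block(x, w1, b1, w2, b2):
    """Apply squeeze-and-excitation to a feature map x of shape (H, W, C)."""
    # Squeeze: global average pooling (assumed) collapses H x W x C to a length-C vector.
    z = x.mean(axis=(0, 1))
    # Excitation: two FC layers (ReLU, then sigmoid) give one weight in (0, 1) per channel.
    hidden = np.maximum(0.0, z @ w1 + b1)
    s = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))
    # Reweight: scale every channel of the original input by its weight.
    return x * s, s

rng = np.random.default_rng(0)
H, W, C, r = 4, 6, 8, 2                      # r: hypothetical reduction ratio
x = rng.standard_normal((H, W, C))           # stand-in for a convolutional feature map
w1 = rng.standard_normal((C, C // r)) * 0.1  # random weights, for illustration only
b1 = np.zeros(C // r)
w2 = rng.standard_normal((C // r, C)) * 0.1
b2 = np.zeros(C)
y, s = se_block(x, w1, b1, w2, b2)
```

The output `y` has the same shape as the input, so a block of this kind can be inserted after a convolutional stage without changing the rest of the network.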

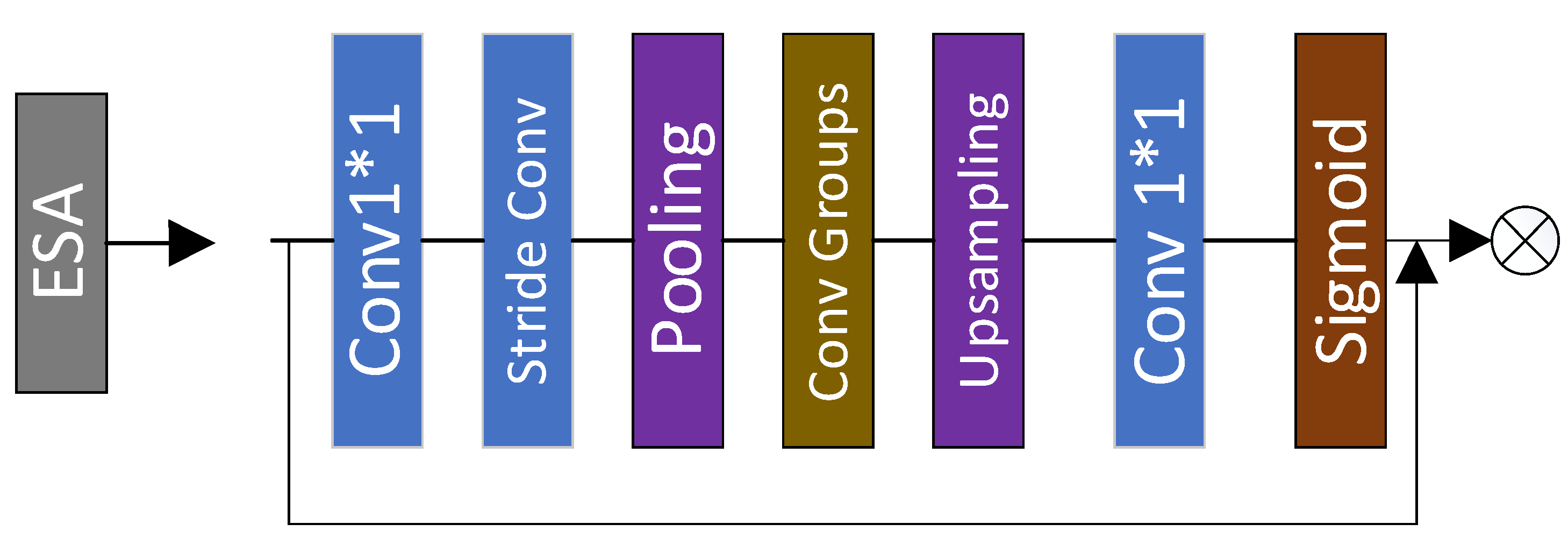

4.4. Enhanced Spatial Attention

4.5. Loss Function

5. Experimental Results

5.1. Evaluation Criteria
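The result tables in this paper report FRR, FAR, and ACC. As a hedged illustration, the sketch below computes them under their usual definitions: FRR is the share of genuine signatures that are rejected, FAR is the share of forgeries that are accepted, and ACC is the share of correct decisions. The label convention (0 = genuine, 1 = forged) follows step 13 of Algorithm 1. The function name and the sample labels are hypothetical.

```python
def verification_metrics(y_true, y_pred):
    """Return (FRR, FAR, ACC) in percent. Labels: 0 = genuine, 1 = forged."""
    pairs = list(zip(y_true, y_pred))
    genuine = [p for t, p in pairs if t == 0]   # predictions for genuine signatures
    forged = [p for t, p in pairs if t == 1]    # predictions for forged signatures
    if not genuine or not forged:
        raise ValueError("need at least one genuine and one forged sample")
    frr = 100.0 * sum(p == 1 for p in genuine) / len(genuine)  # genuine rejected
    far = 100.0 * sum(p == 0 for p in forged) / len(forged)    # forgeries accepted
    acc = 100.0 * sum(t == p for t, p in pairs) / len(pairs)   # correct decisions
    return frr, far, acc

# Made-up labels for illustration only.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 0, 0]
frr, far, acc = verification_metrics(y_true, y_pred)
print(frr, far, acc)  # 25.0 50.0 62.5
```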

5.2. Algorithm Implementation Process of Improved IDN Network

| Algorithm 1. The improved inverse discriminator network |

| 1: Input: the reference and test signature images, matrix size [384, 96]. |

| 2: Initialization: invert the gray levels of the reference and test signature images to obtain the inverse-gray reference signature and the inverse-gray test signature. |

| 3: Feature extraction: Conv + ReLU + Conv. Extract features from the four input signature images to obtain Ts, Tt, IGRt, and IGTt of size [115, 220]. |

| 4: SE: Apply the SE module to the features of each of the four input streams. |

| 5: ESA: Connect the inverse-stream features with the discriminative-stream features. |

| 6: for i = 1, 2, 3, …, T do |

| 7: Cat: Concatenate the features of the two streams. |

| 8: GAP: Apply global average pooling to the concatenated features to reduce the feature size. |

| 9: FC: Map the multi-dimensional features to a one-dimensional vector representation. |

| 10: Classifier: Output the class with the highest probability. |

| 11: end for |

| 12: Evaluation: Average the three classification results and output the final discrimination result. |

| 13: Output: 0 means the test signature is genuine; 1 means the test signature is forged. |
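Steps 2 and 7 to 13 of Algorithm 1 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: images are assumed to be 8-bit grayscale, the GAP step is read as global average pooling, the classifier is reduced to a single sigmoid unit, and the final decision thresholds the averaged probability at 0.5. All function names, the random features, the weights, and the choice of stream pairs are hypothetical.

```python
import numpy as np

def inverse_gray(img):
    """Step 2: invert an 8-bit grayscale image (assumed uint8, range 0-255)."""
    return 255 - img

def classify_stream_pair(feat_a, feat_b, w, b):
    """Steps 7-10 for one pair of feature maps of shape (H, W, C)."""
    merged = np.concatenate([feat_a, feat_b], axis=-1)  # Cat: join along channels
    pooled = merged.mean(axis=(0, 1))                   # GAP: average pooling assumed
    logit = pooled @ w + b                              # FC: map to a single value
    return 1.0 / (1.0 + np.exp(-logit))                 # probability of "forged"

def final_decision(probs, threshold=0.5):
    """Steps 12-13: average the results; 0 = genuine, 1 = forged."""
    return int(np.mean(probs) >= threshold)

rng = np.random.default_rng(0)
ref = rng.integers(0, 256, size=(8, 16), dtype=np.uint8)  # toy reference image
inv_ref = inverse_gray(ref)

# Random stand-ins for the four streams' features after the SE and ESA steps.
feats = [rng.standard_normal((4, 4, 3)) for _ in range(4)]
w = rng.standard_normal(6) * 0.1  # 6 = channels of two concatenated 3-channel maps
b = 0.0
# Hypothetical choice of three stream pairs, matching the three results in step 12.
pairs = [(feats[0], feats[1]), (feats[0], feats[3]), (feats[2], feats[1])]
probs = [classify_stream_pair(a, c, w, b) for a, c in pairs]
decision = final_decision(probs)
```

Averaging probabilities before thresholding is one possible reading of step 12; a majority vote over the three per-pair decisions would be an equally plausible alternative.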

5.3. Chinese and Uyghur Data Set

5.4. BHSig260 Open Data Set

5.5. Experimental Results of Mixing Two Languages

5.6. Comparison of Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Eltrabelsi, J.; Lawgali, A. Offline Handwritten Signature Recognition based on Discrete Cosine Transform and Artificial Neural Network. In Proceedings of the 7th International Conference on Engineering & MIS 2021, Almaty, Kazakhstan, 11–13 October 2021; pp. 1–4.

2. Hamadene, A.; Chibani, Y. One-Class Writer-Independent Offline Signature Verification Using Feature Dissimilarity Thresholding. IEEE Trans. Inf. Forensics Secur. 2017, 11, 1.

3. Souza, V.L.F.; Oliveira, A.L.I.; Cruz, R.M.O. An Investigation of Feature Selection and Transfer Learning for Writer-Independent Offline Handwritten Signature Verification. In Proceedings of the 2020 25th IEEE International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7478–7485.

4. Abdul-Haleem, M.G. Offline Handwritten Signature Verification based on Local Ridges Features and Haar Wavelet Transform. Iraqi J. Sci. 2022, 63, 855–865.

5. Batool, F.E.; Attique, M.; Sharif, M.; Javed, K.; Nazir, M.; Abbasi, A.A.; Iqbal, Z.; Riaz, N. Offline Signature Verification System: A Novel Technique of Fusion of GLCM and Geometric Features using SVM. Multimed. Tools Appl. 2020, 1–20.

6. Ajij, M.; Pratihar, S.; Nayak, S.R.; Hanne, T.; Roy, D.S. Off-line Signature Verification using Elementary Combinations of Directional Codes from Boundary Pixels. Neural Comput. Appl. 2021, 1–18.

7. Kumar, A.; Bhatia, K. Multiple Classifier System for Writer Independent Offline Handwritten Signature Verification using Hybrid Features. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2018, 10, 252–260.

8. Arab, N.; Nemmour, H.; Chibani, Y. New Local Difference Feature for Off-Line Handwritten Signature Verification. In Proceedings of the 2019 IEEE International Conference on Advanced Electrical Engineering (ICAEE), Algiers, Algeria, 19–21 November 2019; pp. 1–5.

9. Liu, L.; Huang, L.; Yin, F. Offline Signature Verification using a Region-based Deep Metric Learning Network. Pattern Recognit. 2021, 118, 108009.

10. Natarajan, A.; Babu, B.S.; Gao, X.Z. Signature Warping and Greedy Approach based Offline Signature Verification. Int. J. Inf. Technol. 2021, 13, 1279–1290.

11. Gyimah, K.; Appati, J.K.; Darkwah, K.; Ansah, K. An Improved Geo-Textural based Feature Extraction Vector for Offline Signature Verification. J. Adv. Math. Comput. Sci. 2019, 32, 1–14.

12. Lokarkare, C.; Patil, R.; Rane, S. Offline Handwritten Signature Verification using Various Machine Learning Algorithms. In Proceedings of the ITM Web of Conferences, Kochi, India, 21–23 October 2021; EDP Sciences: Paris, France, 2021; Volume 40, p. 03010.

13. Masoudnia, S.; Mersa, O.; Araabi, B.N. Multi-Representational Learning for Offline Signature Verification using Multi-Loss Snapshot Ensemble of CNNs. Expert Syst. Appl. 2019, 133, 317–330.

14. Zhang, S.J.; Aysa, Y.; Ubul, K. BOVW based Feature Selection for Uyghur Offline Signature Verification. In Proceedings of the Chinese Conference on Biometric Recognition, Urumqi, China, 11–12 August 2018; Springer: Cham, Switzerland, 2018; pp. 700–708.

15. Gupta, Y.; Kulkarni, S.; Jain, P. Handwritten Signature Verification Using Transfer Learning and Data Augmentation. In Proceedings of the International Conference on Intelligent Cyber-Physical Systems, Coimbatore, India, 11–12 August 2022; Springer: Singapore, 2022; pp. 233–245.

16. Wei, P.; Li, H.; Hu, P. Inverse Discriminative Networks for Handwritten Signature Verification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 5764–5772.

17. Chen, J.; Zhang, D.; Suzauddola, M. Identification of Plant Disease Images via a Squeeze-and-Excitation MobileNet Model and Twice Transfer Learning. IET Image Process. 2021, 15, 1115–1127.

18. Hagström, A.L.; Stanikzai, R.; Bigun, J. Writer Recognition using Off-line Handwritten Single Block Characters. arXiv 2022, arXiv:2201.10665.

19. Kancharla, K.; Kamble, V.; Kapoor, M. Handwritten Signature Recognition: A Convolutional Neural Network Approach. In Proceedings of the 2018 International Conference on Advanced Computation and Telecommunication (ICAC), Bhopal, India, 28–29 September 2018; pp. 1–5.

20. Liu, J.; Zhang, W.; Tang, Y. Residual Feature Aggregation Network for Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2359–2368.

21. Wencheng, C.; Xiaopeng, G.; Hong, S. Offline Chinese Signature Verification based on AlexNet. In Proceedings of the International Conference on Advanced Hybrid Information Processing, Harbin, China, 17–18 July 2017; Springer: Cham, Switzerland, 2017; pp. 33–37.

22. Bonde, S.V.; Narwade, P.; Sawant, R. Offline Signature Verification using Convolutional Neural Network. In Proceedings of the 2020 6th IEEE International Conference on Signal Processing and Communication (ICSC), Noida, India, 5–7 March 2020; pp. 119–127.

23. Aini, Z.; Yadikar, N.; Ubul, K. Uyghur Signature Authentication based on Feature Weighted Fusion of Gray Level Co-occurrence Matrix. Comput. Eng. Des. 2018, 39, 1195–1201.

24. Ghaniheni, Z.; Mahpirat, N.Y.; Ubull, K. Uyghur Off-Line Signature Verification based on the Directional Features. J. Image Signal Process. 2017, 06, 121–129.

25. Dey, S.; Dutta, A.; Toledo, J.I. Signet: Convolutional Siamese Network for Writer Independent Offline Signature Verification. arXiv 2017, arXiv:1707.02131.

26. Dutta, A.; Pal, U.; Lladós, J. Compact Correlated Features for Writer Independent Signature Verification. In Proceedings of the 2016 23rd IEEE International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 3422–3427.

27. Chattopadhyay, S.; Manna, S.; Bhattacharya, S. SURDS: Self-Supervised Attention-guided Reconstruction and Dual Triplet Loss for Writer Independent Offline Signature Verification. arXiv 2022, arXiv:2201.10138.

28. Manna, S.; Chattopadhyay, S.; Bhattacharya, S.; Pal, U. SWIS: Self-Supervised Representation Learning for Writer Independent Offline Signature Verification. arXiv 2022, arXiv:2202.13078.

| Authors | Data (Persons) | Method | Type | FRR% | FAR% | ACC% |
|---|---|---|---|---|---|---|
| Liu, L. [9] | Chinese (1243) | SigNet | WI | - | - | 90.09 |
| Liu, J. [20] | Chinese (2880) | CNN-OSV | WD | 11.52 | 10.51 | 82.75 |
| Masoudnia, S. [13] | Chinese (249) | AlexNet | SVM | 7.50 | 5.00 | 87.50 |
| Wei, P. [16] | Chinese (749) | IDN | WI | 5.47 | 11.52 | 90.17 |
| Ours | Chinese (100) | LTP | SVM | 9.38 | 9.00 | 90.81 |
| Ours | Chinese (100) | IDN | WI | 8.67 | 6.50 | 92.18 |
| Ours | Chinese (100) | IDN + SE | WI | 5.93 | 7.04 | 93.49 |
| Ours | Chinese (100) | IDN + ESA | WI | 9.51 | 5.51 | 92.40 |
| Ours | Chinese (100) | Our method | WI | 5.2 | 4.46 | 95.17 |

| Authors | Data (Persons) | Method | Type | FRR% | FAR% | ACC% |
|---|---|---|---|---|---|---|
| Aini, Z. [23] | Uyghur (30) | GLCM | BP | 5.30 | 7.00 | 91.06 |
| Ghaniheni, Z. [24] | Uyghur (30) | Directional feature | KNN | 9.09 | 5.75 | 92.58 |
| Ours | Uyghur (100) | LTP | SVM | 18.29 | 12.33 | 84.37 |
| Ours | Uyghur (100) | IDN | WI | 6.05 | 8.05 | 92.96 |
| Ours | Uyghur (100) | IDN + SE | WI | 6.84 | 6.50 | 93.56 |
| Ours | Uyghur (100) | IDN + ESA | WI | 6.10 | 9.21 | 92.35 |
| Ours | Uyghur (100) | Our method | WI | 7.47 | 3.89 | 94.32 |

| Authors | Dataset | Method | Type | FRR% | FAR% | ACC% |
|---|---|---|---|---|---|---|
| Dey, S. [25] | BHsig-B | SigNet | WI | 13.89 | 13.89 | 86.11 |
| Dey, S. [25] | BHsig-H | SigNet | WI | 15.36 | 15.36 | 84.64 |
| Dutta, A. [26] | BHsig-B | LBP, ULBP | WD | - | - | 66.18 |
| Dutta, A. [26] | BHsig-H | LBP, ULBP | WD | - | - | 75.53 |
| Ours | BHsig-B | IDN | WI | 5.42 | 4.12 | 95.32 |
| Ours | BHsig-H | IDN | WI | 4.93 | 8.99 | 93.04 |
| Ours | BHsig-B | IDN + SE | WI | 3.67 | 1.61 | 96.71 |
| Ours | BHsig-H | IDN + SE | WI | 11.87 | 2.44 | 95.77 |
| Ours | BHsig-B | IDN + ESA | WI | 3.79 | 11.78 | 96.70 |
| Ours | BHsig-H | IDN + ESA | WI | 2.72 | 4.68 | 95.68 |
| Ours | BHsig-B | Our method | WI | 3.14 | 1.50 | 97.17 |
| Ours | BHsig-H | Our method | WI | 6.65 | 2.31 | 96.86 |

| Dataset | Method | Type | FRR% | FAR% | ACC% |
|---|---|---|---|---|---|
| Uyghur + Chinese | IDN | WI | 9.16 | 7.87 | 91.87 |
| Chinese + BHsig-B | IDN | WI | 15.36 | 15.36 | 84.64 |
| Chinese + BHsig-H | IDN + SE | WI | 7.10 | 14.46 | 89.22 |
| Uyghur + BHsig-B | IDN + SE | WI | 18.55 | 21.15 | 88.49 |
| Uyghur + Chinese | IDN + SE | WI | 5.08 | 4.47 | 95.40 |
| Chinese + BHsig-H | IDN + ESA | WI | 15.78 | 31.5 | 83.45 |
| Uyghur + Chinese | IDN + ESA | WI | 7.92 | 8.28 | 91.89 |
| Uyghur + BHsig-H | IDN + ESA | WI | 2.29 | 28.73 | 84.48 |
| Uyghur + Chinese | Our method | WI | 10.5 | 2.06 | 96.33 |
| Chinese + BHsig-B | Our method | WI | 7.30 | 8.07 | 92.33 |
| Chinese + BHsig-H | Our method | WI | 3.56 | 10.15 | 93.19 |
| Uyghur + BHsig-B | Our method | WI | 3.21 | 8.78 | 94.00 |
| Uyghur + BHsig-H | Our method | WI | 7.47 | 12.36 | 90.07 |

| Authors | Method | Type | Database | FRR% | FAR% | ACC% |
|---|---|---|---|---|---|---|
| Chattopadhyay, S. [27] | ResNet + 2D attention | WI | BHsig-H | 8.98 | 12.01 | 89.50 |
| Ours | Our method | WI | BHsig-H | 3.75 | 2.57 | 97.20 |
| Manna, S. [28] | Self-supervised learning (SSL) | WI | Chinese | 58.30 | 27.80 | 64.68 |
| Ours | Our method | WI | Chinese | 5.2 | 4.46 | 95.17 |
| Zhang, S. [14] | BoVW | WD | Uyghur | 3.58 | 8.81 | 93.81 |
| Ours | Our method | WI | Uyghur | 7.47 | 3.89 | 94.32 |
| Hamadene, A. [2] | CT, DCCM | - | CEDAR + GPDS | 16.32 | 16.80 | - |
| Ours | Our method | WI | Chinese + Uyghur | 10.5 | 2.06 | 96.33 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xamxidin, N.; Mahpirat; Yao, Z.; Aysa, A.; Ubul, K. Multilingual Offline Signature Verification Based on Improved Inverse Discriminator Network. Information 2022, 13, 293. https://doi.org/10.3390/info13060293
