Abstract

In recent years, the exponential growth of digital documents has been met by rapid progress in text classification techniques. Newly proposed machine learning algorithms leverage the latest advancements in deep learning methods, allowing for the automatic extraction of expressive features. The swift development of these methods has led to a plethora of strategies to encode natural language into machine-interpretable data. The latest language modelling algorithms are used in conjunction with ad hoc preprocessing procedures, of which the description is often omitted in favour of a more detailed explanation of the classification step. This paper offers a concise review of recent text classification models, with emphasis on the flow of data, from raw text to output labels. We highlight the differences between earlier methods and more recent, deep learning-based methods in both their functioning and in how they transform input data. To give a better perspective on the text classification landscape, we provide an overview of datasets for the English language, as well as supplying instructions for the synthesis of two new multilabel datasets, which we found to be particularly scarce in this setting. Finally, we provide an outline of new experimental results and discuss the open research challenges posed by deep learning-based language models.

1. Introduction

Text classification (TC) is a task of fundamental importance, and it has been gaining traction thanks to recent developments in the fields of text mining and natural language processing (NLP). Text classification methods share the common goal of designating a predefined label for a given input text, though this denomination can refer to a variety of specialised methods applied to different domains.

Classic examples of TC include information retrieval, topic labelling, sentiment analysis, and news classification. However, TC has practical applications that extend beyond simple categorisation, such as extractive question answering and summarisation systems. In these cases, the intuitive notion of “label” is replaced by a choice between candidates (e.g., an answer or a sentence to include in a summary).

The speed at which textual information is currently being created has long outclassed manual solutions to these tasks, meaning that TC methods are not only useful, but also strictly necessary. Accordingly, developing accurate and unbiased TC systems is of paramount importance.

1.1. Text Classification Tasks

A variety of standard definitions for TC tasks exist in the NLP research area, often used as benchmarks to evaluate new methods. We outline the main representatives, approximately following the taxonomy proposed by Li et al. [1]:

- Sentiment analysis (SA): the task of understanding affective states and subjective information contained in a piece of text, often categorised in terms of stirred emotions;

- Topic labelling (TL): the task of recognising one or more themes for a piece of text (i.e., its topics);

- News classification (NC): the task of assigning categories to news pieces, such as topics or user interests;

- Question answering (QA): the task of selecting an answer to a question from potential candidate sentences (usually extracted from a context document). This task is usually framed as binary or multiclass classification;

- Natural language inference (NLI): the task of determining whether two sentences entail one another (classifying whether the entailment occurs in one of the two directions, or neither);

- Named entity recognition (NER): the task of locating named entities within unstructured text, labelling them with predefined categories;

- Syntactic parsing (SP): a series of tasks related to predicting morpho-syntactic properties of words, such as part-of-speech (PoS) tagging, dependency parsing and semantic role labelling.

It is worth noting that these are generic formulations, meaning that specific tasks will present themselves with slight differences as contextualized to a specific domain.

1.2. Text Representation

An important step required by any TC procedure is the projection of text features into a chosen feature space. Because text lacks structure (from a computational point of view), a number of operations must be applied to gradually transform it into a form that is digestible for a computer. Preprocessing must be mindful of the models that are intended to be used in the later stages of the classification pipeline, since there is no “silver bullet” solution.

Most notably, earlier methods rely heavily on a manual feature engineering step, which necessitates careful treatment and domain expertise. Later methods based on deep learning, on the other hand, are notably different because of the automatic extraction of features. As we will explore, preprocessing is still important for these methods, though it may be applied differently because of the assumptions that they make.

1.3. Broad Categorization of Text Classification Methods

In this work, machine learning methods are divided into the two broad categories of “shallow” and “deep” approaches, which we proceed to define.

1.3.1. Shallow Learning Approaches

Earlier methods are frequently defined as “shallow learning” approaches. However, since this definition is not particularly standardised or agreed upon, we clarify that, with this term, we refer to all those traditional or classical methods related to conventional machine learning. That is, this group encompasses all of those methods preceding neural networks whose predictions are based on hand-engineered features. In addition, this category also includes neural networks with very few (0–2) hidden layers, which are themselves referred to as “shallow” and which bridge the gap between this group of methods and their deep learning-based successors.

Shallow learning approaches are the successors of rule-based approaches, which they surpassed in both accuracy and stability. Shallow learning methods are still popular in many practical contexts, or as strong baselines. While they do not scale well to large amounts of data, they shine when resources are too scarce for deep methods to be effective. These classical approaches require a feature engineering step that may be costly, depending on the complexity of the domain. While the computational side of this cost can be significant, the domain knowledge requirements that are necessary for the correct application of appropriate feature extraction techniques may be more difficult to achieve in practice.

1.3.2. Deep Learning Approaches

The advent of deep learning models has affected all fields of artificial intelligence, including text classification. These methods have gained traction because of their ability to model complex features without the necessity of hand engineering them, removing part of the domain knowledge requirement. Instead, work has gone towards the development of neural network architectures able to extract effective representations for textual units. Recent developments have been particularly successful in this, giving birth to semantically meaningful and contextual representations. Automatic feature extraction is particularly advantageous in modelling textual data, as it is capable of leveraging the underlying linguistic structure of a document. This structure is intuitive to us if we understand the language, but is usually incomprehensible to a machine.

1.4. Major Differences and Contributions

Recent publications have explored text classification methods from a generic perspective. Among them, we cite the work by Li et al. [1], which provides a complete investigation of models, ranging from shallow to deep. Kowsari et al.’s [2] survey provides an excellent exploration of preprocessing steps, such as feature extraction and dimensionality reduction. Minaee et al.’s [3] work, on the other hand, focuses solely on a thorough exploration of deep approaches, though it also provides quantitative results for classical methods in its experimental performance analysis.

This work aims to enrich the landscape of text classification surveys by giving an overview of each step involved in the development of a classifier for textual data. Therefore, we provide a detailed description of the most important data preparation operations utilised in conjunction with text classification algorithms. These stages of the TC pipeline are often overlooked, yet understanding their usage and the motivation behind their choices can prove fundamental in building an effective framework for this task. We continue by summarising information about primary English TC datasets and a general benchmark of state-of-the-art approaches in various sub-tasks. Moreover, we provide results on two newly synthesised multilabel TC datasets, laying out the process to reproduce them. We believe this to be an important contribution, as the sub-tasks that they address (namely multilabel TL and NC) are underrepresented.

In summary, this study’s main contributions are as follows:

- We present an analysis of TC procedures, with emphasis on each step of the TC pipeline;

- We showcase a variety of datasets in English, and provide the code for synthesising two multilabel classification datasets;

- We present an overview of quantitative results for main methods in the literature, as well as for the datasets that we presented, complete with an analysis of evaluation metrics.

The rest of the survey is organised as follows. Section 2 explores preprocessing operations in TC pipelines, going through standard operations as well as common tokenisation strategies for more recent, deep learning-based models. Section 3 describes methodologies utilised to project preprocessed data into feature space, starting again from earlier approaches, while also describing word embeddings based on shallow neural networks. Section 4 gives a brief overview of generic classifiers commonly used with such features, while Section 5 goes more in depth into explaining the current landscape of deep learning-based classification methods. We move towards the experimental part of the survey in Section 6, showcasing prominent datasets (as well as two newly synthesised ones) and quantitative results. Finally, we discuss future research directions in Section 7 and conclude in Section 8.

Code and datasets used for experiments in this work are published and available (when legally possible) at https://gitlab.com/distration/dsi-nlp-publib (accessed on 28 December 2021).

2. Preprocessing

Input data for natural language tasks like TC consist of raw, unstructured text. Textual information, unlike other types of data such as images or time series, does not possess an intrinsic numerical representation; before feeding it to any classifier, it must therefore be projected into an appropriate feature space. Preprocessing procedures are of particular importance, as without them, there is no foundation for feature extraction procedures or classification algorithms.

In this section, we will provide an overview of the preprocessing operations frequently utilised in TC methods. In the first part, we will cover standard methodologies, most frequently utilised in preparation of manual feature extraction techniques (which are explained in Section 3). We instead reserve the second part of this section to preprocessing operations as contextualized to deep models. By providing an overview of these approaches, we aim to highlight differences and innovations utilised by more recent models, thus offering a more thorough and transparent outlook on how textual data representation is done by state-of-the-art approaches.

2.1. Standard Preprocessing Operations

Preprocessing operations clean and normalise input data in order to improve the results of the feature extraction stage, regardless of whether it is manually built (in earlier methods) or automatically learnt (in deep learning methods). In this section, we outline the most prominent preprocessing techniques.

2.1.1. Tokenisation

The most basic preprocessing operation that must be applied to text is that of tokenisation. This procedure is what determines the level of granularity at which we analyse and generate textual data, and may be generally described as the process of breaking a stream of text into smaller chunks (historically called tokens). Until recently, most NLP models have utilised words as their atomic unit of choice, but recent approaches have been decomposing text into smaller units (such as character n-grams or even more extreme forms of decomposition, such as the underlying bytes [4]).

Tokenisation [5], while often taken for granted, is a complex matter in itself and has been widely researched. Conventional methods are rule-based, and may be as simple as separating by space, punctuation and contractions. Clearly, much more refined knowledge-based approaches have been developed, still based on linguistic concepts. However, recent developments have moved strongly in the direction of data-driven tokenisers, which yield better results, but in which tokens no longer correspond to the traditional definition of a typographic unit. In other words, the modern meaning of “tokenisation” refers to the task of segmenting a sentence into units that, crucially, do not have a linguistic motivation or explanation. Because some of these methods still utilise traditional tokenisers as an initial step, conventional approaches are now often called “pre-tokenisers”.
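As an illustration of the conventional, rule-based end of this spectrum, the following is a minimal sketch of a pre-tokeniser that separates text by whitespace, punctuation and contractions. The regular expression and the function name `pre_tokenise` are our own assumptions for the sake of the example, and do not correspond to any specific tokeniser from the literature.

```python
import re

# Hypothetical rule set: a contraction suffix ("'t", "'s"), a run of word
# characters, or a single punctuation mark. Whitespace is discarded.
TOKEN_PATTERN = re.compile(r"'\w+|\w+|[^\w\s]")


def pre_tokenise(text):
    """Split raw text into word-level tokens using simple rules."""
    return TOKEN_PATTERN.findall(text)


print(pre_tokenise("Don't split hairs, tokenise!"))
```

Even this small rule set embeds linguistic choices (for instance, whether a contraction is one token or two), which is part of why knowledge-based tokenisers tend to grow complex.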

An in-depth exploration of this topic is outside the scope of this survey, and we point to Mielke et al.’s work [6] for an excellent historical review of the evolution of tokenisers over recent years. As both tokenisation and classification approaches evolved in parallel, it is more common to associate conventional methods with pre-tokenisers. On the other hand, deep learning methods tend to approach tokenisation with the more recent and sophisticated methods, as will be outlined in Section 2.2.

2.1.2. Stopword and Noise Removal

The set of tokens produced by the tokenisation procedure may contain unnecessary or misleading elements. Textual noise such as special characters or superfluous symbols should be removed. It can be useful to remove stopwords [7], i.e., non-informative words that appear in large numbers but carry no semantic importance. Other normalisation procedures, such as lowercasing, misspelling correction, and the standardisation of slang words and abbreviations, may be useful in reducing the number of different elements in the feature space.

These processes, however, may cause interpretation issues (e.g., in English, “US” could be used as an acronym for the “United States”, but lowercasing would make it indistinguishable from “us” as a pronoun) [2]. Furthermore, the removal of stopwords and other pieces of text can be detrimental to more recent approaches. Deep models are trained to understand natural language; elements that constitute the syntax of a sentence may be fundamental to the model for it to understand the context of a piece of text. As such, destructive operations should not be applied indiscriminately.
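The interaction between lowercasing and stopword removal can be made concrete with a small sketch. The stopword list below is a tiny illustrative set chosen by us (real lists are far longer), and the function `clean` is hypothetical.

```python
import re

# Tiny illustrative stopword list (an assumption for this example only).
STOPWORDS = {"the", "is", "in", "a", "of", "to", "and", "us"}


def clean(tokens, lowercase=True):
    """Drop symbol-only noise and stopwords, optionally lowercasing first."""
    cleaned = []
    for token in tokens:
        if not re.search(r"\w", token):  # no letters or digits: treat as noise
            continue
        if lowercase:
            token = token.lower()
        if token in STOPWORDS:
            continue
        cleaned.append(token)
    return cleaned


tokens = ["The", "US", "economy", "is", "growing", "###"]
print(clean(tokens))                   # the acronym "US" becomes "us" and is dropped
print(clean(tokens, lowercase=False))  # "US" survives, but so does capitalised "The"
```

Neither setting is correct in general, which is the reason why such destructive operations need to be chosen with the downstream model in mind.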

2.1.3. Further Standardisation of Text

On the other hand, classical approaches may benefit from a further simplification of the feature space. Such methods are unable to capture significant semantic information about words; two inflections of the same word (e.g., child vs. children) cannot be recognised as related, and will be treated as completely different pieces of information. Because of this, simplifying words by reducing inflections to a common form may be beneficial. This is achieved through the process of stemming (deriving a root form for a word) [8] or lemmatisation (deriving the lemma/canonical form for a word) [9]. These procedures can improve the performance of the overall classification, but at the same time, are not devoid of issues. Most notably, words with different meanings might have the same root or lemma, and these approaches will make them indistinguishable.

There are other useful operations that could help towards the improvement of a TC algorithm. An example of this is part-of-speech tagging; a “part of speech” is a category of words with similar grammatical properties, such as noun, verb, and adjective. This is sometimes included as a preprocessing step whenever the task could benefit from limiting the vocabulary to one or a subset of these parts of speech (for example, one might be interested in only nouns and adjectives). PoS tagging is a classification task in itself, and it is easy to recognise how misclassifications can result in worse overall performance by the models employing this procedure. An error of this kind can either introduce unwanted words or exclude important ones by mistake.
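The trade-off can be seen in a deliberately naive sketch. The suffix list and the one-entry lemma table below are toy assumptions of ours; they are not the stemming [8] or lemmatisation [9] algorithms cited above, which rely on much richer rules and lexicons.

```python
# Toy suffix list, checked in order (an assumption for illustration only).
SUFFIXES = ("ing", "ity", "es", "ed", "s", "e")

# Toy lemma dictionary; a real lemmatiser relies on a full lexicon.
LEMMAS = {"children": "child"}


def naive_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def naive_lemmatise(word):
    """Look up the canonical form, falling back to the word itself."""
    return LEMMAS.get(word, word)


print(naive_stem("snowboarding"), naive_stem("snowboards"))  # inflections conflated
print(naive_stem("universe"), naive_stem("university"))      # unrelated words conflated too
print(naive_lemmatise("children"))                           # irregular form needs a lexicon
```

The second line of output shows the issue mentioned in the text: two words with different meanings collapse to the same root.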

2.2. Preprocessing for Deep Models

As briefly mentioned, preprocessing operations should not be applied without consideration of the method being used. Recent models based on deep neural network architectures usually include similar steps for removing special characters, lowercasing and stripping letters of accents and apostrophes. Additionally, tokenised documents are also truncated or padded to a specified number of tokens to ensure that the model receives input samples of uniform size (i.e., with the same number of tokens).
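A minimal sketch of the truncation and padding step is shown below. The function name `pad_or_truncate` and the choice of `0` as the padding index are assumptions made for this example; actual models define their own padding token and may truncate from either end.

```python
def pad_or_truncate(token_ids, max_len, pad_id=0):
    """Return a list of exactly max_len token indices."""
    clipped = token_ids[:max_len]                         # truncate long inputs
    return clipped + [pad_id] * (max_len - len(clipped))  # pad short inputs


print(pad_or_truncate([5, 6, 7], max_len=5))
print(pad_or_truncate([1, 2, 3, 4, 5, 6], max_len=4))
```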

Most of the attention, however, is shifted towards the effective tokenisation of text. Modern tokenisation approaches must strike a balance between a vocabulary that is sufficiently large and expressive and one that is not too expensive in terms of memory. Therefore, different approaches have been proposed, of which we highlight the most prominent. As a side note, it is fairly common for modern tokenisers to also apply normalisation operations within their procedures [6].

2.2.1. Tokenisation in Deep Models

Deep models learn a vectorial representation for each token seen in training data. Learnt vectors are stored inside an embedding matrix, a data structure that maps recognised vocabulary terms to corresponding embeddings. The matrix size is hence dependent on the number of vocabulary terms and the embedding dimensionality. As mentioned, limited time and available memory imply the inability to deal with arbitrarily large vocabularies. These considerations motivate the development of different tokenisation techniques aimed at reducing the vocabulary size, minimising, at the same time, the number of unrepresented words. The latter are denoted as out-of-vocabulary (OOV) words, and are the central weakness of word-level models; these lead to unknown tokens at test time, which are not acceptable for most NLP tasks.
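The relationship between vocabulary, embedding matrix and OOV words can be sketched as follows. All names here (`build_vocab`, `init_embeddings`, `encode`, and the `[UNK]` token string) are hypothetical, and the embeddings are randomly initialised rather than learnt.

```python
import random


def build_vocab(corpus_tokens, unk_token="[UNK]"):
    """Map each distinct training token to a row index; row 0 is reserved for unknowns."""
    vocab = {unk_token: 0}
    for token in corpus_tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


def init_embeddings(vocab_size, dim, seed=0):
    """Embedding matrix with vocab_size rows and dim columns."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def encode(tokens, vocab, unk_token="[UNK]"):
    """Replace tokens with indices; OOV words all collapse onto the unknown index."""
    return [vocab.get(token, vocab[unk_token]) for token in tokens]


vocab = build_vocab(["snow", "board", "snow", "falls"])
embeddings = init_embeddings(len(vocab), dim=4)
ids = encode(["snow", "snowboarding"], vocab)
print(ids, len(embeddings) * len(embeddings[0]))  # indices, and number of parameters
```

The number of parameters is the product of the two matrix dimensions, and the unseen word “snowboarding” is mapped to the same index as any other unknown word, losing all of its information.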

With word-level tokenisation, a representation is learnt for every word in the training corpus, so related words like derivations and inflections (e.g., “snowboarding” and “snowboard”) are considered distinct, forcing the model to learn redundant encodings for each of them. Considering that the number of learnable parameters of such models is linearly dependent on the number of distinct words in the corpus, this issue may lead to unmanageable space and time requirements. Conversely, character-level tokenisation would imply a vocabulary set composed of all single characters in the corpus; this results in a minimal vocabulary, at least for alphabetical languages. While this strategy reduces memory and time complexity with respect to word-level tokenisation, it makes it much harder for the model to learn meaningful token representations. For instance, it is much harder to learn a meaningful representation for the character “s” than it is for the word “snow”.

Since neither of these simple strategies is entirely satisfactory, most models employ hybrid techniques that segment words into sub-words. The general principle is that frequently used words should not be split into smaller units, but rare words should be decomposed into more meaningful segments. Table 1 summarises the main features of tokenisers commonly paired with deep neural models, which are presented in the next sections.

Table 1. Overview of modern tokenisation approaches. SentencePiece is included, but should not be mistaken for a separate algorithm.

2.2.2. Byte Pair Encoding

The strategy that is now considered the breakthrough for sub-word tokenisation is Byte Pair Encoding (BPE). Originally proposed as a data compression algorithm [10], it was later adapted for sub-word segmentation (e.g., segmenting “snowboarding” as “snow”-“board”-“ing”) [11]. When learning a tokenisation, this algorithm iteratively computes the occurrences of consecutive pairs of vocabulary terms and merges the most frequent one into a new vocabulary word. When presented with unseen text to tokenise, the same merging procedure is performed by executing all recorded merges in the order in which they were performed during training. BPE’s vocabulary is initialised with the set of characters that appears in the training corpus.
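The learning and application of merges can be sketched in a few lines. This is a simplified illustration under our own assumptions (a toy word-frequency dictionary, no end-of-word marker, ties broken by first occurrence), and not the reference implementation of [11].

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with its concatenation."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn an ordered list of merges from a {word: frequency} dictionary."""
    # The initial vocabulary is the set of single characters in the corpus.
    corpus = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # most frequent adjacent pair
        merges.append(best)
        corpus = {tuple(merge_pair(list(s), best)): c for s, c in corpus.items()}
    return merges


def bpe_tokenise(word, merges):
    """Tokenise unseen text by replaying the recorded merges in training order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


merges = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=4)
print(merges)
print(bpe_tokenise("lowest", merges))
```

Note that “lowest” never appears in the toy corpus, yet it is segmented into sub-words that were learnt from other words.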

A recent variation of this strategy is used with the GPT-2 [12] and RoBERTa [13] language models (see Section 5.4.4). In particular, these models utilise byte-level BPE [14], which applies this procedure to raw bytes rather than characters.

2.2.3. WordPiece

The WordPiece tokeniser [15], initially devised as a solution to Japanese text segmentation problems, implements a data-driven approach for splitting words into sub-words. It relies on the creation of n-gram-based language models (see Section 3.2) to recognise recurring syllables, prefixes and word segments in a corpus. Referring to the previous “snowboarding” example, the tokeniser must learn that “-ing” is a common ending for verbs, and that a word is more likely to be split before it than after it. Hence, the goal of the model is the creation of a vocabulary of sub-words, such that the size of the training corpus is minimal when segmented according to the selected vocabulary. A greedy algorithm is used to solve this optimisation problem. Again, the initial vocabulary is composed of all single characters appearing in the input documents. Every successive iteration increases the vocabulary size by one, selecting the pair of sub-words that maximises the language model likelihood (hence the greedy nature) and merging them into a new vocabulary term.

The algorithm is stopped when the expected likelihood given by merging falls below a predefined threshold, or the maximum vocabulary size is reached. Recent approaches such as BERT [16] use tokenisers based on WordPiece; BERT, in particular, utilises a vocabulary of 30,000 tokens calibrated on English text.
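To contrast this selection criterion with the frequency-based one used by BPE, the sketch below scores each adjacent pair by its count divided by the product of the counts of its two parts. This normalised score is a commonly cited approximation of the likelihood gain under a unigram language model; it is an assumption on our part and not necessarily the exact criterion of [15]. The function name and the toy corpus are hypothetical.

```python
from collections import Counter


def best_wordpiece_pair(segmented_words):
    """Pick the pair to merge from a {tuple of sub-words: frequency} dictionary."""
    unit_counts, pair_counts = Counter(), Counter()
    for symbols, count in segmented_words.items():
        for symbol in symbols:
            unit_counts[symbol] += count
        for pair in zip(symbols, symbols[1:]):
            pair_counts[pair] += count

    def score(pair):
        # Assumed approximation: count(ab) / (count(a) * count(b)).
        first, second = pair
        return pair_counts[pair] / (unit_counts[first] * unit_counts[second])

    return max(pair_counts, key=score)


corpus = {
    ("h", "u", "g"): 10,
    ("p", "u", "g"): 5,
    ("p", "u", "n"): 12,
    ("b", "u", "n"): 4,
    ("h", "u", "g", "s"): 5,
}
print(best_wordpiece_pair(corpus))
```

In this toy corpus the most frequent pair is (“u”, “g”), which BPE would merge first, whereas the normalised score prefers (“g”, “s”) because “s” almost never occurs outside of that pair.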

2.2.4. UnigramLM

Similar to WordPiece, UnigramLM [17] is also based on the usage of language models to judge sub-word candidates (the name is derived from the fact that it utilises a simple unigram LM). Unlike WordPiece, however, UnigramLM goes in the opposite direction: it initialises a vocabulary that is much larger than the desired number of sub-words (such as all pre-tokenised words and common sub-strings), and proceeds to iteratively remove entries. In every iteration, the expectation–maximization algorithm is used to estimate sub-word probabilities and the lowest-scoring items are pruned, cycling until the vocabulary has reached the desired size.

Most interestingly, this sort of probabilistic setup results in different possible segmentations, all of which are consistent with the given strings. While the algorithm chooses the most likely segmentation in practice, it allows a sampling of different tokenisations based on their probabilities (which are recorded during training), allowing for what is defined as “sub-word regularisation”, which has been shown to improve results on some tasks. While UnigramLM is not commonly utilised by itself, it has seen use as a component of SentencePiece, which we now describe.
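Choosing the most likely segmentation under a unigram model amounts to a Viterbi search over all ways of splitting a string. The sketch below assumes that sub-word probabilities are already available (the values in `probs` are invented for illustration) and does not cover the training or sampling procedures of [17].

```python
import math


def viterbi_segment(word, probs):
    """Return the highest-probability segmentation of `word`, or None if impossible."""
    # best[i] = (log-probability of the best segmentation of word[:i],
    #            start index of the last piece in that segmentation)
    best = [(0.0, 0)] + [(-math.inf, 0)] * len(word)
    for end in range(1, len(word) + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in probs and best[start][0] > -math.inf:
                score = best[start][0] + math.log(probs[piece])
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[-1][0] == -math.inf:
        return None
    pieces, end = [], len(word)
    while end > 0:  # follow the back-pointers from the end of the word
        start = best[end][1]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1]


# Invented unigram probabilities for a handful of sub-words.
probs = {"snow": 0.05, "board": 0.04, "ing": 0.1, "snowboard": 0.001,
         "s": 0.02, "n": 0.02, "o": 0.02, "w": 0.02}
print(viterbi_segment("snowboarding", probs))
```

Both “snow”-“board”-“ing” and “snowboard”-“ing” are valid segmentations here; the first is returned because its probability (0.05 × 0.04 × 0.1) is higher than that of the second (0.001 × 0.1).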

2.2.5. SentencePiece

Kudo and Richardson [18] propose some improvements over the previously described algorithms in a software package called SentencePiece, which contains optimised versions of the above approaches (though it is often mistakenly assumed to be a separate algorithm). In particular, all the tokenisers described so far depend on knowing which characters act as word separators in the corpus; these characters can be language-dependent, and specific pre-tokenisation procedures can be used to create rules for recognising word boundaries. SentencePiece removes the dependence on this step by considering text as a raw stream of characters, including word separators. Additionally, it implements sub-word regularisation techniques, mainly to improve segmentation for machine translation tasks. XLNet [19] and XLM-R [20] use SentencePiece to tokenise input data.
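The idea of treating word separators as ordinary symbols can be illustrated without the library itself. SentencePiece marks whitespace with the meta symbol “▁” (U+2581); the two helper functions below are hypothetical and only mimic this convention, and the sub-word pieces are written by hand rather than produced by a trained model.

```python
WORD_BOUNDARY = "\u2581"  # the "▁" meta symbol used to mark whitespace


def to_raw_stream(text):
    """Encode spaces as a visible symbol, so no separate pre-tokeniser is needed."""
    return text.replace(" ", WORD_BOUNDARY)


def detokenise(pieces):
    """Concatenate the pieces and restore spaces; the process is lossless."""
    return "".join(pieces).replace(WORD_BOUNDARY, " ")


stream = to_raw_stream("snowboarding is fun")
# Hand-written segmentation of the stream, for illustration only.
pieces = ["snow", "board", "ing", WORD_BOUNDARY + "is", WORD_BOUNDARY + "fun"]
print(stream)
print(detokenise(pieces))
```

Because the boundary symbol is part of the pieces, the original text can be recovered exactly, without language-specific rules about where words begin and end.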

3. Projecting into Feature Space

Generally speaking, preprocessing pipelines transform bodies of text into lists of separated, standardised tokens. From a technical perspective, words are mapped to an index-based vocabulary, so as to simplify the internal representation of the tokens. However, tokens (or their indices) must still be represented in a form that is digestible by a machine, i.e., a vectorial form. Many such representations have been proposed over the years; this section provides an overview of the most popular and effective ones.

3.1. Bag-of-Words

The most intuitive representation can be found in the bag-of-words (BoW). This approach simplifies bodies of text by considering them as unordered collections of words. Clearly, this has the disadvantage of ignoring sentence structure and semantic relationships between sentence elements (as if shuffled inside of a “bag” of words). Nonetheless, despite its strong assumptions, it has been shown to obtain good results and has seen wide use. In general, efficient feature extraction can usually lead to strong performances, even when discarding important but difficult to encapsulate information, which is why this approach has been utilised extensively in NLP as well as other machine learning fields (where it is referred to as “bag-of-features”) [21,22,23].

The original idea behind BoW models is to represent each word as a one-hot-encoded vector with the same size as the vocabulary. It is easy to see that this may lead to size issues, since the vocabulary itself may have a cardinality in the order of millions. As such, BoW models are usually implemented in conjunction with feature extraction techniques based on the multiplicity of words, which allows one to maintain a single vector per document rather than one for each word. For this purpose, term frequency (TF) counts the number of times that a word occurs within a body of text. When applied to a corpus of texts, rather than the explicit count, it is common practice to utilise the relative frequency of a term, which keeps documents of different lengths comparable. One may also observe that, in very large corpora, common words are inherently less useful (as they appear in nearly all documents, and hence do not help in distinguishing them). Consequently, TF is often weighted by inverse document frequency (IDF) [24], which lessens the effect of common words by penalising their overall score (and boosting that of rarer words).

Depending on the size of the vocabulary, the size of TF-IDF representations may still be excessively large. To alleviate issues related to time complexity and memory consumption, it is possible to set a limit to the maximum number of features in the vectors (effectively pruning low-score words from the vocabulary). Alternatively, it is also possible to utilise a dimensionality reduction algorithm on the full-sized representations. Although a detailed description is outside the scope of this review, the general aim of these algorithms is to find a mapping between these representations and a lower-dimensional, compressed feature space. Popular methods include Principal Components Analysis (PCA) [25], Linear Discriminant Analysis (LDA) [26], and non-negative matrix factorization (NMF) [27]. See Kowsari et al.’s [2] survey for an introduction to these methods.
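A minimal sketch of this weighting scheme is given below. Several variants of TF-IDF exist; here we assume relative term frequency and an unsmoothed logarithmic IDF, and the function name `tf_idf` is our own. Documents are assumed to be non-empty lists of tokens.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return (vocabulary, vectors) with one dense TF-IDF vector per document."""
    vocabulary = sorted({token for doc in documents for token in doc})
    n_docs = len(documents)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(token for doc in documents for token in set(doc))
    idf = {term: math.log(n_docs / doc_freq[term]) for term in vocabulary}
    vectors = []
    for doc in documents:
        counts = Counter(doc)
        vectors.append([counts[term] / len(doc) * idf[term] for term in vocabulary])
    return vocabulary, vectors


docs = [["the", "snow", "falls"], ["the", "sun", "shines"], ["the", "snow", "melts"]]
vocabulary, vectors = tf_idf(docs)
for term, weight in zip(vocabulary, vectors[0]):
    print(f"{term}: {weight:.3f}")
```

In the first document, “the” receives a weight of zero because it appears in every document, while “falls” (unique to that document) outweighs “snow” (shared with another one).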

3.2. Language Models

Language modelling is the task of predicting the likelihood of a string given a sequence of preceding or surrounding context words—at its simplest, guess the next word in a sentence. Language models play an important part in more recent, deep learning-based developments, but their inception much precedes neural networks. N-gram models [28] are some of the earliest implementations of language models, and work by assigning probabilities to sequences of words (i.e., sentences). The specific interpretation of this probability value is task-dependent; intuitively, a higher score is associated with a better-structured sentence (e.g., a good translation).

While the goal is to assign probabilities to whole sentences, the task is related to the computation of the probability of an upcoming word, and is framed as such. These models usually make a simplifying assumption (the Markov assumption): the probability of an upcoming word is assumed to depend only on the n − 1 words preceding it. The probability value itself can be computed with methods such as Maximum Likelihood Estimation (MLE) [29], a robust estimation approach used extensively in probabilistic approaches. Notably, the BoW model can be seen as an n-gram model with n = 1.
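As a concrete instance, the sketch below estimates a bigram model by MLE, i.e., each word is conditioned only on the single word preceding it, and probabilities are simple ratios of counts. The boundary markers `<s>` and `</s>` and the function names are assumptions made for this example; no smoothing is applied, so unseen bigrams receive zero probability.

```python
from collections import Counter


def train_bigram_mle(sentences):
    """Return a function prob(word, prev) = count(prev, word) / count(prev)."""
    context_counts, bigram_counts = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1

    def prob(word, prev):
        if context_counts[prev] == 0:
            return 0.0
        return bigram_counts[(prev, word)] / context_counts[prev]

    return prob


def sentence_probability(sentence, prob):
    """Chain the conditional probabilities of each word given its predecessor."""
    tokens = ["<s>"] + sentence + ["</s>"]
    result = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        result *= prob(word, prev)
    return result


corpus = [["the", "snow", "falls"], ["the", "snow", "melts"], ["the", "sun", "shines"]]
prob = train_bigram_mle(corpus)
print(prob("snow", "the"))
print(sentence_probability(["the", "snow", "falls"], prob))
print(sentence_probability(["snow", "the"], prob))
```

The well-formed sentence receives a probability of 1 × 2/3 × 1/2 × 1 = 1/3, whereas the scrambled one receives zero, since no training sentence starts with “snow”.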

3.3. Word Embeddings

While previous methods have focused on capturing the syntactic representations of words and, in some cases, a small subset of the syntactic relationships that tie them together in sentences, they critically still lack the capability of capturing their semantic meaning. A classic example of this issue is represented by word synonyms; though they are—semantically speaking—the same, these models cannot capture their similarity. When looking at the feature space, this translates into representations that are orthogonal to each other, meaning that they are seen as completely different and separate.
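The orthogonality issue is easy to verify numerically. In the sketch below, the three-word vocabulary is a toy assumption; the point is that the cosine similarity between the one-hot vectors of any two distinct words is zero, regardless of how close their meanings are.

```python
import math


def one_hot(index, size):
    """Vector of zeros with a single one at the given position."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


vocab = {"big": 0, "large": 1, "snow": 2}
big = one_hot(vocab["big"], len(vocab))
large = one_hot(vocab["large"], len(vocab))
snow = one_hot(vocab["snow"], len(vocab))
print(cosine(big, large), cosine(big, snow))  # synonyms are no closer than unrelated words
```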

In the last decade, researchers have proposed word embeddings as a solution to this problem. Intuitively, this self-supervised feature learning technique is aimed at learning a mapping from each piece of text (most commonly words, hence the name) to an n-dimensional vector of real numbers. An embedding is therefore a vectorial representation, which is digestible by a machine, but also encodes part of the underlying meaning of the words. These approaches are based on neural networks, which learn these mappings through different learning procedures; in general, they are based on the assumption that a word’s meaning can be extracted from its surrounding words in a sentence (similarly to language modelling, but focused on the embeddings as individual entities).

Earlier word embeddings are often informally defined as “static”, which can be attributed to how, in their basic form, they encode words outside of context. Practically speaking, this means that they do not model polysemy [30] (where an individual word can have different meanings). The embedding for a word is just one, regardless of how many meanings it could have; if a word token is particularly polysemous, it is likely that its embedding will be a combination of its multiple senses.

As an example, consider the word “sound”; as a noun, we may associate this with something that can be heard, whereas, as an adjective, we can associate it with a description of something in good condition. However, these are just two of the almost 50 different meanings that this word can have; based on this consideration, it appears obvious that a single representation can hardly be effective at representing them all at once. As will be further discussed in Section 5.4.7, recent developments have proposed to utilise surrounding context words in order to differentiate a word’s meaning.

3.3.1. Word2Vec

One of the first popular families of word embedding architectures is to be attributed to Mikolov et al.’s Word2Vec [31,32]. Their approach utilises shallow neural networks in order to create a high-dimensional vector for each word (though these vectors are much smaller and denser than, for example, TF-IDF vectors). Word2Vec was first proposed with two architectural variants: the continuous bag-of-words (CBOW) and the continuous skip-gram.

The way in which the CBOW approach learns word representations is to try to predict a middle word based on its surrounding context words. The reference to bag-of-words derives from the fact that the order of the context elements is not actually taken into account. The continuous skip-gram model instead flips the task on its head, as it attempts to predict the neighbours of a word, given the word itself.

These tasks are clearly hard, and the model is not meant to learn perfectly how to guess these words; the true aim is not that of predicting the words correctly, but to create meaningful mappings for words to embeddings [31].
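As a rough illustration of the two training tasks, the following sketch builds the examples that each variant would see for a toy sentence. The function names, the window size and the sentence are assumptions made for illustration, and the shallow network that would consume these pairs is omitted.

```python
def cbow_pairs(tokens, window=2):
    """Return one (context_words, target_word) pair per position."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        # Sorting the context makes explicit that CBOW ignores word order.
        pairs.append((sorted(left + right), target))
    return pairs


def skipgram_pairs(tokens, window=2):
    """Return one (centre_word, neighbour) pair per neighbour in the window."""
    pairs = []
    for i, centre in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((centre, tokens[j]))
    return pairs


sentence = "the cat sat on the mat".split()
cbow = cbow_pairs(sentence, window=1)
skip = skipgram_pairs(sentence, window=1)
# cbow[1] is (['sat', 'the'], 'cat'): predict "cat" from its neighbours.
# skip[1] is ('cat', 'the'): predict a neighbour from "cat".
```

In both cases the prediction task is only a pretext: what is kept after training is the learned vector for each word.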

3.3.2. GloVe

Global Vectors for Word Representation (GloVe) [33] is another popular word embedding technique. The approach is similar to Word2Vec, though it differs fundamentally by being a count-based model, whereas standard Word2Vec is a predictive model. While predictive models learn word vectors by minimising the loss between target and prediction given context words and vector representations, a count-based model essentially learns semantic similarity between words by explicitly probing the underlying statistics of the corpus, such as word co-occurrence [34]. The key difference is that Word2Vec only leverages local information (the context of each word) to obtain word vectors, while GloVe embeddings are trained by also considering global co-occurrence statistics. It should also be noted that GloVe utilises a dimensionality reduction step in order to handle the large dimensions of the word co-occurrence matrix that it uses in its calculations. Although compressing representations can arguably lead to a more robust representation (as it theoretically forces the model to try to preserve the most significant pieces of information), a bigger advantage comes from the fact that this approach is more suitable for parallelisation, making it easier to train on more data.
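The global statistics mentioned here can be illustrated with a simplified sketch that counts how often two words appear within a fixed window of each other across a toy corpus. This is not the GloVe training procedure itself: the helper name, the window size and the corpus are illustrative assumptions, and the step that fits word vectors to these counts is omitted.

```python
from collections import Counter


def cooccurrence_counts(corpus, window=2):
    """Count ordered (word, neighbour) pairs within `window` tokens."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


corpus = ["ice is cold", "steam is hot", "ice is solid"]
counts = cooccurrence_counts(corpus, window=2)
# "ice" co-occurs with "cold" and "solid" but never with "hot";
# a count-based model derives word similarity from such statistics.
```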

3.3.3. FastText

In the context of static word embeddings, FastText, developed by Bojanowski et al. [35], is one of the most recent techniques. The main concern addressed by this method is the fact that its predecessors ignore the morphology of words by assigning a distinct vector to each word. In FastText, each word is represented instead as a bag of character n-grams. For example, the word “where”, with $n = 3$, would be represented as:

`<wh, whe, her, ere, re>`

as well as the complete word as a special sequence, `<where>`.

In terms of architecture, FastText embeddings are trained using a skip-gram architecture. Due to the way in which words are represented, however, the final vector for a word will be constituted by the sum of its character n-grams. This is beneficial, as it allows it to generate good word embeddings for rare words—their n-grams will also be shared by more common words. Most importantly, this also means that FastText is able to handle OOV words, as long as it has seen its composing n-grams during training. Both GloVe and Word2Vec are instead unable to handle the case of OOV words.
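The composition of a word vector from its character n-grams can be sketched as follows. The boundary markers `<` and `>`, the choice of n = 3, the helper names and the toy two-dimensional vectors are assumptions made for illustration; trained FastText models use learned vectors and may use a range of n-gram lengths.

```python
def char_ngrams(word, n=3):
    """Character n-grams of the word wrapped in boundary markers,
    plus the whole marked word as a special sequence."""
    marked = f"<{word}>"
    grams = [marked[i:i + n] for i in range(len(marked) - n + 1)]
    if marked not in grams:
        grams.append(marked)
    return grams


def word_vector(word, ngram_vectors, dim, n=3):
    """Sum the vectors of the known n-grams; unknown n-grams contribute zero."""
    vec = [0.0] * dim
    for gram in char_ngrams(word, n):
        for k, value in enumerate(ngram_vectors.get(gram, [0.0] * dim)):
            vec[k] += value
    return vec


# Hypothetical n-gram vectors, as if learned from words such as "where".
toy_vectors = {"<wh": [1.0, 0.0], "whe": [0.0, 1.0], "ere": [0.5, 0.5]}

seen = word_vector("where", toy_vectors, dim=2)
unseen = word_vector("when", toy_vectors, dim=2)  # never seen as a whole word
```

The word "when" still receives a non-zero vector because it shares the n-grams `<wh` and `whe` with "where", which is the mechanism that lets this representation cover OOV words.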

4. Overview of Shallow Learning Classification Methods

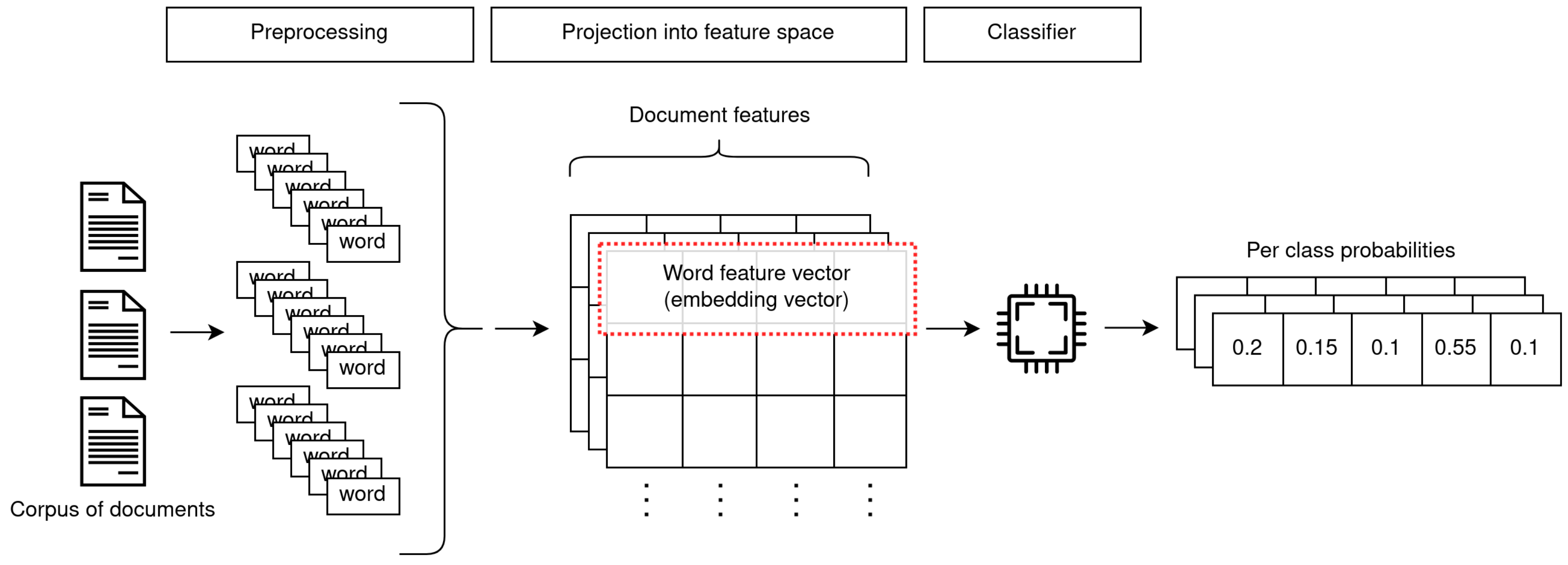

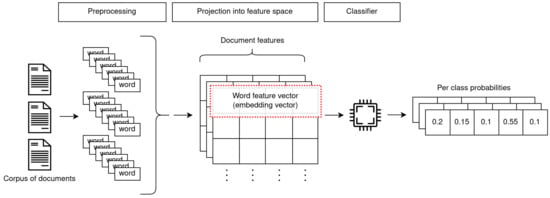

Shallow learning models were, up until recently, the go-to approach for text classification. In terms of actual classification algorithms, these methods mostly rely on general-purpose classifiers that are not specific to this context. The particular challenges presented by textual data are somewhat “offloaded” to the preceding steps of the TC pipeline (Figure 1), which consist in the extraction of machine-interpretable features and representations from documents (i.e., text interpretation).

Figure 1.

An overview of the two-step procedure adopted by shallow learning methods.

In this section, we provide a brief overview of TC algorithms that are usually categorised as shallow learning-based. These methods are built on generic classification approaches; as highlighted, a large amount of focus is placed on scrupulous data preparation and feature engineering in order to obtain competitive results. As such, we only provide high-level concepts for these approaches, and refer to Kowsari et al.’s work [2] for a more in-depth exploration. Table 2 provides an overview of these methods.

Table 2.

Conventional shallow classification techniques.

4.1. Probabilistic Classification

Probabilistic graphical models (PGMs) have seen broad use thanks to their effectiveness. Models like Naïve Bayes (NB) [36] are especially popular because of their simplicity, both in structure and calculation process. The simplicity is derived from the independence assumption: given the class, each feature is assumed to have no influence on the others. The essence of the Naïve Bayes approach lies in combining the prior probability of a class with the likelihood of the features given that class, both estimated from the training set, to calculate the posterior probability of the class given the features. Other PGMs, such as hidden Markov models (HMMs) [37] and conditional random fields (CRFs) [38], have been proposed to capture the sequential nature of textual data (ignored by the independence assumption of NB).
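A minimal sketch of a Naïve Bayes text classifier is given below, assuming a multinomial model over word counts with add-one smoothing. These choices, the function names and the toy data are illustrative assumptions rather than a reference implementation.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    """Collect class frequencies and per-class word counts."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        for word in doc.split():
            word_counts[label][word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab


def predict_nb(doc, model):
    """Return the class with the highest log prior + summed log likelihoods."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, n_docs in class_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)  # log prior of the class
        for word in doc.split():
            if word in vocab:  # words never seen in training are skipped
                # Add-one smoothing avoids zero probabilities.
                likelihood = (word_counts[label][word] + 1) / (total_words + len(vocab))
                score += math.log(likelihood)  # independence: just add up
        scores[label] = score
    return max(scores, key=scores.get)


docs = ["great film great cast", "boring plot", "great plot", "boring boring film"]
labels = ["pos", "neg", "pos", "neg"]
model = train_nb(docs, labels)
```

Here, `predict_nb("great cast", model)` selects the positive class and `predict_nb("boring film", model)` the negative one.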

4.2. K-NN-Based Classification

Text classification based on k-nearest neighbours (k-NN) algorithms [39,40] tackles the problem differently, by finding the k most similar labelled instances and, in its simplest iteration, assigning the most common category to the unlabelled instance being classified. This non-parametric method requires no explicit training phase, since it only needs to calculate the distances between data points at prediction time. However, its performance greatly depends on the chosen distance function, and different functions or even approximations may be needed to deal with large datasets, where k-NN-based methods’ performance may suffer [41]. Furthermore, the crucial assumption that similar instances are close in the feature space gradually falters as the number of dimensions in this space becomes larger and larger (i.e., the infamous “curse of dimensionality” [42]).
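The simplest form of this procedure can be sketched as follows, assuming cosine similarity as the similarity function and majority voting among the k neighbours. The toy vectors and labels are hypothetical stand-ins for document representations such as TF-IDF vectors.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_predict(query, examples, k=3):
    """Majority label among the k labelled examples most similar to `query`."""
    ranked = sorted(examples, key=lambda ex: cosine(query, ex[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


examples = [
    ([1.0, 0.0, 0.0], "sport"),
    ([0.9, 0.1, 0.0], "sport"),
    ([0.5, 0.5, 0.0], "sport"),
    ([0.0, 1.0, 0.2], "politics"),
    ([0.0, 0.8, 0.3], "politics"),
]
prediction = knn_predict([0.1, 0.9, 0.1], examples, k=3)
```

Every prediction compares the query against all labelled examples, which is why approximations become attractive on large datasets.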

4.3. Support Vector Machines

Support vector machines (SVMs) [43,44] have been historically robust prediction methods, and have seen success by turning TC tasks into (possibly multiple) binary classification tasks. In short, a linear SVM constructs the hyperplane in the feature space that separates the two categories of the binary classification sub-task with the largest possible margin. To extend to non-linear classification, SVMs implicitly map their inputs to a higher-dimensional space, in which the training categories may be easier to separate. This procedure is referred to as the kernel trick: a kernel function computes inner products in this higher-dimensional space directly from the original inputs, so that the mapping never has to be evaluated explicitly. Choosing the form and parameters of the kernel is fundamental to achieving good performance.
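The role of the kernel function can be illustrated with a small numerical check. The sketch below assumes a degree-2 polynomial kernel on two-dimensional inputs (an illustrative choice) and verifies that evaluating the kernel on the original inputs gives the same value as an inner product in the corresponding three-dimensional space.

```python
import math


def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed in the original 2-D space."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def feature_map(x):
    """Explicit mapping to the 3-D space induced by poly_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


x, z = (1.0, 2.0), (3.0, 0.5)
implicit = poly_kernel(x, z)                    # no mapping needed
explicit = dot(feature_map(x), feature_map(z))  # same value, more work
```

For richer kernels the mapped space can be very large or even infinite-dimensional, so working with the kernel alone is what keeps the computation feasible.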

4.4. Decision Trees and Random Forests

Decision trees [45] are some of the earliest and most popular classifiers, based on an intuitive tree structure learning method that hierarchically decomposes the data space. The desired result is a tree in which each internal node serves to examine a feature, sending unlabelled instances towards one of its children, depending on the feature value found. Leaf nodes will represent specific categories. Although very intuitive, their basic form can be prone to overfitting and sensitive to small perturbations in the data. Random forests [46,47], collections of decision trees trained using random subsets of features, have achieved much better performance and are more widely used in practice.

4.5. Logistic Regression

One of the earliest methods for classification worth citing is that of logistic regression (LR) [48]. LR is a linear classifier, which predicts probabilities over classes by attempting to discern which features are most useful to discriminate examples. Its base formulation is best suited to binary classification tasks, but it can be extended to the multinomial case [49] by utilising a formulation that (usually) includes the softmax function, or by creating an ensemble of multiple binary classifiers in a one-vs.-rest scheme.
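The multinomial extension can be sketched as a linear scoring step followed by a softmax. The weights, biases and feature values below are hypothetical placeholders for parameters that would normally be learned from data.

```python
import math


def softmax(scores):
    """Turn arbitrary real scores into a probability distribution."""
    top = max(scores)  # subtracting the maximum improves numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(features, weights, biases):
    """One linear score per class, normalised with softmax."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


weights = [[2.0, 0.0], [0.0, 2.0], [-1.0, -1.0]]  # three classes, two features
biases = [0.0, 0.0, 0.0]
probs = predict_proba([1.0, 0.2], weights, biases)
predicted_class = probs.index(max(probs))
```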

4.6. Ensemble Learning

Integration-based (or ensemble learning) methods are also worth mentioning; these approaches aggregate the results of multiple algorithms in order to obtain better performance and interpretation. They include various subcategories, the most popular of which are bagging and boosting. Bagging [50] (also referred to as bootstrap aggregation) averages the output of multiple classifiers without strong dependencies, training each of them separately on a subset of the training data (sampled with replacement). This procedure improves accuracy and stability, and random forests are the most common example of such an approach. Boosting [51] instead sequentially trains weak classifiers in order to obtain a strong ensemble. After each weak classifier is trained, the data points are “re-weighted” based on its accuracy, giving greater weight to misclassified examples and lower weight to correctly classified ones. Thus, its successors will know to focus on the “hard” data points that were previously wrongly classified. A classical example of boosting is given by AdaBoost [52].
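The re-weighting step of boosting can be sketched as follows, using the update rule of the discrete AdaBoost algorithm. The function name and the toy inputs are assumptions made for illustration, and the weighted error is assumed to lie strictly between 0 and 1.

```python
import math


def adaboost_reweight(weights, correct):
    """One AdaBoost round: compute the classifier's vote and new data weights.

    weights: current weights of the data points (summing to 1).
    correct: whether the weak classifier got each point right.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    alpha = 0.5 * math.log((1 - error) / error)  # vote of this weak classifier
    updated = [
        w * math.exp(-alpha if ok else alpha)  # shrink correct, grow wrong
        for w, ok in zip(weights, correct)
    ]
    total = sum(updated)
    return [w / total for w in updated], alpha


weights = [0.25, 0.25, 0.25, 0.25]
new_weights, alpha = adaboost_reweight(weights, [True, True, True, False])
```

After this round, the single misclassified point carries half of the total weight, so the next weak classifier is pushed to get it right.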

4.7. Neural-Based Methods

Although deep learning methods are undoubtedly the most popular neural approaches in the NLP field as of now, shallow architectures have also been used for TC tasks with competitive results. As an example, the FastText classifier is based on one such architecture; this method extracts n-gram features (based on the embeddings extracted as previously explained in Section 3.3.3), averages them, and then feeds them to a linear classifier (e.g., with a softmax output, if we are in a multiclass scenario) [53]. Another domain-specific shallow neural method is GHS-NET [54], which combines a CNN and GRU layer to classify biomedical texts into a predefined set of disease codes. The model achieved competitive results on several multilabel biomedical benchmark datasets.

4.8. Summary

While it covers the most popular approaches, the list of methods showcased in the previous subsections is not an exhaustive representation of all existing conventional classification models. What is perhaps most notable about these methods, however, is that they have arguably received the most attention in terms of improvements and updated iterations even in recent years, which is why we chose to highlight them. In particular, ensemble meta-algorithms provide an excellent way of utilising “weaker” classifiers while still obtaining good results.

5. Deep Learning Methods

One of the limiting factors of classical models is their reliance on explicit feature extraction procedures. Good feature engineering requires extensive domain knowledge, which, in turn, makes it difficult to generalise approaches to new tasks. Furthermore, manual feature crafting does not utilise the full potential of large amounts of training data, because features are predefined rather than discovered.

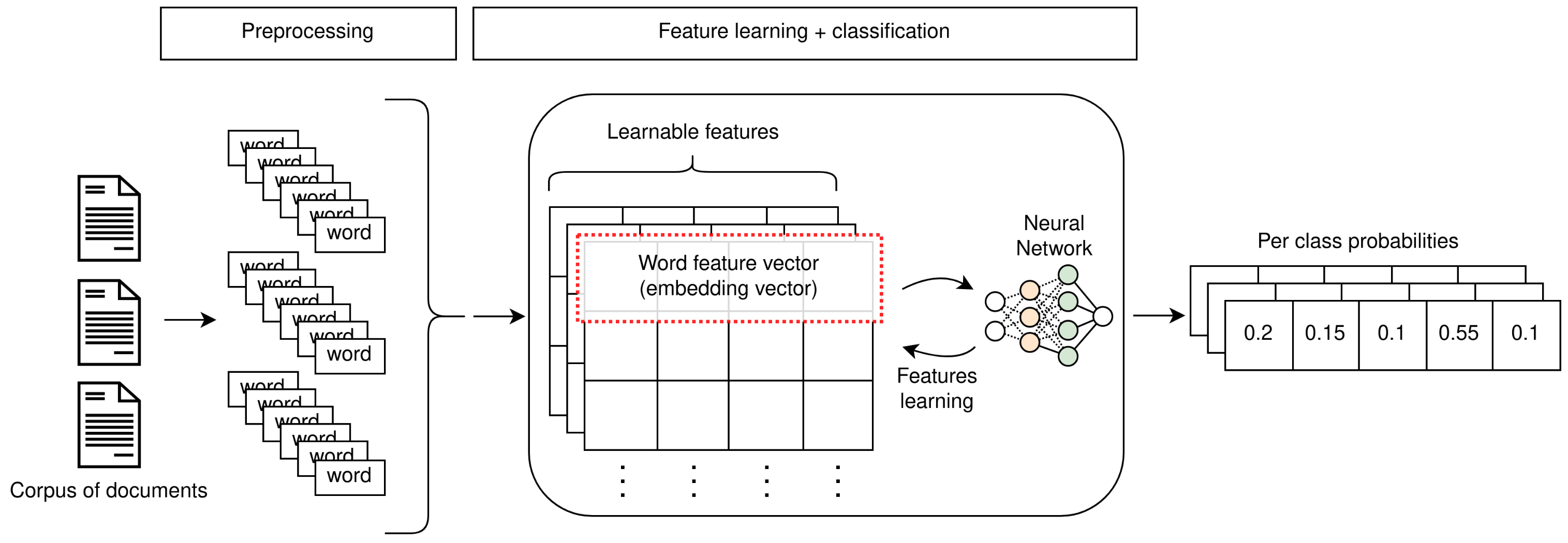

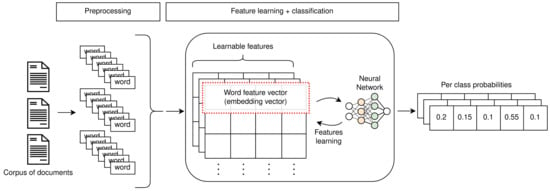

As a consequence, the development of word embeddings marks the beginning of a paradigm shift towards approaches able to leverage vast amounts of data. Deep learning approaches have gained popularity thanks to their ability to capture meaningful latent information in the training data (depicted in Figure 2); in the case of NLP, such architectures are able to create semantically meaningful representations of text. Recently developed contextualized versions of word embeddings have obtained outstanding results in classification, even when paired with very simple classifiers. An informal yet intuitive explanation of this result is that understanding the content of a body of text is the most important step in the classification pipeline, much like a person would likely be able to label a piece of text if they understood what it meant.

Figure 2.

Overview of the training procedure used with deep learning methods.

5.1. Multilayer Perceptrons

Simpler architectures, such as multilayer perceptrons (MLPs), bridge the gap between shallow and deep methods. These are some of the most basic neural network designs, yet they are the foundation of the first word embedding techniques, while also obtaining excellent results as stand-alone classifiers. MLP models such as these usually treat input text as an unordered bag-of-words, where input words are represented through some feature extraction technique (like TF-IDF or word embeddings). For example, deep averaging networks (DAN) [55] are able to perform comparably or better than much more sophisticated methods [3], despite ignoring the syntactic ordering of words. Paragraph-Vec [56] tries instead to incorporate such ordering and the contextual information of paragraphs by utilising an approach that is comparable with CBOW [57], leading to better performances than previous methods.

5.2. Recurrent Neural Networks

The most influential architectures of this period of time were, however, recurrent neural networks (RNNs). RNNs are a popular choice for any type of sequential data; these architectures are aimed at extracting information regarding the structure of sentences, thus capturing latent relationships between context words. The input of an RNN model is usually a sentence represented by a sequence of word embeddings, with its words entering the model one at a time. Long short-term memory networks (LSTMs) are the most popular variant of RNNs, as they address the gradient vanishing or exploding issues faced by standard RNN architectures [58].

Many TC approaches using LSTMs have been proposed, and we mention a few. Tree-LSTM [59] extends LSTMs to tree structures, rather than sequential chain structures, arguing that trees provide a more suitable representation for phrases. TopicRNN [60] integrates the capabilities of latent topic models to overcome the difficulties of RNNs on long-range dependencies. Both of these approaches exhibit improvements on baselines when applied to TC tasks (in particular, sentiment analysis). Howard and Ruder [61] propose the Universal Language Model Fine-tuning (ULMFiT), a recurrent architecture based on an LSTM network trained using discriminative fine-tuning, which allows the tuning of LSTMs using different learning rates in each layer. The Disconnected Recurrent Neural Network (DRNN), introduced by Wang [62], demonstrates the benefits of boosting the feature extraction capabilities of RNNs by incorporating position invariance (a property attributed to CNNs) into a network based on gated recurrent units (GRU) [63]. In both cases, the proposed enhancements surpass the previous state-of-the-art results on several benchmark datasets.

The introduction of bidirectionality in RNNs has also proven beneficial [64], and has been applied to LSTMs, with notable results such as ELMo [65], a language modelling approach that relies on bidirectional LSTMs, and is one of the first milestones in the development of contextualized word embeddings.

5.3. Convolutional Neural Networks

Convolutional neural networks (CNNs) are commonly associated with computer vision applications, yet they have also seen applications in the context of NLP and TC specifically [66]. The easiest way to understand these approaches in this context is to examine their input, which also relies on word embeddings. While, generally speaking, RNNs input the words of a sentence sequentially, sentences in CNNs are instead presented as a matrix in which each row is the embedding of a word (therefore, the number of columns corresponds to the size of the embeddings). To make a comparison, in image-related tasks, convolutional filters usually slide over local patches of an image across two directions. Instead, filters in text-related tasks are most commonly made as wide as the embedding size, so that this operation only moves in the direction that makes sense sentence-wise, always considering the entire embedding for each word. In general, the main upside associated with CNNs is their speed and how efficient their latent representations are. Conversely, other properties that could be exploited while working with images, such as location invariance and local compositionality [67,68], make little sense when analysing text. Many approaches have been proposed, one of the most popular being TextCNN [69], a comparatively simple CNN-based model with a one-layer convolution structure that is placed on top of word embeddings. More recently, the interest in CNN applications to TC tasks has been renewed thanks to the introduction of temporal convolutional networks (TCN) [70,71,72], which enhance CNNs with the ability to capture high-level temporal information. For instance, Conneau et al. [73] propose the Very Deep CNN (VDCNN) classifier, a 29-layer CNN with skip connections, alternating temporal convolutional blocks with max pooling operations. They achieve state-of-the-art performance on the reported TL and SA datasets. Duque et al. [74] modify the VDCNN architecture to lessen the resource requirements and fit mobile platform constraints, while retaining most of its performance.
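The way a text filter spans the full embedding width and slides only along the sentence can be sketched as follows. The ReLU activation and the max-over-time pooling step are common choices assumed here for illustration, and the toy embeddings and filter values are hypothetical.

```python
def conv_over_words(sentence, filt):
    """Slide a filter covering len(filt) words over the sentence matrix.

    sentence: list of word embeddings (one row per word).
    filt: list of rows, each as wide as an embedding.
    """
    height = len(filt)
    features = []
    for start in range(len(sentence) - height + 1):
        window = sentence[start:start + height]
        activation = sum(
            w * x
            for f_row, s_row in zip(filt, window)
            for w, x in zip(f_row, s_row)
        )
        features.append(max(0.0, activation))  # ReLU
    return features


def max_over_time(features):
    """Keep the strongest response, wherever it occurred in the sentence."""
    return max(features)


sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # 4 words, dim 2
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]                      # spans 2 words
features = conv_over_words(sentence, bigram_filter)
pooled = max_over_time(features)
```

Each filter thus acts as a detector for a short pattern of consecutive words, and a model would typically combine the pooled outputs of many such filters.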

5.4. Deep Language Models for Classification

In this section, we outline the theoretical notions that lead to the development of current deep language model-based approaches. Then, we proceed to discuss some of these models in more detail. Currently, very few other models are able to compete performance-wise with such classifiers (among them, we discuss the particularly interesting graph-based models).

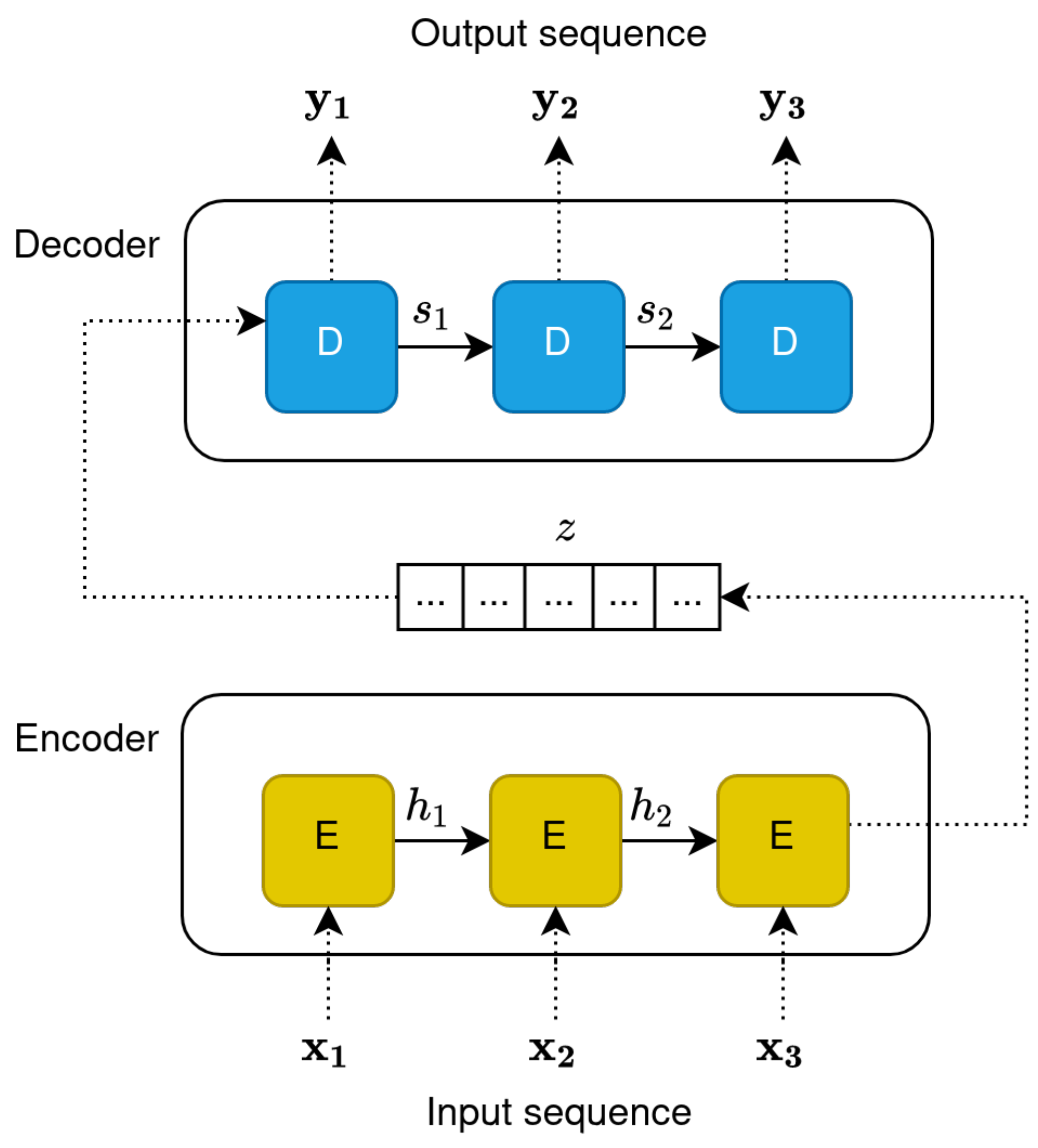

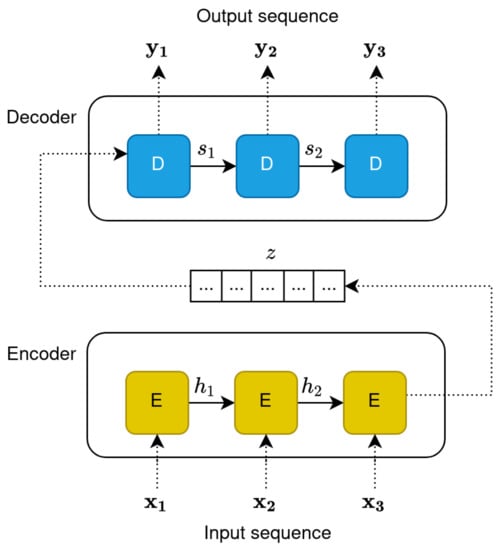

5.4.1. RNN Encoder–Decoders

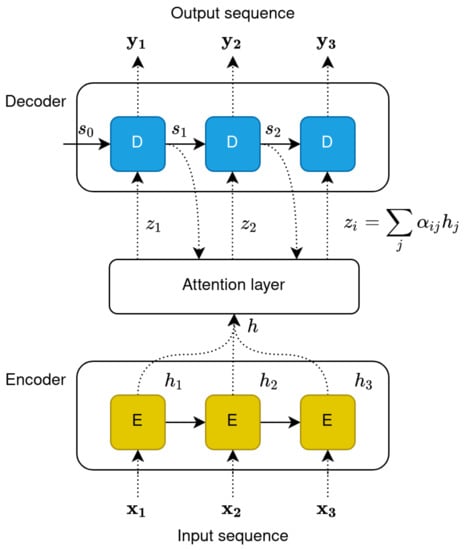

For many years, networks based on RNN-like architectures have dominated sequence transduction approaches. Researchers started pushing the boundaries of TC through recurrent language models (evolutions of classic word embedding techniques) and RNN-based encoder–decoder architectures [63,75] (Figure 3).

Figure 3.

Unfolding of encoder–decoder architecture based on RNN.

RNN encoder–decoder architectures are particularly important in this regard. As an example, consider a machine translation task; the input sequence may be a sentence in English, and the output sequence its translation in a different language. In this case, each word of the input sentence is fed to the encoder sequentially, such that at time step $t$, the model receives the new input word and the hidden state at time step $t-1$. The input is hence consumed sequentially, and the dependence on the output of the previous step should, in theory, allow RNNs to learn long- and short-term dependencies between words. The output of the encoder is the compressed representation of the input sequence, called “context”. The decoder then proceeds to interpret the context and produce a new sequence of words (e.g., a translation in a different language) in a sequential manner, where every word depends on the output of the previous time step. With encoder–decoder networks, semantically and syntactically meaningful information is implicitly captured during the encoding phase (the context), which can then potentially be used for tasks such as text classification.
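The sequential encoding step can be sketched with a plain (Elman-style) recurrent cell. The tanh activation, the toy weights and the two-dimensional inputs are assumptions made for illustration; practical encoders typically use LSTM or GRU cells with learned parameters.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """New hidden state from the current input and the previous state."""
    return [
        math.tanh(
            sum(W_x[i][j] * x[j] for j in range(len(x)))
            + sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
            + b[i]
        )
        for i in range(len(b))
    ]


def encode(sequence, W_x, W_h, b):
    """Consume the inputs one at a time; the last state is the context."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states, h


W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [0.0, 0.2]]
b = [0.0, 0.0]
sequence = [[1.0, 0.0], [0.0, 1.0]]  # two toy word embeddings

states, context = encode(sequence, W_x, W_h, b)
_, reversed_context = encode(sequence[::-1], W_x, W_h, b)
```

Because each state depends on the previous one, feeding the same words in a different order produces a different context, and the whole sequence has to be squeezed into a vector of fixed size.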

The main issue with this approach is that the encoder needs to compress all relevant information in a fixed-length vector. This was found to be problematic, especially for longer sentences, and it was reported that the performance of a basic encoder–decoder deteriorates rapidly as the length of an input sentence increases [76]. In addition, recurrent models have inherent limitations due to their sequential nature. Sequentiality precludes parallelisation, which translates into longer computation times. Longer sentences can run into memory constraints and, more crucially, are seen as RNNs’ true bottleneck because of how the network tends to forget earlier parts of the sequence, making for an incomplete representation (an issue linked to the vanishing gradient problem) [77].

One of the solutions devised to solve the limitations of recurrent architectures was that of the attention mechanism [76,78]. This mechanism would eventually become an integral part of the Transformer architecture, which represents one of the most important milestones in the field of NLP. We denote the particularly effective language models of this era as “Deep” (deep language models, DLM for short), such as to distinguish them from earlier approaches and highlight their reliance on deep architectures. As a matter of fact, depth in these architectures has been proven to be extremely beneficial to their performance, while LSTM-based models were unable to gain much from a large increase in their size [79].

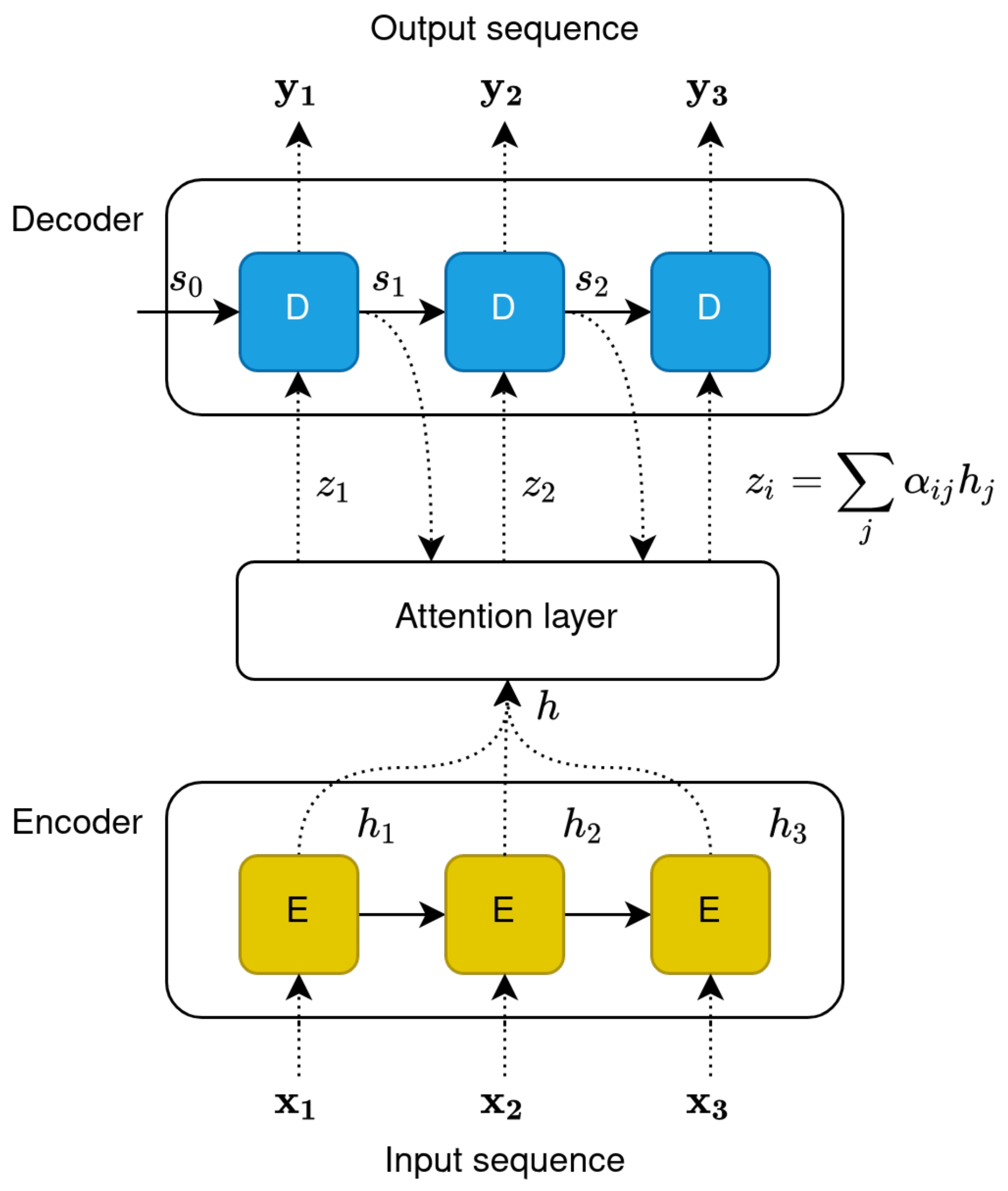

5.4.2. The Attention Mechanism

Attention was initially utilised as an enhancement to various architectures, and was meant to allow the learning process to focus on more relevant parts of input sentences (i.e., give them “attention”). As previously stated, seq2seq tasks have been traditionally approached with RNN-based encoder–decoder architectures, where both the encoder and the decoder are stacked RNN layers.

The concept of attention was introduced by Bahdanau et al. [76] to mitigate the fixed-length context bottleneck in neural machine translation tasks. The authors argued that, by propagating information about the complete input sequence, the decoder can discern between input words and learn which of them are relevant for generating the next target word. Formally, the attention mechanism relies on enriching the input context ($c_i$) of each decoder unit with the hidden states of the encoder (also called “annotations”), which carry information on the whole input sequence. Figure 4 highlights the architecture of a simple encoder–decoder recurrent model enhanced with the attention mechanism. Equation (1) describes the computation of the attention score between the input word at position $j$ and the generated word $i$. This score can be seen as an alignment score, measuring how well the two words match. In the equation, $s_{i-1}$ denotes the decoder state for the previously generated word, $h_j$ the annotation of the input word at position $j$, and $a$ the learned alignment model.

$$\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k} \exp(e_{ik})}, \qquad e_{ij} = a(s_{i-1}, h_j) \tag{1}$$

Figure 4.

Unfolding of encoder–decoder architecture with attention mechanism.

The context of each decoder unit is then computed as in Equation (2), by multiplying the annotation of every word position ($h_j$) with the attention score $\alpha_{ij}$ generated by the attention model, and summing the results.

$$c_i = \sum_{j} \alpha_{ij} h_j \tag{2}$$

The mechanism described here is generally referred to as “additive attention”. While there are different approaches to the integration of the attention mechanism in seq2seq architectures (content-based, dot-product, etc.), the goal remains to learn an alignment score that measures the relative importance between words in the input and output sequence.
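A minimal sketch of additive attention is given below. It assumes the alignment model takes the form $v^\top \tanh(W s + U h)$, where $s$ is the previous decoder state and $h$ an encoder annotation; the parameter names `W`, `U` and `v` and all numerical values are illustrative placeholders for learned parameters.

```python
import math


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(s_prev, annotations, W, U, v):
    """Return the attention weights and the resulting context vector."""
    proj_s = matvec(W, s_prev)
    scores = []
    for h in annotations:
        proj_h = matvec(U, h)
        # Alignment score between the decoder state and this annotation.
        scores.append(sum(vk * math.tanh(a + b) for vk, a, b in zip(v, proj_s, proj_h)))
    alphas = softmax(scores)
    dim = len(annotations[0])
    # Context: annotations averaged according to their attention weights.
    context = [sum(a * h[d] for a, h in zip(alphas, annotations)) for d in range(dim)]
    return alphas, context


identity = [[1.0, 0.0], [0.0, 1.0]]
annotations = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # one per input word
alphas, context = additive_attention([1.0, 0.0], annotations, identity, identity, [1.0, 1.0])
```

The weights sum to one, so the context is a weighted average of the annotations that is recomputed for every generated word rather than fixed once for the whole sentence.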

Applications of attentive neural networks are now widespread, even beyond the boundaries of natural language processing, where attention initially proved its value. Notable examples of application in the text classification domain are hierarchical attention networks (HAN) [71,80]. These methods rely on the application of attention both at the word level, while encoding document sentences, and at the sentence level, roughly encoding the importance of each sentence with respect to the target sequence. Recently, Abreu et al. [71] have proposed a hybrid model (HAHNN) resorting to attention, along with temporal convolutional layers and GRUs.

However, attention has since been used as a foundation rather than just an added augmentation. The Transformer architecture is based on this idea, retaining a well-known encoder–decoder structure, but making no use of recurrence; instead, dependencies are drawn between input and output through the attention mechanism alone [81]. Transformers have been shown to lead to better results, while also gaining much in speed because they are highly parallelisable.

5.4.3. The Transformer Architecture

Vaswani et al. [81] introduced the Transformer architecture, a novel encoder–decoder model that allows one to process all input tokens (e.g., words) simultaneously, rather than sequentially. Input sequences in Transformers are presented as a bag of tokens, without any notion of order. To learn dependencies between tokens, the Transformer relies on what is defined as a “self-attention” mechanism. Additionally, a special encoding step performed before the first layer of the encoder ensures that the embeddings for the same word appearing at a different position in the sentence will have a different representation. This step is called positional encoding, and its purpose is injecting information about the relative positioning between words, which would otherwise be lost.
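The effect of positional encoding can be sketched with fixed sinusoidal encodings, assumed here as one possible scheme; the dimensions and the toy embedding are illustrative.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for k in range(d_model):
            angle = pos / (10000 ** ((2 * (k // 2)) / d_model))
            row.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = positional_encoding(seq_len=3, d_model=4)

# The same word embedding placed at positions 0 and 2 of a sentence.
embedding = [0.3, -0.1, 0.7, 0.2]
at_first = [e + p for e, p in zip(embedding, pe[0])]
at_third = [e + p for e, p in zip(embedding, pe[2])]
```

Adding the encoding to the embedding means that the two occurrences of the word enter the first encoder layer with different representations, even though the attention layers themselves are insensitive to order.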

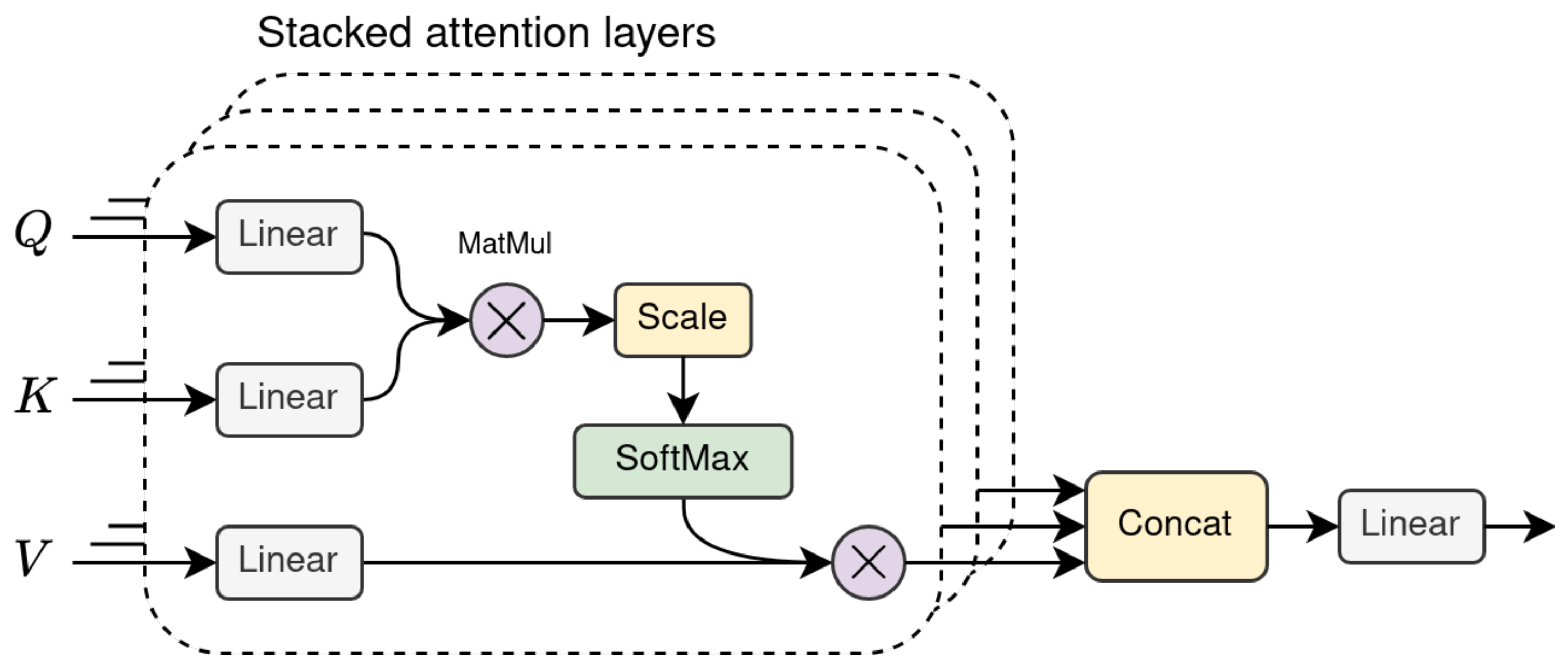

The key component of this architecture is the self-attention layer, which intuitively allows the encoder to look at other words in the input sentence whenever processing one of its words. Running several layers of this type in parallel creates a multi-head attention (MHA) layer, as shown in Figure 5. The individual outputs are then condensed in a single matrix by concatenating the head outputs and passing the result through a linear layer.

Figure 5.

The multi-head attention layer used in the Transformer architecture.

Encoder

In the encoding part, the input embeddings are multiplied by three separate weight matrices (Equation (3)) to generate different word representations, which the authors name Q (queries), K (keys) and V (values), following the naming convention used in information retrieval.

$$Q = XW^{Q}, \qquad K = XW^{K}, \qquad V = XW^{V} \tag{3}$$

Here, $W^{Q}$, $W^{K}$ and $W^{V}$ are the learned weight matrices, $X$ contains the word embeddings for the input sequence, and $Q$, $K$ and $V$ contain the word representations. The product of the query and key matrices (Q and K) is taken to compute the self-attention score. With respect to the general attention mechanism introduced in the previous section, this can be seen as the alignment score between each word and the other words in the sentence, which depends on the relative importance between them. Moreover, the authors decided to use scaled dot-product attention instead of additive attention, mainly for efficiency reasons. In order to improve gradient stability, the score is divided by the square root of the representation length for each word ($d_k$), and a softmax is used to obtain a probability distribution. Finally, the representation of each word is obtained by multiplying the resulting attention weights with the V matrix containing the input representation. This operation is defined in Equation (4).

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{4}$$
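The computation described in this paragraph can be sketched for a single attention head as follows. The helper names and the toy matrices (identity weights and two one-hot "embeddings") are assumptions chosen so that the result is easy to inspect; real models use learned weights and much larger dimensions.

```python
import math


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def softmax(row):
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over the rows (words) of X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))  # one score per pair of words
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


identity = [[1.0, 0.0], [0.0, 1.0]]
X = [[1.0, 0.0], [0.0, 1.0]]  # two words, embedding size 2
output, weights = self_attention(X, identity, identity, identity)
```

Each row of `weights` is a probability distribution over the words of the sentence, and each row of `output` is the corresponding weighted mix of the value vectors.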

The Transformer multi-head self-attention layer performs these operations multiple times in parallel. According to the authors, this was done to extend the diversity of representation sub-spaces that the model can attend to. The output of the attention heads is concatenated and passed through a linear layer to obtain the final representation that condenses information from all the attention heads. This representation is then summed with residual input, normalised and passed to a feed-forward linear layer.

Decoder

During the decoding phase, every decoder layer receives the output of the encoder (the K and V matrices) and the output of the previous decoder layer (or the target sequence for the first one). The key difference between the encoder and decoder layers is the presence, in the latter, of an additional MHA layer, applied to the K and V matrices from the encoder. Additionally, self-attention layers are modified into what are defined as “masked” self-attention layers. During training, the Transformer decoder is fed the real target sequence (a procedure called “teacher forcing”); this removes the need to train in an autoregressive fashion, which would make the model subject to the same bottleneck as RNNs. On the other hand, at inference time, the decoder is indeed autoregressive, since there are no target data and the generation of the next word necessarily depends only on the previously generated sequence. While predicting the token at position $i$, the masked MH self-attention layer ensures that only the self-attention scores between words at positions $< i$ are used. This is done by adding a mask $M$ to the scaled attention scores, as in Equation (5). $M$ is set to $-\infty$ for masked positions, and 0 otherwise, so that the exponential in the softmax operation zeroes the attention weights for masked tokens.

$$\mathrm{MaskedAttention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}} + M\right)V \tag{5}$$

This allows one to train the model in a parallel fashion, while ensuring that the model behaves consistently between training and inference time (without “cheating” at training time by looking ahead). The additional MHA layer of the decoder works like the one previously described, with the only exception being that the query matrix Q is the output of the preceding masked MHA layer. Residual connections and layer normalisation are also used in the decoder after each attention and feed-forward layer.
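The masking step can be sketched as follows. The sketch assumes that the decoder input is the target sequence shifted right by one position, so that row $i$ of the mask may attend to columns $j \le i$ of that shifted input (that is, to target tokens before position $i$). The uniform toy scores are an illustrative choice.

```python
import math


def causal_mask(n):
    """0 where attention is allowed (j <= i), -inf where it is forbidden."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax of scores + mask; masked entries get weight 0."""
    weights = []
    for s_row, m_row in zip(scores, mask):
        shifted = [s + m for s, m in zip(s_row, m_row)]
        top = max(shifted)  # finite, because the diagonal is never masked
        exps = [math.exp(v - top) for v in shifted]  # exp(-inf) is 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


scores = [[0.0] * 3 for _ in range(3)]  # toy scaled attention scores
weights = masked_softmax(scores, causal_mask(3))
```

All rows are computed at once, yet no row places any weight on later positions, which is what allows parallel training without looking ahead.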

5.4.4. BERT and GPT

Recent DLMs are built upon and expand the Transformer architecture. The performance achievable on various NLP tasks, such as TC subtypes, has been boosted by what is considered the new standard in NLP transfer learning. This consists of utilising a language model pre-trained on generic textual data, which is subsequently specialised for the task at hand through a “fine-tuning” process.

The fine-tuning process consists of the adaptation of a model to a downstream task (i.e., classification). Classifiers are often just a small segment of the overall algorithm, yet their training procedure usually affects the pre-trained representation of the DLM as well. This is meant to specialise the overarching pipeline on the domain of the task, and usually leads to better results. Technically speaking, the internal representation of the pre-trained language model receives minimal changes from the fine-tuning process, which is a desirable outcome, as otherwise the DLM would incur an excessive loss of generality.

GPT

Although the original Transformer architecture is well suited for language modelling, researchers have argued that, for some tasks, it may learn redundant information in its encoding and decoding phases. It has been suggested that limiting the architecture to either encoders or decoders may result in equivalent performance and lighter models [82]. According to this principle, the Generative Pre-trained Transformer (GPT) [83] utilises a decoder-only architecture, which stacks multiple Transformer–decoder layers. The decoder block of a Transformer is autoregressive (AR), as it defines the conditional probability distribution of each token of a target sequence given the previous ones. Simply put, GPT’s pre-training task is that of next word prediction. Precisely because it is an autoregressive model, the GPT architecture produces a language model of which the predictions are only conditioned by either its left or right context (GPT’s original architecture is left-to-right). This means that it is not bidirectional (a property that, for instance, BiLSTMs possess).

The decoder-only architecture has the additional benefit of performing better than traditional encoder–decoder models when dealing with longer sequences of text, making it particularly suitable for generative tasks (e.g., abstractive text summarisation and generative question answering). The authors of GPT provide adaptations for it to be used in a variety of downstream tasks, including classification.

BERT

Bidirectional Encoder Representations from Transformers (BERT) [16] closely followed the GPT model, and traded its auto-regressive properties in exchange for bidirectional conditioning. BERT’s architecture is, in contrast with GPT, a multi-layer bidirectional Transformer encoder. BERT’s training objective is also different, as it follows a “masked language model” (MLM) procedure. A percentage of the input is masked and, rather than predicting the next word, the network tries to predict masked tokens. This training strategy is crucial to achieving bidirectional conditioning, and allows the model to learn the relationship between masked words and their left and right context [84]. BERT is also trained on a secondary task, namely next sentence prediction (NSP), in which it is presented with two sentences and must guess in a binary fashion whether the second sentence follows the first. This task is meant to allow the model to better learn sentence relationships.
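The input corruption behind the MLM objective can be sketched as follows. The 15% masking rate and the `[MASK]` placeholder are assumed defaults for illustration, and the sketch omits refinements such as occasionally substituting a random token or leaving a selected token unchanged.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Randomly hide tokens; return the corrupted input and what to predict."""
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[i] = token  # the model must recover this token
        else:
            masked.append(token)
    return masked, targets


tokens = "the model learns from both left and right context".split()
masked, targets = mask_tokens(tokens, mask_prob=0.3)
```

Since a hidden token can sit anywhere in the sentence, recovering it requires using the words on both of its sides, which is where the bidirectional conditioning comes from.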

Most interestingly, the adaptation of BERT to downstream tasks is very simple. In fact, outstanding results have been obtained in classification by simply fine-tuning a model that passes the representations obtained by the encoders through a single-layer, feed-forward neural network [16].
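To make the simplicity of this adaptation concrete, the sketch below applies a single linear layer followed by a softmax to a pooled sentence representation. It assumes that the encoder output has already been reduced to one vector per document (in BERT, the final hidden state of the special `[CLS]` token); the function names, weights, and dimensions are purely illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(pooled, weights, bias):
    """Single-layer feed-forward classification head.

    pooled:  encoder representation of the document (list of floats)
    weights: one row of weights per class
    bias:    one bias per class
    """
    logits = [sum(w * x for w, x in zip(row, pooled)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


if __name__ == "__main__":
    # Toy 2-dimensional representation and 2 classes.
    print(classify([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]))
```

During fine-tuning, the head's parameters are learnt together with those of the encoder.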

5.4.5. Recent Transformer Language Models

Since the NLP landscape is constantly evolving, we deem it useful to provide a selection of a few recently proposed language models, and outline their main contributions and differences with previous methods. Most recent models build on the Transformer architecture or introduce variations on the BERT and GPT training approaches. Many are general language models jointly trained on multiple NLP tasks, and are evaluated on the GLUE [85] multi-task benchmark.

Direct Successors

BERT and GPT were just the starting point for the development of many variations and improvements, of which we cite the most influential. The Robustly Optimised BERT Pretraining Approach (RoBERTa) [13] was introduced by Liu et al. as a successor of BERT. It improves the training procedure by introducing dynamic masking (the set of masked tokens changes across training epochs), while also removing the next sentence prediction task. The GPT model has also had successors, the most popular being GPT-2 [12]. GPT-2 advanced mostly in terms of the data utilised and the scale of the models (a trend that continued in GPT-3 [86]).

XLNet

As with GPT, XLNet [19] is an autoregressive model. More specifically, it is a generalised autoregressive pre-training approach, which calculates the probability of a word token conditioned on all permutations of word tokens in a sentence. To maintain information about the original relative ordering of words in the midst of all the permutations, XLNet borrows the idea of attention masking, by which, during context calculations, it masks tokens outside of the current context. As an example, consider an input sequence with four tokens $[x_1, x_2, x_3, x_4]$ and a permutation of it whose first element is the third token, such as $[x_3, x_2, x_4, x_1]$. The first element of the permutation has no contextual knowledge, and, to reflect this, the attention mask should forbid looking at the other sequence positions (producing mask $[0, 0, 0, 0]$), while the second element should know about the first (namely, that it should be in position 3, giving mask $[0, 0, 1, 0]$). XLNet also introduces a two-stream self-attention schema to allow position-aware word prediction. In other words, this allows token probabilities to be conditioned on their position index without the model trivially knowing whether the word should be part of the sentence or not. XLNet’s approach allows it to overcome the lack of bidirectionality of previous autoregressive models. Furthermore, this approach addresses the downsides of masked language modelling (namely, the discrepancy between pre-training and fine-tuning tasks), as it does not rely on data corruption. XLNet has achieved state-of-the-art performance on many TC benchmarks in English, as will be showcased in Section 6.3.
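The masking scheme of the example can be sketched as follows. The factorisation order 3, 2, 4, 1 is an assumption chosen so that the first element of the permutation sits in position 3, as in the text; the function name `permutation_masks` is hypothetical, and the sketch covers only the masks, not the two-stream attention.

```python
def permutation_masks(order):
    """Compute one attention mask per position for a factorisation order.

    order: 1-based original positions in the order in which they are
           predicted, e.g. [3, 2, 4, 1].
    Returns {position: mask}, where mask[j] == 1 if original position
    j + 1 precedes `position` in the order and may be attended to.
    """
    n = len(order)
    masks, seen = {}, []
    for pos in order:
        masks[pos] = [1 if (j + 1) in seen else 0 for j in range(n)]
        seen.append(pos)
    return masks


if __name__ == "__main__":
    masks = permutation_masks([3, 2, 4, 1])
    print(masks[3])  # first in the order, sees nothing: [0, 0, 0, 0]
    print(masks[2])  # second, sees only position 3:     [0, 0, 1, 0]
```

Masks are indexed by original position, so the model still knows where each visible token belongs in the sentence even though the prediction order is permuted.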

T5

The Text-To-Text Transfer Transformer (T5) [87] is a unified text-to-text model trained to solve a variety of NLP tasks. While general multi-task language models are mostly trained using task-specific architectural components and loss functions, T5’s authors build a unified learning framework that casts every NLP problem as a text-to-text problem. This allows them to use the exact same model, loss function and hyperparameters to produce a single, unified multi-task model. The architecture of T5 closely follows the original Transformer architecture. Differences from the original implementation are limited to the layer normalisation (simplified by not using additive bias), the usage of dropout on the linear and attention layers, and a relative positional encoding strategy. Here, instead of using a fixed embedding for every position, a different learnt embedding is produced depending on the offset between the “key” and the “query” being compared in the multi-head attention layer. T5 is pre-trained on the C4 English corpus (the Colossal Clean Crawled Corpus, derived from the Common Crawl) using a masked language modelling objective (similarly to BERT) that masks 15% of the tokens in every sentence. Fine-tuning is performed on all tasks at once: all datasets, including the ones for translation, question answering, and classification, are concatenated in a single dataset using a sampling strategy to ensure a balance between samples from each task. Differently from BERT, which has an encoder-only architecture, T5 uses the same causal masking strategy used in the Transformer decoder, where the attention output is masked to prevent the model from attending to subsequent positions. T5 has had a recent successor, T0 [88], which utilises the same model, but pushes transfer learning further towards zero-shot generalisation.
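Casting a task as text-to-text amounts to serialising both the input and the label as strings. The sketch below illustrates the idea for classification; the helper `to_text_to_text`, the prefix format, and the example prefix are illustrative assumptions rather than the exact strings used by T5's authors.

```python
def to_text_to_text(task_prefix, text, target):
    """Serialise one labelled example as an (input, target) string pair.

    The task is identified by a textual prefix, and the label is
    emitted as plain text rather than as a class index.
    """
    return {"input": f"{task_prefix}: {text}", "target": str(target)}


if __name__ == "__main__":
    example = to_text_to_text("sentiment", "A great film.", "positive")
    print(example)
```

Since every task shares this string-to-string interface, one model and one loss function can be used for classification, translation, and question answering alike.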

DeBERTa

Decoding-Enhanced BERT with Disentangled Attention (DeBERTa) [89] is another language model based on the Transformer architecture. As with BERT, the model is pre-trained using an MLM objective, and it is fine-tuned using adversarial training. This strategy improves its generalisation ability and its robustness to adversarial examples. The architecture introduces a disentangled attention layer. Unlike the Transformer, where positional encoding is added to the word content embeddings to create the input representation, DeBERTa encodes words and positions separately. Attention scores for every position are computed using disentangled matrices and explicitly depend on the word’s content and on its relative position in the sentence. The decoder is also modified to consider the absolute positions of words when predicting a masked token.
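In symbols, the disentangled attention score between positions $i$ and $j$ can be sketched as the sum of a content-to-content, a content-to-position, and a position-to-content term. The notation below is introduced here for illustration and is not taken from the surrounding text: superscripts $c$ and $r$ are assumed to mark query and key projections of the content and relative-position embeddings, respectively, and $\delta(i,j)$ is assumed to denote the relative distance from position $i$ to position $j$.

```latex
\tilde{A}_{i,j} =
    Q_i^{c} \, {K_j^{c}}^{\top}
  + Q_i^{c} \, {K_{\delta(i,j)}^{r}}^{\top}
  + K_j^{c} \, {Q_{\delta(j,i)}^{r}}^{\top}
```

Under this reading, adding positional encodings to the input, as in the original Transformer, would entangle these terms in a single product, whereas here each contribution is computed with its own matrices.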

ByT5

ByT5 is an adaptation of the T5 model that processes raw bytes of text instead of tokens. Models like BERT rely on a separate tokenisation step to chunk documents into a vocabulary of sub-words. This translates into higher memory requirements, since large vocabularies require massive embedding matrices with a large number of parameters. Additionally, the authors argue that sub-word tokenisation is not robust to typos and lexical variants, and that reducing all unknown words to the same out-of-vocabulary (OOV) token prevents the model from distinguishing between different OOV situations. Instead of processing words or sub-words, ByT5 is fed UTF-8 bytes without any preprocessing operation. To represent byte-level embeddings, the model only needs a dense matrix of 256 embeddings, with additional embeddings for the special tokens used to pad sequences and to signal the end of a sentence. In this case, there is no need to handle the OOV case, since all possible bytes are represented. Furthermore, removing the dependency on tokenisation simplifies the training of the model on multilingual corpora, since there is no need for language-specific tokenisation strategies. ByT5 uses a slightly modified T5 architecture with a heavier encoder, which is three times the size of the decoder. The authors empirically find that a bigger encoder works better for byte-level language models. The model is pre-trained with a “span corruption” self-supervised objective, where the model learns to reconstruct sequences of 20 bytes that are masked in the input sentences.
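Byte-level encoding needs nothing beyond the UTF-8 codec, as the following sketch shows. The identifiers 0 and 1 for the padding and end-of-sentence tokens, and the resulting offset of 2 for byte values, are illustrative assumptions and not necessarily ByT5's actual vocabulary layout.

```python
PAD_ID = 0       # assumed id of the padding token
EOS_ID = 1       # assumed id of the end-of-sentence token
BYTE_OFFSET = 2  # byte value b is mapped to id b + BYTE_OFFSET


def encode(text, pad_to=0):
    """Map text to byte-level ids, append EOS, and right-pad if requested."""
    ids = [b + BYTE_OFFSET for b in text.encode("utf-8")] + [EOS_ID]
    ids += [PAD_ID] * max(0, pad_to - len(ids))
    return ids


def decode(ids):
    """Invert `encode`, dropping the special tokens."""
    data = bytes(i - BYTE_OFFSET for i in ids if i >= BYTE_OFFSET)
    return data.decode("utf-8", errors="replace")


if __name__ == "__main__":
    ids = encode("café", pad_to=8)
    print(ids)           # "é" occupies two bytes, so 5 byte ids + EOS + padding
    print(decode(ids))   # round-trips to the original string
```

With this layout the embedding matrix has 256 + 2 rows regardless of the language, and a typo changes only the affected bytes instead of producing an unknown token.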

ERNIE 3.0

ERNIE [90] enhances the pre-training phase of language models by integrating knowledge graph information. As with other language models, ERNIE is trained using different unsupervised tasks, including MLM, sentence reordering, and sentence distance. The latter is a variation of the NSP task, where the model is asked to predict whether two sentences are adjacent, within the same document (but not adjacent), or from different documents altogether. In order to integrate knowledge graph information in the training phase, the tasks of knowledge masked language modelling and universal knowledge–text prediction are added. The former objective is used to let the model learn higher-level entity representations, for instance by masking the name of a character (e.g., “Harry Potter”). The latter strategy extends the former by explicitly embedding a knowledge graph. The model is provided with a knowledge graph representation of the sentence (in the shape of a triple) and a sentence, both with masked tokens, and must use this information to fill in the blanks. According to the authors, these tasks allow the model to gain a lexical understanding of words, while traditional language models only capture more global syntactical and semantic knowledge. ERNIE is composed of two modules: a universal representation module, meant to capture shared word representations, and a pair of task-specific modules, one for natural language understanding tasks and the other for natural language generation tasks. Both modules use the Transformer-XL architecture [91], which differs from the original Transformer mainly in the addition of an auxiliary recurrence module to aid in the modelling of long sequences.
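The three-way target of the sentence distance task can be derived directly from document structure. In the sketch below, each sentence is identified by a hypothetical `(document id, sentence index)` pair, and the numeric class ids are arbitrary assumptions.

```python
ADJACENT = 0       # neighbouring sentences of the same document
SAME_DOCUMENT = 1  # same document, but not adjacent
DIFFERENT = 2      # sentences from different documents


def sentence_distance_label(a, b):
    """Return the sentence distance class for two sentences.

    a, b: (document id, sentence index) pairs.
    """
    doc_a, idx_a = a
    doc_b, idx_b = b
    if doc_a != doc_b:
        return DIFFERENT
    return ADJACENT if abs(idx_a - idx_b) == 1 else SAME_DOCUMENT


if __name__ == "__main__":
    print(sentence_distance_label(("doc1", 0), ("doc1", 1)))
    print(sentence_distance_label(("doc1", 0), ("doc1", 5)))
    print(sentence_distance_label(("doc1", 0), ("doc2", 1)))
```

As with NSP, the labels come for free from unlabelled text, which is what makes the task usable for unsupervised pre-training.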

FLAN

The Fine-tuned Language Net (FLAN) [92] explores the usage of instruction fine-tuning to enhance the zero-shot generalisation ability of a general pre-trained language model (similarly to T0). Zero-shot learning aims at giving models the ability to solve, at inference time, novel tasks that were unseen during training. To do so, the authors gathered data from 62 NLP datasets and 12 different tasks (including language inference, translation, question answering, and text classification) and aggregated them in a mixed multi-task dataset. A maximum of 30,000 samples are selected from each dataset in order to limit task imbalance. Samples from the final dataset are enriched with instruction templates, defined as natural language descriptions of the task that the model should solve. For instance, the sentence “classify this movie review as positive or negative” can be used to ask the model to perform binary classification. To increase diversity, 10 different templates are manually created for each dataset, some of which ask the model to perform collateral tasks derived from the original one (e.g., asking for a movie classification from an SA task). FLAN uses a Transformer decoder-only architecture, similarly to GPT, with 137 billion parameters. The model is pre-trained on a collection of English documents that includes a wide variety of textual data, ranging from Wikipedia articles to computer code. This pre-trained model is then used for the instruction-tuning procedure.
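The construction of instruction-tuning samples can be sketched as follows. Only the first template is taken from the example in the text; the second template, the function name `build_instruction_examples`, and the choice of keeping the first samples of a dataset (rather than, say, a random subset) are assumptions made for illustration.

```python
import random

TEMPLATES = [
    "Classify this movie review as positive or negative: {text}",
    # Hypothetical paraphrase, standing in for the other manual templates.
    "Is the sentiment of the following review positive or negative? {text}",
]


def build_instruction_examples(samples, templates,
                               max_per_dataset=30000, seed=0):
    """Cap a dataset and wrap each sample in a randomly chosen template.

    samples: list of dicts with "text" and "label" keys.
    """
    rng = random.Random(seed)
    capped = samples[:max_per_dataset]  # limit task imbalance
    return [
        {"input": rng.choice(templates).format(text=s["text"]),
         "target": s["label"]}
        for s in capped
    ]


if __name__ == "__main__":
    data = [{"text": "Great film", "label": "positive"},
            {"text": "Dull plot", "label": "negative"}]
    for example in build_instruction_examples(data, TEMPLATES):
        print(example)
```

Varying the template across samples discourages the model from latching onto one specific phrasing of the instruction.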

5.4.6. Graph Neural Networks

Recent work in the field of AI explores the application of neural networks to graph representations [93]. In this context, graph neural networks (GNNs) [94,95] are architectures that utilise such structures to capture the dependencies and relations between nodes. Well-established approaches, most notably CNNs, are being generalised to arbitrarily structured graphs [96,97].

Successful Approaches

Representations based on heterogeneous graphs, in which nodes are both words and documents, have seen wide success; TC is thus cast as a node classification task for document nodes. TextGCN [98] utilises a graph convolutional network (GCN) on text mapped onto a graph structure. Words are connected to other words as well as documents, but there are no inter-document relations (documents are able to indirectly exchange information through multiple convolutional layers). Weights of document–word edges are based on word occurrence measurements (usually TF-IDF), while word–word edges are weighted on word co-occurrence in the whole corpus (usually variations of point-wise mutual information [99]). The training procedure learns word and document embeddings, and is therefore connected to text embedding techniques (such embeddings are learnt simultaneously in this case). On this account, it is easy to see how graph architectures can also be integrated with deep language models. BertGCN [100], for example, trains a GCN jointly with a BERT-like model, in order to leverage the advantages of both pre-trained language models and graph-based approaches. Document nodes are initialised through BERT-style embeddings and updated iteratively by the GCN layers. Another example is MPAD (Message Passing Attention Network for Document Classification) [101], which proposes the application of the message passing framework to TC. The nodes represent unique words and the directed edges encode the text flow and word co-occurrence statistics. Node and edge information is iteratively aggregated and combined using GRUs to update word representations.
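The two kinds of edge weights used in a word–document graph can be sketched in plain Python. This is an illustration under stated assumptions and not the TextGCN reference implementation: co-occurrences are counted within sliding windows of a hypothetical size, only word pairs with positive point-wise mutual information are kept, and a basic TF-IDF variant is used. Documents are assumed to be non-empty lists of tokens.

```python
import math
from collections import Counter
from itertools import combinations


def sliding_windows(tokens, size):
    """Return all windows of `size` consecutive tokens (or the whole text)."""
    if len(tokens) <= size:
        return [tokens]
    return [tokens[i:i + size] for i in range(len(tokens) - size + 1)]


def pmi_edges(docs, window_size=3):
    """Word-word edges weighted by positive PMI over sliding windows."""
    windows = [w for doc in docs for w in sliding_windows(doc, window_size)]
    n = len(windows)
    word_count, pair_count = Counter(), Counter()
    for window in windows:
        vocab = sorted(set(window))
        word_count.update(vocab)
        pair_count.update(combinations(vocab, 2))
    edges = {}
    for (a, b), c in pair_count.items():
        # PMI = log( p(a, b) / (p(a) * p(b)) ), probabilities over windows
        pmi = math.log(c * n / (word_count[a] * word_count[b]))
        if pmi > 0:
            edges[(a, b)] = pmi
    return edges


def tfidf_edges(docs):
    """Document-word edges weighted by a basic TF-IDF variant."""
    n_docs = len(docs)
    doc_freq = Counter(w for doc in docs for w in set(doc))
    edges = {}
    for d, doc in enumerate(docs):
        for w, c in Counter(doc).items():
            edges[(d, w)] = (c / len(doc)) * math.log(n_docs / doc_freq[w])
    return edges


if __name__ == "__main__":
    corpus = [["good", "movie"], ["bad", "movie"], ["good", "plot"]]
    print(tfidf_edges(corpus))
    print(pmi_edges(corpus))
```

Note that no document–document edges are created: documents exchange information only through the words they share, as described above.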

Transductive Nature

Many GNN architectures include unlabelled test document nodes in the training procedure, making them inherently transductive. Transductive learning [102] can be useful because it allows label influence to be propagated to unlabelled test data during training, notably removing the need for modelling the relation between data and target labels. Often, less data is required to reach comparable performance than with traditional inductive learning approaches. However, this also has the downside of not being able to quickly generate predictions for unseen test documents that were not included in the training procedure (i.e., online testing). Furthermore, building a GNN for a large-scale text corpus can be costly due to memory limitations [103].
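In practice, the transductive setting is often implemented by keeping every document node in the graph while restricting the loss to the labelled ones. The sketch below shows such a masked cross-entropy; the function name and the use of `None` for unknown labels are illustrative assumptions.

```python
import math


def masked_cross_entropy(probs, labels, train_mask):
    """Average cross-entropy over labelled (training) nodes only.

    probs:      predicted class distribution for every node in the graph
    labels:     gold class index per node (None for unlabelled test nodes)
    train_mask: True for nodes whose label may contribute to the loss
    """
    losses = [-math.log(p[y])
              for p, y, keep in zip(probs, labels, train_mask) if keep]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Two document nodes: the first is labelled, the second is a test node
    # that takes part in graph propagation but not in the loss.
    probs = [[0.5, 0.5], [0.9, 0.1]]
    print(masked_cross_entropy(probs, [0, None], [True, False]))
```

Test nodes therefore shape the learnt representations through graph propagation, which is also why a document that was absent from the graph cannot be classified without rebuilding it.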

Weaknesses and Solutions