Abstract

In the context of image-guided surgery, augmented reality (AR) represents a groundbreaking improvement, especially when combined with wearability in open surgery. Commercially available AR head-mounted displays (HMDs), designed for general purposes, are increasingly being used beyond their intended use to develop surgical guidance applications, with the ambition of demonstrating the potential of AR in surgery. The applications proposed in the literature highlight both the demand for AR guidance in the operating room and the limitations that prevent commercial HMDs from meeting this need. The medical domain requires purpose-built devices that address ergonomics as well as surgical accuracy targets and compliance with medical device regulations. Within the framework of an EU Horizon2020 project, a hybrid video and optical see-through AR headset has been developed together with a software architecture, both specifically designed to integrate seamlessly into the surgical workflow. This paper describes the overall architecture of the system. The developed AR HMD surgical navigation platform was successfully tested on seven patients, assisting the surgeon in performing Le Fort 1 osteotomy in cranio-maxillofacial surgery and demonstrating the value of the hybrid approach as well as the safety and usability of the navigation platform.

1. Introduction

Augmented reality (AR) head-mounted displays (HMDs) allow real-time visualization of additional virtual information within the real environment, directly in front of the user’s eyes, providing an enhanced and interactive user experience in different fields of application [1,2].

The use of commercial AR HMDs in the medical domain, and in particular in surgery, has been investigated by many authors [3,4,5,6,7].

In open surgery, translating state-of-the-art surgical navigators, which are based on the virtual reality (VR) paradigm, into a wearable system is the natural evolution of such devices, potentially providing surgeons with an improved and more effective navigation experience, with a positive impact on clinical outcome as well. AR HMDs eliminate the need to continuously shift the gaze between the patient and the display of a traditional navigator. Furthermore, the view of the real patient can be enriched with virtual elements consistent with the anatomy, offering the surgeon new guidance paradigms compared with a fully virtual environment.

Many authors report proof-of-concept experiences in the operating room with commercially available devices [8,9,10,11,12,13,14], underlining the enthusiasm of the surgical community for exploring the potential of AR HMDs, but also acknowledging the limitations of using a device not engineered for surgical tasks: (1) perceptual issues related to rendering appropriate focus cues [15,16,17], (2) the absence of a framework specifically dedicated to medical applications [18], (3) the need for a design that takes into account the surgeon’s posture to avoid neck overload [19,20] and the need to maintain the sterility of the surgeon’s hands, and (4) the need for millimetric virtual-to-real registration accuracy in many interventions.

Nevertheless, the translation of preclinical results from proof-of-concept and feasibility studies into daily practice has begun: several start-ups worldwide are developing custom HMDs dedicated to surgery, and the first FDA approval was granted in 2020 to the Augmedics device, which offers AR applications in spinal surgery [21].

The objective of this paper is to describe the full architecture of a wearable surgical navigator implementing a new AR paradigm, developed within the Horizon2020 VOSTARS project (G.A. 731974), and the challenges faced in bringing it into the operating room in compliance with EU regulations for non-CE-marked medical devices in clinical trials.

2. Materials and Methods

2.1. Theory

The design of the HMD was built around the three most important requirements for a wearable surgical navigator: (1) accuracy of the guidance system, (2) comfort for the user, and (3) safety for the patient.

AR HMDs enhance the natural view by superimposing computer-generated three-dimensional (3D) objects, implementing either an optical see-through (OST) or a video see-through (VST) paradigm [22].

With OST, the user preserves the direct view of the real scene through one or two near-eye semitransparent displays onto which the virtual content is projected. With VST visors, instead, the real scene is acquired by one or two external cameras and then shown on the non-transparent display(s) together with the virtual enrichment [23].

A clear advantage of the OST approach is the natural, unhindered view of the real world. However, the user’s point of view follows the real-time movements of the eyes and of the eye gaze. As a consequence, the spatial coherence between the digital content and the real scene, estimated in different ways [24,25,26], is still suboptimal; moreover, perceptual and technological limitations have yet to be addressed, as they preclude the use of such devices when high virtual-to-real registration accuracy is needed in peripersonal space (i.e., to accurately guide and perform manual surgical tasks) [16,27,28].

Despite these drawbacks, OST-based HMDs are the ones mostly considered for surgical applications [11,12,14,19,29], because they intrinsically offer a full-resolution view of the real world and because they allow the safe completion of every task even if the display fails, since the direct view of reality is not compromised [22,30]. In VST, a technical issue causing display failure makes it critical to remove the HMD before the task can continue.

On the other hand, the VST paradigm can offer an accurate registration of virtual content to the real scene at the cost of a camera-mediated view [29].

Provided that the virtual information is properly registered with the patient, the VST paradigm allows a pixel-wise digital blending between the computer-generated information and the camera-mediated view of the real patient. When properly implemented (i.e., ensuring low motion-to-photon latency and quasi-orthoscopic visualization of the working area [31,32,33]), VST introduces only negligible chromatic, temporal, and perspective alterations with respect to the naked-eye view, which generally do not affect manual performance [32], at least for short-term use. However, occluding the direct view of the real anatomy raises safety concerns in case of system failure.
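The pixel-wise blending mentioned above can be illustrated with standard alpha compositing. This is a minimal CPU sketch under stated assumptions: 8-bit RGB frames stored as nested lists, a per-pixel coverage mask produced by the renderer, and a single global opacity value. `blend_vst_frame` and its parameters are hypothetical names; the actual framework performs this kind of operation on the GPU with its own data structures.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]
Mask = List[List[bool]]


def blend_vst_frame(camera: Frame, virtual: Frame, mask: Mask,
                    opacity: float) -> Frame:
    """Blend rendered virtual content over a camera frame, pixel by pixel.

    Where mask is True the output is opacity * virtual + (1 - opacity) * camera;
    elsewhere the camera pixel is passed through unchanged.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be in [0, 1]")
    out: Frame = []
    for cam_row, vir_row, mask_row in zip(camera, virtual, mask):
        row: List[Pixel] = []
        for cam_px, vir_px, covered in zip(cam_row, vir_row, mask_row):
            if covered:
                r, g, b = (round(opacity * v + (1.0 - opacity) * c)
                           for c, v in zip(cam_px, vir_px))
                row.append((r, g, b))
            else:
                row.append(cam_px)
        out.append(row)
    return out


camera = [[(100, 100, 100), (200, 200, 200)]]
virtual = [[(0, 0, 255), (0, 0, 255)]]   # blue overlay, e.g. an osteotomy line
mask = [[True, False]]                   # only the first pixel is covered
print(blend_vst_frame(camera, virtual, mask, opacity=0.25))
```

Keeping uncovered pixels untouched means the camera view of the patient is never altered outside the rendered virtual elements.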

To exploit the strengths of both modalities, the VOSTARS visor was designed to offer both VST and OST viewing: high-accuracy tasks are guided in VST modality, whereas non-guided tasks, or tasks that do not demand high accuracy, are performed in OST.

This hybrid feature was the first step in designing and developing a system aimed at fulfilling strict requirements for a functional and reliable AR-based surgical navigator. The whole architecture is described in the following sections.

2.2. HMD-Based Surgical Navigation Platform

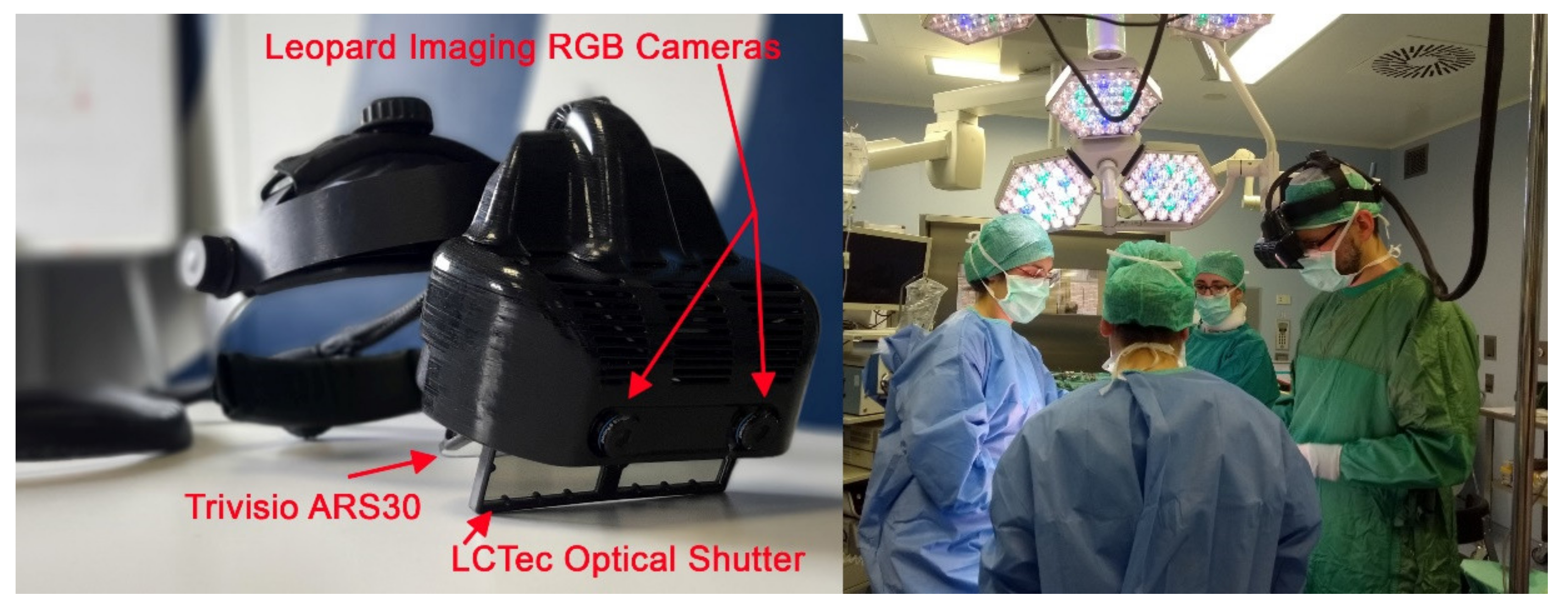

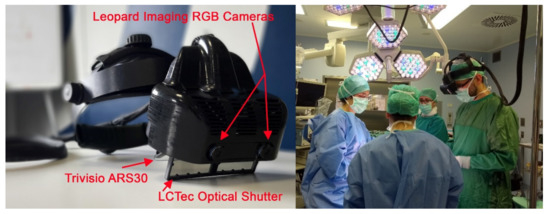

The hybrid AR HMD was designed and assembled on top of a re-engineered commercial OST visor, the ARS.30 by Trivisio (Trivisio, www.trivisio.com, Luxembourg) (accessed on 12 January 2022).

The key features of the headset are listed below.

- Hybrid OST/VST feature

The hybrid feature, which allows both an optical and a video see-through augmentation paradigm, was realized by means of a pair of liquid-crystal (LC) optical shutters (OS). We selected tailored OS, the FOS model by LC-Tec (LC-Tec Displays AB, https://www.lc-tec.se/, Sweden) (accessed on 12 January 2022), and stacked them onto the optical combiners of the OST display using an ad hoc modified case.

The transparency of the LC OS is controlled by a pulse-width-modulated (PWM) drive voltage, which allows switching between the OST modality (OS transparent) and the VST modality (OS opaque). A study confirmed that the shutters block enough light to provide a proper VST experience under operating room lighting conditions [34].

- Display specifications for proper guidance in peripersonal space

The ARS.30 device is equipped with two SXGA OLED microdisplays, each offering a large eye relief of 3 cm. The resolution is 1280 × 1024 and the diagonal angle of view is 30°, resulting in an average angular resolution of 1.11 arcmin/pixel, which is comparable with human visual acuity, while providing a sufficient field of view over the surgical table. The collimation optics of the visor were selected to bring the display images into focus at approximately the surgical table distance (43 cm; depth of field range: 32–58.5 cm). Moreover, the two displays were slightly toed-in so that their optical axes converge at approximately the focus distance of the displays, to avoid diplopia.

- AR in VST

The visor is housed in a 3D-printed plastic frame that incorporates two elements: the OS in front of the commercial OST displays, and a pair of world-facing RGB cameras that provide the VST view as well as inside-out tracking of the scene. The stereo camera pair is the LI-OV580 stereo camera (Leopard Imaging, www.leopardimaging.com, Fremont, CA, USA) (accessed on 12 January 2022), which provides a USB 3.0 interface board that synchronizes the signals from two 1/3” OmniVision 4-megapixel CMOS sensors (pixel size: 2 μm), each coupled with an M12 lens with 6 mm focal length and f/5.6 aperture, chosen to ensure a camera field of view (FOV) large enough to cover the entire angle of view of the displays.
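Whether the cameras cover the display angle of view can be estimated with a pinhole model. The sketch assumes a 2688 × 1520 active pixel array for the 4-megapixel sensor (an assumption, not stated in the text), ignores lens distortion and any image cropping, and splits the 30° display diagonal into horizontal and vertical angles assuming a rectilinear display with square pixels.

```python
import math
from typing import Tuple


def fov_deg(extent_mm: float, focal_mm: float) -> float:
    """Full angle of view of a pinhole camera for a given sensor extent."""
    return math.degrees(2.0 * math.atan(extent_mm / (2.0 * focal_mm)))


def display_fov_deg(h_px: int, v_px: int,
                    diag_fov_deg: float) -> Tuple[float, float]:
    """Split a diagonal FOV into horizontal and vertical FOVs, assuming a
    rectilinear (flat-image) display with square pixels."""
    half_tan = math.tan(math.radians(diag_fov_deg / 2.0))
    diag_px = math.hypot(h_px, v_px)
    h = 2.0 * math.degrees(math.atan(half_tan * h_px / diag_px))
    v = 2.0 * math.degrees(math.atan(half_tan * v_px / diag_px))
    return h, v


PIXEL_MM = 0.002             # 2 um pixel pitch (from the text)
SENSOR_PX = (2688, 1520)     # ASSUMED active array of the 4 MP sensor
FOCAL_MM = 6.0               # M12 lens focal length (from the text)

cam_h = fov_deg(SENSOR_PX[0] * PIXEL_MM, FOCAL_MM)
cam_v = fov_deg(SENSOR_PX[1] * PIXEL_MM, FOCAL_MM)
disp_h, disp_v = display_fov_deg(1280, 1024, 30.0)
print(f"camera {cam_h:.1f} x {cam_v:.1f} deg, display {disp_h:.1f} x {disp_v:.1f} deg")
print("camera covers display:", cam_h >= disp_h and cam_v >= disp_v)
```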

The stereo camera pair is mounted on top of the visor, close to the displays, with an interaxial distance equal to the mean anthropometric interpupillary distance (63 mm). This mitigates the effect of camera-to-eye parallax and prevents substantial distortions in the patterns of horizontal and vertical disparities between the stereo camera frames presented on the displays, thus achieving a quasi-orthostereoscopic perception of the scene under VST view.
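The same 63 mm baseline is what the stereo pair relies on to localize markers in 3D. For a rectified image pair, depth follows from disparity as Z = f·B/d. The sketch below triangulates a single marker centre under that assumption; the focal length in pixels is derived from the 6 mm lens and 2 μm pixels quoted above, while the principal point and the marker position are hypothetical. Real images would first need undistortion and rectification from the stereo calibration.

```python
from typing import Tuple


def triangulate_rectified(u_left: float, v_left: float, u_right: float,
                          f_px: float, cx: float, cy: float,
                          baseline_mm: float) -> Tuple[float, float, float]:
    """Recover a 3D point (left-camera frame, mm) from a rectified stereo pair:
    Z = f*B/d, X = (u - cx)*Z/f, Y = (v - cy)*Z/f."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = f_px * baseline_mm / disparity
    x = (u_left - cx) * z / f_px
    y = (v_left - cy) * z / f_px
    return x, y, z


F_PX = 6.0 / 0.002        # 6 mm focal length / 2 um pixels = 3000 px
CX, CY = 1344.0, 760.0    # hypothetical principal point
B_MM = 63.0               # interaxial distance from the text

# Simulate the projections of a marker centre at (10, -20, 430) mm ...
X, Y, Z = 10.0, -20.0, 430.0
u_l = F_PX * X / Z + CX
u_r = F_PX * (X - B_MM) / Z + CX   # right camera sits B_MM along +x
v = F_PX * Y / Z + CY
# ... and triangulate it back.
print(triangulate_rectified(u_l, v, u_r, F_PX, CX, CY, B_MM))
```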

- Computing Unit

The HMD is connected to an external computing unit to control the hardware components and to run the AR software framework.

The computing unit is a gaming laptop (Alienware M15R, Dell Technologies, www.dell.com, Round Rock, TX, USA) (accessed on 12 January 2022) with an Intel Core i7-9750H CPU @ 2.60 GHz (6 cores) and 12 GB of RAM. The graphics processing unit (GPU) is an Nvidia GeForce RTX 2060 (NVIDIA Corporation, www.nvidia.com, Santa Clara, CA, USA) (accessed on 12 January 2022).

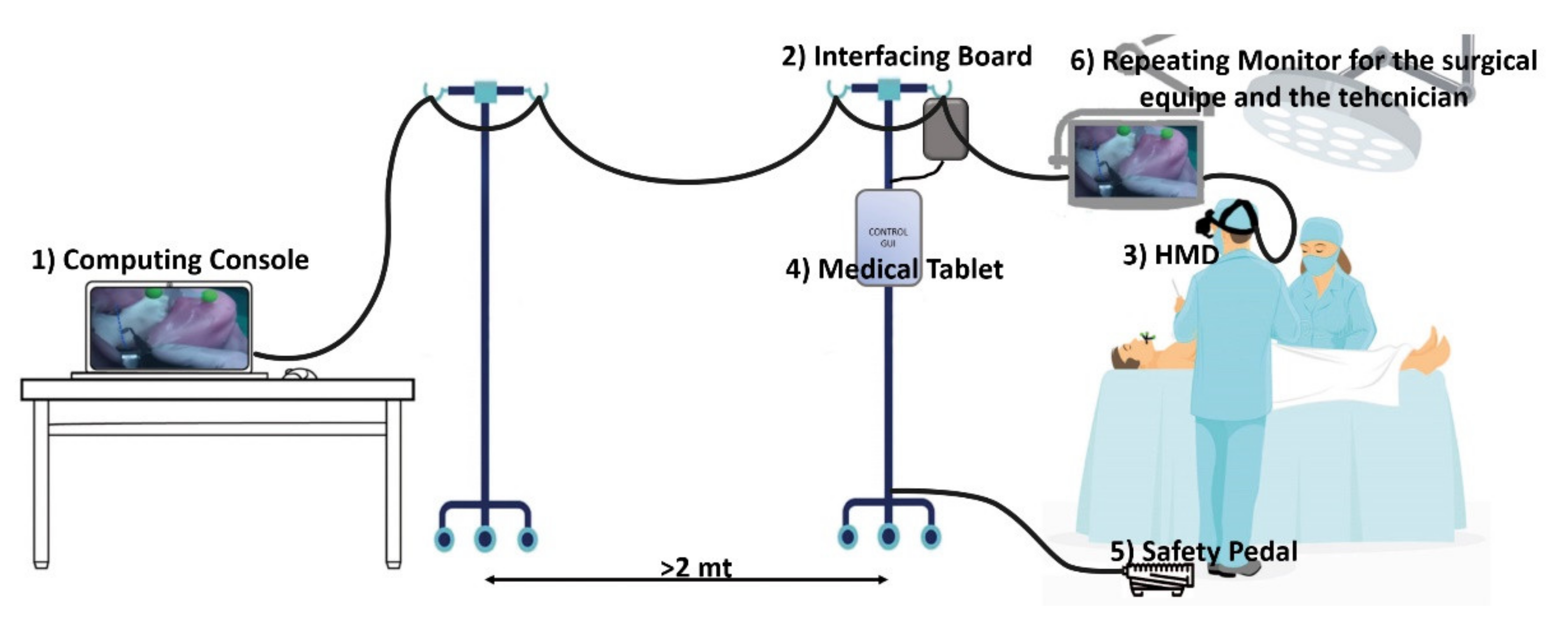

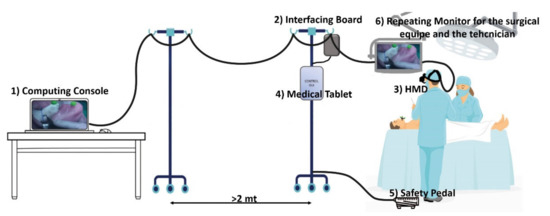

The selected laptop is not yet rated as a medical-grade device; therefore, for regulatory compliance, the console with the laptop was placed outside the “surgeon area” (i.e., at least 2 m away from the operating table).

- Cabling and Safety Pedal

A foot-operated pedal is also provided to allow the surgeon to directly control OST/VST switching, thus enabling an immediate return to a natural view during the surgical procedure.

The pedal was also designed as an emergency tool to abort the VST modality. In the case of a system failure, pressing the pedal electromechanically forces the optical shutters open and turns off the OST displays, restoring the user’s natural view.

The safety pedal is connected to a custom electronic board that drives the optical shutter and interfaces the computing unit with the other devices. See Figure 1.

Figure 1.

The surgical navigator platform set up in the operating theatre: the computing unit (1) is connected to the interfacing board (2), which connects the surgeon’s HMD (3), the medical tablet (4), the safety pedal (5), and the stand-up display for the surgical team (6).

All the cables from the HMD were gathered into a single bundle that runs from the visor to the computing unit console over a bridge formed by two pole stands. This arrangement preserves the surgical team’s freedom of movement while keeping the computing unit console far enough from the surgical field for regulatory compliance.
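The switching behaviour described for the pedal can be modelled as a small state machine. The following is a hypothetical, software-only sketch: the class and field names are invented for illustration, and in the actual system the fail-safe path acts electromechanically through the interfacing board rather than through application code. It only captures the states described in the text: VST (shutters opaque), OST (shutters transparent), and the failure fallback (shutters forced open, displays off).

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    VST = "video see-through"
    OST = "optical see-through"


@dataclass(frozen=True)
class HeadsetState:
    modality: Modality
    shutters_transparent: bool  # True: light passes (OST); False: obscured (VST)
    displays_on: bool


class ModalityController:
    """Hypothetical model of pedal-driven OST/VST switching with a fail-safe."""

    def __init__(self) -> None:
        self.state = HeadsetState(Modality.OST, shutters_transparent=True,
                                  displays_on=True)
        self.system_failure = False

    def set_modality(self, modality: Modality) -> HeadsetState:
        if self.system_failure:
            return self.state  # once the fail-safe is engaged, keep the natural view
        self.state = HeadsetState(modality,
                                  shutters_transparent=(modality is Modality.OST),
                                  displays_on=True)
        return self.state

    def on_pedal_pressed(self) -> HeadsetState:
        if self.system_failure:
            # Fail-safe: shutters forced open and displays blanked.
            self.state = HeadsetState(Modality.OST, shutters_transparent=True,
                                      displays_on=False)
            return self.state
        # Normal use: toggle between the two viewing modalities.
        target = Modality.OST if self.state.modality is Modality.VST else Modality.VST
        return self.set_modality(target)


controller = ModalityController()
print(controller.on_pedal_pressed())   # OST -> VST
controller.system_failure = True
print(controller.on_pedal_pressed())   # forced natural view
```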

- AR Software Framework

The software framework is developed in C++ under a Linux operating system with an object-oriented design and a CUDA-based architecture to leverage parallel GPU computing. The software relies on the OpenCV API (Open Source Computer Vision Library, version 3.4.1, www.opencv.org) (accessed on 12 January 2022) for computer vision algorithms and low-level image operations [35]. To render the virtual scene, we used the open-source VTK library for 3D computer graphics, modelling, and volume rendering [36].
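When a virtual rendering camera must reproduce a calibrated real camera, the pinhole intrinsics (fx, fy, cx, cy) have to be turned into a graphics projection matrix. The sketch below shows one common conversion, assuming OpenCV-style intrinsics (pixel origin at the top-left, v pointing down) and an OpenGL-style clip space; sign conventions differ between toolkits, and how the VOSTARS framework actually hands such a matrix to VTK is not described in the text. All numeric values are hypothetical. The example checks that projecting through the matrix reproduces the plain pinhole formula.

```python
from typing import List, Tuple

Matrix4 = List[List[float]]


def projection_from_intrinsics(fx: float, fy: float, cx: float, cy: float,
                               width: int, height: int,
                               near: float, far: float) -> Matrix4:
    """OpenGL-style projection (camera looks down -Z, NDC in [-1, 1]) that
    reproduces a pinhole camera with top-left pixel origin and v pointing down."""
    return [
        [2.0 * fx / width, 0.0, 1.0 - 2.0 * cx / width, 0.0],
        [0.0, 2.0 * fy / height, 2.0 * cy / height - 1.0, 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2.0 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def project_to_pixel(proj: Matrix4, point_cv: Tuple[float, float, float],
                     width: int, height: int) -> Tuple[float, float]:
    """Project a point given in the OpenCV camera frame (x right, y down,
    z forward) through `proj`, then map NDC back to pixel coordinates."""
    x, y, z = point_cv
    p_gl = (x, -y, -z, 1.0)  # OpenCV -> OpenGL camera axes
    clip = [sum(proj[r][c] * p_gl[c] for c in range(4)) for r in range(4)]
    ndc_x, ndc_y = clip[0] / clip[3], clip[1] / clip[3]
    return (ndc_x + 1.0) * width / 2.0, (1.0 - ndc_y) * height / 2.0


# Hypothetical intrinsics for a 1280 x 1024 view; distances in mm.
P = projection_from_intrinsics(3000.0, 3000.0, 640.0, 512.0, 1280, 1024, 10.0, 2000.0)
u, v = project_to_pixel(P, (10.0, -20.0, 430.0), 1280, 1024)
print(u, v)  # should equal fx*x/z + cx and fy*y/z + cy
```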

The key functions of the software framework are:

1. The AR software framework is conceived for running AR applications for surgical guidance by supporting in situ visualization of medical imaging data, and it is specifically suited for AR stereoscopic headsets, both commercial and custom-made. As comprehensively described in [37], the software can provide both optical and video see-through-based augmentations of the real scene. Under the OST modality, the user sees the real-world scene through the semi-transparent optical combiners without taking the visor off, and only the computer-generated elements are rendered onto the two microdisplays. Under the VST modality, the view of the real world is mediated by the external RGB cameras anchored to the headset: the camera frames of the real scene are first digitally blended with the computer-generated content, and the resulting video streams are fed to the microdisplays of the HMD.

   To ensure accurate real-to-virtual alignment in both see-through modalities, the rendering pipeline underpinning the software framework allows the intrinsic and extrinsic projection parameters of the virtual rendering cameras to be set both offline and at runtime (the key functionalities of the rendering pipeline are described more thoroughly in [38]):

   - Under the VST modality, and for each display side, the virtual rendering camera encapsulates the intrinsic and extrinsic parameters of the associated real camera. The intrinsic parameters are determined offline through a standard calibration procedure [39] and loaded when the application launches, whereas the extrinsic parameters are obtained through an inside-out marker-based tracking mechanism (as described in point 2);
   - Under the OST modality, and for each display side, the intrinsic and extrinsic parameters of the off-axis virtual rendering camera are determined offline for a generic user’s eye position through a reliable procedure in which a camera, placed within the eye-box of the OST display, replaces the user’s eye [40]. The intrinsic and extrinsic parameters of the two eye/display pinhole camera models obtained from the calibration can then be refined at runtime by the user to account for the actual position of the user’s eyes [38].

2. A dedicated optical self-tracking mechanism: the head-anchored RGB cameras used for the VST augmentation also provide stereo localization of three colored spherical markers conveniently placed on patient-specific surgical templates or directly on the patient’s body, thus without requiring obtrusive external trackers or additional tracking cameras [37].

3. An automatic image-to-patient registration strategy based on optical markers anchored to patient-specific templates. In particular, for maxillofacial surgery, we designed an occlusal splint that embeds the three optical markers (“maxilla tracker”). The positions of the optical markers are defined in the reference system of the computed tomography (CT) dataset. By determining their positions with respect to the tracking cameras, the pose of the 3D virtual plan can be computed directly, in closed form, by solving a standard absolute orientation problem with three points (i.e., estimating the rigid transformation that aligns the two sets of corresponding 3D point triplets) [37].
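The closed-form three-point absolute orientation step can be sketched as follows. This is not necessarily the solver used in VOSTARS: it uses the simple “triad” construction, which builds an orthonormal frame from each marker triplet and maps one frame onto the other. It is exact for noise-free, correctly paired markers; with measurement noise, least-squares solutions such as Horn’s quaternion method or the SVD-based Kabsch algorithm are usually preferred. Marker coordinates and the simulated pose are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Mat = List[List[float]]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    if n < 1e-12:
        raise ValueError("markers are coincident or collinear")
    return (a[0] / n, a[1] / n, a[2] / n)


def triad(p1: Vec, p2: Vec, p3: Vec) -> Mat:
    """Orthonormal frame attached to a marker triplet (basis vectors as columns)."""
    e1 = normalize(sub(p2, p1))
    e3 = normalize(cross(e1, sub(p3, p1)))
    e2 = cross(e3, e1)
    return [[e1[i], e2[i], e3[i]] for i in range(3)]


def mat_mul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a: Mat) -> Mat:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def transform(rot: Mat, t: Vec, p: Vec) -> Vec:
    x, y, z = (sum(rot[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def centroid(points: Sequence[Vec]) -> Vec:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def absolute_orientation_3pts(src: Sequence[Vec], dst: Sequence[Vec]) -> Tuple[Mat, Vec]:
    """Rigid transform (rot, t) such that dst_i = rot @ src_i + t."""
    rot = mat_mul(triad(*dst), transpose(triad(*src)))
    t = sub(centroid(dst), transform(rot, (0.0, 0.0, 0.0), centroid(src)))
    return rot, t


# Hypothetical marker centres in the CT frame (mm) ...
ct_markers = [(0.0, 0.0, 0.0), (40.0, 0.0, 0.0), (20.0, 30.0, 5.0)]
# ... and the same markers as seen by the tracking cameras (simulated pose).
c, s = math.cos(math.radians(30.0)), math.sin(math.radians(30.0))
true_rot = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
true_t = (5.0, -10.0, 400.0)
cam_markers = [transform(true_rot, true_t, p) for p in ct_markers]

rot, t = absolute_orientation_3pts(ct_markers, cam_markers)
print([tuple(round(x, 6) for x in row) for row in rot], tuple(round(x, 6) for x in t))
```

The recovered transformation is what places the CT-based virtual plan onto the tracked patient; marker correspondence (which marker is which) must be established beforehand.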

- Control Tablet and GUI

A graphical user interface (GUI), running on a Microsoft Windows-based medical panel PC (AIM-55 8” Medical Grade Tablet, Advantech), is used to set the OST or VST modality and to select the virtual elements to visualize.

The GUI communicates with the AR software framework via TCP/IP. A unique configuration file (.ini file) is loaded at the beginning of the intervention by both the AR software framework and the tablet GUI application. The configuration file defines patient-specific information (anatomical 3D models, surgical plan, surgical workflow) and application-specific information (tracking strategy, camera calibration parameters). Once the file is loaded, both the framework and the GUI are configured for the specific intervention.
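The shared configuration file can be illustrated with Python’s standard `configparser`. This is a minimal sketch assuming hypothetical section names, key names, and VRML file names (`.wrl`); the actual VOSTARS file layout is not given in the text, and the framework itself is written in C++. The point is only that a single file can describe the models, the per-task modality, the tracking strategy, and the calibration files for both applications.

```python
import configparser
from typing import Dict, List

SAMPLE_INI = """
[patient]
models = skull.wrl, maxilla.wrl
osteotomy_lines = osteotomy_left.wrl, osteotomy_right.wrl

[workflow]
osteotomy = VST
repositioning = OST

[tracking]
strategy = three_marker_splint

[calibration]
left_camera = calib/left_camera.yml
right_camera = calib/right_camera.yml
"""


def split_list(value: str) -> List[str]:
    """Split a comma-separated INI value into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def load_intervention_config(text: str) -> Dict[str, object]:
    parser = configparser.ConfigParser()
    parser.read_string(text)
    workflow = {task: mode.upper() for task, mode in parser.items("workflow")}
    if not set(workflow.values()) <= {"VST", "OST"}:
        raise ValueError("each workflow task must be guided in VST or OST")
    return {
        "models": split_list(parser.get("patient", "models")),
        "osteotomy_lines": split_list(parser.get("patient", "osteotomy_lines")),
        "workflow": workflow,
        "tracking_strategy": parser.get("tracking", "strategy"),
        "camera_calibration": dict(parser.items("calibration")),
    }


config = load_intervention_config(SAMPLE_INI)
print(config["workflow"])
```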

The GUI, which is fully reconfigurable, currently allows an assistant to: (1) set the transparency of the virtual objects, (2) switch between VST and OST modality, and (3) launch the registration procedure.

Moreover, an acoustic alarm is triggered if the connection between the control tablet and the computing unit is lost, warning the surgeon that the application may be in a failure state.
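The text does not say how a lost connection is detected. One common approach, assumed here, is a heartbeat with a timeout: the tablet periodically sends a message, and if none arrives within a set interval the alarm fires once. The class name, the timeout value, and the injectable clock are all hypothetical; a real implementation would call `heartbeat()` from the TCP receive loop and `check()` from a periodic timer.

```python
import time
from typing import Callable


class ConnectionWatchdog:
    """Fire an alarm once when no heartbeat has arrived for `timeout_s` seconds."""

    def __init__(self, timeout_s: float, on_alarm: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.on_alarm = on_alarm
        self.clock = clock
        self.last_beat = clock()
        self.alarmed = False

    def heartbeat(self) -> None:
        """Call whenever a message from the control tablet is received."""
        self.last_beat = self.clock()
        self.alarmed = False

    def check(self) -> bool:
        """Return True if the link is alive; trigger the alarm on first loss."""
        alive = (self.clock() - self.last_beat) <= self.timeout_s
        if not alive and not self.alarmed:
            self.alarmed = True
            self.on_alarm()
        return alive


# Usage with a simulated clock so the example is deterministic.
now = [0.0]
alarms = []
watchdog = ConnectionWatchdog(1.0, lambda: alarms.append("beep"), clock=lambda: now[0])
now[0] = 0.5
print(watchdog.check(), alarms)   # link alive, no alarm yet
now[0] = 2.0
print(watchdog.check(), alarms)   # link lost, alarm raised once
```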

- Mechanical Key aspects

From an ergonomic point of view, the HMD design took into account the fundamental need to reduce the surgeon’s workload [20]. This translated not only into a generally well-balanced weight, but also into the possibility for the surgeon to wear and use the visor in his/her natural working posture, with the gaze directed down towards the surgical field without bending the neck. The frontal part of the visor is therefore tilted by 30° to bring the displays in front of the surgeon’s eyes while he/she naturally gazes at his/her hands on the surgical field. Several designs were produced during the project. VOSTARS1.0 was the first consolidated design: it included the option to tilt the frontal part (thanks to the link to the original Trivisio head support) and arranged all the components in an ideal form factor. Its frontal case was designed in the CAD software CREO6, 3D printed with a Stratasys Dimension FDM printer, and finished for sealing and cleaning purposes with the 3DFinisher machine produced by 3DNextech (3DNextech s.r.l., www.3dnextech.com, Livorno, Italy) (accessed on 12 January 2022); the final weight was 580 g.

The VOSTARS1.0 design was the one tested against the regulatory constraints to obtain approval for the clinical trial (Figure 2).

Figure 2.

Left: The VOSTARS prototype in the design version 1.0 that underwent regulatory testing and was approved for the clinical trial; Right: the surgeon wearing the visor during an intervention.

Subsequent designs built on VOSTARS1.0, moving towards improved aesthetics, cast materials, and refined mechanical movements.

- Visualization Modalities and surgical workflow

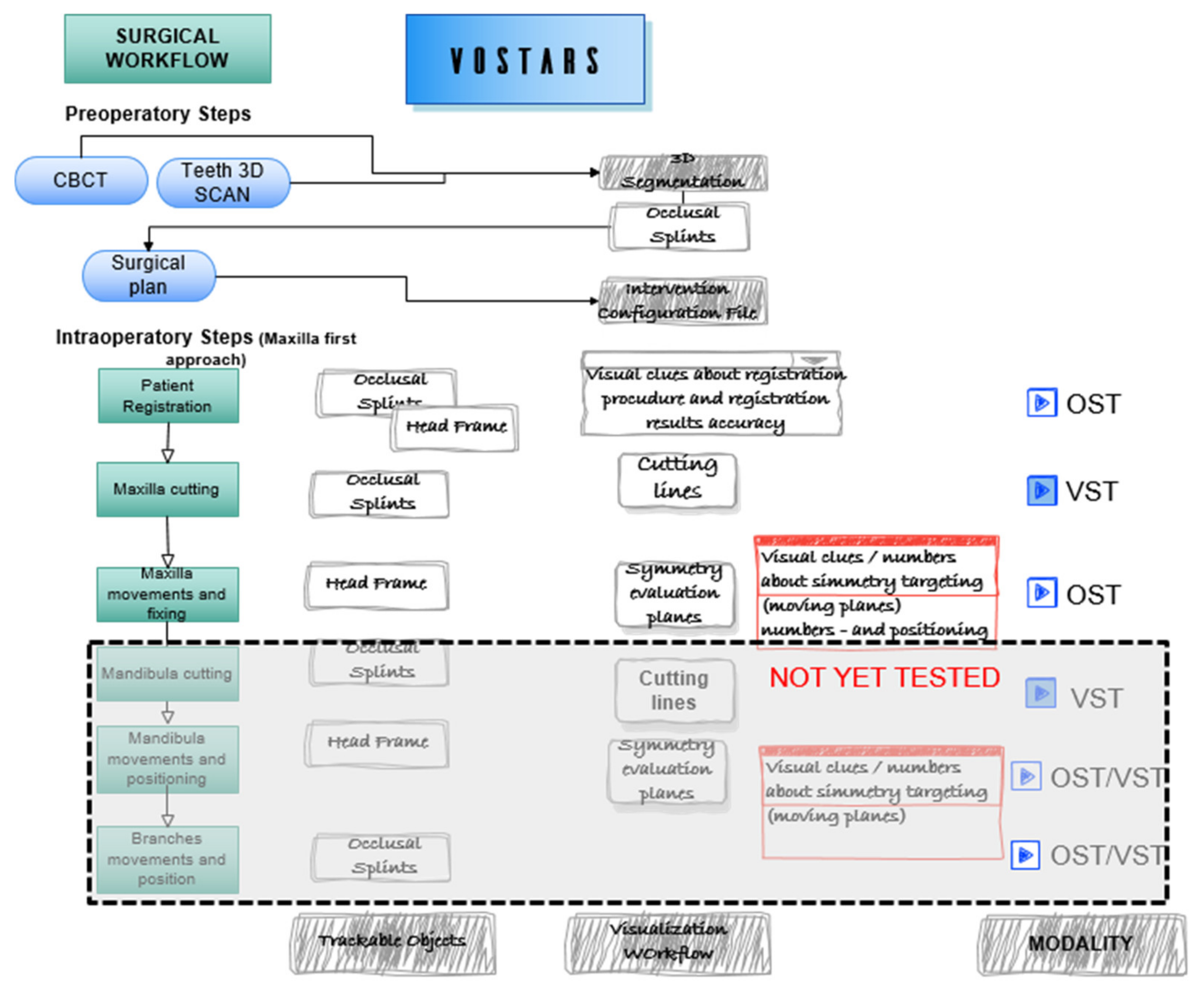

The VOSTARS surgical navigation platform is reconfigurable depending on the surgical intervention to be performed. For each intervention, a study of the surgical workflow determines which tasks need to be guided and the best modality for guiding them. The 3D models to be loaded in each phase of the intervention are stored in VRML (Virtual Reality Modeling Language) file format, and their names are listed in the unique configuration file shared between the computing unit and the control tablet.

In general, the tasks guided in VST are those that demand higher guidance accuracy.

On the other hand, the OST modality can be used simply to provide an unhindered view during non-guided tasks: the operator can easily switch between the navigated task and free operation without removing the visor. OST can also be used to guide tasks in which the virtual content is not directly superimposed on the real one.

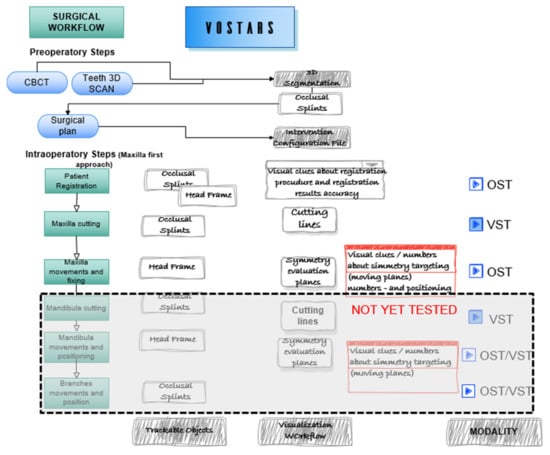

The intervention selected for the clinical trial [41] was analyzed and segmented to define the VST-guided tasks (e.g., tracing the Le Fort 1 osteotomy) and the OST tasks (e.g., repositioning the maxilla according to the plan, tested only on phantoms). Figure 3 depicts the surgical workflow of a Le Fort 1 surgery with the “maxilla first” approach [42,43], showing the surgical tasks in which VOSTARS is involved and the selected modality.

Figure 3.

Surgical workflow of the selected maxillofacial surgery. The intervention was segmented into the main tasks to be guided with VOSTARS.

During the intervention, the surgeon can select the modality (OST/VST) to view the virtual content in augmented reality, according to the specific surgical task to be performed.

- (a) VST-oriented tasks

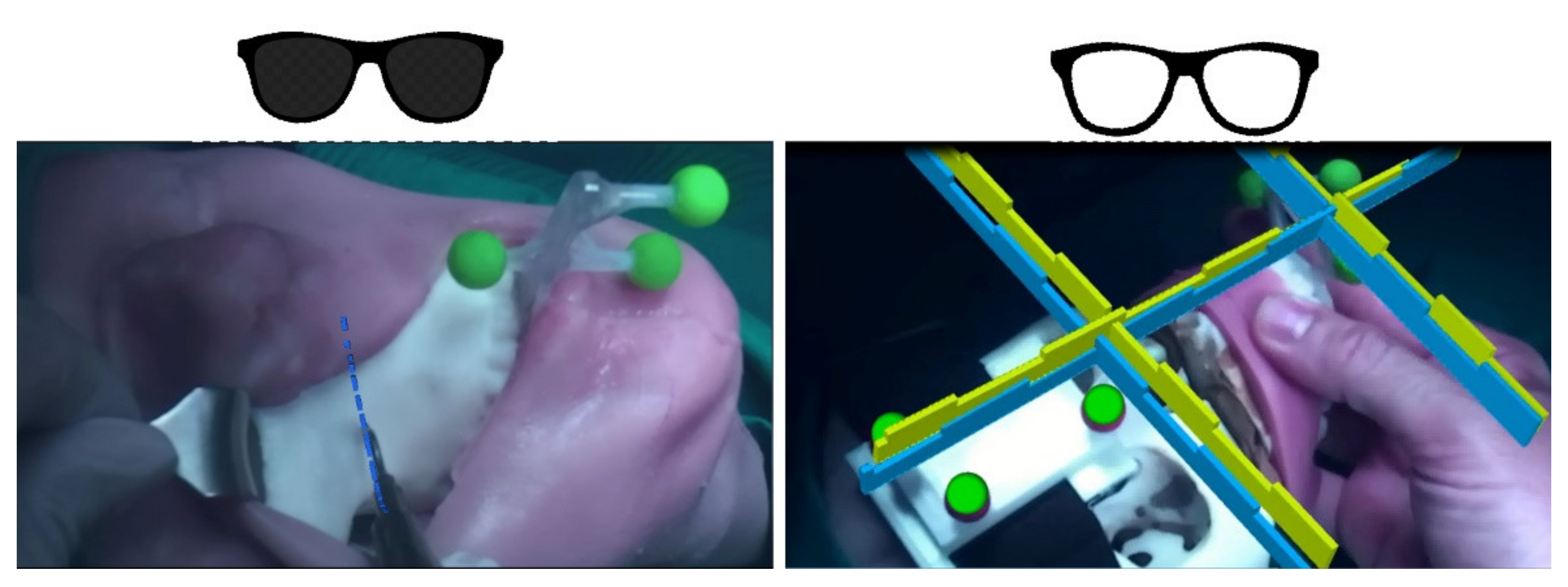

Under the VST modality, the system can offer accurate registration between digital and real data; hence, this modality is strongly recommended for high-precision surgical tasks, such as osteotomies, which require sub-millimetric accuracy.

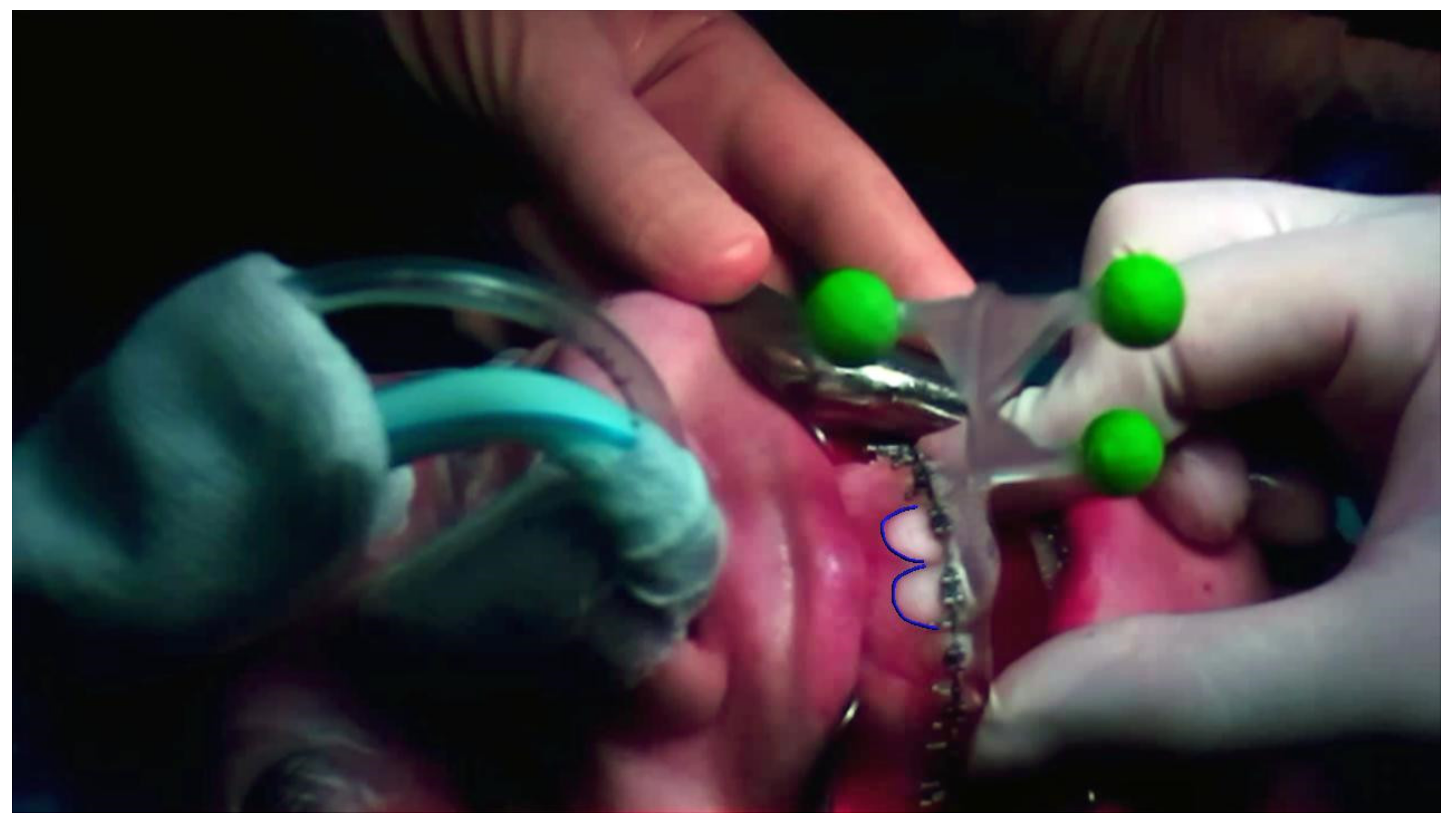

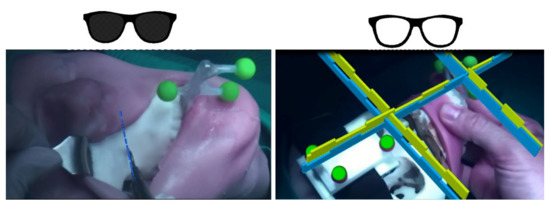

For this reason, the VST modality was selected to carry out the Le Fort 1 osteotomy on the patients enrolled in the clinical trial, as shown in Figure 4 (left). The osteotomy line is planned preoperatively on the segmented patient anatomy, and the virtual dotted line is exported as a 3D object listed in the configuration file. For each surgery and for each task selected to be guided in VST, the optimal 3D virtual content is selected and tailored to the surgeon.

Figure 4.

Left: under the VST modality, the system shows the surgeon the osteotomy line (blue) to be followed with the surgical scalpel. Right: in OST, the surgeon repositions a bone fragment following the plan; correspondence to the plan is shown visually through complementary geometries, and the unhindered view allows the surgeon to exploit all spatial cues to verify anatomical symmetries.

- (b) OST-oriented tasks

Under the OST modality, the surgeon can take advantage of a natural view during non-guided tasks: the operator can easily switch between navigated tasks and free operation, without the need to remove the visor.

In general, the OST modality is preferred for tasks in which sub-millimetric accuracy is not strictly required, for example, viewing patient data or vital signs.

The OST modality can also be used to guide the “repositioning” task, i.e., repositioning a bone fragment, or an implant, at a preoperatively planned position. Repositioning is also a general task common to many different kinds of surgery. As demonstrated in previous work [44], visualizing the virtual anatomy or the virtual implant already located at the planned position is not useful for guiding the realignment, because the geometric complexity and the occlusions occurring in the surgical field prevent efficient guidance. Instead, simple geometrical hints (e.g., asterisks on the brackets of an orthodontic appliance) have been confirmed to be more useful. The design of these geometrical hints is neither easy nor universal, as their usefulness as a guide may also depend on the individual surgeon.

In our setup, once the surgeon has planned the final position of the bone segment (in this case the maxilla), the green shape is linked to the coordinate system of the movable part (the maxilla), while the blue shape is linked to the coordinate system of the patient to mark the target final position, as shown in Figure 4 (right). During this task, the registration must be precise, but sub-millimetric accuracy is not strictly required; moreover, the surgeon’s unhindered view of the surgical scene is essential to perceive the whole scenario and to exploit all the natural cues, for example, to evaluate symmetries.
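Geometrically, the green and blue shapes coincide when the maxilla pose, expressed in the patient frame, equals the planned pose. The overlay conveys the residual visually; the hypothetical sketch below quantifies the same mismatch numerically as a rotation angle and a translation distance, which the text does not describe the system doing. The translation term depends on where the origin of the maxilla frame is placed.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]


def rot_z(deg: float) -> Mat3:
    """Rotation matrix about the z axis (helper for the example)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def repositioning_error(r_cur: Mat3, t_cur: Sequence[float],
                        r_plan: Mat3, t_plan: Sequence[float]) -> Tuple[float, float]:
    """Residual rotation (deg) and translation (mm) between the current and
    planned pose of the maxilla frame, both expressed in the patient frame."""
    # r_err = r_plan^T @ r_cur is the rotation still separating the two poses.
    r_err = [[sum(r_plan[k][i] * r_cur[k][j] for k in range(3)) for j in range(3)]
             for i in range(3)]
    cos_angle = (r_err[0][0] + r_err[1][1] + r_err[2][2] - 1.0) / 2.0
    angle_deg = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return angle_deg, math.dist(t_cur, t_plan)


angle, dist = repositioning_error(rot_z(3.0), (1.0, 2.0, 2.0), rot_z(0.0), (0.0, 0.0, 0.0))
print(f"{angle:.1f} deg, {dist:.1f} mm")
```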

- Regulatory compliance

In Europe, clinical investigations with pre-market medical devices are allowed and are a prerequisite for obtaining CE certification.

To perform such pre-market clinical investigations in compliance with the Medical Device Directive 93/42/EEC and with the new EU Medical Devices Regulation (MDR 2017/745), several requirements must be fulfilled:

- Ethical Committee approval:
  - “Notification” (statement/documentation on the performance and safety of the experimental pre-market medical device) to the competent government authority, i.e., the Italian Ministry of Health;
  - Fulfillment of a list of conditions for clinical investigations;
  - Designation of an investigator who exercises a profession recognized in the member state and has the necessary scientific knowledge and experience in patient care;
  - Suitability for clinical investigation of the facilities in which the clinical investigation is to be conducted, and their similarity to the facilities where the device is intended to be used.

A research prototype can be far from full regulatory compliance; however, having conceived the VOSTARS prototype for the surgical domain from the very first design steps allowed us to fulfill all the requirements.

Preliminary approval of the clinical protocol was obtained from Ce-AVEC, the Ethical Committee of Area Vasta Emilia Centro, as the clinical trial was conducted at the Sant’Orsola Malpighi Hospital in Bologna. Then, to collect preclinical data on the device and to complete the documentation required for the Notification to the Italian Ministry of Health, a systematic preliminary in vitro experimental phase was carried out on phantoms [45].

Additionally, a systematic risk analysis was conducted in compliance with the EN ISO 14971 standard, in collaboration with a specialized company with expertise in the design of, and consultancy on, medical devices (MediCon Ingegneria S.r.l., Budrio -BO, Italy).

As a result of the risk analysis, the main risk-mitigation measure was to test the experimental VOSTARS system for compliance with the EN 60601-1 and EN 60601-1-2 standards (electrical safety and electromagnetic compatibility of medical electrical equipment, respectively).

Although the three spherical markers used to localize the patient never came into contact with the surgical field, they were colored with 4-free nail polish (Layla cosmetics, www.laylacosmetics.it) (accessed on 12 January 2022), which guarantees the absence of potentially toxic substances and bacterial burden [46]. We selected a fluorescent green, which provided more robust marker identification.

The safety pedal and the GUI alarm described above then completed the minimum safety requirements.

Software compliance was not analyzed in this preliminary risk analysis; nevertheless, several precautions were defined to prevent risks arising from possible software failure: (1) the GUI alarm notifies the team whenever the application loses its connection with the control GUI; (2) in VST modality, dedicated control virtual elements were designed and continuously rendered to perform a real-time safety check of the virtual-to-real registration (Figure 5); (3) a certified operating theatre ceiling monitor was used to mirror the video signal of the HMD so that the whole surgical team can see what the surgeon is seeing, and a dedicated technician continuously monitors the alignment of the control virtual elements with the real patient.

Figure 5.

The control virtual element (in this photo, the teeth profile) is rendered on the real patient during the high-accuracy VST osteotomy guidance task. Continuously checking its alignment allows the surgeon to verify the registration in real time.

2.3. Phantom Trials

In order to collect preclinical data on the VOSTARS device and to complete the required documentation on device risk assessment for the Italian MoH, a systematic in vitro experimental phase was carried out on phantoms [45].

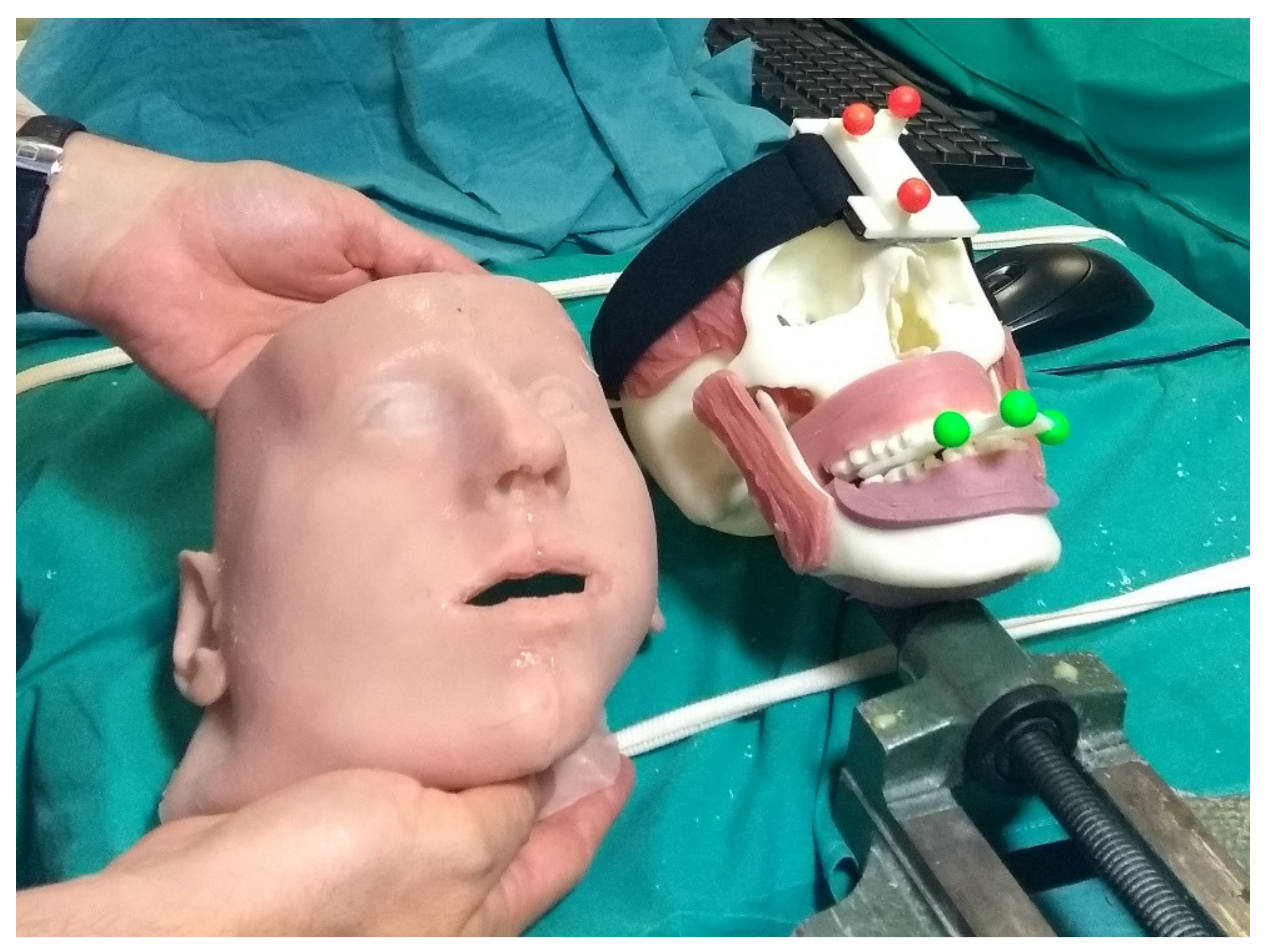

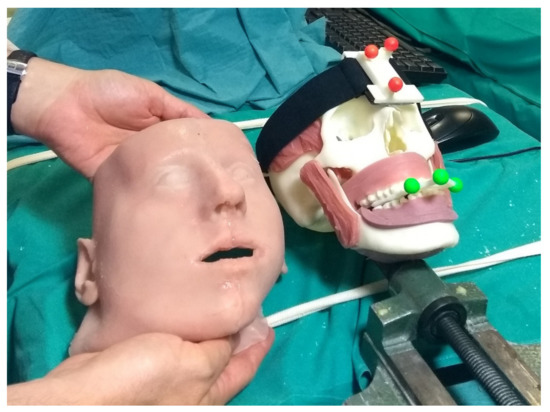

The phantoms, produced through 3D printing and casting technologies, were obtained from diagnostic imaging of real patients and replicated the anatomical structures of interest for the craniomaxillofacial district. From the virtual model, the bony components of the phantom were produced in acrylonitrile butadiene styrene (ABS) via 3D printing (3D printer Dimension Elite Stratasys Ltd., www.stratasys.com, Eden Prairie, MN, USA) (accessed on 12 January 2022). Using the Blender software [47], the primary muscles of mastication (i.e., temporalis, medial pterygoid, lateral pterygoid, and masseter) were added to the virtual skull model, together with the facial soft tissues (soft palate, tongue, gums) needed for a realistic simulation of the surgical procedure. Starting from the virtual model, ad hoc molds were designed and 3D printed, and these molds were then used for silicone casting. To achieve further realism and to help keep the jaws in position, facial skin was also designed and produced using the silicone casting technique. The resulting maxillofacial phantom is depicted in Figure 6.

Figure 6.

The surgeon is holding the simulated skin, while details of the reproduced muscles, teeth, and gums can be observed on the skull.

The experimental task performed followed the clinical task selected for the trial (Le Fort 1 osteotomy).

Ad hoc maxilla trackers anchored to phantom-specific occlusal splints were designed and used for virtual-to-real registration, and CAD/CAM templates were produced to evaluate the level of accuracy achievable under VOSTARS guidance [45].

2.4. Clinical Trials

The clinical investigation was designed as a pilot study (the first human study using VOSTARS) on 20 patients requiring maxillofacial (orthognathic) surgery. For all enrolled patients, a specific surgical task (the Le Fort 1 osteotomy) was planned to be executed under VOSTARS guidance. Due to the COVID-19 emergency, patient enrollment slowed sharply compared with initial expectations; to date, the study has included 7 of the 20 planned patients.

The primary endpoint of the trial was the accuracy with which the preoperative plan was reproduced surgically under VOSTARS-assisted navigation. The secondary endpoints were the operative times, the postoperative course, the patient-reported outcomes, the economic impact of using the VOSTARS device (e.g., direct and indirect costs), and the usefulness and usability perceived by the medical staff.

As in the phantom tests, for each clinical case we designed a tracker template with the three spherical markers, to be anchored to the patient-specific occlusal splint.

2.5. AR Virtual Content

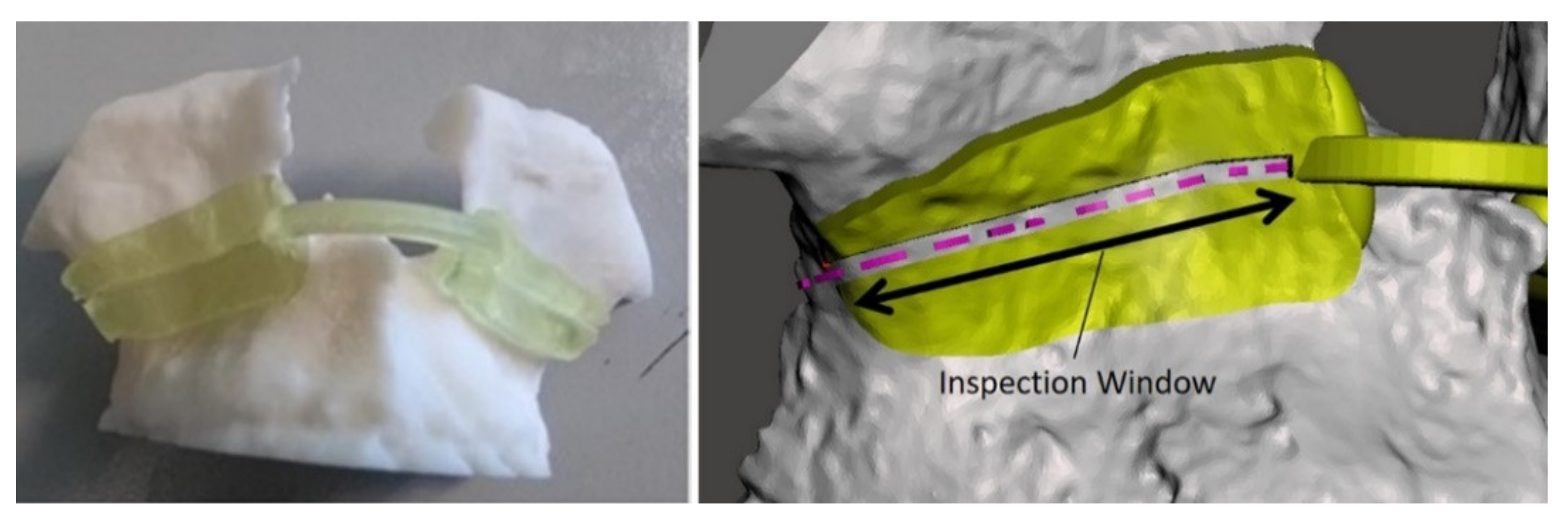

Starting from the patients’ computed tomography datasets, the cranial, maxillary, and mandibular bones were extracted with a semiautomatic segmentation pipeline [48], and a complete 3D virtual model of each patient’s skull was obtained. Creo Parametric software (6.0, PTC Inc., Boston, MA, USA) was used to design the virtual maxillary osteotomy lines (left and right sides) following the surgical plan previously prepared by the surgeon. The two osteotomy lines were represented as dashed curves (0.5 mm thickness) and saved as VRML files to be imported by the AR software framework and displayed by the VOSTARS headset.

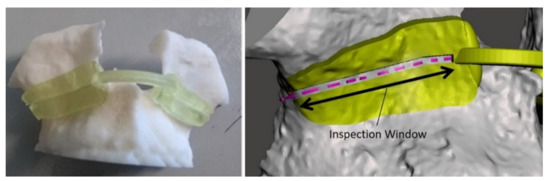

To evaluate the accuracy achievable in performing the VOSTARS-guided Le Fort 1 osteotomy, we used a method similar to the one previously adopted for the in vitro phantom tests [45]. A template matching the maxilla surface of each patient was designed and provided with an inspection window (IW) shaped as the ideal line to be followed in the planned osteotomy. The IW was 1.5 mm wide in order to evaluate an accuracy level of ±0.5 mm, i.e., an error range of ±0.5 mm around the cut performed, considering a 0.5 mm thickness for the cut made with the piezoelectric saw (Figure 7).

Figure 7.

Example of virtual and 3D-printed template for accuracy evaluation. The planned osteotomy line is represented by the dashed pink line.

Both the occlusal splints and the verification templates were printed in MED610, a biocompatible material certified for surgical use, with the Objet30 printer from Stratasys (Stratasys Ltd., Eden Prairie, MN, USA; www.stratasys.com, accessed on 12 January 2022).

3. Results

The potential of the surgical platform was first assessed on phantoms and then tested on real patients in a clinical trial. The phantom tests showed that the system, used in combination with the designed patient-specific maxilla tracker, has excellent tracking robustness under operating room lighting. Moreover, in all trials, users were able to trace osteotomy trajectories with an accuracy of ±1.0 mm, and on average 88% of the trajectory length was within ±0.5 mm [45].
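The phantom accuracy above is expressed as the share of trajectory length within ±0.5 mm, with the whole trajectory within ±1.0 mm; in [45] it was assessed with a template-based method rather than numerically. Purely as an illustration of how such a length-based percentage can be computed, the sketch below assumes the deviation of the traced line from the planned one has been sampled at known arc-length positions. The function names and the sampling scheme are hypothetical, and a segment counts as within tolerance only when both of its endpoints are.

```python
def length_within_tolerance(positions_mm, deviations_mm, tol_mm):
    """Fraction of the trajectory length whose deviation stays within +/- tol_mm.

    positions_mm: non-decreasing arc-length positions of the samples along the plan.
    deviations_mm: signed deviation of the traced line at each sample.
    """
    if len(positions_mm) != len(deviations_mm) or len(positions_mm) < 2:
        raise ValueError("need at least two paired samples")
    total = within = 0.0
    for i in range(len(positions_mm) - 1):
        seg = positions_mm[i + 1] - positions_mm[i]
        if seg < 0:
            raise ValueError("positions must be non-decreasing")
        total += seg
        # count the segment only if both endpoints respect the tolerance
        if abs(deviations_mm[i]) <= tol_mm and abs(deviations_mm[i + 1]) <= tol_mm:
            within += seg
    return within / total if total > 0 else 0.0


def max_abs_deviation(deviations_mm):
    """Largest absolute deviation, e.g. to check a +/-1.0 mm bound on the whole line."""
    return max(abs(d) for d in deviations_mm)


# Made-up samples, for illustration only (not study data).
print(length_within_tolerance([0, 1, 2, 3, 4], [0.1, -0.2, 0.6, 0.3, -0.1], 0.5))  # → 0.5
```

Weighting by segment length, rather than counting samples, keeps the result meaningful when samples are not evenly spaced along the osteotomy.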

After the phantom tests, three surgeons assessed the overall comfort of the device with a brief four-point Likert questionnaire [49] covering their impression of the overall design and of the AR experience with the display; the statements and results are reported in Table 1.

Table 1.

Four-point Likert questionnaire administered to the surgeons who tested the HMD (1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree).
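Because Likert responses are ordinal, per-statement summaries are usually given as medians or response counts rather than means, a point discussed in [49]. The sketch below shows one such summary for the four-point scale used in Table 1; the function name and the "share of agreement" measure (scores of 3 or 4) are assumptions for illustration, not the analysis reported in this paper.

```python
from collections import Counter
from statistics import median

# Four-point scale as defined in the Table 1 caption.
SCALE = {1: "strongly disagree", 2: "disagree", 3: "agree", 4: "strongly agree"}


def summarize_statement(scores):
    """Ordinal summary of the answers to one Likert statement on the 1-4 scale."""
    if not scores:
        raise ValueError("no responses")
    if any(s not in SCALE for s in scores):
        raise ValueError("scores must be integers from 1 to 4")
    counts = Counter(scores)
    return {
        "median": median(scores),
        "counts": {label: counts.get(value, 0) for value, label in SCALE.items()},
        # proportion of respondents who agree or strongly agree
        "share_agree": sum(1 for s in scores if s >= 3) / len(scores),
    }
```

For example, with hypothetical scores `summarize_statement([3, 4, 4])` returns a median of 4 and an agreement share of 1.0.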

All the surgeons involved in this first qualitative evaluation confirmed that the visor offers good transparency when turned off.

The VST modality also provides good visualization of the real world, and, regarding focal distance, the solution works properly at the set working distance.

Nevertheless, Surgeon 2 (the only one who later used the system on patients) pointed out that the intrinsic working principle of the commercial ARS30® display introduced some annoying reflections in the surgical room. Although users can get used to these reflections and work properly despite them, avoiding them would make the visor more comfortable to use and reduce visual fatigue during surgery. A solution is offered by the innovative optical modules, proposed by a project partner, that were mounted in the final version of the VOSTARS prototype, which, unfortunately, due to the COVID-19 pandemic, has not yet undergone a clinical trial.

Moreover, during the tests, the surgeons appreciated the possibility of rapidly switching from VST to natural view without removing the HMD or moving its frontal part, simply by using the safety pedal.
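The pedal-based switching can be pictured as a two-state machine. In the sketch below, which is an assumption and not the VOSTARS control software, each pedal press toggles between the VST mode and the natural view; the class, the mode names, and the `apply_mode` callback are hypothetical placeholders for whatever actually drives the displays and see-through optics.

```python
from enum import Enum


class ViewMode(Enum):
    VST = "video see-through"
    NATURAL = "natural view"


class PedalSwitch:
    """Hypothetical pedal toggle; apply_mode stands in for the headset hardware."""

    def __init__(self, apply_mode, initial=ViewMode.VST):
        self._apply_mode = apply_mode
        self.mode = initial
        self._apply_mode(self.mode)

    def on_pedal_pressed(self):
        # Each press flips the view; the HMD stays on the surgeon's head throughout.
        self.mode = ViewMode.NATURAL if self.mode is ViewMode.VST else ViewMode.VST
        self._apply_mode(self.mode)
        return self.mode
```

Keeping the hardware behind a single callback makes the switching logic testable without the headset attached.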

Because of the COVID-19 pandemic, only one surgeon was able to conduct the clinical trial on real patients. In total, seven patients were treated, and the trial results, which will be published separately, confirmed the phantom results in terms of safety, accuracy, and reliability of the VOSTARS surgical navigation platform: on average, 86% of the VOSTARS-guided osteotomies were carried out reproducing the preoperative plan with an accuracy level of ±0.5 mm. All interventions were completed successfully, and no unplanned admissions or specialist visits were documented during follow-up. Postoperative complications and adverse events (a hematoma in one patient, and slight postoperative edema with altered skin sensitivity of the upper and lower lip in two other patients) remained within the standard range for the procedure performed conventionally, and none of them can be directly attributed to the use of the VOSTARS system.

4. Conclusions

In this paper, we describe the architecture of a hybrid AR HMD surgical navigation platform designed to comply with medical device regulations and to meet the comfort and accuracy demands of high-accuracy surgical tasks, such as the osteotomies covered by the first clinical trial.

Results obtained on the phantoms demonstrate the accuracy and reliability of the system in maxillofacial surgery, and these results have been confirmed in the clinical study.

The hybrid approach of VST/OST guarantees the high accuracy demanded by surgical guidance tasks while maintaining all the advantages of the OST approach in terms of visual comfort in the remaining navigation tasks.

In general, the developed platform proved potentially easy to adopt in a real operating room environment, with a simple setup, and it fulfilled all the major requirements for a surgery-specific HMD related to ergonomics, safety, and reliability [41].

The resulting device will now undergo a definitive engineering process, an economic viability analysis, and subsequent trials, whose results will be fundamental for direct industrial exploitation.

Author Contributions

Conceptualization, F.C. and V.F.; methodology, M.C., R.D. and F.C.; software, F.C., M.C. and S.C.; validation, S.C., L.C., M.C., G.B. and V.F.; investigation, M.C., F.C., S.C., R.D. and V.F.; data curation, S.C.; writing—original draft preparation, M.C.; writing—review and editing, M.C., F.C. and S.C.; supervision, V.F.; project administration, M.C.; funding acquisition, V.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the CrossLab project (Departments of Excellence) of the University of Pisa, Laboratory of Augmented Reality; the project ARTS4.0: Augmented Reality for Tumor Surgery 4.0–Project co-financed under Tuscany POR FESR 2014–2020; and the HORIZON 2020 Project VOSTARS under Grant 731974.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, approved by the Ethics Committee of Area Vasta Emilia Centro (protocol code 3749/2019, 22/10/2019), and notified to the Italian Ministry of Health on 15 December 2019.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The phantom experimental data presented in this study are available on request from the corresponding author. These data are not publicly available due to their proprietary nature. Aggregated, anonymized clinical data are also available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rolland, J.P.; Cakmakci, O. The Past, Present, and Future of Head-Mounted Display Designs; SPIE: Bellingham, WA, USA, 2005; Volume 5638.

- Azuma, R.T. A Survey of Augmented Reality. Presence: Teleoperators Virtual Environ. 1997, 6, 355–385.

- Carbone, M.; Condino, S.; Cutolo, F.; Viglialoro, R.M.; Kaschke, O.; Thomale, U.W.; Ferrari, V. Proof of Concept: Wearable Augmented Reality Video See-Through Display for Neuro-Endoscopy. AVR 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 10851, pp. 95–104.

- Vavra, P.; Roman, J.; Zonca, P.; Ihnat, P.; Nemec, M.; Kumar, J.; Habib, N.; El-Gendi, A. Recent Development of Augmented Reality in Surgery: A Review. J. Healthc. Eng. 2017, 2017, 4574172.

- Cutolo, F. Augmented Reality in Image-Guided Surgery. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–11.

- Ferrari, V.; Klinker, G.; Cutolo, F. Augmented Reality in Healthcare. J. Healthc. Eng. 2019, 2019, 9321535.

- Condino, S.; Turini, G.; Viglialoro, R.; Gesi, M.; Ferrari, V. Wearable Augmented Reality Application for Shoulder Rehabilitation. Electronics 2019, 8, 1178.

- Meulstee, J.W.; Nijsink, J.; Schreurs, R.; Verhamme, L.M.; Xi, T.; Delye, H.H.K.; Borstlap, W.A.; Maal, T.J.J. Toward Holographic-Guided Surgery. Surg. Innov. 2019, 26, 86–94.

- Pratt, P.; Ives, M.; Lawton, G.; Simmons, J.; Radev, N.; Spyropoulou, L.; Amiras, D. Through the HoloLens™ looking glass: Augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur. Radiol. Exp. 2018, 2, 2.

- Tepper, O.M.; Rudy, H.L.; Lefkowitz, A.; Weimer, K.A.; Marks, S.M.; Stern, C.S.; Garfein, E.S. Mixed Reality with HoloLens: Where Virtual Reality Meets Augmented Reality in the Operating Room. Plast. Reconstr. Surg. 2017, 140, 1066–1070.

- Scherl, C.; Stratemeier, J.; Karle, C.; Rotter, N.; Hesser, J.; Huber, L.; Dias, A.; Hoffmann, O.; Riffel, P.; Schoenberg, S.O.; et al. Augmented reality with HoloLens in parotid surgery: How to assess and to improve accuracy. Eur. Arch. Oto-Rhino-Laryngol. 2021, 278, 2473–2483.

- Golse, N.; Petit, A.; Lewin, M.; Vibert, E.; Cotin, S. Augmented Reality during Open Liver Surgery Using a Markerless Non-rigid Registration System. J. Gastrointest. Surg. 2021, 25, 662–671.

- Desselle, M.R.; Brown, R.A.; James, A.R.; Midwinter, M.J.; Powell, S.K.; Woodruff, M.A. Augmented and Virtual Reality in Surgery. Comput. Sci. Eng. 2020, 22, 18–26.

- Neves, C.A.; Vaisbuch, Y.; Leuze, C.; McNab, J.A.; Daniel, B.; Blevins, N.H.; Hwang, P.H. Application of holographic augmented reality for external approaches to the frontal sinus. Int. Forum Allergy Rhinol. 2020, 10, 920–925.

- Ferrari, V.; Carbone, M.; Condino, S.; Cutolo, F. Are augmented reality headsets in surgery a dead end? Expert Rev. Med Devices 2019, 16, 999–1001.

- Condino, S.; Carbone, M.; Piazza, R.; Ferrari, M.; Ferrari, V. Perceptual Limits of Optical See-Through Visors for Augmented Reality Guidance of Manual Tasks. IEEE T Bio-Med. Eng. 2019, 67, 411–419.

- Gabbard, J.L.; Mehra, D.G.; Swan, J.E. Effects of AR Display Context Switching and Focal Distance Switching on Human Performance. IEEE Trans. Vis. Comput. Graph. 2019, 25, 2228–2241.

- Carbone, M.; Piazza, R.; Condino, S. Commercially Available Head-Mounted Displays Are Unsuitable for Augmented Reality Surgical Guidance: A Call for Focused Research for Surgical Applications. Surg. Innov. 2020, 27.

- Al Janabi, H.F.; Aydin, A.; Palaneer, S.; Macchione, N.; Al-Jabir, A.; Khan, M.S.; Dasgupta, P.; Ahmed, K. Effectiveness of the HoloLens mixed-reality headset in minimally invasive surgery: A simulation-based feasibility study. Surg. Endosc. 2020, 34, 1143–1149.

- Galati, R.; Simone, M.; Barile, G.; De Luca, R.; Cartanese, C.; Grassi, G. Experimental Setup Employed in the Operating Room Based on Virtual and Mixed Reality: Analysis of Pros and Cons in Open Abdomen Surgery. J. Healthc. Eng. 2020, 2020, 8851964.

- Dibble, C.F.; Molina, C.A. Device profile of the XVision-spine (XVS) augmented-reality surgical navigation system: Overview of its safety and efficacy. Expert Rev. Med Devices 2021, 18, 1–8.

- Rolland, J.P.; Fuchs, H. Optical Versus Video See-Through Head-Mounted Displays in Medical Visualization. Presence: Teleoper. Virtual Environ. 2000, 9, 287–309.

- Holliman, N.S.; Dodgson, N.A.; Favalora, G.E.; Pockett, L. Three-Dimensional Displays: A Review and Applications Analysis. IEEE T Broadcast 2011, 57, 362–371.

- Grubert, J.; Itoh, Y.; Moser, K.; Swan, J.E. A Survey of Calibration Methods for Optical See-Through Head-Mounted Displays. IEEE Trans. Vis. Comput. Graph. 2018, 24, 2649–2662.

- Itoh, Y.; Klinker, G. Performance and sensitivity analysis of INDICA: INteraction-Free DIsplay CAlibration for Optical See-Through Head-Mounted Displays. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 171–176.

- Cutolo, F.; Cattari, N.; Fontana, U.; Ferrari, V. Optical See-Through Head-Mounted Displays With Short Focal Distance: Conditions for Mitigating Parallax-Related Registration Error. Front. Robot. AI 2020, 7, 572001.

- Raimbaud, P.; Lopez, M.S.A.; Figueroa, P.; Hernandez, J.T. Influence of Depth Cues on Eye Tracking Depth Measurement in Augmented Reality Using the MagicLeap Device. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 210–214.

- Drascic, D.; Milgram, P. Perceptual issues in Augmented Reality. Stereosc. Disp. Virtual Real. Syst. III 1996, 2653, 123–134.

- Condino, S.; Fida, B.; Carbone, M.; Cercenelli, L.; Badiali, G.; Ferrari, V.; Cutolo, A.F. Wearable Augmented Reality Platform for Aiding Complex 3D Trajectory Tracing. Sensors 2020, 20, 1612.

- Cattari, N.; Piazza, R.; D’Amato, R.; Fida, B.; Carbone, M.; Condino, S.; Cutolo, F.; Ferrari, V. Towards a Wearable Augmented Reality Visor for High-Precision Manual Tasks. In Proceedings of the 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Bari, Italy, 1 June–1 July 2020; pp. 1–6.

- Cattari, N.; Cutolo, F.; D’Amato, R.; Fontana, U.; Ferrari, V. Toed-in vs Parallel Displays in Video See-Through Head-Mounted Displays for Close-Up View. IEEE Access 2019, 7, 159698–159711.

- Cutolo, F.; Fontana, U.; Ferrari, V. Perspective Preserving Solution for Quasi-Orthoscopic Video See-Through HMDs. Technologies 2018, 6, 9.

- State, A.; Ackerman, J.; Hirota, G.; Lee, J.; Fuchs, H. Dynamic virtual convergence for video see-through head-mounted displays: Maintaining maximum stereo overlap throughout a close-range work space. In Proceedings of the IEEE and ACM International Symposium on Augmented Reality, New York, NY, USA, 29–30 October 2001; pp. 137–146.

- Cutolo, F.; Fontana, U.; Carbone, M.; D’Amato, R.; Ferrari, V. Hybrid Video/Optical See-Through HMD. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 52–57.

- OpenCV. Available online: http://opencv.willowgarage.com/documentation/cpp/index.html (accessed on 12 January 2022).

- The Visualization Toolkit (VTK). Available online: http://www.vtk.org/ (accessed on 12 January 2022).

- Cutolo, F.; Fida, B.; Cattari, N.; Ferrari, V. Software Framework for Customized Augmented Reality Headsets in Medicine. IEEE Access 2020, 8, 706–720.

- Cutolo, F.; Cattari, N.; Carbone, M.; D’Amato, R.; Ferrari, V. Device-Agnostic Augmented Reality Rendering Pipeline for AR in Medicine. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; pp. 340–345.

- Zhang, Z. A flexible new technique for camera calibration. IEEE T Pattern Anal 2000, 22, 1330–1334.

- Cutolo, F.; Fontana, U.; Cattari, N.; Ferrari, V. Off-Line Camera-Based Calibration for Optical See-Through Head-Mounted Displays. Appl. Sci. 2020, 10, 193.

- VOSTARS. VOSTARS at Work in the Surgical Room. Available online: https://www.youtube.com/watch?v=N8qZUj4amm0 (accessed on 12 January 2022).

- Choi, J.-W.; Lee, J.Y. Update on Orthognathic Surgical Techniques. In The Surgery-First Orthognathic Approach; Springer: Berlin/Heidelberg, Germany, 2021; pp. 149–158.

- Naran, S.; Steinbacher, D.M.; Taylor, J.A. Current concepts in orthognathic surgery. Plast. Reconstr. Surg. 2018, 141, 925e–936e.

- Badiali, G.; Ferrari, V.; Cutolo, F.; Freschi, C.; Caramella, D.; Bianchi, A.; Marchetti, C. Augmented reality as an aid in maxillofacial surgery: Validation of a wearable system allowing maxillary repositioning. J. Cranio Maxill. Surg. 2014, 42, 1970–1976.

- Cercenelli, L.; Carbone, M.; Condino, S.; Cutolo, F.; Marcelli, E.; Tarsitano, A.; Marchetti, C.; Ferrari, V.; Badiali, G. The Wearable VOSTARS System for Augmented Reality-Guided Surgery: Preclinical Phantom Evaluation for High-Precision Maxillofacial Tasks. J. Clin. Med. 2020, 9, 3562.

- Hewlett, A.L.; Hohenberger, H.; Murphy, C.N.; Helget, L.; Hausmann, H.; Lyden, E.; Fey, P.D.; Hicks, R. Evaluation of the bacterial burden of gel nails, standard nail polish, and natural nails on the hands of health care workers. Am. J. Infect. Control. 2018, 46, 1356–1359.

- Blender Online Community. Blender: A 3D Modelling and Rendering Package; Stichting Blender Foundation: Amsterdam, The Netherlands, 2018. Available online: http://www.blender.org (accessed on 12 January 2022).

- Ferrari, V.; Carbone, M.; Cappelli, C.; Boni, L.; Melfi, F.; Ferrari, M.; Mosca, F.; Pietrabissa, A. Value of multidetector computed tomography image segmentation for preoperative planning in general surgery. Surg. Endosc. 2012, 26, 616–626.

- Jamieson, S. Likert scales: How to (ab)use them. Med. Educ. 2004, 38, 1217–1218.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).