1. Introduction

Computer programming education is key to the acquisition of 21st-century skills such as creativity, critical thinking, problem solving, communication and collaboration, social–intercultural skills, productivity, leadership, and responsibility [1]. Studies in many countries report using Scratch or games [2,3,4]. Even so, the best order in which to introduce programming concepts to CS1 students remains unclear. There are difficulties in teaching basic concepts such as program construction [5], loops [6], control structures, and algorithms [7]. These difficulties may arise from a poor teaching methodology, or even the lack of one [8,9], and teachers need some guidance to approach this task efficiently [10,11].

Papert argued that a child able to program a computer would be able to gain an actionable understanding of probabilistic behavior, as, through such activity, they would be connected with empowering knowledge about the way things work [12]. His view was that programming was a way to connect the programmer with cognitive science, in that programming enables one to articulate ideas explicitly and formally and to see whether the idea works or not. Papert encouraged one to ‘look at programming as a source of descriptive devices’ [12], predicting that, ‘in a computer-rich world, computer languages that simultaneously provide a means of control over the computer and offer new and powerful descriptive languages for thinking will … have a particular effect on our language for describing ourselves and our learning’ (p. 98). Many variables are involved in learning to code, including the learning procedure, instructional materials, and technology used, along with metacognitive factors. Others emphasized that well-designed lessons with interesting activities become meaningful only when they affect the students in the process [13]. It was also asserted that innovations in teaching methods and the use of teaching aids may improve students’ feeling of success [14] and may help them develop confidence, which correlates with the practices and theories of Piaget and Vygotsky and the adoption of constructivism in teaching [15,16,17,18,19].

Many approaches have been implemented to help students learn programming for the first time, for example, using different devices such as mobile devices [20] or different methodologies such as pair programming [21]. Students have traditionally encountered difficulties and misconceptions about the concepts learned [22]. Along with the learning of programming, researchers and educators in higher education aim to improve students’ computational thinking (CT) skills using appropriate interventions [23] and approaches that use games for teaching and learning CT principles and concepts [24].

In response to this proposition, the contribution of this paper is a rigorous study aimed at determining a satisfactory way to introduce basic programming concepts and CT at the CS1 level and at examining how this affects students’ learning gains. This paper evaluates a Guided Scratch Visual Execution Environment (VEE) developed for CS1 students as a method to teach, develop, practice, and learn computer programming. The study addresses two research questions.

Can the understanding of programming concepts be improved with a Visual Execution Environment for this cohort of CS1 students?

Which of the programming concepts tend to be more easily understood, and which are more difficult?

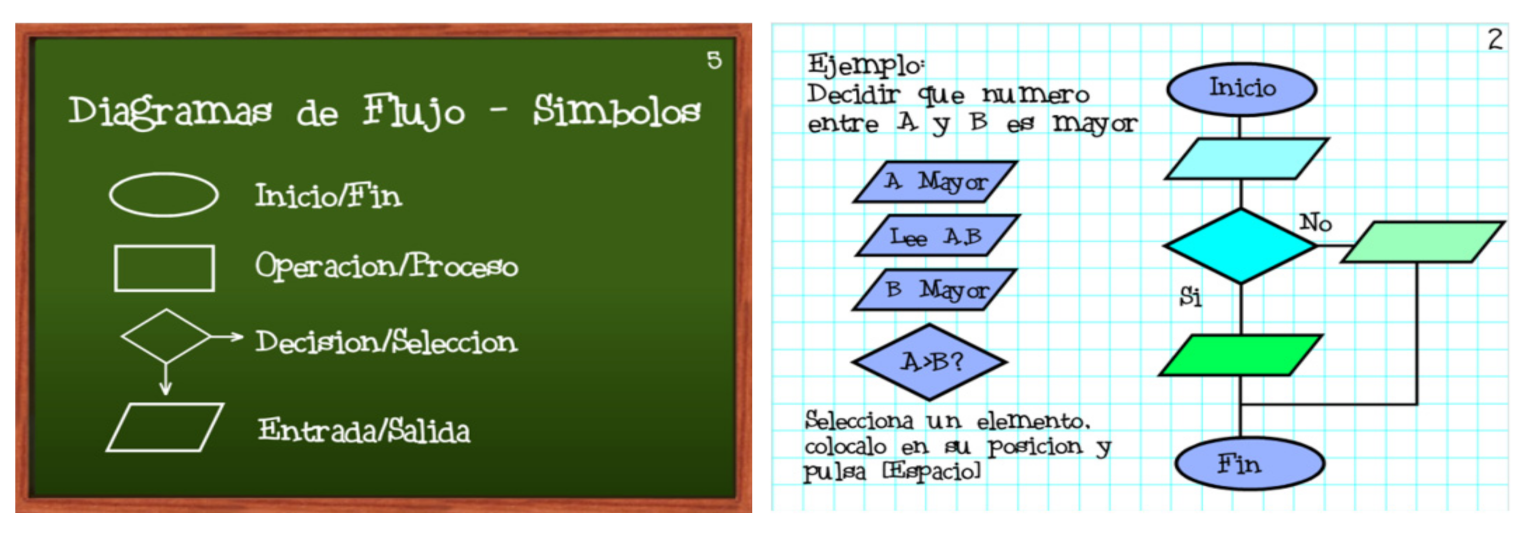

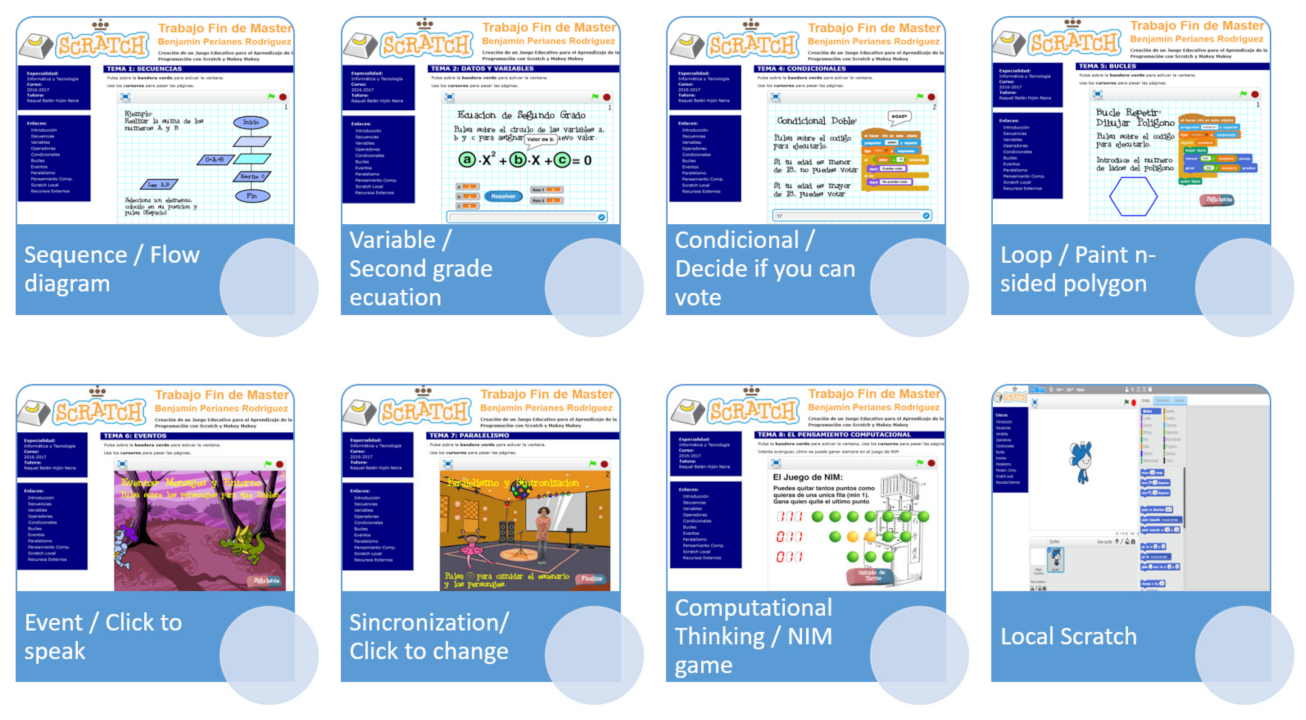

The concepts addressed here are those in a traditional ‘Introduction to Programming’ course such as: sequences, flowcharts, variables and types of data, operators, conditionals, loops, events, and parallelism.
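For readers more familiar with text-based languages, the listed concepts can be illustrated with a minimal Python sketch. This is not taken from the VEE or from the Scratch exercises used in the study; the names `classify`, `total`, `Sprite`, and `squares_in_parallel` are hypothetical and chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def classify(n):
    """Conditional and operators: pick a branch from a comparison."""
    if n % 2 == 0:
        return "even"
    return "odd"


def total(values):
    """Sequence, variable, and loop: statements run in order and update state."""
    acc = 0                # variable holding an integer
    for v in values:       # loop over the input
        acc = acc + v      # arithmetic operator
    return acc


class Sprite:
    """Events: handlers run when a named event is broadcast (hypothetical mini-API)."""

    def __init__(self):
        self.handlers = {}
        self.log = []

    def when(self, event, handler):
        # Register a handler for the given event name.
        self.handlers.setdefault(event, []).append(handler)

    def broadcast(self, event):
        # Run every handler registered for this event, in registration order.
        for handler in self.handlers.get(event, []):
            handler(self)


def square(n):
    return n * n


def squares_in_parallel(values):
    """Parallelism: independent tasks run on worker threads; map keeps input order."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        return list(pool.map(square, values))


if __name__ == "__main__":
    sprite = Sprite()
    sprite.when("green_flag", lambda s: s.log.append("started"))
    sprite.broadcast("green_flag")
    print(classify(4), total([1, 2, 3]), sprite.log, squares_in_parallel([1, 2, 3]))
```

In Scratch the same ideas appear as stacked blocks (sequence), "if" blocks (conditionals), "repeat" blocks (loops), "when ... clicked" hat blocks (events), and several scripts running at once (parallelism).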

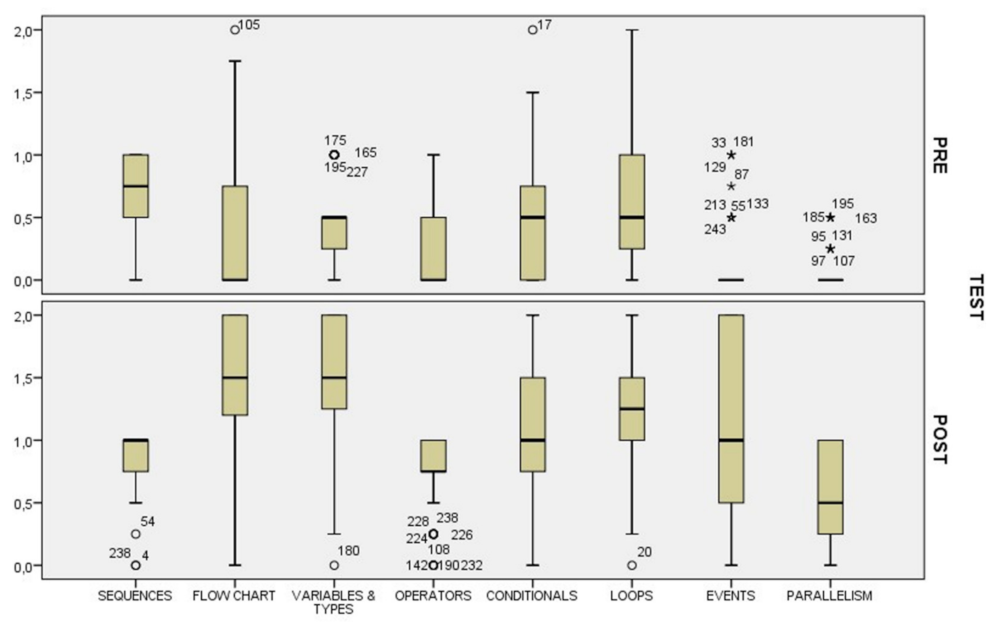

This study investigates whether a cohort of 124 CS1 students, from three distinct groups studying at the same university, are able to improve their programming skills when guided by the VEE. It also investigates whether the improvement varies across programming concepts. The CS1 students were taught the module by the same tutor. The procedure included an interactive review of the material using the Guided Scratch VEE, with an explanation of each concept through prepared ad hoc exercises based on metaphors, followed by practice with proposed exercises in Scratch. This was conducted in four 2-h sessions (8 h). A pre- and post-test evaluation measured the gains in students’ learning. The same test was used for the pre-test and the post-test, consisting of 27 short-answer questions covering the programming concepts addressed. The results demonstrate that students significantly improved their programming knowledge, and that this improvement is significant for all the programming concepts, although greater for certain concepts such as operators, conditionals, and loops. Results also demonstrate that students lacked initial knowledge of events and parallelism, although most had used Scratch in high school. The sequence concept was the most popular. A collateral finding of the study is how students’ previous knowledge and learning gains relate to the grades with which they gained access to university.
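A pre-/post-test design of this kind is often summarized by the mean gain per student and a paired test statistic. The sketch below assumes a paired t-test, which this section does not confirm as the analysis actually used, and the scores are made-up illustrative values, not data from the study. The function name `paired_gain` is hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_gain(pre, post):
    """Return (mean gain, paired t statistic) for matched pre/post scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need matched pre/post scores for at least two students")
    # Per-student improvement: post-test score minus pre-test score.
    diffs = [after - before for before, after in zip(pre, post)]
    mean_gain = mean(diffs)
    spread = stdev(diffs)  # sample standard deviation of the differences
    if spread == 0:
        raise ValueError("all gains are identical; the t statistic is undefined")
    # t = mean difference divided by its standard error.
    t_stat = mean_gain / (spread / sqrt(len(diffs)))
    return mean_gain, t_stat


# Illustrative scores only (number of correct answers out of 27).
pre_scores = [5, 8, 10, 12]
post_scores = [10, 12, 15, 20]
gain, t = paired_gain(pre_scores, post_scores)
print(f"mean gain = {gain:.2f}, t = {t:.2f}")
```

The t statistic would then be compared against a t distribution with n − 1 degrees of freedom; with a real cohort, a statistics package would normally report the p-value directly.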

5. Conclusions

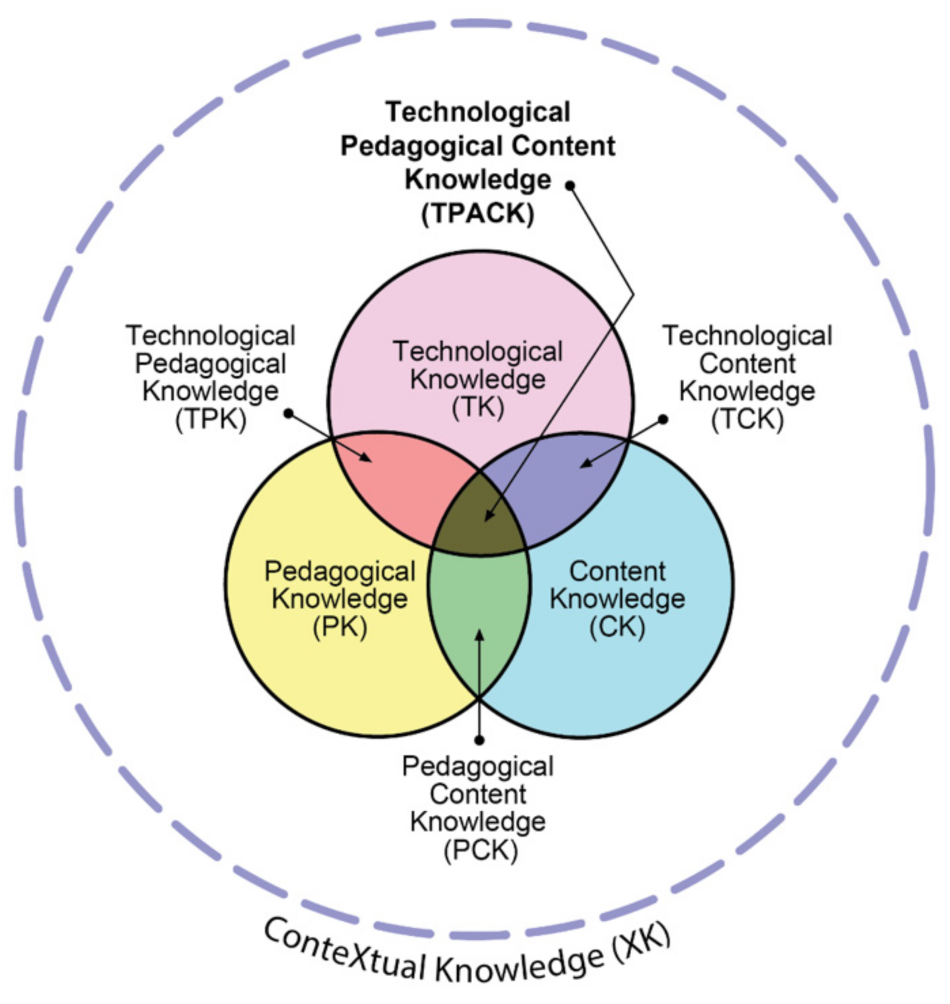

The current COVID-19 pandemic has served to underscore the importance of enhancing young people’s ability, understanding, and use of computer code to develop and deploy new software systems. Productive computer programmers must be able to apply general practices and concepts involved in computational thinking and problem solving. That said, computer science education represents a dynamically changing domain globally and has faced many challenges in teaching programming concepts. This paper uses a guided TPACK framework, incorporating a Scratch VEE for CS1 students as a method to teach and introduce programming concepts. The objective was to investigate whether the understanding of programming concepts could be improved by applying this approach, and whether CS1 students from different groups of the same undergraduate degree at one university were able to improve their programming skills.

This paper had two research questions, the first being, ‘Can programming concepts be improved with a TPACK Visual Execution Environment for a cohort of 124 CS1 students?’ Statistically significant knowledge improvement was observed in both cases: when studying the results as a whole (all students) and when dividing them into their own groups (Ferraz, Mostoles, and both), in which case the results vary slightly depending on the students’ previous knowledge and origin. The second research question asked whether there were differences in the learning path of programming concepts, which could imply that some concepts are easier for students to understand than others. The study observed that there are differences depending on the concept being addressed and the students’ initial knowledge of each. Students lacked previous knowledge about flow charts, variables, and data types, in which they showed great improvement. Much the same happened with operators, conditionals, and loops, although initial knowledge of these was higher. Moreover, in the case of events and parallelism, students did not know about them at all in the pre-test, and after using the VEE, their knowledge improved considerably.
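The per-concept comparison described above can be sketched as a mean gain computed separately for each concept and then ranked. The data layout (a dictionary from concept name to per-student fractions of correct answers) and the function name `gain_by_concept` are assumptions made for illustration, and the numbers are invented, not the study's results.

```python
from statistics import fmean


def gain_by_concept(pre, post):
    """Return (concept, mean gain) pairs sorted from largest to smallest gain."""
    gains = {}
    for concept, pre_scores in pre.items():
        post_scores = post[concept]
        if len(pre_scores) != len(post_scores):
            raise ValueError(f"unmatched scores for concept {concept!r}")
        # Average the per-student improvement for this concept.
        gains[concept] = fmean(b - a for a, b in zip(pre_scores, post_scores))
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)


# Illustrative fractions of correct answers per student (hypothetical values).
pre = {"loops": [0.5, 0.5], "events": [0.0, 0.0]}
post = {"loops": [0.75, 1.0], "events": [0.5, 0.75]}

for concept, gain in gain_by_concept(pre, post):
    print(f"{concept}: mean gain {gain:.3f}")
```

A ranking like this separates concepts with low initial knowledge and large gains from concepts where students started higher and had less room to improve.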

Another conclusion of the study is that ‘origin’ matters to CS1 students. The three groups of students followed the same procedure and had the same teachers, but their initial knowledge and knowledge gain were not the same for the three groups. The high-school grades with which students accessed university indicated more prior knowledge for the groups that scored higher.

This work was a quasi-experimental case study with CS1 students. Although it involved different groups of students belonging to the same degree, it was conducted in one country and therefore has the primary limitation of a narrow focus. The results point to findings that require further evaluation, but such an approach does not support generalization.

While this VEE is not a panacea for all programming comprehension and learning, this paper has demonstrated that by using metaphors and Scratch programs to explain and practice the programming concepts addressed in this paper, the VEE effectively guides CS1 students in learning programming concepts in a short period of time. Once the concepts and practices foundational to programming are understood, the students can continue their practice and become competent and productive computer programmers.

Author Contributions

R.H.-N.; investigation, R.H.-N., D.P.-A., C.C. and O.B.-G.; resources, R.H.-N., C.C., D.P.-A. and O.B.-G.; data curation, R.H.-N., C.C. and O.B.-G.; writing—original draft–preparation, R.H.-N., D.P.-A. and O.B.-G.; writing—review and editing, R.H.-N. and C.C.; visualization, R.H.-N. and D.P.-A.; supervision, R.H.-N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Ministerio de Economía y Competitividad under Grant TIN2015-66731-C2-1-R, in part by the Rey Juan Carlos University under Grant 30VCPIGI15, in part by the Madrid Regional Government, through the project e-Madrid-CM, under Grant P2018/TCS-4307, and in part by the Structural Funds (FSE and FEDER).

Data Availability Statement

All datasets are available and can be requested from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lau, W.W.; Yuen, A.H. Modelling programming performance: Beyond the influence of learner characteristics. Comput. Educ. 2011, 57, 1202–1213.

- Campe, S.; Denner, J. Programming Games for Learning: A Research Synthesis; American Educational Research Association (AERA): Chicago, IL, USA, 2015.

- Jovanov, M.; Stankov, E.; Mihova, M.; Ristov, S.; Gusev, M. Computing as a new compulsory subject in the Macedonian primary schools curriculum. In Proceedings of the 2016 IEEE Global Engineering Education Conference (EDUCON), Abu Dhabi, United Arab Emirates, 10–13 April 2016.

- Ouahbi, I.; Kaddari, F.; Darhmaoui, H.; Elachqar, A.; Lahmine, S. Learning Basic Programming Concepts by Creating Games with Scratch Programming Environment. Procedia Soc. Behav. Sci. 2015, 191, 1479–1482.

- Lahtinen, E.; Ala-Mutka, K.; Järvinen, H.-M. A study of the difficulties of novice programmers. ACM SIGCSE Bull. 2005, 37, 14–18.

- Ginat, D. On Novice Loop Boundaries and Range Conceptions. Comput. Sci. Educ. 2004, 14, 165–181.

- Seppälä, O.; Malmi, L.; Korhonen, A. Observations on student misconceptions: A case study of the Build-Heap Algorithm. Comput. Sci. Educ. 2006, 16, 241–255.

- Barker, L.J.; McDowell, C.; Kalahar, K. Exploring factors that influence computer science introductory course students to persist in the major. ACM SIGCSE Bull. 2009, 41, 153–157.

- Coull, N.J.; Duncan, I. Emergent Requirements for Supporting Introductory Programming. Innov. Teach. Learn. Inf. Comput. Sci. 2011, 10, 78–85.

- Yadav, A.; Gretter, S.; Hambrusch, S.; Sands, P. Expanding computer science education in schools: Understanding teacher experiences and challenges. Comput. Sci. Educ. 2016, 26, 235–254.

- Yadav, A.; Mayfield, C.; Zhou, N.; Hambrusch, S.; Korb, T. Computational Thinking in Elementary and Secondary Teacher Education. ACM Trans. Comput. Educ. 2014, 14, 1–16.

- Papert, S. Mindstorms: Children, Computers, and Powerful Ideas; Basic Books: New York, NY, USA, 1980.

- Astin, W.A. College Retention Rates Are often Misleading; Chronicle of Higher Education: Washington, DC, USA, 1993.

- Stuart, V.B. Math Course or Math Anxiety? Natl. Counc. Teach. Math. 2000, 6, 330.

- Piaget, J. The Moral Judgement of the Child; Penguin Books: New York, NY, USA, 1932.

- Piaget, J. Origins of Intelligence in Children; International Universities Press: New York, NY, USA, 1952.

- Vygotsky, L.S. Thought and Language, 2nd ed.; MIT Press: Cambridge, MA, USA, 1962.

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Process; Harvard University Press: Cambridge, MA, USA, 1978.

- Vygotsky, L.S. The Genesis of Higher Mental Functions. In Cognitive Development to Adolescence; Richardson, K., Sheldon, S., Eds.; Erlbaum: Hove, UK, 1988.

- Maleko, M.; Hamilton, M.; D’Souza, D. Novices’ Perceptions and Experiences of a Mobile Social Learning Environment for Learning of Programming. In Proceedings of the 12th International Conference on Innovation and Technology in Computer Science Education (ITiCSE), Haifa, Israel, 3–5 July 2012.

- Williams, L.; Wiebe, E.; Yang, K.; Ferzli, M.; Miller, C. In Support of Pair Programming in the Introductory Computer Science Course. Comput. Sci. Educ. 2002, 12, 197–212.

- Renumol, V.; Jayaprakash, S.; Janakiram, D. Classification of Cognitive Difficulties of Students to Learn Computer Programming; Indian Institute of Technology: New Delhi, India, 2009; p. 12.

- De Jong, I.; Jeuring, J. Computational Thinking Interventions in Higher Education. In Proceedings of the 20th Koli Calling International Conference on Computing Education Research, Koli, Finland, 19–22 November 2020.

- Agbo, F.J.; Oyelere, S.S.; Suhonen, J.; Laine, T.H. Co-design of mini games for learning computational thinking in an online environment. Educ. Inf. Technol. 2021, 26, 5815–5849.

- Jenkins, T. The motivation of students of programming. ACM SIGCSE Bull. 2001, 33, 53–56.

- Kurland, D.M.; Pea, R.D.; Lement, C.C.; Mawby, R. A Study of the Development of Programming Ability and Thinking Skills in High School Students. J. Educ. Comput. Res. 1986, 2, 429–458.

- Brooks, F.P. No Silver Bullet: Essence and Accidents of Software Engineering. In Proceedings of the Tenth World Computing Conference, Dublin, Ireland, 1–5 September 1986; pp. 1069–1076.

- Mishra, D.; Ostrovska, S.; Hacaloglu, T. Exploring and expanding students’ success in software testing. Inf. Technol. People 2017, 30, 927–945.

- Clancy, M.J.; Linn, M.C. Case studies in the classroom. ACM SIGCSE Bull. 1992, 24, 220–224.

- Chandramouli, M.; Zahraee, M.; Winer, C. A fun-learning approach to programming: An adaptive Virtual Reality (VR) platform to teach programming to engineering students. In Proceedings of the IEEE International Conference on Electro/Information Technology, Milwaukee, WI, USA, 5–7 July 2014.

- Silapachote, P.; Srisuphab, A. Teaching and learning computational thinking through solving problems in Artificial Intelligence: On designing introductory engineering and computing courses. In Proceedings of the 2016 IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE), Bangkok, Thailand, 7–9 December 2016.

- Liu, C.-C.; Cheng, Y.-B.; Huang, C.-W. The effect of simulation games on the learning of computational problem solving. Comput. Educ. 2011, 57, 1907–1918.

- Kazimoglu, C.; Kiernan, M.; Bacon, L.; Mackinnon, L. A Serious Game for Developing Computational Thinking and Learning Introductory Computer Programming. Procedia Soc. Behav. Sci. 2012, 47, 1991–1999.

- Kazimoglu, C.; Kiernan, M.; Bacon, L.; MacKinnon, L. Learning Programming at the Computational Thinking Level via Digital Game-Play. Procedia Comput. Sci. 2012, 9, 522–531.

- Saad, A.; Shuff, T.; Loewen, G.; Burton, K. Supporting undergraduate computer science education using educational robots. In Proceedings of the ACMSE 2018 Conference, Tuscaloosa, AL, USA, 29–31 March 2012.

- Weintrop, W.; Wilensky, U. Comparing Block-Based and Text-Based Programming in High School Computer Science Classrooms. ACM Trans. Comput. Educ. 2017, 18, 1.

- Martínez-Valdés, J.A.; Velázquez-Iturbide, J.; Neira, R.H. A (Relatively) Unsatisfactory Experience of Use of Scratch in CS1. In Proceedings of the 5th International Conference on Technological Ecosystems for Enhancing Multiculturality, Cadiz, Spain, 18–20 October 2017.

- Aristawati, F.A.; Budiyanto, C.; Yuana, R.A. Adopting Educational Robotics to Enhance Undergraduate Students’ Self-Efficacy Levels of Computational Thinking. J. Turk. Sci. Educ. 2018, 15, 42–50.

- Basu, S.; Biswas, G.; Kinnebrew, J.S. Learner modeling for adaptive scaffolding in a Computational Thinking-based science learning environment. User Model. User-Adapted Interact. 2017, 27, 5–53.

- Benakli, N.; Kostadinov, B.; Satyanarayana, A.; Singh, S. Introducing computational thinking through hands-on projects using R with applications to calculus, probability and data analysis. Int. J. Math. Educ. Sci. Technol. 2016, 48, 393–427.

- Cai, J.; Yang, H.H.; Gong, D.; MacLeod, J.; Jin, Y. A Case Study to Promote Computational Thinking: The Lab Rotation Approach. In Blended Learning: Enhancing Learning Success; Cheung, S.K.S., Kwok, L., Kubota, K., Lee, L.K., Tokito, J., Eds.; Springer: Cham, Switzerland, 2018; pp. 393–403.

- Dodero, J.M.; Mota, J.M.; Ruiz-Rube, I. Bringing computational thinking to teachers’ training. In Proceedings of the 5th International Conference on Technological Ecosystems for Enhancing Multiculturality, Cádiz, Spain, 18–20 October 2017.

- Gabriele, L.; Bertacchini, F.; Tavernise, A.; Vaca-Cárdenas, L.; Pantano, P.; Bilotta, E. Lesson Planning by Computational Thinking Skills in Italian Pre-service Teachers. Inform. Educ. 2019, 18, 69–104.

- Curzon, P.; McOwan, P.W.; Plant, N.; Meagher, L.R. Introducing teachers to computational thinking using unplugged storytelling. In Proceedings of the 9th Workshop in Primary and Secondary Computing Education, Berlin, Germany, 5 November 2014; pp. 89–92.

- Jaipal-Jamani, K.; Angeli, C. Effect of robotics on elementary preservice teachers’ self-efficacy, science learning, and computational thinking. J. Sci. Educ. Technol. 2017, 26, 175–192.

- Hsu, T.-C.; Chang, S.-C.; Hung, Y.-T. How to learn and how to teach computational thinking: Suggestions based on a review of the literature. Comput. Educ. 2018, 126, 296–310.

- Fogg, B.J. A behavior model for persuasive design. In Proceedings of the 4th International Conference on Persuasive Technology, Claremont, CA, USA, 26–29 April 2009; pp. 1–7.

- Piaget, J.; Inhelder, B. Memory and Intelligence; Basic Books: New York, NY, USA, 1973.

- Mishra, P.; Koehler, M. Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054.

- Brennan, K.; Resnick, M. New Frameworks for Studying and Assessing the Development of Computational Thinking; American Educational Research Association: Vancouver, BC, Canada, 2012.

- Mishra, P.; Koehler, M.J. Introducing Technological Pedagogical Content Knowledge; American Educational Research Association: Vancouver, BC, Canada, 2008; pp. 1–16.

- Diéguez, J.L.R. Metaphors in Teaching; Revista Interuniversitaria de Didáctica, Universidad de Salamanca: Salamanca, Spain, 1988; pp. 223–240.

- Bouton, C.L. Nim, a Game with a Complete Mathematical Theory. Ann. Math. 1901, 3, 35.

- Ramírez, S.U. Informática y teorías del aprendizaje. Píxel-Bit. Rev. Medios Educ. 1999, 12, 87–100.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).