Product Customer Satisfaction Measurement Based on Multiple Online Consumer Review Features

Abstract

1. Introduction

2. Related Work

2.1. Customer Satisfaction Measurement

2.2. Information-Gain-Algorithm-Related Theories

3. Methodology

3.1. Assumptions and Symbol Descriptions

- Star ratings are consistent with the review text in the level of satisfaction they express.

- The more recently a star rating was given, the greater its influence on potential customers.

- Customers’ ratings and reviews truly reflect their level of satisfaction with the product.

- All data obtained are trustworthy, since all of the sources are reliable.

3.2. An Improved Information Gain Model

3.3. Determinate Factors on Review Usefulness

3.4. Customer Satisfaction Model

4. Experimental Results and Analysis

4.1. Data Sets

4.2. Informative Words under Different Star Ratings

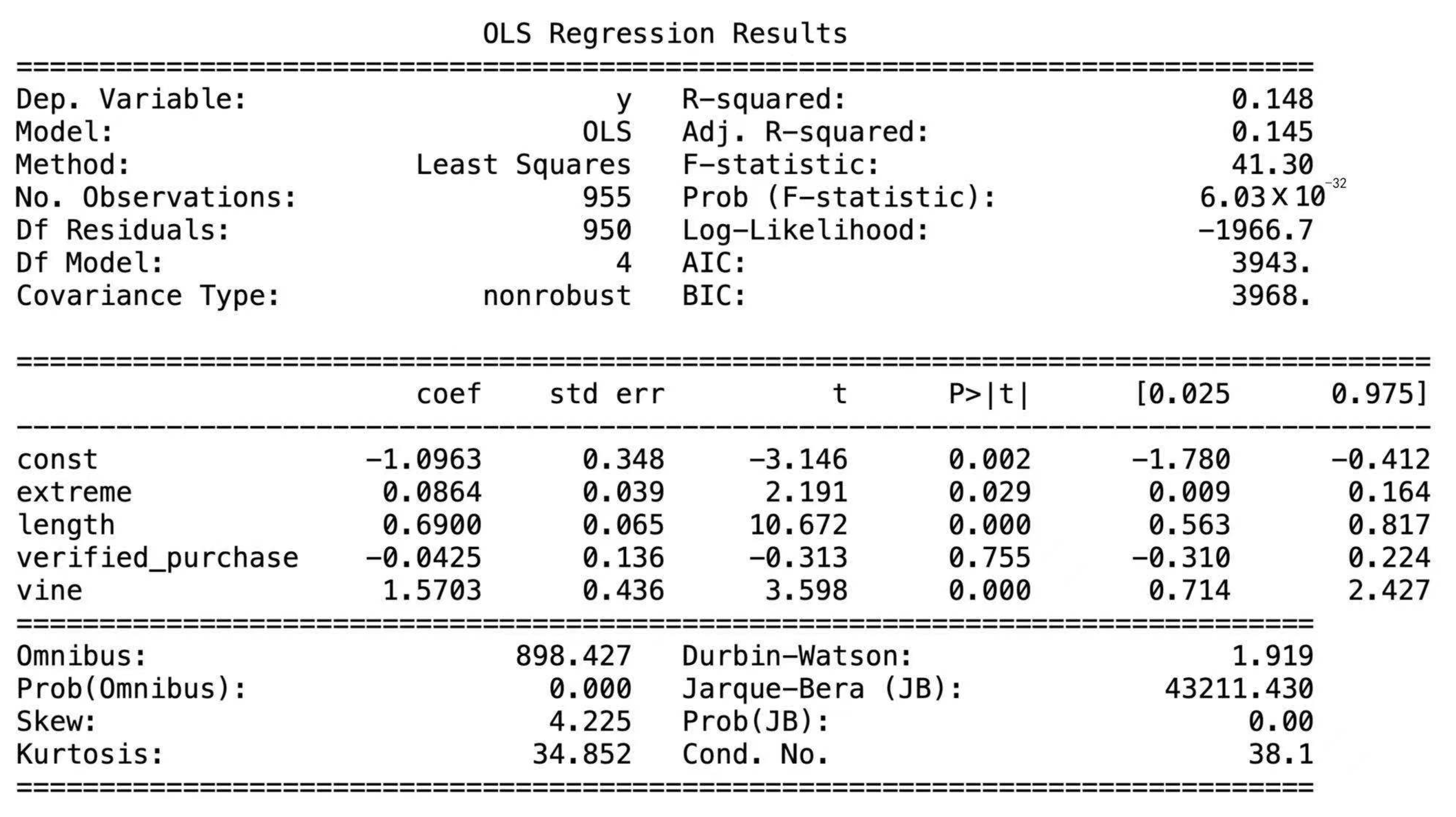

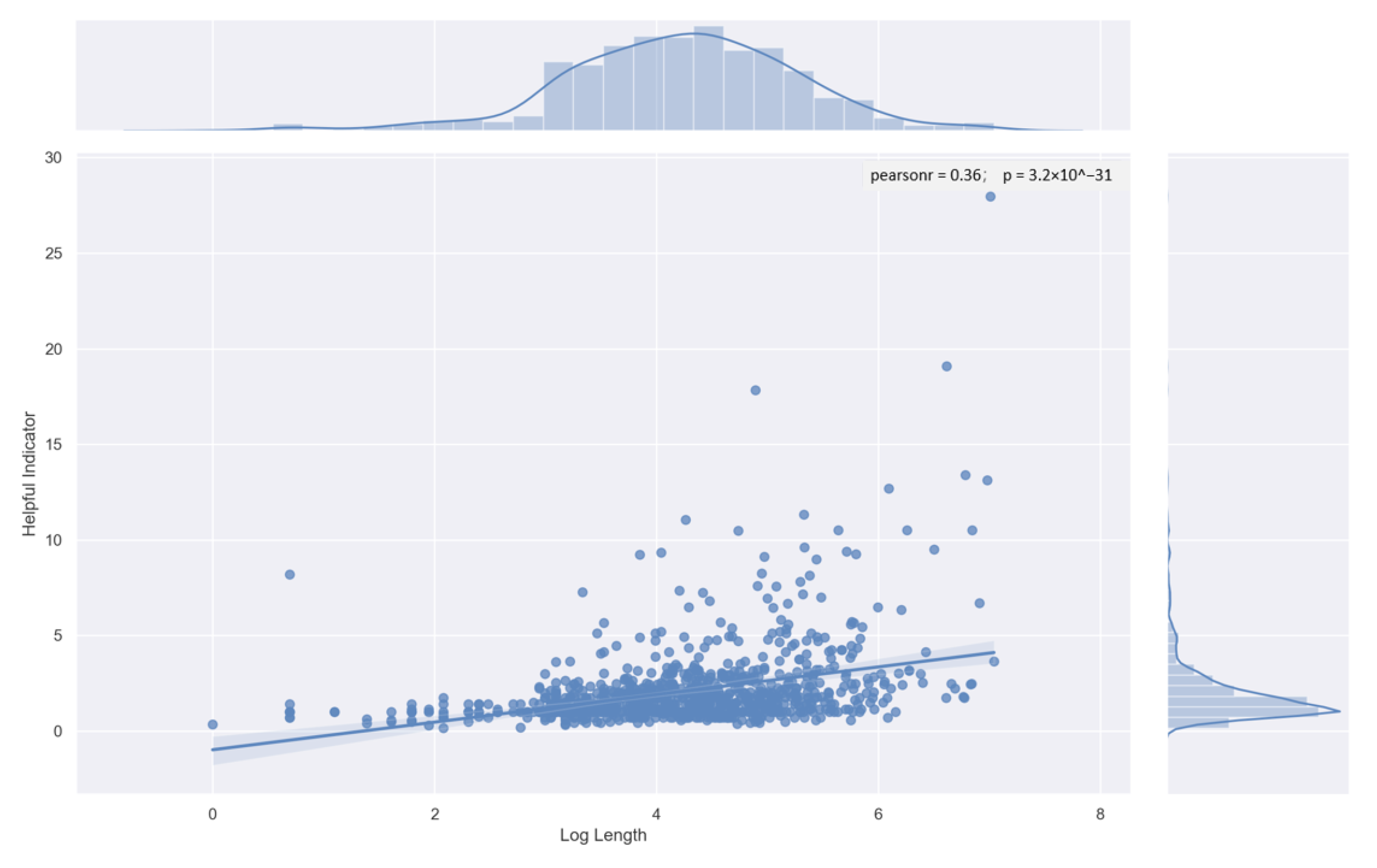

4.3. Review Usefulness Linear Regression Results

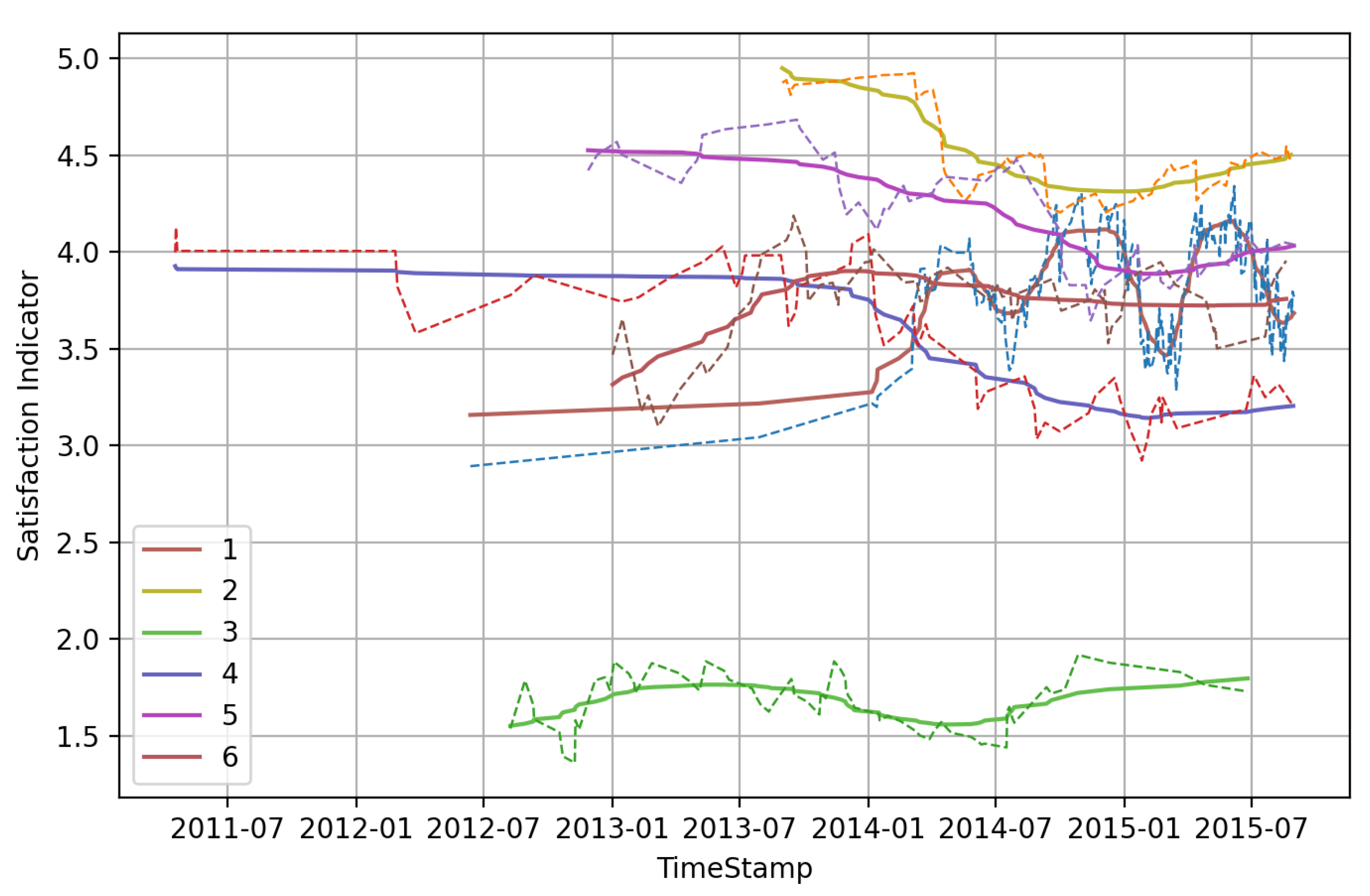

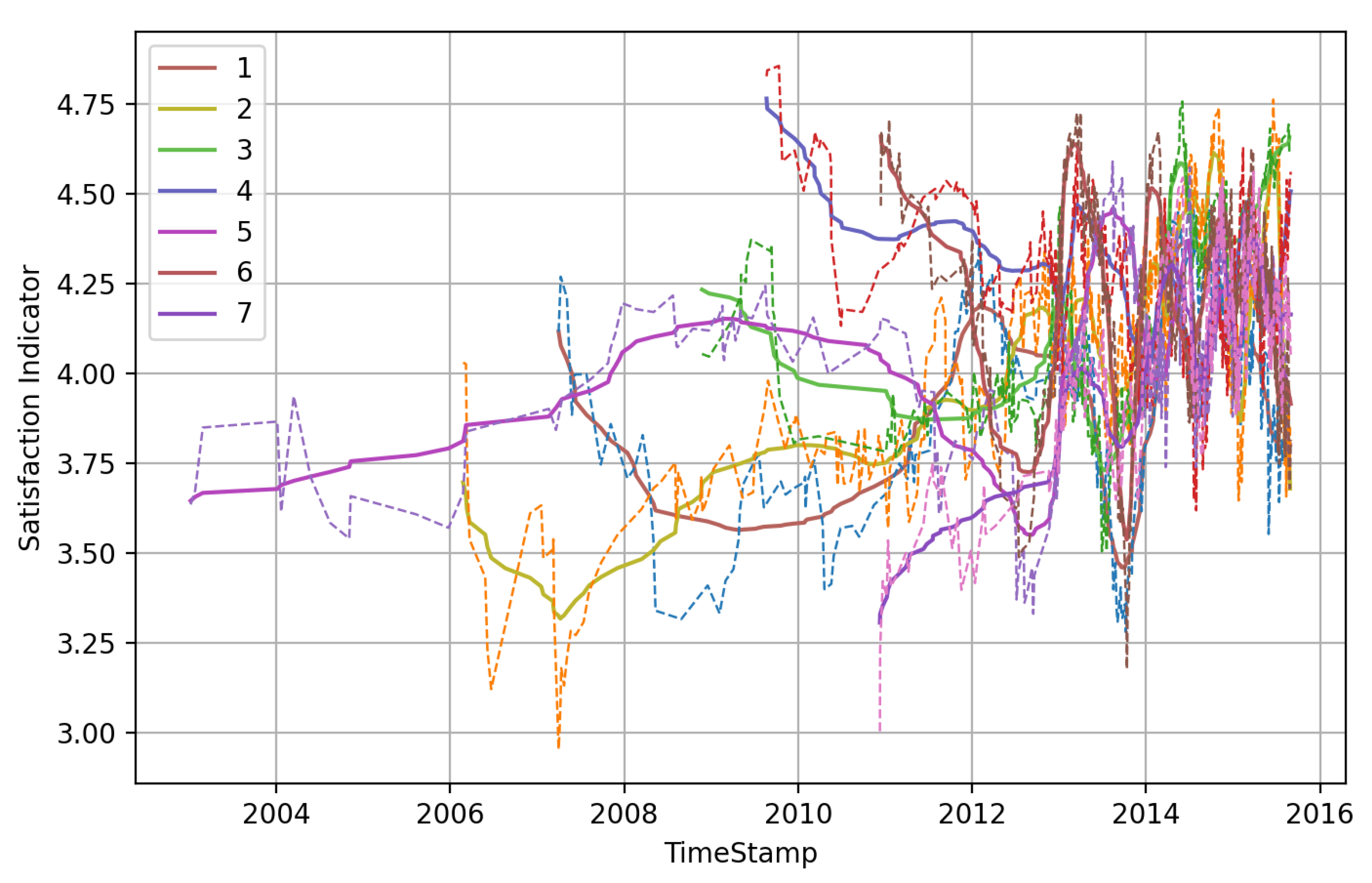

4.4. Customer Satisfaction Experimental Results

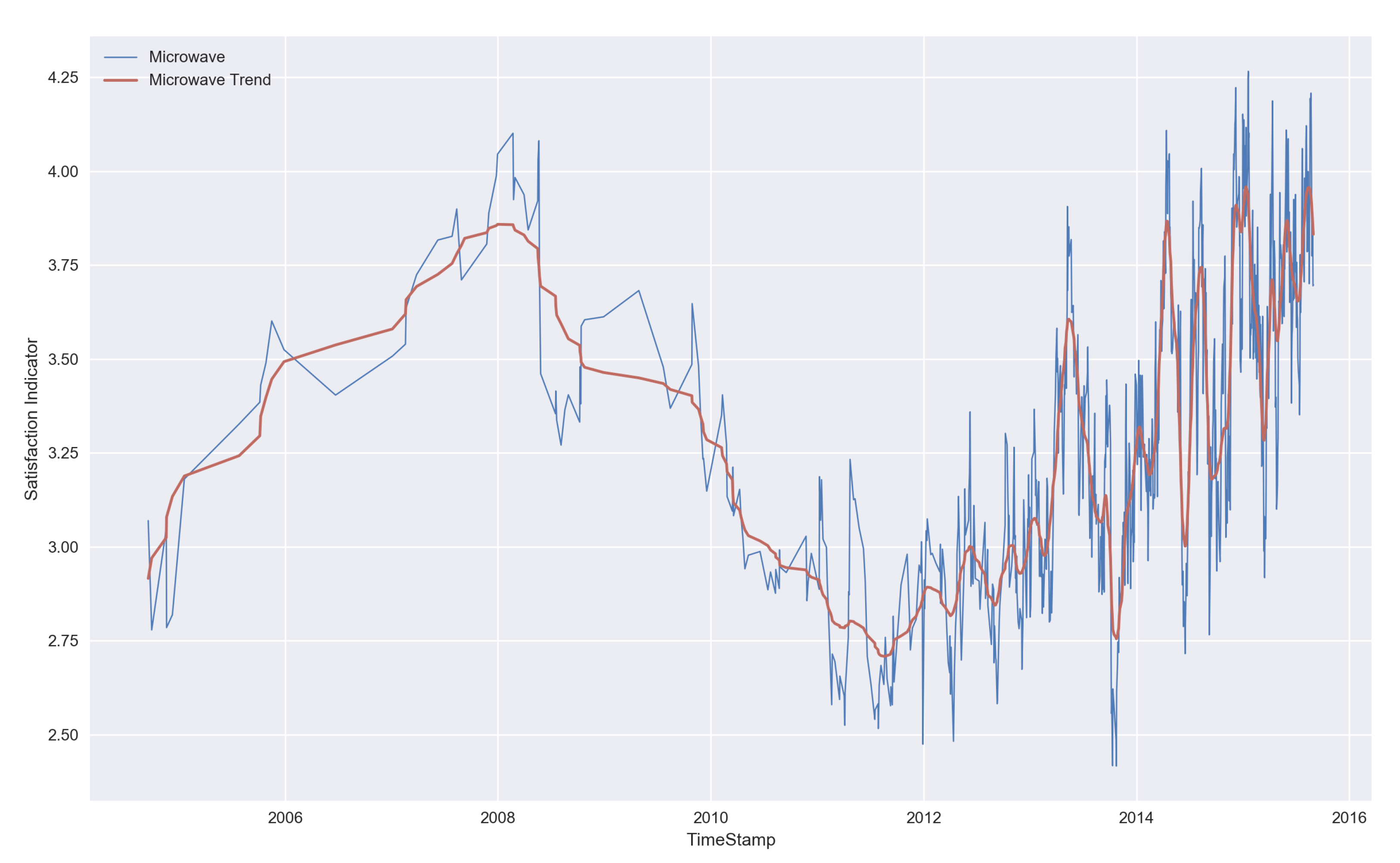

4.5. Discussion of Customer Satisfaction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Symbols | Description |
|---|---|
| i | Index of a rating record |
| t | Index of an evaluation |
| | Helpful votes |
| | Total votes |
| | Helpful rating |
| | Log length of a review |
| | Effectiveness of star rating |
| | The influence of Amazon Vine Voices on |
| | The influence of on |
| | Star rating |
| | Effectiveness-weighted star rating |
| | Decay parameter of the exponential moving average (EMA) |
| k | The length of one roll |
| N | The number of rating records in one rolling window |
| | Weighted satisfaction indicator |
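The symbol table lists a decay parameter for an exponential moving average (EMA), a roll length k and a window size N, but the corresponding formulas are not reproduced in this version of the text. The following Python sketch shows one standard way such recency weighting could be computed, in line with the assumption that more recent star ratings carry more weight. The function names, the parameter `alpha` and the windowing scheme (windows of N records advanced by k records) are assumptions for illustration, not the authors' implementation.

```python
from typing import List, Sequence


def ema(ratings: Sequence[float], alpha: float) -> float:
    """Exponential moving average of chronologically ordered star ratings.

    Each new rating x updates the running value s as
    s = alpha * x + (1 - alpha) * s, so later ratings carry more weight.
    `alpha` in (0, 1] is a hypothetical stand-in for the decay parameter.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    smoothed = float(ratings[0])  # seed with the oldest rating
    for x in ratings[1:]:
        smoothed = alpha * x + (1.0 - alpha) * smoothed
    return smoothed


def rolling_ema(ratings: Sequence[float], window: int, step: int, alpha: float) -> List[float]:
    """EMA of each window of `window` records (N), advancing by `step` records (k).

    Trailing records that do not fill a complete window are ignored.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [
        ema(ratings[start:start + window], alpha)
        for start in range(0, len(ratings) - window + 1, step)
    ]


if __name__ == "__main__":
    # Hypothetical star ratings, oldest first.
    stars = [5, 4, 1, 2, 5, 5, 3, 4]
    print(rolling_ema(stars, window=4, step=2, alpha=0.5))
```

A larger `alpha` makes the smoothed value track the newest ratings more closely; with `alpha = 1` each window reduces to its most recent rating.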

| Product | Microwave | Baby Pacifier | Hair Dryer |
|---|---|---|---|
| Number of records | 18,939 | 1615 | 11,470 |
| Number of brands | 5464 | 57 | 499 |

| Feature | Description |
|---|---|
| Customer_id | Random identifier that can be used to aggregate reviews written by a single author |
| Review_id | The unique ID of the review |
| Product_id | The unique product ID the review pertains to |
| Star_rating | The 1–5 star rating of the review |
| Helpful_votes | Number of helpful votes the review received |
| Total_votes | Number of total votes the review received |
| Vine | Customers are invited to become Amazon Vine Voices based on the trust that they have earned in the Amazon community for writing accurate and insightful reviews. Amazon provides Amazon Vine members with free copies of products that have been submitted to the program by vendors. Amazon does not influence the opinions of Amazon Vine members, nor does it modify or edit their reviews. |
| Verified_purchase | A “Y” indicates that Amazon verified that the person writing the review purchased the product at Amazon and did not receive the product at a deep discount. |
| Review_date | The date the review was written |
| Review_title | The title of the review |
| Review_body | The review text |
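Several symbols in the symbol table (helpful votes, total votes, helpful rating, log length of a review, Vine status) map onto fields of the dataset. The Python sketch below shows how per-review features might be derived, assuming helpful rating = Helpful_votes / Total_votes (set to 0 when a review has no votes) and log length = ln(1 + word count). Both definitions and all names (`Review`, `helpful_rating`, `log_length`, `feature_vector`) are hypothetical, since the paper's exact formulas are not shown here.

```python
import math
from dataclasses import dataclass


@dataclass
class Review:
    """Subset of the dataset fields listed in the feature table."""
    star_rating: int
    helpful_votes: int
    total_votes: int
    vine: str               # "Y" if written by an Amazon Vine Voice
    verified_purchase: str  # "Y" if Amazon verified the purchase
    review_body: str


def helpful_rating(r: Review) -> float:
    """Share of votes that were 'helpful'; 0.0 when nobody voted (assumed convention)."""
    return r.helpful_votes / r.total_votes if r.total_votes > 0 else 0.0


def log_length(r: Review) -> float:
    """Natural log of (1 + word count); the +1 keeps empty reviews finite (assumption)."""
    return math.log1p(len(r.review_body.split()))


def feature_vector(r: Review) -> tuple:
    """(helpful rating, log length, Vine flag, verified-purchase flag)."""
    return (
        helpful_rating(r),
        log_length(r),
        1.0 if r.vine == "Y" else 0.0,
        1.0 if r.verified_purchase == "Y" else 0.0,
    )


if __name__ == "__main__":
    review = Review(5, 3, 4, "N", "Y", "Heats evenly and looks great")
    print(feature_vector(review))
```

Vectors of this form could then serve as regressors in a linear model of review usefulness; which variables the authors actually used is described in their Section 3.3, not here.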

| 1 star | 2 stars | 3 stars | 4 stars | 5 stars |
|---|---|---|---|---|
| not buy | pem | Slim | four | five |
| junk | pause | Pans | emblem | love |
| calls | nozzle | Fry | preheat | perfect |
| board | powers | Guide | racks | fantastic |
| fire | grinding | Sides | fancy | coats |
| garbage | veggies | careful | grilling | excellent |
| recall | stovetop | straight | value | foot |
| worst | dual | breaded | effort | awesome |
| code | filters | Wood | menu | limited |
| repairman | bulb | Filter | mount | crispy |

| 1 star | 2 stars | 3 stars | 4 stars | 5 stars |
|---|---|---|---|---|
| fake | tiles | three | four | five |
| junk | engineering | defeats | channel | saver |
| unsafe | boat | bumbleride | vest | lifesaver |
| worst | holy | not horrible | drawback | gifts |
| not suitable | collapses | flight | drapes | cutest |
| refund | relaxes | not necessary | downfall | amazing |
| not waste | streaming | placemat | complaint | brilliant |
| waste | retains | quarter | minor | penny |
| not safe | cameras | alert | overall | excelente |
| horrible | matte | not favorite | limbs | excellent |

| 1 star | 2 stars | 3 stars | 4 stars | 5 stars |
|---|---|---|---|---|
| junk | not lock | Advance | four | five |
| garbage | not meet | not bad | diffuser | loves |
| dangerous | not recomend | Heads | powder | love |
| waste | cotton | Alright | tan | best |
| worst | substituted | Wavers | complaint | amazing |
| not buy | released | not impressed | minor | excellent |
| refund | lemon | Studio | brown | awesome |
| awful | taste | Philips | elastic | happier |
| exploded | sparking | Okay | cords | fantastic |
| needless | excessive | Loop | only | wonderful |
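The informative-word lists above are presumably produced by the improved information gain model of Section 3.2, whose details are not included in this text. As a point of reference only, the Python sketch below computes the standard (unimproved) information gain of a term with respect to star-rating classes, IG(t) = H(C) - [P(t) H(C|t) + P(not t) H(C|not t)], treating each review as a set of tokens. The function names and the toy reviews are illustrative assumptions.

```python
import math
from collections import Counter
from typing import Hashable, Iterable, List, Set


def entropy(counts: Iterable[int]) -> float:
    """Shannon entropy (bits) of a class distribution given as raw counts."""
    counts = [c for c in counts if c > 0]
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts) if total else 0.0


def information_gain(docs: List[Set[str]], labels: List[Hashable], term: str) -> float:
    """IG(term) = H(C) - [P(t) H(C | t) + P(not t) H(C | not t)].

    `docs` holds the set of tokens of each review and `labels` its star rating.
    """
    if not docs or len(docs) != len(labels):
        raise ValueError("docs and labels must be non-empty and equally long")
    n = len(docs)
    with_term = Counter(y for d, y in zip(docs, labels) if term in d)
    without_term = Counter(y for d, y in zip(docs, labels) if term not in d)
    n_with = sum(with_term.values())
    conditional = (n_with / n) * entropy(with_term.values()) + (
        (n - n_with) / n
    ) * entropy(without_term.values())
    return entropy(Counter(labels).values()) - conditional


if __name__ == "__main__":
    # Toy reviews (token sets) and their star ratings.
    reviews = [{"junk", "refund"}, {"junk", "waste"}, {"love", "five"}, {"love", "perfect"}]
    stars = [1, 1, 5, 5]
    vocab = sorted(set().union(*reviews))
    for word in sorted(vocab, key=lambda w: -information_gain(reviews, stars, w)):
        print(word, round(information_gain(reviews, stars, word), 4))
```

Plain information gain is symmetric: a word that is strongly absent from a class scores as high as one that is strongly present. Building separate lists per star rating would therefore need an extra step, for example a one-vs-rest labeling combined with a check on which side the term occurs; this is an assumption about how such tables could be produced, not a description of the authors' procedure.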

| Statistic | Microwave | Pacifier | Hair Dryer |
|---|---|---|---|
| Mean | 3.4446 | 4.3046 | 4.1160 |
| Variance | 2.7068 | 1.4171 | 1.6909 |
| Coefficient of Variation | 0.4776 | 0.2766 | 0.3159 |
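The coefficient of variation (CV) in the table is the standard deviation divided by the mean, i.e. the square root of the variance over the mean. The short Python check below recomputes it from the reported means and variances; the dictionary simply restates the table's numbers, and the function name is illustrative.

```python
import math

# (mean, variance, reported CV) of star ratings, restated from the table.
REPORTED = {
    "Microwave": (3.4446, 2.7068, 0.4776),
    "Pacifier": (4.3046, 1.4171, 0.2766),
    "Hair Dryer": (4.1160, 1.6909, 0.3159),
}


def coefficient_of_variation(mean: float, variance: float) -> float:
    """CV = standard deviation / mean (dimensionless spread of the ratings)."""
    if mean == 0:
        raise ValueError("CV is undefined for a zero mean")
    return math.sqrt(variance) / mean


if __name__ == "__main__":
    for product, (mean, var, cv_reported) in REPORTED.items():
        cv = coefficient_of_variation(mean, var)
        print(f"{product}: recomputed {cv:.4f}, reported {cv_reported:.4f}")
```

The recomputed values agree with the reported ones to within 0.0001; the pacifier value comes out as 0.2765 rather than 0.2766, a difference that could stem from rounding of the reported variance or from the choice of variance estimator, which the text does not state. A higher CV, as for the microwave data, indicates more dispersed ratings relative to their mean.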

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Wan, Y.; Shen, X.; Ye, Z.; Wen, J. Product Customer Satisfaction Measurement Based on Multiple Online Consumer Review Features. Information 2021, 12, 234. https://doi.org/10.3390/info12060234
