1. Introduction

With the advancement of data collection and processing technologies, network-structured data have become ubiquitous. An information network is a direct and natural way to organize data from a wide variety of real-world systems, such as social networks, citation networks, web networks, and the Internet. Mining hidden information from such networks is essential because it helps us understand the underlying systems and can benefit the corresponding applications. For example, once the community structure of an online social network has been detected, better friend recommendations can be made, because users within the same community generally have similar roles or properties and are more likely to become friends. The efficiency of network data analysis relies heavily on how a network is represented. Generally, a network is denoted as a graph consisting of a node set that represents the objects in a system, an edge set that represents the connections among these objects, and side information associated with the nodes and edges, such as node labels and edge weights. However, because graph nodes are interconnected in complicated ways, analysis performed directly on this representation incurs high computational complexity and is hard to parallelize. For large-scale networks, it may even make the analysis infeasible in terms of computing time.
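As a rough illustration of the graph representation described above, the following sketch stores a node set, an edge set, and side information in one container. The class name `Network` and its fields are hypothetical names chosen for this example and do not come from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    """Toy container: node set, edge set, and optional side information."""
    nodes: set = field(default_factory=set)
    edges: set = field(default_factory=set)            # undirected edges as frozensets
    node_labels: dict = field(default_factory=dict)    # side information on nodes
    edge_weights: dict = field(default_factory=dict)   # side information on edges

    def add_edge(self, u, v, weight=1.0):
        self.nodes.update((u, v))
        edge = frozenset((u, v))
        self.edges.add(edge)
        self.edge_weights[edge] = weight

    def neighbors(self, u):
        # Scans the whole edge set, so the cost grows with the number of edges.
        return {w for e in self.edges if u in e for w in e if w != u}


net = Network()
net.add_edge("a", "b")
net.add_edge("b", "c", weight=2.0)
net.node_labels["a"] = "student"
print(sorted(net.neighbors("b")))
```

Even a simple neighbor query here has to walk the edge set, which hints at why analysis on the raw graph structure becomes costly as networks grow.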

Recently, network representation learning (NRL), also called network embedding (NE), has emerged as a practical and promising way to alleviate these issues and has received considerable research attention, with many algorithms having been developed [1,2,3,4,5,6]. Its basic idea is to learn latent, low-dimensional representations (usually continuous vectors) for network nodes, edges, or subgraphs that preserve both the proximity implied by the network topology and the affinity implied by node and edge side information. In the low-dimensional space, network analysis tasks such as node classification, node clustering, and link prediction can be carried out easily and efficiently with the many off-the-shelf vector-based machine learning algorithms.
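To make the last point concrete, the sketch below shows how a task such as finding the most similar node reduces to plain vector arithmetic once embeddings are available. The four 2-D vectors are invented for illustration only; in practice they would be produced by an embedding algorithm, and the helper names `cosine` and `most_similar` are assumptions of this example.

```python
import math

# Hypothetical 2-D embeddings for four nodes (values invented for illustration).
embeddings = {
    "a": (0.9, 0.1),
    "b": (0.8, 0.2),
    "c": (0.1, 0.9),
    "d": (0.2, 0.8),
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm


def most_similar(node):
    """Return the other node whose embedding is closest in cosine similarity."""
    others = (n for n in embeddings if n != node)
    return max(others, key=lambda n: cosine(embeddings[node], embeddings[n]))


print(most_similar("a"))
```

No graph traversal is needed at query time: the structural information is assumed to be already encoded in the vectors.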

Community structure is a prominent mesoscopic topological property of complex networks, so it should be preserved in the learned low-dimensional representations. Our previous work [7] quantitatively showed the necessity and importance of incorporating community structure properties into network embedding. In that paper, we defined the concept of partial community structure and presented two algorithms that use it to enhance node representation learning. The crucial point is how to find an accurate community structure for a network. However, the method for finding a partial community structure in [7] is executed directly on the whole network and is therefore not easy to parallelize. In this paper, we design a multi-process parallel approach for extracting partial community information. Other recently proposed network embedding algorithms that explicitly preserve community properties are reviewed in Section 2.

In summary, the main contributions of this paper are:

- (1)

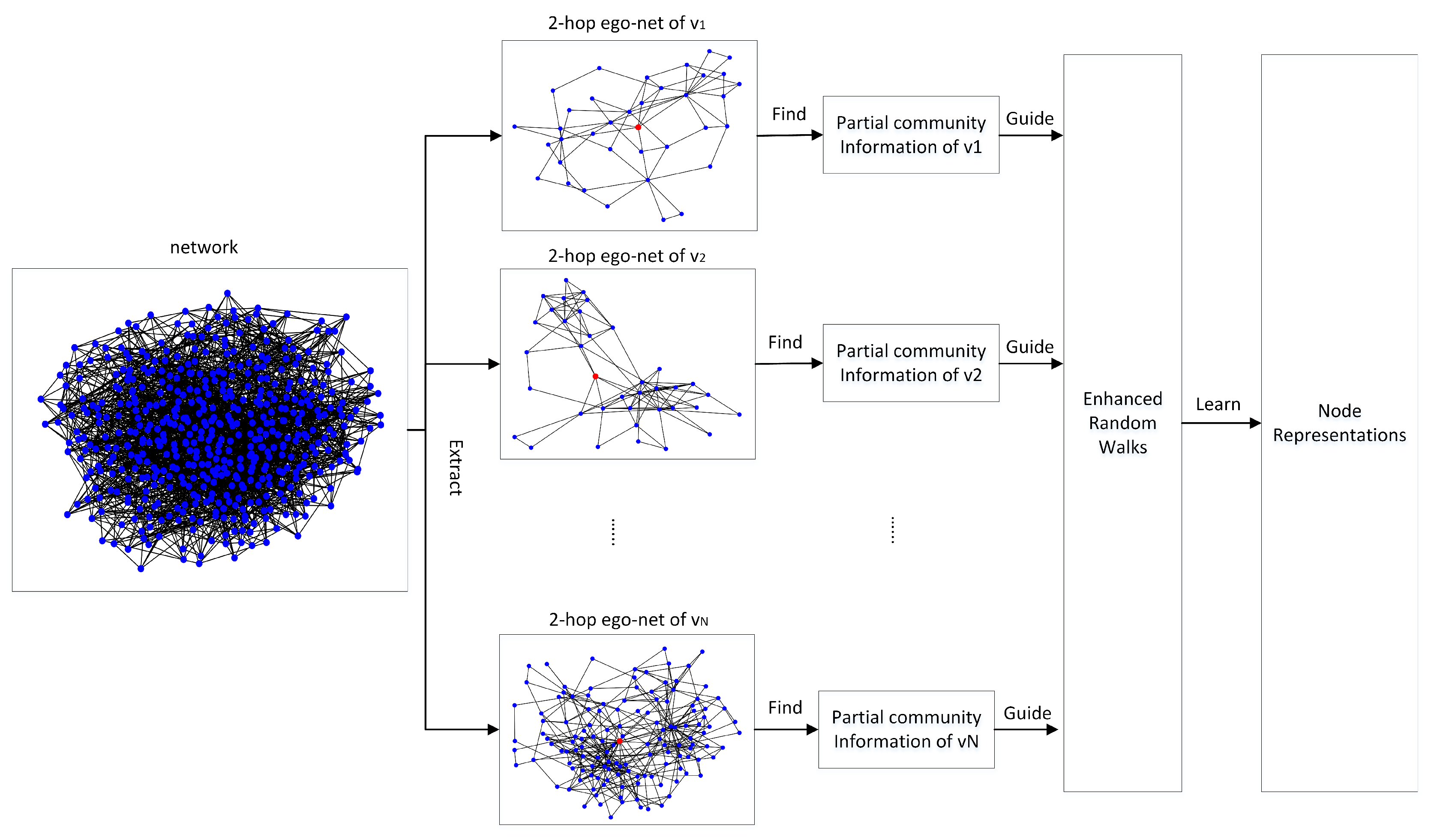

We introduced a multi-process parallel framework for network node representation learning enhanced by partial community structure information. The framework first extracts the ego-net of each node in a network, and then finds the partial community information for the center node on it. The information is then incorporated into collecting random walks that will be used to learn node representations. As a result, the community structure properties can be well preserved in learned low-dimension representations.

- (2)

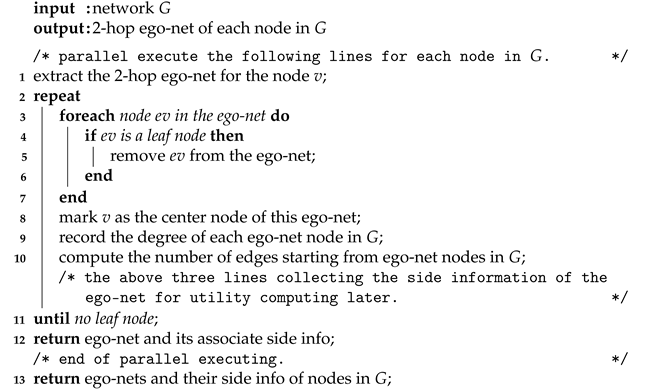

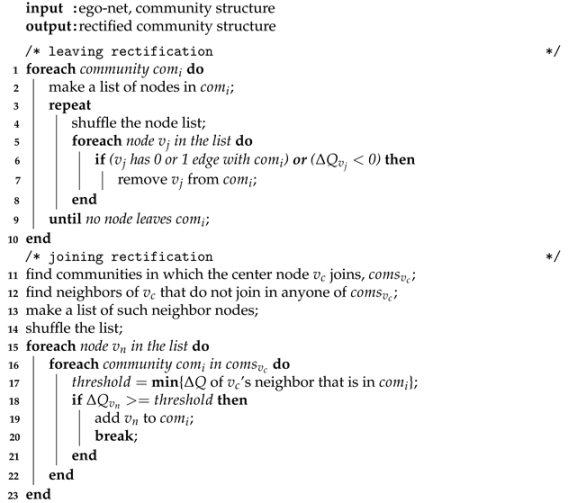

We proposed an improved game theory-based algorithm for partial community information extraction. A merging operation is added at the end of each game-playing iteration, to reduce community labels if it is possible and thus speed up the game convergence. A post-process operation from the viewpoint of a community is introduced as well, to mend the quality of the found information. The algorithm is employed in the proposed framework for partial community information finding on ego-nets. Though game theory-based algorithms are superior at overlapping community structure detecting, they are generally computational cost and thus cannot be used on large-scale networks. Our framework can avoid this drawback because an ego-net is usually much smaller in size than the whole network.

- (3) We implement the framework to improve two popular random walk-based node representation learning methods, DeepWalk [8] and node2vec [9], yielding GameNE-DW (Game-based Network Embedding on DeepWalk) and GameNE-N2V (Game-based Network Embedding on Node2Vec).

- (4)

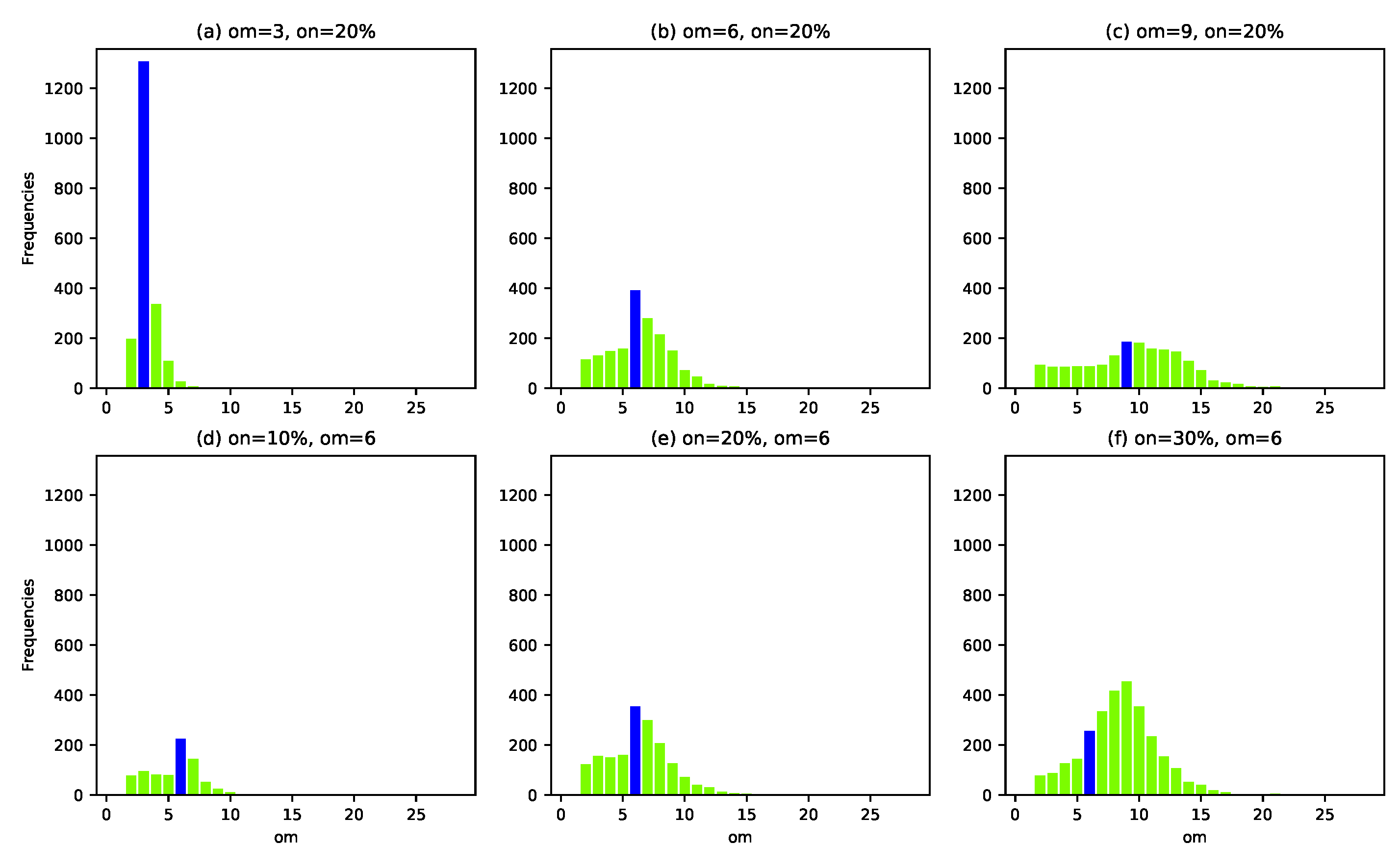

We conducted a series of experiments on synthesized networks, of which their community structure properties can be controlled through model parameters, to evaluate the ability of community structure preservation of our algorithms. We also compare our methods against six state-of-the-art representation learning algorithms. The results demonstrate that GameNE-DW and GameNE-N2V are adept at preserving community structure properties, especially on networks with high overlapping diversity and density.
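The first step of the framework in contribution (1), extracting one ego-net per node in parallel, can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: it assumes an ego-net is the subgraph induced by a center node and its direct neighbors, it represents the network as a dictionary of neighbor sets, and the function names `ego_net` and `all_ego_nets` are hypothetical. The game-playing and random-walk steps are not shown.

```python
from functools import partial
from multiprocessing import Pool


def ego_net(adj, center):
    """Return (center, subgraph induced by the center and its direct neighbors)."""
    members = {center} | adj[center]
    return center, {u: adj[u] & members for u in members}


def all_ego_nets(adj, processes=2):
    # Each ego-net depends only on local structure, so the nodes can be
    # handed to independent worker processes.
    with Pool(processes=processes) as pool:
        return dict(pool.map(partial(ego_net, adj), sorted(adj)))


if __name__ == "__main__":
    # Toy undirected network: a triangle a-b-c plus a pendant node d attached to c.
    adj = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
    nets = all_ego_nets(adj)
    print(sorted(nets["d"]))
```

Because every ego-net is computed from a small neighborhood, any per-ego-net procedure (such as a community detection routine) could be run inside the same worker function without touching the rest of the network.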

The rest of the paper is organized as follows. Section 2 describes algorithms that explicitly preserve the community properties of networks in node representation learning, as well as game theory-based algorithms designed for community structure detection. Section 3 briefly introduces the concepts of game theory for community detection. Section 4 details our multi-process parallel framework that uses game-playing to enhance network node representation learning. Section 5 presents experimental results showing that our algorithms preserve community structure well, especially on networks with high-density overlapping nodes and high-diversity overlapping memberships. Finally, Section 6 discusses the pros and cons of our methods, and Section 7 concludes the paper.

Author Contributions

Conceptualization, H.S. and W.J.; methodology, H.S., J.L. and L.C.; software, G.L. and S.Z.; validation, Z.W. and S.M.; formal analysis, H.S. and W.J.; investigation, G.L.; data curation, S.Z.; writing—original draft preparation, H.S.; writing—review and editing, W.J., J.L. and L.C.; funding acquisition, H.S. and G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the International Science and Technology Cooperation Project of Shaanxi Province, China, grant number 2019KW-008; and the Science and Technology Projects of Xi’an City, China, grant number 2019218114GXRC017CG018-GXYD17.9.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data and code presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy reasons.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, D.; Yin, J.; Zhu, X.; Zhang, C. Network representation learning: A survey. IEEE Trans. Big Data 2020, 6, 3–28.

- Goyal, P.; Ferrara, E. Graph embedding techniques, applications, and performance: A survey. Knowl. Based Syst. 2018, 151, 78–94.

- Hamilton, W.L.; Ying, R.; Leskovec, J. Representation learning on graphs: Methods and applications. arXiv 2018, arXiv:1709.05584.

- Cai, H.; Zheng, V.W.; Chang, K.C.C. A comprehensive survey of graph embedding: Problems, techniques, and applications. IEEE Trans. Knowl. Data Eng. 2018, 30, 1616–1637.

- Qi, J.; Liang, X.; Li, Z.; Chen, Y.; Xu, Y. Representation learning of large-scale complex information network: Concepts, methods and challenges. Chin. J. Comput. 2018, 41, 2394–2420. (In Chinese)

- Tu, C.; Yang, C.; Liu, Z.; Sun, M. Network representation learning: An overview. Sci. Sin. Inf. 2017, 47, 980–996. (In Chinese)

- Sun, H.; Jie, W.; Wang, Z.; Wang, H.; Ma, S. Network Representation Learning Guided by Partial Community Structure. IEEE Access 2020, 8, 46665–46681.

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 701–710.

- Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864.

- Wang, X.; Cui, P.; Wang, J.; Pei, J.; Zhu, W.; Yang, S. Community preserving network embedding. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 203–209.

- Rozemberczki, B.; Davies, R.; Sarkar, R.; Sutton, C. GEMSEC: Graph Embedding with Self Clustering. In Proceedings of the 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Vancouver, BC, Canada, 27–30 August 2019.

- Li, Y.; Wang, Y.; Zhang, T.; Zhang, J.; Chang, Y. Learning Network Embedding with Community Structural Information. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019.

- Zhang, Y.; Lyu, T.; Zhang, Y. COSINE: Community-preserving social network embedding from information diffusion cascades. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 2620–2627.

- Cavallari, S.; Zheng, V.W.; Cai, H. Learning community embedding with community detection and node embedding on graphs. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 377–386.

- Cavallari, S.; Cambria, E.; Cai, H.; Chang, K.C.C.; Zheng, V.W. Embedding Both Finite and Infinite Communities on Graphs. IEEE Comput. Intell. Mag. 2019, 14, 39–50.

- Tu, C.; Zeng, X.; Wang, H.; Zhang, Z.; Liu, Z.; Sun, M.; Zhang, B.; Lin, L. A unified framework for community detection and network representation learning. IEEE Trans. Knowl. Data Eng. 2019, 31, 1051–1065.

- Jia, Y.; Zhang, Q.; Zhang, W.; Wang, X. CommunityGan: Community detection with generative adversarial nets. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 784–794.

- Sun, F.Y.; Qu, M.; Hoffmann, J.; Huang, C.W.; Tang, J. vGraph: A Generative Model for Joint Community Detection and Node Representation Learning. In Advances in Neural Information Processing Systems (NeurIPS 2019); Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Keikha, M.M.; Rahgozar, M.; Asadpour, M. Community aware random walk for network embedding. Knowl. Based Syst. 2018, 148, 47–54.

- Tian, Y.; Balmin, A.; Corsten, S.A.; Tatikonda, S.; McPherson, J. From “Think Like a Vertex” to “Think Like a Graph”. In Proceedings of the 40th International Conference on Very Large Data Bases, Hangzhou, China, 1–5 September 2014; pp. 193–204.

- Jonnalagadda, A.; Kuppusamy, L. A survey on game theoretic models for community detection in social networks. Soc. Netw. Anal. Min. 2016, 6, 1–24.

- Chen, W.; Liu, Z.; Sun, X.; Wang, Y. A Game-Theoretic Framework to Identify Overlapping Communities in Social Networks. Data Min. Knowl. Discov. 2010, 21, 224–240.

- Soleimanpour, M.; Hamze, A. A game-theoretic approach for locally detecting overlapping communities in social networks. In Proceedings of the Eighth International Conference on Information and Knowledge Technology, Hamedan, Iran, 7–8 September 2016; pp. 38–44.

- Sun, H.L.; Ch’Ng, E.; Yong, X.; Garibaldi, J.M.; See, S.; Chen, D.B. An improved game-theoretic approach to uncover overlapping communities. Int. J. Mod. Phys. C 2017, 28, 1750112.

- Zhou, X.; Zhao, X.; Liu, Y.; Sun, G. A game theoretic algorithm to detect overlapping community structure in networks. Phys. Lett. A 2018, 382, 872–879.

- Avrachenkov, K.E.; Kondratev, A.Y.; Mazalov, V.V. Cooperative Game Theory Approaches for Network Partitioning. In Proceedings of the 6th International Conference on Computational Social Networks, Hong Kong, China, 3–5 August 2017.

- Zhou, L.; Lü, K.; Yang, P.; Wang, L.; Kong, B. An Approach for Overlapping and Hierarchical Community Detection in Social Networks Based on Coalition Formation Game Theory. Expert Syst. Appl. 2015, 42, 9634–9646.

- Zhou, L.; Yang, P.; Lü, K.; Zhang, Z.; Chen, H. A Coalition Formation Game Theory-Based Approach for Detecting Communities in Multi-relational Networks. In Proceedings of the 16th International Conference on Web-Age Information Management, Qingdao, China, 8–10 June 2015; pp. 30–41.

- Moscato, V.; Picariello, A.; Sperlí, G. Community detection based on Game Theory. Eng. Appl. Artif. Intell. 2019, 85, 773–782.

- Newman, M.E.J. Modularity and community structure in networks. Proc. Natl. Acad. Sci. USA 2006, 103, 8577–8582.

- Tang, J.; Qu, M.; Wang, M.; Zhang, M.; Yan, J.; Mei, Q. LINE: Large-scale information network embedding. In Proceedings of the 24th International World Wide Web Conference, Florence, Italy, 18–22 May 2015; pp. 1067–1077.

- Cao, S.; Lu, W.; Xu, Q. GraRep: Learning graph representations with global structural information. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management, Melbourne, Australia, 19–23 October 2015; pp. 891–900.

- Lancichinetti, A.; Fortunato, S.; Radicchi, F. Benchmark graphs for testing community detection algorithms. Phys. Rev. E 2008, 78, 046110.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).