Abstract

Digitalization increasingly forces organizations to accommodate change and build resilience. Emerging technologies, changing organizational structures, and dynamic work environments bring opportunities and pose new challenges to organizations. Such developments, together with the growing volume and variety of exchanged data, mainly yield complexity. This complexity often represents a solid barrier to efficiency and impedes understanding, controlling, and improving processes in organizations. Hence, organizations increasingly seek to identify and avoid unnecessary complexity, which arises from a mixture of different factors. Similarly, in research, much effort has been put into measuring, reviewing, and studying complexity. However, these efforts are highly fragmented and lack a joint perspective, which negatively affects the acceptance of complexity research by practitioners. In this study, we extend the body of knowledge on complexity research and practice by addressing its high fragmentation. In particular, a comprehensive literature analysis of complexity research is conducted to capture different types of complexity in organizations. The results are comparatively analyzed, and a morphological box containing three aspects and ten features is developed. In addition, an established multi-dimensional complexity framework is employed to synthesize the results. Using the findings from these analyses and adopting the Goal Question Metric, we propose a method for complexity management. This method serves to provide key insights and decision support in the form of extensive guidelines for addressing complexity. Thus, our findings can assist organizations in their complexity management initiatives.

1. Introduction

As organizations develop and digitize their businesses, the number of interactions and dependencies between their processes, information systems, and organizational units increases dramatically [1]. To deal with this growth, organizations often enhance the technology supporting their businesses, which can also affect the structure of these organizations [2]. Such dynamics are likely to bring significant challenges [3]. One prominent challenge organizations have to tackle is complexity, which blocks decision-making and leads to unreasonably high and mostly hidden costs. For example, according to a 2019 study conducted by The Hackett Group, a strategic consultancy [4], the operating costs of low-complexity companies as a percentage of overall revenue were almost 60% lower than those of companies operating in highly complex settings. The low-complexity companies employed 66% fewer staff and spent 30% less on technology.

There has always been much interest from both academia and industry in complexity research. Many disciplines have established their own complexity subfields, adjusting the complexity concepts to their specific goals [5], for example, social complexity [6], software complexity [7], or managerial complexity [8], in addition to generic studies. Despite the contributions of these subfields, it is hard to find an agreed-upon definition. Missing conceptual alignment and differing interpretations of complexity hinder the common acceptance of the complexity field [5]. Further, there remains much ambiguity and fragmentation regarding what contributes to complexity and how to not only measure and reduce it but, more importantly, benefit from it. Especially in the organizational domain, the discussion on complexity is either rather generic [9] or devoted to a narrow topic, like task complexity [10]. Moreover, the methods for measuring complexity are predominantly designed for a particular type of complexity [11] and, therefore, are hardly transferable to other types [12]. However, the organizational domain can be characterized as a complex environment embracing different types of complexity, often implicit, for example, related to people, structures, technology, or processes. Hence, in such settings, managers lack comprehensive guidelines on how to approach complexity management initiatives, including steps, measurements, and necessary data and information.

Additionally, ongoing technological developments and the use of diverse information systems increase the variety of data sources and types. Such advancements create a demand for a continual review of the existing studies in complexity research that can offer new perspectives, highlight essential gaps, and suggest approaches for complexity management. Moreover, in the literature, we observed a lack of structured outcome providing an integrated overview of the complexity research.

To address the mentioned shortcomings, in our study, we conduct a Systematic Literature Review (SLR) and create a comprehensive overview of complexity research relevant in organizations. To create such a summary, we take as the basis the People Process Technology (PPT) framework [13,14], as well as typical information and data sources that exist in organizations. With this, we aim to cover the complexity that is related to the main components of organizations, namely people, technology, and processes. Hence, we focus on the typically observed complexities related to an organization as a whole (organizational complexity), technology (technological complexity) and people, in particular, communication. The latter we analyze through the lens of generated textual data serving different purposes (textual complexity).

In fact, complexity can be influenced by a myriad of factors and may arise in various forms. For example, in the case of organizational complexity ranging from complex projects to organizational structures, managers and executives face inefficiency, delays, performance decrease, or even the inability to handle their businesses. Similarly, unstructured textual data, like complex and even confusing instructions, project communication and documentation, textual task descriptions will cause unclarities, errors, and rework. Furthermore, complex technology will likely be not only the source of high costs but also an obstacle to successful business functioning, although it has the goal of supporting the processes in organizations. Hence, dynamic business processes, computing platforms, applications, and services can cause a massive complexity encompassing many concepts, technologies, data and information sources, which are interlinked together in diverse organizational interactions.

Afterwards, to address the confusion impeding a common acceptance of the field, we structure and classify the literature findings in the form of a morphological box and integrate them into a multi-dimensional complexity framework. The morphological box [15] aims at summarizing the fundamental aspects of complexity research, whereas the multi-dimensional complexity framework [5] incorporates the complexity types identified in the literature into one integrated structure contributing to the standardization efforts of the complexity research. Based on these two analyses, we derive our main contribution, that is, a method to apply our study findings by extending the Goal Question Metric (GQM) [16].

Hence, our study contributes to the common acceptance and understanding of complexity research in organizations by addressing its high fragmentation. It provides researchers with a comprehensive overview based on the PPT framework and structured outcome of research results in the form of a morphological box and multi-dimensional framework.

As a practical contribution, we address the lack of instructions for selecting a specific approach to address complexity in organizations. Hence, the proposed method can serve as practical guidance for organizations in their complexity management projects.

The remainder of the paper is structured as follows: Section 2 provides an overview of the related work in the literature and highlights the gaps. In Section 3, we present the research methods used for conducting the literature review and analyzing and classifying its results. Section 4 gives an overview of the results. Section 5 analyzes and classifies the results providing structured outcomes in the form of the morphological box and integrated complexity framework. In Section 6, we present the key contribution of our study, that is, the method to address complexity in organizations. We discuss our findings, their implications and limitations in Section 7. Finally, conclusions and future work are presented in Section 8.

2. Related Work

The term complexity has long received the attention of scholars in different fields, such as Computer Sciences, Organizational Sciences, and Linguistics. For example, in Computer Sciences, the term complexity, as a rule, denotes the complexity of an algorithm, that is, the amount of resources required to execute the algorithm [17]. Organizational Sciences mostly adapt concepts from Complexity Theory and define an organization as a complex dynamic system, which consists of elements interacting with each other and their environment [18]. In Linguistics, complexity is studied from the language perspective and comprises phonological, morphological, syntactic, and semantic complexities [19]. Hence, complexity has various definitions and implications. It can be expressed in exact terms, such as McCabe’s software complexity, defined as the number of linearly independent paths through code [7]. Alternatively, it can cover broad ideas, serving as an umbrella term for many concepts. For example, Edmonds defines complexity as a property of a language that makes it troublesome to formulate its behavior, even given nearly complete information regarding its atomic parts and their interrelations [20]. Such heterogeneity of complexity concepts hampers its wide recognition [5]. Besides, this high fragmentation becomes apparent when searching for literature review studies conducted in the field of complexity, as in the following reviews: ref. [21] on task complexity, ref. [22] on innovation complexity, and ref. [23] on interorganizational complexity from employer and safety perspectives.
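To illustrate what "exact terms" means for a measure such as McCabe’s [7], the following minimal sketch computes the cyclomatic complexity of a control-flow graph using the standard formula M = E − N + 2P (edges, nodes, connected components). The graph, the function name `cyclomatic_complexity`, and the node labels are hypothetical and serve only as an example.

```python
def cyclomatic_complexity(edges, num_components=1):
    """McCabe's measure M = E - N + 2P for a control-flow graph.

    edges: list of (source, target) node pairs
    num_components: number of connected components P (1 for a single routine)
    """
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * num_components


# Hypothetical control-flow graph: one if/else branch followed by one loop.
cfg_edges = [
    ("entry", "cond"),
    ("cond", "then"),
    ("cond", "else"),
    ("then", "loop"),
    ("else", "loop"),
    ("loop", "body"),
    ("body", "loop"),
    ("loop", "exit"),
]

complexity = cyclomatic_complexity(cfg_edges)  # 8 edges - 7 nodes + 2 = 3
```

The result matches the usual shortcut of counting decision points (here, one branch and one loop) and adding one.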

As with the term complexity itself, there is much ambiguity and fragmentation regarding what contributes to complexity and the ways to reduce and benefit from it. For example, ref. [24], while conducting research on complexity drivers in manufacturing companies, observe that the studies mostly focus on one definition (perspective) of complexity drivers and provide no comprehensive analyses. Ref. [25] states that the task complexity drivers or contributors found in the literature are the consequence of combining diverse settings and subjective preferences. As a result, they are ambiguous and difficult to grasp and analyze. Ref. [26] developed guidelines on how to reduce complexity, focusing on the rather specific field of product and process complexity in a case study of a consumer products manufacturer. The authors justify the necessity of such recurring complexity studies by the increasingly changing nature of complexity. Besides, another group of studies aiming to deal with process complexity in organizations considers it from the specific perspective of process models, workflows, and event logs [27,28,29]. Another rather unconventional approach to process complexity is suggested in [30]. The authors study the complexity of IT Service Management processes through the lens of the textual data massively generated in organizations and the related readability and understandability of textual work instructions. This represents a promising but rather narrow research direction. Further, addressing the process complexity of software development, ref. [31] also emphasize the limited scope of previous studies, which focus on code-related measures.

Moreover, the shortcoming of a narrow focus causes certain industries to fall behind in the complexity management field [12]. Likewise, despite their abundance, complexity measures, such as the software complexity measures that are essential for managing complexity, have not been sufficiently described and evaluated in the literature [32]. This is also confirmed by recent studies evidencing the poor usage of code complexity measures in industry [33].

Further, a number of recent studies investigate the notion of complexity in companies empirically based on a bottom-up approach, the fact indicating that there is still no alignment and much dissonance on how to approach complexity in organizations. Accordingly, ref. [34] study how project complexity has been perceived by practitioners in different industry sectors. In total, five sectors and more than 140 projects have been researched. It has been concluded that a comprehensive project complexity framework and guidelines could aid in the management of complex projects by raising awareness of the (anticipated) complexities [34]. Ref. [35] also declare the need for developing approaches to measure and manage complex projects. While studying the complexity of supply chains in four case studies, ref. [36] highlight the necessity of frameworks that can assist managers in building overarching complexity management strategies and practices supporting them [37].

In fact, as rightly noted in the recent work [38], organizations comprise many different interconnected elements, which makes the study of complexity challenging and gives complexity a “negative” connotation. Interestingly, the authors mention that this often inherent and inevitable complexity implies essential benefits, particularly in dynamic and unpredictable conditions. In addition, they provide some strategic leadership guidance on complexity management in organizations. Similarly, in other works [2,39,40,41], the attempts to suggest guidance to address organizational complexity are limited to high-level strategic leadership recommendations.

Hence, in our study aiming to develop a method to comprehensively address complexity in organizations, the following gaps (IG: Identified Gap) serve as a motivation:

- IG1: Scarce comprehensive analyses and high fragmentation of the complexity field impeding its common acceptance;

- IG2: Much unclarity and confusion on what contributes to complexity, how to measure, reduce, and benefit from it;

- IG3: Lack of extensive guidelines for managers on how to approach complexity;

- IG4: Organizational domain embracing different types of complexity which are often implicit.

3. Research Approach

In this section, we present the research approach we follow to propose a method to deal with complexity. It includes the following: (i) an SLR on complexity in organizations and (ii) morphological box and multi-dimensional framework to classify, analyze, and synthesize the SLR findings. Based on (i) and (ii), we extend the GQM approach and propose practical guidelines for managers to address complexity in organizations.

3.1. Systematic Literature Review

As the starting point in the method development, we perform an SLR on complexity management in organizations, which we describe in the subsections below.

3.1.1. Scope

As complexity is a very broad subject area, conducting an SLR solely on complexity would have yielded an unmanageably large set of papers, from which it would be difficult to derive valuable insights. Hence, we set the focus on complexity management in organizations.

Organizations are commonly described by three main components: people, technology, and the processes connecting them [13]. This viewpoint is also known as the People Process Technology (PPT) framework [14]. Despite being a popular concept, especially in industry, its origin is not straightforward. The oldest and most prominent source using the logic of these three elements is Leavitt’s diamond model [13]. This model focuses on problem-solving in organizations and highlights three types of solutions: structure (by means of organizational charts or responsibilities), technology (by means of technologies), and people (by means of Human Resources). Later on, the PPT components made up the fundamentals of the prominent Information Technology Infrastructure Library (ITIL) framework launched in the 1980s [42]. Based on the underlying assumptions in ITIL, any technology solution is only as good as the processes it supports. Similarly, processes are only as good as the people who follow them. Hence, we focus on these three PPT components (people, technology, and process) and build up our complexity types accordingly. In particular, we take organizational, technological, and textual complexity types as the starting point. For each type, we explain our motivation below.

- Organizational complexity: In the first type of complexity, organizational, we consider a broad organizational perspective discussed by Mintzberg [43] and related studies [44]. Among others, the authors consider organizations from the viewpoint of allocation of tasks and resources. Such a configuration is enabled by major organizational components and common assets, such as tasks, projects, and processes in relation to projects and organizations [45]. In this regard, task complexity research going back to the 1980s [21,46] can be considered the most well studied one in the organizational context [25]. Consequently, task, project, and process complexities are considered important constituents and subtypes of organizational complexity.

- Technological complexity: As the second type of complexity, we study technological complexity. The oldest and most popular example of technological complexity is arguably software complexity, such as McCabe cyclomatic complexity [7], which is based on control flows represented in the form of graphs. The McCabe cyclomatic complexity served as a basis for several other technological complexities related to process models and event logs [47].

- Textual complexity: To address the people component, we focus on communication, that is, on how people exchange information in organizations and receive their tasks. Subsequently, we pose a question about how we can obtain this information. Textual data generated inside and outside organizations remain one of the most valuable types of unstructured data [30,48,49,50]. Hence, we consider textual complexity as the third type of complexity, which, among others, reflects the people component in organizations. Indeed, beyond the structured program codes and event logs, analysts estimate that upward of 80% of enterprise data today is unstructured, whereby the lion’s share is occupied by textual data [48]. There is a great variety of textual data types relevant for organizations. Emails, files, instant messages, posts and comments on social media are some examples.

To provide an integrated overview of the research on the complexity from organizational, technological, and textual perspectives, it is necessary to analyze how complexity is measured in each perspective. Accordingly, we cover the following topics: what complexity metrics are available in the related literature and whether any tool support is provided for them. To capture what the existing studies in the literature focus on, apart from complexity measurement, we investigate the motivations and declared novelty in the studies. Likewise, we set to unveil the practical contributions of the studies in the complexity research on organizations. Another objective is to check the future work avenues mentioned in the related literature so that potential contribution areas can be identified.

Based on the discussion of the scope above and the gaps identified in the related work section, we design five research questions (RQs):

- RQ1: What concepts of complexity are available in the literature from the organizational, technological, and textual perspectives?

- RQ2: What metrics are proposed to measure the complexity concepts in organizations?

- RQ3: What are common motivations and declared novelty areas of the complexity research on organizations?

- RQ4: Which focus areas and application cases are typically used in the related literature on complexity in organizations?

- RQ5: What future research directions are communicated in the complexity research on organizations?

To answer the RQs and to identify and analyze the existing studies in the related literature, we conduct an SLR. For this purpose, we follow the well-established guidelines by Kitchenham [51]. The main reason is that these guidelines are rigorously applied in the literature, cover the major steps of a typical SLR, and can be used to design a review protocol in various fields. For guiding and evaluating literature reviews, we refer to the framework proposed in [52]. In addition, we complement our analysis with the tool-support guidelines presented in [53]. Accordingly, the retrieval and selection mechanism of the papers used in the SLR is given in the subsection below.

3.1.2. Paper Retrieval and Selection

Search strategy: To retrieve papers in the literature, we create search strings that are generic enough to include the studies discussing at least one of the three complexity types. Specifically, based on the complexity types and subtypes that we observed while identifying the gaps in the related work, we outline the following set of search strings:

- for organizational complexity: “task complexity” or “project complexity” or “process complexity”;

- for technological complexity: “software complexity” or “process model complexity” or “event log complexity” or “workflow complexity” or “control flow complexity”;

- for textual complexity: “textual complexity” or “readability” or “understandability”.
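The search strings listed above can be kept as one structure per complexity type and joined into boolean queries, as in the following minimal sketch. The quoted-phrase form joined with `OR` is an assumption about the query syntax, and the names `SEARCH_TERMS` and `build_query` are hypothetical.

```python
# Search terms per complexity type, taken from the list above.
SEARCH_TERMS = {
    "organizational": [
        "task complexity",
        "project complexity",
        "process complexity",
    ],
    "technological": [
        "software complexity",
        "process model complexity",
        "event log complexity",
        "workflow complexity",
        "control flow complexity",
    ],
    "textual": [
        "textual complexity",
        "readability",
        "understandability",
    ],
}


def build_query(terms):
    """Join exact-phrase terms with OR (assumed query syntax)."""
    return " OR ".join(f'"{term}"' for term in terms)


# One separate query per complexity type.
queries = {ctype: build_query(terms) for ctype, terms in SEARCH_TERMS.items()}
```

Keeping one query per type mirrors the separation of search strings by complexity type.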

Having such separated search strings for each complexity type has advantages. One of them is balancing the granularity in the terms for complexity types. This way, we eliminate the risk of having an unequal number of papers for each complexity type. Another advantage is determining the papers that are mostly devoted to the study of a particular complexity type.

We applied the search strings in the search engine Google Scholar. The reason for this choice is twofold. Firstly, Google Scholar is the world’s largest academic search engine and provides an integrated search environment [54] by encompassing other academic databases, like ACM Digital Library and IEEE Xplore, Scopus, and Web of Science. Secondly and more importantly, Google Scholar ranks search results by their relevance considering all major features of papers, such as full text, authors, published source, and how often each paper has been cited in academic databases. The papers that have at least one of the above search strings in their title, keywords, or in their main body are retrieved. For the three complexity types, our search resulted in a large number of papers, that is, more than 5000.

Inclusion and exclusion criteria: To exclude irrelevant papers, we defined and applied the inclusion and exclusion criteria listed in Table 1. Specifically, we aimed at those papers closely related to organizational, technological, and textual complexities in organizations. We added the citation minimum to select meaningful papers recognized by other researchers. Hereby, the citation minimum was not applied to the recently published papers. The papers dealing with how people in organizations are grouped were excluded, as they mostly focus on organizational structure. Likewise, papers without a scientific basis and containing assumptions or expectations, that is, theoretical speculations, were filtered out. Lastly, as the papers on the same topic of the same authors have a high overlap, the ones with extended content were considered more relevant.

Table 1.

Inclusion and exclusion criteria.

As a result of filtering based on the inclusion and exclusion criteria, 130 papers in total were selected and combined in the final set. Due to the practical orientation and relevance of complexity, we included a limited number of technical reports and Ph.D. theses, which constituted less than 5% of the final set of papers. Using the reference management tool Zotero (https://www.zotero.org/, accessed on 15 August 2021), paper metadata were obtained for each paper in the final set. Paper metadata included the reference data about a paper, for example, authors, publication date, authors’ keywords, and abstract.
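The selection logic described above (topical relevance, a scientific basis, and a citation minimum that is waived for recent papers) can be sketched as a simple filter. The threshold values, the `Paper` fields, and the sample records below are hypothetical placeholders and are not the values used in Table 1.

```python
from dataclasses import dataclass

MIN_CITATIONS = 10       # hypothetical citation minimum
RECENT_FROM_YEAR = 2019  # hypothetical cut-off for "recently published"


@dataclass
class Paper:
    title: str
    year: int
    citations: int
    on_topic: bool              # closely related to one of the three complexity types
    has_scientific_basis: bool  # not a purely speculative paper


def is_included(paper):
    """Apply the inclusion and exclusion logic to one candidate paper."""
    if not paper.on_topic or not paper.has_scientific_basis:
        return False
    if paper.year >= RECENT_FROM_YEAR:
        return True  # citation minimum is not applied to recent papers
    return paper.citations >= MIN_CITATIONS


# Hypothetical candidates for illustration only.
candidates = [
    Paper("Well-cited older study", 2010, 120, True, True),
    Paper("Rarely cited older study", 2010, 2, True, True),
    Paper("Recent study without citations", 2020, 0, True, True),
    Paper("Speculative essay", 2012, 50, True, False),
]
selected = [p.title for p in candidates if is_included(p)]
```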

3.2. Literature Classification

In this subsection, we explain the two approaches, that is, morphological box and multi-dimensional complexity framework, that we used to classify and synthesize the SLR findings obtained following the process described in the previous subsection.

Morphological box: To summarize the primary aspects of complexity research on organizations, we analyzed and classified the literature review results. For this, we used a morphological box, which is a commonly used (for example, in [55,56]) method to present a set of relationships inherent in the multi-dimensional non-quantifiable problem complexes [15]. Developing a morphological box to describe complexity aspects requires the identification of relevant features of papers and grouping these features. To do so, we focused on the three main themes: (1) common characteristics that can be observed in any research paper; (2) complexity quantification; and (3) tangible research artifacts for quantifying complexity. With the last two themes, we aim to identify reusable and distributed complexity research outputs that we can exploit for developing our method on addressing complexity.

Regarding the first theme, we took the identified gaps IG1 and IG4 listed in the related work section. With this, we aimed to show the fragmented studies on complexity. Hence, the following features were identified: motivation, novelty, focus area, application cases, and future research. We grouped them and named this group generic aspects, as they can be observed in any research paper. Based on the common sense on quantification and the second gap IG2 in the related work, four features were identified in the second theme. These are metrics origin, input, output, and validation. To combine these features, we propose complexity metrics and analysis aspects as the second group. Aligned with the third theme and IG3, it might be critical to know the specific conditions of implementation, such as being openly accessible, distributed in a free or commercial manner. Moreover, the implementation of a complexity analysis and metrics should be of deep concern. It is the only procedure that allows the application of particular approaches in the sense of evaluation or a real-world scenario. Thus, we included tool support as another feature in the morphological box. In this feature, we considered the usage of existing tools, own development tools, or no tool support. Table 2 shows the defined three aspects with their features.

Table 2.

Identified aspects and features for morphological box.
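As a minimal sketch, the three aspects and ten features described above can be represented as a nested structure against which each paper is coded. The name of the third aspect ("implementation") and the sample paper are assumptions made for illustration; the feature names and the tool-support options follow the text.

```python
# Aspects and their features; the third aspect name is assumed.
MORPHOLOGICAL_BOX = {
    "generic aspects": [
        "motivation",
        "novelty",
        "focus area",
        "application cases",
        "future research",
    ],
    "complexity metrics and analysis": [
        "metrics origin",
        "input",
        "output",
        "validation",
    ],
    "implementation": ["tool support"],
}

# Options considered for the tool support feature.
TOOL_SUPPORT_VALUES = ["existing tool", "own development", "no tool support"]


def count_features(box):
    """Total number of features across all aspects."""
    return sum(len(features) for features in box.values())


def uncoded_features(coded_paper):
    """Features of the box for which a paper has no code yet."""
    all_features = [f for features in MORPHOLOGICAL_BOX.values() for f in features]
    return [f for f in all_features if f not in coded_paper]


# Hypothetical, partially coded paper.
paper = {"motivation": "narrow scope of prior work", "tool support": "no tool support"}
missing = uncoded_features(paper)
```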

Based on the identified features, each paper in the final set was coded to extract the topics necessary to answer the RQs. For this, deductive coding was applied [51]. A predefined set of codes observed while identifying the gaps in the literature was taken as the starting point for coding. While assigning the codes in each paper, newly observed codes were added to the code set. In other words, the concepts observed in papers were used as codes. In deductive coding, it is important to avoid researcher bias, that is, the risk that the researcher’s preferences influence the selection of papers [51]. Therefore, the topics in papers were identified and coded by two researchers independently. Afterwards, a discussion session was carried out to resolve differences in coding. The coding was conducted using the qualitative data analysis tool NVivo (https://www.qsrinternational.com/nvivo-qualitative-data-analysis-software, accessed on 15 August 2021) to ensure consistency. The results obtained from coding served as the basis for the morphological box development.
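The step of aligning two independent codings can be illustrated with a small sketch that lists, per paper, the codes assigned by only one of the two researchers; these are the items a discussion session would need to resolve. This is not a feature of NVivo, and the paper identifiers and codes are hypothetical.

```python
def coding_differences(coder_a, coder_b):
    """Return, per paper, the codes assigned by only one of the two coders."""
    differences = {}
    for paper_id in sorted(set(coder_a) | set(coder_b)):
        codes_a = set(coder_a.get(paper_id, ()))
        codes_b = set(coder_b.get(paper_id, ()))
        if codes_a != codes_b:
            differences[paper_id] = {
                "only_a": sorted(codes_a - codes_b),
                "only_b": sorted(codes_b - codes_a),
            }
    return differences


# Hypothetical codings of two papers by two researchers.
researcher_a = {"P1": ["task complexity", "case study"], "P2": ["readability"]}
researcher_b = {"P1": ["task complexity"], "P2": ["readability"]}

to_discuss = coding_differences(researcher_a, researcher_b)
```

Papers on which both researchers agree do not appear in the result, so an empty dictionary means no discussion is needed.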

Multi-dimensional complexity framework: In a variety of disciplines, the term complexity has been applied to a specific context, which prompted the establishment of standalone complexity research subfields [5]. This variety impairs the general acceptance of complexity research (see IG1 in the identified gaps). To address this problem, ref. [5] suggests a four-dimension framework unifying the most prevalent views on complexity. Each dimension comprises two opposing complexity notions derived from the established Complexity Science literature: objective and subjective (D1, observer perspective), structural and dynamic (D2, time perspective), qualitative and quantitative (D3, measures perspective), and organized and disorganized (D4, perspective of dynamics predictability). In Table 3, the dimensions and their complexity notions are listed. These dimensions allowed us to synthesize the SLR findings based on an agreed-upon vocabulary. As a result, we obtained an integrated multi-dimensional complexity framework.

Table 3.

Complexity dimensions and notions.
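The four dimensions and their opposing notions can be written down as a small lookup structure, which makes it possible to check whether a classification uses only the agreed vocabulary. This is a sketch under the simplifying assumption that an item is assigned one notion per dimension; the example profile is hypothetical and not taken from the review results.

```python
# Dimensions D1-D4 with their perspective and two opposing notions.
DIMENSIONS = {
    "D1": {"perspective": "observer", "notions": ("objective", "subjective")},
    "D2": {"perspective": "time", "notions": ("structural", "dynamic")},
    "D3": {"perspective": "measures", "notions": ("qualitative", "quantitative")},
    "D4": {"perspective": "dynamics predictability", "notions": ("organized", "disorganized")},
}


def invalid_entries(profile):
    """List (dimension, reason) pairs for entries outside the vocabulary."""
    problems = []
    for dim, notion in profile.items():
        if dim not in DIMENSIONS:
            problems.append((dim, "unknown dimension"))
        elif notion not in DIMENSIONS[dim]["notions"]:
            problems.append((dim, f"unknown notion: {notion}"))
    return problems


# Hypothetical positioning of a graph-based software metric.
example_profile = {"D1": "objective", "D2": "structural", "D3": "quantitative"}
problems = invalid_entries(example_profile)
```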

3.3. Goal Question Metric

The morphological box and multi-dimensional complexity framework described in the previous subsection served to structure and standardize our efforts in the complexity research review. However, these structured outcomes of the SLR findings lack a practical application value for the managers having to deal with complexity in their daily businesses. Hence, questions remain: (i) how to select a suitable approach to complexity analysis and measurement; and (ii) which type of complexity is relevant for a given problem or in a specific situation.

To address this kind of challenge, several approaches exist in the literature. For example, one popular approach originating from strategic management is Balanced Score Card (BSC) [57] embracing four perspectives, that is, financial (shareholders’ view), customer (value-adding view), internal (process-based view), and learning and growth (future view). Initially developed in the business domain, BSC has been adapted to the software domain, specifically in relation to GQM [58,59]. GQM is one of the well-established and widely used approaches to determine metrics for goals, which is also known to be the most goal-oriented approach [16]. GQM was originally developed for the evaluation of defects in the NASA Goddard Space Flight Center environment in a series of projects. Despite this original specificity, its application has been extended to a broader context, software development among others [16].

GQM has a hierarchical structure beginning with a goal definition at the corporate, unit, or project level. It should contain the information on the measurement purpose, the object to be measured, the problem to be measured, and the point of view from which the measure is taken. The goal is refined into several questions that typically break down the problem into its main components. Next, various metrics and measurements are proposed to address each question. Finally, one needs to develop the data collection methods, including validation and analysis [16].
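The hierarchy described above (a goal with purpose, object, problem, and viewpoint, refined into questions, each addressed by metrics) can be sketched with a few data classes. The class and field names as well as the example goal, questions, and metrics are hypothetical illustrations, not part of the GQM definition in [16].

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Question:
    text: str
    metrics: List[str] = field(default_factory=list)


@dataclass
class Goal:
    purpose: str          # why the measurement is taken
    measured_object: str  # what is measured
    issue: str            # the problem to be measured
    viewpoint: str        # from whose point of view
    questions: List[Question] = field(default_factory=list)

    def metrics(self):
        """All metrics proposed across the goal's questions."""
        return [m for q in self.questions for m in q.metrics]

    def unanswered_questions(self):
        """Questions that have no metric assigned yet."""
        return [q.text for q in self.questions if not q.metrics]


# Hypothetical example goal.
goal = Goal(
    purpose="reduce",
    measured_object="work instructions of a service process",
    issue="textual complexity",
    viewpoint="process manager",
    questions=[
        Question("How readable are the instructions?", ["average sentence length"]),
        Question("How often do instructions cause rework?"),
    ],
)
```

Listing the questions without metrics gives a quick check that the refinement from goal to measurement is complete before data collection is planned.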

GQM opponents criticize it for being too flexible and generating a large number of metrics [60]. To address the GQM shortcomings, several approaches have been proposed, such as Goal Argument Metric (GAM) [61], or generic approaches as in [62]. Accordingly, GQM should be applied based on the organization’s process maturity level to select the most acceptable metrics [62]. Ref. [63] suggests including a prioritization step into GQM to minimize the number of generated metrics. However, such an approach has a drawback that certain perspectives may remain neglected. To sum up, in the GQM related literature, we have observed a common practice of extending the GQM to adjust it to particular research needs, like in [64] for agile software development and [65] for data warehouses quality assessment.

Hence, in our study, we extend GQM for the purpose of the method development to comprehensively address the complexity in organizations. When adapting GQM to our study needs, we also set to overcome the above-mentioned limitations. Introducing a step-by-step guidance leading from the problem statement to a solution specification, we aim to assist organizations in identifying what is actually needed to deal with complexity.

In the following sections, we describe in detail the application of the discussed research approach. Accordingly, we start by reporting the SLR findings, followed by their classification. The latter includes the development of the morphological box and the integration of the results into a multi-dimensional complexity framework. Lastly, a GQM extension is proposed using an illustrative example from a real-world setting.

4. Systematic Literature Review

This section describes the results of our literature review. We address each RQ in a separate subsection and elaborate on our findings. In the visualization of our findings, we use a standard coloring for the three complexity types for consistency. The assigned color for each complexity type is as follows: organizational (RGB: 199,199,199), technological (RGB: 127,127,127), and textual (RGB: 65,65,65).

4.1. Complexity Concepts

We present our findings on the complexity concepts of the three complexity types that we focus on, that is, organizational, technological, and textual. In particular, we elaborate on the trend of papers over the years, the components of complexity types, and their distribution.

Of the 130 analyzed papers, the largest share (54 papers) discusses technological complexity, 40 papers are devoted to textual complexity, and the remaining 36 address organizational complexity.

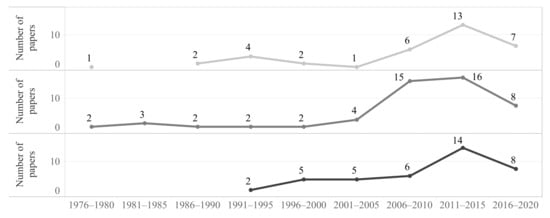

In Figure 1, the trend of publications over time is presented. As can be seen, the distribution of papers in all three types of complexity represents a similar trend with a peak at the period between 2011 and 2015. This indicates that, despite the different nature of the three complexities, they are all triggered by and based on today’s information age developments. The most common examples of these developments are fast-expanding technology solutions, complex processes, and new organizational skills needed to deliver products and services faster, with higher quality, and at lower costs than before [66].

Figure 1. Publication trend per complexity type.

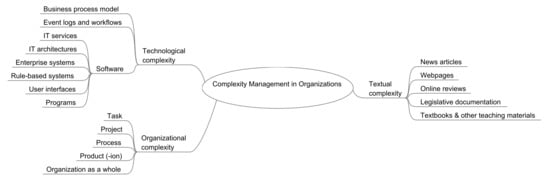

Based on the topics that appeared in the reviewed papers, we developed a mind map of complexity concepts, which is depicted in Figure 2.

Figure 2. Complexity concepts mind map.

Key topics mentioned in the definitions of the organizational type of complexity are task, project, process, and product complexities. In task complexity, which accounts for the largest part (36%) of all papers in the organizational complexity group, key topics are task complexity elements and models [11,46,67,68,69], cognitive resources necessary to perform the tasks [70], identification and utilization of complexity factors [71] and effects [72,73,74,75], performing reviews and analyses, as well as building frameworks [10,21,25]. Very often, one and the same study addresses a bundle of topics. For example, in project complexity (25%), apart from reviews [76], the core topics are building frameworks and models [77,78,79]. Process complexity (14%) is characterized either by the study of specific processes such as (IT) services [80,81,82] or by the generic topic of complexity factors and effects [83]. Product (and production) complexity (%) is studied as part of project complexity in the context of new product development projects [84] or through generic approaches to production complexity [85]. Product and production complexities largely depend on the type of the product itself. Hence, we include very few such works in our analysis.

In the technological type of complexity, we found several key topics. The topics related to business processes are business process models (41%), such as in [86], and event logs and workflows (9%), as in [87]. The software-related topics are software programs in general (22%), for example, [7], and user interfaces in particular (13%), as in [88]. Furthermore, some topics relate to systems. The most common ones are rule-based systems (4%), for example, [89], enterprise systems (4%), as in [90], and IT architectures (6%), as discussed in [5].

In the textual complexity type, the most studied topic is corporate and accounting narratives and legislative documentation in general, such as contracts or institutional mission statements, making up 68% [91,92]. This can be attributed to a strong need for clarity in communication between institutional management and various stakeholders. Other, rather rare topics fall into two groups: (1) textual complexity of webpages and online reviews (13%) [93,94] and (2) management textbooks [95], texts used for reading comprehension [96], and news articles (10%) [97].

4.2. Complexity Metrics and Analysis

In this subsection, to answer RQ2 “What metrics are proposed to measure the complexity concepts in organizations?” and gain a deep understanding of existing approaches to measuring complexity, we analyze the following guiding questions:

- Which theories and disciplines laid the foundation of complexity metrics, that is, metrics origin?

- What kind of information and data serve as input for complexity metrics?

- What kind of output is expected?

- Is tool support provided?

- How are the proposed metrics validated?

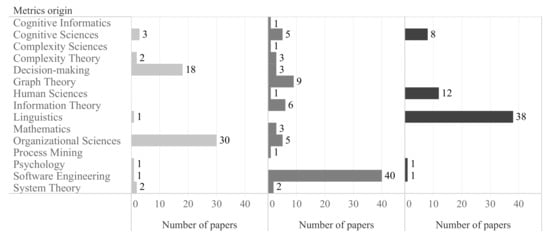

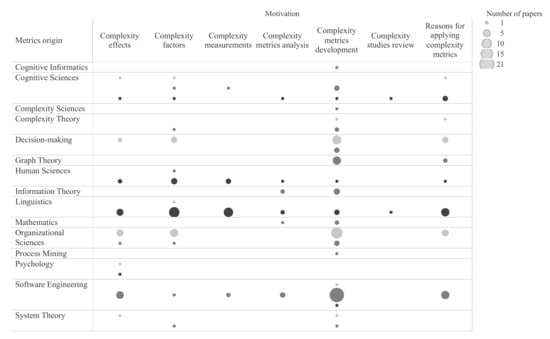

In this analysis, we filtered out the literature and critical review papers (24 in total), since, as a rule, they do not offer any metric. In Figure 3, metrics origin is shown for each type of complexity. Organizational Sciences is the prominent origin of metrics in organizational complexity. In technological complexity, Software Engineering is the dominating origin, whereas textual complexity metrics are, naturally, mainly driven by Linguistics. In general, Cognitive Sciences can be considered a significant common origin of metrics across the three complexity types.

Figure 3. Metrics origin distribution per complexity type.

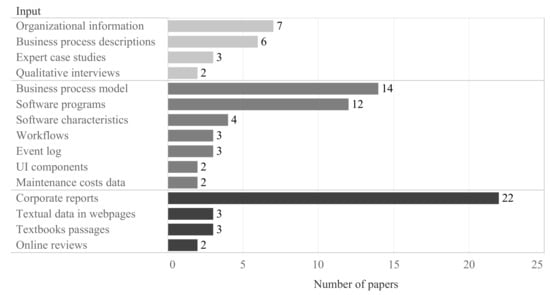

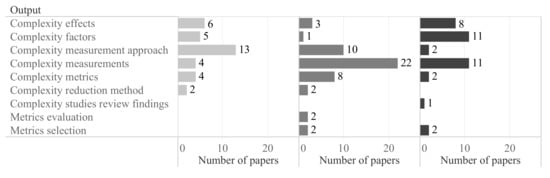

To understand how respective complexities are measured, we provide the analysis of inputs and outputs related to complexity metrics. In Figure 4 and Figure 5, commonly used inputs and outputs in each complexity type are shown. Since business processes are one of the main assets of organizations, various data types related to business processes (for example, business process model, event log, and business process descriptions) are used to measure organizational and technological complexities.

Figure 4. Types of inputs per complexity type.

Figure 5. Types of outputs per complexity type.

Answering the question regarding validation, we identified that most of the complexity research (66%) is validated empirically. This is an indication of the practical value and applicability of complexity metrics. Tool support, in turn, can fairly be considered a catalyst for the reproducibility and applicability of research findings.

4.3. Complexity Research Motivations and Novelty

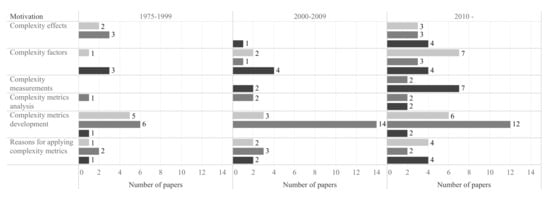

For novice and advanced researchers alike, it may be useful to get an overview of the motivating considerations and successful research “selling points”, or declared novelty, prominent in the field. In Figure 6, we present the motivations according to complexity type over time. We exclude literature and critical review papers. One can observe whether a motivation gains importance over time for a complexity type. For example, in organizational complexity, there is a continuous interest in complexity factors and effects.

Figure 6. Motivations over time per complexity type.

In Figure 7, we present motivations in relation to the metrics origin for each complexity type. Again, we exclude literature and critical review papers. One can observe which metrics origin areas are essential for particular motivations related to complexity metrics. For example, in the development of new complexity metrics, Decision-making and Organizational Sciences are the two prominent areas to consider.

Figure 7. Motivations in relation to metrics origin per complexity type.

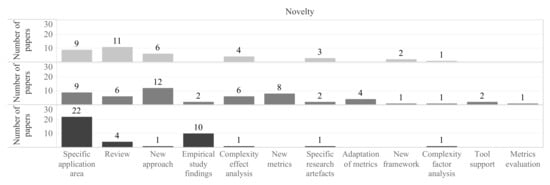

In Figure 8, we present the absolute numbers regarding the research novelty aspect. The most attractive topic is selecting a specific application area. Metrics evaluation and tool support are the two topics that attracted the least interest in this context.

Figure 8. Novelty per complexity type.

4.4. Complexity Research Focus Areas and Application Cases

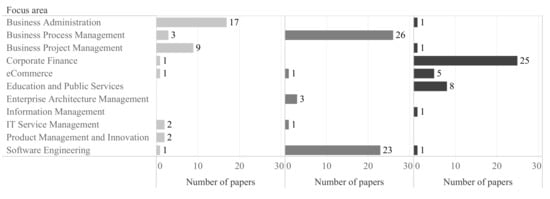

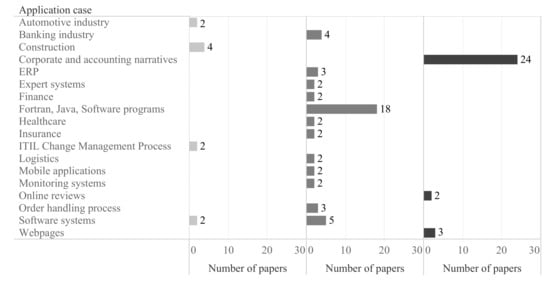

In Figure 9 and Figure 10, the absolute distributions of specific focus areas and application cases according to complexity type are shown. The papers in which no application is specified are excluded from the application case distribution. To keep the most frequent values of application cases, the threshold for the minimum number of papers is set to two.

Figure 9. Focus areas per complexity type.

Figure 10. Application cases per complexity type.

4.5. Complexity Research Future Research Directions

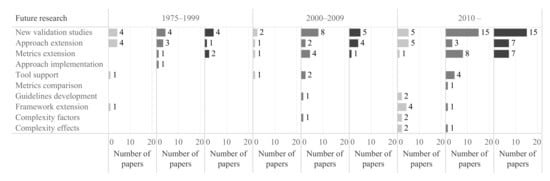

In this subsection, we share our findings regarding the future research directions we identified in the reviewed papers.

In Figure 11, we summarize the absolute distributions of the future research directions over time. The most significant future research directions are new validation studies, approaches, and metrics extensions. Such directions as guidelines development, metrics comparison, complexity factors, and complexity effects are rarely mentioned.

Figure 11. Future research directions over time per complexity type.

5. Literature Classification

In this section, we first classify the SLR results explained in the previous section and develop a morphological box. Second, we exploit a multi-dimensional framework to synthesize the SLR findings.

5.1. Morphological Box

To build the morphological box, the three aspects and ten features described in the research approach section are employed. Initially, each paper in the final set of 130 papers is assigned to the three aspects based on the values of its ten features. Then, thematically related feature values are grouped, and the relative number of papers in each group is calculated. For example, in the novelty feature of generic aspects, the “new approach”, “new framework”, and “new metrics” novelties are grouped. As they are mentioned in 30 out of the 130 papers, the relative frequency for that group is 23%. Using the frequency distribution in each group in the three aspects, the morphological box shown in Table 4 is formed. As one paper may discuss multiple topics (that is, have multiple values in a single feature), the percentage values of the groups in a feature do not necessarily add up to 100.
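The grouping and relative-frequency calculation can be illustrated with a minimal sketch. The function name `relative_frequencies`, the toy paper set, and the second group are assumptions made purely for illustration; only the three grouped novelty values and the 30-out-of-130 figure come from the text.

```python
def relative_frequencies(papers, groups):
    """Percentage of papers that mention at least one value of each group.

    papers: dict mapping a paper id to the set of values it has in one feature
    groups: dict mapping a group name to the set of feature values it bundles
    """
    total = len(papers)
    result = {}
    for group, values in groups.items():
        # A paper counts once per group, even if it mentions several of its values.
        count = sum(1 for paper_values in papers.values() if paper_values & values)
        result[group] = round(100 * count / total)
    return result


# Toy data (hypothetical): five papers and their values in the novelty feature.
papers = {
    "p1": {"new approach", "application area"},
    "p2": {"new metrics"},
    "p3": {"application area"},
    "p4": {"new framework", "new metrics"},
    "p5": {"application area"},
}
groups = {
    "new approach/framework/metrics": {"new approach", "new framework", "new metrics"},
    "application area": {"application area"},
}
frequencies = relative_frequencies(papers, groups)

# The figure reported in the text: 30 of 130 papers.
reported = round(100 * 30 / 130)
```

In the toy data, paper `p1` falls into both groups, so the group percentages sum to more than 100, which mirrors the remark about multi-valued features.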

Table 4. Morphological box describing three complexity aspects.

In the features with a manageable number of values, like validation or tool support, no groups were formed. Hence, the relative number of papers containing a certain value can be read directly from the table. For example, an empirical validation has been performed in 66% of the papers. The three aspects in the created morphological box are explained below.

5.1.1. Generic Aspects

The motivations for performing complexity research can be as multifaceted as the term complexity itself. As our review shows, they range from measurement, reviews of complexity studies, and the development of new complexity metrics to the investigation of complexity factors and effects. An overview of motivations is highly beneficial for familiarizing researchers with complexity research. Furthermore, researchers already familiar with the topic would benefit from exploring the studies related to complexity metrics development as well as practical studies. Practitioners can gain insights into complexity factors and effects, as well as reasons for applying complexity metrics.

The overview of the most frequent as well as uncommon focus areas and application cases will help researchers to propose fruitful directions for case study-oriented complexity research and beyond. Business Process and Project Management, Corporate Finance, Software Engineering, and IT Service Management are some examples of focus areas. We use the Statistical Classification of Economic Activities in the European Community [98] (RAMON) to provide a consistent overview of application areas, like Healthcare, Governmental Services, and Automotive.

Diverse novelty aspects (for example, a new approach, framework, new metrics or adaptation of existing ones, findings from empirical studies, application area, and comparative evaluations) may serve as a source of inspiration for both complexity novices and expert researchers. Similarly, the future research aspect can point to some significant limitations of the studies and potential further research directions. Additionally, gaps or problems mentioned in future research can be further investigated to contribute to the existing body of knowledge.

5.1.2. Complexity Metrics and Analysis Aspects

Metrics are common instruments for measurement. They are often used as a communication tool among stakeholders to control and assess the quality and status of artifacts. Moreover, various complexity metrics are applied to measure complexity broadly and in distinct areas. Such complexity analysis and metrics are characterized by certain input and output data. For example, depending on the type of complexity and application case, textual documentation, event logs, software programs, expert interviews, or even image data can serve as an input for the complexity analysis and measurements. The output is directly related to the research motivation in general and associated input in particular.

In the metrics development process, another important aspect is their theoretical base or origin. As a rule, theories are used to name observed concepts and explain relationships between them. The theory is a well-known tool that helps to identify a problem and plan a solution. Hence, metrics origin is included as a feature in the proposed morphological box. Accordingly, the disciplines that are widely used for metrics development are summarized to give an overview and support the metrics development process. Some frequent examples are Organizational Sciences, Cognitive Sciences, Cognitive Informatics, Human Sciences, Software Engineering, Process Mining, and Mathematics.

Metrics can be of different quality, depending on how precisely they describe an attribute of an entity. Here, metrics validity is among the most critical quality characteristics [86]. Validation is essential to guarantee that the outcomes of the metric application are legitimate. There are two types of validation methods: theoretical and empirical [99,100]. Theoretical validation is typically conducted in one of the following ways: (i) metrics development exclusively based on a theory; (ii) metrics development solely based on existing studies; (iii) checking compliance with a standard framework (for example, Briand’s framework properties [101] and Weyuker’s properties [102]); or (iv) illustration of the metrics application with the help of an example. In general, empirical validation of metrics complements theoretical validation. For empirical validation, different strategies are used, for example, case studies, surveys, or experiments. The objective of empirical validation is to find out whether the given metric measures what it is supposed to. Thus, for a metric to be structurally sound and useful, both theoretical and empirical validation are required [103].

5.1.3. Implementation Aspects

As can be seen in Table 4, approximately a third of the papers (34%) provide information about tool support. In particular, 23% of the papers mention that they use existing tools. In the remaining 11%, a tool was developed to support complexity measurement. We observed that in organizational complexity, there is a high demand for tool development, whereas, in technological complexity, tool support is more common. Notably, the studies providing tool support are prevailingly motivated by complexity metrics development.

5.2. Integrated Multi-Dimensional Complexity Framework

While performing the comprehensive literature analysis, we identified multiple specific approaches focusing on complexity and, using them, developed the mind map of complexity concepts shown in Figure 2. Although these approaches somewhat reflect a generic, commonly accepted complexity definition, that is, quantity and variety of the elements and their relationships, they are loosely coupled and focus on narrow areas. While addressing only one particular area, they fall short of embracing the whole variety of elements in the environment and of supporting complexity management on a large scale. Hence, motivated by the identified gaps IG1 and IG2, we synthesize the SLR results in the multi-dimensional complexity framework explained in the research approach section. In this subsection, we elaborate on that synthesis.

We consolidate the most common complexity concepts with the four dimensions of the framework. Specifically, we integrate the identified concepts into the complexity notions in the framework. Then, we calculate the relative distributions of the complexity notions per complexity type. Similar to the comprehensive literature analysis, the synthesis of the SLR results was performed using the qualitative data analysis tool NVivo. Each paper was analyzed and assigned to the complexity dimensions and respective notions by two researchers independently. Afterward, the discrepancies were discussed, and a common decision was taken. The resulting synthesis is depicted in Table 5 and explained below using an illustrative example.

Table 5. Relative distribution of the complexity notions per complexity type.

The business process model is one of the complexity concepts we identified in technological complexity. As can be seen in the first row of technological complexity in Table 5, 91% of the technological complexity papers focus on the objective observer perspective, whereas, in 36% of the papers, the focus is on the subjective observer perspective. In the remainder of this subsection, we elaborate on the synthesis from the three complexity perspectives, namely organizational, technological, and textual.

In technological complexity, the indicated complexity concepts are the business process model, event logs and workflows, and software. Though technological complexity subtypes are expected to be similar in their nature and, hence, in their complexity, we observed a diversity of combinations, especially compared to organizational and textual complexities (see Table 5). Owing to its technological nature, we expected technological complexity to be objective (D1) and quantitatively measured (D3). Interestingly, both subjective (D1) and qualitative (D3) complexity approaches gained popularity in this type of complexity. Subjective approaches to analyzing complexity are exemplified below:

- Business process models, event logs and workflows: understandability, cognitive load perspectives [104] and quality measure of a process based on the number of generated process logs [105];

- IT services: complexity theory-based conceptualization [106];

- Enterprise systems: defining case study-based complexity factors [107];

- Rule-based systems: difficulty of problems that can be solved [108];

- User interfaces: case study-based evaluation [109];

- Software programs: dependency on the programmer’s skills [110].

In the time perspective (D2) for technological complexity, all concepts but one are either purely or substantially structural in the analyzed papers. As can be seen in Table 5, IT services is the only concept considered completely dynamic (100%). Similarly, in D4, it is the single disorganized technological complexity concept. Additionally, rule-based systems is a concept with a considerable disorganized relative distribution value.

Due to its intrinsic diversity, that is, the diversity of tasks, projects, and processes and of the approaches to analyze their complexity, organizational complexity mostly includes all complexity notions of the four dimensions. The exception is product(-ion) complexity, which, as a rule, reflects a defined number of elements (structural notion, D2) that interact in a specifically designed way (organized notion, D4) [85].

Regarding textual complexity, we observed the same complexity notions in most textual complexity concepts, that is, legislative documentation, news articles, webpages, online reviews, and textbooks. It is noteworthy that they are considered purely structural (D2) and organized (D4). Moreover, except for legislative documentation and textbooks and other teaching materials, textual complexity concepts are highlighted as objective (D1) and quantitative (D3).

6. Method to Address Complexity

In this section, we present our method to address complexity in organizations with an illustrative example taken from a real-life setting.

For developing the method, GQM is taken as the basis and extended using the complexity analysis approaches obtained from the SLR, the developed morphological box, and the integrated multi-dimensional complexity framework. Aligned with the hierarchy in GQM, the starting point of our method is a goal definition. In other words, the method exploits a top-down mechanism in tackling complexity. This is also necessary to provide a staged complexity guidance, starting with a goal statement and reaching a specific solution. In our case, the specific solution is a collection data structure, each element of which is a map with the following key-value pairs: a question derived from a given goal and a set of complexity measurement and analysis approaches relevant for the problem origin of the question.

At the question level, the morphological box is used to refine an expressed goal into several questions. In particular, except for metrics origin, all features in the morphological box and the groups in these features are employed to facilitate deriving specific questions. For example, the groups in the input feature can be used to break down a given goal and define specific questions about the available inputs regarding complexity in the organization.

Next, at the measurement level, each question is analyzed with the help of the integrated multi-dimensional complexity framework. In the analysis, complexity types, complexity concepts, complexity dimensions, and notions are investigated to determine which of the complexity approaches obtained from the SLR can be beneficial for answering each question. With this, the aim is to distill the comprehensive analysis and provide a relevant subset of approaches mapping to each question. To build such a subset, initially, related complexity types are identified for each question. Then, per complexity type, matching complexity concepts, complexity dimensions, and notions are discovered. Based on these obtained inputs, the complexity approaches retrieved in the SLR are filtered, and a subset is created. The created subsets are merged into a final subset per question.

In the final level, that is, the data level, each subset is further filtered considering the available data related to each question. Importantly, in the case of data unavailability, data transformation possibilities can be investigated based on the input groups in the morphological box and the input attributes of the approaches in the created subsets. With this, our method enables organizations to consider and evaluate alternatives that may be beneficial depending on their context.

Based on the explanation of each level above, we summarize the steps of the proposed method:

1. Define a goal;

2. Formulate specific questions from the goal using the morphological box;

For each question:

3. Analyze the question using the integrated multi-dimensional complexity framework;

4. Identify complexity types related to the question;

For each complexity type:

4.1. Using the integrated multi-dimensional complexity framework, find matching (i) complexity concepts; (ii) complexity dimensions; (iii) notions;

4.2. Create complexity approaches subset based on complexity type, i, ii, and iii;

5. Merge complexity approaches subsets and form a final subset;

6. Filter the final subset considering the available data;

7. Check the need and possibilities for data transformation using the morphological box.
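The selection part of these steps (subset creation, merging, filtering by available data, and flagging candidates for data transformation) can be sketched as follows. This is a minimal sketch under stated assumptions, not the implementation used in the study: the goal definition and the question analysis are treated as manual inputs, and all names (`Approach`, `AnalyzedQuestion`, `select_approaches`, the catalogue entries, and the extra `transformation_candidates` key) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Approach:
    """One complexity analysis approach from the SLR catalogue (fields are assumed)."""
    name: str
    complexity_type: str  # organizational, technological, or textual
    concept: str          # complexity concept the approach addresses
    notions: frozenset    # e.g. {"objective", "quantitative"}
    input_type: str       # kind of data the approach needs


@dataclass
class AnalyzedQuestion:
    """A question after manual analysis with the framework (steps 3 to 4.1)."""
    text: str
    matches: dict  # complexity type -> (set of concepts, set of notions)


def select_approaches(questions, catalogue, available_inputs):
    """Return one map per question with its final subset of approaches."""
    solution = []
    for question in questions:
        final = []
        for ctype, (concepts, notions) in question.matches.items():
            # Step 4.2: subset per complexity type, concepts, and notions.
            subset = [a for a in catalogue
                      if a.complexity_type == ctype
                      and a.concept in concepts
                      and a.notions & notions]
            # Step 5: merge subsets without duplicates.
            final.extend(a for a in subset if a not in final)
        # Step 6: keep approaches whose input data are available.
        usable = [a for a in final if a.input_type in available_inputs]
        # Step 7: the rest are candidates for data transformation.
        candidates = [a for a in final if a.input_type not in available_inputs]
        solution.append({"question": question.text,
                         "approaches": usable,
                         "transformation_candidates": candidates})
    return solution


# Hypothetical catalogue and questions, for illustration only.
catalogue = [
    Approach("readability formula", "textual", "corporate narratives",
             frozenset({"objective", "quantitative"}), "text"),
    Approach("task complexity model", "organizational", "task",
             frozenset({"subjective", "qualitative"}), "expert interview"),
]
questions = [
    AnalyzedQuestion("How complex are the request texts?",
                     {"textual": ({"corporate narratives"}, {"objective"})}),
    AnalyzedQuestion("How complex are the resulting tasks?",
                     {"organizational": ({"task"}, {"subjective"})}),
]
solution = select_approaches(questions, catalogue, available_inputs={"text"})
```

The result is a list of maps, one per question, matching the collection structure described for the specific solution; the third key is an addition of this sketch to make the data transformation check visible.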

In the remainder of this section, we provide an example of addressing complexity (see Table 6). The setting of the example is taken from a company that operates in the healthcare sector and provides Business Intelligence (BI) solutions to various healthcare institutions. To gather requirements and change requests, business analysts of the company usually visit its clients. However, due to the COVID-19 pandemic and the resulting restrictions, visits were not possible. Moreover, healthcare practitioners were in urgent need of adequate dashboards to better understand the pandemic and were sending multiple immediate requests to the company via email. Hence, the business analysis department of the company had to tackle mostly unstructured and ambiguous texts in the received emails. Given this situation, the company is looking for a way to deal with the complexity that emerged due to the changes in its work environment.

Table 6. An example illustrating the use of the proposed method.

In our illustrative example in Table 6, with the growing popularity of remote working, the employees receive their tasks predominantly in textual form. The goal is to analyze the workload of employees. This goal is broken down into four questions.

In Question 1, the required data are email texts. Hence, the complexity type in focus is the textual one. Based on Table 5, we can identify the most typical complexity dimensions analyzed within this complexity type. Afterward, one can make use of the literature analysis results we obtained with the help of NVivo (for the details of this study, see our project on GitHub: https://github.com/complexityreview/comprehensiveOverview, accessed on 11 October 2021). Based on the complexity type (and subtype if applicable) and the selected suitable complexity dimensions, one can determine relevant papers and, this way, derive the necessary complexity analysis approaches and metrics. In the case of Question 1 of the illustrative example, these are standard readability formulae such as the Flesch Reading Ease score or the Gunning Fog Index [111].
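To make the two readability formulae concrete, the following sketch computes both scores using their standard published coefficients. The tokenization and the vowel-group syllable counter are rough heuristics assumed here for illustration; they are not part of the formulae and are not the procedure of [111]. The function names and sample texts are likewise hypothetical.

```python
import re


def count_syllables(word):
    """Rough heuristic (assumption): one syllable per group of consecutive vowels."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def text_stats(text):
    """Return sentence count, word count, and per-word syllable counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    return len(sentences), len(words), [count_syllables(w) for w in words]


def flesch_reading_ease(text):
    """Flesch Reading Ease: higher scores indicate easier text."""
    n_sentences, n_words, syllables = text_stats(text)
    return (206.835
            - 1.015 * (n_words / n_sentences)
            - 84.6 * (sum(syllables) / n_words))


def gunning_fog(text):
    """Gunning Fog Index: higher scores indicate harder text."""
    n_sentences, n_words, syllables = text_stats(text)
    # Words with three or more syllables count as complex words.
    complex_words = sum(1 for s in syllables if s >= 3)
    return 0.4 * ((n_words / n_sentences) + 100 * complex_words / n_words)


# Hypothetical sample texts; both functions expect at least one sentence and one word.
simple_text = "The cat sat on the mat. The dog ran."
dense_text = ("Organizational complexity significantly complicates "
              "managerial decision processes.")
```

Applied to email texts, such scores give an objective and quantitative view of textual complexity, in line with the dimensions reported for this complexity type.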

In Question 2, specific recent complexity analysis approaches, such as [112], are more relevant to answer the question and extract the exact activities from the textual data.

In Question 3 and Question 4, different data and complexity types are required. In Question 3, organizational complexity type and task subtype are to be identified. Due to the inherent variability of organizational complexity, all possible complexity dimensions and notions are observed in this case (see Table 5), which complicates the choice. Thus, individual managerial decisions need to be taken to estimate the efforts and further actions. Additionally, gathering necessary data, that is, specific information from employees regarding task complexity, can be problematic and time-consuming. However, one can rely on solid research works for guidance, for example, [11]. At the same time, in Question 4, the required data, derived complexity type, dimensions, and notions are rather straightforward, and complexity analysis is easy to implement.

To summarize, GQM provides an opportunity to demonstrate the direct practical value of the study findings, that is, the comprehensive literature analysis, the obtained complexity types, the complexity concepts mind map, the morphological box, and the integrated multi-dimensional complexity framework. We extended the standard GQM process with three additional points based on our study findings: data, complexity type, complexity dimensions, notions, and analysis approaches.

7. Discussion

In this section, we discuss the findings of our study in accordance with the demands we have inferred from the gaps listed in the related work section and which served as a motivation for our study. These are:

- Demand for an extensive literature analysis embracing different types of complexity in organizations (IG1, IG4);

- Demand for a structured overview and integration of findings based on existing standard approaches, frameworks, and vocabularies to promote the common acceptance of complexity research in organizations (IG1);

- Demand for practical guidance supporting managers in addressing the complexity and planning comprehensive complexity management initiatives in companies (IG2, IG3).

7.1. Demand for an Extensive Literature Analysis

In the related literature, we have noted a high fragmentation in the research on complexity in general and in organizations in particular. This tendency has been observed both in regular and review papers. For example, the literature review works consider different types of complexity implicitly related to organizations, such as task complexity [10], project complexity [113], or interorganizational complexity [23]. However, each of these works focuses on a specific aspect of an organization. Our study sets itself apart by addressing multiple types of complexity in an extensive SLR of the complexity studies from the People Process Technology (the PPT framework [14]) perspective. Moreover, our SLR has shown that complexity research in organizations peaked in popularity in 2011–2015, which highlights the demand for an up-to-date review.

7.2. Demand for a Structured Overview

The related work shows that the mentioned problem of high fragmentation applies not only to the term complexity but also to the complexity drivers [25], measurements, and approaches to address complexity [26]. We address this limitation while classifying the SLR findings using the morphological box and the integrated multi-dimensional complexity framework. The developed morphological box (see Table 4) provides a logically structured outcome highlighting the most interesting and important points with the help of the three groups of aspects: generic, complexity metrics and analysis, and implementation aspects.

The generic aspects represent a summary of research motivations, novelty, focus areas, application cases, and future research on complexity in organizations, that is, the information typical for any research study. This way, practitioners can get a quick overview of how complexity research is structured and approached in the academic community. Researchers can also draw valuable insights from the information on motivations or future research directions. For example, some complexity studies are driven by research on the reasons for performing complexity analysis (16%) and on complexity effects (12%), that is, the hidden benefits of working on complexity, which is an interesting insight for both researchers and practitioners.

Accordingly, our study aims to facilitate and promote the efforts in this direction while streamlining the complexity research projects. Thus, the less frequent motivations, for example, complexity metrics analysis (5%) and complexity studies review (7%), may serve as a potential gap for further complexity studies. Likewise, the information about novelty areas can be useful for better positioning and justifying research projects in the sense of envisioned contributions. The summary on focus areas and application cases is valuable for researchers and practitioners in two ways. First, the information on the popularity of focus areas can help them to identify relevant areas requiring more research. Second, similar application cases can be closely studied for comparison-related purposes.

In addition, our findings can serve to prove the reusability of existing application cases. Regarding future research directions, interested researchers can either follow trending topics or pick up rare cases on which few research activities have been conducted so far. For example, one can clearly see that the suggested complexity analysis approaches, metrics, and frameworks lack thorough validation in all three complexity types.

The complexity metrics and analysis aspects include the inputs, resulting outputs, origins of complexity metrics, and their validation. Whereas metrics origin provides a theoretical background, input and output types, as well as validation approaches, provide practical insights into complexity measurement for both researchers and practitioners.

Aside from that, tool support, that is, the implementation aspect, is one of the topics that needs special attention, since a considerable share of the complexity metrics (35%, see Table 4) do not use any tool. In other words, there is a clear lack of tool support in complexity measurement, which should be addressed in future research.

7.3. Demand for an Integration of Findings

We integrated our findings into a solid complexity framework [5] whereby four universal dimensions based on time, observer, measures, and dynamics and predictability were applied. Such a generic classification approach allows for independent documentation of diverse complexity concepts. Moreover, it enables more transparency in presenting and understanding the existing work on complexity. In our study, we demonstrated the possibility of integrating seemingly different complexity types into one complexity framework. This also enables other researchers to use their complexity concepts in the context of such a framework without a need to precisely define them [5].

In classifying our study findings, we identified various combinations of dimension values in technological complexity that indicate approaches not yet covered for a specific complexity concept. For example, the complexities of event logs and workflows, IT architectures, and IT services show a lack of subjective approaches (see Table 5). Although organizational complexity covers nearly all dimensions and values, product(-ion) complexity lacks dynamic and disorganized approaches. Being structural and organized in nature, textual complexity shows an absence of subjective approaches in the analysis of news articles, webpages, and reviews. All these open points offer potential for further exploration and, hence, future work. Furthermore, though the classification is not always straightforward, we highlight the importance of formalization in the context of complexity measurement.

7.4. Demand for a Practical Guidance

As mentioned in Section 2, [36,37] state the need for frameworks and practices that assist managers in developing broad-scope complexity management strategies. In our work, we address this shortcoming by proposing a method for complexity management that adopts GQM, a well-known approach for deriving and selecting metrics for a specific task in a goal-oriented manner [16]. In GQM, goals and metrics are adjusted for a specific setting. Stating the problem and, hence, the goals upfront allows for the selection of the metrics relevant for achieving these goals, which reduces the data collection effort considerably. The interpretation of the measurements also becomes straightforward due to the traceability between data and metrics. Hence, wrong interpretations can be prevented [114]. Despite such advantages, GQM has certain limitations, such as high flexibility and the generation of a large number of solutions [60], which were discussed in Section 3. Attempts to deal with this limitation lead to other shortcomings, like dismissing important perspectives [63]. Therefore, we have observed multiple extensions of GQM in line with a particular study purpose. In our work, we adopt and extend GQM with complexity types, dimensions, and notions, making it more specific to our objectives and study setting. This way, we address the high flexibility of GQM. Further, in the metric selection stage of GQM, we consider not only metrics but also the comprehensive complexity analysis approaches we found in the literature. In doing so, we deal with another shortcoming, that is, the risk of missing relevant solutions.
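The goal-question-metric chain and the idea of narrowing the candidate set by complexity type can be illustrated with a minimal sketch. It is not the implementation of our method: the class names, the `select_candidates` helper, the catalog entries, and the example goal are all hypothetical and serve only to show how tagging questions and catalog entries with a complexity type restricts the selection, while keeping both metrics and broader analysis approaches as candidates. Dimensions and notions are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Approach:
    """A catalog entry: a metric or a broader complexity analysis approach."""
    name: str
    complexity_type: str  # "organizational", "technological", or "textual"
    kind: str             # "metric" or "analysis approach"


@dataclass
class Question:
    text: str
    complexity_type: str
    candidates: List[Approach] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: List[Question] = field(default_factory=list)


def select_candidates(goal: Goal, catalog: List[Approach]) -> Goal:
    """For each question, keep only catalog entries of the same complexity type."""
    for question in goal.questions:
        question.candidates = [
            entry for entry in catalog
            if entry.complexity_type == question.complexity_type
        ]
    return goal


# Hypothetical catalog; in the method, this role is played by the SLR results.
catalog = [
    Approach("process step count", "organizational", "metric"),
    Approach("architecture dependency analysis", "technological", "analysis approach"),
    Approach("readability score", "textual", "metric"),
    Approach("task complexity survey", "organizational", "analysis approach"),
]

# Illustrative goal and questions, not taken from the study.
goal = Goal(
    statement="Reduce effort in handling IT service tickets",
    questions=[
        Question("How complex are the ticket descriptions?", "textual"),
        Question("How complex is the ticket handling process?", "organizational"),
    ],
)

select_candidates(goal, catalog)
for question in goal.questions:
    print(question.text, "->", [c.name for c in question.candidates])
```

In this sketch, each question receives only the entries that match its complexity type, so the number of candidate solutions stays small, and each selected entry remains traceable to the goal through its question.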

Although it addresses the shortcomings of GQM [60,63], our method has limitations of its own. For example, it uses the SLR results as an input. Hence, it naturally inherits typical SLR limitations, such as authors’ bias in building the search strings, defining exclusion and inclusion criteria, coding, and synthesizing the results [115]. Further, though our SLR provides significant groundwork, such reviews need to be repeated to maintain an up-to-date list of complexity metrics and analysis approaches. Moreover, in its current state, our method is a conceptually designed artifact that is manually applied to a real-world example to illustrate its relevance. Hence, automation solutions such as [116,117] should be considered as part of future work.

8. Conclusions and Future Work

In this paper, we proposed a method to address complexity in organizations. Our main goal with the method was to develop practical guidelines for selecting complexity analysis approaches for a particular complexity management problem in organizations. To achieve this goal, we extended the Goal Question Metric approach [16] using the SLR on complexity and its results, which we comparatively analyzed and synthesized.

In particular, to retrieve the body of knowledge on complexity research and practice, we conducted an SLR on the complexity research considering the three main complexity types, that is, organizational, technological, and textual, as the starting point. We analyzed 130 papers that discuss complexity. Hereby, we took the PPT framework [14] as the basis. Then, to provide structured outcomes and address the problem of high fragmentation, we designed and implemented two classification approaches: a morphological box and an integrated multi-dimensional framework. The developed morphological box summarizes the three fundamental aspects of complexity research. The three aspects reveal ten features capturing the information extracted from the analyzed papers. To contribute to the standardization efforts in the complexity research field, we synthesized our SLR findings by integrating them in a solid multi-dimensional complexity framework [5].

Next, the knowledge and findings obtained from the classification of the SLR results were used to extend GQM, yielding the method to address complexity in organizations. With an illustrative example taken from a company, we demonstrated the practical value of the method. Hence, as a practical contribution in the form of comprehensive guidance, the method can assist organizations in their complexity management initiatives. Thus, our study sets itself apart from existing work in that it serves as a guideline for both complexity researchers and practitioners willing to perform complexity analysis in an organization. It is important to note that the structuring and conceptualization of complexity presented in our study is the first attempt to align diverse types of complexity.

For future work, two prominent avenues that follow from the limitations of the method can be highlighted. First, to maintain an up-to-date set of complexity analysis approaches, the SLR on complexity needs to be automated, as it is currently manual. For this, we aim to use automated systematic review solutions that employ machine learning technologies, for example, ASReview [117]. Such solutions may help enrich the results and serve as a reusable basis for better dealing with researchers’ bias. Second, we plan to conduct case studies and investigate the usefulness of the method in organizations, as it is currently demonstrated using an illustrative example from a real-life setting. Moreover, with case studies, we would like to collect practitioners’ opinions on how well the method fits their daily routine.

Author Contributions

Conceptualization, A.R.; methodology, A.R.; software, Ü.A.; formal analysis, A.R. and Ü.A.; investigation, A.R. and Ü.A.; resources, V.G.M.; data curation, Ü.A.; writing—original draft preparation, A.R. and Ü.A.; writing—review and editing, A.R.; visualization, Ü.A.; supervision, V.G.M.; project administration, A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on https://github.com/complexityreview/comprehensiveOverview (accessed on 15 October 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. List of Papers Analyzed in This Study

Below, in Table A1, we list the papers analyzed in this study. For the sake of simplicity and due to limited space, we only provide the most relevant features of the papers in addition to their title and authors. In particular, complexity type, motivation, input, and future research features are kept in the list. For the detailed list with all features and encoding, we refer to the project page on GitHub (https://github.com/complexityreview/comprehensiveOverview, accessed on 11 October 2021).

Table A1.

Analyzed papers in this study.

| Reference | Complexity Type | Title | Motivation | Input | Future Research |
|---|---|---|---|---|---|
| [72] | Organizational | Task complexity and contingent processing in decision making: An information search and protocol analysis | Reasons for applying complexity metrics | Other; Case studies and interviews | Tool support |
| [46] | Organizational | Task complexity: Definition of the construct | Complexity metrics development | Other | Framework extension |
| [21] | Organizational | Task complexity: A review and analysis | Complexity factors; Complexity metrics development | Not Applicable | New validation studies; Approach extension |
| [118] | Organizational | Review of concepts and approaches to complexity | Complexity studies review | Not Applicable | Not Specified |
| [11] | Organizational | A model of the effects of audit task complexity | Complexity effects | Not Applicable | Approach extension |
| [119] | Organizational | Task complexity affects information seeking and use | Complexity effects | Other; Case studies and interviews | New validation studies |
| [120] | Organizational | The impact of knowledge and technology complexity on information systems development | Complexity metrics development | Software and Architectures | Approach extension; New validation studies |
| [68] | Organizational | Task and technology interaction (TTI): a theory of technological support for group tasks | Complexity metrics development | Other | New validation studies |
| [121] | Organizational | Perspective: Complexity theory and organization science | Complexity metrics development | Not Applicable | Approach extension |
| [84] | Organizational | Sources and assessment of complexity in NPD projects | Complexity factors | Business textual information | New validation studies |
| [81] | Organizational | Quantifying the Complexity of IT Service Management Processes | Complexity metrics development | Business textual information | Metrics extension; New validation studies |
| [122] | Organizational | Complexity of megaprojects | Complexity factors | Not Applicable | Not Specified |
| [80] | Organizational | Estimating business value of IT services through process complexity analysis | Reasons for applying complexity metrics | Business textual information | Approach extension; Tool support |
| [123] | Organizational | The inherent complexity of large scale engineering projects | Complexity metrics development | Not Applicable | Not Specified |
| [70] | Organizational | Complexity of Proceduralized Tasks | Complexity metrics development; Reasons for applying complexity metrics | Not Applicable | Not Specified |
| [66] | Organizational | Finding and reducing needless complexity | Complexity factors | Other | Not Specified |
| [79] | Organizational | Revisiting project complexity: Towards a comprehensive model of project complexity | Complexity metrics development | Not Applicable | Approach extension |
| [71] | Organizational | Model-based identification and use of task complexity factors of human integrated systems | Complexity factors | Other | Framework extension; Guidelines development |
| [10] | Organizational | Task complexity: A review and conceptualization framework | Complexity studies review; Complexity metrics development | Not Applicable | New validation studies; Framework extension |
| [85] | Organizational | Testing complexity index-a method for measuring perceived production complexity | Complexity effects | Business textual information | Not Specified |
| [83] | Organizational | The impact of business process complexity on business process standardization | Complexity factors | Other | Not Specified |
| [124] | Organizational | Relationships between project complexity and communication | Complexity effects | Other; Case studies and interviews | Approach extension |
| [78] | Organizational | Building up a project complexity framework using an international Delphi study | Complexity metrics development | Other; Case studies and interviews | New validation studies |
| [76] | Organizational | An extended literature review of organizational factors impacting project management complexity | Complexity factors | Not Applicable | Not Specified |
| [125] | Organizational | Revisiting complexity in the digital age | Reasons for applying complexity metrics | Not Applicable | Not Specified |
| [77] | Organizational | Complexity in the Context of Systems Approach to Project Management | Complexity effects | Not Applicable | New validation studies; Framework extension |