2. The Dialogue

M: Let’s discuss the problems of the essence of information and how it is possible to define it.

J: My interest in the topic of information results from becoming acquainted with the work of Trewavas and Baluska [20]. In turn, it introduced me to the works of Maturana and Varela [21,22]. Eventually, I identified the problem of understanding information as an essential issue for answering fundamental questions that have eluded explanation. One of my explorations led me to publications of Gregory Bateson, where he introduces a definition of information as “a difference which makes a difference” [23,24]. This is certainly very different from the belief that information is a fundamental quantity of the universe, implying that we humans are able to identify it as existing everywhere and use it to our advantage [25,26,27,28]. This point of view appeared with the creation of cybernetics and the construction of computers during World War II, demonstrating that machines can have what before were considered as exclusively human capabilities.

M: It looks like you are suggesting taking Bateson’s statement that information is the difference which makes a difference [23] as an ultimate definition of information.

J: Indeed, Bateson’s simplicity of definition is certainly attractive as a starting point, and many researchers always seemed to accept this definition, but no one seemed to go beyond this phrase. As I developed my interest in Bateson, I more and more appreciated his musings while making them my own. One interesting aspect of Bateson’s statement is its subjective aspect, which is atypical in the world where “true” scientists emphasize the objective aspect of everything. This apparent contradiction is certainly something that needs to be taken seriously as the following questions come into view. How does one become an objective scientist if he or she is a subjective being? Does not Bateson’s statement hold the promise of simultaneous subjectivity and objectivity?

M: Okay, taking Bateson’s statement as a candidate for a definition of information, its thorough analysis shows that it is as deceptive as it is beautiful. First, without a sufficiently exact definition of difference, this is not a definition, as it is wrong to define one unknown term through another unknown term. This is a logical fallacy. Second, even if we take the mundane meaning of the word difference, we may come to the conclusion that anything is a difference because anything is different from something else. Besides, being different, anything makes this difference and thus, we have to logically conclude that anything is information. This makes the concept of information void from a scientific point of view. Fortunately, this is not true, and the concept of information is not only meaningful but is becoming one of the pillars of contemporary science.

J: I fully understand your concern about logical consistency and general confusion when the word “difference” is used. That is why I said that information as a difference which makes a difference is only a starting point. To get a measure of what difference means, we can appeal to its dictionary definition in the Merriam-Webster Online Collegiate Dictionary [29]: (1) the quality or state of being dissimilar or different; and (2) an instance of being unlike or distinct in nature, form, or quality. So, indeed, we are in agreement, though at first glance we seem to be in a logical quandary and great confusion. However, let me remind you that context is very important in any situation. We have to ask, who determines these differences? We are certainly talking about human beings, such as Bateson or ourselves, who are determining these differences. Sometimes, they can be very complex differences such as the ones we might experience in looking at and analyzing an abstract painting that compounds many different levels and guises of differences that are possible to distinguish. Luckily for us, that is not where we begin our process of distinguishing differences. We begin this process from the point of our conception as living beings. A single cell has to become two, then four, and so on until the emergence of the child from the womb to begin an additional gestation period out of the womb. How does the single cell know how to become two cells? What is/are the difference(s) that it detects that allows this certain process to become effective? I am not a biologist, so I do not want to delve into all of the biological complexity that probably plays a role. However, I do want to assert that at some point in this process, our five primary senses (touch, sight, hearing, smell, and taste) come online 24/7. This is the only basis for our access to the world. One thing that can be said with certainty about our senses is that they are continuously detecting spatial and/or temporal differences in our dynamic environment. We do it because the alternative, to not do it, would only lead to our certain demise. We have to be good at detecting differences so that when made to feel a nipple we can suck as though our life depended on it, but unaware that that is why we are doing it.

One important aspect of our primary senses is that they deal with quantities/qualities that are commensurable, i.e., that have a common measure. For example, the sense of touch (though multidimensional as composed of mechanoreceptors, thermoreceptors, nociceptors, proprioceptors) might be simply ascribed as being sensitive to pressure, and in that role, it is able to keep track of all such pressure sensations that come into its sphere of action. As might be imagined, from one instant of time to the next, pressure sensations are felt by the human in question and become part of her experience. This is how quantitatively and unambiguously “a (pressure) difference” becomes qualitatively “a (pressure) difference which makes a difference”. This is the process of information that Bateson discovered and applies to any and all of our primary senses, which not only act individually but in concert. Primary senses provide our only contact with our environment and provide keys to our development. This is why we can say that:

“A key issue in reaching a unified definition of information is the fundamental problem of identifying how a human organism, in a self-referential process, develops from a state in which its knowledge of the human-organism-in-its-environment is almost non-existent to a state in which the human organism not only recognizes the existence of the environment but also sees itself as part of the human-organism-in-its-environment system. This allows a human organism not only to self-referentially engage with the environment and navigate through it, but also to transform it into its own image and likeness. In other words, the Fundamental Problem of the Science of Information concerns the phylogenetic development process, as well as the ontogenetic development process of Homo sapiens from a single cell to our current multicellular selves, all in a changing long-term and short-term environment, respectively.”

Summarizing the above quote, we can ask the question: What is the process of how we become what we become? Note that implicit in this conception of information is that all information that human beings have access to is produced by us. In other words, we can conceive it as a process of info-autopoiesis, or of self-produced information, as central to humankind [30,31]. This can be extended, more generally, to all cognitive beings that exist in nature.

M: Thus, you are implying that information is produced only by people.

J: Yes, the phylogenetic and ontogenetic development of humankind is a long process of information creation, which necessarily impacts our environment and us. However, we can even extend this concept and talk about an infosphere created by all living beings, but I am getting ahead of myself.

M: Now you are contradicting yourself, because at first, you once more assert that information is produced only by people, but then you add that all living beings create an infosphere.

J: As mentioned previously, we can conceive info-autopoiesis or self-produced information as central to humankind, but this ability may be extended more generally to all cognitive beings that exist in nature. This goes to the heart of whether or not humankind is unique as to its abilities when compared to other living beings. Asserting our uniqueness does not require denying the uniqueness of other living beings, or even constraining their abilities due to our inability to access their world.

M: I can agree that people created an infosphere and that all living beings created an even larger infosphere. Nevertheless, your statement raises many other questions.

First, do only living beings produce information, or can information be produced by other systems?

Second, do all living beings create information or only some of them?

Third, is all information created by living beings or only a part of it?

Fourth, do people only create information, or do they also receive information? Then, what does it mean to receive a difference?

Fifth, do people create or produce information from nothing or from something else?

Sixth, do only living beings contain/store information, or can other natural and/or artificial systems also contain/store information? In addition, what does it mean to store difference?

Seventh, if living beings only create information, then what does information transmission mean? Then, what does it mean to transmit difference?

J: I am glad that we are in agreement that people created an infosphere and that all living beings created an even larger infosphere. Of course, this cannot but raise many additional questions, such as the seven questions you have framed. Let me answer each question, in turn, as thoroughly as possible to allow us to come to a better understanding of our positions.

So, let us consider the first question, asking whether only living beings produce information or information can be produced by other systems.

I want to propose and postulate that all living beings engage in a process of info-autopoiesis, i.e., in a process of self-production of information. This extends the concept of autopoiesis by Maturana and Varela [21,22], who did not consider information as an element of autopoiesis. Indeed, the process of info-autopoiesis is the basis for understanding information in biology. This proposal could be considered as an attempt to do what Varela [32] suggested when he identified the need for the inclusion of information in autopoiesis. In the case of tool-making and machine-making living beings, this implies that the systems that are created by people and other living beings can also have the capacity to produce information.

To better organize my explanation of this issue, I would like to divide the history of information utilization and exploration into two major epochs. In the first information epoch, the need to provide a quantitative/objective appraisal of information might have existed but was not a priority. There was an implicit understanding (qualitative/subjective) of information as something that added to our existing knowledge, i.e., “when ‘what we know’ has changed” [33], with mostly semantic undertones. The major producers and consumers of information in this time period were human beings. Note that our understanding of information as “when ‘what we know’ has changed” has the connotation of difference, i.e., something has changed. However, we can also view this from another perspective that few would ascribe to information, but it is just as real: the role of human actions and effort (labor) exerted on our environment, which is more directly related to Bateson’s “difference which makes a difference” producing changes in our environment. This perspective also falls in line with a historically meaningful perspective on the word information. We find that it derives from the Latin stem informatio, which comes from the verb informare (to inform) in the sense of the act of giving a form to something material as well as the act of communicating knowledge to another person [34,35,36,37,38,39]. The first of these meanings is what allows us to see a connection of information to human labor exertion. In other words, the term information may be said to mediate the act of labor between humans and nature, i.e., the act of labor as a metabolic connection between humans and nature is the action of giving form to something material, i.e., labor in-forms matter. In addition, matter in-forms humans by reacting to the efforts of humans. It is a never-ending interactive process of action–sensing–action. This notion of information correlates with that of Bateson emphasizing the utilization of our five primary senses in our environmental interactions.

Now, let us consider a description by Gregory Bateson, of a laborer wielding an ax:

“Consider a tree and a man and an ax. We observe that the ax flies through the air and makes certain sorts of gashes in a pre-existing cut in the side of the tree. If now we want to explain this set of phenomena, we shall be concerned with differences in the cut face of the tree, differences in the retina of the man, differences in his central nervous system, differences in his efferent neural messages, differences in the behavior of his muscles, differences in how the ax flies, to the differences which the ax then makes on the face of the tree. Our explanation (for certain purposes) will go round and round that circuit. In principle, if you want to explain or understand anything in human behavior, you are always dealing with total circuits, completed circuits. This is the elementary cybernetic thought [23].”

This is a description that evolves from a cybernetic perspective of the world by Gregory Bateson that identifies information/differences that are pertinent, in this case, to the dynamic and evolving labor effort at hand, which is no different from many typical labor tasks, and can be ascribed as a series of material informational efforts involving the use of the human brain, nerves, muscles, and sense organs. In short, we want to argue that labor and information/differences are intimately entwined and that every artifact embodies labor and information/differences. This aspect of all human artifacts goes largely unnoticed, but it lends much credence to the fact that we can easily recognize implements manufactured by humans no matter their anthropological age. Another example is signs of butchery in animal bones that are more than 2 million years old [40,41].

The second information epoch begins with Shannon’s landmark paper, “A Mathematical Theory of Communication”, which was central to the establishment of ‘Information Theory’ as a discipline [42]. This prompted the need to quantify measurements of different kinds that would allow one “to discover the method which will give us the maximum amount of information for a given outlay of time or space or other resources” [33] with mostly syntactic constructions that negate semantic content. In this current epoch, producers and consumers of information are not only humans but also machines that are designed and built for that purpose by humans. The production and consumption of information by humans consists in being the producers and users of Information and Communication Technologies (ICTs) such as wireless radios, cybernetic control mechanisms, encryption machines, and television, evolving to the technological level of items in common use today, such as cell phones, digital televisions, satellite communications, the internet, social media, etc. These ICTs allow messages to be composed by humans/machines, coded, and optimally transmitted as communication signals, which are received, denoised, decoded, and interpreted by humans/machines. This is the information basis of the information age.

M: I don’t object to your stratification of the history of information utilization and exploration. At the same time, I would like to suggest that it is possible to distinguish more periods or epochs in this history.

The first period (epoch) began with the emergence of human beings and is characterized by the situation in which people operated with information but did not have the notion of information.

The second period (epoch) is characterized by the emergence and utilization of the notion of information.

The third period (epoch) is characterized by the beginning of information studies.

I would also like to remark that although etymology does not always define a scientific concept, it often provides a path to a better understanding.

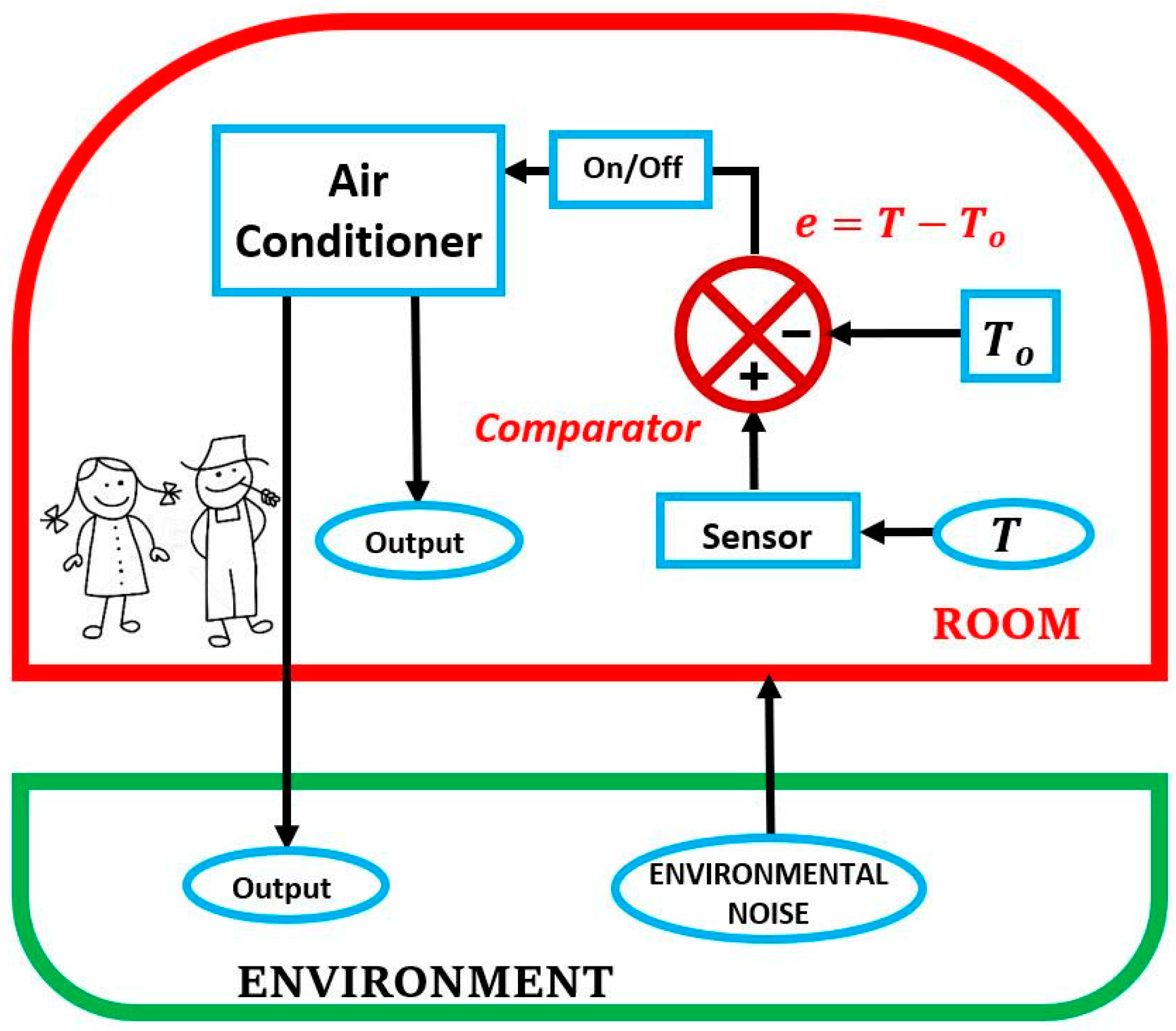

J: Your periodization is consistent with mine. So, let us return to our main problem and illustrate how Bateson’s “difference which makes a difference” translates into a mechanism that is capable of generating information. For example, we can consider a thermostat represented in Figure 1. Note that the design, construction, and functioning of such a thermostat is the result of human labor, i.e., of an info-autopoietic process.

Figure 1 shows the relationship between a room and the surrounding environment. The room incorporates an air conditioner unit that can cool/heat the room and the associated thermostat control unit that controls its operation. The thermostat control unit consists of a one-parameter Sensor and a Comparator. The one-parameter Sensor measures the room temperature T on a continuous basis. This temperature is an input to the Comparator as the detected parameter. Another input to the Comparator is the temperature setting To, arbitrarily set by the occupant of the room based on her level of comfort. The temperature setting To is regarded as the reference parameter. The role of the Comparator is to obtain the difference e = T − To between the detected parameter and the reference parameter. This is an example of the Bateson difference, which may also be referred to as error. This difference/error is the parameter used to trigger the On–Off switch of the air conditioner unit. In cooling mode, the air conditioner turns On when e > 0, and turns Off when e ≤ 0. The opposite is true when the air conditioner is in heating mode.

This configuration shows that the actions of the thermostat depend on the Comparator as a function of the difference/error obtained from comparing the detected parameter to the reference parameter. The circuit that comprises this system is a semi-closed-loop cybernetic feedback circuit, not a closed-loop cybernetic feedback circuit, since there is no direct connection between the air conditioner and the Sensor. In addition, there is no connection between the Comparator output and the temperature setting. This brings about the existence of two semi-closed-loop cybernetic feedback circuits. One feedback circuit comprises the air conditioner that exhibits two outputs: one to the room and the other to the outside environment. The impact of the air conditioner on the Sensor is by way of the air currents in the room as well as the environmental noise, which is the impact that the environment might exert on the walls of the room by being more/less windy or sunny/cloudy than the room. This feedback circuit has an eventual effect on the detected parameter of the temperature at the one-parameter temperature Sensor, leading to a subsequent action affecting the Comparator output that leads to an effect on the On/Off switch of the air conditioner. A second semi-closed-loop cybernetic feedback circuit mechanism to change the temperature setting is by way of the room occupant. In either of these two cases, the room occupants can intervene to make the room temperature amenable to their needs.

The definition of information by Bateson as a difference which makes a difference may be used in the context of this thermostat example. Let us note that this definition of information implies a quantitative portion (a difference) and a qualitative portion (a difference which makes a difference) [23]. The quantitative portion is the difference/error e = T − To that is calculated by the Comparator and that results in the On/Off actuation of the air conditioner; the qualitative portion is that given by the comfort level of the person inside the room who controls the setting To. Furthermore, the person whose temperature comfort is at issue in the use of the air conditioner may have the use of a thermometer against which to compare her experienced comfort level. This is what drives the temperature setting. Here, we identify, for illustrative reasons, that the difference/error, e, represents Bateson information. This means that the terms difference/error/information here are treated, although not reducible to each other, as equivalent. We further note that in this example, the error is the term that acts as a cybernetic correction factor in pursuing the goal of achieving temperature To.

In short, the four-step conceptualization of how this semi-closed-loop cybernetic feedback system consisting of a one-parameter Sensor and Comparator works is as follows:

(1) A Comparator (thermostat) is set to a reference parameter (room temperature setting);

(2) The sensor (room temperature sensor) distinguishes the value of the detected parameter;

(3) The Comparator obtains the difference/error between the detected parameter and the reference parameter;

(4) The detected difference/error is the information needed to send a signal to turn-on/turn-off the system governing the level of temperature in the room.
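
The four steps above can be sketched in code. The following is a minimal Python illustration and not part of the original discussion: the class name `Thermostat`, the function `simulate`, and the crude room model (a 10% drift toward the outside temperature per step and one degree of cooling per step while On) are assumptions made purely for illustration, and only the cooling mode is shown.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """One-parameter Sensor plus Comparator driving an On/Off switch (cooling mode)."""

    setting: float  # Step 1: reference parameter To, chosen by the occupant

    def difference(self, detected: float) -> float:
        # Step 3: the Comparator forms the difference/error e = T - To.
        return detected - self.setting

    def switch_on(self, detected: float) -> bool:
        # Step 4: in cooling mode the unit is On only while e > 0.
        return self.difference(detected) > 0


def simulate(thermostat: Thermostat, room_temp: float,
             outside_temp: float, steps: int) -> list:
    """Hypothetical room model (an assumption, not taken from Figure 1)."""
    history = []
    for _ in range(steps):
        # Step 2: the Sensor reading is simply the current room temperature T.
        on = thermostat.switch_on(room_temp)
        # The environment pulls the room toward the outside temperature.
        room_temp += 0.1 * (outside_temp - room_temp)
        if on:
            # The air conditioner removes heat only while switched On.
            room_temp -= 1.0
        history.append((round(room_temp, 2), on))
    return history


if __name__ == "__main__":
    for temp, on in simulate(Thermostat(setting=24.0), 30.0, 32.0, 25):
        print(f"T = {temp:5.2f}  air conditioner {'On' if on else 'Off'}")
```

With a setting of To = 24, a room starting at 30, and an outside temperature of 32, the simulated room temperature falls until e changes sign and then hovers near To as the switch alternates between On and Off. The air conditioner affects the Sensor only indirectly, through the room temperature, which mirrors the semi-closed-loop character of the circuit described earlier.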

In summary, all living beings engage in a process of info-autopoiesis, i.e., in a process of self-production of information, which includes the capacity of information production by all artifacts that incorporate the designed means for such production.

M: Your example of a thermostat is a good illustration of a possible interpretation of Bateson’s statement. However, what system creates information e? Is it a human being or the thermostat?

Indeed, the human being only introduces To, while the thermostat constructs the difference/error e = T − To, and you interpret this difference/error as information.

However, this example shows that even such a simple system as a thermostat creates information. Besides, when you stated before that “matter in-forms humans by reacting to the efforts of humans,” it implied that matter created information. So, how to balance this with your previous assertion that only living beings create information?

J: Human beings in the process of interacting with their environment conceive, design, and create devices such as the thermostat. Implicit in the conception, design, and creation of these devices is the inclusion of information in the devices by human beings. Furthermore, the implication is that if the devices serve a specific purpose for humans, the devices are designed so as to process specific information for humans and then to display that information so that humans can take advantage of it. So, your appraisal is correct: the thermostat does create information that a human can interpret and use, but at the behest of humans.

This is the same as when I stated that “matter in-forms humans by reacting to the efforts of humans.” Consider any mundane activity that engages a human being with matter, such as the cutting down of a tree. With each cut of the tree, the wood is acted on and transformed. This transformation, due to human actions, allows the human the opportunity to assess her work and decide what to do next. The in-forming of matter by the human has a purpose, which the human continuously assesses. In each and every case, it is the human that initiates action on matter, i.e., in-forms matter, with a specific purpose. Of course, matter, as a reactive object, yields a response that the human uses to gain information about the behavior, mechanical or otherwise, of the matter at hand for present or future use. This is at the heart of info-autopoietic behavior by humans, i.e., humans self-produce information from their interactions with the environment. So, it is not matter that creates information; rather, it is matter, as a function of the actions and needs of humans, that reflects desired information by humans. The thermostat is also an example of this, since it processes and produces information at the behest of humans. There is no example of information that does not reflect this dynamic: the process of info-autopoiesis is at the heart of information creation.

M: So, you agree that matter, or in other words, any material thing, can inform.

J: I agree that any material thing can inform, but only as a result of prior human action. This would imply that there is no information in matter before living beings engage with such matter. Info-autopoiesis is the key to understanding this connection.

This brings us to your second question of whether all living beings create information or only some of them.

My opinion is that all living beings, from the simplest unicellular organism to the most complex multicellular organism have the capacity for info-autopoiesis, or the process of self-production of information.

M: I can completely agree with you that all living beings create information.

J: Now, I will answer your third question of whether all information is created by living beings or only a part of it.

As I mentioned before, all living beings have the capacity for info-autopoiesis, or the process of self-production of information, but this is not all the information that exists in the infosphere. As argued before, human beings are capable of designing, building, and putting into operation systems that are fully capable of generating information, e.g., Information and Communication Technologies (ICTs).

Consequently, the conclusion is: “not only living beings create information but there are other systems that create information”. These systems are conceived, designed, and built at the behest of living beings, for the benefit of living beings, as part of the process of info-autopoiesis. The process of info-autopoiesis is part and parcel of this creative process of conceiving, designing, and building systems that have the capacity for creating information.

M: Thus, you come to the conclusion that not only living beings but many other systems create information. I would like to add that according to the general theory of information, any system contains information and any active system creates information [11].

J: The only caveat that I would add to the general theory of information is that only living systems or systems created by living beings engage in a process of info-autopoiesis or of creating information.

Now, I will answer your fourth question of whether people only create information or they also receive information.

People in the process of information creation engage with the design, construction, and functioning of Information and Communication Technologies (ICTs). These ICTs allow messages to be composed by humans or machines, coded, and optimally transmitted as communication signals that are received, denoised, decoded, and interpreted by humans or machines. So, the conclusion is: “living beings do not only create information but also receive information from other systems”.

M: Thus, you come to the conclusion that living beings do not only create information but also receive information from other systems. If we try to use Bateson’s definition, we have to say that people receive differences. We intuitively know and information theory explains what it means to receive information, but what does it mean to receive differences?

J: In the illustrative thermostat example above, we have said that the notions of difference, error, and information can be equivalent in some situations. In the information theory of the communication process, a syntactic process, what is transmitted are a series of 0s and 1s. These 0s and 1s are certainly differences and can be characterized as syntactic information. So, in any communication process, such as a telegraph or a digital telephone, syntactic information in the guise of differences allows messages to be composed by humans/machines, coded, and optimally transmitted as communication signals, which are received, denoised, decoded, and interpreted by humans/machines.

On a more fundamental basis, humans have access to their environment primarily by way of their five major senses: sight, hearing, touch, smell, and taste. Concentrating on our sense of hearing, we find that it comes about as a result of air pressure variations (differences) that impinge on our eardrums and that we learn to interpret in an info-autopoietic process. Similar arguments can be made concerning the other senses. However, what is most noteworthy is that if humans are sensorially deprived, it is tantamount to torture. Sometimes, after a short period of time, individuals feel unease and even experience hallucinations [43]. What this might show is that our senses expect differences in order to function effectively. Of course, this is part of the process of interactivity with the environment, and it exists in the normal course of living and becoming who we are.

M: I can agree that variations can be treated as differences but it is strange to understand symbols such as zeroes and ones as differences.

J: As you know, Claude Shannon created the possibility of digital communication, which involves the use of syntactic information represented by a series of zeros and ones. Syntactic information is nothing more than the coding of language, images, sound, etc., which humans can recognize, in terms of 0 and 1 (binary digits) that allow messages to be composed by humans or machines, coded, and optimally transmitted as communication signals that are received, denoised, decoded, and interpreted by humans or machines. So indeed, the symbols 0 and 1 express a difference, and it is this difference that allows these digital communication processes to take place.
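
To make the point about 0s and 1s concrete, here is a small Python sketch. It is only an illustration under stated assumptions: the helper names `to_bits`, `from_bits`, and `differing_positions` are hypothetical, and the 8-bit UTF-8 coding is one arbitrary choice of code, not something prescribed by Shannon’s theory or by the discussion above.

```python
def to_bits(message: str) -> str:
    """Code a text message as binary digits (8 bits per UTF-8 byte)."""
    return "".join(f"{byte:08b}" for byte in message.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Decode a string of binary digits back into text."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


def differing_positions(bits_a: str, bits_b: str) -> list:
    """Return the positions at which two equally long bit strings differ."""
    return [i for i, (a, b) in enumerate(zip(bits_a, bits_b)) if a != b]


if __name__ == "__main__":
    coded = to_bits("difference")
    print(coded)             # the message as a series of 0s and 1s
    print(from_bits(coded))  # the receiver recovers the original text
    print(differing_positions(to_bits("A"), to_bits("B")))
```

Under this coding, the letters A and B map to 01000001 and 01000010, which differ only in their last two positions; it is the difference between the two bit strings that lets a receiver tell the messages apart.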

Now, I will answer your fifth question as to whether people create or produce information from nothing or from something else.

The process of information creation or production clearly needs to be specified.

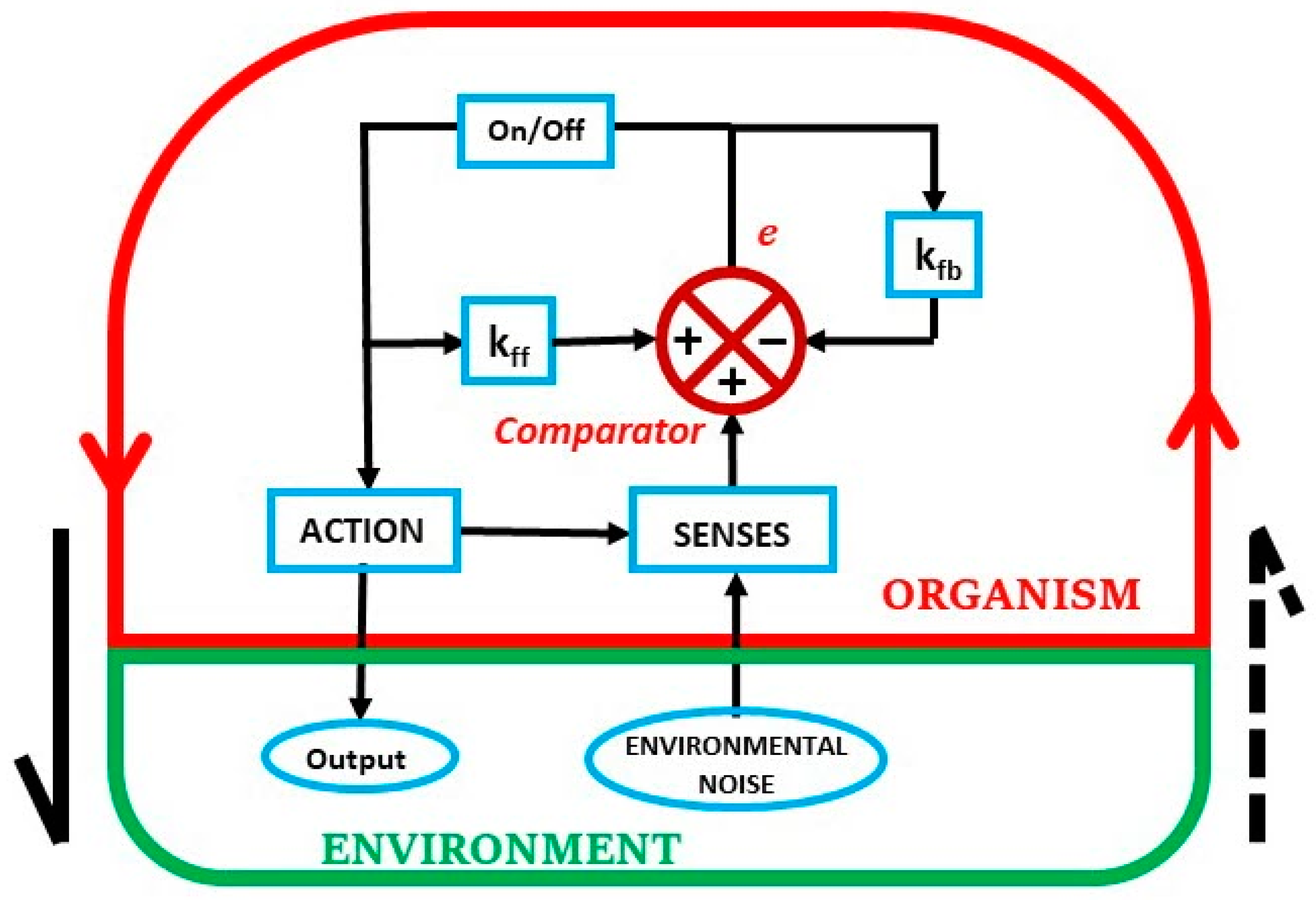

An organism may be considered, for the most part, as a collection of reflex-actions, i.e., involuntary and nearly instantaneous movements in response to a stimulus. An important assumption here is that reflex-actions are phylogenetic behavior. For example, a reflex-action such as blinking in humans ontogenically is made possible by neural pathways called reflex arcs, which can act on an impulse before that impulse reaches the brain, implying a response to a stimulus that phylogenetically relies on anticipatory ontogenic-derived behavior. If such reflex-actions did not exist, the human organism would not operate as intended. That is, phylogenetic behavior can be considered the first layer of homeorhesis.

Figure 2 shows a feedback simulation of the organism-in-its-environment (O/E) depicting cyclic self-referenced reflex-action operations to keep homeorhetic trajectories. This figure is drawn with elements similar to those of Figure 1, so as to make possible an eventual comparison of their functioning. This depiction seeks to follow that of Maturana and Varela relating to autopoietic unity [21]. Note that the ORGANISM enclosing line has two arrows on opposite sides denoting a counterclockwise sense. This symbolizes the metabolism of the organism. Additionally, there are two arrows going in opposite directions, to the sides of the organism, to denote the structural coupling of the organism with its environment. The difference in the arrows (one is dashed) denotes the asymmetric relationship between the organism and the environment. Some elementary actions of organisms are phylogenetic reflex-actions, which generally have something to do with keeping our internal milieu within homeorhetic bounds. It is common that feedback control mechanisms can be ascribed to these phylogenetic reflex-actions, although each type of reflex-action obeys its own non-mechanistic homeorhetic requirements. What we would like to elucidate is how Bateson information, a difference which makes a difference, may be used to explain how these homeorhetic processes can occur, although not surrogated, in feedback simulations and compare its functioning to the previously presented one-parameter semi-closed-loop cybernetic feedback homeostatic mechanism.

Referring to Figure 2, consider the beginning of the O/E cyclic interactions as the detection of environmental noise (perturbation) by the senses of the organism. This is the only window that the O/E has to access the environment. Environmental noise is particular to each individual O/E, since each individual O/E has a particular set of senses that are attuned to its phylogenetic and ontogenetic development within a specified environment. The primary motivation of the O/E in sensing the noisy environment that may resemble white noise, particular to the O/E, is to maintain its individuation and homeorhetic trajectories in epigenetic landscapes due to dynamic openness [44,45]. For example, the O/E needs to satisfy its energy needs and is tuned to particular cues in the white noise that lead it to satisfy them. This is true of all our senses that permit these cues to synchronize to recognize environmental invariance. In time, the organism is particular only to these cues. This is akin to being able to talk with another person in a noisy room.

The portrayal in Figure 2 defines the fundamental relationship of the O/E as it exists embedded in its environment. There are two essential connections with the environment. The first, shown directly connected to the one-parameter feedback loop, is the single sense element that is the intermediary between the external environment and the internal milieu of the organism. This single sense element represents a microcosm of reality, since a typical human organism is composed of millions of these sense elements that define each particular sense organ in the human body. The second connection is the capacity of the O/E to physically impact the environment, either directly or by other means, including our body, tools, and machines in the case of humans. It is represented by an ACTION that results in an Output to the environment. It must be recognized at the outset that these two essential connections define an asymmetrical relationship between the organism and its environment, i.e., the impact that the organism has on the environment is not a mirror reflection of the impact of the environment on the organism. The intent in what follows is to concentrate on the sensorial side of this dichotomy, as the single sensor element is the only means that an O/E has to ascertain the reality of the external environment to successfully engage it.

Each sense element has a transduction role; it changes the physical (touch, sound, light) or chemical signals (smell, taste) to a corresponding electrical signal or action potential (AP). It is this AP that needs to be interpreted by the human organism, irrespective of origin, either locally or centrally, to generate information, a difference which makes a difference. In a similar way as with the thermostat, a Comparator is used to show how information is created using this single sensor element to begin the cyclic process of self-referenced information. Info-autopoiesis is the self-generation of information of the organism, in structural coupling, as a corollary of its (M, R)-autopoiesis [46].

This process is akin to the Principle of Undifferentiated Encoding, which is described in the following way:

“The response of a nerve cell does not encode the physical nature of the agents that caused its response. Encoded is only “how much” at this point on my body, but not “what” [47].”

However, the AP needs to be interpreted, which involves info-autopoiesis, or the information that results from the process of info-autopoiesis. As a result, I suggest that the Principle of Undifferentiated Encoding may be alternatively defined in terms of info-autopoiesis. I argue that because of the specificity of the sensors, info-autopoiesis does imply something not just about the “how much” but about the “why”, “what”, “when”, and “where” aspects of cognition. There is greater specificity in its realization. Examining Figure 2 shows that the Comparator has a feedback circuit that incorporates a quantity $K_b$ and a feedforward circuit with quantity $K_f$ to modify the error $e$. The feedback signal independently modifies the incoming sensory AP by subtracting a factor $K_b e$, while the feedforward signal independently modifies the same sensory AP by adding a factor $K_f e$, if and when $e$ is able to overcome the trigger level of the On–Off trigger switch. We note that the feedback circuit represented in Figure 2 is neither a closed-loop cybernetic feedback circuit nor a semi-closed-loop cybernetic feedback circuit. The info-autopoiesis circuit is independent of the resulting actions that stem from its instantiation.

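An equation that can be obtained from looking at the Comparator, where $e$ is the error, AP is the Action Potential, and $K_b$ and $K_f$ are the feedback and feedforward quantities, yields

$$e = AP - K_b e + K_f e,$$

leading to,

$$e + K_b e - K_f e = AP,$$

which after factoring, we obtain,

$$e \left(1 + K_b - K_f\right) = AP,$$

yielding a relationship between input and output given by,

$$\frac{e}{AP} = \frac{1}{1 + K_b - K_f}.$$

Each of the quantities $K_b$ and $K_f$ may be regarded as functions of difference/error/information, $e$, of time, of historical and other factors particular to the organism/organ under consideration.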

The relationship between input and output is capable of many fluctuations, allowing this basic first-order feedback system to be capable of accommodating multifaceted behavior. For example, with this type of approach, reflex-actions and actions requiring a longer fuse may be accommodated. Note also that the feedback quantity $K_b$ and the feedforward quantity $K_f$ do not have to exist simultaneously, or they may be even triggered by differing phenomena. In addition, all settings related to how $K_b$ and $K_f$ come about are internal to the organism, which does not exclude external influences by way of the environment influencing their behavior.
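The input-output relationship can be checked numerically. The following is a minimal Python sketch, assuming the Comparator balance reads e = AP - kb·e + kf·e. The names `kb` and `kf` (feedback and feedforward quantities) and the helpers `steady_state_ratio` and `balance_residual` are hypothetical, introduced here only for illustration.

```python
def steady_state_ratio(kb: float, kf: float = 0.0) -> float:
    """Return e/AP for the assumed balance e = AP - kb*e + kf*e."""
    denominator = 1.0 + kb - kf
    if denominator == 0.0:
        raise ValueError("1 + kb - kf must be non-zero")
    return 1.0 / denominator


def balance_residual(ap: float, kb: float, kf: float = 0.0) -> float:
    """Residual of e = AP - kb*e + kf*e at the closed-form solution (about 0)."""
    e = ap * steady_state_ratio(kb, kf)
    return e - (ap - kb * e + kf * e)


if __name__ == "__main__":
    # Feedback only (kf = 0), for a few illustrative feedback values.
    for kb in (0.1, 0.5, 1.0):
        print(f"kb={kb:.1f}, kf=0.0 -> e/AP = {steady_state_ratio(kb):.4f}")
```

Because `kb` and `kf` are plain arguments, they could just as well be computed from time or from the organism's history before being passed in, which is one way to read the remark that these quantities may themselves be functions.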

For the moment, consider only that the comparator has a feedback circuit that incorporates a constant $K_b$ to modify the difference/error/information, $e$, that is generated as the result of the action of the Comparator on the incoming sensory AP and the feedback signal $K_b e$, that is, we assume that the feedforward quantity is zero, $K_f = 0$. The case for $K_f \neq 0$ is not further considered here, because the intent is to compare the one-parameter feedback loop to that of a mechanism. In general, the effect of the feedforward term $K_f e$ is to either enhance or conserve $e$, acting similar to a memory function. We further note that in this homeorhetic example the error $e$ is not a term that acts as a cybernetic correction factor, since we are not dealing with either a closed-loop cybernetic feedback circuit or with a semi-closed-loop cybernetic feedback circuit. Rather, it reflects a self-referenced comparison of the sensor element. It reflects what the homeorhetic organism identifies as information in the environment.

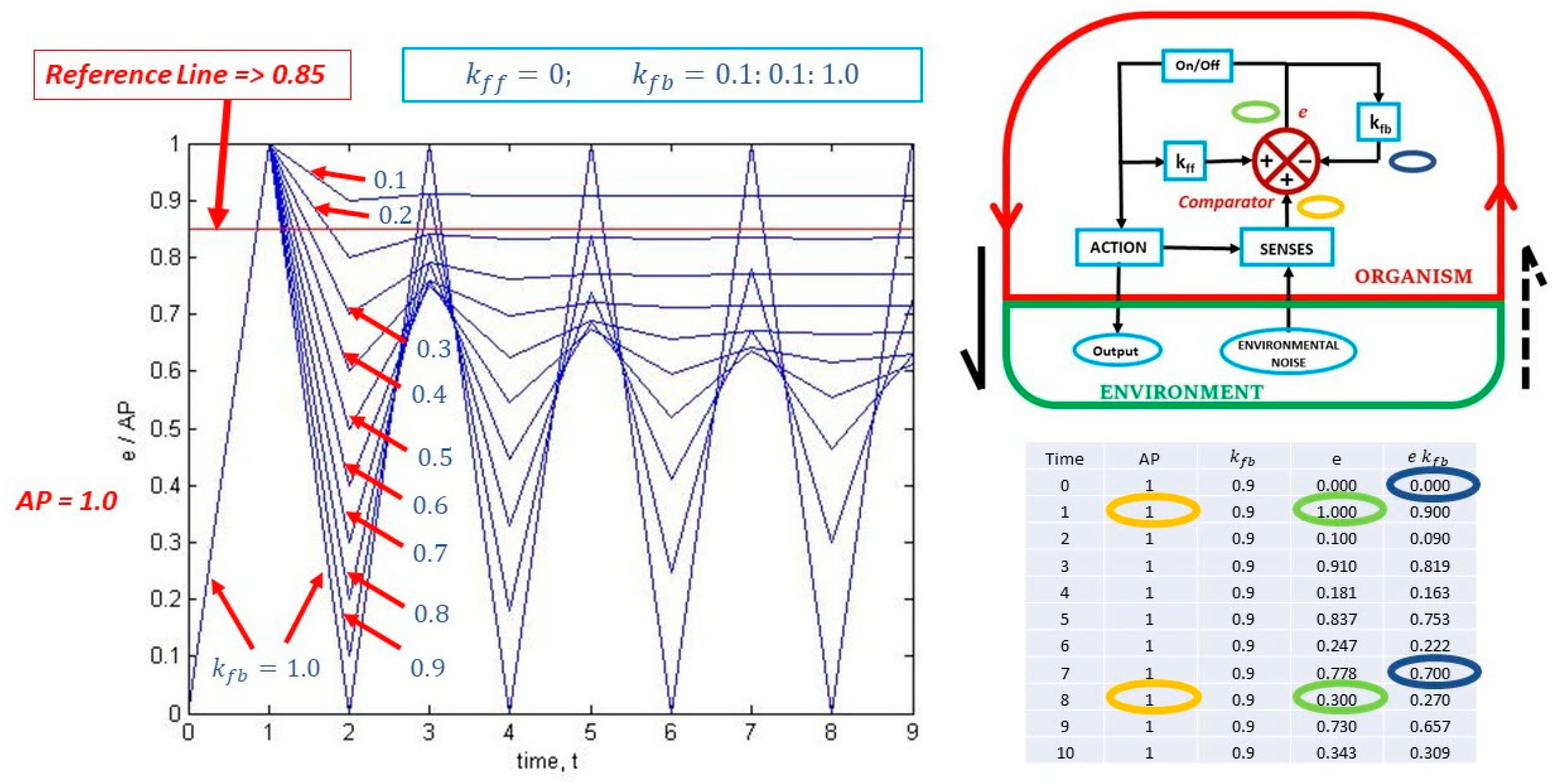

Figure 3 is a plot of the output over the input, i.e., $e/AP$. In this particular case, a constant value of AP = 1 is used, and the feedback quantity $K_b$ varies from 0.1 to 1.0 in steps of 0.1, resulting in 10 curves generated at 10 time-steps. Note that the color-coding in the two small images on the right side is used to show how the calculation of $e/AP$ is performed. The curves in the graph show the versatility of the reflex-action depending on the value of $K_b$. The curve for a value of $K_b = 1.0$ envelops all the other curves as it oscillates between the values of 0 and 1 over time, implying continuous triggering of the reflex-action. All successive curves show an oscillatory reduction over time. For example, the curve for $K_b = 0.1$ after reaching a peak of 1.0 at time interval 1 has a tendency to be stable around a value of 0.9 after a time interval of 2.
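The curve behavior described for Figure 3 is consistent with iterating a simple update rule. The sketch below assumes, purely as an illustration, the discrete-time rule e[t+1] = AP - kb·e[t] starting from e = 0. This rule, the starting value, and the names `simulate_error` and `kb` (the feedback quantity) are assumptions and are not stated in the text.

```python
def simulate_error(ap: float, kb: float, steps: int = 10) -> list[float]:
    """Iterate the assumed update e[t+1] = ap - kb * e[t], starting from e = 0."""
    e = 0.0
    history = []
    for _ in range(steps):
        e = ap - kb * e  # subtract the feedback term from the incoming AP
        history.append(e)
    return history


if __name__ == "__main__":
    # Ten feedback values from 0.1 to 1.0 with a constant AP = 1.
    for tenths in range(1, 11):
        kb = tenths / 10
        curve = simulate_error(ap=1.0, kb=kb)
        print(f"kb={kb:.1f}: " + " ".join(f"{value:.3f}" for value in curve))
```

Under these assumptions, `kb = 1.0` alternates between 1 and 0, while `kb = 0.1` peaks at 1.0 on the first step and then stays close to 0.9, approaching 1/(1 + kb).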

Referring again to Figure 2 (inset in Figure 3), note that an On/Off trigger switch is present. This trigger switch will remain On for difference/error/information, $e$, values above a certain reference value, but it will remain Off below that same reference value. Looking at Figure 3, if the trigger reference value is set to a particular, arbitrarily chosen value, the reflex-action will trigger once for all values of the feedback quantity $K_b$, but it will trigger four additional times for the value $K_b = 1.0$, one additional time for a value of $K_b = 0.9$, and it will remain triggered continuously for a value of $K_b = 0.1$. Note that this reference value, as well as the value of $K_b$, are both fully defined by the organism in question, depending on many factors, including those mentioned above.
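The On/Off trigger behavior can be sketched in the same spirit. The example below again assumes the update rule e[t+1] = AP - kb·e[t] (with `kb` standing for the feedback quantity), and it uses a hypothetical trigger reference value of 0.85. Both the rule and the threshold are illustrative assumptions rather than values taken from the text.

```python
def simulate_error(ap: float, kb: float, steps: int = 10) -> list[float]:
    """Iterate the assumed update e[t+1] = ap - kb * e[t], starting from e = 0."""
    e = 0.0
    history = []
    for _ in range(steps):
        e = ap - kb * e
        history.append(e)
    return history


def count_triggers(curve: list[float], threshold: float) -> int:
    """Count Off-to-On transitions of a switch that is On while e > threshold."""
    count = 0
    was_on = False
    for e in curve:
        is_on = e > threshold
        if is_on and not was_on:
            count += 1
        was_on = is_on
    return count


if __name__ == "__main__":
    THRESHOLD = 0.85  # hypothetical trigger reference value
    for kb in (1.0, 0.9, 0.1):
        curve = simulate_error(ap=1.0, kb=kb)
        print(f"kb={kb:.1f}: {count_triggers(curve, THRESHOLD)} On event(s)")
```

With the assumed threshold of 0.85 and ten steps, this sketch counts five On events for `kb = 1.0`, two for `kb = 0.9`, and one for `kb = 0.1`, whose curve never drops below the threshold after switching On.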

To summarize, the above description gives the context of info-autopoiesis as it relates to phylogenetically derived reflex-actions in the context of the definition of Bateson information: a difference which makes a difference. This definition of information implies a quantitative portion (a difference) and a qualitative portion (a difference which makes a difference) [48]. The quantitative portion is the difference/error/information $e$ that is evaluated by the Comparator and that results in the movement of an organism appendage due to the reflex-action; the qualitative portion is the homeorhetic dynamic of the organism. For example, if the feeling of hunger by an individual recurs, the act of eating restores the homeorhetic dynamic as the organism goes about its cognitive business.

In short, here is a four-step conceptualization of how this feedback simulation of the organism consisting of a one-parameter Sensor and Comparator works:

(1) A comparator is set to a reference parameter, which is defined by the organism;

(2) The sensor (one of the main senses) distinguishes the value of the detected parameter;

(3) The comparator obtains the difference/error between the detected parameter and the reference parameter;

(4) The detected difference/error is the information needed to either allow or not allow the actuation of a reflex-action.
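The four steps above can be sketched as a small Python example. The class name `Comparator`, the function `reflex_allowed`, the sign convention (detected minus reference), and the numeric values are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Comparator:
    # Step 1: the comparator is set to a reference parameter.
    reference: float

    def difference(self, detected: float) -> float:
        # Step 3: difference/error between detected and reference parameter
        # (assumed sign convention: detected minus reference).
        return detected - self.reference


def reflex_allowed(comparator: Comparator, detected: float,
                   trigger_level: float = 0.0) -> bool:
    # Step 4: the difference is the information that allows, or does not
    # allow, the actuation of the reflex-action.
    return comparator.difference(detected) > trigger_level


if __name__ == "__main__":
    comparator = Comparator(reference=0.5)
    for detected in (0.4, 0.8):  # Step 2: values distinguished by the sensor
        print(detected, reflex_allowed(comparator, detected))
```

Keeping the reference inside the comparator and the trigger level as a separate argument mirrors the distinction between the reference parameter and the On/Off switch, though other arrangements would serve equally well.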

M: You described an interesting example of information production, but it only shows that information is created/produced from something that already exists and functions.

J: This example is related to how a living being functions in the realm of info-autopoiesis or the self-production of information. And you are right, this is “an interesting example of information production but it only shows that information is created/produced from something that already exists and functions”. In this case, that ‘something’ that already functions and exists is a living being. Furthermore, that ‘something’ is the only thing that can create information either directly or indirectly. This is the significance of info-autopoiesis.

Now, I will answer your sixth question of whether only living beings contain/store information or if other natural and/or artificial systems can also contain/store information. Additionally, what does it mean to store difference?

From the above description, not only living beings contain/store information. All types of artifacts, as well as all systems related to Information and Communication Technologies (ICTs), contain/store information. In other words, information is contained/stored in all creations by living beings. Information does not exist in Nature except as a result of the action of all living beings in information creation or info-autopoiesis. If living beings did not exist, there would be no information. Or, simplifying, life is information, and information is life. This also implies that there is no intrinsic information, either in the genome or, for that matter, in our environment.

This is in opposition to many information researchers, beginning with Norbert Wiener, who stated that: “Information is information, not matter or energy. No materialism which does not admit this can survive at the present day” [26]. While defining information, in this instance, in terms of itself, Wiener does go on to develop a definition of information [26] that parallels that of Shannon. Further, Wiener’s statement implies that information is another fundamental quantity in nature, in addition to matter and energy. However, information is not a fundamental quantity of the universe. Organisms either sense moving matter/energy or shift position/perspective: in a sense creating motion. So, information is a product of the continuous perception of dynamic sensory maps by living beings if you use Bateson’s difference which makes a difference. Yet Wiener’s claim has remained unquestioned by many, including physicists, and his affirmation of the fundamental nature of information is taken as gospel. This poses an impossible quandary for materialism and motivates the persistence of information as an independent and/or quantitative/objective entity. An interesting question that might be posed to explore these ideas further is: How to explain geological, cosmological, and even biological scientific discoveries as fruits of info-autopoiesis, rather than as the result of taking advantage of intrinsic information in Nature?

Another approach that deals with the existence of information in natural systems, purportedly to deal with questions such as the one posed above, is that proposed by Kun Wu that states:

“Because of the universality of material interactions, and also because there is no beginning of matter and time, no material system in the universe can have remained in its original initial state with no interaction with other material systems. In the term I have coined to describe this state of affairs, any object is a self-evolved “informosome” that has condensed all kinds of information about past, present and future structures and states. Since it is the material nature of an informosome that the properties of any object constitute a unity of direct and indirect existence, this unity also has the triple property of being the source of information, the carrier of information and the information itself at the same time. As a result, we can see the scope of informational existence: Information and matter coexist. Materialists should thus accept that the term information refers to something that can be completely summed up as material but with its own unique mode and status of existence. Due to internal and external objective interactions, objects constantly radiate and reflect quanta to the external world and in this process send information about the mode and state of their own existence depending on the nature of those quanta and their distribution. This shows that the informational ground that produces and reflects the mode and state of physical existence is in the material world itself, in its own physical movement. It is this physical property, showed by it, that makes this world a knowable world.” [

49].

If I understand Wu correctly, he is postulating the existence of information as co-extensive with the existence of matter and energy, which is a similar perspective to that of Wiener. These are perspectives that ignore the central role of human beings in the process of information. This is clearly in direct opposition to my interpretation of what Bateson proposes.

As to what it means to store difference, this goes back to the equivalence made before among difference/error/information. To store information in any media, what you are really storing is differences: writing on a page shows differences; the pits on a compact disc are also differences that are recorded and then interpreted by a machine, as are all other instances of recorded information in media. This could even be applied to neuronal imprints in our brain that reflect changes in neuronal circuits, apart from the electrical pathways that are enacted due to use and reuse.

M: Thus, you want to say that when Wiener and many other researchers assert objective existence of information, it contradicts your interpretation of Bateson’s “definition” or in other words, you claim that information does not exist in an objective form.

J: Physicists seek to interpret the world in objective terms. What Bateson’s “definition” achieves is an interpretation of the world that is subjective and objective at the same time. It is syntactic and semantic at the same time. The question to ask someone who believes in only an objective perspective of the world is: how does a subjective being such as you achieve objectivity? However, the point that is really at the heart of information is whether or not information predates the existence of living beings: whether it is inherent to nature since the origin of the universe. Bateson’s position is that information only exists since the time when life originated in the universe. In addition, a postulate that information is inherent to the universe does not prove its validity. Bateson’s argument relies on the existence of the equivalence of matter and/or energy. Living beings are able to detect moving matter and/or energy; therefore, they detect spatial/temporal differences, and this helps them satisfy their physiological and/or social needs. So, it is a question of what you can postulate as primary: matter and/or energy, or matter and/or energy and information. The primacy of information is a postulate that needs to be proven: not so for matter and/or energy.

M: Actually, living beings are also able to detect information in many systems and processes.

J: A more accurate statement would be that living beings use their sensory organs to detect the motion of matter/energy that through a process of info-autopoiesis becomes information. Living beings do not have the sensory organs for the direct detection of information.

Now, I will answer your seventh question about the meaning of information transmission, as well as what it means to transmit difference.

My understanding of ‘information transmission’ is that after the process of info-autopoiesis by living beings, information has the capacity to be transmitted into the surrounding environment. Since the surrounding environment includes other living beings, sharing it with these other living beings would be part of information transmission. Let us narrow the scope to human beings, since this is the sphere that most represents what might be true of other living beings. As generally specified above, we transfer information to our environment in two ways: by in-forming matter by our physical interactions (as illustrated by Bateson above), as well as by the act of communicating with another person. The levels of sophistication achieved by humankind in these two modes of ‘information transmission’ are impressive when considering the multifarious related technological manifestations. As before, I want to emphasize that difference/error/information are equivalent expressions. Therefore, in transmitting information, we are transmitting difference.

M: I would like to remind you that before, you stated that information can also be transmitted from inanimate matter to people. Besides, it is unclear why the idea of information as the essence produced only by living beings is implicitly contained in Bateson’s conception of information.

J: Bateson’s conception of information as ‘a difference which makes a difference’ contains two important aspects that make it amenable to both objective/syntactic and subjective/semantic treatment. The objective/syntactic aspect is given by ‘a difference’, which for any specific example can be characterized explicitly and quantitatively. The thermostat example clearly shows the objective/syntactic calculation that can be performed to obtain/calculate ‘a difference’. At the same time, it cannot be the result of a subjective/semantic judgment of a human being as to whether the setting is the right one, i.e., ‘a difference which makes a difference’. For example, it goes without mentioning that the thermostat is a reality just because it is designed and built for a specific purpose by a human being in her efforts to satisfy a perceived need that leads to a more comfortable life with less effort. So, a thermostat, an inanimate object, does transmit information to a living being, but only as a result of the design by that living being. The thermostat is a machine built for a specific purpose by a human being. In that role, it has an information function. Central to any concept of information are human beings, which is also the case for the development of science.

The idea of information as the essence produced only by living beings is implicitly contained in Bateson’s conception of information because only living beings can simultaneously generate an objective/syntactic and subjective/semantic judgment of their living. That is the essence of ‘a difference which makes a difference’.

M: However, we still have the question about the shortcomings of Bateson’s “definition” of information from the scientific point of view.

J: I don’t really understand why there is a question about any shortcomings of Bateson’s description of information from the scientific point of view. The various explanations above provide a sufficient scientific basis to Bateson’s musings. Bateson’s perspective of information has to be examined from an objective/syntactic and also a subjective/semantic perspective. Human beings when engaging in scientific pursuits cannot but start from a subjective perspective, i.e., the subjective drive that feeds their curiosity to postulate personal/subjective hypotheses that are tested, to aspire to achieve results that allow them to be scrutinized by the larger scientific community. If the results pass muster, then they are accepted as scientific truths, which in any case are generally superseded in time by new and more general findings. It has to be recognized that this never-ending process has subjective/objective elements. Indeed, the question that every scientist should be able to answer is: How is it that, as a subjective being, you are able to achieve the objective perspective that you so revere? As mentioned before, one interesting aspect to Bateson is that the subjective aspect is not far away, in a world where “true” scientists emphasize the objective aspect. This apparent contradiction is certainly something that needs to be taken seriously. How does one of these objective scientists become so, if they start as subjective beings? Does Bateson hold the promise of simultaneous subjectivity and objectivity?

This is why it is important for me to repeat the following quote that encapsulates how we can be in a better position to deal with information if we identify the Fundamental Problem of the Science of Information, which states:

“A key issue in reaching a unified definition of information is the fundamental problem of identifying how a human organism, in a self-referential process, develops from a state in which its knowledge of the human-organism-in-its-environment is almost non-existent to a state in which the human organism not only recognizes the existence of the environment but also sees itself as part of the human-organism-in-its-environment system. This allows a human organism not only to self-referentially engage with the environment and navigate through it, but also to transform it into its own image and likeness. In other words, the Fundamental Problem of the Science of Information concerns the phylogenetic development process, as well as the ontogenetic development process of Homo sapiens from a single cell to our current multicellular selves, all in a changing long-term and short-term environment, respectively [

48].”

This process has been at the center of our phylogenetic and ontogenetic development since the beginning of our existence. It is only since the middle of the last century that we can say that we are beginning to ask fundamental questions about our existence.

M: Thus, we can see that there are many problems with Bateson’s “definition” of information. Besides, there is no analysis of how Bateson’s “definition” is related to other definitions of information, for example, to Shannon’s entropy. At the same time, there is the general theory of information (GTI) where a comprehensive definition of information is given.

J: What has not been said in the above exposition related to Bateson information is that Claude Shannon’s perspective of information is subsumed by Bateson. The shortest argument is to recognize that Bateson’s difference which makes a difference is both syntactic and semantic in its approach. The syntactic approach by Shannon may be shown to be subsumed by Bateson simply because the binary combination (0, 1) is the difference that is used to develop a mathematical theory of communication. Bateson does not take anything away from Shannon’s syntactic approach, but he complements it by adding the semantic portion, which is still under development.

M: Yes, your explanation not only makes Bateson’s ideas clearer but also elaborates a more precise description of information based on these ideas.

J: I expect that this dialogue has provided me a platform from which to expound on the virtues and disadvantages of Bateson’s approach to information. Now, I would like to hear something about the general theory of information (GTI).

M: The general theory of information (GTI) is an innovative approach, which provides a powerful means for all areas of information studies. It rigorously represents static, dynamic, and functional aspects and features of information. These features are modeled and explored by algebraic, analytical, and topological structures of operators in functional spaces as well as functors in the categorical setting forming information algebras, calculi, and topological spaces. It is possible to get acquainted with the GTI in the book Theory of Information [11], but I will briefly explain this theory here.

The first thing that we need to know is that the GTI has three components: the axiomatic foundations, the mathematical core, and the functional hull.

J: Please, explain what the axiomatic foundations of the GTI are as well as what the mathematical core and functional hull mean.

M: At first, let us look at the axiomatic foundations of the GTI. They consist of the principles, postulates, and axioms of the GTI.

J: Interesting, usually postulates and axioms are used in logically formalized mathematical theories. Is the GTI a logically formalized mathematical theory?

M: The answer to your question is yes and no, because the GTI has three parts. One is an informal methodological system, another is an operational mathematical theory, and the third is a logically formalized mathematical theory. Thus, the GTI is a methodological system and a logically formalized mathematical theory at the same time.

The first part of the GTI is built on principles, which describe and explain the essence and main regularities of the information terrain. It is possible to find the most detailed exposition of this methodological system in the book [11].

The second part utilizes various mathematical structures such as categories, functors, epistemic spaces, named sets, operators, and transformations. It is possible to get acquainted with the operational mathematical theory of the GTI in the publications [12,13,14,18,19].

The third part uses postulates and axioms to deduce the properties of information and its functioning. Postulates are formalized representations of principles, while axioms describe mathematical and operational structures used in the general theory of information. This part has only been initiated and is still awaiting its full development.

J: Do you mean that to understand the GTI, we have to start from the principles?

M: You are right. So, let us look at the principles of the GTI. There are two groups of principles. Principles from the first group are called ontological principles. They explain the essence of information as a natural and artificial phenomenon as well as depict regularities of information functioning. Here is the list of these principles.

Ontological Principle O1 (the Locality Principle). It is necessary to separate information in general from information (or a portion of information) for a system R.

Ontological Principle O2 (the General Transformation Principle). In a broad sense, information for a system R is a capacity to cause or to prevent changes in the system R.

Ontological Principle O3 (the Embodiment Principle). For any portion of information I, there is always a carrier C of this portion of information for a system R.

Ontological Principle O4 (the Representability Principle). For any portion of information I, there is always a representation C of this portion of information for a system R.

Ontological Principle O5 (the Interaction Principle). A transaction/transition/transmission of information goes on only in some interaction of C with R.

Ontological Principle O6 (the Actuality Principle). A system R accepts a portion of information I only if the transaction/transition/transmission causes corresponding transformations in R.

Ontological Principle O7 (the Multiplicity Principle). One and the same carrier C can contain different portions of information for one and the same system R.

As you can see, these principles can be divided into three groups:

Substantial ontological principles [O1, O2, and its modifications O2g, O2a, O2c] define information.

Existential ontological principles [O3, O4, O7] describe how information exists in the physical world.

Dynamical ontological principles [O5, O6] show how information functions.

J: Indeed, these principles provide an organized structural view of how information functions. They allow developing a far-reaching theory of information. However, what about a definition of information?

M: Actually, Ontological Principle O2 gives a definition of information in a generalized sense providing possibilities to define a variety of different types and kinds of information. The most important of them are specified in the following modifications of Ontological Principle O2 [11,50]. They are based on the concept of an infological system. This concept is used as a variable parameter in the definition of information. To have this ability, an infological system is not formally defined in a general case but is specified for certain types of information. Examples of infological systems are:

A system of knowledge (thesaurus)

A system of knowledge, beliefs, ideas, convictions, and principles

A system of values, estimates and measures

A system of propositions

A system of theories

Ontological Principle O2g (the Relativized Transformation Principle). Information for a system R relative to the infological system IF(R) is a capacity to cause changes in the system IF(R) or to prevent such changes.

Ontological Principle O2a (the Special Transformation Principle). Information in the strict sense or proper information or, simply, information for a system R, is a capacity to change structural infological elements from an infological system IF(R) of the system R or to prevent such changes.

Ontological Principle O2c (the Cognitive Transformation Principle). Cognitive information for a system R, is a capacity to cause changes in the cognitive infological system IFC(R) of the system R or to prevent such changes.

The Ontological Principle O2 and its modifications make it possible to give a scientific meaning to the aforementioned statement of Bateson. Indeed, according to the Ontological Principle O2, information causes changes. Any change results in some difference between what was before the action and what is after it.

At the same time, taking into account the assertion of Wiener that information is neither energy nor matter, we can consider information as difference. Thus, we come to Bateson’s statement “information is difference that makes difference.”

However, this is only half of the coin because according to Ontological Principle O2, information also can prevent changes. This results in the absence of difference between what was before and after. Thus, we see even in its more exact and viable interpretation that Bateson’s statement does not reflect the concept of information.

It is necessary to remark that this interpretation is exactly what Bateson had in mind when he made his statement about the essence of information. The general theory of information only provides a scientific interpretation of Bateson’s idea. In a similar way, physics provided a scientific interpretation of the idea of atoms suggested by ancient Greek philosophers.

J: Now I can better see the connection between GTI and the approach of Bateson. However, I would venture to say that Bateson does not exclude differences of differences, which would allow not only a detection of trends, but even an absence of differences. Moreover, it is possible to envision the creation of a mathematical theory with its axioms, theorems, and lemmas based on the Ontological Principles.

M: I can agree with you that these principles can be easily converted to postulates and axioms, building a base for a mathematical theory. However, a vital tool of any scientific theory is measurement, which connects theory with reality. Thus, the general theory of information (GTI) needs more principles.

Principles from the second group are called axiological principles. They explain how to measure information, which measures of information are necessary, and how to build them.

Axiological Principle A1 (the Correspondence Principle). A measure of information I for a system R is some measure of changes caused or prevented by I in R (for information in the strict sense, in IF(R)).

This principle shows that what people call Shannon’s information is the measure of information elaborated by Claude Shannon.
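One way to read this remark, offered here only as a hedged sketch, is to model the relevant part of the system R as a probability distribution over hypotheses and to measure the change caused by a message as the drop in Shannon entropy. The distribution model and the function names `entropy` and `information_measure` are assumptions made for this example.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def information_measure(prior: Sequence[float], posterior: Sequence[float]) -> float:
    """Measure of the change caused in R: reduction of uncertainty in bits."""
    return entropy(prior) - entropy(posterior)


prior = [0.25, 0.25, 0.25, 0.25]   # R considers four hypotheses equally likely
posterior = [0.5, 0.5, 0.0, 0.0]   # a message rules out two of them
print(information_measure(prior, posterior))  # 1.0
```

Under this reading, the number of bits does not describe the information itself; it quantifies the change that the information produced in the system.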

Axiological Principle A2 (the Temporality Principle). According to time orientation, there are three types of measures of information: (1) potential or perspective; (2) existential or synchronic; and (3) actual or retrospective.

This principle reflects the importance of time in measuring information.

Axiological Principle A3 (the Spatiality Principle). According to spatial orientation, there are three types of measures of information: external, intermediate, and internal.

This principle reflects the orientation of changes caused or prevented by information.

Axiological Principle A4 (the Determinacy Principle). With respect to how the measure is determined and evaluated, there are three constructive types of measures of information: abstract, grounded, and experimental.

This principle reflects the application area of the measure.

Axiological Principle A5 (the Totality Principle). With respect to information relations, there are three constructive types of measures of information: absolute, fixed relative, and variable relative.

This principle reflects the relativity of measurement.

Axiological Principle A6 (the Scaling Principle). According to the scale of measurement, there are two groups, each of which contains three types of measures of information: (1) qualitative measures, which are divided into descriptive, operational, and representational measures, and (2) quantitative measures, which are divided into numerical, comparative, and splitting measures.

This principle reflects possible types of measurement scales.
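Principles A2 to A6 can be read as classification axes for measures of information. The sketch below records the types named in those principles as enumerations. Treating the five axes as independent, so that every combination is admissible, is an assumption of this example and not a claim of the GTI, and the class name `MeasureProfile` is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Temporal(Enum):        # Principle A2
    POTENTIAL = "potential"
    EXISTENTIAL = "existential"
    ACTUAL = "actual"


class Spatial(Enum):         # Principle A3
    EXTERNAL = "external"
    INTERMEDIATE = "intermediate"
    INTERNAL = "internal"


class Determinacy(Enum):     # Principle A4
    ABSTRACT = "abstract"
    GROUNDED = "grounded"
    EXPERIMENTAL = "experimental"


class Totality(Enum):        # Principle A5
    ABSOLUTE = "absolute"
    FIXED_RELATIVE = "fixed relative"
    VARIABLE_RELATIVE = "variable relative"


class Scale(Enum):           # Principle A6
    DESCRIPTIVE = "descriptive"
    OPERATIONAL = "operational"
    REPRESENTATIONAL = "representational"
    NUMERICAL = "numerical"
    COMPARATIVE = "comparative"
    SPLITTING = "splitting"

    @property
    def is_quantitative(self) -> bool:
        """True for the quantitative group of Principle A6."""
        return self in {Scale.NUMERICAL, Scale.COMPARATIVE, Scale.SPLITTING}


@dataclass(frozen=True)
class MeasureProfile:
    """Hypothetical record locating one measure on the five axes."""
    temporal: Temporal
    spatial: Spatial
    determinacy: Determinacy
    totality: Totality
    scale: Scale


# Assumption: the axes are independent, so all combinations are enumerated.
all_profiles = [
    MeasureProfile(*combo)
    for combo in product(Temporal, Spatial, Determinacy, Totality, Scale)
]
print(len(all_profiles))  # 486
```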

Axiological Principle A7 (the Communication Principle). The measure of information transmission from a carrier C to a system R reflects a relation (such as ratio or difference) between measures of information that is accepted by the system R in the process of transmission and information that is presented by C in the same process.

This principle reflects the acceptability properties of information transmission.
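Principle A7 names two possible relations, a ratio and a difference. A minimal sketch of both follows, assuming that the accepted and the presented information have already been measured on the same numerical scale (for example, in bits). The function names are hypothetical.

```python
def transmission_ratio(accepted: float, presented: float) -> float:
    """Share of the presented information that the system R accepted."""
    if presented <= 0:
        raise ValueError("presented measure must be positive")
    return accepted / presented


def transmission_loss(accepted: float, presented: float) -> float:
    """Amount of the presented information that the system R did not accept."""
    return presented - accepted


# The carrier C presents 2.0 units; the system R accepts 1.5 of them.
print(transmission_ratio(1.5, 2.0))  # 0.75
print(transmission_loss(1.5, 2.0))   # 0.5
```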

J: To make the picture complete, do you need to describe the mathematical core of the GTI?

M: The mathematical core of the GTI is the mathematical theory based on the principles of the GTI. Currently, there are three approaches to the construction of this theory: algebraic, functional, and categorical. Each of them provides efficient mathematical models. As a result, there are three types of models of information dynamics: information algebras, operator models based on functional analysis, and operator models based on category theory. Functional representations of information dynamics preserve internal structures of information spaces associated with infological systems as their state or phase spaces [11,13,19]. The categorical image of information dynamics displays external structures of information spaces associated with infological systems [12,14]. An algebraic portrayal of information dynamics maintains intermediate structures of information spaces [11]. These models allow researchers to discover intrinsic properties of information.

In addition to its own mathematical information theories, the mathematical core of the GTI includes other information theories, such as Shannon’s communication theory [42], quantum information theory [27], or the semantic information theory of Bar-Hillel and Carnap [51], as well as its special sub-theories.

J: Now only the third component of the GTI needs to be specified, i.e., the functional hull.

M: The functional hull of the GTI consists of methods, measures, and algorithms that allow obtaining properties of information used by people as well as developing more powerful and reliable information processing systems.

J: All that said, I would like to know whether your theory is only a beautiful abstract edifice far from people’s lives or whether it solves some theoretical and practical problems that other information theories were not able to solve.

M: The general theory of information solves several fundamental problems, which are urgent and may even be vital for people because we live in the information age, and information has come to the forefront for society and individuals in all countries and all walks of life. Although many information theories have been created, none of them has been able to solve those problems.

Here, we consider only three of those problems.

The first Problem is the elaboration of an exact and all-inclusive definition of information. Understanding the importance of information, many researchers suggested a variety of information definitions. However, each of them had its shortcomings and boundaries. Moreover, as information was present in a diversity of areas, many researchers started thinking that it would be impossible to elaborate a precise and consistent definition of information that would be suitable for all these areas. Some researchers even tried to prove this [34,36,37,38]. However, the general theory of information provides such a definition, which encompasses all areas. This was possible to achieve due to the invention of a new type of definition. Namely, the general theory of information gives a parametric definition of information. Only a parametric definition has been able to unite all existing kinds, types, forms, sorts, and classes of information into one comprehensive and constructive concept.

The second Problem is the unification of the theoretical knowledge about information. Many diverse theories of information have been elaborated, giving birth to the problem of creating a unified theory of information. The general theory of information is a unified theory of information because it has been demonstrated to comprise all existing theories of information, treating them as sub-theories of the general theory of information. At the same time, it is necessary to understand that the existence of the general theory of information does not exclude the necessity of special information theories, which can go deeper into specific aspects of information or into particular areas of information habitat.

The third Problem is finding the place of information in the world as a whole. The general theory of information also solves this problem in an innovative way based on thorough observations and detailed reasoning, which are described in other publications on this theory. Many researchers treated information as an enhanced kind of data or as a simplified kind of knowledge. This means they assumed that information has the same nature as data and knowledge. The GTI explains that information has an essentially different nature. It is possible to say that information is related to data and knowledge in the same way that energy is related to matter.

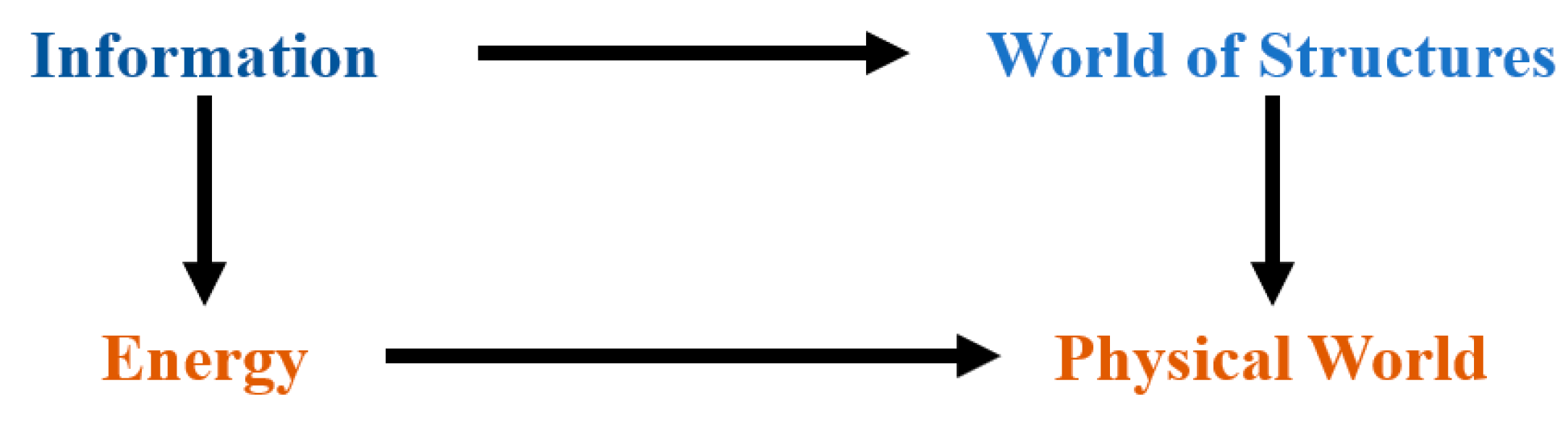

Since data and knowledge are types of structures, it is possible to identify the place of information in the world more exactly through displaying it in the following diagram.

As the world of structures is a scientific explication of Plato’s World of Ideas [52], we can say that information is energy in the Platonic World of Ideas.

J: How did you come to such innovative ideas about the essence of information?

M: The work on this theory started at the end of the 20th century when I understood that information is similar to energy while data and knowledge are similar to matter. This idea took me outside the box, in which data, information, and knowledge were treated as essences of the same kind. Having this idea in mind, I started a diligent exploration of the properties of information discovered by other researchers. Thorough analysis of the existing knowledge on information allowed me to formulate and then further develop the ontological and axiological principles of the general theory of information (GTI), building its axiomatic foundations.

J: Now that we have discussed our approaches to the definition of information and to the adequate construction of a validated theory of information, let us draw some conclusions.

M: Indeed, we discussed two different approaches to these problems and can conclude that these approaches do not contradict but rather complement one another. The general theory of information is a comprehensive unified approach to the realm of information, while the difference theory of information that you suggested is a special theory of information. These theories coexist in the world of structures in the same way as the special and general theories of relativity coexist in the physical world.