A Situation Assessment Method with an Improved Fuzzy Deep Neural Network for Multiple UAVs

Abstract

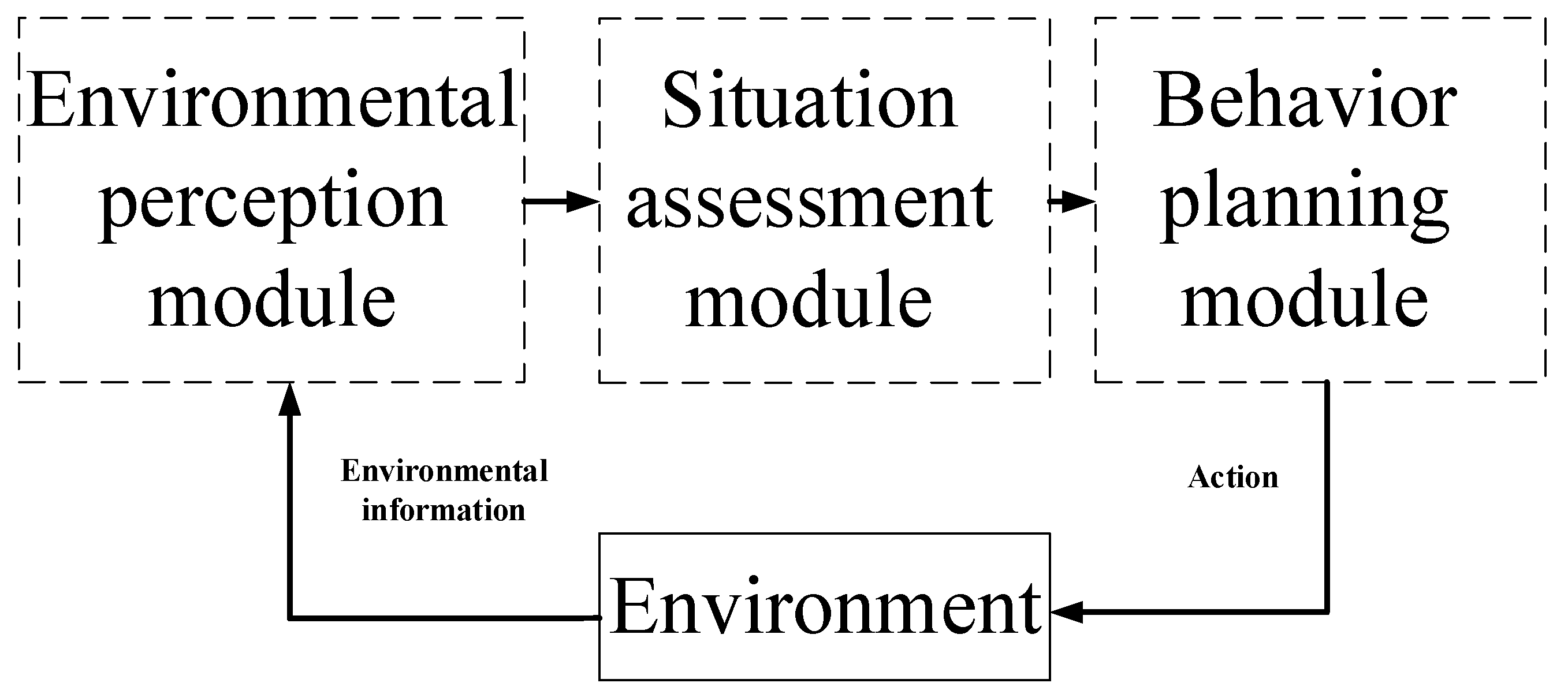

1. Introduction

2. Background

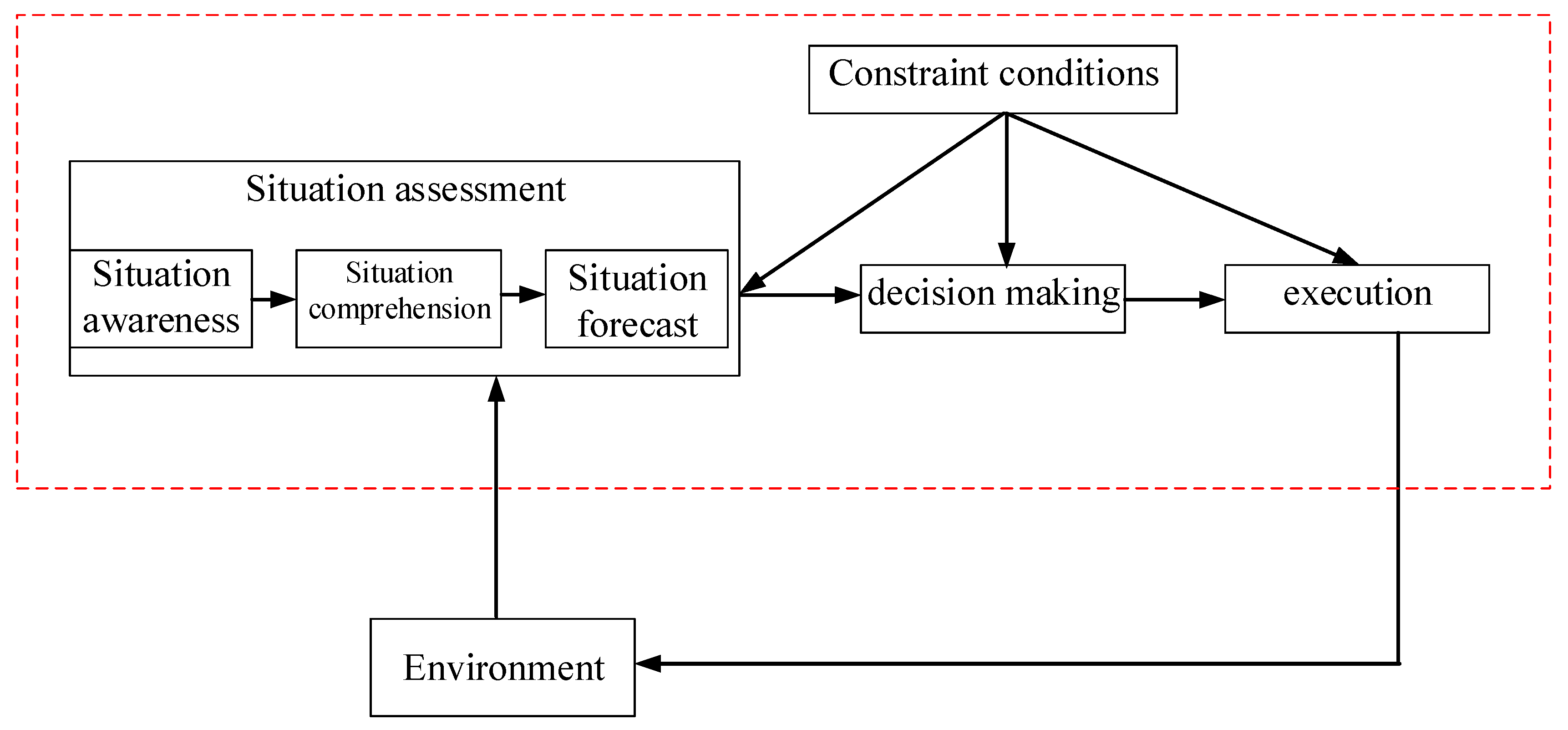

2.1. Situation Assessment

| Algorithm 1. The basic procedure for situation assessment |
|---|
| Step 1: Derive the knowledge base for situation assessment from expert experience in situation assessment; |
| Step 2: Obtain the complete knowledge map, i.e., the knowledge representation, by classifying and analyzing the domain knowledge base; |
| Step 3: Record real-time data from the actual scene with the knowledge acquisition module, and store the recorded information in the constructed knowledge base; |
| Step 4: Using the rule editor provided by the knowledge acquisition module, express the rule knowledge as rules the system can recognize (for example, in the data format given by the data acquisition module) and store them in the knowledge base; |
| Step 5: Answer the real-time feedback questions in the knowledge module using expert experience. Each answer is sent to the knowledge acquisition module, either directly or after processing, where it awaits a behavior decision. |

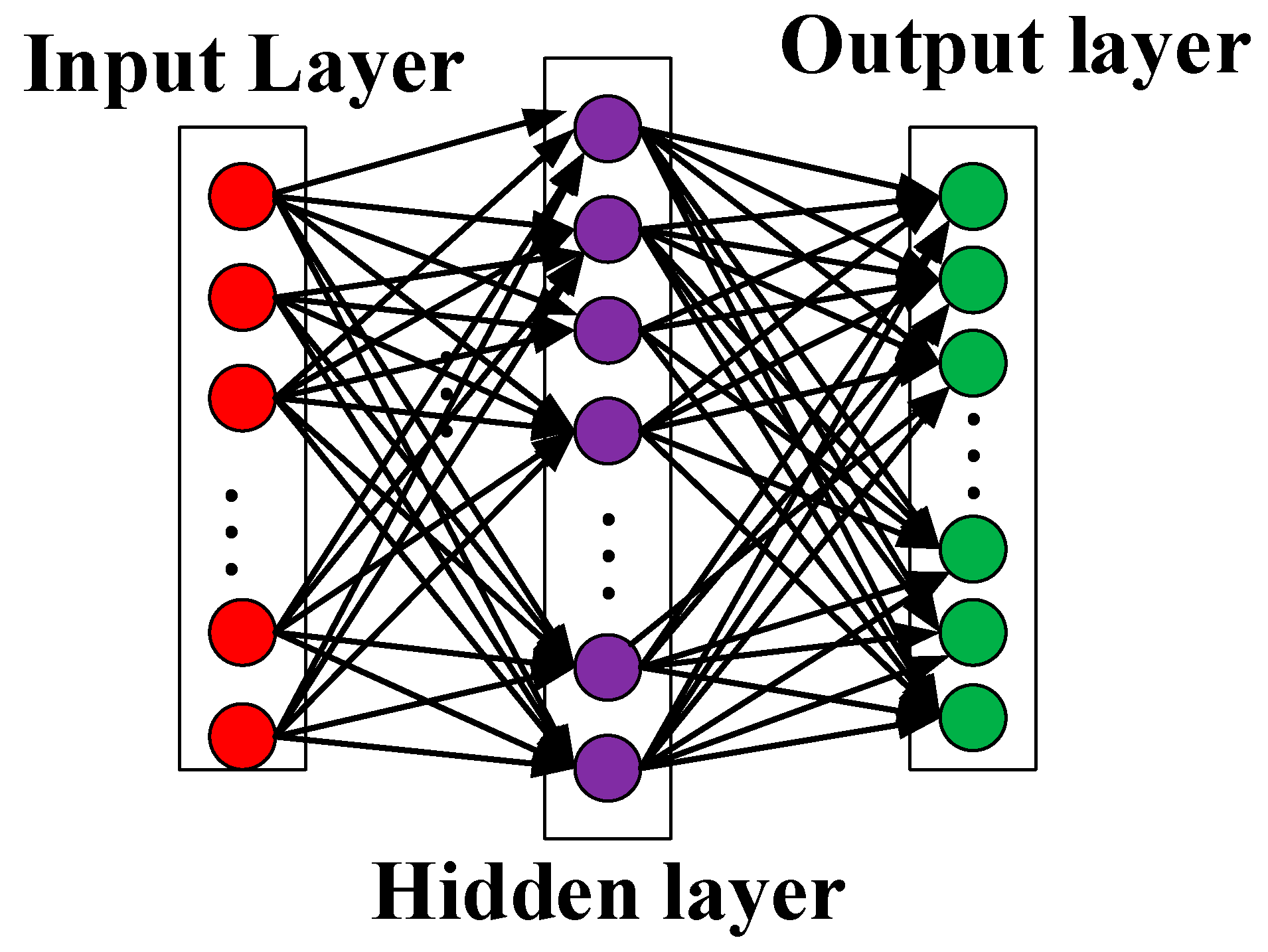

2.2. Neural Network Model

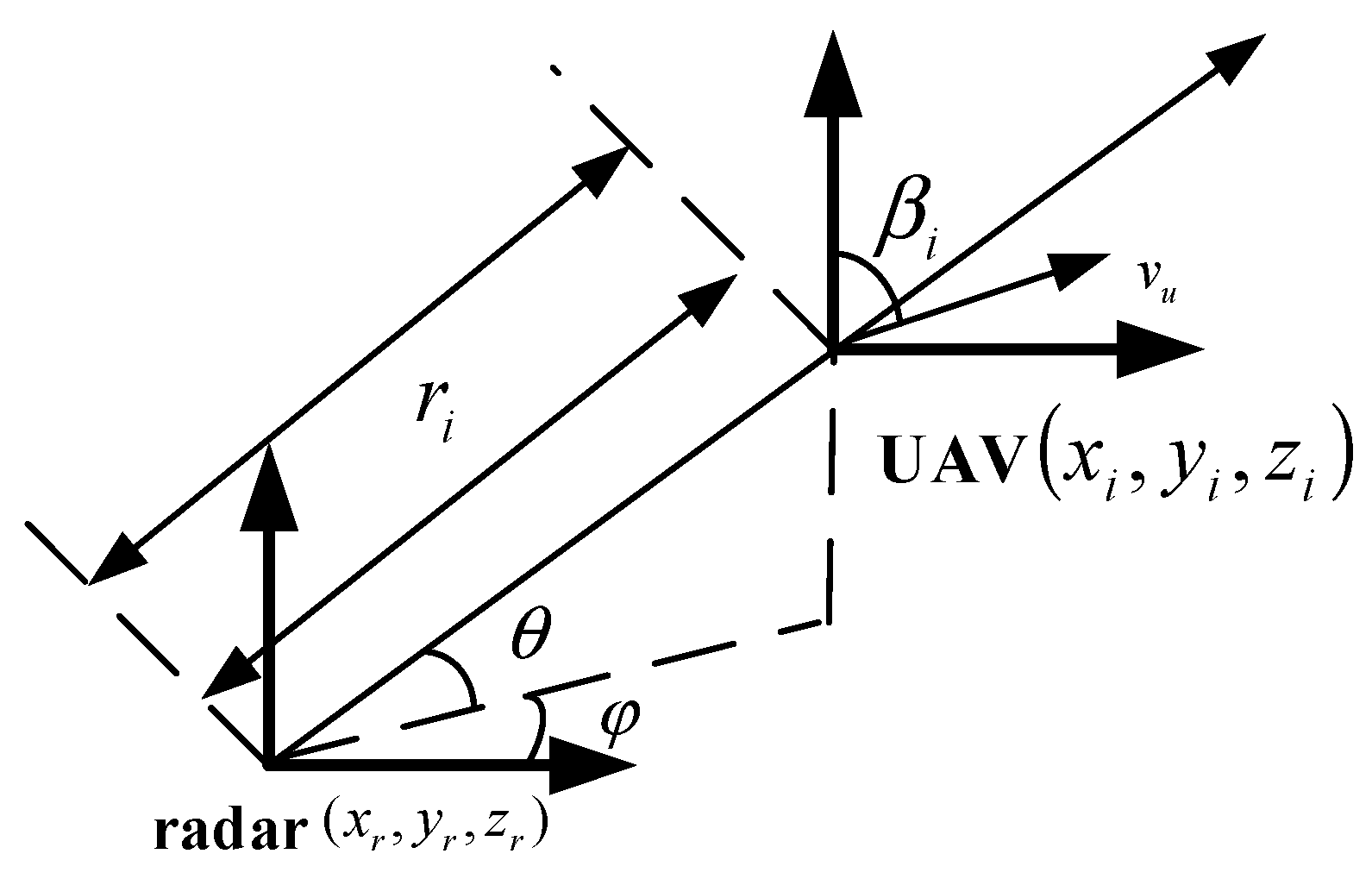

3. The Kinematic Model of the UAV

4. Conventional Methods for Situation Assessment

4.1. A Situation Assessment Method with a BP Neural Network (SA-BP)

| Algorithm 2. The conventional method with SA-BP |
|---|
| Step 1: Define a BP neural network model. Choose a suitable activation function and define the network from the input layer to the output layer. The final assessment model is then obtained by training the network on a sufficient amount of data. |
| Step 2: Define the input layer of the neural network. All information obtained by the situational awareness module forms the set of input neurons, covering all scene data within a fixed period ending at the current time. The scene data are recorded according to the evaluation factors, and the input set contains the n scene-data records obtained from the task scene. |
| Step 3: Define the situation assessment set. To describe the scene situation reasonably, situation labels must be set in light of the real-time situation; these labels form the set of situation labels. |
| Step 4: Normalize the input data. Recording data over a fixed period yields an approximate range for each evaluation factor. From the minimum and maximum recorded values of an evaluation factor, its value range is given by Equation (10) and its normalized value by Equation (11). |
| Step 5: Perform real-time situation assessment. When new data enter the assessment model, the network outputs a result vector with one value per situation label; the label corresponding to the maximum value is taken as the final situation assessment result. |
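The five steps of Algorithm 2 can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: it assumes one hidden layer of sigmoid units, a squared-error loss trained by per-sample back-propagation, and min-max scaling with clipping as the form of Step 4 (the exact Equations (10) and (11) may differ). The class `BPNet`, the network sizes, the learning rate, the toy data and the label names are all hypothetical.

```python
import math
import random


def min_max_normalize(samples):
    """Step 4 (assumed min-max form): scale each evaluation factor to [0, 1]
    using the minimum and maximum values recorded in `samples`."""
    cols = list(zip(*samples))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]

    def scale(row):
        # Values outside the recorded range are clipped (an assumption).
        return [min(1.0, max(0.0, (x - lo) / (hi - lo))) if hi > lo else 0.0
                for x, lo, hi in zip(row, lows, highs)]

    return [scale(r) for r in samples], scale


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class BPNet:
    """Step 1: input -> one sigmoid hidden layer -> one sigmoid output per label."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [sigmoid(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * v for w, v in zip(row, h)) + b) for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target, lr):
        """One back-propagation step on the squared error; returns the loss before the update."""
        h, y = self.forward(x)
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas are computed with w2 before it is updated.
        d_hid = [hj * (1.0 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * dk * hj
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def assess(self, x):
        """Step 5: the label with the largest output is the assessment result."""
        _, y = self.forward(x)
        return max(range(len(y)), key=y.__getitem__)


# Hypothetical data: two evaluation factors; labels 0 = "threat", 1 = "safe".
raw = [[120.0, 3.0], [150.0, 2.5], [900.0, 0.5], [950.0, 0.8], [130.0, 2.8], [880.0, 0.6]]
labels = [0, 0, 1, 1, 0, 1]
X, scale = min_max_normalize(raw)
targets = [[1.0 if k == c else 0.0 for k in range(2)] for c in labels]  # Step 3: one-hot labels
net = BPNet(n_in=2, n_hidden=4, n_out=2)
first_loss = sum(net.train_step(x, t, lr=0.5) for x, t in zip(X, targets))
for _ in range(2000):
    last_loss = sum(net.train_step(x, t, lr=0.5) for x, t in zip(X, targets))
print(net.assess(scale([140.0, 2.7])))
```

The returned `scale` function reuses the ranges recorded offline, so new scene data in the online phase are normalized exactly as the training data were.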

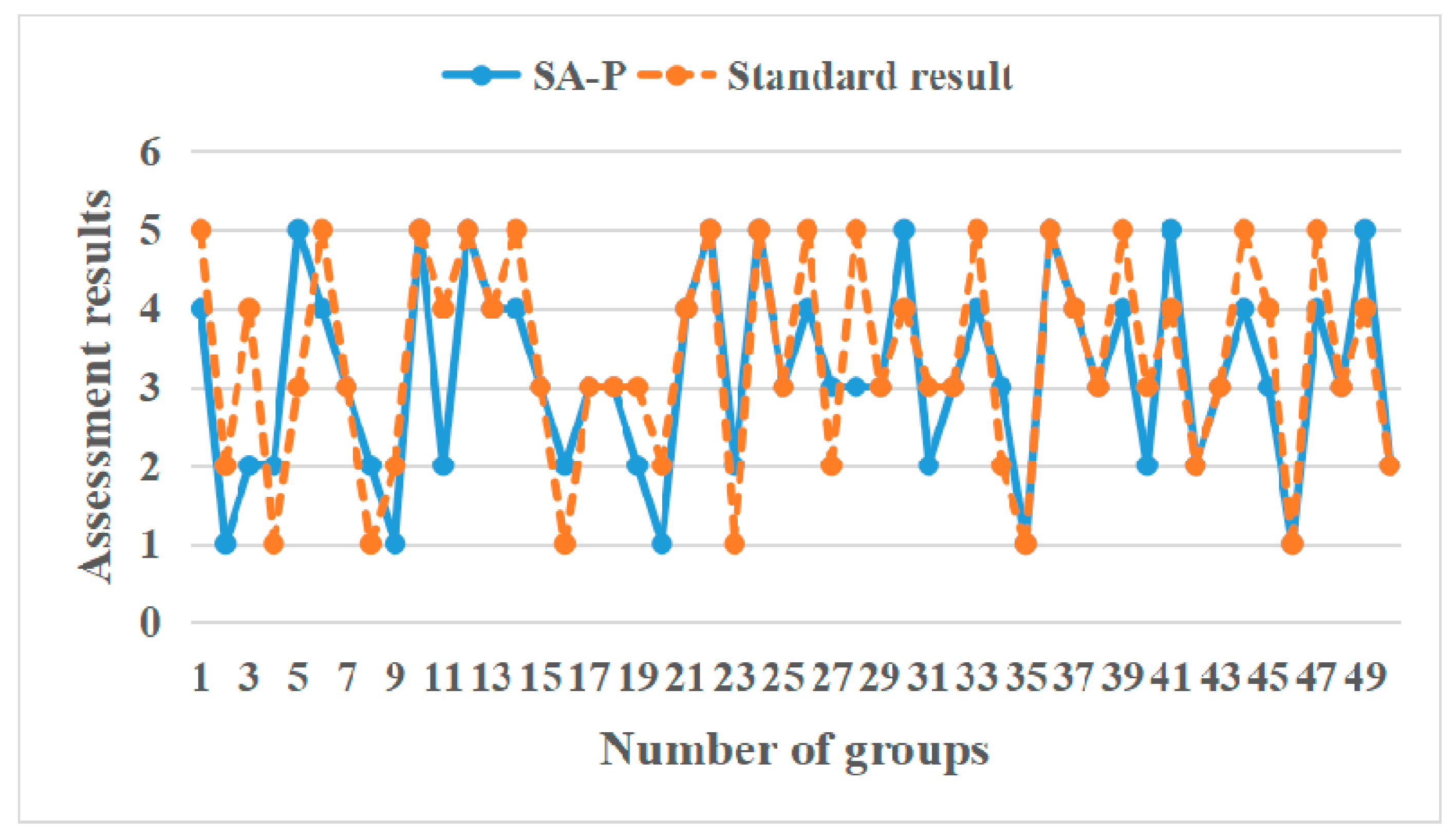

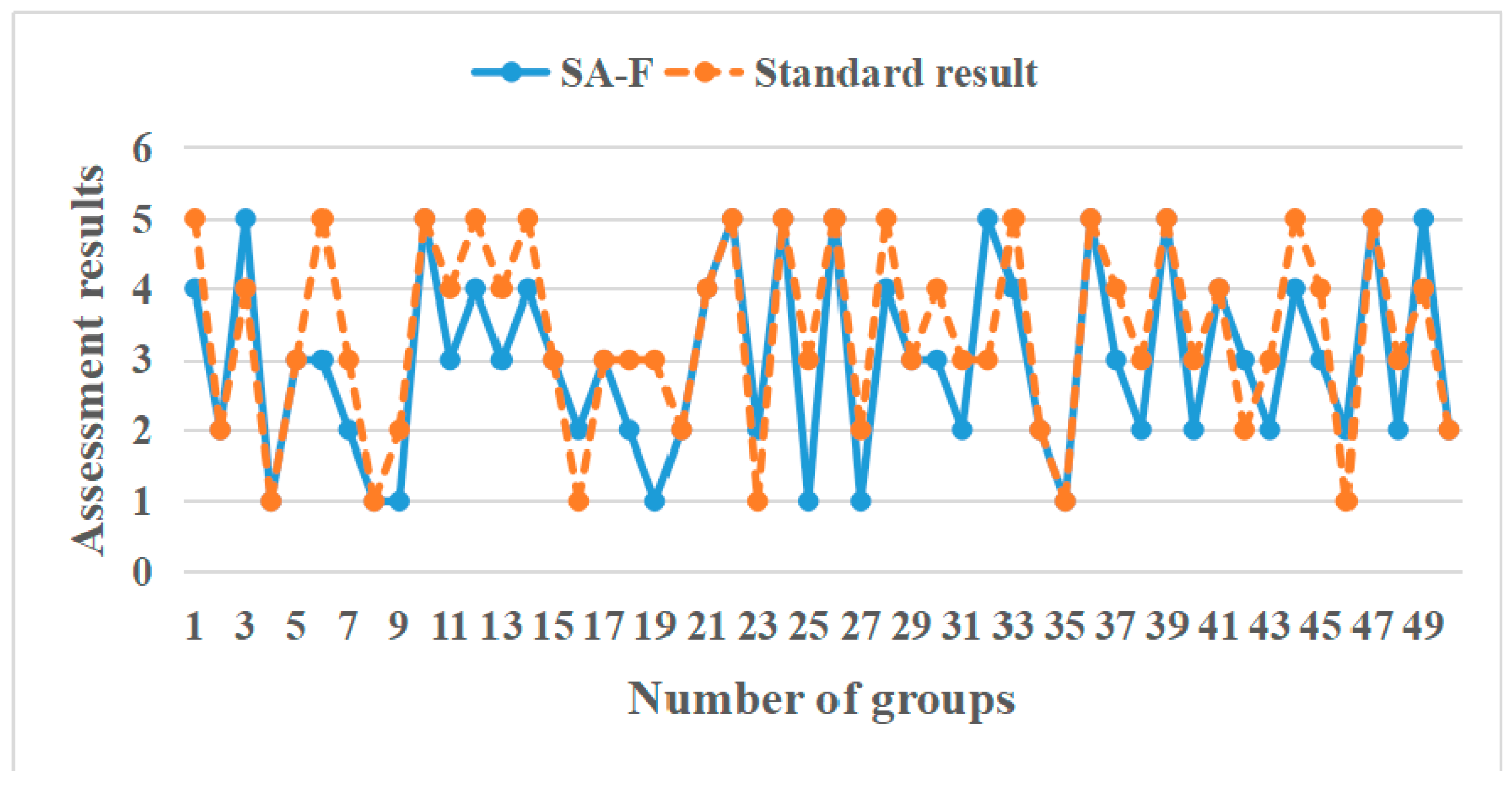

4.2. A Fuzzy Evaluation Method for Situation Assessment (SA-F)

| Algorithm 3. The conventional method with SA-F |
|---|
| Step 1: Define the evaluation factors. Considering the specific task and the evaluation factors obtained from the scene, define the set of evaluation factors. |
| Step 2: Define the situation labels. To describe the scene situation reasonably, the set of final situation labels must be defined. |
| Step 3: Define a fuzzy priority relation matrix. First, determine the relative importance of each evaluation factor to the final situation assessment. Expressing the relative importance of every pair of factors yields the fuzzy priority relation matrix in Equation (12). |
| Step 4: Define a fuzzy weight set. Using the fuzzy analytic hierarchy process, transform the fuzzy priority relation matrix into a fuzzy consistent matrix. The final weights of the evaluation factors are then obtained from Equation (14) and recorded as the fuzzy weight set. |
| Step 5: Perform fuzzy situation assessment. At the current time t, the fuzzy method computes the degree of trust of each evaluation factor relative to each situation, based on the preceding T time slices. |
| Step 6: Obtain the comprehensive assessment result. Applying the maximum operation to the fuzzy assessment yields the comprehensive assessment result of the current situation. |
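Steps 3 to 6 of Algorithm 3 can be sketched as below. The exact forms of Equations (12) to (14) may differ from this sketch, which assumes a widely used fuzzy-AHP recipe: a 0/0.5/1 priority scale, the transformation r_ij = (r_i − r_j)/(2m) + 0.5 (with r_i the i-th row sum) to obtain a fuzzy consistent matrix, and the weight formula w_i = (Σ_j r_ij + m/2 − 1)/(m(m − 1)). The degree-of-trust matrix is supplied directly as hypothetical input rather than computed from T time slices, and a weighted-average composition followed by the maximum-membership rule stands in for Steps 5 and 6. All function names and numbers are illustrative.

```python
def consistent_matrix(F):
    """Step 4: transform a fuzzy priority relation matrix F into a fuzzy
    consistent matrix r_ij = (r_i - r_j) / (2m) + 0.5, r_i = sum of row i."""
    m = len(F)
    r = [sum(row) for row in F]
    return [[(r[i] - r[j]) / (2 * m) + 0.5 for j in range(m)] for i in range(m)]


def fahp_weights(R):
    """Assumed weight formula w_i = (sum_j r_ij + m/2 - 1) / (m(m - 1)); the weights sum to 1."""
    m = len(R)
    return [(sum(row) + m / 2 - 1) / (m * (m - 1)) for row in R]


def fuzzy_assess(w, membership):
    """Steps 5-6: weighted-average composition b_k = sum_i w_i * membership[i][k],
    then the label with the maximum membership."""
    n_labels = len(membership[0])
    b = [sum(wi * row[k] for wi, row in zip(w, membership)) for k in range(n_labels)]
    return b, max(range(n_labels), key=b.__getitem__)


# Step 3 (hypothetical expert judgments): 1 = row factor more important,
# 0.5 = equally important, 0 = less important.
F = [[0.5, 1.0, 1.0],
     [0.0, 0.5, 1.0],
     [0.0, 0.0, 0.5]]
R = consistent_matrix(F)
w = fahp_weights(R)            # approximately [0.417, 0.333, 0.25]

# Hypothetical degrees of trust: rows = evaluation factors,
# columns = labels ("advantage", "balance", "disadvantage").
membership = [[0.7, 0.2, 0.1],
              [0.3, 0.5, 0.2],
              [0.1, 0.3, 0.6]]
b, best = fuzzy_assess(w, membership)
print([round(v, 3) for v in b], best)   # [0.417, 0.325, 0.258] 0
```

The transformation guarantees r_ii = 0.5 and r_ij + r_ji = 1, which is what makes the matrix "consistent" and lets a closed-form weight vector be read off its row sums.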

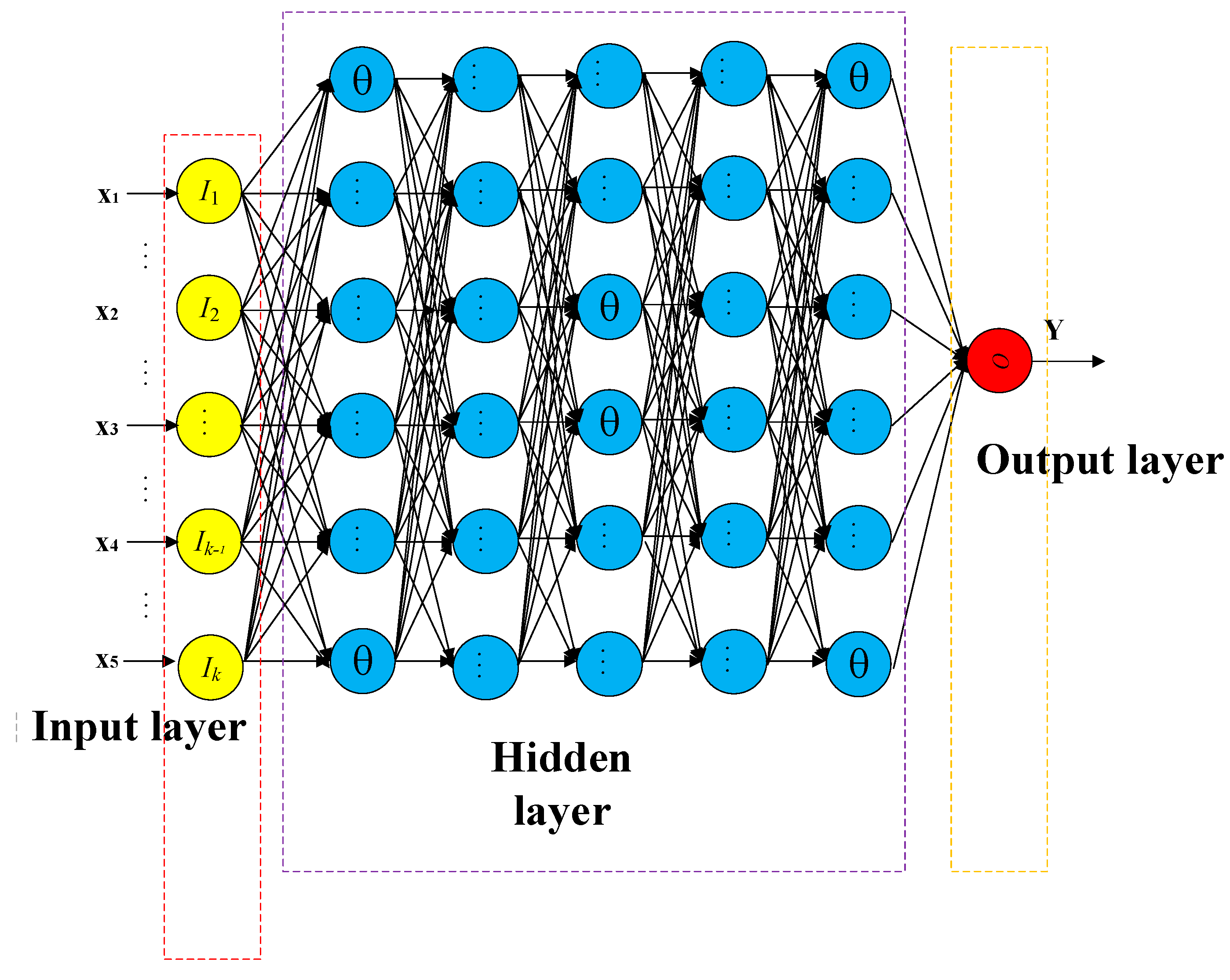

5. An Improved Fuzzy Deep Neural Network for Situation Assessment of Multiple UAVs

5.1. The Framework for the Proposed Method

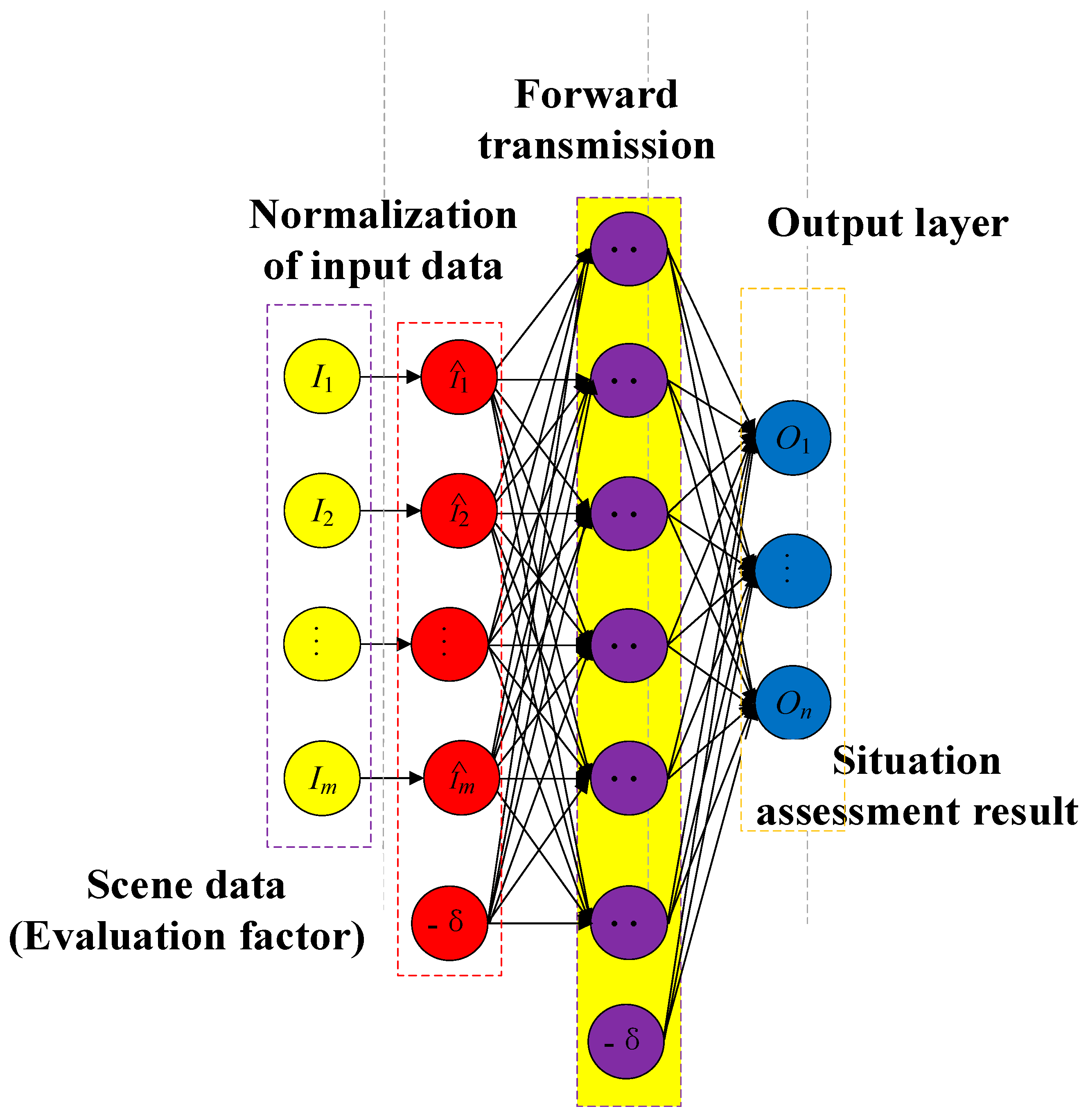

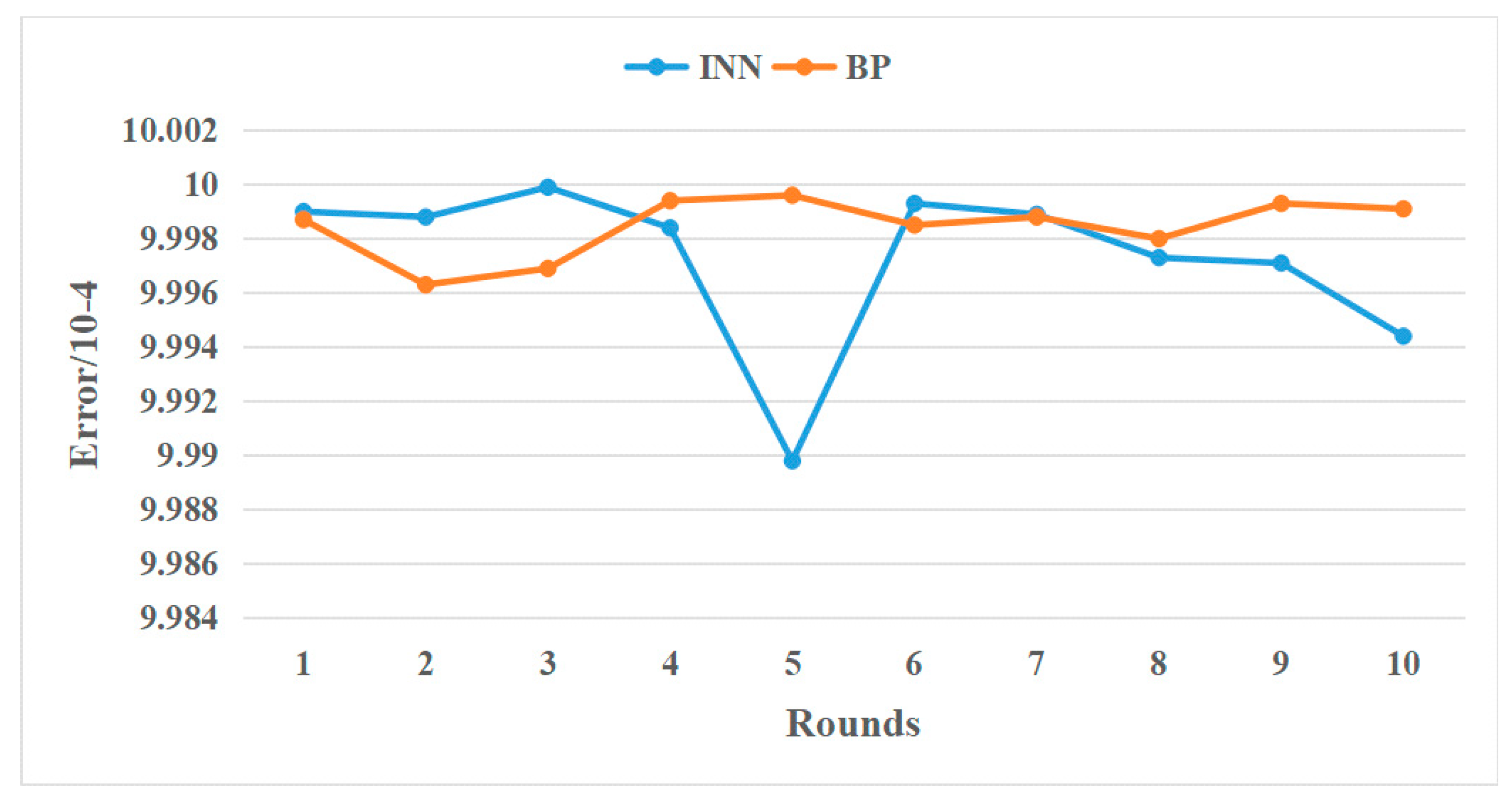

5.2. An Improved Deep Neural Network Model with Adaptive Momentum and Elastic SGD
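The subsection title names elastic SGD. Assuming this refers to elastic averaging SGD (EASGD) as introduced by Zhang, Choromanska and LeCun (NIPS 2015), its core synchronous update can be sketched as follows: each of p workers takes a local gradient step while an elastic force pulls it toward a shared center variable, and the center moves toward the workers. This is a minimal sketch under stated assumptions: a scalar parameter, hypothetical quadratic local losses f_i(x) = 0.5 (x − c_i)², optional Gaussian noise to mimic stochastic gradients, and hyperparameters (`eta`, `alpha` = eta·rho, `steps`) chosen only for illustration. It does not cover the adaptive momentum component.

```python
import random


def easgd(centers, eta=0.1, alpha=0.05, steps=500, noise=0.0, seed=0):
    """Synchronous elastic averaging SGD with one worker per hypothetical local
    loss f_i(x) = 0.5 * (x - centers[i])**2. alpha = eta * rho is the elastic moving rate."""
    rng = random.Random(seed)
    workers = [0.0] * len(centers)   # local parameters x_i
    center = 0.0                     # shared center variable
    for _ in range(steps):
        diffs = [x - center for x in workers]               # elastic differences at time t
        grads = [x - c + rng.gauss(0.0, noise)              # local (optionally noisy) gradients
                 for x, c in zip(workers, centers)]
        workers = [x - eta * g - alpha * d for x, g, d in zip(workers, grads, diffs)]
        center = center + alpha * sum(diffs)                # center moves toward the workers
    return workers, center


workers, center = easgd([1.0, 2.0, 6.0])
print(center)   # close to 3.0, the minimizer of the average loss
```

With these settings the center converges to 3.0, while each worker settles at (eta·c_i + alpha·center)/(eta + alpha), between its own optimum and the center. The elastic term, rather than hard parameter averaging, is what keeps the workers coupled while still letting them explore.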

5.3. The Complete Algorithm Using an Improved Fuzzy Deep Neural Network for Situation Assessment of Multiple UAVs

| Algorithm 4. The proposed situation assessment method for multiple UAVs |
|---|
| Definition |
| Data_Samples := training data consisting of scene data and situation labels |
| Num_data := number of training data |
| := the i-th scene data record used as input |
| := normalized data for the input |
| Training_Bylay( ) := trains the hidden layers of the improved deep network layer by layer |
| Net_Fc := activation function of the deep network |
| Im_Deep_Net := improved deep neural network (DNN) model |
| Normalization( ) := normalizes the input |
| Fuzzy_out( ) := fuzzifies the output |
| Out_DNN( ) := computes the output of the improved deep network |
| Training_Im_Deepnet( ) := trains the improved deep network |
| Offline training period for the improved DNN: ; |
| Repeat |
| Normalization(Data_Samples); |
| Training_Bylay(Net_Fc, ); |
| Until Num_data; |
| Training_Im_Deepnet(Im_Deep_Net, Data_Samples); |
| Online training period for the improved DNN: ; |
| Repeat |
| Normalization(); |
| Out_DNN(, Im_Deep_Net); |
| Fuzzy_out(); |
| Until |
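The offline/online structure of Algorithm 4 can be sketched as follows. This is a heavily simplified sketch, not the authors' implementation: a single softmax layer (`SoftmaxModel`) stands in for Im_Deep_Net, layer-by-layer training (Training_Bylay) is omitted, the momentum-adaptation rule in `train_adaptive_momentum` is hypothetical, and Fuzzy_out is assumed to report the softmax outputs as membership degrees over the situation labels. The comments map each helper to its Algorithm 4 identifier for readability only; every function name, hyperparameter, data value and label name here is hypothetical.

```python
import math


def fit_normalizer(samples):
    """Normalization(): record each factor's min/max on Data_Samples (min-max form assumed)."""
    cols = list(zip(*samples))
    lows, highs = [min(c) for c in cols], [max(c) for c in cols]

    def normalize(x):
        return [min(1.0, max(0.0, (v - lo) / (hi - lo))) if hi > lo else 0.0
                for v, lo, hi in zip(x, lows, highs)]

    return normalize


def softmax(z):
    top = max(z)
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]


class SoftmaxModel:
    """Stand-in for Im_Deep_Net: a single softmax layer, NOT a deep network."""

    def __init__(self, n_in, n_out):
        self.W = [[0.0] * (n_in + 1) for _ in range(n_out)]  # last column is the bias

    def out(self, x):
        """Out_DNN(): label probabilities for one normalized record."""
        xb = list(x) + [1.0]
        return softmax([sum(w * v for w, v in zip(row, xb)) for row in self.W])

    def loss_and_grad(self, X, Y):
        """Mean cross-entropy over the batch and its gradient with respect to W."""
        grad = [[0.0] * len(row) for row in self.W]
        loss = 0.0
        for x, y in zip(X, Y):
            p = self.out(x)
            loss -= math.log(p[y])
            xb = list(x) + [1.0]
            for k, grow in enumerate(grad):
                err = p[k] - (1.0 if k == y else 0.0)
                for j, v in enumerate(xb):
                    grow[j] += err * v / len(X)
        return loss / len(X), grad


def train_adaptive_momentum(model, X, Y, lr=0.5, epochs=300):
    """Training_Im_Deepnet(): heavy-ball momentum whose coefficient grows while the
    loss falls and is cut back when it rises (a hypothetical adaptation rule)."""
    mu, prev_loss = 0.5, float("inf")
    vel = [[0.0] * len(row) for row in model.W]
    for _ in range(epochs):
        loss, grad = model.loss_and_grad(X, Y)
        mu = min(0.9, mu + 0.05) if loss < prev_loss else 0.1
        prev_loss = loss
        for k, row in enumerate(model.W):
            for j in range(len(row)):
                vel[k][j] = mu * vel[k][j] - lr * grad[k][j]
                row[j] += vel[k][j]
    return prev_loss


def fuzzy_out(memberships, labels):
    """Fuzzy_out(): every label's membership degree plus the maximum-membership label."""
    best = max(range(len(labels)), key=memberships.__getitem__)
    return labels[best], dict(zip(labels, memberships))


# Offline period on hypothetical data: two evaluation factors, three labels.
LABELS = ["advantage", "balance", "disadvantage"]
data = [[200.0, 0.9], [250.0, 0.8], [500.0, 0.5], [520.0, 0.45], [900.0, 0.1], [850.0, 0.2]]
targets = [0, 0, 1, 1, 2, 2]
normalize = fit_normalizer(data)
X = [normalize(x) for x in data]
model = SoftmaxModel(n_in=2, n_out=3)
final_loss = train_adaptive_momentum(model, X, targets)

# Online period: normalize a new record, run the model, fuzzify the output.
label, degrees = fuzzy_out(model.out(normalize([230.0, 0.85])), LABELS)
print(label, degrees)
```

Keeping the fitted `normalize` closure from the offline period is the important design point: the online phase must scale new scene data with the ranges recorded during training, not with ranges recomputed on the fly.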

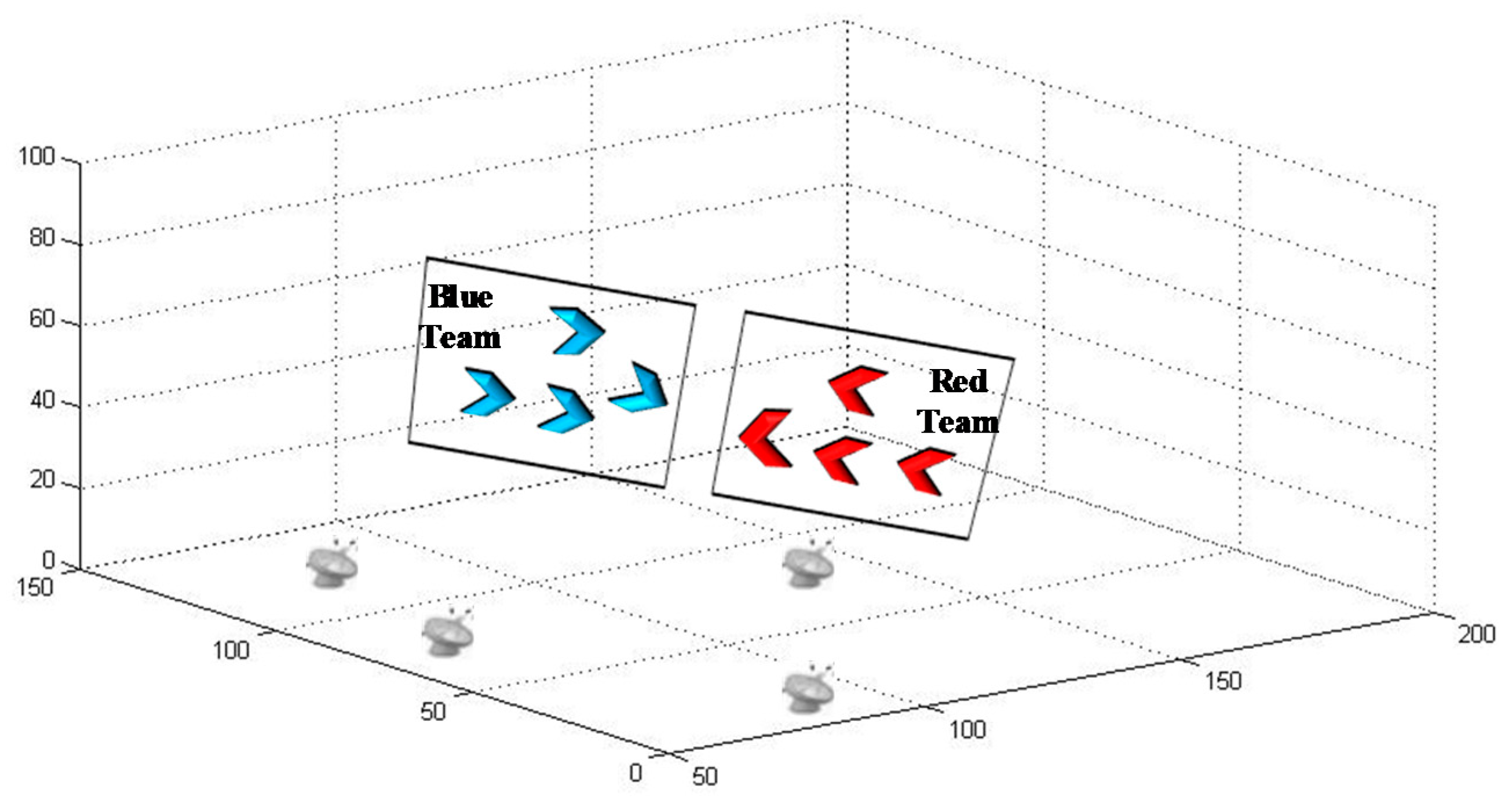

6. Simulation

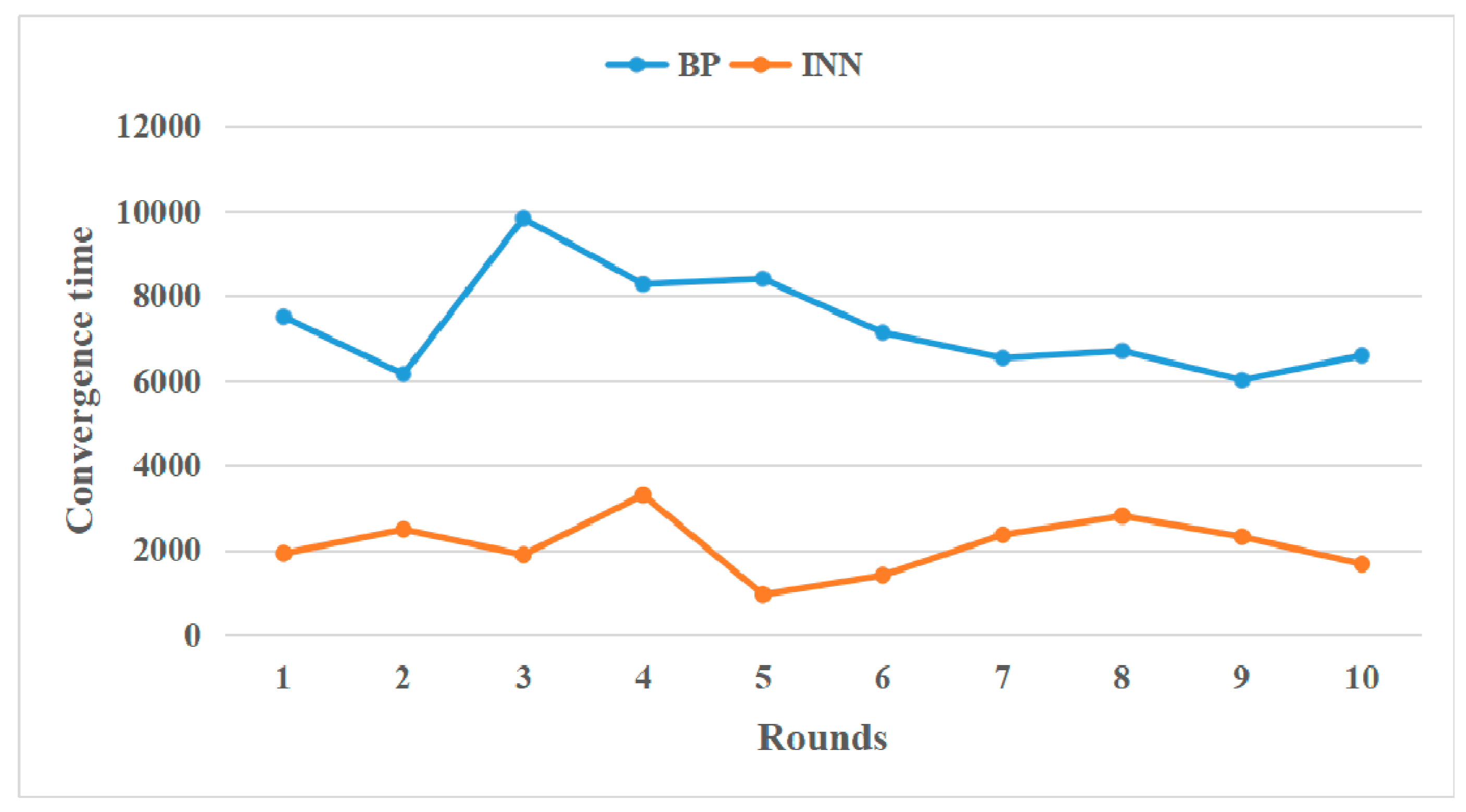

6.1. Experiment on Classification of Situation Labels

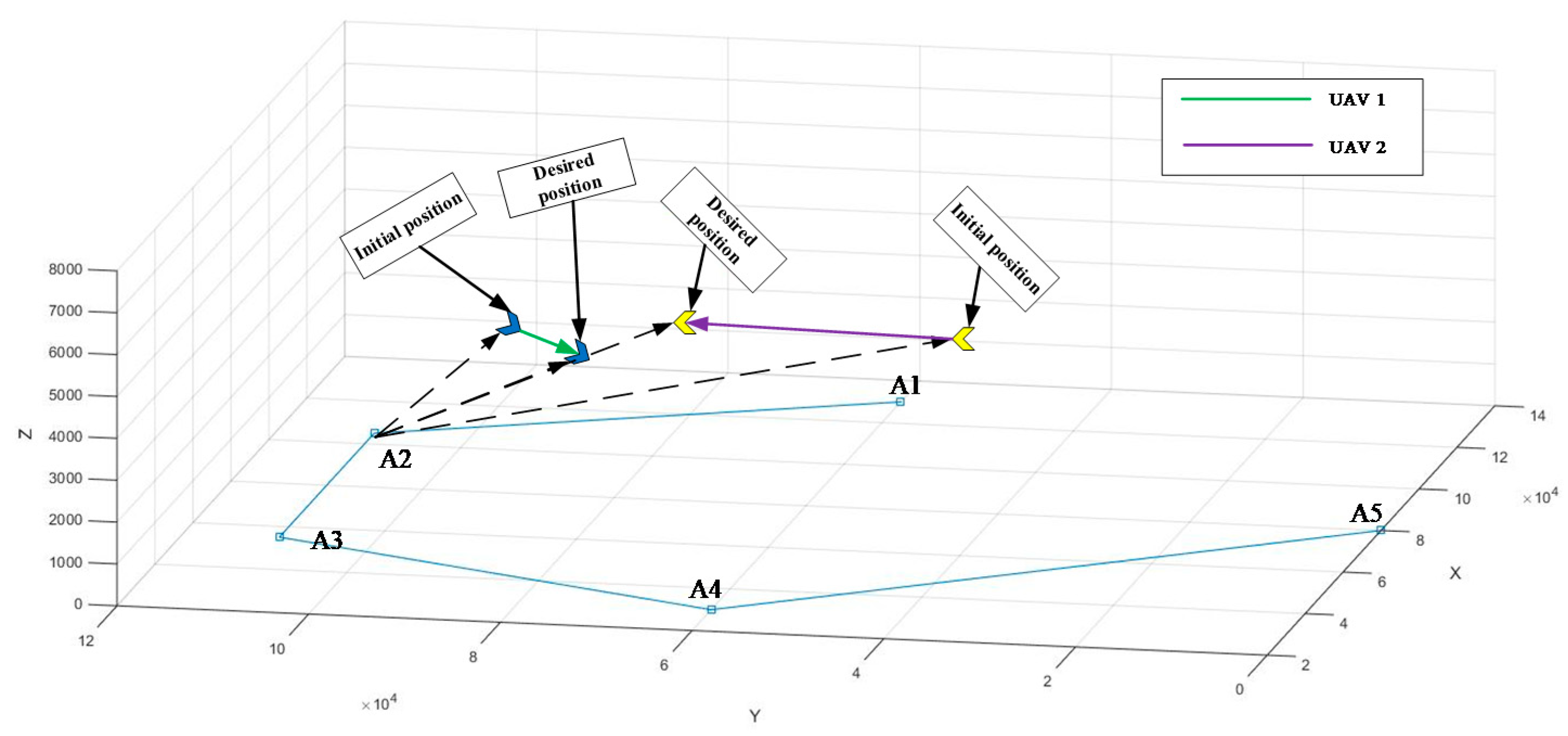

6.2. Experiment on Multiple UAVs

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Zhu, Y.; Shi, X.; Li, X. A Situation Assessment Method with an Improved Fuzzy Deep Neural Network for Multiple UAVs. Information 2020, 11, 194. https://doi.org/10.3390/info11040194
