1. Introduction

The rapid growth of the Internet and the popularity of crowd-sourced review platforms have given rise to electronic Word-of-Mouth (e-WoM) communities that provide a massive amount of User-Generated Content (UGC), i.e., online product reviews [1,2]. Popular review websites, e.g., Yelp, Amazon, TripAdvisor, IMDB, Yahoo, and Google, serve as an essential source of information and help users evaluate product quality and make purchase decisions [3,4,5,6]. Although these websites differ (e.g., Yelp reviews businesses, Amazon is an e-commerce website that reviews products, and TripAdvisor is a booking website), the principles of review helpfulness are common to all of them [7]. According to Bright Local [8], 86% of consumers read online reviews and 91% of consumers trust online reviews. The volume of online reviews is increasing day by day. Currently, there are more than 730 million reviews on TripAdvisor [9] and more than 184 million on Yelp [10].

The colossal quantity of unstructured data generated by e-WoM communities has become a source of big data for studying real consumer behavior [11,12,13], which has also introduced many challenges for both businesses and consumers [14]. “Review helpfulness” is an important dimension of online reviews that reflects the subjectivity and quality perceived by the crowd [15,16]. To overcome the problem of information overload and help consumers find helpful reviews among thousands of confusing ones, several solutions based on statistical modelling and Machine Learning (ML) have been proposed [17,18,19].

Many researchers have studied the prediction of review helpfulness using similar features, yet they have reported inconsistent and contradictory results regarding the performance of different features in predicting helpfulness [20]. Most of the solutions introduced by previous studies were designed for a specific category, product, or platform [21,22]. Researchers have tried to propose a generalized solution for different review platforms and product categories by using only textual features for prediction. However, they also suggested utilizing reviewer and product features to enhance the prediction performance [23]. The datasets used by previous studies for predicting helpfulness are mostly different, small, and overdispersed [21,24]. Diaz and Ng [7] highlighted the disorganized state of research in the area of review helpfulness prediction.

The quality of a business is represented by the average star rating of all its reviews. Similarly, the quality of the reviews received by a product is reflected by their helpfulness, which is based on their information value. Using review helpfulness as a tool has greatly improved customers’ ability to assess the quality of a product or business. Helpful user reviews are of great use to potential consumers because they provide information about the quality of a product, which helps in evaluating it and making purchase decisions [25,26,27]. Businesses with more useful reviews are likely to attract more customers and earn more revenue than businesses with less useful reviews [28,29,30].

The review website usually allows the reader to give feedback to a review, i.e., helpful/not-helpful vote on Amazon, Useful vote on Yelp. This simple feedback boosted Amazon revenue by

$2.3 billion [

31]. Lu et al. [

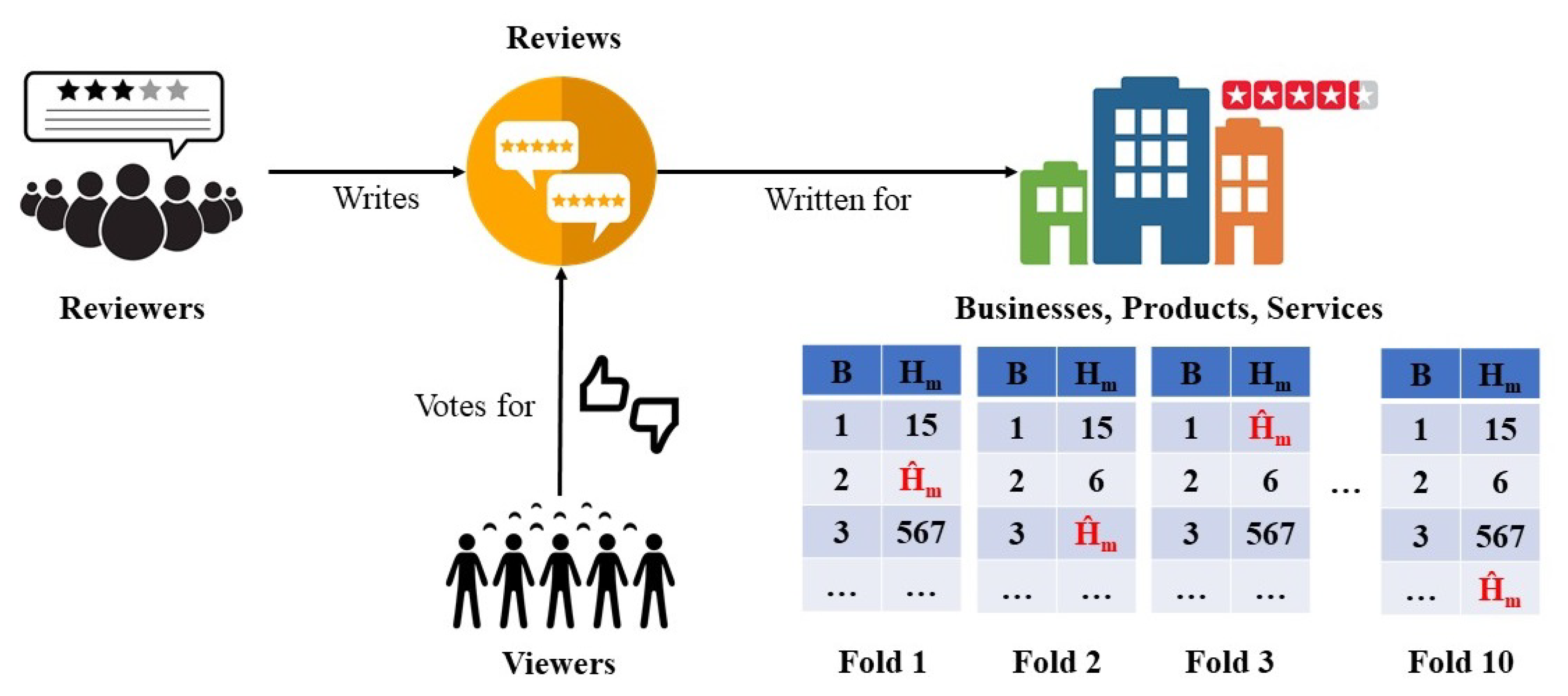

32] reported that a major portion of the reviews has very few or no useful votes because the latest reviews did not get enough time to receive useful votes. Hence, the useful votes for individual reviews are too sparse to access the quality of reviews received by a product [

33,

34]. There is a huge volume of reviews even for a single business, and it is challenging to see the quality reviews received by the business. Moreover, the quality of reviews received by one business is different from others, even for the same category. Therefore, similar to the average star rating of the business, the cumulative helpfulness of reviews for a business should be calculated as well. The cumulative helpfulness can be calculated from the perspective of the reviewer as well as business. “Cumulative helpfulness” is the total helpful votes received by all reviews for a specific business or written by a particular reviewer.
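
The definition of cumulative helpfulness given above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the study's implementation: the record layout and the field names `business_id`, `user_id`, and `useful` are hypothetical, as are the sample values.

```python
from collections import defaultdict

# Hypothetical review records; field names and vote counts are assumptions
# made for illustration and are not taken from the study's dataset.
reviews = [
    {"review_id": "r1", "business_id": "b1", "user_id": "u1", "useful": 3},
    {"review_id": "r2", "business_id": "b1", "user_id": "u2", "useful": 0},
    {"review_id": "r3", "business_id": "b2", "user_id": "u1", "useful": 5},
    {"review_id": "r4", "business_id": "b2", "user_id": "u3", "useful": 1},
]


def cumulative_helpfulness(records, key):
    """Sum the useful votes of all reviews that share the same `key` value."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key]] += record["useful"]
    return dict(totals)


# Business perspective: votes received by all reviews of a business.
by_business = cumulative_helpfulness(reviews, "business_id")
# Reviewer perspective: votes received by all reviews a reviewer wrote.
by_reviewer = cumulative_helpfulness(reviews, "user_id")

print(by_business)
print(by_reviewer)
```

The same grouping function serves both perspectives, since only the grouping key changes between the business and the reviewer view.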

Due to the importance of review helpfulness, the number of studies exploring the helpfulness of crowd-sourced reviews is continuously increasing. Despite these rising numbers, the majority of studies have explored the helpfulness of reviews for limited categories, e.g., shopping and restaurants, and platforms, e.g., Amazon.com, while ignoring other review categories, i.e., travel, hotel, and health, and other platforms, i.e., Yelp and TripAdvisor [35]. In addition, researchers have proposed many statistical and ML models for (i) predicting the helpfulness of a “review” and a “reviewer”; and (ii) finding and ranking the top-k helpful “reviews” and “reviewers”. However, to the best of our knowledge, no published research article has attempted to find and predict the cumulative helpfulness (quality) of reviews for a business.

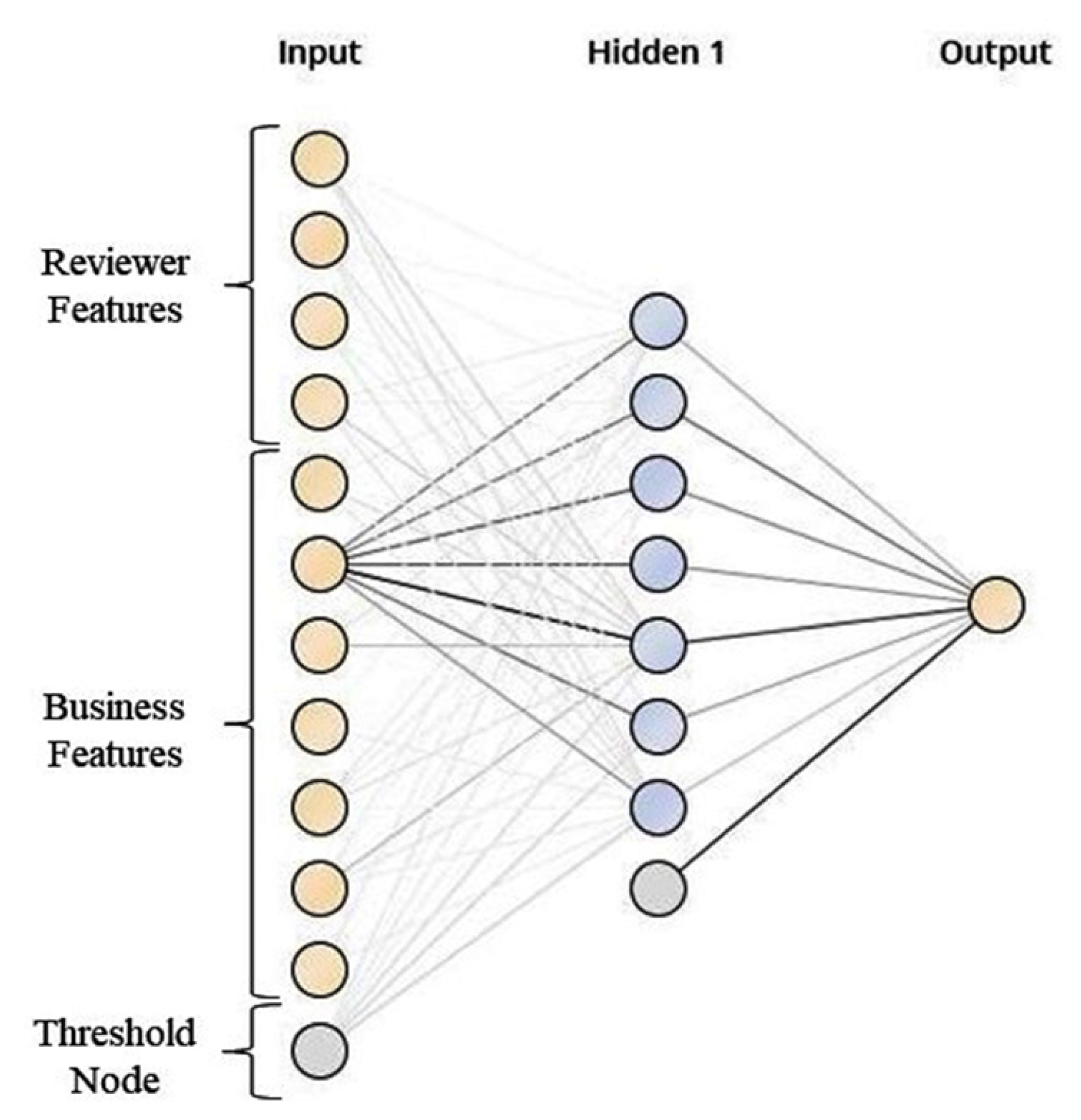

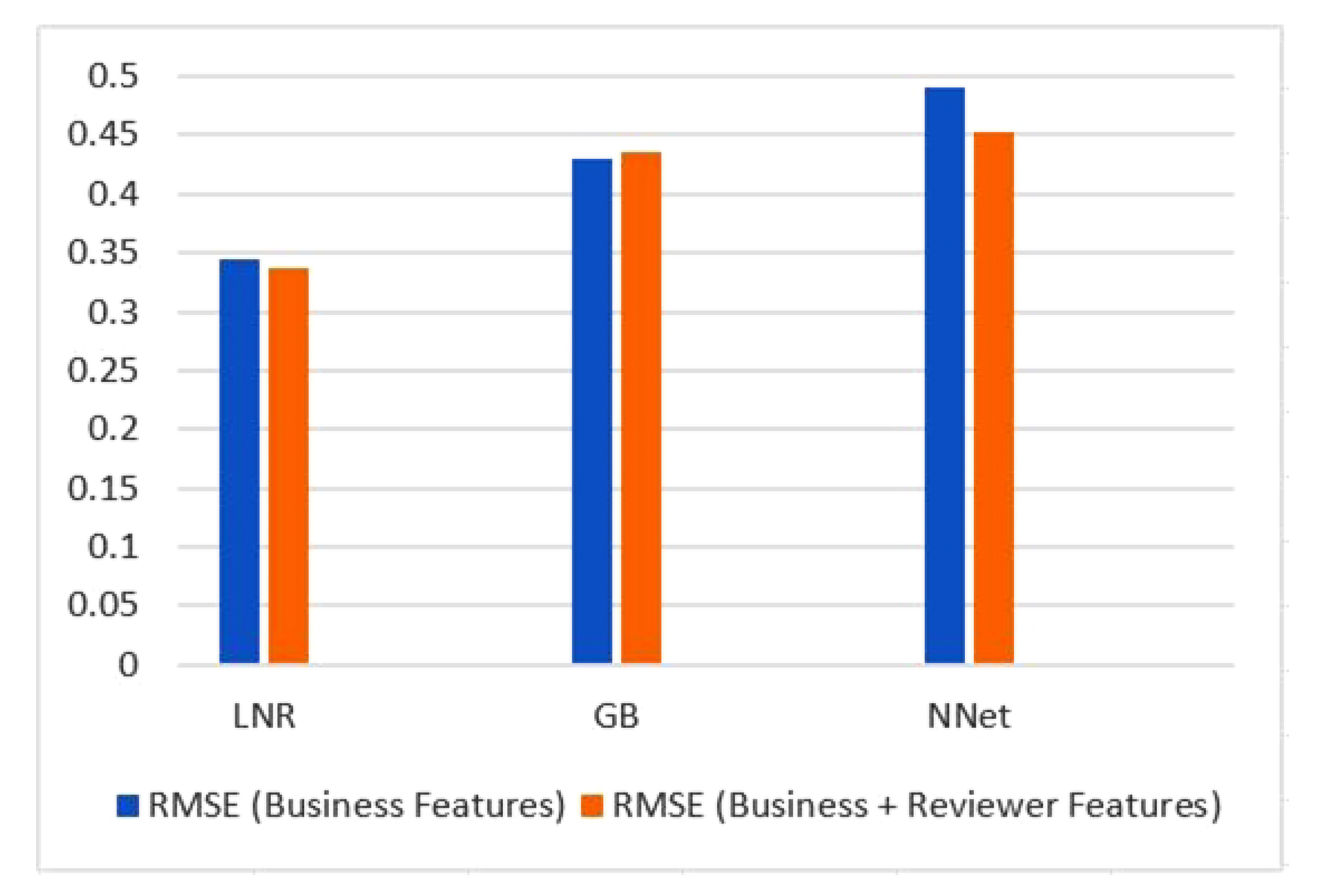

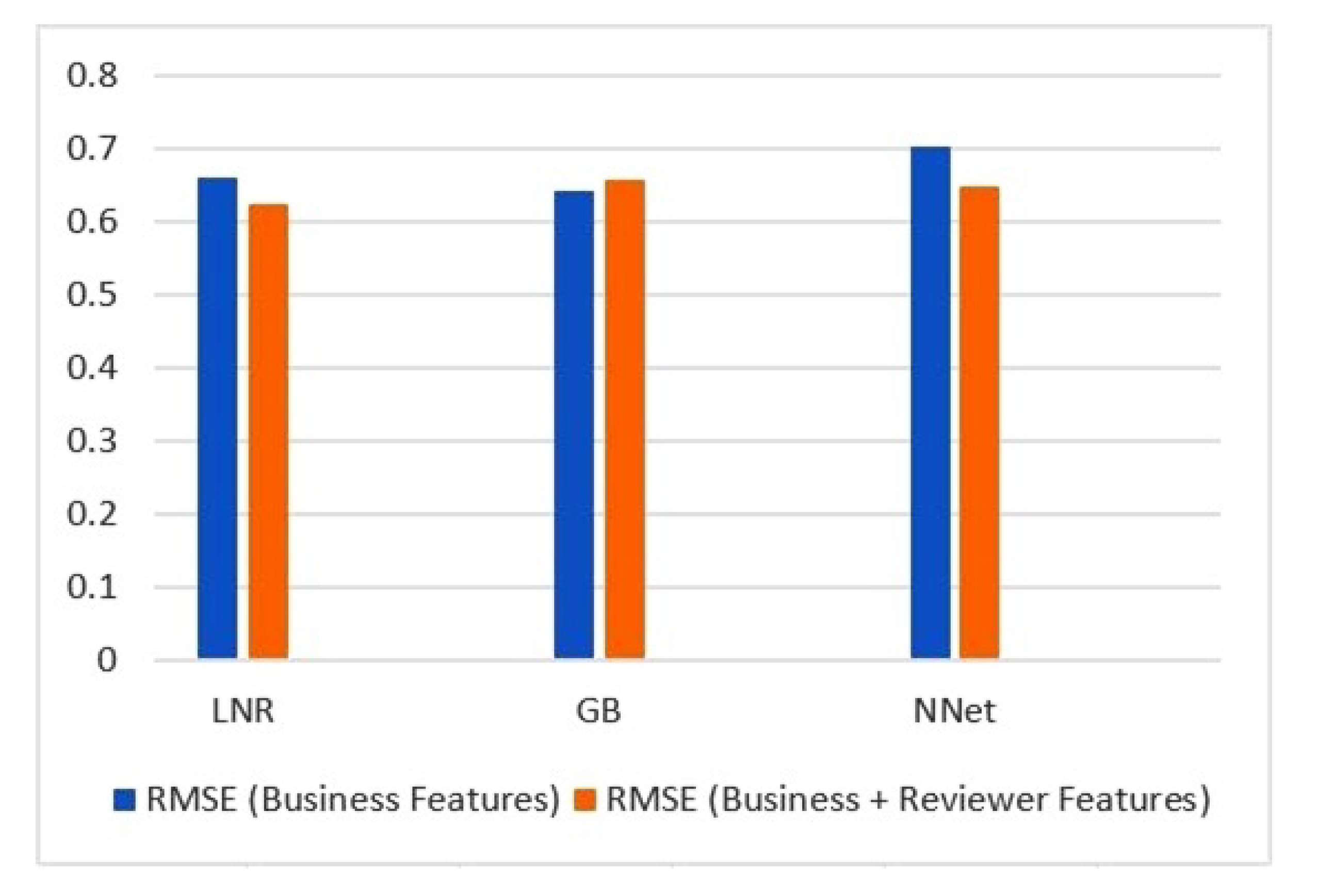

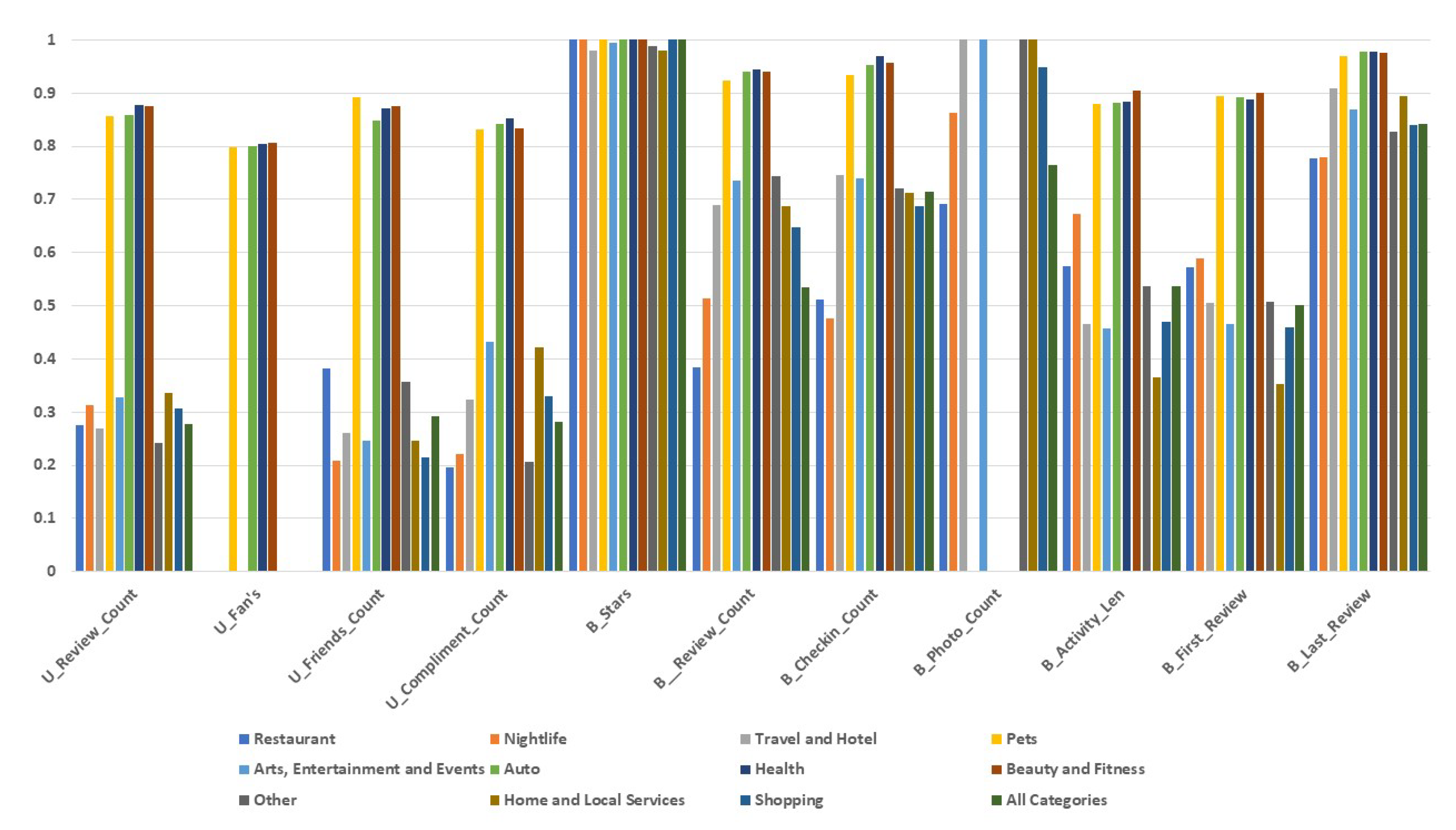

Therefore, the cumulative helpfulness of reviews received by a “business” still needs to be investigated. To fill this gap, this study aims to find the cumulative helpfulness of reviews received by a business and compares the prediction performance of various ML algorithms on datasets of different sizes and business categories. The main contributions of this paper are summarized as follows: (a) propose and calculate the cumulative helpfulness of reviews received by a business; (b) rank and compare the top-k businesses using cumulative helpfulness, review count, and star rating; (c) identify and operationalize the business and reviewer features for predicting the cumulative helpfulness of reviews received by a business; (d) analyze the performance of various learning algorithms in predicting the cumulative helpfulness of reviews for a business using datasets of different sizes and business categories; (e) examine the impact of reviewer features on predicting the cumulative helpfulness of a business; and (f) explore the importance of different business and reviewer features for predicting cumulative helpfulness.
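
Contribution (b), ranking the top-k businesses under three different criteria, can be sketched as follows. This is a hedged illustration only: the business names, the field names, and all values are hypothetical, and the paper's own ranking procedure may differ in detail (for example, in how ties are handled).

```python
# Hypothetical per-business summaries; names, fields, and values are
# illustrative assumptions, not data from the study.
businesses = [
    {"name": "A", "cumulative_helpfulness": 120, "review_count": 40, "stars": 4.5},
    {"name": "B", "cumulative_helpfulness": 300, "review_count": 35, "stars": 3.5},
    {"name": "C", "cumulative_helpfulness": 80, "review_count": 90, "stars": 4.0},
    {"name": "D", "cumulative_helpfulness": 10, "review_count": 5, "stars": 5.0},
]


def top_k(items, criterion, k):
    """Return the names of the k businesses with the largest `criterion` value."""
    ranked = sorted(items, key=lambda item: item[criterion], reverse=True)
    return [item["name"] for item in ranked[:k]]


criteria = ("cumulative_helpfulness", "review_count", "stars")
rankings = {criterion: top_k(businesses, criterion, 2) for criterion in criteria}

for criterion, names in rankings.items():
    print(criterion, names)
```

In this toy data the three criteria produce three different top-2 lists, which is the kind of disagreement between rankings that such a comparison is meant to expose.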

The rest of the paper is organized as follows.

Section 2 gives a brief overview of literature related to online review helpfulness prediction.

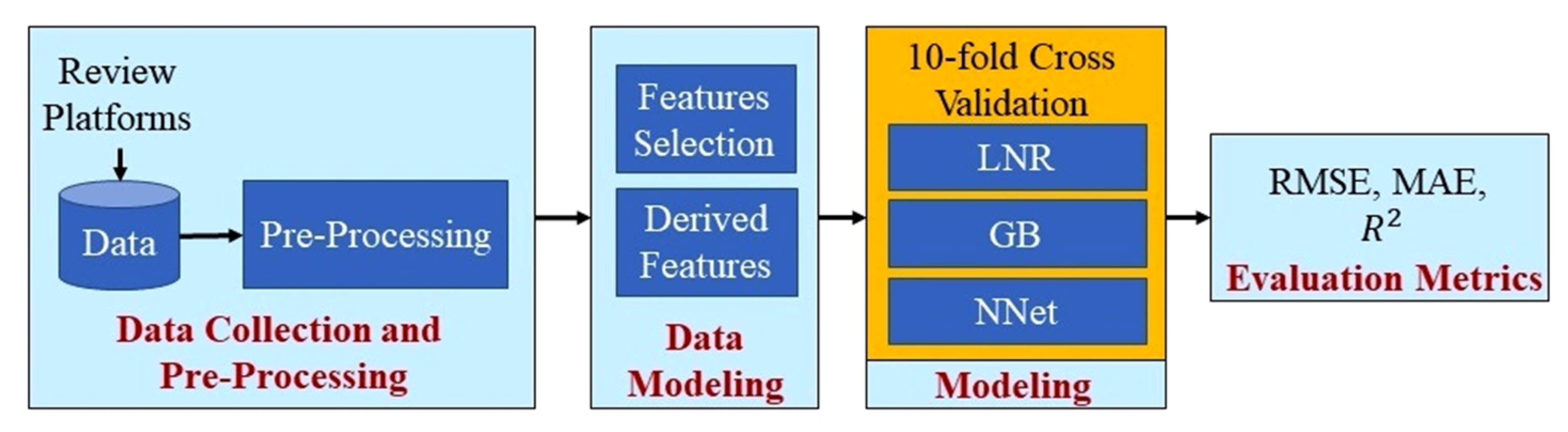

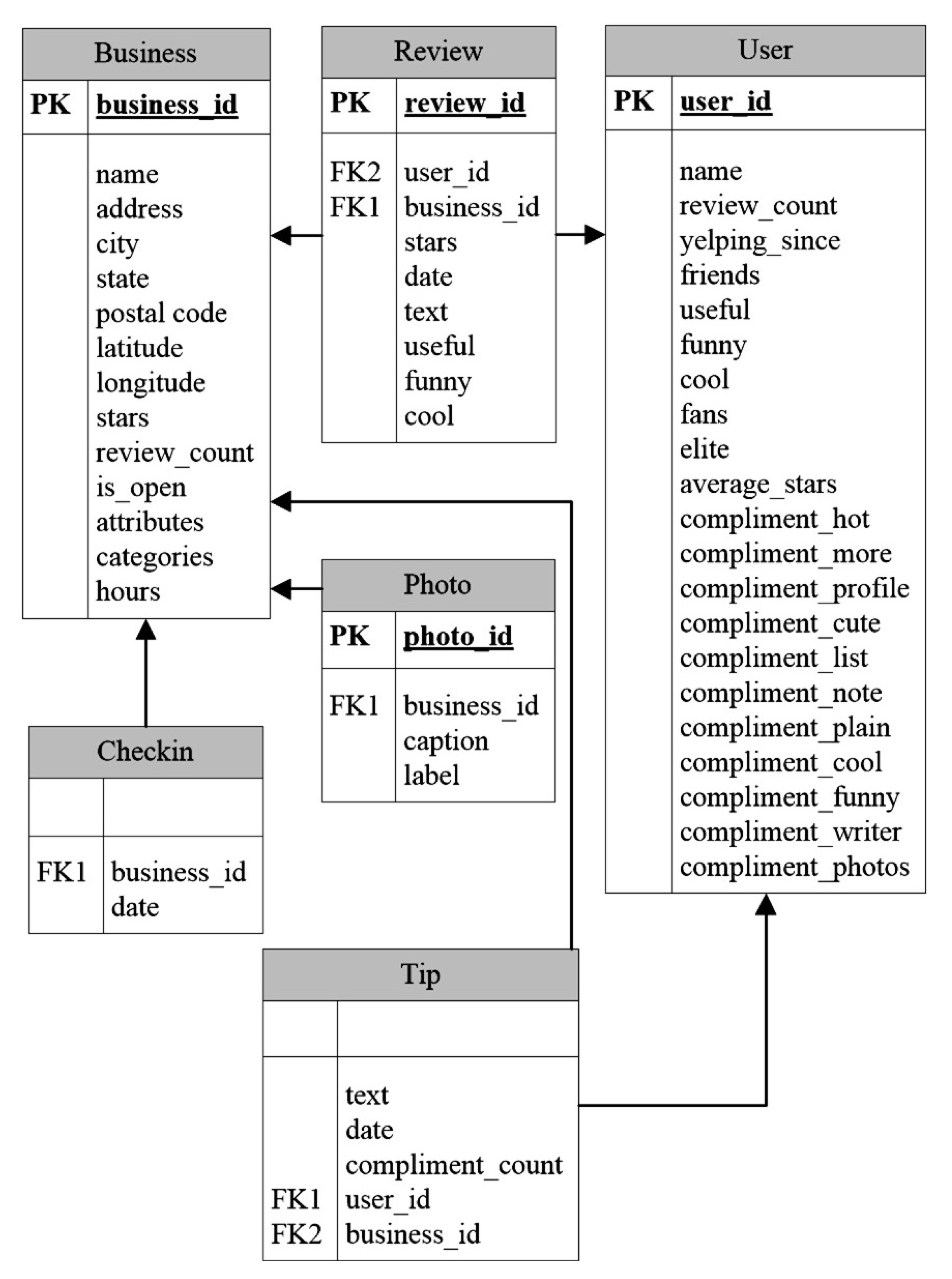

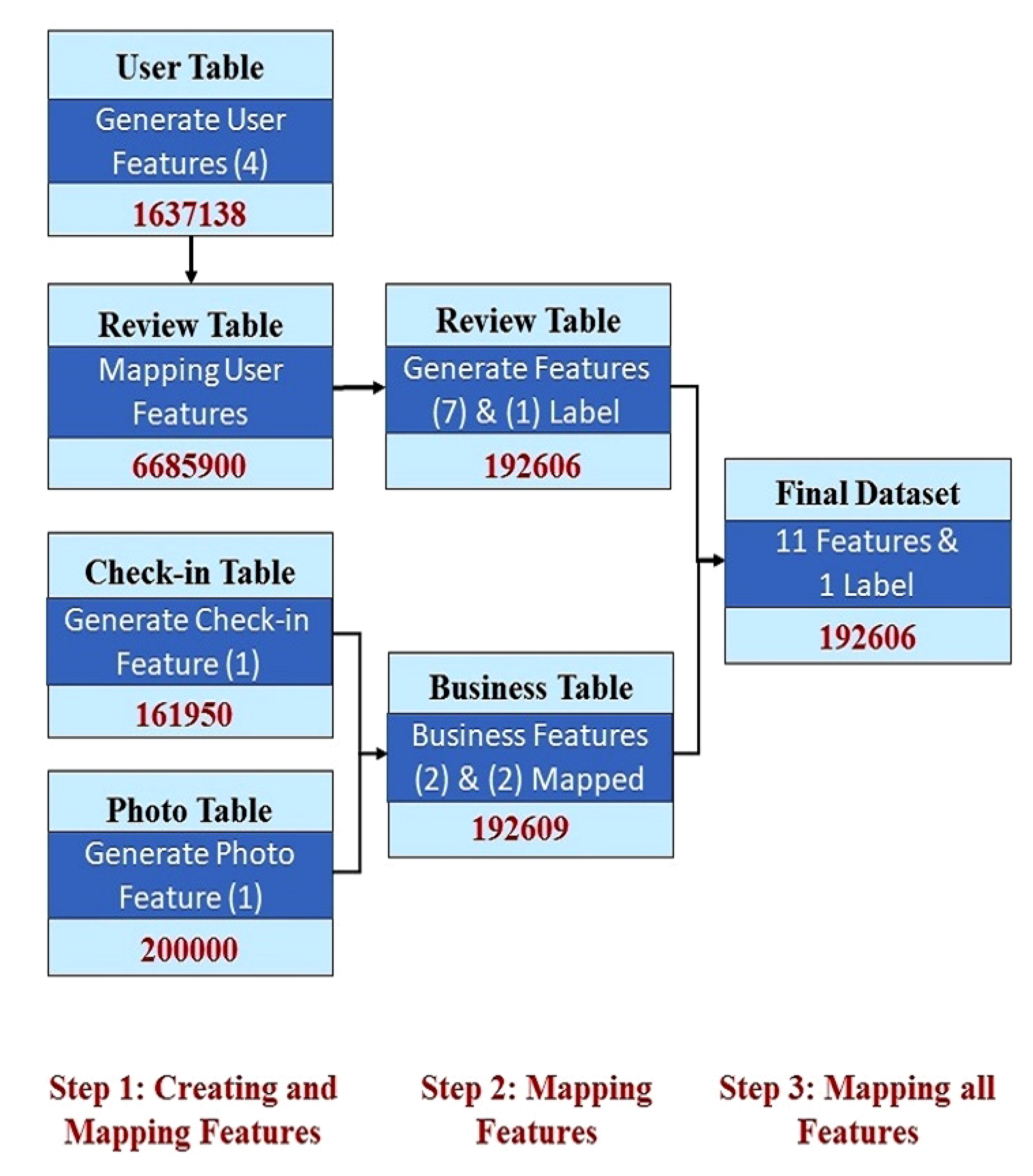

Section 3 illustrates the research methodology.

Section 4 reports and discusses the experimental results.

Section 5 discusses the implications.

Section 6 outlines the limitations and future work. Finally,

Section 7 concludes the study.

2. Literature Review

The literature on predicting the helpfulness of reviews is continuously growing, as helpfulness has become a critical factor for consumers in making purchase decisions [20,36]. This section provides an overview of the current state of the literature on predicting helpfulness and ranking reviews using multiple features, i.e., review content, reviewer, product/business, emotions, etc., and various techniques. By analyzing data collected from Amazon.com, one study found that review extremity, depth, and type of product affect the perceived helpfulness of reviews. The type of product moderates the relation between depth and helpfulness [25]. Cao et al. [15] studied the relation of review features with helpfulness and found that reviews with extreme opinions are more helpful than neutral reviews. Another study explored the helpfulness of online reviews by using both qualitative features, i.e., reviewer experience, and quantitative features, i.e., word count. The analysis was performed on 1375 reviews and data of the 60 top-ranking reviewers from Amazon.com. The relation between review length and helpfulness was significant up to a certain threshold. In addition, reviewer experience showed no significant relation with helpfulness. However, the past record of reviewer helpfulness can predict future helpfulness. The study reported a changing impact of different review- and reviewer-related features on perceived helpfulness [37]. In another study, important reviewer features were examined along with review features. The performance of popular ML algorithms, i.e., Neural Network (NNet), Random Forest (RandF), Stochastic Gradient Boosting (GB), etc., was compared over three datasets containing 32,434, 109,357, and 59,188 reviews collected from Amazon.com. The proposed review content-related features gave the best performance in comparison with reviewer features and previously proposed models. The linguistic features of reviews, along with the reviewer helpfulness per day, were also strong predictors of review helpfulness [36].

The features that influence review helpfulness prediction were analyzed using reviews collected from Amazon.com and ML algorithms including Logistic Regression (LGR), Support Vector Regression (SVR), Model Tree (M5P), and RandF. The results showed that the relation of different features with review helpfulness prediction varies across the five categories tested. Moreover, SVR showed the best performance in predicting review helpfulness for all five categories in comparison with LGR, M5P, and RandF. To identify the most helpful review from the massive volume of reviews for a given product or business, an NNet-based prediction model was proposed. The results reported the significance of the features for predicting the helpfulness of reviews [38]. Wu [39], inspired by communication theories, explored the effectiveness of reviews by considering review popularity and helpfulness. The analysis performed on Amazon.com reviews showed the importance of review popularity and helpfulness in evaluating the effectiveness of reviews. In another study, review-, reviewer-, and product-related features were analyzed using ML algorithms. The data, collected from Amazon.com, contained 32,434 reviews and 3100 products. The results revealed that the proposed review category and reviewer features are better predictors of review helpfulness. The recency of the reviewer, along with the length of activity, also showed a statistically significant relation with the helpfulness of reviews [40].

The impact of emotions on the helpfulness of online reviews collected from Amazon.com was studied using a Deep Neural Network (DNN). The NRC Emotion Lexicon was used to extract the emotions attached to reviews. Previously studied features, i.e., reviewer, product, and linguistic features, were also used for predicting helpfulness. The results showed that emotions were the best predictors of review helpfulness when the feature groups were taken individually. Moreover, combining emotions with the other features was reported to produce better overall performance [41]. The relation of review title features with review helpfulness was explored using data for 475 book reviews from Amazon.com. A model was proposed based on review content, reviewer, readability, and title features. The proposed model was tested on the collected dataset of book reviews using ML algorithms, i.e., Decision Tree (DT) and RandF. It was reported that the review title features were not a significant predictor of review helpfulness [42]. A model based on the GB algorithm was proposed to predict review helpfulness using textual features of reviews, i.e., readability, polarity, and subjectivity. The analysis was performed on reviews of books, baby products, and electronic products collected from Amazon.in. The results showed that textual features are a better predictor of review helpfulness [19].

Gao et al. [43] studied the consistency and predictability of the rating behavior of reviewers over time along with their review helpfulness. The data collected from TripAdvisor.com were analyzed using econometric models. The results showed that the rating behavior of reviewers is consistent over time. Moreover, reviewers who currently have higher ratings were reported to be more helpful in future reviews. The results were robust when tested over different product categories. The review content and rating were not significantly related, as reported by previous studies. A review helpfulness prediction model was developed by considering previously unexplored features. The analysis was performed on 1500 hotel reviews collected from TripAdvisor.com. The results showed that many notions in the review and the review type have a varying impact on the helpfulness of hotel reviews [44]. The classification of reviews into helpful and not-helpful was performed using 1,170,246 reviews collected from TripAdvisor.com. The ML classification algorithms used included DT, RandF, LGR, and Support Vector Machine (SVM). Accuracy, sensitivity, specificity, precision, recall, and F-measure were used to evaluate the performance. The results showed that the reviewer features were better predictors of review helpfulness than review quality and sentiment [45].
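
For readers unfamiliar with the evaluation measures named above for helpful/not-helpful classification, the following minimal sketch computes them from the four cells of a confusion matrix using their standard definitions. The counts are hypothetical and are not results from the cited study; the sketch also assumes that every denominator is non-zero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary classification measures from confusion counts.

    tp/fn: helpful reviews predicted as helpful / not-helpful.
    tn/fp: not-helpful reviews predicted as not-helpful / helpful.
    Assumes all denominators are non-zero.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    # F-measure (F1): harmonic mean of precision and recall.
    f_measure = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "recall": sensitivity,
        "f_measure": f_measure,
    }


# Hypothetical counts chosen only to exercise the formulas.
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity and recall are the same quantity under two names, which is why the sketch returns one value for both.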

Customer reviews from Amazon.in and Snapdeal.com were analyzed using a two-layered Convolutional Neural Network (CNN) to predict the most helpful review for a given product. Three filters, namely tri-gram, four-gram, and five-gram, were used to extract the textual features for predicting the helpfulness of reviews. As the study relied only on textual features, the proposed approach was reported to be flexible enough to predict the helpfulness of reviews in any domain. The results showed better performance for the CNN model in comparison with other ML models [23]. The unexplored assumptions made in previous studies, i.e., star rating, equal review visibility, and the constant status of review and reviewer, were investigated using data collected from TripAdvisor.com. The review visibility features, e.g., days since the review was posted, days the review was displayed on the home page, etc., showed a strong relation with review helpfulness. M5P showed better performance in comparison with Linear Regression (LNR) and SVR [35]. Saumya et al. [22] proposed an approach for ranking reviews based on their predicted helpfulness. The features related to review content, reviewer, and product were extracted from Amazon.in and Snapdeal.com reviews. RandF was used to classify reviews as high-quality or low-quality. Afterwards, a GB regressor was used to calculate the helpfulness score of the high-quality reviews. The top-k reviews were ranked according to the helpfulness score, whereas the low-quality reviews were simply added at the end. The results showed a fair ranking of reviews, as the top ten reviews included a few recent reviews along with a few older ones.

The impact of numerical and textual review features on predicting review helpfulness was explored using Amazon reviews. The analysis was performed on the collected data using RandF. It was reported that the numerical features are a significant predictor of review helpfulness for all three types of reviews, i.e., regular, suggestive, and comparative reviews. Review length and complexity were also significant predictors of helpfulness. However, the relation of review complexity with helpfulness was inverted U-shaped [46]. The effect of user-controlled features, along with other predictors, was investigated using reviews collected from TripAdvisor.com. The results showed a varying relation of user-controlled filters with the selected features. The Recency, Frequency, and Monetary (RFM) model showed consistency among all controlled variables. Moreover, review rating and length were reported as the most important predictors of review helpfulness [47]. The impact of including the RFM characteristics of reviewers on the performance of review helpfulness prediction was analyzed using data collected from Amazon.com and Yelp.com. The hybrid approach, combining textual features extracted using the Bag-of-Words (BoW) model with RFM features, produced the best results [48]. Mohammadiani et al. [49] divided reviewers into two groups based on the strength of their relationship. The analysis performed on data collected from Epinions.com showed that the effect of review helpfulness on the influence of the reviewer is significant for high similarity.

A study introduced a Deep Learning (DL) model to understand the quality of online hotel reviews. The data collected from Yelp.com and TripAdvisor.com were analyzed using CNN and Natural Language Processing (NLP) to explore the relation between user-provided photos and review helpfulness. The DL models outperformed the other models in predicting the helpfulness of reviews. The results showed that user-provided photos alone are not a good predictor of review helpfulness. However, combining the photos with the features of the review text yielded better performance [50]. The influence of the reviewer profile photo on perceived review helpfulness was explored by extracting decorative and information features from the photos of 2178 mobile gaming reviews collected from the Google Play store. The experiments, performed using a Tobit regression model, showed that the profile photo plays a significant role in the perception of review helpfulness. However, the type of photo did not show any significant impact on review helpfulness. More interestingly, review length, rather than review valence or equivocality, moderates the relation between profile image and review helpfulness [51]. The textual features of reviews were examined using ML models, i.e., RandF, Naïve Bayes (NB), etc., to identify the quality of hotel reviews available on TripAdvisor. The stylistic features were reported as a more important determinant of review helpfulness; however, combining stylistic features with content features produced better prediction results [52].

The language used by reviewers in writing product reviews varies a lot. Four stylistic features were identified and analyzed for their relationship with review helpfulness using data collected from Epinions.com. The stylistic features were reported to be a good predictor of review helpfulness in comparison with other features. However, it was suggested to use the stylistic features along with social features to achieve better performance [53]. Krishnamoorthy [5] proposed a predictive model to investigate the review features that have an impact on review helpfulness. The data collected from Amazon.com were analyzed using ML algorithms, i.e., NB, SVM, and RandF. The linguistic features extracted from the review content were analyzed along with readability, subjectivity, and metadata. The results showed that the hybrid set of features produces better accuracy. Moreover, linguistic features were reported as a good predictor for some categories, e.g., books and games. A multilingual technique was introduced to close the gap in predicting review helpfulness for reviews in languages other than English. A dataset of 4248 non-English reviews was collected from Yelp.com. The previously identified features related to review content, business, and reviewer were analyzed using regression, i.e., LNR, and classification techniques, i.e., SVM [21]. The analysis of scripts for predicting review helpfulness was performed with the help of human annotators who highlighted the important phrases that make a review helpful. The results showed that the script-enriched model gives better performance than traditional models, e.g., BoW, even with a small training set [54].

This research hypothesized that predicting cumulative helpfulness using business features along with reviewer features gives more accurate results and enhances prediction performance. Moreover, the cumulative helpfulness of a business, calculated from online reviews, can be used as an alternative way to rank businesses efficiently and effectively. This research also explored which ML algorithm gives the best performance and which features are most important in predicting cumulative helpfulness.