1. Introduction

In an era of rapid technological development, artificial intelligence (AI) is becoming increasingly present in everyday life. The omnipresence of AI provokes a variety of emotional reactions in society, which may be shaped by numerous factors. For some people, AI can be a source of fascination and of hope for future innovations and improvements in the quality of life. Others perceive it as a threat to human autonomy or as a violation of moral and spiritual boundaries. Religion and religiosity are important factors shaping individuals’ perceptions of new phenomena. This sphere of life can significantly influence the formation of individuals’ worldview and behavior (Wodka et al. 2022), and it may also have a real effect on the way people perceive and react to the development of AI technologies.

Diverse interpretations of and responses to AI may stem from differences among religious doctrines, which offer specific approaches to new technologies and to the role of humankind in an increasingly technology-dominated world. While some religions stress the idea of human–technology harmony, others emphasize the need to treat new technologies with reserve (Noble 2013). Consequently, the degree of identification with a doctrine, manifested in the level of religiosity, may shape attitudes towards new technologies.

The perception of AI through the lens of religion and religiosity may also influence ethics related to the development and application of this technology. Issues such as artificial consciousness, autonomous machine decisions, and the impact of AI on the job market may be evaluated differently by individuals with different religious sensitivities, and this leads to a complex debate on the present and future role of AI in society.

This study aimed to investigate and explain how the level of religiosity influences university students’ emotional responses to AI in Poland. Students are a unique social group. Due to their age and educational level, they are open to technological innovations, have relatively extensive knowledge about them, and readily use them (Zdun 2016). In the future, many of them will form the social elite and perform functions in various spheres of socioeconomic life and in public administration. This article analyzes the relationships between different levels of religiosity and the perception of and responses to AI, focusing on emotions such as fear, sadness, anger, and trust, in order to explain how religiosity shapes individuals’ attitudes towards AI.

There are many definitions of religiosity, just as there are many definitions of religion, which makes the term “religiosity” vague and ambiguous (Davie 1993). Sociological research takes into account the differences between perspectives on and interpretations of religiosity, usually concerning its characteristics, essence, and unique features (Kozak 2014). Traditional research instruments, oriented towards a substantive, institutional understanding of religiosity, make it difficult to assess individualized and subjective forms of religiosity; “Many contemporary people relate religiosity to personal incidents and experiences rather than institutional forms of religion” (Mariański 2004, p. 29). Today, religiosity is often interpreted as part of cultural identity, with traditional beliefs and practices sometimes giving way to more symbolic forms of expressing affiliation (Wódka et al. 2020). Despite the pluralism of the phenomenon itself, the ways of understanding religiosity have certain elements in common: a similar way of thinking about social issues shared by members of a religious group (Fel et al. 2020), a similar doctrine and ethics, and shared worship (Schilbrack 2022). A key parameter in the analysis of religiosity appears to be the self-reported global attitude to faith, that is, the subjective declaration of the “depth” of one’s religiosity (Kozak 2015), together with its dynamics, namely, the increase, decrease, or stability of the level of religiosity (Zarzycka et al. 2020).

For the purposes of our research project, we used a measure combining two key variables: global attitude to faith and engagement in religious practices. This enabled us to build a typology dividing respondents into religious, irreligious, and indifferent groups, which helps explain their emotional responses to AI. This is consistent with the approach in the sociology of religion that recognizes the complexity of religious experience and the need for its multidimensional analysis, as one study states: “The question about global attitude to faith gives rise to a simple typology of believers (…) while the answer to the question about self-identification is further verified in a number of detailed questions, including ones about religious practices” (Bożewicz 2022, p. 42).

We assumed that determining the relationship between religiosity level and emotional responses associated with AI would allow for a deeper exploration of these issues and for explaining how people with different levels of religiosity feel about technological changes. Such findings may be of great significance, for instance, to AI designers and programmers, who are striving to develop a technology that is positively perceived and accepted by diverse communities.

1.1. Review of the Literature

The existing publications present a fairly wide range of perspectives on the relationships between AI and social attitudes. The literature addresses issues such as the influence of religion and culture on the perception of AI (Reed 2021), the effect of AI on communities and human interactions (Yang et al. 2020), attitudes towards AI and transhumanism (Nath and Manna 2023), and AI-related ethical and philosophical issues (Stahl and Eke 2024), both from a theoretical perspective and in light of empirical research results. An important question is how religiosity can influence emotional responses to AI. The literature emphasizes that religious beliefs can inspire both positive and negative emotions towards AI, influencing people’s reactions to technological progress and its potential consequences. Researchers stress the importance of including ethical and spiritual aspects in the design of AI, which may lead to more balanced and ethically responsible AI development that takes into account the harmony between nature, society, spirituality, and technology (Uttam 2023).

The literature also contains an ongoing debate on the potential threats and fears associated with various proposed uses of AI and its different areas of application. These include existential anxiety about the threats posed by the latest technologies and concern for the dignity of human life (Shibuya and Sikka 2023). Authors also reflect theoretically on the influence of various religious and cultural traditions on people’s attitudes towards robots and AI, focusing on the differences between Eastern and Western cultures (Persson et al. 2021). They analyze how different religions influence the perception and acceptance of technologies and how this translates into diverse approaches to machines and AI in specific cultures and religious traditions (Ahmed and La 2021). The conclusions of these analyses indicate that religious and cultural traditions significantly affect the way different societies react to the development and implementation of advanced technologies (Prabhakaran et al. 2022). Authors also underscore the importance of taking these cultural and religious differences into account in designing and implementing new technologies (Jecker and Nakazawa 2022). For example, in cultures shaped by the dominant monotheistic religions (including Christianity, Judaism, and Islam), people may perceive the human being as unique and distinct from machines, which leads to greater caution or skepticism about technologies that imitate or replace human actions (Yam et al. 2023).

Research by Koivisto and Grassini (2023) explores the associations between different religious values, ethics, and the perception and evaluation of AI application and development. They highlight the benefits and limitations of AI, acknowledging its role in facilitating human work without replacing inherent human qualities such as creativity, intuition, and emotions. Similarly, Markauskaite et al. (2022) argue that AI cannot substitute for interpersonal cooperation or the sense of belonging within a society. Bakiner (2023) advocates AI development grounded in humanistic values such as human rights and democracy, while Robinson (2020) emphasizes the importance of considering pluralism and religious values in the evolution of AI. These authors collectively argue against viewing AI as a threat to religion or humanity, recommending instead that society participate in improving AI (Abadi et al. 2023). This discourse extends to challenges related to transhumanism and posthumanism, with Al-Kassimi (2023) warning that neglecting ethical and spiritual considerations in AI development threatens human values and dignity. These scholars agree on the necessity of deep ethical reflection and of integrating humanistic values into the design and implementation of solutions involving AI.

While theoretical reflection focuses on issues such as the relationship between religious and ethical values and the perception of AI, empirical studies indicate that the perception of AI differs across backgrounds. In a study of 33,912 comments from 388 subreddits on the Reddit platform, Qi et al. (2023) sought to identify the determinants of the social perception of AI, including systems such as ChatGPT. They found significant differences in attitudes towards AI between so-called technological communities (focused on technological issues) and non-technological ones (those attaching great importance to the social and axiological dimensions of life). The former concentrated on the technical aspects of AI. The latter, by contrast, placed more emphasis on its social and ethical aspects, more often engaging in debates on issues such as privacy, data security, and the effect of AI on the job market.

Jackson et al. (2023) conducted a sociological experiment to examine the influence of robot preachers on religious commitment. The study took place at Kodaiji Temple in Kyoto, where a robot named Mindar regularly preached sermons. It included 398 participants, who were surveyed after listening to a sermon delivered by Mindar or by a human preacher. Participants who evaluated the robot as better than the human preacher showed lower religious commitment and a lower tendency to make donations, which suggests that robots may not be perceived as reliable religious authorities.

A study conducted in South Korea in 2022 (N = 530 adults) aimed to determine the associations of the Big Five personality traits (extraversion, agreeableness, conscientiousness, neuroticism, openness) with the perception of AI. It revealed that extraverts often had negative feelings about AI, agreeable subjects saw it as positive and useful, conscientious subjects evaluated AI as useful but less socially friendly, and neurotic subjects experienced negative emotions but perceived AI as socially friendly. Individuals open to new experiences considered AI useful, and enthusiasts of new technologies usually exhibited positive attitudes towards AI (Park and Woo 2022). A different study, conducted by Schepman and Rodway (2023) on a sample of 300 adults from the UK, examined the relationships between personality traits and attitudes towards AI. Using the General Attitudes towards Artificial Intelligence Scale (GAAIS) and other measures, the authors found that introverts, agreeable subjects, and conscientious individuals approached AI more positively. They also observed that general trust in people had a positive effect on attitudes towards AI, whereas lack of trust in corporations had a negative effect on these attitudes.

Research conducted on a sample of 1015 young people from 48 countries who were entering the labor market aimed to determine their attitudes towards emotional artificial intelligence (EAI) in the context of human resource management. EAI is an artificial intelligence technology that detects, interprets, responds to, and simulates human emotions through the analysis of voice tone, facial expressions, and other behavioral indicators. More positive attitudes towards its use were found among students with a higher income, men, students in business-related fields, those in senior years, and individuals with a better understanding of EAI. Religiosity, especially among Muslims and Buddhists, was associated with greater EAI anxiety. Regional differences also influenced attitudes, with respondents from East Asia being less anxious than those from Europe, which may be related to cultural differences (Mantello et al. 2023).

A study conducted in the UK by the Centre for Data Ethics and Innovation (CDEI) on a sample of 4200 subjects aimed to determine attitudes towards AI, its areas of application, and the use of personal data. Respondents generally accepted the use of personal data by AI, but they were concerned about the security of such data and about fairness in AI use. The vast majority (81%) approved of AI collecting data for the needs of the National Health Service (NHS), and 62% approved of AI collecting data for the British government. More than half of the respondents (52%) reported limited knowledge about data use, and 31% were afraid that AI-related benefits would be distributed unequally. The study also revealed expectations of better oversight of AI and greater transparency in its operation (Clarke et al. 2022).

1.2. Hypotheses

The literature reviewed above suggests that various factors, including religious beliefs, may considerably influence the development of emotional responses to AI, both positive and negative. On this basis, we formulated the following general hypothesis:

General hypothesis: The level of religiosity has a significant effect on emotional responses to AI, conditioning both positive and negative attitudes towards this technology.

To investigate in detail how the level of religiosity translated into different types of emotions in the context of AI, we formulated two specific hypotheses:

A hypothesis concerning negative emotions: Religious people generally show higher levels of negative emotions (such as fear and anger) in response to AI compared to irreligious and indifferent individuals.

A hypothesis concerning positive emotions: Religious people generally show lower levels of positive emotions (e.g., joy) and lower levels of some negative emotions (e.g., sadness as the opposite of joy) in response to AI compared to irreligious and indifferent individuals.

2. Material and Method

2.1. Participants and Procedure

In the first step, we designed a survey questionnaire titled “Students’ Attitudes towards AI”, based on the three-component theory of attitudes by Rosenberg and Hovland (1960), which distinguishes three basic aspects of attitudes: affective, cognitive, and behavioral. The affective component of the survey focused on respondents’ emotional reactions to AI, collecting data about feelings such as enthusiasm about, fear of, or trust in artificial intelligence. The cognitive component measured respondents’ knowledge and beliefs about AI, including their understanding of its possibilities and limitations and the predicted consequences of its development. Finally, the behavioral component concerned respondents’ AI-related behaviors and actions, such as their willingness to use AI in education and personal life and their active participation in AI-related courses and projects. By combining these three aspects, the questionnaire aimed to provide a comprehensive picture of respondents’ attitudes towards artificial intelligence, reflecting both their inner feelings and beliefs and the external manifestation of these attitudes through actions. This article focuses on the affective component: we analyzed the relationship between the level of religiosity and emotions towards AI.

In the next step, we conducted a pilot of the “Students’ Attitudes towards AI” survey to test its effectiveness and comprehensibility. The questionnaire was administered to a test sample of randomly selected university students. The aim of this stage was to test the clarity and intelligibility of the questions and to check whether the structure of the questionnaire was logical or caused ambiguities or misinterpretations. The results of the pilot survey allowed us to make the necessary modifications and adjustments to the questionnaire before distributing it to a wider population of students. The pilot study was also an opportunity to assess the time needed to complete the questionnaire and to identify potential technical problems with the LimeSurvey platform, which was used to conduct the survey. The modifications were meant to ensure that the questionnaire captured students’ attitudes towards AI as comprehensively and effectively as possible, in accordance with the three-component theory of attitudes.

After all the data had been collected, we assessed the internal consistency of the measure using Cronbach’s alpha coefficient, whose value for the “Students’ Attitudes towards AI” survey questionnaire was 0.925. In our analysis, we used two types of data: answers to questions rated on a scale (e.g., a Likert scale) and quantitative variables, which together were intended to assess specific aspects of sociometric attitudes, making it possible to investigate how these different types of answers were interrelated in the sample. An alpha of 0.925 indicates very good internal consistency, which suggests that the individual questions consistently measure related constructs of attitudes towards AI. This result indicates that the questionnaire is a reliable measure that can be used in further research and analyses in this field.
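To make the reliability statistic concrete, the following Python sketch computes Cronbach’s alpha from a respondents-by-items matrix with the standard formula alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores). It is an illustration only, not the authors’ analysis script: the article does not state which software was used, and the function name `cronbach_alpha` and the toy data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix.

    Each inner list holds one respondent's answers to the same k items.
    Uses sample variances (n - 1) for both items and total scores;
    the ratio is unaffected as long as the choice is consistent.
    """
    if len(responses) < 2:
        raise ValueError("alpha needs at least two respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Column-wise item scores and row-wise total scores.
    items = [[row[j] for row in responses] for j in range(k)]
    totals = [sum(row) for row in responses]
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))
```

For example, `cronbach_alpha([[1, 1], [2, 2], [3, 3]])` returns 1.0 because the two toy items are perfectly parallel, while two items that vary independently of each other push alpha towards 0.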

2.2. Respondent-Driven Sampling (RDS)

In this article, we present the results of quantitative research on the attitudes towards AI of university and college students in Poland, from whom data were collected using respondent-driven sampling (RDS) (Salganik 2006; Salganik and Heckathorn 2004; Tyldum 2021; Sadlon 2022).

RDS is a snowball sampling technique¹ developed by Douglas Heckathorn. It relies on social networks: participants recruit further individuals, creating multistage chains of referrals (Heckathorn 2014). The technique assumes that, through statistical adjustments, a representative sample can be obtained even though the initial subjects, or seeds, are not randomly selected (Heckathorn and Cameron 2017). Referral chains based on social networks can provide representative population samples for sociological research in various social settings, including student populations (Dinamarca-Aravena and Cabezas 2023).
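The text does not specify which statistical adjustment was applied or whether respondents’ network sizes were recorded. As one illustration of the kind of adjustment RDS relies on, the following Python sketch implements the widely used Volz–Heckathorn (RDS-II) estimator, which weights each respondent by the inverse of their reported network size (degree), because well-connected people are more likely to be recruited. The function name and inputs are hypothetical and are not taken from this study.

```python
def rds_ii_proportion(degrees, has_trait):
    """Volz-Heckathorn (RDS-II) estimate of a population proportion.

    degrees   : each respondent's self-reported number of eligible contacts
    has_trait : whether each respondent belongs to the group of interest
    Each respondent is weighted by 1 / degree to offset the higher
    recruitment probability of people with larger networks.
    """
    if len(degrees) != len(has_trait):
        raise ValueError("degrees and has_trait must have equal length")
    if not degrees or any(d <= 0 for d in degrees):
        raise ValueError("degrees must be a non-empty list of positive numbers")
    weights = [1 / d for d in degrees]
    return sum(w for w, t in zip(weights, has_trait) if t) / sum(weights)
```

With degrees `[2, 4, 4]` and only the first respondent having the trait, the naive proportion is 1/3, but `rds_ii_proportion([2, 4, 4], [True, False, False])` returns 0.5 because the less connected respondent is up-weighted.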

To ensure the representativeness of the sample, we used the student numbers released by Statistics Poland (GUS) in June 2023, divided into groups of fields of study in accordance with the ISCED-F 2013 classification. The distribution obtained through RDS closely matched the Statistics Poland data. The sampling frame included student numbers for each group of fields of study in Poland, which made it possible to reliably investigate students’ attitudes towards AI across various fields of study, providing a solid basis for determining the character and diversity of those attitudes in the Polish academic community. Our research resulted in a sample of 1088 respondents, representative of the community of Polish university and college students (Table 1).

As mentioned above, we used RDS to collect data from students in Poland and took into account the student numbers reported by Statistics Poland for each group of fields of study under the ISCED-F 2013 classification. This ensured a sample representative of the diverse academic community.

The RDS procedure began with the choice of “seeds”: a small, diverse, and well-connected group of students in various fields, who were not randomly selected. The “seed respondents” (Wave 1) were informed about the purpose and manner of sampling. Next, they received an electronic link to the survey questionnaire, which generated a unique token tied to their email address in the LimeSurvey registration system, allowing the respondent to complete the questionnaire. Wave 1 respondents were surveyed using an auditorium questionnaire. After completing the questionnaire, they were asked to recruit further participants by sharing an electronic link to the questionnaire or a QR code.

As part of the RDS method, each further recruitment wave (Wave 2, Wave 3, etc.) continued the procedure until the desired sample size was attained. Additionally, to increase the scope and effectiveness of the survey, we involved study ambassadors, such as lecturers and representatives of the student government. Using this methodology, we managed to gather a sample of Polish students that was representative in terms of the diversity of fields of study.

The sample reached 1088 students in two months, and its specific segments stabilized after an average of five weeks. In our RDS, the number of newly recruited participants decreased with each successive recruitment wave. This stemmed from the expansion of respondents’ social networks, which began to overlap over time, reducing the number of potential new participants. In other words, the longer the recruitment process lasted, the smaller the pool of people available for recruitment became, because part of the network had already taken part in the study in previous “waves.” As a result, recruitment slowed with every successive wave; in total, five waves were needed to achieve the desired sample size and a sample representative of the diverse academic community.

To distinguish the waves in the study, we separated them temporally: respondents from successive waves completed the survey questionnaire in consecutive weeks. For this purpose, we used the metadata collected through LimeSurvey, such as each respondent’s questionnaire completion date. Every new respondent joining the sample was automatically assigned to the appropriate wave based on the dates of survey commencement and completion.
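A minimal Python sketch of the temporal wave assignment described above, assuming (as a simplification) that each wave corresponds to one consecutive seven-day block counted from the survey start date. The article does not give the exact rule or the fieldwork dates, so `assign_wave`, the seven-day window, and the example dates are hypothetical.

```python
from datetime import date


def assign_wave(survey_start: date, completed_on: date, days_per_wave: int = 7) -> int:
    """Map a questionnaire completion date to a recruitment wave (1-based).

    Hypothetical rule: wave n covers days (n - 1) * days_per_wave up to
    n * days_per_wave - 1 after the survey start date.
    """
    elapsed = (completed_on - survey_start).days
    if elapsed < 0:
        raise ValueError("completion date precedes survey start")
    return elapsed // days_per_wave + 1


# Hypothetical start date, used only to illustrate the mapping.
start = date(2023, 10, 2)
print(assign_wave(start, date(2023, 10, 9)))  # first day of the second week
```

A fixed calendar window keeps the rule reproducible from LimeSurvey timestamps alone; an alternative would be to follow recruiter-recruit links, which requires storing who referred whom.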

To avoid bottlenecks, a known problem in RDS, the “seeds” were selected from different types of higher education institutions and different locations, among students of different ages and at different stages of education. This diversity-oriented selection of seeds was intended to ensure broad representativeness of the sample and to avoid isolated subpopulations. Moreover, during recruitment, we actively encouraged participants to involve individuals from a variety of student circles, which promoted the creation of heterogeneous social networks.

Differences in recruitment speed can also affect research results (Wojtynska 2011). This problem shows that, in RDS studies, the recruitment process should be carefully monitored and regulated to avoid disproportions in the composition of the sample. For this reason, the seeds were deliberately selected from different higher education institutions, different locations in Poland, different years of study, and different age groups in order to ensure diversity and minimize the risk of unequal recruitment speed. This approach was meant to make the sample as balanced and representative as possible.

2.3. Measures

In this study, we used a measure of religiosity based on a combination of two variables: religious practices and self-reported religious belief. Variable D08 was the frequency of religious practices, such as visits to a church, an Orthodox church, a Protestant church, or another place of worship. Respondents rated the frequency of their participation in these practices on a scale from 1 = never to 5 = very often (several times a week). Variable D09 concerned self-rated religiosity: respondents used a scale from 1 (non-believer) to 5 (strong believer) to rate their level of religiosity. The two variables were summed, forming a scale from 2 to 10 intended to reflect the overall level of respondents’ religiosity. We then converted this scale into a categorical variable, RELIGIOSITY, with three categories (irreligious: 2–4; indifferent: 5–7; religious: 8–10). This structure enabled a more nuanced analysis of the relationship between religiosity level and emotional responses to artificial intelligence, covering both practices and personal religious identification.
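The construction of the RELIGIOSITY variable can be expressed as a short Python function. The thresholds (2–4, 5–7, 8–10) and the variable names D08 and D09 come from the text; the function name `religiosity_category` and the input validation are illustrative assumptions.

```python
def religiosity_category(d08: int, d09: int) -> str:
    """Combine practice frequency (D08) and self-rated belief (D09),
    both on 1-5 scales, into the three RELIGIOSITY categories."""
    for name, value in (("D08", d08), ("D09", d09)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    score = d08 + d09  # summed index, range 2-10
    if score <= 4:
        return "irreligious"
    if score <= 7:
        return "indifferent"
    return "religious"
```

For instance, a respondent with D08 = 3 and D09 = 3 scores 6 and falls in the indifferent group, whereas D08 = 4 and D09 = 4 gives 8, the lowest score classed as religious.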

In our study, we used a set of measures designed specifically to assess EMOTIONAL RESPONSES TO AI, based on participants’ self-reports. Labeled Z09, this set consisted of a series of questions concerning different emotions that respondents might experience in the context of AI: curiosity, fear, sadness, anger, trust, disgust, and joy. Respondents rated their emotions on a scale from 1 (strongly disagree) to 5 (strongly agree). A similar measure of emotional reactions to new technologies was successfully applied in a 2023 study of attitudes towards new technologies conducted on a representative sample of Polish adults (Soler et al. 2023). In our study, this measure made it possible to establish how respondents with different levels of religiosity reacted to the increasing presence of AI in their everyday lives.

2.4. Analytical Method

In the “Students’ Attitudes towards AI” survey, we initially decided to apply a one-way analysis of variance (ANOVA) with the F-test as a standard method for comparing mean emotion scores between the three groups: irreligious, religious, and indifferent. As the key instrument in ANOVA, the F-test is used to assess whether the differences between group means are statistically significant. Its application, however, requires meeting certain assumptions, including equality of variance across the groups.
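For reference, the classic one-way ANOVA F statistic compares the between-group mean square with the within-group mean square. The pure-Python sketch below is illustrative; the function name `anova_f` is hypothetical, and in practice a library routine such as SciPy’s `scipy.stats.f_oneway` returns the same statistic together with its p-value.

```python
from statistics import fmean


def anova_f(*groups):
    """Classic one-way ANOVA. Returns (F, df_between, df_within).

    Assumes equal variances across groups, which is the assumption
    checked by Levene's test.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    group_means = [fmean(g) for g in groups]
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

For three toy groups `[1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]`, the between-group sum of squares is 54 and the within-group sum is 6, giving F = (54 / 2) / (6 / 6) = 27 with 2 and 6 degrees of freedom.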

Therefore, we first performed Levene’s test of homogeneity of variance. For the emotion variables of curiosity and fear, Levene’s test indicated homogeneity of variance, which is usually the basis for using the classic ANOVA F-test. However, for the emotion variables of sadness, anger, trust, and disgust, the p-values were lower than 0.05, which signaled statistically significant differences in variance across the groups².
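Levene’s test is itself a one-way ANOVA computed on each observation’s absolute deviation from its group center. The sketch below uses the original mean-centered version by default; the article does not say which variant was used (SciPy’s `scipy.stats.levene` defaults to the median-centered Brown–Forsythe variant, `center='median'`). The function name `levene_w` is hypothetical, and the p-value would come from an F distribution with k - 1 and N - k degrees of freedom.

```python
from statistics import fmean, median


def levene_w(*groups, center="mean"):
    """Levene's W statistic for homogeneity of variance across groups.

    center="mean"   -> Levene's original test
    center="median" -> Brown-Forsythe variant
    """
    if center not in ("mean", "median"):
        raise ValueError("center must be 'mean' or 'median'")
    locate = fmean if center == "mean" else median
    # Absolute deviation of each observation from its own group's center.
    devs = []
    for g in groups:
        c = locate(g)
        devs.append([abs(x - c) for x in g])
    k = len(devs)
    n_total = sum(len(d) for d in devs)
    dev_means = [fmean(d) for d in devs]
    grand_mean = fmean([z for d in devs for z in d])
    # One-way ANOVA F computed on the absolute deviations.
    ss_between = sum(len(d) * (m - grand_mean) ** 2 for d, m in zip(devs, dev_means))
    ss_within = sum((z - m) ** 2 for d, m in zip(devs, dev_means) for z in d)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))
```

Groups with identical spread, such as `[1, 2, 3]` and `[4, 5, 6]`, give W = 0, while `[1, 2, 3]` against the more dispersed `[2, 4, 6]` gives W = 0.8.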

The violation of the equality of variance assumption for these emotions led us to apply an alternative method of analysis. We therefore used Welch’s ANOVA (W-test), which is more robust to unequal variances and controls type I error rates more effectively under such conditions. The W-test allowed us to compare mean emotion scores across the groups even when the standard assumptions for the F-test were not met. This approach made the results more reliable given the differences in data distributions across the groups (Delacre et al. 2019). In other words, Welch’s ANOVA adjusted the analysis to the observed inequalities of variance, making it possible to reliably compare mean emotion scores between respondents with different levels of religiosity.
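Welch’s statistic replaces the pooled error term with precision weights w_i = n_i / s_i², so groups with larger variances count for less. The following pure-Python sketch follows the standard Welch formulas; it is an illustration rather than the authors’ code, and the function name `welch_anova` is hypothetical. The p-value is the upper tail of the F distribution with the returned degrees of freedom, for example `scipy.stats.f.sf(F_w, df1, df2)` if SciPy is available.

```python
from statistics import fmean, variance


def welch_anova(*groups):
    """Welch's one-way ANOVA. Returns (F_w, df1, df2).

    Each group needs at least two observations and non-zero variance.
    """
    k = len(groups)
    n = [len(g) for g in groups]
    means = [fmean(g) for g in groups]
    # Precision weights: w_i = n_i / s_i^2 (sample variance).
    weights = [ni / variance(g) for ni, g in zip(n, groups)]
    w_sum = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / w_sum
    # Weighted between-group mean square.
    a = sum(w * (m - weighted_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    # Correction term shared by the denominator and df2.
    lam = sum((1 - w / w_sum) ** 2 / (ni - 1) for w, ni in zip(weights, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam
    df2 = (k ** 2 - 1) / (3 * lam)
    return a / b, k - 1, df2
```

As a check, with two groups the statistic equals the square of Welch’s t statistic: `welch_anova([1, 2, 3], [2, 4, 6])` gives F_w = 2.4 with df2 of about 2.94, matching the Welch–Satterthwaite degrees of freedom.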

3. Results

3.1. Religiosity

According to the data, nearly one in three students in Poland (29.3%) reported never visiting places of worship. Additionally, 21.6% of the respondents very rarely attended religious ceremonies. Together, these groups represented 50.9% of the sample, indicating that a slight majority engaged in religious practices infrequently or not at all. Those who attended ceremonies irregularly accounted for 21.5% of the sample. A smaller proportion of students, 17.6%, reported going to places of worship moderately often. A minority of the respondents (9.9%) indicated that they were practicing believers who frequently attended ceremonies. These data illustrate diverse frequencies of religious practice among students, with a notable inclination towards less frequent participation (see Table 2).

In the case of self-reported belief, about one in five students identified as non-believers, forming 19.9% of the sample. Those who were indifferent to religion accounted for 15.2% of the participants. A significant portion of the sample, 26.3%, were undecided but still attached to religious traditions. Approximately one in four students considered themselves believers, with this group representing 25.4% of the sample. Lastly, strong believers constituted 13.3% of the students, equating to roughly one in seven respondents (see Table 3).

The analysis of consolidated data on religiosity allowed us to divide the sample into three categories: irreligious, indifferent, and religious. The results showed that 36.3% of the respondents were classified as irreligious, indicating little or no religious belief or practice. The largest group was indifferent individuals, accounting for 39.4% of the sample; indifference may reflect agnostic attitudes or low commitment to religious issues. Religious respondents, namely those with a higher level of religiosity, constituted 24.3% of the sample. Overall, these data may attest to a trend towards the deinstitutionalization of religion and to an individual and subjective approach to religious matters among younger generations (see Table 4).

3.2. Emotions

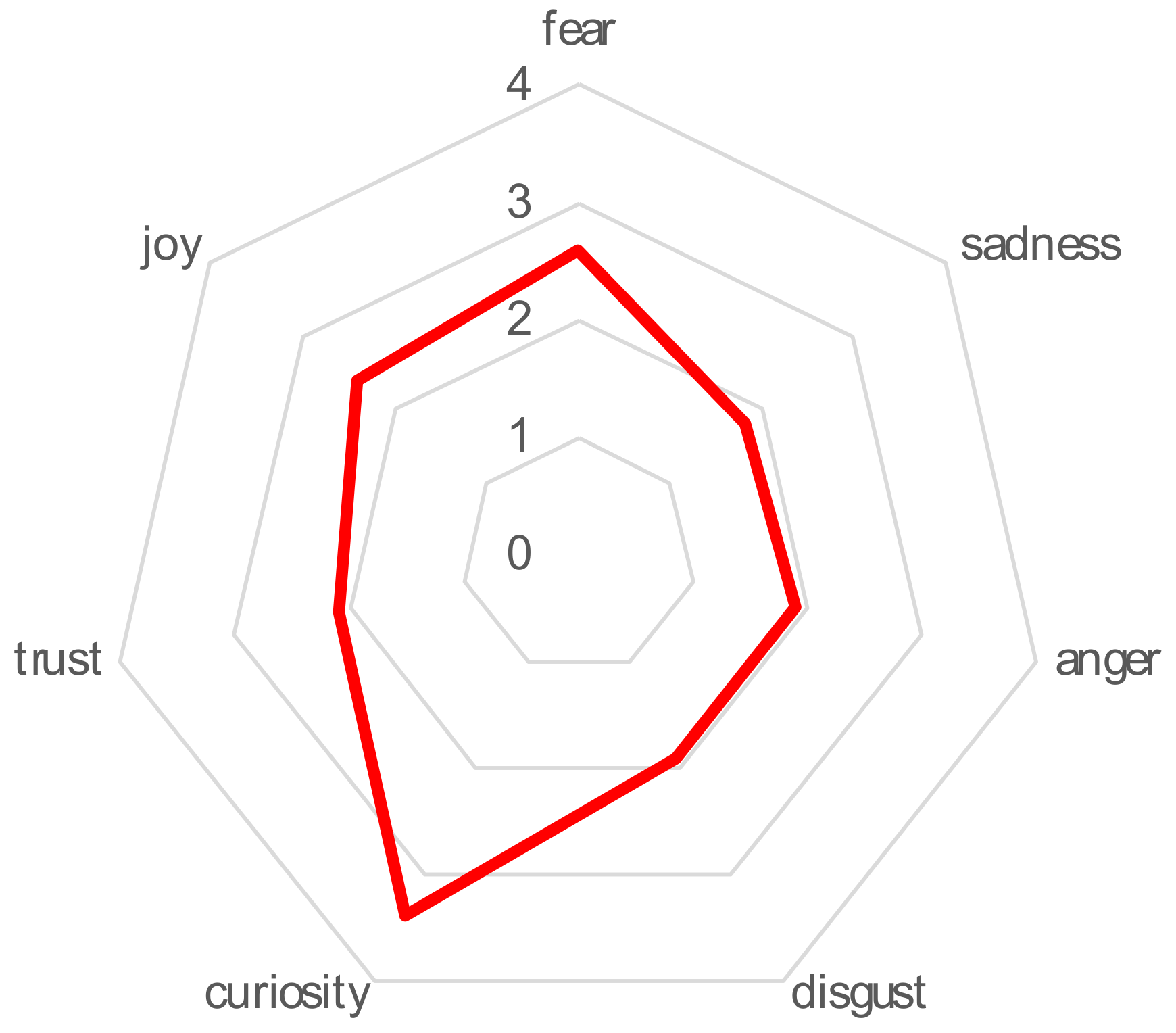

When analyzing students’ emotions associated with AI, one can observe diverse reactions. Curiosity (M = 3.3, SD = 1.2) was fairly evenly distributed among the respondents, with the largest share (25.5%) choosing 3 on the 5-point scale, which indicates moderate curiosity. Reactions of fear (M = 2.78, SD = 1.3) were likewise fairly evenly distributed, though only 11.0% of respondents indicated very strong fear (a rating of 5).

More than half of the respondents (52.3%) reported feeling no sadness in the context of AI (M = 2.3, SD = 1.3), choosing the lowest level on the scale (a rating of 1). Anger (M = 2.3, SD = 1.3) was also rarely experienced towards AI: slightly more than half of the students (53.8%) indicated its absence. The majority of students felt no disgust (M = 2.3, SD = 1.3) towards AI either; 54.4% of respondents chose option 1, indicating a lack of such feelings.

Students’ trust in AI (M = 2.3, SD = 1.2) was limited; 37.7% of the respondents chose the lowest rating (1).

Joy (M = 2.5, SD = 1.2) induced by AI was also experienced to various degrees, with 30.4% of respondents selecting option 1, which means a lack of joy, and a fairly high percentage (27.3%) indicating a moderate level of joy (a rating of 3). The variations in these emotional responses are visually represented in Figure 1.
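The descriptive statistics reported above (mean, standard deviation, and the share of respondents choosing each point of the 5-point scale) can be reproduced from raw ratings along the following lines. This is a minimal sketch, assuming the ratings for one emotion are stored as a list of integers from 1 to 5 and that the reported SD is the sample standard deviation (n - 1 denominator), which the text does not state. The function name `describe_ratings` and the sample data are hypothetical and are not the study's data.

```python
from collections import Counter
from statistics import mean, stdev


def describe_ratings(ratings, scale=(1, 2, 3, 4, 5)):
    """Return (mean, sample SD, percentage per scale point) for Likert ratings.

    Hypothetical helper: assumes every rating is an integer on `scale`;
    the SD uses the n - 1 denominator (statistics.stdev).
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to estimate an SD")
    invalid = [r for r in ratings if r not in scale]
    if invalid:
        raise ValueError(f"ratings outside the scale: {invalid}")
    counts = Counter(ratings)
    n = len(ratings)
    # Percentage of respondents choosing each point, e.g. "52.3% chose 1".
    shares = {point: 100.0 * counts[point] / n for point in scale}
    return mean(ratings), stdev(ratings), shares


# Hypothetical ratings for a single emotion (not the study's data).
sadness = [1, 1, 2, 3, 5]
m, sd, shares = describe_ratings(sadness)
print(f"M = {m:.1f}, SD = {sd:.1f}, share choosing 1 = {shares[1]:.1f}%")
# prints: M = 2.4, SD = 1.7, share choosing 1 = 40.0%
```

Reporting the per-point shares alongside M and SD matters here because several emotions share the same mean (2.3) while differing in how many respondents chose the lowest rating.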

In summation, this study indicates mixed emotions about AI, with a tendency towards moderate trust and curiosity but also with a clear lack of intensive negative emotions such as sadness, anger, and disgust. Most of the respondents seemed to approach the topic of AI with reserve or moderate interest, without strong emotional reactions. The diverse emotional responses across different religiosity levels are further illustrated in

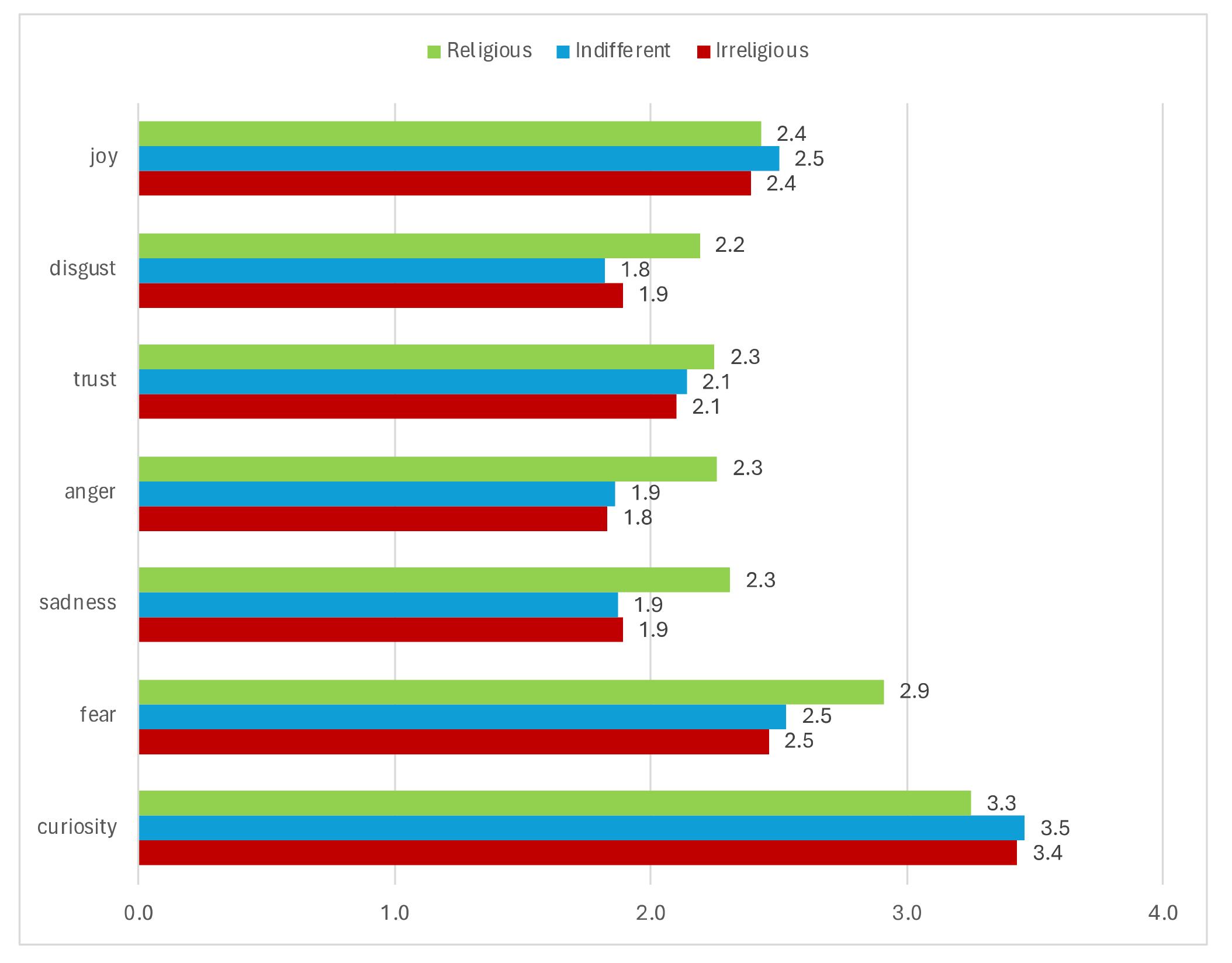

Figure 2.

The application of Welch’s ANOVA test (W-test) allowed us to assess statistical differences in the levels of the following emotions: curiosity, fear, sadness, anger, trust, disgust, and joy. No statistical differences were observed in the level of curiosity between groups with different levels of religiosity (W-test: 2.420, p = 0.090), which suggests a similar degree of interest in AI issues across all these groups. However, in the cases of fear (W-test: 7.176, p < 0.001), sadness (W-test: 10.726, p < 0.001), and anger (W-test: 9.958, p < 0.001), we found statistically significant differences between the groups, which shows that these emotions were experienced to different degrees by individuals with different religiosity levels.

In the cases of trust (W-test: 1.219, p = 0.296) and joy (W-test: 0.829, p = 0.437), there were no statistically significant differences across the groups, which suggests that these emotions were experienced with similar intensity by all respondents, regardless of their religiosity level. By contrast, in the case of disgust (W-test: 6.971, p = 0.001), we did observe statistically significant differences across the groups.
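The paper does not state which software produced the W statistics, so the following is an independent sketch of the standard Welch one-way ANOVA formulas rather than the authors' procedure. Each group is weighted by n_i / s_i², which is what lets the test tolerate unequal variances across the irreligious, indifferent, and religious groups. The function name `welch_anova` and the ratings are hypothetical, and the sketch returns only the statistic and its degrees of freedom; assuming SciPy is available, the p-value would be the upper tail of the F distribution, `scipy.stats.f.sf(f_stat, df1, df2)`.

```python
from statistics import mean, variance


def welch_anova(*groups):
    """Welch's one-way ANOVA for k independent groups (hypothetical helper).

    Returns (F, df1, df2). Unlike classical ANOVA, it does not assume
    equal variances: each group is weighted by n_i / s_i^2.
    """
    k = len(groups)
    if k < 2:
        raise ValueError("need at least two groups")
    n = [len(g) for g in groups]
    if min(n) < 2:
        raise ValueError("each group needs at least two observations")
    m = [mean(g) for g in groups]
    v = [variance(g) for g in groups]  # sample variances (n - 1 denominator)
    if min(v) == 0:
        raise ValueError("each group needs non-zero variance")
    w = [ni / vi for ni, vi in zip(n, v)]
    w_sum = sum(w)
    # Variance-weighted grand mean.
    grand = sum(wi * mi for wi, mi in zip(w, m)) / w_sum
    # Weighted between-group mean square.
    a = sum(wi * (mi - grand) ** 2 for wi, mi in zip(w, m)) / (k - 1)
    # Correction term that also drives the denominator degrees of freedom.
    lam = sum((1 - wi / w_sum) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam
    f_stat = a / b
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2


# Hypothetical 1-5 ratings for three religiosity groups (not the study's data).
f_stat, df1, df2 = welch_anova([1, 2, 3], [2, 3, 4], [3, 4, 5])
print(f"F({df1}, {df2:.1f}) = {f_stat:.3f}")
# prints: F(2, 4.0) = 2.571
```

Because df2 is estimated from the data, it is usually non-integer in real samples, which is why it is printed with a decimal.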

Overall, the results indicate significant differences in emotional responses to AI across the groups with different religious attitudes, particularly in the context of negative emotions such as fear, sadness, and anger. Such differences in the experience of emotions may influence attitudes towards AI and its acceptance in different social groups. These results highlight the significance of including the religious aspect in research on responses to new technologies, such as AI.

After performing Welch’s ANOVA, we applied the Games–Howell test as a post hoc method to compare the groups pairwise. This test proved particularly useful in identifying which pairs of groups differed in their emotional responses to AI. The detailed results of these pairwise comparisons are presented in Table 5.
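A minimal sketch of the Games–Howell statistics is given below to show what the pairwise comparison computes; it is not the authors' code, and the function name `games_howell_pairs` and the ratings are hypothetical. For each pair of groups, it forms Welch's t from the two means and their squared standard errors, converts it to the studentized-range statistic q = |t|·√2, and estimates the Welch–Satterthwaite degrees of freedom. The p-value is not computed here; assuming SciPy 1.7 or later is available, it would be `scipy.stats.studentized_range.sf(q, k, df)` with k the number of groups.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def games_howell_pairs(groups):
    """Games-Howell statistics for every pair of groups (hypothetical helper).

    `groups` maps a group label to its observations. For each pair, returns
    (label_a, label_b, mean difference, q, Welch-Satterthwaite df).
    """
    stats = {}
    for label, obs in groups.items():
        if len(obs) < 2:
            raise ValueError(f"group {label!r} needs at least two observations")
        v = variance(obs)  # sample variance (n - 1 denominator)
        if v == 0:
            raise ValueError(f"group {label!r} needs non-zero variance")
        stats[label] = (mean(obs), v, len(obs))
    results = []
    for a, b in combinations(groups, 2):
        m_a, v_a, n_a = stats[a]
        m_b, v_b, n_b = stats[b]
        se_a, se_b = v_a / n_a, v_b / n_b  # squared standard errors
        diff = m_a - m_b
        # q = |t| * sqrt(2), where t is Welch's t statistic for the pair.
        q = abs(diff) / sqrt(se_a + se_b) * sqrt(2)
        df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
        results.append((a, b, diff, q, df))
    return results


# Hypothetical 1-5 ratings (not the study's data).
ratings = {
    "irreligious": [1, 2, 3],
    "indifferent": [2, 3, 4],
    "religious": [3, 4, 5],
}
for a, b, diff, q, df in games_howell_pairs(ratings):
    print(f"{a} vs {b}: diff = {diff:+.2f}, q = {q:.3f}, df = {df:.1f}")
```

Like Welch's ANOVA, Games–Howell uses each pair's own variances and degrees of freedom rather than a pooled error term, which is why it is a natural post hoc companion to the W-test.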

The results of the “Students’ Attitudes towards AI” survey indicate significant differences in emotional responses to artificial intelligence across the religiosity groups. Religious individuals showed a higher level of fear than both irreligious and indifferent individuals. By contrast, the religious group reported a lower level of sadness than the remaining two groups. The religious group also showed a higher level of anger, and a lower level of disgust, than irreligious and indifferent respondents.

Given these results, respondents in the religious group appear more susceptible to fear regarding AI than their irreligious or indifferent counterparts. Religious respondents reported less sadness towards artificial intelligence but significantly more anger. This points to a diverse emotional landscape in which fear and anger may arise from concerns about AI’s potential to challenge human dignity and the values cherished in many religious traditions. Moreover, religious respondents reported less disgust towards AI than irreligious or indifferent respondents, suggesting that emotional responses to AI among religious people are complex and multifaceted. These findings indicate that the level of religiosity is associated with more intense and varied emotional reactions to this technology, which may influence the acceptance of and attitudes towards artificial intelligence. They also highlight the need for a more nuanced consideration of emotional responses to AI, one that takes into account individual differences in values and beliefs.

4. Discussion

The results of the “Students’ Attitudes towards AI” survey make it possible to directly address the hypotheses concerning the effect of religiosity level on emotional responses to AI.

The results support the claim that the level of religiosity plays an important role in shaping emotional reactions to AI. Religious individuals showed differentiated emotional responses to AI, which supports the general hypothesis that religiosity significantly affects attitudes towards this technology. This suggests an association between religiosity level and the perception of, and emotional response to, AI. Consequently, these results highlight the need to include religious aspects in further research on attitudes towards AI.

The results of this study support hypothesis H1, which postulated that religiosity can intensify negative emotions such as fear and anger towards AI: religious individuals exhibited considerably higher levels of fear and anger than irreligious and indifferent respondents. This is consistent with the assumption that religiosity can intensify negative emotional reactions to new technologies such as AI.

The results of this study do not clearly support the hypothesis concerning positive emotions (H2). Although we found a lower level of sadness among religious respondents, which may indicate a lower intensity of negative emotions, the absence of significant differences across the groups in the level of joy does not provide sufficient evidence to unambiguously support H2. This may mean that the relationship between religiosity and positive emotions towards AI is more complex and requires further research.

The results of the “Students’ Attitudes towards AI” survey indicate a complex effect of religiosity level on emotional responses to artificial intelligence. The survey revealed significant emotional differences between groups with different levels of religiosity, especially in negative emotions such as fear, sadness, and anger. These results suggest that religiosity is associated with more complex and intense emotions towards AI technology. Our findings are consistent with the results of a study concerning cultural and religious differences in the perception of AI (Yam et al. 2023) and a study indicating the importance of religious and ethical values in the perception of AI (Abadi et al. 2023). The studies by Uttam (2023), Shibuya and Sikka (2023), and Al-Kassimi (2023), which underscore the effect of religion and culture on AI-related emotional responses, are also in line with our findings.

Our study reveals significant differences in emotional responses to artificial intelligence across individuals with different levels of religiosity, with more religious individuals showing higher levels of fear and anger towards AI. This corresponds to the results of Mantello et al. (2023), who found a negative correlation between religiosity and attitudes towards emotional artificial intelligence (EAI), particularly among followers of Islam and Buddhism. These results are consistent with previous findings that deeply rooted religious beliefs may influence the perception and acceptance of new technologies. Both the results reported by Mantello et al. and our findings point to the significance of the religious and cultural context in shaping emotional responses to AI technology (Mantello et al. 2023). Our results show stronger AI-related fears and uncertainty among religious respondents, which may stem from anxiety about whether technological development is consistent with their ethical and spiritual values.

Our findings indicate that religious individuals experience a higher level of fear when confronted with AI than irreligious or indifferent ones. This increased fear may stem from anxiety about the unknown and about the potential threat that new technologies may pose to religious values and beliefs. It can be interpreted as a defensive reaction to AI, which is perceived as a threat to traditional values and moral principles deeply rooted in religious doctrines. In modern societies, people frequently tend to treat religious beliefs as subjective opinions, as opposed to beliefs based on empirical evidence, which are perceived as objective knowledge (McGuire 2008). In this context, religious people may fear that advanced technologies such as AI could lead to the deterioration of interpersonal relationships (Glikson and Woolley 2020), a decrease in the significance of human empathy (Montemayor et al. 2022), or the blurring of boundaries between human and machine intelligence (Galaz et al. 2021).

Additionally, the increased fear may be related to a sense that AI threatens the unique role of the human being as a creature endowed with free will and moral choice. In many religions, humans are perceived as exceptional beings (Fel 2018), and AI can be seen as a challenge to this uniqueness. In particular, the development of autonomous AI systems capable of making decisions independently of human supervision can cause anxiety about the possibility of losing control over technology and its unpredictable outcomes (Ho et al. 2023; Park et al. 2023).

Moreover, fear among religious people may be fueled by public debates, which often present artificial intelligence in the context of negative scenarios, such as machines supplanting the human workforce (Modliński et al. 2023), threats to privacy and security (Siriwardhana et al. 2021), and even apocalyptic scenarios associated with the excessive autonomy of AI (Federspiel et al. 2023). Such narratives may amplify existing fears and lead to greater distrust of new technologies.

The lower level of sadness among religious people found in our study may stem from the deeply rooted conviction that religion provides not only a system of values but also networks of social and spiritual support (Isański et al. 2023), which can help in coping with the uncertainty and anxiety caused by rapid technological change. For many religious people, faith is a source of security and hope (Adamczyk and Jarek 2020), which may buffer against sadness about the potential threat that new technologies pose to the traditional way of life.

Religion often provides its followers with an interpretative framework that helps them see the world and its changes as meaningful. As technology grows in importance, churches and other religious institutions are losing their monopolistic position in the market of worldviews and interpretations of life (Mariański 2023). This implies that they need to adapt to technological realities without violating the principles of faith. Religious institutions may begin to see technological progress as compatible with their activities, which helps followers adjust to and find their place in the new technological reality. Some religious traditions hold that technologies such as AI can support religious practices. Such an approach can mitigate negative emotions, such as sadness, in the face of the challenges posed by modern technologies.

The tendency to harmonize religion with technological progress finds expression in the practical activities of religious communities. Examples of such adaptation of religiosity and mediatization of religious practices include moving religious activities to the Internet (Bingaman 2023). Research on the response of churches to the COVID-19 pandemic shows that they quickly adapted to new technologies, enabling religious practices to continue online, which attests to the flexibility and adaptability of religious communities in the face of technological change (Cooper et al. 2021). Additionally, practices such as prayer, meditation, and participation in rituals can serve as mechanisms for coping with stress and anxiety, contributing to greater emotional resilience and weaker feelings of sadness (Fel et al. 2023; Jurek et al. 2023). These activities can give believers a sense of control and inner peace, even amid rapid technological change.

The higher level of anger that religious people show in response to AI may be related to strong anxieties concerning the ethical and moral implications of emerging technologies. Anger may therefore stem from the feeling that AI is being developed without the necessary reflection on its potential impact on traditional values and social norms (Sætra and Fosch-Villaronga 2021).

These anxieties are compounded by the absence of codes of ethics that would reconcile technological innovations with religious and spiritual values, which raises questions about the ethical grounding of specific technological implementations. It remains unclear how categories such as fairness, transparency, and human supervision should be applied concretely in AI systems, and what “human-centered artificial intelligence” means in light of religious and ethical values (Hagendorff 2020). A revised approach to ethics has been recommended, one that covers its various branches, from the ethics of technology, through machine ethics, computer ethics, and information ethics, to data ethics; such a change is considered crucial for ethics to be effective and appropriate to rapidly developing technologies (Gebru et al. 2021). Moreover, the higher level of anger may also express fears that AI could be applied in ways that violate the privacy, dignity, or freedom of individuals, which would be contrary to many religious beliefs about the sacredness of life and personal autonomy. Examples of such applications include surveillance, behavioral control, and even the manipulation of people by means of advanced technologies.

The lower level of disgust observed among religious believers in response to AI may reflect their ability to see these technologies in a wider and often spiritual context. As mentioned before, many religious people may perceive new technologies not as a threat but as tools that can support their religion or even enrich spiritual and social life.

The development of new technologies, especially the Internet, has enabled the emergence of new forms of expressing religiosity and religious practice and has given rise to new religious movements. It has also enabled the adaptation and reinterpretation of traditional religious practices in such a way that they can function and develop in the digital space. In other words, new Internet technologies have not only led to the emergence of new forms of religiosity but have also made it possible to transfer and adjust existing, traditional religious practices to a digital environment. This opens new possibilities for their development and expression (Campbell 2011).

In this perspective, technologies such as AI are not perceived as inherently good or evil; their evaluation depends on the way people use them. They can be used to support spiritual development, education, and communication or even to achieve a deeper understanding of religious texts and practices.

The lower level of disgust observed may also stem from the fact that many religions promote values such as mercy, compassion, and understanding. These values may encourage the faithful to approach new technologies with greater openness and a less critical attitude. Rather than feel disgust, religious people may be more inclined to look for ways in which technology can serve higher purposes such as helping the needy, improving the quality of life, or even spiritual development.

However, the weaker disgust response does not necessarily imply a lack of criticism or an unawareness of potential threats. Religious people may still be aware of and concerned about the moral consequences of using AI technology while, at the same time, seeing it as a potential tool for achieving positive goals.

In summation, the lower level of disgust towards AI among religious people can be interpreted as resulting from their ability to integrate new technologies with their spiritual worldview and values. Balanced with ethical and moral responsibility, this attitude of openness can contribute to a more harmonious coexistence between technological progress and traditional value systems.