Abstract

The digital transformation of the offshore and maritime industries will present new safety challenges due to the rapid change in technology and underlying gaps in domain knowledge, substantially affecting maritime operations. To help anticipate and address issues that may arise in the move to autonomous maritime operations, this research applies a human-centered approach to developing decision support technology, specifically in the context of ice management operations. New technologies, such as training simulators and onboard decision support systems, present opportunities to close the gaps in competence and proficiency. Training simulators, for example, are useful human behaviour laboratories for capturing expert knowledge and testing training interventions. The information gathered from simulators can be integrated into a decision support system to provide seafarers with onboard guidance in real time. The purpose of this research is two-fold: (1) to capture knowledge held by expert seafarers, and (2) to transform this expert knowledge into a database for the development of a decision support technology. This paper demonstrates the use of semi-structured interviews and bridge simulator exercises as a means to capture seafarer experience and best operating practices for offshore ice management. A case-based reasoning (CBR) model is used to translate the results of the knowledge capture exercises into an early-stage ice management decision support system. This paper describes the methods used and the insights gained from translating the interview data and expert performance from the bridge simulator into a case base that can be referenced by the CBR model.

1. Introduction

The digital transformation of the offshore and maritime industries will present new safety challenges for operations in ice-covered waters. A main safety concern with the rapid advancement of automation is its underlying goal of replacing human operators with fully autonomous ships. Replacing crews' responsibilities with automated functions is problematic because many researchers have argued that humans are vital to the safe operation of complex socio-technical systems [1,2,3]. In response to the technology-driven transformation, offshore and maritime regulators and classification societies, such as the International Maritime Organization (IMO) and Lloyd's Register, have developed regulations and guidelines to shape the safe implementation of automation for offshore and maritime operations [4,5].

Researchers in the maritime domain have advocated for a more human-centered approach to the development and integration of autonomous operations [6,7]. This is especially important for offshore ice management operations, as the safety of these operations relies heavily on the judgement and decision making of experienced captains and their bridge teams, and on the team's ability to routinely perform task adaptations. The expectations of seafarers and the current need for routine adaptations and customized ice management plans highlight two underlying knowledge gaps in the domain: (1) time-consuming and informal on-the-job training of new mates and cadets, and (2) a lack of consensus on operating procedures for the safe management of pack ice offshore. These gaps highlight the need to capture expert knowledge and to standardize the distribution of ice management training. Therefore, emphasis should be placed on improving the transfer of skills from experienced operators to new mates and cadets. To do this, we need a better understanding of how seafarers perform current pack ice management operations and how they adapt to address problems.

A human-centered approach to developing a decision support system can help with the needed knowledge capture and mobilization. Our approach is to use simulation technology to capture expert knowledge on offshore pack ice management operations. We plan to develop an expert-informed decision support system from the information gained from the simulation exercises to foster better knowledge exchange between seafarers. The expert knowledge can be embedded into a decision support system using case-based reasoning (CBR). CBR is well suited to capturing the expertise of captains and providing standardized assistance and learning opportunities to cadets and other seafarers.

This paper focuses on the early development stages of a CBR decision support system, which include (1) gathering contextual knowledge from expert seafarers (i.e., data collection), and (2) indexing the seafarers' experiences from a variety of scenarios into a CBR case base. This paper describes the methods used and the insights gained from translating interview data and expert performance from a bridge simulator into a case base that can be referenced by the CBR model.

2. Background

2.1. Offshore Ice Management Operations

The year-round activity of offshore oil operations, combined with the harsh ice conditions off the coast of Newfoundland, presents an ongoing need for ice management operations to protect the oil platforms in the area and ensure safe operations. In particular, the Grand Banks commonly experience annual seasons of icebergs and variable seasons of pack ice [8,9,10]. Ice management operations offshore involve a broad scope of activities that include (1) monitoring and forecasting ice conditions, (2) detecting and tracking icebergs and pack ice, (3) reporting ice conditions, and (4) avoiding, breaking, and deflecting ice threats [9,11].

Focusing on the physical interventions of ice management, there is a variety of operations, such as towing icebergs, deflecting ice or icebergs with water cannons and prop wash, and breaking up and dispersing sea ice [9]. This research focuses on offshore operations used to deflect or disperse encroaching pack ice from the operational area of an oil platform. In their review of pack ice management offshore Newfoundland, Dunderdale and Wright outlined five common techniques used by offshore support vessels to physically move pack ice: linear, sector, circular, stationary/prop wash, and pushing [8]. Combinations of these techniques are used to protect offshore platforms against approaching ice. Overall, operations for managing pack ice are complex and performed in dynamic circumstances (e.g., unpredictable maritime winds and currents, and considerable time constraints to respond to danger). Because of this uncertainty, it is difficult to anticipate how a situation will unfold. Since each pack ice situation offshore is different, many operations require customized strategies and approaches.

The majority of ice management training is provided through on-the-job experience in which inexperienced personnel learn informally by supporting experienced crewmembers in performing ice management operations [12]. Informal on-the-job training is both a regulatory and operational problem. From a regulatory perspective, it has taken time to put new regulations into practice. From an operational perspective, much of the competence requirements and training guidelines associated with ice management operations have been developed for navigating Arctic waters, which may not be directly applicable to the specific context of pack ice management around offshore oil platforms.

In Canada, much of the regulatory guidance on ice management training stems from two model courses (Basic and Advanced Training for Ships Operating in Polar Waters) that were developed by the IMO in collaboration with the Marine Institute's Centre for Marine Simulation and the professional organization Master Mariners of Canada [13,14]. Other regulatory guidance focuses on the certification requirements of 'ice navigators', which are based on: (1) the IMO International Code for Ships Operating in Polar Waters (Polar Code) [15], and (2) the Canadian Arctic Shipping Safety and Pollution Prevention Regulations (ASPPR) that were developed for navigating in Arctic waters [16]. According to Canada's ASPPR regulations, a seafarer receives the designation of 'ice navigator' after serving on a vessel as master for at least 50 days, of which 30 days must have been in Arctic waters when the vessel was in ice conditions requiring ice avoidance or ice breaking maneuvers [12,16]. By comparison, to receive certification as an 'ice advisor', the Joint Industry–Government Guidelines for the Control vessels in Ice Control Zones of Eastern Canada (JIGs) require masters to complete at least 90 days navigating in ice-covered waters over the course of 5 years (e.g., six trips, each with a minimum of 15 days in ice conditions) [17].
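The two day-count thresholds above can be made concrete with a small check. This is only a simplified sketch of the criteria as summarized in this paragraph, not a reading of the ASPPR or JIGs text; the `Trip` record, its field names, and the rolling five-year window are hypothetical modelling choices.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trip:
    # Hypothetical record of one voyage; field names are illustrative only.
    year: int             # year the trip took place
    days_as_master: int   # days served as master on this trip
    arctic_ice_days: int  # of those, days in Arctic ice requiring avoidance/breaking
    ice_days: int         # days navigating in ice-covered waters (any region)


def is_ice_navigator(trips: List[Trip]) -> bool:
    """ASPPR criterion as summarized in the text: >= 50 master days, >= 30 in Arctic ice."""
    master = sum(t.days_as_master for t in trips)
    arctic = sum(t.arctic_ice_days for t in trips)
    return master >= 50 and arctic >= 30


def is_ice_advisor(trips: List[Trip], current_year: int, window_years: int = 5) -> bool:
    """JIGs criterion as summarized in the text: >= 90 days in ice within the last 5 years."""
    recent = [t for t in trips if current_year - t.year < window_years]
    return sum(t.ice_days for t in recent) >= 90


# The text's example: six trips with 15 days in ice each (6 x 15 = 90 days).
six_trips = [Trip(year=2016 + i % 5, days_as_master=15, arctic_ice_days=0, ice_days=15)
             for i in range(6)]
print(is_ice_advisor(six_trips, current_year=2020))  # True
print(is_ice_navigator(six_trips))  # False: 90 master days, but none in Arctic ice
```

The example highlights the point made in the paragraph: the two designations count different kinds of sea time, so meeting one threshold says little about the other.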

Applying the regulations and guidelines for an ice advisor to the context of offshore ice management, it will take seafarers many ice seasons to develop the proficiency and expertise needed to respond to situations where offshore platforms are impeded by sea ice. As such, the conventional on-the-job form of ice management training has created an inefficient knowledge exchange from captains to new mates and training cadets. To compound this issue further, pack ice management operations offshore rely on the inherited experience of captains and their bridge teams, and on the team's joint ability to develop ad hoc strategies and perform task adaptations. Consequently, the informal nature of on-the-job practice for ice operations has created a skill disparity in the domain. This is evident from research by Veitch et al. [18], which tasked experienced seafarers with performing ice management operations in emergency conditions in a simulator and found a high level of variability in performance. This variability in ice management skills amongst experienced seafarers has likely propagated down the hierarchy to mates and cadets.

2.2. Case-Based Reasoning Theory

Case-based reasoning (CBR) is a constructivist approach to problem solving that is useful for machine learning and artificial intelligence applications. The aim of CBR is to mimic how experienced people draw on their past experiences to form plausible approaches to new situations. The CBR method is suitable for domains that require regular interpretation of open-ended or poorly understood problems [19]. Over the last 30 years, CBR has been employed alone or combined with other methods (e.g., rule-based reasoning) to develop artificially intelligent applications for decision support and learning in education [20], medical diagnosis [21,22], and environmental emergency decision making [23].

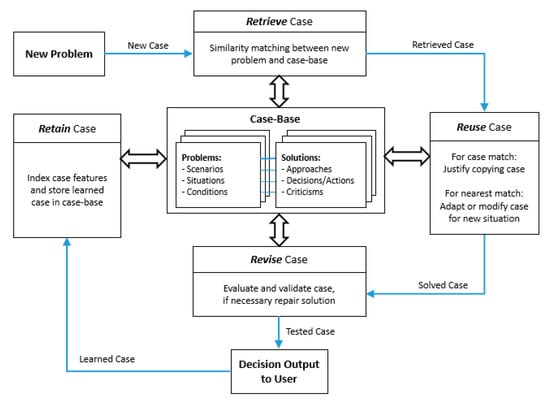

Figure 1 provides the general CBR model. The CBR model builds a repository of previously established knowledge and specific examples from past situations, known as a case base, to help understand new problems and offer solutions [20,24,25]. Cases can take the form of successfully solved problems as well as failed problems [24]. The solved problems create a basis of features and examples used for matching cases to the conditions of new situations. The failed problems serve as reminders of which approaches did not work and how to avoid repeating mistakes when presented with new problems.

Figure 1.

Case-based reasoning model for decision support, showing the retrieval, reuse, revision, and retention of cases (adapted from [24] with permission from IOS Press, 1994).

As depicted in Figure 1, there are four main stages of the CBR model: retrieval, reuse, revision, and retention. The following explanation of the stages is based on Aamodt and Plaza [24]. Case retrieval involves identifying features of a new problem, searching for a feature match within the existing case base, and selecting the matched or partially matched case for use. Case reuse is a closer examination of the reusable aspects of, and differences between, the new and retrieved cases. For a matched case, a copy of the retrieved case is reused as the solution for the new situation. For a partially matched or inferred case, adaptations are made to the case solution before it can be transferred for reuse in the new situation. Case revision is a form of validation that involves testing the solution by asking a subject matter expert or by applying the decision in real life or in a simulation. Decisions that resulted in failed solutions are repaired by creating explanations for the errors and modifying the cases to avoid making the same errors again. Case retention is how the CBR model learns by working through new cases. Useful aspects of the successful and repaired cases from the revision stage are indexed and integrated into the case base.
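The four stages can be sketched as one loop over a list of cases. This is a minimal illustration under stated assumptions, not the system developed in this study: cases are flat dictionaries of categorical features, similarity is the fraction of exactly matching features, and the `adapt` and `validate` callbacks are hypothetical stand-ins for expert adaptation and for revision by an expert or a simulator trial. The feature names are invented for the example; "leeway" and "pushing" are technique names used later in this paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Features = Dict[str, str]


@dataclass
class Case:
    problem: Features       # categorical features describing the situation
    solution: str           # e.g., the ice management technique that was applied
    succeeded: bool = True  # solved case (True) or failed case kept as a reminder (False)


def similarity(query: Features, stored: Features) -> float:
    """Fraction of the query's features that the stored case matches exactly."""
    if not query:
        return 0.0
    return sum(1 for k, v in query.items() if stored.get(k) == v) / len(query)


def retrieve(case_base: List[Case], query: Features) -> Tuple[Case, float]:
    """Retrieve: best-matching successful case (raises ValueError if there is none)."""
    candidates = [c for c in case_base if c.succeeded]
    best = max(candidates, key=lambda c: similarity(query, c.problem))
    return best, similarity(query, best.problem)


def reuse(case: Case, score: float, adapt: Callable[[str], str]) -> str:
    """Reuse: copy the solution on an exact match, otherwise adapt it."""
    return case.solution if score == 1.0 else adapt(case.solution)


def revise(solution: str, validate: Callable[[str], bool]) -> bool:
    """Revise: stand-in for review by an expert or a trial in a simulator."""
    return validate(solution)


def retain(case_base: List[Case], query: Features, solution: str, ok: bool) -> None:
    """Retain: store the new experience, whether it succeeded or failed."""
    case_base.append(Case(dict(query), solution, ok))


def solve(case_base, query, adapt, validate) -> Tuple[str, bool]:
    case, score = retrieve(case_base, query)
    proposed = reuse(case, score, adapt)
    ok = revise(proposed, validate)
    retain(case_base, query, proposed, ok)
    return proposed, ok


base = [
    Case({"ice": "pack", "drift": "fast", "zone": "platform"}, "leeway"),
    Case({"ice": "pack", "drift": "slow", "zone": "platform"}, "pushing"),
]
print(solve(base, {"ice": "pack", "drift": "fast", "zone": "open"},
            adapt=lambda s: s + " (adapted)", validate=lambda s: True))
# ('leeway (adapted)', True)
```

Keeping failed cases in the case base but excluding them from retrieval is one simple way to preserve them as reminders. A fuller system would also attach the explanation of the failure during revision.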

For decision support applications, the CBR model provides an incremental learning method for modeling complicated decisions by representing and organizing expert knowledge in a way that enables decision support systems to quickly retrieve similar cases and adapt them to new situations [20,26]. Gathering subject matter expert knowledge is vital for the development of two aspects of the CBR model: (1) specific domain knowledge, needed to generate example solutions for the case base; and (2) general domain knowledge, needed to develop the reasoning structure of the CBR model. The general domain knowledge guides the case feature matching, retrieval, and indexing by explaining the semantic similarities and contextual links in the case-base network [24].

The CBR model requires an embedded case memory to support the case matching, retrieval, and retention functionality. Case memory indexing can be based on flat organizational models, or on more detailed hierarchical models (e.g., 3-layer, dynamic memory, decision tree induction based, and object based) and network-based models (e.g., concept retrieval nets, category exemplar, and ontology based) that are informed by domain knowledge [27]. A common network-based memory model for the reasoning structure of the CBR is the category-exemplar model [27,28]. The category-exemplar model creates contextual meaning in the CBR model by using three indices to link and store case data (i.e., case features, domain-informed categories, and index pointers that link the data by feature, case, and differences) [24]. The semantic indexing helps identify and compile case features that are similar to the conditions of a new situation and offers the nearest matching case to generate the new solution.
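A category-exemplar memory can be sketched with three dictionaries, one per kind of index pointer. In this sketch, feature links point to categories, case links point from a category to its exemplars, and difference links record which features separate two exemplars. It is a hypothetical, simplified structure loosely following the description above, not the published model. Retrieval here assumes a two-step strategy: matching features vote for a category, and then the exemplar with the greatest feature overlap is returned. The category and feature names are invented.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Optional, Set, Tuple

Features = Dict[str, str]


class CategoryExemplarMemory:
    """Hypothetical minimal category-exemplar case memory with three kinds of index."""

    def __init__(self) -> None:
        self.cases: Dict[str, Features] = {}
        # Feature index: (feature, value) -> categories the feature points to.
        self.feature_index: DefaultDict[Tuple[str, str], Set[str]] = defaultdict(set)
        # Case index: category -> ids of its exemplar cases.
        self.case_index: DefaultDict[str, Set[str]] = defaultdict(set)
        # Difference index: (new case, existing case) -> features whose values differ.
        self.difference_index: Dict[Tuple[str, str], Set[str]] = {}

    def add(self, case_id: str, category: str, features: Features) -> None:
        for other_id in self.case_index[category]:
            other = self.cases[other_id]
            keys = set(features) | set(other)
            self.difference_index[(case_id, other_id)] = {
                k for k in keys if features.get(k) != other.get(k)
            }
        self.cases[case_id] = dict(features)
        self.case_index[category].add(case_id)
        for item in features.items():
            self.feature_index[item].add(category)

    def retrieve(self, query: Features) -> Optional[Tuple[str, str]]:
        # Step 1: each matching feature votes for the categories it indexes.
        votes: DefaultDict[str, int] = defaultdict(int)
        for item in query.items():
            for category in self.feature_index.get(item, set()):
                votes[category] += 1
        if not votes:
            return None
        category = max(sorted(votes), key=lambda c: votes[c])

        # Step 2: within that category, return the exemplar sharing the most features.
        def overlap(cid: str) -> int:
            return sum(1 for k, v in query.items() if self.cases[cid].get(k) == v)

        best = max(sorted(self.case_index[category]), key=overlap)
        return category, best


mem = CategoryExemplarMemory()
mem.add("C1", "pushing", {"ice": "pack", "target": "FPSO", "drift": "slow"})
mem.add("C2", "pushing", {"ice": "pack", "target": "FPSO", "drift": "fast"})
mem.add("C3", "leeway", {"ice": "pack", "target": "platform", "wind": "strong"})
print(mem.retrieve({"ice": "pack", "target": "FPSO", "drift": "fast"}))  # ('pushing', 'C2')
print(mem.difference_index[("C2", "C1")])  # {'drift'}
```

The difference links are what let the memory explain *why* two similar exemplars differ. In this toy example, drift speed is the only feature separating the two pushing cases.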

2.3. Case-Based Reasoning for Ice Management Decision Support

CBR is appropriate for first-degree automation, in which seafarers are provided with onboard decision support. CBR is a good fit for providing decision support in the maritime domain because it offers advice from an embedded expert in a way that resembles how seafarers currently provide training on the job. Seafarers offer words of wisdom (or 'rules of thumb') by sharing their experiences on the job, often through analogies and storytelling. The CBR model replicates this information exchange. To illustrate the similarities in problem solving, the following is a simple example (modelled after examples from [24]):

A captain is actively clearing pack ice in close proximity to an offshore oil platform when they are reminded of a relevant past experience. Some weeks earlier, this same captain experienced a situation where encroaching pack ice quickly enveloped an offshore platform. Due to the unpredictable environmental conditions, the drift speed of the ice floe abruptly changed, complicating their ice management operations. In particular, they remember their mistake of not orienting their vessel away from the platform, which left them without a bailout plan. The current ice management task is very similar to the situation from their memory (i.e., within the operational zone of the platform and with a sudden change in weather conditions). They use this memory to avoid repeating the mistake in the current situation.

Applying the CBR model to an onboard decision support system could assist a cadet in a similar way to how a captain would use their experience to solve a problem. Collecting a group of expert seafarers' experiences in ice management can inform the reasoning structure of the CBR decision support system and populate an initial case base of solved problems. The CBR model allows the decision support system to learn incrementally (i.e., to build its case base) and to increase its accuracy (i.e., through improved indexing) as it assists cadets in problem solving.

3. Methods

To gather seafarers' experiences in managing pack ice offshore, similar to the example above, we conducted a pilot study that used a combination of semi-structured interviews and bridge simulator exercises. The pilot study employed a three-phased knowledge elicitation plan: (i) interview seafarers on their approach to ice management scenarios and the factors they consider during ice management, (ii) ask the seafarers to evaluate the performance of cadets in the same ice management scenarios, and (iii) have the seafarers complete the same ice management scenarios in a bridge simulator. This section describes the participants and the ice management scenarios used in this study, and explains the methods used to collect information on the participants' approaches to, critiques of, and performance in each of the scenarios. An explanation of the data analysis and the integration of the data into the CBR case base is also provided.

3.1. Participants

Experienced seafarers were recruited to participate in this study. Participants were given the option of attending one or both sessions (i.e., the interview session, the simulator session, or both). Information was collected from four participants in the interview session and from four participants in the simulator session. Three participants completed both sessions, while two participants attended only one session. In total, five participants completed this study.

All participants were experienced seafarers. Participants ranged in experience operating at sea from 10 to 30 years (M = 17.6; SD = ±7.9) and in experience operating in the presence of ice from 2 to 7 years (M = 4.8; SD = ±1.9). Participants also had a range of experience operating in different regions and with different types of vessels. The regions the seafarers operated in included coastal Newfoundland and Labrador, the Arctic (North of 60), the Gulf of Saint Lawrence, and the Great Lakes. The vessels included ice breakers, offshore supply vessels (OSVs) and anchor handling tug supply (AHTS) vessels, tanker, bulk, and cargo vessels, and coastal ferries. In relation to the types of ice operations, two participants had only performed watch keeping during transit in the presence of ice. Three participants had experience watch keeping in the presence of ice as well as performing the following operations in ice: ice management in open water and confined water, maneuvering a ship to escort another vessel, towing or emergency response, and maneuvering a ship while being escorted.

In terms of the training the participants had in ice operations, two seafarers had no formal training in ice operations, and the other three seafarers had completed advanced training in ice operations.

3.2. Offshore Ice Management Scenarios

An anchor handling tug supply (AHTS) vessel was selected for this pilot study, as this vessel type is commonly used for offshore support and pack ice management operations offshore Newfoundland. The AHTS was modeled in the bridge simulator and described to the participants during the interviews and simulator exercises. The following vessel parameters were explained to the participants. The virtual ship was modeled after a 75 m twin-screw AHTS vessel with an ice class of ICE-C [29]. The ship had two 5369 kW engines and was equipped with 896 kW tunnel thrusters in the bow and stern.

Three scenarios that required ice management support from the AHTS vessel were used to gather information from the seafarers. Table 1 provides a brief description of the tabletop scenarios. For comparison purposes, the scenarios were based on two previous simulator experiments [18,29].

Table 1.

Pack ice management tabletop exercises and simulation scenario.

3.3. Seafarers’ Approach to Ice Management

The interview phase used a semi-structured approach to enquire about two aspects: (i) identifying what factors the participants prioritized in different ice management situations, and (ii) capturing the experienced participants’ approach to the different ice management tabletop scenarios. Participants completed the interview individually and their responses were audio recorded.

To assist the researchers in conducting the interview, participants first completed an experience questionnaire designed to gather background information about the participant and their experience at sea. Participants were then asked to list all the factors they consider during ice management, to understand what information seafarers prioritize in their decision making. For each factor listed, the researchers asked follow-up questions about how that factor was considered during ice management. If a participant had difficulty generating a list, the researchers provided a list of factors for the participant to comment on. Once the list was generated, the participant ranked the factors by overall importance and again for specific ice management situations. To capture the participants' approaches, the participants were presented with the three ice management tabletop scenarios (shown in Table 1), one at a time, and asked to describe how they would approach each situation. They were asked to draw their approach on a schematic and to denote their decision points. The researchers then asked the participants to elaborate on the reasoning behind their choices at each decision point. Each ice management scenario was discussed individually.

3.4. Critiquing Cadet Training Examples

Once the participants explained their strategy, they were asked to review pre-recorded examples of cadets performing in the same scenarios in a bridge simulator. The objective of this phase was to gather the experienced participants’ advice, recommendations, and feedback on cadets’ performance in the same ice management situations.

The cadet examples used in this phase came from a previous study [29] that trained cadets in different pack ice management techniques using simulation exercises. Six cadet examples were used for the participants’ evaluation (two cadet examples for each of the three ice management scenarios).

The participants were shown anonymized replay files of the cadets' performance in the ice management scenarios, in the form of top-down replay videos of an offshore supply vessel managing sea ice. The participants were asked to review the cadets' performance and comment on the efficacy of each cadet example. They provided observations about the cadets' performance as well as advice at critical stages of the ice management operations (e.g., how to guide the cadet to maintain course or how to provide intervening instructions). The participants were then asked to comment on the cadets' compliance with or violation of rules (both regulatory and common-practice procedures). Participants completed this phase of the study individually, and their observations and interventions for the cadets were audio recorded.

3.5. Ice Management Bridge Simulator and Exercises

The bridge simulator was used to gather more detailed information about the pack ice management techniques and actions taken by experienced seafarers. Prior to the simulation exercises, participants completed a series of short habituation scenarios that were designed to familiarize the seafarers with the simulator and the control configuration (to reduce any influence the simulator would have on their performance). Then the participants were tasked with completing the three ice management scenarios in the bridge simulator (described in Table 1). After completing each scenario, they were asked a series of debriefing questions related to their approach to the exercise and the overall efficacy of their performance. Participants completed the simulation exercises individually and their responses to the debriefing questions were audio recorded.

3.6. Data Analysis and Integrating Information into the CBR Case Base

The participants’ ice management approaches, reviews of the cadet examples, and debriefs from the simulation exercises were transcribed from the audio recordings. The transcriptions were used to develop the initial reasoning structure of the CBR and to populate cases in the CBR case base.

The general domain knowledge gathered from the participants (e.g., the participants’ factor rankings and comments on ice management strategies) was used to inform the reasoning structure of the CBR (e.g., similarity matching, retrieval, and indexing). The specific domain knowledge gathered from the participants (e.g., the participants’ verbal explanations of their ice management strategies and their observations from the cadets’ ice management performance) was parsed into example cases for the CBR case base.

4. Results

The transcribed data from the three-phased knowledge elicitation study was used to develop cases to populate the CBR case base and to develop the reasoning structure of the CBR. This section describes the knowledge capture process and explains the CBR knowledge representation.

4.1. Knowledge Capture—Gathering Cases to Populate the CBR Case Base

Domain knowledge gathered from the participants was parsed into cases for the CBR case base. The specific domain knowledge included (i) the participants’ verbal explanations of their approaches, decision points and instructions to the ice management scenarios; (ii) the participants’ observations, criticisms, and interventions for the cadets’ performances; and (iii) the participants’ actions in the simulator scenarios and their reflections on their own performance. From the three sources, a list of techniques (and corresponding features) employed by participants for offshore ice management operations was recorded.

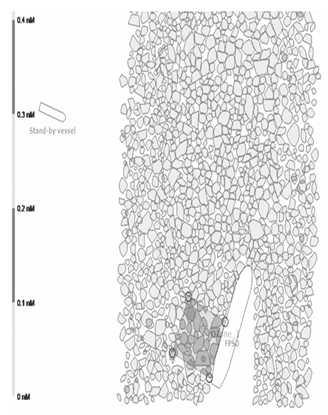

4.1.1. Ice Management Techniques Described by Participants

Table 2 outlines the main ice management techniques that were described in the pilot study. For each scenario, participants suggested, and/or performed in the simulator, a distinct technique or a combination of techniques. For example, a common approach to scenario Sc.3 was to use the leeway and prop-wash techniques to manage the buildup of ice on the floating production, storage and offloading (FPSO) vessel.

Table 2.

Ice management techniques as described by participants.

4.1.2. Translating Participant Data into Cases

The knowledge captured from the pilot study resulted in 34 cases. Table 3 shows a summary of the cases collected and highlights the techniques suggested or employed for each scenario. Specifically, we extracted 18 cases from the interviews, 6 cases from the participants' reviews of and advice on the cadet examples, and 10 cases from the simulation exercises. Each case linked the pre-programmed attributes of the scenario (e.g., the scenario objective, the weather and ice conditions, and the properties of the target vessel) to the participants' data (e.g., attributes of their described approaches to the scenario). As such, each case contained details about the ice management technique and the series of decision points (from the approaches interview), interventions (from the cadet examples), or actions (from the simulation exercises) described or performed by the participants. The Navicat Data Modeler software was used as a tool to design the cases and the CBR case base.
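The case structure described above can be sketched as a simple record type. This is a hypothetical Python rendering for illustration only; the actual schema was designed in Navicat Data Modeler and is shown in Figure 2. The class and field names are assumptions. The generated cases are empty placeholders that reproduce only the reported counts per source, not participant data.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Source(Enum):
    INTERVIEW = "interview"        # steps hold decision points
    CADET_REVIEW = "cadet_review"  # steps hold interventions
    SIMULATOR = "simulator"        # steps hold actions


@dataclass
class IceManagementCase:
    case_id: str
    source: Source
    scenario_id: str                     # e.g., "Sc.3"
    scenario_attributes: Dict[str, str]  # pre-programmed: objective, weather, ice, target vessel
    techniques: List[str]                # a single technique or a combination
    steps: List[str] = field(default_factory=list)


def tally_by_source(cases: List[IceManagementCase]) -> Counter:
    """Count cases per elicitation phase."""
    return Counter(c.source for c in cases)


# Placeholder cases reproducing only the reported counts (18 + 6 + 10 = 34).
counts = {Source.INTERVIEW: 18, Source.CADET_REVIEW: 6, Source.SIMULATOR: 10}
cases = [
    IceManagementCase(f"{src.value}-{i}", src, "unspecified", {}, [])
    for src, n in counts.items()
    for i in range(n)
]
summary = tally_by_source(cases)
print(len(cases), summary[Source.INTERVIEW], summary[Source.SIMULATOR])  # 34 18 10
```

A single `steps` list whose meaning depends on `source` keeps all three elicitation phases in one case type, which matches the text's description of decision points, interventions, and actions as parallel kinds of case content.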

Table 3.

Summary of ice management cases collected from each phase of this study.

4.2. Knowledge Representation—Developing the CBR Reasoning Structure

The knowledge representation involved using general domain knowledge gathered from the participants in the three-phased pilot study to establish the reasoning structure of the CBR (e.g., how the case-base data was indexed for similarity matching and case retrieval). The general domain knowledge included (i) the participants' generated list of important ice management factors and subsequent rankings, (ii) their comments about general ice management strategies, and (iii) their comments on the cadets' rule adherence or violations.

4.2.1. Key Ice Management Factors and Rankings

Participants were asked to generate a list of factors that influence their decision making during ice management operations. They were also asked to rank the importance of each factor and how they relate to each of the tabletop scenarios. Table 4 lists the factors the participants discussed in the pilot study.

Table 4.

Important ice management factors generated and ranked by participants.
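One way to turn factor rankings into similarity weights is rank-sum weighting, sketched below. This is an assumption for illustration; the study does not state how its rankings were converted into weights. Each factor of rank r (1 = most important) among n factors receives the weight (n − r + 1) divided by the sum of these values over all factors. The similarity function skips features that either case leaves unspecified and renormalizes over the remaining ones, because interview-derived cases often lack the granular parameters that simulator cases contain. The factor names, ranks, and values are hypothetical, not the Table 4 results.

```python
from typing import Dict, Optional, Union

Value = Union[float, str]


def rank_sum_weights(ranks: Dict[str, int]) -> Dict[str, float]:
    """Rank 1 = most important; w_i = (n - r_i + 1) / sum_j (n - r_j + 1)."""
    n = len(ranks)
    raw = {f: n - r + 1 for f, r in ranks.items()}
    total = sum(raw.values())
    return {f: v / total for f, v in raw.items()}


def local_sim(a: Value, b: Value, value_range: Optional[float]) -> float:
    """Numeric features: 1 - |a - b| / range; categorical features: exact match."""
    if value_range:
        return max(0.0, 1.0 - abs(float(a) - float(b)) / value_range)
    return 1.0 if a == b else 0.0


def weighted_similarity(query: Dict[str, Optional[Value]],
                        case: Dict[str, Optional[Value]],
                        weights: Dict[str, float],
                        ranges: Dict[str, float]) -> float:
    """Weighted mean of local similarities over features present in both cases."""
    shared = [f for f in weights if query.get(f) is not None and case.get(f) is not None]
    if not shared:
        return 0.0
    total_w = sum(weights[f] for f in shared)
    return sum(weights[f] * local_sim(query[f], case[f], ranges.get(f))
               for f in shared) / total_w


# Hypothetical ranking of three factors; ice concentration in tenths (0-10).
w = rank_sum_weights({"ice_concentration": 1, "wind_direction": 2, "strategy": 3})
s = weighted_similarity(
    {"ice_concentration": 7.0, "wind_direction": "NE", "strategy": "leeway"},
    {"ice_concentration": 5.0, "wind_direction": "NE", "strategy": None},
    w,
    {"ice_concentration": 10.0},
)
print(round(s, 2))  # 0.88
```

Renormalizing over shared features keeps sparse interview cases comparable with detailed simulator cases. The trade-off is that a case with very few specified features can still score highly.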

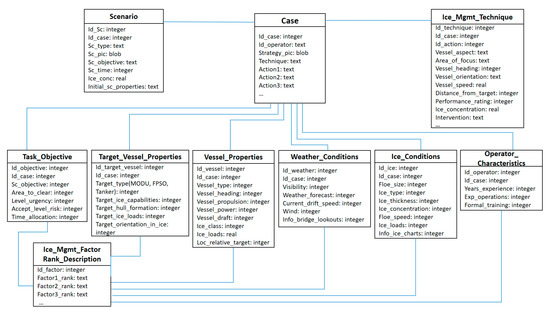

The list of factors was used to develop the CBR knowledge representation. Figure 2 depicts the resulting CBR knowledge representation modeled using Navicat Data Modeler software. Additional factors were added based on the participants’ comments throughout the interviews and simulation exercises (e.g., separate categories for ‘scenario attributes’, ‘target vessel properties’ and splitting the ‘operator characteristics and actions’ category into two: operator characteristics and the operator’s actions represented by the label ‘ice management technique’).

Figure 2.

Class diagram of the CBR for ice management.

4.2.2. Indexing Cases for Matching and Retrieval Using the Category-Exemplar Model

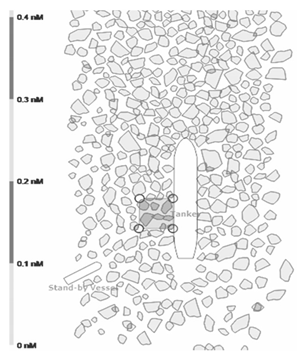

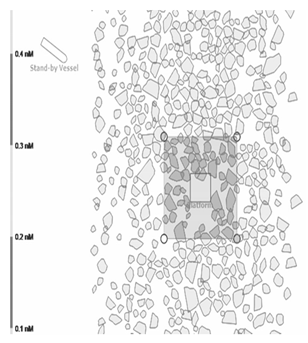

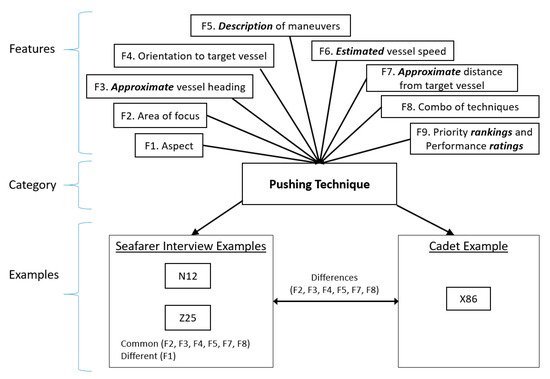

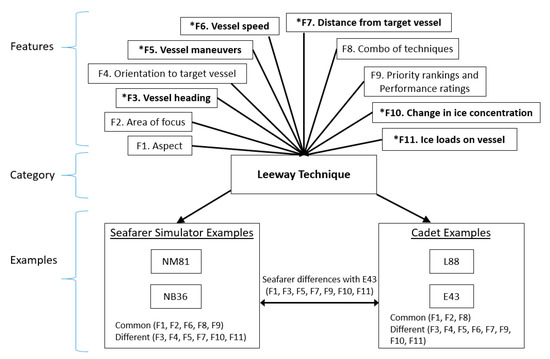

The category-exemplar model [28] was used for the case memory knowledge representation. Indexing features and categorizing common themes that occurred in the case base help to match similar cases with the conditions of future scenarios. To illustrate the indexing, two different ice management techniques described by participants were used. Figure 3 shows the case links for the pushing technique using the interview and cadet example data. Similarly, Figure 4 shows an example of case indexing for the leeway technique using the interview, cadet examples and simulation data. These examples show the relationships between features of the ice management techniques for the seafarer and cadet example cases. The participant or cadet informed cases that used the given technique were compared to identify their commonalities and differences.

Figure 3.

Case indexing for the pushing technique using the interview and cadet data.

Figure 4.

Case indexing for the leeway technique using the interview, cadet examples, and simulator data.

In Figure 3, the participants' verbal explanations from the interviews and their reviews of the cadet examples provided details on the following features: (F1) setting the aspect, (F2) area of focus for the ice clearing, (F3) approximate vessel heading, (F4) orientation of the vessel to the target vessel, (F5) the specific vessel maneuvers for the technique, (F6) an estimate of the vessel speed, (F7) an approximate distance from the platform, and (F8) whether a combination of techniques was required. A proxy measure of the technique's efficacy was also added as a feature (F9): the participant's priority ranking of the technique for the situation and their rating of the cadets' performance in the examples.
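The comparison of cases that use the same technique can be sketched as follows. The function sorts features F1 to F9 into those every case shares and those that differ or are missing. This is a hypothetical illustration of the comparison step, not the procedure used to build Figures 3 and 4. The feature labels paraphrase the list above, and the example feature values are invented.

```python
from typing import Dict, List, Optional, Tuple

# Feature labels paraphrased from the text; the case values below are hypothetical.
FEATURES = {
    "F1": "setting the aspect",
    "F2": "area of focus for ice clearing",
    "F3": "approximate vessel heading",
    "F4": "orientation to the target vessel",
    "F5": "vessel maneuvers",
    "F6": "vessel speed",
    "F7": "distance from the platform",
    "F8": "combination of techniques required",
    "F9": "efficacy proxy",
}

CaseFeatures = Dict[str, object]


def compare_cases(cases: List[CaseFeatures]) -> Tuple[Dict[str, object], Dict[str, List[Optional[object]]]]:
    """Split features into commonalities (same value in every case) and differences."""
    common: Dict[str, object] = {}
    differing: Dict[str, List[Optional[object]]] = {}
    for f in FEATURES:
        values = [c.get(f) for c in cases]
        if all(v is None for v in values):
            continue  # no case reports this feature
        if all(v is not None and v == values[0] for v in values):
            common[f] = values[0]
        else:
            differing[f] = values  # includes None where a case is silent
    return common, differing


interview_case = {"F4": "bow toward floe", "F6": "slow", "F8": False}
cadet_case = {"F4": "bow toward floe", "F6": "fast", "F8": False}
common, differing = compare_cases([interview_case, cadet_case])
print(common)     # {'F4': 'bow toward floe', 'F8': False}
print(differing)  # {'F6': ['slow', 'fast']}
```

The sketch leaves F9 out of the example values because, as the text notes, the efficacy proxy means different things for seafarer cases (a priority ranking) and cadet cases (a performance rating), so comparing those values directly would be misleading.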

Depending on the source of the data (i.e., interviews or simulation exercises), some cases contained more detailed information on the case features than others. For example, the approaches the participants described in the interviews (Figure 3) represent the techniques under static and ideal circumstances. However, as shown in Figure 4, the simulator data provide more granularity on parameters that cannot be described in detail during the interviews. Such features include (*F3) vessel heading, (*F5) vessel maneuvers, (*F6) vessel speed, and (*F7) distance from the target during clearing and the size of the clearing zone. Objective measures of the scenario outcomes were also added as features for the simulator exercises: (*F10) the change in ice concentration in the target zone and (*F11) an estimate of the ice loads endured by the vessel.

The indexing process is repeated for all other themes that the seafarers identified as important factors. These themes include the ice management task itself, the weather and ice conditions, and other aspects of the ice management technique. The network of case indices forms the basis of the case memory for the CBR model.
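A network of indices across several themes can be approximated by an inverted index that maps each (theme, value) pair to the cases that carry it, so that a new situation is matched only against cases sharing its conditions. This is a minimal sketch under that assumption; the theme names and values are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def build_index(case_base: dict[str, dict[str, str]]) -> dict[tuple[str, str], set[str]]:
    """Map each (theme, value) pair to the ids of the cases that carry it."""
    index: dict[tuple[str, str], set[str]] = defaultdict(set)
    for case_id, themes in case_base.items():
        for theme, value in themes.items():
            index[(theme, value)].add(case_id)
    return index


def lookup(index: dict[tuple[str, str], set[str]], **query: str) -> set[str]:
    """Cases matching every theme/value pair in the query (empty set if none)."""
    hits = [index.get((theme, value), set()) for theme, value in query.items()]
    return set.intersection(*hits) if hits else set()


# Hypothetical themes and values, loosely following those named in the text.
case_base = {
    "int_01": {"task": "clear_platform_zone", "ice": "7/10 pack", "technique": "pushing"},
    "int_02": {"task": "clear_platform_zone", "ice": "9/10 pack", "technique": "leeway"},
    "sim_05": {"task": "clear_platform_zone", "ice": "7/10 pack", "technique": "leeway"},
}
index = build_index(case_base)
print(sorted(lookup(index, task="clear_platform_zone", ice="7/10 pack")))  # ['int_01', 'sim_05']
```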

5. Discussion

5.1. Ice Management Interviews to Establish the CBR Model and Populate Cases

Data captured from the participant interviews was used to develop an early-stage decision support system with a CBR structure and case base. The process of transcribing the audio recordings from the interviews (e.g., seafarer approaches and evaluations of the cadet examples) and converting the data into cases was tedious and time-consuming. However, the information captured using this method was useful for classifying ice management strategies and identifying important ice management factors and the relationships between these factors. This information provided the domain knowledge needed to establish the CBR structure and the case feature indices.

During the interviews, participants communicated their ice management strategies through a combination of anecdotal descriptions of their approaches and highlights of the aspects to consider while conducting ice management operations (e.g., common practices and rules of thumb). In particular, participants described the flexibility of their strategies. They explained that their plan was not fixed and that it was common to approach a situation with multiple strategies or techniques, often describing it as a trial-and-error process. This is reflected in the lower ranking of the strategy factor compared with other factors in Table 4. When describing the step-by-step decision points of their approach, participants tended to position their vessel so that they could safely abandon the current approach and attempt alternatives (e.g., if the current strategy was not working or the conditions changed, the participants would adjust their strategy and try something different).

It is important to note that the participants described their strategies under ideal circumstances, which resulted in somewhat static strategies. As a result, the interviews provided limited information on how to execute the techniques and on the outcome of the prescribed approach (e.g., Was the approach successful? How proficient in the technique does a captain need to be to reach the intended outcome?). These limitations were addressed with the data collected from the cadet examples and simulator exercises.

5.2. Evaluating Cadet Examples to Define the Scope of the Ice Management Operations

The CBR model requires a collection of successful and unsuccessful cases to capture the scope of the ice management problem and to offer solutions on how to correct or avoid mistakes. As such, the participants reviewed examples of successful and unsuccessful ice management operations performed by cadets and provided observations and interventions. The participants’ advice to correct or improve the cadet examples was added to the case base as cases. The cadet examples provided opportunities for the participants to comment on the ways in which ice management techniques can go wrong and to indicate which situations require higher proficiency to perform the technique effectively. This review process also prompted the participants to elaborate on why it was necessary to perform the techniques in a certain way or under different conditions. The information captured from evaluating the cadet examples formed the basis of comparison for the feature indexing (e.g., linking the commonalities and differences among the seafarer and cadet cases, as represented in Figure 3 and Figure 4).

5.3. Using Simulation Exercises to Refine the CBR Model and Add Details to Cases

For the CBR decision support system to be capable of recommending ice management techniques or providing adjustments during the execution of a technique, the CBR model must include a measure of ice management efficacy. The simulation exercises from the pilot helped improve the CBR model by adding feature details, such as the scenario outcome, to the case base. For example, cases that were developed from the participant interviews did not contain an objective measure of the technique’s success or failure due to the static circumstances of the tabletop scenarios (as shown in Figure 3). Instead, these cases used subjective measures of the scenario outcomes, such as the participants’ priority rankings of their approaches and the participants’ performance ratings of the cadet examples.

In contrast, the simulation data captured the dynamic aspects of ice management approaches. As a result, cases from the simulation exercises provided continuous information on parameters that could not be described in detail in the interviews and documented the consequences of the participants’ actions in the scenario (as shown in Figure 4). The objective performance metrics from the simulation exercises provided a measure of how well the ice management technique was executed. The performance metrics include (1) the change in ice concentration in the target zone (a measure of ice management effectiveness) and (2) an estimate of the ice loads endured by the vessel (an indication of whether the technique is feasible, safe, and within the operating capabilities of the vessel). The metrics recorded in the simulator exercises drew out the subtleties of the techniques that novice operators, such as cadets, need to learn to improve their proficiency in ice management tasks.
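The two objective metrics reduce to simple arithmetic once the simulator output is available. The sketch below assumes, for illustration only, that ice coverage is exported as a grid of cell fractions (0 to 1) before and after the run, that the target zone is a list of grid cells, and that ice loads are a series of values in whatever units the simulator records; the load limit is a hypothetical threshold, not a value from the study.

```python
from __future__ import annotations


def zone_concentration(grid: list[list[float]], zone: list[tuple[int, int]]) -> float:
    """Mean ice coverage (0 to 1) over the (row, col) cells that form the target zone."""
    return sum(grid[r][c] for r, c in zone) / len(zone)


def outcome_metrics(before, after, zone, ice_loads, load_limit) -> dict:
    """Objective outcome features for one simulator run (hypothetical inputs)."""
    reduction = zone_concentration(before, zone) - zone_concentration(after, zone)
    peak = max(ice_loads)
    return {
        "concentration_reduction": round(reduction, 3),  # *F10: positive means ice was cleared
        "peak_ice_load": peak,                           # *F11, in simulator units
        "within_load_limit": peak <= load_limit,         # crude feasibility/safety check
    }


before = [[0.8, 0.8], [0.8, 0.8]]
after = [[0.2, 0.4], [0.8, 0.8]]
target_zone = [(0, 0), (0, 1)]  # hypothetical cells in the zone being cleared
metrics = outcome_metrics(before, after, target_zone,
                          ice_loads=[120.0, 310.0, 95.0], load_limit=400.0)
print(metrics)
```

Reducing the load history to its peak is one simple choice; a real implementation might prefer exceedance counts or impulse, depending on what the vessel's operating limits are expressed in.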

6. Conclusions

A human-centered approach is necessary for a successful digital transformation of the offshore and maritime industries. We envision that experienced seafarers will play an important role in facilitating the transition from fully crewed vessels to remotely operated vessels and fully autonomous operations. Since one of the goals of first-degree automation is to address the deficiencies of conventional on-the-job training and foster better knowledge exchange between seafarers, it is essential that experienced seafarers be involved in the development of onboard decision support technology. As such, this paper presented a human-centered approach to designing an early-stage CBR decision support system for offshore ice management operations.

This pilot study employed three data collection methods (i.e., semi-structured interviews on ice management approaches, reviews of cadet examples, and simulation exercises) to gather information from experienced seafarers on offshore pack ice management. Overall, the methods used to capture expert knowledge were suitable for the CBR application. Each form of data collection was useful for different aspects of developing the CBR model and case base.

While the 34 cases documented in the pilot study were sufficient to populate the initial CBR case base, the CBR model (both the case memory and the corresponding case base) could be improved by adding more cases. Adding more expert seafarer cases would increase the accuracy of the model’s solution generation, which is important for providing decision support. Cases can be added by repurposing data collected from previous simulation studies [18,29] or by collecting new data using simulator exercises. However, simply adding more cases to the CBR model could compromise the decision support system’s efficiency, because the system takes longer to sort through the cases to find a match if the case indexing is deficient. To avoid this problem, it is important to have a well-established and tested case memory that effectively matches new situations with the case features. We recommend testing the initial CBR model’s similarity matching and solution generation before collecting more data to populate cases.

The next step of this research is to gather more cases, develop the retrieval algorithm for the CBR model, and test the CBR decision support system’s similarity matching and solution generation functionality in a simulated environment. To get the most value out of future simulation trials, we recommend aligning the data collection with the intended decision support functionality so that specific case metrics can be recorded automatically. The data collection methods used in this pilot study required substantial post-processing and interpretation before they were integrated into the CBR model as cases. While this was an adequate process to demonstrate the concept of the CBR decision support system, from a practical perspective, future work should focus on automating the data collection. This can be achieved by embedding the case feature recording, parsing, and indexing into the existing simulation technology. This integration would make the decision support system more informative and effective, which would improve its practicality.
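The retrieval algorithm is left to future work, so the following is only a sketch of one common CBR retrieval technique, weighted nearest-neighbour similarity, and not the method the authors will adopt. It assumes numeric features are compared by range-normalized distance and symbolic features by exact match, and it skips features missing from either side (as interview cases often lack simulator detail). The weights, ranges, feature names, and cases are all hypothetical.

```python
from __future__ import annotations


def similarity(query: dict, features: dict, weights: dict, ranges: dict) -> float:
    """Weighted global similarity in [0, 1] between a query and one case."""
    total = weight_sum = 0.0
    for name, weight in weights.items():
        if name not in query or features.get(name) is None:
            continue  # ignore features missing from either side
        q, v = query[name], features[name]
        if name in ranges:  # numeric feature: 1 - range-normalized distance
            local = 1.0 - min(abs(q - v) / ranges[name], 1.0)
        else:  # symbolic feature: exact match or nothing
            local = 1.0 if q == v else 0.0
        total += weight * local
        weight_sum += weight
    return total / weight_sum if weight_sum else 0.0


def retrieve(query: dict, case_base: dict, weights: dict, ranges: dict, k: int = 1) -> list:
    """Return the k most similar cases as (score, case_id, solution) tuples."""
    scored = [
        (similarity(query, case["features"], weights, ranges), case_id, case["solution"])
        for case_id, case in case_base.items()
    ]
    return sorted(scored, key=lambda item: item[0], reverse=True)[:k]


# Hypothetical weights, feature ranges, and cases.
weights = {"ice_concentration": 2.0, "wind_kn": 1.0, "task": 1.0}
ranges = {"ice_concentration": 1.0, "wind_kn": 40.0}
case_base = {
    "sim_05": {"features": {"ice_concentration": 0.7, "wind_kn": 25, "task": "clear_platform_zone"},
               "solution": "leeway"},
    "int_01": {"features": {"ice_concentration": 0.4, "wind_kn": 10, "task": "clear_platform_zone"},
               "solution": "pushing"},
}
query = {"ice_concentration": 0.8, "wind_kn": 20, "task": "clear_platform_zone"}
best = retrieve(query, case_base, weights, ranges)[0]
print(best)  # sim_05 scores highest (about 0.92), suggesting the leeway technique
```

Testing similarity matching in this form, before growing the case base, would show whether the chosen weights and indices separate cases sensibly.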

Future work will focus on the verification and validation of the CBR decision support system with cadets and seafarers in a simulator setting. Training simulators, such as the bridge simulator used in this pilot study, have been shown to be useful human behaviour laboratories for both knowledge capture and testing training interventions such as a decision support system. This pilot study demonstrated that the information gathered from the simulator exercises improved the design of the initial CBR decision support system and increased the level of detail of the case base. Once the development of the CBR decision support system has been completed, testing the CBR model in a simulator setting would verify the system’s capability to offer onboard guidance on pack ice management techniques.

Author Contributions

Conceptualization and methodology, J.S., F.Y., M.M., and B.V.; data collection and analysis, J.S., F.Y., and R.T.; writing—original draft preparation, J.S.; writing—review and editing, J.S., F.Y., and B.V.; supervision, M.M. and B.V.; project administration and funding acquisition, B.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC) and Husky Energy Industrial Research Chair in Safety at Sea, grant number IRCPJ500286-14.

Acknowledgments

The authors acknowledge with gratitude the support of the NSERC/Husky Energy Industrial Research Chair in Safety at Sea.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of this study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Bainbridge, L. Ironies of automation. Automatica 1983, 19, 775–779.

- Parasuraman, R.; Wickens, C. Humans: Still vital after all these years of automation. Hum. Factors 2008, 50, 511–520.

- Hollnagel, E.; Wears, R.; Braithwaite, J. From safety-I to safety-II: A white paper. In The Resilient Health Care Net; University of Southern Denmark: Odense, Denmark; University of Florida: Gainesville, FL, USA; Macquarie University: Sydney, Australia, 2015.

- IMO Takes First Steps to Address Autonomous Ships. Available online: http://www.imo.org/en/MediaCentre/PressBriefings/Pages/08-MSC-99-MASSscoping.aspx (accessed on 11 June 2020).

- Lloyd’s Register Cyber-Enabled Ships: ShipRight Procedure Assignment for Cyber Descriptive Notes for Autonomous & Remote Access Ships. Available online: http://info.lr.org/l/12702/2016-07-07/32rrbk (accessed on 11 June 2020).

- Mallam, S.; Nazir, S.; Sharma, A. The human Element in Future Maritime Operations—Perceived Impact of Autonomous Shipping. Ergonomics 2020, 63, 334–345.

- Aylward, K.; Johannesson, A.; Weber, R.; MacKinnon, S.; Lundh, M. An evaluation of low-level automation navigation functions upon vessel traffic services work practices. WMU J. Marit. Affairs 2020.

- Dunderdale, P.; Wright, B. Pack Ice Management on the Southern Grand Banks Offshore Newfoundland Canada; PERD/CHC Report 20-76; Noble Denton Canada Ltd.: St. John’s, NL, Canada, 2005; pp. 1–42.

- McClintock, J.; McKenna, R.; Woodworth-Lynas, C. Grand Banks Iceberg Management; PERD/CHC Report 20-84; Report prepared for PERD/CHC, National Research Council Canada, Ottawa, ON; Report prepared by AMEC Earth & Environmental, St. John’s, NL, R.F. McKenna & Associates: Wakefield, QC, Canada; PETRA International Ltd.: Cupids, NL, Canada, 2007; pp. 1–82.

- Canadian Coast Guard. Ice Navigation in Canadian Waters; Ice Breaking Program Maritime Services Canadian Coast Guard Fisheries and Oceans Canada: Ottawa, ON, Canada, 2012; pp. 1–158. Available online: https://www.ccg-gcc.gc.ca/publications/icebreaking-deglacage/ice-navigation-glaces/page01-eng.html (accessed on 19 June 2020).

- Keinonen, A. Ice management for ice offshore operations. In Proceedings of the Offshore Technology Conference, Houston, TX, USA, 5–8 May 2008. OTC 19275.

- Østreng, W.; Eger, K.M.; Fløistad, B.; Jørgensen-Dahl, A.; Lothe, L.; Mejlænder-Larsen, M.; Wergeland, T. Shipping and Arctic Infrastructure. In Shipping in Arctic Waters: A Comparison of the Northeast, Northwest and Trans Polar Passages; Springer Praxis Books: Berlin/Heidelberg, Germany, 2013; pp. 177–239.

- International Maritime Organization. Model Course 7.11: Basic Training for Ships Operating in Polar Waters, 2017th ed.; International Maritime Organization: London, UK, 2017; pp. 1–59.

- International Maritime Organization. Model Course 7.12: Advanced Training for Ships Operating in Polar Waters, 2017th ed.; International Maritime Organization: London, UK, 2017; pp. 1–37.

- International Maritime Organization. International Code for Ships Operating in Polar Waters (Polar Code); MEPC 68/21/Add.1; International Maritime Organization: London, UK, 2017; pp. 1–42.

- Arctic Shipping Safety and Pollution Prevention Regulations SOR/2017-286. Available online: https://laws-lois.justice.gc.ca/eng/regulations/SOR-2017-286/FullText.html (accessed on 6 July 2020).

- Transport Canada Joint Industry-Government Guidelines for the Control of Oil Tankers and Bulk Chemical Carriers in Ice Control Zones of Eastern Canada (JIGs) TP15163 (2015). Available online: https://tc.canada.ca/en/marine-transportation/marine-safety/joint-industry-government-guidelines-control-oil-tankers-and (accessed on 6 July 2020).

- Veitch, E.; Molyneux, D.; Smith, J.; Veitch, B. Investigating the influence of bridge officer experience on ice management effectiveness using a marine simulator experiment. J. Offshore Mech. Arct. Eng. 2019, 141, 1–12.

- Kolodner, J. An Introduction to Case-Based Reasoning. Artif. Intell. Rev. 1992, 6, 3–34.

- Kolodner, J.; Camp, P.J.; Crismond, D.; Fasse, B.; Gray, J.; Holbrook, J.; Puntambekar, S.; Ryan, M. Problem-based learning meets case-based reasoning in the middle-school science classroom: Putting learning by design (tm) into practice. J. Learn. Sci. 2003, 12, 495–547.

- Rossille, D.; Laurent, J.; Burgun, A. Modelling a decision-support system for oncology using rule-based and case-based reasoning methodologies. Int. J. Med. Inform. 2005, 74, 299–306.

- Kumar, K.; Singh, Y.; Sanyal, S. Hybrid approach using case-based reasoning and rule-based reasoning for domain independent clinical decision support in ICU. Expert Syst. Appl. 2009, 36, 65–71.

- Wang, D.; Wan, K.; Ma, W. Emergency decision-making model of environmental emergencies based on case-based reasoning method. J. Environ. Manag. 2020, 262, 110382.

- Aamodt, A.; Plaza, E. Case-based reasoning: Foundational issues, methodological variations, and system approaches. AI Commun. 1994, 7, 39–59.

- Homem, T.; Santos, P.; Costa, A.; da Costa Bianchi, R.; de Mantaras, R.L. Qualitative case-based reasoning and learning. Artif. Intell. 2020, 283, 103258.

- Han, J.; Kamber, M.; Pei, J. Classification: Advanced methods. In Data Mining: Concepts and Techniques, 3rd ed.; Elsevier: Waltham, MA, USA, 2011; pp. 425–426.

- Soltani, S.; Martin, P. Case-Based Reasoning for Diagnosis and Solution Planning; Technical Report No. 2013-611; Queen’s University: Kingston, ON, Canada, 2013; pp. 1–54.

- Porter, B.; Bareiss, R.; Holte, R. Concept learning and heuristic classification in weak theory domains. Artif. Intell. 1990, 45, 229–263.

- Thistle, R.; Veitch, B. An evidence-based method of training to targeted levels of performance. In Proceedings of the SNAME Maritime Convention, Tacoma, WA, USA, 30 October–1 November 2019; SNAME-SMC-2019-030. Available online: https://www.onepetro.org/conference-paper/SNAME-SMC-2019-030 (accessed on 2 June 2020).

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).