Towards Multi-Robot Visual Graph-SLAM for Autonomous Marine Vehicles

Abstract

1. Introduction and Related Work

2. Materials and Methods

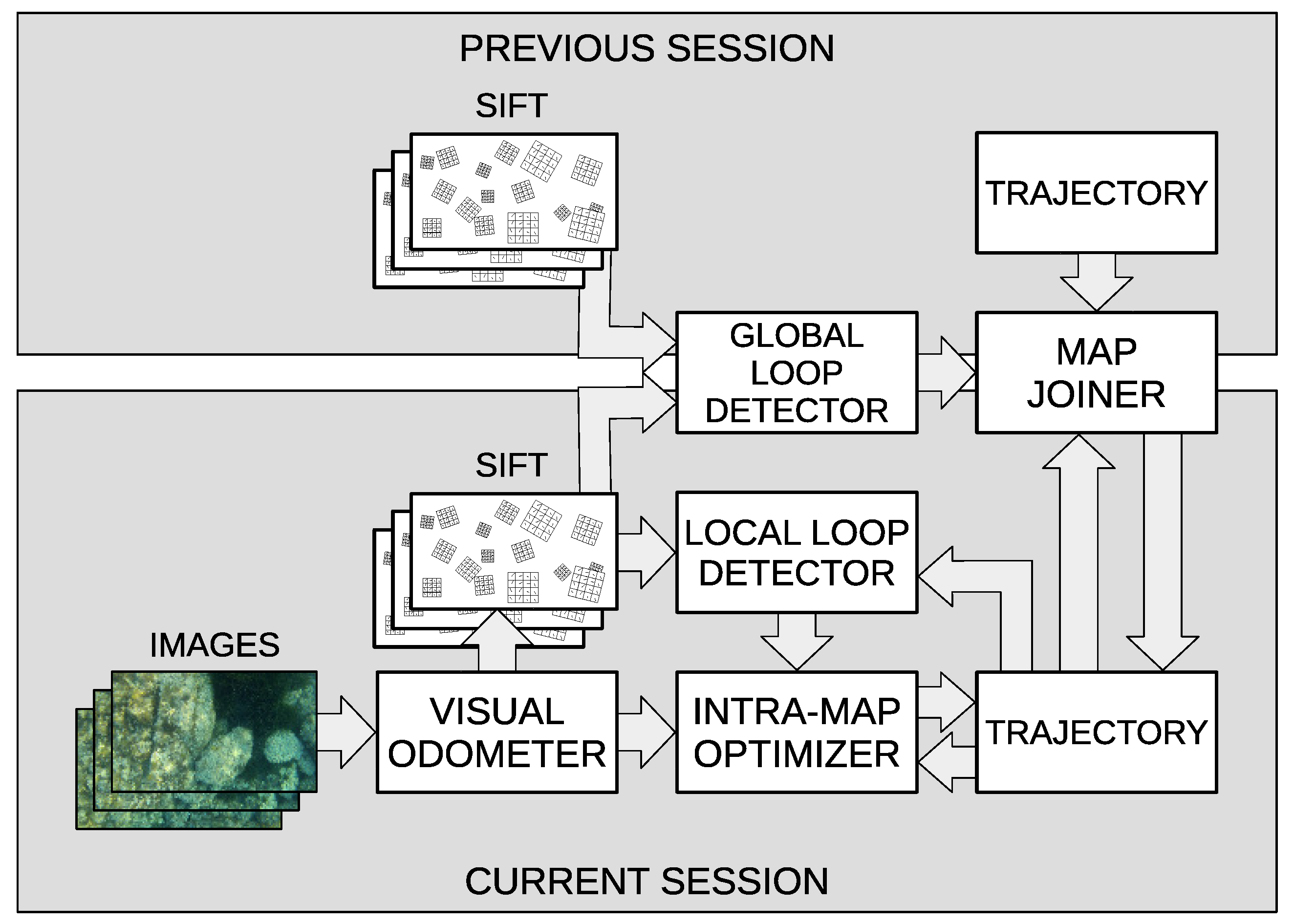

2.1. Overview

2.2. Intra-Session SLAM and Map Joining

2.2.1. Visual Odometry

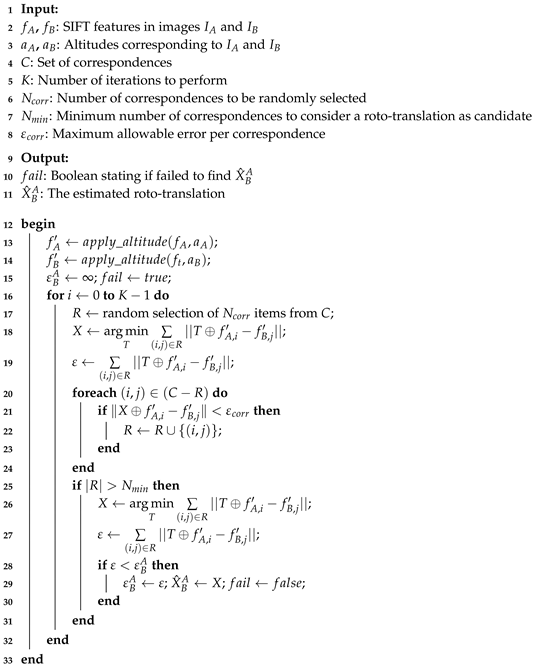

Algorithm 1: RANSAC approach to estimate the motion from image to image.
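
The idea behind Algorithm 1 can be illustrated with a minimal sketch. It assumes that feature matching has already produced two lists of corresponding 2D points and that the motion is a planar rigid transform (x, y, yaw), as suggested by the three-component transforms reported in the results tables. The function names, the tolerance `tol`, the iteration count and the `min_inliers` threshold are illustrative assumptions, not values taken from the original implementation.

```python
import math
import random

def fit_rigid_2d(src, dst):
    """Least-squares planar rigid transform (tx, ty, yaw) mapping src onto dst."""
    n = len(src)
    cxs, cys = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cxd, cyd = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        xs, ys, xd, yd = xs - cxs, ys - cys, xd - cxd, yd - cyd
        s_cos += xs * xd + ys * yd   # proportional to cos(yaw)
        s_sin += xs * yd - ys * xd   # proportional to sin(yaw)
    yaw = math.atan2(s_sin, s_cos)
    c, s = math.cos(yaw), math.sin(yaw)
    return (cxd - (c * cxs - s * cys), cyd - (s * cxs + c * cys), yaw)

def apply_motion(motion, p):
    """Apply (tx, ty, yaw) to the 2D point p."""
    tx, ty, yaw = motion
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def ransac_motion(src, dst, iters=200, tol=0.05, min_inliers=10, seed=0):
    """Return (motion, inlier_indices); motion is None if too few inliers."""
    if len(src) < 2:
        return None, []
    rng = random.Random(seed)
    idx = range(len(src))
    best = []
    for _ in range(iters):
        i, j = rng.sample(idx, 2)  # minimal sample: two correspondences
        model = fit_rigid_2d([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in idx
                   if math.dist(apply_motion(model, src[k]), dst[k]) < tol]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < min_inliers:
        return None, best  # rejected: treated as a false positive
    # Refit on the whole consensus set for the final estimate.
    refined = fit_rigid_2d([src[k] for k in best], [dst[k] for k in best])
    return refined, best
```

Returning the inlier set together with the motion lets the caller apply the inlier-count filter that the later sections use to separate true loop closings from false positives.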

2.2.2. Local Loop Detection and Trajectory Optimization

2.2.3. Inter-Session Loop Closings

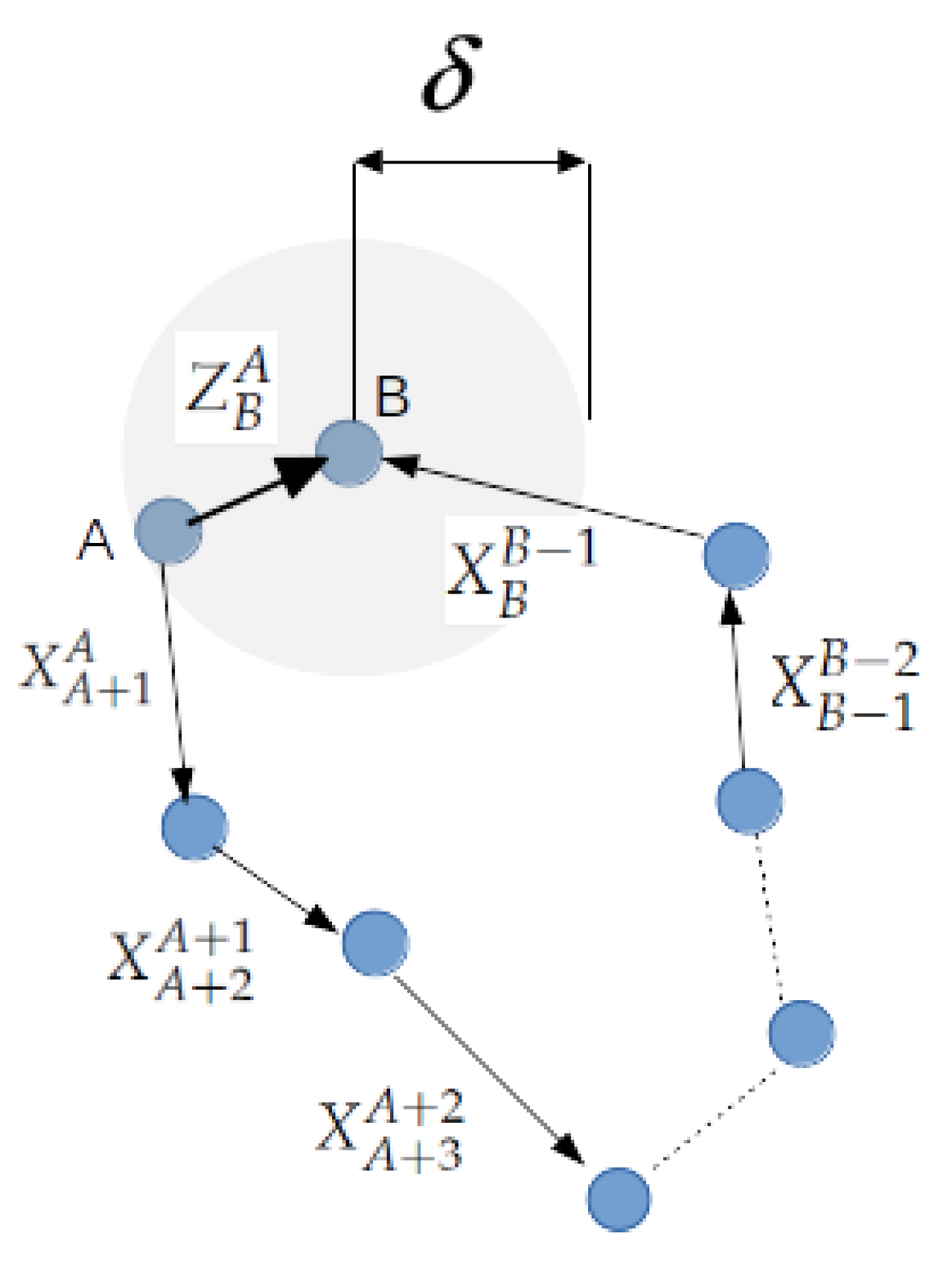

2.2.4. Map Joining

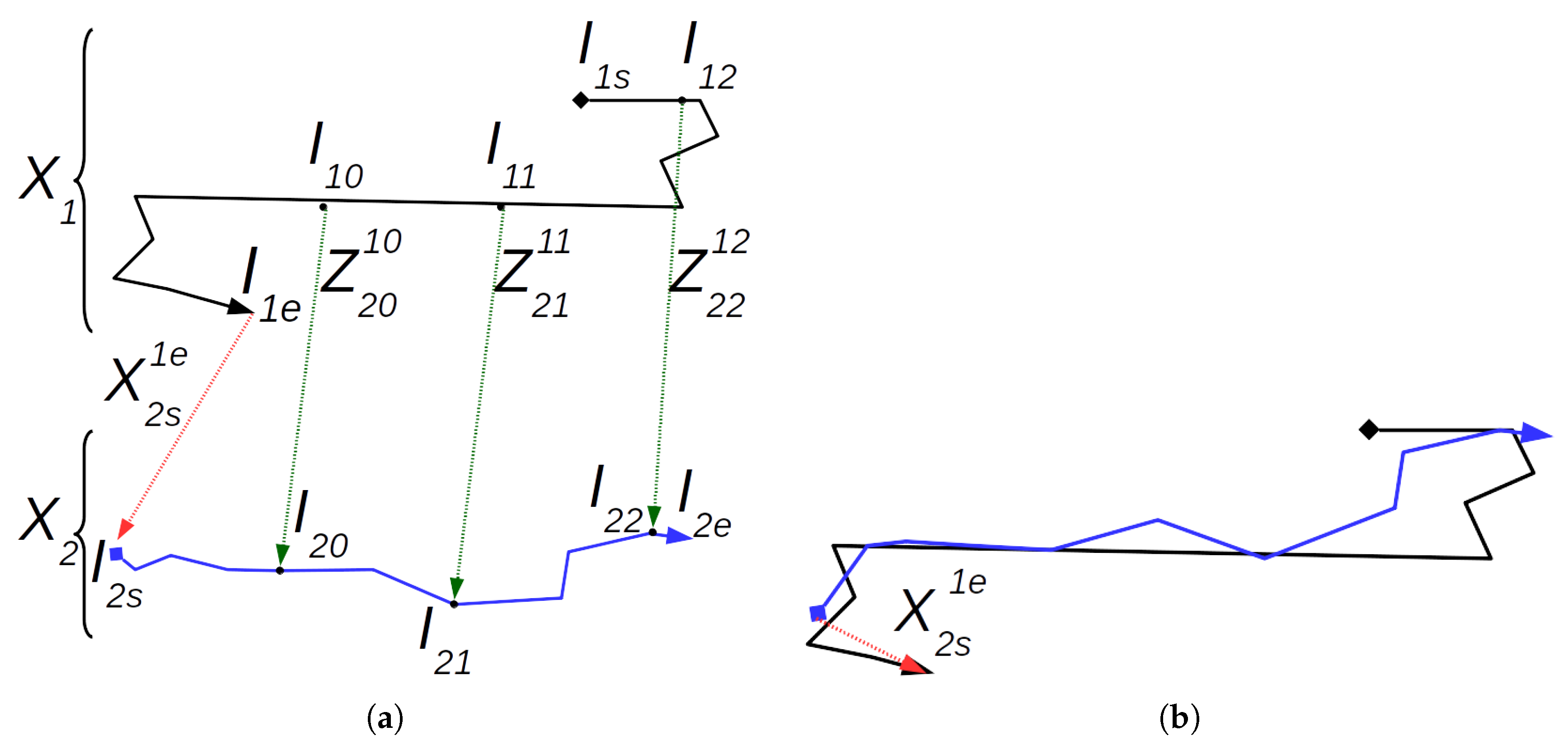

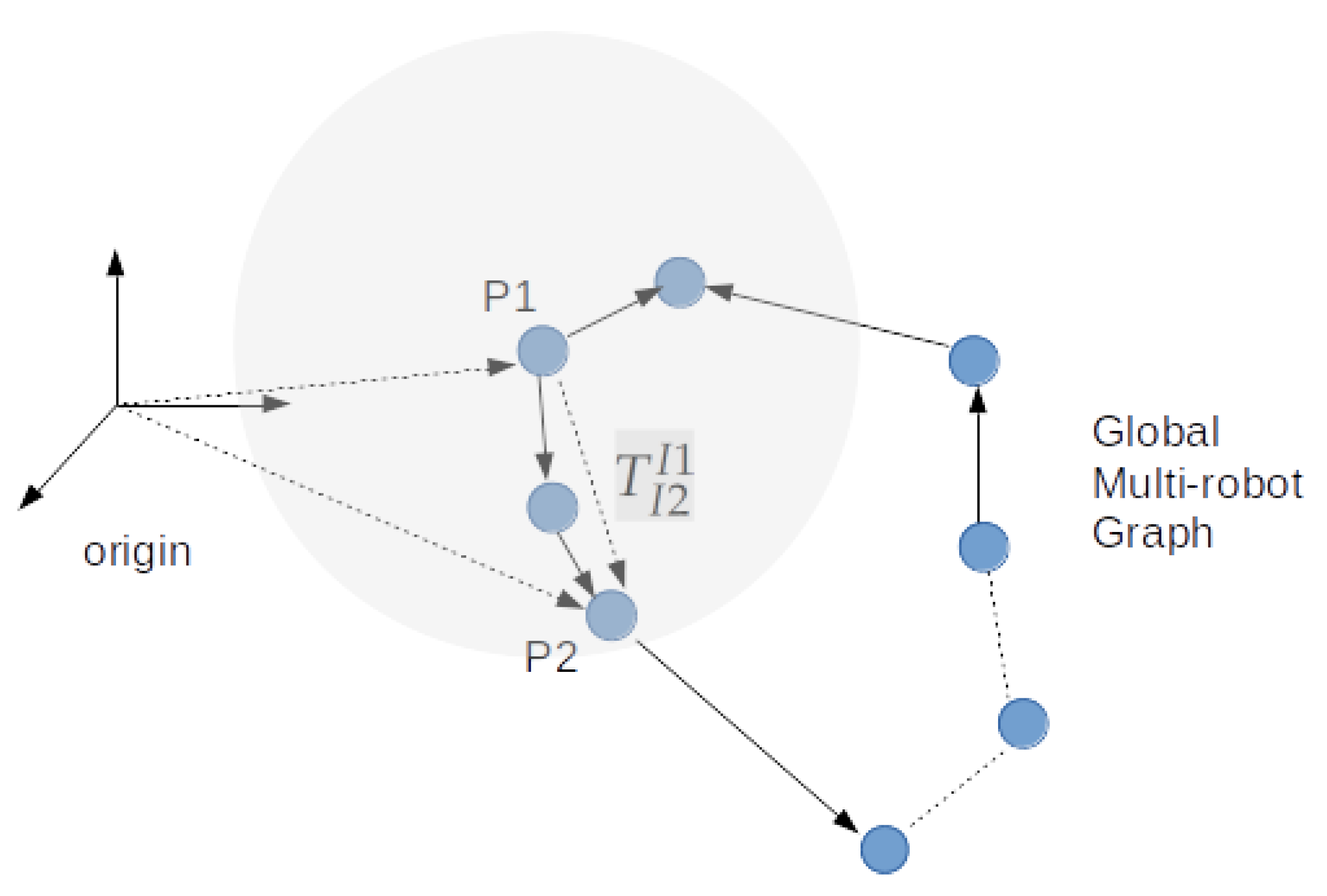

2.3. Multi-Robot Graph SLAM

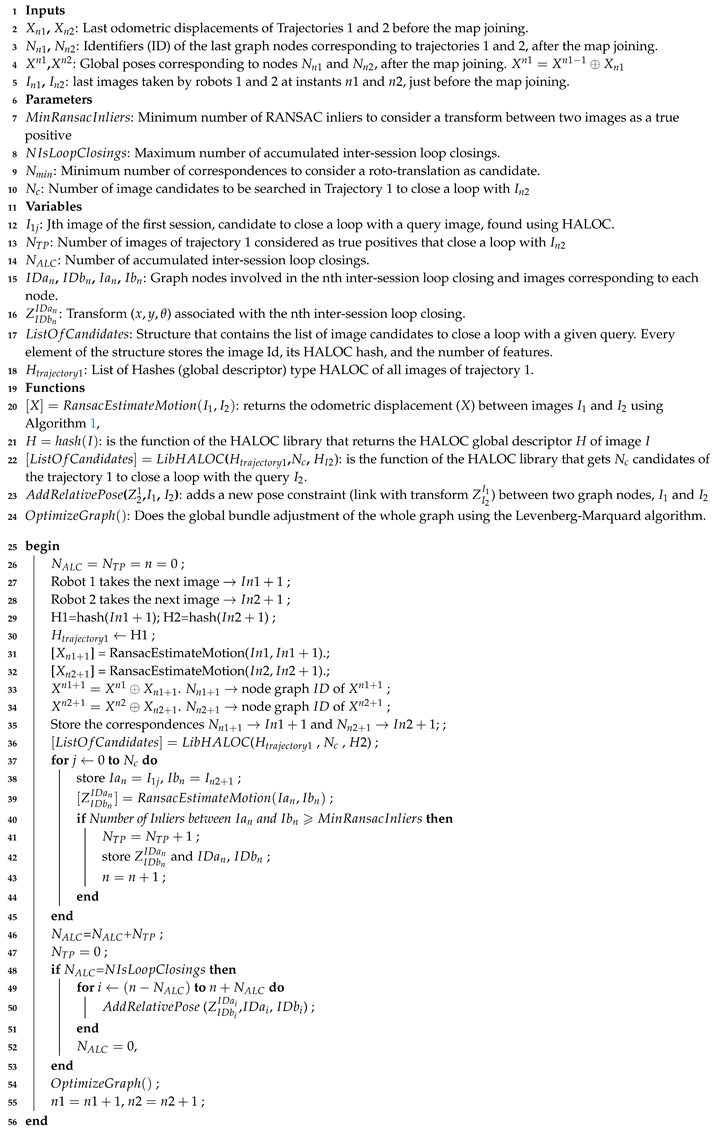

- Take the last (or next) computed odometric displacement of trajectory 1 and of trajectory 2. These displacements, together with the last images of both sessions, are stored in the system.

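- If trajectory 1 has not finished, add a new node to the graph, linked to the previous last node of trajectory 1 by the odometric displacement of trajectory 1. The global pose contained in this node is the composition of the previous node's global pose with that displacement. The new node becomes the last node of trajectory 1.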

- If trajectory 2 has not finished, add a new node to the graph in the same way, linked to the previous last node of trajectory 2 by the odometric displacement of trajectory 2. Its global pose is the composition of that node's global pose with the displacement, and it becomes the last node of trajectory 2. The two new links contain the values of the odometric displacements of trajectories 1 and 2, respectively. Each new node added to the graph is associated in the code with its corresponding image, regardless of the trajectory it belongs to. In this way, a node ID gives access to its associated image, and an image identifier gives access to its associated node ID.

- Search for inter-session loop closings between the last image of session 2 and all images of session 1 using the algorithm explained in Section 2.2.3. Candidates of session 1 retrieved by HALOC whose transform, after the RANSAC discrimination process, is supported by a number of inliers lower than a preset threshold are discarded as false positives that could harm the result of the graph optimization. The rest are accumulated and considered true positives. For each true positive that closes a loop with the last image of trajectory 2, the system stores the following data: (a) the names of both images that close the loop, (b) the identifiers of both nodes involved in the loop closing, and (c) the transform between both images.

- The system keeps a count of accumulated inter-session loop closings, initialized to 0 when both sessions are joined and increased by the number of true positives found in the previous step. When this count exceeds a threshold preset at the beginning of the process, the graph is optimized with all the new pose constraints, following the next steps:

- (a) Recover the node IDs of the images associated with each inter-session loop closing classified as a true positive, and the corresponding transforms.

- (b) For each of these loop closings, add one additional link to the graph between the two nodes involved, whose content is the corresponding transform.

- Run the graph bundle adjustment using the Levenberg-Marquardt algorithm. Even if no inter-session loop closings are found after a certain number of iterations, the graph is still optimized, to re-adjust the odometric trajectory estimates.

- , , and .

- Return to the first step and iterate the process until both trajectories are finished. If one of the two trajectories finishes before the other, the system keeps adding the nodes of the session that is still in progress. No additional inter-session loop closings will be found in this case, so every graph optimization will include only the pose estimates given by the visual odometry of the ongoing mission.
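
The node-adding steps of this procedure can be sketched as follows. This is a minimal illustration, assuming planar poses (x, y, yaw) and the usual pose composition; the `PoseGraph` class and its method names are hypothetical and do not correspond to the authors' code. The two dictionaries implement the two-way association between node IDs and images described above.

```python
import math
from dataclasses import dataclass, field

def compose(pose, delta):
    """Compose a global pose with a displacement expressed in that pose's frame."""
    x, y, th = pose
    dx, dy, dth = delta
    c, s = math.cos(th), math.sin(th)
    yaw = math.atan2(math.sin(th + dth), math.cos(th + dth))  # wrap to (-pi, pi]
    return (x + c * dx - s * dy, y + s * dx + c * dy, yaw)

@dataclass
class PoseGraph:
    poses: list = field(default_factory=list)     # node ID -> global pose
    edges: list = field(default_factory=list)     # (from ID, to ID, transform)
    image_of: dict = field(default_factory=dict)  # node ID -> image name
    node_of: dict = field(default_factory=dict)   # image name -> node ID

    def _register(self, pose, image):
        node_id = len(self.poses)
        self.poses.append(pose)
        self.image_of[node_id] = image
        self.node_of[image] = node_id
        return node_id

    def add_root(self, pose, image):
        """First node of a trajectory, given directly as a global pose."""
        return self._register(pose, image)

    def add_odometry_node(self, last_id, delta, image):
        """New last node of a trajectory, linked to the previous last node."""
        node_id = self._register(compose(self.poses[last_id], delta), image)
        self.edges.append((last_id, node_id, delta))
        return node_id

# Both trajectories share one graph; each keeps track of its own last node.
graph = PoseGraph()
last_1 = graph.add_root((0.0, 0.0, 0.0), "session1/000.png")
last_2 = graph.add_root((5.0, 0.0, math.pi), "session2/000.png")
last_1 = graph.add_odometry_node(last_1, (1.0, 0.0, 0.1), "session1/001.png")
last_2 = graph.add_odometry_node(last_2, (0.8, 0.0, -0.05), "session2/001.png")
```

Keeping both lookups in the graph object means that a loop closing detected between two images can be turned into a link between two nodes without any search.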

Algorithm 2: Multi-robot Visual Graph SLAM.
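
The bookkeeping that decides when the graph is optimized can be sketched as below. This is only an illustration under stated assumptions: the class name, the dictionary keys and the `max_steps` parameter are hypothetical, the comparison against the threshold is assumed to be strict, and `optimize` stands for whatever graph optimizer is used (Levenberg-Marquardt in the text).

```python
class ClosingAccumulator:
    """Accumulates confirmed inter-session loop closings and triggers optimization."""

    def __init__(self, min_inliers, closings_threshold, max_steps):
        self.min_inliers = min_inliers                # inlier filter for candidates
        self.closings_threshold = closings_threshold  # closings needed to optimize
        self.max_steps = max_steps                    # optimize anyway after this many steps
        self.pending = []                             # true positives not yet in the graph
        self.steps = 0                                # iterations since the last optimization

    def step(self, candidates, graph_edges, optimize):
        """Process one iteration; return True if the graph was optimized."""
        self.steps += 1
        # Candidates with too few inliers are discarded as false positives.
        self.pending += [c for c in candidates if c["inliers"] >= self.min_inliers]
        enough_closings = len(self.pending) > self.closings_threshold
        waited_too_long = self.steps >= self.max_steps
        if not (enough_closings or waited_too_long):
            return False
        # Each true positive becomes an additional link between its two nodes.
        for c in self.pending:
            graph_edges.append((c["node_1"], c["node_2"], c["transform"]))
        optimize(graph_edges)
        self.pending, self.steps = [], 0  # reset the counters for the next round
        return True
```

Passing the optimizer in as a callable keeps this logic independent of the optimization back end, so the same trigger works whether or not any loop closing was found in the current round.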

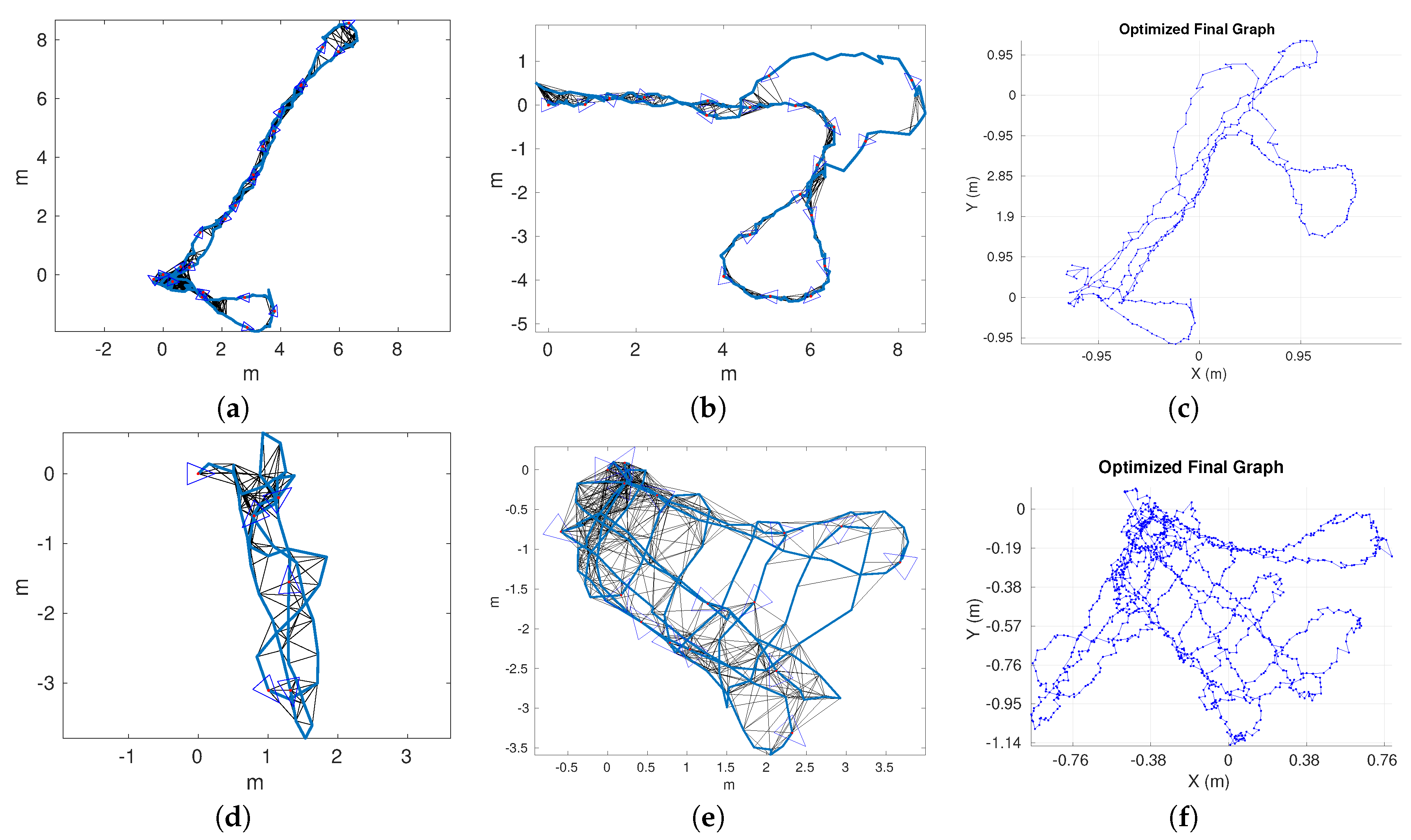

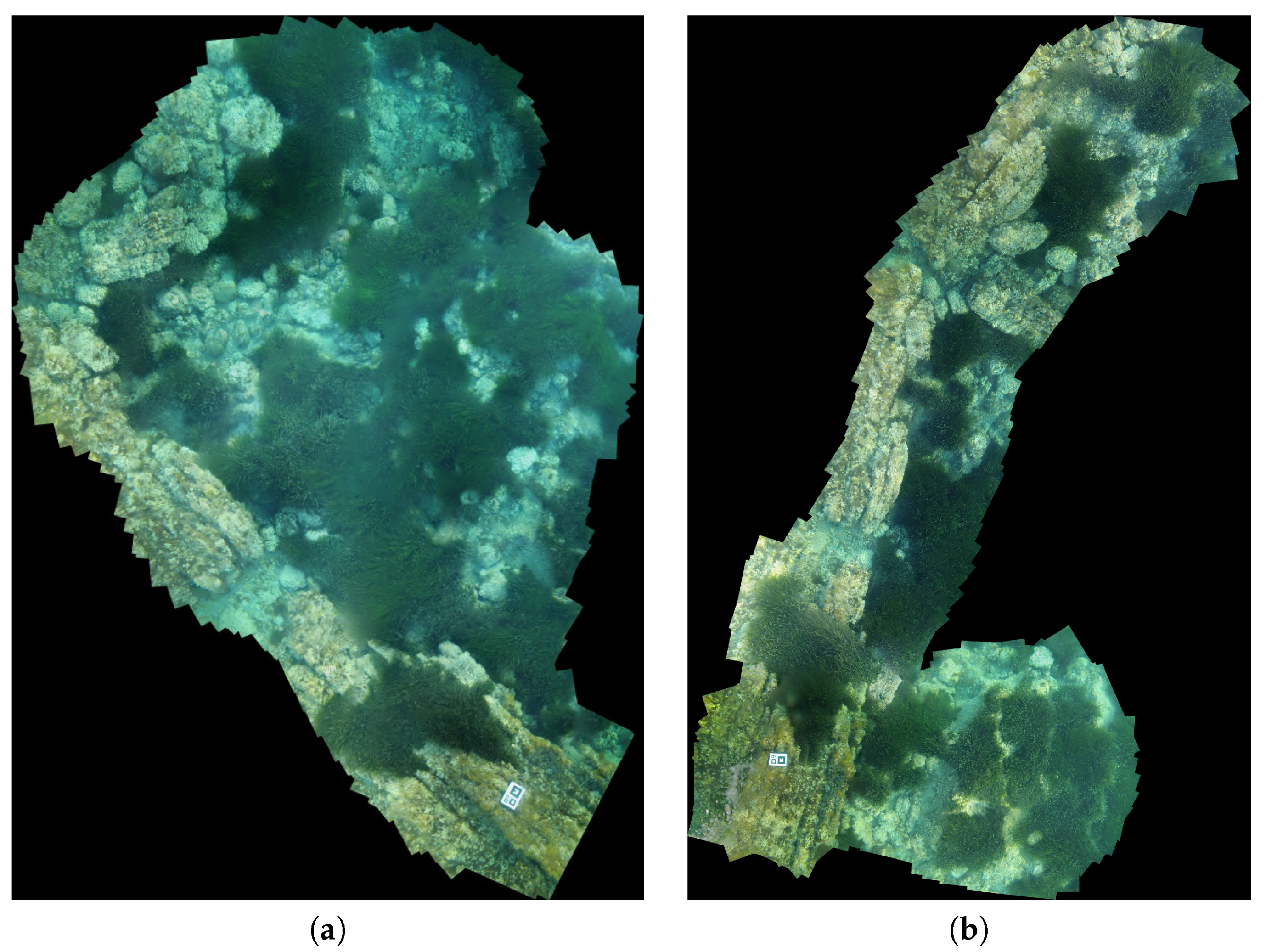

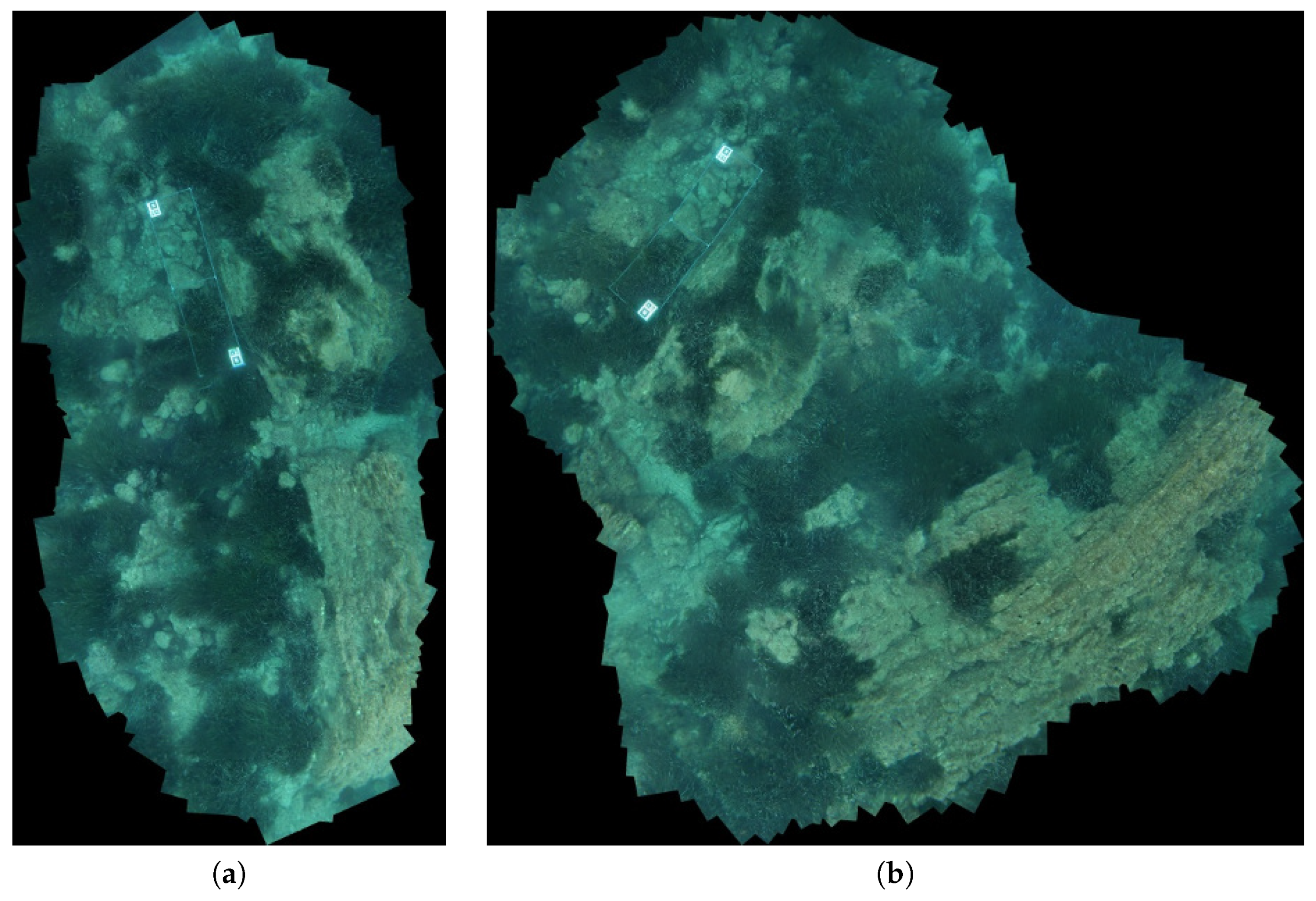

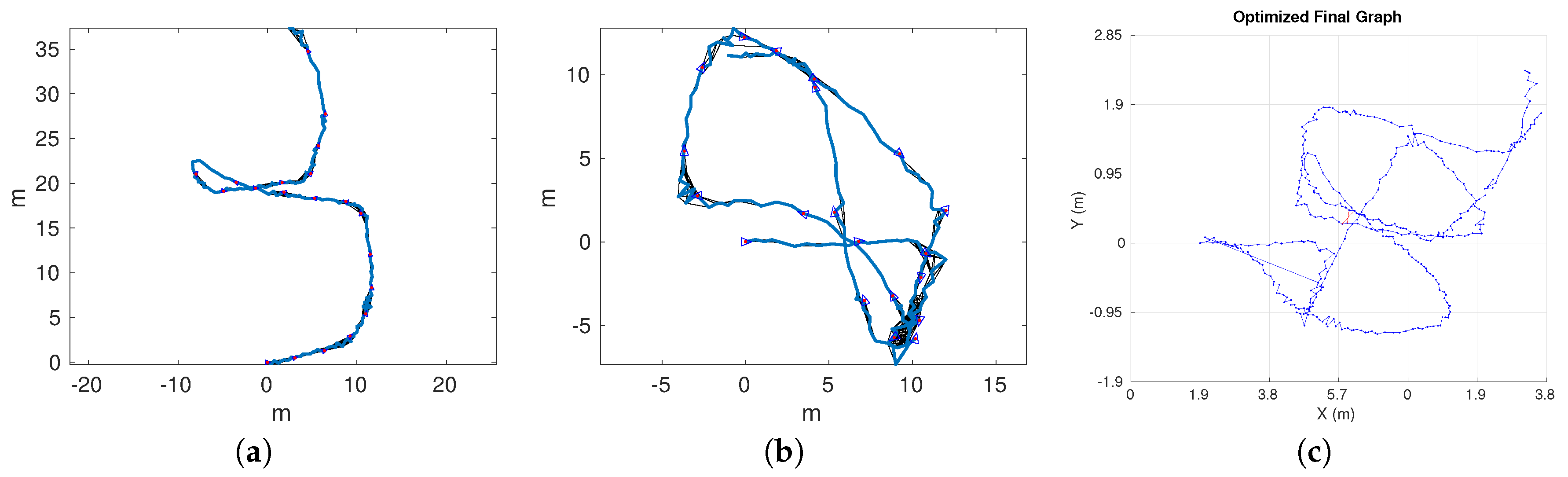

3. Experimental Results

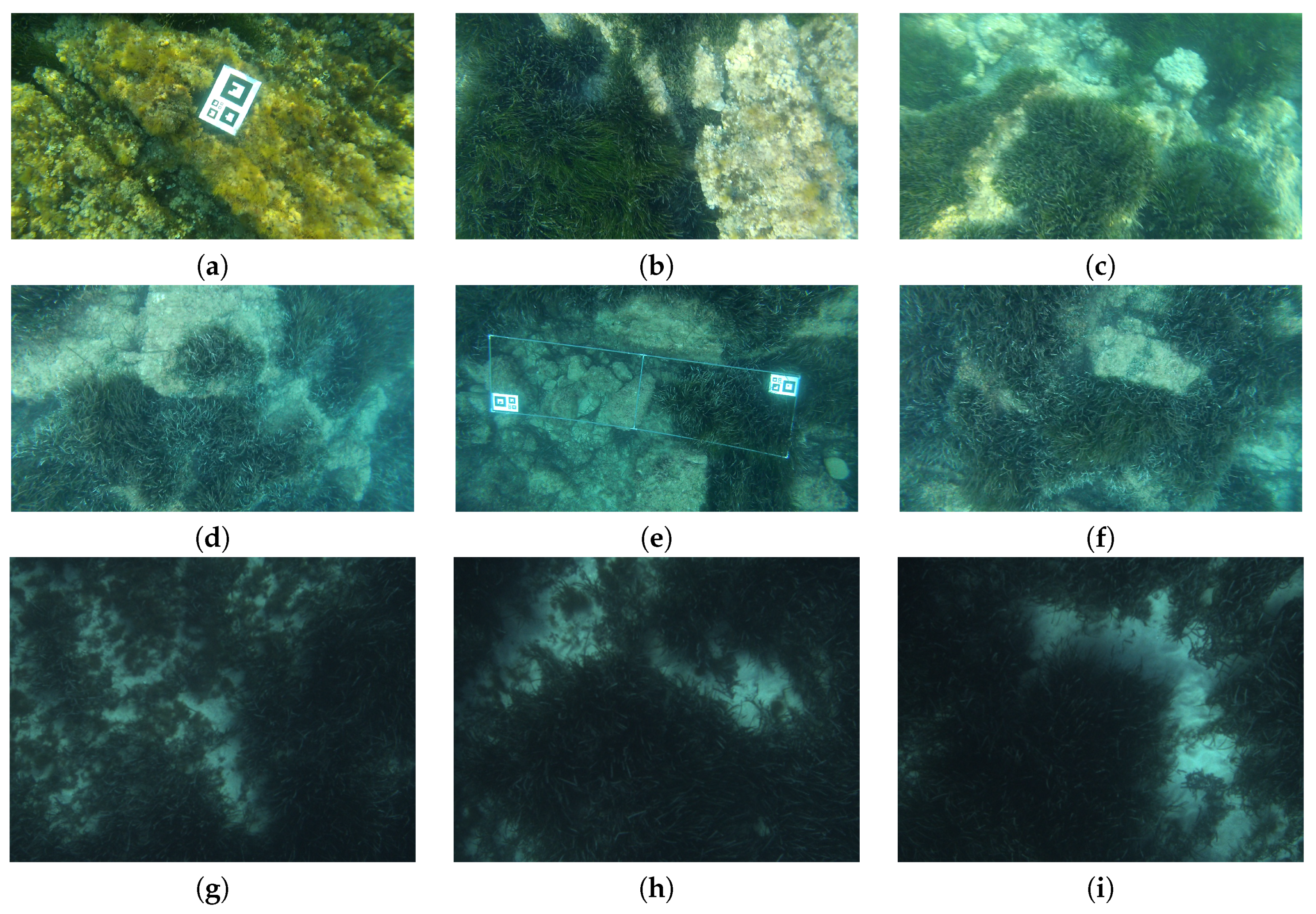

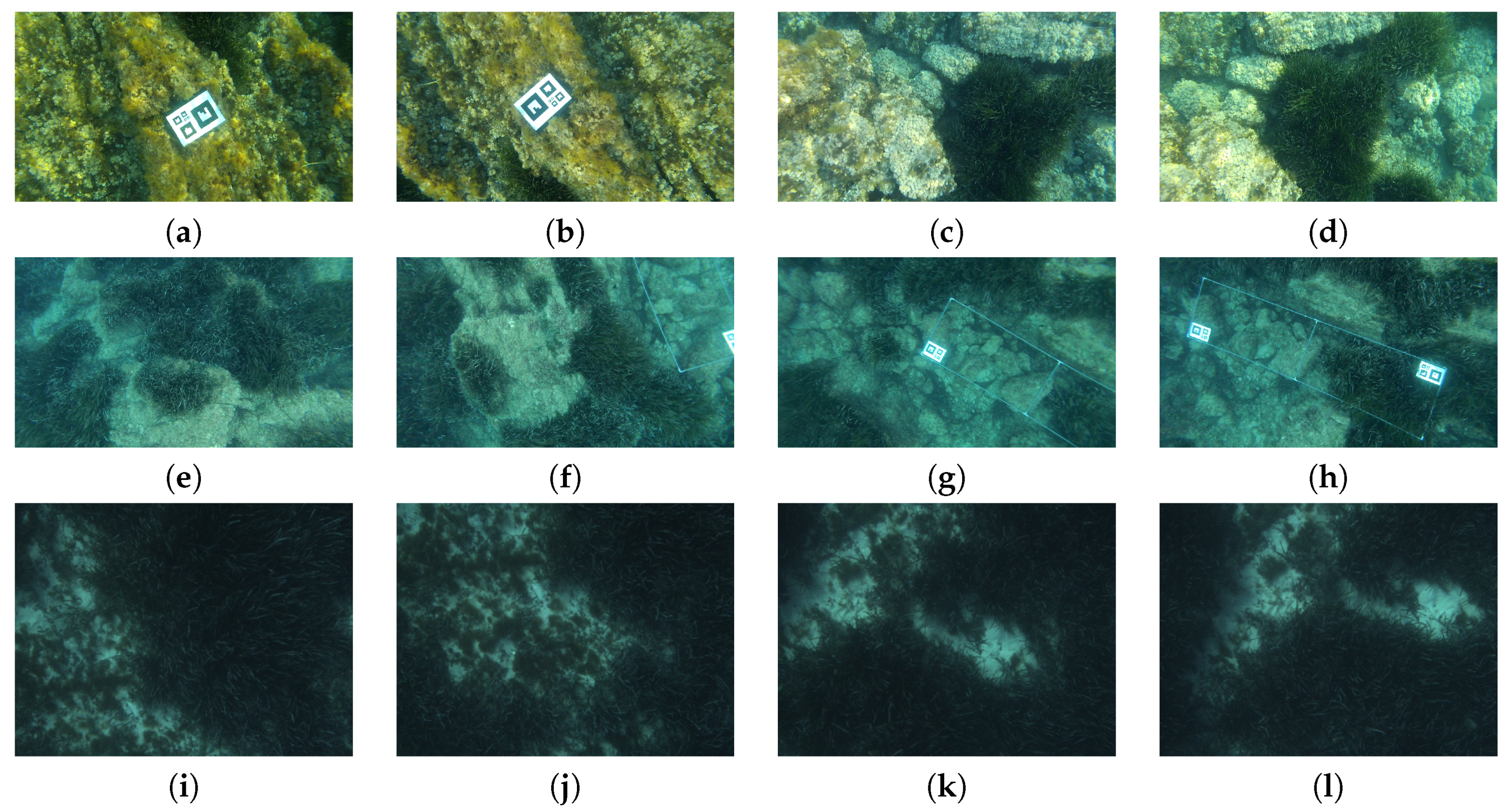

3.1. Experimental Setup

3.2. Experiments and Results

- For each dataset, extract the key images of both video sequences and store them in separate folders.

- For each dataset, compute the HALOC global descriptor of each image extracted from both video sequences.

- For each dataset, compute the frame-to-frame odometry of both stored image sets, one per session, and store it in a file.

- At this point, for each dataset and for each of its sessions, the key frames and the odometry have been stored and related through successive identifiers. Thereafter, for each dataset, run the local SLAM procedure, which:

- (a) Starts the algorithm of Section 2.2, building the state vector of each session in steps of N consecutive frames, using the displacements included in each odometry file.

- (b) For each newly gathered image (the query image), searches for local loop closings among the other images of the same dataset whose positions are near the query. This search is done only among the images gathered before the query.

- (c) Optimizes both local graphs according to Section 2.2.2.

- (d) For each image of trajectory 2 (the query), searches for the 5 best HALOC loop-closing candidates in trajectory 1. Each candidate, if any, is confirmed by means of Algorithm 1 and filtered out if its number of inliers is lower than the predefined threshold.

- (e) Accumulates the number of true inter-session loop closings.

- (f) When the number of accumulated inter-session loop closings is greater than a certain threshold, joins both sessions in a single pose-based graph. That means transforming all members of the joined state vector into global poses and the corresponding graph nodes, and associating each node with its corresponding image.

- Run the multi-robot SLAM procedure, according to Algorithm 2:

- (a) Obtain the rest of the images from memory and add new nodes according to the successive odometry data of both sessions, as explained in Section 2.3.

- (b) Search among all images of session 1 for the 5 best candidates to close an inter-session loop with each new query of session 2, and filter out those that do not present enough inliers after running Algorithm 1. HALOC is the method used to find these inter-session loop-closing candidates. Since each image is associated with a node of the global graph, computing the transform between two loop-closing candidates and adding it between both nodes is straightforward.

- (c) Get the transform between the pairs of images that constitute true positives (true loop closings).

- (d) Add this transform to the graph as a new pose constraint, in the form of a link between two nodes. The nodes are those related to the images involved in the inter-session loop closing.

- (e) Optimize the graph.

- (f) Finish the process when all images from both sessions have been used.
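
The candidate search used in the local and multi-robot procedures above (retrieve the 5 best candidates, then keep only those confirmed with enough inliers) can be sketched as follows. This is an illustration only: global descriptors are assumed to be fixed-length numeric vectors compared with the L1 distance, and `verify` is a hypothetical callback standing in for Algorithm 1 that returns a transform and its number of inliers.

```python
import heapq

def l1_distance(a, b):
    """L1 distance between two equally sized descriptors."""
    return sum(abs(x - y) for x, y in zip(a, b))

def best_candidates(query, descriptors, k=5):
    """IDs of the k stored descriptors closest to the query, nearest first."""
    return heapq.nsmallest(
        k, descriptors, key=lambda image_id: l1_distance(query, descriptors[image_id])
    )

def confirmed_closings(query, descriptors, verify, min_inliers, k=5):
    """Keep only the candidates whose geometric check has enough inliers."""
    closings = []
    for image_id in best_candidates(query, descriptors, k):
        transform, n_inliers = verify(image_id)
        if transform is not None and n_inliers >= min_inliers:
            closings.append((image_id, transform))
    return closings
```

Because the descriptor comparison is cheap, the expensive geometric check runs on at most k images per query rather than on every image of session 1.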

3.3. Some Considerations of the Data Reduction

3.4. Sources Availability

4. Discussion, Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| I1 | I2 | Node I1 | Node I2 | Graph Transform | RANSAC Transform | Difference (modulus, yaw) |
|---|---|---|---|---|---|---|
| 56 | 280 | 115 | 113 | [3.04;−4.05;0.1711] | [3.12;−3.99;0.18] | (0.00015 m, 0.0089 rad) |
| 64 | 287 | 131 | 126 | [12.10;41.17;0.0633] | [21.71;33.31;−0.03] | (0.018 m, 0.01 rad) |
| 152 | 420 | 307 | 393 | [37.09;31.95;−0.34] | [36.65;31.47;−0.29] | (0.00097 m, 0.05 rad) |

| I1 | I2 | Node I1 | Node I2 | Graph Transform | RANSAC Transform | Difference (modulus, yaw) |
|---|---|---|---|---|---|---|
| 335 | 1181 | 672 | 602 | [30.43;59.40;2.42] | [−18.61;−1.69;2.61] | (0.148 m, 0.2 rad) |
| 603 | 1190 | 941 | 621 | [1.19;−30.22;−0.024] | [11.77;19.69;0.1959] | (0.097 m, 0.21 rad) |
| 877 | 1211 | 1215 | 662 | [−6.34;−92.66;−0.196] | [24.69;−50.403;−0.314] | (0.099 m, 0.12 rad) |
| 871 | 1211 | 1209 | 662 | [−20.65;−84.08;−3.69 × ] | [14.75;−43.66;−0.086] | (0.0102 m, 0.085 rad) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bonin-Font, F.; Burguera, A. Towards Multi-Robot Visual Graph-SLAM for Autonomous Marine Vehicles. J. Mar. Sci. Eng. 2020, 8, 437. https://doi.org/10.3390/jmse8060437
