Comparison of Human and Camera Visual Acuity—Setting the Benchmark for Shallow Water Autonomous Imaging Platforms

Abstract

:1. Introduction

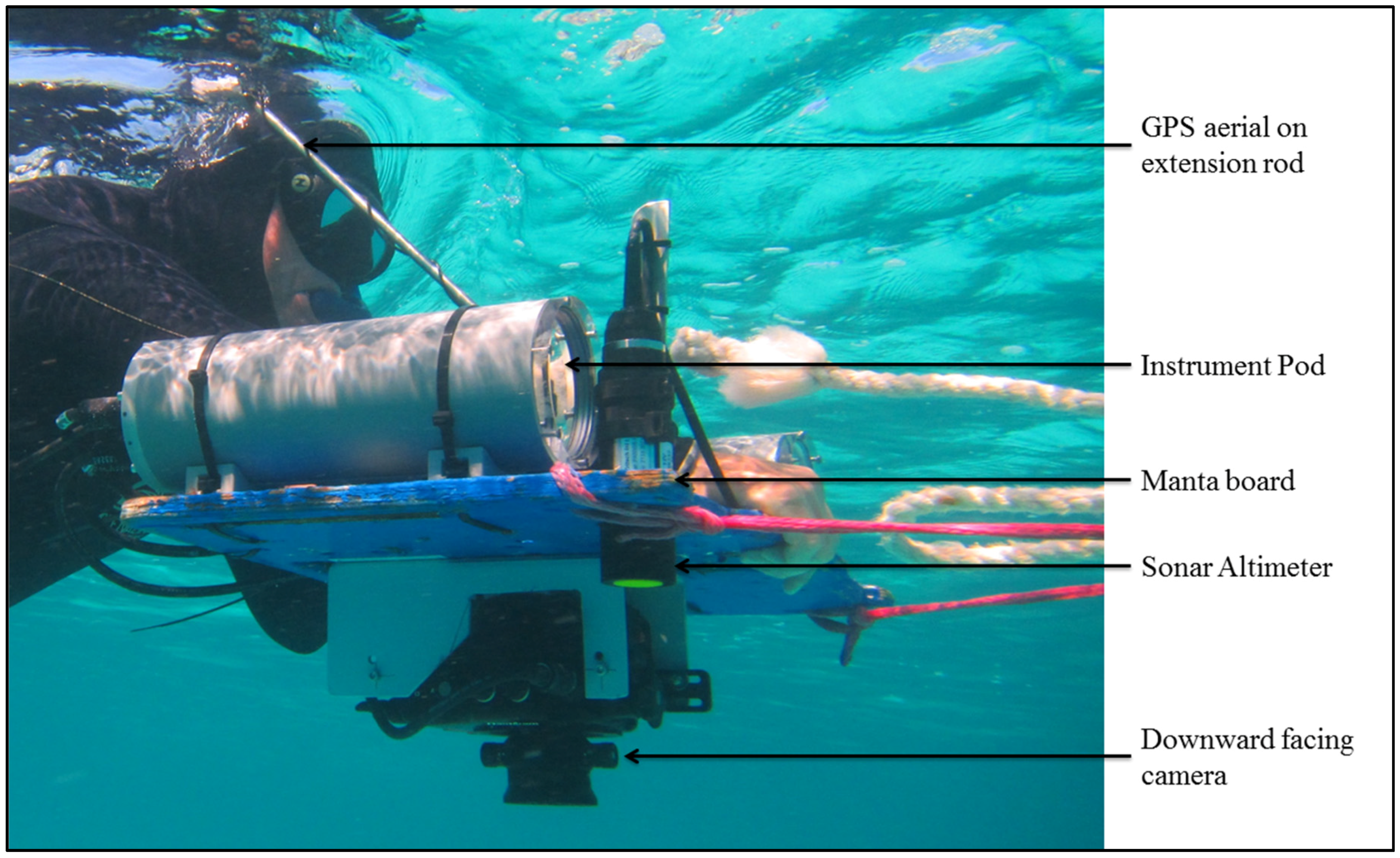

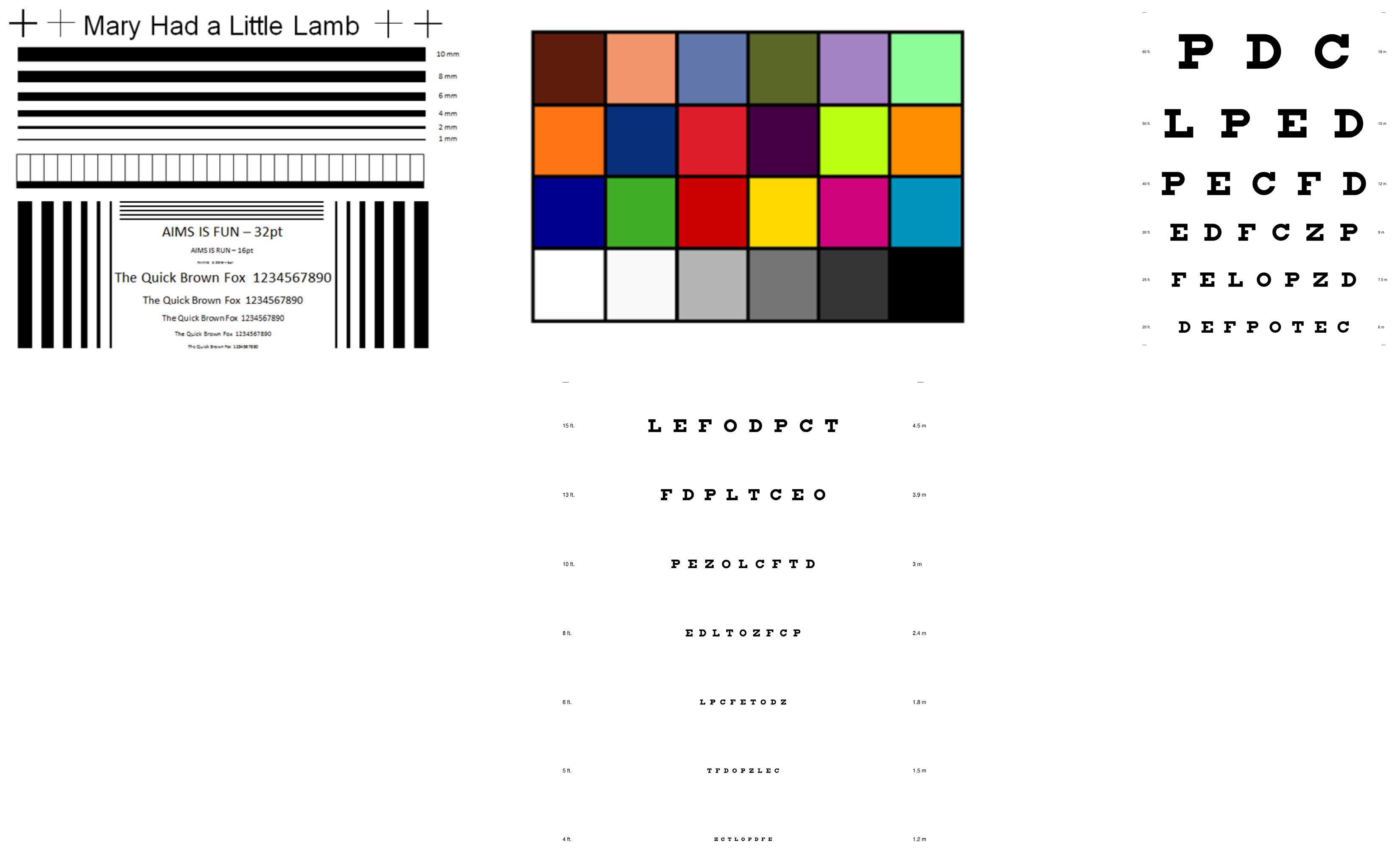

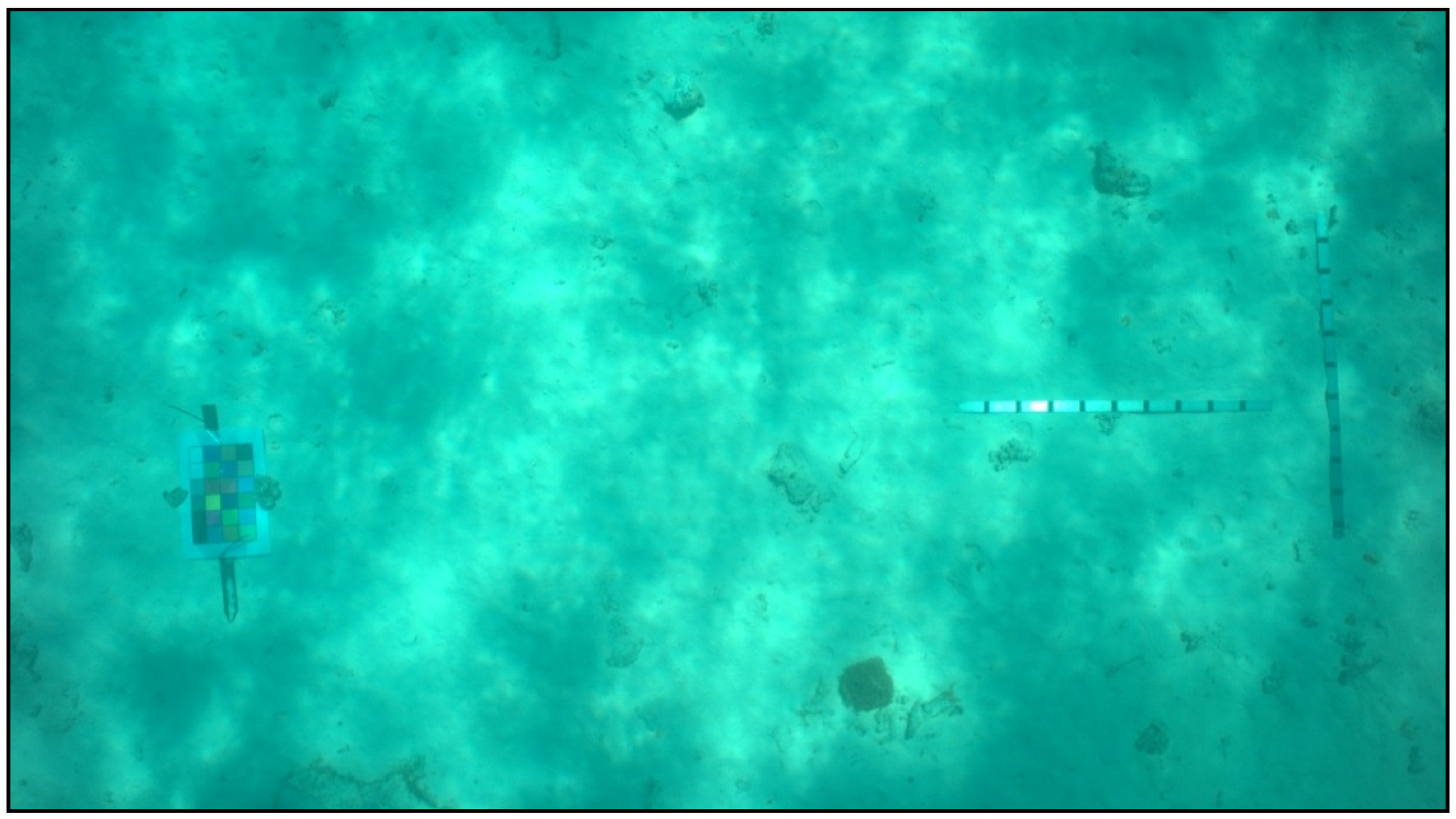

2. Experimental Section

3. Results

3.1. Visual Field of View

3.2. Visual Acuity

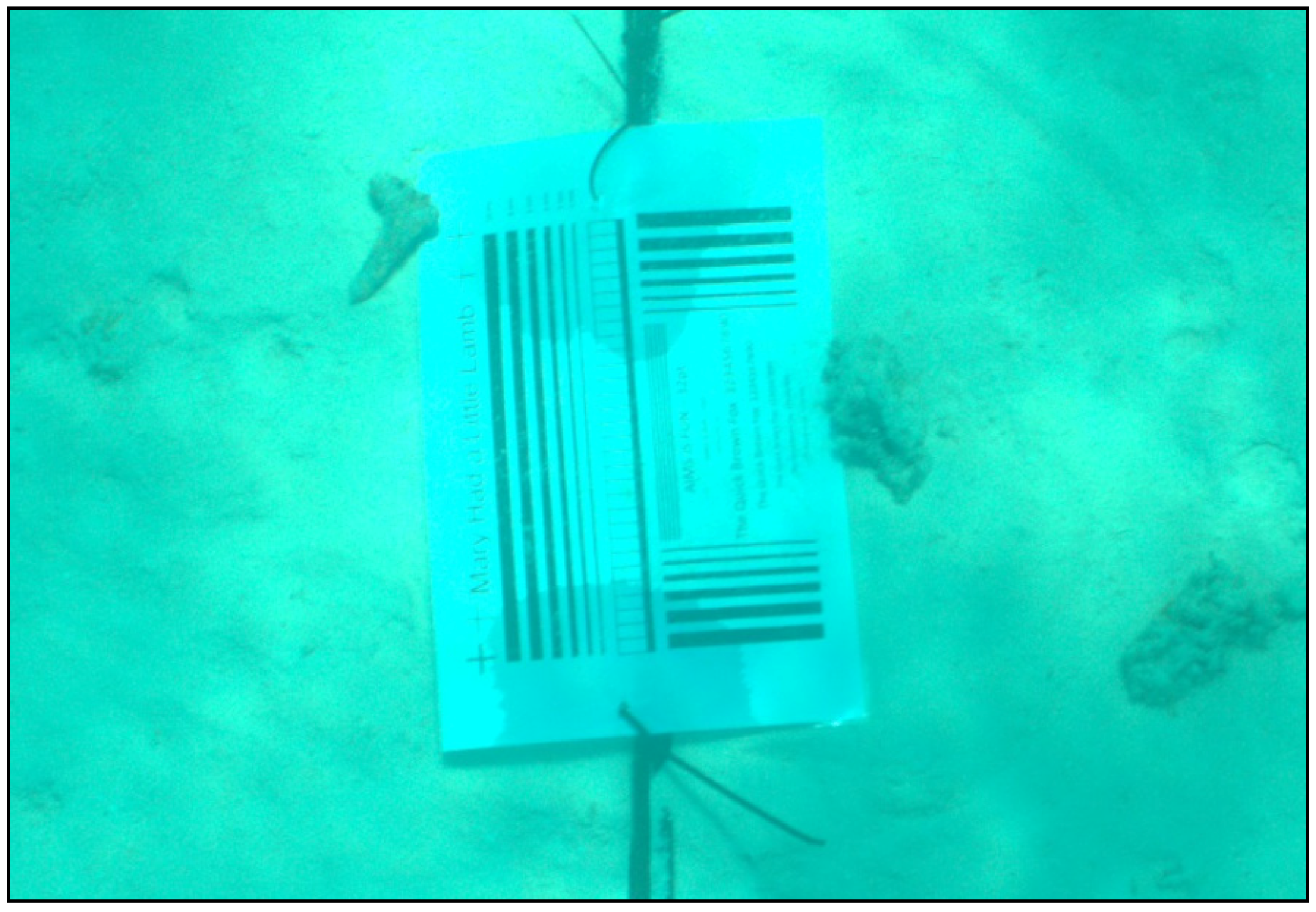

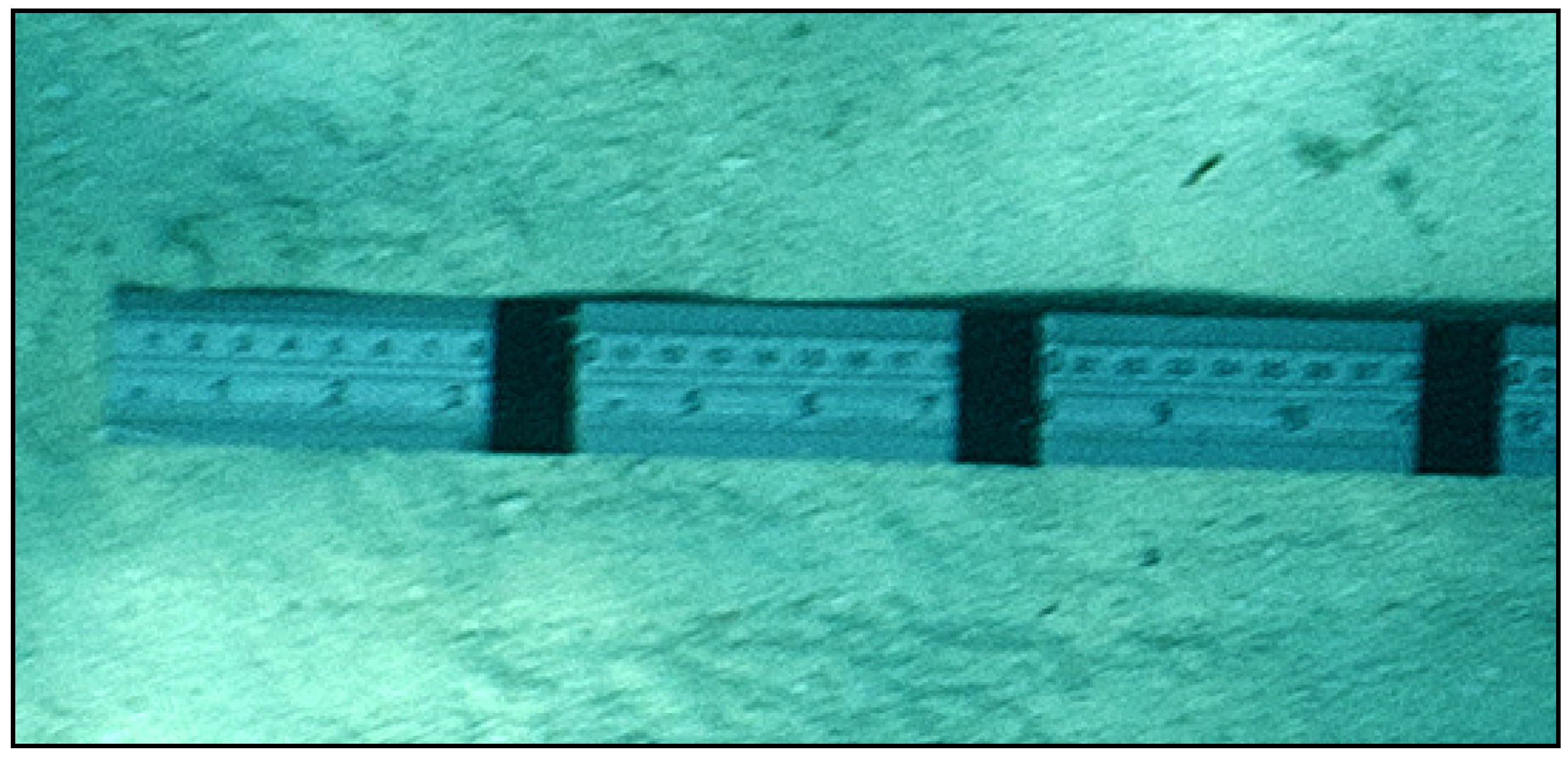

3.3. Resolution

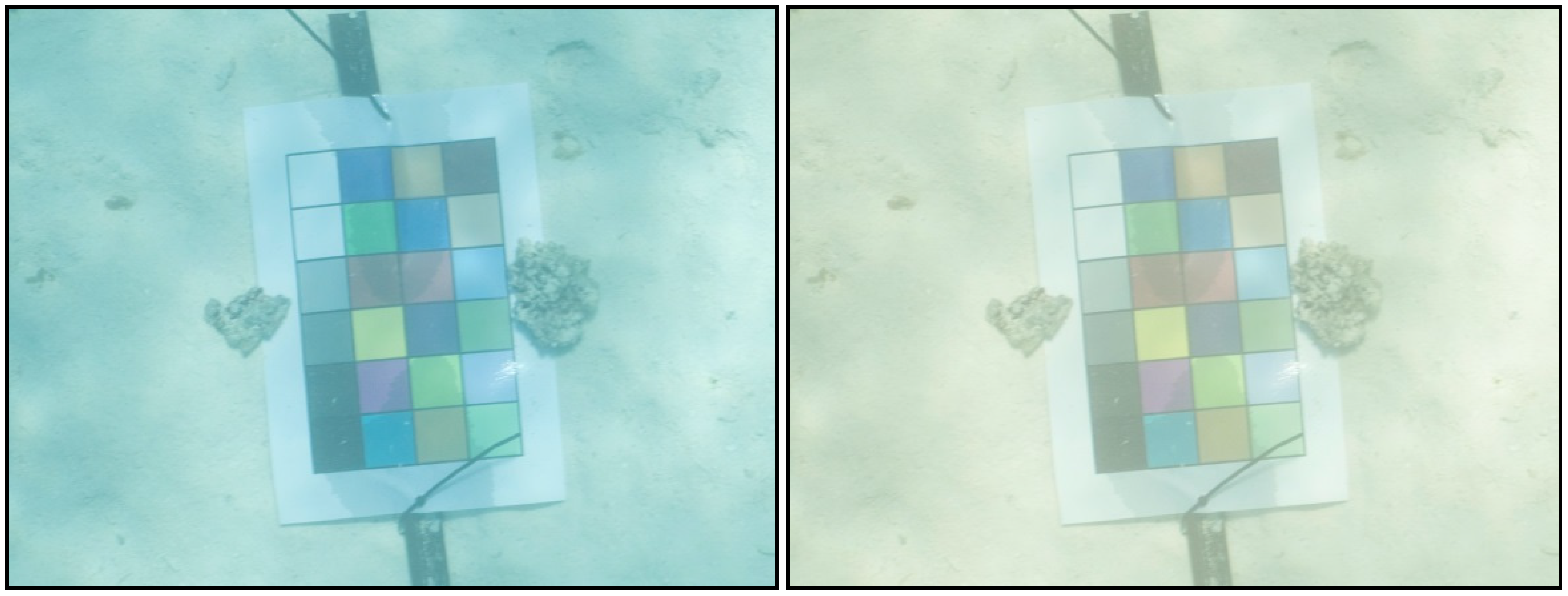

3.4. Color Balance

4. Discussion

4.1. Visual Acuity

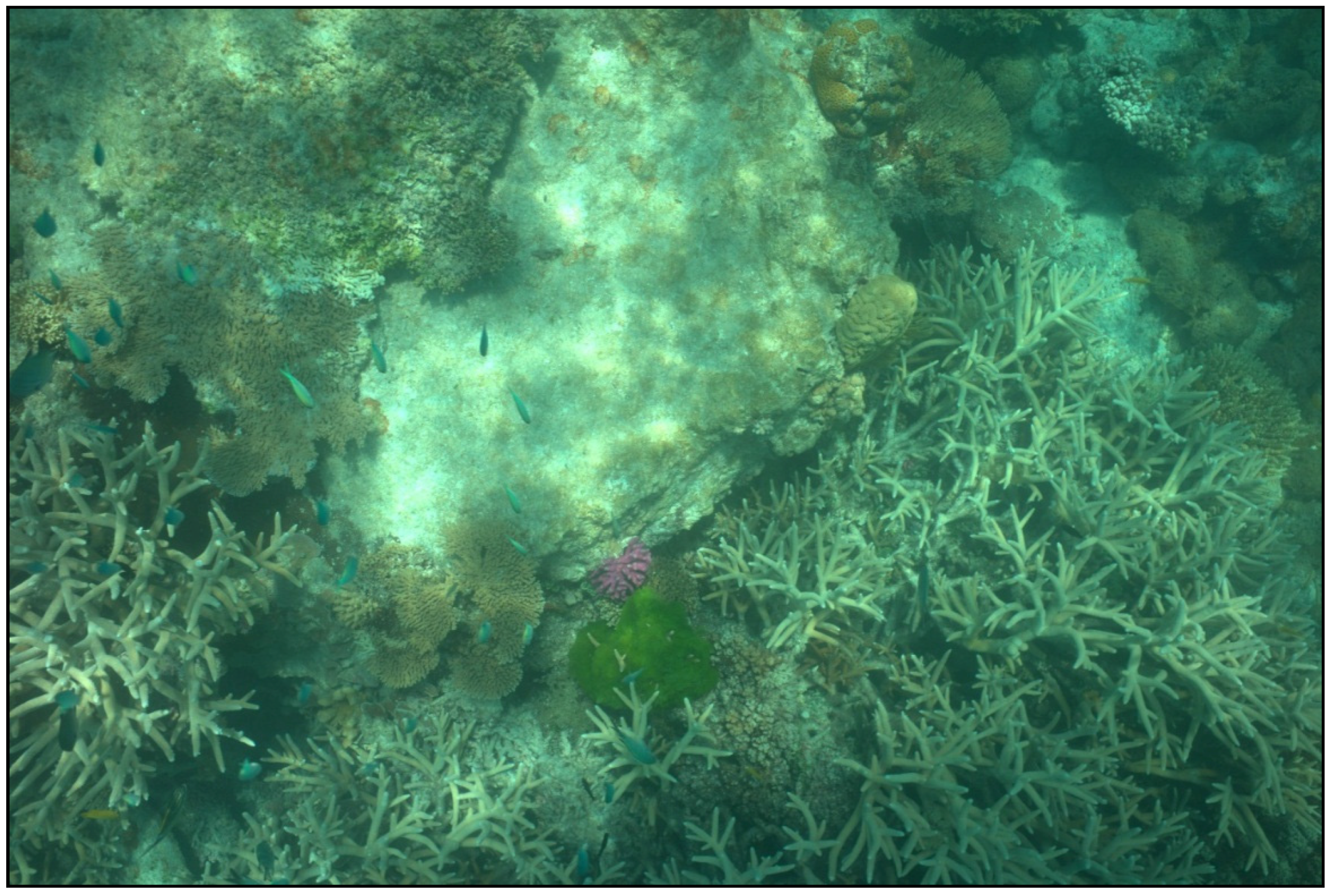

4.2. Real World Use

4.3. Implications for Autonomous Monitoring

5. Conclusions

Supplementary Materials

Supplementary File 1Acknowledgments

Author Contributions

Conflicts of Interest

References

- Boavida, J.; Assis, J.; Reed, J.; Serrão, E.A.; Gonçalves, J.M. Comparison of small remotely operated vehicles and diver-operated video of circalittoral benthos. Hydrobiologia 2016, 766, 247–260. [Google Scholar] [CrossRef]

- Singh, W.; Örnólfsdóttir, E.B.; Stefansson, G. A camera-based autonomous underwater vehicle sampling approach to quantify scallop abundance. J. Shellfish Res. 2013, 32, 725–732. [Google Scholar] [CrossRef]

- Desa, E.; Madhan, R.; Maurya, P. Potential of autonomous underwater vehicles as new generation ocean data platforms. Curr. Sci. 2006, 90, 1202–1209. [Google Scholar]

- Patterson, M.; Relles, N. Autonomous underwater vehicles resurvey bonaire: A new tool for coral reef management. In Proceedings of the 11th International Coral Reef Symposium, Ft. Lauderdale, FL, USA, 7–11 July 2008; pp. 539–543.

- Armstrong, R.A.; Singh, H.; Torres, J.; Nemeth, R.S.; Can, A.; Roman, C.; Eustice, R.; Riggs, L.; Garcia-Moliner, G. Characterizing the deep insular shelf coral reef habitat of the hind bank marine conservation district (US virgin islands) using the seabed autonomous underwater vehicle. Cont. Shelf Res. 2006, 26, 194–205. [Google Scholar] [CrossRef]

- Kocak, D.M.; Dalgleish, F.R.; Caimi, F.M.; Schechner, Y.Y. A focus on recent developments and trends in underwater imaging. Mar. Technol. Soc. J. 2008, 42, 52–67. [Google Scholar] [CrossRef]

- Moran, P.J.; Johnson, D.; Miller-Smith, B.; Mundy, C.; Bass, D.; Davidson, J.; Miller, I.; Thompson, A. A Guide to the Aims Manta Tow Technique; Australian Institute of Marine Science (AIMS): Townsville, Australia, 1989. [Google Scholar]

- Long, B.; Andrews, G.; Wang, Y.-G.; Suharsono. Sampling accuracy of reef resource inventory technique. Coral Reefs 2004, 23, 378–385. [Google Scholar] [CrossRef]

- Mallet, D.; Wantiez, L.; Lemouellic, S.; Vigliola, L.; Pelletier, D. Complementarity of rotating video and underwater visual census for assessing species richness, frequency and density of reef fish on coral reef slopes. PLoS ONE 2014, 9, e84344. [Google Scholar]

- Pelletier, D.; Leleu, K.; Mou-Tham, G.; Guillemot, N.; Chabanet, P. Comparison of visual census and high definition video transects for monitoring coral reef fish assemblages. Fish. Res. 2011, 107, 84–93. [Google Scholar] [CrossRef]

- Harvey, E.; Fletcher, D.; Shortis, M.R.; Kendrick, G.A. A comparison of underwater visual distance estimates made by scuba divers and a stereo-video system: Implications for underwater visual census of reef fish abundance. Mar. Freshw. Res. 2004, 55, 573–580. [Google Scholar] [CrossRef]

- Sloan, L.L. Measurement of visual acuity: A critical review. AMA Arch. Ophthalmol. 1951, 45, 704–725. [Google Scholar] [CrossRef] [PubMed]

- Schulz, J. Geometric optics and strategies for subsea imaging. Subsea Optics Imaging 2013. [Google Scholar] [CrossRef]

- Lyons, M. Photography Calculators. Available online: http://www.tawbaware.com/maxlyons/calc.htm (accessed on 5 November 2015).

- Cambridge in Colour. Cameras versus the Human Eye. Available online: http://www.cambridgeincolour.com/tutorials/cameras-vs-human-eye.htm (accessed on 5 November 2015).

- Ross, H.E.; Nawaz, S. Why do objects appear enlarged under water? Arquivos Brasileiros de Oftalmologia 2003, 66, 69–76. [Google Scholar] [CrossRef]

- Connick, J. Nauticam na-a7/ Sony Review: Mirrorless in Mexico. Available online: http://diveadvisor.com/sub2o/nauticam-na-a7-sony-review-mirrorless-in-mexico# (accessed on 6 January 2016).

- White, B. Nikonos Lenses on a Digital Camera? Available online: http://www.backscatter.com/learn/article/article.php?ID=94 (accessed on 6 January 2016).

- Rorslett, B. Lenses for Nikonos (i-v) Mount. Available online: http://www.naturfotograf.com/lens_nikonos.html (accessed on 6 January 2016).

- Church, J. Jim Church's Essential Guide to Nikonos Systems; Aqua Quest Publications, Inc.: Locust Valley, NY, USA, 1994. [Google Scholar]

- Oliva, A.; Torralba, A. The role of context in object recognition. Trends Cogn. Sci. 2007, 11, 520–527. [Google Scholar] [CrossRef] [PubMed]

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bainbridge, S.; Gardner, S. Comparison of Human and Camera Visual Acuity—Setting the Benchmark for Shallow Water Autonomous Imaging Platforms. J. Mar. Sci. Eng. 2016, 4, 17. https://doi.org/10.3390/jmse4010017

Bainbridge S, Gardner S. Comparison of Human and Camera Visual Acuity—Setting the Benchmark for Shallow Water Autonomous Imaging Platforms. Journal of Marine Science and Engineering. 2016; 4(1):17. https://doi.org/10.3390/jmse4010017

Chicago/Turabian StyleBainbridge, Scott, and Scott Gardner. 2016. "Comparison of Human and Camera Visual Acuity—Setting the Benchmark for Shallow Water Autonomous Imaging Platforms" Journal of Marine Science and Engineering 4, no. 1: 17. https://doi.org/10.3390/jmse4010017

APA StyleBainbridge, S., & Gardner, S. (2016). Comparison of Human and Camera Visual Acuity—Setting the Benchmark for Shallow Water Autonomous Imaging Platforms. Journal of Marine Science and Engineering, 4(1), 17. https://doi.org/10.3390/jmse4010017