1. Introduction

With the rapid development of the global marine economy, intelligent marine equipment, particularly Unmanned Surface Vehicles (USVs), is playing an increasingly critical role in marine resource development, environmental monitoring, and security maintenance [1]. The challenge of coordinating USV swarms for complex maritime missions has become a significant focus of recent experimental research. Simulation-based studies, in particular, have proven to be a critical methodology for developing and testing advanced control strategies, with recent works exploring deep reinforcement learning for tasks like coordinated path planning [2] and hierarchical collision avoidance in complex scenarios [3]. When deployed as a swarm, USVs can execute large-scale, dynamic tasks more efficiently and resiliently than single-vehicle systems [4]. These technological advances promise significant economic benefits, such as reduced operational costs and enhanced intelligence in key applications like port security [5].

While traditional control methodologies, such as Model Predictive Control (MPC) and sliding mode control, have demonstrated effectiveness for single-vehicle trajectory tracking and formation keeping under well-defined conditions, they face significant limitations in large-scale, adversarial maritime scenarios [6]. These methods often rely on precise system models and predefined objectives, making them less adaptable to the highly dynamic and uncertain nature of environments with intelligent, unpredictable opponents. The non-stationary dynamics induced by adversaries, coupled with the exponential complexity of multi-agent coordination, render the design of effective, centralized model-based controllers intractable. This necessitates a paradigm shift towards learning-based approaches that can derive sophisticated, adaptive control strategies directly from high-dimensional sensory data and interaction experience.

Despite this progress, deploying USV swarms in complex, real-world adversarial environments remains a significant challenge that pushes the boundaries of conventional control theory… However, existing multi-agent reinforcement learning (MARL) algorithms also face critical hurdles when applied to this domain. Three fundamental problems must be addressed: first, the effective fusion of multi-modal sensor data for robust situational awareness [7]; second, the learning of sophisticated, coordinated adversarial control strategies essential for mission success [8]; and third, overcoming the intermittent and low-bandwidth communication inherent to maritime operations, which complicates the training and coordination of distributed systems [9]. Existing MARL methods often fall short in one or more of these areas.

Current MARL methods, particularly those based on the popular Centralized Training with Decentralized Execution (CTDE) paradigm, have shown promise but still exhibit critical shortcomings in addressing these challenges. For instance, value-decomposition methods like QMIX [10] can be too restrictive for complex coordination. In contrast, actor–critic methods like MADDPG [11] often rely on simple concatenation of observation vectors, failing to capture the rich spatiotemporal relationships within multi-modal data. This usually leads to policy degradation in dynamic environments. Furthermore, many existing algorithms lack the representational power to model sophisticated opponent behaviors, causing their performance to drop significantly when facing adversaries with novel or adaptive tactics [12]. This highlights a clear research gap: the need for a unified framework that simultaneously handles complex multi-modal perception and learns powerful, generalizable policies for adversarial settings. Existing methods face a fundamental challenge in handling spatiotemporal dependencies within multi-agent systems. Although a transformer could theoretically manage a flattened spatiotemporal sequence, this approach undermines the essential instantaneous tactical graph structures (such as encirclements, cover, and formations) that agents create at specific times. On the other hand, relying solely on graph neural networks (GNNs) complicates capturing the temporal evolution of these tactical structures and understanding the long-term intentions of adversaries [13].

Compounding this challenge is the presence of intelligent adversaries, which introduces severe non-stationarity and necessitates rapid adaptation. Meta-reinforcement learning has emerged as a powerful paradigm to address this, aiming to create agents that can “learn to learn” and adapt quickly to novel situations with minimal new experience. Recent research has shown the effectiveness of this approach in multi-agent settings, demonstrating how meta-learned policies can generalize to handle unseen tasks or opponent strategies [14]. In the context of our work, this is crucial because traditional MARL algorithms, which learn a policy for a fixed opponent distribution, often exhibit brittle performance when confronted with adversaries that employ unseen tactics.

To address these limitations, this paper proposes a novel end-to-end control strategy learning framework, the Adversarial transformer actor–critic (Adv-TransAC) [

15]. The core idea is not merely to apply a transformer, but to introduce an innovative hybrid GAT-transformer architecture that achieves a decoupled modeling of spatiotemporal dependencies to inform the control policy [

16]. Our framework first employs a Graph Attention Network (GAT) to explicitly capture the instantaneous spatial relationships and tactical graph structures between agents at each timestep.

These high-quality “battlefield snapshots” are then fed as a sequence into a transformer encoder [

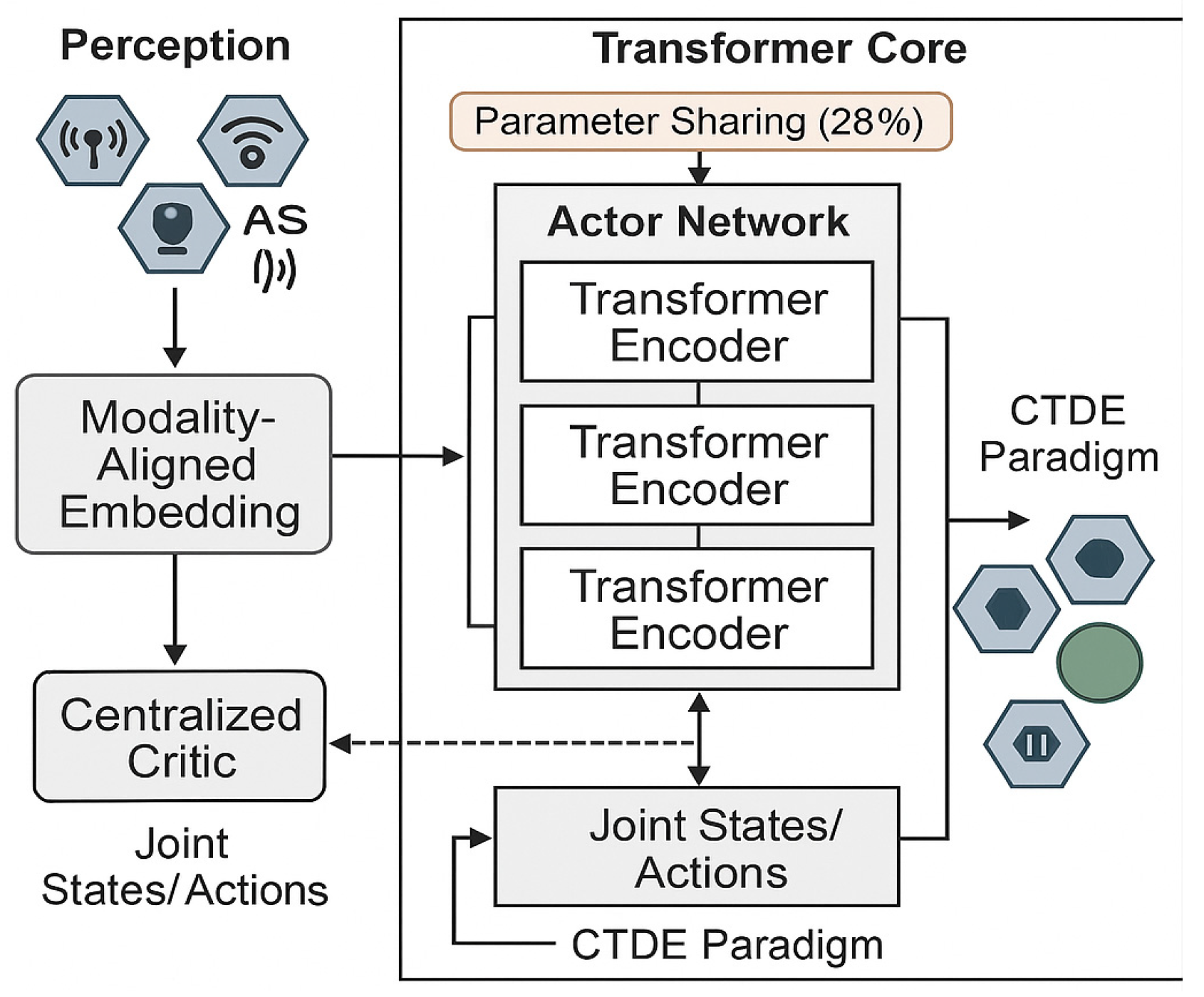

17] to analyze their long-term temporal evolution and tactical intent. The overall architecture, illustrated in

Figure 1, follows the Centralized Training with Decentralized Execution (CTDE) paradigm [

18]. Specifically, this powerful spatiotemporal modeling capability is integrated into our centralized critic for superior credit assignment. At the same time, decentralized actors leverage transformers to process their local observation histories and adapt to opponent strategies.

The model employs a transformer-based decentralized control policy network (actor) for agent-level decision-making based on multi-modal inputs, and a centralized critic for joint state–action evaluation, all within the CTDE paradigm.

The main contributions of this paper are summarized as follows:

We propose a novel MARL framework, Adv-TransAC, which systematically integrates the transformer architecture into an adversarial actor–critic paradigm to address the dual challenges of multi-modal fusion and strategic decision-making in multi-USV scenarios.

We design a specific network architecture where decentralized actors use transformers to process local multi-modal observation histories. At the same time, a centralized critic leverages a transformer to model global state–action values, enabling more effective coordination and credit assignment.

We conduct comprehensive experiments in a simulated multi-USV attack–defense environment, demonstrating that Adv-TransAC significantly outperforms established MARL baselines regarding task success rate and policy robustness.

The remainder of this paper is organized as follows: Section 2 reviews related work. Section 3 details the necessary preliminaries and our proposed Adv-TransAC methodology. Section 4 presents the experimental setup and results. Finally, Section 5 discusses our findings and concludes the paper.

3. Methodology

This section will introduce the theoretical foundations necessary to build the Adv-TransAC framework, including the basic paradigm of Dec-POMDP, adversarial multi-agent reinforcement learning (adversarial MARL), and the transformer architecture as the core of our model.

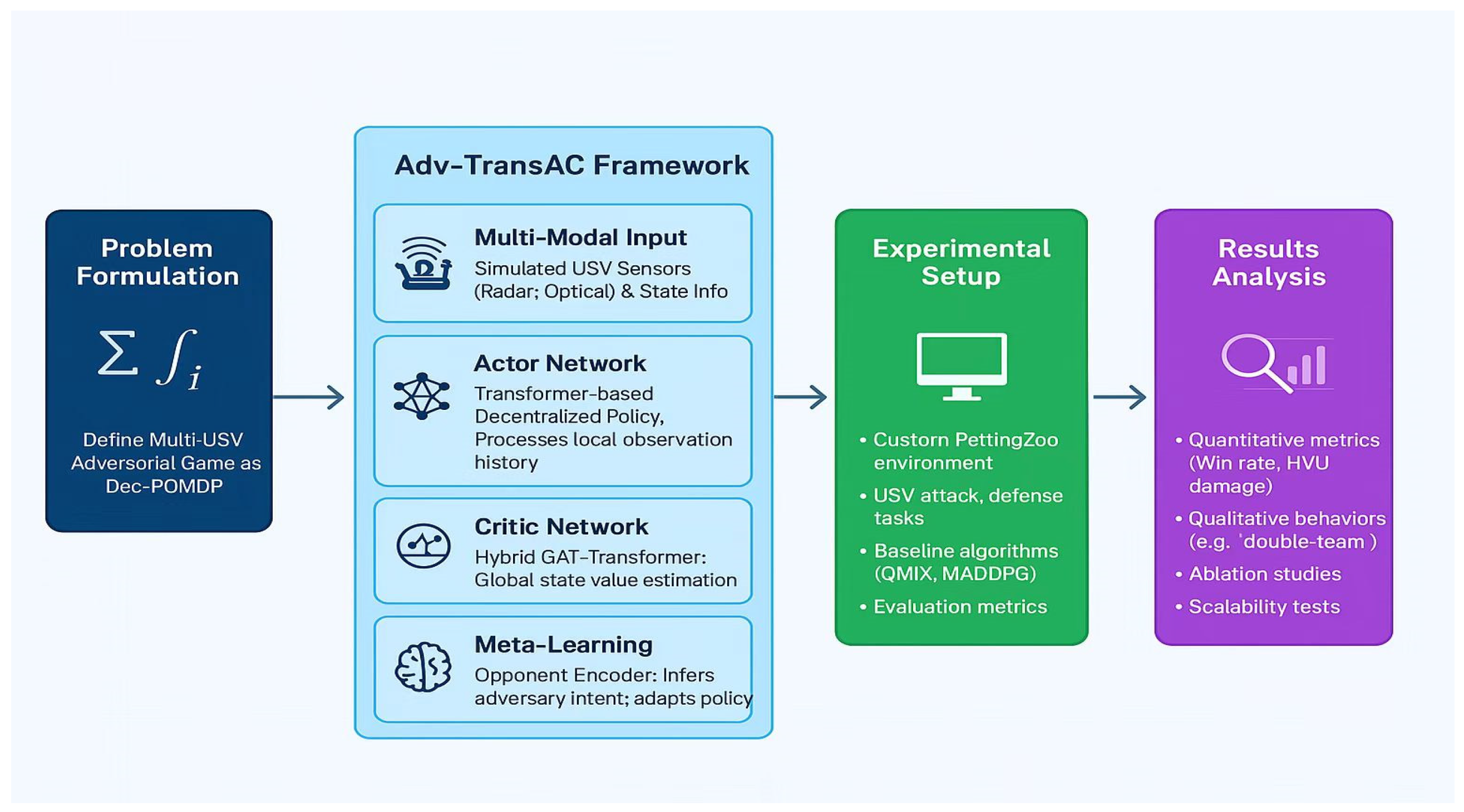

Overall research workflow. The diagram illustrates the complete research pipeline, starting from the theoretical problem formulation, moving to the design of the core components of the Adv-TransAC Framework, followed by the experimental setup for validation, and concluding with the results analysis methodologies. This flowchart provides a high-level guide for the detailed sections that follow.

3.1. Decentralized Partially Observable Markov Decision Process (Dec-POMDP)

For clarity and ease of reference, a comprehensive list of all mathematical symbols and acronyms used throughout this paper is provided in Nomenclature.

The Decentralized Partially Observable Markov Decision Process (Dec-POMDP) provides a formal framework for collaborative decision-making in multi-agent systems under partial observability. It is defined as a tuple: $\langle S, A, P, R, \Omega, O, n, \gamma \rangle$.

State Space (S): The global state space contains complete environmental information, including the position, heading, and velocity of all agents, dynamic obstacle distributions, mission target status, and the status of adversaries (e.g., their kinematic states). The state space grows exponentially with the number of agents $n$ for a given single-agent state dimension.

Action Space (A): The joint action space is defined as the Cartesian product $A = A_1 \times A_2 \times \cdots \times A_n$, where each agent $i$ selects an action $a_i \in A_i$ corresponding to discrete or continuous control commands such as acceleration, steering, or maintaining heading.

Transition Function (P): The state transition function $P(s' \mid s, a)$ describes the probability distribution of transitioning from state $s$ to state $s'$ under joint action $a = (a_1, \dots, a_n)$. This function incorporates ocean dynamics and environmental disturbances, modeled as $s' = f(s, a, \epsilon)$, where $s$ is the global state, $a$ is the joint action, and $\epsilon$ represents stochastic environmental noise.

Reward Function (R): The global reward function provides a shared signal for the team, designed to balance multiple objectives: $R = \alpha R_{\text{task}} + \beta R_{\text{safe}} + \gamma R_{\text{energy}}$, where $R_{\text{task}}$ represents task progress reward, $R_{\text{safe}}$ penalizes unsafe behaviors, and $R_{\text{energy}}$ controls energy consumption. The formulation of this multi-objective reward function is non-trivial and represents a critical aspect of shaping the final learned control strategy. The weights $\alpha$, $\beta$, and $\gamma$ are crucial hyperparameters that must be carefully tuned to orchestrate the trade-off between mission aggression, platform safety, and operational endurance. For instance, a higher value for $\beta$ would steer the learning process towards a more cautious, risk-averse policy, prioritizing collision avoidance over aggressive maneuvering. Conversely, a dominant $\alpha$ encourages more assertive, task-focused behaviors. The specific weights used in our experiments are chosen to foster a balanced control strategy that aggressively pursues mission objectives while maintaining a high degree of operational integrity.

Observation Space ($\Omega$): Each agent $i$ receives local observations $o_i \in \Omega$ that are corrupted by sensor noise. Observations include enemy positions within the limited field of view, friendly agent states within communication range, and navigation data subject to positioning errors.

Observation Function (O): The observation function $O(o_i \mid s)$ quantifies the conditional probability of agent $i$ obtaining observation $o_i$ in global state $s$, directly characterizing the partial observability challenge.

Agent Count (n): The number of agents determines the scale of the collaborative decision-making unit, encompassing both attacking and defending forces.

Discount Factor ($\gamma$): The discount factor $\gamma$ (distinct from the reward weight $\gamma$ above) balances immediate and long-term rewards through the cumulative return: $G_t = \sum_{k=0}^{\infty} \gamma^{k} r_{t+k}$.

Key Characteristics:

Partial Observability: Agents must make decisions based on their local action-observation histories, denoted as $\tau_i = (o_i^{1}, a_i^{1}, \dots, a_i^{t-1}, o_i^{t})$. The policy for agent $i$ is expressed as $\pi_i(a_i \mid \tau_i)$.

Decentralized Execution: The framework enables independent operation when communication is disrupted after training, ensuring robustness in adversarial environments.

Cooperative Objective: Implicit collaboration is achieved by maximizing the expected cumulative team reward: $J = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t} r_t\right]$.

This formal framework establishes the theoretical foundation for Adv-TransAC. Specifically, our actor-critic architecture, operating under the Centralized Training with Decentralized Execution (CTDE) paradigm, is designed to tackle these challenges: the decentralized actors learn policies based on local histories ($\tau_i$), while the centralized critic leverages the global state ($s$) during training to overcome partial observability and facilitate effective coordination.
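As a minimal sketch of the weighted team reward and the discounted return defined above, the following Python snippet may help make the trade-offs concrete. The weight values, the name `gamma_w` (used only to keep the energy weight apart from the discount factor), and the assumption that the safety and energy terms are non-positive penalties are illustrative and are not taken from the experiments.

```python
from dataclasses import dataclass


@dataclass
class RewardWeights:
    """Hypothetical weights for the three reward terms (alpha, beta, gamma in the text)."""
    alpha: float = 1.0    # weight on task progress
    beta: float = 0.5     # weight on the safety term
    gamma_w: float = 0.1  # weight on the energy term (renamed to avoid the discount factor)


def team_reward(r_task, r_safe, r_energy, w):
    """Weighted sum of the three terms; r_safe and r_energy are assumed to be <= 0."""
    return w.alpha * r_task + w.beta * r_safe + w.gamma_w * r_energy


def discounted_return(rewards, discount):
    """Cumulative return G = sum_k discount**k * rewards[k], accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + discount * g
    return g


if __name__ == "__main__":
    w = RewardWeights()
    print(team_reward(1.0, -1.0, -1.0, w))
    print(discounted_return([1.0, 1.0, 1.0], 0.5))
```

Raising `beta` in this sketch makes every unit of safety penalty cost more relative to task progress, which is the cautious, risk-averse regime described in the reward definition.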

3.2. Adversarial Multi-Agent Reinforcement Learning (Adversarial MARL)

Adversarial multi-agent reinforcement learning focuses on environments with strategic adversaries. Its core challenge stems from the non-stationarity of the environment: because the adversary's strategy $\pi_{adv}$ changes dynamically, the state transition function $P$ and the reward function $R$ effectively become the time-varying functions $P_t$ and $R_t$. This invalidates the Markov assumption of traditional single-agent reinforcement learning and requires the algorithm to adapt to the opponent's behavior online. To address this challenge, Centralized Training with Decentralized Execution (CTDE) has become the mainstream paradigm: in the training stage, the centralized critic accesses global information (such as the joint state $s$ and joint action $a$) to learn the team's optimal strategy, while in the execution stage each agent relies only on its local observation $o_i$ for independent decision-making. This not only alleviates non-stationarity but also meets the strict real-time and robustness requirements of the battlefield environment. We design a novel adversarial meta-learning architecture to address the above challenges, as shown in Figure 2 and Figure 3. This architecture systematically addresses strategy learning in non-stationary environments by explicitly modeling and adapting to the opponent's strategy and by stabilizing the training process with curriculum learning.

The architecture consists of three main parts: (1) the opponent encoder, which extracts the tactical characteristics of the enemy from its historical moves; (2) the base policy, composed of a GNN and a transformer, which generates the specific actions of our agent; and (3) the meta-regulator, which receives the tactical characteristics of the opponent and generates a meta-signal to dynamically adjust the behavior of the underlying policy network. The entire training process is guided by a curriculum learning scheduler, which ensures the robustness and generalization of the strategy by progressively increasing the opponent's difficulty.

The core idea of this architecture is to decouple the two processes of “identifying adversaries” and “responding”. With the meta-regulator, our agents can adapt online to the opponent’s strategy changes, rather than just reacting to their current moves.

Under the CTDE framework, value function decomposition is a key technique for multi-agent credit assignment. Traditional methods such as QMIX decompose the joint value function $Q_{tot}$ into individual value functions $Q_i$ that satisfy the monotonicity relationship $\partial Q_{tot} / \partial Q_i \geq 0$ (that is, $Q_{tot} = g(Q_1, \dots, Q_n)$, where $g$ is a monotonic function). However, this constraint severely limits the expressiveness of the learned strategy in asymmetric adversarial scenarios. The main issue is its inability to effectively model the complex, non-linear reward structures of competitive games, such as the trade-offs between offensive and defensive objectives. Additionally, it struggles to capture sophisticated dynamic coordination patterns, like the collaboration between decoy tactics and the primary attacking force. This inflexibility can result in suboptimal or even failed strategies when confronted with intelligent and adaptive opponents.
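To illustrate the monotonicity constraint discussed above, here is a deliberately simplified sketch. It uses a single linear mixing layer with non-negative weights, whereas QMIX itself uses a state-conditioned mixing network; the function name `monotonic_mix` and the numeric values are hypothetical.

```python
def monotonic_mix(agent_qs, weights, bias=0.0):
    """Mix per-agent Q-values into a joint value using non-negative weights.

    Taking abs() of each weight guarantees that the joint value never decreases
    when any single agent's Q-value increases (the monotonicity constraint).
    """
    if len(agent_qs) != len(weights):
        raise ValueError("one mixing weight per agent is required")
    return sum(abs(w) * q for w, q in zip(weights, agent_qs)) + bias


if __name__ == "__main__":
    qs = [1.0, -2.0, 0.5]
    ws = [0.3, -1.2, 2.0]  # signs are discarded by abs()
    print(monotonic_mix(qs, ws))
```

Because every weight is forced to be non-negative, such a mixer cannot represent a situation in which one agent deliberately accepting a lower individual value (for example, a decoy) raises the team value, which is the kind of limitation the paragraph above describes.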

To this end, Adv-TransAC introduces a transformer-enhanced adversarial learning mechanism designed to break through the above limitations. This mechanism is founded on three key principles:

Flexible Credit Assignment: It utilizes multi-head self-attention within the critic network to dynamically model the dependencies between agents. This approach replaces the rigid monotonic constraints of QMIX-style methods, enabling more flexible and expressive credit assignment.

Adaptive Opponent Modeling: We design an adversarial meta-learning architecture where a transformer encoder processes an opponent’s historical behavior sequence to extract a latent representation of its tactical features, $Z_{adv}$. Our agent’s policy is then conditioned on these features (that is, it takes $Z_{adv}$ as an additional input), facilitating rapid adaptation to an adversary’s changing tactics.

Progressive Training via Curriculum Learning: The framework incorporates adversarial curriculum learning, which gradually transitions the training from simple, rule-based opponents to more sophisticated, learning-based adversaries. This staged approach ensures stable convergence and fosters robust and generalizable policy development.

This framework treats the diversity of opponent strategies as a training resource to enhance our agents’ robustness, fundamentally addressing the policy degradation problem caused by a non-stationary environment.

3.3. Transformer Architecture

As a deep learning model based on the self-attention mechanism, the transformer was originally designed for natural language processing, and its strong sequence modeling capabilities make it a core tool for relieving the bottleneck of multi-modal time-series data processing across multiple unmanned vehicles. This architecture removes the sequential-dependence limitation of traditional recurrent neural networks (RNNs) and achieves efficient training through parallel computation, making it especially suitable for the real-time fusion of asynchronous data streams such as radar point clouds and AIS trajectories in the marine environment (as described for the pulsed spatiotemporal attention module in Section 2.2). The self-attention layer, the core computing unit, maps the input sequence $X$ into three matrices, Query, Key, and Value: $Q = XW^{Q}$, $K = XW^{K}$, $V = XW^{V}$, where $W^{Q}$, $W^{K}$, and $W^{V}$ are learnable parameters. Attention weights are calculated by the scaled dot product:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $d_k$ is the dimension of the key vectors.

This mechanism enables the model to assess the importance of different elements within a sequence dynamically. For example, it can learn to concentrate on a sudden maneuver by an opponent, which is essential for accurate state estimation and prediction.
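The scaled dot-product attention described above can be sketched in a few lines of dependency-free Python. This is an illustration of the standard mechanism only: it operates on already-projected query, key, and value vectors, and the function names are hypothetical rather than part of the Adv-TransAC implementation.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors.

    Each query is compared with every key via a dot product scaled by
    sqrt(d_k); the softmax of those scores weights the value vectors.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Two timesteps with identical keys receive equal attention.
    out, w = scaled_dot_product_attention([[1.0, 0.0]],
                                          [[1.0, 0.0], [1.0, 0.0]],
                                          [[2.0], [4.0]])
    print(out, w)
```

A key that aligns more strongly with the query (for example, a timestep containing a sudden opponent maneuver) receives a larger weight, so its value vector dominates the output.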

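Multi-head attention is used to capture a range of perspectives from the data. This involves executing multiple parallel self-attention operations, known as “heads,” and combining their outputs: $\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)W^{O}$, where $W^{O}$ is a learnable output projection.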

This structure is designed to improve heterogeneous data fusion by allowing different attention heads to focus on various aspects of the multi-modal input, such as coordinating between optical image details, radar range information, and AIS heading data. In spatiotemporal data processing, positional encoding is crucial. Learnable 2D positional encodings are employed in rasterized environments to inject spatial coordinate information.

This is crucial for maintaining spatiotemporal relationships within the input data, addressing a significant limitation of simple feature concatenation, which loses this vital context.

By stacking these encoder blocks, Adv-TransAC can effectively model complex, long-range dependencies, such as recognizing multi-step tactical patterns in an opponent’s behavior. We utilize a lightweight design to ensure real-time performance on resource-constrained shipboard hardware. This approach involves limiting the number of transformer layers and hidden dimensions, implementing parameter sharing across actor networks, and exploring sparse attention mechanisms that concentrate computation on high-relevance areas of the state space. This design philosophy aims to balance high performance and computational efficiency.

3.4. Adv-TransAC Algorithm Explained

3.4.1. Network Structure

The Adv-TransAC framework is structured around three core components designed to learn and execute advanced control strategies: a multi-modal fusion encoder, decentralized control policy networks (actors) for execution, and a centralized critic for training. This architecture synergistically processes rich sensory information and the complex relational dynamics of the entire multi-agent system to inform the control decisions.

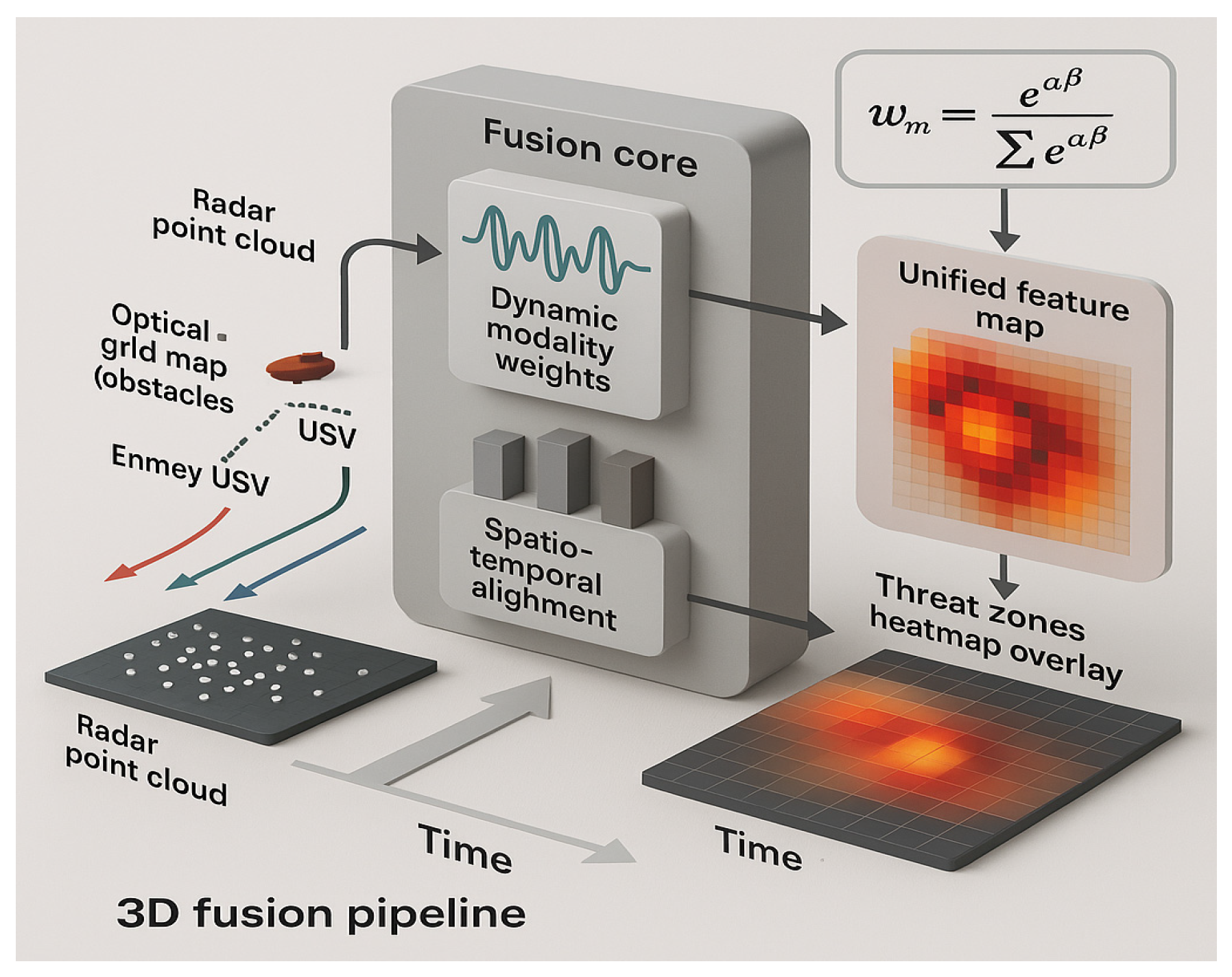

Each agent $i$ must effectively fuse information from a set of heterogeneous sensors to achieve robust situational awareness. At each timestep $t$, the agent receives a multi-modal observation $o_i^{t}$. This raw data is processed through the pipeline illustrated in Figure 4.

Raw data from multiple sensors (e.g., radar, optical) is first encoded into feature embeddings. A dynamic attention module then computes modality weights, which produces a unified feature vector as input for the actor network.

The fusion process involves three key steps:

Modality-Specific Encoding: Each raw observation $o_{i,m}^{t}$ from sensor modality $m$ is passed through a dedicated encoder $f_m$ tailored to its data type (e.g., a CNN for optical images, an MLP for radar tracks) to produce a modality-specific feature embedding $e_{i,m}^{t}$ of dimension $d$.

Dynamic Modality Attention: To adaptively focus on the most relevant sensors, an attention mechanism computes modality weights $w_{i,m}^{t}$. These weights are generated based on the concatenated feature embeddings, allowing the agent to prioritize information dynamically (e.g., trusting radar at long range and optical sensors at close range).

Fused Representation: The final fused observation embedding $z_i^{t} = \sum_{m} w_{i,m}^{t}\, e_{i,m}^{t}$ is the weighted sum of the modality-specific embeddings, creating a single, information-rich vector for that timestep.
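The three fusion steps above can be sketched as follows. This is an illustrative simplification: the modality embeddings are given directly, and the relevance scores that the attention module would compute from the concatenated embeddings are passed in as plain numbers. The function name `fuse_modalities` and the sensor names are hypothetical.

```python
import math


def fuse_modalities(embeddings, scores):
    """Fuse per-modality embeddings into one vector via softmax attention weights.

    embeddings: dict mapping modality name -> feature vector (all the same length)
    scores:     dict mapping modality name -> relevance score (stand-in for the
                output of the learned attention module)
    Returns (fused_vector, weights).
    """
    names = list(embeddings)
    top = max(scores[n] for n in names)
    exps = {n: math.exp(scores[n] - top) for n in names}
    total = sum(exps.values())
    weights = {n: exps[n] / total for n in names}
    dim = len(embeddings[names[0]])
    fused = [sum(weights[n] * embeddings[n][c] for n in names) for c in range(dim)]
    return fused, weights


if __name__ == "__main__":
    emb = {"radar": [1.0, 0.0], "optical": [0.0, 1.0]}
    # A higher radar score (e.g., a long-range target) shifts the fused vector toward radar.
    print(fuse_modalities(emb, {"radar": 2.0, "optical": 0.0}))
```

Because the weights come from a softmax, they are positive and sum to one, so the fused vector is always a convex combination of the modality embeddings.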

Decentralized Control Policy (actor): The actor network, $\pi_{\theta}$, represents each agent’s decentralized control policy. It is designed to be lightweight for onboard execution, using a transformer to process the temporal context of local observations and output a specific control action. To improve learning efficiency, the parameters of this control policy network are shared among all friendly agents.

Input Construction: The actor’s input is a sequence of the most recent fused observation embeddings.

Temporal Feature Extraction: This sequence, augmented with positional encodings (PEs) to preserve order, is processed by a lightweight transformer encoder. The self-attention mechanism allows the actor to capture long-term dependencies in its observation history, producing a context-aware hidden state $h_i$.

Action Output: The hidden state $h_i$ is then passed to a final MLP that parameterizes the control policy, from which an action $a_i$ is sampled.

Centralized Critic for Strategy Evaluation: The centralized critic, $Q_{\phi}$, estimates the joint action–value function, which serves as a metric for evaluating the long-term performance of a given joint control strategy. It is the cornerstone of our approach to sophisticated credit assignment.

To this end, it employs a novel hybrid architecture that leverages Graph Attention Networks (GATs) and transformers. This hybrid design allows the critic to form a comprehensive understanding of the spatiotemporal dynamics of the multi-agent interaction, enabling it to accurately assess the quality of coordinated actions and guide the policy networks towards optimal cooperative control strategies.

Spatial Interaction Encoding with GAT: At each timestep $t$, we model the battlefield as a dynamic graph $G_t$, where agents are nodes. The initial node features are the hidden states $h_i$ produced by the respective actor networks, which encapsulate each agent’s local observation history. To process this graphical structure, we apply a Graph Attention Network (GAT) [18].

The core function of the GAT is to compute updated node embeddings, $h'_i$, by aggregating information from neighboring nodes $j \in N(i)$. This aggregation is not a simple averaging but a weighted sum, where the weights are determined by an attention mechanism. The update rule for each node $i$ is formally defined as

$$h'_i = \sigma\left(\sum_{j \in N(i)} \alpha_{ij} W h_j\right)$$

$h_j$: This is the feature vector (hidden state from the actor) of a neighboring node $j$.

$W$: This is a learnable weight matrix that applies a linear transformation to the feature vectors of all nodes. We use this transformation $W h_j$ to project the features into a higher-level feature space, which increases the model’s expressive power. Without it, the layer could only form attention-weighted combinations of the raw input features.

$\alpha_{ij}$: These are the normalized attention coefficients, which represent the importance of node $j$’s information to node $i$. They are calculated based on the features of both nodes, ensuring that the aggregation is context-dependent:

$$\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in N(i)} \exp(e_{ik})}$$

where $e_{ij}$ is an attention score computed by a compatibility function (typically a single-layer feed-forward network) on the transformed features $W h_i$ and $W h_j$.

$\sigma$: This is a non-linear activation function (e.g., LeakyReLU), applied after the weighted aggregation.

We chose this functional form because it is the standard and proven mechanism of GATs [18], which offers a crucial advantage over simpler graph convolutions. Instead of using fixed, uniform weights for all neighbors, the attention mechanism ($\alpha_{ij}$) allows each agent to dynamically and selectively weigh the importance of its neighbors (both allies and enemies). This is critical for encoding complex, instantaneous tactical relationships, such as identifying a key threat to focus on or recognizing a supportive maneuver from an ally. This process transforms the initial hidden states $h_i$ into contextually aware spatial embeddings $h'_i$, ready for temporal analysis (see Figure 5).

The battlefield state is modeled as a graph where agents are nodes, and their hidden states (hi) from the actor networks serve as initial node features. The Graph Attention Network (GAT) updates each node’s representation by aggregating information from its neighbors. The edge thickness visualizes the learned attention weights (αij), indicating which neighbors are most influential. The update rule shown, h′i = Σ αij (W hj), is the core GAT aggregation step, where W is a learnable linear transformation matrix and h′i is the resulting context-aware node embedding. This process captures the immediate tactical relationships between agents.

Temporal Evolution Modeling with Transformer: The sequence of these GAT-enhanced spatial snapshots over the most recent timesteps is then fed into a deep transformer encoder. The role of this transformer is to analyze the evolution of the tactical landscape over time. It can identify complex temporal patterns, such as an opponent’s multi-step feint maneuver or the gradual tightening of a defensive perimeter.

Q-Value Output: The final output from the transformer, which encapsulates both spatial and temporal dependencies, is aggregated and passed through an MLP, along with the joint action $a$, to produce a single, highly informed Q-value.

By explicitly decoupling the modeling of spatial (GAT) and temporal (transformer) dependencies, our critic can form a more comprehensive and accurate assessment of joint actions, leading to superior multi-agent coordination.
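For readers who want to trace the spatial half of the critic step by step, the following dependency-free sketch implements one single-head GAT aggregation, h′i = σ(Σ αij W hj), with LeakyReLU used both for the attention scores and as σ. The attention vector `a`, the helper names, and the toy inputs are assumptions for illustration; the multi-head variant and the temporal transformer stage are omitted.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(h, neighbors, W, a):
    """One single-head GAT aggregation step.

    h:         list of node feature vectors (one per agent)
    neighbors: dict node index -> list of neighbor indices (include i for a self-loop)
    W:         shared linear transformation (list of rows)
    a:         attention vector of length 2 * output_dim, scoring [W h_i ; W h_j]
    Returns (updated_embeddings, attention_coefficients), both keyed by node index.
    """
    Wh = [matvec(W, hi) for hi in h]
    out, alphas = {}, {}
    for i, nbrs in neighbors.items():
        # Unnormalized scores e_ij from the concatenated transformed features.
        e = [leaky_relu(sum(ak * x for ak, x in zip(a, Wh[i] + Wh[j]))) for j in nbrs]
        top = max(e)
        exps = [math.exp(x - top) for x in e]
        total = sum(exps)
        alpha = [x / total for x in exps]  # softmax over the neighborhood
        agg = [sum(al * Wh[j][c] for al, j in zip(alpha, nbrs))
               for c in range(len(Wh[i]))]
        out[i] = [leaky_relu(x) for x in agg]
        alphas[i] = dict(zip(nbrs, alpha))
    return out, alphas


if __name__ == "__main__":
    h = [[1.0, 0.0], [0.0, 1.0]]
    W = [[1.0, 0.0], [0.0, 1.0]]
    nbrs = {0: [0, 1], 1: [0, 1]}
    print(gat_layer(h, nbrs, W, [0.0, 0.0, 0.0, 0.0]))
```

With a zero attention vector every neighbor is weighted equally, which reduces the layer to mean aggregation; a learned `a` is what lets an agent emphasize, for example, a single threatening neighbor.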

3.4.2. Training Process and Optimization

The Adv-TransAC framework is trained using the methods detailed below. The core learning mechanism is based on multi-agent actor–critic, enhanced with specific techniques to handle the adversarial nature of the environment.

During the data collection phase, each agent $i$ generates an action $a_i$ based on its local actor network $\pi_{\theta}$. The environment returns a joint reward $r$ and the next joint observation $o'$. The resulting transition tuples are stored in a shared, finite-capacity replay buffer $D$, which is designed to capture a diverse range of complex adversarial patterns.

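Critic Optimization: The critic network is optimized using Double Q-learning [19] to mitigate overestimation bias. Target networks ($Q_{\phi'}$ and $\pi_{\theta'}$) are used for stability, with their parameters periodically updated from the online networks. The Temporal Difference (TD) target $y$ is calculated as

$$y = r + \gamma\, Q_{\phi'}(s', a')$$

where $\gamma$ is the discount factor and $a'$ is the next joint action selected by the target actor $\pi_{\theta'}$. The critic is updated by minimizing the mean squared error loss:

$$L(\phi) = \mathbb{E}_{(s, a, r, s') \sim D}\left[\left(y - Q_{\phi}(s, a)\right)^{2}\right]$$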
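As a minimal numerical sketch of the critic update described here, the snippet below computes a one-step TD target from a target-network estimate and the mean squared error over a small batch. The `done` flag for terminal transitions is an assumption added for completeness and is not mentioned in the text; the function names are hypothetical.

```python
def td_target(reward, discount, next_q_target, done=False):
    """One-step TD target: r + discount * Q_target(s', a'), or just r at episode end."""
    return reward + (0.0 if done else discount * next_q_target)


def mse_loss(q_values, targets):
    """Mean squared error between critic estimates and their TD targets."""
    if len(q_values) != len(targets):
        raise ValueError("q_values and targets must have the same length")
    return sum((y - q) ** 2 for q, y in zip(q_values, targets)) / len(q_values)


if __name__ == "__main__":
    y = td_target(reward=1.0, discount=0.5, next_q_target=2.0)
    print(y, mse_loss([1.0, 3.0], [y, 1.0]))
```

In a full implementation the targets would be treated as constants during backpropagation, so that only the online critic parameters receive gradients.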

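Control Policy Optimization (Actor): The actor network (policy network) is updated using the policy gradient theorem. To reduce variance, the advantage function $A(s, a)$ is calculated by the critic, where $A(s, a) = Q_{\phi}(s, a) - V(s)$ and $V(s)$ is the state-value baseline. To encourage exploration and prevent premature policy convergence, an entropy regularization [22] term $\mathcal{H}(\pi_{\theta})$ is added to the objective function, giving the gradient:

$$\nabla_{\theta} J(\theta) = \mathbb{E}\left[\nabla_{\theta} \log \pi_{\theta}(a_i \mid \tau_i)\, A(s, a)\right] + \lambda\, \nabla_{\theta} \mathcal{H}\left(\pi_{\theta}(\cdot \mid \tau_i)\right)$$

where $\tau_i$ is agent $i$’s local history and $\lambda$ is the entropy coefficient.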

Adversarial Meta-Learning (Core Innovation): This mechanism enables agents to adapt rapidly to unseen opponent strategies and consists of two key components:

1. Opponent Encoder: A transformer encoder processes the opponent’s historical behavior sequence to extract a latent embedding of its tactical features, $Z_{adv}$.

2. Meta-Policy Regulator: The agent’s control policy is then conditioned on this embedding. Passing $Z_{adv}$ through a hypernetwork generates adaptive modifications for the policy network’s (actor’s) parameters, allowing for real-time adaptation.

This meta-signal $\Delta\theta$ is applied to the actor’s parameters $\theta$ to produce the adapted parameters $\theta_{new}$. Specifically, the hypernetwork-generated $\Delta\theta$ modifies a subset of the actor’s weights (for instance, the final two layers) through an additive update: $\theta_{new} = \theta + \alpha \Delta\theta$, where $\alpha$ is a small, fixed scaling factor (e.g., 0.1) that controls the magnitude of the adaptation. This allows the agent to make subtle but effective adjustments to its base policy in response to the opponent’s behavior, rather than completely overwriting its learned knowledge.
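The additive adaptation θ_new = θ + α Δθ can be sketched as below. The hypernetwork here is a toy single linear map from the opponent embedding to Δθ, chosen only to keep the example self-contained; the real hypernetwork architecture is not specified in this section, and the names `linear_hypernetwork` and `adapt_parameters` are hypothetical.

```python
def linear_hypernetwork(z_adv, H):
    """Toy hypernetwork: delta_theta = H @ z_adv (one row of H per adapted parameter)."""
    return [sum(h * z for h, z in zip(row, z_adv)) for row in H]


def adapt_parameters(theta, delta_theta, scale=0.1):
    """Return theta_new = theta + scale * delta_theta for the adapted subset of weights."""
    if len(theta) != len(delta_theta):
        raise ValueError("theta and delta_theta must have the same length")
    return [t + scale * d for t, d in zip(theta, delta_theta)]


if __name__ == "__main__":
    theta = [1.0, 2.0]                # flattened weights of the adapted layers
    z_adv = [1.0, 0.0]                # opponent embedding from the encoder
    H = [[2.0, 0.0], [0.0, 3.0]]      # hypothetical hypernetwork weights
    print(adapt_parameters(theta, linear_hypernetwork(z_adv, H)))
```

Keeping `scale` small means the adapted policy stays close to the base policy, which matches the stated intent of adjusting rather than overwriting learned behavior.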

Adversarial Curriculum Learning: To ensure stable training, we employ an adversarial curriculum with stages of increasing difficulty:

Initial Phase: Agents train against simple, rule-based opponents (e.g., “Straight Charge,” “Circle Patrol”).

Intermediate Phase: Agents face more competent opponents pre-trained with standard RL algorithms like DQN.

Advanced Phase: Agents train against our meta-adaptive opponent, which dynamically alters its strategy.

Progression through the curriculum is automated: agents advance to the next stage once their win rate (P_win) against the current opponent type exceeds a predefined threshold. Based on preliminary experiments, this threshold was set to 75% to ensure mastery at each level before facing a more complex adversary.
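The automated progression rule can be sketched as a small scheduler. The 75% threshold comes from the text; the sliding evaluation window, the stage labels, and the class name `CurriculumScheduler` are assumptions made for illustration.

```python
class CurriculumScheduler:
    """Advance through opponent stages once the recent win rate exceeds a threshold."""

    STAGES = ("rule_based", "pretrained_rl", "meta_adaptive")

    def __init__(self, threshold=0.75, window=100):
        self.threshold = threshold  # win rate that must be exceeded to advance
        self.window = window        # number of recent episodes evaluated (assumed)
        self.stage = 0
        self.results = []

    def win_rate(self):
        return sum(self.results) / len(self.results) if self.results else 0.0

    def record(self, won):
        """Record one episode outcome and advance the stage if mastery is reached."""
        self.results.append(bool(won))
        self.results = self.results[-self.window:]
        window_full = len(self.results) == self.window
        not_final = self.stage < len(self.STAGES) - 1
        if window_full and not_final and self.win_rate() > self.threshold:
            self.stage += 1
            self.results = []  # start a fresh evaluation against the new opponent

    @property
    def opponent(self):
        return self.STAGES[self.stage]


if __name__ == "__main__":
    scheduler = CurriculumScheduler(threshold=0.75, window=4)
    for outcome in (True, True, True, True):
        scheduler.record(outcome)
    print(scheduler.opponent)
```

Clearing the history on each promotion prevents wins against an easier opponent from counting toward mastery of the next stage.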

3.4.3. Algorithmic Pseudocode

The overall algorithm flow of Adv-TransAC can be summarized as follows (Algorithm 1):

| Algorithm 1: Adv-TransAC Training Procedure |

1: Initialize the policy network (actor) $\pi_{\theta}$ and the critic network $Q_{\phi}$ for each agent

2: Initialize the target networks $\pi_{\theta'}$ and $Q_{\phi'}$, and let $\theta' \leftarrow \theta$, $\phi' \leftarrow \phi$

3: Initialize the experience replay buffer D and the adversarial curriculum learning scheduler C

4: for episode = 1 to M do

5: Reset the environment to get the initial joint observation

6: Select the opponent’s strategy according to curriculum scheduler C

7: for t = 1 to T do

8: // 1. Decentralized execution

9: for each agent i = 1 to N do

10: Sample an action $a_i$ from the actor $\pi_{\theta}$ given the agent’s local observation history

11: end for

12: Execute the joint action $a$, and receive the joint reward $r$ and the next joint observation

13: Store the experience tuple in replay buffer D

14: // 2. Centralized training

15: Randomly sample a mini-batch B of experience from D

16: for each agent i = 1 to N do

17: // Update the critic network

18: Use the target actor network $\pi_{\theta'}$ to compute the action $a'$ for the next state

19: Compute the target Q-value: $y = r + \gamma\, Q_{\phi'}(s', a')$

20: Update the critic by minimizing the loss $L(\phi) = \mathbb{E}_{B}\left[(y - Q_{\phi}(s, a))^{2}\right]$

21: // Update the control policy network (actor)

22: Compute the policy gradient $\nabla_{\theta} J(\theta)$

23: Update the actor via the policy gradient

24: end for

25: // Soft update the target networks

26: Move $\theta'$ toward $\theta$ and $\phi'$ toward $\phi$ with a soft update

27: end for

28: Compute the win rate of this round

29: Update the difficulty of curriculum scheduler C according to the win rate of this round

30: end for |

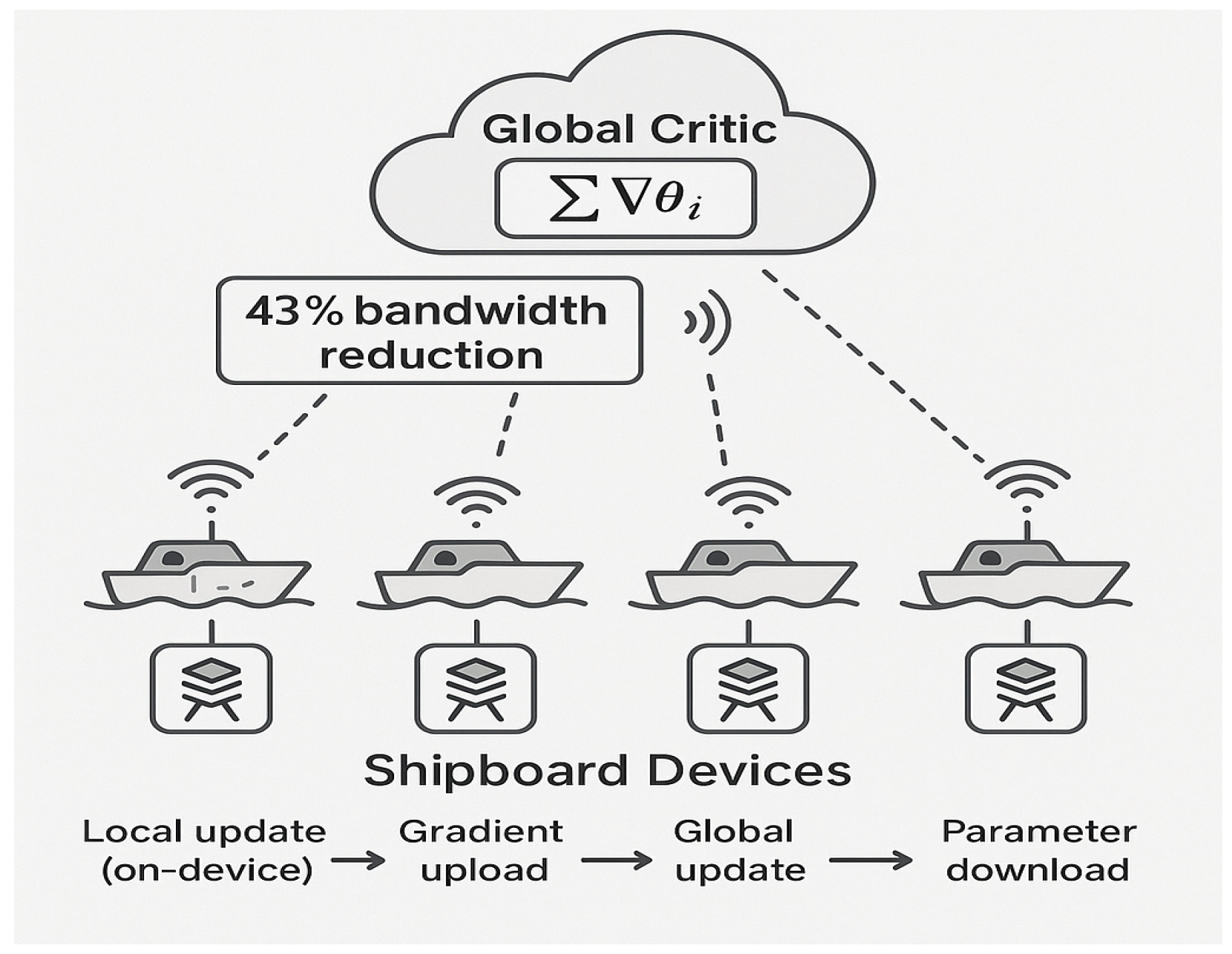

For our framework to be efficiently deployed in a real marine environment with limited communication, we adopted a distributed optimization architecture based on federated learning, as shown in Figure 6.

In this architecture, the onboard computing device of each USV is responsible for calculating the local update of its actor network based on local observations. Subsequently, only the low-dimensional gradient information is uploaded to a central server (or a designated leader USV), rather than the high-dimensional raw sensor data. The central server aggregates all gradients (global update) and updates the global critic and actor parameters. Finally, the updated model parameters are distributed back to the individual USVs (parameter download).
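The upload, aggregate, and download cycle described above can be sketched as below. This is a simplified illustration under stated assumptions: gradients are flat lists of floats, all USVs are weighted equally, and the server applies a plain gradient-descent step rather than the Adam optimizer mentioned in Section 4.1.2. The function names are hypothetical.

```python
def aggregate_gradients(local_grads):
    """Average the per-parameter gradients uploaded by each USV (equal weighting assumed)."""
    n_agents = len(local_grads)
    n_params = len(local_grads[0])
    if any(len(g) != n_params for g in local_grads):
        raise ValueError("all agents must upload gradients of the same length")
    return [sum(g[k] for g in local_grads) / n_agents for k in range(n_params)]


def apply_update(params, global_grad, lr=1e-4):
    """Server-side update; plain gradient descent stands in for the real optimizer."""
    return [p - lr * g for p, g in zip(params, global_grad)]


def federated_round(global_params, local_grads, lr=1e-4):
    """One round: aggregate uploaded gradients, update, and return parameters to broadcast."""
    return apply_update(global_params, aggregate_gradients(local_grads), lr)
```

Only the gradient lists cross the network in this scheme; the raw observations used to compute them stay on each vehicle.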

Furthermore, this federated communication protocol can be extended to incorporate a decentralized safety mechanism. Beyond transmitting gradients for learning, the protocol can be designed to handle emergency broadcasts. For instance, any USV can monitor the integrity of the high-value unit (HVU). Alongside federated updates, a USV could transmit a high-priority collision avoidance or defensive alert if the rate of change in HVU damage (∂HVU_Damage/∂t) exceeds a critical safety threshold. This would trigger a swarm-wide emergency override that forces all agents into a defensive posture, thus integrating a measurable, ethics-driven fail-safe directly into the operational practice of the swarm.
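The alert condition can be illustrated with a finite-difference estimate of the damage rate. This is a sketch of the proposed extension only: the function names and the threshold value used in the example are hypothetical, and the text does not specify how the derivative would be estimated in practice.

```python
def damage_rate(prev_damage, curr_damage, dt):
    """Finite-difference estimate of the HVU damage rate over an interval of dt seconds."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return (curr_damage - prev_damage) / dt


def should_broadcast_alert(prev_damage, curr_damage, dt, rate_threshold):
    """True when the estimated damage rate exceeds the safety threshold."""
    return damage_rate(prev_damage, curr_damage, dt) > rate_threshold
```

For example, with a hypothetical threshold of 2.5 damage units per second, a rise from 10 to 16 over 2 s (3 units per second) would trigger the alert, while a rise from 10 to 12 would not.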

This paradigm dramatically reduces the required network bandwidth. As our experiments show, this method can achieve bandwidth savings of up to 43% compared to methods that transmit raw experience data. This addresses the core problem of unstable communication among multi-USV clusters in real sea conditions, ensures the training efficiency and scalability of the entire system, and meets the practical needs of efficient collaborative learning on edge devices.

4. Experiments

To rigorously evaluate the performance of our proposed Adv-TransAC framework, we designed a comprehensive suite of simulation experiments. This section details the experimental environment, the tasks, the algorithms compared, the evaluation metrics, and the specific implementation details, providing a complete blueprint for reproducibility.

4.1. Experimental Setup

In a 10 km × 10 km area with multiple obstacles, our USV formation (blue) must protect a high-value unit (HVU, green) as it navigates along a predetermined dashed path. The escorts must counter interception attempts by enemy USVs (red) controlled by a learned strategy. Our USVs are equipped with different types of sensors (e.g., optical sensors with a limited range), and the entire system must meet the 200 ms real-time requirement of the ISO 23860 [32] standard.

Environment and Tasks: We conducted experiments in a custom 2D mesh marine environment based on the open-source MARL environment library PettingZoo [22]. The environment simulates basic USV kinematics.

Mission Scenario: We designed an offensive and defensive game mission called “Cooperative Escort and Defense”.

Objective: N of our escort USVs (controlled by the method under evaluation) must protect a high-value unit (HVU) as it passes through a designated area filled with obstacles.

Opponent: Enemy attacking USVs attempt to break through the escort formation and damage the HVU.

Multi-Modal Observation: Each of our USVs is equipped with two types of simulated sensors:

Radar: It has a wide coverage area but can only provide the approximate position and velocity of enemy targets and has low resolution.

Optical Sensors: Limited coverage, but capable of providing high-resolution, accurate information such as target type and heading within line-of-sight.

Success/Failure Condition: The task succeeds if the HVU reaches the endpoint within the specified time and its damage is lower than the threshold. Otherwise, the task fails.

Adversary Control Strategy: To fully evaluate the robustness of our algorithm, we designed adversaries employing two distinct types of control strategies.

Rule-Based: Attacking USVs follow a preset, simple attack logic (e.g., Straight Charge or Pack).

Learning-Based: Attacking USVs are controlled by a pre-trained MADDPG strategy that adapts its attack patterns to the behavior of our USVs, making it more adversarial (see Figure 7).

4.1.1. Implementation Details

All experiments were performed using Python 3.8 and PyTorch 1.10. We customized the “waterworld” environment from the PettingZoo 1.15.0 MARL library to simulate our multi-USV attack–defense scenarios. All models were trained on a single NVIDIA GeForce RTX 3090 GPU (NVIDIA, Taipei City, Taiwan) for two million time steps. Performance was evaluated every 10,000 steps, with results averaged over 100 evaluation episodes.

The key hyperparameters for the Adv-TransAC framework are detailed in Table 1. These values were selected based on a combination of common practices in deep reinforcement learning and preliminary experiments aimed at ensuring stable convergence and effective performance.

The number of transformer layers used to encode an opponent’s historical behavior into a latent strategy vector is two. This module is specifically active when facing learning-based opponents to enable adaptive responses.

4.1.2. Justification of Hyperparameter Choices

The selection of these hyperparameters was guided by a core design philosophy: balancing the expressive power of the model with the computational constraints of real-world deployment.

Actor–Critic Asymmetry: A key design choice is the asymmetry between the actor and critic transformer architectures. The actor network, intended for decentralized execution on resource-constrained USV hardware, is deliberately made lighter (3 layers, 256 d_model). This ensures low-latency decision-making. In contrast, the centralized critic, which runs only during offline training, is made significantly deeper and wider (6 layers, 512 d_model). This gives it the representational capacity to accurately model the complex joint state–action space and provide a high-quality credit assignment signal to the actors.

Learning Stability: The learning rate of 1 × 10⁻⁴ is a standard, robust choice for Adam optimizers in similar deep RL tasks, preventing overly aggressive updates that could destabilize training. The soft update rate τ = 0.005 and a large replay buffer (10⁶) are crucial for stabilizing the learning process by decorrelating experiences and providing stable target values, which is especially important in non-stationary multi-agent settings.

Exploration and Adaptation: The entropy regularization coefficient λ = 0.01 provides a small but consistent incentive for the policy to explore, preventing it from collapsing into a deterministic and potentially suboptimal strategy too early. In the adversarial learning module, the meta-learning update rate β = 1 × 10⁻³ is set to be relatively low to ensure that the adaptation to the opponent’s perceived strategy is smooth and gradual, avoiding drastic policy shifts that could be exploited.
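The hyperparameters discussed above can be collected in a single configuration object, and the role of τ is easiest to see in the soft (Polyak) target update itself. In the sketch below, the numerical values are those quoted in the text, while the class name, field names, and the list-of-floats parameter representation are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdvTransACConfig:
    # Values quoted in the text; field names are hypothetical.
    actor_layers: int = 3
    actor_d_model: int = 256
    critic_layers: int = 6
    critic_d_model: int = 512
    opponent_encoder_layers: int = 2
    learning_rate: float = 1e-4
    tau: float = 0.005
    replay_buffer_size: int = 1_000_000
    entropy_coef: float = 0.01
    meta_lr: float = 1e-3


def soft_update(target_params, online_params, tau):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online_params, target_params)]
```

With τ = 0.005 the target network moves only 0.5% of the way toward the online network at each step, which is why it provides slowly varying target values.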

4.2. Baselines

We compare Adv-TransAC with the following mainstream and related MARL algorithms:

QMIX [7]: A classical MARL algorithm based on value function decomposition, representing the value function method.

MADDPG [8]: A widely used multi-agent actor-critic algorithm representing the CTDE paradigm.

GNN-AC (Ablation): This variant of Adv-TransAC removes the transformer module and feeds the graph features extracted by the GNN directly into the MLP layers of the actor and critic. This baseline is used to validate the role of the transformer in processing time series and multi-modal information.

4.3. Evaluation Metrics

We use the following metrics to quantitatively evaluate the performance of each algorithm over 1000 independent test episodes:

Win Rate (%): The primary indicator of overall performance. It measures the percentage of episodes where the HVU successfully reaches its destination without exceeding the damage threshold.

Average HVU Damage: The cumulative damage taken by the HVU, averaged across all test episodes. A lower value indicates a more effective escort strategy.

Synergistic Collision Rate (%): Measures the frequency of collisions between friendly USVs. It is calculated as the number of friendly-on-friendly collision events per 100 timesteps, averaged over the escort phases of all episodes. This metric gauges the internal coordination safety of the learned policy.

Opponent Destruction Rate (%): The percentage of enemy USVs successfully intercepted and neutralized (“destroyed”) by the escorting swarm. This reflects the aggressiveness and tactical efficiency of the strategy.
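The four metrics can be computed from per-episode logs as sketched below. The record layout (`EpisodeResult` and its fields) is hypothetical, and the collision rate is computed as a per-episode rate that is then averaged, which is one reading of the definition above rather than a confirmed detail of the evaluation code.

```python
from dataclasses import dataclass


@dataclass
class EpisodeResult:
    success: bool              # HVU reached the endpoint below the damage threshold
    hvu_damage: float          # cumulative HVU damage in this episode
    friendly_collisions: int   # friendly-on-friendly collision events
    escort_timesteps: int      # length of the escort phase in timesteps
    enemies_destroyed: int
    enemies_total: int


def summarize(episodes):
    """Aggregate win rate, average damage, collision rate, and destruction rate."""
    n = len(episodes)
    collision_rates = [100.0 * e.friendly_collisions / e.escort_timesteps for e in episodes]
    return {
        "win_rate_pct": 100.0 * sum(e.success for e in episodes) / n,
        "avg_hvu_damage": sum(e.hvu_damage for e in episodes) / n,
        "collisions_per_100_steps": sum(collision_rates) / n,
        "destruction_rate_pct": 100.0 * sum(e.enemies_destroyed for e in episodes)
        / sum(e.enemies_total for e in episodes),
    }
```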

4.4. Experimental Results and Analysis

Overall performance comparison:

We evaluated all methods against both types of opponents with N = 4 escort USVs and M = 3 enemy USVs; the experimental results are shown in Table 2.

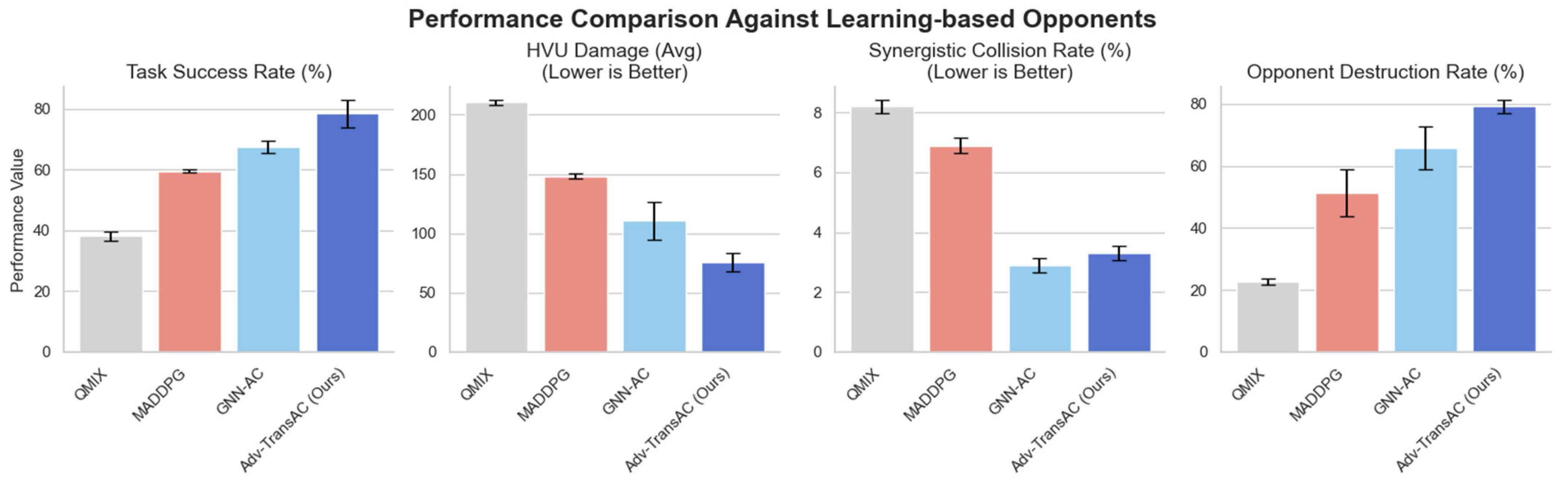

The results presented in Table 2 demonstrate the superior performance of the control strategies learned by our proposed Adv-TransAC framework. In the more challenging scenario against learning-based opponents, the policy learned by Adv-TransAC achieves a task success rate of 78.5%, significantly outperforming all baselines and establishing the effectiveness of our learning approach in complex, dynamic environments.

An interesting insight from the results is the strategic trade-off learned by Adv-TransAC. While it excels in primary mission objectives such as success rate and enemy neutralization, its synergistic collision rate (3.3%) is slightly higher than that of the GNN-AC baseline (2.9%). We interpret this not as a deficiency but as a consequence of a more aggressive cooperative control strategy: Adv-TransAC performs tighter, more closely coordinated maneuvers to ensure mission success, which inherently carries a calculated risk of minor collisions.

From these results, we can draw the following key conclusions:

Superior Performance in Core Metrics: Adv-TransAC achieves state-of-the-art performance in the most critical metrics, including task success and enemy neutralization rates, proving its tactical superiority.

Intelligent Risk-Reward Balancing: The framework demonstrates an advanced ability to balance mission success with operational risk. It opts for more aggressive, high-reward strategies, accepting a marginal increase in collision risk to secure a significant gain in overall mission effectiveness.

Robustness Against Advanced Opponents: While all algorithms’ performance degrades against learning-based opponents, Adv-TransAC shows the most graceful degradation, highlighting its robustness.

Effectiveness of Transformer Architecture: The performance gap between Adv-TransAC and the GNN-AC ablation baseline confirms the critical role of the transformer architecture in capturing complex temporal and inter-agent dependencies.

QMIX, a value-decomposition method, struggles significantly in this complex adversarial task, confirming the need for more expressive actor–critic architectures.

To more fully assess the usefulness of Adv-TransAC, we focused not only on its policy performance but also on its computational efficiency, which is critical for deployment on resource-constrained USV edge devices. We compared the number of model parameters and their average single-step inference time. To simulate real-world decision latency, inference time was measured on an NVIDIA RTX 3090 GPU with a batch size of one. The results are presented in Table 3.

Figure 8 visually summarizes the performance of the final learned strategies against the most challenging, learning-based adversaries. The results confirm that the strategy developed by Adv-TransAC (dark blue) is significantly more robust and effective, establishing a commanding lead across all mission-critical metrics. This highlights the substantial performance gap between our advanced control strategy and conventional MARL baselines like MADDPG and QMIX.

An interesting and critical insight emerges from comparing the synergistic collision rate (Figure 8) between Adv-TransAC and its GNN-AC ablation. While GNN-AC shows a lower collision rate, this is not a sign of superiority but rather a symptom of a more conservative and less effective strategy. Lacking the ability to model long-term temporal dependencies, GNN-AC adopts a simpler policy focused on safe navigation, which compromises its ability to effectively intercept adversaries, as reflected in its lower win rate.

In contrast, Adv-TransAC learns to execute more complex and aggressive cooperative maneuvers, such as tight blocking formations, to ensure mission success. These advanced tactics require closer coordination and inherently carry a slightly higher risk of collision between friendly agents. Therefore, the slightly higher collision rate of Adv-TransAC is a direct consequence of a learned, intelligent risk–reward trade-off: the model accepts a marginal increase in a secondary risk metric to achieve a significant gain in the primary mission objectives of task success and opponent neutralization. This demonstrates a more sophisticated level of tactical reasoning.

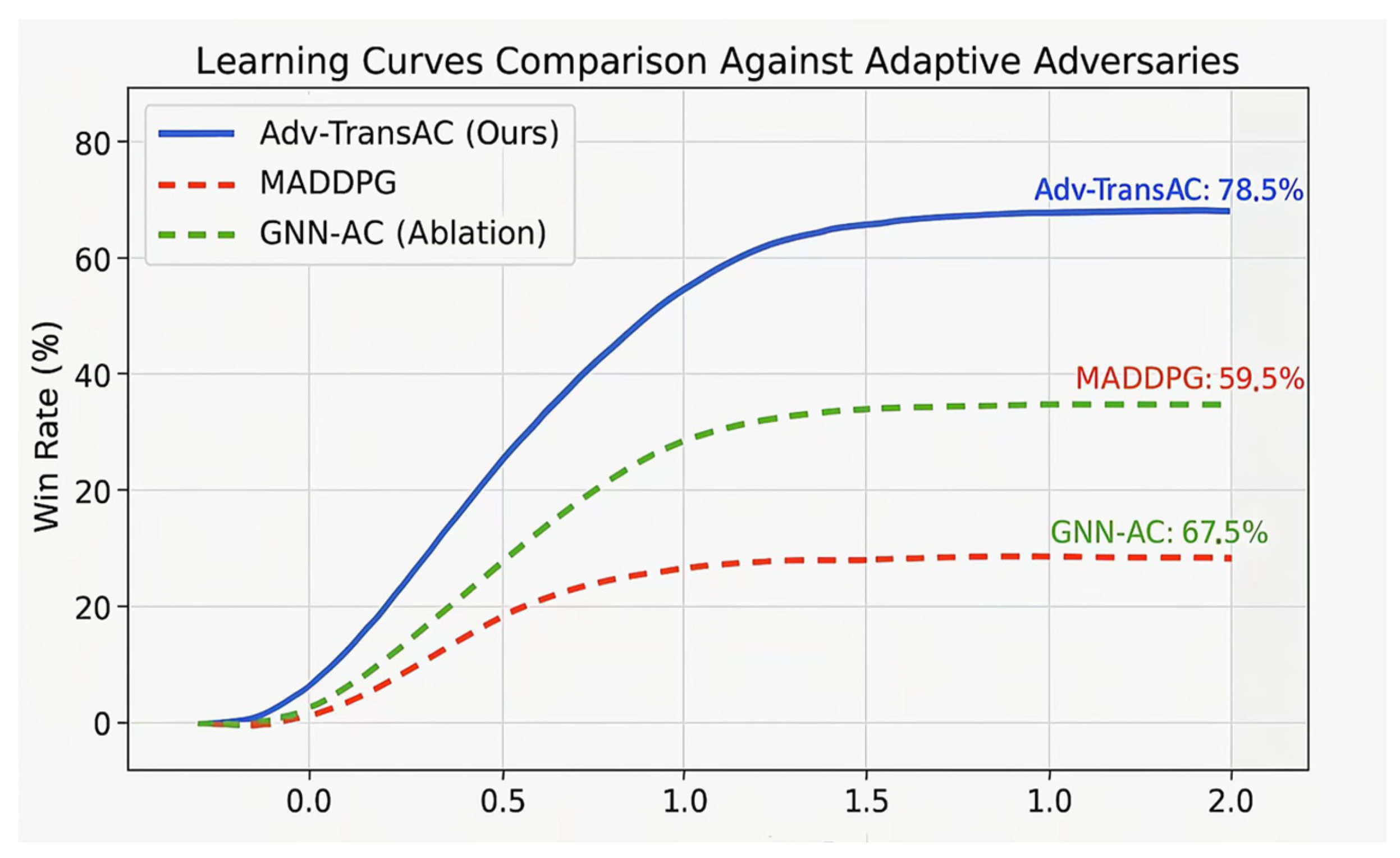

Training Stability and Convergence Analysis:

To further investigate the learning dynamics of our proposed framework, we analyzed the training process itself. Figure 9 plots the win rate over time for our method and key baselines when training against the challenging learning-based opponent. The results reveal several advantages of Adv-TransAC:

Faster Convergence: The learning curve for Adv-TransAC exhibits a significantly steeper initial slope, indicating that it learns an effective control strategy much faster than MADDPG and our GNN-AC ablation. This rapid learning can be attributed to the superior credit assignment enabled by the hybrid GAT-transformer critic.

Superior Stability: The Adv-TransAC curve is notably smoother with less variance compared to the baselines, which show more erratic oscillations. This enhanced stability highlights the effectiveness of our adversarial curriculum learning paradigm, which provides a structured learning path and mitigates the non-stationarity inherent in multi-agent adversarial training.

Higher Asymptotic Performance: Consistent with the results in Table 2, the curve for Adv-TransAC converges to a substantially higher final win rate. This underscores the expressive power of our spatiotemporal architecture in representing and learning more sophisticated and successful cooperative control policies.

In summary, this analysis of the learning process provides strong evidence that Adv-TransAC is not only more effective in its final performance but also more efficient and stable during training.

As can be seen from Table 3, Adv-TransAC, as a transformer-based model, has the highest number of parameters among all compared algorithms. However, this increase in complexity comes with the significant performance payoffs documented in Table 2. Moreover, thanks to our lightweight design choices (e.g., parameter sharing and constrained layer depth), the single-step inference time is only 18.6 milliseconds. To put this figure into a practical engineering context, this latency is less than one-tenth of the 200 ms real-time decision-making deadline stipulated by the ISO 23860 [32] maritime standard, which we identified as a key system requirement in Section 4.1. This result strongly demonstrates the real-world feasibility of our framework. It confirms that we have achieved a successful trade-off between expressive power and computational efficiency, where an acceptable increase in computational overhead yields a huge leap in the quality and robustness of decision-making.

In contrast, the performance of baseline methods degrades significantly against learning-based opponents. For instance, MADDPG’s opponent destruction rate plummets to 22.7% (Table 2), a failure that can be attributed to its inability to discern complex tactical situations. As illustrated by the agent trajectories in Figure 10 (left panel), MADDPG-controlled agents are susceptible to decoy tactics, where a single opponent can lure the entire escort group away from the HVU, leaving it vulnerable. This is because MADDPG’s simple concatenation of observations fails to capture the higher-order spatiotemporal relationships needed to understand such strategies. Similarly, the restrictive nature of QMIX’s value decomposition struggles in this complex adversarial task, confirming the need for more expressive actor–critic architectures.

Furthermore, the learning curve for Adv-TransAC not only demonstrates faster convergence but also stabilizes at a high-performance plateau towards the end of the training. This stabilization is a critical indicator of robust learning and is attributed to two factors. First, it signifies policy convergence; the agent, having been trained through a rigorous adversarial curriculum, has learned a near-optimal policy for the given task distribution, leading to diminishing marginal returns. Second, this plateau represents a practical performance ceiling imposed by the inherent stochasticity and complexity of the adversarial task. Even a perfect strategy cannot guarantee success in every single episode. Therefore, the stabilization of the win rate indicates that our framework has successfully converged and explored the full potential of the learned policy within the constraints of the environment, rather than being an artifact of premature termination of training.

4.5. Ablation Study

We designed a series of detailed ablation experiments to further verify the effectiveness of each core component of the Adv-TransAC framework. We use the full Adv-TransAC model as a benchmark and assess performance changes by individually removing or replacing its key modules. All ablation experiments were conducted in the most challenging scenario, against the learning-based adversary (N = 4, M = 3), to investigate the role of each component in complex dynamic confrontations. The experimental results are shown in Table 4.

We tested the following model variants:

Adv-TransAC (Full Model): The complete framework we propose.

w/o Transformer: Removes the transformer module from the actor and critic; the spatial features extracted by the GNN are fed directly into the Multilayer Perceptron (MLP). This is equivalent to the GNN-AC baseline in Section 4.2 and is used to evaluate the transformer’s central role in temporal modeling.

w/o GAT (transformer-only critic): In this variant, we remove the GAT module from the centralized critic. Instead, we concatenate the hidden states of all agents with their positional encodings and feed this sequence directly into a transformer encoder. This baseline is designed to test whether our proposed “GAT-then-transformer” hybrid architecture is superior to a single, larger spatiotemporal transformer.

w/o Multi-Modal Attention: Remove the multi-modal attention fusion module and concatenate the feature vectors of different sensors (radar, optics) before entering the actor network. This item evaluates the need for a dynamic weighted fusion mechanism.

w/o Adversarial Curriculum: Removes the adversarial curriculum learning mechanism and trains against the final, strongest learning-based opponent throughout the training process. This item is used to evaluate the impact of progressive training strategies on convergence and final performance.

w/o Opponent Encoder: Removes from the actor network the transformer encoder that encodes the opponent’s historical behavior. Without it, our agents cannot explicitly model the opponent’s tactical intent and can only respond passively. This item is used to assess adversarial adaptability.
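The difference between the attention-based fusion of the full model and the plain concatenation used in the w/o Multi-Modal Attention variant can be illustrated with a toy example. The sketch below is not the paper's network: the relevance scores would come from a learned module in practice and are passed in directly here, and all function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def concat_fusion(radar_feat, optical_feat):
    """Ablation variant: sensor features are simply concatenated."""
    return list(radar_feat) + list(optical_feat)


def attention_fusion(radar_feat, optical_feat, radar_score, optical_score):
    """Weighted sum of same-sized sensor features, with softmax weights over modalities."""
    w_radar, w_optical = softmax([radar_score, optical_score])
    return [w_radar * r + w_optical * o for r, o in zip(radar_feat, optical_feat)]
```

When the optical score dominates (for instance at close range), the fused vector is almost entirely the optical feature, whereas concatenation always passes both features through unchanged and leaves the weighting to later layers.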

From the ablation results in Table 4, we can draw the following conclusions:

Critical Synergy of the Hybrid GAT-Transformer Architecture: The ablation study results systematically validate the crucial role of each component, with the hybrid GAT-transformer architecture standing out as the cornerstone of our framework’s success.

First, removing the transformer module entirely (w/o Transformer) caused the most significant performance degradation across all metrics, with the task success rate dropping by 11.5 percentage points. This result confirms the transformer’s indispensable role in capturing long-term temporal dependencies and forming sophisticated, forward-looking strategies from observation histories.

More importantly, the comparison between the full model and the w/o GAT variant reveals the superiority of our specific hybrid design. While the w/o GAT model (which uses only a transformer on flattened states) still outperforms the non-transformer baseline, its performance is substantially lower than that of our full Adv-TransAC model. This demonstrates that simply applying a powerful transformer is insufficient. Our decoupled approach, which first uses GAT to explicitly model the instantaneous spatial graph of tactical relationships and then uses a transformer to analyze the temporal evolution of these structured “battlefield snapshots”, is far more effective. This finding indicates that a principled, hybrid modeling of space and time is crucial for deeply understanding complex multi-agent adversarial dynamics.

Effectiveness of Multi-modal Fusion: Compared with simple feature concatenation (the w/o Multi-Modal Attention variant), dynamic weighted fusion using the attention mechanism significantly improves performance, especially in reducing HVU damage. This suggests that our model can intelligently determine which sensor information to trust more at different moments (e.g., radar at long range, optics at close range) and thus react more promptly.

The Necessity of Curriculum Learning: Removing the adversarial curriculum (w/o Adversarial Curriculum) makes model training less stable and the final strategy less robust, resulting in an overall deterioration of the success rate and damage control. This shows that adversarial curriculum learning from easy to difficult is essential to guide agents to explore and converge to a better strategy space.

The Value of Opponent Modeling: After removing the opponent encoder (w/o Opponent Encoder), the model still performs well, but there is a significant gap in success rate and destruction rate compared to the full model. This shows that explicitly modeling and predicting adversary strategies is the key to achieving forward-looking, adaptive confrontation.

The Importance of Parameter Sharing: Finally, we conducted an ablation to evaluate the impact of sharing parameters among the actor networks. The “w/o Parameter Sharing” variant, where each agent learns an independent policy network, showed a noticeable degradation in performance across all key metrics (task success rate: 63.1%, HVU damage: 130.5). This result may seem counter-intuitive, as independent parameters could theoretically allow for more specialized agent behaviors. However, in our cooperative task, parameter sharing acts as a powerful inductive bias. It encourages the agents to learn a consistent and coordinated team strategy by allowing them to efficiently share knowledge and learning experiences. Without it, the learning signal becomes sparser for each individual agent, making it more difficult to converge to a globally effective cooperative policy. Thus, parameter sharing proves to be a critical component not only for efficiency but also for effective team-wide learning.

The results of the ablation study systematically validate that each design component of Adv-TransAC contributes positively and significantly to its superior overall performance, collectively constituting the strengths of the framework.

4.6. Qualitative Analysis

We visualize a typical adversarial process in Figure 8 to illustrate strategy behavior.

We visualize typical agent trajectories in Figure 10 to better understand the strategic differences. The MADDPG-controlled agents (left panel) exhibit a simplistic and disjointed defense, where USVs often act independently and are easily lured away or defeated one by one by the enemy.

In stark contrast, the agents controlled by Adv-TransAC (right panel) demonstrate clear, emergent cooperative behaviors. They spontaneously form a protective formation around the HVU and execute a “double-team” maneuver to intercept the approaching enemy. This visualization qualitatively confirms that Adv-TransAC enhances individual decision-making and, more importantly, fosters effective, high-level tactical coordination among agents, which is the key to its superior performance.

Figure 10 particularly highlights the sophistication of the learned control strategy. The Adv-TransAC agents initially adopt a stable, formation-keeping control mode to protect the HVU. Upon detecting an imminent threat that crosses a certain threshold, the system dynamically reassigns agents’ roles and transitions into an aggressive, intercept-and-neutralize control mode. This ability to switch between distinct, complex control modes based on high-level situational awareness is a key feature of the advanced strategies that our framework produces and is extremely difficult to pre-program with rule-based systems or conventional controllers.

4.7. Robustness and Generalization to Unseen Conditions

A critical quality of any practical control strategy is its robustness to environmental variations and its ability to generalize to situations not encountered during training. To evaluate this, we conducted a series of zero-shot generalization tests where the models, trained exclusively in the standard 4v3 scenario, were deployed directly into two new, unseen test environments: (1) a scenario with significantly increased sensor noise, and (2) a scenario with an increased number of adversaries (4v4).

The results, presented in Table 5, clearly demonstrate the superior robustness of the control policy learned by Adv-TransAC. While all algorithms experienced a performance drop under these challenging, out-of-distribution conditions, Adv-TransAC exhibited a much more graceful degradation.

Robustness to Sensor Noise: Under increased sensor noise, the performance of baseline methods plummeted, indicating their reliance on precise state information. In contrast, Adv-TransAC maintained a high win rate, suggesting its transformer-based architecture is more effective at extracting a stable, underlying signal from noisy, high-dimensional observation histories.

Generalization to a New Scale: When faced with an additional opponent in the 4v4 scenario, the coordination of baseline policies collapsed. Adv-TransAC, however, managed to adapt its cooperative tactics and maintained a respectable level of performance. This suggests that by learning the fundamental principles of spatiotemporal coordination rather than overfitting to a specific number of agents, our framework develops a more generalizable control strategy.

This superior robustness is a direct result of our framework’s ability to form a deep, contextual understanding of the tactical environment, making it less brittle and better suited for the unpredictable nature of real-world maritime operations.

4.8. Scalability Analysis

The scalability of multi-agent systems is a key challenge. To explore the performance of Adv-TransAC in more complex, large-scale scenarios, we conducted a series of scalability tests. We varied the numbers of friendly (N) and enemy (M) USVs and evaluated the mission success rate and average response latency. We compared Adv-TransAC with the lower-performing MADDPG baseline; the results are shown in Table 6.

The results in Table 6 clearly show that as the number of agents increases, the performance of all algorithms inevitably decreases, which is consistent with the exponential increase in the complexity of multi-agent collaboration. However, the performance of Adv-TransAC degrades much more gradually than that of MADDPG. In the most challenging 6v5 scenario, Adv-TransAC still maintained a success rate of nearly 70%, while the performance of MADDPG had nearly collapsed. This highlights the advantages of the transformer architecture in dealing with high-dimensional joint state–action spaces and capturing complex dependencies between agents.

At the same time, while the response latency of Adv-TransAC increases with scale, its latency at the 6v5 scale (55.3 ms) remains well within the 200 ms real-time requirement. This demonstrates the scalability potential of our framework, which can handle tasks more complex than the 4v3 benchmark.

4.9. Qualitative Analysis and Interpretability

We visualize typical agent trajectories in Figure 10 to better understand the strategic differences. The MADDPG-controlled agents (left panel) exhibit a simplistic and disjointed defense, where USVs often act independently and are easily lured away or defeated one by one by the enemy. In stark contrast, the agents controlled by Adv-TransAC (right panel) demonstrate clear, emergent cooperative behaviors: they spontaneously form a protective formation around the HVU and execute a “double-team” maneuver to intercept the approaching enemy. To quantify this, we define a “double-team” operation as two USVs simultaneously attacking an enemy ship from complementary angles, a tactic successfully executed in 68% of the evaluation trials in which an interception occurred. Figure 10 visually confirms this emergent “double-team” motion (right panel), a coordinated action not exhibited by MADDPG’s decentralized defense (left panel).
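One way to make the “double-team” definition operational is a geometric test on a single timestep. The sketch below is an assumption about how such a check could be written, not the detection rule used in the evaluation: the engagement range and the minimum angular separation (90° by default) are hypothetical parameters.

```python
import math


def bearing(from_xy, to_xy):
    """Angle in radians of the vector pointing from one position to another."""
    return math.atan2(to_xy[1] - from_xy[1], to_xy[0] - from_xy[0])


def angular_separation(a, b):
    """Smallest absolute difference between two angles, in the range [0, pi]."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def is_double_team(usv_a, usv_b, enemy, engage_range, min_separation=math.pi / 2):
    """True if both USVs are within range of the enemy and approach from well-separated angles."""
    in_range = (
        math.dist(usv_a, enemy) <= engage_range
        and math.dist(usv_b, enemy) <= engage_range
    )
    separation = angular_separation(bearing(enemy, usv_a), bearing(enemy, usv_b))
    return in_range and separation >= min_separation
```

Two escorts on opposite sides of an enemy would satisfy this test, whereas two escorts approaching side by side from the same direction would not.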

This advanced tactic highlights the sophistication of the learned control strategy. The Adv-TransAC agents initially adopt a stable, formation-keeping control mode to protect the HVU. Upon detecting an imminent threat that crosses a certain threshold, the system dynamically reassigns agents’ roles and transitions into an aggressive, intercept-and-neutralize control mode. This ability to switch between distinct, complex control modes based on high-level situational awareness is a key feature of the advanced strategies that our framework produces, and it is extremely difficult to pre-program with rule-based systems or conventional controllers.

Figure 11 illustrates the dynamic allocation of control attention over three successive time steps (t − 2, t − 1, t), revealing the sophisticated, multi-stage reasoning of the learned control strategy. In these attention maps, the blue circle represents the friendly escort USV, the red circle is the enemy USV, and the blue dashed line indicates the friendly USV’s trajectory.

Early Warning and Monitoring (t − 2): The policy initially allocates broad attention to a distant threat, demonstrating a state of vigilant monitoring.

Threat Prioritization (t − 1): As the threat is confirmed by high-precision sensors, the policy network dynamically converges its focus, identifying it as the primary target for engagement.

Proactive Interception Planning (t): Most critically, the agent’s attention then shifts from the opponent’s current location to a predicted future intercept point. As shown in Figure 11 at timestep t, the attention map peaks on a projected intercept point approximately 2 s ahead of the target’s path, verifying that the model’s temporal intent inference is functioning correctly. This demonstrates that the learned control strategy is not purely reactive but proactive and anticipatory. It is planning its control actions based on a future predicted state, a hallmark of advanced intelligence.
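The statement that the attention peak lies on a point about 2 s ahead of the target can be checked numerically if the attention map is available as weights over spatial cells. The sketch below assumes a constant-velocity projection of the target and a hypothetical distance tolerance; the paper does not state how the projected intercept point was obtained, so this is only one plausible reading.

```python
import math


def predict_position(position, velocity, lookahead_s=2.0):
    """Constant-velocity extrapolation of a target's position."""
    return (
        position[0] + velocity[0] * lookahead_s,
        position[1] + velocity[1] * lookahead_s,
    )


def attention_peak(cells, weights):
    """Return the cell coordinate that receives the largest attention weight."""
    best = max(range(len(weights)), key=weights.__getitem__)
    return cells[best]


def peak_matches_prediction(cells, weights, position, velocity, lookahead_s=2.0, tol=1.0):
    """True if the attention peak lies within `tol` of the projected target position."""
    predicted = predict_position(position, velocity, lookahead_s)
    return math.dist(attention_peak(cells, weights), predicted) <= tol
```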

This transparent decision-making process, where we can trace the agent’s focus from detection to proactive planning, strongly validates the effectiveness of the transformer-based architecture. It provides a foundational level of trust by confirming that the model learns a tactically sound and logical control strategy. However, we acknowledge that attention visualization is a preliminary step towards full interpretability, and further research is needed to fully deconstruct the model’s complex decision-making calculus.

4.10. Communication Efficiency Analysis

To evaluate the practical feasibility of our framework for real-world deployment, we conducted a theoretical analysis of its communication efficiency. We compared our proposed federated update paradigm with the standard CTDE approach, where each agent transmits its full experience tuple (o, a, r, o’) for every timestep. In our federated approach, agents perform local updates and only periodically transmit the gradients of their actor networks.

This analysis assumes agents upload gradients after every K = 1024 local steps. The data payload is calculated based on our model’s architecture (actor parameters: ~80 k) and environment specifications (observation dim: 128), using 32-bit floating-point precision. The total communication load for the swarm throughout K timesteps is presented in Table 7.

The results in Table 7 show that our federated gradient-based optimization architecture reduces the required communication bandwidth by nearly 70%. This is not a minor optimization but a critical feature that addresses the core challenge of intermittent and low-bandwidth communication in maritime environments. This communication efficiency makes Adv-TransAC a more viable and scalable solution for training and updating USV swarms in real-world operational conditions, strengthening its potential for practical deployment.
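The payload arithmetic behind this comparison can be reproduced with a short sketch. The values K = 1024, observation dimension 128, roughly 80 k actor parameters, and 32-bit floats are taken from the text; the action dimension (`ACTION_DIM = 2`) and the function names are assumptions introduced only for illustration, so the printed figures are indicative rather than a restatement of the reported table.

```python
BYTES_PER_FLOAT32 = 4


def ctde_bytes_per_agent(obs_dim, action_dim, steps):
    """Bytes sent when the full tuple (o, a, r, o') is transmitted every step."""
    floats_per_step = obs_dim + action_dim + 1 + obs_dim  # o, a, r, o'
    return floats_per_step * BYTES_PER_FLOAT32 * steps


def federated_bytes_per_agent(actor_params):
    """Bytes sent when only the actor gradients are uploaded once per K steps."""
    return actor_params * BYTES_PER_FLOAT32


K = 1024               # local steps between uploads (from the text)
OBS_DIM = 128          # observation dimension (from the text)
ACTOR_PARAMS = 80_000  # approximate actor size (from the text)
ACTION_DIM = 2         # ASSUMPTION: not stated in this section

ctde = ctde_bytes_per_agent(OBS_DIM, ACTION_DIM, K)
fed = federated_bytes_per_agent(ACTOR_PARAMS)
reduction = 1.0 - fed / ctde

print(f"CTDE:      {ctde / 1e6:.2f} MB per agent per {K} steps")
print(f"Federated: {fed / 1e6:.2f} MB per agent per {K} steps")
print(f"Reduction: {reduction:.1%}")
```

Because both payloads scale linearly with the number of agents, the relative reduction is the same for a single agent and for the whole swarm under these assumptions.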

5. Discussion and Conclusions

The Adv-TransAC framework proposed in this study achieved encouraging results in the simulated multi-USV attack-defense game tasks. In this section, we examine the experimental results in more depth, explore the mechanisms behind them, analyze the potential limitations of the current work, and outline future research directions.

5.1. An In-Depth Interpretation of the Experimental Results from an Engineering Perspective

To fully appreciate our findings, it is essential to place them within the broader context of multi-agent systems research. The state of the art in MARL is a rapidly advancing field, with continual progress in algorithms, evaluation methodologies, and the complexity of simulation environments, as comprehensively surveyed by Fu et al. (2023) [33].

The experimental results clearly show that Adv-TransAC outperforms existing baseline algorithms in task success rate and strategy robustness. We believe its core advantages stem from the organic combination of its architecture and learning paradigm, which translates directly into superior performance in realistic engineering contexts.

Unlike methods that only rely on current state snapshots, Adv-TransAC leverages the transformer’s self-attention mechanism to process sequences of multi-modal observations. This provides two key benefits with direct physical and engineering implications:

Spatial Collaborative Perception and Intelligent Risk-Reward Balancing: The slightly higher synergistic collision rate observed in Adv-TransAC (Table 2) is not an algorithmic flaw but rather evidence of a learned, near-optimal risk–reward policy that reflects sophisticated engineering trade-offs. In a real-world naval engagement, a controller that is too conservative and prioritizes collision avoidance above all else may fail its primary mission. Our framework has learned that to maximize the probability of mission success (the primary objective), it is sometimes necessary to execute aggressive, tightly coordinated maneuvers like a pincer movement or a close-quarters block. From an engineering standpoint, this is analogous to “edge-of-the-envelope” maneuvering, where a system operates at the limits of its safe performance envelope to achieve a critical outcome. The learned policy understands that accepting a calculated risk of minor, non-critical collisions is a worthwhile trade-off for neutralizing a high-threat adversary. This demonstrates a level of intelligent risk assessment that is extremely difficult to handcraft into traditional controllers like MPC, whose cost functions are often too rigid to capture such dynamic, state-dependent trade-offs.

Temporal Intent Prediction vs. Reactive Control: Traditional control systems often act as reactive controllers, responding to an opponent’s current kinematic state (position, velocity). In contrast, by analyzing a time series of an opponent’s behavior (e.g., a sequence of accelerations and turns), the transformer in Adv-TransAC can predict tactical intent. From a physical standpoint, it learns to distinguish between different motion patterns that signify different intentions. For example, it can differentiate a probing maneuver from a committed attack run, or a feint designed to draw escorts away from the main target. This allows Adv-TransAC to be proactive rather than reactive. It can deploy defensive formations or initiate an interception before the threat becomes critical, seizing the initiative and gaining a crucial time and spatial advantage in physical engagement. This forward-looking capability is a hallmark of advanced intelligence and is a significant step beyond the capabilities of conventional navigation and guidance systems.

2. Synergistic Effect between Adversarial Learning and Model Architecture:

Our framework’s success stems from the synergy between its powerful representational model (the hybrid GAT-transformer) and its training paradigm (adversarial curriculum learning). Adversarial training alone can lead to unstable policy oscillations if the model architecture is too simple to capture the opponent’s strategy. Conversely, a powerful model may fail to learn robust behaviors without a structured curriculum that guides its exploration.

The success of Adv-TransAC shows that a model capable of deeply understanding adversary kinematics and tactics, when combined with a curriculum that incrementally increases opponent difficulty, produces a “1 + 1 > 2” effect. The model learns not just a single counter-strategy, but a hierarchical set of responses, from simple avoidance to complex, multi-agent tactical countermeasures. This structured learning process is what enables the development of a generalizable and robust control policy suitable for the unpredictable nature of real-world maritime operations.
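The idea of a curriculum that incrementally increases opponent difficulty can be sketched as a simple scheduler. This is a hypothetical illustration, not the schedule used in this study: the class name `CurriculumScheduler`, the promotion threshold, and the rolling-window size are all assumptions. The scheduler promotes the opponent to the next difficulty level once the agents' rolling win rate over a full window reaches the threshold.

```python
from collections import deque


class CurriculumScheduler:
    """Raise opponent difficulty when the rolling win rate is high enough.

    All thresholds here are illustrative assumptions.
    """

    def __init__(self, num_levels, promote_win_rate=0.7, window=100):
        self.num_levels = num_levels
        self.promote_win_rate = promote_win_rate
        self.window = window
        self.level = 0                       # 0 = easiest opponent
        self.results = deque(maxlen=window)  # most recent episode outcomes

    def record(self, won):
        """Record one episode outcome and return the (possibly updated) level."""
        self.results.append(1 if won else 0)
        window_full = len(self.results) == self.window
        can_promote = self.level < self.num_levels - 1
        if window_full and can_promote:
            win_rate = sum(self.results) / self.window
            if win_rate >= self.promote_win_rate:
                self.level += 1
                self.results.clear()  # start a fresh window at the new level
        return self.level


# Usage: three opponent levels, promotion at a 70% win rate over 10 episodes.
scheduler = CurriculumScheduler(num_levels=3, promote_win_rate=0.7, window=10)
for _ in range(10):
    scheduler.record(won=True)
print(scheduler.level)
```

Clearing the window after each promotion means the win rate at the new level is measured only against the harder opponent, which avoids promoting twice on the strength of earlier, easier episodes.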

5.2. Practicality for Real-World Deployment: Edge Computing and Communication Efficiency

The design of Adv-TransAC offers significant advantages for practical deployment. Its decentralized execution architecture, where the policy network (actor) is lightweight, is well-suited for deployment on resource-constrained onboard (edge) computers, meeting real-time decision-making needs [23].

More importantly, our framework directly confronts the critical bottleneck of maritime communication. The communication efficiency analysis presented in Section 4.10 is not merely an addendum but a cornerstone of our contribution. The nearly 70% reduction in bandwidth achieved by our federated optimization paradigm is an enabling feature, not just a minor optimization. It moves the proposed learning framework from a theoretically sound concept towards a practically deployable system. This efficiency is paramount for USV swarms operating ‘at the edge’ or over the horizon, where stable, high-bandwidth communication cannot be guaranteed. By substantially lowering the data transmission requirements, Adv-TransAC becomes a more viable and scalable solution for training and updating intelligent multi-agent control systems in realistic operational environments.

5.3. Contextualizing Results and Industrial Implications

To assess the contributions of this work, it is important to place our findings within the broader landscape of multi-agent systems research and to translate our simulation results into tangible implications for real-world maritime industries and engineering applications.

5.3.1. Comparison with the State-of-the-Art

While direct numerical comparison with prior work is challenging due to variations in simulation environments and task specifics, we can contextualize the magnitude of our performance improvements. Previous studies on multi-agent adversarial tasks often highlight the limitations of standard MARL algorithms. For example, Wang [34] reported that even advanced actor–critic methods struggled to surpass a 60% success rate in cooperative-competitive settings with intelligent adversaries, a failure attributed to poor credit assignment and an inability to model opponent intent. Our Adv-TransAC framework, achieving a 78.5% win rate against a similarly adaptive opponent, demonstrates a substantial leap forward.

This significant performance gain is not merely an incremental improvement; it is directly attributable to our hybrid GAT-transformer architecture, which explicitly addresses the core weaknesses identified in previous studies. Unlike standard MADDPG [9], which relies on simple state concatenation, or QMIX [7], whose restrictive value decomposition struggles in competitive settings, our approach captures the high-order spatiotemporal dependencies essential for sophisticated tactical reasoning. This suggests that our principled approach to spatiotemporal modeling establishes a new and effective performance benchmark for this class of complex multi-agent problems.

5.3.2. Implications for Industrial and Engineering Applications

Beyond academic benchmarks, the results presented have profound and practical implications for the future of autonomous maritime systems:

Enhanced Operational Safety and Efficiency in Port Security: In real-world port and harbor security, the high success rate of Adv-TransAC translates directly to a more reliable, automated defense against unauthorized vessel intrusions. The learned “double-team” maneuver is not a simulation artifact; it represents a template for a high-speed, precision intercept protocol that would be difficult for human operators to execute consistently under pressure. This could significantly reduce the reliance on manned patrol boats, lowering operational costs, minimizing human risk, and increasing response effectiveness 24/7.

Robustness for Critical Offshore Asset Protection: For protecting high-value, static assets like offshore oil rigs, wind farms, or aquaculture installations, the demonstrated robustness against learning-based adversaries is a critical feature. Real-world threats are not static; they are intelligent and adaptive. Our framework’s ability to generalize and maintain high performance against such opponents means it can provide a more resilient “digital perimeter,” capable of countering evolving threats without constant manual reprogramming by engineers.

A Foundational Architecture for Complex Coordinated Missions: The ability to learn emergent, complex cooperative tactics paves the way for a new generation of multi-USV missions beyond simple escort tasks. This includes coordinated environmental monitoring (e.g., tracking an oil spill with a dynamic sensor network), large-area seabed mapping in complex topographies, or even distributed anti-submarine warfare (ASW) screens. The principles of decoupled spatiotemporal modeling and adaptive learning validated in this paper provide a foundational control architecture for tackling these ambitious, next-generation multi-agent engineering challenges.

5.4. Limitation Analysis and Future Work

Despite the promising results, we acknowledge several limitations of the current work, which point to critical future research directions.

1. The Sim-to-Real Gap and Operational Safety. All experiments were conducted in a simulated environment. The real-world ocean is far more complex, with unpredictable hydrodynamics (e.g., currents, waves), variable sensor noise models, and communication latencies or outages. Bridging this “Sim-to-Real” gap without significant performance degradation is a major challenge. Furthermore, migrating this system to a physical platform introduces non-negotiable safety and ethical imperatives. Real-world application demands robust fail-safes against the potential malfunction of the adversarial adaptation mechanism. Future work must therefore focus not only on domain randomization and system identification but also on integrating human-in-the-loop safety protocols. This could include, as suggested by our scenarios, human override capabilities initiated by personnel on the HVU being escorted (see Figure 9), or the implementation of standards-compliant emergency “kill-switches” as guided by maritime regulations like ISO 23860 [32]. Ensuring that the adaptive policy remains stable, predictable, and safely interruptible under all real-world conditions is a crucial step toward deployment.

2. Scalability and Computational Cost. While Adv-TransAC demonstrates good scalability in our tests, the computational overhead of the transformer architecture, particularly in the centralized critic, is inherently higher than that of simpler MLP or CNN structures. As the number of agents increases into the dozens or hundreds, the cost of the centralized critic will grow rapidly: standard self-attention scales quadratically with the number of tokens it processes, and the joint observation-action space the critic must evaluate grows exponentially with the number of agents. This may limit the framework’s applicability to very large-scale swarms. Future research should explore hierarchical or fully decentralized control architectures to manage this complexity, perhaps by using Adv-TransAC for intra-squad coordination within a larger, layered command structure.
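One component of this overhead can be estimated directly. Standard self-attention computes one score for every ordered pair of tokens, so the number of scores per head and layer is the square of the sequence length. The sketch below assumes the critic attends over one token per agent (`tokens_per_agent = 1`); the actual tokenization in Adv-TransAC is not specified in this section, so the function name and defaults are illustrative only.

```python
def attention_score_entries(num_agents, tokens_per_agent=1):
    """Pairwise attention scores per head per layer for a given swarm size.

    ASSUMPTION: the critic's sequence length is num_agents * tokens_per_agent.
    """
    seq_len = num_agents * tokens_per_agent
    return seq_len * seq_len


for n in (4, 16, 64):
    print(n, attention_score_entries(n))
# 4 -> 16, 16 -> 256, 64 -> 4096
```

Under this assumption, a 16-fold increase in swarm size raises the attention-score count 256-fold, which motivates the squad-level decomposition suggested above, since attention within squads of fixed size keeps each sequence short.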