A Multi-Scale Contextual Fusion Residual Network for Underwater Image Enhancement

Abstract

1. Introduction

- We propose an Adaptive Feature Aggregation Enhancement (AFAE) module, which aggregates multi-scale convolutional and self-attention features through Kernel Fusion Self-Attention (KFSA), enhancing the local and global feature representation capabilities of MCFR-Net.

- We propose a Residual Dual Attention Module (RDAM), which enhances the feature representation of key regions and retains the original information by applying KFSA twice and residual connections, thereby improving the stability and robustness of MCFR-Net.

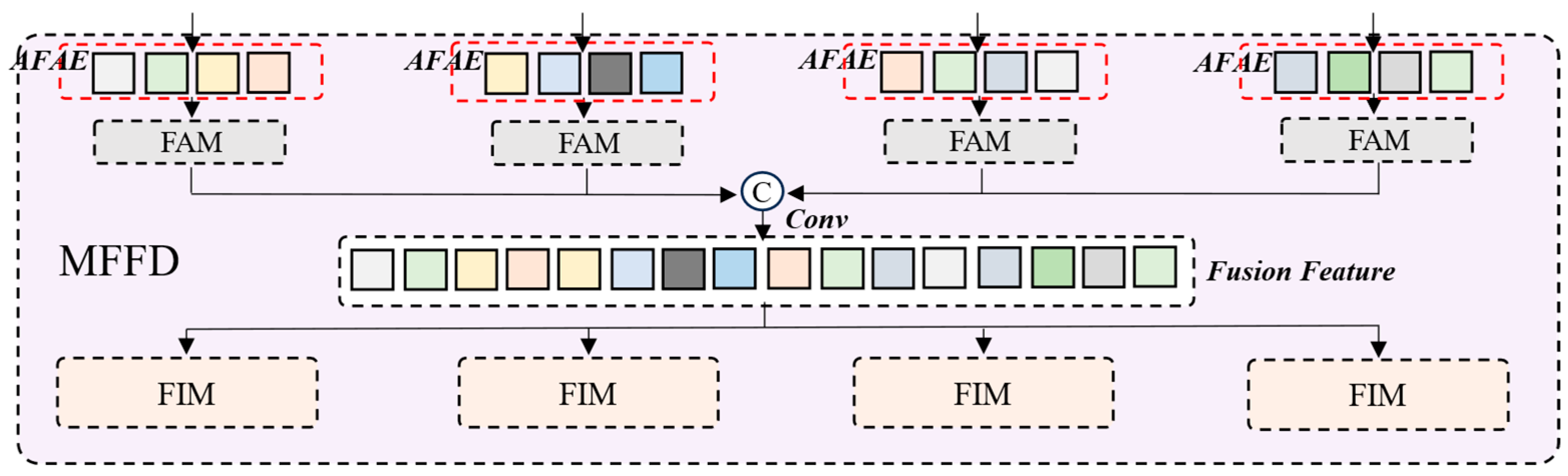

- We design a Multi-Scale Feature Fusion Decoding (MFFD) module to achieve channel mapping and scale alignment of multi-scale features, and fuse and reconstruct multi-resolution detail features.
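As a rough illustration of the residual idea behind RDAM, the sketch below wraps two successive attention passes in a skip connection, so the output is the input plus the twice-refined features. The function `toy_attention` is a hypothetical softmax reweighting that only stands in for KFSA, and the placement of the skip connection is an assumption; neither is taken from the paper.

```python
import math


def toy_attention(features):
    """Hypothetical stand-in for KFSA: softmax reweighting of a 1-D feature list."""
    peak = max(features)
    exps = [math.exp(v - peak) for v in features]  # subtract the max for stability
    total = sum(exps)
    n = len(features)
    # Multiply by n so that uniform weights leave the features unchanged.
    return [v * (e / total) * n for v, e in zip(features, exps)]


def residual_dual_attention(features, attention=toy_attention):
    """Assumed structure: out = x + attention(attention(x))."""
    refined = attention(attention(features))
    # The skip connection adds the untouched input back to the refined features.
    return [x + r for x, r in zip(features, refined)]


uniform = [1.0, 1.0, 1.0, 1.0]
print(residual_dual_attention(uniform))
```

With a uniform input the toy attention leaves the features unchanged, so the output is simply twice the input. If the attention branch returned zeros, the block would reduce to the identity, which is the sense in which a residual path retains the original information.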

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning Methods

3. Proposed Model

3.1. Basic Block

3.2. Adaptive Feature Aggregation Enhancement

3.3. Residual Dual Attention Module

3.4. Multi-Scale Feature Fusion Decoding

3.5. Loss Function

4. Experimental Setup and Results

4.1. Implementation Details

4.2. Datasets

4.3. Compared Methods

4.4. Evaluation Metrics

4.5. Comparisons on R90

4.6. Comparisons on C60

4.7. Comparisons on U45

4.8. Comparisons on EUVP

4.9. Parameters and FLOPs

4.10. Ablation Experiments

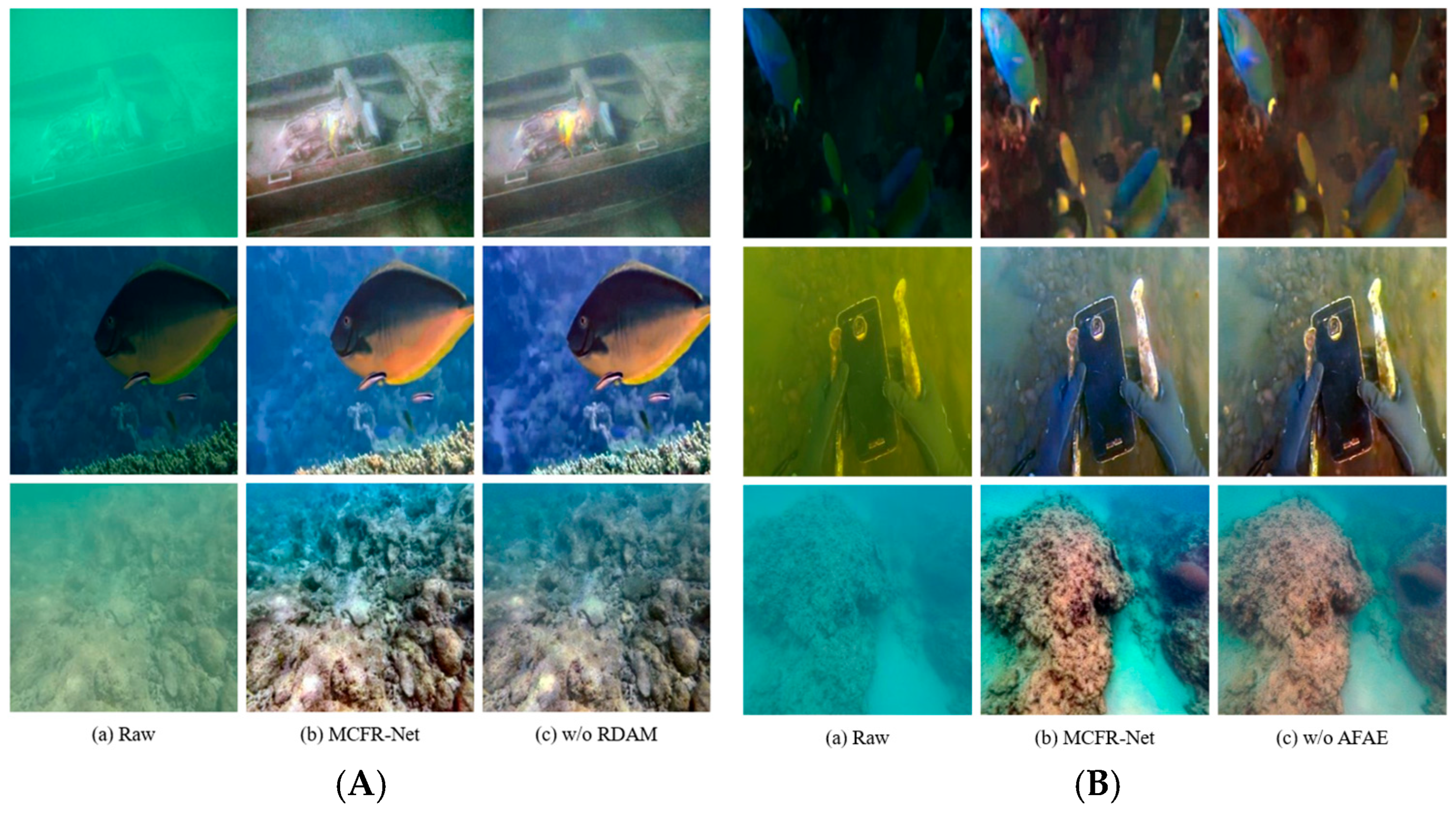

- w/o AFAE: MCFR-Net with the Adaptive Feature Aggregation Enhancement module removed, used to analyze the effect of AFAE.

- w/o RDAM: MCFR-Net with the Residual Dual Attention Module removed, used to analyze the effect of RDAM.

- w/o MFFD: MCFR-Net with the Multi-Scale Feature Fusion Decoding module removed, used to analyze the effect of MFFD.

- Baseline: the network with all of the above modules removed.
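The ablation variants listed above can be written down as a small lookup of disabled modules. This is only a bookkeeping sketch: the names `ALL_MODULES`, `VARIANTS` and `active_modules` are hypothetical and do not come from the authors' code.

```python
# Hypothetical bookkeeping for the ablation variants; not the authors' code.
ALL_MODULES = ("AFAE", "RDAM", "MFFD")

# Each variant maps to the set of modules that are switched off.
VARIANTS = {
    "MCFR-Net": set(),
    "w/o AFAE": {"AFAE"},
    "w/o RDAM": {"RDAM"},
    "w/o MFFD": {"MFFD"},
    "Baseline": set(ALL_MODULES),
}


def active_modules(variant):
    """Return the modules that remain enabled for a given ablation variant."""
    disabled = VARIANTS[variant]
    return [name for name in ALL_MODULES if name not in disabled]


for variant in VARIANTS:
    print(variant, "->", active_modules(variant))
```

Each single-module variant differs from the full model in exactly one switch, so a change in the metrics can be attributed to that module alone.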

4.11. Failure Cases

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | MSE↓ | PSNR (dB)↑ | SSIM↑ |
|---|---|---|---|
| UDCP | 5248.02 | 11.85 | 0.59 |
| UIBLA | 1074.79 | 15.77 | 0.86 |
| GDCP | 1781.72 | 17.05 | 0.82 |
| WB | 1464.56 | 17.64 | 0.80 |
| WaterNet | 858.44 | 21.15 | 0.89 |
| FUnIE | 1398.36 | 17.94 | 0.79 |
| Ucolor | 566.86 | 22.46 | 0.90 |
| U-shape | 609.19 | 22.09 | 0.82 |
| AoSR | 1175.05 | 20.22 | 0.87 |
| DGD | 1231.99 | 18.15 | 0.77 |
| MCFR-Net | 340.12 | 24.54 | 0.92 |
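For readers relating the MSE and PSNR columns above, the sketch below uses the standard definition PSNR = 10·log10(peak² / MSE), assuming 8-bit images with a peak value of 255. Applying the formula to a mean MSE of 340.12 gives roughly 22.8 dB rather than the reported 24.54 dB. One possible explanation, which is an assumption and not stated in the source, is that PSNR is averaged per image: the mean of per-image PSNR values is never lower than the PSNR of the mean MSE. The toy MSE values are made up for illustration.

```python
import math


def psnr(mse_value, peak=255.0):
    """Standard PSNR in dB; peak=255 assumes 8-bit images."""
    if mse_value == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse_value)


# Made-up per-image MSE values, used only to illustrate the averaging effect.
per_image_mse = [100.0, 900.0]

mean_mse = sum(per_image_mse) / len(per_image_mse)
mean_psnr = sum(psnr(m) for m in per_image_mse) / len(per_image_mse)
psnr_of_mean = psnr(mean_mse)

print(f"mean of per-image PSNR: {mean_psnr:.2f} dB")
print(f"PSNR of the mean MSE:   {psnr_of_mean:.2f} dB")
print(f"PSNR for MSE 340.12:    {psnr(340.12):.2f} dB")
```

Because the two averaging orders generally disagree, the MSE and PSNR columns should be read as separate averages rather than converted into one another.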

| Method | Entropy↑ | UIQM↑ | UCIQE↑ | NIQE↓ |
|---|---|---|---|---|
| UDCP | 6.03 | 2.68 | 0.52 | 6.46 |
| UIBLA | 6.99 | 2.30 | 0.58 | 5.80 |
| GDCP | 7.04 | 2.88 | 0.60 | 7.50 |
| WB | 6.50 | 2.07 | 0.49 | 6.70 |
| WaterNet | 7.24 | 2.69 | 0.55 | 5.79 |
| FUnIE | 6.87 | 4.52 | 0.47 | 7.48 |
| Ucolor | 7.24 | 2.56 | 0.53 | 5.96 |
| U-shape | 7.24 | 4.67 | 0.53 | 6.11 |
| AoSR | 7.16 | 2.37 | 0.51 | 6.07 |
| DGD | 7.07 | 4.68 | 0.49 | 5.67 |
| MCFR-Net | 7.46 | 4.76 | 0.59 | 5.91 |

| Method | Entropy↑ | UIQM↑ | UCIQE↑ | NIQE↓ |
|---|---|---|---|---|
| UDCP | 7.06 | 5.10 | 0.58 | 4.37 |
| UIBLA | 7.12 | 4.73 | 0.56 | 4.05 |
| GDCP | 7.19 | 4.86 | 0.57 | 4.14 |
| WB | 6.69 | 4.58 | 0.50 | 4.76 |
| WaterNet | 7.49 | 5.12 | 0.56 | 5.46 |
| FUnIE | 7.20 | 4.77 | 0.50 | 5.53 |
| Ucolor | 7.53 | 5.03 | 0.56 | 4.69 |
| U-shape | 7.50 | 4.94 | 0.55 | 4.63 |
| AoSR | 7.24 | 4.78 | 0.51 | 4.36 |
| DGD | 7.42 | 4.97 | 0.49 | 4.49 |
| MCFR-Net | 7.75 | 5.67 | 0.62 | 4.17 |

| Method | Entropy↑ | UIQM↑ | UCIQE↑ | NIQE↓ |
|---|---|---|---|---|
| UDCP | 7.09 | 3.99 | 0.60 | 4.42 |
| UIBLA | 7.42 | 3.53 | 0.56 | 4.49 |
| GDCP | 7.51 | 3.77 | 0.58 | 4.45 |
| WB | 6.93 | 3.13 | 0.50 | 4.90 |
| WaterNet | 7.49 | 4.02 | 0.57 | 4.43 |
| FUnIE | 6.99 | 4.38 | 0.49 | 6.07 |
| Ucolor | 7.49 | 4.11 | 0.55 | 4.44 |
| U-shape | 7.46 | 4.72 | 0.54 | 4.86 |
| AoSR | 7.07 | 3.26 | 0.48 | 4.56 |
| DGD | 7.33 | 4.66 | 0.48 | 5.10 |
| MCFR-Net | 7.68 | 4.81 | 0.61 | 4.91 |

| Method | FLOPs↓ | Params↓ | Time (s)↓ |
|---|---|---|---|
| UDCP | × | × | 0.0185 |
| UIBLA | × | × | 0.0098 |
| GDCP | × | × | 0.2355 |
| WB | × | × | 0.0066 |
| WaterNet | 71.53 G | 1.09 M | 0.0183 |
| FUnIE | 3.59 G | 7.02 M | 0.0029 |
| Ucolor | 443.85 G | 157.40 M | 0.1952 |
| U-shape | 66.20 G | 65.60 M | 0.0111 |
| AoSR | 15.11 G | 1.51 M | 0.0917 |
| DGD | 45.43 G | 8.22 M | 0.0892 |
| MCFR-Net | 268.59 G | 20.71 M | 0.0740 |
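To put the complexity figures above in more familiar terms, the sketch below converts the reported time into frames per second and the parameter count into an approximate weight size. It assumes that the time column is the inference time for a single image and that weights are stored as 32-bit floats (4 bytes each); neither assumption is confirmed by the table. The helper names are hypothetical.

```python
# (parameters in millions, time in seconds) copied from the table above.
COMPLEXITY = {
    "WaterNet": (1.09, 0.0183),
    "FUnIE": (7.02, 0.0029),
    "Ucolor": (157.40, 0.1952),
    "U-shape": (65.60, 0.0111),
    "AoSR": (1.51, 0.0917),
    "DGD": (8.22, 0.0892),
    "MCFR-Net": (20.71, 0.0740),
}


def frames_per_second(seconds_per_image):
    """Throughput, assuming the time is measured per image."""
    return 1.0 / seconds_per_image


def float32_megabytes(params_millions):
    """Weight size in MB (10**6 bytes), assuming 4 bytes per parameter."""
    return params_millions * 4.0


for method, (params_m, seconds) in COMPLEXITY.items():
    print(f"{method:9s} {frames_per_second(seconds):7.1f} FPS "
          f"{float32_megabytes(params_m):7.1f} MB")
```

Under these assumptions MCFR-Net runs at about 13.5 images per second with roughly 83 MB of weights.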

| Model | MSE↓ | PSNR (dB)↑ | SSIM↑ |
|---|---|---|---|
| MCFR-Net | 340.12 | 24.54 | 0.92 |
| w/o AFAE | 359.55 | 24.38 | 0.92 |
| w/o RDAM | 364.45 | 24.36 | 0.92 |
| w/o MFFD | 476.25 | 24.53 | 0.89 |
| Baseline | 820.19 | 20.85 | 0.80 |
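The ablation rows above are easier to compare as differences from the full model. The sketch below computes, for each variant, the relative MSE increase, the PSNR drop and the SSIM drop with respect to MCFR-Net. The numbers are copied from the table; the function name `change_vs_full` is hypothetical.

```python
# (MSE, PSNR in dB, SSIM) copied from the ablation table above.
ABLATION = {
    "MCFR-Net": (340.12, 24.54, 0.92),
    "w/o AFAE": (359.55, 24.38, 0.92),
    "w/o RDAM": (364.45, 24.36, 0.92),
    "w/o MFFD": (476.25, 24.53, 0.89),
    "Baseline": (820.19, 20.85, 0.80),
}


def change_vs_full(rows, full="MCFR-Net"):
    """Compare every variant with the full model."""
    full_mse, full_psnr, full_ssim = rows[full]
    report = {}
    for name, (mse, psnr, ssim) in rows.items():
        if name == full:
            continue
        report[name] = {
            "mse_increase_pct": 100.0 * (mse - full_mse) / full_mse,
            "psnr_drop_db": full_psnr - psnr,
            "ssim_drop": full_ssim - ssim,
        }
    return report


for name, delta in change_vs_full(ABLATION).items():
    print(f"{name:9s} MSE +{delta['mse_increase_pct']:.1f}%  "
          f"PSNR -{delta['psnr_drop_db']:.2f} dB  SSIM -{delta['ssim_drop']:.2f}")
```

Read this way, removing MFFD changes PSNR by only 0.01 dB but raises MSE by about 40% and lowers SSIM by 0.03, while the Baseline loses 3.69 dB of PSNR.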

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, C.; Hong, L.; Fan, Y.; Shu, X. A Multi-Scale Contextual Fusion Residual Network for Underwater Image Enhancement. J. Mar. Sci. Eng. 2025, 13, 1531. https://doi.org/10.3390/jmse13081531
