Dual-Domain Adaptive Synergy GAN for Enhancing Low-Light Underwater Images

Abstract

1. Introduction

- We design a Multi-scale Hybrid Attention (MHA) mechanism that operates across the frequency and spatial domains to achieve parameterized channel reorganization, enabling more effective feature selection and enhancement.

- To improve the network’s adaptability to low-light underwater degradations, we construct an Adaptive Parameterized Convolution (AP-CONV) that integrates adaptive kernel prediction to dynamically model diverse underwater degradation patterns.

- We propose a Dynamic Content-aware Markovian Discriminator (DCMD) tailored to low-light underwater image features. It is combined with a gradient constraint strategy to improve the network’s color mapping in low light and to enhance the color details and overall smoothness of the image.
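
To make the idea of adaptive kernel prediction in the second contribution more concrete, the following is a minimal sketch of one common way to condition a convolution kernel on its input, loosely in the spirit of conditionally parameterized convolutions (CondConv). It is not the paper's AP-CONV: the expert kernels, the gate parameters `GATE_W` and `GATE_B`, the softmax gate, and the single-channel pure-Python setting are all illustrative assumptions.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def global_avg(img):
    """Mean intensity of a 2D image given as a list of rows."""
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))

def predict_kernel(img, experts, gate_w, gate_b):
    """Mix fixed expert kernels with weights predicted from the input.

    The gate is a hypothetical one-feature linear layer on the global
    average of the image, followed by a softmax (an assumption).
    """
    g = global_avg(img)
    alphas = softmax([w * g + b for w, b in zip(gate_w, gate_b)])
    k = len(experts[0])
    kernel = [[sum(a * e[i][j] for a, e in zip(alphas, experts))
               for j in range(k)] for i in range(k)]
    return kernel, alphas

def conv2d_valid(img, kernel):
    """Plain 'valid' 2D cross-correlation with no padding or stride."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j]
                 for i in range(k) for j in range(k))
             for x in range(w - k + 1)]
            for y in range(h - k + 1)]

# Two illustrative experts: an identity kernel and a 3x3 box blur.
IDENTITY = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
BOX_BLUR = [[1.0 / 9.0] * 3 for _ in range(3)]
EXPERTS = [IDENTITY, BOX_BLUR]

# Hypothetical gate parameters: dark inputs lean toward the blur expert.
GATE_W = [4.0, -4.0]
GATE_B = [-2.0, 2.0]

dark = [[0.1] * 4 for _ in range(4)]
bright = [[0.9] * 4 for _ in range(4)]

dark_kernel, dark_alphas = predict_kernel(dark, EXPERTS, GATE_W, GATE_B)
bright_kernel, bright_alphas = predict_kernel(bright, EXPERTS, GATE_W, GATE_B)
dark_out = conv2d_valid(dark, dark_kernel)
```

In this toy setting the same layer applies a different effective kernel to the dark and the bright image, which is the property that input-conditioned convolutions rely on. A trained network would learn both the experts and the gate rather than fixing them by hand.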

2. Related Work

2.1. Physical Model-Based Methods

2.2. Image Processing-Based Methods

2.3. Deep Learning-Based Methods

3. Methodology

3.1. Multi-Scale Hybrid Attention

3.2. Adaptive Parameterized Convolution

3.3. Dynamic Content-Aware Markovian Discriminator

3.4. Loss Function

4. Experiment

4.1. Datasets

4.1.1. EUVP

4.1.2. UFO-120

4.2. Experiment Details and Evaluation Metrics

4.2.1. Experiment Environment and Hyperparameters

4.2.2. Evaluation Metrics
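
Of the metrics reported in the result tables, PSNR is the simplest to state: it is ten times the base-10 logarithm of the squared peak value divided by the mean squared error between the reference and the enhanced image. The sketch below computes it for small grayscale images stored as lists of rows. The function name `psnr`, the 8-bit peak value of 255, and the sample pixel values are assumptions made for illustration, not the evaluation code used in the paper.

```python
import math

def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images.

    Images are lists of rows of numeric pixel values. Identical images
    have zero error, so the result is infinity in that case.
    """
    if len(reference) != len(enhanced) or any(
        len(r) != len(e) for r, e in zip(reference, enhanced)
    ):
        raise ValueError("images must have the same shape")
    n_pixels = sum(len(row) for row in reference)
    mse = sum(
        (a - b) ** 2
        for r, e in zip(reference, enhanced)
        for a, b in zip(r, e)
    ) / n_pixels
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

# Made-up 2x2 example: every pixel is off by 10 gray levels (MSE = 100).
reference_img = [[100, 120], [140, 160]]
enhanced_img = [[110, 130], [150, 170]]
score = psnr(reference_img, enhanced_img)
print(f"PSNR: {score:.2f} dB")
```

Higher values indicate a smaller pixel-wise error, which is why PSNR carries an upward arrow in the tables.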

4.3. Performance Comparison

4.3.1. EUVP

4.3.2. UFO-120

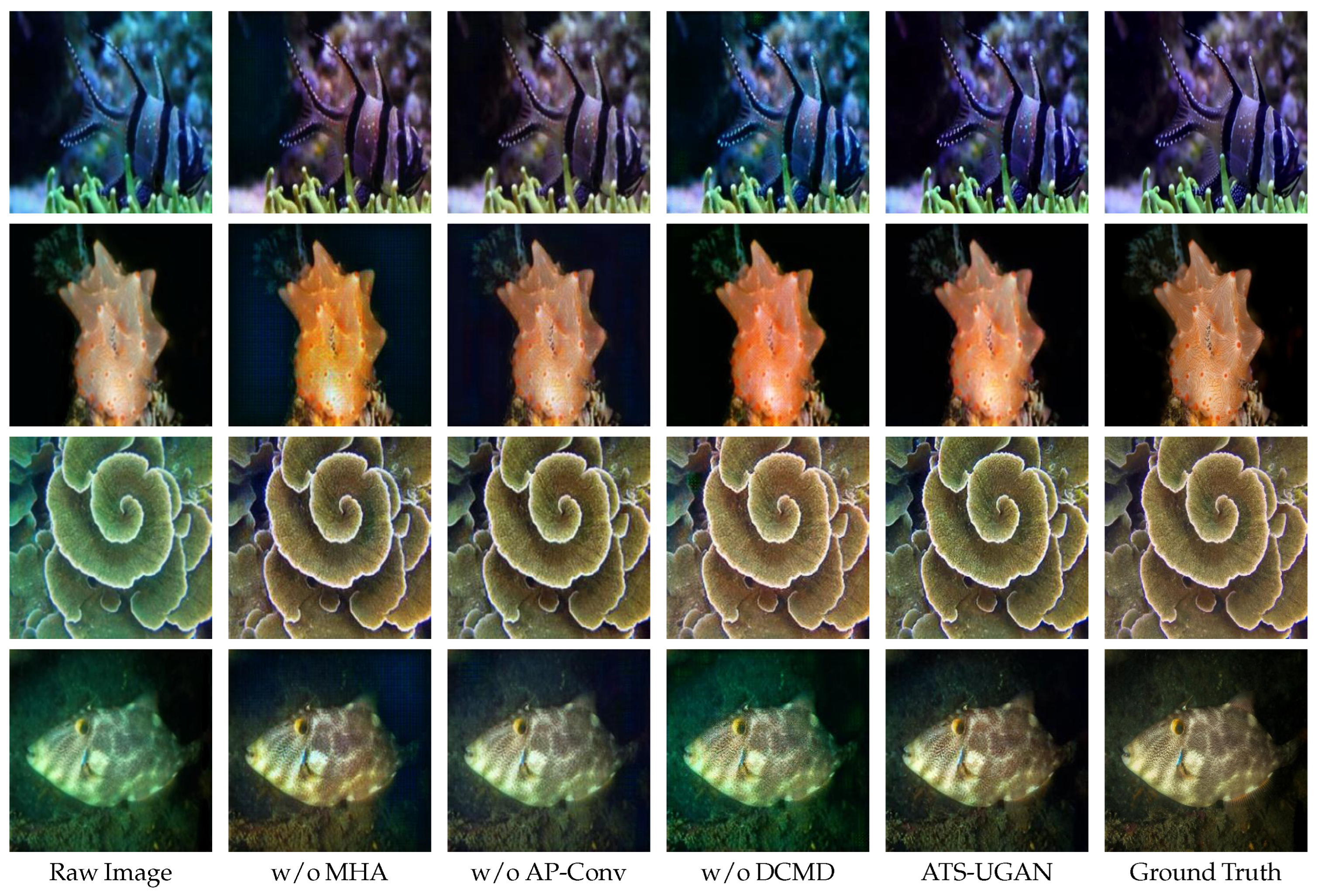

4.4. Ablation Experiments

4.4.1. Multi-Scale Hybrid Attention

4.4.2. Adaptive Parameterized Convolution

4.4.3. Dynamic Content-Aware Markovian Discriminator

4.5. Model Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- An, S.; Xu, L.; Deng, Z.; Zhang, H. DNIM: Deep-sea netting intelligent enhancement and exposure monitoring using bio-vision. Inf. Fusion 2025, 113, 102629.

- Kang, F.; Huang, B.; Wan, G. Automated detection of underwater dam damage using remotely operated vehicles and deep learning technologies. Autom. Constr. 2025, 171, 105971.

- Zhou, J.; Liu, C.; Long, B.; Zhang, D.; Jiang, Q.; Muhammad, G. Degradation-Decoupling Vision Enhancement for Intelligent Underwater Robot Vision Perception System. IEEE Internet Things J. 2025, 12, 17880–17895.

- Saleem, A.; Awad, A.; Paheding, S.; Lucas, E.; Havens, T.C.; Esselman, P.C. Understanding the Influence of Image Enhancement on Underwater Object Detection: A Quantitative and Qualitative Study. Remote Sens. 2025, 17, 185.

- González-Sabbagh, S.P.; Robles-Kelly, A. A Survey on Underwater Computer Vision. ACM Comput. Surv. 2023, 55, 1–39.

- Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater Image Enhancement via Minimal Color Loss and Locally Adaptive Contrast Enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- An, S.; Xu, L.; Deng, Z.; Zhang, H. HFM: A hybrid fusion method for underwater image enhancement. Eng. Appl. Artif. Intell. 2024, 127, 107219.

- Chandrasekar, A.; Sreenivas, M.; Biswas, S. PhISH-Net: Physics Inspired System for High Resolution Underwater Image Enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 1506–1516.

- Zhang, W.; Zhou, L.; Zhuang, P.; Li, G.; Pan, X.; Zhao, W.; Li, C. Underwater Image Enhancement via Weighted Wavelet Visual Perception Fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 2469–2483.

- Vasamsetti, S.; Mittal, N.; Neelapu, B.C.; Sardana, H.K. Wavelet based perspective on variational enhancement technique for underwater imagery. Ocean Eng. 2017, 141, 88–100.

- Ma, H.; Huang, J.; Shen, C.; Jiang, Z. Retinex-inspired underwater image enhancement with information entropy smoothing and non-uniform illumination priors. Pattern Recognit. 2025, 162, 111411.

- Xiang, D.; He, D.; Wang, H.; Qu, Q.; Shan, C.; Zhu, X.; Zhong, J.; Gao, P. Attenuated color channel adaptive correction and bilateral weight fusion for underwater image enhancement. Opt. Lasers Eng. 2025, 184, 108575.

- Zhang, W.; Liu, Q.; Feng, Y.; Cai, L.; Zhuang, P. Underwater Image Enhancement via Principal Component Fusion of Foreground and Background. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10930–10943.

- Jha, M.; Bhandari, A.K. CBLA: Color-Balanced Locally Adjustable Underwater Image Enhancement. IEEE Trans. Instrum. Meas. 2024, 73, 5020911.

- Liu, C.; Shu, X.; Pan, L.; Shi, J.; Han, B. Multiscale Underwater Image Enhancement in RGB and HSV Color Spaces. IEEE Trans. Instrum. Meas. 2023, 72, 5021814.

- Liang, Y.; Li, L.; Zhou, Z.; Tian, L.; Xiao, X.; Zhang, H. Underwater Image Enhancement via Adaptive Bi-Level Color-Based Adjustment. IEEE Trans. Instrum. Meas. 2025, 74, 5018916.

- Chen, Z.; Sun, W.; Jia, J.; Huang, R.; Lu, F.; Chen, Y.; Min, X.; Zhai, G.; Zhang, W. Joint Luminance-Chrominance Learning for Image Debanding. IEEE Trans. Circuits Syst. Video Technol. 2025.

- Xue, X.; Yuan, J.; Ma, T.; Ma, L.; Jia, Q.; Zhou, J.; Wang, Y. Degradation-Decoupled and semantic-aggregated cross-space fusion for underwater image enhancement. Inf. Fusion 2025, 118, 102927.

- Zhang, S.; Zhao, S.; An, D.; Li, D.; Zhao, R. LiteEnhanceNet: A lightweight network for real-time single underwater image enhancement. Expert Syst. Appl. 2024, 240, 122546.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Yang, J.; Zhu, S.; Liang, H.; Bai, S.; Jiang, F.; Hussain, A. PAFPT: Progressive aggregator with feature prompted transformer for underwater image enhancement. Expert Syst. Appl. 2025, 262, 125539.

- Shang, J.; Li, Y.; Xing, H.; Yuan, J. LGT: Luminance-guided transformer-based multi-feature fusion network for underwater image enhancement. Inf. Fusion 2025, 118, 102977.

- Du, D.; Li, E.; Si, L.; Zhai, W.; Xu, F.; Niu, J.; Sun, F. UIEDP: Boosting underwater image enhancement with diffusion prior. Expert Syst. Appl. 2025, 259, 125271.

- Zhang, D.; Wu, C.; Zhou, J.; Zhang, W.; Li, C.; Lin, Z. Hierarchical attention aggregation with multi-resolution feature learning for GAN-based underwater image enhancement. Eng. Appl. Artif. Intell. 2023, 125, 106743.

- Wang, H.; Köser, K.; Ren, P. Large Foundation Model Empowered Discriminative Underwater Image Enhancement. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–17.

- Xu, C.; Zhou, W.; Huang, Z.; Zhang, Y.; Zhang, Y.; Wang, W.; Xia, F. Fusion-based graph neural networks for synergistic underwater image enhancement. Inf. Fusion 2025, 117, 102857.

- Saleh, A.; Sheaves, M.; Jerry, D.; Rahimi Azghadi, M. Adaptive deep learning framework for robust unsupervised underwater image enhancement. Expert Syst. Appl. 2025, 268, 126314.

- Esser, P.; Rombach, R.; Ommer, B. Taming Transformers for High-Resolution Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12873–12883.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 6840–6851.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-To-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets V2: More Deformable, Better Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Yang, B.; Bender, G.; Le, Q.V.; Ngiam, J. CondConv: Conditionally Parameterized Convolutions for Efficient Inference. In Proceedings of the Advances in Neural Information Processing Systems; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Islam, M.J.; Luo, P.; Sattar, J. Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception. arXiv 2020, arXiv:2002.01155.

- Setiadi, D.R.I.M.; Ghosal, S.K.; Sahu, A.K. AI-Powered Steganography: Advances in Image, Linguistic, and 3D Mesh Data Hiding—A Survey. J. Future Artif. Intell. Technol. 2025, 2, 1–23.

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep Light Enhancement Without Paired Supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Cong, R.; Yang, W.; Zhang, W.; Li, C.; Guo, C.L.; Huang, Q.; Kwong, S. PUGAN: Physical Model-Guided Underwater Image Enhancement Using GAN With Dual-Discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.

| Methods | PSNR ↑ | SSIM ↑ | CIEDE2000 ↓ | UIQM ↑ | UCIQE ↑ | NIQE ↓ | LPIPS ↓ | FID ↓ | FLOPs (G) ↓ | Time (s) ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| EnlightenGAN [38] | 18.3 | 0.65 | 13.2 | 2.15 | 0.48 | 8.7 | 0.46 | 41.51 | 6.42 | 0.014 |
| Water-Net [39] | 21.4 | 0.68 | 11.3 | 2.47 | 0.57 | 7.9 | 0.35 | 76.12 | 11.37 | 0.021 |
| Restormer [40] | 20.8 | 0.72 | 6.3 | 3.27 | 0.71 | 5.1 | 0.44 | 65.60 | 48.75 | 0.125 |
| FUnIE-GAN [35] | 24.3 | 0.79 | 8.5 | 3.01 | 0.67 | 6.2 | 0.27 | 30.10 | 26.10 | 0.060 |
| PUGAN [41] | 26.1 | 0.85 | 5.8 | 3.40 | 0.74 | 4.8 | 0.25 | 28.14 | 30.84 | 0.072 |
| Deep SESR [36] | 27.5 | 0.88 | 5.4 | 3.72 | 0.78 | 4.3 | 0.25 | 29.18 | 36.28 | 0.086 |
| ATS-UGAN (Ours) | 28.7 | 0.92 | 4.3 | 4.05 | 0.83 | 3.7 | 0.23 | 21.01 | 28.65 | 0.067 |

| Methods | PSNR ↑ | SSIM ↑ | CIEDE2000 ↓ | UIQM ↑ | UCIQE ↑ | NIQE ↓ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|---|---|---|---|
| EnlightenGAN [38] | 17.9 | 0.61 | 14.1 | 2.02 | 0.43 | 9.1 | 0.39 | 47.60 |
| Water-Net [39] | 20.1 | 0.69 | 12.5 | 2.31 | 0.53 | 8.2 | 0.36 | 74.85 |
| Restormer [40] | 24.9 | 0.80 | 8.2 | 3.10 | 0.68 | 6.0 | 0.32 | 66.24 |
| FUnIE-GAN [35] | 25.5 | 0.82 | 7.8 | 3.34 | 0.70 | 5.5 | 0.28 | 33.55 |
| PUGAN [41] | 26.4 | 0.84 | 6.0 | 3.55 | 0.75 | 5.0 | 0.25 | 30.98 |
| Deep SESR [36] | 26.9 | 0.87 | 5.5 | 3.65 | 0.77 | 4.6 | 0.26 | 27.43 |
| ATS-UGAN (Ours) | 28.2 | 0.91 | 4.7 | 3.96 | 0.85 | 3.0 | 0.24 | 22.12 |

| MHA | AP-CONV | DCMD | PSNR ↑ | SSIM ↑ | CIEDE2000 ↓ | UIQM ↑ | UCIQE ↑ | NIQE ↓ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| ✗ | ✓ | ✓ | 25.6 | 0.80 | 7.4 | 3.44 | 0.72 | 5.6 | 0.30 | 30.21 |
| ✓ | ✗ | ✓ | 27.3 | 0.85 | 5.1 | 3.76 | 0.78 | 4.4 | 0.27 | 25.80 |
| ✓ | ✓ | ✗ | 25.2 | 0.70 | 5.2 | 3.20 | 0.69 | 4.0 | 0.28 | 26.95 |
| ✓ | ✓ | ✓ | 28.7 | 0.92 | 4.3 | 4.05 | 0.83 | 3.7 | 0.23 | 21.01 |

| MHA | AP-CONV | DCMD | PSNR ↑ | SSIM ↑ | CIEDE2000 ↓ | UIQM ↑ | UCIQE ↑ | NIQE ↓ | LPIPS ↓ | FID ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| ✗ | ✓ | ✓ | 24.1 | 0.87 | 9.2 | 3.35 | 0.70 | 4.9 | 0.32 | 31.33 |
| ✓ | ✗ | ✓ | 26.9 | 0.82 | 6.7 | 3.60 | 0.76 | 4.2 | 0.28 | 27.90 |
| ✓ | ✓ | ✗ | 24.7 | 0.63 | 6.4 | 3.54 | 0.72 | 3.6 | 0.29 | 28.67 |
| ✓ | ✓ | ✓ | 28.2 | 0.91 | 4.7 | 3.96 | 0.85 | 3.0 | 0.24 | 22.12 |
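
The two ablation tables can be read as "how much PSNR is lost when one module is removed". The short script below does that subtraction using only the PSNR values printed in the tables. The labels `first` and `second` refer to the order in which the ablation tables appear here, and the dictionary layout and the function name `psnr_drops` are illustrative choices.

```python
# PSNR of the full model (last row of each ablation table).
FULL_MODEL = {"first": 28.7, "second": 28.2}

# PSNR with exactly one module removed, keyed by the removed module.
ABLATED = {
    "first": {"MHA": 25.6, "AP-CONV": 27.3, "DCMD": 25.2},
    "second": {"MHA": 24.1, "AP-CONV": 26.9, "DCMD": 24.7},
}

def psnr_drops(full, ablated):
    """Return the PSNR lost (in dB) when each module is removed.

    Values are rounded to one decimal to match the table precision and
    to hide floating-point noise from the subtraction.
    """
    return {
        table: {module: round(full[table] - value, 1)
                for module, value in rows.items()}
        for table, rows in ablated.items()
    }

drops = psnr_drops(FULL_MODEL, ABLATED)
for table, row in drops.items():
    for module, drop in row.items():
        print(f"{table} table, without {module}: -{drop} dB")
```

In both tables, removing AP-CONV costs the least PSNR (1.4 dB and 1.3 dB), while removing MHA or DCMD costs between 3.1 dB and 4.1 dB. This comparison covers PSNR only; the other columns do not always rank the modules the same way.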

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kong, D.; Mao, J.; Zhang, Y.; Zhao, X.; Wang, Y.; Wang, S. Dual-Domain Adaptive Synergy GAN for Enhancing Low-Light Underwater Images. J. Mar. Sci. Eng. 2025, 13, 1092. https://doi.org/10.3390/jmse13061092
