Abstract

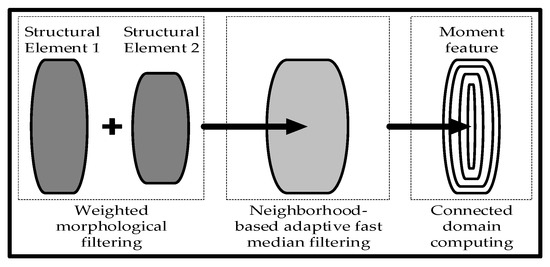

Many ports around the world have implemented surveillance camera systems to monitor vessels and the activities around them. These systems are not very effective at accurately detecting activities around the port because of background noise and congestion interference at the sea surface. As a result, it is difficult to accurately detect vessels, especially smaller vessels, after dark. Some vessels do not comply with maritime rules, particularly in port and safety zones; these must be detected to avoid incidents. For these reasons, in this study, we propose and develop an improved multi-structural morphology (IMSM) approach to eliminate this noise and interference so that vessels can be accurately detected in real time. With this approach, the target vessel is separated from the sea surface background through the weighted morphological filtering of multiple groups of structural elements. Then, neighborhood-based adaptive fast median filtering is used to filter out impulse noise. Finally, a characteristic morphological model of the target vessel is established using the connected domain; this allows the sea surface congestion to be eliminated and the movement of vessels to be detected in real time. Multiple tests are carried out on a small and discrete area of moving vessels. The results from several collected datasets show that the proposed approach can effectively eliminate background noise and congestion interference in video monitoring. The detection accuracy rate and the processing time are improved by approximately 3.91% and 1.14 s, respectively.

1. Introduction

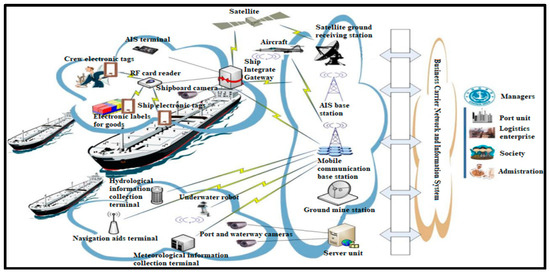

Around ports and secure areas at sea, vessels must comply with certain rules to avoid congestion, accidents, and theft. Therefore, detecting vessels accurately is essential for sea operations in general and, in particular, for ports and secure areas at sea. Research on the detection methods of port vessels is of great significance for realizing the real-time detection of vessels and improving the efficiency of port vessel monitoring systems, especially for ports that use surveillance camera systems. With the development and application of modern sea monitoring systems at various ports, most of the world’s major ports are building extensive CCTV surveillance systems at sea. These ports are committed to building real-time, intuitive, and whole-process CCTV monitoring systems, as shown in Figure 1 [1]. Many ports have vessel traffic service (VTS) systems in place to monitor vessels and activities around them. These VTS systems integrate radar information, DGPS information, and AIS data to accurately monitor vessels at long distances. However, other ports use surveillance camera systems.

Figure 1.

Navigation system architecture.

According to the geographical characteristics of the monitoring system’s control area and the sources of on-site image interference, the characteristics of port surveillance videos are analyzed and summarized in different ways, as follows:

- The target vessel has low contrast: In most cases, the surveillance camera is far away from the target vessel, owing to the relatively vast sea surface. The target vessel only occupies a few pixels in the video image, and its color is relatively close to that of the sea surface background. When the visibility of the sea surface is poor, it is difficult to spot the target in the image [2].

- The noise interference is high, and the sea environment is complex: Regional changes caused by waves on the sea surface are similar to the shape and size of the target vessel, which easily leads to the false detection of vessels. Large areas of sea surface ripples are difficult to remove using common filtering methods. Changes in lighting and the movement of clouds cause background changes over large areas in port video images [3].

- The vessel moves slowly: Under long-distance observation, the position of the target vessel in an image changes slowly and the difference between two images is only a few pixels. This easily leads to the void phenomenon in the central area of the target vessel when using detection methods for moving targets [4].

- The real-time processing of video: Vessel detection methods based on surveillance videos must not only ensure detection accuracy but also process the video in real time. The algorithm also needs to be robust so that maritime regulators can observe the detection results in real time [5].

Recently, much research has been carried out in this field. Li et al. [6] proposed the use of range-Doppler processing to improve the signal-to-noise ratio (SNR) before computing the moving target’s trajectory based on a continuously bistatic range. This technology uses Global Navigation Satellite Systems, which are reliable. However, the limited power budget offered by navigation satellites is a primary concern. A hierarchical method was proposed to detect vessels in inland rivers. Multi-spectral information is used to extract the regions of interest, and then the panchromatic band is used to extract vessel candidates. Then, a mixture of multi-scale deformable part models and a histogram of oriented gradients are used to detect the connected components and the clustered vessels. Finally, a back-propagation neural network is applied to classify the vessel candidates [7]. The advantage of this method is that it can accurately detect vessels in inland rivers as well as in port areas. It can effectively eliminate background noise and congestion interference at sea. However, it combines several algorithms, which makes it complex and difficult to use. Yu et al. [8] proposed a Multi-Head Self-Attention–You Only Look Once (MHSA-YOLO) method for ship object detection in inland rivers. To strengthen the feature information of the ship objects and reduce the interference of background information, the method incorporates MHSA into the feature extraction process. A more straightforward two-way feature pyramid is created throughout the feature fusion process to improve the fusing of feature information, resulting in a detection accuracy of 97.59%. However, its processing time is long (about 4.7 s to perform a correct detection). When it is getting dark, the detection accuracy is just 91.9%, meaning that this method does not perform well late in the evening. Morillas et al.
[9] proposed the use of a block division technique, which splits images into tiny pixel blocks that correspond to small vessel or non-vessel regions. The blocks are classified using a support vector machine utilizing the texture and color information taken from the blocks. Finally, it makes use of a reconstruction algorithm, which extracts the identified vessels and fixes the majority of incorrectly categorized blocks. This method is more computationally efficient because it captures the characteristics of regions more accurately. This method has precision of around 98.14% for the final vessel detection and approximately 96.98% for the classification between vessel blocks and non-vessel blocks. However, the experiments were probably carried out using large vessels. This is because when this method was applied to small vessels, it was discovered that the detection accuracy was lower, with a precision of 93.3% and 90.8% for detection and classification, respectively. Gao et al. [10] proposed a new inter-frame difference algorithm for moving target detection. Texture and color features are used in a two-frame-difference approach. Vessels can be effectively detected, with an accuracy of about 94.7% and a processing time of 2.01 s. However, the false alarm rate is higher for small vessels with low contrast. Tabi et al. [11] designed a vessel detection method with a monitoring system using an automatic identification system and a geographic information system. This vessel detection approach uses mixed Gaussian background modeling, but it results in a large void area in the center of the hull. The accuracy of the detection system is about 92%. However, the processing time is 3.76 s, which is considered poor in engineering applications. Additionally, this approach suffers from serious interference to background updates due to large variations in the sea surface illumination and rapid changes in the cloud background. 
A detection and classification method for ships based on optical remote sensing images was implemented successfully in the field of sea surface remote sensing [12]. This method has a very simple algorithm that is easy to understand. However, it suffers from a high false detection rate of 0.376. Another detection method using optical satellite imagery was proposed; it captures images in visible light [13]. However, the system relies on daylight and clear skies for efficient operation, making it susceptible to weather conditions and atmospheric interference. It cannot perform properly in poor lighting, which limits its capabilities. Additionally, there are many other methods that can be used for vessel detection, including the method of matching the coastline with the information stored in the Geographic Information System (GIS); the classification of regional homogeneity in an image; methods based on statistical models; methods based on deep learning models; methods based on the standardized difference water index; and more.

All the methods mentioned above can effectively improve the SNR of an image, suppressing background interference. They can detect moving targets against a background with just a little noise. However, they detect small vessels without protecting the edge of the moving target signal from being blurred; this leads to congestion. Given the characteristics of the long distances between port surveillance cameras and vessels, detecting small vessels still presents many challenges, especially when it is getting dark and when they are moving slowly. This defect is caused by significant interference from sea surface noise. Therefore, this study proposes an IMSM which takes into account the noise of the sea environment as well as the movement of vessels in the process of their real-time detection. The primary contributions of this paper are summarized as follows:

- The proposed improved multi-structural morphology approach is designed based on physics and intensive mathematical contexts that result in the accurate detection of target vessels.

- Deep Hough transform (DHT), together with Otsu-based adaptive threshold segmentation, enables the removal of the irrelevant lines/occlusions on images and converts them into binary maps.

- The combination of weighted morphological filtering with neighborhood-based adaptive fast median filtering using the connected domain makes it possible to clearly locate and monitor vessel movements in real time.

The rest of this paper is organized as follows: The development of the port vessel detection system is presented in Section 2. The multi-structural morphology filtering design is described in detail in Section 3. Section 4 focuses on and highlights the scientific applications of the IMSM approach, and Section 5 presents the conclusions of this study.

2. Port Vessel Detection System

2.1. Detection Process

Usually, a frame of an image is read from the image sequence for detection. The detection process includes most of the elements presented in Figure 1, including the satellite, antennas, network equipment, server, cameras (video of the operation), etc. These elements work together to obtain well-defined output video image results.

This study focuses on surveillance camera systems, which are tools that monitor and record activities in a specific environment. These systems consist of cameras, recording devices (DVRs or NVRs), and display systems. These elements all work together to improve security by capturing video footage. The cameras can be analog or IP, offering several functions, including motion detection, night vision, and PTZ (pan, tilt, and zoom). For data transmission, IP systems use network cables, while analog systems use coaxial cables. The recorded videos are stored on hard drives. Digital video recorders (DVRs) handle analog data, and network video recorders (NVRs) manage digital data from IP cameras. There are several types, including wireless, digital IP, and analog cameras, which meet different requirements. Their storage times vary depending on factors such as the resolution of video, the number of cameras recording, and the storage capacity of the device [14].

In surveillance setups, video can be viewed in two main ways: locally and remotely. For local viewing, monitors are often part of the system, allowing users to observe live images directly on site. These can be regular TVs or computer monitors that connect to the recorder via an HDMI cable. For remote access, modern systems allow users to view their video streams over the internet or through a dedicated network. Cameras and related devices should be plugged into standard electrical outlets so that they can continue to record and store videos [15].

Video images are powerful tools for communication; they comprise graphic interfaces that allow a digital camera or camcorder to send a video signal to an external viewing or recording device via a video output cable. Original video images contain noise interference, which is a mixture of nuisance alarms and/or false alarms that must be effectively eliminated to avoid misleading results. By definition, any signal produced by an adverse event is called a nuisance alarm, while a signal produced by the system’s electronics that has nothing to do with a sensor or an event is called a false alarm [16,17,18,19,20,21,22,23]. To avoid false alarms, the components of the system must be not only in a good state but also well-connected to each other [24].

2.2. Tracking Path Analysis

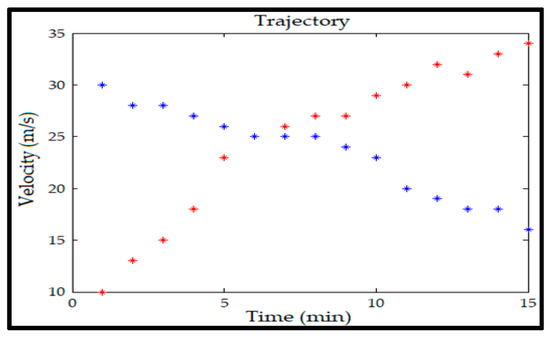

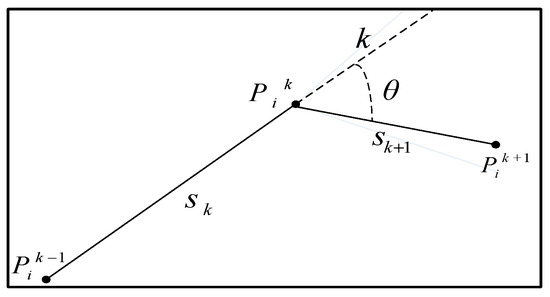

The tracking problem can essentially be defined as the problem of estimating the path or trajectory of a target in an image when the target is moving [25]. In order to observe the trajectory of a target vessel on a sea surface more intuitively, its path needs to be analyzed. Based on the premise that the direction and speed of each pixel in the video image will not change suddenly, the path that the moving target is forming is considered coherent. The path coherence function reflects information such as the direction and velocity of the moving target. It can be used to represent a measure of consistency between the trajectory of a moving target and the constraint of motion; thus, the generated trajectory of a moving target is represented as a series of points in a two-dimensional projection plane, as follows:

where represents two independently moving target trajectories in an image sequence , as shown in Figure 2.

Figure 2.

Trajectories of two independently moving targets.

Let represent the projected coordinates of the sequence point on an image. According to the correlation between the coordinates, the trajectory of the moving target can be represented in the form of a vector, as follows:

The deviation in the path represents the difference between the target and the coherent path, which can be used to measure the consistency of the path of the moving target. Let denote the path deviation of point in image :

where is the path coherence function and represents the motion vector from point to point . The path deviation of target over the whole motion can be expressed as follows:

For independently moving targets, the overall path deviation can be defined as follows:

When path analysis is required for multiple targets at the same time, this can be solved by minimizing the overall path deviation .

Assuming that the video frame rate is high enough, the velocity and direction of motion of the target can be considered to change smoothly between image sequences. Therefore, can be expressed as follows:

where and . The angle and the distances and are shown in Figure 3. The weight coefficients and represent the importance of direction coherence and velocity coherence in path analysis, respectively.

Figure 3.

Schematic diagram of the path coherence function.
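The path coherence measure described above can be sketched as follows. This is a minimal illustration that assumes the commonly used form of the path coherence function, a weighted sum of a direction term, 1 − cos θ, and a speed term, 1 − 2√(d1·d2)/(d1 + d2). The function names, the default weights, and the handling of stationary points are assumptions made for this sketch, not values taken from this study.

```python
import math

def path_coherence(p_prev, p_curr, p_next, w1=0.5, w2=0.5):
    """Deviation of p_curr from a smooth path through three consecutive points.

    Assumed form: w1 * (1 - cos(theta)) + w2 * (1 - 2*sqrt(d1*d2) / (d1 + d2)),
    where theta is the angle between the two motion vectors and d1, d2 are
    their lengths. w1 and w2 weight direction and speed coherence.
    """
    v1 = (p_curr[0] - p_prev[0], p_curr[1] - p_prev[1])
    v2 = (p_next[0] - p_curr[0], p_next[1] - p_curr[1])
    d1, d2 = math.hypot(*v1), math.hypot(*v2)
    if d1 == 0.0 and d2 == 0.0:
        return 0.0  # target did not move: treated as perfectly coherent
    if d1 == 0.0 or d2 == 0.0:
        return w2   # direction undefined; only the speed term (equal to 1) counts
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (d1 * d2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding error
    direction_term = 1.0 - cos_theta
    speed_term = 1.0 - 2.0 * math.sqrt(d1 * d2) / (d1 + d2)
    return w1 * direction_term + w2 * speed_term

def trajectory_deviation(points, w1=0.5, w2=0.5):
    """Sum of the path deviations over all interior points of one trajectory."""
    return sum(
        path_coherence(points[k - 1], points[k], points[k + 1], w1, w2)
        for k in range(1, len(points) - 1)
    )

def overall_deviation(trajectories, w1=0.5, w2=0.5):
    """Overall path deviation for several independently moving targets."""
    return sum(trajectory_deviation(t, w1, w2) for t in trajectories)
```

A target moving in a straight line at constant speed has zero deviation, whereas a right-angle turn at constant speed yields a deviation of w1, because only the direction term contributes.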

When multiple independently moving targets are tracked at the same time, occlusion occurs between them, and the targets in some image frames may partially or completely disappear, which leads to errors in the target trajectory. Minimization of the total trajectory deviation (Equation (5)) using a given path coherence function assumes that the same number of targets is detected in each image in an image sequence and that the detected target points always represent the same targets [26]. However, once a moving target is occluded in an image, these assumptions cannot be satisfied.

To overcome the occlusion problem, other local trajectory constraints must be considered, allowing the trajectory to be incomplete in the event that the target is occluded, disappears, or is not detected. In this study, it is assumed that each vessel is moving at a uniform speed due to their idle motion characteristics in the image and the fact that their directions do not change abruptly [27,28,29,30]. The speed of each vessel is calculated based on the coordinate information obtained from the real-time detection of the target vessel. The vessel motion velocity is taken as the motion assumption condition of the path coherence function . Therefore, the trajectory constraint of the vessel is set as follows:

is the maximum value of the path coherence function, Equation (8) represents any two consecutive trajectory points , and the displacement of must be less than the preset threshold . In Equation (9), when the target trajectory is non-complete, the next frame position of a target is predicted according to the target motion velocity and the video frame rate .
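The handling of incomplete trajectories described above can be illustrated with a constant-velocity sketch. It assumes that the velocity is expressed in pixels per second and that a detection is rejected when its displacement from the last trajectory point reaches a preset threshold. The names `predict_next_position`, `extend_trajectory`, and `max_step` are hypothetical and are introduced only for this example.

```python
import math

def predict_next_position(position, velocity, fps):
    """Constant-velocity prediction of the position in the next frame.

    velocity is assumed to be in pixels per second, fps in frames per second.
    """
    dt = 1.0 / fps
    return (position[0] + velocity[0] * dt, position[1] + velocity[1] * dt)

def extend_trajectory(trajectory, detection, velocity, fps, max_step):
    """Append the detection if it is plausible, otherwise the predicted point.

    detection is None when the target is occluded or missed in this frame.
    """
    last = trajectory[-1]
    if detection is not None:
        step = math.hypot(detection[0] - last[0], detection[1] - last[1])
        if step < max_step:
            return trajectory + [detection]
    return trajectory + [predict_next_position(last, velocity, fps)]
```

For example, with a frame rate of 24 fps and a velocity of (24, -12) pixels per second, a target last seen at (100, 50) is predicted at (101, 49.5) in the next frame.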

3. Design of Improved Multi-Structural Morphology Filtering Approach

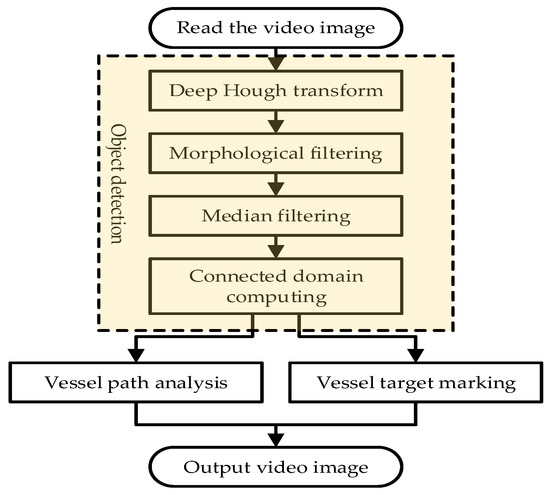

3.1. Target Vessel Detection Process

A frame of an image is read from an image sequence for target vessel detection, and then the Hough transform algorithm, together with the Otsu-based adaptive threshold segmentation method, is used not only to eliminate the image’s superfluous lines and occlusions but also to convert the image into a binary map [31,32]. Next, the weighted morphological filtering of multiple groups of structural elements is carried out to separate the target vessel from the port background. Then, based on the geometric characteristics of the vessel, neighbor-based adaptive fast median filtering is performed to filter out the impulse noise on the image. Subsequently, the connected domain labeling algorithm is performed to obtain the target information of the vessel and establish a model of its morphological characteristics. According to the target detection results, the target vessel is marked on the original image and its movement trajectory is recorded. Finally, the processed video image is output. The detection process and the noise-processing approach proposed in this paper are shown in Figure 4 and Figure 5, respectively. Figure 5 presents the dynamic morphological characteristic model of a target vessel to eliminate the noise interference at the sea surface in the final detection results.

Figure 4.

Flowchart of the multi-structural morphology approach.

Figure 5.

Stray noise removal process.
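The threshold segmentation step in the detection process can be sketched with the classical Otsu method, which selects the gray level that maximizes the between-class variance of the histogram. This is a minimal pure-Python illustration for an 8-bit grayscale image stored as a list of rows. It is not the implementation used in this study, and the function names are hypothetical.

```python
def otsu_threshold(image, levels=256):
    """Return the gray level that maximizes the between-class variance."""
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    count0, sum0 = 0, 0  # pixel count and gray-level sum of the class <= t
    for t in range(levels):
        count0 += hist[t]
        sum0 += t * hist[t]
        count1 = total - count0
        if count0 == 0 or count1 == 0:
            continue  # one class is empty: variance undefined for this t
        mean0 = sum0 / count0
        mean1 = (sum_all - sum0) / count1
        # Proportional to the between-class variance (constant factor dropped).
        score = count0 * count1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_score, best_t = score, t
    return best_t

def binarize(image, threshold):
    """Convert a grayscale image into a binary map (1 = above threshold)."""
    return [[1 if value > threshold else 0 for value in row] for row in image]
```

For an image with two well-separated gray levels, any threshold between the two levels gives the same score, and the sketch returns the lowest such level.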

3.2. Deep Hough Transform

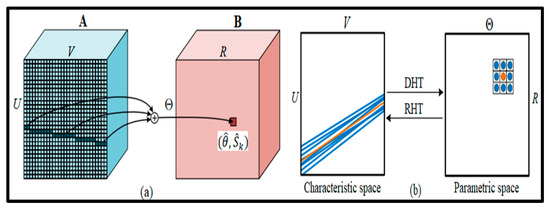

Changes in light and occlusion result in irrelevant lines that are eliminated by means of DHT [33,34,35]. As shown in Figure 6, in a 2D image , there is a straight line that can be parameterized using two parameters.

Figure 6.

The structure of Hough transform. (a) Characteristics in the characteristic space (blue) accrue to a point in the parametric space (pink) called . (b) An example of the suggested context-aware feature aggregation system.

is the angle between and distance and the distance parameter at the origin point . can be parameterized by bias and slope . Additionally, reverse mapping can be performed to translate any valid pair to a line instance. The line-to-parameters and the inverse mapping are defined as follows:

where and represent bijective mapping, and and are quantized into discrete bins that are processed by computer programs. The quantization can be expressed as follows:

where and are the quantized line parameters, while and are the quantization intervals for and , respectively. The number of quantization levels and can be written as follows:

Considering the input image , the convolution neural network characteristics are first extracted, where is the number of channels, while and are the spatial size. Then, DHT takes as the input image and produces the transformed characteristics, . The sizes of the transformed characteristics and are determined using the quantization intervals, as shown in Equation (12) [36,37]. As displayed in Figure 6a, for any line on the image, the characteristics of every pixel along are combined to in the parametric space :

where is the positional index. Equation (11) determines and based on the characteristics of the line , which are quantized into discrete grids using Equation (12). According to the number of and , there is a unique line of candidates denoted by [38]. For all these candidate lines, DHT is performed and their respective characteristics are associated with their corresponding positions in . As shown in Figure 6b, the characteristics of neighboring lines in characteristic space are converted into neighboring points in parametric space. In parametric space, a simple 3 × 3 convolutional operation makes it easy to capture contextual information for the center line (orange). It should be noted that DHT is order-independent in both characteristic space and parametric space, making it highly parallelizable [39].
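The quantization of the line parameters and the accumulation along candidate lines can be illustrated with the classical Hough transform, in which each foreground pixel votes for every quantized (θ, r) pair satisfying r = x cos θ + y sin θ. The sketch below votes with binary pixels rather than aggregating CNN features, so it is a simplified stand-in for DHT. The choice of the top-left image corner as the origin and the default quantization intervals are assumptions made for this example.

```python
import math

def hough_accumulate(binary, d_theta_deg=1.0, d_r=1.0):
    """Vote in a quantized (theta, r) parameter space for a binary image."""
    h, w = len(binary), len(binary[0])
    n_theta = int(round(180.0 / d_theta_deg))        # number of angle bins
    offset = int(math.ceil(math.hypot(h, w) / d_r))  # shifts r so indices are >= 0
    n_r = 2 * offset + 1                             # number of distance bins
    acc = [[0] * n_r for _ in range(n_theta)]
    trig = [
        (math.cos(math.radians(i * d_theta_deg)), math.sin(math.radians(i * d_theta_deg)))
        for i in range(n_theta)
    ]
    for y in range(h):
        for x in range(w):
            if not binary[y][x]:
                continue
            for ti, (c, s) in enumerate(trig):
                ri = int(round((x * c + y * s) / d_r)) + offset
                acc[ti][ri] += 1
    return acc, offset

def strongest_line(acc, offset, d_theta_deg=1.0, d_r=1.0):
    """Return (theta in degrees, r, votes) of the accumulator cell with most votes."""
    votes, ti, ri = max(
        (v, ti, ri) for ti, row in enumerate(acc) for ri, v in enumerate(row)
    )
    return ti * d_theta_deg, (ri - offset) * d_r, votes
```

Because the votes of different pixels and of different parameter cells are independent of one another, the two inner loops can be executed in any order, which is the property that makes the transform easy to parallelize.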

3.3. Weighted Morphological Filtering

Weighted morphology or mathematical morphology consists of a set of morphological algebraic operations that simplify image data, maintain the basic shape properties of images, and remove extraneous structures for analysis and recognition purposes [40]. Morphological filtering uses nonlinear algebra tools to correct the signal by using the local characteristics of the signal; it can effectively filter out the noise in grayscale images [41]. From the two basic morphological operations, dilation and erosion, various transformation operators can be derived, such as the morphological opening and closing operations. These morphological operations can be applied in image-processing algorithms related to shape and structure, including image segmentation [42], feature extraction, edge detection, image filtering, etc. Let represent the input image and represent the structural elements; then, erosion and dilation can be defined as follows:

Based on the principle of operation of erosion and dilation, morphological opening and closing operations can be defined separately as follows:

The top-hat operation is also a classical spatial filtering algorithm derived from the basic operations of mathematical morphology, which is often used as a high-pass filtering operator for image preprocessing [43]. The opening operation smooths the outer boundary of objects in the image and removes small protrusions of the contour; the top-hat image is then obtained by subtracting the opened image from the original image, defined as follows:

Since morphological filtering is related to the size of the structure, the size of the structure determines the effect of high-pass filtering [44]. When selecting a structure according to the structural characteristics of the target in the image, the smaller the size of the structure selected, the smaller the size of the target that can be preserved, and the better the low-frequency background filtering effect in the image. The minimum target size can be approximated using the structure, as follows:

where and represent the maximum 2D size of the smallest target in the image plane and the maximum 2D size of the structure used for morphological filtering, respectively.
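For reference, the operations named in this subsection have the following standard textbook definitions for a flat structural element. The symbols $f$ (input image), $b$ (structural element), and $D_b$ (support of $b$) are notation assumed for this summary and may differ from the notation of the numbered equations in this paper. Erosion and dilation are the operations also referred to as corrosion and expansion.

```latex
\begin{align}
  (f \ominus b)(x, y) &= \min_{(s,t) \in D_b} f(x + s,\, y + t)
      && \text{(erosion)} \\
  (f \oplus b)(x, y)  &= \max_{(s,t) \in D_b} f(x - s,\, y - t)
      && \text{(dilation)} \\
  f \circ b   &= (f \ominus b) \oplus b && \text{(opening)} \\
  f \bullet b &= (f \oplus b) \ominus b && \text{(closing)} \\
  T(f)        &= f - (f \circ b)        && \text{(top-hat)}
\end{align}
```

Opening removes bright structures that cannot contain the structural element, so the top-hat image retains exactly those small bright structures.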

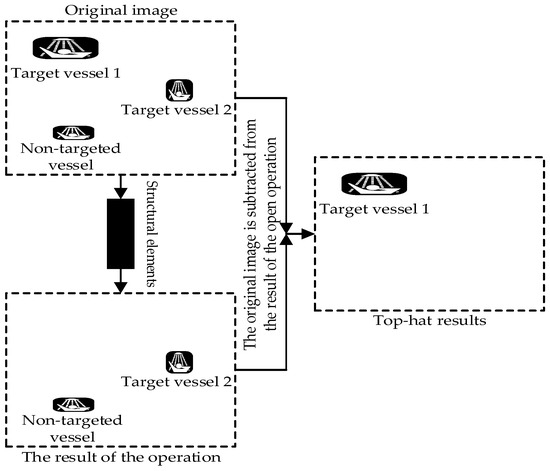

The background suppression algorithm uses the morphological operation results to estimate the background components and then subtracts them from the original image to obtain the target image without the background components [45]. However, when there are multiple targets in the image and the target size difference is large, the traditional morphological filtering of a single structural element will filter out the small targets at the same time as the low-frequency background. This process is shown in Figure 7.

Figure 7.

Results of traditional morphological filtering.

In order to avoid the shortcomings caused by the morphological filtering of a single structural element, this study considers the difference in the geometric characteristics of the target and the background; then, it adopts the weighted open operations of multiple structural elements [46]. Consider the input image represented in the following equation:

where is the weight coefficient, while and are the opening operations of different structures. The value of can be adjusted according to the ratio of the information entropy of the filtered image to the information entropy of the original image. The selection of structural elements is the key to morphological filtering. Thus, as the structural operator of the non-convex set cannot obtain more useful information because most of the line segments connecting the two points are located outside the set, this algorithm selects the cruciform structural elements of the convex set according to the geometric characteristics of the target vessel. The structural elements of and are as follows:

The size of the structural element is large, which will blur the edge details of the object, but the effect of filtering out the background noise is good. The size of the structural element is small, and its filtering effect is poor, but it can effectively protect the edge details of the object. The above two types of structural elements of different sizes are used for weighted open operations, which can filter out background noise that is smaller in size compared to the structural elements. At the same time, the gray value of the image and the large brightness area are basically unaffected by filtering. Since the sea background noise is suppressed, small vessels can be clearly detected; however, their movements still need to be updated. Furthermore, this method can effectively improve the signal-to-noise ratio of an image by enhancing the target and suppressing the background noise [47]. However, it does not protect the edge of the moving target signal from being blurred. Therefore, the median filter is used to not only protect the edge of the moving target signal but also to filter out the impulse noise in the background image.
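The weighted opening with two cross-shaped structural elements can be sketched as follows. The sketch assumes that the two openings are combined as a convex combination, alpha times the opening with the small element plus (1 − alpha) times the opening with the large element. The radii, the value of `alpha`, and the border handling (out-of-image neighbors are ignored) are assumptions made for this example rather than the settings of this study.

```python
def cross_offsets(radius):
    """Offsets (dy, dx) of a flat cross-shaped structural element."""
    offsets = [(0, 0)]
    for k in range(1, radius + 1):
        offsets += [(k, 0), (-k, 0), (0, k), (0, -k)]
    return offsets

def _morph(image, offsets, pick):
    """Apply min (erosion) or max (dilation) over the in-image neighbors."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            values = [
                image[y + dy][x + dx]
                for dy, dx in offsets
                if 0 <= y + dy < h and 0 <= x + dx < w
            ]
            out[y][x] = pick(values)
    return out

def erode(image, offsets):
    return _morph(image, offsets, min)

def dilate(image, offsets):
    # The cross is symmetric, so no reflection of the element is needed.
    return _morph(image, offsets, max)

def opening(image, offsets):
    return dilate(erode(image, offsets), offsets)

def weighted_opening(image, small, large, alpha):
    """Assumed form: alpha * opening(small) + (1 - alpha) * opening(large)."""
    open_small = opening(image, small)
    open_large = opening(image, large)
    return [
        [alpha * a + (1.0 - alpha) * b for a, b in zip(row_s, row_l)]
        for row_s, row_l in zip(open_small, open_large)
    ]
```

In this form, a bright spot smaller than both elements is removed by both openings, while the interior of a larger bright object survives both and keeps its gray value.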

3.4. Neighbor-Based Adaptive Fast Median Filtering

The filtering principle of the median filter is to sort the pixels in the neighborhood of a particular pixel in ascending or descending order and to use the median of the sorted values as the new value of that pixel. That is, by selecting a filtering window, sorting the pixels in the window, and assigning the median value to the current pixel, the median filtering result of the entire image can be obtained by iterating through each pixel on the image [48]. The expression for the median filter is as follows:

At the sea surface of a port, there is a lot of impulse noise, which seriously interferes with the target detection. As such, before vessel detection, the image should be preprocessed to enhance the target vessel and suppress the impulse noise. The traditional median filtering approach uses a fixed-size window, and the loss of subtle points in the image is obvious when filtering [49]. All the pixels in the window must be reordered every time the window is moved; for a window of elements, the algorithm complexity is . This algorithm does not consistently deliver updates as the window moves; therefore, in this study, the fast median filtering based on histogram statistics is adopted. The median value is obtained indirectly by calculating the histogram of the pixels in the window. When the window moves, only the pixels that have moved in and out are updated. A suitable equation is as follows:

and are the average time complexities of the quick sorting of the and elements, respectively, and is a constant. It has been demonstrated that , where is a constant [50]. The de-noising performance of median filtering is related to the density of the input noise signal. If the input noise signal is normally distributed and the mean value is zero, the variance of the output noise signal after median filtering is approximately as follows:

where is the length of the filter window, is the input noise power, is the mean input noise, and is the input noise density function. From the above formula, the median filtering algorithm will effectively filter out the impulse noise with a pulse width of less than .
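The histogram-based fast median filter described above can be sketched as follows. The window histogram is built once per row; when the window moves one pixel to the right, only the column that leaves and the column that enters are updated, and the median is read from the cumulative histogram. The border handling (edge pixels are replicated) and the function names are assumptions made for this example.

```python
def clamp(value, upper):
    """Clamp an index to the range [0, upper]."""
    return max(0, min(value, upper))

def median_from_hist(hist, count):
    """Return the median gray level of `count` pixels from their histogram."""
    target = count // 2 + 1  # rank of the median for an odd number of pixels
    cumulative = 0
    for level, occurrences in enumerate(hist):
        cumulative += occurrences
        if cumulative >= target:
            return level

def fast_median_filter(image, radius=1, levels=256):
    """Sliding-window median filter with incremental histogram updates."""
    h, w = len(image), len(image[0])
    n = (2 * radius + 1) ** 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        rows = [clamp(y + dy, h - 1) for dy in range(-radius, radius + 1)]
        # Build the histogram for the first window of this row.
        hist = [0] * levels
        for yy in rows:
            for dx in range(-radius, radius + 1):
                hist[image[yy][clamp(dx, w - 1)]] += 1
        out[y][0] = median_from_hist(hist, n)
        # Slide right: remove the leaving column, add the entering column.
        for x in range(1, w):
            x_out = clamp(x - radius - 1, w - 1)
            x_in = clamp(x + radius, w - 1)
            for yy in rows:
                hist[image[yy][x_out]] -= 1
                hist[image[yy][x_in]] += 1
            out[y][x] = median_from_hist(hist, n)
    return out
```

Each window move costs one histogram update per window row plus a scan over the gray levels, instead of a full re-sort of all pixels in the window.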

In order to improve the median filter of a fixed window size and the loss of fine points in the image, the method of increasing the filter window is adopted. At the same time, to avoid misjudging the pixels with higher pixel values at the edge of the target as noise points, the neighborhood pixels are used as the judgment basis for the output. For example, if the window value is [20, 30, 30, 35, 40, 50, 100, 100, 100], 100 is likely to be the target edge point, which is misjudged as noise. Let be the gray value at the position in the image, be the current filtered window, be the maximum filtered window, and be the maximum, minimum, and median values of gray in the filtered window, respectively, and be the number of pixels in the window equal to . The process of adaptive median filtering is as follows [51,52,53]:

- If where T is the threshold, then jumps to step 2; otherwise, increase the window . Then, quickly sort the median values for the new window until the above conditions are met; then the output is .

- If , , then the output is ; otherwise, determine whether is true.

- If it is true, then the output is ;

- If it is not true, then the output is .
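The window-growing idea behind the steps above can be illustrated with the textbook adaptive median filter. Note that this sketch is the classical variant: it grows the window until the median is not an extreme value and keeps the pixel unless the pixel itself is an extreme value. It does not include the threshold T or the neighborhood count test used in this study, and `max_size` is a hypothetical parameter name.

```python
def adaptive_median_filter(image, max_size=7):
    """Classical adaptive median filter (windows are cropped at the border)."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            size = 3
            while True:
                r = size // 2
                window = sorted(
                    image[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))
                )
                z_min, z_max = window[0], window[-1]
                z_med = window[len(window) // 2]
                if z_min < z_med < z_max:
                    # Median is not an impulse: keep the pixel unless the
                    # pixel itself is an extreme value of the window.
                    z = image[y][x]
                    out[y][x] = z if z_min < z < z_max else z_med
                    break
                size += 2  # median may be an impulse: enlarge the window
                if size > max_size:
                    out[y][x] = z_med
                    break
    return out
```

Pixels that are not extreme values of their window are left unchanged, which is what preserves fine details compared with a fixed-window median filter.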

3.5. Connected Domain Calculation Based on Moment Features

The morphological features of a vessel can be obtained by using the contour-based connectivity domain marking method [54]. The moment characteristics of the connected region are expressed as follows:

where represents the region of the -order moment, denotes the central moment, and are the region point coordinates, and and represent the region centroid coordinates. In this study, the length of the region , the height of the region , the aspect ratio , and the area of the region are used to describe the target vessel. These four quantities form a four-dimensional target feature vector which is directly deleted when it does not meet the set threshold in the binary image [55]. The feature vector must execute the vessel’s contour moment function.
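The extraction of the four region descriptors can be sketched as follows. The sketch labels connected regions with a breadth-first search under 8-connectivity and derives the area and centroid from the zeroth- and first-order moments. The use of the bounding box for length and height, the dictionary keys, and the thresholds in `keep_vessels` are assumptions made for this example.

```python
from collections import deque

def region_features(binary):
    """Return area, centroid, length, height, and aspect ratio per region."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y0 in range(h):
        for x0 in range(w):
            if not binary[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first search over the 8-connected neighborhood.
            queue = deque([(y0, x0)])
            seen[y0][x0] = True
            points = []
            while queue:
                y, x = queue.popleft()
                points.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            m00 = len(points)               # zeroth-order moment (area)
            m10 = sum(x for _, x in points)  # first-order moment in x
            m01 = sum(y for y, _ in points)  # first-order moment in y
            xs = [x for _, x in points]
            ys = [y for y, _ in points]
            length = max(xs) - min(xs) + 1
            height = max(ys) - min(ys) + 1
            regions.append({
                "area": m00,
                "centroid": (m10 / m00, m01 / m00),
                "length": length,
                "height": height,
                "aspect": length / height,
            })
    return regions

def keep_vessels(regions, min_area, min_aspect, max_aspect):
    """Discard regions whose features fall outside the assumed thresholds."""
    return [
        r for r in regions
        if r["area"] >= min_area and min_aspect <= r["aspect"] <= max_aspect
    ]
```

Isolated noise pixels form regions with an area of one pixel, so a minimum-area threshold alone already removes them from the binary image.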

4. Engineering Application Case

4.1. Simulation Environment and Data Acquisition

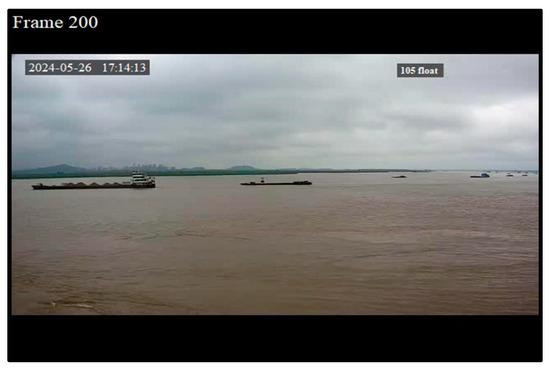

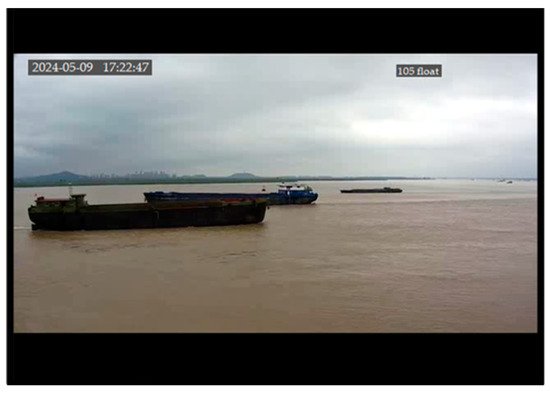

The engineering applications were carried out as part of the East China Sea offshore wind energy project in Zhangjiagang. The surveillance camera used in this application case was located in the port of Zhangjiagang City, at about 800 m from the target vessel. Zhangjiagang port is an important hub for the transportation of goods and large equipment by sea in China. This port has become one of the largest international trading ports in China, with a large flow of seaworthy vessels, mainly including bulk carriers, container vessels, cargo vessels, etc. Figure 8 shows a real video image taken from the port of Zhangjiagang; the video has a duration of 1 min and 30 s, and its resolution is about . A server was used as the simulation and development environment; its memory was 4 GB, the system platform was the 32-bit version of WinXP, the software implementation platforms were VS2010 and OpenCV 2.4.8, and the video frame rate was 24 fps.

Figure 8. Original video image (frame 200).

4.2. Program Verification

In total, 750 frames of images were continuously collected from the port surveillance video; the length of each frame was 0.28 s. The basic frame used in this application was frame 200. In order to verify the background noise suppression performance of each algorithm used in this study, the mean square error, peak signal-to-noise ratio, and information entropy of the results obtained before and after the use of the algorithm were calculated. The calculation formulas are as follows [56]:

$$\mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left[ I(i, j) - K(i, j) \right]^{2}$$

$$\mathrm{PSNR} = 10 \log_{10} \left( \frac{\mathrm{MAX}_{I}^{2}}{\mathrm{MSE}} \right)$$

$$H = - \sum_{i=0}^{L-1} p_{i} \log_{2} p_{i}$$

where $\mathrm{MSE}$ is the mean square error, $I$ is the original image of size $M \times N$, $K$ is the opened image, $\mathrm{PSNR}$ is the peak signal-to-noise ratio, $\mathrm{MAX}_{I}$ is the maximum value of the image point color, $H$ is the information entropy of the image, $L$ is the total number of gray levels of the image, and $p_{i}$ is the probability distribution of pixels with a gray value of $i$. The calculation results for each algorithm, including the morphological filtering and median filtering methods, are shown in Table 1 and Table 2, respectively.
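The three indicators can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: images are assumed to be 8-bit grayscale and stored as nested lists of integers, the function names are hypothetical, and the standard textbook definitions of the mean square error, peak signal-to-noise ratio, and Shannon entropy are assumed.

```python
import math
from collections import Counter
from typing import List

Image = List[List[int]]  # grayscale image as rows of integer gray values


def mse(original: Image, processed: Image) -> float:
    """Mean square error between two images of identical size."""
    total, count = 0.0, 0
    for row_o, row_p in zip(original, processed):
        for a, b in zip(row_o, row_p):
            total += (a - b) ** 2
            count += 1
    return total / count


def psnr(original: Image, processed: Image, max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(original, processed)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


def entropy(image: Image) -> float:
    """Shannon entropy (bits) of the gray-level distribution."""
    counts = Counter(v for row in image for v in row)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical 2 x 2 example: one pixel differs by 10 gray levels.
reference = [[0, 255], [255, 0]]
filtered = [[0, 255], [255, 10]]
example_mse = mse(reference, filtered)
example_psnr = psnr(reference, filtered)
```

A lower mean square error and a higher peak signal-to-noise ratio indicate that the processed image is closer to the reference image.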

Table 1. Comparison of the suppression of noise at the sea surface.

Table 2. Comparison of impulse noise suppression.

The specific steps to complete the proposed approach are as follows.

4.2.1. First Step

The first step is to acquire the original video image. The input image is recorded as .

4.2.2. Second Step

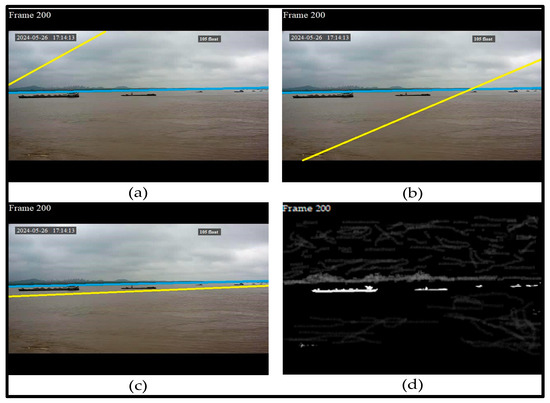

Next, deep Hough transform based on a line detector is used to detect and remove the irrelevant lines caused by changes in light and occlusion. The sea–sky background is segmented to reduce the scanning area and further improve the detection efficiency. Following the translation of the DHT features into the parametric space, the grid location (θ, r) will align to features in the feature space along a whole line. These tasks are performed using Equations (10)–(13). For this study’s application case, , , and . Entities close to the yellow line are translated to surrounding points near the target; the blue line separates the seawater from the sky. The results of this task are displayed in Figure 9.

Figure 9. Images and annotations (yellow and blue lines) of DHT. (a) S = 0.2; (b) S = 0.5; (c) S = 0.8; (d) converted image.
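The idea of mapping image points to a (θ, r) parameter space can be illustrated with the classical Hough voting scheme. The sketch below is not the deep Hough transform used in this study, which operates on learned features; it is a plain voting accumulator with a hypothetical function name, shown only to make the parameterization r = x cos θ + y sin θ concrete.

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def hough_strongest_line(points: Iterable[Tuple[int, int]],
                         theta_step_deg: int = 1) -> Tuple[int, int, int]:
    """Return (theta in degrees, r, votes) of the most voted line.

    Each point (x, y) votes for every discretized line passing through
    it, using the normal form r = x*cos(theta) + y*sin(theta).
    """
    votes: Counter = Counter()
    for x, y in points:
        for theta_deg in range(0, 180, theta_step_deg):
            theta = math.radians(theta_deg)
            r = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(theta_deg, r)] += 1
    (theta_deg, r), count = votes.most_common(1)[0]
    return theta_deg, r, count


# Hypothetical example: ten edge points on a horizontal line y = 5,
# standing in for a sea-sky boundary.
horizon_points = [(x, 5) for x in range(0, 100, 10)]
strongest = hough_strongest_line(horizon_points)
```

In this example, all ten points fall into the accumulator cell for θ = 90° and r = 5, which corresponds to the horizontal line y = 5.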

4.2.3. Third Step

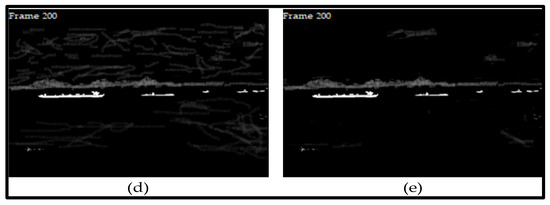

After the image is converted, the weighted morphology process is carried out. A two-stage morphological filtering process is performed, starting with the use of the open and close operations using Equations (20) and (21), where the weight coefficient is , and are the opening operations, and is the structural element. Then, the top-hat transform is used to polish the outer boundary of the image utilizing Equations (18) and (19). The results of these operations can be observed in Figure 10.

Figure 10. Results of morphological filtering: (d) converted image; (e) results after morphological filtering.
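The weighted combination of openings with several structural elements can be sketched as follows. This is a minimal sketch under stated assumptions and not the exact filter of Equations (20) and (21): the structural elements, the equal weights, and all function names are hypothetical, the structural elements are assumed to be flat and to contain the origin, and pixels outside the image border are simply ignored.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]
Offsets = Sequence[Tuple[int, int]]  # (dy, dx) offsets, including (0, 0)


def _scan(img: Image, offsets: Offsets,
          pick: Callable, sign: int) -> Image:
    """Apply min or max over the neighborhood defined by the offsets."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[y + sign * dy][x + sign * dx]
                    for dy, dx in offsets
                    if 0 <= y + sign * dy < h and 0 <= x + sign * dx < w]
            row.append(pick(vals))
        out.append(row)
    return out


def erode(img: Image, offsets: Offsets) -> Image:
    return _scan(img, offsets, min, +1)


def dilate(img: Image, offsets: Offsets) -> Image:
    return _scan(img, offsets, max, -1)  # uses the reflected element


def opening(img: Image, offsets: Offsets) -> Image:
    """Erosion followed by dilation with the same structural element."""
    return dilate(erode(img, offsets), offsets)


def weighted_opening(img: Image, elements: Sequence[Offsets],
                     weights: Sequence[float]) -> Image:
    """Weighted sum of openings, one per structural element."""
    opened = [opening(img, se) for se in elements]
    h, w = len(img), len(img[0])
    return [[sum(wk * op[y][x] for wk, op in zip(weights, opened))
             for x in range(w)] for y in range(h)]


HORIZONTAL = [(0, -1), (0, 0), (0, 1)]   # 1 x 3 line element
VERTICAL = [(-1, 0), (0, 0), (1, 0)]     # 3 x 1 line element

bar = [[0] * 5 for _ in range(5)]
bar[2][1:4] = [9, 9, 9]                  # short horizontal structure
bar_result = weighted_opening(bar, [HORIZONTAL, VERTICAL], [0.5, 0.5])

impulse = [[0] * 5 for _ in range(5)]
impulse[2][2] = 9                        # isolated bright pixel
impulse_result = weighted_opening(impulse, [HORIZONTAL, VERTICAL], [0.5, 0.5])
```

In this toy example, the isolated bright pixel is removed by both openings, whereas the horizontal bar survives the opening with the horizontal element and is therefore retained at half of its intensity.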

Table 1 summarizes the performance of the morphological filtering algorithm.

Based on these results, the ripples (sea background noise) remaining in the image were significantly reduced after the weighted morphological filtering was applied. The lower MSE and the smaller number of corrugated bands after filtering confirm this. However, since the image still contained interference, it was necessary to apply the median filter to remove it from the image.

4.2.4. Fourth Step

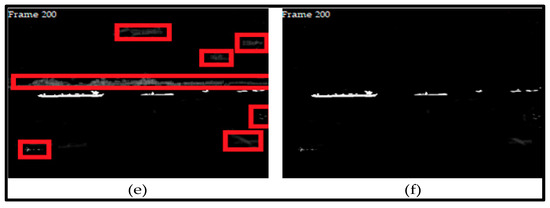

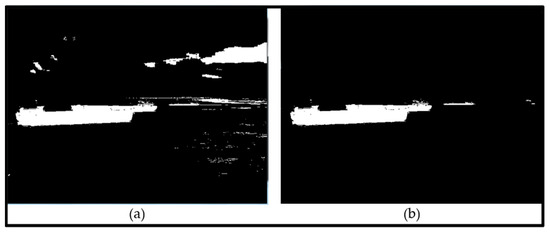

The neighborhood-based adaptive fast median filtering algorithm was adopted using Equations (22)–(24) to remove the impulse noise from the image. The length of the filter window was 4.9. The results before and after processing are displayed in Figure 11, where Figure 11e is the resulting image after the morphological filter was applied. It can be seen from the results that after processing, the random noise caused by the sky and the waves of the sea was almost entirely eliminated; the resulting profile is closer to the real target.

Figure 11. Effects of median filtering: (e) result before applying the median filtering, where the red boxes indicate the remaining background noise in the image; (f) result after median filtering.

Table 2 shows the performance of the neighborhood-based adaptive fast median filtering algorithm.
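For reference, the classical adaptive median filter [53] can be sketched as follows. This is the textbook algorithm and not the neighborhood-based fast variant of Equations (22)–(24): the function name and the maximum window size are hypothetical, and windows are simply clipped at the image border.

```python
from typing import List

Image = List[List[int]]


def adaptive_median(img: Image, max_window: int = 7) -> Image:
    """Classical adaptive median filter for impulse noise.

    The window grows from 3 x 3 until its median is not an extreme
    value; the pixel is then kept if it is not an extreme value itself
    and replaced by the median otherwise.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            size = 3
            while True:
                r = size // 2
                window = sorted(
                    img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))
                )
                z_min, z_max = window[0], window[-1]
                z_med = window[len(window) // 2]
                if z_min < z_med < z_max:
                    # The median is reliable; keep the pixel unless it
                    # is itself an extreme value of the window.
                    keep = z_min < img[y][x] < z_max
                    out[y][x] = img[y][x] if keep else z_med
                    break
                size += 2
                if size > max_window:
                    out[y][x] = z_med  # largest window reached
                    break
    return out


# Hypothetical example: a smooth gradient with one "salt" pixel.
noisy = [[100 + x + y for x in range(5)] for y in range(5)]
noisy[2][2] = 255
restored = adaptive_median(noisy)
```

In this example, the impulse at the center is replaced by the local median, while neighboring pixels that are not window extremes keep their original values.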

Then, the connected domain is applied to locate and monitor the vessels’ movement in real time.

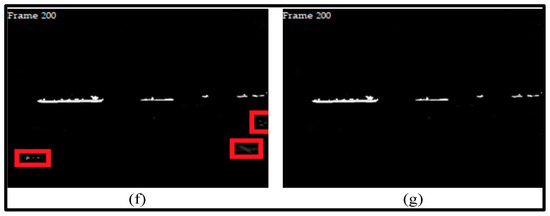

4.2.5. Fifth Step

This step consists of computing the connected domain using Equations (25) and (26). The target feature vector is also calculated. The aspect ratio and the area were set according to multiple experiments. Thus, the number of contours of multiple frames of images is counted and saved, and the influence of discrete surface noise on the vessel detection results is excluded. When the target satisfies both , at the same time and appears in successive frames, it is identified as a target vessel. The detection results and the detected vessels are shown in Figure 12 and Figure 13, respectively.
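The connected domain step can be sketched as a labeling pass followed by a threshold test on each region. This is a minimal sketch under stated assumptions: 8-connectivity is assumed, the threshold values in the example are hypothetical placeholders rather than the values set in this study, and the check for persistence across successive frames is omitted.

```python
from collections import deque
from typing import List, Tuple

Pixel = Tuple[int, int]  # (x, y)


def connected_regions(binary: List[List[int]]) -> List[List[Pixel]]:
    """Group foreground pixels into 8-connected regions (breadth-first)."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(x, y)]), []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        if (0 <= nx < w and 0 <= ny < h
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            regions.append(pixels)
    return regions


def is_vessel_candidate(pixels: List[Pixel], min_area: int,
                        min_ratio: float, max_ratio: float) -> bool:
    """Keep a region only if its area and aspect ratio pass the thresholds."""
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    length = max(xs) - min(xs) + 1
    height = max(ys) - min(ys) + 1
    ratio = length / height
    return len(pixels) >= min_area and min_ratio <= ratio <= max_ratio


# Hypothetical binary frame: one elongated blob and one noise pixel.
frame = [[0] * 10 for _ in range(6)]
for row in (1, 2):
    for col in range(1, 7):
        frame[row][col] = 1
frame[4][8] = 1

regions = connected_regions(frame)
vessels = [r for r in regions
           if is_vessel_candidate(r, min_area=4, min_ratio=1.5, max_ratio=8.0)]
```

In this example, the isolated pixel forms its own region and is rejected by the area threshold, whereas the elongated blob is retained as a vessel candidate.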

Figure 12. (f) Result before applying the connected domain, where the red boxes indicate the remaining background noise in the image; (g) result after applying the connected domain.

Figure 13. The outcome of the detected vessels.

The following can be observed in the images:

- Undesirable edges and protrusions in the target areas of the vessels were filtered out.

- The four-dimensional target feature vector was effective, as it showed the contour moment features of the vessels.

- The target vessels were distinguished from the surface noise by setting the aspect ratio and the area width of the connected area.

- The final results in Figure 13 indicate that there are seven vessels detected in this image.

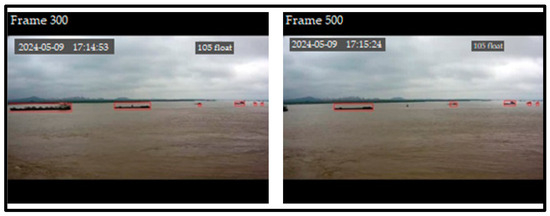

- The same process was run on frames 300 and 500; the results are shown in Figure 14. Six and five vessels were detected in frames 300 and 500, respectively.

Figure 14. The outcomes of the detected vessels in different frames.


4.3. Validation of the Approach

Surveillance cameras operate at the port. Two video images (video 1 and video 2) were recorded at the same time late in the afternoon at about 5:20 pm. In total, 60 images were continuously collected from both videos. The original video image is displayed in Figure 15 (video 1 and video 2 had the same images).

Figure 15. Original video image.

4.3.1. First Phase

The proposed approach was applied only to video 2, not to video 1. The processing results and the detected vessels are shown in Figure 16 and Figure 17, respectively.

Figure 16. Performance results of both videos. (a) Processing result from video 1; (b) processing result from video 2.

Figure 17. The results of detected vessels.

The recorded results of video 1 and video 2 show the following:

- In video 1, the contrast of distant target vessels was too low, resulting in a relatively high false detection rate.

- From the analysis of the processing time, video 1 took 2.37 s to determine the number of vessels in the image. Video 2 only needed 1.23 s to obtain the number of vessels from the image. It can be concluded that the vessel detection method adopted in this study can meet the requirements of real-time video processing. The processing time was improved by 1.14 s.

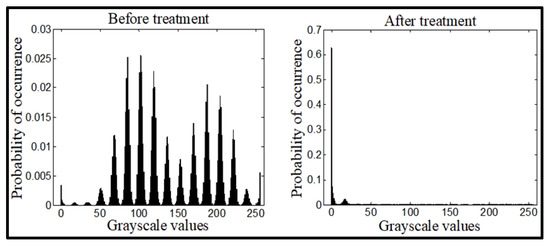

- The original image and the multi-structure diagram were analyzed for significance, and the analysis results are shown in Figure 18.

Figure 18. Significance comparison chart.


In the image, the following can be observed:

- The pixels of the original image are evenly distributed in a wide range of gray levels.

- After the calculations were complete, the background pixels of the sea surface were mainly concentrated in a very narrow low gray level.

- The pixels corresponding to the target vessels are concentrated at the end of the high gray level, which is conducive to the image segmentation between the target vessels and the background.

- Comparatively, the improved open operations performed better than the traditional open operations.

4.3.2. Second Phase

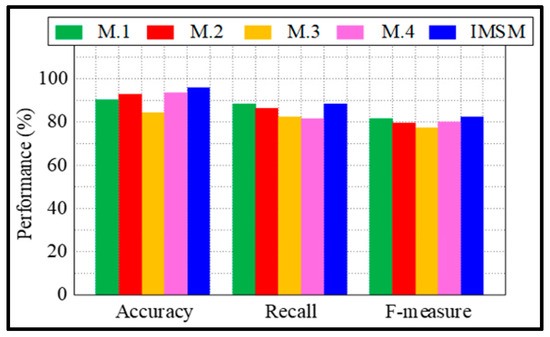

Four other methods, M.1, M.2, M.3, and M.4, were applied to the same video image to detect the vessels in the port area. M.1 is an effective motion object detection method using optical flow estimation under a moving camera [57]. M.2 is a motion detection method for moving camera videos that uses background modeling and FlowNet [58]. M.3 is a three-frame difference algorithm to detect moving objects [59]. M.4 is an approach to ship target detection based on a combined optimization model of dehazing and detection [60]. M.1 inserts a third frame between the first two to acquire the horizontal and vertical flows. The horizontal and vertical flows are then optimized through the use of the gradient function and threshold approach, respectively. After the region is filled, the entire motion object boundary is refined using the Gaussian filtering technique to provide the final detection results. For M.2, a technique that fuses dense optical flow with a background modeling method is implemented to enhance the image detection outcomes. In the process, deep learning-based dense optical flow is applied along with an adaptive threshold and some post-processing techniques; this not only extracts the moving pixels but also increases the computational cost. Regarding M.3, a three-frame difference algorithm that combines edge information is proposed to enhance moving target detection. The algorithm uses the dilation and erosion operations of mathematical morphology to eliminate noise from images. M.4 uses a self-adaptive image dehazing module integrated with a lightweight improved object detection deep learning model to detect ships in foggy images.
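To make the principle behind M.3 concrete, the basic three-frame difference can be sketched as follows. This is only the core differencing step in its common textbook form, with a hypothetical function name and threshold; the edge information and morphological post-processing described in [59] are not included.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame as rows of integer gray values


def three_frame_difference(prev: Frame, curr: Frame, nxt: Frame,
                           threshold: int) -> Frame:
    """Mark pixels that changed in both consecutive frame pairs.

    A pixel is set to 1 only if |curr - prev| and |nxt - curr| both
    exceed the threshold, which localizes the object in the middle frame.
    """
    h, w = len(curr), len(curr[0])
    return [[int(abs(curr[y][x] - prev[y][x]) > threshold
                 and abs(nxt[y][x] - curr[y][x]) > threshold)
             for x in range(w)] for y in range(h)]


# Hypothetical example: a bright object moving one pixel to the right.
frame_a = [[200, 0, 0]]
frame_b = [[0, 200, 0]]
frame_c = [[0, 0, 200]]
motion_mask = three_frame_difference(frame_a, frame_b, frame_c, threshold=50)
```

In this example, only the pixel occupied by the object in the middle frame is marked, because it is the only position that changes in both frame pairs.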

We aimed to assess and compare the performance of the proposed approach (IMSM) with that of the other four methods. We assessed the accuracy, recall, and F-measure of the detected vessels to determine the quality of each method. The first step was to match the detected lines with the sea-truth lines. The detected lines p and the sea-truth lines s form the two vertex sets of a bipartite graph. A matching in a bipartite graph is a set of selected edges in which no two edges share a vertex. We aimed to identify a matching such that every sea-truth line corresponded to no more than one detected line, and vice versa. In this study, we determined the true positives (TP), false positives (FP), and false negatives (FN) in accordance with the matching of p and s. A TP is a predicted line matched with a sea-truth line. An FP is a predicted line that does not match any sea-truth line, while an FN is a sea-truth line that does not match any predicted line. The formulas for determining the accuracy, recall, and F-measure are as follows:

$$\text{Accuracy} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad F\text{-measure} = \frac{2 \times \text{Accuracy} \times \text{Recall}}{\text{Accuracy} + \text{Recall}}$$

Multiple thresholds (τ = 0.01, 0.02, …, 0.99) were applied to the sea-truth and prediction pairs. As a result, we obtained a number of F-measure, accuracy, and recall scores. The performances of each method were assessed based on the results obtained from these metrics.
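The computation of the three scores from the TP, FP, and FN counts can be sketched as follows. This sketch assumes that the accuracy reported here is the ratio TP/(TP + FP), since no true negatives are defined, and that the F-measure is the harmonic mean of accuracy and recall; the function name and the example counts are hypothetical.

```python
from typing import List, Tuple


def detection_scores(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (accuracy, recall, F-measure) from matching counts.

    Accuracy is assumed to be TP / (TP + FP); zero denominators yield 0.
    """
    accuracy = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = accuracy + recall
    f_measure = 2 * accuracy * recall / denom if denom else 0.0
    return accuracy, recall, f_measure


# Thresholds 0.01, 0.02, ..., 0.99 as used for the evaluation sweep.
THRESHOLDS: List[float] = [i / 100 for i in range(1, 100)]

# Hypothetical counts at a single threshold.
example_scores = detection_scores(tp=8, fp=2, fn=2)
```

Evaluating the function once per threshold, with the counts obtained from the matching at that threshold, would yield the series of scores described above.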

Table 3. Evaluation of different methods.

Figure 19. Performance chart of the five methods.

The M.1, M.2, and IMSM methods produced the strongest results. When these three approaches were compared in depth, IMSM had the best processing time (1.23 s), F-measure (0.821), and accuracy (0.94). In addition, its false detection rate (0.04) was the lowest. These results support the effectiveness and reliability of the proposed approach for the detection of vessels around a surveillance area. The results in Table 3 also indicate that edge-guided refinement, irrespective of the dataset, improved the detection outcomes.

5. Discussion

To detect vessels, we introduced an approach that integrates four algorithms. The Hough transform algorithm accurately detects shapes (extraneous lines, circles, edges, etc.) and occlusions in an image. However, it involves multiple transformations that can slow down the detection process. The image is converted into a binary map by means of the Otsu-based adaptive threshold segmentation method.
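For readers unfamiliar with the binarization step, the standard Otsu threshold can be sketched as follows. This is the textbook method that maximizes the between-class variance, not necessarily the adaptive variant used in this study; the function names are hypothetical, and an 8-bit grayscale image stored as nested lists is assumed.

```python
from typing import List

Image = List[List[int]]


def otsu_threshold(image: Image, levels: int = 256) -> int:
    """Return the gray level that maximizes the between-class variance."""
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]            # pixels with gray value <= t
        sum0 += t * hist[t]
        w1 = total - w0          # pixels with gray value > t
        if w0 == 0 or w1 == 0:
            continue
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (m0 - m1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(image: Image, threshold: int) -> Image:
    """Map pixels above the threshold to 1 and all others to 0."""
    return [[int(v > threshold) for v in row] for row in image]


# Hypothetical example: dark sea pixels and bright vessel pixels.
sample = [[10, 10, 200, 200]]
t = otsu_threshold(sample)
binary_map = binarize(sample, t)
```

In this example, any threshold between the two gray values separates the classes equally well, and the sketch returns the lowest such value.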

The weighted morphological filtering approach is very useful for binary images. It handles various shapes and patterns in image analysis. This algorithm is also good for edge detection and noise cancellation. However, it does not perform well on images with high noise, and it can smooth the edges too much, resulting in a loss of fine detail. This is confirmed by the results presented in Table 1. It can be seen that after filtering, there were still about 10 corrugated bands in the image. To address these limitations, the neighborhood-based adaptive fast median filtering method was used. This method preserves the edges and removes impulse noise from the image. It can be seen in Table 2 that after filtering, there were fewer than five corrugated bands in the image. However, it is less effective when noise takes up large parts of the image, and it can blur fine details and textures. The connected domain calculation based on moment features is used due to its high vessel tracking efficiency; it extracts and analyzes the connected components of an image and provides useful statistical properties for shape analysis. However, it is susceptible to segmentation errors and noise in the image.

Each algorithm has its advantages and limitations. Therefore, we believe that by combining different algorithms with different limitations and advantages, the resulting model can be more efficient.

6. Conclusions

Developing detection methods for vessels and activities around port environments has always been a very challenging task. It is even more difficult when the target vessels are small, and especially when it starts to get dark. Therefore, in this study, we developed an improved multi-structural morphology approach to effectively suppress not only the background noise but also congestion interference at sea so that vessels can be made visible. This makes it possible to accurately detect vessels around surveillance areas in real time. The results of these experiments show that the proposed approach can effectively detect vessels and meet the real-time requirements of video image processing. Additionally, several tests were conducted with four other methods; the results confirm the effectiveness of the proposed approach in terms of its detection accuracy (94%) and processing time (1.23 s). Out of 60 trials, we recorded only a 4% false detection rate, thus proving the reliability of this approach. However, it is worth mentioning that in practice, each method has distinct advantages and their effectiveness depends on the operating environment.

In practice, there is no absolutely ideal filtering method, but the characteristics of the de-noising results show the effectiveness of the proposed method in terms of its performance. However, it is worth mentioning that all the experiments in this study were carried out in a single port environment, which was the only one made available to us. We hope to have the opportunity to use our system in another port environment to evaluate the results elsewhere. Additionally, this approach is applicable to surveillance camera systems. We believe that its detection accuracy will be lower for the smallest vessels and smaller activities around port environments. For this reason, the proposed approach should be further improved. Therefore, in our future work, we will focus on refining the structure of the proposed approach and improving the ability of the four-dimensional target feature vector to support effective tracking. We also plan to combine our proposed approach with deep learning techniques for the identification, recognition, and classification of vessels and activities around port environments. This should increase the overall detection accuracy of this approach.

Author Contributions

Conceptualization, B.M.T.F. and J.A.; methodology, B.M.T.F.; software, J.A.; validation, J.A., H.C.E.-D., and W.Z.; formal analysis, B.M.T.F.; investigation, H.C.E.-D.; resources, W.Z.; data curation, B.M.T.F.; writing—original draft preparation, B.M.T.F.; writing—review and editing, B.M.T.F.; visualization, B.M.T.F. and H.C.E.-D.; supervision, W.Z. and H.C.E.-D.; project administration, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The Institutional Review Board of Shanghai Jianqiao University and the University of Ebolowa have approved the study.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the R&D Center of Intelligent Systems of Shanghai Jianqiao University and the Electrical, Electronic and Energy Systems Laboratory of the University of Ebolowa for the use of their equipment. Many thanks to the staff of both laboratories. We would also like to thank all the workers at the port where the data were collected (the port of Zhangjiagang City on the East China Sea) for their assistance, kindness, and help, including the use of their materials for experiments.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could appear to influence the work reported in this paper. The authors declare no conflicts of interest.

References

- Sundberg, J.H.; Olin, A.B.; Reddy, S.; Berglund, P.-A.; Svensson, E.; Reddy, M.; Kasarareni, S.; Carlsen, A.A.; Hanes, M.; Kad, S.; et al. Seabird surveillance: Combining CCTV and artificial intelligence for monitoring and research. Remote Sens. Ecol. Conserv. 2023, 9, 435–581.

- Xu, X.Q.; Chen, X.Q.; Wu, B.; Wang, Z.C.; Zhen, J.B. Exploiting high-fidelity kinematic information from port surveillance videos via a YOLO-based framework. Ocean Coast. Manag. 2022, 222, 106117.

- Wang, H.; Wang, T.; Liu, L.; Sun, H.; Zheng, N. Efficient Compression-Based Line Buffer Design for Image/Video Processing Circuits. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2423–2433.

- Li, D.S.; Zhou, Y.C. Recognition and detection of ports and ships based on image application and non local feature enhancement. In Proceedings of the 2022 IEEE 10th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 17–19 June 2022.

- Liu, J.L.; Yan, X.P.; Liu, C.G.; Fan, A.L.; Ma, F. Developments and Applications of Green and Intelligent Inland Vessels in China. J. Mar. Sci. Eng. 2023, 11, 318.

- Li, Y.; Yan, S.H. Moving Ship Detection of Inland River Based on GNSS Reflected Signals. In Proceedings of the 2021 IEEE Specialist Meeting on Reflectometry Using GNSS and other Signals of Opportunity (GNSS+R), Beijing, China, 14–17 September 2021.

- Song, P.F.; Qi, L.; Qian, X.M.; Lu, X.Q. Detection of ships in inland river using high-resolution optical satellite imagery based on mixture of deformable part models. J. Parallel Distrib. Comput. 2019, 132, 1–7.

- Yu, N.J.; Fan, X.B.; Deng, T.M.; Mao, G.T. Ship Detection in Inland Rivers Based on Multi-Head Self-Attention. In Proceedings of the 2022 7th International Conference on Signal and Image Processing (ICSIP), Suzhou, China, 20–22 July 2022.

- Morillas, J.R.A.; García, I.C.; Zölzer, U. Ship detection based on SVM using color and texture features. In Proceedings of the 2015 IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2015.

- Gao, F.; Lu, Y.G. Moving Target Detection Using Inter-Frame Difference Methods Combined with Texture Features and Lab Color Space. In Proceedings of the 2019 International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Dublin, Ireland, 16–18 October 2019.

- Marie, T.F.B.; Han, D.; An, B.; Chen, X.; Shen, S. Design and Implementation of Software for Ship Monitoring System in Offshore Wind Farms. Model. Simul. Eng. 2019, 2019, 3430548.

- Li, B.; Xie, X.Y.; Wei, X.X.; Tang, W.T. Ship detection and classification from optical remote sensing images: A survey. Chin. J. Aeronaut. 2021, 34, 145–163.

- Wei, T.; Wang, T.; Dong, T.; Jing, M.; Chen, S.; Xu, C.; Liu, Y.; Wu, J.; Gao, L. Comparative analysis of SAR ship detection methods based on deep learning. In Proceedings of the IET International Radar Conference (IRC 2023), Chongqing, China, 3–5 December 2023.

- Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S. A review of video surveillance systems. J. Vis. Commun. Image Represent. 2021, 77, 103116.

- Brooks, C.J.; Grow, C.; Craig, P.A.; Short, D. Cybersecurity Essentials. In Understanding Video Surveillance Systems, 1st ed.; CRC Press: Boca Raton, FL, USA, 2018; pp. 45–70.

- Fouda, B.M.T.; Yang, B.; Han, D.Z.; An, B.W. Pattern Recognition of Optical Fiber Vibration Signal of the Submarine Cable for Its Safety. IEEE Sens. J. 2021, 21, 6510–6519.

- Fouda, B.M.T.; Yang, B.; Han, D.Z.; An, B.W. Principle and Application State of Fully Distributed Fiber Optic Vibration Detection Technology Based on Φ-OTDR: A Review. IEEE Sens. J. 2021, 21, 16428–16442.

- Fouda, B.M.T.; Han, D.Z.; An, B.W. Pattern recognition algorithm and software design of an optical fiber vibration signal based on Φ-optical time-domain reflectometry. Appl. Opt. 2019, 58, 8423–8432.

- Fouda, B.M.T.; Han, D.Z.; An, B.W.; Li, J.Y. A research on Fiber-optic Vibration Pattern Recognition Based on Time-frequency Characteristics. Advs. Mech. Engr. 2018, 10, 168781401881346.

- Fouda, B.M.T.; Han, D.Z.; An, B.W.; Lu, X.J.; Tian, Q.T. Events detection and recognition by the fiber vibration system based on power spectrum estimation. Advs. Mech. Engr. 2018, 10, 1687814018808679.

- Fouda, B.M.T.; Yang, B.; Han, D.Z.; An, B.W. A Hybrid Model Integrating MPSE and IGNN for Events Recognition along Submarine Cables. IEEE Trans. Instr. Meas. 2022, 71, 6502913.

- Fouda, B.M.T.; Han, D.Z.; An, B.W.; Pan, M.Q.; Chen, X.Z. Photoelectric Composite Cable Temperature Calculations and its Parameters Correction. Intl. J. Pow. Electr. 2022, 15, 177–193.

- Fouda, B.M.T.; Zhang, W.J.; Han, D.Z.; An, B.W. Research on Key Factors to Determine the Corrected Ampacity of Multicore Photoelectric Composite Cables. IEEE Sens. J. 2024, 24, 7868–7880.

- Fouda, B.M.T.; Han, D.Z.; Zhang, W.J.; An, B.W. Research on key technology to determine the exact maximum allowable current-carrying ampacity for submarine cables. Opt. Laser Technol. 2024, 175, 110705.

- Yan, Z.J.; Xiao, Y.; Cheng, L.; He, R.; Ruan, X.; Zhou, X.; Li, M.; Bin, R. Exploring AIS data for intelligent maritime routes extraction. Appl. Ocean Res. 2020, 101, 102271.

- Zhong, Z.J.; Wang, Q. Research on Detection and Tracking of Moving Vehicles in Complex Environment Based on Real-Time Surveillance Video. In Proceedings of the 2020 3rd International Conference on Intelligent Robotic and Control Engineering (IRCE), Oxford, UK, 10–12 August 2020.

- Wawrzyniak, N.; Hyla, T.; Popik, A. Vessel Detection and Tracking Method Based on Video Surveillance. Sensors 2019, 19, 5230.

- Cong, Y.; Li, Z.; Liang, J.; Liu, P. Research on video-based moving object tracking. In Proceedings of the 2023 IEEE International Conference on Mechatronics and Automation (ICMA), Harbin, China, 6–9 August 2023.

- Chen, M.D.; Ma, J.; Zeng, X.; Liu, K.; Chen, M.; Zheng, K.; Wang, K. MD-Alarm: A Novel Manpower Detection Method for Ship Bridge Watchkeeping Using Wi-Fi Signals. IEEE Trans. Instr. Meas. 2022, 71, 5500713.

- Deng, H.M.; Zhang, Y. FMR-YOLO: Infrared Ship Rotating Target Detection Based on Synthetic Fog and Multiscale Weighted Feature Fusion. IEEE Trans. Instr. Meas. 2023, 73, 1.

- Chithra, A.S.; Roy, R.R.U. Otsu’s Adaptive Thresholding Based Segmentation for Detection of Lung Nodules in CT Image. In Proceedings of the 2018 2nd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 11–12 January 2018; pp. 1303–1307.

- Zhu, Q.D.; Jing, L.Q.; Bi, R.S. Exploration and improvement of Ostu threshold segmentation algorithm. In Proceedings of the 2010 8th World Congress on Intelligent Control and Automation, Jinan, China, 7 July 2010; pp. 6183–6188.

- Hough, P.V.C. Method and Means for Recognizing Complex Patterns. U.S. Patent 3069654A, 18 December 1962.

- Fernandes, L.A.; Oliveira, M.M. Real-time line detection through an improved hough transform voting scheme. Pattern Recogn. 2008, 41, 299–314.

- Princen, J.; Illingworth, J.; Kittler, J. A hierarchical approach to line extraction based on the Hough transform. Comput. Vis. Graph. Image Process. 1990, 52, 57–77.

- Kiryati, N.; Eldar, Y.; Bruckstein, A.M. A probabilistic hough transform. Pattern Recogn. 1991, 24, 303–316.

- Zhang, Z.; Li, Z.; Bi, N.; Zheng, J.; Wang, J.; Huang, K.; Luo, W.; Xu, Y.; Gao, S. PPGnet: Learning point-pair graph for line segment detection. IEEE Conf. Comput. Vis. Pattern Recog. 2019, 2019, 7105–7114.

- Zhao, K.; Han, Q.; Zhang, C.B.; Xu, J.; Cheng, M.M. Deep Hough Transform for Semantic Line Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4793–4806.

- Akinlar, C.; Topal, C. Edlines: A real-time line segment detector with a false detection control. Pattern Recogn. 2011, 32, 1633–1642.

- Lotufo, R.A.; Audigier, R.; Saúde, A.V.; Machado, R.C. Chapter Six: Microscope Image Processing. In Morphological Image Processing, 2nd ed.; Academic Press: Cambridge, MA, USA, 2023; pp. 75–117.

- Pingel, T.J.; Clarke, K.; Mcbride, W.A. An Improved Simple Morphological Filter for the Terrain Classification of Airborne LIDAR Data. J. Photogramm. Remote Sens. 2013, 77, 21–30.

- Thanki, R.M.; Kothari, A.M. Morphological Image Processing. In Digital Image Processing Using SCILAB; Springer: Berlin/Heidelberg, Germany, 2019; pp. 99–113.

- Zeng, M.; Li, J.X.; Peng, Z. The design of Top-Hat morphological filter and application to infrared target detection. Infrared Phys. Technol. 2006, 48, 67–76.

- Wang, Z.S.; Yang, F.B.; Peng, Z.H.; Chen, L.; Ji, L.E. Multi-sensor image enhanced fusion algorithm based on NSST and top-hat transformation. Optik 2015, 126, 4184–4190.

- Li, Y.B.; Niu, Z.D.; Xiao, H.T.; Li, H. BSC-Net: Background Suppression Algorithm for Stray Lights in Star Images. Remote Sens. 2022, 14, 4852.

- Guan, M.S.; Ren, H.E.; Ma, Y. Multi-scale morphological filtering method for preserving the details of images. Control Systems Engineering. In Proceedings of the 2009 Asia-Pacific Conference on Computational Intelligence and Industrial Applications (PACIIA), Wuhan, China, 28–29 November 2009.

- Chen, Z.R.; Chen, A.; Liu, W.J.; Zheng, D.K.; Yang, J.; Ma, X.Y. A sea clutter suppression algorithm for over-the-horizon radar based on dictionary learning and subspace estimation. Digit. Signal Process. 2023, 140, 104131.

- Appiah, O.; Asante, M.; Hayfron-Acquah, J.B. Improved approximated median filter algorithm for real-time computer vision applications. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 782–792.

- Villar, S.A.; Torcida, S.; Acosta, G.G. Median Filtering: A New Insight. J. Math Imaging Vis. 2016, 58, 130–146.

- Sonali; Sahu, S.; Singh, A.K.; Ghrera, S.P.; Elhoseny, M. An approach for de-noising and contrast enhancement of retinal fundus image using CLAHE. Opt. Laser Technol. 2019, 110, 87–98.

- Cao, H.C.; Shen, N.J.; Qian, C. An Improved Adaptive Median Filtering Algorithm Based on Star Map Denoising. In CIAC 2023: Proceedings of 2023 Chinese Intelligent Automation Conference; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2023; Volume 1082, pp. 184–195.

- Li, N.Z.; Li, H.Q. Improved Adaptive Median Filtering Algorithm for Radar Image Co-Channel Interference Suppression. Sensors 2022, 22, 7573.

- Hwang, H.; Haddad, R.A. Adaptive median filters: New algorithms and results. IEEE Trans. Image Process. 1995, 4, 499–502.

- Yang, C.Z.; Fang, L.C.; Fei, B.J.; Yu, Q.; Wei, H. Multi-level contour combination features for shape recognition. Comput. Vis. Image Underst. 2023, 229, 103650.

- Xu, H.R.; Yang, J.Y.; Shao, Z.P.; Tang, Y.Z.; Li, Y.F. Contour Based Shape Matching for Object Recognition. In Intelligent Robotics and Applications. ICIRA; Lecture Notes in Computer Science; Kubota, N., Kiguchi, K., Liu, H., Obo, T., Eds.; Springer: Cham, Germany, 2016; Volume 9834.

- Fouda, B.M.T.; Han, D.Z.; An, B.W.; Chen, X.Z. Research and Software Design of an Φ-OTDR-Based Optical Fiber Vibration Recognition Algorithm. J. Electr. Comp. Engr. 2020, 2020, 5720695.

- Zhang, Y.G.; Zheng, J.; Zhang, C.; Li, B. An effective motion object detection method using optical flow estimation under a moving camera. J. Vis. Commun. Image Represent. 2018, 55, 215–228.

- Ibrahim, D.; Irfan, K.; Muhammed, K.; Feyza, S. Motion detection in moving camera videos using background modeling and FlowNet. J. Vis. Commun. Image Represent. 2022, 88, 103616.

- Zhang, Z.G.; Zhang, H.M.; Zhang, Z.F. Using Three-Frame Difference Algorithm to Detect Moving Objects. In The International Conference on Cyber Security Intelligence and Analytics. CSIA 2019. Advances in Intelligent Systems and Computing; Springer: Cham, Germany, 2019; Volume 928, pp. 923–928.

- Liu, T.; Zhang, Z.; Lei, Z.; Huo, Y.; Wang, S.; Zhao, J.; Zhang, J.; Jin, X.; Zhang, X. An approach to ship target detection based on combined optimization model of dehazing and detection. Eng. Appl. Artif. Intell. 2024, 127, 107332.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).