Marine Oil Spill Detection from Low-Quality SAR Remote Sensing Images

Abstract

1. Introduction

- Oil films on the sea surface usually have extremely irregular shapes with complex, variable boundaries, yet existing neural network methods are not designed for targets with such irregular shapes;

- SAR image noise is multiplicative, and conventional denoising methods blur the oil spill boundary, which degrades segmentation;

- Extracting features from local regions with convolution operators alone fails to capture the global context of the image, and this lost contextual information is crucial for oil spill detection.
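
The multiplicative-noise point in the list above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the unit-mean gamma speckle model, the number of looks, the reflectivity values, and the function names `speckle` and `simulate_region` are illustrative choices, not taken from the paper.

```python
import math
import random
import statistics


def speckle(looks: int, rng: random.Random) -> float:
    """Draw one unit-mean speckle sample (assumed gamma model with `looks` looks)."""
    return rng.gammavariate(looks, 1.0 / looks)


def simulate_region(reflectivity: float, n: int, looks: int, rng: random.Random) -> list[float]:
    """Observed intensity = true reflectivity * speckle (multiplicative model)."""
    return [reflectivity * speckle(looks, rng) for _ in range(n)]


rng = random.Random(0)
dark = simulate_region(10.0, 5000, looks=4, rng=rng)     # hypothetical low-backscatter patch
bright = simulate_region(100.0, 5000, looks=4, rng=rng)  # hypothetical high-backscatter patch

# In the intensity domain, the noise spread grows with the signal level.
std_ratio = statistics.pstdev(bright) / statistics.pstdev(dark)

# After a log transform, the noise is additive and its spread no longer depends on the signal.
log_std_dark = statistics.pstdev([math.log(v) for v in dark])
log_std_bright = statistics.pstdev([math.log(v) for v in bright])

print(f"intensity-domain std ratio (bright/dark): {std_ratio:.2f}")
print(f"log-domain std: dark={log_std_dark:.3f}, bright={log_std_bright:.3f}")
```

Because the noise scales with the signal, a filter tuned for additive noise of fixed strength will tend to under-smooth bright areas or over-smooth dark ones, which is one way boundaries between the two can end up blurred.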

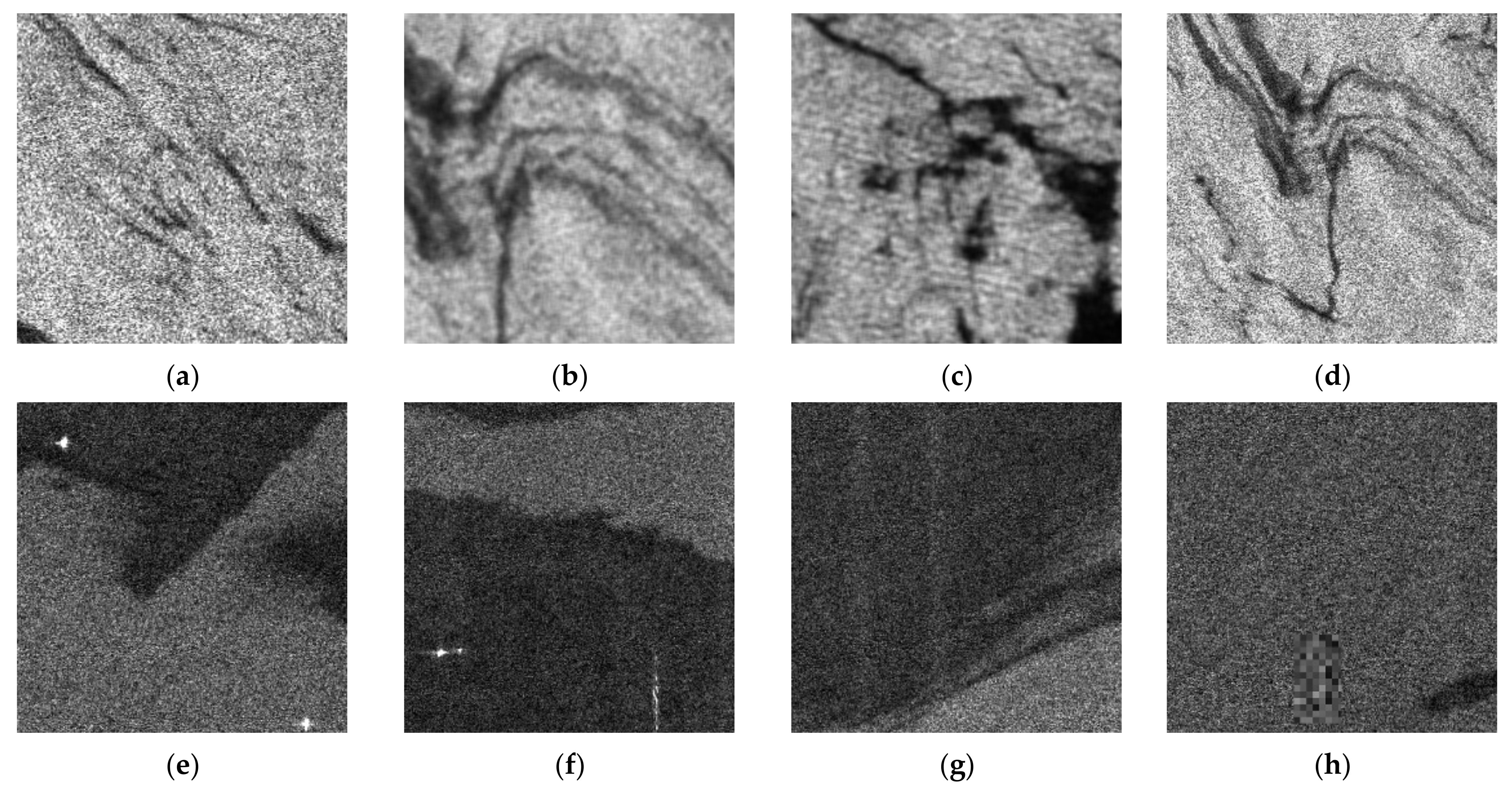

2. Dataset and Analysis

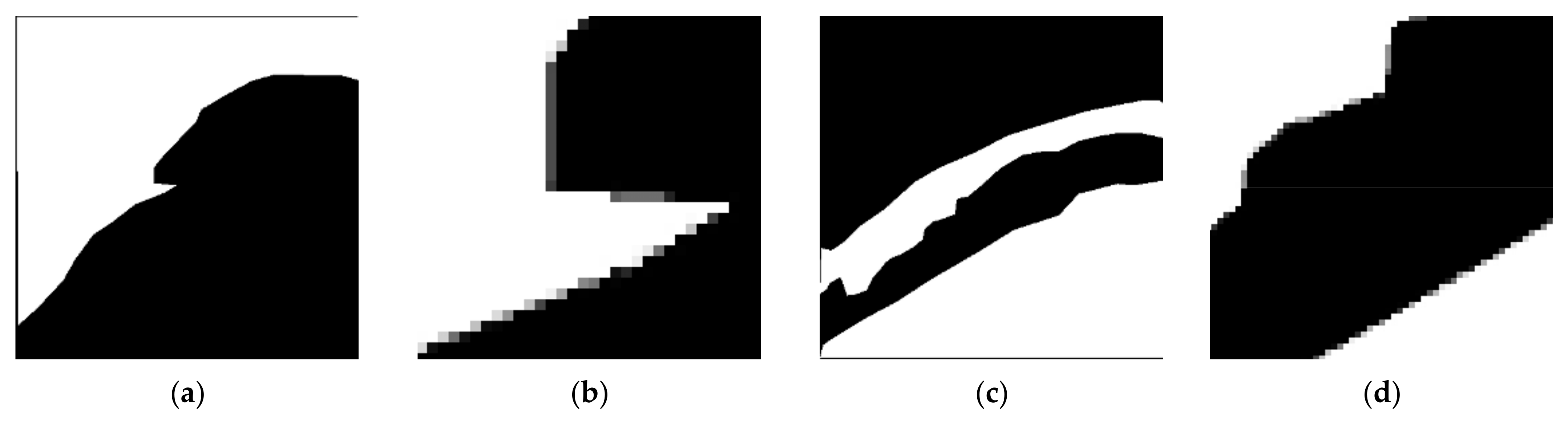

- The gt_images contain anomalies in edge classification, as shown in Figure 2a,c. These mislabeled pixels interfere with feature learning in the deep learning network and severely distort the performance evaluation of the recognition model.

- Some pixel values in the gt_images are neither 0 nor 255, mainly at the edges of the oil region, as shown in Figure 2b,d. Because oil spill detection is a binary classification problem, the class of pixels with values strictly between 0 and 255 cannot be determined.
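
The second issue in the list above can be checked mechanically. The following is a minimal sketch of such an audit, not the authors' procedure: the function name `audit_mask`, the count keys, and the toy mask are hypothetical, and the code makes no assumption about which of 0 or 255 denotes oil.

```python
from collections import Counter


def audit_mask(mask_rows):
    """Count pixels equal to 0, equal to 255, and everything else.

    `mask_rows` is any iterable of rows of integer pixel values, for example
    a nested list or the result of calling `tolist()` on an image array.
    Returns the counts and the (row, column, value) of each ambiguous pixel.
    """
    counts = Counter()
    ambiguous = []
    for r, row in enumerate(mask_rows):
        for c, value in enumerate(row):
            if value == 0:
                counts["value_0"] += 1
            elif value == 255:
                counts["value_255"] += 1
            else:
                counts["other"] += 1
                ambiguous.append((r, c, value))
    return counts, ambiguous


# Toy 3x4 label with two intermediate values on the class boundary.
toy_mask = [
    [0, 0, 128, 255],
    [0, 37, 255, 255],
    [0, 0, 255, 255],
]
counts, ambiguous = audit_mask(toy_mask)
print(dict(counts))
print(ambiguous)
```

Reporting the positions as well as the counts makes it possible to confirm whether the ambiguous values really cluster along region edges, as described above.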

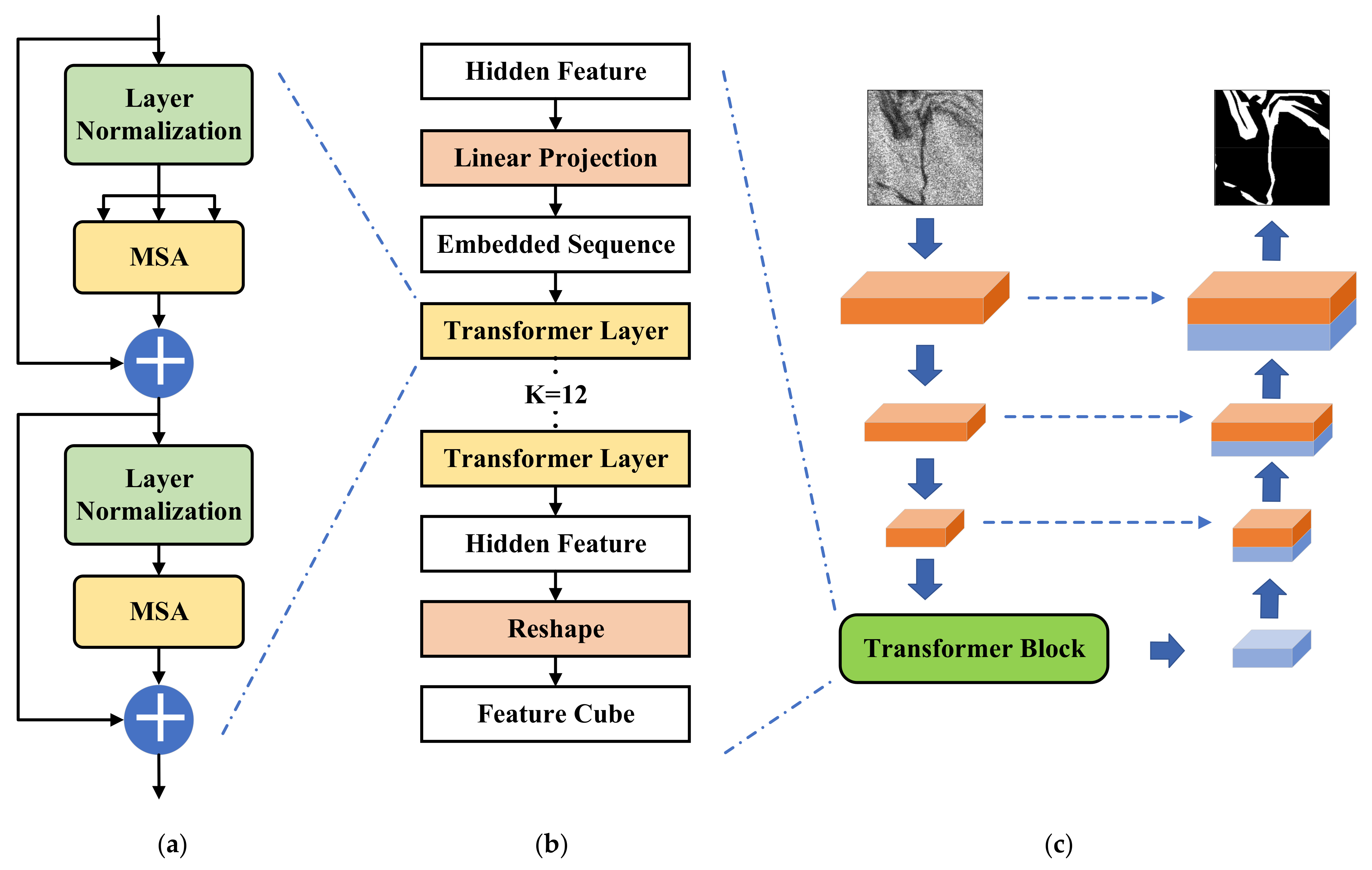

3. TransUNet-Based Oil Spill Detection Model

3.1. Architecture

3.2. Transformer Block

3.3. Loss Function

3.4. Experimental Environment and Control Model Selection

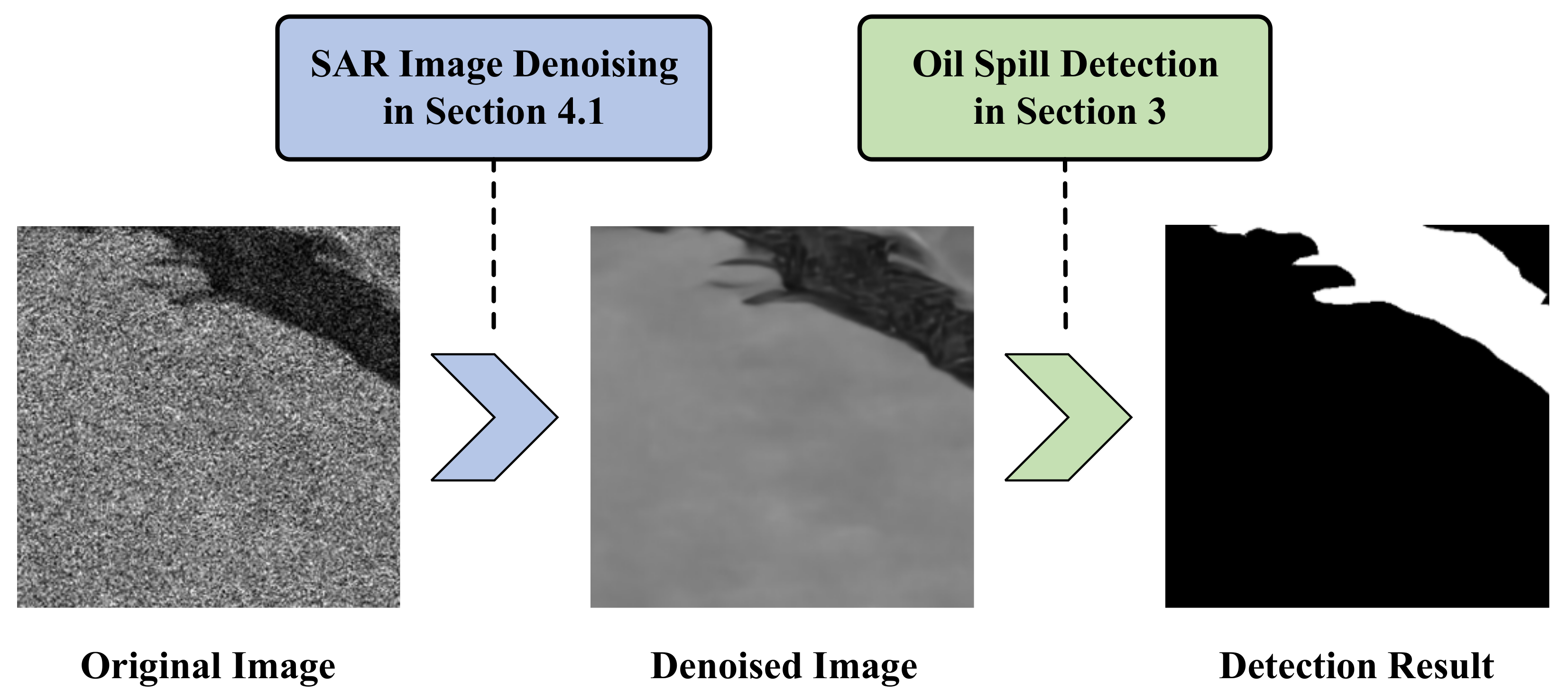

4. Oil Spill Detection Model Based on TransUNet and FFDNet

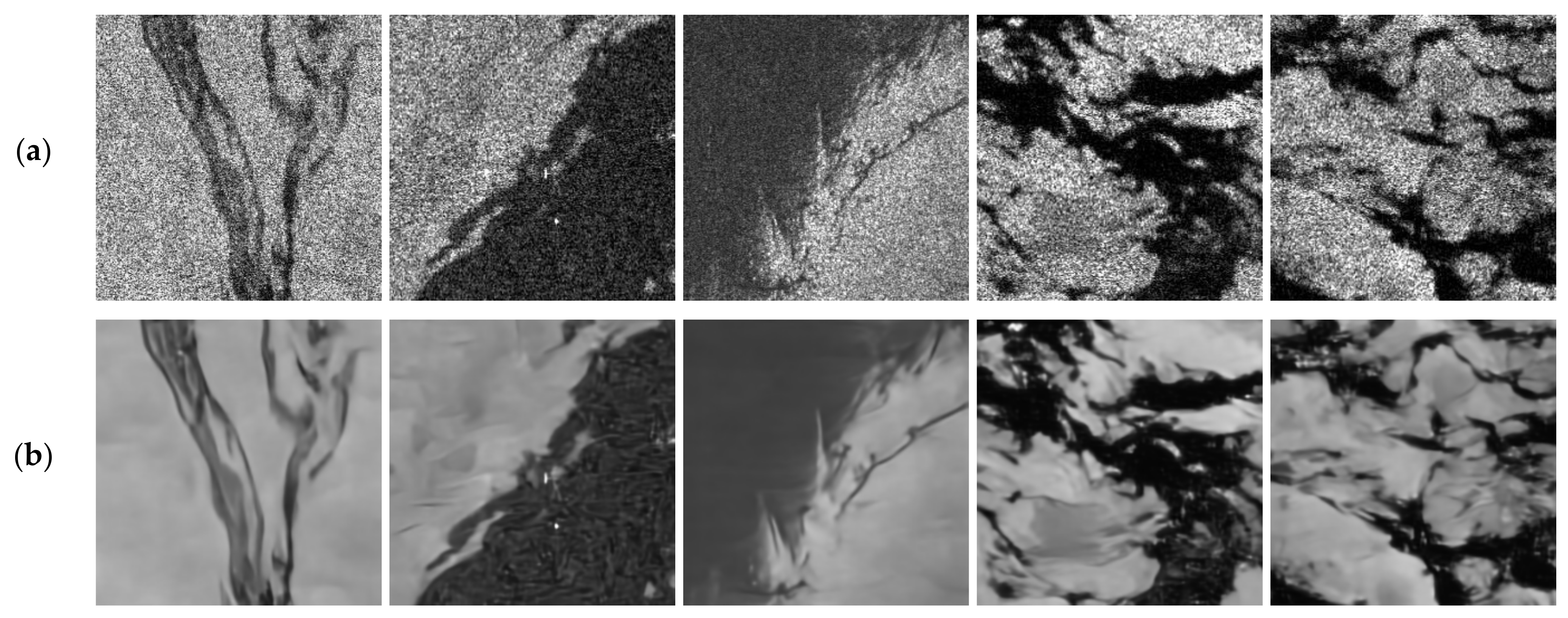

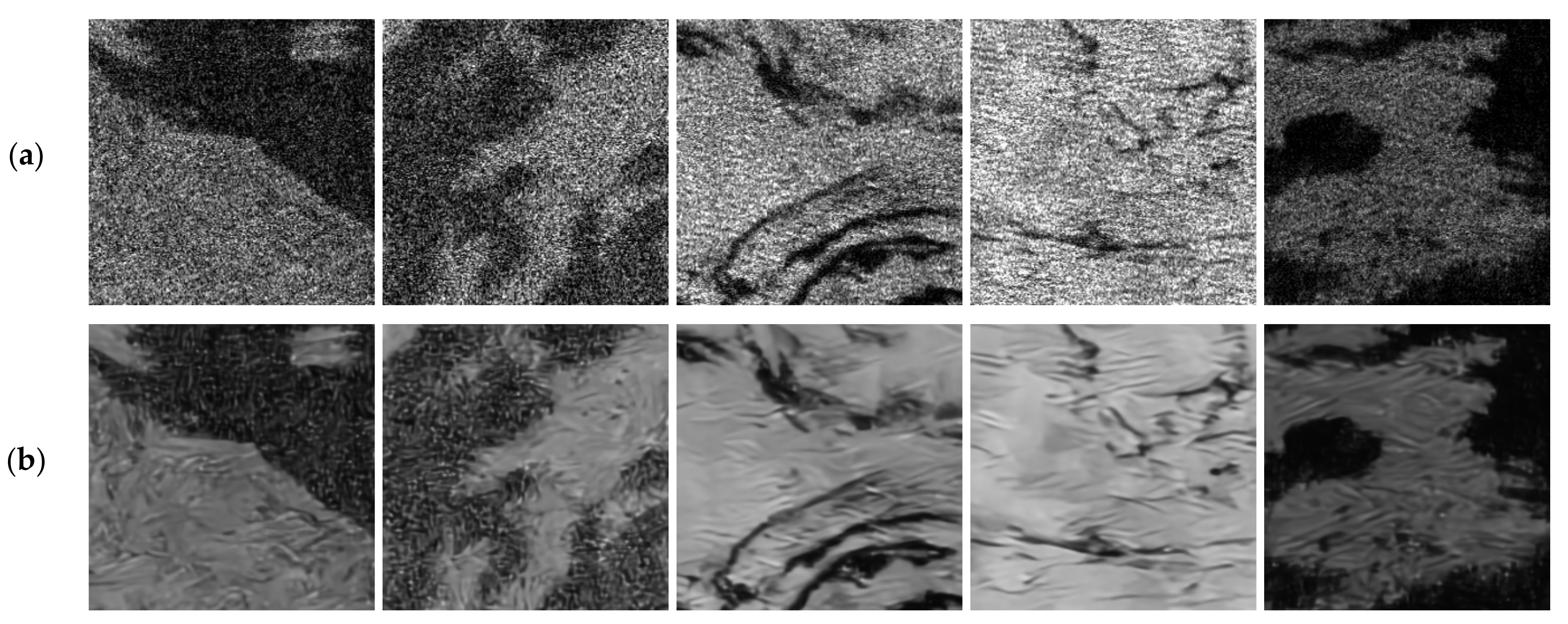

4.1. FFDNet-Based SAR Image Denoising

4.2. Denoising Effect

4.3. Oil Spill Detection Process

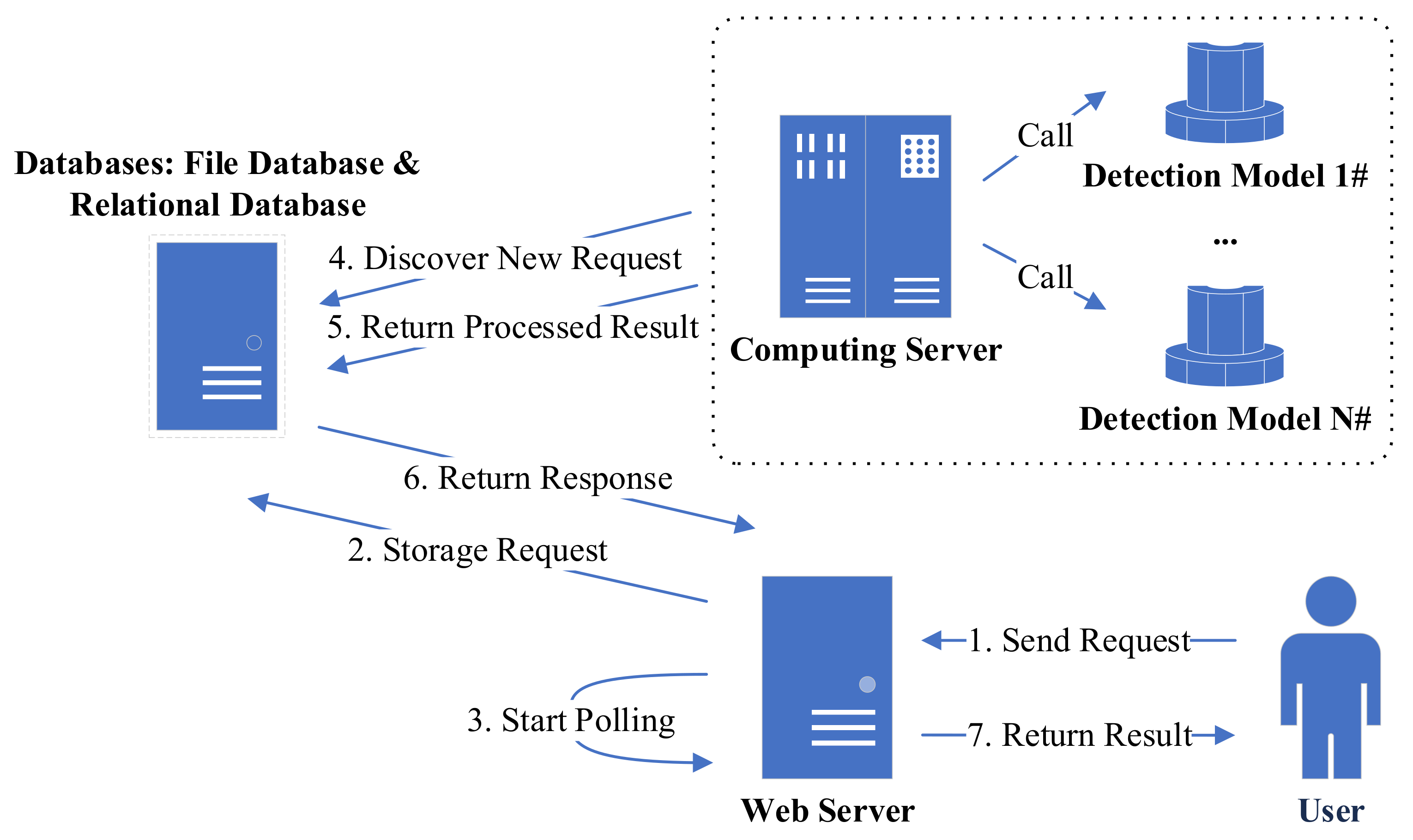

5. Model Ensemble

5.1. Algorithm Design

5.2. System Implementation

6. Experimental Results and Analysis

6.1. Evaluation Metrics

6.2. Experimental Results for the Palsar Dataset

6.3. Experimental Results on the Sentinel Dataset

6.4. Experimental Analysis

6.5. Training and Inference Performance

6.6. Supplementary Explanation

- For the Sentinel sub-dataset, reference [26] reports that the UNet-based approach achieved an F1 of 86.10, R of 81.22, and P of 85.61.

- For the Sentinel sub-dataset, reference [26] reports that the DLinkNet-based approach achieved an F1 of 87.08, R of 85.22, and P of 85.22.

- For the Palsar sub-dataset, reference [35] reports that the UNet-based approach obtained an F1 of 96.36, R of 95.4, and P of 95.35.

7. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Rayner, R.; Jolly, C.; Gouldman, C. Ocean Observing and the Blue Economy. Front. Mar. Sci. 2019, 6, 330.

2. Jolly, C. The Ocean Economy in 2030. In Proceedings of the Workshop on Maritime Cluster and Global Challenges 50th Anniversary of the WP6, Paris, France, 1 December 2016; Volume 1.

3. Virdin, J.; Vegh, T.; Jouffray, J.-B.; Blasiak, R.; Mason, S.; Österblom, H.; Vermeer, D.; Wachtmeister, H.; Werner, N. The Ocean 100: Transnational Corporations in the Ocean Economy. Sci. Adv. 2021, 7, eabc8041.

4. Prince, R.C. A Half Century of Oil Spill Dispersant Development, Deployment and Lingering Controversy. Int. Biodeterior. Biodegrad. 2023, 176, 105510.

5. He, F.; Ma, J.; Lai, Q.; Shui, J.; Li, W. Environmental Impact Assessment of a Wharf Oil Spill Emergency on a River Water Source. Water 2023, 15, 346.

6. Miloslavich, P.; Seeyave, S.; Muller-Karger, F.; Bax, N.; Ali, E.; Delgado, C.; Evers-King, H.; Loveday, B.; Lutz, V.; Newton, J.; et al. Challenges for Global Ocean Observation: The Need for Increased Human Capacity. J. Oper. Oceanogr. 2019, 12, S137–S156.

7. Weller, R.A.; Baker, D.J.; Glackin, M.M.; Roberts, S.J.; Schmitt, R.W.; Twigg, E.S.; Vimont, D.J. The Challenge of Sustaining Ocean Observations. Front. Mar. Sci. 2019, 6, 105.

8. Li, P.; Cai, Q.; Lin, W.; Chen, B.; Zhang, B. Offshore Oil Spill Response Practices and Emerging Challenges. Mar. Pollut. Bull. 2016, 110, 6–27.

9. Pandey, S.K.; Kim, K.-H.; Yim, U.-H.; Jung, M.-C.; Kang, C.-H. Airborne Mercury Pollution from a Large Oil Spill Accident on the West Coast of Korea. J. Hazard. Mater. 2009, 164, 380–384.

10. Storrie, J. Montara Wellhead Platform Oil Spill—A Remote Area Response. Int. Oil Spill Conf. Proc. 2023, 2011, 159.

11. Carvalho, G.D.A.; Minnett, P.J.; De Miranda, F.P.; Landau, L.; Paes, E.T. Exploratory Data Analysis of Synthetic Aperture Radar (SAR) Measurements to Distinguish the Sea Surface Expressions of Naturally-Occurring Oil Seeps from Human-Related Oil Spills in Campeche Bay (Gulf of Mexico). ISPRS Int. J. Geo-Inf. 2017, 6, 379.

12. Xu, H.-L.; Chen, J.-N.; Wang, S.-D.; Liu, Y. Oil Spill Forecast Model Based on Uncertainty Analysis: A Case Study of Dalian Oil Spill. Ocean. Eng. 2012, 54, 206–212.

13. Liu, X.; Guo, J.; Guo, M.; Hu, X.; Tang, C.; Wang, C.; Xing, Q. Modelling of Oil Spill Trajectory for 2011 Penglai 19-3 Coastal Drilling Field, China. Appl. Math. Model. 2015, 39, 5331–5340.

14. Li, Y.; Yu, H.; Wang, Z.; Li, Y.; Pan, Q.; Meng, S.; Yang, Y.; Lu, W.; Guo, K. The Forecasting and Analysis of Oil Spill Drift Trajectory during the Sanchi Collision Accident, East China Sea. Ocean. Eng. 2019, 187, 106231.

15. Li, K.; Yu, H.; Xu, Y.; Luo, X. Scheduling Optimization of Offshore Oil Spill Cleaning Materials Considering Multiple Accident Sites and Multiple Oil Types. Sustainability 2022, 14, 10047.

16. Li, K.; Ouyang, J.; Yu, H.; Xu, Y.; Xu, J. Overview of Research on Monitoring of Marine Oil Spill. IOP Conf. Ser. Earth Environ. Sci. 2021, 787, 012078.

17. Li, K.; Yu, H.; Yan, J.; Liao, J. Analysis of Offshore Oil Spill Pollution Treatment Technology. IOP Conf. Ser. Earth Environ. Sci. 2020, 510, 042011.

18. Brekke, C.; Solberg, A.H.S. Oil Spill Detection by Satellite Remote Sensing. Remote Sens. Environ. 2005, 95, 1–13.

19. Hass, F.S.; Jokar Arsanjani, J. Deep Learning for Detecting and Classifying Ocean Objects: Application of YoloV3 for Iceberg–Ship Discrimination. ISPRS Int. J. Geo-Inf. 2020, 9, 758.

20. Pinho, J.L.S.; Antunes Do Carmo, J.S.; Vieira, J.M.P. Numerical Modelling of Oil Spills in Coastal Zones. A Case Study. WIT Trans. Ecol. Environ. 2002, 59, 35–45.

21. Inan, A. Modeling of Oil Pollution in Derince Harbor. J. Coast. Res. 2011, SI 64, 894–898.

22. Cho, Y.-S.; Kim, T.-K.; Jeong, W.; Ha, T. Numerical Simulation of Oil Spill in Ocean. J. Appl. Math. 2012, 2012, e681585.

23. Iouzzi, N.; Ben Meftah, M.; Haffane, M.; Mouakkir, L.; Chagdali, M.; Mossa, M. Modeling of the Fate and Behaviors of an Oil Spill in the Azemmour River Estuary in Morocco. Water 2023, 15, 1776.

24. Keramea, P.; Spanoudaki, K.; Zodiatis, G.; Gikas, G.; Sylaios, G. Oil Spill Modeling: A Critical Review on Current Trends, Perspectives, and Challenges. J. Mar. Sci. Eng. 2021, 9, 181.

25. Khan, M.; El Saddik, A.; Alotaibi, F.S.; Pham, N.T. AAD-Net: Advanced End-to-End Signal Processing System for Human Emotion Detection & Recognition Using Attention-Based Deep Echo State Network. Knowl.-Based Syst. 2023, 270, 110525.

26. Zhu, Q.; Zhang, Y.; Li, Z.; Yan, X.; Guan, Q.; Zhong, Y.; Zhang, L.; Li, D. Oil Spill Contextual and Boundary-Supervised Detection Network Based on Marine SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–10.

27. Prajapati, K.; Ramakrishnan, R.; Bhavsar, M.; Mahajan, A.; Narmawala, Z.; Bhavsar, A.; Raboaca, M.S.; Tanwar, S. Log Transformed Coherency Matrix for Differentiating Scattering Behaviour of Oil Spill Emulsions Using SAR Images. Mathematics 2022, 10, 1697.

28. Wang, D.; Wan, J.; Liu, S.; Chen, Y.; Yasir, M.; Xu, M.; Ren, P. BO-DRNet: An Improved Deep Learning Model for Oil Spill Detection by Polarimetric Features from SAR Images. Remote Sens. 2022, 14, 264.

29. Ma, X.; Xu, J.; Wu, P.; Kong, P. Oil Spill Detection Based on Deep Convolutional Neural Networks Using Polarimetric Scattering Information From Sentinel-1 SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

30. Temitope Yekeen, S.; Balogun, A.; Wan Yusof, K.B. A Novel Deep Learning Instance Segmentation Model for Automated Marine Oil Spill Detection. ISPRS J. Photogramm. Remote Sens. 2020, 167, 190–200.

31. Bianchi, F.M.; Espeseth, M.M.; Borch, N. Large-Scale Detection and Categorization of Oil Spills from SAR Images with Deep Learning. Remote Sens. 2020, 12, 2260.

32. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

33. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

34. Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017.

35. Zhang, Y.; Zhu, Q.; Guan, Q. Oil Spill Detection Based on CBD-Net Using Marine SAR Image. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3495–3498.

36. SkyTruth Gulf Oil Spill Covers 817 Square Miles. Available online: https://skytruth.org/2010/04/gulf-oil-spill-covers-817-square-miles/ (accessed on 28 July 2023).

37. SkyTruth Satellite Imagery Reveals Scope of Last Week’s Oil Spill in Kuwait. Available online: https://skytruth.org/2017/08/satellite-imagery-reveals-scope-of-last-weeks-massive-oil-spill-in-kuwait/ (accessed on 28 July 2023).

38. Erkan, U.; Thanh, D.N.H.; Hieu, L.M.; Engínoğlu, S. An Iterative Mean Filter for Image Denoising. IEEE Access 2019, 7, 167847–167859.

39. De Oliveira, M.E.; de Oliveira, G.N.; de Souza, J.C.; dos Santos, P.A.M. Photorefractive Moiré-like Patterns for the Multifringe Projection Method in Fourier Transform Profilometry. Appl. Opt. 2016, 55, 1048–1053.

40. Luo, P.; Zhang, M.; Ghassemlooy, Z.; Le Minh, H.; Tsai, H.-M.; Tang, X.; Png, L.C.; Han, D. Experimental Demonstration of RGB LED-Based Optical Camera Communications. IEEE Photonics J. 2015, 7, 1–12.

41. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

42. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021.

43. Ajit, A.; Acharya, K.; Samanta, A. A Review of Convolutional Neural Networks. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–5.

44. Liu, B.; Li, Y.; Li, G.; Liu, A. A Spectral Feature Based Convolutional Neural Network for Classification of Sea Surface Oil Spill. ISPRS Int. J. Geo-Inf. 2019, 8, 160.

45. Parmar, N.; Vaswani, A.; Uszkoreit, J.; Kaiser, L.; Shazeer, N.; Ku, A.; Tran, D. Image Transformer. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4055–4064.

46. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

47. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021.

48. Ruby, U.; Yendapalli, V. Binary Cross Entropy with Deep Learning Technique for Image Classification. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 5393–5397.

49. Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice Loss for Data-Imbalanced NLP Tasks. arXiv 2020.

50. Abadi, M. TensorFlow: Learning Functions at Scale. In Proceedings of the 21st ACM SIGPLAN International Conference on Functional Programming, Nara, Japan, 18–24 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; p. 1.

51. Pang, B.; Nijkamp, E.; Wu, Y.N. Deep Learning With TensorFlow: A Review. J. Educ. Behav. Stat. 2020, 45, 227–248.

52. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017.

53. He, F.; Liu, T.; Tao, D. Control Batch Size and Learning Rate to Generalize Well: Theoretical and Empirical Evidence. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

54. Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a Fast and Flexible Solution for CNN-Based Image Denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

55. Agarap, A.F. Deep Learning Using Rectified Linear Units (ReLU). arXiv 2019.

56. Bjorck, N.; Gomes, C.P.; Selman, B.; Weinberger, K.Q. Understanding Batch Normalization. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

57. Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How Does Batch Normalization Help Optimization? In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

58. Basrak, B. Fisher-Tippett Theorem. In International Encyclopedia of Statistical Science; Lovric, M., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 525–526. ISBN 978-3-642-04898-2.

59. Goodman, J.W. Some Effects of Target-Induced Scintillation on Optical Radar Performance. Proc. IEEE 1965, 53, 1688–1700.

60. Kurutach, T.; Clavera, I.; Duan, Y.; Tamar, A.; Abbeel, P. Model-Ensemble Trust-Region Policy Optimization. arXiv 2018.

61. Xiao, Y.; Wu, J.; Lin, Z.; Zhao, X. A Deep Learning-Based Multi-Model Ensemble Method for Cancer Prediction. Comput. Methods Programs Biomed. 2018, 153, 1–9.

62. Garrett, J.J. Ajax: A New Approach to Web Applications. Available online: https://courses.cs.washington.edu/courses/cse490h/07sp/readings/ajax_adaptive_path.pdf (accessed on 27 July 2023).

63. Caelen, O. A Bayesian interpretation of the confusion matrix. Ann. Math. Artif. Intell. 2017, 81, 429–450.

64. Wang, Z.; Wang, E.; Zhu, Y. Image Segmentation Evaluation: A Survey of Methods. Artif. Intell. Rev. 2020, 53, 5637–5674.

65. Yang, G.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Wang, J.; Zhang, X. Algorithm/Hardware Codesign for Real-Time On-Satellite CNN-Based Ship Detection in SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

66. Diana, L.; Xu, J.; Fanucci, L. Oil Spill Identification from SAR Images for Low Power Embedded Systems Using CNN. Remote Sens. 2021, 13, 3606.

| Sub-Dataset | Images | Capture Date | Latitude | Longitude |
|---|---|---|---|---|
| Palsar dataset | ALPSRP230200560 | 21 May 2010 | 28.734° | −90.707° |
| | ALPSRP231220540 | 28 May 2010 | 27.739° | −87.821° |
| | ALPSRP231220560 | 28 May 2010 | 28.728° | −88.026° |
| | ALPSRP231220580 | 28 May 2010 | 29.723° | −88.229° |
| | ALPSRP232970570 | 9 June 2010 | 29.224° | −87.056° |
| | ALPSRP232970580 | 9 June 2010 | 29.721° | −87.155° |
| | ALPSRS233043050 | 9 June 2010 | 28.389° | −88.302° |
| | ALPSRP235450560 | 26 June 2010 | 28.728° | −87.488° |
| | ALPSRP237930550 | 13 July 2010 | 28.233° | −87.926° |
| | ALPSRP237930560 | 13 July 2010 | 28.728° | −88.024° |
| | ALPSRP238660500 | 18 July 2010 | 25.758° | −89.029° |
| | ALPSRP238660550 | 18 July 2010 | 28.237° | −89.535° |
| | ALPSRS239753100 | 25 July 2010 | 25.719° | −88.871° |
| | ALPSRP241870520 | 9 August 2010 | 26.749° | −91.371° |
| Sentinel dataset | IW_GRDH_1SDV_017782_01DCBB_DE28 | 5 August 2017 | 27.311° | 50.597° |
| | IW_GRDH_1SDV_017848_01DEC1_EC32 | 9 August 2017 | 28.495° | 48.995° |
| | IW_GRDH_1SDV_017848_01DEC1_C09A | 9 August 2017 | 29.002° | 48.660° |
| | IW_GRDH_1SDV_017855_01DEF7_F48C | 10 August 2017 | 29.439° | 47.535° |
| | IW_GRDH_1SDV_017884_01DFD8_8F93 | 12 August 2017 | 27.014° | 52.812° |
| | IW_GRDH_1SDV_017884_01DFD8_6CAE | 12 August 2017 | 25.505° | 52.485° |
| | IW_GRDH_1SDV_017921_01E0ED_DB39 | 14 August 2017 | 28.706° | 46.893° |

| Model | TP | FN | FP | TN |
|---|---|---|---|---|
| UNet | 40,471,803 | 1,837,937 | 2,072,259 | 6,473,937 |
| SegNet | 40,544,155 | 1,765,585 | 2,291,311 | 6,254,885 |
| DeepLabV3+ | 40,732,236 | 1,577,504 | 2,199,864 | 6,346,332 |
| TransUNet (Section 3) | 40,862,850 | 1,446,890 | 1,850,104 | 6,696,092 |
| FFDNet-TransUNet (Section 4) | 39,651,870 | 2,657,870 | 1,334,025 | 7,212,171 |
| Multi-model ensemble (Section 5) | 41,158,779 | 1,150,961 | 2,115,620 | 6,430,576 |

| Model | Accuracy (A) | Precision (P) | Recall (R) | F1 | MIoU |
|---|---|---|---|---|---|
| UNet | 92.31% | 95.13% | 95.66% | 95.39% | 76.77% |
| SegNet | 92.02% | 94.65% | 95.83% | 95.24% | 75.78% |
| DeepLabV3+ | 92.57% | 94.88% | 96.27% | 95.57% | 77.10% |
| TransUNet (Section 3) | 93.52% | 95.67% | 96.58% | 96.12% | 79.77% |
| FFDNet-TransUNet (Section 4) | 92.15% | 96.75% | 93.72% | 95.21% | 77.61% |
| Multi-model ensemble (Section 5) | 93.58% | 95.11% | 97.28% | 96.18% | 79.48% |
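
The metrics in the table above can be recomputed from the confusion-matrix counts in the table before it. The sketch below uses the standard definitions of accuracy, precision, recall, and F1; treating MIoU as the mean of the two per-class IoU values is an assumption on our part, chosen because it is consistent with the reported numbers. The function name `segmentation_metrics` is hypothetical.

```python
def segmentation_metrics(tp: int, fn: int, fp: int, tn: int) -> dict[str, float]:
    """Derive pixel-level metrics from binary confusion-matrix counts."""
    total = tp + fn + fp + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # IoU of the positive class and of the negative class, then their mean.
    iou_positive = tp / (tp + fn + fp)
    iou_negative = tn / (tn + fn + fp)
    return {
        "A": (tp + tn) / total,
        "P": precision,
        "R": recall,
        "F1": 2 * precision * recall / (precision + recall),
        "MIoU": (iou_positive + iou_negative) / 2,
    }


# Counts taken from the UNet row of the confusion-matrix table.
unet = segmentation_metrics(tp=40_471_803, fn=1_837_937, fp=2_072_259, tn=6_473_937)
for name, value in unet.items():
    print(f"{name}: {value:.2%}")
```

Run on the UNet counts, this prints 92.31%, 95.13%, 95.66%, 95.39%, and 76.77%, matching the UNet row of the metrics table.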

| Model | TP | FN | FP | TN |
|---|---|---|---|---|
| UNet | 33,115,771 | 2,777,721 | 4,147,261 | 14,943,951 |
| SegNet | 32,622,424 | 3,271,068 | 3,454,134 | 15,637,078 |
| DeepLabV3+ | 33,987,411 | 1,906,081 | 4,338,061 | 14,753,151 |
| TransUNet (Section 3) | 31,812,614 | 4,080,878 | 2,245,007 | 16,846,205 |
| FFDNet-TransUNet (Section 4) | 32,566,136 | 3,327,356 | 2,930,835 | 16,160,377 |
| Multi-model ensemble (Section 5) | 34,742,854 | 1,150,638 | 4,912,519 | 14,178,693 |

| Model | Accuracy (A) | Precision (P) | Recall (R) | F1 | MIoU |
|---|---|---|---|---|---|
| UNet | 87.41% | 88.87% | 92.26% | 90.53% | 75.52% |
| SegNet | 87.77% | 90.43% | 90.89% | 90.66% | 76.42% |
| DeepLabV3+ | 88.64% | 88.68% | 94.69% | 91.59% | 77.37% |
| TransUNet (Section 3) | 88.50% | 93.41% | 88.63% | 90.96% | 78.06% |
| FFDNet-TransUNet (Section 4) | 88.62% | 91.74% | 90.73% | 91.23% | 77.98% |
| Multi-model ensemble (Section 5) | 88.97% | 87.61% | 96.79% | 91.97% | 77.59% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, X.; Li, J.; Li, B.; Jin, Y.; Miao, S. Marine Oil Spill Detection from Low-Quality SAR Remote Sensing Images. J. Mar. Sci. Eng. 2023, 11, 1552. https://doi.org/10.3390/jmse11081552