Real-World Underwater Image Enhancement Based on Attention U-Net

Abstract

1. Introduction

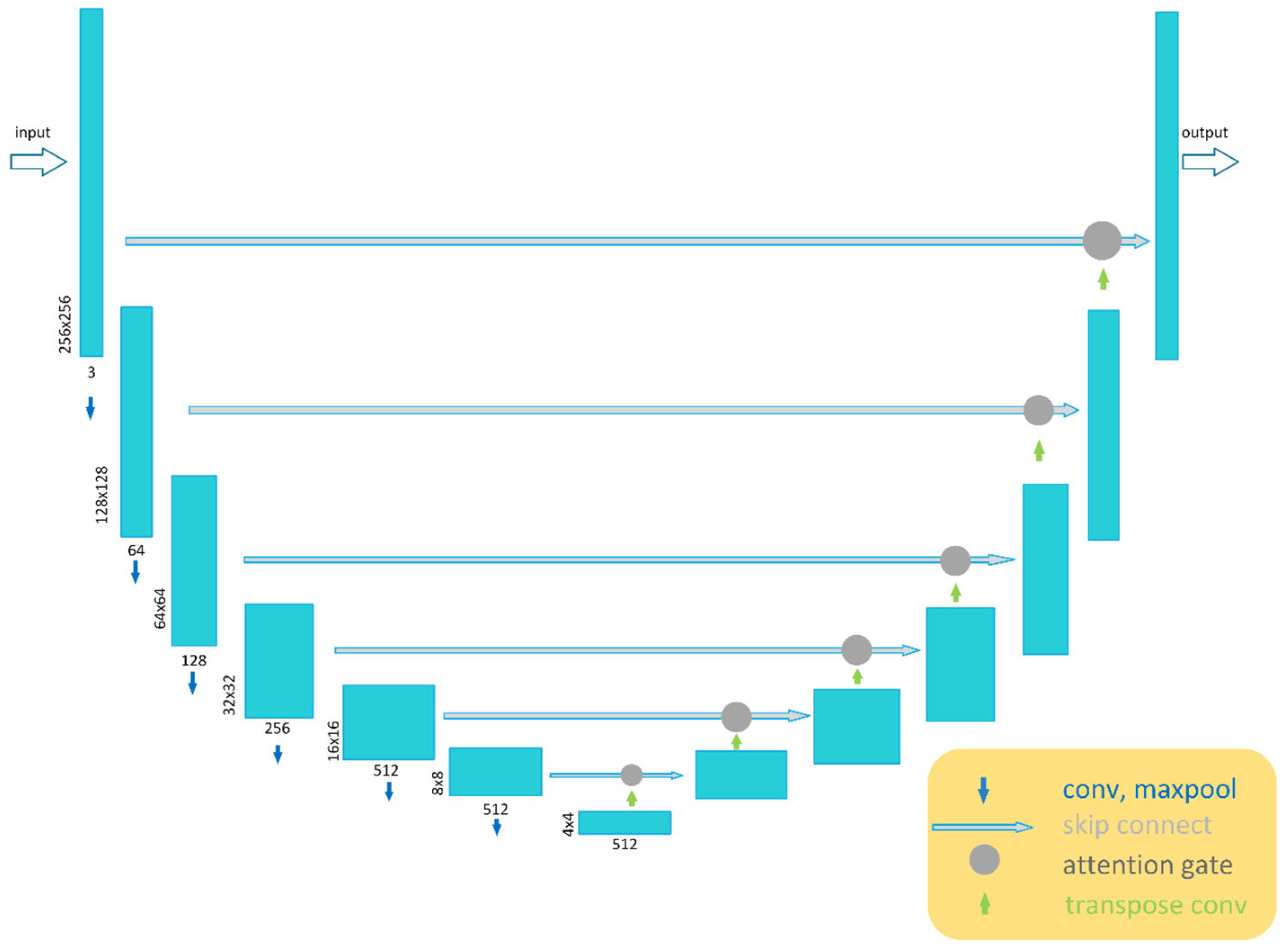

- We propose a generative adversarial network (GAN) for underwater image enhancement built on the attention-gate (AG) mechanism. The AG is integrated into the standard U-Net architecture to select the most relevant feature information;

- We formulate a new objective function and train our model end-to-end on a real-world underwater image dataset. Experiments demonstrate that our model outperforms several state-of-the-art methods in both qualitative and quantitative evaluations.
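To make the attention-gate idea in the first contribution concrete, the following is a minimal NumPy sketch of an additive attention gate applied to a U-Net skip connection. It is not the authors' implementation: the function name `attention_gate`, the weight shapes, and the assumption that the gating signal `g` has already been resampled to the spatial size of the skip features `x` are all illustrative choices, and the 1x1 convolutions are written as per-pixel channel mixes.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, psi, b=0.0, b_psi=0.0):
    """Additive attention gate (illustrative sketch, hypothetical signature).

    x    : (C_x, H, W) skip-connection features from the encoder
    g    : (C_g, H, W) gating signal from the decoder (assumed same H, W as x)
    w_x  : (C_int, C_x) weights of a 1x1 convolution on x
    w_g  : (C_int, C_g) weights of a 1x1 convolution on g
    psi  : (C_int,)     weights that collapse to one attention channel
    """
    theta = np.einsum("ic,chw->ihw", w_x, x)   # project skip features
    phi = np.einsum("ic,chw->ihw", w_g, g)     # project gating signal
    q = np.maximum(theta + phi + b, 0.0)       # ReLU of the sum
    alpha = sigmoid(np.einsum("i,ihw->hw", psi, q) + b_psi)  # coefficients in [0, 1]
    return x * alpha[None, :, :], alpha        # rescale every channel of x

# Toy example with random tensors (shapes are arbitrary assumptions).
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
g = rng.standard_normal((6, 8, 8))
w_x = rng.standard_normal((3, 4))
w_g = rng.standard_normal((3, 6))
psi = rng.standard_normal(3)
gated, alpha = attention_gate(x, g, w_x, w_g, psi)
print(gated.shape, alpha.shape)
```

The gate produces one coefficient per spatial location, so regions the decoder considers irrelevant are attenuated in the skip features before they are concatenated into the decoder path.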

2. Related Works

2.1. Generative Adversarial Nets

2.2. Conditional Adversarial Nets

3. Proposed Model

3.1. Generator with Skip

3.2. Discriminator

3.3. Loss Function

4. Experimental Results and Analysis

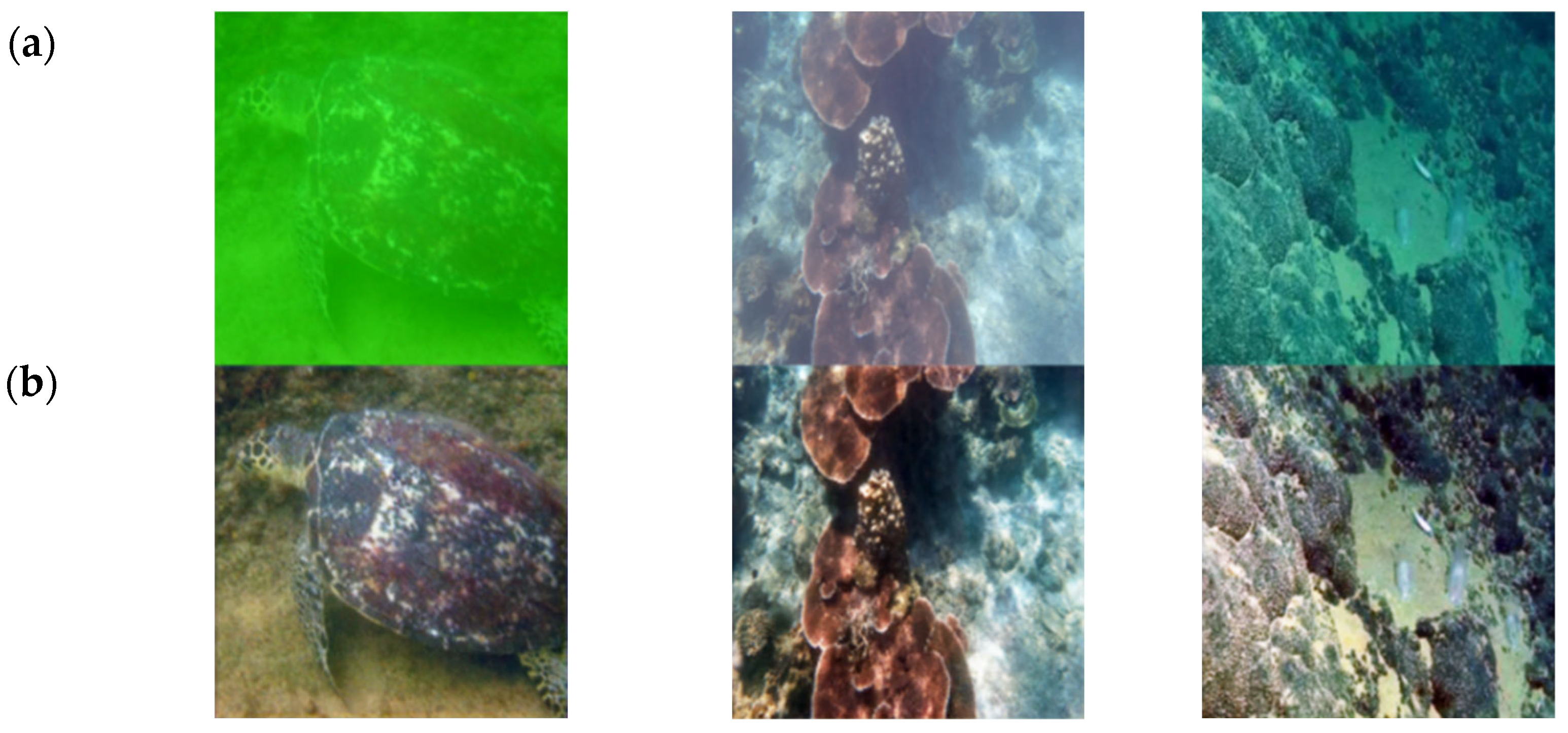

4.1. Datasets

4.2. Experimental Environment

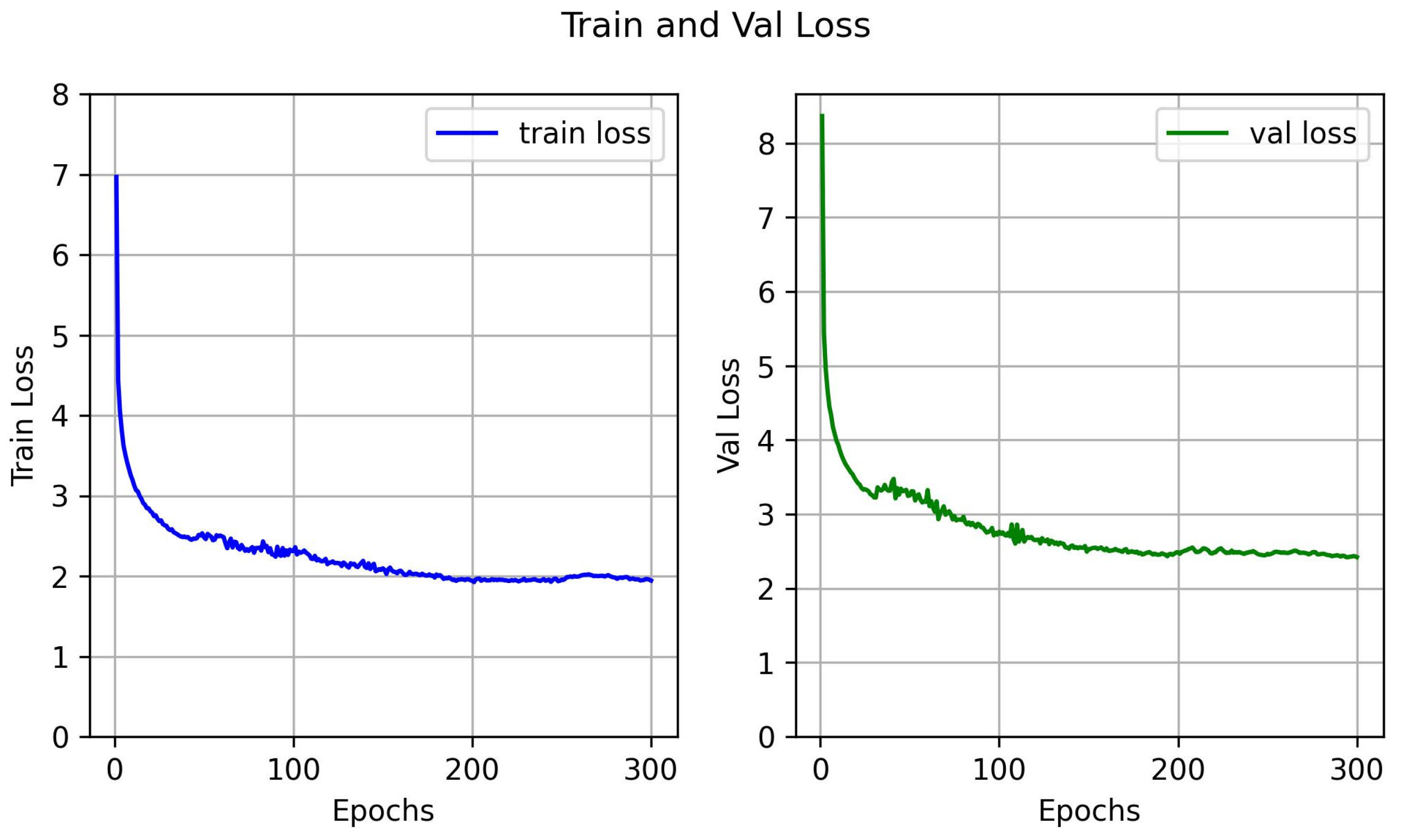

4.3. Evaluations

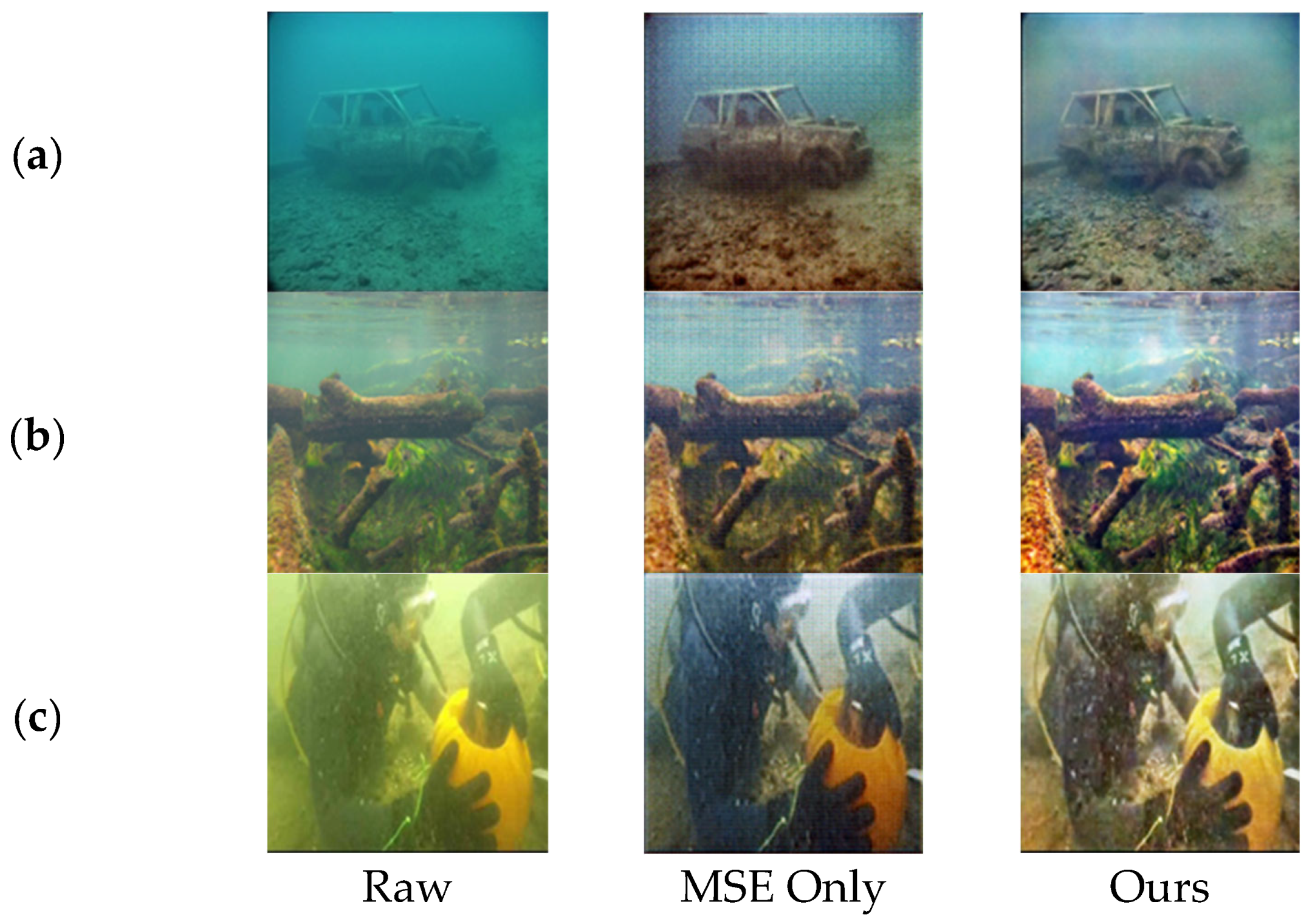

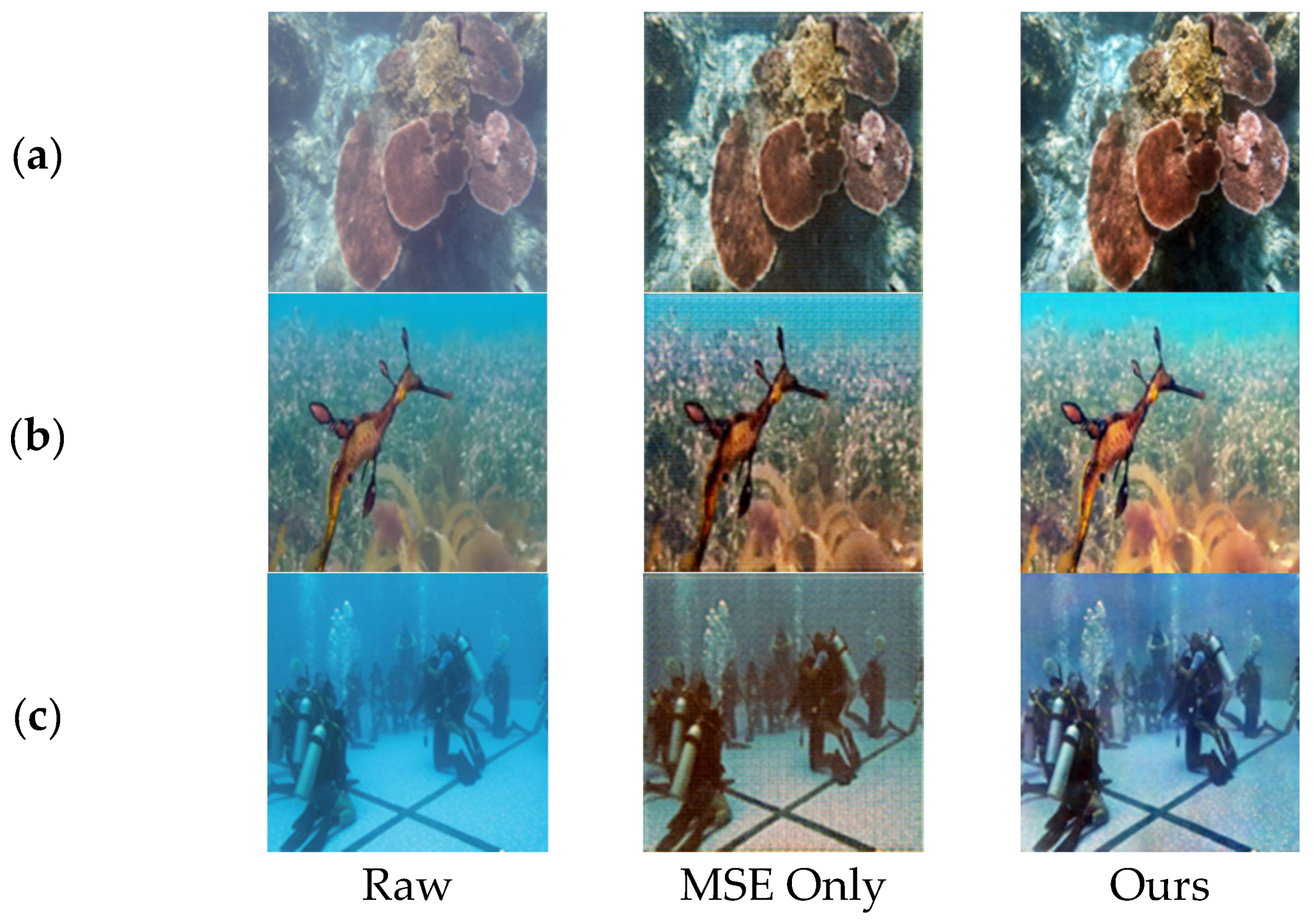

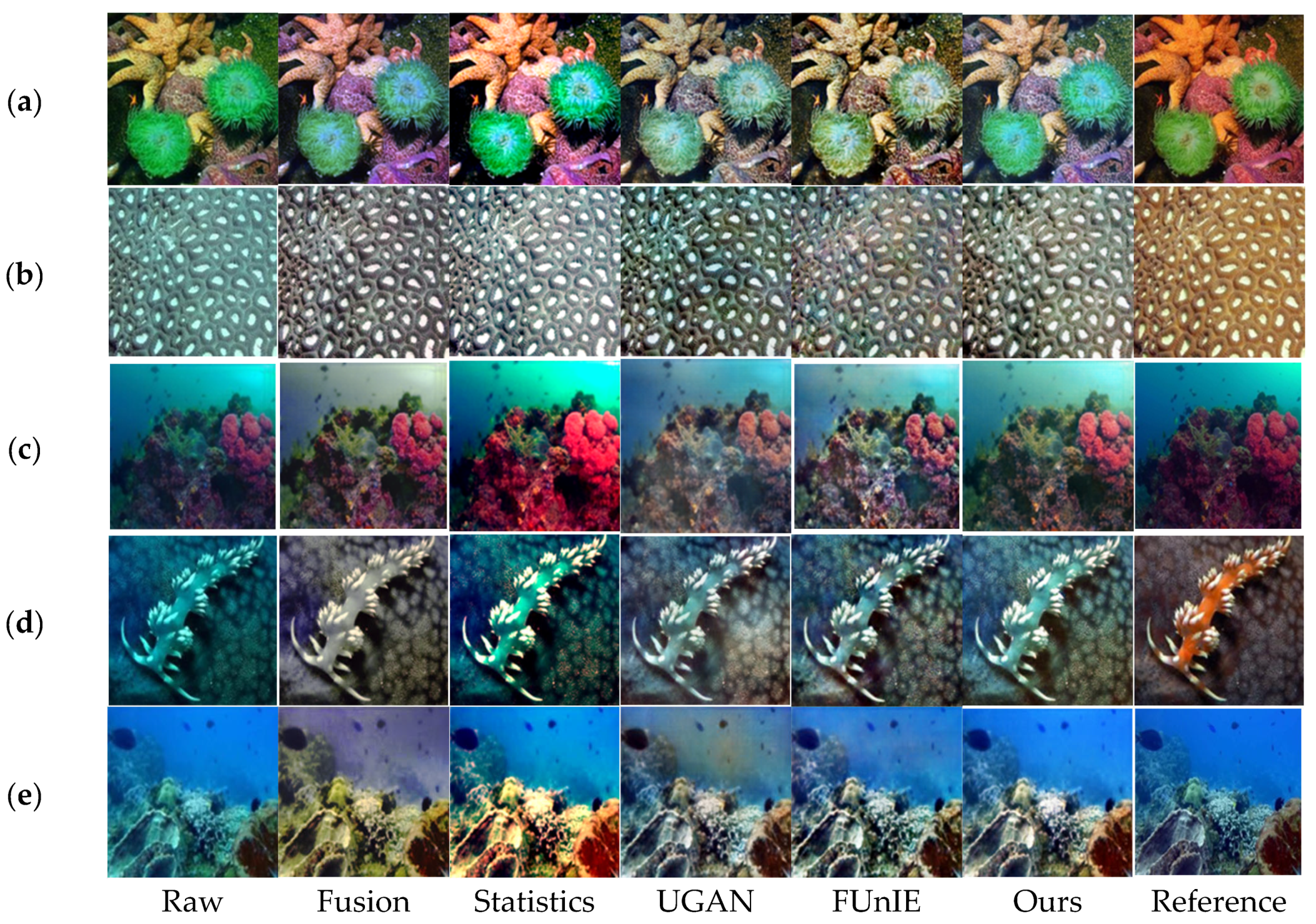

4.3.1. Subjective Evaluation

4.3.2. Objective Evaluation

4.3.3. Ablation Experiments

4.3.4. Generalizability Verification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | PSNR (dB) | SSIM |
|---|---|---|
| Fusion | 20.709 | 0.886 |
| Statistic | 20.466 | 0.825 |
| FUnIE | 21.119 | 0.787 |
| UGAN | 20.734 | 0.856 |
| AttU-GAN | 21.852 | 0.875 |
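For readers unfamiliar with the PSNR column, the sketch below shows the standard definition, PSNR = 10 log10(peak^2 / MSE), computed between a reference image and an enhanced image. This section does not state the exact evaluation protocol, so the 8-bit peak value of 255 and the helper name `psnr` are assumptions made for illustration.

```python
import numpy as np

def psnr(reference, enhanced, peak=255.0):
    """Peak signal-to-noise ratio in dB (standard definition; peak=255 assumed)."""
    reference = np.asarray(reference, dtype=np.float64)
    enhanced = np.asarray(enhanced, dtype=np.float64)
    mse = np.mean((reference - enhanced) ** 2)  # mean squared error over all pixels
    if mse == 0:
        return float("inf")                     # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

# Toy example: a flat image where one channel of one pixel is off by 10.
ref = np.full((4, 4, 3), 100, dtype=np.uint8)
out = ref.copy()
out[0, 0, 0] = 110
value = psnr(ref, out)
print(f"PSNR = {value:.2f} dB")
```

Higher values indicate that the enhanced image is closer to the reference, which is how the PSNR column above should be read.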

| Method | UCIQE |
|---|---|
| Fusion | 0.926 |
| Statistic | 0.713 |
| FUnIE | 0.782 |
| UGAN | 0.891 |
| DEA-Net | 0.649 |
| AttU-GAN | 0.936 |

| Method | UICM | UISM | UICONM | UIQM |
|---|---|---|---|---|
| Fusion | 5.271 | 6.140 | 0.278 | 2.957 |
| Statistic | 4.482 | 5.646 | 0.208 | 2.537 |
| FUnIE | 5.375 | 6.962 | 0.246 | 3.096 |
| UGAN | 5.363 | 6.658 | 0.251 | 3.015 |
| DEA-Net | 3.420 | 5.328 | 0.236 | 2.513 |
| AttU-GAN | 6.587 | 6.839 | 0.237 | 3.053 |
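The UIQM column can be related to the three component columns through the usual weighted sum UIQM = c1·UICM + c2·UISM + c3·UICONM. The sketch below recomputes UIQM from the rows of the table above, assuming the weights commonly used with this metric (c1 = 0.0282, c2 = 0.2953, c3 = 3.5753); this section does not state which weights the authors used, so treat them as an assumption.

```python
# Weights commonly used for UIQM; assumed here, not stated in this section.
C1, C2, C3 = 0.0282, 0.2953, 3.5753

def uiqm(uicm, uism, uiconm):
    """Weighted sum of colourfulness, sharpness and contrast measures."""
    return C1 * uicm + C2 * uism + C3 * uiconm

# (UICM, UISM, UICONM, reported UIQM) copied from the table above.
rows = {
    "Fusion":    (5.271, 6.140, 0.278, 2.957),
    "Statistic": (4.482, 5.646, 0.208, 2.537),
    "FUnIE":     (5.375, 6.962, 0.246, 3.096),
    "UGAN":      (5.363, 6.658, 0.251, 3.015),
    "DEA-Net":   (3.420, 5.328, 0.236, 2.513),
    "AttU-GAN":  (6.587, 6.839, 0.237, 3.053),
}

for name, (uicm, uism, uiconm, reported) in rows.items():
    recomputed = uiqm(uicm, uism, uiconm)
    print(f"{name:10s} recomputed={recomputed:.3f} reported={reported:.3f}")
```

Under these assumed weights the recomputed values agree with the reported UIQM column to within about 0.01; the small residual gaps may reflect rounding or implementation details that cannot be verified from this section.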

| Method | PSNR (dB) | SSIM |
|---|---|---|
| MSE Only | 19.760 | 0.807 |
| MAE Only | 21.816 | 0.870 |
| AttU-GAN | 21.852 | 0.875 |

| Method | UCIQE |
|---|---|
| MSE Only | 0.928 |
| MAE Only | 0.713 |
| AttU-GAN | 0.936 |

| Method | UICM | UISM | UICONM | UIQM |
|---|---|---|---|---|
| MSE Only | 6.794 | 6.396 | 0.218 | 2.860 |
| MAE Only | 6.454 | 6.802 | 0.241 | 3.051 |
| AttU-GAN | 6.587 | 6.839 | 0.237 | 3.053 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, P.; Li, L.; Xue, Y.; Lv, M.; Jia, Z.; Ma, H. Real-World Underwater Image Enhancement Based on Attention U-Net. J. Mar. Sci. Eng. 2023, 11, 662. https://doi.org/10.3390/jmse11030662
