A Two-Stage Weed Detection and Localization Method for Lily Fields Targeting Laser Weeding

Abstract

1. Introduction

- (1)

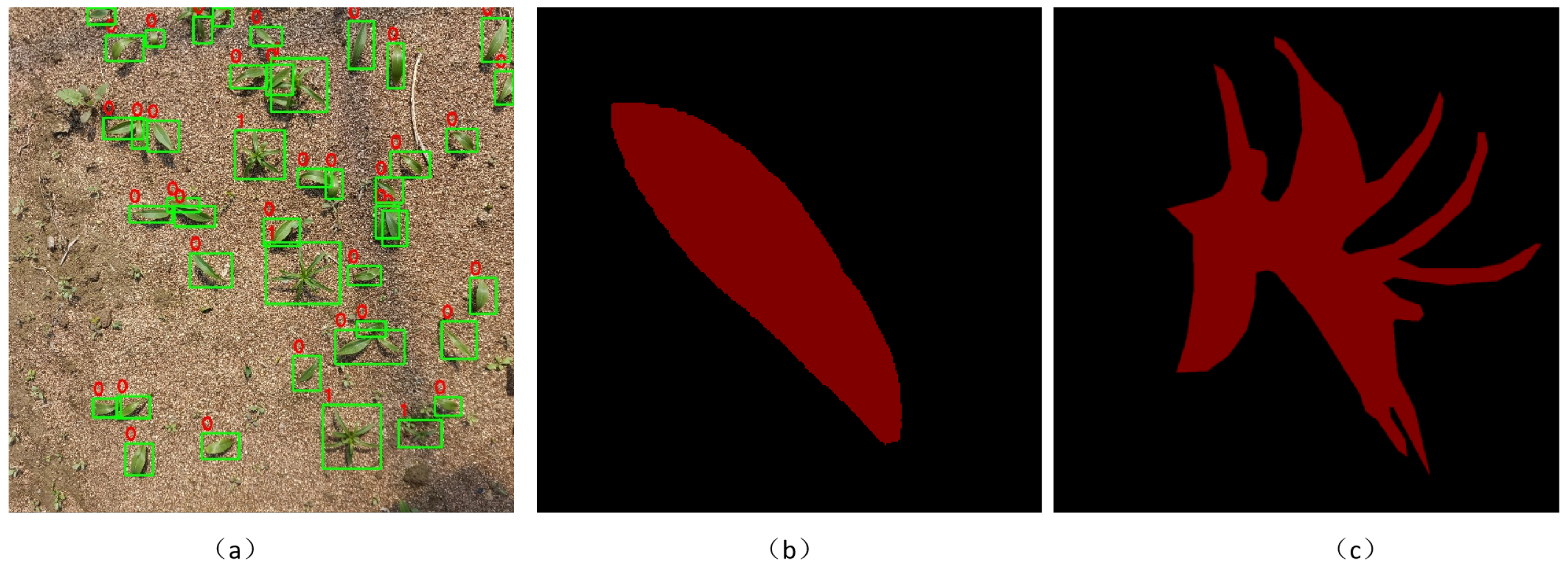

- A high-quality image dataset for weed detection in lily fields was constructed, consisting of 1200 images collected from lily fields in Yuzhong, Lanzhou, Gansu Province, China. The dataset covers various natural conditions, including different times of day, lighting conditions, weed densities, and lily growth stages. All images were manually annotated with high precision. Built under real farmland conditions, the dataset is highly representative and provides reliable data support for the development and evaluation of weed detection algorithms in complex environments.

- (2)

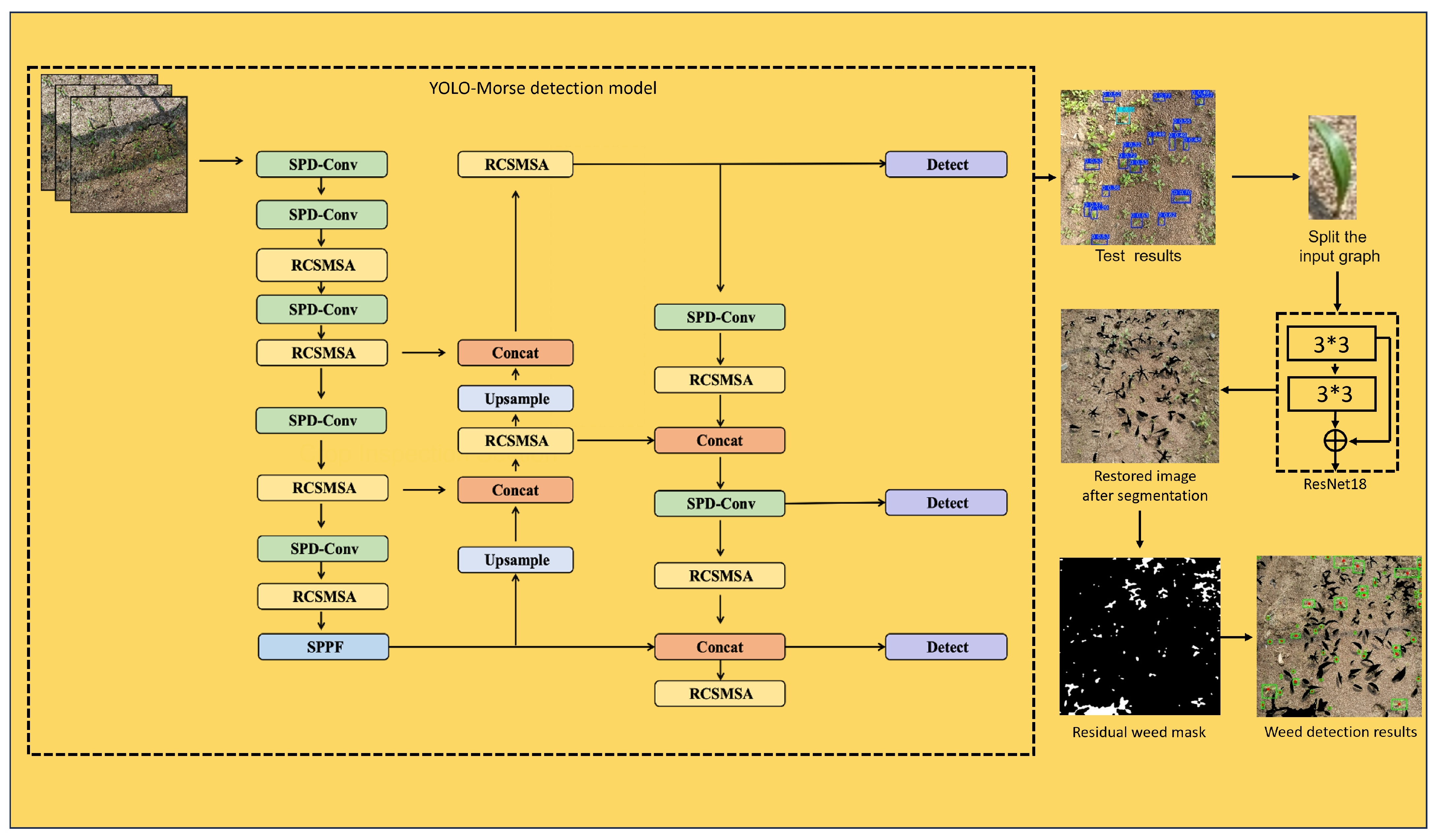

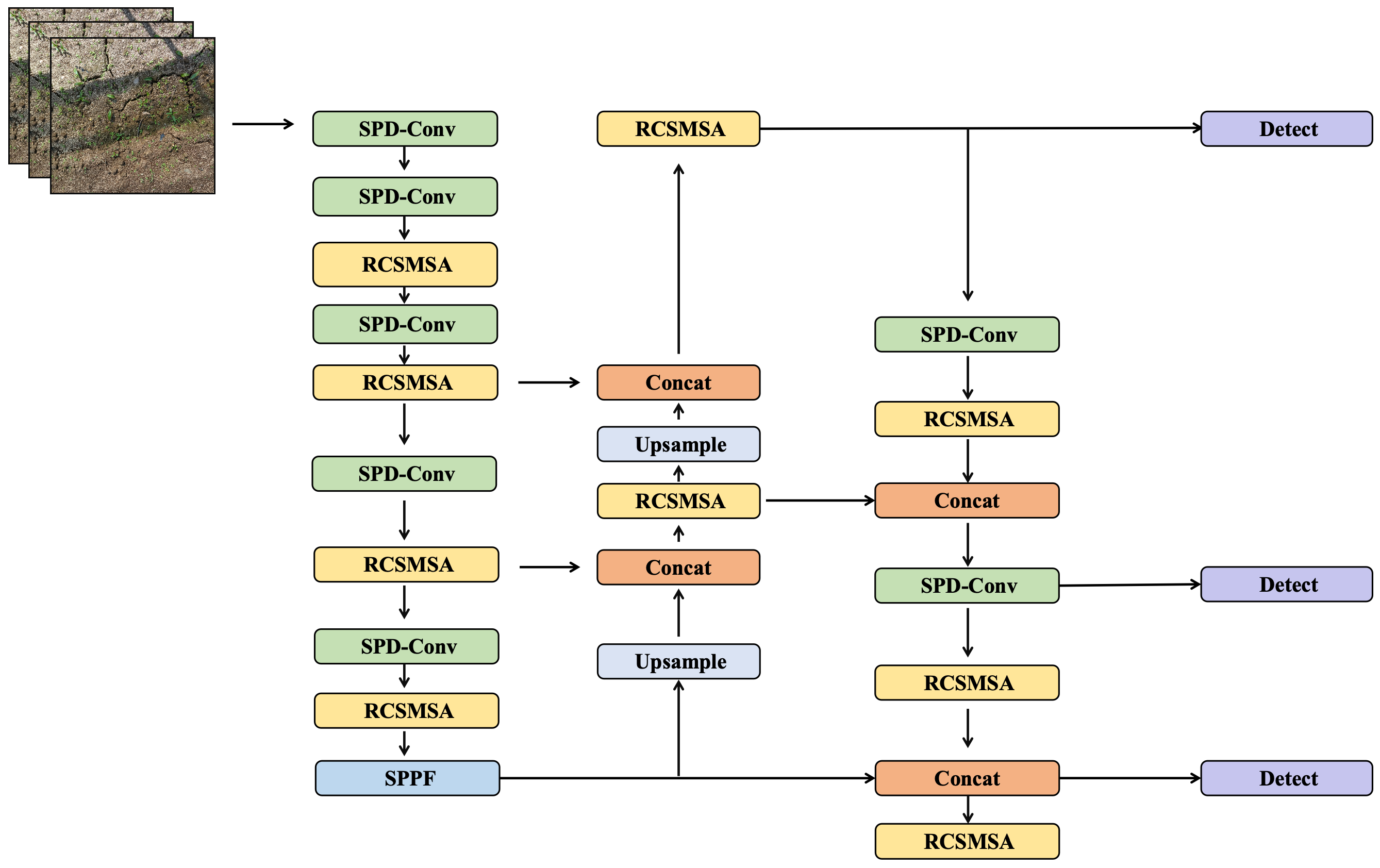

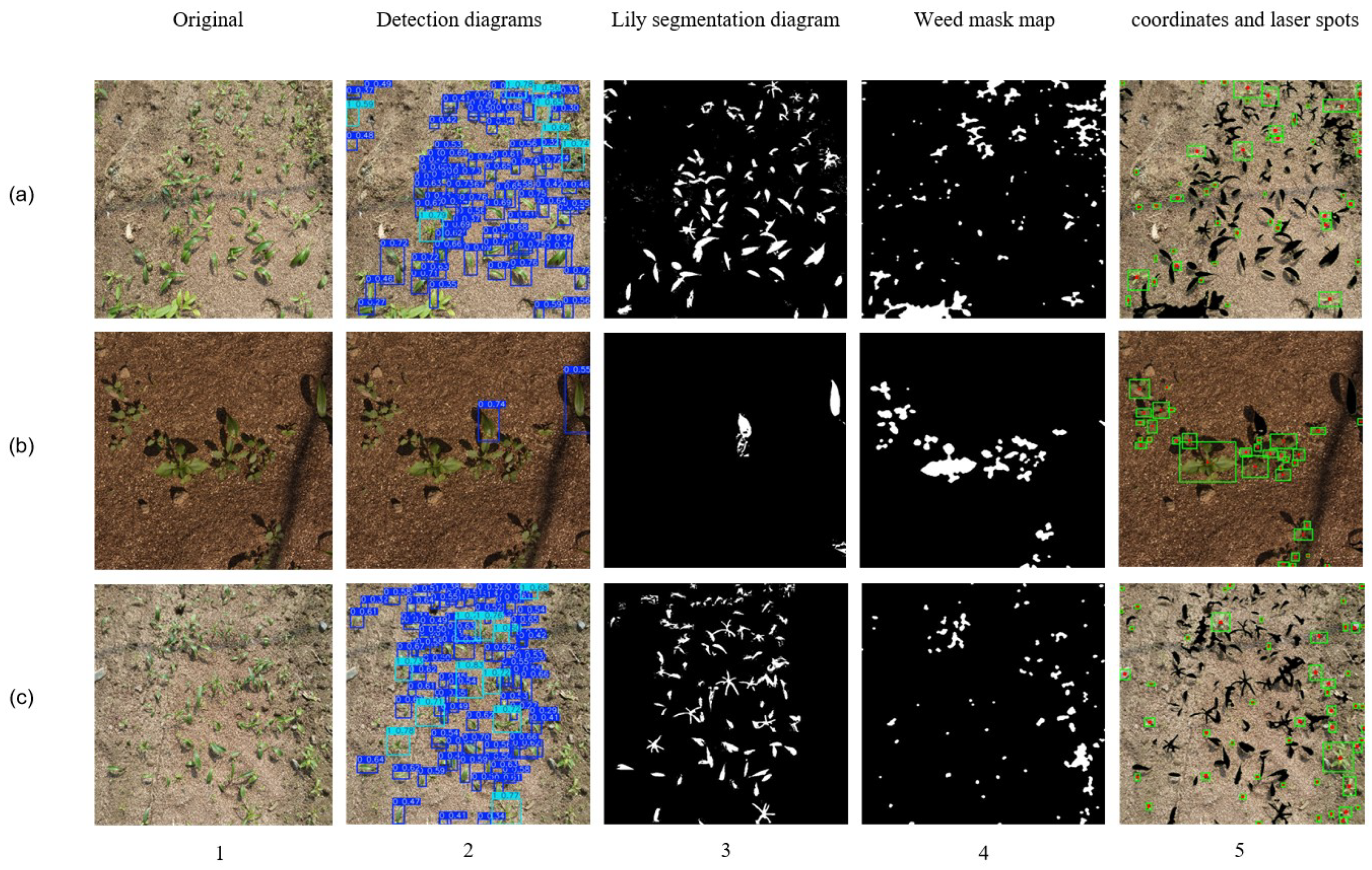

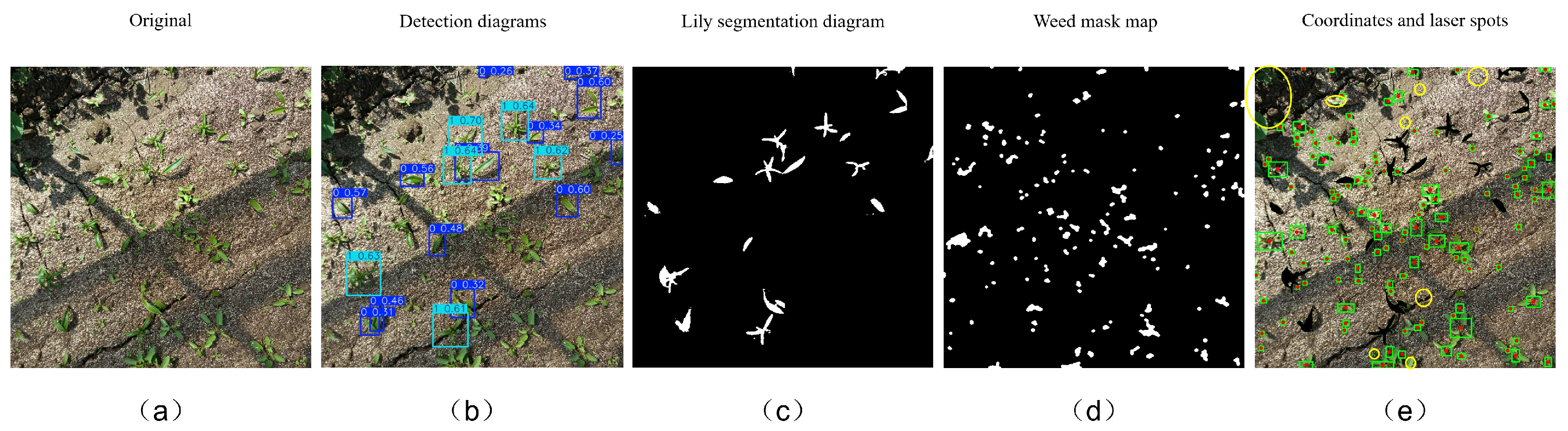

- To address the challenges in lily images, such as small target size, significant pose variations, and distinct phenological stages, this study proposes a collaborative crop-region removal method based on detection and segmentation. In the detection stage, an improved YOLOv8-Morse network is constructed. Specifically, SPD-Conv and ATFI modules are introduced and modified in the feature extraction stage to enhance small object perception and alleviate the issue of sample imbalance. Meanwhile, a multi-scale feature fusion module (MSFM) is designed to strengthen cross-level feature interaction. On this basis, the MSFM is combined with the improved RCS-OSA structure to develop the RCS-MSA attention module, which serves as the core feature enhancement component of the network. In the segmentation stage, a lightweight ResNet18 network is adopted to achieve the high-precision extraction of lily regions while balancing boundary extraction accuracy and computational efficiency. Experimental results demonstrate that the proposed method can effectively separate lily and weed regions under complex field environments, significantly improving the accuracy of subsequent weed detection and providing reliable technical support for intelligent laser weeding.

- (3) A weed region extraction and localization strategy based on color space analysis was designed. After the lily crop regions are removed, the remaining weed areas in the field image are segmented using HSV color space thresholding combined with morphological processing. The centroid coordinates of the segmented weed regions are then calculated using spatial moments and used as laser targeting positions. This method reduces the system’s reliance on deep models while improving the real-time performance and accuracy of weed targeting. Moreover, the method can be extended to other agricultural scenarios with similar crop–weed color characteristics, highlighting its broader applicability.
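The localization steps in contribution (3) can be sketched in a few lines. The following is a minimal, pure-Python illustration rather than the authors' implementation: it thresholds pixels in HSV space, applies a 3×3 morphological opening to drop isolated noise, and computes each remaining region's centroid from the spatial moments m00, m10, and m01. The function names, the green hue band, the saturation and value limits, and the toy image are all assumptions chosen for illustration; a practical system would more likely use an image-processing library and thresholds tuned on field data.

```python
import colorsys
from collections import deque


def green_mask(rgb_image, h_range=(0.17, 0.47), s_min=0.25, v_min=0.20):
    """Binary mask of pixels inside an assumed 'green' HSV band (h, s, v in [0, 1])."""
    mask = []
    for row in rgb_image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            inside = h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


def _morph(mask, op):
    """3x3 erosion (op=min) or dilation (op=max); out-of-bounds pixels count as 0."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [
                mask[yy][xx] if 0 <= yy < rows and 0 <= xx < cols else 0
                for yy in (y - 1, y, y + 1)
                for xx in (x - 1, x, x + 1)
            ]
            out[y][x] = op(window)
    return out


def opening(mask):
    """Morphological opening: erosion followed by dilation."""
    return _morph(_morph(mask, min), max)


def region_centroids(mask):
    """Centroid (x, y) of each 4-connected region, computed as (m10/m00, m01/m00)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for y0 in range(rows):
        for x0 in range(cols):
            if mask[y0][x0] == 0 or seen[y0][x0]:
                continue
            m00 = m10 = m01 = 0
            queue = deque([(y0, x0)])
            seen[y0][x0] = True
            while queue:
                y, x = queue.popleft()
                m00 += 1   # zeroth moment: region area in pixels
                m10 += x   # first moment along x
                m01 += y   # first moment along y
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            centroids.append((m10 / m00, m01 / m00))
    return centroids


def demo_image():
    """Hypothetical 7x7 RGB image: brown soil, one 3x3 green patch, one noise pixel."""
    soil, plant = (120, 80, 40), (40, 180, 40)
    image = [[soil] * 7 for _ in range(7)]
    for y in range(1, 4):
        for x in range(2, 5):
            image[y][x] = plant
    image[5][0] = plant  # isolated green pixel that the opening should remove
    return image


if __name__ == "__main__":
    targets = region_centroids(opening(green_mask(demo_image())))
    print(targets)
```

In this toy case the isolated pixel is removed by the opening and the single remaining patch yields one target at the patch centre. Computing one centroid per connected region, rather than one for the whole mask, keeps separate weeds from being averaged into a single aiming point.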

2. Materials and Methods

2.1. Dataset Acquisition

2.2. Workflow of the Dual-Stage Weed Detection Method

2.3. Improved YOLOv8-Morse Algorithm

2.3.1. RCS-MSA Module

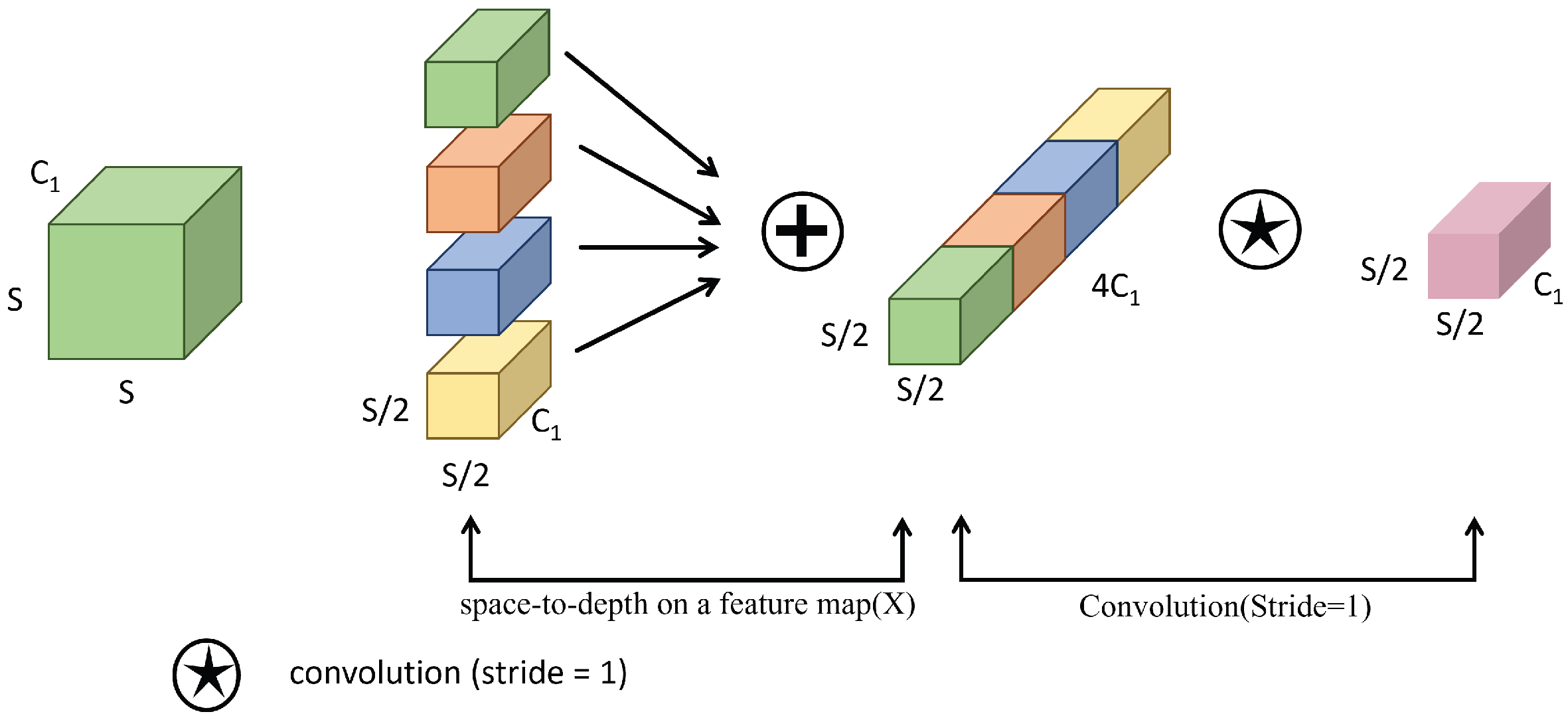

2.3.2. SPD-Conv Module
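As a rough illustration of the space-to-depth idea that gives SPD-Conv its name, the sketch below rearranges a (C, H, W) feature map into (4C, H/2, W/2) without discarding any values, then mixes channels with a stride-1 convolution. It is a generic pure-Python sketch and does not reproduce the modified module used in this work: the function names, the scale factor of 2, the channel ordering, and the use of a 1×1 kernel for the non-strided convolution are all assumptions made for brevity.

```python
def space_to_depth(x, scale=2):
    """Rearrange a (C, H, W) nested list into (C * scale**2, H // scale, W // scale)."""
    height, width = len(x[0]), len(x[0][0])
    if height % scale or width % scale:
        raise ValueError("H and W must be divisible by scale")
    out = []
    for dy in range(scale):        # row offset of the sub-sampled grid
        for dx in range(scale):    # column offset of the sub-sampled grid
            for channel in x:
                out.append([row[dx::scale] for row in channel[dy::scale]])
    return out


def pointwise_conv(x, weights):
    """Stride-1 1x1 convolution: weights[o][i] mixes input channel i into output o."""
    height, width = len(x[0]), len(x[0][0])
    return [
        [
            [sum(w * x[i][r][c] for i, w in enumerate(w_out)) for c in range(width)]
            for r in range(height)
        ]
        for w_out in weights
    ]


if __name__ == "__main__":
    feature = [[[0, 1, 2, 3],
                [4, 5, 6, 7],
                [8, 9, 10, 11],
                [12, 13, 14, 15]]]            # one channel, 4x4
    stacked = space_to_depth(feature)          # four channels, 2x2 each
    print(stacked)
    print(pointwise_conv(stacked, [[0.25, 0.25, 0.25, 0.25]]))
```

Unlike a strided convolution or pooling layer, the rearrangement keeps every input value and moves spatial detail into the channel dimension, which is why this family of blocks is often used for small objects.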

2.3.3. ATFL Loss Function

2.3.4. Weed Centroid Coordinate Extraction Method

3. Experiments

3.1. Experimental Setup

3.2. Evaluation Metrics
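The metrics reported in the tables (precision, recall, mAP@0.5, and mIoU) are built from a few basic quantities. The sketch below shows the standard textbook definitions in pure Python, assuming they match the ones used here; the function names and sample values are illustrative only. For mAP@0.5, a detection is conventionally counted as a true positive when its IoU with a ground-truth box is at least 0.5.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def mean_iou(pred, target, num_classes):
    """Mean per-class IoU over flat label lists; classes absent from both are skipped."""
    ious = []
    for cls in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
        union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    print(precision_recall(tp=8, fp=2, fn=2))
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))
```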

3.3. Results

3.3.1. Comparison of Different Convolutional Blocks for Small Object Detection

3.3.2. Ablation Study Analysis

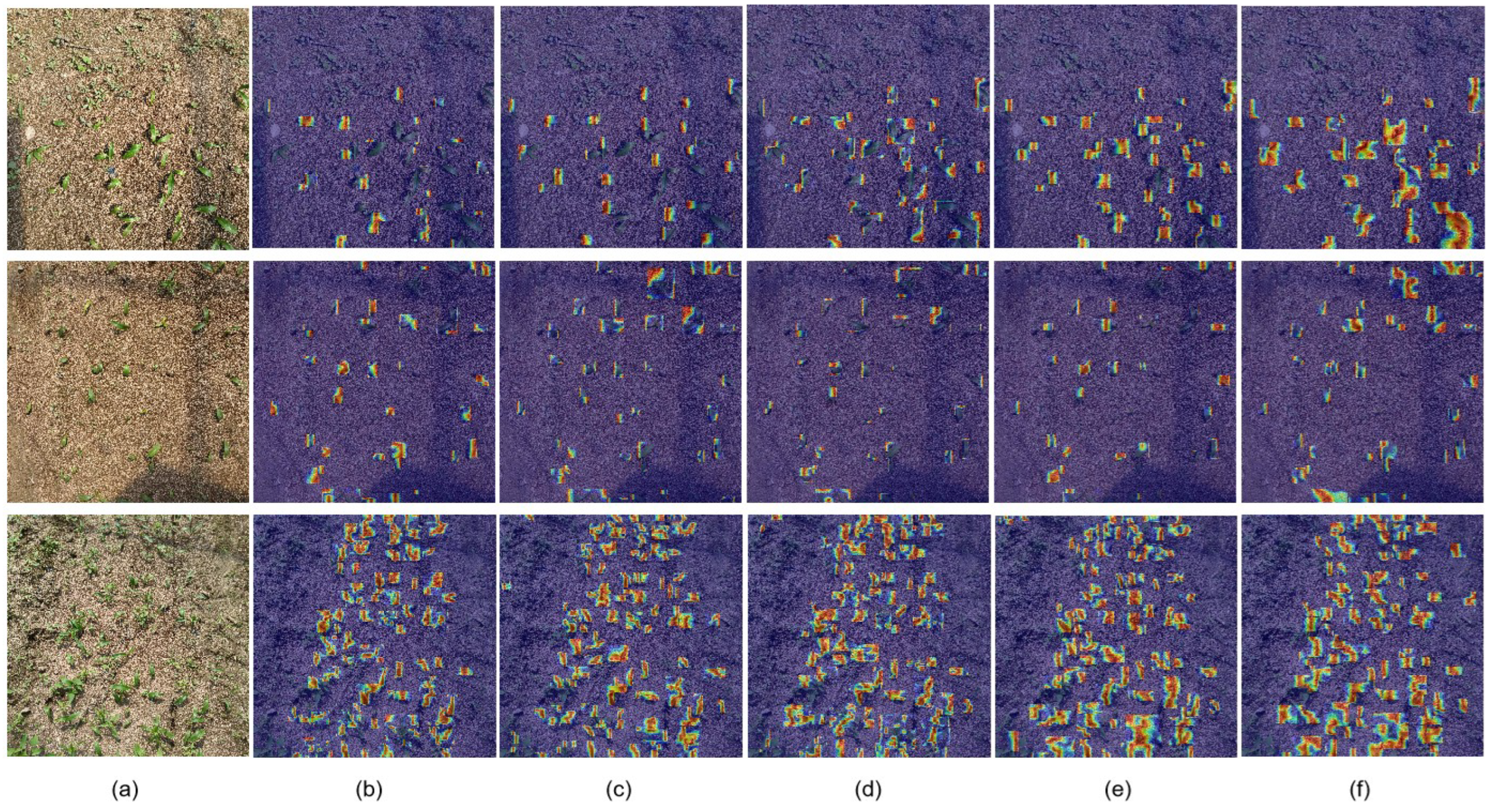

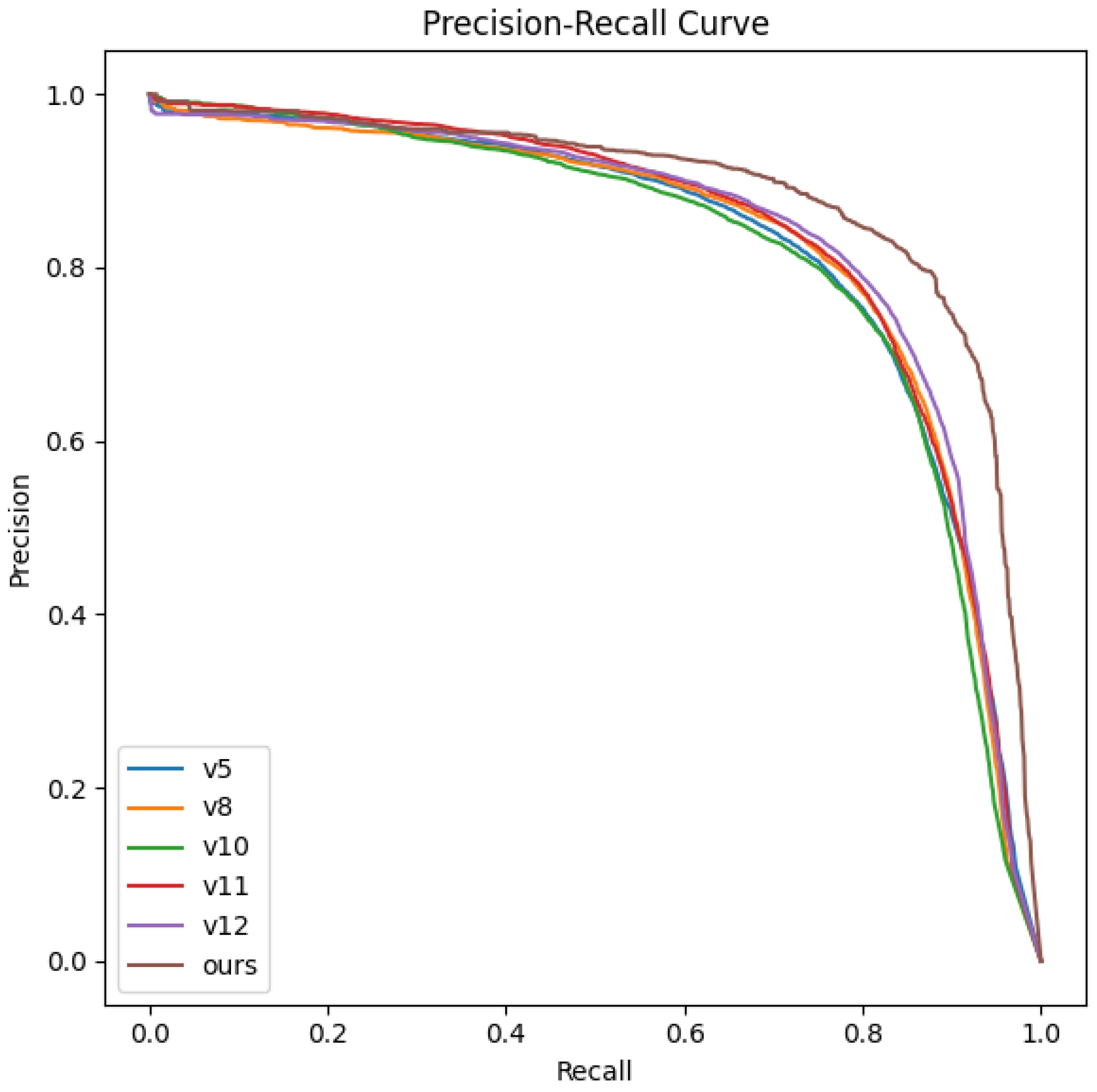

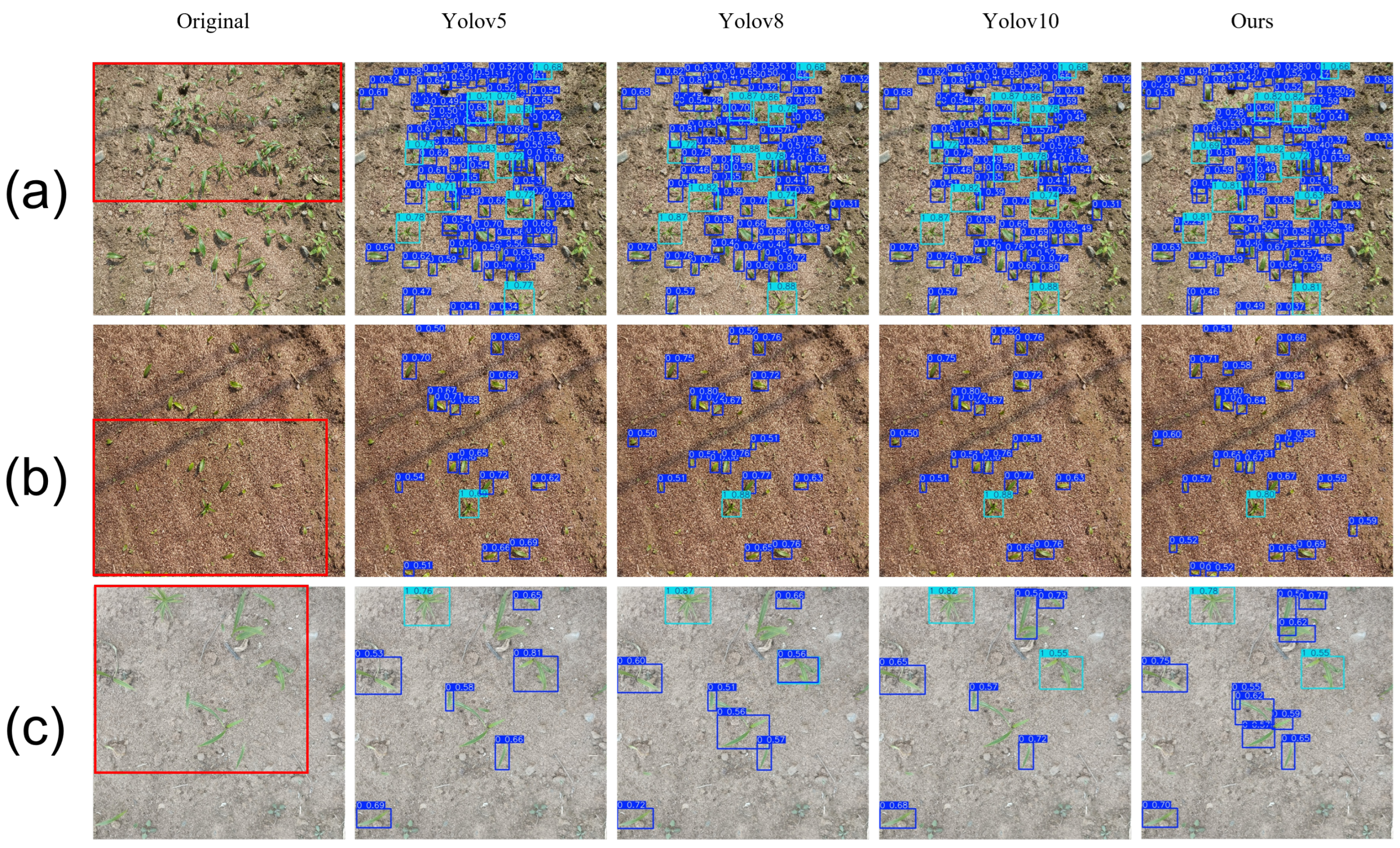

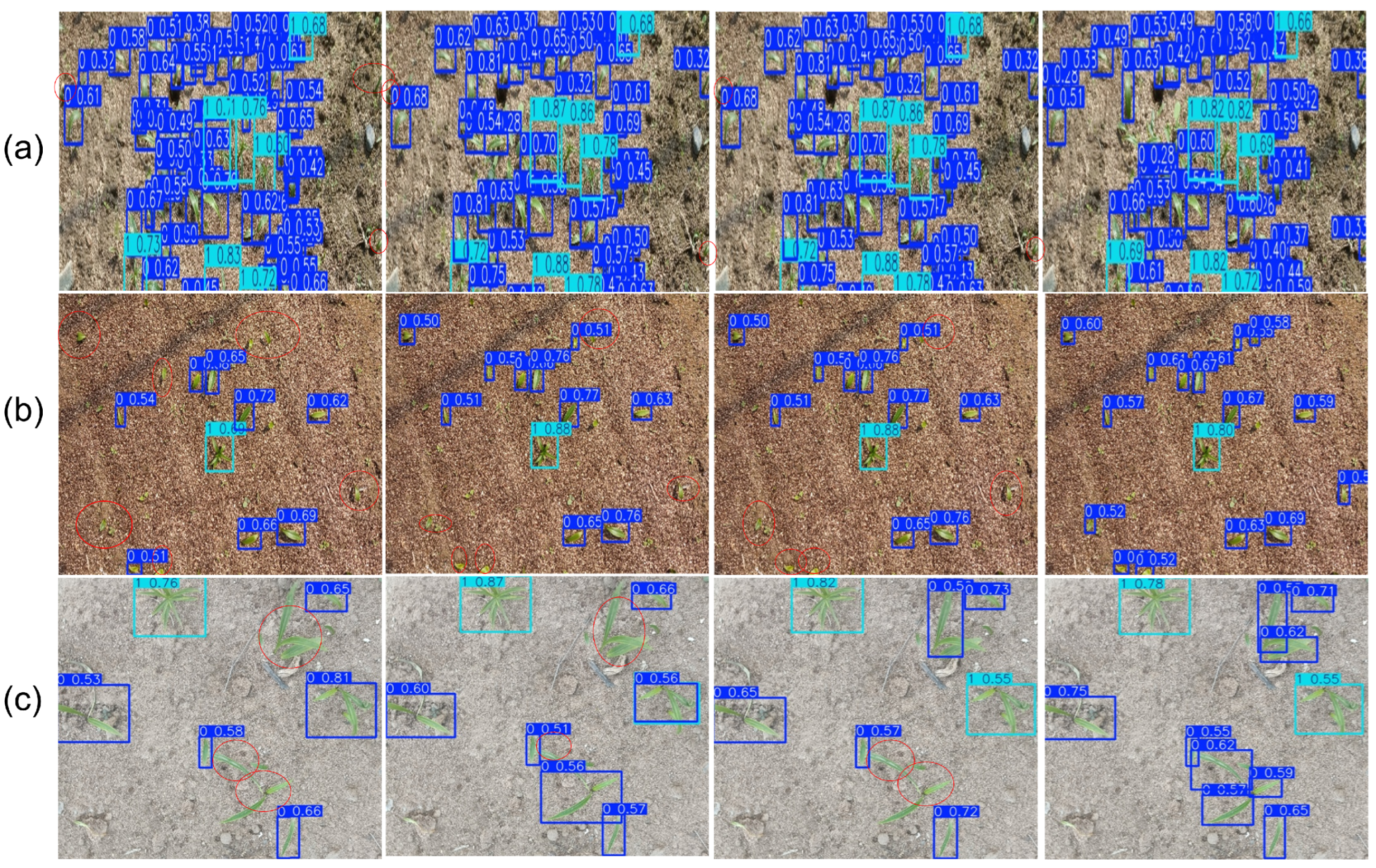

3.3.3. Comparative Analysis of Multiple Model Performances

3.3.4. Visualization of Lily Detection Results

3.3.5. Comparison and Selection of Segmentation Networks

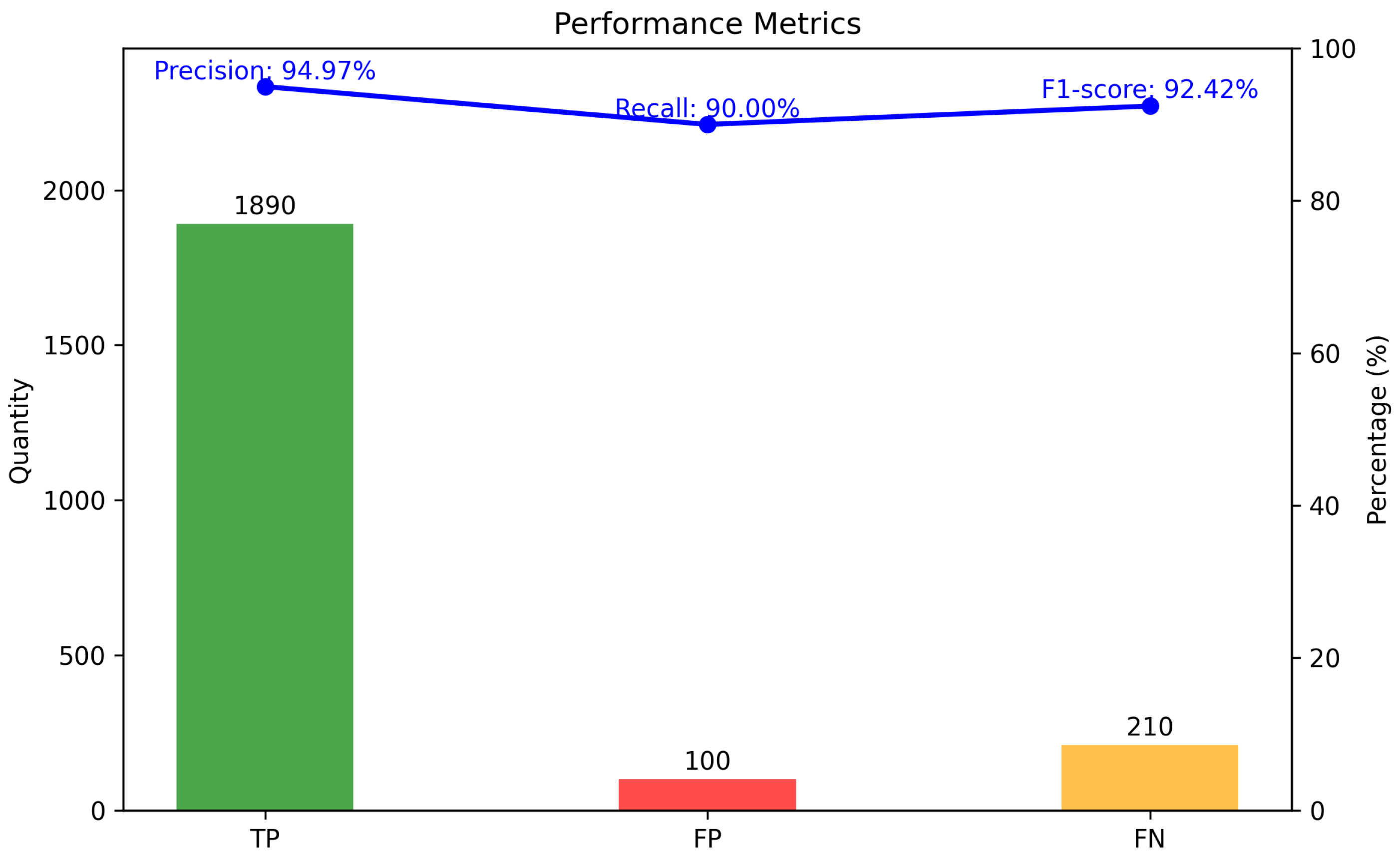

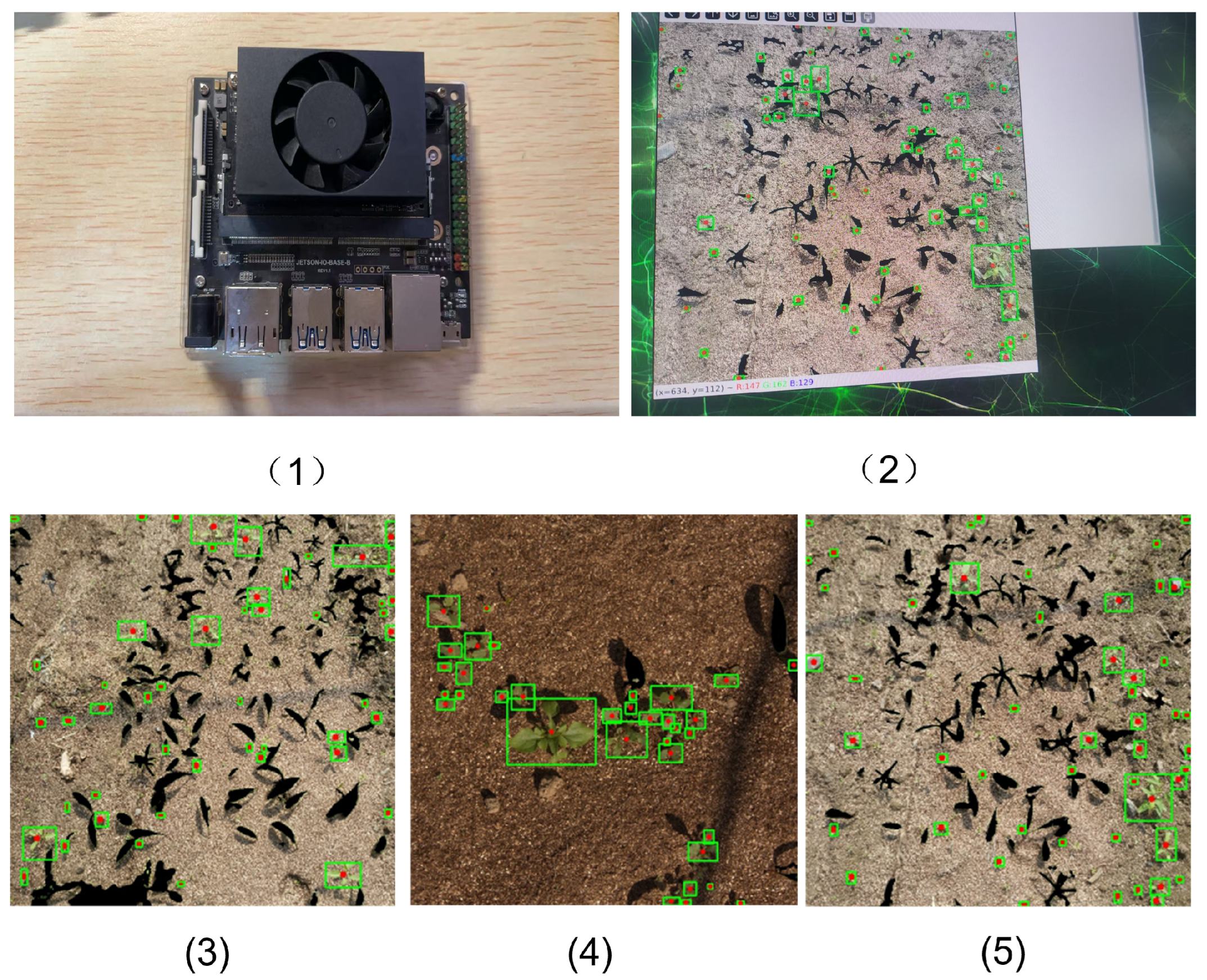

3.4. Overall Scheme Evaluation and Deployment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Method | mAP@0.5 (%) | Parameters (M) |
|---|---|---|
| YOLOv8 Original | 82.8 | 12.9 |
| YOLOv8 + SPD-Conv | 84.3 | 13.0 |
| YOLOv8 + CBAM | 81.5 | 11.8 |
| YOLOv8 + SPP | 83.0 | 16.5 |
| YOLOv8 + FPN | 82.8 | 12.9 |

| RCS-MSA | SPD-Conv | ATFL | mAP (%) | Accuracy (%) | Precision (%) | Recall, Single (%) | Recall, More (%) |
|---|---|---|---|---|---|---|---|
| × | × | × | 82.8 ± 0.2 | 79.6 ± 0.3 | 78.0 ± 0.2 | 85.9 ± 0.3 | 78.8 ± 0.3 |
| ✓ | × | × | 84.0 ± 0.2 | 80.8 ± 0.3 | 78.6 ± 0.4 | 87.3 ± 0.3 | 80.4 ± 0.3 |
| × | ✓ | × | 84.3 ± 0.2 | 80.6 ± 0.3 | 79.0 ± 0.3 | 88.0 ± 0.3 | 78.8 ± 0.3 |
| × | × | ✓ | 82.9 ± 0.1 | 79.6 ± 0.2 | 78.4 ± 0.2 | 86.1 ± 0.2 | 78.4 ± 0.2 |
| ✓ | ✓ | × | 84.5 ± 0.3 | 81.2 ± 0.2 | 79.5 ± 0.2 | 87.8 ± 0.3 | 80.0 ± 0.2 |
| ✓ | × | ✓ | 84.0 ± 0.2 | 80.2 ± 0.3 | 79.1 ± 0.3 | 86.7 ± 0.2 | 79.5 ± 0.2 |
| × | ✓ | ✓ | 85.2 ± 0.2 | 82.5 ± 0.3 | 79.5 ± 0.3 | 87.9 ± 0.3 | 79.5 ± 0.3 |
| ✓ | ✓ | ✓ | 86.0 ± 0.3 | 83.1 ± 0.3 | 80.5 ± 0.2 | 88.9 ± 0.2 | 80.4 ± 0.3 |

| RCS-MSA | SPD-Conv | ATFL | FLOPs (G) | Memory Usage (M) | GPU Speed (ms) |
|---|---|---|---|---|---|
| × | × | × | 8.1 | 12.9 | 46 |
| ✓ | × | × | 11.9 | 11.4 | 40 |
| ✓ | ✓ | × | 11.6 | 11.6 | 39 |
| ✓ | ✓ | ✓ | 10.1 | 11.2 | 36 |

| Model | mAP (%) | Memory Usage (M) | FLOPs (G) |
|---|---|---|---|
| Faster-RCNN | 70.1 | 120.3 | 12.1 |
| YOLOv5 | 80.3 | 9.55 | 7.1 |
| YOLOv8 | 82.9 | 11.47 | 8.1 |
| YOLOv10 | 81.9 | 10.28 | 8.2 |
| YOLO11 | 82.0 | 9.88 | 6.4 |
| YOLOv12 | 84.0 | 9.0 | 8.0 |
| Ours | 86.0 | 13.0 | 9.0 |

| Model | False Positive Rate (%) | Duplicate Detection Rate (%) |
|---|---|---|
| YOLOv5 | 15 | 10 |
| YOLOv8 | 13 | 12 |
| YOLOv10 | 14 | 15 |
| Ours | 8 | 8 |

| Model | mIoU (%) | FLOPs (G) | Params (M) |
|---|---|---|---|
| ResNet18 | 87.76 | 92.0 | 11.5 |
| U-Net | 82.5 | 605.39 | 29.02 |
| DeepLabv3 | 80.2 | 276.44 | 72.42 |
| ResNet34 | 88.0 | 188.55 | 21.61 |
| ResNet50 | 90.0 | 215.53 | 31.42 |
| SegFormer | 82.3 | 75 | 12.5 |
| LETNet | 83.5 | 52 | 8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y.; Liu, C.; Liang, J.; Ji, X.; Li, J. A Two-Stage Weed Detection and Localization Method for Lily Fields Targeting Laser Weeding. Agriculture 2025, 15, 1967. https://doi.org/10.3390/agriculture15181967
